1. Introduction

The explosive growth of e-commerce has driven rapid development in the express delivery industry, placing significant pressure on processes such as sorting, transportation, and warehousing. Traditional manual sorting suffers from high costs, low efficiency, and high error rates, making the automated identification and tracking of express parcels an urgent need for the industry’s intelligent upgrade. As the foundation of visual intelligent sorting, the accuracy, speed, and robustness of object detection technology play a crucial role in improving logistics efficiency and reducing operational costs.

However, the automated detection of express parcels still faces several distinctive challenges. The first is dense stacking and severe occlusion: parcels on conveyor belts are often stacked at high density, so they overlap and occlude one another extensively. This confuses features, blurs boundaries, and readily causes missed and false detections [1]. The second is extreme scale variation and the difficulty of small objects. Parcel sizes range from small envelopes to large appliances; in particular, accessory marks on small parcels (such as QR codes and barcodes) occupy few pixels and carry little feature information, which lowers detector recall. The third is complex backgrounds and lighting interference: illumination changes, surface reflections, stains, and wrinkles in the sorting environment severely degrade the apparent features of targets. Finally, parcels vary widely in shape (cubic boxes, soft packages, and tubes) and may lie flat, sideways, or inverted during conveyance, causing severe perspective deformation. The irregular boundaries and poor geometric stability of soft parcels make them especially hard to fit with conventional rectangular bounding boxes.

Currently, parcel detection relies primarily on three types of technology. The first is laser scanning, which captures the 3D geometric contours of targets by actively emitting laser beams and offers high spatial positioning accuracy. However, its high hardware cost and its inability to capture key visual information such as color and texture greatly limit its potential in multimodal detection tasks [2,3,4,5]. The second is traditional vision methods (e.g., SIFT features combined with SVM classifiers) [6,7,8,9]. Although computationally efficient, their heavy reliance on handcrafted features leads to poor generalization under real-world conditions such as low light and diverse parcel types, making it hard to reliably identify low-contrast targets or distinguish visually similar parcels. The third is end-to-end detection frameworks based on deep learning [10,11,12,13], which have made significant progress in general object detection in recent years and offer new solutions for complex and variable parcel sorting tasks.

Among these, two-stage detectors represented by Faster R-CNN [14] excel in localization accuracy and robustness to complex backgrounds, but their high computational cost and slow inference make it difficult to meet the throughput requirements of real-time sorting. One-stage detectors such as SSD [15] have simple structures and fast inference, but they remain weak in multi-scale feature fusion and small object detection, perform poorly in dense scenes, and are prone to missed detections and inaccurate localization. The YOLO series [16,17,18,19,20,21,22], a benchmark in the detection field, has repeatedly advanced the trade-off between speed and accuracy and provides strong support for industrial deployment. However, its accuracy on small objects and under extreme occlusion still leaves room for improvement.

To address core issues such as detection failure due to dynamic lighting fluctuations, missed detections caused by soft parcel deformation, stringent time constraints in high-speed sorting, and the lack of specialized training datasets, this paper proposes an improved YOLO-based detection model: the Hybrid Dynamic Perception Network (HDPNet). Its core innovations include the following:

Dynamic Illumination-aware Module (DIM): Introduces global illumination encoding [23,24] together with spatial and channel attention mechanisms [25] to achieve adaptive feature enhancement under different lighting conditions, improving model robustness in challenging environments such as low light and reflections.

Interactive Attention Fusion Network (IAFN): Designs a lightweight interactive Transformer-CNN [26,27,28] architecture that strengthens semantic alignment and information complementarity between local and global features, improving representation capability under occlusion and deformation.

Multi-branch Decomposition Network (MDN): Combines deformable convolution [29] and sparse Transformer mechanisms [30,31] to capture multi-scale contextual features and geometric structure changes, enhancing perception of extreme-scale targets and deformed soft parcels.

Additionally, nonlinear dimensionality reduction techniques [32] and data visualization methods [33,34] provide theoretical support for understanding feature representations in complex environments, while the perception-decision framework from UAV trajectory optimization [35] offers inspiration for the design of our dynamic perception network.

Furthermore, we constructed a dataset named njpackage, which contains 2572 images of industrial express packages under low-light conditions. The dataset is intended for studying how low-light conditions, occlusion states, and package categories affect recognition performance. Experiments show that the proposed method achieves a detection accuracy (mAP@0.5) of 86.6% while maintaining real-time inference speed (≥45 FPS), significantly outperforming the baseline model. HDPNet thus demonstrates strong robustness and detection accuracy in complex environments, providing more efficient and reliable technical support for automated parcel sorting systems.

2. Related Work

Although methods such as Faster R-CNN, YOLO, and FCOS [36] have become widely adopted solutions for express parcel detection, key challenges remain in practical industrial environments. Balancing detection accuracy against real-time performance, and improving robustness to illumination variation, deformation, and occlusion, are critical issues that require in-depth exploration.

Early express parcel detection relied primarily on traditional image processing techniques, such as Haar feature extraction [37,38], HOG feature extraction combined with SVM classifiers [39], and the SIFT algorithm. In Haar-feature-based algorithms, the AdaBoost classifier [40] handles feature selection and cascade classification [41,42]. Increasing the number of classifiers can raise the detection rate of the strong classifier while reducing its false acceptance rate, thereby improving overall detection efficiency. However, this algorithm has significant drawbacks. First, Haar-like features [43] are inherently simple, which limits the algorithm's stability. Second, its weak classifiers are simple decision trees [44], which are prone to overfitting. Consequently, the algorithm performs poorly in complex situations such as occlusion. The combination of HOG features and an SVM is a classic object detection approach. HOG, based on histograms of oriented gradients, effectively describes local shape information, and the SVM, grounded in statistical learning theory, excels at classifying high-dimensional data. The combination offers some robustness to local deformation and illumination changes and once achieved high accuracy in tasks such as pedestrian detection. However, it generalizes poorly, adapting badly to occlusion and complex backgrounds, and the high dimensionality of HOG features raises computational cost and hurts real-time performance. The SIFT algorithm achieves scale invariance by constructing Gaussian and difference-of-Gaussian pyramids [45], and rotation invariance by precisely localizing keypoints and assigning each a dominant orientation. Because its descriptors rely on image gradients rather than absolute pixel values, it is also somewhat robust to illumination changes. However, SIFT is computationally expensive, which limits its real-time performance. Furthermore, when image edges are smooth or the image is blurred, fewer feature points may be detected, making stable feature extraction difficult.

In 2012, the AlexNet [11] deep convolutional neural network achieved results far surpassing traditional methods in the ImageNet [46] image recognition challenge, and the subsequent ResNet advanced CNNs further. These successes established the central role of deep learning in computer vision. Deep learning-based object detection methods fall broadly into CNN-based and Transformer-based detection.

CNN-based object detection can be divided into two-stage and one-stage methods according to the detection pipeline. Two-stage methods first generate region proposals and then classify them and refine their positions; they offer higher accuracy at relatively lower speed. Representative algorithms include R-CNN [47], Fast R-CNN [48], and Faster R-CNN. R-CNN uses selective search to generate object proposals automatically, avoiding the redundant computation of traditional sliding windows and concentrating on likely object regions. For classification, it uses Support Vector Machines (SVM) [49] to distinguish target categories from the background. However, each stage must be trained independently, and features for a large number of proposals must be stored externally, which leads to poor real-time performance and wastes storage. Fast R-CNN introduced several key improvements over R-CNN. It runs the convolutional network once over the entire image to produce a global feature map and then extracts the features of each proposal directly from this map, sharing convolutional computation and greatly reducing cost. It also introduces an RoI pooling layer that converts each proposal's features to a fixed size, and it integrates classification and bounding-box regression into a single network, which greatly simplifies training. However, Fast R-CNN still relies on external selective search for proposal generation, which is not part of network training and limits speed; the pooling operations and the large number of proposals further hinder real-time performance. Faster R-CNN is a major improvement over Fast R-CNN: it replaces selective search with a Region Proposal Network (RPN) that generates proposals directly on the feature map and shares most computation with the subsequent detection network, enabling end-to-end training and faster detection. Zhao et al. [50] developed an improved Faster R-CNN-based parcel detection model that addressed mutual occlusion of parcels by adding an edge detection branch and a self-attention RoI Align [51] module.

One-stage detection methods predict object categories and bounding boxes directly from the image in a single pass. They are faster and better suited to real-time scenarios. Representative algorithms include the YOLO series, SSD, and RetinaNet [52]. YOLOv1 was the first to treat object detection as a single regression problem, using one neural network to predict bounding boxes and class probabilities directly; it was fast and structurally simple. In subsequent developments, YOLOv2 introduced batch normalization, a high-resolution classifier, and a fully convolutional design, and its YOLO9000 variant, trained jointly on detection and classification data, could detect over 9000 categories. YOLOv3 incorporated a Feature Pyramid Network structure to improve multi-scale object detection. YOLOv4 combined techniques such as CSPNet [53], PANet [54], and SAM [55] to strengthen feature extraction and detection efficiency. YOLOv6 offers models of various sizes to suit industrial applications. YOLOv7 designed several trainable “bag-of-freebies” that improve real-time detection accuracy without increasing inference cost. YOLOv9 introduced Programmable Gradient Information and a Generalized Efficient Layer Aggregation Network architecture based on gradient path planning [56], improving detection accuracy. YOLOv10 speeds up inference by eliminating NMS post-processing. YOLOv11 [57] employs a multi-head attention mechanism in its C2PSA module to extract global features, enhancing detection capability. Qi Kong Liu et al. studied a multi-object tracking algorithm based on an improved YOLOv5 and DeepSORT [58], which integrates the SE attention mechanism [59] into the YOLOv5 network and adopts the EIOU loss function [60], improving the model’s feature extraction capability for parcels. The core technique of SSD is to use a backbone network plus additional convolutional layers to extract multi-scale feature maps. Its advantages are the absence of a proposal generation step, which yields fast inference, and its inherent multi-scale detection capability. However, its anchor box scales and aspect ratios must be tuned manually for each dataset, which weakens generalization. RetinaNet extracts multi-scale features through a Feature Pyramid Network (FPN) [61], which fuses features from different levels to detect objects of various sizes, and introduces the focal loss to address the class imbalance problem. It has good multi-scale detection capability and performs well on small objects in particular. However, its feature fusion process is relatively complex and consumes significant hardware resources.
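The focal loss mentioned above down-weights well-classified examples so that training concentrates on hard ones. A minimal NumPy sketch of its binary form, FL(p_t) = −α_t (1 − p_t)^γ log(p_t), using the defaults α = 0.25 and γ = 2 reported for RetinaNet; the function name is illustrative:

```python
import numpy as np

def binary_focal_loss(p, y, alpha=0.25, gamma=2.0, eps=1e-12):
    """Per-example focal loss. p: predicted foreground probabilities; y: labels (1 = object)."""
    p = np.clip(np.asarray(p, dtype=float), eps, 1.0 - eps)
    y = np.asarray(y, dtype=float)
    p_t = np.where(y == 1, p, 1.0 - p)              # probability assigned to the true class
    alpha_t = np.where(y == 1, alpha, 1.0 - alpha)  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * np.log(p_t)

# An easy background anchor (p = 0.01) versus a hard one (p = 0.9).
easy, hard = binary_focal_loss([0.01, 0.9], [0, 0])
```

With γ = 0 and α = 0.5 the expression reduces to half the ordinary cross-entropy, which is a convenient sanity check.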

Transformer-based object detection methods form end-to-end detection frameworks whose core idea is to use the self-attention mechanism to capture dependencies among global features in the image. The image, or a feature map computed from it, is converted into a sequence of tokens that serves as the model input, and an encoder–decoder structure then predicts object locations and categories directly. These methods typically require no predefined anchor boxes, which simplifies model design and increases flexibility. Representative algorithms include DETR (Detection Transformer) [62], Focal Transformer [63], and Swin Transformer [64]. The core innovation of DETR is introducing the Transformer architecture into object detection. A CNN backbone first extracts a feature map, which is flattened into a sequence (with positional encodings) and modeled globally by the Encoder. The Decoder then combines the Encoder’s output with a set of learnable object queries to predict object locations and categories end to end. This eliminates hand-designed components such as anchor boxes and Non-Maximum Suppression (NMS), greatly simplifying the detection pipeline. DETR can effectively capture global relationships between objects and generalizes well. However, it converges slowly during training, is relatively weak at detecting small objects, and its inference speed is limited by the high computational complexity of Transformers. Focal Transformer introduces a focal attention mechanism that dynamically adjusts the attention range, performing fine-grained self-attention within local image regions to capture detail and coarse-grained self-attention globally to establish long-range dependencies efficiently. Its advantages are high computational efficiency, which preserves performance while reducing computation, and dynamically adjustable focal sizes. Its drawbacks are that the multi-scale attention design makes the architecture relatively complex and that its window-based partitioning limits the capture of global information. Swin Transformer employs window-based multi-head self-attention (W-MSA) and achieves cross-window interaction by shifting the windows. Its advantages are a much lower computational complexity and good adaptability to high-resolution images. Its disadvantages are that the shifted window mechanism may still limit global information interaction, its small object detection capability is limited, and its training and inference are slow and demand substantial computing power.
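The complexity gap between global and window attention can be made concrete with the expressions given in the Swin Transformer paper for an h × w token map with C channels and M × M windows (softmax cost omitted): Ω(MSA) = 4hwC² + 2(hw)²C and Ω(W-MSA) = 4hwC² + 2M²hwC. The sketch below evaluates both for a 56 × 56 map with C = 96 and M = 7, roughly the first stage of Swin-T; these inputs are illustrative.

```python
def msa_cost(h, w, c):
    """Global multi-head self-attention: quadratic in the token count h*w."""
    return 4 * h * w * c**2 + 2 * (h * w) ** 2 * c

def wmsa_cost(h, w, c, m=7):
    """Window attention with m x m windows: linear in h*w for fixed m."""
    return 4 * h * w * c**2 + 2 * m**2 * h * w * c

global_cost = msa_cost(56, 56, 96)    # 2,003,828,736
window_cost = wmsa_cost(56, 56, 96)   # 145,108,992
print(f"MSA / W-MSA = {global_cost / window_cost:.1f}")
```

Doubling both spatial sides multiplies the window cost by exactly four, whereas the global cost grows faster because of the (hw)² term.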

To address low illumination, deformation, and real-time requirements in express sorting environments, the proposed HDPNet optimizes the network structure in four respects: low-light enhancement, attention mechanisms, multi-modal fusion, and feature extraction. Existing low-light object detection methods can be divided into image enhancement and feature enhancement approaches. Image enhancement methods, such as RetinexNet [65] and Zero-DCE [66], improve visual quality through image preprocessing but add computational overhead. Feature enhancement methods, such as CAF-YOLO [67] and ADA-YOLO [68], operate directly at the feature level with the goal of improving accuracy on specific tasks; however, the added enhancement mechanisms may impair the model’s basic representational capacity and generalization. HDPNet adopts a feature enhancement branch to preserve real-time performance while incorporating a more comprehensive illumination modeling mechanism [69]. Regarding attention mechanisms, SE-Net proposed channel attention and CBAM [70] considered both channel and spatial attention, but neither was designed specifically for illumination problems. The proposed DIM adds global illumination encoding on top of these mechanisms, enabling explicit modeling of illumination conditions. For multi-modal feature fusion, the IAFN module adopts an interactive fusion strategy that lets CNN local features and Transformer global features complement each other. The proposed MDN module combines deformable convolution [71], which improves geometric transformation modeling through adaptive sampling locations, with a Transformer, which captures long-range dependencies via self-attention. This combination balances local detail and global context, improving the representation of parcel deformation and multi-scale objects.

3. Method

This paper proposes a Hybrid Dynamic Perception Network (HDPNet) to address two weaknesses of the YOLO object detection framework: low recognition accuracy under low-light conditions and for irregularly shaped objects. Here, “low-light conditions” refers to imaging environments with insufficient illumination, which typically produce images with a low signal-to-noise ratio, lost detail, and degraded color fidelity, making object detection harder. “Irregularly shaped objects” denotes targets whose shapes deviate significantly from regular geometries (e.g., rectangles or circles), such as non-convex, elongated, or highly deformable objects, which challenge standard bounding box representations and feature extraction. The network comprises the Dynamic Illumination-aware Module (DIM), the Interactive Attention Fusion Network (IAFN), and the Multi-branch Decomposition Network (MDN). These modules work together to tackle feature degradation, insufficient contextual information, and scale variation in low-light environments, achieving end-to-end optimization from global illumination perception to local geometric modeling.

3.1. Overall Architecture Design

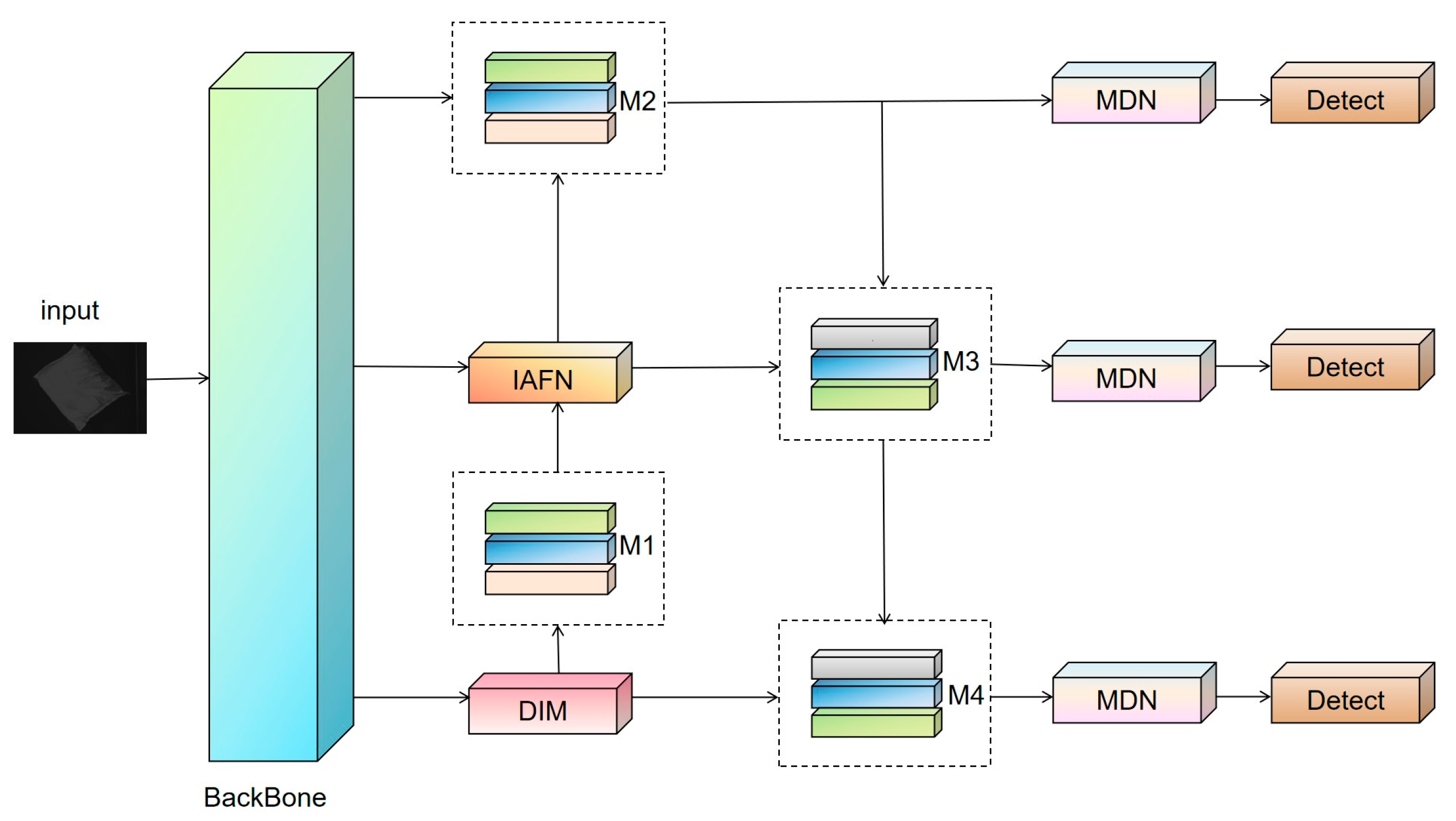

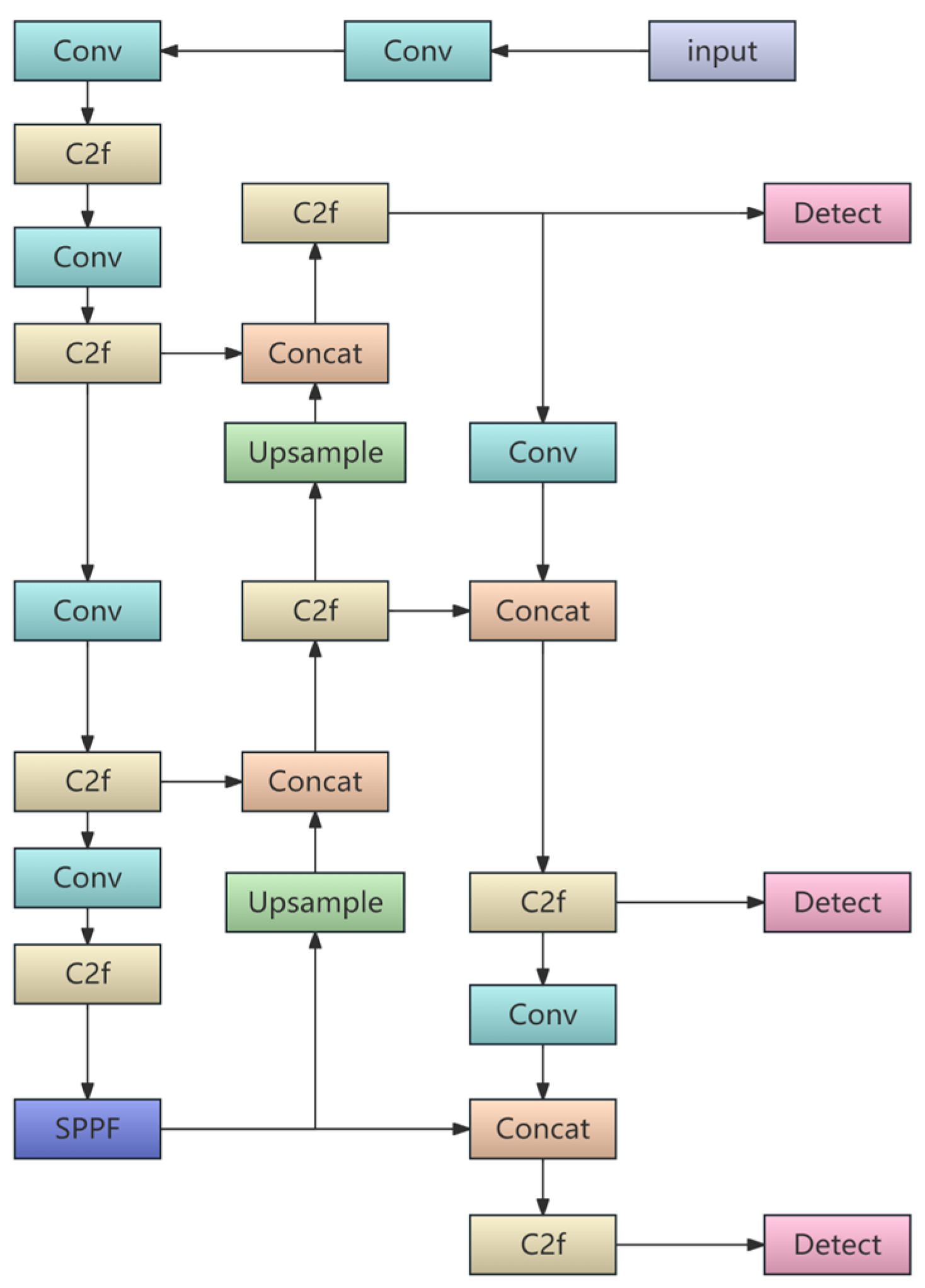

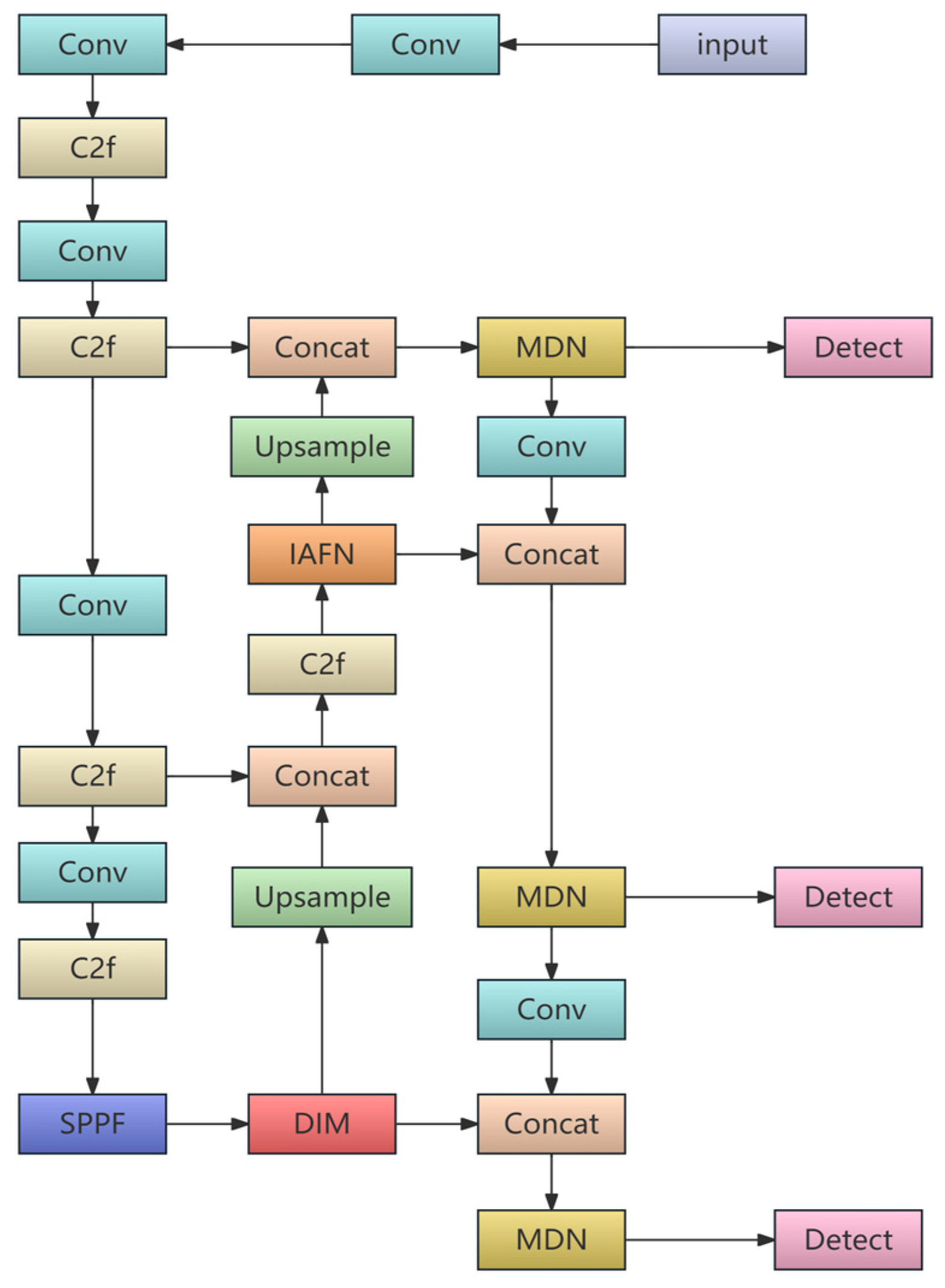

Figure 1 shows the generic YOLO architecture, which consists of four core components: Input, Backbone, Neck, and Detect. The Input stage preprocesses the original image through operations such as resizing and pixel normalization to meet the network’s requirements and stabilize training. The Backbone, the core of feature extraction, encodes the input image into multi-level feature representations by stacking convolutional blocks, residual connections, Cross-Stage Partial (CSP) structures, and attention mechanisms. The Neck, composed of multi-scale feature maps (M1 to M4), uses upsampling, downsampling, concatenation, and convolution to fuse the multi-scale features extracted by the Backbone, producing an enhanced feature pyramid that combines rich semantic information with spatial detail and improves multi-scale detection. Finally, the Detect head converts the refined feature maps into detection outputs (bounding box coordinates, class labels, and confidence scores), completing an end-to-end object detection pipeline. HDPNet embeds its three modules at different stages of the network without altering this fundamental architecture, forming a hybrid perception framework. The framework is compatible with various YOLO architectures, so the base model can be chosen according to task requirements: for instance, YOLOv9 when higher accuracy is the priority, and YOLOv8 or YOLOv11 when a balance between speed and precision is preferred. The overall architecture, illustrated in Figure 2, embeds the three proposed modules at critical stages of the YOLO framework, forming a hierarchical feature enhancement system. First, the DIM is placed at the end of the Backbone. At this point the Backbone has already extracted basic features, but its deep features often contain significant noise and degradation caused by low light. Placing the DIM here lets it recalibrate, in an illumination-adaptive way, the deep feature maps that carry the richest semantic information, providing a more robust representation for subsequent feature fusion and detection. Next, the IAFN module is integrated into the Neck. After the DIM, illumination inconsistencies in the feature maps are substantially reduced, but semantic gaps remain between feature maps of different scales. The IAFN module strengthens cross-level and cross-scale information flow from the Backbone to the Neck through its internal interaction mechanism. Building on the DIM-optimized features, it aggregates richer contextual information during fusion, compensating for the information lost to blurred object contours and missing textures under low light. Finally, the MDN is introduced just before the Detect head. After progressive optimization by the two preceding modules, the quality of the feature maps and the effectiveness of fusion have improved substantially. At this final stage, the MDN employs deformable convolutions and a multi-branch structure so that the detection head can adaptively capture the geometric deformations of irregular objects.

The multi-scale contextual information provided by IAFN and the deformation modeling capability of MDN complement each other, jointly supporting precise localization and recognition of non-rigid or irregular objects in complex low-light scenes. In summary, the contribution of HDPNet lies not only in three independent modules but also in its systematic, end-to-end design. The DIM performs illumination-adaptive reconstruction of deep features, giving the IAFN feature representations that are more robust to illumination variation. The IAFN in turn uses these features to fuse richer multi-scale contextual information through enhanced cross-layer interaction. Finally, the MDN models the geometric deformations of irregular objects precisely on top of these optimized features. Together, the three modules form a tightly coupled cascade that simultaneously improves the feature discriminability, contextual awareness, and spatial deformation adaptability of the YOLO detector in low-light environments. The system-level performance gain achieved by coupling the modules cannot be attained by any single module in isolation or by simply combining them.

3.2. Dynamic Illumination-Aware Module (DIM)

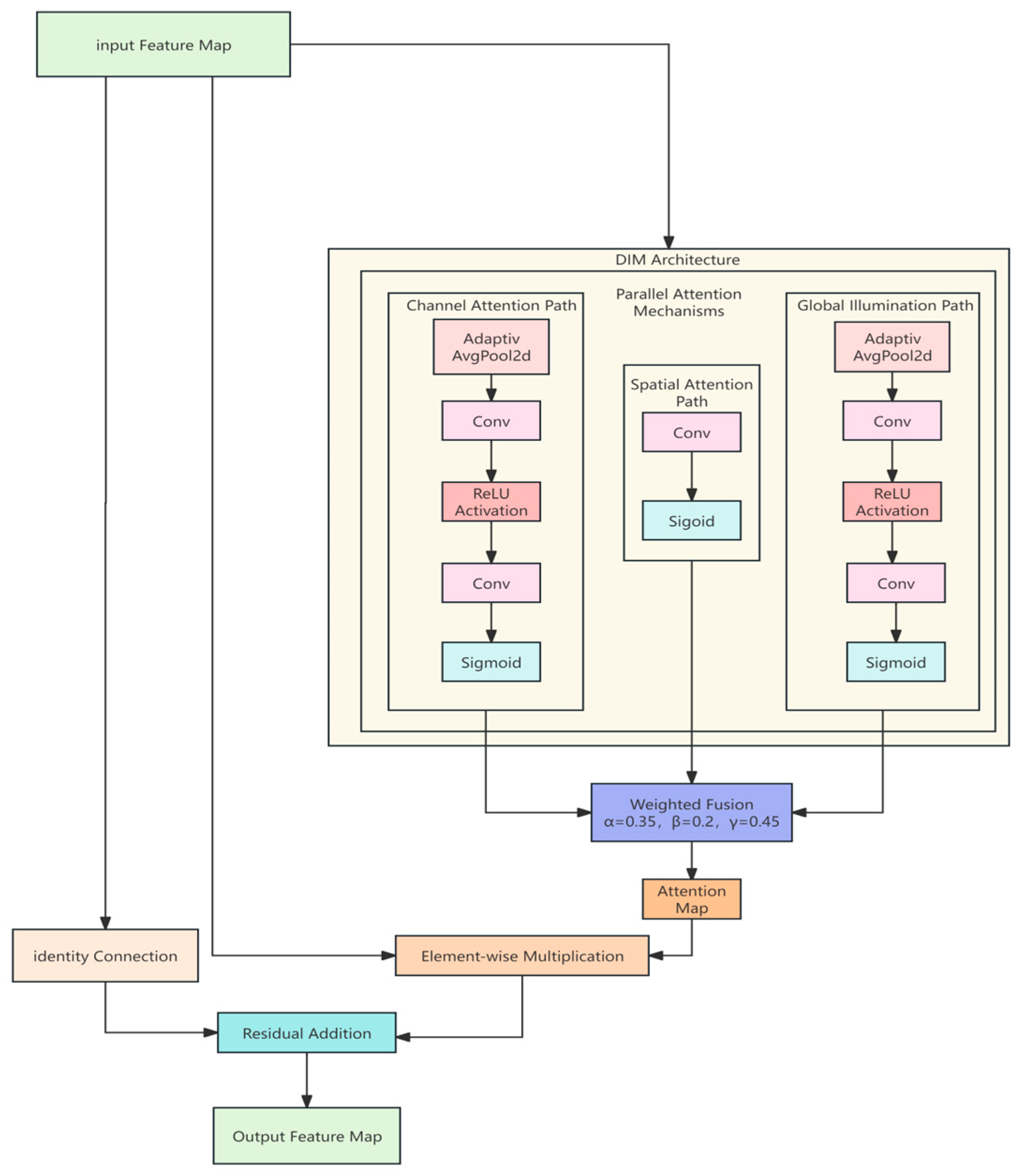

The Dynamic Illumination-aware Module (DIM), whose structure is shown in Figure 3, is designed for low-light conditions and counters feature degradation by recalibrating features with a triple attention mechanism. Its aim is to explicitly model and adapt to different illumination conditions, which is crucial for low-light image processing. Its three branches process spatial, channel, and global illumination information simultaneously.

Illumination Encoding Branch: Captures image-level illumination statistics via Global Average Pooling (GAP), followed by two convolutional layers that learn the illumination distribution pattern. The first convolution compresses the channel number to 1/32 of the input channels (with a minimum of 2) to reduce computation; the second restores the original channel number and outputs a Sigmoid-activated attention map. This compact network thus converts pooled illumination statistics into illumination-aware weights. The process is given by Formula (1), whose output is the illumination attention feature; GAP denotes Global Average Pooling and σ denotes the Sigmoid function.
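A minimal NumPy sketch of the illumination encoding branch as described: GAP, a channel-compressing convolution to max(C/32, 2) channels, a channel-restoring convolution, and a Sigmoid. Because both convolutions act on a 1 × 1 pooled map, they reduce to matrix products. The ReLU between the two convolutions, the bias terms, and all function names are assumptions, since the text does not specify them.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def illumination_hidden_width(channels, ratio=32, minimum=2):
    """Compressed width from the text: C / 32 channels, but never fewer than 2."""
    return max(channels // ratio, minimum)

def illumination_branch(x, w1, b1, w2, b2):
    """x: (C, H, W) features; w1: (mid, C); w2: (C, mid). Returns (C, 1, 1) weights."""
    g = x.mean(axis=(1, 2))                       # GAP: one illumination statistic per channel
    h = np.maximum(w1 @ g + b1, 0.0)              # 1x1 conv on a 1x1 map (ReLU assumed)
    return sigmoid(w2 @ h + b2)[:, None, None]    # restore C channels, weights in (0, 1)

rng = np.random.default_rng(0)
C, H, W = 64, 8, 8
mid = illumination_hidden_width(C)                # 64 // 32 = 2
x = rng.standard_normal((C, H, W))
a_illum = illumination_branch(x, 0.1 * rng.standard_normal((mid, C)), np.zeros(mid),
                              0.1 * rng.standard_normal((C, mid)), np.zeros(C))
```

The (C, 1, 1) output broadcasts over H and W, so every pixel of a channel receives the same illumination weight.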

Spatial Attention Branch: Employs a single 1 × 1 convolutional layer followed by a Sigmoid activation to generate a spatial attention map that emphasizes important regions and suppresses less relevant ones, so that key information can be captured even in low-light environments. As shown in Formula (2), this branch generates the map directly from the input features, which keeps it computationally efficient. It helps strengthen feature responses in dark areas and suppress interference from over-exposed regions.
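The spatial branch can be sketched as a 1 × 1 convolution with a single output channel followed by a Sigmoid. The shapes, the random weights, and the function name below are illustrative assumptions:

```python
import numpy as np

def spatial_attention(x, w, b=0.0):
    """x: (C, H, W); w: (C,) weights of a 1x1 conv with one output channel.
    Returns a (1, H, W) map that broadcasts over all channels."""
    logits = np.tensordot(w, x, axes=(0, 0)) + b     # weighted sum over channels per pixel
    return (1.0 / (1.0 + np.exp(-logits)))[None, :, :]

rng = np.random.default_rng(1)
x = rng.standard_normal((16, 5, 7))
a_spatial = spatial_attention(x, 0.2 * rng.standard_normal(16))
recalibrated = x * a_spatial    # all channels at a pixel share that pixel's weight
```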

Channel Attention Branch: Models the importance of different feature channels with a design similar to the Squeeze-and-Excitation (SE) module. It uses GAP to capture channel statistics and models interdependencies between channels through two 1 × 1 convolutional layers with a reduction ratio of 32, as shown in Formula (3). Unlike the illumination encoding branch, this branch has its own convolutional weights dedicated to learning channel relationships, so they are learned separately from the illumination weights.
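For comparison, a minimal SE-style sketch of the channel attention branch: pooling, reduction by 32, ReLU, expansion, and Sigmoid, following the Squeeze-and-Excitation pattern. Biases are omitted, and the floor of one hidden channel and the random initialization are illustrative assumptions. Each instance owns its weights, mirroring the point that this branch does not share parameters with the illumination encoding branch.

```python
import numpy as np

class ChannelAttention:
    """SE-style channel attention: GAP -> reduce (C -> C/r) -> ReLU -> expand -> Sigmoid."""

    def __init__(self, channels, reduction=32, seed=0):
        rng = np.random.default_rng(seed)
        mid = max(channels // reduction, 1)          # hypothetical floor for tiny C
        self.w1 = 0.1 * rng.standard_normal((mid, channels))
        self.w2 = 0.1 * rng.standard_normal((channels, mid))

    def __call__(self, x):
        s = x.mean(axis=(1, 2))                      # squeeze: (C,)
        z = np.maximum(self.w1 @ s, 0.0)             # excitation hidden layer: (C/r,)
        a = 1.0 / (1.0 + np.exp(-(self.w2 @ z)))     # per-channel weights in (0, 1)
        return a[:, None, None]                      # (C, 1, 1) for broadcasting

attention = ChannelAttention(channels=64)
x = np.random.default_rng(2).standard_normal((64, 4, 4))
a_channel = attention(x)
```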

In Formula (4), α, β, and γ are the weighting coefficients of the spatial attention, channel attention, and illumination encoding branches, respectively. Ablation experiments on these coefficients showed that the DIM performs best when α:β:γ = 0.35:0.2:0.45, indicating that illumination weighting is the most important cue in low-light scenarios. The module uses several optimization strategies, including in-place operations, gradient checkpointing, and memory-efficient computation, to minimize overhead; with only a slight increase in computational cost, it substantially improves feature representation under challenging lighting. The DIM is placed at the end of the backbone, where the features carry the highest-level semantic information, so that illumination adjustment influences all subsequent processing stages.
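The exact form of Formula (4) is an assumption in this sketch: it multiplies the input by a weighted sum of the three attention maps. Because 0.35 + 0.2 + 0.45 = 1, the combined weight is a convex combination and stays in (0, 1) whenever each map does. Broadcasting handles the shapes: the spatial map is (1, H, W), while the channel and illumination maps are (C, 1, 1). Random maps stand in for the branch outputs.

```python
import numpy as np

ALPHA, BETA, GAMMA = 0.35, 0.2, 0.45   # spatial, channel, illumination weights from the ablation

def dim_fuse(x, a_spatial, a_channel, a_illum, alpha=ALPHA, beta=BETA, gamma=GAMMA):
    """Recalibrate x (C, H, W) with a weighted sum of three attention maps
    (assumed multiplicative form; broadcasting expands every map to (C, H, W))."""
    attention = alpha * a_spatial + beta * a_channel + gamma * a_illum
    return x * attention

rng = np.random.default_rng(3)
C, H, W = 8, 4, 4
x = rng.standard_normal((C, H, W))
a_s = rng.uniform(size=(1, H, W))
a_c = rng.uniform(size=(C, 1, 1))
a_i = rng.uniform(size=(C, 1, 1))
y = dim_fuse(x, a_s, a_c, a_i)
```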

3.3. Interactive Attention Fusion Network (IAFN)

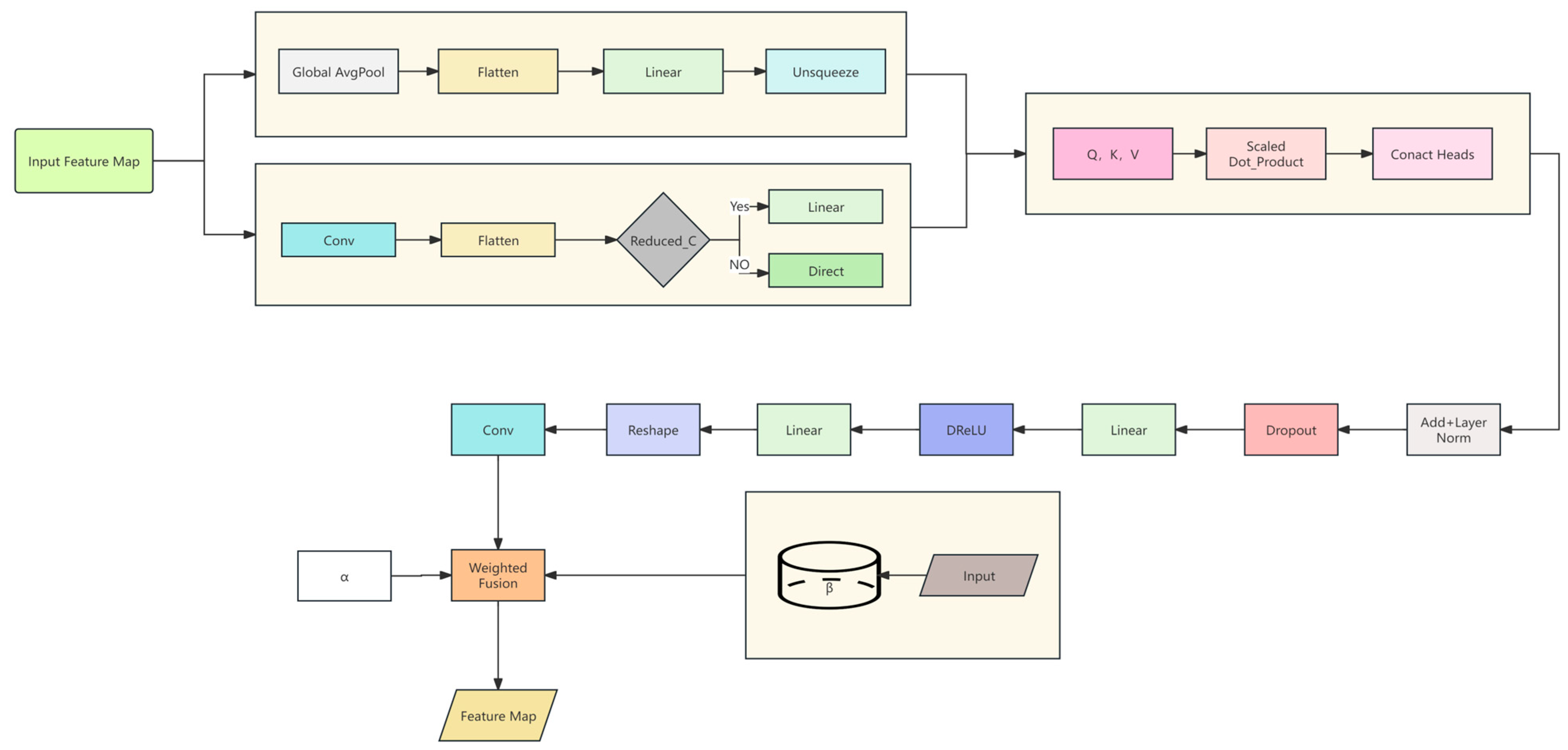

The Interactive Attention Fusion Network (IAFN) enables efficient interaction between CNN local features and Transformer global features through a lightweight cross-attention mechanism. As shown in Figure 4, the input features are first processed by two parallel branches: one produces local features that retain spatial information, and the other captures global features that summarize the whole image. The local feature branch uses a 1 × 1 convolution with a reduction ratio of 4, compressing the channel dimension while preserving spatial resolution. A reshape operation then flattens the 2D feature map into a token sequence suitable for attention computation.
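The local branch amounts to a channel-reducing 1 × 1 convolution followed by flattening. A NumPy sketch with illustrative shapes (C = 32, so each token has C/4 = 8 channels); the function name is hypothetical:

```python
import numpy as np

def local_tokens(x, w_reduce):
    """x: (C, H, W); w_reduce: (C // 4, C) weights of the 1x1 reducing convolution.
    Returns an (H*W, C // 4) sequence with one token per spatial position."""
    y = np.einsum("oc,chw->ohw", w_reduce, x)    # channel reduction, spatial size unchanged
    d, h, w = y.shape
    return y.reshape(d, h * w).T                  # row i*W + j holds pixel (i, j)

rng = np.random.default_rng(4)
C, H, W = 32, 3, 5
x = rng.standard_normal((C, H, W))
w_reduce = rng.standard_normal((C // 4, C))
tokens = local_tokens(x, w_reduce)
```

Keeping a fixed row-major order matters later, because the enhanced sequence must be reshaped back to the same spatial grid.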

As shown in Formula (5), the global feature branch extracts image-level features via GAP and projects them into a low-dimensional space through two linear transformations with ReLU activation.
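The global branch of Formula (5) can be sketched as GAP followed by two linear layers with a ReLU between them. The hidden width of 16 and the output width d = 8 (chosen to match the local token width so that the two can meet in attention) are assumptions:

```python
import numpy as np

def global_context(x, w1, b1, w2, b2):
    """x: (C, H, W). Returns one global token of shape (1, d)."""
    g = x.mean(axis=(1, 2))                 # GAP: (C,)
    h = np.maximum(w1 @ g + b1, 0.0)        # first projection + ReLU
    return (w2 @ h + b2)[None, :]           # second projection to the token width d

C, hidden, d = 32, 16, 8
rng = np.random.default_rng(5)
token = global_context(rng.standard_normal((C, 4, 4)),
                       rng.standard_normal((hidden, C)), np.zeros(hidden),
                       rng.standard_normal((d, hidden)), np.zeros(d))
```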

The core innovation of IAFN lies in its asymmetric cross-attention design, in which local CNN features serve as Queries and global context features serve as Keys and Values, as shown in Formula (6). Each spatial location can thus query relevant global context and enhance its local detail with complementary global information. The attention uses four heads and a batch-first tensor layout for efficiency.
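Formula (6) can be sketched as standard scaled dot-product cross-attention split across four heads. Queries come from N local tokens and keys/values from M global tokens; the projection matrices and their random initialization are illustrative. One property is worth noting: if the global branch supplies a single pooled token (M = 1), the softmax over keys is identically 1, so every location receives the same context vector. The sketch accepts any M so that both cases can be inspected.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(q_tokens, kv_tokens, wq, wk, wv, wo, num_heads=4):
    """Queries from local tokens (N, d); keys/values from global tokens (M, d).
    All projection matrices are (d, d). Returns the output and per-head weights."""
    n, d = q_tokens.shape
    m = kv_tokens.shape[0]
    dh = d // num_heads
    q = (q_tokens @ wq).reshape(n, num_heads, dh).transpose(1, 0, 2)    # (heads, N, dh)
    k = (kv_tokens @ wk).reshape(m, num_heads, dh).transpose(1, 0, 2)   # (heads, M, dh)
    v = (kv_tokens @ wv).reshape(m, num_heads, dh).transpose(1, 0, 2)   # (heads, M, dh)
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))           # (heads, N, M)
    out = (weights @ v).transpose(1, 0, 2).reshape(n, d)                # concatenate heads
    return out @ wo, weights

rng = np.random.default_rng(6)
d = 8
wq, wk, wv, wo = (0.3 * rng.standard_normal((d, d)) for _ in range(4))
local = rng.standard_normal((15, d))    # N = 15 local tokens (queries)
glob = rng.standard_normal((1, d))      # M = 1 pooled global token (keys/values)
out, attn = cross_attention(local, glob, wq, wk, wv, wo)
```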

After attention, a lightweight feedforward network with a compression ratio of 2 further refines the features while keeping computation low, as shown in Formula (7). Batch Normalization is used throughout the network to exploit batch statistics and speed convergence, together with pre-norm residual connections that stabilize training.
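One reading of Formula (7), assumed here, is a pre-norm residual block whose hidden layer is half the token width (compression ratio 2). The ReLU activation, the omission of BatchNorm's affine parameters, and the use of training-mode batch statistics are further assumptions:

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    """Training-mode BatchNorm without affine parameters: normalizes each feature
    over all tokens of all images in the batch. x: (B, N, d)."""
    mean = x.mean(axis=(0, 1), keepdims=True)
    var = x.var(axis=(0, 1), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def prenorm_ffn(x, w1, b1, w2, b2):
    """Pre-norm residual FFN; w1: (d, d // 2), w2: (d // 2, d) for compression ratio 2."""
    h = np.maximum(batch_norm(x) @ w1 + b1, 0.0)   # normalize first, then compress
    return x + h @ w2 + b2                          # residual keeps the unnormalized path

rng = np.random.default_rng(7)
B, N, d = 2, 15, 8
x = rng.standard_normal((B, N, d))
w1, w2 = rng.standard_normal((d, d // 2)), rng.standard_normal((d // 2, d))
y = prenorm_ffn(x, w1, np.zeros(d // 2), w2, np.zeros(d))
```

Because normalization sits inside the residual branch, the identity path is never rescaled, which is the usual argument for why pre-norm blocks train stably.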

The output feature sequence is transformed back to spatial dimensions by a dedicated projection layer and restored to the original channel number by a 1 × 1 convolution. As Formula (8) shows, a learnable residual connection with parameters α and β lets the network balance the original and enhanced features dynamically and allows the module to fall back to the identity transformation when the enhancement does not help. The IAFN module is placed at the feature fusion stage of the neck, specifically after the P4 and P5 feature layers, where it can most effectively enhance multi-scale feature representations before they reach the detection head.
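A minimal sketch of the output stage, assuming Formula (8) takes the form α·X + β·Y, where X is the module input and Y the enhanced features. The dedicated projection layer is omitted, and the initialization α = 1, β = 0 (which makes the module start as an exact identity) is an assumption rather than a detail from the text:

```python
import numpy as np

def restore_and_blend(tokens, x, w_restore, alpha=1.0, beta=0.0):
    """tokens: (H*W, d) enhanced sequence; x: original (C, H, W) input;
    w_restore: (C, d) weights of the channel-restoring 1x1 convolution.
    Returns alpha * x + beta * enhanced (assumed form of the learnable residual)."""
    c, h, w = x.shape
    y = tokens.T.reshape(-1, h, w)                      # sequence back to (d, H, W)
    enhanced = np.einsum("cd,dhw->chw", w_restore, y)   # 1x1 conv: d -> C channels
    return alpha * x + beta * enhanced

rng = np.random.default_rng(8)
x = rng.standard_normal((8, 3, 5))
tokens = rng.standard_normal((15, 8))
w_restore = rng.standard_normal((8, 8))
out = restore_and_blend(tokens, x, w_restore)   # equals x at this initialization
```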

3.4. Multi-Branch Decomposition Network (MDN)

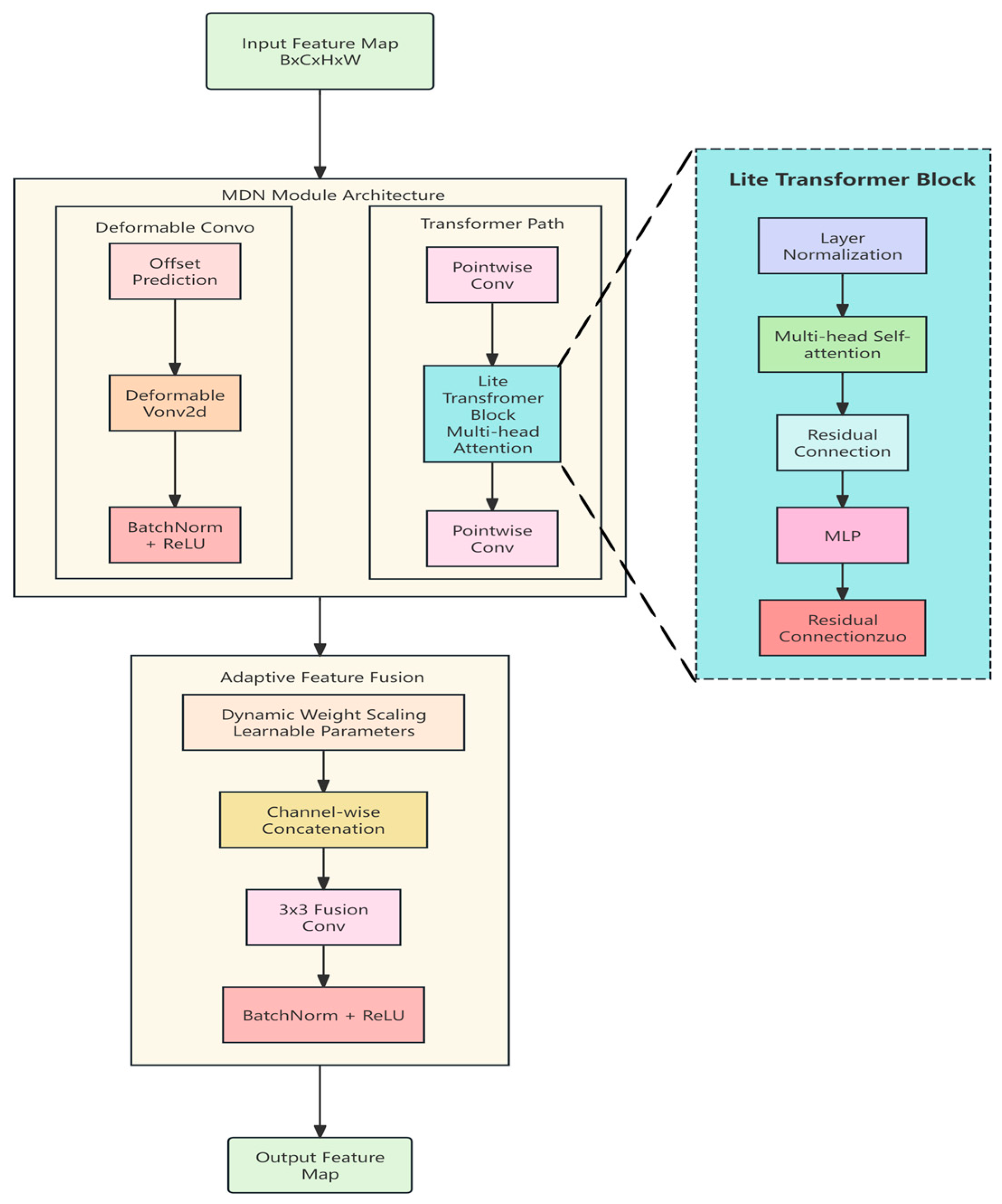

The Multi-branch Decomposition Network (MDN) addresses geometric deformation and multi-scale representation through a parallel architecture that combines Deformable Convolutional Networks (DCN) [29] with a lightweight Transformer. As shown in Figure 5, the module processes input features through two distinct but complementary branches: a Deformable Convolution branch that handles geometric deformation and a Transformer branch that captures long-range dependencies. As shown in Formula (9), the Deformable Convolution branch uses an offset prediction network to learn content-dependent sampling locations and then applies deformable convolution with the learned sampling pattern. This lets the model focus on relevant regions regardless of their spatial regularity, making it particularly suitable for irregularly shaped parcels and distorted QR codes.
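The sampling rule of deformable convolution, y(p₀) = Σₖ wₖ · x(p₀ + pₖ + Δpₖ) with bilinear interpolation at fractional positions, can be sketched with explicit loops for clarity. In the DCN design the offset prediction network is a convolution that outputs 18 values per location (Δy and Δx for each of the 9 taps of a 3 × 3 kernel); here that network is replaced by an offsets array passed in directly, which is a simplification. With all offsets zero, the operation reduces to an ordinary 3 × 3 convolution with zero padding.

```python
import numpy as np

def bilinear(img, y, x):
    """Sample img (C, H, W) at fractional (y, x); out-of-range neighbours count as 0."""
    c, h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    out = np.zeros(c)
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                out += (1 - abs(y - yy)) * (1 - abs(x - xx)) * img[:, yy, xx]
    return out

def deform_conv3x3(x, weight, offsets):
    """x: (C_in, H, W); weight: (C_out, C_in, 3, 3);
    offsets: (H, W, 9, 2) learned (dy, dx) per output pixel and kernel tap."""
    c_out = weight.shape[0]
    _, h, w = x.shape
    grid = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]   # regular grid p_k
    out = np.zeros((c_out, h, w))
    for i in range(h):
        for j in range(w):
            for k, (dy, dx) in enumerate(grid):
                oy, ox = offsets[i, j, k]
                sample = bilinear(x, i + dy + oy, j + dx + ox)     # x(p0 + p_k + dp_k)
                out[:, i, j] += weight[:, :, dy + 1, dx + 1] @ sample
    return out

rng = np.random.default_rng(9)
x = rng.standard_normal((2, 4, 5))
weight = rng.standard_normal((3, 2, 3, 3))
out = deform_conv3x3(x, weight, np.zeros((4, 5, 9, 2)))
```

Production code would vectorize this; the loops only make the per-tap sampling explicit.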

The Transformer branch is carefully designed to maintain performance while ensuring efficiency. As shown in Formula (10), a 1 × 1 convolution first reduces the channel number to one-quarter of the original to lower computational complexity before subsequent processing.
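A rough multiply-accumulate (MAC) count shows why reducing the channel number to one quarter before the Transformer pays off. The sketch counts only the dominant matrix products of one single-head Transformer block: the Q, K, V, and output projections, the attention scores and weighted sum, and a feedforward network with an assumed expansion ratio of 4. It also counts the two 1 × 1 convolutions that reduce and restore the channels. Normalization, activations, and softmax are ignored. The 20 × 20 token grid (a stride-32 map of a 640 × 640 input) and the channel count of 256 are illustrative values, not figures reported in this paper.

```python
def transformer_block_macs(n_tokens: int, dim: int, ffn_ratio: int = 4) -> int:
    """Approximate MACs of one single-head Transformer block on n_tokens tokens of width dim."""
    projections = 4 * n_tokens * dim * dim        # Q, K, V and output projections
    attention = 2 * n_tokens * n_tokens * dim     # Q K^T scores and attention-weighted sum of V
    ffn = 2 * n_tokens * dim * (ffn_ratio * dim)  # two linear layers: dim -> 4*dim -> dim
    return projections + attention + ffn


def reduced_branch_macs(n_tokens: int, channels: int, reduction: int = 4) -> int:
    """Transformer on channels // reduction, plus the 1x1 convs that reduce and restore channels."""
    reduced = channels // reduction
    pointwise = 2 * n_tokens * channels * reduced  # C -> C/4 and C/4 -> C
    return pointwise + transformer_block_macs(n_tokens, reduced)


if __name__ == "__main__":
    n, c = 20 * 20, 256
    full = transformer_block_macs(n, c)
    reduced = reduced_branch_macs(n, c)
    print(f"full width: {full:,} MACs, reduced: {reduced:,} MACs, ratio ~{full / reduced:.1f}x")
```

Under these assumptions the reduced branch needs roughly 7.4 times fewer MACs. The attention term shrinks by a factor of 4 and the projection and feedforward terms by a factor of 16, while the two extra 1 × 1 convolutions add comparatively little.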

The lightweight Transformer block uses single-head attention instead of multi-head attention to reduce computational cost while preserving global modeling capability. The Transformer adopts a standard architecture comprising Layer Normalization, self-attention, and a GELU-activated feedforward network, but operates on the dimension-reduced features to improve computational efficiency. After Transformer processing, another 1 × 1 convolution restores the channel dimension to match the Deformable Convolution branch.
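The block described above can be sketched in pure Python as a pre-LayerNorm Transformer layer with one attention head. The pre-norm ordering, the exact (erf-based) GELU, and the absence of a separate output projection are assumptions made for brevity. The weight matrices are taken to be square (d × d) for Q, K, and V. All names are illustrative.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def layer_norm(v: Vector, eps: float = 1e-5) -> Vector:
    mu = sum(v) / len(v)
    var = sum((a - mu) ** 2 for a in v) / len(v)
    return [(a - mu) / math.sqrt(var + eps) for a in v]


def matvec(w: Matrix, v: Vector) -> Vector:
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def softmax(xs: Vector) -> Vector:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]  # subtract the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def gelu(x: float) -> float:
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def single_head_block(tokens: List[Vector], wq: Matrix, wk: Matrix, wv: Matrix,
                      w1: Matrix, w2: Matrix) -> List[Vector]:
    """x + Attn(LN(x)), then x + FFN(LN(x)) with GELU; wq, wk, wv are d x d (one head)."""
    d = len(tokens[0])
    normed = [layer_norm(t) for t in tokens]
    q = [matvec(wq, t) for t in normed]
    k = [matvec(wk, t) for t in normed]
    v = [matvec(wv, t) for t in normed]
    attended = []
    for i, qi in enumerate(q):
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)  # a single attention distribution per query token
        context = [sum(weights[j] * v[j][c] for j in range(len(v))) for c in range(d)]
        attended.append([x + y for x, y in zip(tokens[i], context)])
    out = []
    for t in attended:
        hidden = [gelu(h) for h in matvec(w1, layer_norm(t))]
        out.append([x + y for x, y in zip(t, matvec(w2, hidden))])
    return out


if __name__ == "__main__":
    rng = random.Random(0)

    def rand(rows: int, cols: int) -> Matrix:
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    d, hidden, n = 4, 8, 3
    tokens = rand(n, d)
    out = single_head_block(tokens, rand(d, d), rand(d, d), rand(d, d), rand(hidden, d), rand(d, hidden))
    print(len(out), len(out[0]))  # 3 tokens, each still of width 4
```

Because the block works on the channel-reduced tokens, d here corresponds to one quarter of the original channel count.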

A key innovation of MDN is its dynamic fusion mechanism, which uses learnable weights to combine features from the two branches. As shown in Formula (11), the weights are first normalized via Softmax to ensure a convex combination and are learned during training to automatically determine the optimal balance between the geometric features from deformable convolution and the contextual features from the Transformer. The fused features are further refined by a 3 × 3 convolutional layer with Batch Normalization and ReLU activation to enhance integration and produce the final output. The MDN module is positioned before the detection heads at multiple scales (P3, P4, P5) to provide enhanced features tailored specifically for small, medium, and large targets.
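The Softmax-normalized fusion can be sketched as follows. The two learnable logits pass through softmax, so the branch weights are positive and sum to 1, and the fused feature is a convex combination of the two branch outputs. The subsequent 3 × 3 convolution with Batch Normalization and ReLU is omitted. Feature maps are flattened to lists, and the function names are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]  # shift by the max for numerical stability
    total = sum(exps)
    return [e / total for e in exps]


def fuse_branches(dcn_feat: List[float], trans_feat: List[float], logits: List[float]) -> List[float]:
    """w_dcn * DCN + w_trans * Transformer, with (w_dcn, w_trans) = softmax(logits)."""
    w_dcn, w_trans = softmax(logits)
    return [w_dcn * a + w_trans * b for a, b in zip(dcn_feat, trans_feat)]


if __name__ == "__main__":
    print(fuse_branches([2.0, 4.0], [4.0, 8.0], [0.0, 0.0]))  # equal logits average the branches
```

Using softmax rather than unconstrained weights keeps the fused feature on the same scale as the branch outputs, whatever values the logits take during training.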

MDN features adaptive scale adjustment, dynamically allocating weights for each branch according to the feature scale (P3, P4, P5). For high-resolution P3 features, the Deformable Convolution branch is assigned a higher weight, focusing more on detailed features. For low-resolution P5 features, the Transformer branch is assigned a higher weight, emphasizing global context. For medium-resolution P4 features, the weights are balanced between the two branches.
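One way to encode a scale-dependent preference while keeping the weights learnable is to initialize each scale's fusion logits from a target ratio, because softmax(log r) = r for a normalized ratio r. This section does not say whether MDN initializes its weights this way or keeps them fixed, so treat `logits_from_ratio` as a hypothetical helper.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_from_ratio(w_dcn: float, w_trans: float) -> List[float]:
    """Initial logits whose softmax equals the normalized (w_dcn, w_trans) ratio."""
    if w_dcn <= 0 or w_trans <= 0:
        raise ValueError("branch weights must be positive")
    total = w_dcn + w_trans
    return [math.log(w_dcn / total), math.log(w_trans / total)]


if __name__ == "__main__":
    # Balanced P4 setting from the text; the P3 and P5 ratios would be set analogously.
    print(softmax(logits_from_ratio(0.5, 0.5)))  # [0.5, 0.5]
```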

3.5. HDPNet-YOLOv8n

This work integrates the three core modules, DIM, IAFN, and MDN, into the YOLOv8n framework using a synergistic strategy, as conceptually illustrated in Figure 6 (YOLOv8n structure) and Figure 7 (YOLOv8n-HDPNet structure).

The placement of each module is based on its specific function in addressing low-light and deformation problems, aiming to form a progressive processing pipeline from global environment perception to local feature refinement, and then to multi-scale geometric modeling.

The DIM is placed at the end of the Backbone. Features at this location, having undergone deep extraction, carry high-level semantic information with the largest receptive field, containing global contextual information. Since low light is a global degradation factor affecting the entire image, placing DIM here allows for the most effective encoding and compensation of the image’s illumination statistics, achieving global illumination normalization for the deep features. This provides a more illumination-robust feature foundation for subsequent modules.

The IAFN module is embedded within the Neck network, specifically after the fusion of the P4 and P5 feature layers. The primary responsibility of the neck network is multi-scale feature fusion, generating pyramid features rich in semantic and positional information. Introducing IAFN at this critical point aims to strengthen semantic alignment and information flow between different-scale feature maps through its lightweight Transformer-CNN interaction mechanism. Especially when handling parcel targets with significant scale variations, this module can optimize local features using global context, effectively mitigating feature confusion caused by occlusion and deformation.

The MDN module is deployed just before the detection Heads, covering all scales (P3, P4, P5). The detection heads are directly responsible for bounding box regression and classification, making the quality of their input features crucial. Given that MDN combines the geometric deformation modeling capability of deformable convolution with the long-range dependency capture capability of the Transformer, placing it here allows for final refinement of the features used for prediction. By adaptively adjusting the contribution weights of deformable convolution and the Transformer for different scales (P3 for small objects, P5 for large objects), MDN provides the most discriminative feature representations for targets of varying sizes.

As illustrated in Figure 7, through this carefully designed module integration strategy, the HDPNet framework introduces powerful perception capabilities while ensuring balanced computational efficiency, thereby significantly improving detection performance in low-light, multi-deformation scenarios and meeting real-time application requirements.

4. Experiments and Analysis

4.1. Experimental Environment and Hyperparameter Configuration

All experiments in this study were conducted on a computer running the Windows 11 operating system. The hardware configuration included a 13th Gen Intel Core i7-13650HX processor (Intel Corporation, Santa Clara, CA, USA) (2.60 GHz), 24 GB RAM, and an NVIDIA GeForce RTX 4060 GPU (NVIDIA Corporation, Santa Clara, CA, USA) (8 GB VRAM). The software environment was Python 3.8.20. Model training used Stochastic Gradient Descent (SGD) for weight optimization. Key hyperparameters were set as follows: 300 training epochs, a batch size of 16, 8 data-loading workers (num-workers), and an initial learning rate of 0.01. All input images were resized to 640 × 640. Metrics for model comparison included Precision (P), Recall (R), mAP@0.5, mAP@0.5:0.95, floating-point operations (FLOPs), model size (Params), and Frames Per Second (FPS).
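For reproduction, the hyperparameters above can be collected into a single configuration. The key names follow the Ultralytics training arguments (`epochs`, `batch`, `workers`, `lr0`, `imgsz`, `optimizer`). This section does not say that the authors trained with Ultralytics, so that mapping is an assumption, and the YAML file names in the comment are placeholders.

```python
# Hyperparameters from Section 4.1, using Ultralytics-style argument names (an assumption:
# the training framework is not named in this section).
TRAIN_CFG = {
    "epochs": 300,       # training epochs
    "batch": 16,         # batch size
    "workers": 8,        # data-loading workers (num-workers)
    "lr0": 0.01,         # initial learning rate
    "imgsz": 640,        # input size 640 x 640
    "optimizer": "SGD",  # stochastic gradient descent
}

# Hypothetical usage, not executed here; the model and dataset YAML names are placeholders:
#   from ultralytics import YOLO
#   YOLO("hdpnet-yolov8n.yaml").train(data="njpackage.yaml", **TRAIN_CFG)
```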

4.2. Dataset

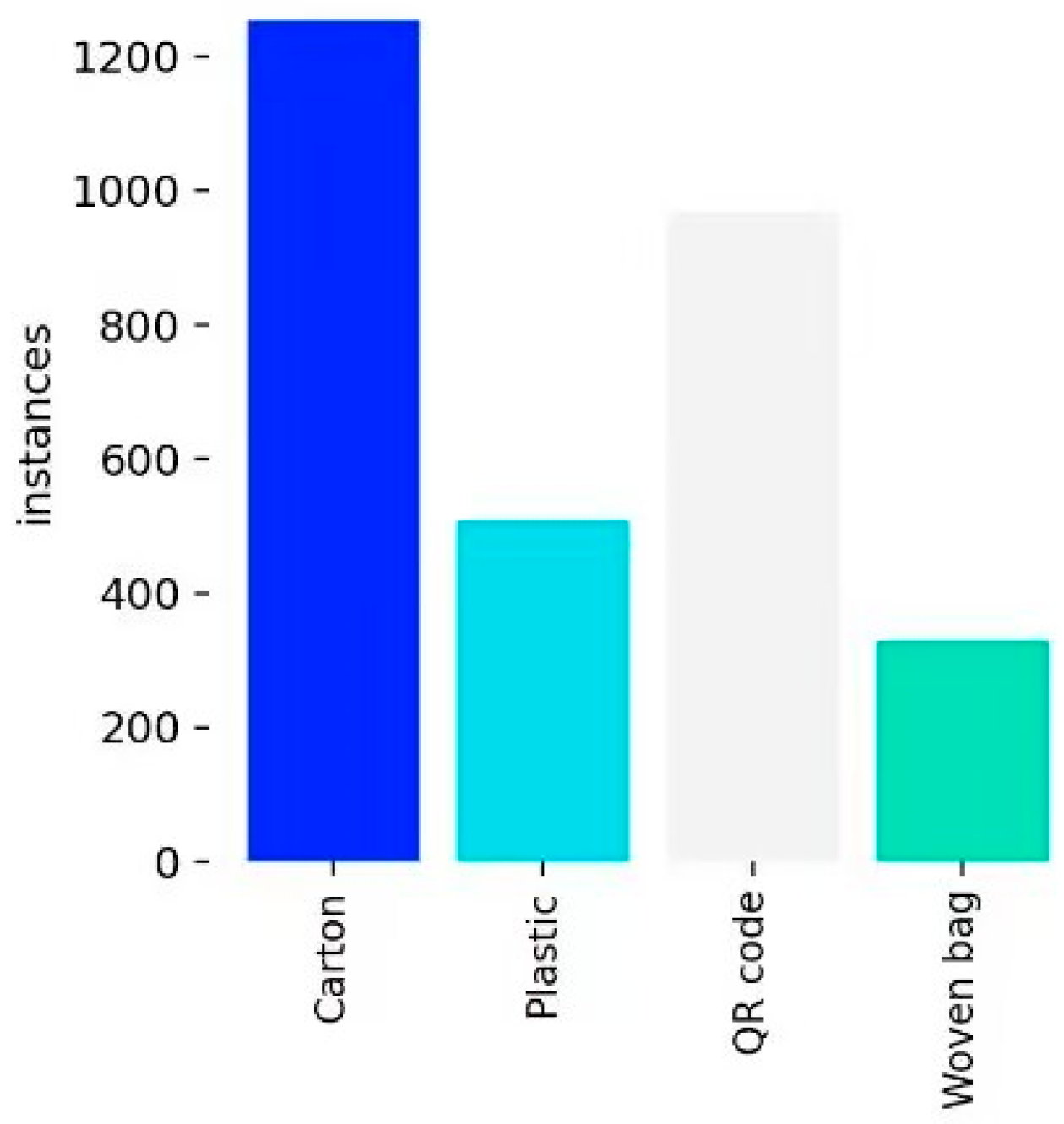

To address the challenges of object detection under real-world low-light conditions, we constructed a low-light industrial express parcel dataset named njpackage. The dataset was collected from actual logistics sorting environments and contains 2572 images covering four typical categories: Carton, Plastic, Woven Bag, and QR code. Data augmentation with rotation and color transformation was applied to improve model generalization. The dataset was split into training, validation, and test sets in a ratio of 0.78:0.14:0.08. As shown in Figure 8, the four categories contain 1489 (Carton), 590 (Plastic), 370 (Woven Bag), and 847 (QR code) instances.
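The ratio above does not directly give integer split sizes. The sketch shows one way to obtain them: largest-remainder rounding, so that the three counts sum exactly to the number of images. The rounding rule is our assumption, and the resulting counts (2006/360/206 for 2572 images) are illustrative, not the split sizes actually used.

```python
from typing import List


def split_counts(total: int, ratios: List[float]) -> List[int]:
    """Largest-remainder rounding so the split sizes sum exactly to total."""
    raw = [total * r for r in ratios]
    counts = [int(x) for x in raw]  # floor of each share
    remainder = total - sum(counts)
    by_fraction = sorted(range(len(raw)), key=lambda i: raw[i] - counts[i], reverse=True)
    for i in by_fraction[:remainder]:
        counts[i] += 1              # hand leftover images to the largest fractional parts
    return counts


if __name__ == "__main__":
    print(split_counts(2572, [0.78, 0.14, 0.08]))  # [2006, 360, 206]
```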

4.3. Evaluation Metrics

To comprehensively evaluate model performance, we used Precision, Recall, mAP@0.5, mAP@0.5:0.95, FPS, model size, and FLOPs as evaluation metrics. Precision is the proportion of samples predicted as positive that are actually positive. Recall is the proportion of actual positive samples that are correctly predicted as positive. Here, TP (True Positive) denotes a sample predicted as positive that is actually positive, FP (False Positive) denotes a sample predicted as positive that is actually negative, and FN (False Negative) denotes a sample predicted as negative that is actually positive.
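In formula form, these are the standard definitions of Precision and Recall:

$$
P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}
$$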

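mAP@0.5 is the mean, over all categories, of the per-category AP computed at an IoU threshold of 0.5. mAP@0.5:0.95 further averages mAP over ten IoU thresholds from 0.5 to 0.95 in steps of 0.05, and therefore also rewards precise localization.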
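The sketch below shows how AP and the two mAP variants are commonly computed for a single class. It uses greedy, score-ordered matching and all-point interpolation of the precision-recall curve (the PASCAL VOC 2010+ convention). Matching and interpolation rules differ slightly between toolkits, and the evaluator used in the paper is not specified, so these functions are illustrative. mAP over several categories is the mean of the per-class values.

```python
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds: Sequence[Tuple[float, Box]], gts: Sequence[Box], thr: float) -> float:
    """AP for one class: preds are (score, box); each ground truth can be matched once."""
    if not gts:
        return 0.0  # convention for classes without ground truth varies between toolkits
    ranked = sorted(preds, key=lambda p: p[0], reverse=True)
    matched = [False] * len(gts)
    recalls, precisions, tp = [], [], 0
    for rank, (_, box) in enumerate(ranked, start=1):
        overlaps = [iou(box, g) for g in gts]
        best = max(range(len(gts)), key=lambda j: overlaps[j])
        if overlaps[best] >= thr and not matched[best]:
            matched[best] = True
            tp += 1
        recalls.append(tp / len(gts))
        precisions.append(tp / rank)
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):  # make precision non-increasing in recall
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i] for i in range(1, len(mrec)))


def ap_50_95(preds: Sequence[Tuple[float, Box]], gts: Sequence[Box]) -> float:
    """Average AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [0.5 + 0.05 * i for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)
```

A detection with IoU 0.72 against its ground truth, for example, counts as correct at the five thresholds from 0.50 to 0.70 but not at 0.75 and above, so it contributes fully to mAP@0.5 but only half to mAP@0.5:0.95.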

4.4. Comparative Experiments

4.4.1. Comparison with Mainstream Models

To evaluate the effectiveness of the proposed HDPNet model, we conducted comparative experiments against current mainstream object detection algorithms. The comparison models included representative CNN-based and Transformer-based methods to ensure the breadth and representativeness of the comparison. The number of training epochs was set to 300 for all models to ensure full convergence and stable performance. The experimental results are shown in Table 1.

Our model achieved an mAP@0.5 of 0.866 on the njpackage dataset, which is 7.3%, 4.4%, 7.1%, 4.0%, and 0.4% higher than that of Faster R-CNN (ResNet-50), YOLOv8n, YOLOv9t, YOLOv10n, and YOLOv11n, respectively. All differences in this section are absolute, i.e., expressed in percentage points. The Precision of our model was 0.860, which is 8.7%, 8%, and 11.4% higher than that of YOLOv8n, YOLOv9t, and Faster R-CNN, respectively. In terms of Recall, our model improved by 1.9%, 4%, 7.3%, and 1.4% over YOLOv8n, YOLOv9t, YOLOv10n, and YOLOv11n, respectively.

The results also reveal a nuanced trade-off. While HDPNet edges out YOLOv11n on mAP@0.5 (0.866 vs. 0.862), it slightly lags behind on the more stringent mAP@0.5:0.95 metric (0.733 vs. 0.749). This suggests that our model excels at correct identification with moderate localization accuracy (IoU ≥ 0.5) but makes a minor compromise in the precise boundary regression required at higher IoU thresholds. We attribute this primarily to the design of the MDN and IAFN modules. The deformable convolutions in MDN adapt to object shapes and may prioritize capturing the overall object extent over fine-grained boundary details. Likewise, the feature fusion and interaction within IAFN, while highly effective for integrating multi-scale context, might introduce a slight smoothing effect that reduces localization sharpness at very high IoU levels. We regard this as a deliberate trade-off: clear gains in recall and competitive performance at the standard IoU = 0.5 threshold are obtained at the cost of a minor decrease in the strictest localization metric relative to the best performer.

Our model’s FPS is lower than that of YOLOv8n, YOLOv9t, YOLOv10n, and YOLOv11n, and its model size is larger. This is because the MDN and IAFN modules incorporate Transformer structures. The cost of self-attention grows quadratically with the number of tokens, which lowers FPS, and the additional attention projections and feedforward layers add parameters, which increases model size. Nevertheless, the model still meets the requirements for real-time parcel detection. RT-DETR achieved an mAP@0.5 of 0.874 on the njpackage dataset, only 0.8% higher than our model, but its model size is considerably larger, which increases inference time and makes it less suitable for real-time detection and deployment on terminal devices. In summary, our model achieves a good balance between detection accuracy and lightweight design, demonstrating clear overall advantages.

4.4.2. Generality Verification Experiment

To further verify the generality and portability of HDPNet, we integrated it into several classic YOLO-series models (YOLOv9t, YOLOv10n, YOLOv11n, and YOLOv12n) and compared their detection performance with that of the original models on the njpackage dataset. In this experiment, the number of training epochs was set to 100. The experimental results are shown in Table 2.

As shown in Table 2, adding HDPNet to YOLOv9t, YOLOv10n, YOLOv11n, and YOLOv12n improved mAP@0.5 by 2.4%, 0.5%, 1.3%, and 1.2%, respectively, compared with the original models. This indicates that the HDPNet modules improve parcel recognition in low-light environments across different YOLO baselines, confirming their good generality.

4.4.3. Generalization Verification Experiment on PASCAL VOC Dataset

To systematically evaluate the generalization ability of our model on general object detection tasks, we selected the PASCAL VOC dataset [72] and compared the detection performance of YOLOv8n, YOLOv9t, YOLOv10n, YOLOv11n, and our model on this dataset. The number of training epochs was set to 300 for each experimental group.

The experimental results are shown in Table 3. Our model improved mAP@0.5 by 6.2%, 5.3%, 6.3%, and 5.0% compared to YOLOv8n, YOLOv9t, YOLOv10n, and YOLOv11n, respectively, and its Precision and Recall are also higher than those of these models. These results indicate that our model not only performs well in low-light scenes but also generalizes to diverse detection environments and target categories.

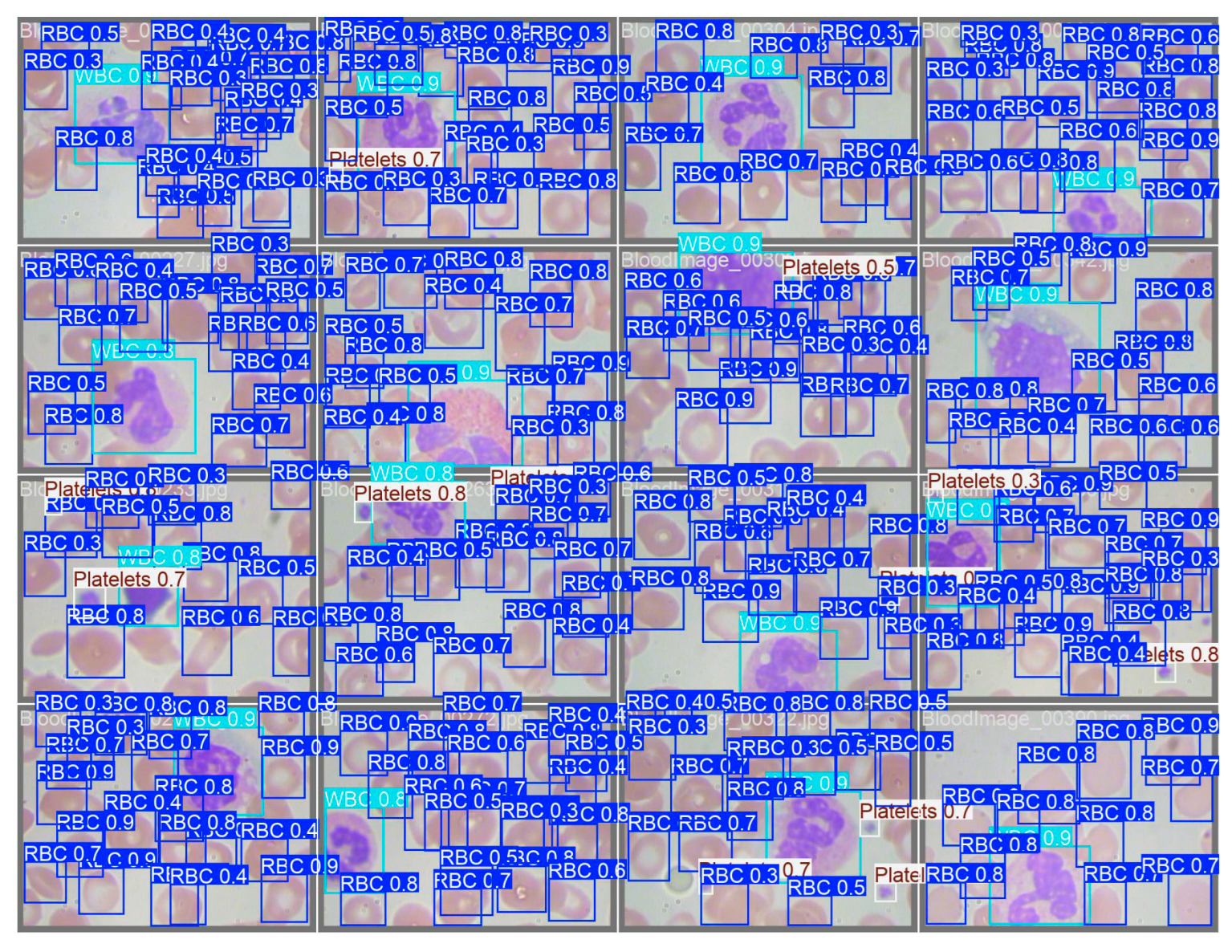

4.4.4. Comparative Experiment on BCCD Dataset

We also conducted comparative experiments with other object detection models on the BCCD dataset, which contains red blood cells, white blood cells, and platelets, all of which are small objects. The number of training epochs was set to 300. The experimental results are shown in Table 4.

As shown in Table 4, the mAP@0.5 of our model on the BCCD dataset is 6.2%, 3.7%, 2.8%, 4.7%, 2.5%, 2.3%, and 1.3% higher than that of RT-DETR, YOLOv5, YOLOv7, YOLOv8n, YOLOv9t, ADA-YOLO, and CAF-YOLO, respectively. This result indicates that our model also achieves high accuracy in small object detection and generalizes well.

The detection results of our model on the validation set of the BCCD dataset are shown in Figure 9. Our model successfully identified all categories in the BCCD dataset, and the predicted bounding boxes fit the actual targets closely. Even in scenes with densely distributed cells, it accurately detects the smaller cells, further illustrating its advantages in small object detection.

4.5. Ablation Experiments

4.5.1. Ablation Experiments Conducted on HDPNet

To verify the effectiveness of HDPNet, we conducted ablation studies on the njpackage dataset with YOLOv8n as the baseline model. Under the same experimental settings, we progressively added the proposed DIM, IAFN, and MDN modules and compared model performance before and after each improvement. The number of training epochs was set to 300.

The experimental results are shown in Table 5. Each of the proposed modules improved the parcel detection ability of YOLOv8n, although the size of the improvement varied.

Adding DIM to the YOLOv8n backbone, immediately after the SPPF module, increased mAP@0.5 by 1.5%, mAP@0.5:0.95 by 2.5%, and Precision by 11.1% compared to YOLOv8n. These results indicate that the Dynamic Illumination-aware Attention Module (DIM) effectively improves the detection of express parcels in low-light environments. DIM mitigates feature degradation under low-light conditions through its triple-attention mechanism, enabling better parcel recognition in such environments while maintaining both efficiency and high accuracy.

Adding IAFN to the YOLOv8n neck, after the C2f layer, increased mAP@0.5 by 2.1%, mAP@0.5:0.95 by 1.2%, Precision by 2.7%, and Recall by 2.6% compared to the original YOLOv8n. These results show that the asymmetric cross-attention design of the Interactive Attention Fusion Network (IAFN) enables the model to enhance local details with global context, while its multi-head, batch-first implementation helps contain the added computation. Nevertheless, the model’s FPS decreases significantly. This is attributed to the Transformer module within IAFN: the cost of self-attention grows quadratically with the number of tokens, leading to a notable drop in FPS.

Combining DIM and IAFN increased mAP@0.5 by 2.5%, mAP@0.5:0.95 by 2.7%, Precision by 3.7%, and Recall by 2% compared to the original YOLOv8n. The FPS decreased slightly but remained at about 106, fully meeting the requirements of parcel object detection.

Replacing the C2f in the YOLOv8n neck with the MDN module increased mAP@0.5 by 3.8%, mAP@0.5:0.95 by 4%, Precision by 5.4%, and Recall by 4.1% compared to the original YOLOv8n. This indicates that the combination of deformable convolution and a Transformer capturing long-range dependencies in the Multi-branch Decomposition Network (MDN) can identify irregularly shaped parcels with high accuracy. However, the model size increases noticeably and FPS decreases, because MDN contains both deformable convolution and a Transformer. Deformable convolution requires an extra offset-prediction convolution and irregular, bilinearly interpolated sampling, and self-attention is also computationally expensive; together, they substantially increase the computational load and lower FPS. The offset-prediction layers add parameters, and the Transformer contributes further parameters, which together enlarge the model. Despite this, the FPS remains at 45.0, satisfying the real-time requirements of express parcel detection.

Adding both DIM and MDN to YOLOv8n increased mAP@0.5 by 2.6%, mAP@0.5:0.95 by 4.7%, and Precision by 5.5% compared to YOLOv8n, while Recall improved slightly.

Adding both IAFN and MDN to YOLOv8n increased mAP@0.5 by 3.8%, mAP@0.5:0.95 by 4%, Precision by 5.4%, and Recall by 4.1% compared to the original YOLOv8n. This indicates that combining these two modules is effective for detecting parcels in low-light environments.

Integrating all three modules into YOLOv8n increased mAP@0.5 by 4.4%, mAP@0.5:0.95 by 4.2%, Precision by 8.7%, and Recall by 1.9% compared to the original YOLOv8n. This full model achieves the highest mAP@0.5 among the ablation configurations, indicating that integrating all three modules into the YOLOv8n framework yields the best parcel detection performance on this primary metric.

In all of the above configurations, adding the MDN module increased the model size relative to the corresponding model without it, because the two convolutional layers of the deformable convolution branch and the Transformer branch in MDN add parameters.

4.5.2. MDN Module Ablation Experiment

To verify the effectiveness of the MDN module and determine a suitable number and placement of MDN modules, we progressively added MDN modules to YOLOv8n at different positions, without the DIM and IAFN modules, and conducted an ablation experiment. In Table 6, Re-one indicates that one MDN module was introduced into the YOLOv8n model, replacing the C2f structure at the 18th layer; Re-two indicates that two MDN modules were introduced, replacing the C2f at the 18th and 21st layers, respectively; and Re-three introduces three MDN modules, replacing the C2f at the 15th, 18th, and 21st layers. In contrast, Af-one indicates that one MDN module was added after the C2f at the 18th layer of the YOLOv8n model; Af-two indicates that two MDN modules were added after the C2f at the 18th and 22nd layers, respectively; and Af-three indicates that three MDN modules were added after the C2f at the 15th, 19th, and 23rd layers. The experiments used the njpackage dataset with 100 training epochs.

As shown in Table 6, with one MDN, Af-one improved mAP@0.5 by 1% over Re-one. With two MDNs, Af-two improved mAP@0.5 by 0.6% over Re-two. With three MDNs, Af-three reached an mAP@0.5 of 0.786, which is 2.2% higher than Re-three. In Table 6, the highest mAP@0.5 was obtained with Af-three. This experiment supports the chosen number and position of MDN modules: when MDN is added after the C2f at layers 15, 19, and 23 of YOLOv8n, the model achieves both accurate detection and real-time performance.

4.5.3. DIM Attention Branch Weight Comparison Experiment

To evaluate the relative importance of the Spatial, Channel, and Illumination attention branches in DIM, we introduced only DIM into the YOLOv8n model and conducted comparative experiments by varying the weighting coefficients of the three branches’ output features, with the weights constrained to sum to 1. The experiments were conducted on the njpackage dataset for 100 epochs, and the results are presented in Table 7.

The results in Table 7 show that model performance varies significantly with the weighting ratios of the spatial, channel, and illumination attention branches. With a Spatial:Channel:Illumination ratio of 0.35:0.2:0.45, the model achieves the best overall detection performance, with the highest mAP@0.5 (0.780) and mAP@0.5:0.95 (0.681). This configuration also yields the highest recall (0.779) among all experiments, indicating that it most effectively reduces missed detections. This result highlights the importance of assigning a higher weight to the illumination attention branch, suggesting that handling illumination variation is key to model robustness on our task and dataset. We therefore adopt 0.35 (Spatial), 0.2 (Channel), and 0.45 (Illumination) as the optimal DIM configuration.
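The chosen weighting can be sketched as a weighted sum of the three branch outputs. That the weights multiply the branch outputs before they are summed is our reading of the experiment, not a detail stated here. The branch outputs are flattened feature lists, and the function name is hypothetical.

```python
from typing import Dict, List

DIM_BRANCH_WEIGHTS: Dict[str, float] = {"spatial": 0.35, "channel": 0.20, "illumination": 0.45}


def combine_dim_branches(spatial: List[float], channel: List[float], illumination: List[float],
                         weights: Dict[str, float] = DIM_BRANCH_WEIGHTS) -> List[float]:
    """Weighted sum of the three attention-branch outputs; the weights must sum to 1."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("branch weights must sum to 1")
    ws, wc, wi = weights["spatial"], weights["channel"], weights["illumination"]
    return [ws * s + wc * c + wi * i for s, c, i in zip(spatial, channel, illumination)]


if __name__ == "__main__":
    print(combine_dim_branches([1.0, 0.0], [0.0, 1.0], [0.0, 0.0]))  # approximately [0.35, 0.2]
```

Constraining the weights to sum to 1 keeps the combined feature on the same scale as each branch, so only the relative emphasis between branches changes across the configurations in Table 7.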

4.5.4. MDN Dynamic Weight Comparison Experiment

To verify the choice of the dual-branch weight coefficients in MDN, we added only the MDN module to YOLOv8n and varied the weight coefficients of its deformable convolution branch and Transformer branch. The experiments used the njpackage dataset with 100 training epochs. The results are shown in Table 8, where P3, P4, and P5 denote the MDN modules preceding the three detection heads.

The P3 detection head has the smallest receptive field and specializes in small objects; the P4 head integrates rich semantic and detailed information and is primarily responsible for medium-sized objects; and the P5 head has the largest receptive field and is best suited to large objects. The model achieves its highest mAP@0.5 of 0.792 when the weight ratio of the deformable convolution branch to the Transformer branch is 0.4:0.6 at P3, 0.5:0.5 at P4, and 0.6:0.4 at P5. In principle, deformable convolution can dynamically adjust the shape and size of its receptive field by learning offsets. This allows it to adapt to the size of small targets, focus accurately on small target regions, and thus extract their features effectively, so a higher weight on the deformable convolution branch yields better detection of small targets such as QR codes. The Transformer, with its strong feature fusion capability, can integrate features across scales and fully exploit the feature information of large objects, so a higher weight on the Transformer branch yields better detection of large objects such as cartons. Under these experimental conditions, this ratio configuration allows the MDN module to make the best use of both branches.
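The best-performing configuration in Table 8 can be written as a fixed per-scale lookup. This sketch assumes the ratios act as fixed coefficients in a weighted sum of the two branch outputs, which is how we read the experiment. It does not necessarily reflect how the learnable weights are parameterized in the final model, and `fuse_at_scale` is a hypothetical name.

```python
from typing import Dict, List, Tuple

# (deformable-convolution weight, Transformer weight) per detection scale, as reported for Table 8.
MDN_SCALE_WEIGHTS: Dict[str, Tuple[float, float]] = {
    "P3": (0.4, 0.6),
    "P4": (0.5, 0.5),
    "P5": (0.6, 0.4),
}


def fuse_at_scale(scale: str, dcn_feat: List[float], trans_feat: List[float]) -> List[float]:
    """Weighted sum of the two MDN branch outputs using the ratio chosen for this scale."""
    w_dcn, w_trans = MDN_SCALE_WEIGHTS[scale]
    return [w_dcn * a + w_trans * b for a, b in zip(dcn_feat, trans_feat)]


if __name__ == "__main__":
    for scale in ("P3", "P4", "P5"):
        print(scale, fuse_at_scale(scale, [1.0], [0.0]))  # shows the DCN share at each scale
```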

4.5.5. Five-Fold Cross-Validation

To make the experimental findings more rigorous, we conducted five-fold cross-validation on the njpackage dataset, comparing our model with YOLOv8n, with 300 training epochs. The detailed results are shown in Table 9.

As shown in Table 9, our model achieves a 6.1% higher mAP@0.5 and a 4.1% higher mAP@0.5:0.95 than YOLOv8n on the njpackage dataset. These results further demonstrate the performance advantage of our model and support the reliability of the experimental data.
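This section does not describe how the five folds were formed. As an assumption, the sketch below shuffles image indices with a fixed seed and partitions them into five near-equal folds, each serving once as the validation fold. `k_fold_indices` and `train_val_pairs` are hypothetical helpers, and 2572 is used only as an example size.

```python
import random
from typing import Iterator, List, Tuple


def k_fold_indices(n: int, k: int = 5, seed: int = 0) -> List[List[int]]:
    """Shuffle indices 0..n-1 and split them into k folds whose sizes differ by at most one."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    base, extra = divmod(n, k)
    folds, start = [], 0
    for f in range(k):
        size = base + (1 if f < extra else 0)
        folds.append(idx[start:start + size])
        start += size
    return folds


def train_val_pairs(n: int, k: int = 5, seed: int = 0) -> Iterator[Tuple[List[int], List[int]]]:
    """Yield (train, val) index lists; each fold is the validation set exactly once."""
    folds = k_fold_indices(n, k, seed)
    for f in range(k):
        train = [i for g, fold in enumerate(folds) if g != f for i in fold]
        yield train, folds[f]


if __name__ == "__main__":
    for train, val in train_val_pairs(2572):
        print(len(train), len(val))
```

Reporting the mean (and ideally the spread) of the metric over the five validation folds reduces the dependence of the comparison on any single data split.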

4.5.6. Comparative Experiments of Different Models on ExDark Dataset

To validate the generalization capability of our model in low-light scenarios, we conducted comparative experiments on the ExDark dataset against YOLOv8n, YOLOv9t, and YOLOv10n. All models were pre-trained on the PASCAL VOC dataset for 300 epochs and then fine-tuned on ExDark for 50 epochs. The experimental results are shown in Table 10.

As shown in Table 10, our model achieves the highest mAP@0.5 among the compared models on the ExDark dataset, exceeding YOLOv8n, YOLOv9t, and YOLOv10n by 2.9%, 4.1%, and 11.1%, respectively. For mAP@0.5:0.95, our model outperforms YOLOv8n and YOLOv10n by 0.4% and 4.8%, but falls 2.6% below YOLOv9t. We attribute this discrepancy to differences in design priorities: our model places greater emphasis on classification confidence, enabling more reliable classification decisions at the lower IoU threshold (0.5), while showing limitations in bounding box regression precision under higher IoU requirements. In contrast, YOLOv9t appears to incorporate a more refined bounding box regression design that maintains relatively stable localization performance across IoU thresholds, although its classification confidence at the lower threshold appears less reliable than that of our approach.

4.6. Visualization Analysis

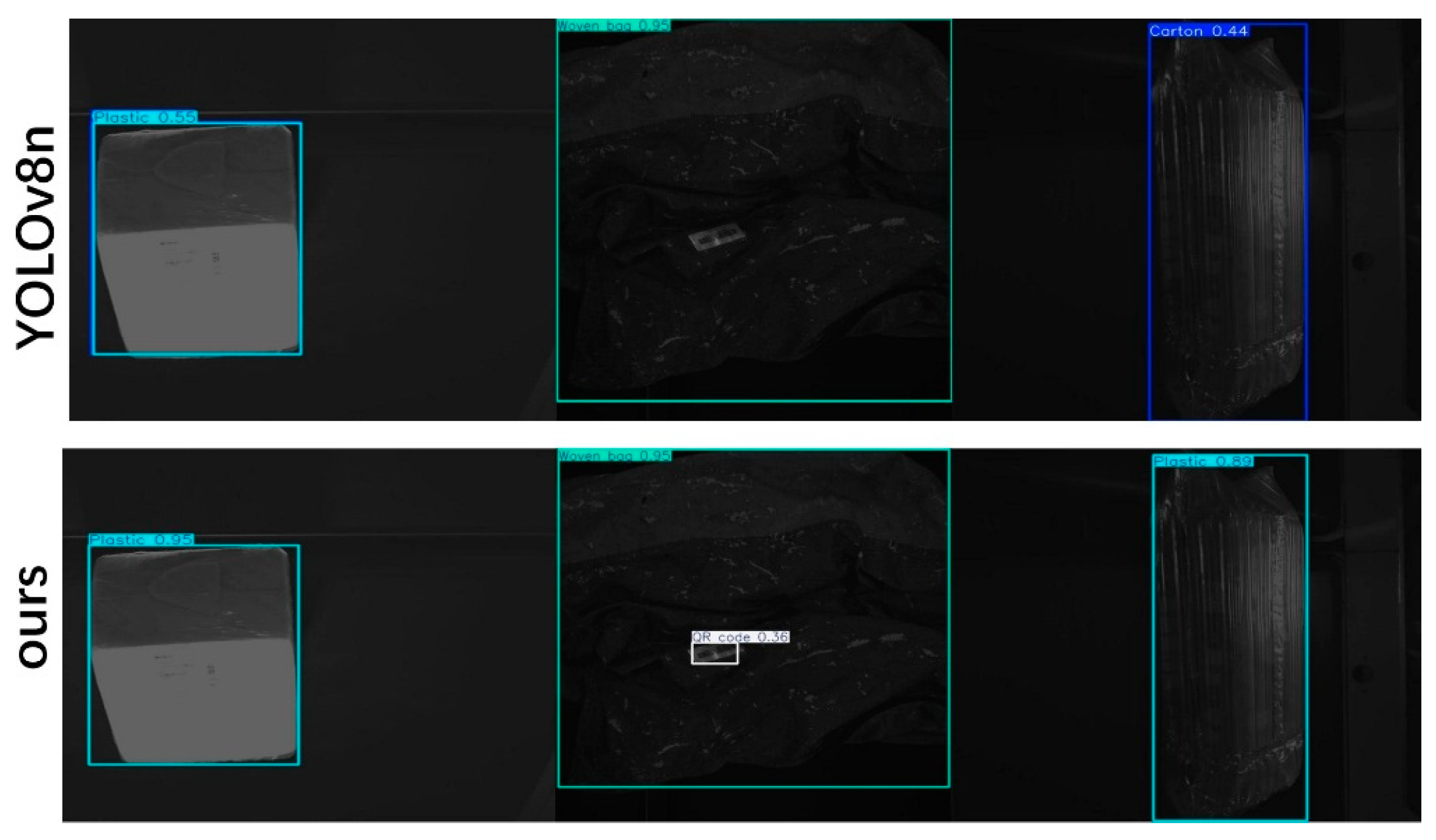

Figure 10 shows the detection performance comparison between our model and the baseline YOLOv8n on the njpackage dataset. In each set of images, the top shows the detection result of YOLOv8n and the bottom shows that of our model (HDPNet), providing a visual comparison for three types of targets: Plastic, Woven Bag, and QR code. In the Plastic detection on the left, both models accurately locate and identify the target, but the confidence score of our model is significantly higher than that of YOLOv8n, reflecting stronger feature certainty. In the Woven Bag detection in the middle, both models perform stably and detect the target correctly. In particular, in the QR code detection on the right, where the target is small and its texture features are weak, our model still detects it, albeit with relatively low confidence, whereas YOLOv8n misses it entirely. Furthermore, in another set of Plastic samples, our model accurately identified the target, while YOLOv8n misclassified it as Carton. These comparisons indicate that our model exhibits better robustness and discriminative ability for irregularly shaped targets, small targets, and low-light conditions.

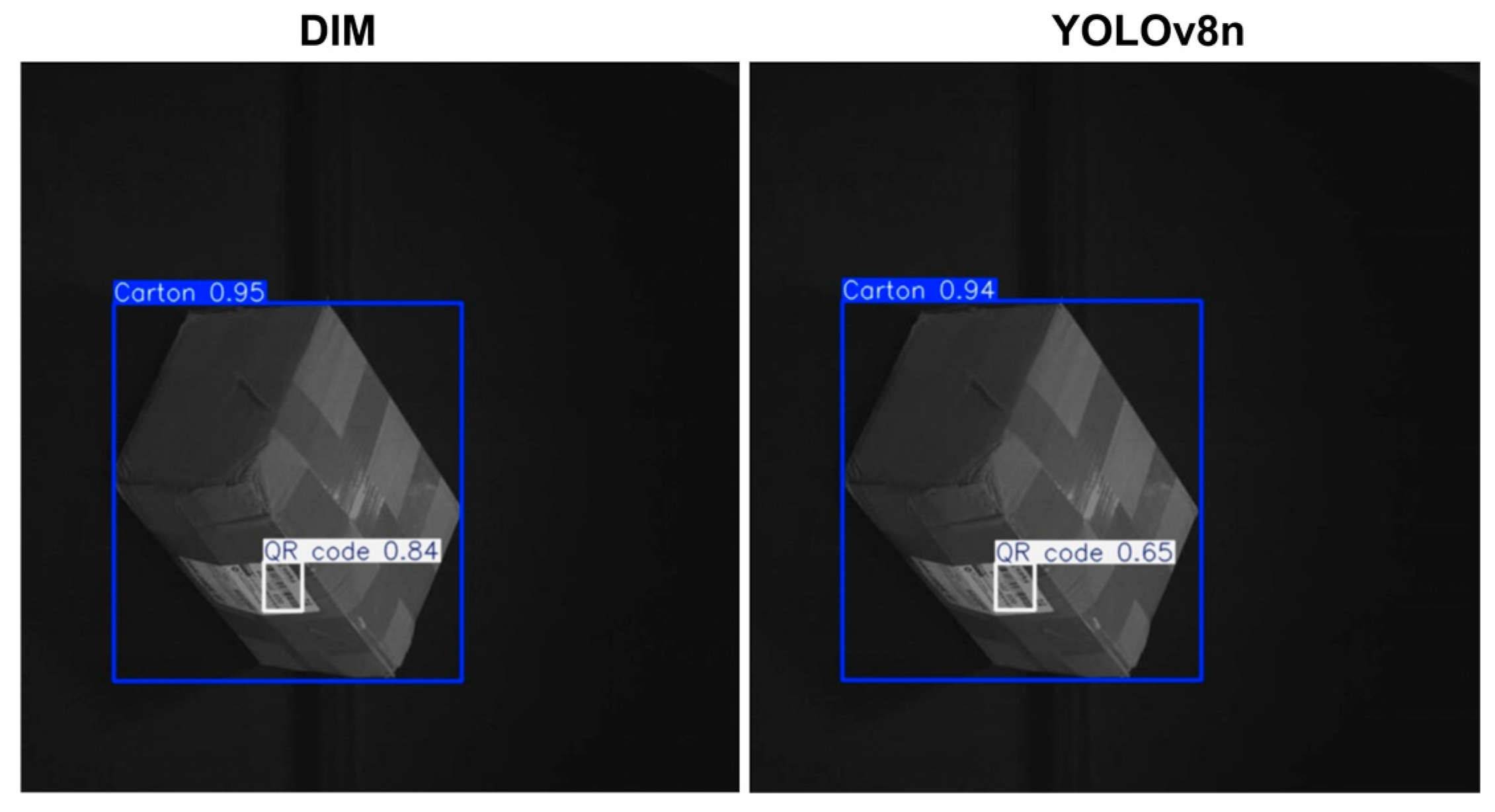

The visualization results in Figure 11 demonstrate the robustness of the improved algorithm in handling complex targets under low-light conditions. Although both YOLOv8n and the DIM-enhanced model successfully identified the carton and the QR code, the latter showed significantly higher confidence (0.84 vs. 0.65) on the subtle QR code target, indicating more accurate and stable perception. This advantage stems from the effective compensation of imaging conditions by the Dynamic Illumination-aware Attention Module, which preserves surface pattern details even in dark environments.

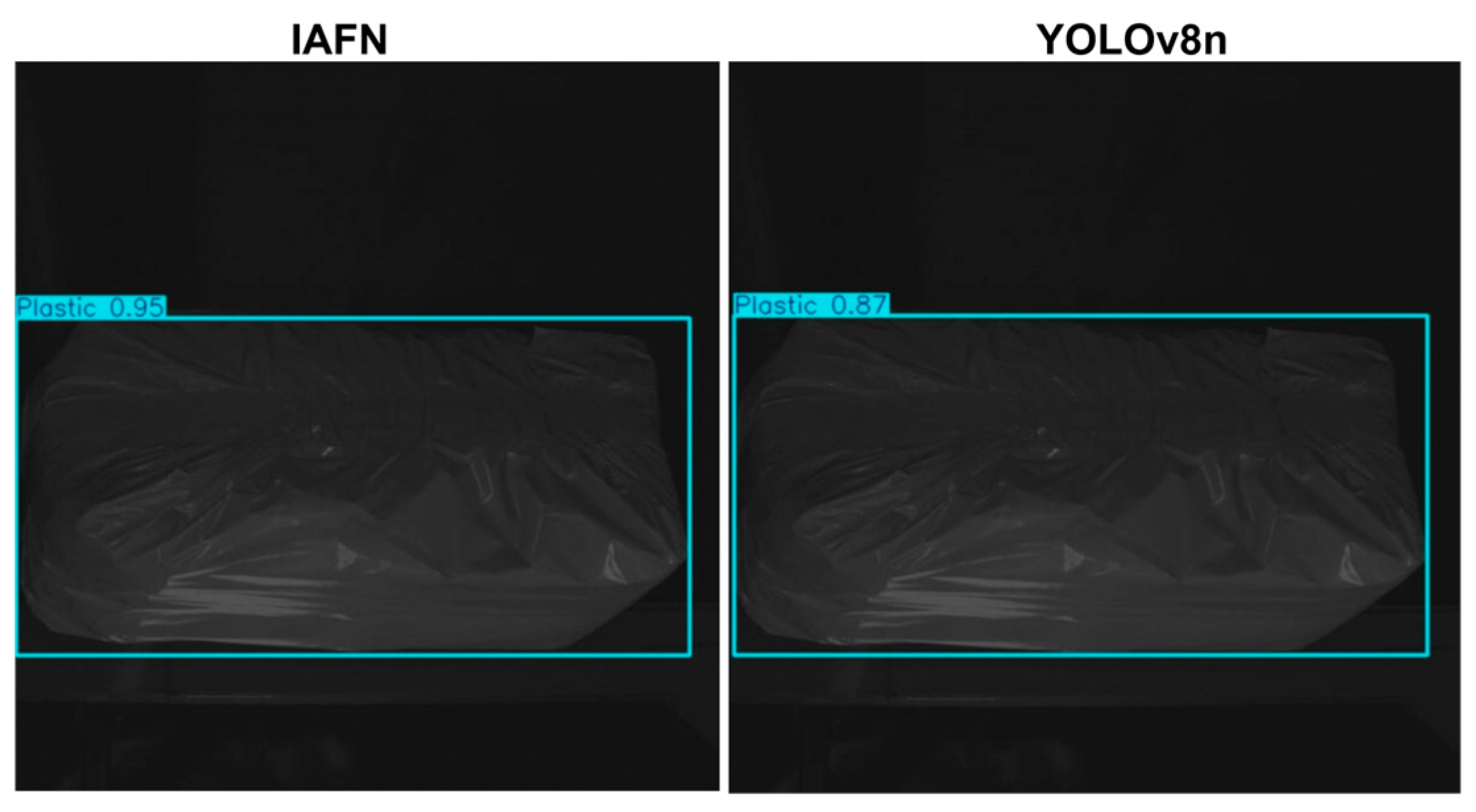

Figure 12 intuitively demonstrates the effectiveness of the Interactive Attention Fusion Network (IAFN) in improving detection confidence. For the same plastic product, the IAFN model completed identification with a confidence of 0.95, significantly higher than the 0.87 of the YOLOv8n baseline. This improvement mainly comes from the core mechanism of the IAFN module, which deeply fuses semantic features from different levels of the network through interaction, complementing the detailed texture information from lower layers with the category semantics from higher layers and enabling the model to more comprehensively “understand” the essential characteristics of the target. Therefore, when plastic materials change in appearance due to reflections, wrinkles, etc., the IAFN-enhanced model exhibits stronger feature robustness, and its judgments are more decisive and accurate, reflecting the advantages of this module in complex feature extraction and fusion.

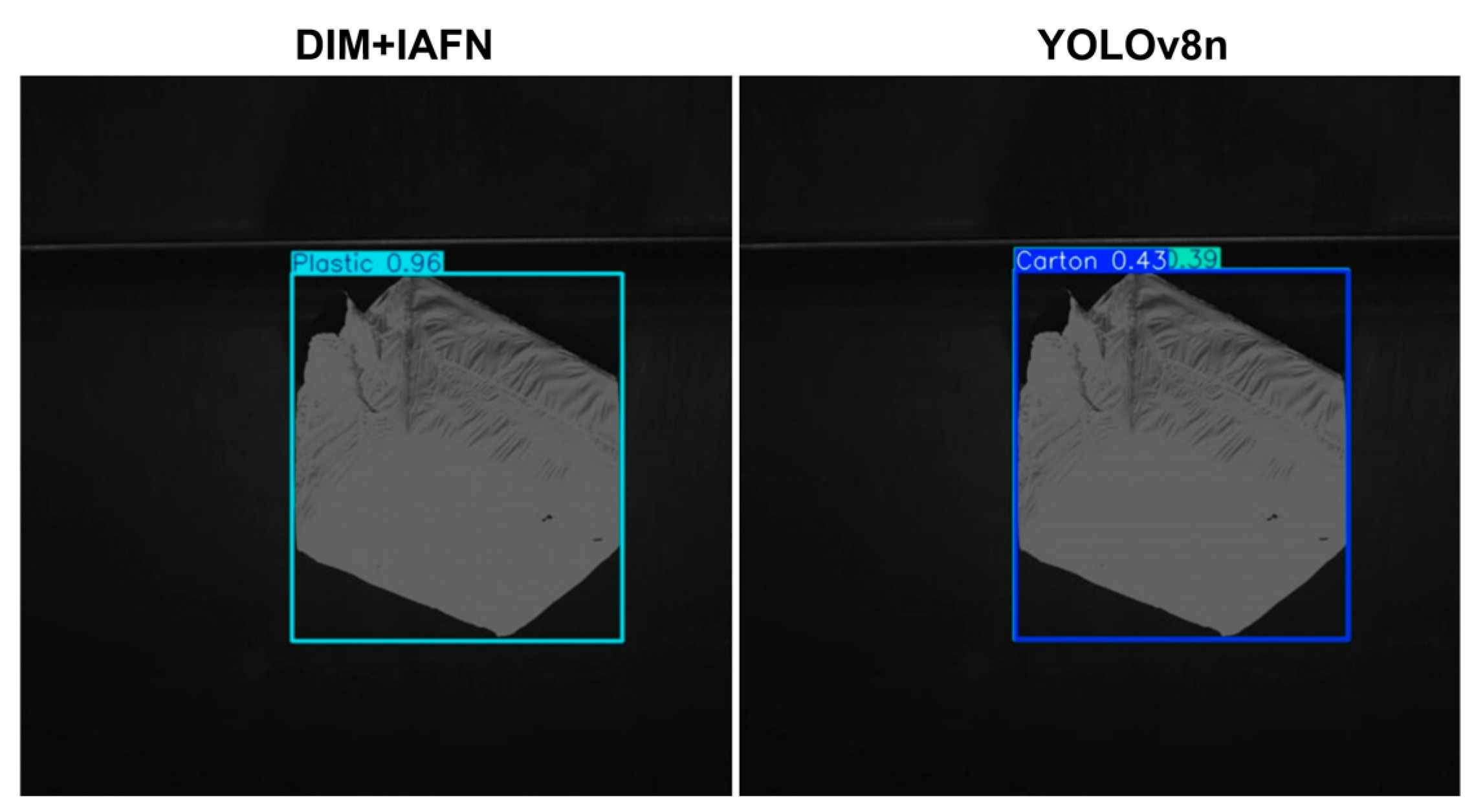

In Figure 13, the model integrating our DIM and IAFN modules (left) correctly identifies “Plastic” with a confidence of 0.96, while YOLOv8n (right) misclassifies it as “Carton” with a much lower confidence of 0.43. First, the Dynamic Illumination-aware Attention Module (DIM) adapts well to the rough texture of object surfaces, enabling it to capture the essential features of plastic material and reduce interference from light and shadow. Second, the Interactive Attention Fusion Network (IAFN) integrates semantic information from different levels, strengthening a unified understanding of the object’s global form and local details and thereby improving classification confidence and accuracy. Overall, the collaboration of these modules improves the model’s robustness in complex scenes and corrects the misclassification caused by inaccurate texture and shape judgment in the baseline model.

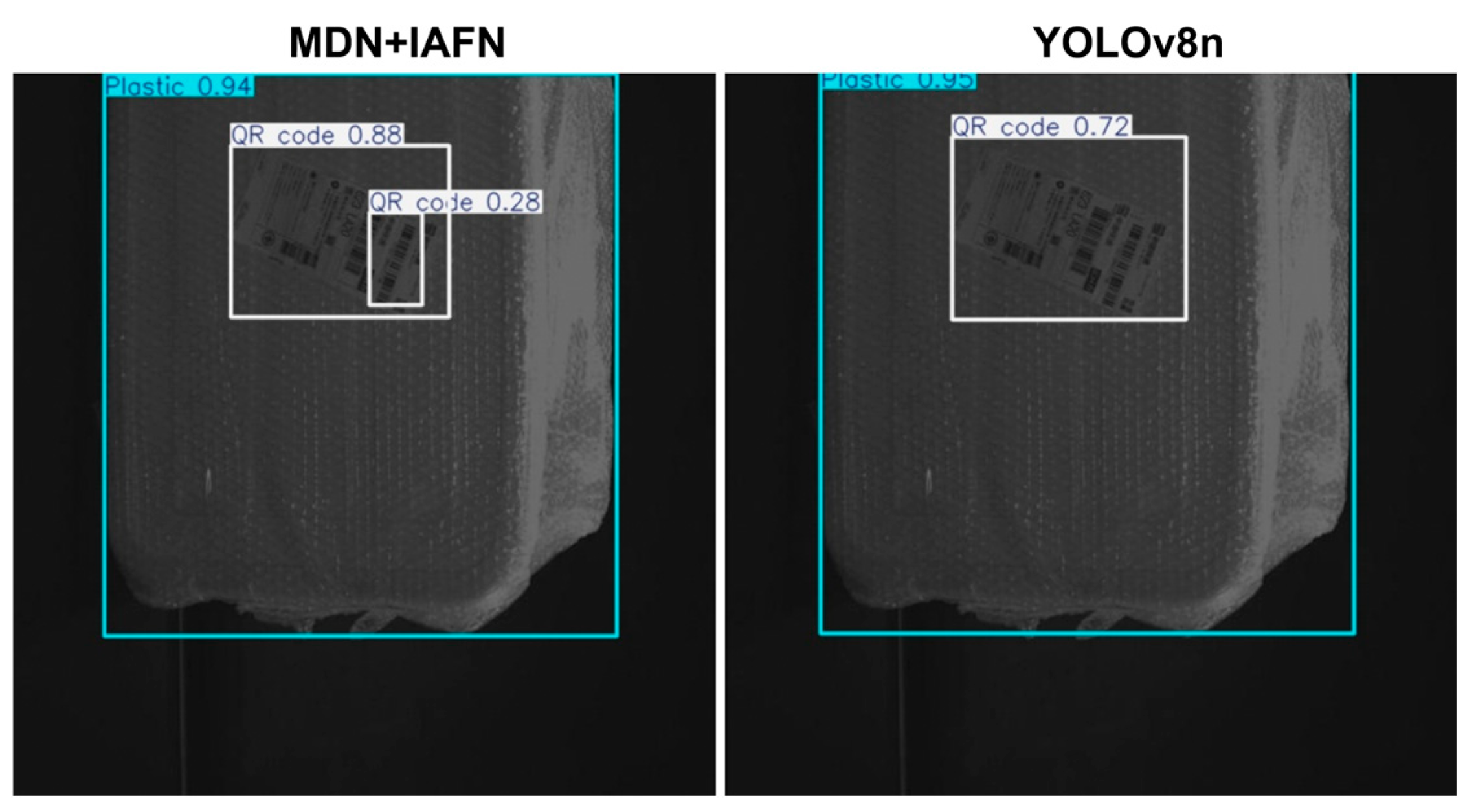

Figure 14 shows the multi-target detection advantage brought by the collaboration of the MDN and IAFN modules. Although both the MDN+IAFN model and YOLOv8n misidentified the order label as a QR code, the MDN+IAFN model additionally detected the QR code inside it, albeit with a low confidence score (0.28). This difference highlights the multi-scale perception capability of the MDN module, whose deformable convolutional structure improves adaptability to targets of different sizes and angles and enables the identification of dense QR codes; meanwhile, the IAFN module strengthens the extraction and use of subtle texture features by deeply fusing features from different levels, making even low-saliency targets perceivable. This example suggests that the MDN+IAFN combination improves the model’s recall and detail-capture ability in complex scenes.

Figure 15 shows the performance advantage of the model integrating DIM, IAFN, and MDN over the baseline YOLOv8n in low-light environments. Although both models detected the “Carton” and the “QR code” inside it, the improved model’s confidence in QR code recognition (0.64) was significantly higher than that of YOLOv8n (0.36). This reflects the synergy of the three modules: the Dynamic Illumination-aware Attention Module (DIM) improves the model’s adaptation to dark images and enhances target feature extraction under low illumination; the Interactive Attention Fusion Network (IAFN) strengthens the perception of small-target details such as the “QR code” by efficiently integrating semantic information from different levels; and the Multi-branch Decomposition Network (MDN) likely allows the convolutional receptive field to fit the target shape more flexibly, achieving more stable and accurate localization under complex lighting. Overall, these modules work together to improve the model’s robustness under challenging lighting conditions and the reliability of small target detection.

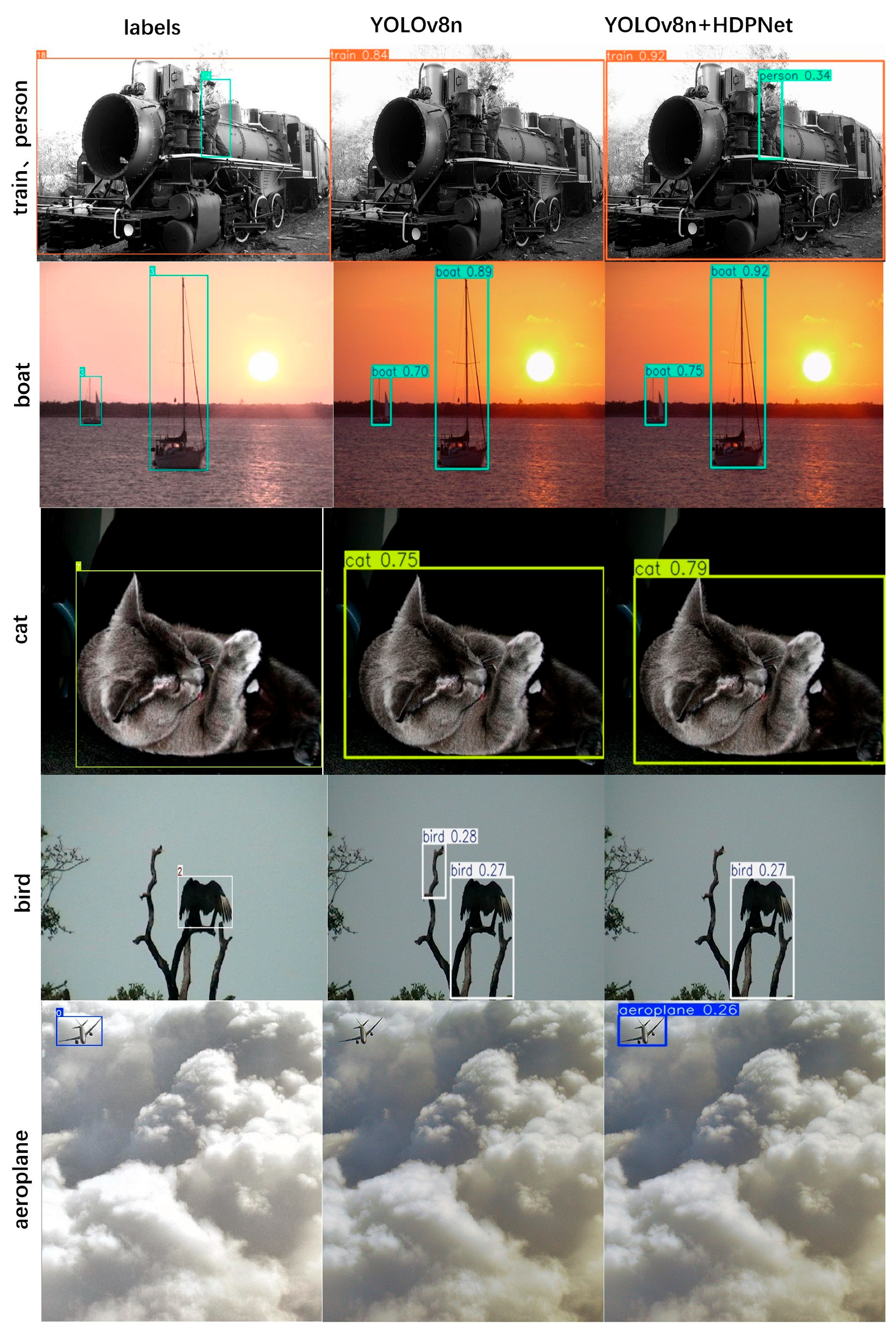

Figure 16 further compares the overall performance of our model and YOLOv8n on the public PASCAL VOC dataset. In each comparison group, the left image shows the ground-truth labels, the middle image shows the YOLOv8n detections, and the right image shows the detections of our model. Overall, our model assigns higher confidence than YOLOv8n across all categories, which supports its good generalization ability.

In the first comparison group, Train and Person have similar colors, so their visual features are partly alike. YOLOv8n failed to detect the Person target. Our model detected it and also correctly identified the Train category, although its confidence still leaves room for improvement. YOLOv8n performs well in general object detection tasks, but it still has clear shortcomings with small objects and with targets that closely resemble the background. By introducing a multi-modal dynamic perception mechanism, our model detects more stably and achieves a higher recall rate in these challenging scenarios.

In the second comparison group, both models detected the ship, but our model's confidence is higher. In the third group, both models correctly identified the cat, again with a higher confidence score for our model. In the fourth group, both models identified the bird, although both predicted boxes deviate slightly from the label box. However, YOLOv8n misidentified a branch as a bird, producing a false positive that our model avoided. In the fifth group, YOLOv8n missed the airplane. Our model detected it, and although the confidence is low, it still outperforms YOLOv8n.
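The per-group observations above depend on how detections are matched to ground truth: a box that deviates slightly from the label, a branch counted as a false positive, and a missed airplane. The sketch below assumes a common rule that many evaluators use in similar forms. Detections are processed in descending confidence order. Each one counts as a true positive if its IoU with a not-yet-matched ground-truth box of the same class is at least 0.5; otherwise it is a false positive. The function names are illustrative and are not taken from the authors' code.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection-over-Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(dets: List[Tuple[Box, float]], gts: List[Box],
                     thr: float = 0.5) -> Tuple[List[bool], int]:
    """Greedily match (box, score) detections to ground-truth boxes of one class.

    Returns (tp_flags, num_missed): tp_flags[i] refers to the i-th detection in
    descending score order; num_missed counts ground-truth boxes left unmatched.
    """
    order = sorted(range(len(dets)), key=lambda i: dets[i][1], reverse=True)
    used = [False] * len(gts)
    flags = []
    for i in order:
        best_j, best_iou = -1, thr
        for j, gt in enumerate(gts):
            overlap = iou(dets[i][0], gt)
            if not used[j] and overlap >= best_iou:  # best still-unmatched GT above threshold
                best_j, best_iou = j, overlap
        if best_j >= 0:
            used[best_j] = True
        flags.append(best_j >= 0)
    return flags, used.count(False)
```

Under this rule, a slightly offset bird box still counts as a true positive as long as its IoU stays at or above 0.5. A box on a branch with no overlapping bird is a false positive, and an unmatched ground-truth airplane counts as a miss, which lowers recall.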

4.7. Discussion

This study focuses on parcel object detection in low-light environments. It proposed the HDPNet model and systematically validated its effectiveness. Comparative experiments, ablation experiments, and visualization analysis were used to evaluate the model's accuracy, speed, and generalization ability. The contribution of each module and the way the modules work together were also examined in depth.

4.7.1. Overall Performance Advantage Is Significant

Comparative experiments on the njpackage dataset show that HDPNet outperforms most mainstream detection models, such as YOLOv8n, YOLOv9t, and YOLOv10n, on key metrics including mAP@0.5, Precision, and Recall. It excels especially in Recall, which indicates a stronger ability to capture targets. It is slightly inferior to some lightweight YOLO variants in FPS and model size. Even so, it still runs at approximately 46.9 FPS, which meets real-time detection requirements. Compared with RT-DETR, HDPNet achieves similar accuracy with a smaller model size and higher inference efficiency, making it more suitable for edge deployment.
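Precision, Recall, and mAP@0.5 are all derived from detections that have been matched to ground truth at an IoU threshold of 0.5. The minimal sketch below assumes the detections of one class are already sorted by confidence and labelled as true or false positives. It computes precision, recall, and average precision with all-point interpolation, and mAP@0.5 is then the mean of the per-class AP values. Evaluation toolkits differ in their interpolation scheme (for example 11-point, 101-point, or all-point). For that reason, the sketch is illustrative and is not necessarily the exact procedure behind the reported numbers. The function name is hypothetical.

```python
from typing import List, Tuple


def precision_recall_ap(tp_flags: List[bool], num_gt: int) -> Tuple[float, float, float]:
    """Final precision, final recall and all-point-interpolated AP for one class.

    tp_flags: detections sorted by descending confidence, True = TP at IoU >= 0.5.
    num_gt: number of ground-truth objects of this class.
    """
    if num_gt == 0:
        raise ValueError("AP is undefined without ground-truth objects")
    precisions, recalls = [], []
    tp = fp = 0
    for is_tp in tp_flags:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precisions.append(tp / (tp + fp))
        recalls.append(tp / num_gt)
    # Precision envelope: make precision non-increasing when read from high to low recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the PR curve: sum the envelope precision over each recall increment.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    final_p = tp / (tp + fp) if tp_flags else 0.0
    return final_p, tp / num_gt, ap
```

For example, the ranked outcome `[True, False, True]` with two ground-truth objects gives a final precision of 2/3, a recall of 1.0, and AP = 0.5 × 1 + 0.5 × 2/3 ≈ 0.833. Recall depends only on how many objects are found, so a model with higher Recall, as reported for HDPNet, misses fewer parcels even when its precision is similar.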

4.7.2. Module Synergy Enhances Detection Robustness

Ablation results show that each of the three modules (DIM, IAFN, and MDN) improves performance on its own, and that the best results are obtained when all three are combined, which improves mAP@0.5 by 4.4%. The modules contribute as follows:

- DIM enhances adaptability to low-light images through a dynamic illumination perception mechanism.
- IAFN strengthens the integration of local details and global semantics through interactive feature fusion.
- MDN improves the detection of irregular shapes and small targets by combining deformable convolution with a Transformer branch.

The three modules complement each other and jointly form a detection framework that balances illumination robustness, multi-scale perception, and detail enhancement.

4.7.3. Generality and Generalization Ability Are Verified

Experiments on public datasets such as PASCAL VOC and BCCD further demonstrate the strong generalization ability of HDPNet. On the VOC dataset, the model leads YOLOv8n and the other compared models by more than 5% in mAP@0.5. It also achieves the best mAP@0.5 (0.925) on the BCCD small-target dataset. These results indicate that HDPNet is not limited to low-light parcel detection and also transfers well to general object detection and small-target detection tasks.

4.7.4. Visualization Results Confirm Module Effectiveness

Visualization comparisons show that HDPNet discriminates targets better in challenging scenarios such as low light, small targets, and complex textures. For example, the baseline YOLOv8n tends to miss small targets such as QR codes, whereas HDPNet still detects them reliably. For categories with similar materials (such as Plastic and Carton), HDPNet noticeably reduces misclassification thanks to the enhanced feature representations of DIM and IAFN. These visual results illustrate the practical role of each module in improving model robustness and discriminative power.

4.7.5. Limitations and Future Work

Although HDPNet performs well across multiple experiments, a trade-off remains between its model complexity and inference speed. Future work could explore lightweighting strategies to increase speed while maintaining accuracy. In addition, the model's stability under extreme low-light or dynamic lighting conditions can still be improved, for example by adopting more advanced illumination estimation and enhancement methods.
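Quantifying the trade-off between complexity and speed requires a consistent throughput measurement. For reference, 46.9 FPS corresponds to about 1000 / 46.9 ≈ 21.3 ms per frame. The sketch below is a generic, framework-agnostic timing loop and is not the authors' benchmarking protocol. It assumes a single-image inference callable named `infer` (a hypothetical name). The loop runs some warm-up iterations without timing them and then averages over the remaining frames.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object], frames: Sequence[object],
                warmup: int = 10) -> float:
    """Average single-image throughput (frames per second) of an inference callable.

    The first `warmup` frames are processed but not timed, so one-off costs such as
    lazy initialisation or caching do not distort the average.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warm-up iterations")
    for frame in frames[:warmup]:
        infer(frame)  # warm-up, not timed
    timed = frames[warmup:]
    start = time.perf_counter()  # monotonic, high-resolution clock
    for frame in timed:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed if elapsed > 0 else float("inf")
```

If the model runs on a GPU with asynchronous kernel launches, the device must be synchronized before the clock is read (in PyTorch, `torch.cuda.synchronize()`). Otherwise the loop measures only launch overhead. Batch size, input resolution, and whether pre- and post-processing (such as NMS) are included should be fixed when comparing models, because each of them changes the resulting FPS.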

5. Conclusions

This paper addresses the core challenge of performance degradation in object detection under complex illumination and deformation conditions by proposing HDPNet, an innovative detection framework based on a Hybrid Dynamic Perception Network. By integrating the Dynamic Illumination-aware Module (DIM), the Interactive Attention Fusion Network (IAFN), and the Multi-branch Decomposition Network (MDN), the framework effectively enhances feature representation and robustness in low-light, deformed, and multi-scale scenarios.

Experiments on the njpackage dataset demonstrate that HDPNet achieves a detection accuracy (mAP@0.5) of 86.6% while maintaining a real-time inference speed of ≥45 FPS. This outperforms the baseline model and most of the compared methods, and the ablation study confirms the effectiveness of each core module. Additional generalization experiments on public datasets such as PASCAL VOC and BCCD further demonstrate the model's advantages in general object detection and small-object recognition tasks.

The main contributions of this research can be summarized as follows:

An end-to-end dynamic perception network (HDPNet) is proposed. It unifies three components within one framework: global illumination encoding with a dual-attention mechanism, a lightweight Transformer-CNN interactive architecture, and a hybrid design of deformable convolution and sparse Transformer. This design offers a new approach to visual detection in complex environments.

The proposed method not only achieves excellent performance on specific tasks, but its modular design also ensures generality and flexibility, providing a transferable solution for other vision tasks facing similar challenges, such as close-range sensing, autonomous driving, and agricultural monitoring.

The research completes a full workflow from algorithm design and module integration to systematic experimental validation, offering a technically viable solution with practical potential for visual detection in logistics automation.

Although the expected results have been achieved, several directions remain for future work. First, more lightweight network designs could be explored to suit embedded platforms with more limited computational resources (e.g., Jetson Orin). Second, collecting more data from extreme scenarios (e.g., strong reflections, severe occlusion) could further improve the model's generalization capability. Finally, applying the framework to more close-range sensing scenarios (e.g., wildlife monitoring, nighttime drone inspection) would help verify its cross-domain applicability.