Advancing Retaining Wall Inspections: Comparative Analysis of Drone-Lidar and Traditional TLS Methods for Enhanced Structural Assessment

Abstract

1. Introduction

2. Materials and Methods

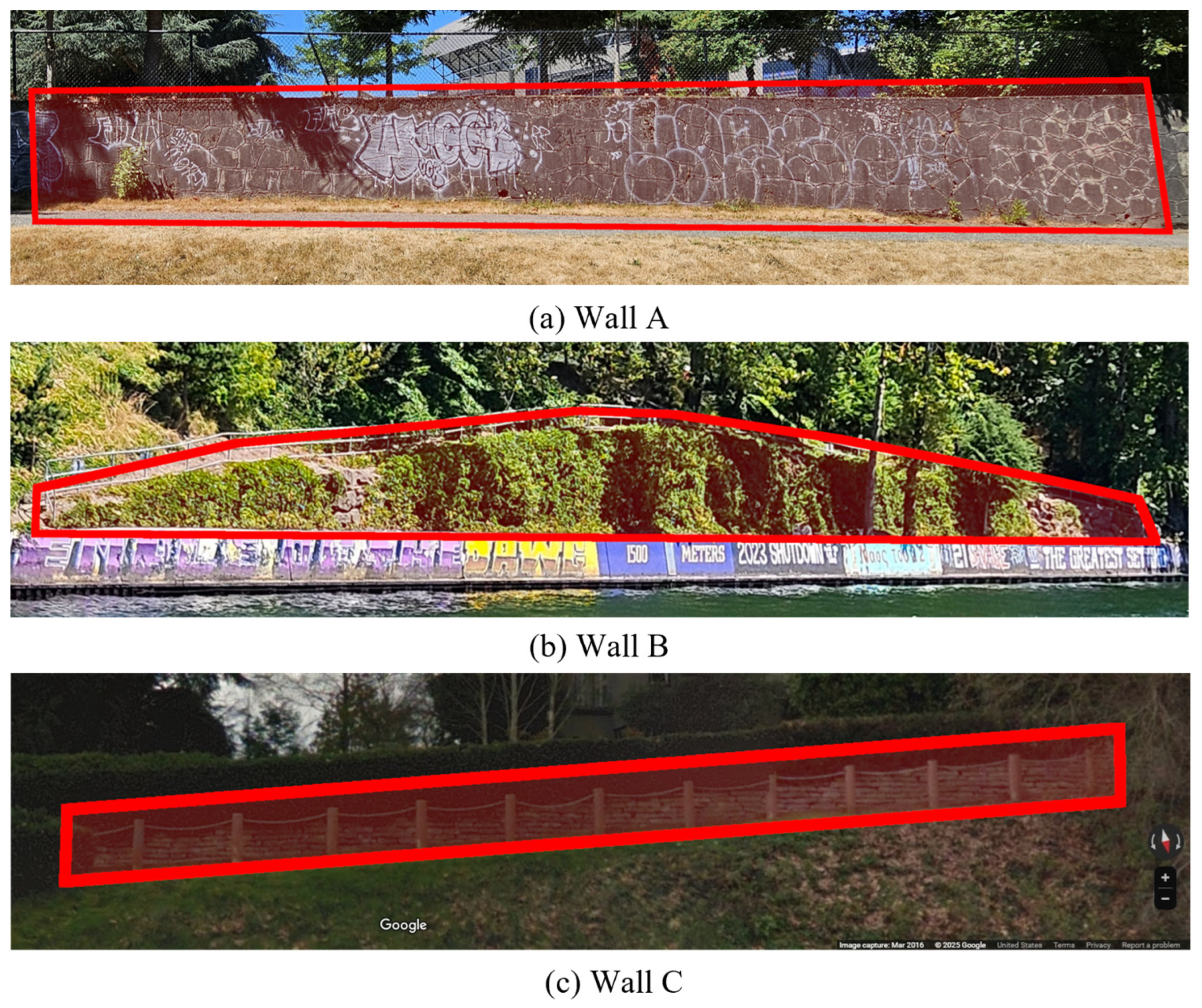

2.1. Overview of Structures

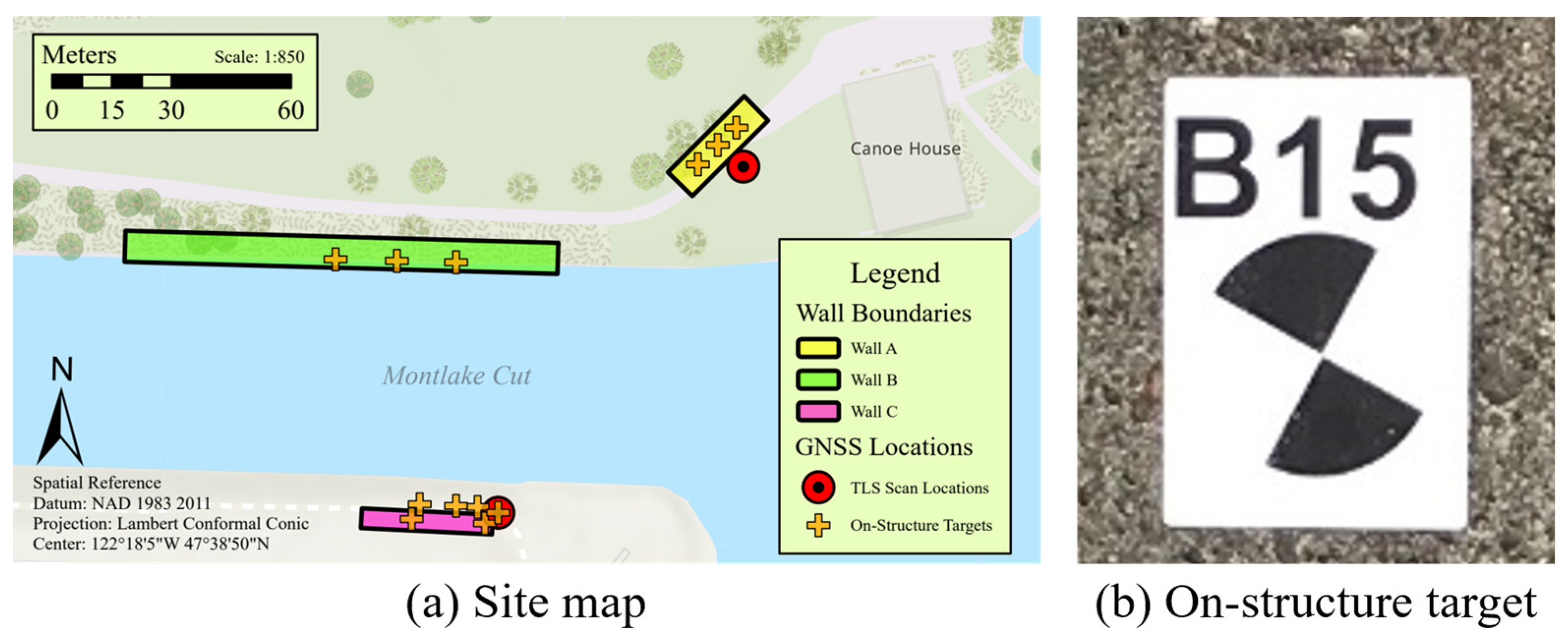

2.2. Ground Control Systems

2.2.1. Site Map

2.2.2. Total Station Electronic Distance Measurements

2.2.3. Global Navigation Satellite System (GNSS) Observation Data

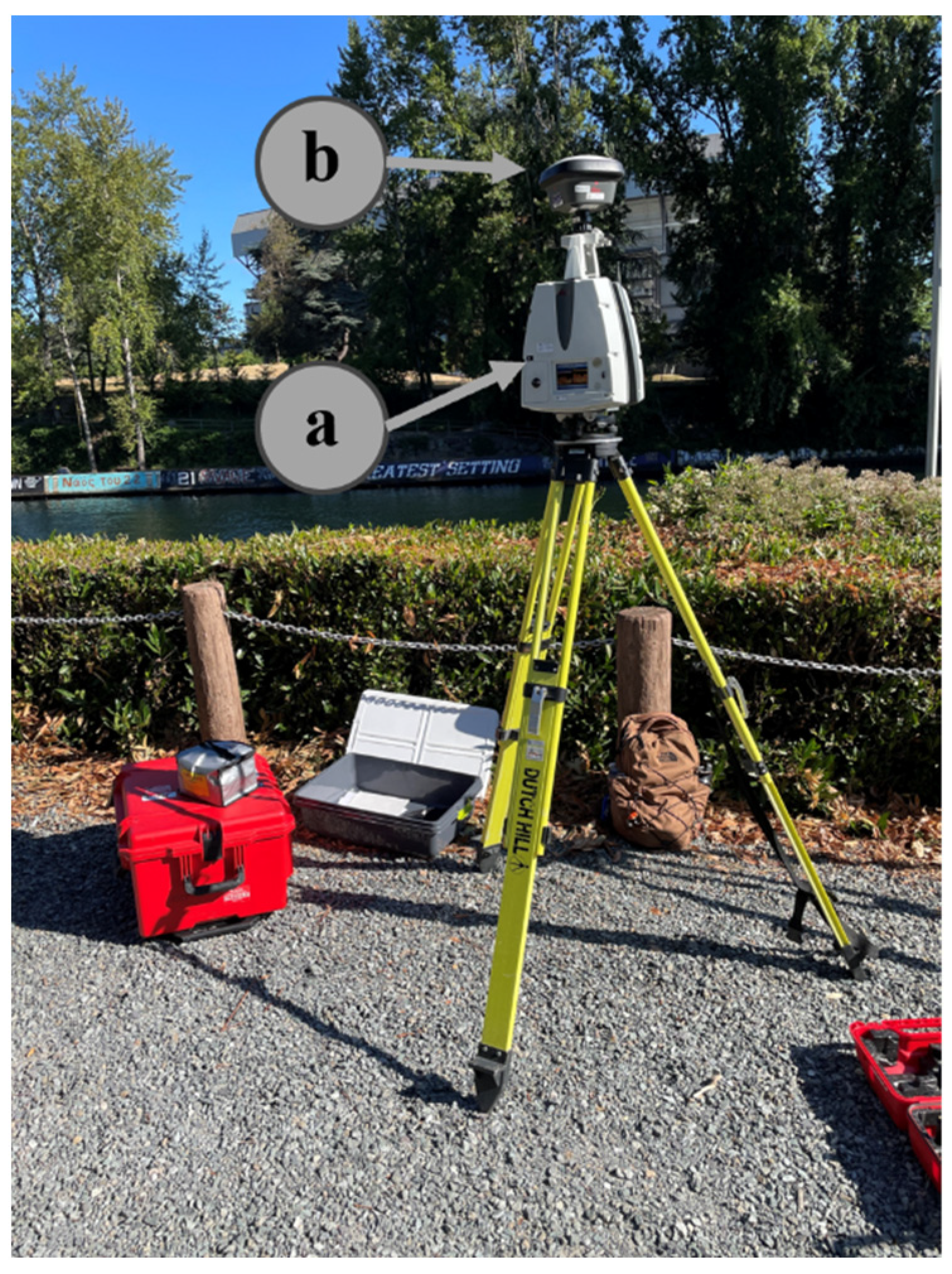

2.3. Terrestrial Laser Scanning (TLS) Ground Truth

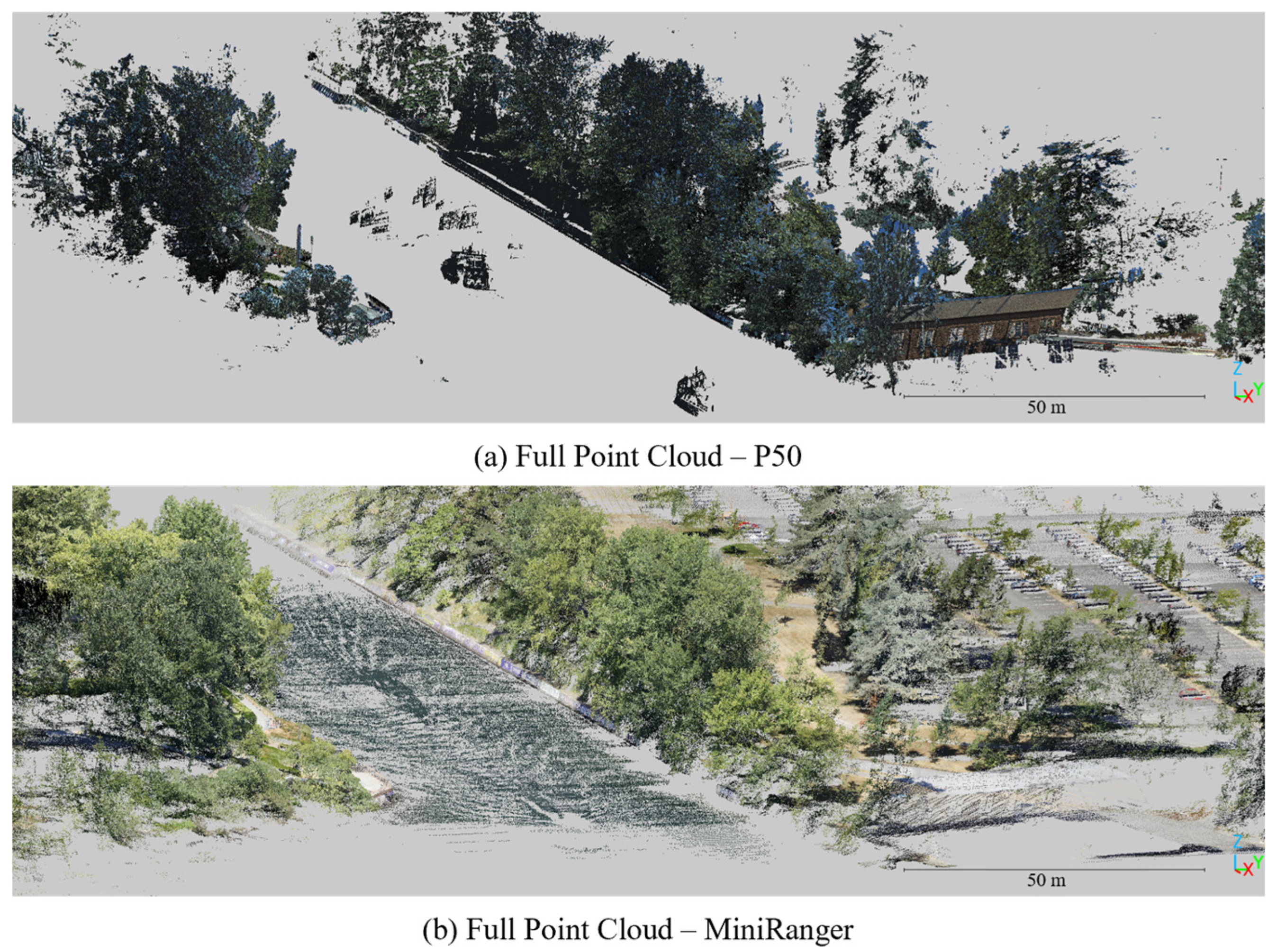

2.4. Drone-Lidar

2.5. Post Processing

2.5.1. Point Cloud Trimming

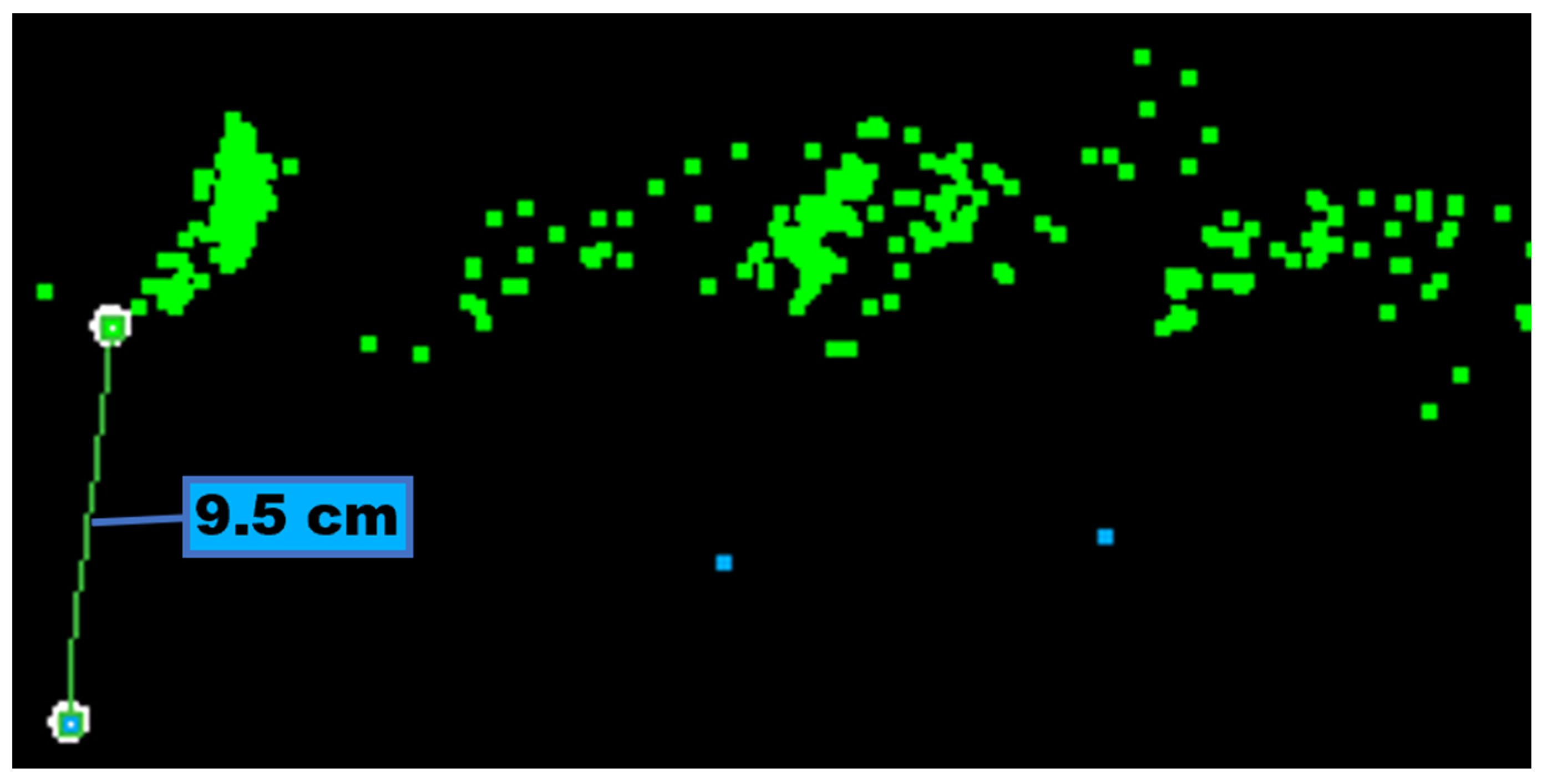

2.5.2. Fine Registration

2.5.3. Point Distribution Analyses

2.5.4. Cloud-to-Cloud Comparisons

2.5.5. Vegetation Correlation Analysis

3. Results

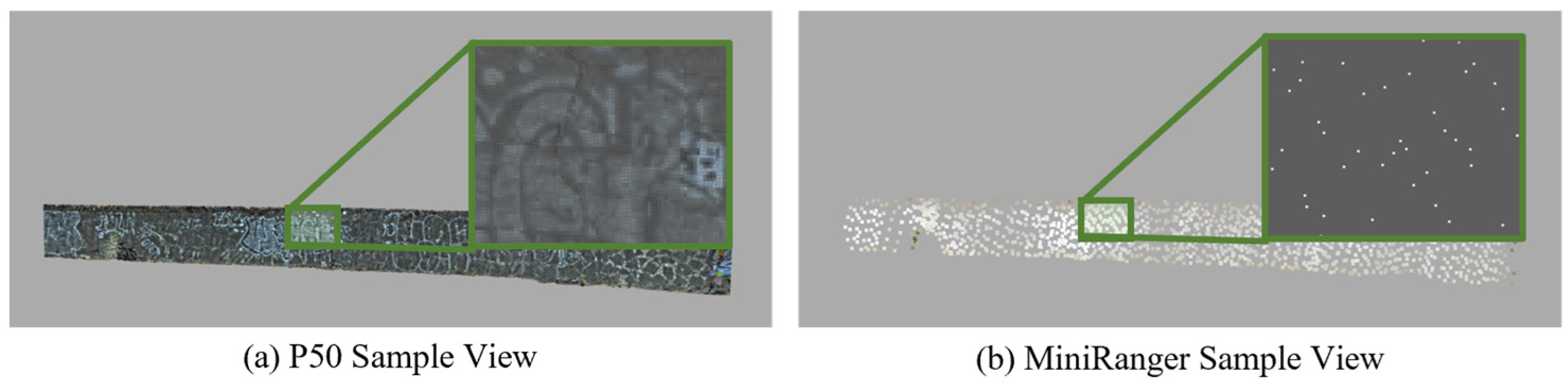

3.1. Point Cloud Density

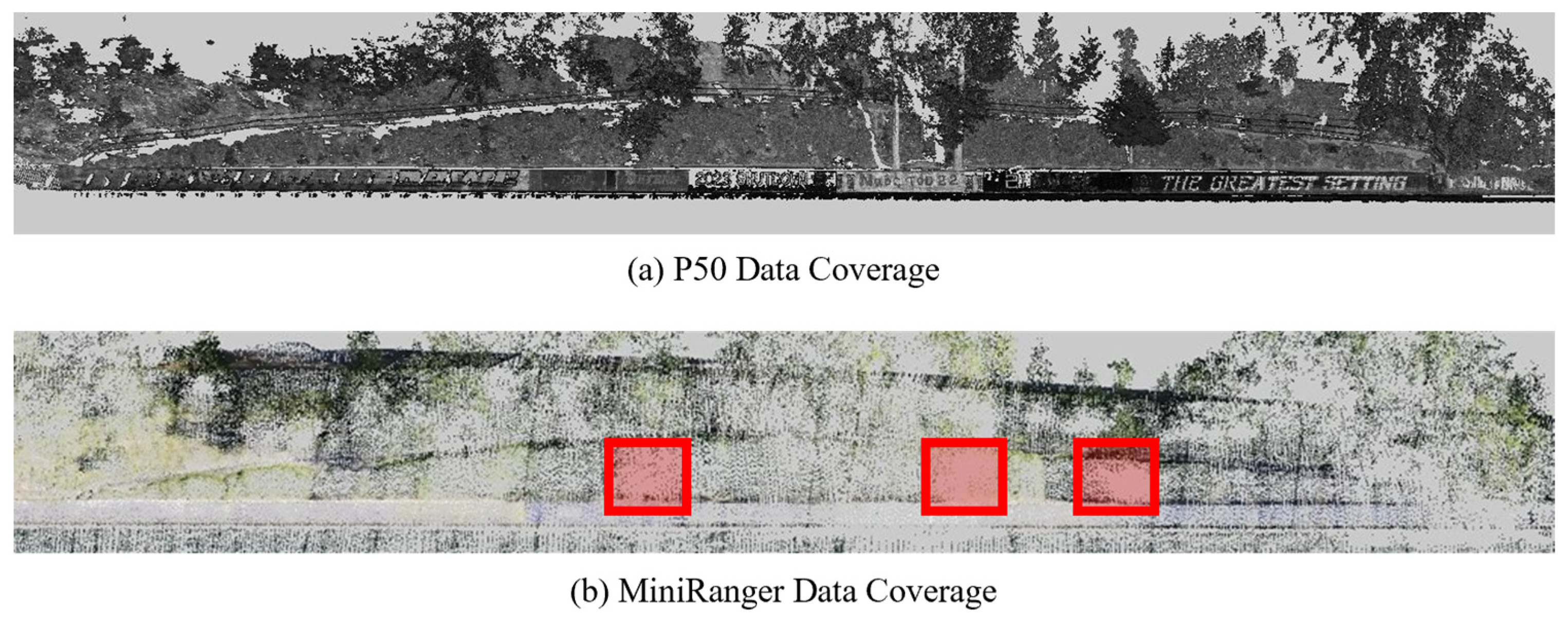

3.2. Data Coverage

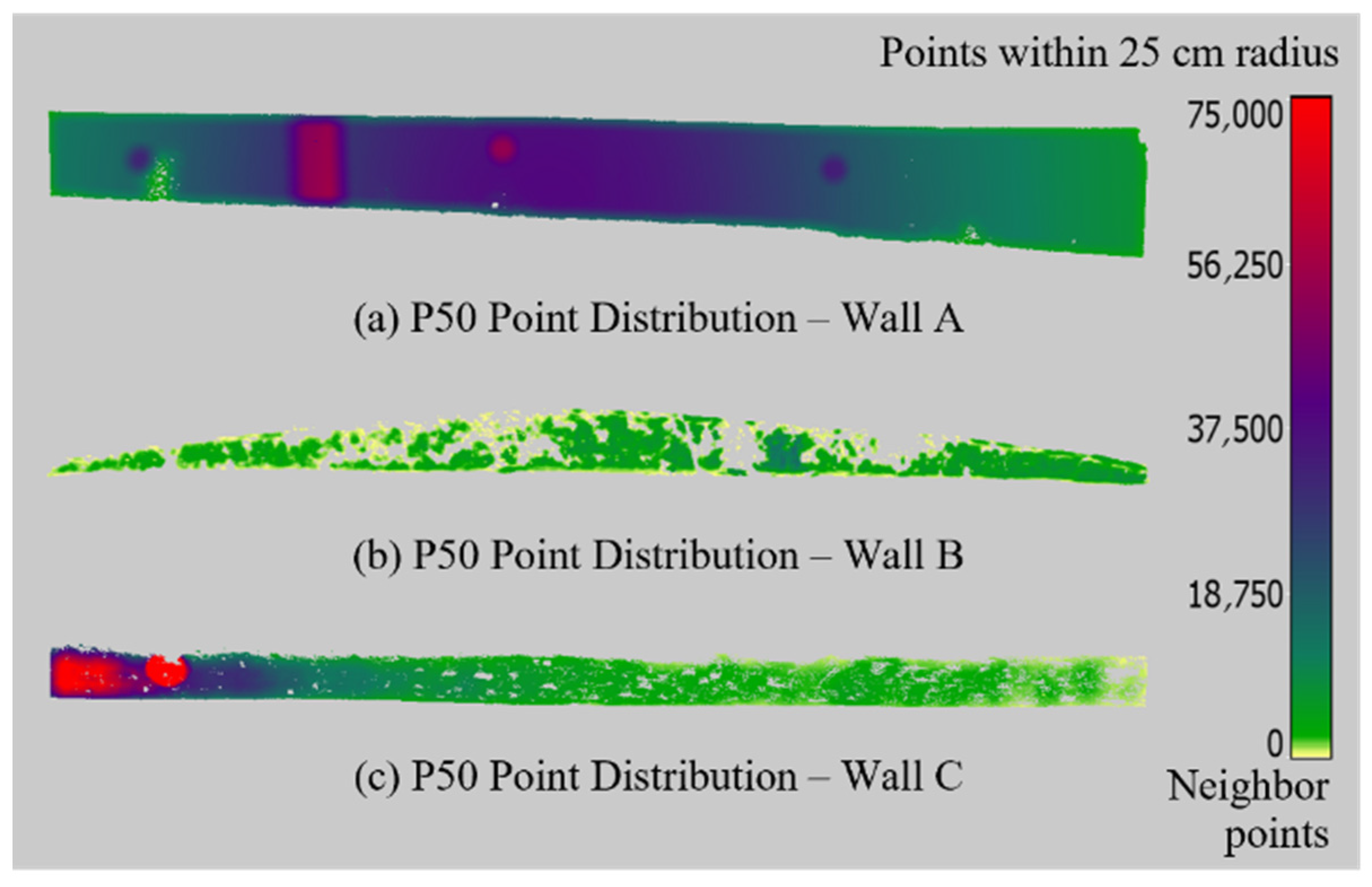

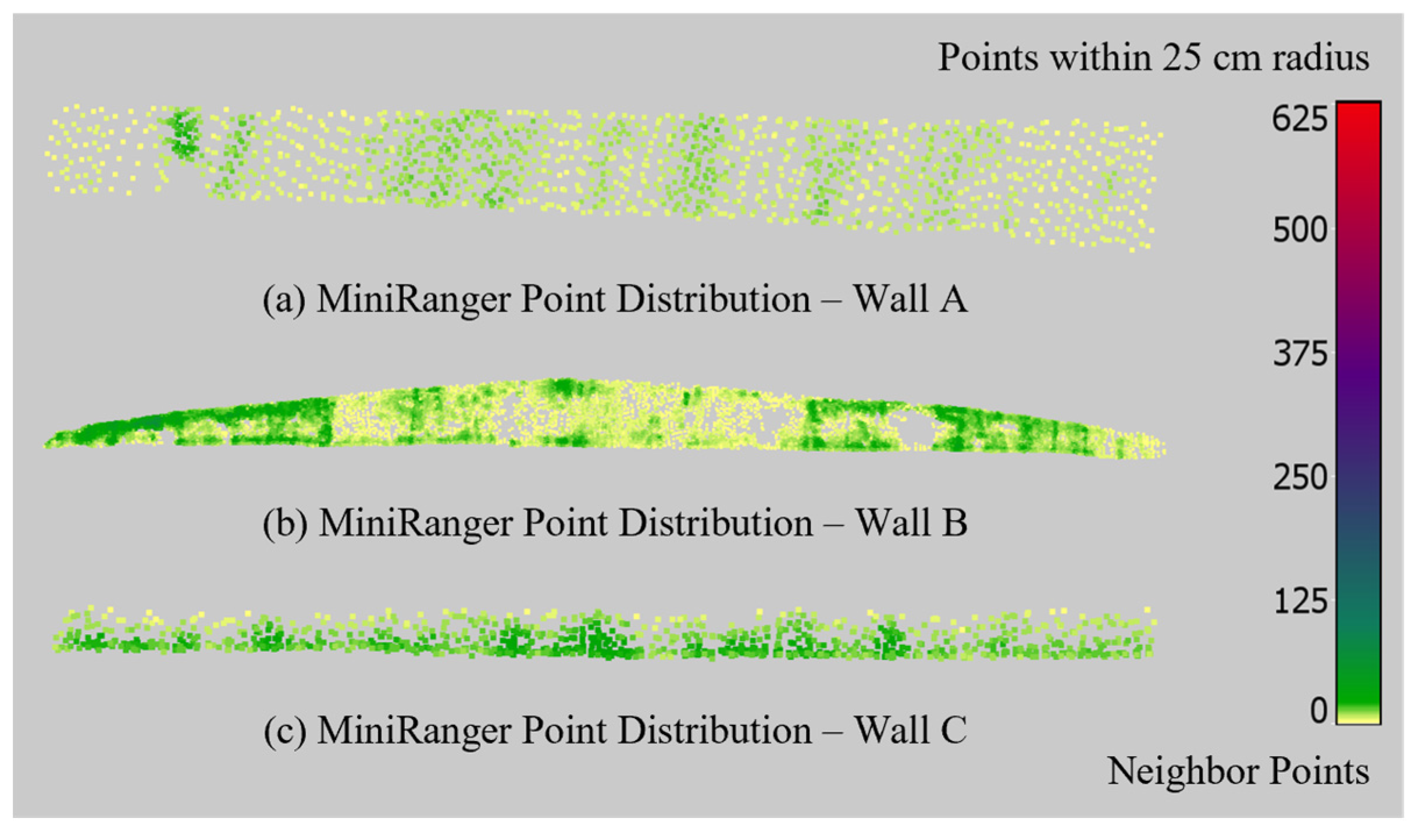

3.3. Point Distribution

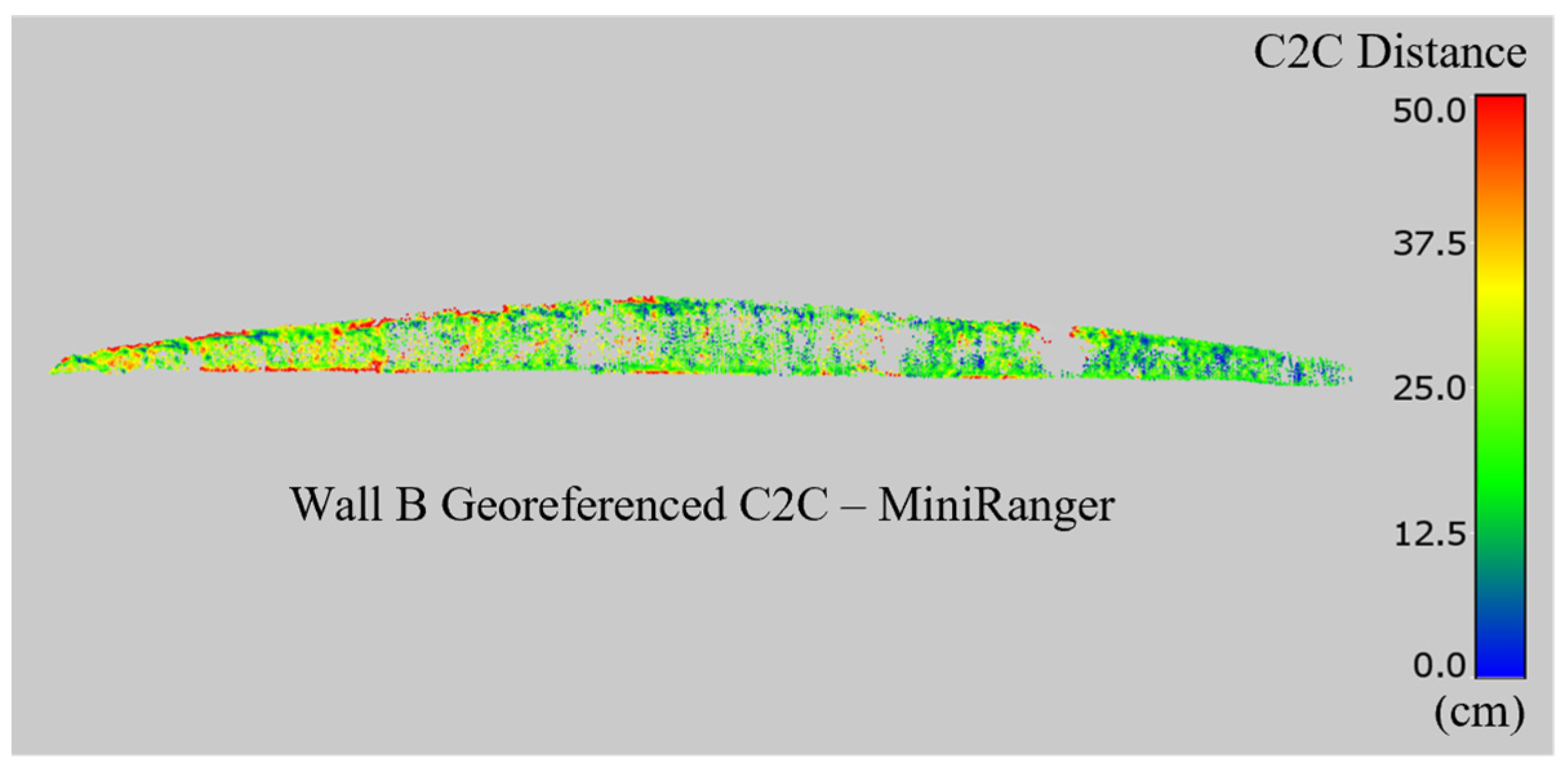

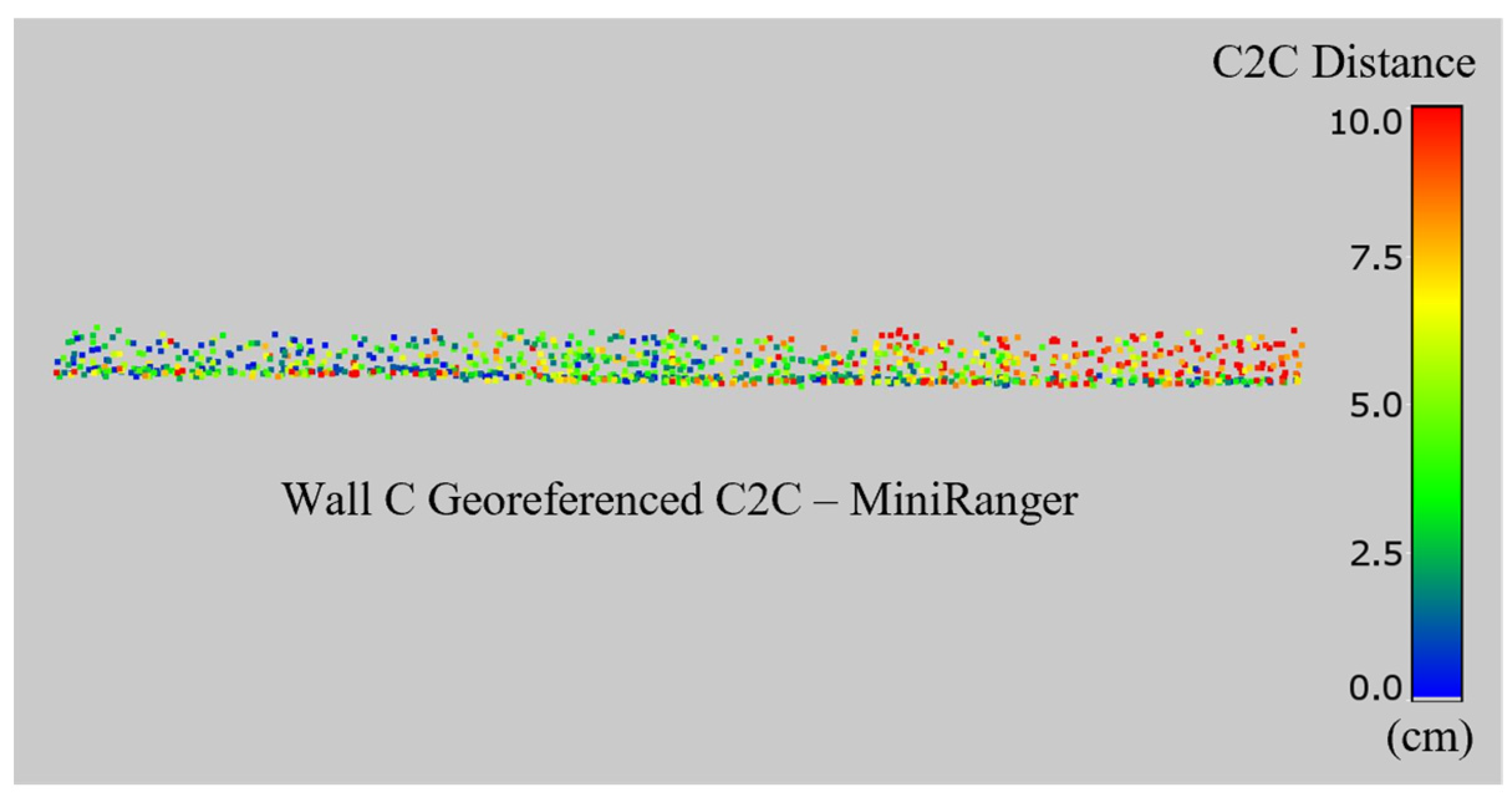

3.4. Georeferenced C2C Comparisons

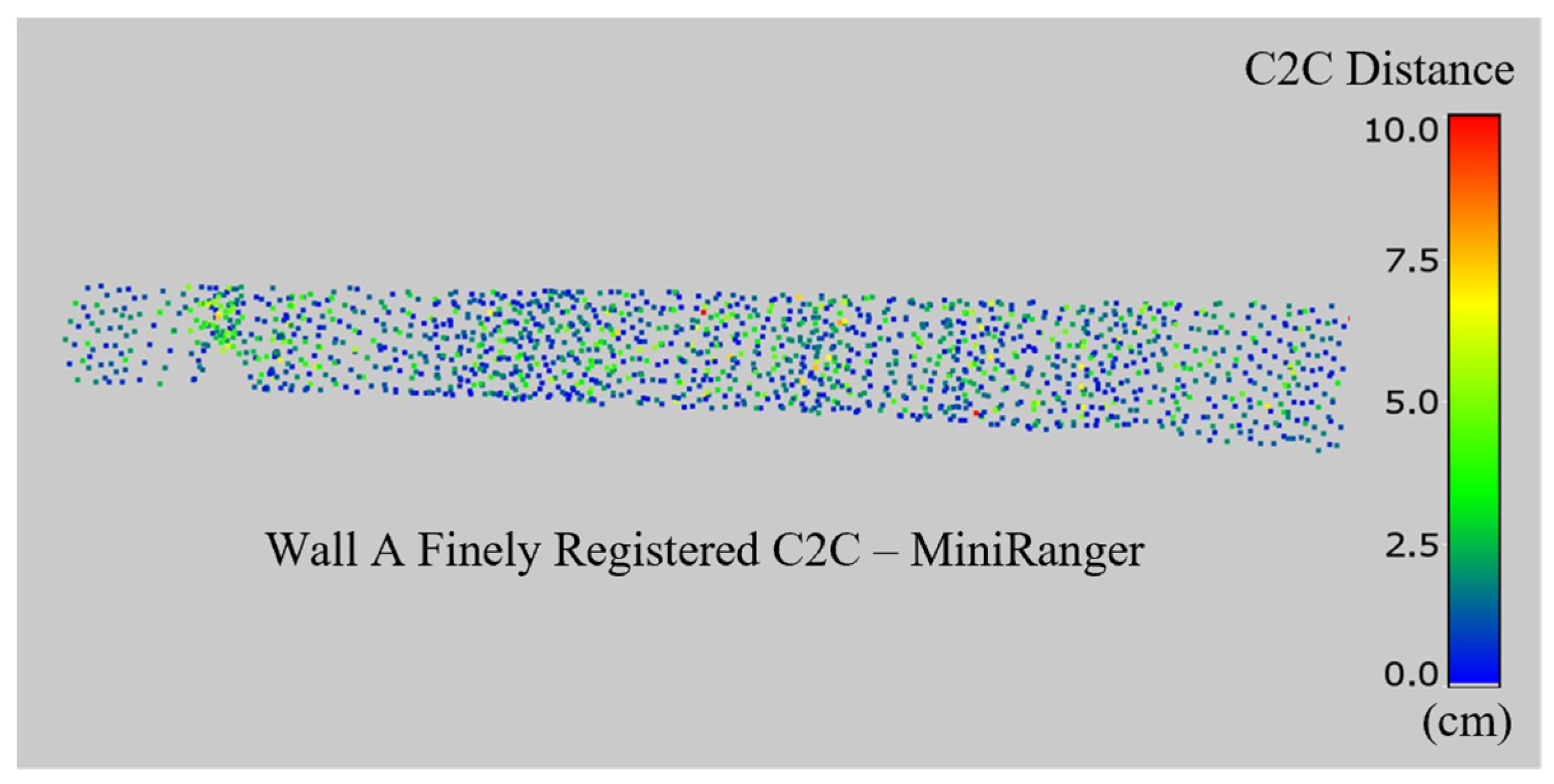

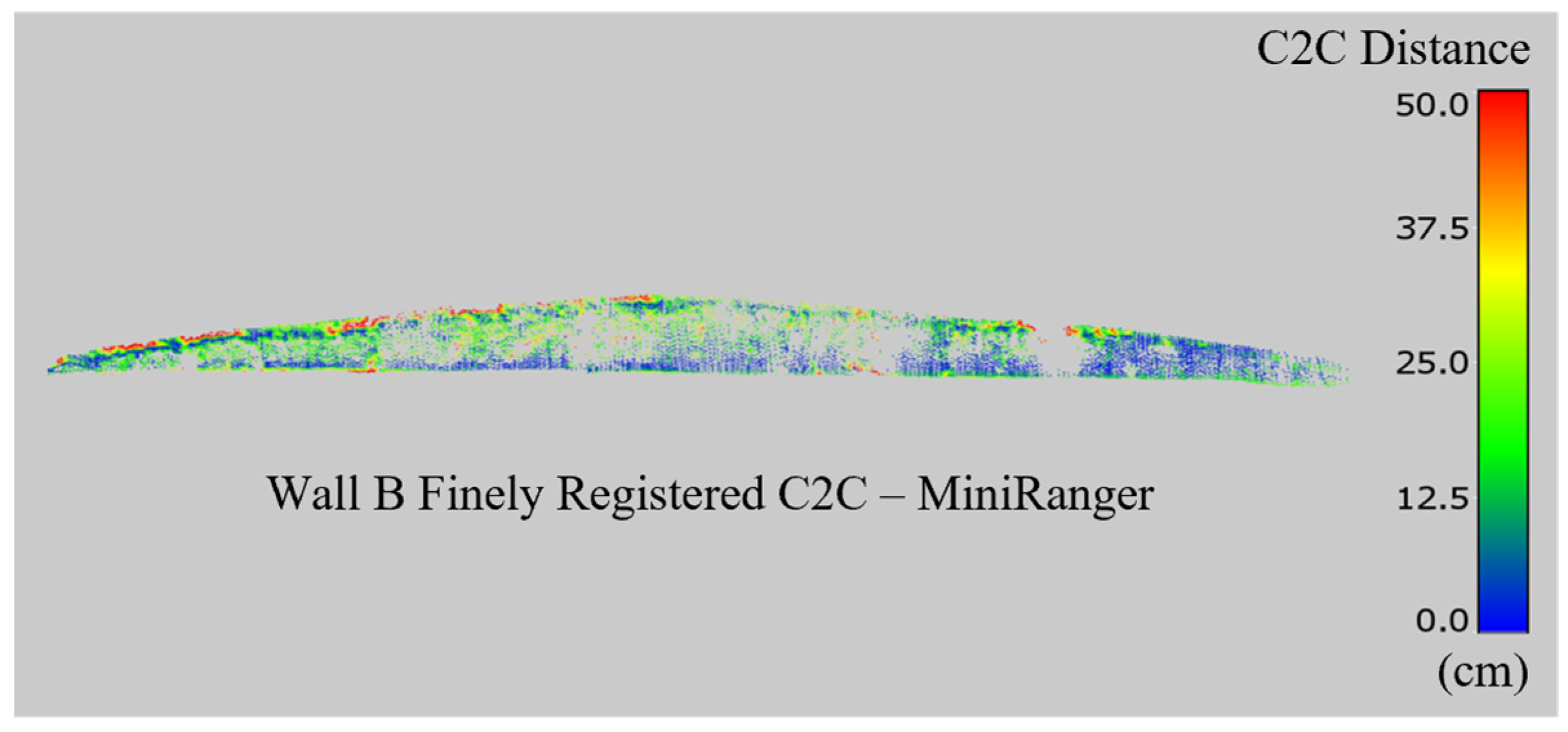

3.5. Finely Registered C2C Comparisons

3.6. Summary of C2C Results

4. Discussion

4.1. Sources of Error and Accuracy Improvements

4.2. Point Density Considerations

4.3. Comparing Drone-Lidar and TLS for Retaining Wall Inspection

4.4. Recommendation for Future Work

5. Conclusions

- Terrestrial lidar scanning is validated as a reliable method of 3D imaging with comprehensive data capture, even on structures with dense vegetation.

- The overall accuracy of retaining wall imaging using drone-lidar depends more on precise georegistration than on the relative accuracy of the imaging platforms.

- Drone-lidar systems are not recommended as the sole means of 3D imaging for retaining wall inspection purposes. Drone-lidar was found to offer centimeter-level accuracy on retaining walls under low to moderate vegetation with an average density of 40 pts/m². This data quality is insufficient for most retaining wall monitoring purposes.

- Inspections combining TLS and drone-lidar may be considered to maintain the accuracy and density of measurements on the surface of retaining walls while leveraging the efficiency of drone-lidar to capture larger areas that contextualize measurements of the wall collected by TLS.

- Drone-lidar should not be used on walls with dense vegetation.

- The efficiency of drone-lidar must be weighed against the potential for inaccurate georeferencing and local measurements.
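
To give a sense of what an average density of 40 pts/m² means on a wall face, the density can be converted to an approximate point spacing. The sketch below assumes points spread evenly on a square grid, which real lidar returns are not, so the result is an order-of-magnitude figure only; the function name `mean_point_spacing_m` is hypothetical.

```python
import math

def mean_point_spacing_m(density_pts_per_m2):
    """Approximate spacing (m) between neighboring points.

    Assumes an idealized, evenly spaced square grid of points,
    so each point occupies an area of 1 / density square meters.
    """
    if density_pts_per_m2 <= 0:
        raise ValueError("density must be positive")
    return 1.0 / math.sqrt(density_pts_per_m2)

if __name__ == "__main__":
    spacing = mean_point_spacing_m(40.0)  # average density quoted in the conclusions
    print(f"{spacing * 100:.1f} cm")      # prints "15.8 cm"
```

Under this idealization, 40 pts/m² corresponds to a spacing of roughly 16 cm between points, which helps explain why that density is judged insufficient for most retaining wall monitoring purposes.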

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TLS | Terrestrial Laser Scanning |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| lidar | Light detection and ranging |
| UAS | Uncrewed Aerial System |
| RTK | Real-Time Kinematic |
| C2C | Cloud-to-Cloud Comparison |
| RMSE | Root Mean Square Error |


| Flight Characteristic (MiniRanger) | Average | Min | Max |
|---|---|---|---|
| Survey flight altitude (AGL) | 80 m | 71 m | 113 m |
| Approximate survey flight speed | 6.81 m/s | 1.96 m/s | 9.30 m/s |
| Number of flight strips | 5 | | |
| Orientation of flight strips | Parallel | | |
| Lidar flight line overlap | 80% | | |
| Duration of flight | 4 min 50 s | | |
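
As a rough illustration of how the flight parameters relate, the average speed and flight duration in the table give an estimate of the distance flown. This is a sketch under stated assumptions, not a figure reported by the authors: it assumes the listed duration covers the survey strips and that the average speed applies over that whole time. Turns between strips are included in the duration, so the per-strip value is an upper bound. The function name is hypothetical.

```python
def along_track_distance_m(avg_speed_m_s, duration_s):
    """Distance covered at a constant average speed (m)."""
    return avg_speed_m_s * duration_s

if __name__ == "__main__":
    duration_s = 4 * 60 + 50   # 4 min 50 s, from the table
    avg_speed = 6.81           # m/s, from the table
    n_strips = 5               # from the table

    total_m = along_track_distance_m(avg_speed, duration_s)
    per_strip_m = total_m / n_strips  # upper bound: ignores time spent turning

    print(f"total: about {total_m:.0f} m")          # about 1975 m
    print(f"per strip: at most {per_strip_m:.0f} m")  # at most 395 m
```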

| Wall | Registration Method | RMSE (cm) | MAE (cm) | SD (cm) | Skewness |
|---|---|---|---|---|---|
| A | Georeferenced | 3.88 | 3.28 | 2.08 | 0.72 |
| A | Finely Registered | 2.33 | 1.75 | 1.54 | 1.52 |
| B | Georeferenced | 28.3 | 23.4 | 15.9 | 2.65 |
| B | Finely Registered | 24.2 | 17.1 | 17.2 | 2.72 |
| C | Georeferenced | 6.24 | 5.17 | 3.50 | 0.77 |
| C | Finely Registered | 3.63 | 2.78 | 2.33 | 2.11 |
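
The four statistics in the table above can be computed directly from a list of per-point C2C distances. The sketch below is an illustration only, not the authors' processing chain; the function names and the use of the population standard deviation are assumptions. It also checks an identity that holds for unsigned distances, RMSE² = MAE² + SD², against the tabulated values, and reports the percent drop in RMSE from fine registration.

```python
import math

def c2c_summary(distances_cm):
    """Summarize unsigned cloud-to-cloud distances given in centimeters."""
    d = [abs(x) for x in distances_cm]
    n = len(d)
    mae = sum(d) / n                                     # mean absolute error
    rmse = math.sqrt(sum(x * x for x in d) / n)          # root mean square error
    sd = math.sqrt(sum((x - mae) ** 2 for x in d) / n)   # population SD (assumption)
    # Third standardized moment; defined as 0 when all distances are equal
    skew = sum((x - mae) ** 3 for x in d) / n / sd ** 3 if sd > 0 else 0.0
    return {"rmse": rmse, "mae": mae, "sd": sd, "skewness": skew}

# (wall, method, RMSE, MAE, SD) copied from the table, in cm
TABLE = [
    ("A", "Georeferenced", 3.88, 3.28, 2.08),
    ("A", "Finely Registered", 2.33, 1.75, 1.54),
    ("B", "Georeferenced", 28.3, 23.4, 15.9),
    ("B", "Finely Registered", 24.2, 17.1, 17.2),
    ("C", "Georeferenced", 6.24, 5.17, 3.50),
    ("C", "Finely Registered", 3.63, 2.78, 2.33),
]

def identity_residuals(rows):
    """Return |sqrt(MAE^2 + SD^2) - RMSE| in cm for each table row."""
    return [abs(math.hypot(mae, sd) - rmse) for _, _, rmse, mae, sd in rows]

def rmse_reduction_percent(rows):
    """Percent drop in RMSE from georeferenced to finely registered, per wall."""
    geo = {w: r for w, m, r, _, _ in rows if m == "Georeferenced"}
    fine = {w: r for w, m, r, _, _ in rows if m == "Finely Registered"}
    return {w: 100.0 * (geo[w] - fine[w]) / geo[w] for w in geo}

if __name__ == "__main__":
    print(c2c_summary([1.0, 2.0, 2.0, 7.0]))  # made-up distances for illustration
    print(f"largest identity residual: {max(identity_residuals(TABLE)):.3f} cm")
    for wall, pct in rmse_reduction_percent(TABLE).items():
        print(f"Wall {wall}: RMSE reduced by {pct:.1f}%")
```

Applied to the tabulated values, the identity holds to within rounding for every row. Fine registration lowers RMSE by about 40% on Wall A and 42% on Wall C, but only about 14.5% on Wall B.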

| Method | Pros | Cons |
|---|---|---|
| Drone-lidar | | |
| Terrestrial lidar (TLS) | | |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wondolowski, M.; Hain, A.; Motaref, S.; Dedinsky, K.; Grilliot, M. Advancing Retaining Wall Inspections: Comparative Analysis of Drone-Lidar and Traditional TLS Methods for Enhanced Structural Assessment. Appl. Sci. 2025, 15, 12008. https://doi.org/10.3390/app152212008
