The MUG-10 Framework for Preventing Usability Issues in Mobile Application Development

Abstract

1. Introduction

2. Background

3. Methodology

3.1. Identify and Recruit Experts

3.2. Interview Protocol

3.3. Interview Organization

3.4. Data Sample

3.5. Data Analysis

3.6. Data Synthesis

4. Results

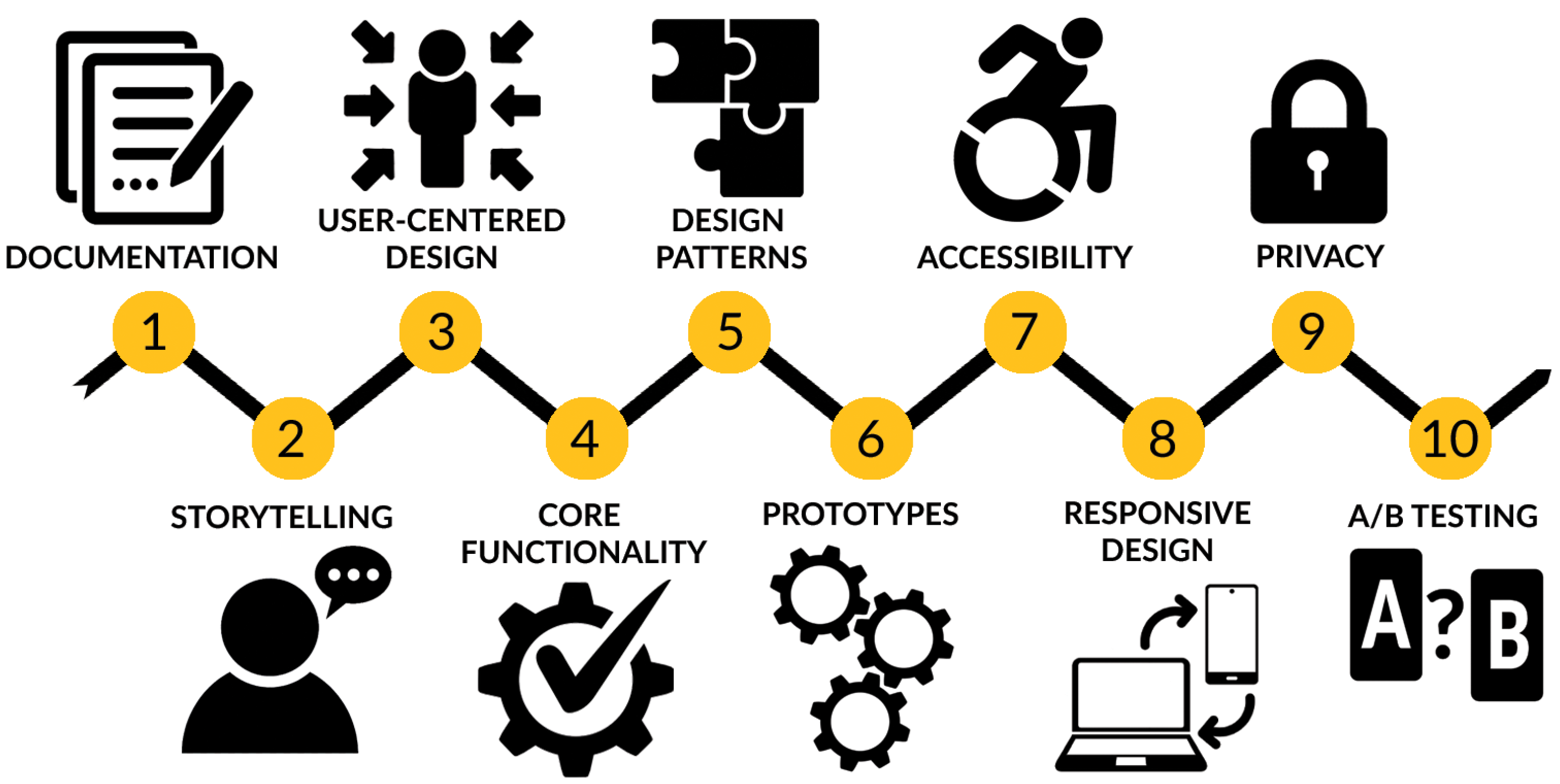

- G1: Prepare documentation. Maintain accurate, up-to-date technical documentation to keep teams aligned, and engage stakeholders in the documentation process so that their perspectives are captured and reflected in product development [46,47]. More broadly, well-prepared documentation supports consistent decision-making and minimizes the risk of miscommunication throughout the project lifecycle [48].

- G2: Use storytelling. Storytelling techniques such as scenarios and personas can be used to better understand and address user needs [49,50]. By tapping into human emotions and shared experiences, storytelling builds connections, inspires action, and facilitates learning more effectively than raw data alone. It also promotes more effective communication among the people involved by translating abstract usability narratives into specific objectives [51].

- G3: Apply User-Centered Design (UCD) principles. UCD is an iterative design process that emphasizes the active engagement of users throughout mobile application development [52,53]. By involving users in research, testing, and continuous feedback, development teams can identify and resolve usability issues effectively. Early and frequent testing, in particular, helps ensure that the final application closely aligns with users’ expectations [54].

- G4: Prioritize core functionality. Mobile users typically engage with apps to perform specific tasks quickly and efficiently. Prioritizing core functionality eliminates distractions, reduces interface complexity, and improves the overall user experience [55,56]. Moreover, by focusing development efforts on the most critical features before adding further functions or fine-tuning visuals, teams can deliver a minimum viable product (MVP) without overwhelming users [57].

- G5: Use design patterns and templates. Established design patterns and templates offer reliable solutions to common UI problems and reduce cognitive load by providing well-known icons, symbols, and interface structures [44]. They can also speed up development by giving teams reusable components that are easier to implement, maintain, and test. Familiar patterns further improve learnability [58], since users can draw on their prior knowledge.

- G6: Develop mock-ups and prototypes. Use static visuals, both low- and high-fidelity, to illustrate the application layout [59]. Then follow up with interactive prototypes that enable early user testing, help validate design concepts, and allow teams to gather first-hand user feedback [60,61]. Graphical artifacts also facilitate communication among designers, developers, and other stakeholders by providing a shared reference point [62].

- G7: Follow accessibility guidelines. Design interfaces that follow the POUR (Perceivable, Operable, Understandable, Robust) principles [63,64] to create truly inclusive content [65]. Adhering to the POUR guidelines helps ensure that a mobile application is usable by people with diverse abilities, including those with visual, auditory, motor, or cognitive impairments. Following these standards also supports legal compliance and protects organizations from reputational harm.

- G8: Ensure responsive design across devices. Design and test for multiple screen sizes to provide a smooth user experience [66]. Key techniques include flexible grids, layouts, and images that automatically adjust to different resolutions and device types [67]. In addition, a well-implemented responsive layout reduces the need for redundant code across separate app versions, streamlining both ongoing maintenance and future development.

- G9: Assure user privacy. Design and communicate transparent authorization patterns that cover both the permissions an application is granted to access specific data and functions on a user’s device and the means used to protect the user’s personal information [68]. This includes the user’s right to control how such information is used, stored, and shared, while preserving the user’s anonymity where possible [69].

- G10: Conduct A/B testing. Design, implement, and test two versions of a product to collect data-driven insights and make informed decisions based on user behavior [70,71]. By grounding decisions in observed user behavior rather than arbitrary assumptions, A/B testing helps identify the better-performing solution [72].

- User testing. Experts strongly emphasized involving at least five users in usability testing [73] through live, moderated sessions held regularly at each stage of app development [74]. Teams can also consider A/B testing, which compares two versions of a design to determine which one leads to better outcomes based on actual user behavior [75]. Such an approach helps reduce opinion-based changes [76], allows specific variables (e.g., layout, color, wording) to be tested [77], and supports data-driven decision-making to ultimately select the optimal solution [78]. Notably, low-fidelity prototypes, which tend to be visually unpolished and lacking in detail, should be used in the early stages of application design [79]. High-fidelity prototypes, on the other hand, should accompany feature implementation, allowing users to interact with the solution as if it were fully developed [80].

- Heuristics testing. In this approach, experts rather than users assess an interface against established usability principles, guidelines, or rules of thumb, known as heuristics. Heuristics are best understood as a practical means of quickly identifying issues rather than as fixed solutions. In this view, heuristics such as Nielsen’s 10 usability principles [81] guide the evaluator in testing various scenarios.

- Smoke testing. Depending on the stage of development and the team’s workflow, smoke testing is usually performed by quality assurance testers or developers [82]. It is a quick way to detect critical or major defects early [83], which avoids spending time on detailed testing when an app build is seriously flawed [84]. In this sense, smoke testing can be seen as a form of build verification testing that precedes user testing.
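Guideline G7 is stated at the level of principles. One part of the Perceivable principle that can be checked mechanically is colour contrast: WCAG 2.1 [63] defines a contrast ratio between text and background and, at level AA (success criterion 1.4.3), requires at least 4.5:1 for normal text and 3:1 for large text. The sketch below computes that ratio from '#RRGGBB' colours using the WCAG 2.1 relative-luminance formula. The function names are our own, and passing this single check says nothing about the remaining accessibility criteria.

```python
def _linearize(channel: int) -> float:
    """Map one 8-bit sRGB channel to linear light (WCAG 2.1 relative luminance definition)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a colour written as '#RRGGBB' (0 = black, 1 = white)."""
    h = hex_color.lstrip("#")
    if len(h) != 6:
        raise ValueError(f"expected #RRGGBB, got {hex_color!r}")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(color_a: str, color_b: str) -> float:
    """(L1 + 0.05) / (L2 + 0.05), where L1 is the lighter of the two luminances."""
    lighter, darker = sorted((relative_luminance(color_a), relative_luminance(color_b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)


def meets_aa_contrast(text: str, background: str, large_text: bool = False) -> bool:
    """Level AA minimum contrast (SC 1.4.3): 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(text, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#000000", "#FFFFFF"), 1))  # 21.0
print(meets_aa_contrast("#777777", "#FFFFFF"))          # False (about 4.48:1)
print(meets_aa_contrast("#767676", "#FFFFFF"))          # True (about 4.54:1)
```

A check like this fits naturally into a design-token or UI test suite, so that colour changes which break the minimum are caught before user testing rather than after release.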
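Guideline G8 refers to flexible grids that adapt to the device. A minimal sketch of that idea derives the layout from the available width instead of hard-coding it per device. The breakpoints (600 and 840 density-independent units), the 4/8/12 column counts, and the 16-unit margins and gutters are assumptions loosely modelled on commonly used window-size classes, not values taken from this study.

```python
from dataclasses import dataclass

# Assumed width breakpoints (density-independent units) and column counts.
# These mirror commonly used window-size classes; they are not taken from the study.
BREAKPOINTS = ((600, "compact", 4), (840, "medium", 8))
WIDEST = ("expanded", 12)


@dataclass(frozen=True)
class GridSpec:
    size_class: str
    columns: int
    column_width: float


def grid_for_width(width: float, margin: float = 16.0, gutter: float = 16.0) -> GridSpec:
    """Pick a column count for the available width and size the columns to fill it."""
    if width <= 0:
        raise ValueError("width must be positive")
    size_class, columns = WIDEST
    for upper_limit, name, cols in BREAKPOINTS:
        if width < upper_limit:
            size_class, columns = name, cols
            break
    # Width left for content after the outer margins and the gutters between columns.
    usable = width - 2 * margin - (columns - 1) * gutter
    return GridSpec(size_class, columns, max(usable, 0.0) / columns)


for w in (360, 700, 1024):  # e.g. phone portrait, small tablet, large tablet
    print(w, grid_for_width(w))
```

Because every layout decision flows from one function of the width, the same code serves phones and tablets, which is the reduction in redundant per-version code that G8 describes.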
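Guideline G10 and the user-testing paragraph both describe choosing between two versions on the basis of observed behaviour. One common way to make that decision explicit, assuming the outcome is binary (for example, task completed or not) and that users are randomly and independently assigned to the variants, is a two-sided two-proportion z-test. The sketch below uses only Python's standard library; the counts in the usage line are illustrative, not data from the study.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for H0: variants A and B share the same success rate.

    Returns (z, p_value); a positive z means B's observed rate is higher.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("sample sizes must be positive")
    rate_a, rate_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)  # common rate under H0
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled rate is 0 or 1; the test is undefined")
    z = (rate_b - rate_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Illustrative numbers only: 120/1000 task completions for A, 150/1000 for B.
z, p = two_proportion_z_test(120, 1000, 150, 1000)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A p-value below a threshold fixed in advance (0.05 is a common convention) is usually read as evidence that the variants differ. The test assumes a sample size chosen beforehand; checking repeatedly and stopping as soon as the result looks significant inflates false positives, and a statistically significant difference can still be too small to matter in practice.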
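The five-user recommendation in the user-testing paragraph is commonly traced to Nielsen and Landauer's problem-discovery model: if each participant independently encounters a given problem with probability L, then n participants are expected to uncover a share 1 - (1 - L)^n of the problems. The sketch below takes their often-quoted average L = 0.31 as a default; that value is an assumption, real projects can differ considerably, and the model treats all problems as equally easy to find.

```python
def share_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of problems found by n users: 1 - (1 - L)^n.

    p_detect (L) is the assumed probability that one user encounters a given problem.
    """
    if not 0 < p_detect < 1:
        raise ValueError("p_detect must lie strictly between 0 and 1")
    return 1 - (1 - p_detect) ** n_users


def users_needed(target_share: float, p_detect: float = 0.31) -> int:
    """Smallest number of users whose expected discovery share reaches target_share."""
    if not 0 <= target_share < 1:
        raise ValueError("target_share must lie in [0, 1)")
    n = 0
    while share_found(n, p_detect) < target_share:
        n += 1
    return n


print(round(share_found(5), 2))           # 0.84
print(users_needed(0.95))                 # 9
print(users_needed(0.95, p_detect=0.10))  # 29: harder-to-hit problems need a larger panel
```

The diminishing returns visible here are one reason the experts favour several small rounds of testing at each stage over a single large study.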
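A heuristic evaluation, as described above, typically ends with each evaluator's list of problems, each tied to the heuristic it violates. A minimal sketch for consolidating such lists, assuming every finding is tagged with one of Nielsen's ten heuristics [81] and rated on Nielsen's commonly used 0–4 severity scale (the study does not prescribe a rating scheme), merges duplicates and ranks them by mean severity. The `Finding` structure and the example issues are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean

NIELSEN_HEURISTICS = (
    "Visibility of system status",
    "Match between system and the real world",
    "User control and freedom",
    "Consistency and standards",
    "Error prevention",
    "Recognition rather than recall",
    "Flexibility and efficiency of use",
    "Aesthetic and minimalist design",
    "Help users recognize, diagnose, and recover from errors",
    "Help and documentation",
)


@dataclass(frozen=True)
class Finding:
    evaluator: str
    heuristic: int   # 1-based index into NIELSEN_HEURISTICS
    issue: str       # short key that evaluators share for the same problem
    severity: int    # 0 = not a problem ... 4 = usability catastrophe


def consolidate(findings: list[Finding]) -> list[dict]:
    """Merge duplicate findings and rank them by mean severity, then by agreement."""
    grouped: dict[tuple[int, str], list[Finding]] = defaultdict(list)
    for f in findings:
        if not 1 <= f.heuristic <= len(NIELSEN_HEURISTICS):
            raise ValueError(f"unknown heuristic index {f.heuristic}")
        if not 0 <= f.severity <= 4:
            raise ValueError(f"severity {f.severity} outside 0-4")
        grouped[(f.heuristic, f.issue)].append(f)

    report = [
        {
            "heuristic": NIELSEN_HEURISTICS[h - 1],
            "issue": issue,
            "evaluators": sorted({f.evaluator for f in items}),
            "mean_severity": mean(f.severity for f in items),
        }
        for (h, issue), items in grouped.items()
    ]
    report.sort(key=lambda r: (-r["mean_severity"], -len(r["evaluators"])))
    return report


# Hypothetical findings from three evaluators (A, B, C).
findings = [
    Finding("A", 1, "no loading indicator", 3),
    Finding("B", 1, "no loading indicator", 4),
    Finding("B", 8, "cluttered home screen", 2),
    Finding("C", 5, "delete without confirmation", 4),
]
for row in consolidate(findings):
    print(row["mean_severity"], row["heuristic"], "-", row["issue"], row["evaluators"])
```

Ranking by severity first and by the number of agreeing evaluators second is one reasonable ordering; teams may prefer to weight frequency or business impact differently.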
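Smoke testing, as described above, acts as a short gate that rejects a seriously flawed build before anyone invests time in detailed or user testing. The plain-Python sketch below shows that gating logic. The `Build` class and the list of checks are hypothetical stand-ins: in a real pipeline each check would launch the app or call a UI-automation tool, and each team would decide which paths count as critical.

```python
from dataclasses import dataclass, field
from typing import Callable, Sequence


@dataclass
class Build:
    """Hypothetical stand-in for an installed app build under test."""
    version: str
    screens: dict[str, bool] = field(default_factory=dict)  # screen -> loads without crashing


SmokeCheck = tuple[str, Callable[[Build], bool]]

# Hypothetical critical paths; each team would list the flows that must never break.
SMOKE_CHECKS: tuple[SmokeCheck, ...] = (
    ("build reports a version", lambda b: bool(b.version)),
    ("launch screen loads", lambda b: b.screens.get("launch", False)),
    ("sign-in screen loads", lambda b: b.screens.get("sign_in", False)),
    ("home screen loads", lambda b: b.screens.get("home", False)),
)


def run_smoke_tests(build: Build,
                    checks: Sequence[SmokeCheck] = SMOKE_CHECKS) -> tuple[bool, list[str]]:
    """Run the checks in order and stop at the first failure: a flawed build is
    rejected outright instead of being passed on to detailed or user testing."""
    log: list[str] = []
    for name, check in checks:
        try:
            ok = bool(check(build))
        except Exception:  # a check that crashes counts as a failure
            ok = False
        if not ok:
            log.append(f"FAILED: {name}")
            return False, log
        log.append(name)
    return True, log


candidate = Build("2.3.0", {"launch": True, "sign_in": False, "home": True})
passed, log = run_smoke_tests(candidate)
print("hand over to user testing" if passed else "reject build", log)
```

Stopping at the first failure keeps the gate fast, which matches the role of smoke testing as a quick build verification step rather than a full test run.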

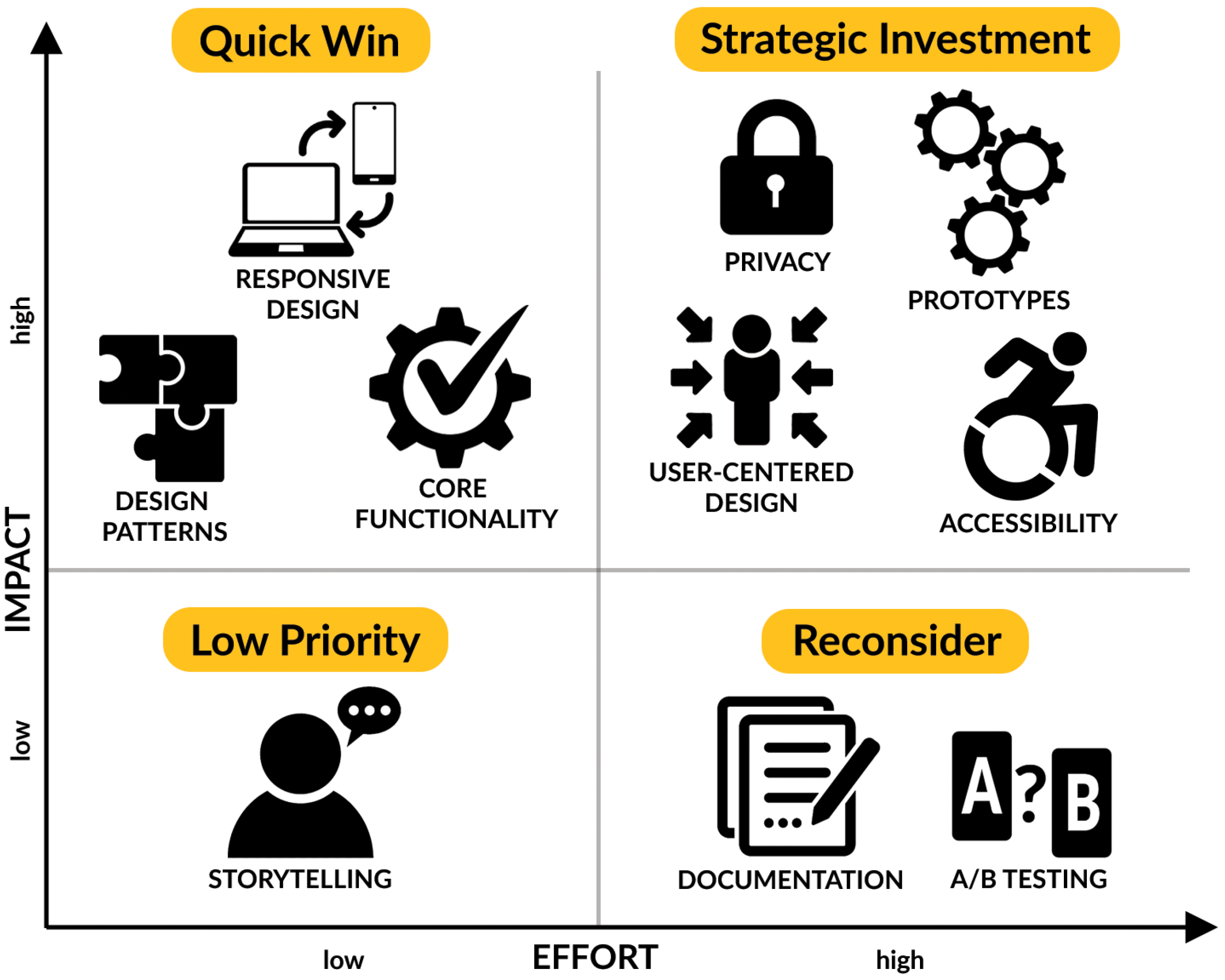

5. Framework Evaluation

- Quick Win: High Impact, Low Effort (upper-left quadrant).

- Strategic Investment: High Impact, High Effort (upper-right quadrant).

- Low Priority: Low Impact, Low Effort (lower-left quadrant).

- Reconsider: Low Impact, High Effort (lower-right quadrant).
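The four quadrants above amount to a simple classification rule. A minimal sketch, assuming impact and effort are each scored on a 1–5 scale and that a score of 3 or more counts as "high" (both the scale and the cut-off are our assumptions, not values from the evaluation), assigns items to quadrants. The scores in the usage line are made up for three hypothetical backlog items and are not results from the study.

```python
def quadrant(impact: float, effort: float, threshold: float = 3.0) -> str:
    """Map impact and effort scores (assumed 1-5 scale) to an impact-effort quadrant."""
    for name, score in (("impact", impact), ("effort", effort)):
        if not 1 <= score <= 5:
            raise ValueError(f"{name} score {score} is outside the assumed 1-5 scale")
    high_impact = impact >= threshold
    high_effort = effort >= threshold
    if high_impact:
        return "Strategic Investment" if high_effort else "Quick Win"
    return "Reconsider" if high_effort else "Low Priority"


def prioritize(scores: dict[str, tuple[float, float]]) -> dict[str, list[str]]:
    """Group items by quadrant, keeping the input order within each quadrant."""
    groups: dict[str, list[str]] = {
        "Quick Win": [], "Strategic Investment": [], "Low Priority": [], "Reconsider": [],
    }
    for item, (impact, effort) in scores.items():
        groups[quadrant(impact, effort)].append(item)
    return groups


# Made-up scores for three hypothetical backlog items (not results from the study).
print(prioritize({"item A": (4.5, 1.5), "item B": (4.0, 4.0), "item C": (2.0, 4.5)}))
```

Where the cut-off sits is a design choice: a midpoint threshold treats borderline scores as "high", so items scored exactly 3 on both axes land in Strategic Investment.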

6. Discussion

6.1. Contributions

6.2. Implications

6.3. Limitations

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Statista. Mobile App Usage —Statistics & Facts. 2025. Available online: https://www.statista.com/topics/1002/mobile-app-usage/ (accessed on 9 August 2025).

- Wagner, C. How Companies Can Do More with Mobile Apps. 2023. Available online: https://www.forbes.com/councils/forbestechcouncil/2023/12/08/how-companies-can-do-more-with-mobile-apps/ (accessed on 26 April 2025).

- Grand View Research. Mobile Application Market Size, Share & Trends Analysis Report By Store (Google Store, Apple Store, Others), by Application (Gaming, Music & Entertainment, Health & Fitness, Social Networking), and Region, Segment Forecasts, 2024–2030. 2025. Available online: https://www.grandviewresearch.com/industry-analysis/mobile-application-market (accessed on 23 April 2025).

- Gawlik-Kobylińska, M.; Kabashkin, I.; Misnevs, B.; Maciejewski, P. Education mobility as a service: A study of the features of a novel mobility platform. Appl. Sci. 2023, 13, 5245. [Google Scholar] [CrossRef]

- García-Méndez, S.; de Arriba-Pérez, F.; González-González, J.; González-Castaño, F.J. Explainable assessment of financial experts’ credibility by classifying social media forecasts and checking the predictions with actual market data. Expert Syst. Appl. 2024, 255, 124515. [Google Scholar] [CrossRef]

- Wang, Z.; Du, Y.; Wei, K.; Han, K.; Xu, X.; Wei, G.; Tong, W.; Zhu, P.; Ma, J.; Wang, J.; et al. Vision, application scenarios, and key technology trends for 6G mobile communications. Sci. China Inf. Sci. 2022, 65, 151301. [Google Scholar] [CrossRef]

- Falkowski-Gilski, P.; Uhl, T. Current trends in consumption of multimedia content using online streaming platforms: A user-centric survey. Comput. Sci. Rev. 2020, 37, 100268. [Google Scholar] [CrossRef]

- Ali, W.; Riaz, O.; Mumtaz, S.; Khan, A.R.; Saba, T.; Bahaj, S.A. Mobile application usability evaluation: A study based on demography. IEEE Access 2022, 10, 41512–41524. [Google Scholar] [CrossRef]

- Salman, H.M.; Ahmad, W.F.W.; Sulaiman, S. Usability evaluation of the smartphone user interface in supporting elderly users from experts’ perspective. IEEE Access 2018, 6, 22578–22591. [Google Scholar] [CrossRef]

- Weichbroth, P. Usability of mobile applications: A systematic literature study. IEEE Access 2020, 8, 55563–55577. [Google Scholar] [CrossRef]

- ISO 9241-11:1998(en); Ergonomic Requirements for Office Work with Visual Display Terminals (VDTs)—Part 11: Guidance on Usability. ISO: Geneva, Switzerland, 1998. Available online: https://www.iso.org/obp/ui/#iso:std:iso:9241:-11:ed-1:v1:en (accessed on 9 August 2025).

- Daif, A.; Dahroug, A.T.; López-Nores, M.; González-Soutelo, S.; Bassani, M.; Antoniou, A.; Gil-Solla, A.; Ramos-Cabrer, M.; Pazos-Arias, J.J. A mobile app to learn about cultural and historical associations in a closed loop with humanities experts. Appl. Sci. 2018, 9, 9. [Google Scholar] [CrossRef]

- Weichbroth, P. Usability attributes revisited: A time-framed knowledge map. In Proceedings of the IEEE 2018 Federated Conference on Computer Science and Information Systems (FedCSIS), Poznań, Poland, 9–12 September 2018; pp. 1005–1008. [Google Scholar]

- Acosta-Vargas, P.; Salvador-Acosta, B.; Salvador-Ullauri, L.; Villegas-Ch, W.; Gonzalez, M. Accessibility in native mobile applications for users with disabilities: A scoping review. Appl. Sci. 2021, 11, 5707. [Google Scholar] [CrossRef]

- Iakovets, A.; Balog, M.; Židek, K. The use of mobile applications for sustainable development of SMEs in the context of Industry 4.0. Appl. Sci. 2022, 13, 429. [Google Scholar] [CrossRef]

- Lee, H.J.; Lee, J.S.; Jee, E.; Bae, D.H. A user experience evaluation framework for mobile usability. Int. J. Softw. Eng. Knowl. Eng. 2017, 27, 235–279. [Google Scholar] [CrossRef]

- Au, F.T.; Baker, S.; Warren, I.; Dobbie, G. Automated usability testing framework. In Proceedings of the Ninth Conference on Australasian User Interface, Wollongong, Australia, 1 January 2008; Volume 76, pp. 55–64. [Google Scholar]

- Lee, K.B.; Grice, R.A. Developing a new usability testing method for mobile devices. In Proceedings of the IEEE International Professional Communication Conference, IPCC 2004, Minneapolis, MN, USA, 29 September–2 October 2004; pp. 115–127. [Google Scholar]

- Kluth, W.; Krempels, K.H.; Samsel, C. Automated usability testing for mobile applications. In Proceedings of the International Conference on Web Information Systems and Technologies, SCITEPRESS, Barcelona, Spain, 3–5 April 2014; Volume 2, pp. 149–156. [Google Scholar]

- Ma, X.; Yan, B.; Chen, G.; Zhang, C.; Huang, K.; Drury, J.; Wang, L. Design and implementation of a toolkit for usability testing of mobile apps. Mob. Netw. Appl. 2013, 18, 81–97. [Google Scholar] [CrossRef]

- Arif, K.S.; Ali, U. Mobile Application testing tools and their challenges: A comparative study. In Proceedings of the IEEE 2019 2nd International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 30–31 January 2019; pp. 1–6. [Google Scholar]

- Huang, Z.; Benyoucef, M. A systematic literature review of mobile application usability: Addressing the design perspective. Univers. Access Inf. Soc. 2023, 22, 715–735. [Google Scholar] [CrossRef]

- Kumar, B.A.; Chand, S.S.; Goundar, M.S. Usability testing of mobile learning applications: A systematic mapping study. Int. J. Inf. Learn. Technol. 2024, 41, 113–129. [Google Scholar] [CrossRef]

- Akmal Muhamat, N.; Hasan, R.; Saddki, N.; Mohd Arshad, M.R.; Ahmad, M. Development and usability testing of mobile application on diet and oral health. PLoS ONE 2021, 16, e0257035. [Google Scholar] [CrossRef]

- Storm, M.; Fjellså, H.M.H.; Skjærpe, J.N.; Myers, A.L.; Bartels, S.J.; Fortuna, K.L. Usability testing of a mobile health application for self-management of serious mental illness in a Norwegian community mental health setting. Int. J. Environ. Res. Public Health 2021, 18, 8667. [Google Scholar] [CrossRef] [PubMed]

- Yánez-Pérez, I.; Toma, R.B.; Meneses-Villagrá, J.Á. Design and usability evaluation of a mobile app for elementary school inquiry-based science learning. Sch. Sci. Math. 2025, 125, 247–258. [Google Scholar] [CrossRef]

- Weichbroth, P. Usability testing of mobile applications: A methodological framework. Appl. Sci. 2024, 14, 1792. [Google Scholar] [CrossRef]

- Ballard, B. Designing the Mobile User Experience; John Wiley & Sons: Hoboken, NJ, USA, 2007. [Google Scholar]

- Xiong, J.; Acemyan, C.Z.; Kortum, P. SUSapp: A Free Mobile Application That Makes the System Usability Scale (SUS) Easier to Administer. J. Usability Stud. 2020, 15, 135–144. [Google Scholar]

- La, H.J.; Lee, H.J.; Kim, S.D. An efficiency-centric design methodology for mobile application architectures. In Proceedings of the 2011 IEEE 7th International Conference on Wireless and Mobile Computing, Networking and Communications (WiMob), Shanghai, China, 10–12 October 2011; pp. 272–279. [Google Scholar]

- Al-Sakran, H.O.; Alsudairi, M.A. Usability and accessibility assessment of Saudi Arabia mobile e-government websites. IEEE Access 2021, 9, 48254–48275. [Google Scholar] [CrossRef]

- Palyama, D.G.; Tomasila, G. An Important Aspect of Satisfaction on Mobile Apps: An Usability Evaluation Based on Gender. JINAV J. Inf. Vis. 2022, 3, 93–98. [Google Scholar] [CrossRef]

- Hajesmaeel-Gohari, S.; Khordastan, F.; Fatehi, F.; Samzadeh, H.; Bahaadinbeigy, K. The most used questionnaires for evaluating satisfaction, usability, acceptance, and quality outcomes of mobile health. BMC Med. Inform. Decis. Mak. 2022, 22, 22. [Google Scholar] [CrossRef]

- Iacob, C.; Harrison, R. Retrieving and analyzing mobile apps feature requests from online reviews. In Proceedings of the IEEE 2013 10th Working Conference on Mining Software Repositories (MSR), San Francisco, CA, USA, 18–19 May 2013; pp. 41–44. [Google Scholar]

- Iacob, C.; Veerappa, V.; Harrison, R. What are you complaining about?: A study of online reviews of mobile applications. In Proceedings of the 27th International BCS Human Computer Interaction Conference (HCI 2013), BCS Learning & Development, London, UK, 9–13 September 2013. [Google Scholar]

- Ismail, N.; Ahmad, F.; Kamaruddin, N.; Ibrahim, R. A review on usability issues in mobile applications. IOSR J. Mob. Comput. Appl. 2016, 3, 47–52. [Google Scholar]

- Weichbroth, P.; Baj-Rogowska, A. Do online reviews reveal mobile application usability and user experience? The case of WhatsApp. In Proceedings of the IEEE 2019 Federated Conference on Computer Science and Information Systems (FedCSIS), Leipzig, Germany, 1–4 September 2019; pp. 747–754. [Google Scholar]

- Statista. Number of Mobile Game Users Worldwide from 2017 to 2030 (in Billions). 2025. Available online: https://www.statista.com/forecasts/667694/number-mobile-gamers-worldwide (accessed on 28 August 2025).

- Ho, S.C.; Tu, Y.C. The investigation of online reviews of mobile games. In Proceedings of the Workshop on E-Business, Shanghai, China, 4 December 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 130–139. [Google Scholar]

- Weichbroth, P. Usability issues with mobile applications: Insights from practitioners and future research directions. IEEE Access 2025, 13, 91301–91311. [Google Scholar] [CrossRef]

- Neil, T. Mobile Design Pattern Gallery: UI Patterns for Smartphone Apps; O’Reilly Media, Inc.: Cambridge, MA, USA, 2014. [Google Scholar]

- Grill, T.; Biel, B.; Gruhn, V. A pattern approach to mobile interaction design. Inf. Technol. 2009, 51, 93–101. [Google Scholar]

- Punchoojit, L.; Hongwarittorrn, N. Usability studies on mobile user interface design patterns: A systematic literature review. Adv. Hum.-Comput. Interact. 2017, 2017, 6787504. [Google Scholar] [CrossRef]

- da Silva, L.F.; Parreira Junior, P.A.; Freire, A.P. Mobile user interaction design patterns: A systematic mapping study. Information 2022, 13, 236. [Google Scholar] [CrossRef]

- Genc-Nayebi, N.; Abran, A. A systematic literature review: Opinion mining studies from mobile app store user reviews. J. Syst. Softw. 2017, 125, 207–219. [Google Scholar] [CrossRef]

- Aggarwal, C. Documenting the Right Things: Writing Documentation for Mobile App Development. 2024. Available online: https://chetan-aggarwal.medium.com/documenting-the-right-things-writing-documentation-for-mobile-app-development-5f0f024fa5ef (accessed on 9 July 2025).

- Mykhailov, M. How to Write a Proper Mobile App Requirements Document in 5 Steps. 2025. Available online: https://nix-united.com/blog/how-to-write-a-proper-mobile-app-requirements-document-in-5-steps/ (accessed on 9 July 2025).

- Kortum, F.; Klünder, J.; Schneider, K. Miscommunication in software projects: Early recognition through tendency forecasts. In Proceedings of the International Conference on Product-Focused Software Process Improvement, Trondheim, Norway, 22–24 November 2016; Springer: Cham, Switzerland, 2016; pp. 731–738. [Google Scholar]

- De Paolis, L.T.; Gatto, C.; Corchia, L.; De Luca, V. Usability, user experience and mental workload in a mobile Augmented Reality application for digital storytelling in cultural heritage. Virtual Real. 2023, 27, 1117–1143. [Google Scholar] [CrossRef]

- Chan, M. Storytelling in UX Work: Study Guide. 2024. Available online: https://www.nngroup.com/articles/storytelling-study-guide/ (accessed on 8 August 2025).

- Velhinho, A.; Quintero, E.; Pedro, L.; Almeida, P. Usability Study of a Mobile Platform for Community Memory Sharing and Audiovisual Storytelling. In Proceedings of the Iberoamerican Conference on Applications and Usability of Interactive TV, Santo Domingo, Dominican Republic, 13–15 November 2024; Springer: Cham, Switzerland, 2024; pp. 122–138. [Google Scholar]

- Mao, J.Y.; Vredenburg, K.; Smith, P.W.; Carey, T. The state of user-centered design practice. Commun. ACM 2005, 48, 105–109. [Google Scholar] [CrossRef]

- Trujillo-Lopez, L.A.; Raymundo-Guevara, R.A.; Morales-Arevalo, J.C. User-Centered Design of a Computer Vision System for Monitoring PPE Compliance in Manufacturing. Computers 2025, 14, 312. [Google Scholar] [CrossRef]

- Lopes, A.; Valentim, N.; Moraes, B.; Zilse, R.; Conte, T. Applying user-centered techniques to analyze and design a mobile application. J. Softw. Eng. Res. Dev. 2018, 6, 5. [Google Scholar] [CrossRef]

- Singh, V.P. Golden Rules for Mobile System Design Interviews. Mobile System Design Explained in Detail with Examples. 2024. Available online: https://medium.com/@engineervishvnath/golden-rules-for-mobile-system-design-interviews-bd7b71e4f454 (accessed on 9 August 2025).

- Sky Web Design Technologies. How to Balance Performance and Design in Mobile App Development. 2024. Available online: https://www.linkedin.com/pulse/how-balance-performance-design-mobile-app-gyzic/ (accessed on 9 July 2025).

- Cheng, L.C. The mobile app usability inspection (MAUi) framework as a guide for minimal viable product (MVP) testing in lean development cycle. In Proceedings of the 2nd International Conference in HCI and UX Indonesia 2016, Jakarta, Indonesia, 13–15 April 2016; pp. 1–11. [Google Scholar]

- Yang, M.; Gao, Q.; Wang, X. A comprehensive learnability framework for mobile application design for older adults. Univers. Access Inf. Soc. 2024, 24, 1393–1423. [Google Scholar] [CrossRef]

- Zhang, T.; Rau, P.L.P.; Salvendy, G.; Zhou, J. Comparing low and high-fidelity prototypes in mobile phone evaluation. Int. J. Technol. Diffus. (IJTD) 2012, 3, 1–19. [Google Scholar] [CrossRef]

- Kanai, S.; Horiuchi, S.; Kikuta, Y.; Yokoyama, A.; Shiroma, Y. An integrated environment for testing and assessing the usability of information appliances using digital and physical mock-ups. In Proceedings of the International Conference on Virtual Reality, Beijing, China, 22–27 July 2007; Springer: Berlin/Heidelberg, Germany, 2007; pp. 478–487. [Google Scholar]

- Radziszewski, K.; Anacka, H.; Obracht-Prondzyńska, H.; Tomczak, D.; Wereszko, K.; Weichbroth, P. Greencoin: Prototype of a mobile application facilitating and evidencing pro-environmental behavior of citizens. Procedia Comput. Sci. 2021, 192, 2668–2677. [Google Scholar] [CrossRef]

- Wirtz, S.; Jakobs, E.M. Improving user experience for passenger information systems. Prototypes and reference objects. IEEE Trans. Prof. Commun. 2013, 56, 120–137. [Google Scholar] [CrossRef]

- The World Wide Web Consortium. Web Content Accessibility Guidelines (WCAG) 2.1. 2025. Available online: https://www.w3.org/TR/WCAG21/ (accessed on 8 August 2025).

- Halpin, M. Understanding the POUR Accessibility Principles. 2025. Available online: https://reciteme.com/news/pour-accessibility-principles/ (accessed on 8 August 2025).

- Ballantyne, M.; Jha, A.; Jacobsen, A.; Hawker, J.S.; El-Glaly, Y.N. Study of accessibility guidelines of mobile applications. In Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia, Cairo, Egypt, 25–28 November 2018; pp. 305–315. [Google Scholar]

- Design Develop Now. Stop Losing Users: How Mobile App Responsive Design Saves You. 2025. Available online: https://designdevelopnow.com/blog/mobile-app-responsive-design/ (accessed on 9 August 2025).

- Almeida, F.; Monteiro, J. The Role of Responsive Design in Web Development. Webology 2017, 14, 48–65. [Google Scholar]

- Martin, K.; Shilton, K. Putting mobile application privacy in context: An empirical study of user privacy expectations for mobile devices. Inf. Soc. 2016, 32, 200–216. [Google Scholar] [CrossRef]

- Alkindi, Z.R.; Sarrab, M.; Alzeidi, N. User privacy and data flow control for android apps: Systematic literature review. J. Cyber Secur. Mobil. 2021, 10, 261–304. [Google Scholar] [CrossRef]

- Lee, M.; Kim, G.J. On applying experience sampling method to a/b testing of mobile applications: A case study. In Proceedings of the IFIP Conference on Human-Computer Interaction, Bamberg, Germany, 14–18 September 2015; Springer: Cham, Switzerland, 2015; pp. 203–210. [Google Scholar]

- Samuel, T.; Pfahl, D. Problems and solutions in mobile application testing. In Proceedings of the International Conference on Product-Focused Software Process Improvement, Trondheim, Norway, 22–24 November 2016; Springer: Cham, Switzerland, 2016; pp. 249–267. [Google Scholar]

- Neusesser, T. A/B Testing 101. 2024. Available online: https://www.nngroup.com/articles/ab-testing/ (accessed on 13 September 2025).

- Glance. How Many Users Do I Need For Effective App Testing? 2024. Available online: https://thisisglance.com/learning-centre/how-many-users-do-i-need-for-effective-app-testing (accessed on 14 September 2025).

- Samrgandi, N. User interface design & evaluation of mobile applications. Int. J. Comput. Sci. Netw. Secur. 2021, 21, 55–63. [Google Scholar]

- Adinata, M.; Liem, I. A/B test tools of native mobile application. In Proceedings of the IEEE 2014 International Conference on Data and Software Engineering (ICODSE), Bandung, Indonesia, 26–27 November 2014; pp. 1–6. [Google Scholar]

- Krüger, J.D. Optimizing e-commerce relaunches: A rigorous economic framework employing a/b testing for enhanced conversion rates and risk mitigation. Preprint 2025. [Google Scholar] [CrossRef]

- King, R.; Churchill, E.F.; Tan, C. Designing with Data: Improving the User Experience with A/B Testing; O’Reilly Media, Inc.: Cambridge, MA, USA, 2017. [Google Scholar]

- Goswami, A.; Han, W.; Wang, Z.; Jiang, A. Controlled experiments for decision-making in e-Commerce search. In Proceedings of the 2015 IEEE International Conference on Big Data (Big Data), Santa Clara, CA, USA, 29 October–1 November 2015; pp. 1094–1102. [Google Scholar]

- Saleh, A.M.; Ismail, R.B. The Impact of Dynamic Prototype on Usability Testing and User Satisfaction: Fidelity and In-situ Prototyping. In Proceedings of the 3rd Global Summit on Education GSE 2015, Kuala Lumpur, Malaysia, 9–10 March 2015. [Google Scholar]

- Kim, S.Y.; Lee, Y. Using High Fidelity Interactive Prototypes for Effective Communication to Create an Enterprise Mobile Application. In Proceedings of the International Conference on Applied Human Factors and Ergonomics, Virtual, 16–20 July 2020; Springer: Cham, Switzerland, 2020; pp. 173–178. [Google Scholar]

- Nielsen, J. 10 Usability Heuristics for User Interface Design. 1994. Available online: https://www.nngroup.com/articles/ten-usability-heuristics/ (accessed on 8 August 2025).

- Global App Testing. The Ultimate Guide to Smoke Testing. 2024. Available online: https://www.globalapptesting.com/blog/the-ultimate-guide-to-smoke-testing (accessed on 20 September 2025).

- Herbold, S.; Haar, T. Smoke testing for machine learning: Simple tests to discover severe bugs. Empir. Softw. Eng. 2022, 27, 45. [Google Scholar] [CrossRef]

- Reshma, S.; Mohan Kumar, H.; Manu, A. Smoke Test Execution in Software Application Testing. In Proceedings of the 4th International Conference on Emerging Research in Electronics, Computer Science and Technology, ICERECT, Mandya, India, 26–27 December 2022; pp. 26–27. [Google Scholar]

- Pérez-Fernández, L.; Sebastián, M.A.; González-Gaya, C. Methodology to optimize quality costs in manufacturing based on multi-criteria analysis and lean strategies. Appl. Sci. 2022, 12, 3295. [Google Scholar] [CrossRef]

- Six Sigma Development Solution. Impact Effort Matrix: Prioritize Projects with Our Simple Guide. 2025. Available online: https://sixsigmadsi.com/what-is-an-impact-effort-matrix-how-does-it-work/ (accessed on 16 September 2025).

- Gök, S. Design the Best UX with These 7 Guidelines. 2022. Available online: https://www.iienstitu.com/en/blog/design-the-best-ux-with-these-7-guidelines (accessed on 9 July 2025).

- Teka, D.; Dittrich, Y.; Kifle, M.; Ardito, C.; Lanzilotti, R. User involvement and usability evaluation in Ethiopian software organizations. Electron. J. Inf. Syst. Dev. Ctries. 2017, 83, 1–19. [Google Scholar] [CrossRef]

- Nilsson, E.G. Design patterns for user interface for mobile applications. Adv. Eng. Softw. 2009, 40, 1318–1328. [Google Scholar] [CrossRef]

- Dutson, P. Responsive Mobile Design: Designing for Every Device; Addison-Wesley Professional: Boston, MA, USA, 2014. [Google Scholar]

- Patel, J.; Gershoni, G.; Krishnan, S.; Nelimarkka, M.; Nonnecke, B.; Goldberg, K. A case study in mobile-optimized vs. responsive web application design. In Proceedings of the 17th International Conference on Human-Computer Interaction with Mobile Devices and Services Adjunct, Copenhagen, Denmark, 24–27 August 2015; pp. 576–581. [Google Scholar]

- Parlakkiliç, A. Evaluating the effects of responsive design on the usability of academic websites in the pandemic. Educ. Inf. Technol. 2022, 27, 1307–1322. [Google Scholar] [CrossRef] [PubMed]

- de Paula, D.F.; Menezes, B.H.; Araújo, C.C. Building a quality mobile application: A user-centered study focusing on design thinking, user experience and usability. In Proceedings of the International Conference of Design, User Experience, and Usability, Heraklion, Greece, 22–27 June 2014; Springer: Cham, Switzerland, 2014; pp. 313–322. [Google Scholar]

- Sedlmayr, B.; Schöffler, J.; Prokosch, H.U.; Sedlmayr, M. User-centered design of a mobile medication management. Inform. Health Soc. Care 2019, 44, 152–163. [Google Scholar] [CrossRef] [PubMed]

- Quezada, P.; Cueva, R.; Paz, F. A systematic review of user-centered design techniques applied to the design of mobile application user interfaces. In Proceedings of the International Conference on Human-Computer Interaction, Virtual, 24–29 July 2021; Springer: Cham, Switzerland, 2021; pp. 100–114. [Google Scholar]

- Jurgensen, C. Motivation in lifestyle changes: Using mock-ups as a tool for exploring the design space. In Proceedings of the IEEE 2011 5th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth) and Workshops, Dublin, Ireland, 23–26 May 2011; pp. 572–576. [Google Scholar]

- Jouppila, T.; Tiainen, T. Nurses’ Participation in the design of an intensive care unit: The use of virtual mock-ups. HERD Health Environ. Res. Des. J. 2021, 14, 301–312. [Google Scholar] [CrossRef]

- Duan, P.; Warner, J.; Li, Y.; Hartmann, B. Generating automatic feedback on ui mockups with large language models. In Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 11–16 May 2024; pp. 1–20. [Google Scholar]

- Kryszajtys, D.T.; Rudzinski, K.; Chan Carusone, S.; Guta, A.; King, K.; Strike, C. Do mock-ups, presentations of evidence, and Q&As help participants voice their opinions during focus groups and interviews about supervised injection services? Int. J. Qual. Methods 2021, 20, 16094069211033439. [Google Scholar]

- Huq, S.F.; Tafreshipour, M.; Kalcevich, K.; Malek, S. Automated Generation of Accessibility Test Reports from Recorded User Transcripts. In Proceedings of the 2025 IEEE/ACM 47th International Conference on Software Engineering (ICSE), Ottawa, ON, Canada, 27 April–3 May 2025; IEEE Computer Society: Los Alamitos, CA, USA, 2025; pp. 534–546. [Google Scholar]

- World Health Organization. Disability. 2023. Available online: https://www.who.int/news-room/fact-sheets/detail/disability-and-health (accessed on 17 September 2025).

- Assal, H.; Hurtado, S.; Imran, A.; Chiasson, S. What’s the deal with privacy apps? A comprehensive exploration of user perception and usability. In Proceedings of the 14th International Conference on Mobile and Ubiquitous Multimedia, Linz, Austria, 30 November–2 December 2015; pp. 25–36. [Google Scholar]

- Amaral Cejas, O.; Abualhaija, S.; Sannier, N.; Ceci, M.; Bianculli, D. GDPR Compliance in Privacy Policies of Mobile Apps: An Overview of the State-of-Practice. In Proceedings of the 33rd IEEE International Requirements Engineering 2025 Conference, Valencia, Spain, 1–5 September 2025. [Google Scholar]

- Asuquo, P.; Cruickshank, H.; Morley, J.; Ogah, C.P.A.; Lei, A.; Hathal, W.; Bao, S.; Sun, Z. Security and privacy in location-based services for vehicular and mobile communications: An overview, challenges, and countermeasures. IEEE Internet Things J. 2018, 5, 4778–4802. [Google Scholar] [CrossRef]

- Aïmeur, E.; Lawani, O.; Dalkir, K. When changing the look of privacy policies affects user trust: An experimental study. Comput. Hum. Behav. 2016, 58, 368–379. [Google Scholar] [CrossRef]

- Svanæs, D.; Alsos, O.A.; Dahl, Y. Usability testing of mobile ICT for clinical settings: Methodological and practical challenges. Int. J. Med. Inform. 2010, 79, e24–e34. [Google Scholar] [CrossRef]

- Hassanaly, P.; Dufour, J.C. Analysis of the regulatory, legal, and medical conditions for the prescription of mobile health applications in the United States, the European Union, and France. Med. Devices Evid. Res. 2021, 14, 389–409. [Google Scholar] [CrossRef] [PubMed]

- Schleser, M. Mobile Storytelling: Changes, Challenges and Chances. In Mobile Storytelling in an Age of Smartphones; Springer: Cham, Switzerland, 2022; pp. 9–24. [Google Scholar]

- Zhu, D.; Al Mahmud, A.; Liu, W.; Wang, D. Digital storytelling for people with cognitive impairment using available mobile apps: Systematic search in app stores and content analysis. JMIR Aging 2024, 7, e64525.

- Yoo, H.K.; Kim, E.J.; Yoon, Y.S.; Lee, H.R.; Kwon, S.W. Factors of Storytelling that Effect on Visitors’ Satisfaction and Loyalty. In Proceedings of the 5th Advances in Hospitality & Tourism Marketing and Management (AHTMM) Conference, Beppu, Japan, 18–21 June 2015; pp. 543–548.

- Ojonugwa, B.M.; Ogunwale, B.; Adanigbo, O.S.; Ochefu, A. Media Production in Fintech: Leveraging Visual Storytelling to Enhance Consumer Trust and Engagement. J. Front. Multidiscip. Res. 2022, 3, 425–431.

- Salvietti, G. Fostering loyalty through engagement and gamification. In Loyalty Management; Routledge: Oxfordshire, UK, 2025; pp. 163–181.

- Thefinchdesignagency. The Role of Storytelling in UX Design: How to Create a Narrative. 2024. Available online: https://medium.com/@thefinchdesignagency/the-role-of-storytelling-in-ux-design-how-to-create-a-narrative-1c5809889a34 (accessed on 17 September 2025).

- Redlarski, K.; Weichbroth, P. Hard lessons learned: Delivering usability in IT projects. In Proceedings of the IEEE 2016 Federated Conference on Computer Science and Information Systems (FedCSIS), Gdansk, Poland, 11–14 September 2016; pp. 1379–1382.

- Balagtas-Fernandez, F.; Hussmann, H. A methodology and framework to simplify usability analysis of mobile applications. In Proceedings of the 2009 IEEE/ACM International Conference on Automated Software Engineering, Auckland, New Zealand, 16–20 November 2009; pp. 520–524.

- Zou, T.; Ertug, G. Unwitting participants at our expense: A/B testing and digital exploitation. Bus. Soc. 2024, 63, 1513–1517.

- Lettner, F.; Holzmann, C.; Hutflesz, P. Enabling A/B testing of native mobile applications by remote user interface exchange. In Proceedings of the International Conference on Computer Aided Systems Theory, Las Palmas de Gran Canaria, Spain, 10–15 February 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 458–466.

- Zarzosa, J. Adopting a design-thinking multidisciplinary learning approach: Integrating mobile applications into a marketing research course. Mark. Educ. Rev. 2018, 28, 120–125.

| Sex | Age | Education | Current Occupation | Experience (years) | Projects | Words |
|---|---|---|---|---|---|---|
| Man | 39 | Higher | Front-End Developer | 16 | 4 | 322 |
| Man | 26 | Higher | Product Designer | 5 | 2 | 200 |
| Man | 42 | Higher | UX Designer | 17 | 25 | 172 |
| Woman | 30 | Higher | UX Designer | 8 | 9 | 163 |
| Woman | 26 | Higher | UX Researcher | 4 | 3 | 160 |
| Man | 25 | Higher | Senior Data Engineer | 6 | 3 | 107 |
| Man | 29 | Higher | IT Team Leader | 3 | 2 | 103 |
| Woman | 46 | Higher | Mobile Front-End Dev. | 20 | 15 | 97 |
| Woman | 33 | Higher | UX Designer | 3 | 3 | 92 |
| Man | 26 | Secondary | Front-End Developer | 4 | 3 | 85 |
| Man | 37 | Higher | IT Consultant | 20 | 5 | 81 |
| Woman | 41 | Higher | UX Writer | 20 | 3 | 73 |
| Woman | 45 | Higher | UX Designer | 20 | 19 | 67 |
| Man | 22 | Higher | Mobile Software Dev. | 2 | 2 | 67 |
| Woman | 42 | Higher | UX Writer | 16 | 2 | 62 |
| Woman | 26 | Higher | UX Designer | 3 | 5 | 50 |
| Man | 31 | Secondary | UX Designer | 7 | 3 | 48 |
| Woman | 56 | Higher | Software Developer | 20 | 15 | 45 |
| Woman | 41 | Higher | Technical Writer | 16 | 2 | 15 |
| Man | 42 | Higher | CPO | 10 | 2 | 12 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Weichbroth, P.; Szot, T. The MUG-10 Framework for Preventing Usability Issues in Mobile Application Development. Appl. Sci. 2025, 15, 11995. https://doi.org/10.3390/app152211995

Weichbroth P, Szot T. The MUG-10 Framework for Preventing Usability Issues in Mobile Application Development. Applied Sciences. 2025; 15(22):11995. https://doi.org/10.3390/app152211995

Chicago/Turabian Style: Weichbroth, Pawel, and Tomasz Szot. 2025. "The MUG-10 Framework for Preventing Usability Issues in Mobile Application Development" Applied Sciences 15, no. 22: 11995. https://doi.org/10.3390/app152211995

APA Style: Weichbroth, P., & Szot, T. (2025). The MUG-10 Framework for Preventing Usability Issues in Mobile Application Development. Applied Sciences, 15(22), 11995. https://doi.org/10.3390/app152211995