1. Introduction

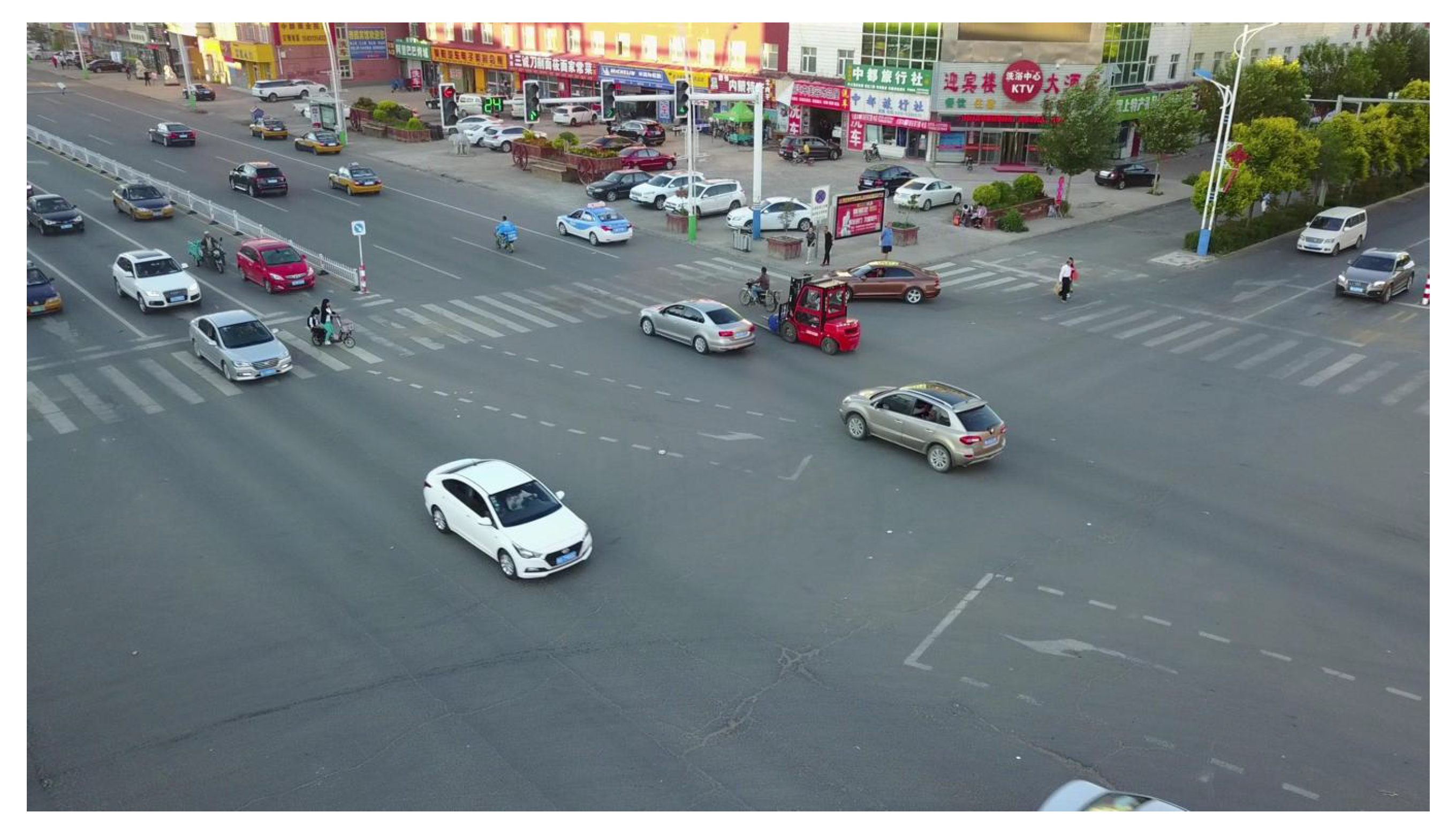

Visual sensor-based unmanned aerial vehicles (UAVs) have been widely deployed in various application scenarios, such as disaster emergency response [1], urban traffic monitoring [2], aerial mapping, and military reconnaissance. The rapid growth of UAV technology has created an increasing demand for effective, high-performance solutions for online object detection in aerial images. Compared with ground-level images, UAV images exhibit several distinctive characteristics that pose strong challenges to traditional computer vision methods: wide scene coverage with cluttered backgrounds, predominantly top-down views with occlusion, scale variations spanning orders of magnitude, objects that occupy only a small fraction of the image, and diverse illumination [3].

UAV platforms impose strict hardware constraints, requiring detection algorithms to achieve millisecond-level inference within milliwatt-level power budgets. These severe resource constraints have led researchers to rethink the design paradigms of deep networks [4]. The imaging characteristics of the bird's-eye perspective also pose difficult pattern recognition problems: target scale can vary by a factor of about one hundred, small targets are densely packed and easily obscured by complex surrounding objects, and target shapes are distorted by the flight attitude, which further complicates recognition [5]. Together, these obstacles substantially increase the difficulty of aerial intelligent perception and call for urgent solutions.

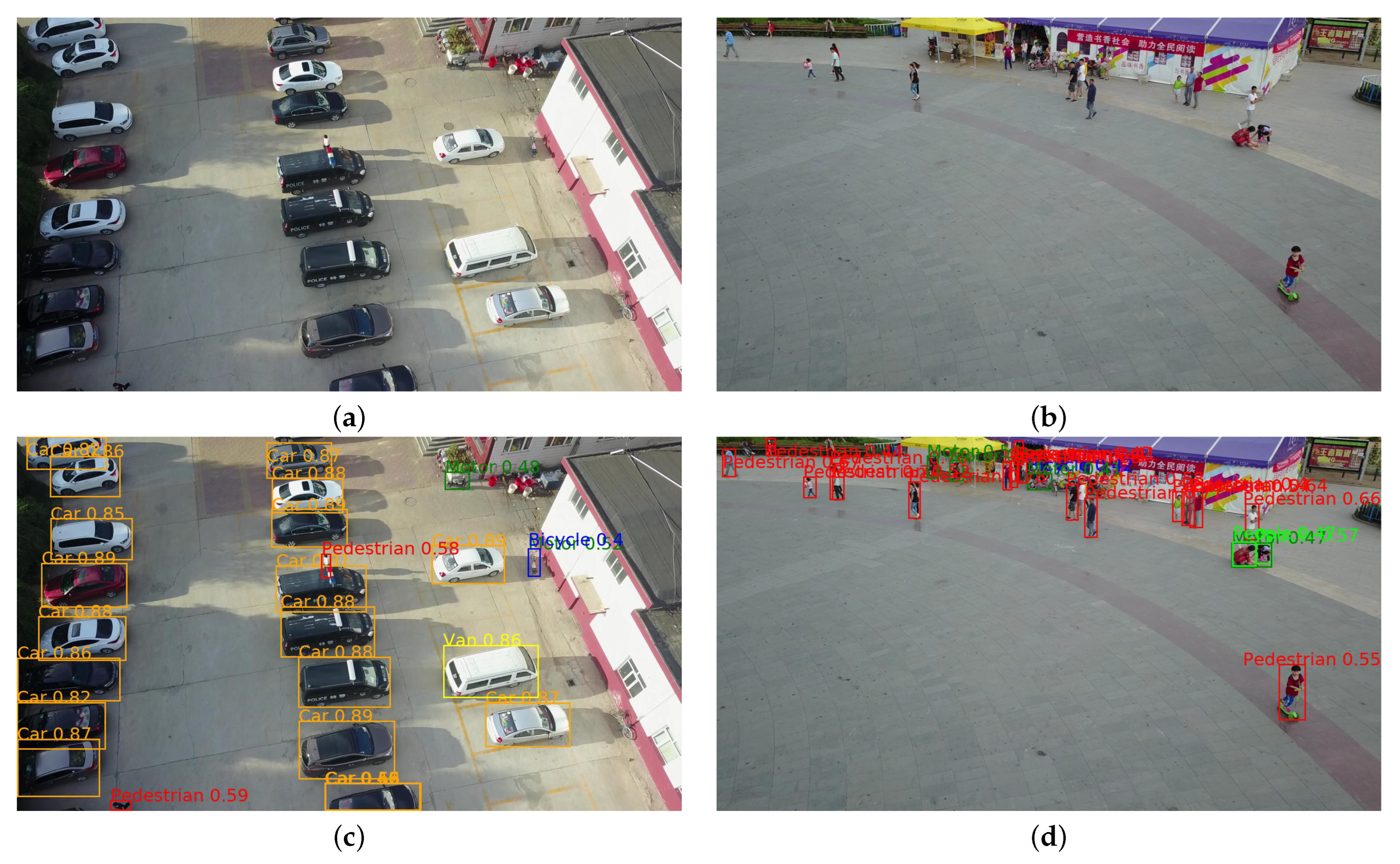

Figure 1 shows a UAV aerial image.

In recent years, object detection techniques have shifted from anchor-box-based methods toward end-to-end learning. Two-stage approaches, such as the region-based convolutional neural network (R-CNN) and its successors Fast R-CNN, Faster R-CNN, and Mask R-CNN [6,7,8,9,10], first generate region proposals and then classify and refine them. They achieve high accuracy but rely on a cascaded pipeline of components and are therefore difficult to run in real time. Single-stage detectors, such as the You Only Look Once (YOLO) series [11,12,13,14,15], make dense predictions in a single pass and substantially improve inference efficiency, striking a balance between speed and accuracy in general scenes. However, these convolutional neural network (CNN)-based methods have intrinsic drawbacks for aerial image processing: the local receptive field of the convolution operation limits the modeling of global context, making it difficult to capture long-range dependencies between objects in aerial scenes. Meanwhile, the non-maximum suppression (NMS) step in post-processing greatly increases the computational cost in dense target areas and becomes the performance bottleneck of real-time detection [16].
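To make the NMS cost argument concrete, the following is a minimal NumPy sketch of classic greedy NMS; it is a generic illustration, not code from this paper, and the function names `iou_one_to_many` and `greedy_nms` are hypothetical. It also counts pairwise IoU evaluations: when boxes rarely suppress one another, as with many small, non-overlapping targets, the count grows as N(N - 1)/2.

```python
import numpy as np


def iou_one_to_many(box, boxes):
    """IoU between one box and an (M, 4) array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)


def greedy_nms(boxes, scores, iou_thr=0.5):
    """Greedy NMS. Returns kept indices and the number of IoU evaluations performed."""
    order = np.argsort(-scores)                 # highest score first
    keep, n_iou = [], 0
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        ious = iou_one_to_many(boxes[best], boxes[rest])
        n_iou += rest.size
        order = rest[ious <= iou_thr]           # drop boxes overlapping the kept one
    return keep, n_iou


boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
print(greedy_nms(boxes, np.array([0.9, 0.8, 0.7])))   # ([0, 2], 2)
```

With 100 disjoint boxes the loop keeps all of them and performs 4,950 IoU evaluations. Vectorized implementations reduce the constant factor, but the pairwise comparisons remain, which is the cost that end-to-end detectors avoid.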

The transformer architecture offers a new route to overcoming these constraints. The detection transformer (DETR) [17] was the first to apply self-attention to object detection. It uses the Hungarian algorithm to perform one-to-one matching between queries and targets, completely discarding anchor box design and NMS post-processing and achieving true end-to-end learning. This simple yet principled design reduces the overhead of the detection pipeline and is advantageous in occluded and crowded scenes. Although the original DETR can be trained to acceptable accuracy, it converges slowly and requires long training times, which limits its practicality on real-world hardware such as smart devices and graphics processing unit (GPU) servers [18].
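The one-to-one assignment used by DETR can be illustrated with a tiny exhaustive search, which returns the same minimum-cost matching that the Hungarian algorithm finds, only far less efficiently. This is a sketch under simplifying assumptions: the cost matrix is given directly (DETR builds it from classification and box costs), and `one_to_one_match` is a hypothetical helper. Practical implementations, including the official DETR code, use an efficient solver such as SciPy's `linear_sum_assignment`.

```python
from itertools import permutations


def one_to_one_match(cost):
    """Minimum-cost one-to-one assignment of targets to queries by exhaustive search.

    cost[q][t] is the matching cost between query q and target t, with at least
    as many queries as targets. Returns ({target: query}, total_cost).
    Only suitable for tiny inputs; the Hungarian algorithm runs in polynomial time.
    """
    n_q, n_t = len(cost), len(cost[0])
    best, best_cost = None, float("inf")
    # Each tuple lists the query assigned to target 0, 1, ..., n_t - 1.
    for queries in permutations(range(n_q), n_t):
        total = sum(cost[q][t] for t, q in enumerate(queries))
        if total < best_cost:
            best, best_cost = queries, total
    return {t: q for t, q in enumerate(best)}, best_cost


cost = [[0.9, 0.2],   # query 0
        [0.1, 0.8],   # query 1
        [0.5, 0.5]]   # query 2
match, total = one_to_one_match(cost)
print(match, round(total, 2))   # {0: 1, 1: 0} 0.3
```

Query 2 receives no target and would be supervised as "no object"; because each target is claimed by exactly one query, duplicate predictions do not need to be removed by NMS.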

The effectiveness of diffusion models as generative models has motivated new investigations in the detection literature. Diffusion model with explicit instance matching (DEIM) [19] introduces instance matching into diffusion denoising and iteratively optimizes target estimates to refine them progressively. Unlike classical one-shot prediction, DEIM adopts a progressive optimization mechanism so that detection evolves from coarse to accurate. Most importantly, DEIM's dense one-to-one (O2O) matching increases the number of targets in training images, achieving supervision density comparable to one-to-many (O2M) assignment while retaining the benefits of one-to-one matching. Combined with its tailored matchability-aware loss, DEIM substantially improves training efficiency: it achieves better detection performance with half the training time.

The backbone of the DEIM model is HGNetv2 (High-Performance GPU Network version 2), which performs feature extraction. For real-time object detection, the HGStage modules in HGNetv2 provide strong feature representation, but the structurally complex HGStage units and the squeeze-and-excitation (SE) attention mechanism are computationally intensive, making the model difficult to deploy on edge devices. Chen et al. [20] showed that overly complex networks significantly increase inference latency while adding little accuracy. Meanwhile, Liu et al. [21] pointed out that a single feature extraction module cannot cope with the simultaneous detection of objects of widely different sizes, as in complex scenes containing multi-scale objects. Several recent works have attempted to improve HGNetv2 in different domains. For instance, an improved YOLOv11n segmentation model has been proposed for lightweight detection and segmentation of crayfish parts [22]; ELS-YOLO [23] and LEAD-YOLO [24] enhance UAV object detection in low-light conditions based on an improved YOLOv11 framework; and HCRP-YOLO, along with other HGNetv2-based approaches such as LEAD-YOLO [24], has been applied to agricultural and inspection tasks, demonstrating the backbone's potential for real-world UAV deployments [25]. However, these improvements are usually tailored to specific application scenarios or environmental constraints and do not provide a general solution to the challenges of multi-scale, cluttered, and resource-constrained UAV imagery. This gap motivates our proposed discriminative correlation filter network (DCFNet) design.

In this paper, we propose a new backbone network, DCFNet, which exploits multi-scale features and background information for better performance. The network integrates the downsampling with reduced feature degradation (DRFD) module, which improves on standard downsampling by retaining more spatial information during resolution reduction, leading to less feature loss. We also propose a feature enrichment module, FasterC3k2, which fuses the C3k2Block (a cross-stage partial network (CSPNet)-style module) with FasterBlock [26] and reduces redundant computation through partial convolution. The network repeats this downsampling-refinement pattern stage by stage, which ensures thorough feature extraction while saving substantial computational resources.

In the neck, the fusion module of DEIM is responsible for multi-scale feature fusion. In UAV imagery, object distribution and scale structure change with the shooting angle. Conventional feature pyramid networks use only simple residual concatenation and re-parameterization blocks (RepBlock [27]), which prevents the model from fully expressing complex features. To solve this problem, this paper proposes the lightweight feature diffusion pyramid network (LFDPN). Through bidirectional top-down and bottom-up propagation paths, LFDPN diffuses context-rich features to the different detection scales. In the first stage, the output of the feature focusing module is fused with the high-resolution and low-resolution feature maps by downsampling and upsampling, respectively, to generate coarse multi-scale feature representations. The second stage then achieves deep feature fusion and complete diffusion of context information through a second feature focusing module (FocusFeature) and a deep feature enhancement submodule (re-parameterized high-level multi-scale, RepHMS). In addition, this paper introduces lightweight adaptive weighted downsampling (LAWDown), which, together with LFDPN's two-stage feature diffusion mechanism, mitigates the information loss caused by scale discrepancies in conventional feature pyramid networks and yields more consistent and expressive multi-scale features.

In summary, the main improvements and innovations of this paper are as follows:

1. To minimize feature loss during downsampling and reduce redundant computation, we design a novel backbone network, DCFNet, for UAV object detection. It introduces the DRFD downsampling module and the FasterC3k2 feature enhancement module composed of C3k2Block and FasterBlock, which strengthens feature extraction while keeping the model lightweight;
2. To enable the model to fully express complex features, we design a novel neck network, LFDPN, which alleviates the information loss caused by scale differences in traditional feature pyramid networks through deep integration of cross-scale features and the FocusFeature module;
3. To balance information preservation and computational efficiency, which traditional convolutional downsampling struggles to achieve, we design LAWDown. It combines an adaptive weighting mechanism with grouped convolution and performs downsampling through dynamic feature selection and efficient channel reorganization, significantly reducing computational complexity while maintaining accuracy.

2. Materials and Methods

2.1. Overview

This section describes our approach, which optimizes the DEIM framework for UAV object detection. The challenges of aerial images, including widely varying scales, densely distributed small targets, and complex backgrounds, call for dedicated architectural designs that reconcile detection accuracy with computational complexity. Our solution addresses these issues comprehensively by introducing targeted enhancements at several stages of the detection pipeline.

Our optimizations are designed at the system level, so that improvements to one component yield synergistic benefits for the whole system. We replace the heavy HGNetv2 backbone with the newly designed DCFNet backbone, a shallower architecture that retains competitive feature learning ability with fewer parameters. The LFDPN feature fusion network adopts a novel bidirectional feature diffusion mechanism to efficiently propagate semantic information and spatial details across scales. To meet the needs of aerial object detection, we combine dedicated modules, namely the DRFD module, the LAWDown sampler, and the FocusFeature enhancer, into a systematic solution.

2.2. Base DEIM Framework

In this section, we first present the base DEIM framework, which serves as the foundation for our improved DL-DEIM model. The base framework establishes the overall detection pipeline and highlights the key modules that enable diffusion-based optimization. By understanding the architecture of the baseline system, we can better motivate the enhancements introduced later.

2.2.1. DEIM Architecture Overview

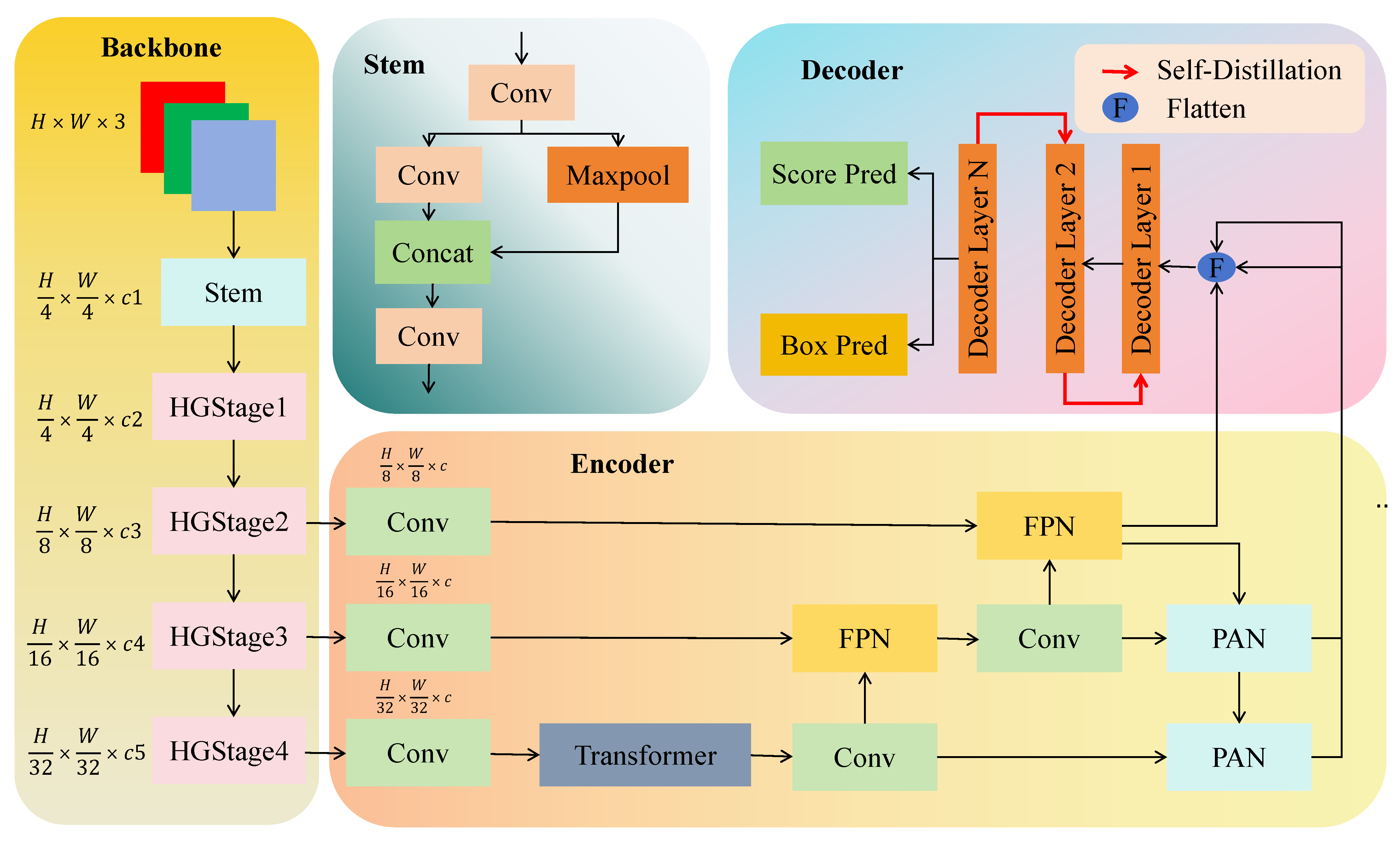

The base DEIM framework adopts a hierarchical architecture for end-to-end object detection, as illustrated in Figure 2. The framework consists of three main components: (1) the HGNetv2 backbone for multi-scale feature extraction, (2) the HybridEncoder for feature enhancement and fusion, and (3) the DFINETransformer decoder with self-distillation for iterative detection refinement.

The pipeline performs progressive feature extraction, multi-scale fusion, and end-to-end detection on UAV images without any additional post-processing (e.g., NMS). Its key feature is the integration of diffusion-based fine-tuning with dense supervision schemes.

2.2.2. HGNetv2 Backbone

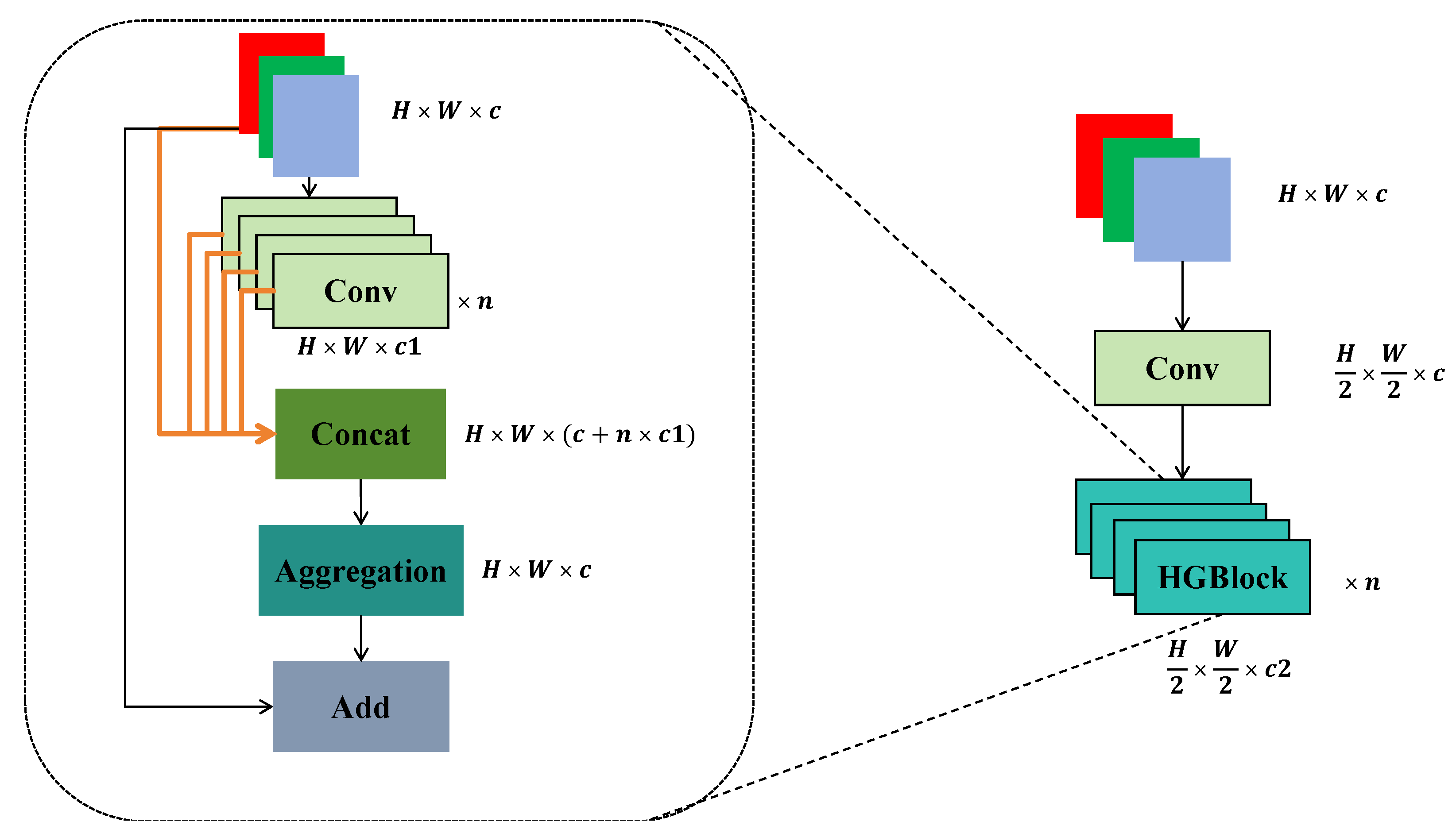

The HGNetv2 backbone realizes hierarchical aggregation through specialized HGStage modules, each composed of multiple HGBlock units responsible for dense feature extraction. The backbone progressively extracts features with increasing receptive fields and semantic abstraction. After the initial Stem block downsamples the input to H/4 × W/4, the network passes through four hierarchical stages (HGStage1–4) that produce feature maps at P2/4, P3/8, P4/16, and P5/32, respectively. Each stage balances resolution reduction against channel expansion, retaining fine-grained local detail for small object detection while building sufficient capacity for the global context needed for semantic understanding. This hierarchical structure provides a complete multi-scale feature representation and facilitates gradient flow through dense connections. Schematic diagrams of the HGStage and HGBlock modules are shown in Figure 3.
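The stride arithmetic of the pyramid levels can be checked with a few lines of Python. The 640 × 640 input size is a hypothetical example rather than a setting stated in this section, and the helper names are illustrative; the sketch also assumes the input is divisible by the largest stride.

```python
def pyramid_shapes(height, width, strides=(4, 8, 16, 32)):
    """Spatial size (rows, cols) of pyramid levels P2..P5 for a height x width input."""
    return {f"P{level}": (height // s, width // s)
            for level, s in zip(range(2, 2 + len(strides)), strides)}


def cells_per_side(object_pixels, stride):
    """How many feature-map cells a square object of the given side length spans."""
    return object_pixels / stride


print(pyramid_shapes(640, 640))
# {'P2': (160, 160), 'P3': (80, 80), 'P4': (40, 40), 'P5': (20, 20)}
print(cells_per_side(16, 4), cells_per_side(16, 32))   # 4.0 0.5
```

A 16 × 16 pixel object spans four cells per side on P2 but only half a cell on P5, which is why high-resolution levels matter for the small targets typical of UAV imagery.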

2.3. Improved DEIM Framework

Building upon the base DEIM framework, we introduce an improved version termed DL-DEIM.

DL-DEIM Architecture

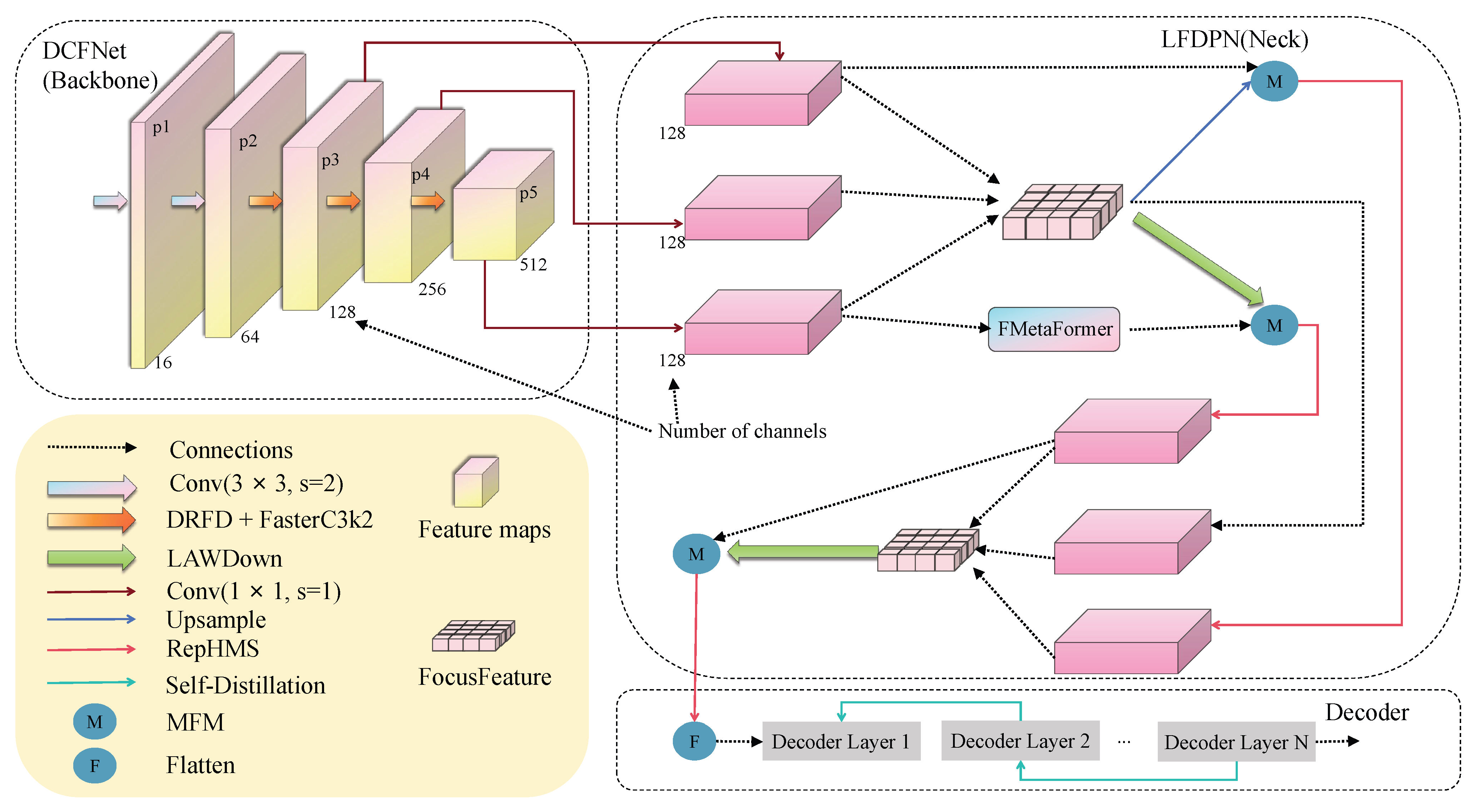

To overcome the deficiencies of the baseline DEIM while retaining its main benefits, we propose architectural improvements across the detector. The enhanced architecture replaces the HGNetv2 backbone with our lightweight DCFNet, introduces the LFDPN neck for better feature fusion, and keeps the powerful DFINETransformer decoder. The structure of the DL-DEIM architecture is illustrated in Figure 4.

As shown in Figure 4, feature maps extracted by the DCFNet backbone are first refined by the DRFD–FasterC3k2 compound block, which balances receptive-field diversity against computational cost. These refined multi-scale features are then propagated to the LFDPN neck for bidirectional diffusion and cross-scale fusion. Finally, the fused representations are passed to the DFINETransformer decoder for detection. The LAWDown module is embedded between LFDPN stages as an adaptive downsampling operator to maintain spatial consistency. This pipeline ensures smooth information flow from shallow to deep layers while minimizing the information loss that hampers small-object detection.

In summary, DL-DEIM processes an image in a clear sequential flow: features are first extracted by the DCFNet backbone, where each stage combines a DRFD unit with a FasterC3k2 refinement block. The resulting multi-scale feature maps (P3–P5) are then passed into the LFDPN neck, which diffuses and fuses semantic and spatial information bidirectionally. Finally, the fused representations are decoded by the DFINETransformer head to produce bounding box and confidence predictions. This structure ensures a consistent data stream and makes the interaction between modules easy to interpret.

2.4. DCFNet Backbone

As a core contribution, we propose a new backbone, DCFNet. In contrast to the computationally heavy HGNetv2, DCFNet is lightweight yet preserves strong feature extraction ability at a dramatically lower computational cost. The backbone adopts a progressive feature extraction strategy with four hierarchical stages, producing multi-scale representations at resolutions H/4 × W/4, H/8 × W/8, H/16 × W/16, and H/32 × W/32, corresponding to feature levels P2, P3, P4, and P5, respectively.

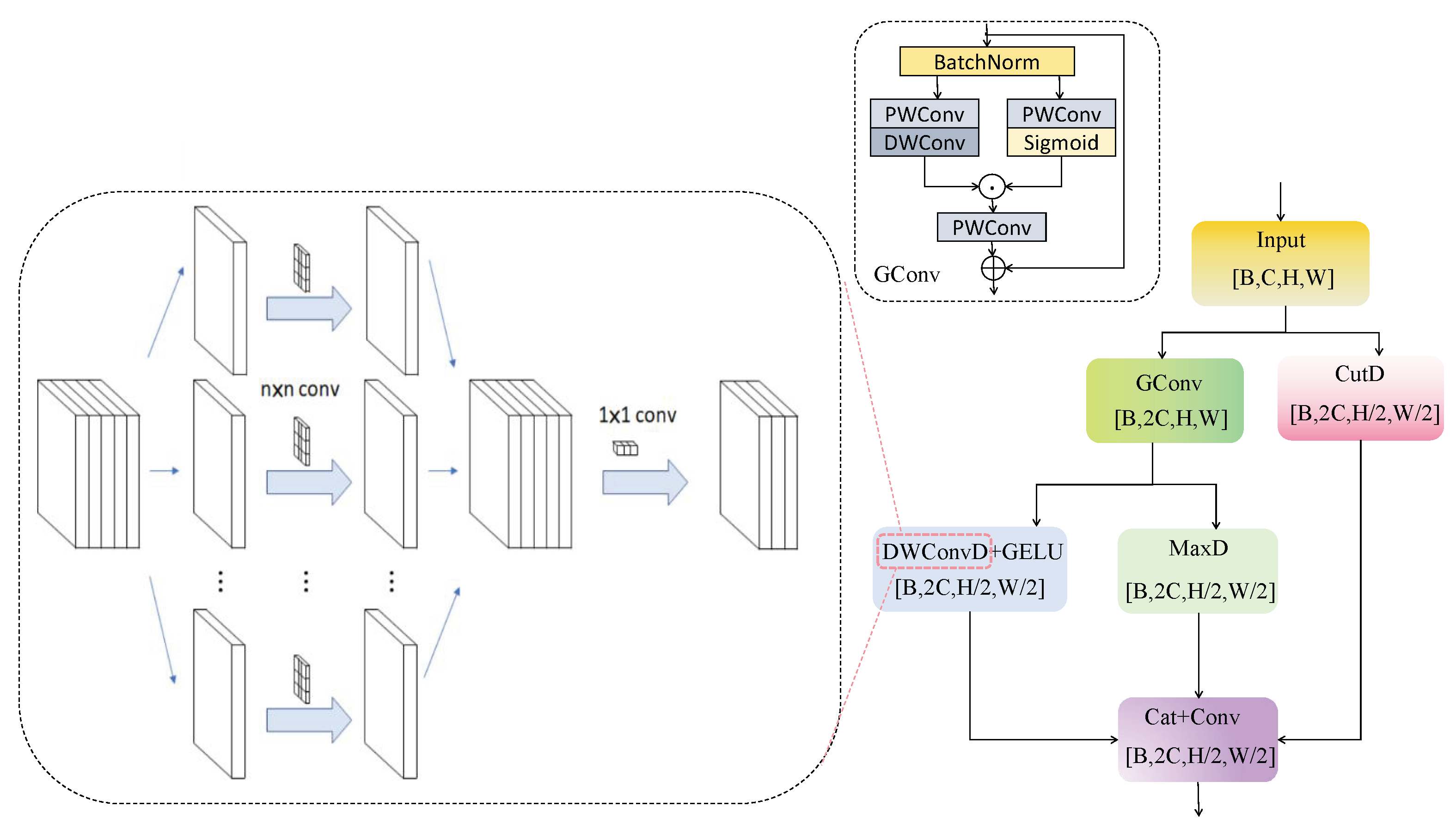

2.4.1. DRFD

Given an input feature map, the DRFD [28] block performs downsampling through three complementary branches (CutD, ConvD, and MaxD), followed by feature fusion. Here, GConv denotes grouped convolution with g groups (depth-wise when g equals the number of input channels), and CutD refers to pixel-rearrangement-based downsampling followed by convolutional fusion.

CutD branch. The input is split into four spatially interleaved sub-tensors, each taking every second pixel along both spatial axes. These sub-tensors are concatenated along the channel dimension and fused by a convolution, yielding a feature map at half the input resolution.

ConvD and MaxD branches. To preserve local context, ConvD applies grouped convolutions with strides 1 and 2, while MaxD captures salient responses via max pooling.

The three outputs are concatenated and fused through a convolution. The three-branch design jointly preserves fine details (CutD), spatial coherence (ConvD), and high-response regions (MaxD), effectively mitigating the information loss of conventional strided downsampling with only marginal computational overhead. The architecture of the DRFD module is illustrated in Figure 5.
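The three-branch DRFD idea can be sketched in NumPy as follows. This is a hedged illustration under stated assumptions, not the implementation from [28]: weights are random, the fusion convolution is assumed to be 1 × 1, ConvD keeps the input channel count, and MaxD is assumed to be 2 × 2 max pooling applied directly to the input; the exact wiring in [28] may differ. All function names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def space_to_depth(x):
    """CutD rearrangement: (C, H, W) -> (4C, H/2, W/2) from four interleaved sub-grids."""
    return np.concatenate(
        [x[:, 0::2, 0::2], x[:, 1::2, 0::2], x[:, 0::2, 1::2], x[:, 1::2, 1::2]], axis=0)


def conv1x1(x, w):
    """Pointwise convolution; w has shape (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", w, x)


def depthwise3x3(x, k, stride=1):
    """Depth-wise 3x3 convolution (grouped conv with g = C), zero padding 1.

    The stride-2 result subsamples the stride-1 output, which equals a padded
    stride-2 convolution.
    """
    c, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((c, h, w))
    for i in range(3):
        for j in range(3):
            out += k[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out[:, ::stride, ::stride]


def maxpool2x2(x):
    """2x2 max pooling with stride 2."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def drfd_sketch(x, c_out):
    """CutD, ConvD, and MaxD branches fused by a 1x1 conv (random, untrained weights)."""
    c = x.shape[0]
    cut = conv1x1(space_to_depth(x), rng.standard_normal((c_out, 4 * c)))
    conv = depthwise3x3(depthwise3x3(x, rng.standard_normal((c, 3, 3))),
                        rng.standard_normal((c, 3, 3)), stride=2)
    mx = maxpool2x2(x)
    fused = np.concatenate([cut, conv, mx], axis=0)   # (c_out + 2c, H/2, W/2)
    return conv1x1(fused, rng.standard_normal((c_out, c_out + 2 * c)))


x = rng.standard_normal((8, 16, 16))
print(drfd_sketch(x, 16).shape)   # (16, 8, 8)
```

Note that `space_to_depth` only rearranges pixels, so no input value is discarded before the fusion convolution, unlike plain strided sampling.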

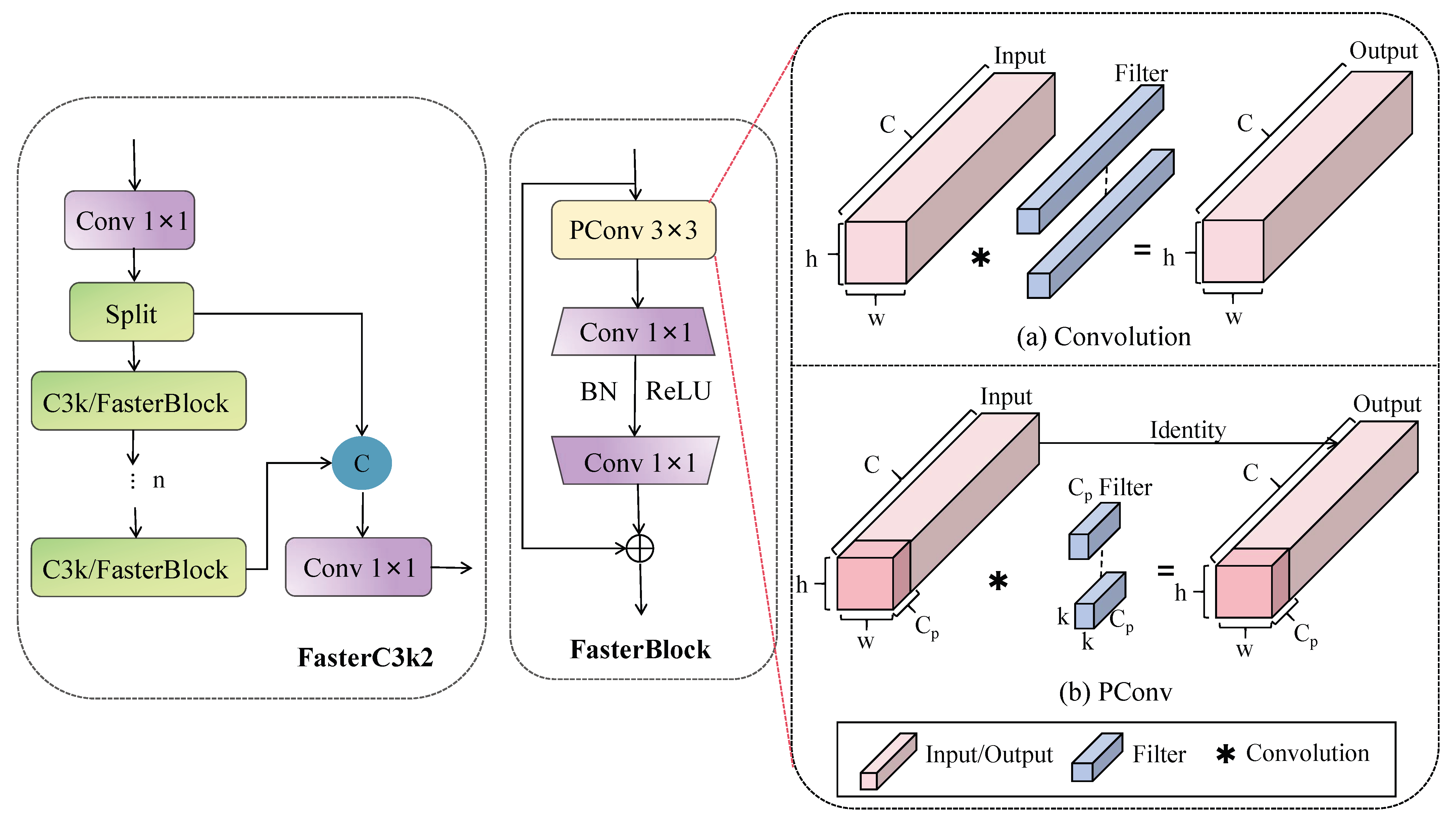

2.4.2. FasterC3k2

As illustrated by the orange arrows in Figure 4, each DRFD module is immediately followed by a FasterC3k2 block, forming a compound downsampling–refinement unit. The DRFD stage performs multi-branch feature reduction, generating a compact yet information-rich representation at reduced spatial resolution. This output serves as the input to the FasterC3k2 block for efficient channel-wise refinement; the compound unit thus applies FasterC3k2 to the DRFD output, with a channel division ratio that controls the fraction of channels processed by partial convolution for efficiency.

This joint design allows DRFD to capture and preserve multi-scale contextual information while FasterC3k2 selectively refines and mixes feature channels with minimal computational overhead. The compound structure strikes a balance between receptive-field diversity and model compactness, improving detection accuracy without increasing complexity.

Within FasterC3k2, the C3k2Block incorporates FasterBlock components for efficient feature processing. FasterBlock employs a partial convolution strategy, processing only a fraction of the channels to reduce computational cost while maintaining representational capacity. The architecture of the FasterC3k2 module is illustrated in Figure 6.
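Partial convolution, the mechanism FasterBlock relies on, can be sketched as below. We assume a division ratio of 4 (one quarter of the channels is convolved), which is the typical setting reported for FasterNet [26]; this section does not state the value used in DL-DEIM. With C/4 convolved channels, a 3 × 3 partial convolution needs (C/4)² / C² = 1/16 of the multiply-accumulates of a full convolution. Function names are hypothetical.

```python
import numpy as np


def conv3x3(x, w):
    """Dense 3x3 convolution with zero padding 1; w has shape (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for i in range(3):
        for j in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, i, j], xp[:, i:i + h, j:j + wd])
    return out


def partial_conv(x, w, ratio=4):
    """Convolve only the first C/ratio channels; the remaining channels pass through."""
    cp = x.shape[0] // ratio
    return np.concatenate([conv3x3(x[:cp], w), x[cp:]], axis=0)


def conv_macs(h, w, k, c_in, c_out):
    """Multiply-accumulate count of a k x k convolution producing an h x w output."""
    return h * w * k * k * c_in * c_out


rng = np.random.default_rng(0)
x = rng.standard_normal((64, 40, 40))
y = partial_conv(x, rng.standard_normal((16, 16, 3, 3)))
print(y.shape)                                                        # (64, 40, 40)
print(conv_macs(40, 40, 3, 64, 64) // conv_macs(40, 40, 3, 16, 16))  # 16
```

The untouched channels are mixed later by the pointwise layers of the block, so the saving in the 3 × 3 convolution does not discard information.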

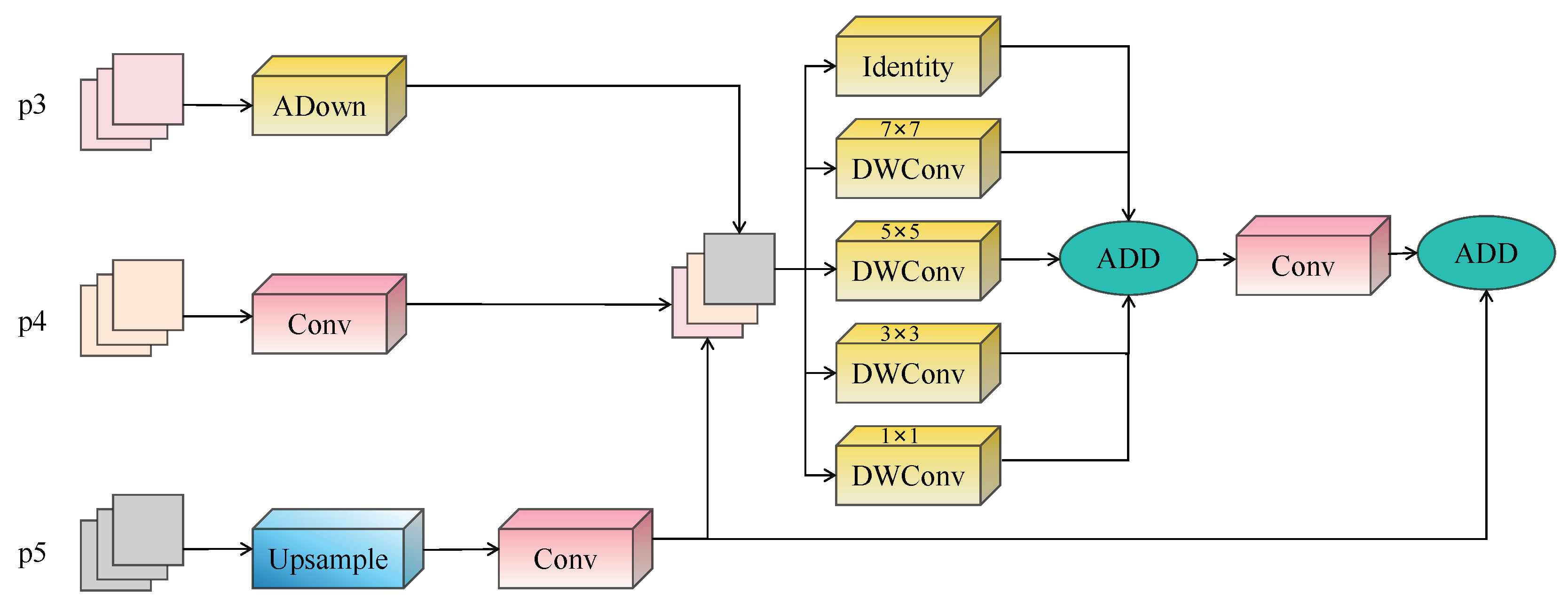

2.5. Lightweight Feature Diffusion Pyramid Network (LFDPN)

The LFDPN introduces a novel bidirectional feature propagation mechanism that efficiently diffuses both semantic and spatial information across scales.

2.5.1. Architectural Overview

The network architecture follows a sophisticated encoder-decoder pattern with three main pathways: top-down semantic flow, bottom-up detail enhancement, and lateral connections for feature fusion.
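The top-down and bottom-up pathways can be illustrated with a generic, PANet-style fusion. This NumPy sketch is a deliberate simplification, not LFDPN itself: it uses plain addition, nearest-neighbour upsampling, and average pooling in place of the FocusFeature, RepHMS, and LAWDown modules, and it assumes all levels share one channel count. The function names are hypothetical.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def downsample2x(x):
    """2x2 average pooling, a stand-in for a learned downsampler."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def bidirectional_fuse(p3, p4, p5):
    """Top-down then bottom-up fusion; each level halves H and W of the previous one."""
    # Top-down path: coarse semantics flow toward high resolution.
    t5 = p5
    t4 = p4 + upsample2x(t5)
    t3 = p3 + upsample2x(t4)
    # Bottom-up path: fine spatial detail flows back toward low resolution.
    b4 = t4 + downsample2x(t3)
    b5 = t5 + downsample2x(b4)
    return t3, b4, b5


p3, p4, p5 = np.zeros((2, 8, 8)), np.zeros((2, 4, 4)), np.ones((2, 2, 2))
o3, o4, o5 = bidirectional_fuse(p3, p4, p5)
print(o3.shape, o4.shape, o5.shape)   # (2, 8, 8) (2, 4, 4) (2, 2, 2)
```

Feeding a signal only at P5 shows both directions at work: it reaches P3 through the top-down path (all ones there) and returns, accumulated, to P4 and P5 through the bottom-up path (values 2 and 3).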

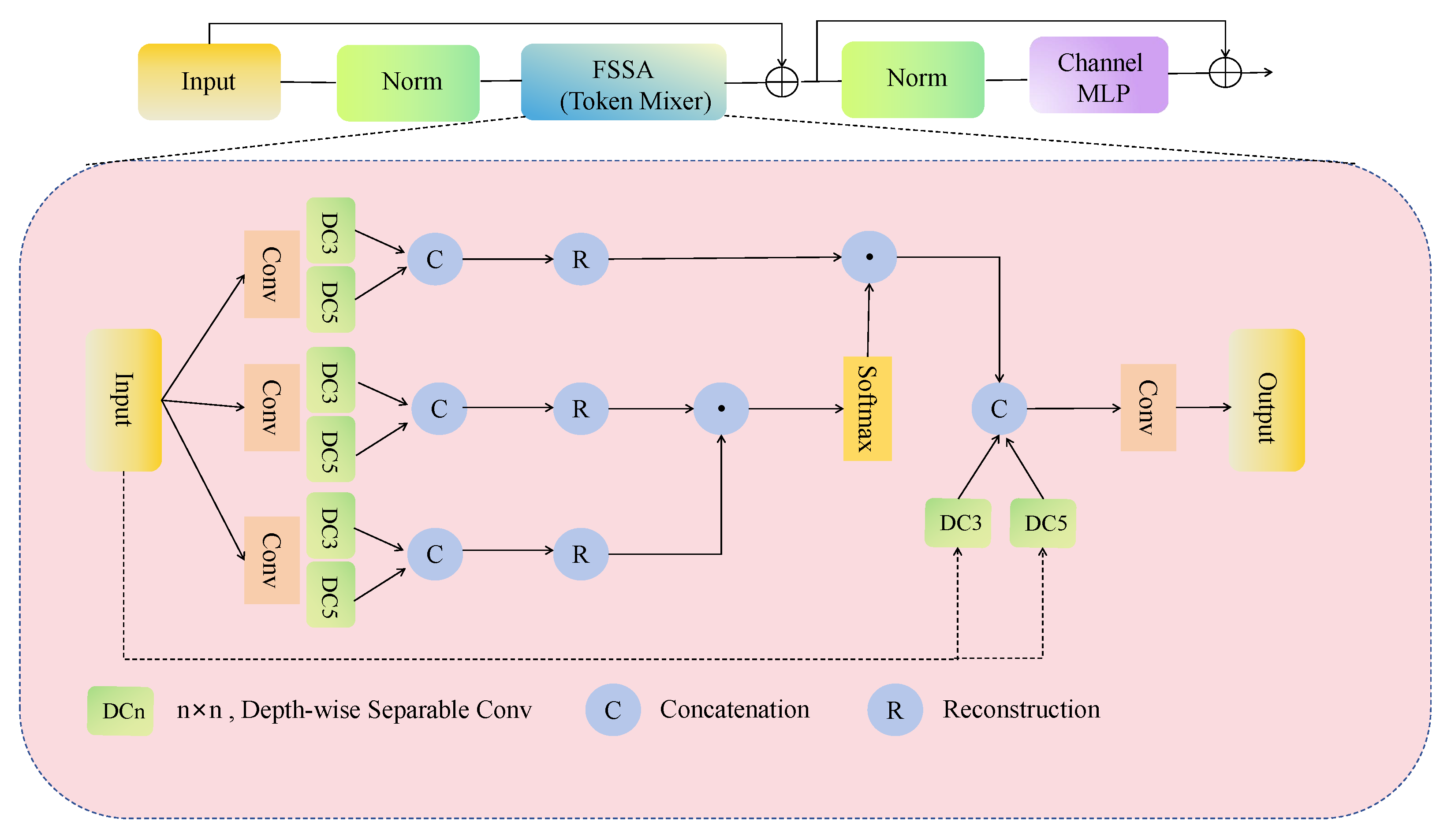

2.5.2. FMetaFormerBlock

Although the transformer block effectively captures long-range dependencies through self-attention, its quadratic complexity with respect to the number of tokens makes it inefficient for high-resolution UAV images. To address this limitation, we adopt the FMetaFormer block, which retains the overall transformer-like token-mixing paradigm but replaces standard self-attention with a lightweight frequency-spatial mixing strategy for improved efficiency and better feature generalization.

The MetaFormer block serves as the basic computational unit and implements a generalized transformer-style architecture. It consists of two sub-blocks with residual connections: a normalized token-mixing sub-block followed by a normalized channel-MLP sub-block. In FMetaFormer, the TokenMixer is the FSSA module, and the normalization layer (LayerNorm) normalizes across both spatial and channel dimensions. The architecture of the FMetaFormer module is illustrated in Figure 7.
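Following the standard MetaFormer formulation, the block can be written as X ← X + TokenMixer(Norm(X)) followed by X ← X + MLP(Norm(X)); we assume FMetaFormer follows this form. The NumPy sketch below uses a per-token LayerNorm over channels (simpler than the spatial-and-channel normalization described above) and a PoolFormer-like averaging mixer as a placeholder for FSSA. Both are simplifications, and the helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)


def layer_norm(x, eps=1e-6):
    """Normalize each token (row) over its channels; x has shape (N tokens, C)."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def gelu(x):
    """Tanh approximation of GELU."""
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def metaformer_block(x, token_mixer, w1, w2):
    """Two residual sub-blocks: token mixing, then a channel MLP (C -> hidden -> C)."""
    x = x + token_mixer(layer_norm(x))
    x = x + gelu(layer_norm(x) @ w1) @ w2
    return x


def mean_mixer(x):
    """Placeholder mixer: global average minus the token itself (not FSSA)."""
    return x.mean(axis=0, keepdims=True) - x


tokens = rng.standard_normal((16, 8))   # e.g. a 4 x 4 map flattened to 16 tokens
w1 = 0.1 * rng.standard_normal((8, 32))
w2 = 0.1 * rng.standard_normal((32, 8))
print(metaformer_block(tokens, mean_mixer, w1, w2).shape)   # (16, 8)
```

Because the token mixer is passed in as a function, swapping the placeholder for a frequency-spatial mixer changes nothing else in the block, which is the point of the MetaFormer abstraction.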

2.5.3. FSSA

The FSSA module combines frequency-domain and spatial-domain attention mechanisms to capture both global and local dependencies [29]. The frequency attention branch applies the two-dimensional fast Fourier transform (2D FFT) so that attention is computed in the frequency domain. The frequency attention scores are computed with complex-valued operations that involve the Hermitian transpose and learnable temperature parameters, and a ComplexNorm operation applies specialized normalization to the complex-valued features. The spatial attention branch employs multi-scale depth-wise convolutions.
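Because the exact FSSA equations are not reproduced here, the following is only a hypothetical NumPy sketch of frequency-domain channel attention in the spirit of the description: features are mapped to the frequency domain with a 2D FFT, similarities use the Hermitian transpose and a temperature τ, and the result is mapped back with the inverse FFT. Row-wise L2 normalization stands in for ComplexNorm, and taking the magnitude of the complex scores is our assumption; [29] may define these operations differently.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def frequency_channel_attention(q, k, v, tau=1.0, eps=1e-8):
    """Hypothetical channel attention on 2D spectra; q, k, v are real (C, H, W)."""
    c = q.shape[0]
    Q = np.fft.fft2(q).reshape(c, -1)          # FFT over the last two axes (H, W)
    K = np.fft.fft2(k).reshape(c, -1)
    V = np.fft.fft2(v).reshape(c, -1)
    Q = Q / (np.linalg.norm(Q, axis=1, keepdims=True) + eps)   # stand-in for ComplexNorm
    K = K / (np.linalg.norm(K, axis=1, keepdims=True) + eps)
    scores = np.abs(Q @ K.conj().T) / tau      # (C, C); K.conj().T is the Hermitian transpose
    attn = softmax(scores, axis=-1)            # real weights, each row sums to 1
    out = (attn @ V).reshape(v.shape)          # mix channel spectra
    # Real weights applied to spectra of real maps give the spectrum of a real map,
    # so the imaginary part after the inverse FFT is only round-off.
    return np.fft.ifft2(out).real


rng = np.random.default_rng(0)
q, k = rng.standard_normal((4, 8, 8)), rng.standard_normal((4, 8, 8))
m = rng.standard_normal((8, 8))
v = np.stack([m] * 4)
out = frequency_channel_attention(q, k, v)
print(out.shape)   # (4, 8, 8)
```

A useful sanity check: if every channel of `v` carries the same map, the output equals `v`, because each row of the attention matrix sums to one. Every frequency coefficient depends on the whole spatial map, which is how this branch obtains a global receptive field.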

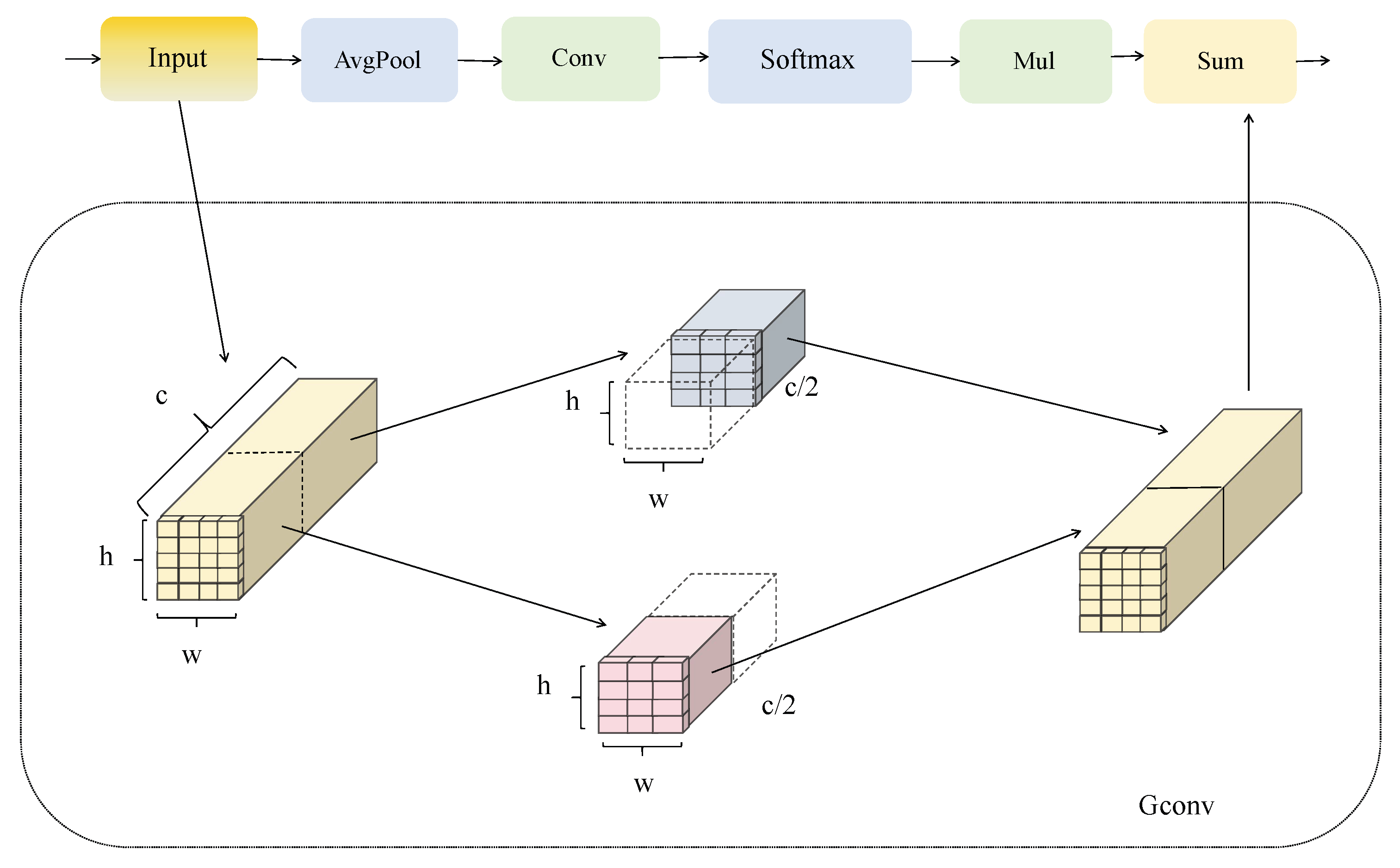

2.5.4. LAWDown

The LAWDown module performs attention-guided downsampling tailored to aerial imagery. Spatially adaptive weights are generated dynamically by a global context-aware attention mechanism (average pooling followed by a 1 × 1 convolution). This overcomes a limitation of traditional fixed-kernel downsampling: the downsampling strategy adapts to the semantic content of the input features, which preserves feature quality across different scenarios. The feature maps are expanded along the channel dimension through a channel rearrangement operation, and grouped convolution is then applied so that each group has a small dimension, allowing a larger number of groups at low cost. This design strengthens feature representation and preserves cross-channel semantic correlations through efficient channel interactions. By reconstructing each downsampled feature from its s1 × s2 region using softmax weights, LAWDown achieves fine-grained weighted information fusion in the spatial dimension. The architecture of the LAWDown module is illustrated in Figure 8.

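The module computes spatially adaptive weights for each s1 × s2 region, assigning an importance weight to every position within the region; the downsampled output is obtained by combining the region's features according to these weights.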
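The core of LAWDown, softmax-weighted reconstruction of each s1 × s2 region, can be sketched as follows with s1 = s2 = 2. This is a simplified, hypothetical illustration: logits come from average pooling and a 1 × 1 convolution as described above, but the channel-expanding grouped convolution is omitted and the 3 × 3 pooling window is an assumption. All names are illustrative.

```python
import numpy as np


def to_windows(t, s1, s2):
    """(C, H, W) -> (C, H/s1, W/s2, s1*s2): gather every s1 x s2 region."""
    c, h, w = t.shape
    return (t.reshape(c, h // s1, s1, w // s2, s2)
             .transpose(0, 1, 3, 2, 4)
             .reshape(c, h // s1, w // s2, s1 * s2))


def avg_pool3x3(x):
    """3x3 average pooling, stride 1, zero padding (padding counted in the mean)."""
    _, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    return sum(xp[:, i:i + h, j:j + w] for i in range(3) for j in range(3)) / 9.0


def weighted_downsample(x, logits, s1=2, s2=2):
    """Combine each s1 x s2 region of x with softmax weights derived from logits."""
    xw, lw = to_windows(x, s1, s2), to_windows(logits, s1, s2)
    lw = lw - lw.max(axis=-1, keepdims=True)          # numerical stability
    weights = np.exp(lw)
    weights /= weights.sum(axis=-1, keepdims=True)    # weights in each region sum to 1
    return (weights * xw).sum(axis=-1)


def lawdown_sketch(x, w):
    """Logits from average pooling + 1x1 conv (w: C x C), then weighted 2x2 downsampling."""
    logits = np.einsum("oc,chw->ohw", w, avg_pool3x3(x))
    return weighted_downsample(x, logits)


rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8, 8))
print(lawdown_sketch(x, rng.standard_normal((4, 4))).shape)   # (4, 4, 4)
```

Two limiting cases show the design choice: uniform logits reduce the operation to 2 × 2 average pooling, while a strongly peaked logit selects a single pixel, so learned logits let the module interpolate between smoothing and selective sampling depending on content.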

2.5.5. FocusFeature

The FocusFeature module performs adaptive multi-scale aggregation across three pyramid levels, taking input features from scales P3, P4, and P5. The architecture of the FocusFeature module is illustrated in Figure 9. The ADown operation combines average pooling and max pooling paths. Multi-scale context is then captured through parallel depth-wise convolutions with different kernel sizes.
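A simplified view of the ADown operation and of bringing P3, P4, and P5 to a common resolution is sketched below. Assumptions: half of the channels are average-pooled and the other half max-pooled, P4 is the target resolution, and P5 is upsampled by nearest-neighbour repetition; the learned convolutions that ADown-style blocks usually include are omitted. The names `adown_sketch` and `align_to_p4` are hypothetical.

```python
import numpy as np


def adown_sketch(x):
    """Halve H and W: average-pool the first half of the channels, max-pool the rest."""
    c, h, w = x.shape
    win = x.reshape(c, h // 2, 2, w // 2, 2)   # 2x2 windows
    half = c // 2
    avg = win[:half].mean(axis=(2, 4))          # smooth, context-preserving path
    mx = win[half:].max(axis=(2, 4))            # salient-response path
    return np.concatenate([avg, mx], axis=0)


def align_to_p4(p3, p4, p5):
    """Bring three levels to P4 resolution and stack them along the channel axis."""
    up5 = p5.repeat(2, axis=1).repeat(2, axis=2)
    return np.concatenate([adown_sketch(p3), p4, up5], axis=0)


rng = np.random.default_rng(0)
p3, p4, p5 = (rng.standard_normal((4, s, s)) for s in (32, 16, 8))
print(align_to_p4(p3, p4, p5).shape)   # (12, 16, 16)
```

Once the three levels share a resolution, multi-kernel depth-wise convolutions can gather context at several receptive-field sizes from a single stacked tensor.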

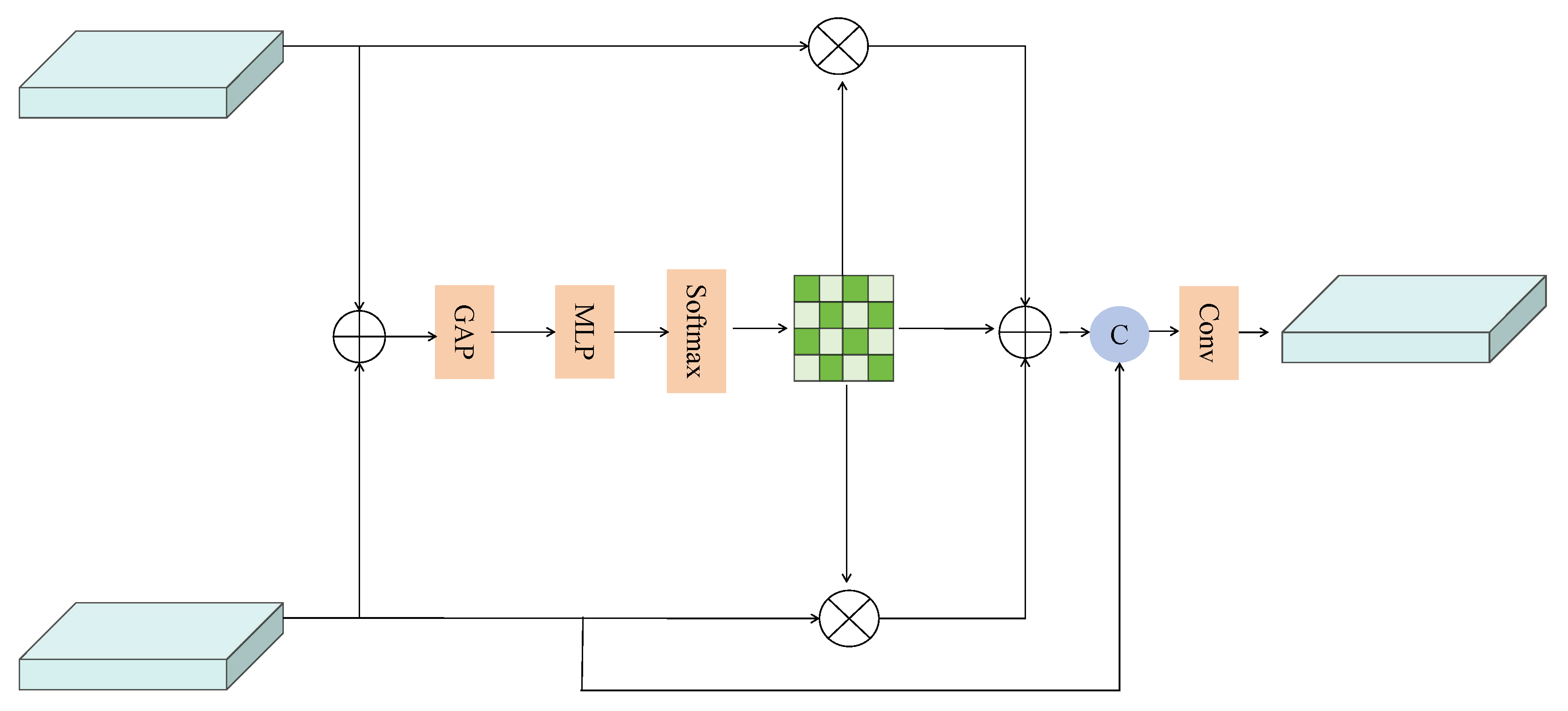

2.5.6. MFM

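The MFM module dynamically calibrates the contributions of different scales using channel attention [30]. The architecture of the MFM module is illustrated in Figure 10. Given features from multiple sources, MFM computes channel attention weights that determine how much each source contributes to the fused output.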
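One common way to calibrate several inputs with channel attention is a selective-kernel-style fusion: pool a global descriptor, map it through a small bottleneck, and apply a softmax across sources for each channel. The NumPy sketch below follows that pattern as an assumption; the exact MFM formulation in [30] may differ, and `mfm_sketch` is a hypothetical name.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def mfm_sketch(feats, w_reduce, w_expand):
    """Fuse S same-shape inputs feats (S, C, H, W) with per-channel source weights.

    w_reduce: (C_r, C) bottleneck; w_expand: (S * C, C_r). Returns (fused, weights),
    where fused has shape (C, H, W) and weights has shape (S, C).
    """
    s, c = feats.shape[:2]
    context = feats.sum(axis=0).mean(axis=(1, 2))   # global descriptor, shape (C,)
    hidden = np.maximum(w_reduce @ context, 0.0)     # ReLU bottleneck
    logits = (w_expand @ hidden).reshape(s, c)       # one logit per source and channel
    weights = softmax(logits, axis=0)                # sources compete within each channel
    fused = (weights[:, :, None, None] * feats).sum(axis=0)
    return fused, weights


feats = rng.standard_normal((3, 8, 4, 4))           # e.g. three aligned pyramid inputs
w_reduce = rng.standard_normal((4, 8))
w_expand = rng.standard_normal((24, 4))
fused, weights = mfm_sketch(feats, w_reduce, w_expand)
print(fused.shape, weights.shape)                    # (8, 4, 4) (3, 8)
```

Because the weights for each channel sum to one across sources, the module redistributes emphasis between scales rather than rescaling the overall feature magnitude.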

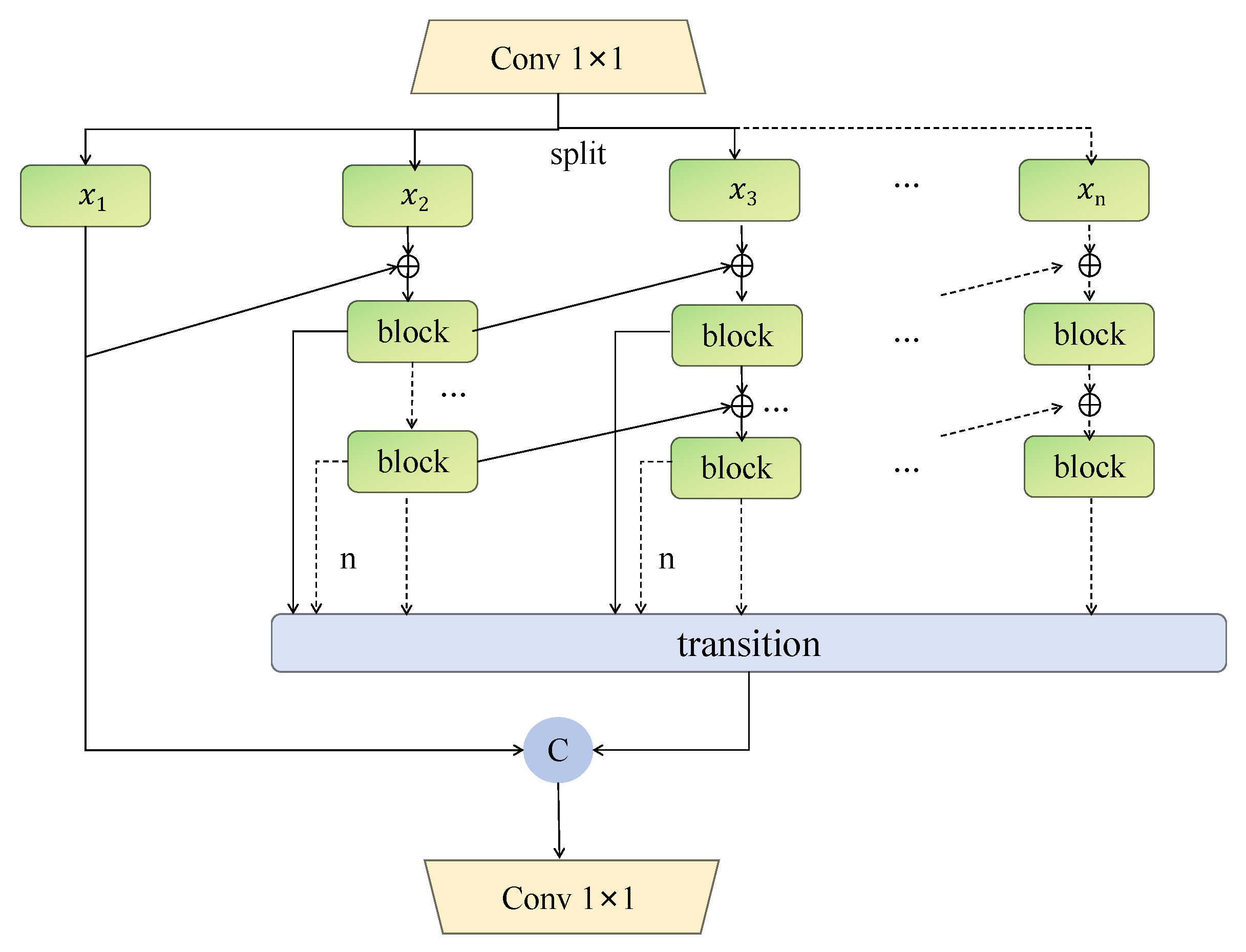

2.5.7. RepHMS

The RepHMS module enhances feature representation through hierarchical processing with UniRepLKNet blocks [31]. The architecture of the RepHMS module is illustrated in Figure 11. The module employs cascaded DepthBottleneck blocks for multi-scale feature extraction.
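The "Rep" in RepHMS refers to structural re-parameterization: parallel branches used during training can be folded into a single convolution for inference. The generic RepVGG-style example below illustrates the principle and is not the RepHMS code; it merges a 3 × 3 kernel, a 1 × 1 kernel, and an identity branch into one 3 × 3 kernel for a single channel, omitting batch-normalization folding. To our understanding, UniRepLKNet applies the same linearity argument to fold dilated small-kernel branches into a large kernel.

```python
import numpy as np


def conv2d_same(x, k):
    """Single-channel 2D cross-correlation with zero padding that keeps the size (odd k)."""
    kh, kw = k.shape
    ph, pw = kh // 2, kw // 2
    xp = np.pad(x, ((ph, ph), (pw, pw)))
    h, w = x.shape
    out = np.zeros((h, w))
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * xp[i:i + h, j:j + w]
    return out


def merge_branches(k3, k1):
    """Fold 3x3, 1x1, and identity branches into one 3x3 kernel."""
    identity = np.pad(np.ones((1, 1)), 1)   # identity = 3x3 kernel with a 1 at the centre
    return k3 + np.pad(k1, 1) + identity    # the 1x1 weight also sits at the centre


rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8))
k3, k1 = rng.standard_normal((3, 3)), rng.standard_normal((1, 1))
train_time = conv2d_same(x, k3) + conv2d_same(x, k1) + x   # three parallel branches
deploy_time = conv2d_same(x, merge_branches(k3, k1))       # one merged convolution
print(np.allclose(train_time, deploy_time))                # True
```

Since convolution is linear, summing branch outputs equals convolving with the summed kernels, so the multi-branch capacity used in training costs nothing extra at inference.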

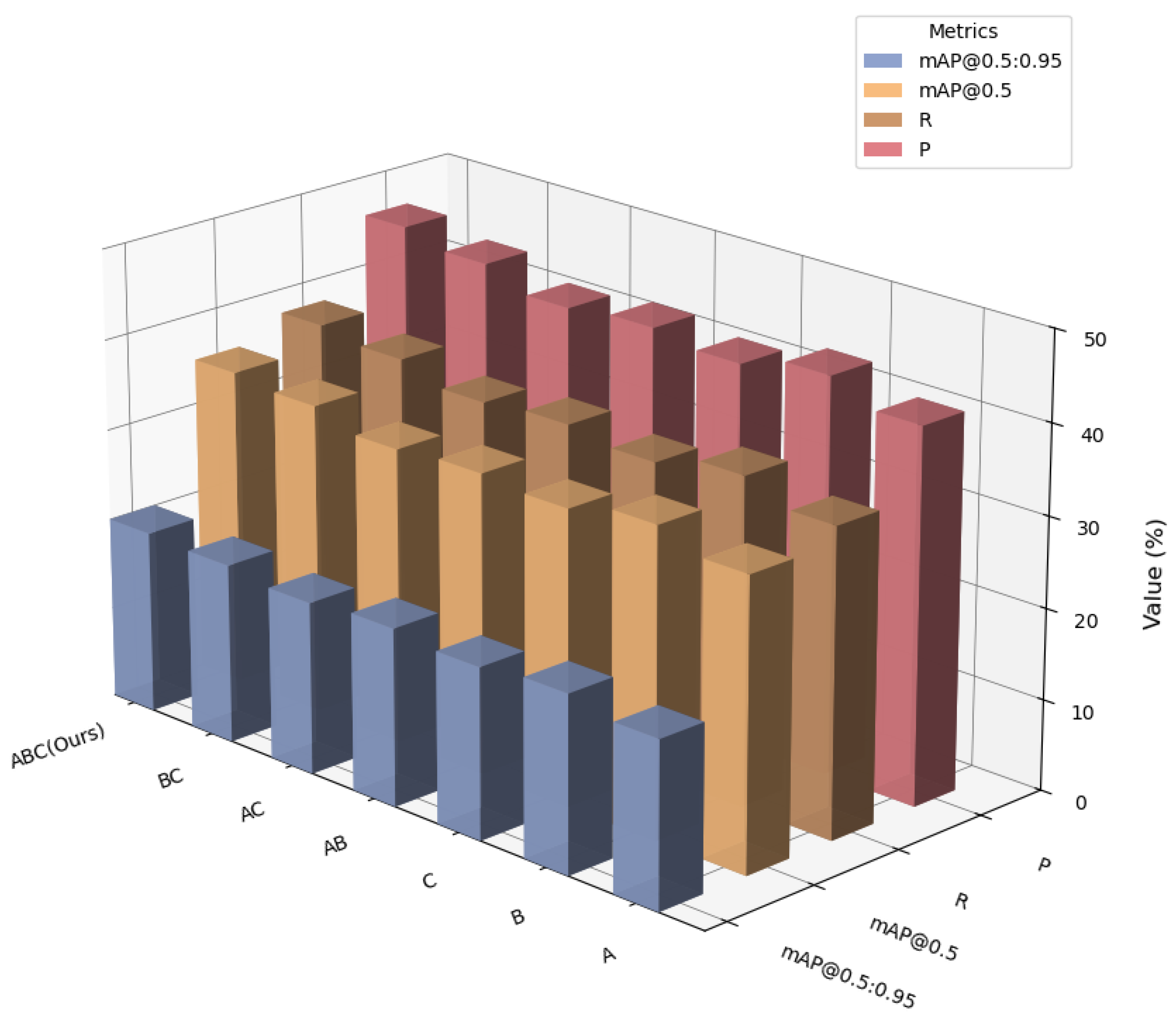

4. Discussion

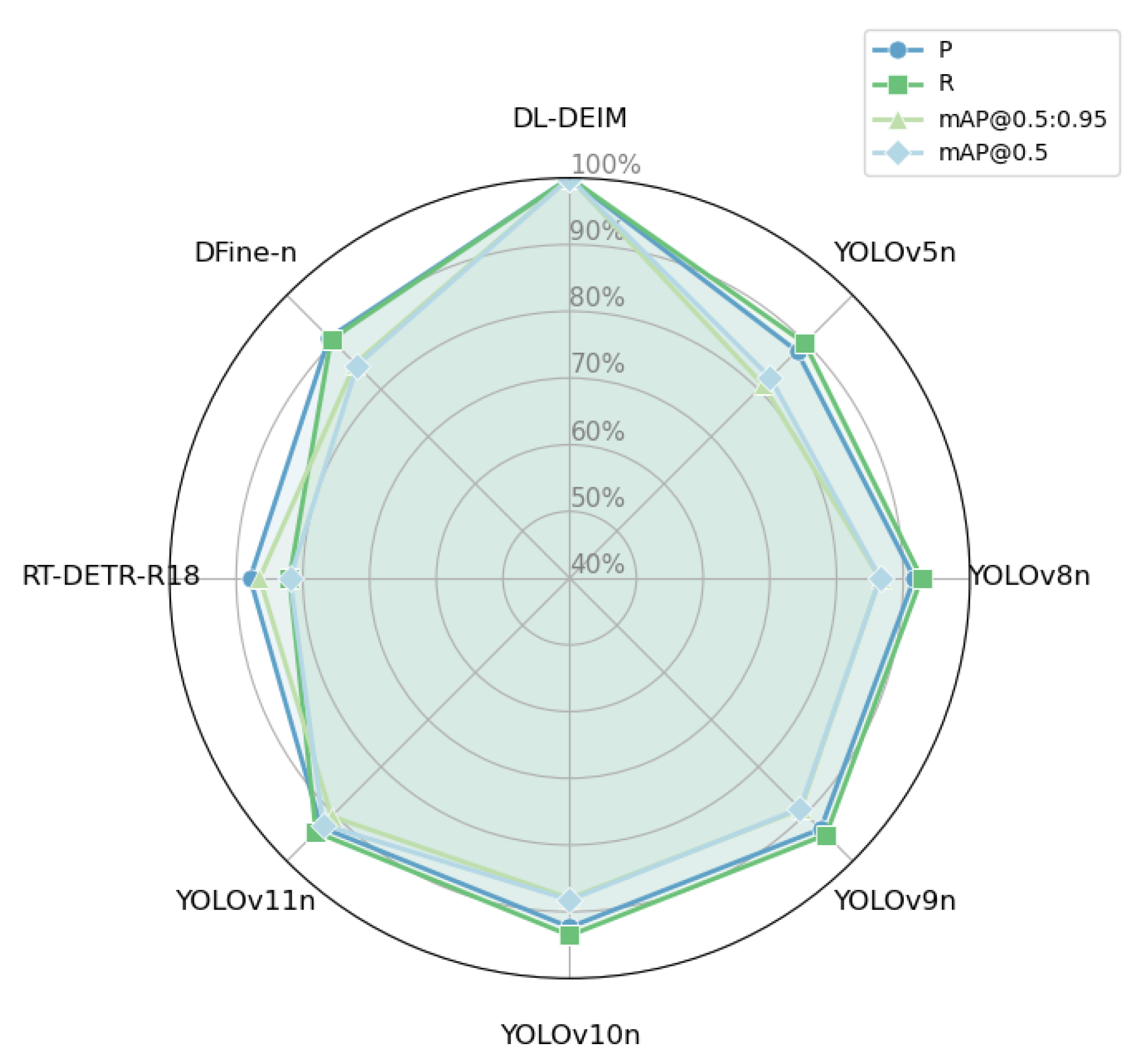

The proposed DL-DEIM framework demonstrates clear and consistent improvements over the baseline, achieving 34.9% mAP@0.5 and 20.0% mAP@0.5:0.95 on the VisDrone2019 dataset. These results validate the overall design philosophy that efficiency and accuracy in UAV object detection can be simultaneously enhanced through modular architectural optimization. This section discusses the individual contributions of each component and their synergistic effects on detection performance. An ablation analysis reveals the distinct roles of each major module:

DCFNet. By integrating the lightweight DCFNet backbone with the DRFD downsampling unit, the framework effectively mitigates information loss that typically occurs when processing small aerial targets. This component alone contributes approximately 1.8% mAP improvement while reducing computation by nearly 30%.

LFDPN. The bidirectional diffusion and frequency-domain attention in LFDPN enable more efficient semantic exchange across scales, strengthening object localization and recall—particularly for densely distributed small objects (+2.3% mAP).

LAWDown. The adaptive downsampling strategy dynamically adjusts spatial weighting based on content importance, preserving boundary information and improving precision on small targets (+2.1% mAP).

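These findings indicate that DCFNet, LFDPN, and LAWDown each address a complementary aspect of the detection pipeline. Their gains are not strictly additive, however: the full model improves on the baseline by 4.6% mAP@0.5 and 2.9% mAP@0.5:0.95, less than the sum of the individual gains reported above (about 6.2%), which suggests that the modules' contributions partially overlap.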

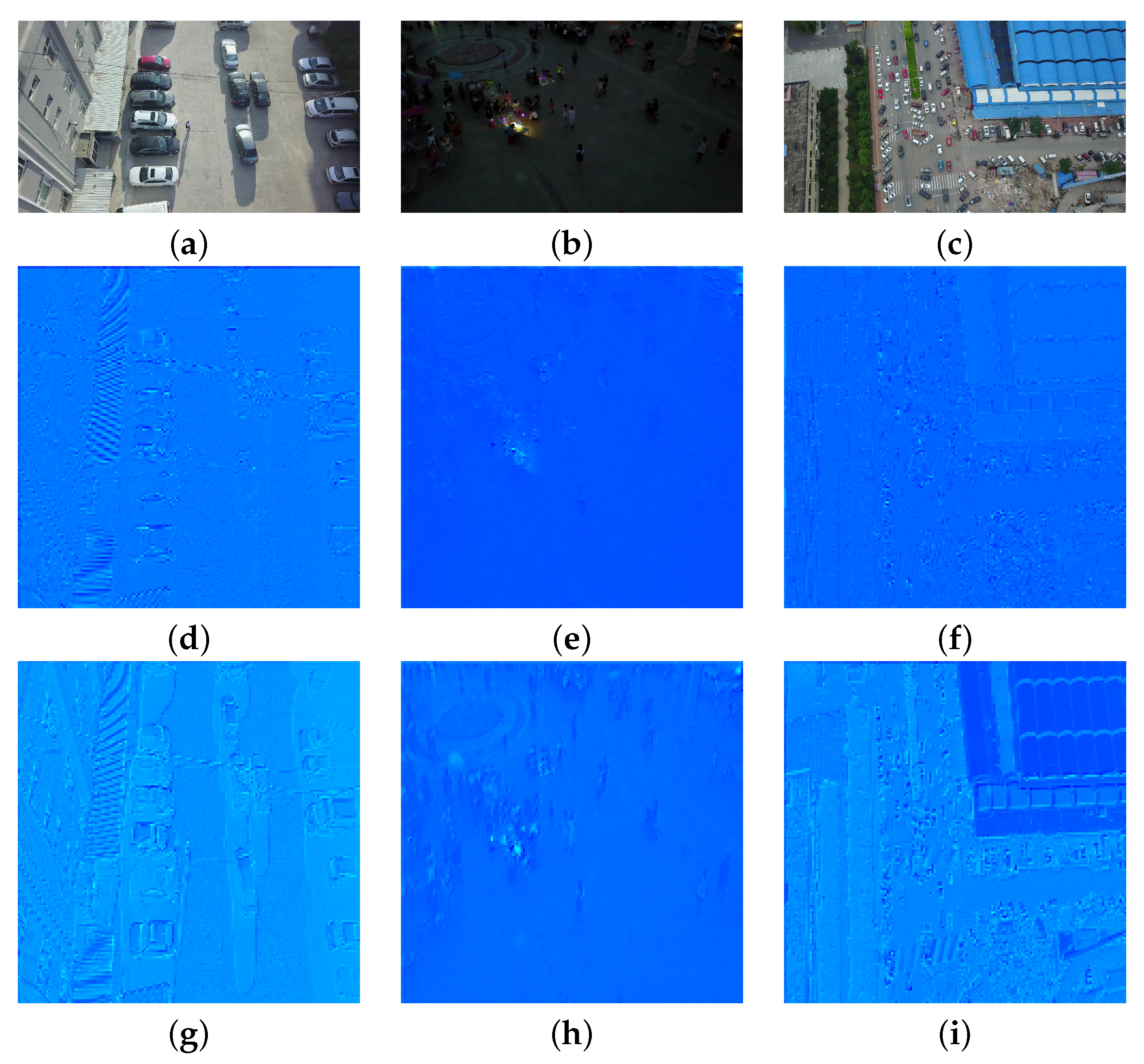

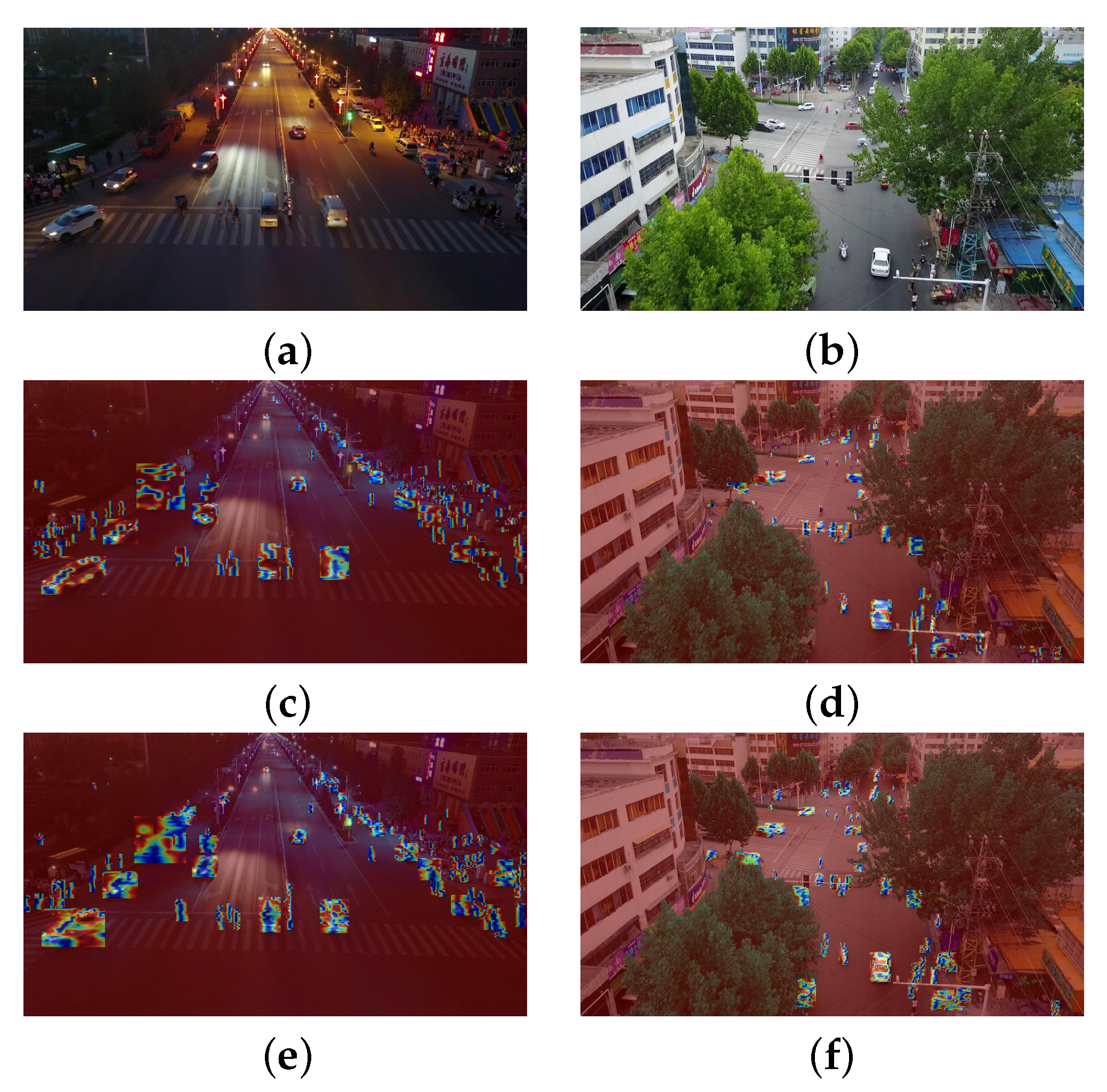

Visualization analysis further supports these quantitative findings. DL-DEIM produces sharper activation maps and more distinct object boundaries compared with FPN-based detectors, effectively suppressing background noise and emphasizing true positives in cluttered aerial scenes. This suggests that the network learns to better separate meaningful object cues from the complex backgrounds that are typical in UAV imagery.

Despite these advances, certain limitations remain. The model still depends on high-resolution inputs, imposing memory constraints that restrict batch size during training. Moreover, the generalizability of the proposed modules to other aerial benchmarks and real-time UAV applications warrants further investigation. Future work will explore model compression, knowledge distillation, and hardware-aware optimization to enhance deployment efficiency without sacrificing detection quality.

5. Conclusions

This paper presents DL-DEIM, a diffusion-enhanced object detection network tailored for aerial imagery in UAV systems. Built upon DEIM, DL-DEIM integrates three dedicated modules: DCFNet, LFDPN, and LAWDown. DCFNet reduces computational complexity while preserving spatial information, LFDPN improves multi-scale feature fusion, and LAWDown maintains high-quality discriminative representations at lower resolutions. The proposed model achieves a mean average precision (mAP@0.5) of 34.9% and a mAP@0.5:0.95 of 20.0%, outperforming the DEIM baseline by 4.6% and 2.9%, respectively. Additionally, DL-DEIM maintains real-time inference speed (356 FPS) with only 4.64M parameters and 11.73 GFLOPs, making it highly efficient for deployment in resource-limited UAV systems. Ablation studies demonstrate the contributions of each module to both accuracy and efficiency, while visualizations highlight the model's ability to localize objects in crowded scenes. Despite these advances, DL-DEIM's performance may still degrade under extreme conditions, such as highly dynamic scenes or adverse weather. Future work will focus on refining the model to handle these edge cases and on exploring adaptive strategies for real-time UAV deployment in diverse operational settings.