Research on the Automatic Generation of Information Requirements for Emergency Response to Unexpected Events

Abstract

1. Introduction

2. Methods

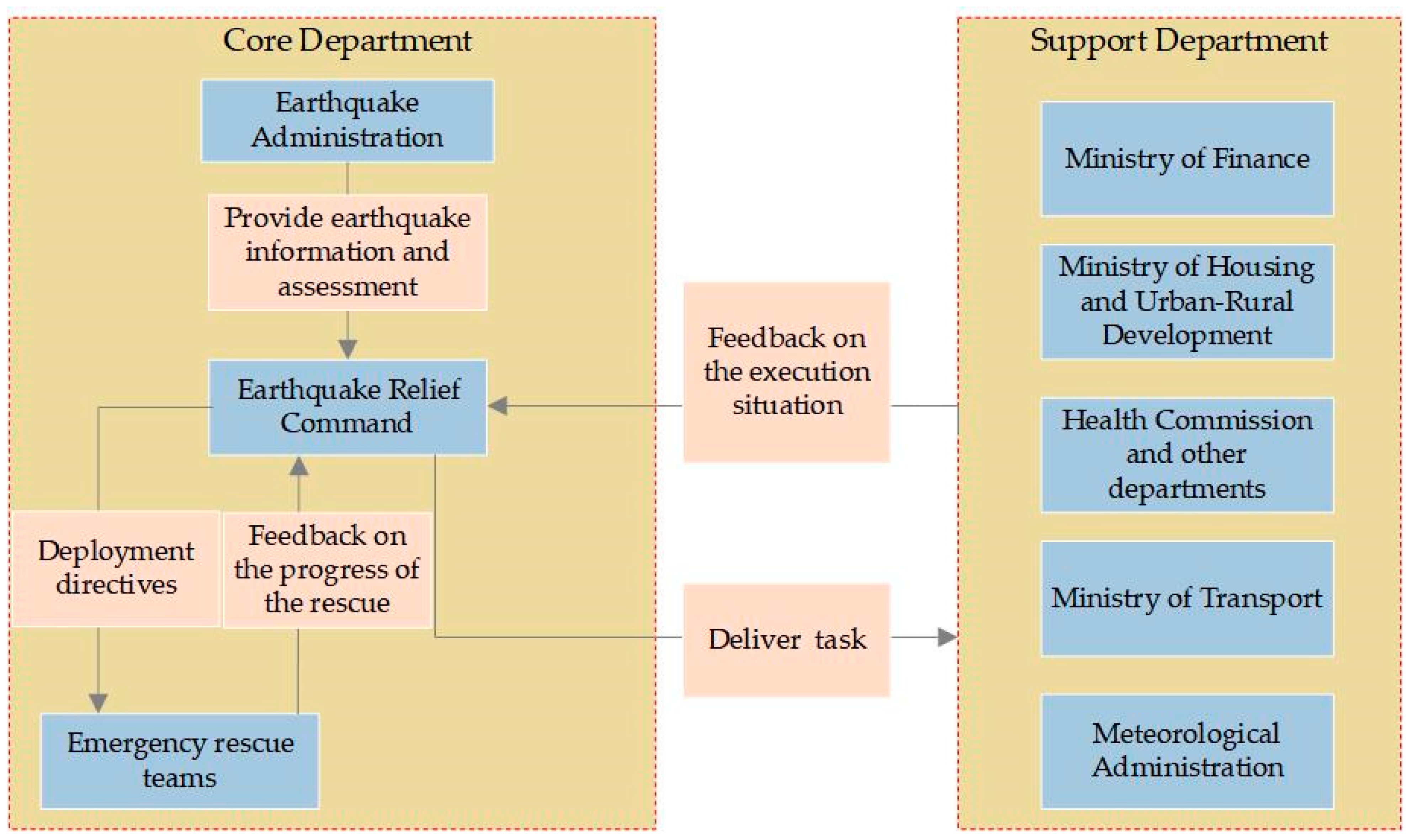

2.1. Research Object

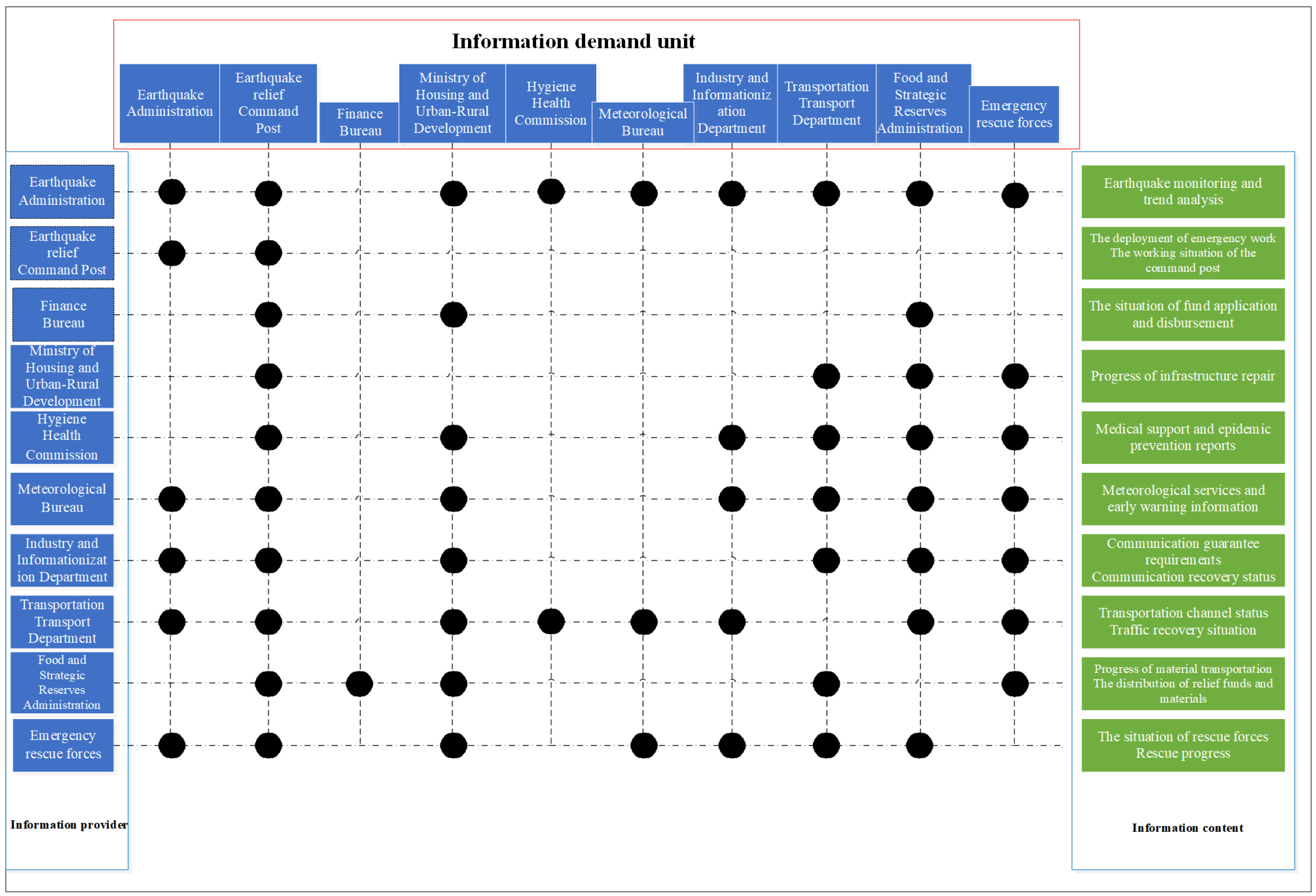

2.2. System Design

2.2.1. Knowledge Base Construction

2.2.2. Prompt Engineering Design

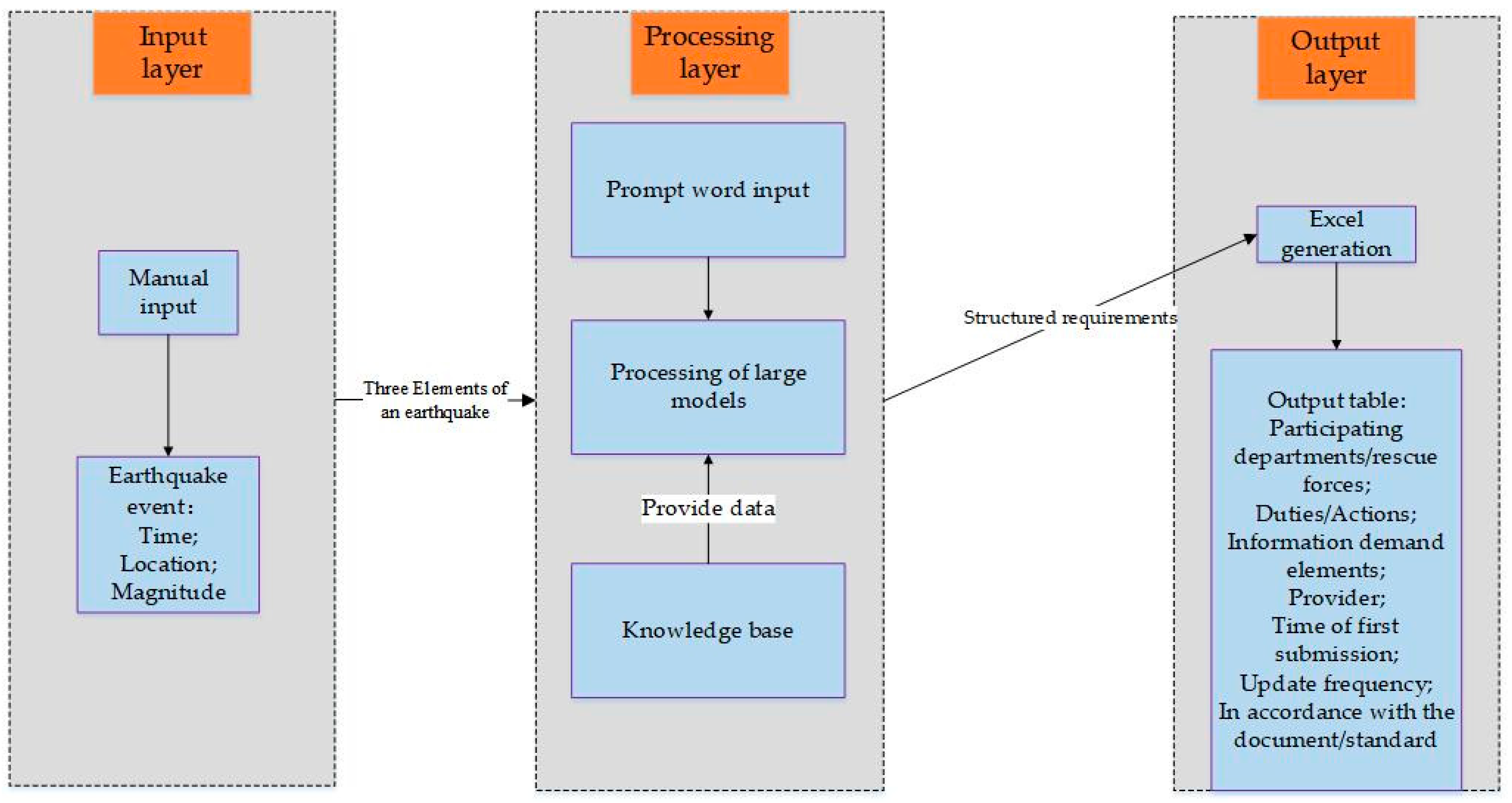

2.2.3. System Architecture Design

- Input Layer: The input layer accepts a specific earthquake event, either through an API or by manual entry. This paper uses manual entry. The example event is the magnitude 7.1 earthquake that struck Yushu, China, at 7:49 on 14 April 2010.

- Processing Layer: The processing layer invokes LLMs to parse earthquake parameters. Based on the input prompts, it matches relevant content in the knowledge base and generates information including participating departments/rescue forces, responsibilities/actions, information requirement elements, first submission time, and update frequency.

- Output Layer: The output layer generates information requirements in Excel format.
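The three layers can be pictured as a small pipeline. The following is a minimal sketch under stated assumptions, not the authors' implementation: `EarthquakeEvent`, `process_event`, `write_output`, and the stub `fake_llm` are hypothetical names, the LLM call is replaced by a canned response, and CSV text stands in for the Excel output so that the example needs only the standard library.

```python
import csv
import io
from dataclasses import dataclass
from typing import Callable

# Column names taken from the processing-layer description above.
FIELDS = [
    "Participating Departments/Rescue Forces",
    "Responsibilities/Actions",
    "Information Requirement Elements",
    "First Submission Time",
    "Update Frequency",
]


@dataclass(frozen=True)
class EarthquakeEvent:
    """Input layer: one manually entered event (hypothetical structure)."""
    date: str
    time: str
    magnitude: float
    location: str

    def describe(self) -> str:
        return (f"On {self.date}, at {self.time}, a magnitude {self.magnitude} "
                f"earthquake occurred in {self.location}.")


def process_event(event: EarthquakeEvent,
                  llm: Callable[[str], list[dict]]) -> list[dict]:
    """Processing layer: pass the event description to an LLM callable and
    keep only the rows that carry every expected field."""
    rows = llm(event.describe())
    return [row for row in rows if all(field in row for field in FIELDS)]


def write_output(rows: list[dict]) -> str:
    """Output layer: serialise rows as CSV text (assumption: CSV replaces Excel)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def fake_llm(prompt: str) -> list[dict]:
    """Stub standing in for a real LLM call; returns one canned row."""
    return [{
        "Participating Departments/Rescue Forces": "Earthquake Administration",
        "Responsibilities/Actions": "Earthquake monitoring",
        "Information Requirement Elements": "Basic earthquake parameters",
        "First Submission Time": "0.5 to 1 h",
        "Update Frequency": "Every 2 h",
    }]


event = EarthquakeEvent("14 April 2010", "7:49", 7.1, "Yushu, China")
table = write_output(process_event(event, fake_llm))
print(table)
```

Passing the model in as a callable keeps the three layers separable: the stub can be swapped for a real client without touching the input or output code.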

2.3. Experimental Design

2.3.1. Construction of Standard Information Requirements

2.3.2. Semantic Similarity Calculation

2.3.3. Difference Comparison and Performance Evaluation

Meanings of TP, FP, and FN

Performance Metrics

2.3.4. Software Performance Analysis

3. Results

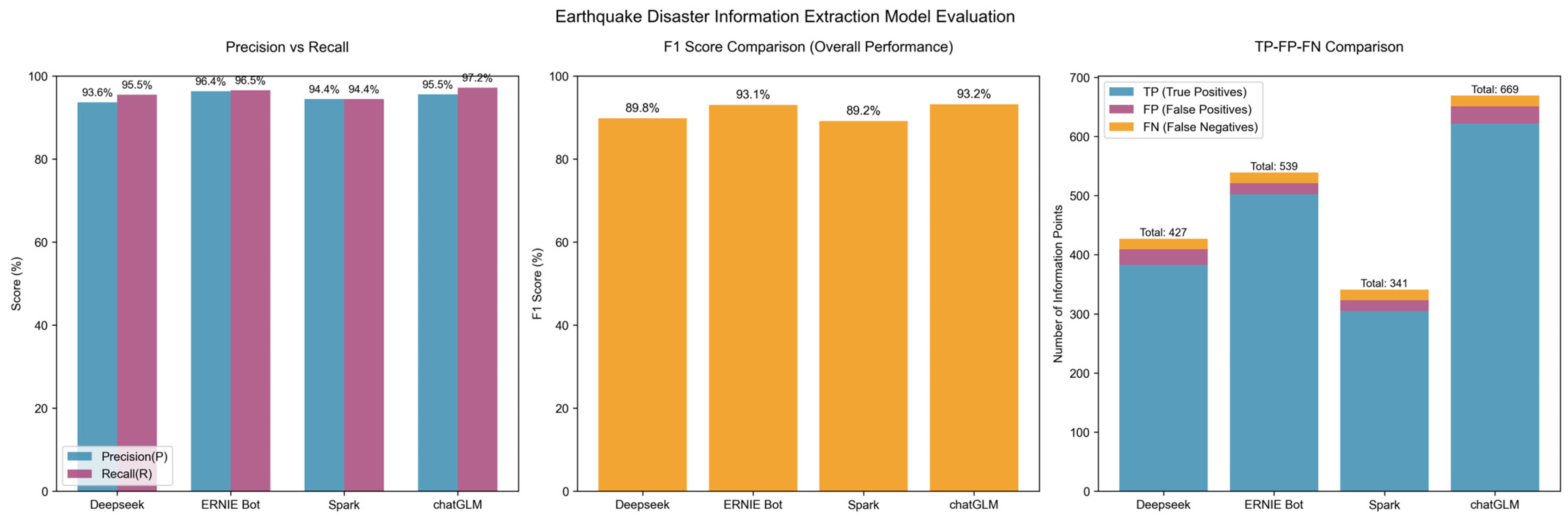

3.1. Experimental Result

3.2. Sub-Case Study and Analysis

3.3. Results Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| LLMs | Large Language Models |

| TP | true positive |

| FP | false positive |

| FN | false negative |

| P | precision |

| R | recall |

| FireResc | fire rescue team |

| Freq | frequency |

| Upd | updated |

| h | hour |

| Num | number |

| Dep | Departments |

| HousMIN | ministry of housing |

| CivAffDept | civil affairs departments |

Appendix A

Appendix A.1

Appendix A.2

Appendix A.3

| Primary Category | Secondary Category | Content and Attribute Description |
|---|---|---|
| Earthquake Situation Information | Earthquake Parameters | The three key elements of an earthquake, the map of the epicenter location, and the distance from the epicenter to major cities. |
| | Aftershock Information | Aftershock statistics across various time periods and the distribution of aftershocks. |
| | Hypocenter Information | Rupture process, focal mechanism solution, moment tensor, slip component, stress drop, and moment magnitude, among others. |
| | Strong Motion Records | Seismic motion waveform data, ground motion acceleration, ground motion displacement. |
| | Earthquake Situation Trend Judgment | Opinions on the trend judgment of earthquake situations in different time periods. |
| | Earthquake Cause Analysis | Analysis of earthquake causes by various institutions. |
| Earthquake-Affected Area Background Information | Humanistic Background of the Affected Area | Administrative division of the affected area, transportation in the affected area, population distribution, housing information, economic statistics, distribution of ethnic minorities, distribution of poverty-stricken counties. |
| | Topographic Background of the Affected Area | The topography of the affected area, the terrain landscape of the affected area, and the remote sensing images of the affected area. |
| | Tectonic Background of the Affected Area | Distribution of active faults within the affected area, potential seismic source zones within the affected area, and the geological structure of the affected area. |
| | Disaster Background of the Affected Area | Historical earthquake catalog, earthquake isoseismal lines, disaster loss situation, direct earthquake disasters, major secondary disasters, etc. |
| | Seismic Safety Evaluation Background of the Affected Area | Seismic intensity zoning, seismic safety evaluation, the main achievements of seismic hazard prediction, and earthquake prevention and disaster mitigation efforts, as well as data from various earthquake prevention and disaster mitigation demonstration areas, among others. |
| | Major Lifeline Projects in the Affected Area | Distribution of lifeline projects, including transportation, electric power, communication facilities, gas supply, water supply, and drainage, in the affected area, as well as statistics on lifeline disaster damage, etc. |
| | Key Targets in the Affected Area | Statistics on the quantity and distribution of key targets, such as schools and hospitals, in the affected area. |
| | Rescue Forces in the Affected Area | Medical rescue capacity, fire-fighting capacity, public security capacity in the disaster area and adjacent areas, reserve of relief materials, information on rescue teams, type, quantity, quality, performance and distribution of rescue equipment. |
| Disaster Situation Information | Disaster Situation Estimation | Seismic intensity estimation, casualty estimation, direct economic loss estimation, rapid earthquake assessment results, revised results of rapid assessment in different time periods, landslide risk distribution, seismic intensity assessment based on ground motion, damage estimation of lifeline projects and key targets, estimation of personnel burial points, and other disaster situation estimation results. |
| | Rapid Disaster Situation Report | Actual disaster situation information obtained through short messages, telephone calls, the Internet, surveys, video conferences, etc. |
| | Casualty Disaster Situation Information | Statistics and distribution of actual casualties. |
| | Building Damage | Statistics and distribution of actual building damage. |
| | Secondary Disasters | Statistics and distribution of actual secondary disasters. |
| | Key Focus Disaster Situation | Information on disaster situations requiring urgent attention for decision-making and rescue. |
| | Actual Surveyed Disaster Situation | Actual disaster situation from on-site earthquake surveys. |
| Emergency Response and Rescue | Emergency Decision-Making | Various types of auxiliary decision-making information. |
| | Headquarters Work Dynamics | Emergency work dynamics of national and regional headquarters. |
| | On-Site Work Dynamics | Dynamic changes of earthquake disasters and secondary disasters, real-time progress of rescue work, deployment and phased progress of disaster investigation work, etc. |
| | Historical Rescue Cases | Domestic and international earthquake rescue cases. |
| | Rescue Team Work Dynamics | Input of rescue teams, progress of rescue operations, achievements of rescue efforts, real-time updates on rescue work, etc. |

Appendix A.4

| Library/Module | Type | Core Function |
|---|---|---|
| jieba | Third-party | Chinese word segmentation tool, used to extract domain keywords such as “magnitude” and “intensity” from text |
| json | Built-in | Processes JSON format data, e.g., storing evaluation results |
| os | Built-in | File path operations, e.g., reading expert standard documents |
| re | Built-in | Regular expression matching, e.g., extracting time information from text |
| pandas | Third-party | Structured storage of evaluation metrics (e.g., P/R/F1-score) |
| numpy | Third-party | Numerical computation, supporting mathematical operations for evaluation metrics |
| sklearn.metrics.pairwise | Third-party | Provides cosine similarity calculation function to support semantic matching algorithms |
| sklearn.feature_extraction.text | Third-party | Implements TF-IDF vector conversion to assist in semantic similarity calculation |
| matplotlib.pyplot | Third-party | Generates model performance comparison charts |
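The table lists TF-IDF vectorisation and cosine similarity as the basis for semantic matching. A minimal pure-Python sketch of that idea follows; it is not the authors' code. Whitespace tokenisation of English strings stands in for jieba segmentation, and the hand-written helpers `tokenize`, `tfidf_vectors`, and `cosine` (all hypothetical names) stand in for the scikit-learn modules, so the scores may differ from a scikit-learn run.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase whitespace tokenisation (assumption: stands in for jieba)."""
    return text.lower().split()


def tfidf_vectors(docs: list[str]) -> list[dict[str, float]]:
    """Return one sparse TF-IDF vector (term -> weight) per document."""
    tokenized = [tokenize(doc) for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    # Smoothed inverse document frequency, so every term keeps a positive weight.
    idf = {term: math.log((1 + n_docs) / (1 + count)) + 1
           for term, count in df.items()}
    return [{term: tf * idf[term] for term, tf in Counter(tokens).items()}
            for tokens in tokenized]


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


# Illustrative strings: an expert-standard element, a close variant,
# and an unrelated element.
expert = "aftershock sequence data"
generated = "aftershock sequence statistics"
unrelated = "weather forecast for the next 72 h"

vec_expert, vec_generated, vec_unrelated = tfidf_vectors(
    [expert, generated, unrelated])

print(round(cosine(vec_expert, vec_generated), 3))  # partial overlap
print(cosine(vec_expert, vec_unrelated))            # no shared terms
```

Elements that share no terms score exactly zero, which is the main limitation of a purely lexical measure: synonyms are not recognised unless the segmentation or vocabulary maps them to the same token.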

References

1. Unconventional Emergency Management Research Group. Unconventional Emergency Management Research; Zhejiang University Press: Hangzhou, China, 2018.

2. Wang, H.Y.; Li, Z.X.; Zhang, T.; Feng, J.; Zhang, X.Y. Information Needs and Acquisition Suggestions for Earthquake Emergency Rescue. J. Catastrophology 2016, 31, 176–180.

3. Ma, W.; Liu, J.; Cai, Y.; Chen, H.-Z.; Liu, X.-F. Research on seismic information based on internet of things and cloud computing in big data era. Prog. Geophys. 2018, 33, 835–841.

4. Jo Pwavodi, J.; Ibrahim, A.U.; Pwavodi, P.C.; Al-Turjman, F.; Mohand-Said, A. The role of artificial intelligence and IoT in prediction of earthquakes: Review. Artif. Intell. Geosci. 2024, 5, 100075.

5. Xu, X.; Zhou, X.; Wang, W.; Xu, Z.; Cao, G. Analysis of the Characteristics of Secondary Sudden Environmental Events Induced by Natural Disasters in China. J. Geol. Hazards Environ. Preserv. 2025, 36, 121–128.

6. Huang, L.; Li, J.; Liu, Z.; Wang, X.; Jie, S.; Lei, G.; Liu, Z. Review of Explainable Artificial Intelligence and Its Application Prospects in Earthquake Science. Prog. Earthq. Sci. 2025, 55, 1–11.

7. Linville, L.; Pankow, K.; Draelos, T. Deep learning models augment analyst decisions for event discrimination. Geophys. Res. Lett. 2019, 46, 3643–3651.

8. Kou, L.; Tang, J.; Wang, Z.; Jiang, Y.; Chu, Z. An adaptive rainfall estimation algorithm for dual-polarization radar. IEEE Geosci. Remote Sens. Lett. 2022, 19, 1004805.

9. Titos, M.; Bueno, A.; Garcia, L.; Benitez, C.; Segura, J.C. Classification of isolated volcano-seismic events based on inductive transfer learning. IEEE Geosci. Remote Sens. Lett. 2020, 17, 869–873.

10. Peng, P.; He, Z.; Wang, L.; Jiang, Y. Microseismic records classification using capsule network with limited training samples in underground mining. Sci. Rep. 2020, 10, 13925.

11. Nakano, M.; Sugiyama, D.; Hori, T.; Kuwatani, T.; Tsuboi, S. Discrimination of seismic signals from earthquakes and tectonic tremor by applying a convolutional neural network to running spectral images. Seismol. Res. Lett. 2019, 90, 530–538.

12. Mousavi, S.M.; Zhu, W.; Ellsworth, W.; Beroza, G. Unsupervised clustering of seismic signals using deep convolutional autoencoders. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1693–1697.

13. Biswas, S.; Kumar, D.; Hajiaghaei-Keshteli, M.; Bera, U.K. An AI-based framework for earthquake relief demand forecasting: A case study in Türkiye. Int. J. Disaster Risk Reduct. 2024, 102, 104287.

14. Huang, J.C.; Liu, Z.; Huang, H.B.; Zhu, C.; Fang, Y.C.; Chen, Z.X. LLMs and Decision Intelligence Technology. J. Command Control. 2025, 2, 125–127.

15. Li, Y.L.; Zhang, J.W.; Bao, H.H. Information Needs and Satisfaction of College Students in the Context of Public Health Emergencies. Lib. Inf. Serv. 2020, 64, 85–95.

16. Shultz, T.R.; Wise, J.M.; Nobandegani, A.S. Text understanding in GPT-4 versus humans. R. Soc. Open Sci. 2025, 12, 241313.

17. Liu, Q.; He, Y.; Xu, T.; Lian, D.; Liu, C.; Zheng, Z.; Chen, E. UniMEL: A Unified Framework for Multimodal Entity Linking with Large Language Models. In Proceedings of the 33rd ACM International Conference on Information and Knowledge Management, CIKM 2024, Boise, ID, USA, 21–25 October 2024.

18. Peng, W.; Li, G.; Jiang, Y.; Wang, Z.; Ou, D.; Zeng, X.; Xu, D.; Xu, T.; Chen, E. Large Language Model Based Long-Tail Query Rewriting in Taobao Search. In Proceedings of the Companion Proceedings of the ACM Web Conference 2024, Singapore, 13–17 May 2024; pp. 20–28.

19. Sarnikar, A. Using LLMs for Querying and Understanding Long Legislative Texts. In Proceedings of the 2025 IEEE International Students’ Conference on Electrical, Electronics and Computer Science (SCEECS), Bhopal, India, 18–19 January 2025.

20. Zhang, J. (Ed.) Textbook on the Law of Earthquake Prevention and Disaster Mitigation; Tsinghua University Press: Beijing, China, 2014.

21. Zhang, Y.; Fan, K.; Guo, H.; Tang, Z.; Chen, W. Analysis of the Emergency Mode of Sichuan Earthquake Emergency Command Center in the Lushan Ms 7.0 Earthquake. Earthq. Res. Sichuan 2014, 2, 43–47.

22. Zhang, W.; Hui, Y.; Hou, Z.; Yang, X.; Mao, J. Current Situation and Reflections on On-Site Earthquake Emergency Work in Liaoning Province. J. Disaster Prev. Mitig. 2023, 39, 66–70.

23. China Legal Publishing House (Comp). Regulations on Earthquake Monitoring Management (2024); China Legal Publishing House: Beijing, China, 2025.

24. State Council of the People’s Republic of China. National Earthquake Emergency Plan (Revised on August 28, 2012). Gaz. State Counc. People’s Repub. China 2012, 28, 16–24.

25. DB/T 89-2022 [S]; Seismic Monitoring and Forecasting Standardization Technical Committee. Code for Operation of Seismic Networks: Strong Motion Observation. China Standards Press: Beijing, China, 2022.

26. Dong, M.; Yang, T.Q. Discussion on Classification of Earthquake Emergency Disaster Information. Technol. Disaster Prev. 2014, 4, 937–943.

27. Gong, Y.; Shen, W. Overview and Analysis of Emergency Information Output by Seismic Departments After Earthquakes. China Emerg. Rescue 2018, 4, 33–37.

28. DB/T 1-2000; Seismic Industry Standard System Table. Industry Standard—Seismology. Seismological Press: Beijing, China, 2000.

29. Oppenlaender, J.; Linder, R.; Silvennoinen, J. Prompting AI Art: An Investigation into the Creative Skill of Prompt Engineering. Int. J. Hum.–Comput. Interact. 2024, 41, 10207–10229.

30. Marvin, G.; Hellen, N.; Jjingo, D.; Nakatumba-Nabende, J. Prompt Engineering in Large Language Models. In Data Intelligence and Cognitive Informatics; Jacob, I.J., Piramuthu, S., Falkowski-Gilski, P., Eds.; Springer: Singapore, 2024; pp. 387–402.

31. Son, M.; Won, Y.-J.; Lee, S. Optimizing Large Language Models: A Deep Dive into Effective Prompt Engineering Techniques. Appl. Sci. 2025, 15, 1430.

32. Federiakin, D.; Molerov, D.; Zlatkin-Troitschanskaia, O.; Maur, A. Prompt engineering as a new 21st century skill. Front. Educ. 2024, 9, 1366434.

33. Wu, L.; Qiu, Z.; Zheng, Z.; Zhu, H.; Chen, E. Exploring Large Language Model for Graph Data Understanding in Online Job Recommendations. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, AAAI 2024, Vancouver, BC, Canada, 26–27 February 2024.

34. Zheng, Z.; Chao, W.; Qiu, Z.; Zhu, H.; Xiong, H. Harnessing Large Language Models for Text-Rich Sequential Recommendation. In Proceedings of the ACM Web Conference 2024, Austin, TX, USA, 13–17 May 2024; pp. 3207–3216.

35. Liu, P.; Yuan, W.; Fu, J.; Jiang, Z.; Hayashi, H.; Neubig, G. Pre-Train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing. ACM Comput. Surv. 2023, 55, 195.

36. Chen, B.; Zhang, Z.; Langrené, N.; Zhu, S. Unleashing the potential of prompt engineering for large language models. Patterns 2025, 6, 101260.

37. Tong, S.; Mao, K.; Huang, Z.; Zhao, Y.; Peng, K. Automating psychological hypothesis generation with AI: When large language models meet causal graph. Humanit. Soc. Sci. Commun. 2024, 11, 896.

38. Shah, C. From Prompt Engineering to Prompt Science with Humans in the Loop. Commun. ACM 2025, 68, 54–61.

39. Xu, D.; Zhang, Z.; Lin, Z.; Wu, X.; Zhu, Z.; Xu, T.; Zhao, X.; Zheng, Y.; Chen, E. Multi-Perspective Improvement of Knowledge Graph Completion with Large Language Models. In Proceedings of the 2024 Joint International Conference on Computational Linguistics, Language Resources and Evaluation, Torino, Italy, 20–25 May 2024; pp. 11956–11968.

40. Li, X.; Zhou, J.; Chen, W.; Xu, D.; Xu, T.; Chen, E. Visualization Recommendation with Prompt-Based Reprogramming of Large Language Models. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024; pp. 13250–13262.

41. Liu, C.; Xie, Z.; Zhao, S.; Zhou, J.; Xu, T.; Li, M.; Chen, E. Speak from Heart: An Emotion-Guided LLM-Based Multimodal Method for Emotional Dialogue Generation. In Proceedings of the 2024 International Conference on Multimedia Retrieval, Phuket, Thailand, 10–14 June 2024; pp. 533–542.

42. Corcho, O.; Fernandez-López, M.; Gómez-Pérez, A. Ontological Engineering: Principles, Methods, Tools and Languages. In Ontologies for Software Engineering and Software Technology; Springer: Berlin/Heidelberg, Germany, 2006; pp. 1–48.

| Category | Specific Content | Basis Documents/Standards (Examples) |
|---|---|---|
| Internal Industry Standards and Historical Emergency Response Scenarios | Primarily covers laws, policies, historical cases related to emergency responses to unforeseen incidents, and emergency news. These sources provide the data from which the system extracts the departments involved in earthquake emergency responses and their respective responsibilities. | 1. “Law on Earthquake Disaster Prevention and Mitigation” [20] 2. “Analysis of the Emergency Mode of the Earthquake Emergency Command Center during Earthquakes” [21] 3. “Current Status and Thoughts on Earthquake Emergency On-site Work” [22] |
| Time Specification Requirements | Clarifies the time-related specifications for earthquake emergency response. | 1. “Regulations on the Administration of Earthquake Monitoring” [23] 2. “National Earthquake Emergency Plan” [24] 3. “Observation Specifications for Seismic Network” [25] |
| Information Element List | Primarily covers the information requirements of participating departments during the emergency response to unforeseen incidents. | 1. “Discussion on the Classification of Earthquake Emergency Disaster Information” [26] 2. “Information Needs and Acquisition Suggestions for Earthquake Emergency Rescue” [2] 3. “Overview and Analysis of Emergency Information Output by Earthquake Departments after the Earthquake” [27] 4. “Analysis of the Emergency Mode of the Earthquake Emergency Command Center during Earthquakes” [21] 5. “Seismic Industry Standards” [28] |

| Prompt | Specific Content Description |
|---|---|
| Phase 1: Extraction of Demand-Providing Units | Based on the input seismic event (time, location, magnitude), combined with the internal industry standards and historical emergency response cases in the knowledge base, generate three items: “participating departments/rescue forces, responsibilities/actions, and basis documents”. Earthquake event: On 14 April 2010, at 7:49, a magnitude 7.1 earthquake occurred in Area A. Requirements: Extract information from the constructed knowledge base, including all participating departments and rescue forces after the earthquake, and output it in a tabular format. |
| Phase 2: Extraction of Information Requirement Elements and Time Elements | Using the “participating departments/rescue forces” and “responsibilities/actions” extracted in Phase 1, and drawing on the regulatory documents listed under “information element list” and “time specification requirements” in the knowledge base, generate the “information requirement elements, providing units, initial submission time, update frequency, and basis documents/standards,” and present these in a tabular format. |
| Phase 3: Generation of Structured Information Requirements | Summarize the contents of the preceding tables to generate information requirements. The summary should include participating departments/rescue forces, responsibilities/actions, information requirement elements, providing units, initial submission time, and update frequency. Ensure that each information requirement element is paired with its corresponding providing unit. Present the results in tabular form. |
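The three phases form a chain in which each prompt embeds the output of the phases before it. A minimal sketch of that chaining pattern is shown here, assuming a generic `llm` callable that maps a prompt string to a response string. The function names, the abbreviated prompt wording, and the `echo_llm` stub are illustrative assumptions; they are not the prompts or the model client used in the paper.

```python
from typing import Callable

# Abbreviated paraphrases of the three phase prompts (illustrative wording).
PHASE_1 = (
    "Earthquake event: {event}\n"
    "Using the industry standards and historical cases in the knowledge base, "
    "list the participating departments/rescue forces, their "
    "responsibilities/actions, and the basis documents as a table."
)
PHASE_2 = (
    "Given these departments and responsibilities:\n{phase_1_output}\n"
    "Using the information element list and time specification requirements, "
    "list the information requirement elements, providing units, initial "
    "submission time, update frequency, and basis documents as a table."
)
PHASE_3 = (
    "Summarize the following tables into one table of information "
    "requirements, pairing each element with its providing unit:\n"
    "{phase_1_output}\n{phase_2_output}"
)


def run_chain(event: str, llm: Callable[[str], str]) -> dict[str, str]:
    """Run the three prompts in order, feeding each output into the next."""
    out_1 = llm(PHASE_1.format(event=event))
    out_2 = llm(PHASE_2.format(phase_1_output=out_1))
    out_3 = llm(PHASE_3.format(phase_1_output=out_1, phase_2_output=out_2))
    return {"phase_1": out_1, "phase_2": out_2, "phase_3": out_3}


def echo_llm(prompt: str) -> str:
    """Stub model: reports the prompt length so the data flow is visible."""
    return f"<response to {len(prompt)}-character prompt>"


results = run_chain(
    "On 14 April 2010, at 7:49, a magnitude 7.1 earthquake occurred in Area A.",
    echo_llm,
)
for phase, text in results.items():
    print(phase, text)
```

Keeping the intermediate outputs in the returned dictionary makes it possible to inspect where an error entered the chain, for example a department missing in Phase 1 that then never receives information requirements in Phase 2.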

| Participating Departments/Rescue Forces | Core Responsibilities/Actions | Information Requirement Elements | Information Providing Units | First Submission Time | Update Frequency |
|---|---|---|---|---|---|
| Earthquake Administration | Earthquake monitoring, intensity assessment, and disaster evaluation | 1. Basic earthquake parameters (epicenter, magnitude, focal depth). 2. Earthquake intensity distribution. 3. Aftershock sequence data. 4. Distribution of secondary geological disasters. 5. Disaster situation evaluation report. | Earthquake Monitoring Center, earthquake departments, remote sensing monitoring units. | Basic parameters: 0.5 to 1 h. Intensity distribution: 8 h. | Basic parameters: every 2 h. Intensity distribution: revised every 24 h. |
| Fire and Rescue Teams | Life search and rescue, identification of rescue targets | 1. Location of trapped persons. 2. Building collapse types (schools, hospitals). 3. Search and rescue priorities. | Fire departments, on-site rescue teams, and UAV monitoring units. | 1–24 h. | Real-time update. |
| National Health Commission | Medical treatment, medical resource statistics | 1. Casualty statistics (serious injuries, slight injuries, missing persons). 2. Damage to medical facilities. 3. List of urgently needed medicines. 4. Distribution of casualties. | Health departments, hospitals/emergency centers, and centers for disease control and prevention (CDC). | Within 2 h. | Every 12 h. |
| Ministry of Civil Affairs | Resettlement of affected people, coordination of living materials | 1. Number of affected individuals. 2. Number of individuals resettled. 3. Demand for relief supplies (tents, food, medicines). | Civil affairs departments at all levels, Red Cross Society, and charitable organizations. | 2–4 h. | Every 6–12 h. |
| Ministry of Transport | Road emergency repair, guarantee of rescue access | 1. Location of damaged roads and bridges. 2. Emergency repair progress. 3. Passable routes. | Transport departments, highway administration bureaus, road emergency repair teams. | 1–2 h. | Every 3–6 h. |
| Ministry of Industry and Information Technology | Communication restoration, power guarantee | 1. Scope of communication/power outage. 2. Damage to communication base stations and power facilities. 3. Restoration progress. | Communication operators, State Grid/local power companies, provincial communication/power dispatch centers. | Within 1 h. | Every 3–6 h. |
| Ministry of Housing and Urban-Rural Development | Building safety assessment, geological disaster monitoring | 1. Statistics on building damage. 2. Distribution of secondary geological disasters (including landslides and debris flows). 3. Demand for relief materials. 4. Monitoring data of landslides and collapses. | Departments of housing and urban-rural development at all levels, departments of land and resources, and geological survey bureaus. | 8–48 h. | Every 4–24 h. |
| Ministry of Emergency Management | Coordination of rescue forces and summary of the disaster situation | 1. Deployment of rescue forces. 2. Dynamic disaster situation. 3. Risk of secondary disasters. | Emergency Command Center, Geological Monitoring Station. | 1–2 h. | Real-time update/every 6 h. |
| Meteorological Bureau | Meteorological forecast for disaster-stricken areas | 1. Weather forecast for the next 72 h (including precipitation and temperature). 2. Assessment of the impact of wind and visibility. | Meteorological departments at all levels and satellite remote sensing monitoring units. | 1–2 h. | Every 6–24 h. |
| Local Governments | Local disaster situation statistics and reporting | 1. Number of casualties. 2. Outage of transportation, communication, and power. 3. Number of collapsed buildings. | Civil affairs departments, public security departments, transportation, power, and communication departments. | Within 2 h. | Every 1–6 h. |

| Dimension | Expert Standards | ChatGLM | DeepSeek |
|---|---|---|---|
| Information Requirement Elements (items) | 5 (Parameters/Intensity/Aftershocks/Secondary Disasters/Assessment Report) | 5 (matches core elements, with additional “fault zone analysis”) | 4 (lacks “Parameters/Aftershocks”) |
| First Submission Time | Basic parameters: 0.5–1 h; Intensity: 8 h | Within 30 min (exceeds the standard) | Within 8 h (meets the intensity requirement) |
| Information-Providing Units (number) | 3 (National Seismic Network/Provincial Departments/Remote Sensing Units) | 1 (lacks 2 units) | 4 (full coverage without redundancy) |
| F1-Score | - | 66.7% | 57.1% |
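The F1-scores in the last row combine precision (P) and recall (R), which are in turn computed from the TP, FP, and FN counts. The sketch below uses the standard definitions P = TP/(TP + FP), R = TP/(TP + FN), and F1 = 2PR/(P + R). The function name and the example counts are illustrative assumptions and are not the counts behind the scores reported in the table.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F1) from TP, FP, and FN counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0.0:
        # No true positives at all: define F1 as zero instead of dividing by zero.
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 6 generated elements match the expert standard (TP),
# 2 generated elements have no counterpart (FP), and 1 expert element is missed (FN).
p, r, f1 = precision_recall_f1(tp=6, fp=2, fn=1)
print(f"P={p:.1%}  R={r:.1%}  F1={f1:.1%}")
```

Because F1 is the harmonic mean of P and R, a model that adds many unmatched elements (low P) or omits expert elements (low R) is penalised in either case.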

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Y.; Guo, C.; Lu, Z.; Zhang, C.; Gao, W.; Liu, J.; Yang, J. Research on the Automatic Generation of Information Requirements for Emergency Response to Unexpected Events. Appl. Sci. 2025, 15, 11953. https://doi.org/10.3390/app152211953
