MPCFN: A Multilevel Predictive Cross-Fusion Network for Multimodal Named Entity Recognition in Social Media

Abstract

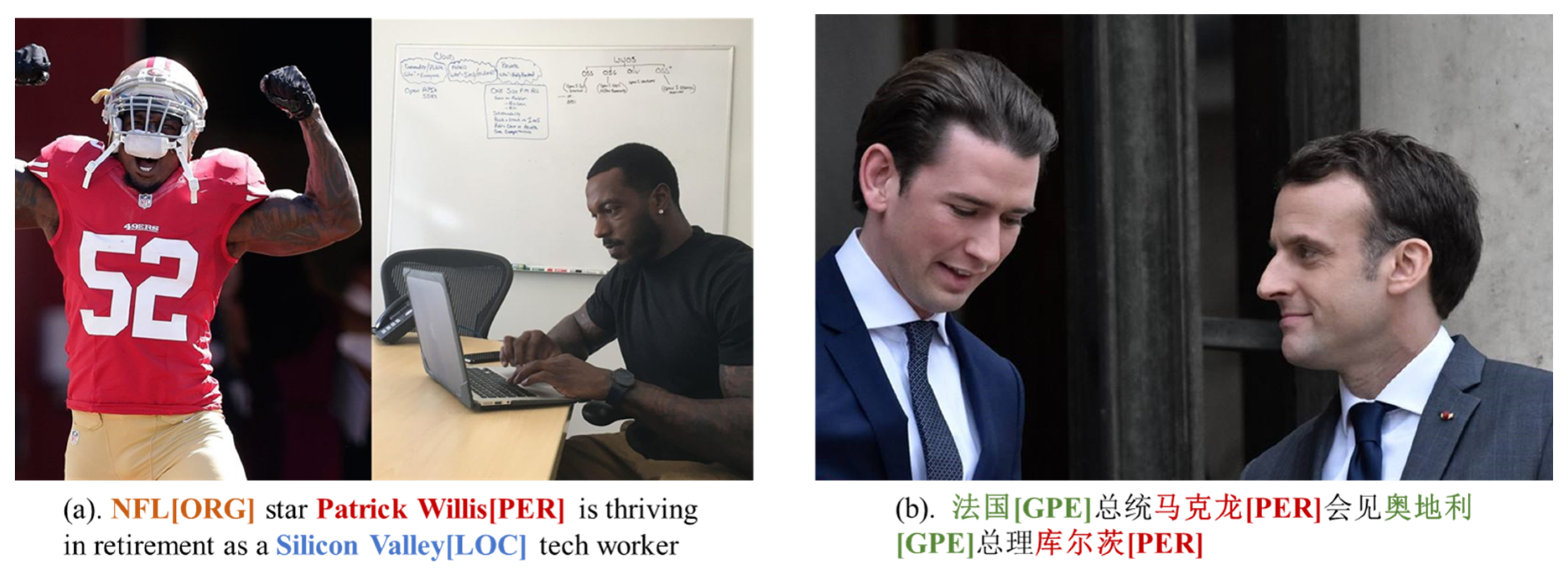

1. Introduction

- (1) We present a Multilevel Predictive Cross-Fusion Network (MPCFN) to improve multimodal named entity recognition (MNER) on social media. The model predicts the correlation between text and images to mitigate interference from irrelevant images, and it integrates multimodal information through cross-fusion rather than visual prefix fusion.

- (2) To address the overfitting associated with model complexity, we apply Flooding to the Conditional Random Field (CRF) layer. Modifying the CRF layer’s loss function in this way mitigates overfitting, which improves the model’s generalization and its robustness on complex sequence labeling tasks.

- (3) Through extensive experiments and analysis, we show that our model performs competitively with existing state-of-the-art models.
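The Flooding idea in contribution (2) can be illustrated with a minimal sketch. It assumes the standard formulation from Ishida et al. (2020), in which a training loss J is replaced by |J − b| + b for a flood level b. The function names, the sample loss values, and the treatment of the CRF negative log-likelihood as a plain float are illustrative assumptions, not the authors’ implementation.

```python
def flooded_loss(loss: float, b: float) -> float:
    """Return the flooded loss |loss - b| + b (Ishida et al., 2020)."""
    return abs(loss - b) + b


def update_direction(loss: float, b: float) -> int:
    """Sign applied to the original gradient: +1 descends, -1 ascends, 0 at loss == b."""
    if loss > b:
        return 1
    if loss < b:
        return -1
    return 0


# Hypothetical CRF negative log-likelihood values seen during training,
# with an assumed flood level b = 0.3.
b = 0.3
for crf_nll in (1.20, 0.45, 0.10):
    print(
        f"loss={crf_nll:.2f} "
        f"flooded={flooded_loss(crf_nll, b):.2f} "
        f"direction={update_direction(crf_nll, b):+d}"
    )
```

While the loss stays above b, training proceeds as usual. Once it falls below b, the gradient sign flips, so the training loss hovers around the flood level instead of being driven toward zero.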

2. Related Work

Previous Research on Multimodal NER

- (1) Unbalanced feature alignment granularity: Most models (e.g., RpBERT [34], HVPNeT [11]) either rely solely on global image features or overemphasize local object features. They fail to achieve hierarchical collaboration and dynamic adaptation of “global–local” visual features, which limits the semantic matching accuracy between textual entities and visual regions.

- (2) Insufficient visual noise-filtering capability: Existing methods (e.g., MGCMT [35]) mostly use attention mechanisms in the late stage of feature fusion to select effective visual information, lacking early targeted noise-filtering designs. As a result, irrelevant visual noise tends to propagate continuously during model training, increasing the risk of entity misrecognition.

3. Methodology

3.1. Overall Framework

3.2. Stage 1: Text and Visual Feature Extraction

3.3. Stage 2: Correlation Prediction

3.4. Stage 3: Dynamic Gate

3.5. Stage 4: Cross-Fusion

3.6. Stage 5: CRF and Flooding Layer

4. Experiments

4.1. Dataset

4.2. Evaluation Metric

4.3. Baselines

- BERT (Devlin et al., 2018 [4]): We use BERT, which has been pre-trained on a large amount of unlabeled text, as the language model for MNER with a softmax decoder.

- BERT-CRF: An extension of BERT that uses a standard CRF layer as the decoder instead of a softmax layer.

- UMT (Yu et al., 2020 [8]) uses a multimodal interaction module to obtain both image-aware word representations and word-aware visual representations.

- RpBERT (Sun et al., 2021 [34]) adopts a method of text–image relation propagation to select visual clues.

- UMGF (Zhang et al., 2021 [43]) presents a unified multimodal graph fusion approach for MNER.

- HVPNeT (Chen et al., 2022 [11]) introduces a dynamic gated aggregation method to obtain hierarchical multiscale visual features, which are used as visual prefixes for fusion.

- GMNER (Yu et al., 2023 [44]) introduces a hierarchical indexing framework called H-Index, which generates entity–type–region triples hierarchically using a sequence-to-sequence model.

- MGCMT (Liu et al., 2024 [35]) improves word representation through semantic enhancement and cross-modal interaction at various levels, achieving effective multimodal guidance for each word.
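The difference between the softmax decoder of the BERT baseline and the CRF decoder of BERT-CRF can be sketched as follows. This is a simplified illustration, not any baseline’s actual code: the tag set, the emission scores, and the transition scores are hypothetical, and start/end transitions are omitted for brevity.

```python
from typing import List

TAGS = ["O", "B-PER", "I-PER"]


def greedy_decode(emissions: List[List[float]]) -> List[str]:
    """Softmax-style decoding: choose the best tag for each token independently."""
    return [TAGS[max(range(len(TAGS)), key=row.__getitem__)] for row in emissions]


def viterbi_decode(emissions: List[List[float]],
                   transitions: List[List[float]]) -> List[str]:
    """CRF-style decoding: best tag sequence under emission + transition scores."""
    n_tags = len(TAGS)
    score = list(emissions[0])  # best score of any path ending in each tag
    backptr = []                # backptr[t][j] = best previous tag for tag j
    for row in emissions[1:]:
        new_score, pointers = [], []
        for j in range(n_tags):
            best_i = max(range(n_tags), key=lambda i: score[i] + transitions[i][j])
            new_score.append(score[best_i] + transitions[best_i][j] + row[j])
            pointers.append(best_i)
        score = new_score
        backptr.append(pointers)
    # Follow the back-pointers from the best final tag.
    best = max(range(n_tags), key=score.__getitem__)
    path = [best]
    for pointers in reversed(backptr):
        best = pointers[best]
        path.append(best)
    return [TAGS[i] for i in reversed(path)]


# Hypothetical scores for a two-token mention; columns follow TAGS.
emissions = [[2.0, 1.5, 0.0],
             [0.5, 0.0, 2.0]]
# transitions[i][j]: score of moving from tag i to tag j (O -> I-PER is penalized).
transitions = [[0.0, 0.0, -10.0],
               [0.0, 0.0, 1.0],
               [0.0, 0.0, 0.5]]

print(greedy_decode(emissions))                # ['O', 'I-PER'], an invalid BIO sequence
print(viterbi_decode(emissions, transitions))  # ['B-PER', 'I-PER']
```

Because the CRF scores whole tag sequences, it can reject label transitions that per-token decoding would allow, which is the usual motivation for placing a CRF layer on top of BERT.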

4.4. Experiment Configuration

5. Result

5.1. Ablation Study

- (1) When only some of the components are integrated, performance is lower than that of the full model. Even so, the F1 score of these partial configurations still exceeds that of the text-only baselines and of most existing MNER methods. This indicates that each component contributes to the final entity recognition results.

- (2) For the English datasets TWITTER-2015 and TWITTER-2017, performance drops markedly when the Flooding module is not integrated, indicating that Flooding plays an important role in model training. In contrast, for the Chinese dataset WuKong, the decline without Flooding is less pronounced. This is because the WuKong dataset is over three times larger than the TWITTER datasets, which makes the data more diverse and reduces the likelihood of overfitting. Consequently, Flooding contributes less in this case.

- (3) The model suffers a noticeable performance degradation when neither the Correlation Prediction Module nor the Cross-Fusion Module is integrated. This suggests that both the Correlation Prediction Module (which filters out irrelevant images) and the Cross-Fusion Module (which fuses image information) are beneficial for the NER task.
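The drops discussed in observations (2) and (3) can be recomputed from the F1 columns of the ablation table. The short script below is only an arithmetic check. It assumes that the “+CP + CF” row is the model without Flooding and that the “+Flooding” row is the model without both the Correlation Prediction and Cross-Fusion modules. The variable and function names are illustrative.

```python
# F1 scores copied from the ablation table, in the order
# (TWITTER-2015, TWITTER-2017, WuKong).
DATASETS = ("TWITTER-2015", "TWITTER-2017", "WuKong")
ABLATION_F1 = {
    "+Flooding": (74.67, 87.30, 81.31),     # assumed: no CP, no CF
    "+CP + CF": (74.88, 86.40, 82.19),      # assumed: no Flooding
    "MPCFN (ours)": (76.74, 87.61, 82.35),  # full model
}


def f1_drop(variant: str) -> tuple:
    """F1 points lost by `variant` relative to the full model, per dataset."""
    full = ABLATION_F1["MPCFN (ours)"]
    return tuple(round(f - v, 2) for f, v in zip(full, ABLATION_F1[variant]))


for variant in ("+CP + CF", "+Flooding"):
    drops = dict(zip(DATASETS, f1_drop(variant)))
    print(f"{variant}: {drops}")
```

Under this reading, removing Flooding costs 1.86 and 1.21 F1 points on the two TWITTER datasets but only 0.16 on WuKong, which matches the pattern described in observation (2).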

5.2. Analysis of Results Compared to Existing Models

- (1) Comparing SOTA multimodal methods with text-based unimodal approaches reveals that multimodal methods generally perform better, suggesting that incorporating additional visual information is often beneficial for NER tasks.

- (2) The advantage of current multimodal models over text-based unimodal methods is clearest on the English datasets TWITTER-2015 and TWITTER-2017, with a maximum F1 improvement of about 3.5 points. On the Chinese dataset WuKong, however, the maximum improvement is only about 1 point (for instance, when comparing RpBERT with BERT), and some multimodal models (such as UMGF and MGCMT) do not outperform text-based methods at all. This highlights the need for further enhancement of multimodal models.

- (3) The proposed Multilevel Predictive Cross-Fusion Network improves substantially on both text-based unimodal methods and existing multimodal approaches across the three datasets. Specifically, our model improves F1 by 4.93, 4.17, and 3.44 points over the best text-based unimodal method on TWITTER-2015, TWITTER-2017, and WuKong, respectively. Relative to the best existing multimodal method on each dataset, the gains are 1.42, 0.74, and 2.12 points, respectively.
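The gains quoted in observation (3) follow directly from the F1 columns of the comparison table. The sketch below reproduces that arithmetic. GMNER is left out because it has no WuKong score and trails the other methods on both TWITTER datasets. The helper names are illustrative.

```python
# F1 scores copied from the comparison table, in the order
# (TWITTER-2015, TWITTER-2017, WuKong).
TEXT_ONLY = {
    "BERT": (71.32, 82.95, 78.91),
    "BERT-CRF": (71.81, 83.44, 78.88),
}
MULTIMODAL = {
    "UMT": (73.41, 85.31, 79.75),
    "RpBERT": (74.25, 86.30, 80.23),
    "UMGF": (74.85, 85.51, 76.38),
    "HVPNeT": (75.32, 86.87, 80.05),
    "MGCMT": (74.57, 86.09, 77.83),
}
MPCFN = (76.74, 87.61, 82.35)


def best_per_dataset(models: dict) -> tuple:
    """Highest F1 among `models` for each of the three datasets."""
    return tuple(max(scores[i] for scores in models.values()) for i in range(3))


def gains(ours: tuple, reference: tuple) -> tuple:
    """Absolute F1 difference (in points) between our model and a reference."""
    return tuple(round(o - r, 2) for o, r in zip(ours, reference))


print("over best text-only: ", gains(MPCFN, best_per_dataset(TEXT_ONLY)))
print("over best multimodal:", gains(MPCFN, best_per_dataset(MULTIMODAL)))
```

The differences are absolute F1 points, computed against the strongest text-only and the strongest multimodal baseline on each dataset separately.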

5.3. Parameter Sensitivity Analysis

5.4. Case Study

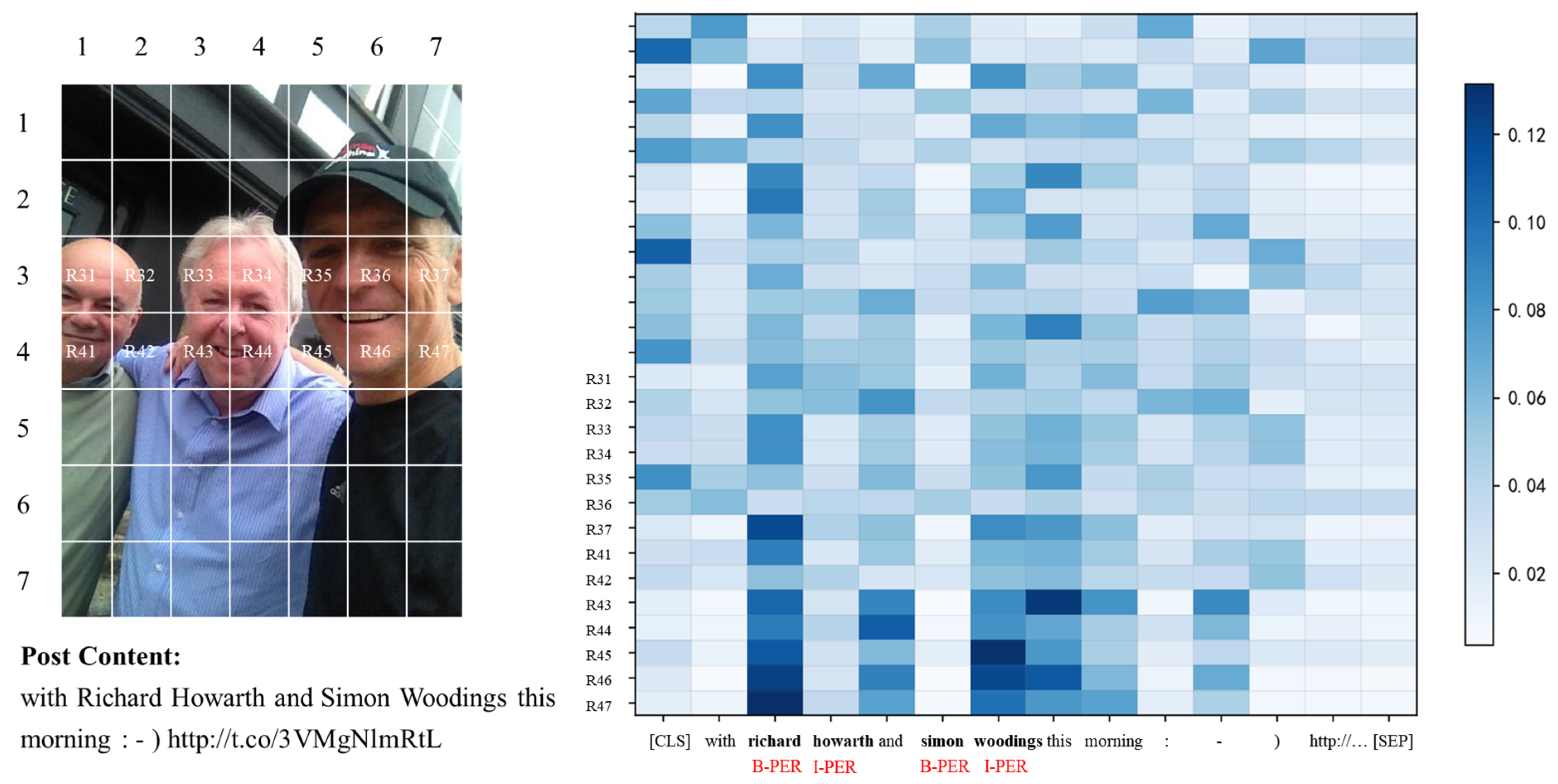

5.5. Attention Analysis Between Textual Entities and Visual Objects

5.6. Generalization Study

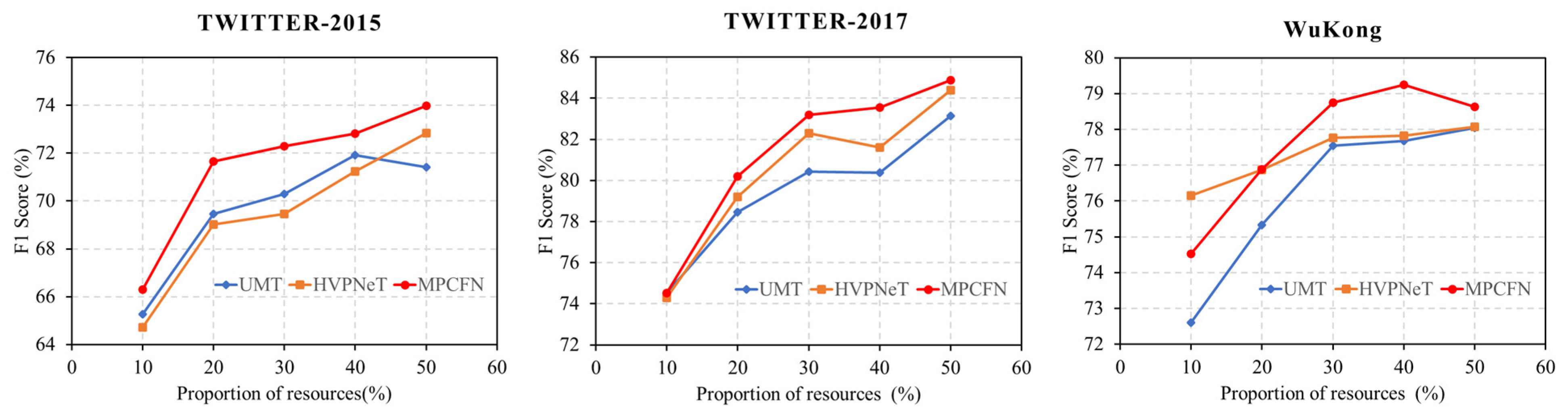

5.7. Low-Resource Experiment

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yu, J.; Bohnet, B.; Poesio, M. Named Entity Recognition as Dependency Parsing. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 6470–6476.

- Nasar, Z.; Jaffry, S.W.; Malik, M.K. Named entity recognition and relation extraction: State-of-the-art. ACM Comput. Surv. 2021, 54, 1–39.

- Kim, J.H.; Woodland, P.C. A rule-based named entity recognition system for speech input. In Proceedings of the Sixth International Conference on Spoken Language Processing, Beijing, China, 16–20 October 2000.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; long and short papers. Volume 1, pp. 4171–4186.

- Jie, Z.; Lu, W. Dependency-Guided LSTM-CRF for Named Entity Recognition. In Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), Hong Kong, China, 3–7 November 2019; pp. 3862–3872.

- Wu, Z.; Zheng, C.; Cai, Y.; Chen, J.; Leung, H.; Li, Q. Multimodal representation with embedded visual guiding objects for named entity recognition in social media posts. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 1038–1046.

- Zheng, C.; Wu, Z.; Wang, T.; Cai, Y.; Li, Q. Object-aware multimodal named entity recognition in social media posts with adversarial learning. IEEE Trans. Multimed. 2020, 23, 2520–2532.

- Yu, J.; Jiang, J.; Yang, L.; Xia, R. Improving multimodal named entity recognition via entity span detection with unified multimodal transformer. In Proceedings of the Association for Computational Linguistics, Online, 5–10 July 2020.

- Lu, D.; Neves, L.; Carvalho, V.; Zhang, N.; Ji, H. Visual attention model for name tagging in multimodal social media. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; Long Papers. Volume 1, pp. 1990–1999.

- Moon, S.; Neves, L.; Carvalho, V. Multimodal Named Entity Recognition for Short Social Media Posts. In Proceedings of the NAACL-HLT, New Orleans, LA, USA, 1–6 June 2018; pp. 852–860.

- Chen, L.; Kong, H.; Wang, H.; Yang, W.K.; Lou, J.; Xu, F.L. HVP-Net: A hybrid voxel-and point-wise network for place recognition. IEEE Trans. Intell. Veh. 2023, 9, 395–406.

- Wang, X.; Gui, M.; Jiang, Y.; Jia, Z.; Bach, N.; Wang, T.; Huang, Z.; Huang, F.; Tu, K. ITA: Image-Text Alignments for Multi-Modal Named Entity Recognition. In Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Seattle, WA, USA, 10–15 July 2022; pp. 3176–3189.

- Jia, M.; Shen, X.; Shen, L.; Pang, J.; Liao, L.; Song, Y.; Chen, M.; He, X. Query prior matters: A MRC framework for multimodal named entity recognition. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 3549–3558.

- Lu, J.; Zhang, D.; Zhang, P. Flat Multi-modal Interaction Transformer for Named Entity Recognition. In Proceedings of the 29th International Conference on Computational Linguistics, Gyeongju, Republic of Korea, 12–17 October 2022; pp. 2055–2064.

- Asgari-Chenaghlu, M.; Feizi-Derakhshi, M.R.; Farzinvash, L.; Balafar, M.A.; Motamed, C. CWI: A multimodal deep learning approach for named entity recognition from social media using character, word and image features. Neural Comput. Appl. 2022, 34, 1905–1922.

- Wang, X.; Ye, J.; Li, Z.; Tian, J.; Jiang, Y.; Yan, J.; Zhang, J.; Xiao, Y. CAT-MNER: Multimodal named entity recognition with knowledge-refined cross-modal attention. In Proceedings of the 2022 IEEE International Conference on Multimedia and Expo (ICME), Taipei, Taiwan, 18 July–22 July 2022; IEEE: New York, NY, USA, 2022; pp. 1–6.

- Xu, B.; Huang, S.; Sha, C.; Wang, H. MAF: A general matching and alignment framework for multimodal named entity recognition. In Proceedings of the Fifteenth ACM International Conference on Web Search and Data Mining, Tempe, AZ, USA, 21–25 February 2022; pp. 1215–1223.

- Kapur, J.N. Maximum-Entropy Models in Science and Engineering; John Wiley & Sons: Hoboken, NJ, USA, 1989.

- Eddy, S.R. Hidden markov models. Curr. Opin. Struct. Biol. 1996, 6, 361–365.

- Hearst, M.A.; Dumais, S.T.; Osuna, E.; Platt, J.; Scholkopf, B. Support vector machines. IEEE Intell. Syst. Their Appl. 1998, 13, 18–28.

- Lafferty, J.; McCallum, A.; Pereira, F. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of the ICML, Williamstown, MA, USA, 28 June–1 July 2001; Volume 1, p. 3.

- Borthwick, A.; Sterling, J.; Agichtein, E.; Grishman, R. NYU: Description of the MENE named entity system as used in MUC-7. In Proceedings of the Seventh Message Understanding Conference (MUC-7), Fairfax, VA, USA, 29 April–1 May 1998.

- Mccallum, A.; Li, W. Early results for Named Entity Recognition with Conditional Random Fields, Feature Induction and Web-Enhanced Lexicons. In Proceedings of the Seventh Conference on Natural Language Learning at HLT-NAACL 2003, Edmonton, AB, Canada, May 31–June 1 2003; pp. 188–191.

- Li, P.; Zhou, G.; Guo, Y.; Zhang, S.; Jiang, Y.; Tang, Y. EPIC: An epidemiological investigation of COVID-19 dataset for Chinese named entity recognition. Inf. Process. Manag. 2024, 61, 103541.

- Chiu, J.P.C.; Nichols, E. Named entity recognition with bidirectional LSTM-CNNs. Trans. Assoc. Comput. Linguist. 2016, 4, 357–370.

- Zhao, Z.; Yang, Z.; Luo, L.; Wang, L.; Zhang, Y.; Lin, H.; Wang, J. Disease named entity recognition from biomedical literature using a novel convolutional neural network. BMC Med. Genom. 2017, 10, 75–83.

- Zheng, S.; Wang, F.; Bao, H.; Hao, Y.; Zhou, P.; Xu, B. Joint Extraction of Entities and Relations Based on a Novel Tagging Scheme. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, Vancouver, BC, Canada, 30 July–4 August 2017; Long Papers. Association for Computational Linguistics: Stroudsburg, PA, USA; Volume 1.

- Gui, T.; Ye, J.; Zhang, Q.; Zhou, Y.; Gong, Y.; Huang, X. Leveraging document-level label consistency for named entity recognition. In Proceedings of the Twenty-Ninth International Conference on International Joint Conferences on Artificial Intelligence, Montreal, QC, Canada, 19–27 August 2021; pp. 3976–3982.

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving language understanding by generative pre-training. Comput. Sci. 2018, in press.

- Shi, S.; Hu, K.; Xie, J.; Guo, Y.; Wu, H. Robust scientific text classification using prompt tuning based on data augmentation with L2 regularization. Inf. Process. Manag. 2024, 61, 103531.

- Liu, Y.; Huang, S.; Li, R.; Yan, N.; Du, Z. USAF: Multimodal Chinese named entity recognition using synthesized acoustic features. Inf. Process. Manag. 2023, 60, 103290.

- Zhang, Q.; Fu, J.; Liu, X.; Huang, X. Adaptive co-attention network for named entity recognition in tweets. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Chen, D.; Li, Z.; Gu, B.; Chen, Z. Multimodal named entity recognition with image attributes and image knowledge. In Database Systems for Advanced Applications, Proceedings of the 26th International Conference, DASFAA 2021, Taipei, Taiwan, 11–14 April 2021; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 186–201.

- Sun, L.; Wang, J.; Zhang, K.; Su, Y.; Weng, F. RpBERT: A text-image relation propagation-based BERT model for multimodal NER. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 19–21 May 2021; Volume 35, pp. 13860–13868.

- Liu, P.; Wang, G.; Li, H.; Liu, J.; Ren, Y.; Zhu, H.; Sun, L. Multi-granularity cross-modal representation learning for named entity recognition on social media. Inf. Process. Manag. 2024, 61, 103546.

- Wang, X.; Cai, J.; Jiang, Y.; Xie, P.; Tu, K.; Lu, W. Named Entity and Relation Extraction with Multi-Modal Retrieval. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2022, Abu Dhabi, United Arab Emirates, 7–11 December 2022; pp. 5925–5936.

- Ba, J.L.; Kiros, J.R.; Hinton, G.E. Layer normalization. arXiv 2016, arXiv:1607.06450.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5999–6009.

- Lample, G.; Ballesteros, M.; Subramanian, S.; Kawakami, K.; Dyer, C. Neural Architectures for Named Entity Recognition. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; Association for Computational Linguistics: Stroudsburg, PA, USA, 2016.

- Ishida, T.; Yamane, I.; Sakai, T.; Niu, G.; Sugiyama, M. Do We Need Zero Training Loss After Achieving Zero Training Error? In Proceedings of the International Conference on Machine Learning, PMLR, Online, 13–18 July 2020; pp. 4604–4614.

- Gu, J.; Meng, X.; Lu, G.; Hou, L.; Minzhe, N.; Liang, X.; Yao, L.; Huang, R.; Zhang, W.; Jiang, X.; et al. Wukong: A 100 million large-scale chinese cross-modal pre-training benchmark. Adv. Neural Inf. Process. Syst. 2022, 35, 26418–26431.

- Li, J.; Sun, A.; Han, J.; Li, C. A survey on deep learning for named entity recognition. IEEE Trans. Knowl. Data Eng. 2020, 34, 50–70.

- Zhang, D.; Wei, S.; Li, S.; Wu, H.; Zhu, Q.; Zhou, G. Multi-modal graph fusion for named entity recognition with targeted visual guidance. In Proceedings of the AAAI Conference on Artificial Intelligence, Online, 19–21 May 2021; Volume 35, pp. 14347–14355.

- Yu, J.; Li, Z.; Wang, J.; Xia, R. Grounded multimodal named entity recognition on social media. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023; Volume 1, pp. 9141–9154.

| Entity Type | TWITTER-2015 Train | TWITTER-2015 Dev | TWITTER-2015 Test | TWITTER-2017 Train | TWITTER-2017 Dev | TWITTER-2017 Test | WuKong Train | WuKong Dev | WuKong Test |
|---|---|---|---|---|---|---|---|---|---|
| PER | 2217 | 552 | 1816 | 2943 | 626 | 621 | 7780 | 2144 | 2088 |
| LOC | 2091 | 522 | 1697 | 731 | 173 | 178 | 1381 | 400 | 366 |
| ORG | 928 | 247 | 839 | 1674 | 375 | 395 | 6381 | 1677 | 1684 |
| MISC/GPE | 940 | 225 | 726 | 701 | 150 | 157 | 6865 | 1765 | 1854 |
| No. of entities | 6176 | 1546 | 5078 | 6049 | 1324 | 1351 | 22407 | 5986 | 5992 |
| No. of tweets | 4000 | 1000 | 3257 | 3373 | 723 | 723 | 36024 | 9699 | 9700 |

| Methods | TWITTER-2015 P | TWITTER-2015 R | TWITTER-2015 F1 | TWITTER-2017 P | TWITTER-2017 R | TWITTER-2017 F1 | WuKong P | WuKong R | WuKong F1 |
|---|---|---|---|---|---|---|---|---|---|
| Baseline model | 73.76 | 75.52 | 74.63 | 86.96 | 87.05 | 86.50 | 77.00 | 82.88 | 79.83 |
| +CP | 74.05 | 75.94 | 74.98 | 87.32 | 87.19 | 87.26 | 80.86 | 83.13 | 81.98 |
| +Flooding | 74.87 | 74.47 | 74.67 | 88.09 | 86.53 | 87.30 | 79.36 | 83.36 | 81.31 |
| +CF | 74.43 | 75.44 | 74.94 | 84.82 | 86.45 | 85.63 | 82.31 | 81.04 | 81.67 |
| +CP + Flooding | 76.08 | 75.67 | 75.88 | 88.38 | 86.68 | 87.52 | 80.41 | 83.66 | 82.01 |
| +CP + CF | 73.37 | 76.46 | 74.88 | 85.83 | 86.97 | 86.40 | 83.45 | 80.96 | 82.19 |
| +Flooding + CF | 76.64 | 75.98 | 76.31 | 88.74 | 85.71 | 87.20 | 80.34 | 83.29 | 81.79 |
| MPCFN (ours) | 76.84 | 76.65 | 76.74 | 88.34 | 86.90 | 87.61 | 81.82 | 82.89 | 82.35 |

| Modality | Methods | TWITTER-2015 P | TWITTER-2015 R | TWITTER-2015 F1 | TWITTER-2017 P | TWITTER-2017 R | TWITTER-2017 F1 | WuKong P | WuKong R | WuKong F1 |
|---|---|---|---|---|---|---|---|---|---|---|
| Text | BERT | 68.30 | 74.61 | 71.32 | 82.19 | 83.72 | 82.95 | 76.82 | 81.12 | 78.91 |
| Text | BERT-CRF | 69.22 | 74.59 | 71.81 | 83.32 | 83.57 | 83.44 | 78.37 | 79.39 | 78.88 |
| Text + Image | UMT | 71.67 | 75.23 | 73.41 | 85.28 | 85.34 | 85.31 | 79.06 | 80.46 | 79.75 |
| Text + Image | RpBERT | 73.29 | 75.23 | 74.25 | 85.86 | 86.75 | 86.30 | 78.44 | 82.09 | 80.23 |
| Text + Image | UMGF | 74.49 | 75.21 | 74.85 | 86.54 | 84.50 | 85.51 | 78.22 | 74.62 | 76.38 |
| Text + Image | HVPNeT | 73.87 | 76.82 | 75.32 | 85.84 | 87.93 | 86.87 | 79.98 | 80.12 | 80.05 |
| Text + Image | GMNER | 61.65 | 62.03 | 61.27 | 65.62 | 66.54 | 64.72 | - | - | - |
| Text + Image | MGCMT | 73.57 | 75.59 | 74.57 | 86.03 | 86.16 | 86.09 | 75.28 | 80.56 | 77.83 |
| Text + Image | MPCFN (ours) | 76.84 | 76.65 | 76.74 | 88.34 | 86.90 | 87.61 | 81.82 | 82.89 | 82.35 |

| Parameter b | TWITTER-2015 P | TWITTER-2015 R | TWITTER-2015 F1 | TWITTER-2017 P | TWITTER-2017 R | TWITTER-2017 F1 | WuKong P | WuKong R | WuKong F1 |
|---|---|---|---|---|---|---|---|---|---|
| 0.2 | 76.07 | 75.58 | 75.82 | 87.09 | 85.86 | 86.47 | 82.01 | 80.59 | 81.30 |
| 0.3 | 76.84 | 76.65 | 76.74 | 87.90 | 87.12 | 87.51 | 81.19 | 83.39 | 82.28 |
| 0.4 | 76.88 | 76.05 | 76.46 | 86.93 | 85.64 | 86.28 | 80.33 | 83.28 | 81.78 |
| 0.5 | 77.32 | 75.60 | 76.45 | 88.34 | 86.90 | 87.61 | 81.08 | 83.29 | 82.17 |
| 0.6 | 76.39 | 75.50 | 75.94 | 87.89 | 85.94 | 86.90 | 81.82 | 82.89 | 82.35 |
| 0.7 | 75.80 | 76.49 | 76.15 | 89.10 | 85.27 | 87.14 | 81.12 | 83.24 | 82.17 |
| 0.8 | 75.51 | 75.25 | 75.38 | 87.42 | 85.42 | 86.41 | 80.79 | 83.18 | 81.97 |

| Methods | TWITTER-2015 P | TWITTER-2015 R | TWITTER-2015 F1 | TWITTER-2017 P | TWITTER-2017 R | TWITTER-2017 F1 | WuKong P | WuKong R | WuKong F1 |
|---|---|---|---|---|---|---|---|---|---|
| MPCFN | 76.84 | 76.65 | 76.74 | 88.34 | 86.90 | 87.61 | 81.82 | 82.89 | 82.35 |
| w/o uni-text | 73.76 | 75.52 | 74.63 | 86.96 | 87.05 | 86.50 | 77.00 | 82.88 | 79.83 |
| w/o uni-obj | 75.05 | 75.88 | 75.47 | 87.59 | 86.75 | 87.17 | 81.22 | 83.36 | 82.28 |
| w/o uni-img | 76.81 | 75.21 | 76.01 | 88.46 | 86.23 | 87.33 | 80.52 | 83.11 | 81.79 |

| Methods | TWITTER-2017 → TWITTER-2015 P | TWITTER-2017 → TWITTER-2015 R | TWITTER-2017 → TWITTER-2015 F1 | TWITTER-2015 → TWITTER-2017 P | TWITTER-2015 → TWITTER-2017 R | TWITTER-2015 → TWITTER-2017 F1 |
|---|---|---|---|---|---|---|
| UMT | 64.67 | 63.59 | 64.13 | 67.80 | 55.23 | 60.87 |
| MGCMT | 67.67 | 63.28 | 65.40 | 66.41 | 58.11 | 61.98 |
| MPCFN (ours) | 73.71 | 62.84 | 67.84 | 65.79 | 59.22 | 62.33 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Qiu, Q.; Tan, B.; Zhou, Y.; Chen, W.; Tian, M.; Tao, L. MPCFN: A Multilevel Predictive Cross-Fusion Network for Multimodal Named Entity Recognition in Social Media. Appl. Sci. 2025, 15, 11855. https://doi.org/10.3390/app152211855
