1. Introduction

A building must function optimally to support the activities of its occupants. Residential buildings should perform particularly well because occupants spend much of their time inside [1]. Hence, it is important to manage defects to maintain the performance of residential buildings [2,3]. However, the construction of residential buildings entails the complex integration of diverse types of work, in which defects may result from the combined effect of unforeseen design errors, material deficiencies, construction faults, and environmental factors [4]. As substantial costs are incurred to remedy construction defects, stakeholders, including project owners, contractors, and residents, frequently face financial losses and experience considerable psychological distress [5].

Although various measures have been explored to detect and eliminate potential defects during the design and construction phases, a considerable number of defects continue to emerge even after the facilities are handed over to end users [6]. In particular, various defects arising during the maintenance phase have increasingly led to customer dissatisfaction; however, contractors have remained relatively passive in responding to defects [7]. Consequently, conflicts and disputes often arise between residents and contractors because of differences in interpretation and perception regarding defects that occur after handing over a housing project [8].

As these issues accumulate during the maintenance phase, post-handover residential defects have become not only technical and financial concerns but also a significant source of legal and social conflict [7,8,9]. The boundaries of responsibility among stakeholders, such as owners, contractors, designers, and residents, are often ambiguous, leading to disputes over liability, compensation, and repair obligations [8,9]. These disputes extend beyond the immediate costs of defect remediation and increasingly evolve into complex legal battles that reflect broader tensions between technical accountability, contractual interpretation, and public expectations of housing quality [9].

To minimize post-handover building defect litigation, it is necessary to explore approaches that can rationally resolve conflicts between customers and contractors before litigation occurs. One of the most representative approaches is alternative dispute resolution (ADR). Common ADR methods include negotiations, dispute review boards, mediation, and arbitration [10]. Typical ADR implementation costs include fees and expenses paid to the owner’s/contractor’s employees, lawyers, claims consultants, third-party neutrals, and other experts associated with the resolution process [11,12]. Although ADR is recognized as a more effective and less adversarial technique than litigation in dispute resolution [13], project participants face uncertainty regarding future ADR implementation costs [14].

In South Korea, because such obstacles can impede the adoption of ADR, committees dedicated to ADR have been established within public administrative organizations to resolve defect-related disputes at a low cost [15]. However, because ADR is merely a negotiation-based settlement process and is not legally binding, customers and contractors often prefer to pursue litigation when they believe that their positions are not sufficiently reflected, as they perceive that litigation could lead to more favorable outcomes [16]. In litigation related to defect disputes, however, courts do not simply adopt the defect repair costs estimated by experts. Instead, they consider various factors, such as the causes of the defects, the allocation of liability, and a reduction in excessive claims, when rendering judgments. Consequently, the awarded defect repair costs are often lower than the amounts previously determined during pre-litigation settlements [17,18].

From this perspective, if the outcomes of defect-related litigation could be predicted and such predictive information were provided during the pre-litigation dispute resolution stage, it could support decision-making in ADR procedures and ultimately help to minimize litigation arising from defect disputes [19]. However, to effectively utilize these predicted outcomes for dispute mediation among stakeholders during the ADR stage, a sufficient level of objectivity must be ensured. Despite this need, data-driven approaches for understanding and predicting judicial reasoning in post-handover building defect disputes, characterized by intricate legal and technical complexities, are largely absent, highlighting the need to explore viable solutions to address this issue.

Recent advances in artificial intelligence (AI) and natural language processing (NLP) provide a robust foundation for achieving this goal [20]. Court judgments and precedent documents are primarily expressed in an unstructured textual form, containing complex legal reasoning, contextual relationships, and linguistic nuances that are difficult to interpret using traditional statistical or rule-based models [21]. NLP techniques are particularly suitable for this problem because they can process large volumes of unstructured text, extract semantic features, and learn latent patterns that reflect judicial decision-making logic [22]. In contrast to traditional methods that depend on manually crafted features or predefined quantitative variables, NLP-based deep learning models autonomously learn the contextual semantics and argumentative structures embedded in legal texts, thereby facilitating more accurate prediction of litigation outcomes [23]. Therefore, NLP constitutes a robust data-driven methodology for comprehensively understanding and modeling the semantic and contextual dependencies embedded within court precedents [20,22].

In the general legal domain, previous studies have successfully applied NLP to analyze large legal documents, demonstrating its capability in discovering hidden linguistic and causal patterns [24,25]. However, previous studies on residential building defects have focused mainly on analyzing the causes of defects [7,26,27] or on exploring ways to promote ADR from an institutional perspective [10,27,28], and data-driven prediction of litigation outcomes in this domain remains largely unexplored. To address this gap, this study aims to develop an NLP-based model to predict litigation outcomes in post-handover defect disputes regarding residential buildings and to propose a decision-support framework for pre-litigation dispute resolution.

Through this study, the predictive use of NLP for analyzing precedents concerning building defect disputes can serve as an effective mechanism for minimizing unnecessary litigation by delivering objective data-driven insights prior to the escalation of disputes to court. By focusing on post-handover residential building defect disputes that arise during the maintenance phase, this study extends the scope of legal analytics in the construction field to a domain that has received limited research attention. In addition, it develops an NLP-based model capable of learning the semantic and argumentative structures embedded in court precedents, thereby enabling a data-driven understanding of judicial reasoning in defect litigation. Furthermore, by integrating predictive insights into pre-litigation ADR procedures, the study proposes a practical decision-support framework that enhances rational and evidence-based dispute resolution, reduces stakeholder uncertainty, and ultimately contributes to lowering the frequency of litigation related to residential building defects.

2. Literature Review

Defects in residential buildings not only directly affect the quality of life of residents but can also lead to disputes between contractors and customers. Specifically, various defects occurring during the post-handover stage can result in the wastage of additional resources and economic losses, causing material and psychological damage to residents and financial losses and reputational damage to construction firms. However, as defects can occur in diverse forms across most building components, their analysis is highly complex. Existing defect-related studies have primarily focused on establishing rational defect classification systems and analyzing the causes, characteristics, and patterns of defect occurrence in detail. One study developed a defect classification system for the Spanish housing sector that covers the construction stage through the operational stage [29]. Some studies have proposed hierarchical defect classification systems to systematically organize various types of defects.

Other studies have combined these defect categories with factors such as building elements, locations, subcontract types, and building types, to closely examine the patterns of defect occurrence [7,26,27,30,31]. These studies identified design errors, human errors, financial constraints, tight schedules, and material-related issues as the primary causes of defects. However, defects are driven by complex and nonlinear causal relationships between various contributing factors. Such complex defect occurrence mechanisms that are influenced by diverse causes can vary dynamically over time or across different project types, posing a major challenge in effectively managing defect risks. Thus, any attempt to fully prevent defects in multifamily housing is inherently limited, and defects are, to some extent, inevitable [6,7].

Therefore, identifying effective response measures when defects occur is critical. Residents often lack the technical expertise to recognize and effectively address defects when they appear, making it difficult for them to manage such issues on their own. It is therefore common practice to hold contractors responsible for defects during the defect liability period [32]. From this perspective, some studies have explored ways to ensure the rational operation of a defect liability system [33,34]. Davey et al. [33] identified key issues arising during the defect liability period and proposed various management measures to address them, such as inspections, contractual arrangements, and reporting. Hughes et al. [34] conducted a study on determining the appropriate level of financial reserves required to cover the actual costs of remedying defects. However, because various defects that arise during the maintenance phase are highly likely to cause disagreements between occupants and contractors [8], defect-related disputes continue to occur even when a defect liability system is in place.

To address these persistent disputes, various studies have demonstrated that ADR can serve as a more rational means of resolution than litigation. El-Sayegh et al. [35] highlighted that ADR offers several advantages over litigation, including reduced time and costs as well as mitigation of decision-making delays. Kalogeraki and Antoniou [36] noted that ADR is practically useful as a dispute resolution mechanism in the construction sector. However, Gamage and Kumar [37] highlighted that although ADR methods are generally more effective than litigation in resolving defect-related disputes, their nonbinding and noncompulsory nature can lead parties to resort to litigation when ADR fails to deliver satisfactory outcomes. Cheung et al. [38] systematically analyzed the core characteristics of ADR processes applied to defect disputes using the analytic hierarchy process and identified that factors such as enforceability and the possibility of reaching consensus are key determinants of the effectiveness of ADR.

Recent studies have attempted to enhance the enforceability and consensual nature of ADR by addressing commonly cited limitations using data-driven models. Mahfouz et al. [39] applied text mining to litigation cases involving differing site conditions and extracted recurring legal factors, suggesting the potential to identify issues in advance of ADR negotiations. Seo et al. [19] developed an NLP-based model to summarize general construction litigation precedents and predict their outcomes, providing prediction results that encouraged disputing parties to choose ADR voluntarily. Jallan et al. [9] analyzed construction litigation data to automatically identify recurring defect types and dispute structures, thereby providing information to facilitate the formulation of reasonable settlement proposals during ADR processes. These studies indicate that providing predicted litigation outcomes as key information during ADR can support the decision-making of disputing parties and enhance the voluntariness and persuasiveness of ADR by clarifying the risks and costs of pursuing litigation. Therefore, linking predicted litigation outcomes with ADR represents an important strategy for enhancing the rationality of ADR and improving the effectiveness of resolving defect-related disputes.

Hence, this study aimed to develop an NLP-based defect litigation prediction model to support decision-making within ADR processes, focusing on defect-related disputes that arise between customers and contractors during the maintenance phase of residential buildings.

3. Research Methodology

This study aimed to predict court judgment outcomes of potential litigations based on claims and evidence regarding construction defects raised by clients, building managers (plaintiffs), and contractors (defendants) during the pre-litigation dispute resolution stage related to defect liability warranties. The ultimate goal is to facilitate early settlement before the cases proceed to court. This section describes the development of a legal judgment prediction model by training NLP-based deep learning models on court judgment documents, and how this model can be practically applied.

Defect liability warranty cases typically involve disputes over the scope of warranty payments and are directly affected by the grant ratio, which depends on the court judgment outcomes. As these two types of information are complementary for learning, this study aimed to build a model that jointly predicts both the judgment outcome (classification) and the grant ratio (regression). Allowing a single model to observe both types of information enables the classification task to leverage the fine-grained variation provided by regression, which smooths the decision boundary, whereas regression benefits from the categorical guidance provided by classification, which stabilizes the scale interpretation. This multitask setup is particularly beneficial under label imbalance or limited data conditions, as the two tasks share underlying representations and provide a mutual regularization effect [40].
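As a sketch of how such a joint objective is commonly written (not necessarily the exact loss used in this study), the two task losses can be combined as a weighted sum. The weights $\lambda_{\mathrm{cls}}$ and $\lambda_{\mathrm{reg}}$ and the symbol names below are assumptions introduced for illustration:

$$
\mathcal{L}_{\mathrm{total}} = \lambda_{\mathrm{cls}}\,\mathcal{L}_{\mathrm{cls}} + \lambda_{\mathrm{reg}}\,\mathcal{L}_{\mathrm{reg}}
$$

Here $\mathcal{L}_{\mathrm{cls}}$ is the classification loss over the three judgment outcomes and $\mathcal{L}_{\mathrm{reg}}$ is the regression loss on the grant ratio. Because both terms are computed from the same shared representation, the gradients of each task also update the parameters used by the other, which is the source of the mutual regularization effect described above.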

The court judgment documents used for training contained both the plaintiffs’ and defendants’ claims, their evidence, and claimed amounts, which were available prior to the trial, as well as the court’s findings and final judgments, which were revealed only during the litigation process. As the purpose of this study is to support decision-making in the pre-litigation dispute resolution stage, it is essential that the input features of the prediction model be restricted to information that can be identified beforehand.

Therefore, the model was designed to learn exclusively from pre-trial information derived from past similar cases and to predict the judgment outcome (dismissed, granted in full, or granted in part) and the grant ratio (proportion of claims granted) before litigation begins. Considering the above constraints, this study employed a multitask learning architecture that integrated the bidirectional encoder representations from transformers (BERT) text encoder with numerical feature embeddings to jointly process textual and quantitative information [41].

Finally, the trained case law model was applied to previously unseen cases, feeding in the four textual strands (plaintiff’s claims, plaintiff’s grounds, defendant’s claims, and defendant’s grounds) together with the claimed amount, and the predicted verdict and grant ratio were extracted. In this way, a model was built that can support decision-making by forecasting outcomes in the pre-litigation dispute resolution stage.

This section describes the preparation process of the dataset for the model input, model architecture, and performance evaluation methodology.

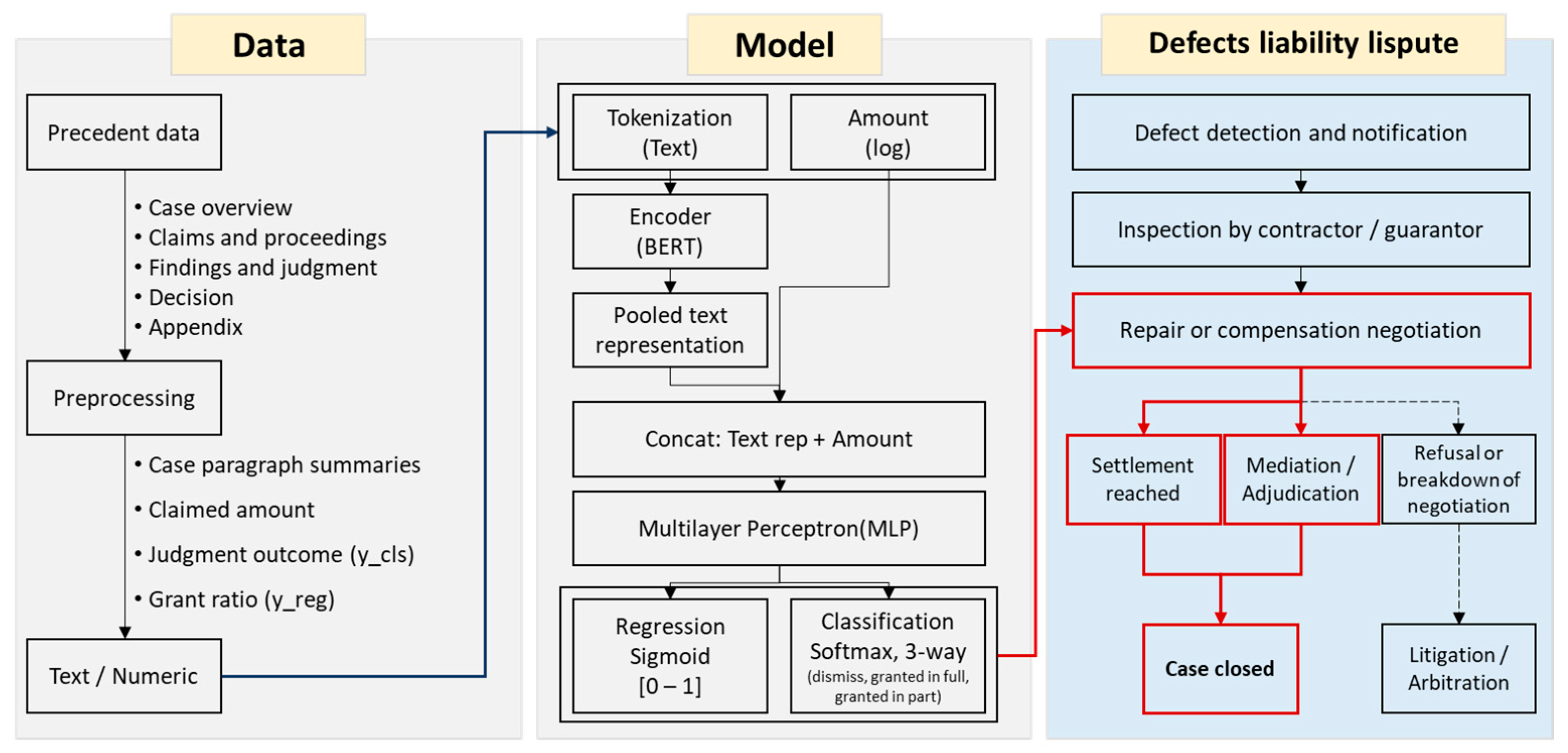

Figure 1 shows the proposed methodology for analyzing court judgments related to defect liabilities.

3.1. Data Processing

To achieve the goal of applying the model in disputes involving defect-repair guarantees, information was first extracted from case law documents that can be assessed ex ante. Case law documents contain contract-related information grounded in the plaintiff’s and defendant’s claims and grounds (e.g., defect liability warranty, construction contract, design documents, and specifications); the occurrence and condition of defects (expert appraiser’s inspection reports and technical appraisal findings); and guarantee-relationship information (guarantee-certificate issuer, guaranteed party, and beneficiary). They also include, as generated through litigation, information on the legal nature of the defect liability warranty, the scope of defects and attribution of responsibility, the legality of guarantee claims, and the legal criteria for quantifying damages. To design the dataset for this study, the plaintiff’s claims/grounds and the defendant’s claims/grounds were extracted and the claimed amount for the regression target was obtained. Considering usability at the dispute stage, the data were structured in a summary form that composed short sentences based on keywords.

The collected case law data included sections such as the plaintiff’s claims and the defendant’s claims. From these sections, sentences pertaining to the parties’ claims and grounds were extracted and the intended meaning of each sentence was organized in the form of summary keywords. The extracted textual data allowed the model to learn the reasoning structure of court judgments and the legal grounds supporting each decision. In addition, two amounts were extracted: the claimed amount, which reflects the scale of the case, and the awarded amount, which is determined by the judgment outcome. These numerical features contribute to the estimation of the grant ratio (proportion of claims granted) by providing a quantitative context for the overall magnitude of each case.

3.2. Data Preprocessing

The extracted textual and numerical data were converted into machine-readable representations and carefully preprocessed to reduce noise, such as sequence length variations, scale discrepancies, and missing values, thereby preventing overfitting during model training [42]. Accordingly, the textual components of each case, the overall case narrative and key legal issues, were processed separately using the BERT tokenizer, which tokenized each segment into subword units through the WordPiece algorithm rather than treating whole words as tokens. This approach allowed rare or unseen words to be decomposed into multiple subwords, thereby mitigating the out-of-vocabulary problem and improving the generalization performance of the model even with small domain-specific corpora [43].
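The subword decomposition described above can be illustrated with a minimal sketch of the greedy longest-match-first procedure that WordPiece uses at tokenization time. The function name `wordpiece_tokenize` and the toy English vocabulary are hypothetical and chosen only for readability; the study itself uses the vocabularies shipped with Korean BERT variants.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]"):
    """Split one word into subwords by greedy longest-match-first lookup."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # continuation pieces carry a "##" prefix
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return [unk_token]  # no piece matches, so the whole word is unknown
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary, for illustration only.
toy_vocab = {"water", "##proof", "##ing", "crack", "##s", "leak", "##age"}

print(wordpiece_tokenize("waterproofing", toy_vocab))
print(wordpiece_tokenize("leakage", toy_vocab))
```

A word that is absent from the vocabulary as a whole, such as "waterproofing" here, is still represented by known pieces instead of collapsing to an unknown token.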

For numerical features, a log1p transform was applied to the scalar claimed amount to mitigate scale differences and stabilize training. Standardization was then fit on the training split only, and the same transformation was applied to the validation and test splits.
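A minimal sketch of this two-step scaling is given below, using only the Python standard library. The function names and the example amounts are hypothetical, and the use of the population standard deviation is an assumption; the key point is that the mean and standard deviation are estimated from the training amounts alone and then reused unchanged for the other splits.

```python
import math
import statistics


def fit_amount_scaler(train_amounts):
    """Estimate mean and std of log1p(amount) from the training split only."""
    logged = [math.log1p(a) for a in train_amounts]
    mean = statistics.fmean(logged)
    std = statistics.pstdev(logged) or 1.0  # guard against a zero std
    return mean, std


def transform_amounts(amounts, mean, std):
    """Apply log1p, then standardize with statistics fitted on the training split."""
    return [(math.log1p(a) - mean) / std for a in amounts]


# Hypothetical claimed amounts (currency units), for illustration only.
train_amounts = [1_000_000, 5_000_000, 20_000_000, 300_000_000]
val_amounts = [2_500_000, 80_000_000]

mean, std = fit_amount_scaler(train_amounts)
train_scaled = transform_amounts(train_amounts, mean, std)
val_scaled = transform_amounts(val_amounts, mean, std)  # no refitting on validation data
```

Fitting the scaler on the training split only keeps information about the validation and test amounts from leaking into training.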

The refined textual embeddings and normalized amount vectors were then integrated through multimodal fusion, enabling the model to perform multitask learning by jointly predicting both the judgment outcome (classification: dismissed, granted in full, or granted in part) and the grant ratio (regression: 0–1) simultaneously.

3.3. Model Architecture

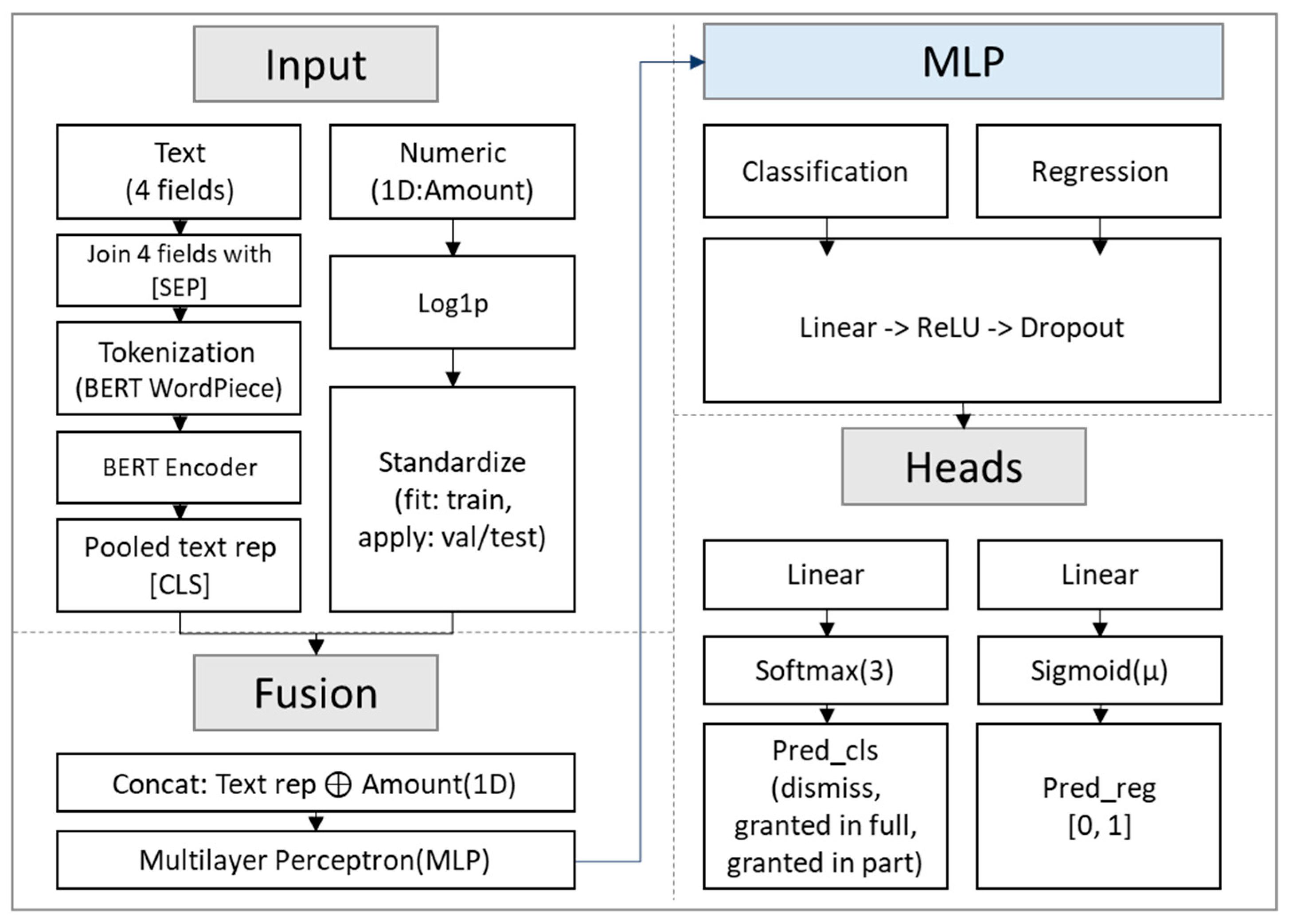

Case law for defect-repair-guarantee disputes comprises four textual strands, plaintiff’s claims, plaintiff’s grounds, defendant’s claims, and defendant’s grounds, together with a numerical scale feature for the claimed amount. Accordingly, a multimodal multitask model was adopted that learns from text and numbers jointly. In preprocessing, the four texts were concatenated into a single sequence and converted with the BERT tokenizer.

The multitask architecture formed a shared representation by combining the document embedding produced by the encoder with the numerical features and fed this fusion into a fully connected multilayer perceptron (MLP) that served as the common projection for both tasks. The model then jointly trained two heads: verdict classification and grant-ratio regression. The final model read each case through four strands of text (plaintiff’s claims, plaintiff’s grounds, defendant’s claims, and defendant’s grounds) and combined them into a single narrative. After BERT captured the core context of the document, the claimed amount, which reflects the scale of the case, was appended so that the textual logic and monetary information were ingested together. Based on the MLP-projected fusion, the model learned the two decisions simultaneously. In this process, the MLP performed nonlinear transformations that condensed and highlighted salient signals, reorganizing textual context and monetary cues into a more cohesive representation that reliably supports the two downstream decisions [44,45,46,47].

Figure 2 shows the overall model architecture.
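The data flow through the fusion layer and the two heads can be sketched in a few lines of plain Python. This is an illustrative toy with random weights, not the trained model: the layer sizes, the ReLU activation, the sigmoid on the regression head, and all names are assumptions, and the document embedding is a random stand-in for the encoder output.

```python
import math
import random


def linear(x, weights, bias):
    """Dense layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(x):
    m = max(x)
    exps = [math.exp(v - m) for v in x]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def init_layer(n_in, n_out, rng):
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def forward(doc_embedding, scaled_amount, params):
    fused = list(doc_embedding) + [scaled_amount]   # fusion by concatenation
    shared = relu(linear(fused, *params["mlp"]))     # shared projection for both tasks
    class_probs = softmax(linear(shared, *params["cls_head"]))     # three outcomes
    grant_ratio = sigmoid(linear(shared, *params["reg_head"])[0])  # squashed into (0, 1)
    return class_probs, grant_ratio


rng = random.Random(0)
EMBED_DIM, HIDDEN_DIM = 8, 4  # toy sizes; real encoder embeddings are much larger
params = {
    "mlp": init_layer(EMBED_DIM + 1, HIDDEN_DIM, rng),
    "cls_head": init_layer(HIDDEN_DIM, 3, rng),
    "reg_head": init_layer(HIDDEN_DIM, 1, rng),
}
doc_embedding = [rng.gauss(0, 1) for _ in range(EMBED_DIM)]  # stand-in for the encoder output
class_probs, grant_ratio = forward(doc_embedding, 0.3, params)
```

Both heads read the same `shared` vector, so a training signal from either task reshapes the representation used by the other.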

3.4. Model Performance Verification

As the proposed model jointly predicted both classification and regression outputs, its performance was evaluated using task-specific metrics for each prediction type. For the classification task, the accuracy and F1-score were used, whereas for the regression task, the mean absolute error (MAE) and root-mean-squared error (RMSE) were used as the evaluation metrics [48,49].

The accuracy represents the proportion of correct predictions among all samples. For each case, if the predicted class (dismissed, granted in full, or granted in part) matched the true label, it was counted as one; otherwise, it was counted as zero. The accuracy score was then computed as the average of the binary results across all samples, as follows:

$$
\mathrm{Accuracy} = \frac{1}{n}\sum_{i=1}^{n} \mathbb{1}\left(y_i = \hat{y}_i\right)
$$

where $y_i$ denotes the ground truth label, $\hat{y}_i$ denotes the predicted label, $n$ denotes the number of samples, and $\mathbb{1}(\cdot)$ equals one when its argument is true and zero otherwise.

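The F1-score was computed as the harmonic mean of the precision and recall for each class and then averaged across all classes (macro-averaged). This metric is particularly useful for evaluating whether a model performs consistently across all classes on imbalanced datasets.

$$
\mathrm{Precision}_k = \frac{TP_k}{TP_k + FP_k}, \qquad \mathrm{Recall}_k = \frac{TP_k}{TP_k + FN_k}
$$

$$
F1_k = \frac{2 \cdot \mathrm{Precision}_k \cdot \mathrm{Recall}_k}{\mathrm{Precision}_k + \mathrm{Recall}_k}, \qquad \text{Macro-}F1 = \frac{1}{K}\sum_{k=1}^{K} F1_k
$$

where $TP_k$, $FP_k$, and $FN_k$ denote the true positives, false positives, and false negatives for class $k$, which represent the numbers of correctly predicted positives, incorrectly predicted positives, and missed positive samples, respectively, and $K$ denotes the number of classes. The F1-score for class $k$ is defined as the harmonic mean of its precision and recall, and the macro-averaged F1-score helps to evaluate all the classes with equal weights.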
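The accuracy and macro-averaged F1-score described above can be computed directly from label lists, as in the following sketch. The function names, the class label strings, and the toy predictions are hypothetical, and returning 0.0 when a denominator is zero is an assumed convention.

```python
def accuracy(y_true, y_pred):
    """Share of samples whose predicted label equals the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred, classes):
    """Unweighted mean of the per-class F1-scores."""
    f1_scores = []
    for k in classes:
        tp = sum(t == k and p == k for t, p in zip(y_true, y_pred))
        fp = sum(t != k and p == k for t, p in zip(y_true, y_pred))
        fn = sum(t == k and p != k for t, p in zip(y_true, y_pred))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1_scores.append(f1)
    return sum(f1_scores) / len(f1_scores)


classes = ["dismissed", "granted_in_part", "granted_in_full"]
# Toy labels, for illustration only: the single dismissed case is missed.
y_true = ["granted_in_part", "granted_in_part", "granted_in_part", "dismissed", "granted_in_full"]
y_pred = ["granted_in_part", "granted_in_part", "granted_in_part", "granted_in_part", "granted_in_full"]

print(accuracy(y_true, y_pred))
print(macro_f1(y_true, y_pred, classes))
```

In this toy case the accuracy is 0.8, while the macro-averaged F1-score is noticeably lower because the missed minority class contributes an F1 of zero with the same weight as the dominant class.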

The MAE measures how far the predictions deviate from the true values by computing the average of the absolute errors. In this study, where the grant ratio was normalized to a range of 0–1, the MAE can be read directly as the average error in the predicted proportion (e.g., an MAE of 0.10 corresponds to 10 percentage points). Due to its simple definition and scale dependence, the MAE is widely used as a baseline metric for regression tasks. The RMSE was calculated as the square root of the mean of the squared errors. When the RMSE is noticeably higher than the MAE, it suggests that some samples incur relatively large errors. For both the MAE and RMSE, lower values (closer to 0) indicate a better performance.

$$
\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n} \left| y_i - \hat{y}_i \right|, \qquad \mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} \left( y_i - \hat{y}_i \right)^2}
$$

where $n$ denotes the number of samples, $y_i$ denotes the ground truth value, $\hat{y}_i$ denotes the predicted value, and $\bar{y}$ represents the mean of the ground truth values.
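The two regression metrics can be sketched as follows. The function names and the toy grant ratios are hypothetical; the example is chosen so that one large error pulls the RMSE well above the MAE.

```python
import math


def mae(y_true, y_pred):
    """Mean of the absolute errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Square root of the mean of the squared errors."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


# Toy grant ratios on the 0-1 scale, for illustration only.
y_true = [0.0, 0.5, 1.0, 0.8]
y_pred = [0.1, 0.5, 0.9, 0.4]

print(mae(y_true, y_pred))
print(rmse(y_true, y_pred))
```

Here the MAE is 0.15 and the RMSE is about 0.21; the gap comes from the single prediction that is off by 0.4.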

In legal outcome prediction, accuracy gauges how well predictions match the true verdicts across all cases, while the F1-score is used to examine whether the model tends to miss correct instances or produce incorrect positives. Together, these two metrics assess both the overall performance and the behavior of the model with respect to misclassification.

For grant-ratio prediction, the MAE indicates the average magnitude of error, whereas the RMSE penalizes large deviations more heavily, highlighting performance on substantially off-target predictions. Taken together, these two metrics reveal both the mean error and tail risk, enabling an assessment of the shape and severity of the error distribution.

3.5. BERT

BERT is a pre-trained language model based on the transformer encoder architecture, which learns bidirectional contextual representations. It is trained on large text corpora by predicting masked tokens from their surrounding contexts, thereby enabling it to capture general language patterns. After this pretraining stage, lightweight task-specific heads for classification or regression can be attached, and the entire model can be fine-tuned for the target task [50]. In the legal domain, domain-adapted models, such as Legal-BERT, are commonly used instead of the general BERT model. These models are further pretrained on large-scale legal corpora, including court judgments and statutory texts, enabling them to capture domain-specific terminology and reasoning patterns.

As a result, Legal-BERT variants have achieved higher performance than general BERT on tasks such as court judgment prediction and statute retrieval [51]. Several Korean-specific pre-trained language models have been developed to improve performance by leveraging tokenization and vocabulary tailored for the Korean language. Examples include KLUE-BERT, which achieves a high performance with a Korean-optimized tokenizer and vocabulary, and KR-BERT, which is a smaller Korean-specific model designed for efficient training with limited resources. In addition, models such as KoELECTRA, which provides training signals to all tokens and thus converges quickly with fewer computational resources, and KLUE-RoBERTa, which achieves high performance through stronger pretraining, have also shown competitive results in Korean NLP tasks [52,53,54]. As this study aimed to develop a prediction model for Korean court judgments, the aforementioned BERT-based models were adopted as the textual encoders.

4. Application of Trained Models to Court Judgment Cases

This section outlines the processing of real data, training of the model, and extraction of predictions based on the research methodology described in Section 3. To assess the utility of the multitask model, single-task counterparts were also trained by suppressing the supervision signal for either classification or regression, and the results were compared. In addition, given the limited sample size, a fold-based (k-fold) analysis was conducted and the standard deviation was examined across folds for each BERT variant to validate the suitability of the multitask model adopted in this study.

4.1. Judgment Case Data

This section describes the datasets used in this study. A total of 422 cases of court judgments related to defect liability warranty disputes were collected, and the dataset included the components listed in Table 1. The dataset was collected manually from BigCase, a Korean legal case search platform that provides free public access to case summaries and metadata; full-text opinions would have required user authentication or a paid license [55].

Among the components of court judgment documents, the Disposition (Decision), Claims and Appeals, and Reasoning sections contain the core content used to train the proposed model.

Both the textual and numerical information included in these sections were used as model inputs. Specifically, the classification targets (dismissed, granted in full, and granted in part) and regression targets (grant ratio) were derived from the Disposition (Decision) section, stating the final judgment and awarded amount. The Reasoning section provides claims and evidence for the plaintiff and defendant, which were used for keyword-based and paragraph-level summarization to train the textual encoder.

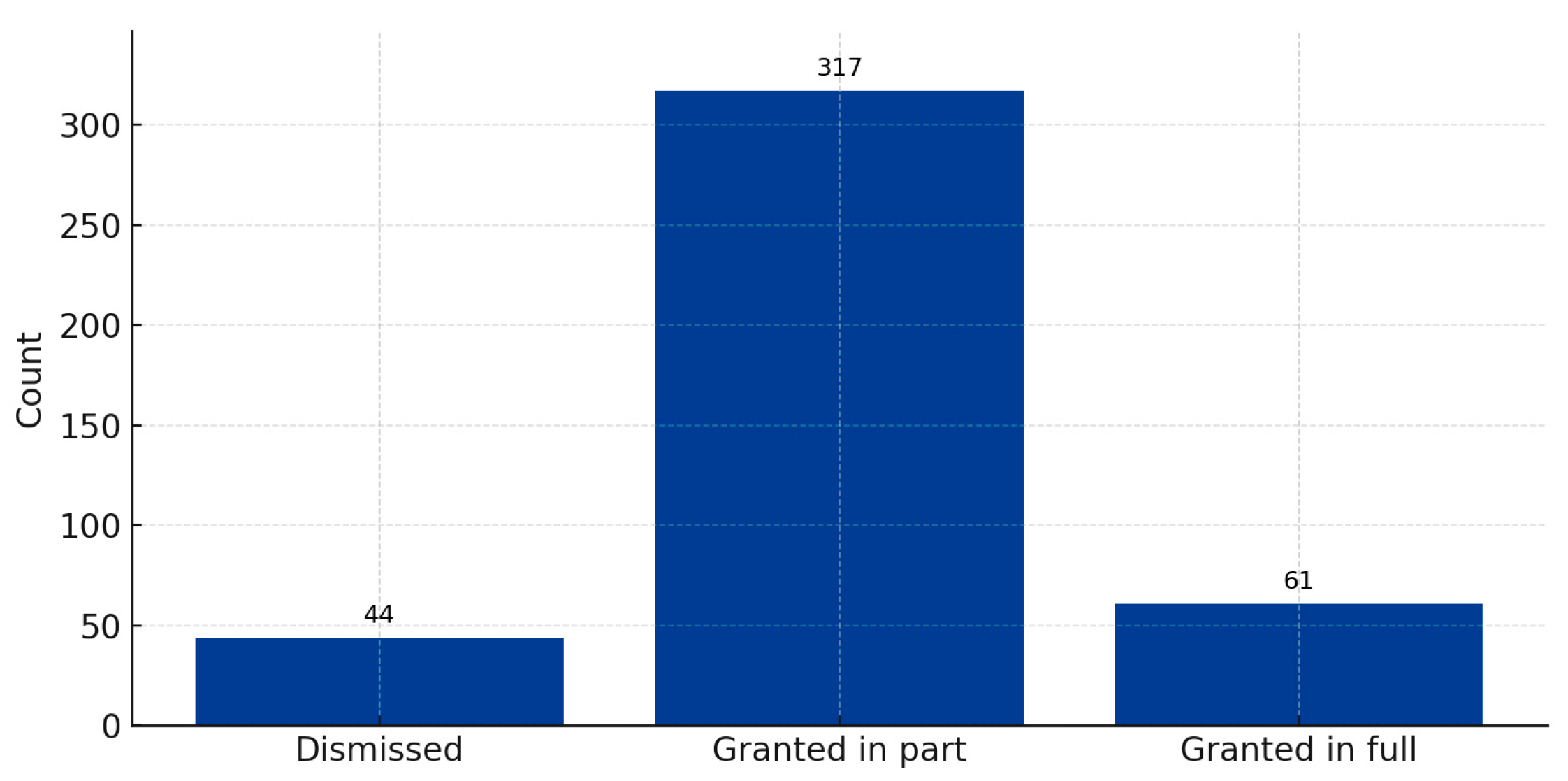

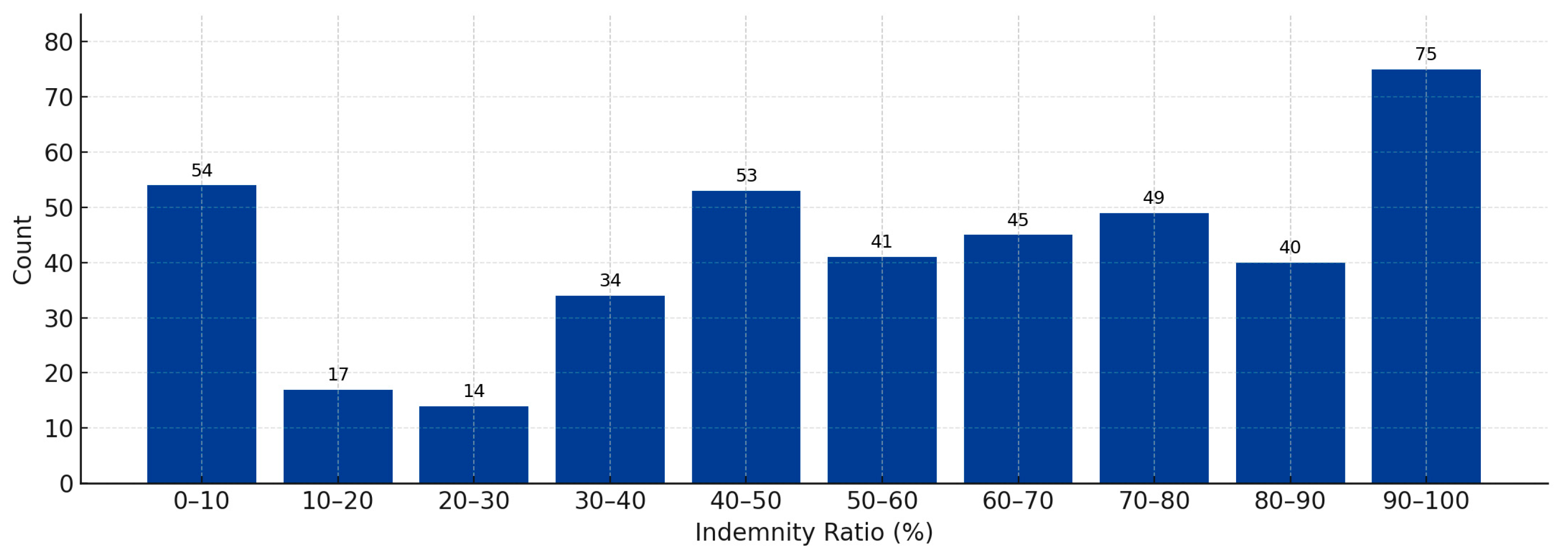

Figure 3 and Figure 4 show the distributions of the 422 collected cases for the classification and regression tasks, respectively.

The classification dataset contained 44 dismissed cases, 61 granted in full cases, and 317 granted in part cases, indicating a moderate class imbalance, with granted in part being the dominant category. For the regression task, the grant ratio values were grouped at 10% intervals. As shown in Figure 4, cases with grant ratios between 10% and 30% were relatively underrepresented, whereas cases above 90% were highly concentrated. Ranges below 10% and above 90% primarily correspond to dismissed and granted in full cases, respectively, whereas granted in part cases in the collected dataset mostly fell between 30% and 90%.

4.2. Judgment Case Data Processing

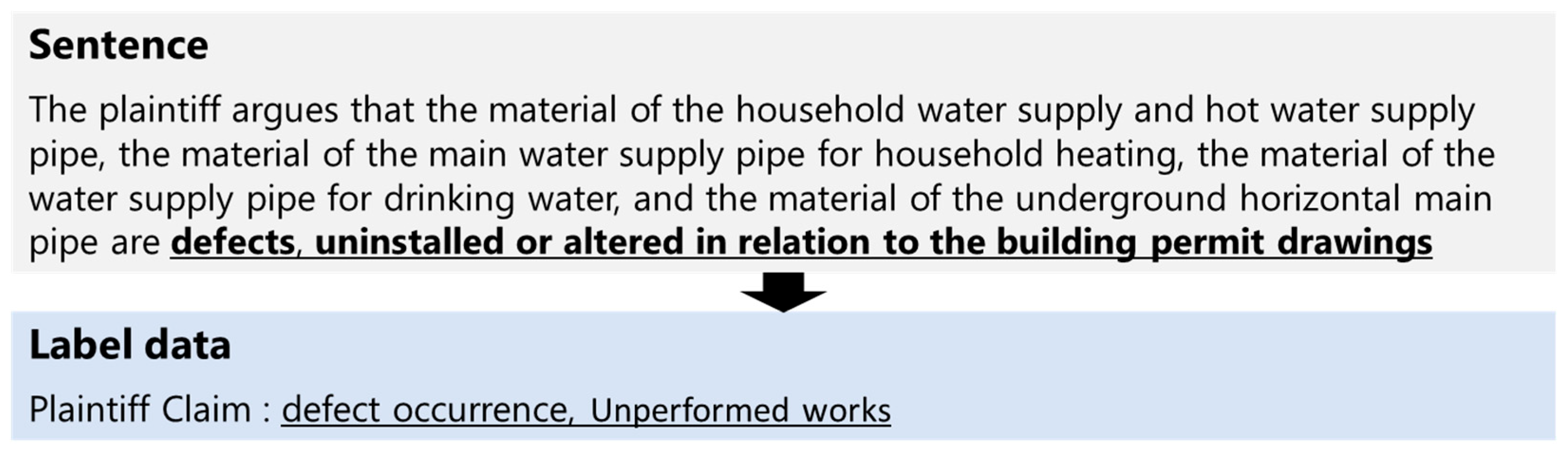

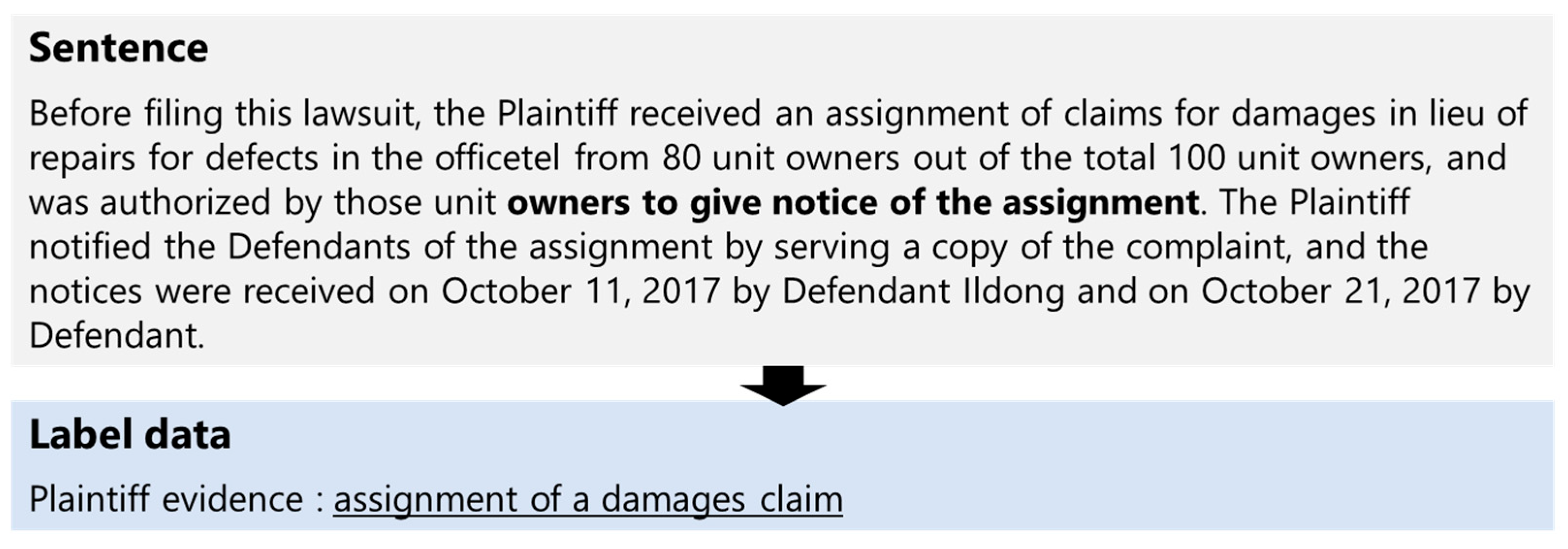

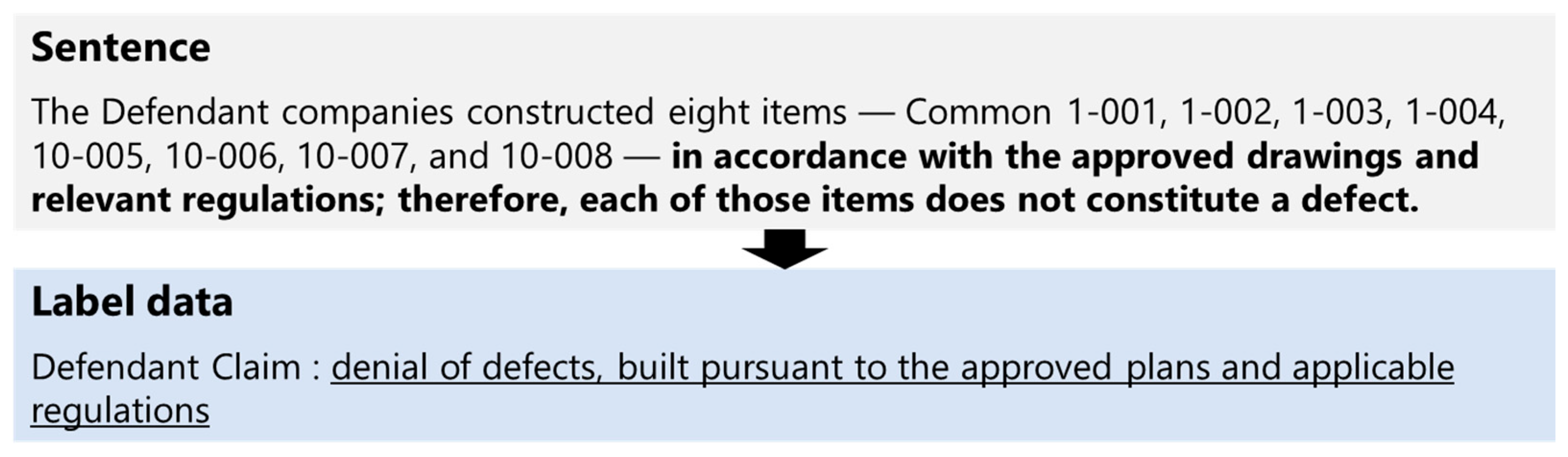

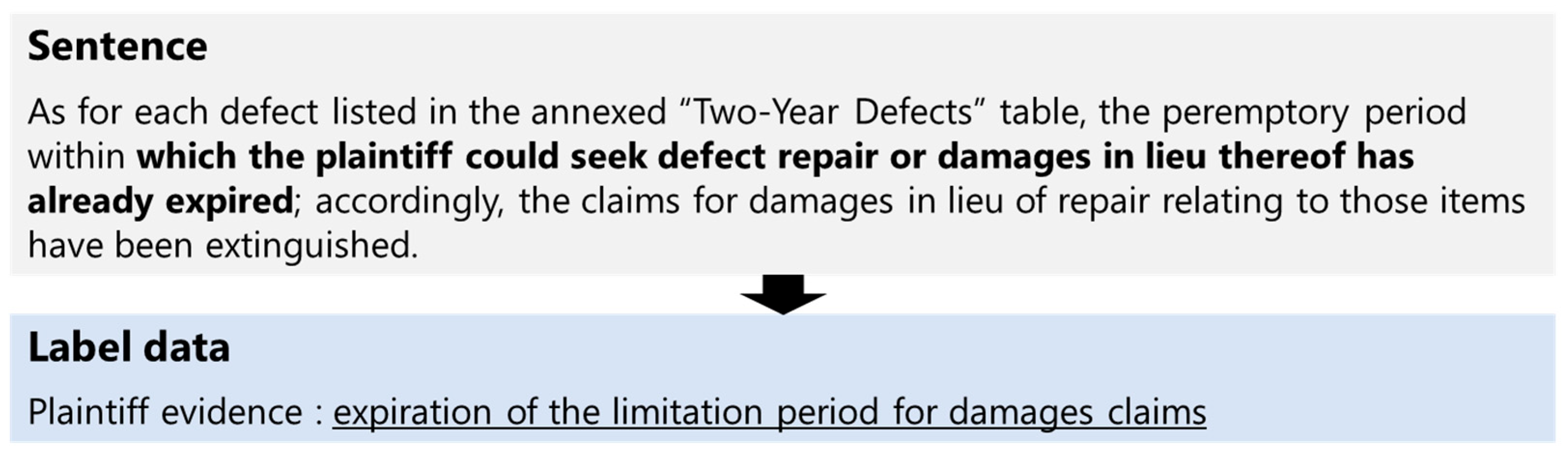

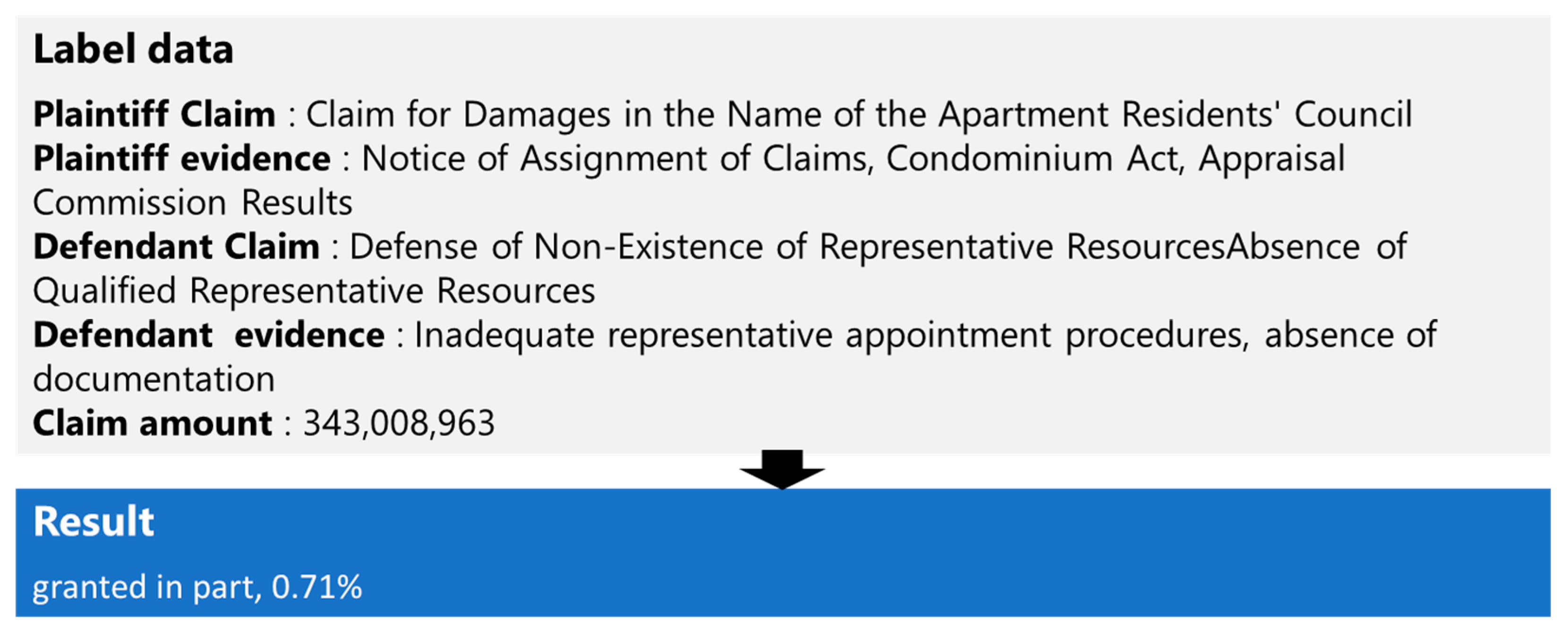

Next, to reflect the core issues of each case, the case text was reviewed and four fields were extracted as keywords: plaintiff’s claims, plaintiff’s grounds, defendant’s claims, and defendant’s grounds. For each case, up to eight keywords were extracted per category, focusing on the crux of the dispute. Keyword extraction proceeded by locating the sections of the case law document that contained the parties’ claims and grounds, extracting the relevant sentences, and converting their main points into keywords, which were then used as the plaintiff’s claims/grounds and defendant’s claims/grounds data. Illustrative examples are provided in Figure 5, Figure 6, Figure 7 and Figure 8.

Collected plaintiff claims commonly include specific defect items, such as the repair cost, leakage, and cracks; distinctions between common and exclusive areas; and liability-related keywords, such as defect warranty liability and joint and several liability. These claims focus primarily on the responsibilities and costs arising from construction defects. The plaintiff’s evidence supports these claims with factual documentation, such as appraisal (expert) reports, appraisal results, defect lists, and site images, as well as contractual materials, such as contracts for work and guarantee contracts or terms. In addition, legal provisions, such as the Civil Act and Housing Act, are used to establish the liability period and scope of defect repair guarantees, whereas procedural documents, such as content-certified mail and assignment notices, demonstrate procedural compliance.

By contrast, defendants’ claims typically focus on reducing or denying responsibility and cost, arguing for overestimation and excessive appraisal to reduce the assessed amount, or claiming that the defects are minor, already repaired, or not attributable to the contractor because they result from natural deterioration or fall under the plaintiff’s management duty. The defendant’s evidence typically includes specifications, design drawings, work schedules, repair records, and maintenance records to argue compliance with standards and shift the discussion to management responsibility. Guarantee terms, contract clauses, and defect liability warranty periods are also cited to narrow the scope of liability under the applicable provisions, whereas rebuttals or supplemental appraisals are submitted to undermine the credibility of the plaintiff’s appraisal evidence.

Furthermore, to represent the structure of claims from both parties quantitatively, the claimed and final awarded amounts were extracted from each judgment. The grant ratio was calculated as (awarded amount/claimed amount) and collected as a regression label. Finally, the judgment outcomes were categorized as dismissed, granted in full, or granted in part to form classification labels. This process yielded a dataset for model training that integrated five components: case-paragraph summaries, case-keyword summaries, claimed amounts, grant ratios, and judgment outcomes.
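The grant ratio calculation can be sketched as below. The function names and the example amounts are hypothetical. Note that in this study the outcome labels were read from the Disposition section of each judgment; the amount-based rule in `outcome_from_amounts` is only an assumed consistency check that mirrors the typical correspondence between outcomes and ratios, not the labeling procedure itself.

```python
def grant_ratio(claimed_amount, awarded_amount):
    """Proportion of the claimed amount that the court awarded."""
    if claimed_amount <= 0:
        raise ValueError("claimed amount must be positive")
    return awarded_amount / claimed_amount


def outcome_from_amounts(claimed_amount, awarded_amount):
    """Assumed sanity-check rule: 0 -> dismissed, full amount -> granted in full."""
    if awarded_amount <= 0:
        return "dismissed"
    if awarded_amount >= claimed_amount:
        return "granted_in_full"
    return "granted_in_part"


# Hypothetical amounts, for illustration only.
claimed, awarded = 200_000_000, 130_000_000
print(grant_ratio(claimed, awarded))           # 0.65
print(outcome_from_amounts(claimed, awarded))  # granted_in_part
```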

4.3. Experimental Environment

As discussed in Section 4.1, the 422 collected cases exhibited a highly imbalanced class distribution across the three judgment outcomes (dismissed, granted in full, and granted in part), which posed the risk of biased model learning. In addition, on the regression task, cases labeled as granted in full typically corresponded to a grant ratio of 100%, whereas dismissed cases corresponded to 0%, and for granted in part cases, the value fell between these extremes. These intermediate values were not evenly distributed and were often densely concentrated within specific ranges, which could lead to performance disparities across different intervals if learned as-is.

Accordingly, to promote balanced learning, a keyword-mixing strategy was applied during training: keywords were shuffled within each of the four textual fields of the existing training data, and 60% of the training draws per epoch were modified in this way. As the model learned classification and regression jointly, the relative loss weights were tuned to balance the two tasks. In addition, to correct class imbalance, class-balanced sampling was combined with a class-weighted cross-entropy (CE) loss with weights of 4:1:4. For reference, the raw corpus distribution was 10.4% (dismissed), 75.1% (granted in part), and 14.5% (granted in full), whereas the class-balanced sampler yielded per-epoch sampling proportions of 28.8%, 31.3%, and 39.9% for these classes, respectively. For the regression target, which was sparse in the lower range, learning in that region was reinforced by combining the Huber loss, for robustness to outliers, with the quantile loss, to preserve distributional characteristics. All augmentation and rebalancing techniques were used only on the training data and were not applied to the validation or test sets.
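A minimal sketch of the keyword-mixing idea is given below. It assumes that each textual field is stored as a comma-separated keyword string and that the 60% rate is applied as a per-draw probability; the dictionary keys, the separator, and the `mix_keywords` name are illustrative assumptions rather than details taken from the study.

```python
import random

# Hypothetical keys for the four textual fields.
TEXT_FIELDS = (
    "plaintiff_claims",
    "plaintiff_grounds",
    "defendant_claims",
    "defendant_grounds",
)


def mix_keywords(sample: dict, rng: random.Random,
                 p: float = 0.6, sep: str = ", ") -> dict:
    """Return a copy of `sample`; with probability `p`, shuffle the
    keyword order inside each text field (labels and amounts untouched)."""
    out = dict(sample)
    if rng.random() >= p:
        return out  # this training draw is left unmodified
    for field in TEXT_FIELDS:
        keywords = [k for k in out[field].split(sep) if k]
        rng.shuffle(keywords)  # reorder only; no keyword is added or dropped
        out[field] = sep.join(keywords)
    return out
```

Because only the order of keywords changes, the label, the claimed amount, and the set of keywords in each field are preserved. The function would be called on training draws only, in line with the statement that validation and test data were not augmented.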

In addition, given the small dataset size, k-fold cross-validation was conducted for training and evaluation. In this study, five training runs were performed; for each fold, the training/validation split was generated with a fixed random seed (2025). The final results were reported as the mean ± sample standard deviation over the five test folds, and all comparative experiments reused the same fold partitions. Additionally, the data were split into 90% training data, 10% validation data, and 10% test data for training.
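The fold handling and the reporting convention can be sketched as follows. This is an assumption-laden illustration: it uses a plain (non-stratified) seeded shuffle, does not model the separate validation split, and the function names are hypothetical.

```python
import random
import statistics


def kfold_indices(n_samples: int, k: int = 5, seed: int = 2025):
    """Yield (train_idx, test_idx) for each of k folds from one seeded shuffle.

    Reusing the same seed reproduces the same partitions, so every
    compared model is evaluated on identical folds.
    """
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test


def mean_and_sample_std(scores):
    """Mean and sample standard deviation (n - 1 denominator) over folds."""
    return statistics.mean(scores), statistics.stdev(scores)
```

Each case appears in exactly one test fold, and `statistics.stdev` uses the n - 1 denominator, which matches the "mean ± sample standard deviation" convention stated above.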

Training was conducted on an NVIDIA GeForce RTX 3090 GPU (NVIDIA Corporation, Santa Clara, CA, USA), an Intel Core i9-10900X CPU (3.70 GHz; Intel Corporation, Santa Clara, CA, USA), and 128 GB of RAM in a Windows environment. To ensure a fair comparison, all the experiments were performed under identical training conditions for each BERT variant, and the hyperparameters were fixed, as listed in Table 2, throughout the training process.

The MAX_LEN parameter defines the maximum token length of the textual inputs, encompassing both the full narrative of the case and summarized keywords. In this study, the sentence lengths for the four textual fields (plaintiff’s claims, plaintiff’s grounds, defendant’s claims, and defendant’s grounds) comfortably fell within the defined token limits, because only the core keyword sentences required for training were collected from long-form case law. Additionally, the token lengths of the newly collected data had a mean of 74.66, a median of 77, and a maximum of 158, all well within the currently specified MAX_LEN of 512. The pretrained backbone (BERT encoder) was fine-tuned with a lower learning rate to ensure stable convergence, whereas the task-specific heads were trained with a higher learning rate to accelerate convergence. In addition, a warm-up ratio (WARMUP_RATIO) was applied to mitigate abrupt parameter updates during the early training stage.
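The interaction between the two learning rates and the warm-up ratio can be illustrated with a small sketch. The linear warm-up shape, the constant rate after warm-up, and the function names are assumptions made for illustration; the actual schedule and values are those fixed in the hyperparameter table.

```python
def warmup_multiplier(step: int, total_steps: int, warmup_ratio: float) -> float:
    """Scale factor that rises linearly to 1.0 over the warm-up steps,
    then stays at 1.0 (assumed shape; no decay is modeled here)."""
    warmup_steps = int(total_steps * warmup_ratio)
    if warmup_steps > 0 and step < warmup_steps:
        return (step + 1) / warmup_steps
    return 1.0


def learning_rates(step: int, total_steps: int, warmup_ratio: float,
                   backbone_lr: float, head_lr: float) -> dict:
    """Per-group learning rates: a lower base rate for the pretrained
    encoder and a higher one for the task-specific heads."""
    m = warmup_multiplier(step, total_steps, warmup_ratio)
    return {"backbone": backbone_lr * m, "heads": head_lr * m}
```

With hypothetical values such as `learning_rates(0, 1000, 0.1, 2e-5, 1e-3)`, both groups start at a small fraction of their base rates, which is what limits abrupt parameter updates in the first steps.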

4.4. Model Evaluation

First, the model was designed as a multitask architecture. To examine the validity of this design, ablations were conducted based on loss weights: a classification-only setting was trained by setting the regression loss weight to 0 (Weighted_cls), a regression-only setting was trained by setting the classification loss weight to 0 (Weighted_reg), and both settings were evaluated. In addition, using the same backbone, single-task baselines were trained with task-specific heads only: classification-only (Single_cls) and regression-only (Single_reg). All five settings were then compared: the two weighted ablations, the two single-task baselines, and the multitask model trained jointly.
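The loss-weight ablation can be pictured as a weighted sum of the two task losses, in which zeroing one weight removes that task from training. The sketch below is a simplified, framework-free illustration: it uses plain cross-entropy and a Huber term as stand-ins, omits the class weighting and quantile loss used in the study, and all function names are hypothetical.

```python
import math


def cross_entropy(probs, target: int) -> float:
    """Negative log-likelihood of the true class under predicted probabilities."""
    return -math.log(probs[target])


def huber(pred: float, target: float, delta: float = 1.0) -> float:
    """Huber loss: quadratic for small errors, linear for large ones."""
    err = abs(pred - target)
    if err <= delta:
        return 0.5 * err ** 2
    return delta * (err - 0.5 * delta)


def joint_loss(probs, cls_target: int, ratio_pred: float, ratio_target: float,
               w_cls: float = 1.0, w_reg: float = 1.0) -> float:
    """Multitask objective: weighted sum of classification and regression losses."""
    return (w_cls * cross_entropy(probs, cls_target)
            + w_reg * huber(ratio_pred, ratio_target))
```

Setting `w_reg=0.0` leaves only the classification term, and setting `w_cls=0.0` leaves only the regression term, while both heads remain part of the same network. This is what distinguishes the weighted ablations from the single-task baselines, which are built with one head only.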

Table 3 lists the performance metrics for the single-task and multitask settings. For classification, the MultiTask model attained Accuracy = 0.7647 and F1-Macro = 0.5743, outperforming both Weighted_cls and Single_cls. Although the F1-Macro showed a relatively large standard deviation, the results remained practically meaningful when considered together with the Accuracy. For regression, all models achieved broadly similar performance; in terms of the reported metrics, Weighted_reg yielded the best scores (MAE = 0.2417, RMSE = 0.3115). Accordingly, we do not claim superiority of the multitask model on regression metrics. We select the multitask model as the final configuration because of the clear classification gains, while explicitly acknowledging the MAE/RMSE advantage of Weighted_reg.

Next, building on the multitask model, the BERT backbone was replaced with several candidates commonly used for legal text processing to identify the most suitable backbone for the proposed model. The corresponding evaluation metrics for the classification and regression tasks are listed in Table 4.

Examining the results for each BERT variant, for classification, the KR-BERT backbone performed best with Accuracy = 0.7647 and F1-Macro = 0.5742. For regression, KoELECTRA achieved the strongest scores with MAE = 0.2277 and RMSE = 0.2896. Legal-BERT recorded the lowest classification performance (Accuracy = 0.2529, F1-Macro = 0.2048), which was expected because it was optimized for English legal corpora and thus incompatible with the Korean setting of this study. KLUE-BERT and KLUE-RoBERTa exhibited similar, but comparatively lower, performance, which was likely because, although they were pretrained on large-scale Korean data, the fine-tuning schedule and setup did not translate into clear gains.

This study aimed to support decision-making prior to litigation for defect-repair-guarantee disputes. As such cases centrally involve the claimed amount, stronger regression performance is particularly advantageous for decision-support. KoELECTRA showed the lowest error on the regression task relative to the other models, and its classification performance (Accuracy = 0.7294, F1-Macro = 0.5401) was also respectable. For classification, the relatively large standard deviation primarily reflects the underrepresentation of dismissed and granted in full and fold-to-fold exposure differences in the data. On regression, the MAE gap between KoELECTRA (0.2277) and KLUE-RoBERTa (0.2388) is ≈0.0111 on the 0–1 scale, which is small; in view of the observed variability and the data distribution in this study, we interpret this as a modest but practically useful improvement for decision-support rather than a broad claim of superiority. Accordingly, within this study, KoELECTRA is selected as the backbone for the model on a fit-for-purpose basis.

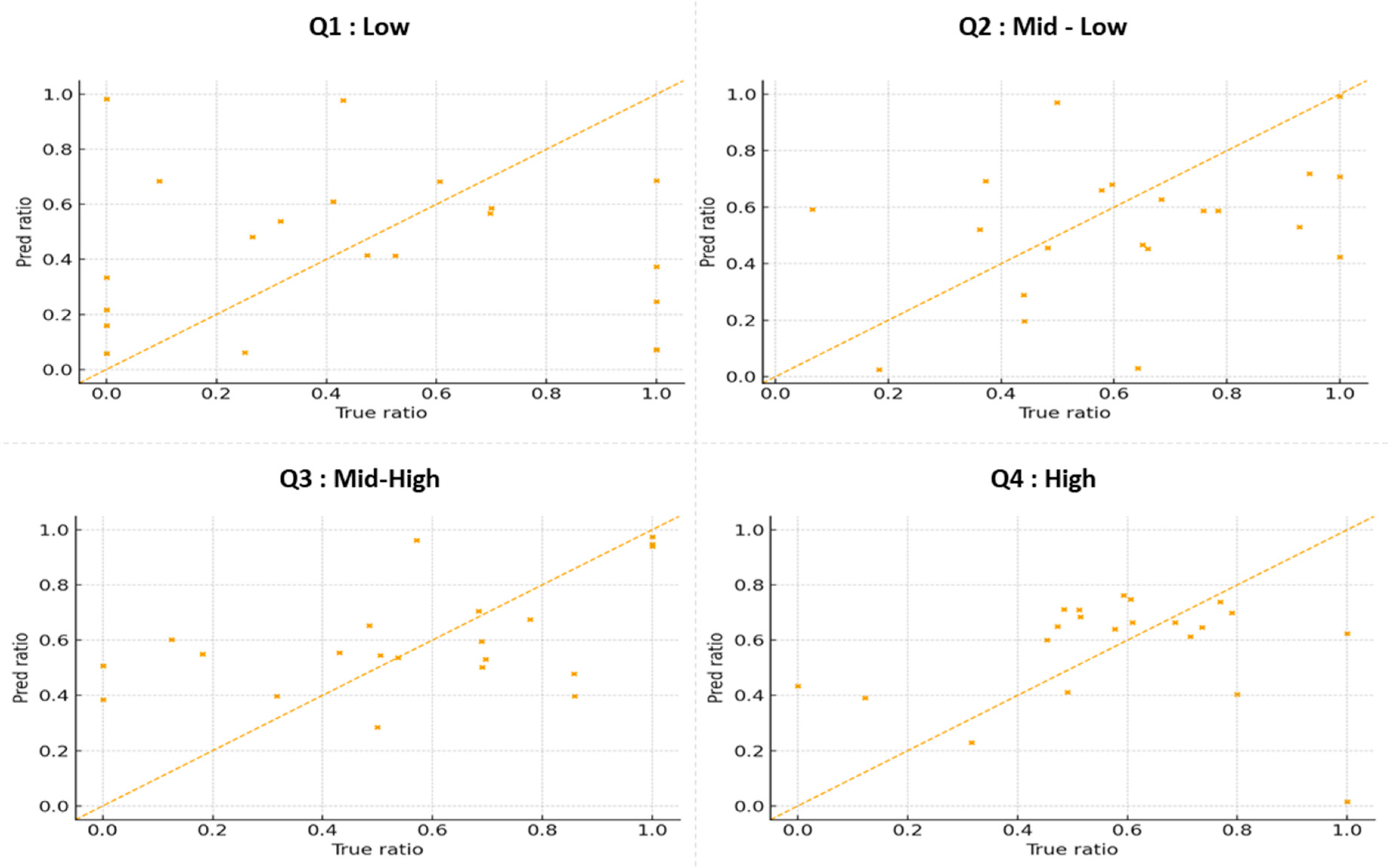

Based on the selected KoELECTRA backbone and the architecture proposed in this study, the test results are presented as a scatter plot and a confusion matrix in Figure 9. In the scatter plot, correctly predicted dismissed and granted in full cases clustered near 0 and 1, respectively, while granted in part cases generally fell within the correct region with deviations on the order of the mean error. When the model misclassified, cases predicted as dismissed concentrated near 0, those incorrectly predicted as granted in full concentrated near 1, and the remaining errors tended to gravitate toward the 0.5 range.

The confusion matrix shows a very high recall for granted in part, whereas the recall for dismissal and granted in full, which were comparatively underrepresented in the data, was lower, with many of those cases being misclassified as granted in part. However, considering the regression performance on instances where the classification was correct, the overall behavior aligned with the decision-support objective of this study.
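The relationship between the confusion matrix, per-class recall, Accuracy, and F1-Macro can be made concrete with a short sketch. The helper names are hypothetical, and any matrix passed to them here is for illustration only; no values from the reported experiments are reproduced.

```python
def per_class_metrics(cm):
    """cm[i][j] = number of cases with true class i predicted as class j.

    Returns one dict per class with precision, recall, and F1.
    """
    n = len(cm)
    metrics = []
    for c in range(n):
        tp = cm[c][c]
        actual = sum(cm[c])                         # row sum: true class c
        predicted = sum(cm[r][c] for r in range(n))  # column sum: predicted c
        recall = tp / actual if actual else 0.0
        precision = tp / predicted if predicted else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append({"precision": precision, "recall": recall, "f1": f1})
    return metrics


def accuracy(cm) -> float:
    """Share of all cases on the diagonal."""
    total = sum(sum(row) for row in cm)
    return sum(cm[i][i] for i in range(len(cm))) / total


def macro_f1(cm) -> float:
    """Unweighted mean of per-class F1, so minority classes count equally."""
    scores = [m["f1"] for m in per_class_metrics(cm)]
    return sum(scores) / len(scores)
```

Because F1-Macro averages the classes without weighting, low recall on the two underrepresented classes pulls it well below Accuracy even when the majority class is predicted almost perfectly, which is the pattern described above.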

Figure 10 shows four scatter plots created by dividing the data into four groups of comparable sample size based on claim amount. Q1 (low amount) displays points widely dispersed around the diagonal, indicating a weak signal and high variability, with MAE = 0.3694 and RMSE = 0.4783, the largest errors among the four groups. Q2 (mid-low) has points more densely clustered near the diagonal and appears most stable, with MAE = 0.2053 and RMSE = 0.2642, the lowest RMSE among the four groups. Q3 (mid-high) has more points below the diagonal, revealing a tendency toward underprediction, with MAE = 0.2455 and RMSE = 0.3011; these errors are larger than those of Q2 and suggest room for calibration. Q4 (high amount) shows greater dispersion without a strong directional bias; with MAE = 0.2051 and RMSE = 0.2926, its average error is comparable to that of Q2, though individual cases vary considerably.
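A per-group error analysis of this kind can be sketched as follows. The sketch assumes that the four groups are formed by ranking cases on claimed amount and cutting the ranking into equal-sized quarters; the exact grouping rule used for the figure and the function names are assumptions.

```python
import math


def quartile_groups(amounts):
    """Assign each case to group 1..4 by the rank of its claimed amount."""
    n = len(amounts)
    order = sorted(range(n), key=lambda i: amounts[i])
    groups = [0] * n
    for rank, i in enumerate(order):
        groups[i] = (4 * rank) // n + 1
    return groups


def mae_rmse(y_true, y_pred):
    """Mean absolute error and root-mean-square error of the grant ratio."""
    errs = [p - t for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errs) / len(errs)
    rmse = math.sqrt(sum(e * e for e in errs) / len(errs))
    return mae, rmse


def errors_by_quartile(amounts, y_true, y_pred):
    """Return {"Q1": (mae, rmse), ...} for every non-empty group."""
    groups = quartile_groups(amounts)
    result = {}
    for q in (1, 2, 3, 4):
        idx = [i for i, g in enumerate(groups) if g == q]
        if idx:
            result[f"Q{q}"] = mae_rmse([y_true[i] for i in idx],
                                       [y_pred[i] for i in idx])
    return result
```

Since RMSE penalizes large individual errors more heavily than MAE, a group with a low MAE but a noticeably higher RMSE indicates a few widely missed cases rather than uniformly moderate errors.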

In view of these observations, and given the comparatively sparse coverage at extreme outcomes and grant-ratio regions near 0 and 1, this study adopts a conservative scope for decision-support: recommendations are provided only for cases predicted as granted in part. Predictions of dismissed or granted in full are labeled UNCERTAIN and deferred to human review. This scope reflects the present dataset distribution and aims to minimize high-impact errors in deployment.
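The conservative scope can be expressed as a simple gating rule applied to the model output. The sketch below is illustrative only: the `advise` function, the `RECOMMEND` status, the returned fields, and the clamping of the ratio to the 0–1 range are assumptions, whereas the `UNCERTAIN` label and the deferral to human review follow the description above.

```python
def advise(predicted_outcome: str, predicted_ratio: float,
           claimed_amount: float) -> dict:
    """Return a recommendation only for 'granted in part' predictions;
    every other predicted outcome is flagged for human review."""
    if predicted_outcome != "granted in part":
        return {"status": "UNCERTAIN", "action": "defer to human review"}
    # Keep the predicted ratio inside the valid 0-1 range (assumed safeguard).
    ratio = min(max(predicted_ratio, 0.0), 1.0)
    return {
        "status": "RECOMMEND",
        "outcome": predicted_outcome,
        "grant_ratio": ratio,
        "expected_award": ratio * claimed_amount,
    }
```

Routing the two sparsely covered outcomes to human review trades coverage for reliability, so the tool only issues a numeric expectation where the training data are dense.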

For decision-support, the model’s inputs mirrored the training data: four textual strands (brief sentences for the plaintiff’s claims, plaintiff’s grounds, defendant’s claims, and defendant’s grounds) together with the claimed amount. Given these inputs, the model output the predicted verdict (dismissal, granted in full, or granted in part) and the grant ratio. A schematic of this setup is shown in Figure 11 below.

5. Discussion and Conclusions

This study developed an NLP-based legal judgment prediction model for defect litigation to support decision-making in the pre-litigation dispute resolution stage and thus help prevent cases from proceeding to court. The model was trained on plaintiffs’ and defendants’ claims, evidence, and claimed amounts available prior to litigation. The claims and evidence were summarized into keyword-level representations to enhance practical usability. To ensure the quality of the model, five BERT-based variants were selected as candidate backbones, and their performances were evaluated by applying them to an identical multimodal multitask architecture.

As a result, KR-BERT achieved the best performance on classification, whereas KoELECTRA performed the best on regression. Because the target setting of this study, defect-repair-guarantee disputes, places greater emphasis on predicting the extent to which the claimed amount will be granted (grant ratio) than on the win rate, KoELECTRA was selected as the backbone due to its superior regression performance.

Predictions were then presented using real case data: at the pre-litigation dispute resolution stage, users input concise statements of their claims and grounds rather than long passages, and the model returns the predicted verdict and grant ratio. This procedure supports decision-making by providing expected values should the case proceed to litigation, based on succinct claims and grounds.

The purpose of this study is to support decision-making at the pre-litigation dispute resolution stage by providing predictions of the litigation outcome and the grant ratio of the claimed amount. Using only minimal inputs, the plaintiff’s claims/grounds, the defendant’s claims/grounds, and the claimed amount, the model performed predictions [56,57,58,59].

Some previous studies have used the Facts section of case law to triage early cases (e.g., whether to accept or dismiss), and other studies improved accuracy by broadly combining statutes, case law, and knowledge. Whereas those approaches split long case law texts into sentences and feed them into training, the proposed approach summarizes the plaintiff’s and defendant’s claims and grounds into short key sentences that fit within the token limits, and, mindful of real-world usability, allows prediction from brief user-provided statements.

This design has the advantage of being lightweight and fast, though it may lose subtle context. Conversely, long-text approaches can preserve richer context and explanations; however, they increase the construction costs and complexity, require more computation, and, as in the consultation-stage scenario, impose impractical data-preparation burdens on the parties. From the perspective of the study objective, providing a tool that supports decisions with limited data at the consultation stage, the proposed approach is a pragmatic and valuable solution.

This functionality can be practically used in the dispute resolution stage before litigation, where project owners, management entities, and contractors can input their claims, evidence, and expected claim amounts to receive predicted outcomes as decision-making support. However, there are several limitations and areas for improvement:

In actual court rulings, decisions are not determined solely by the arguments and evidence submitted by the plaintiff and defendant. During litigation, the court may appoint an expert witness designated by the court itself, rather than one commissioned by the plaintiff or defendant, and such external evaluations can influence the outcome of the ruling. Therefore, using only currently available pre-litigation data inherently limits the predictive performance of the model. Future research should integrate additional variables arising during the litigation process as auxiliary learning features and collect more cases to enhance the reliability of the training dataset.

Only the principal (plaintiff’s) claim amounts were used for training to ensure balanced learning because only a small portion of the collected cases included both principal claims and counterclaims. Consequently, the current model cannot accurately predict the monetary amounts claimed by defendants as counterclaims. Future studies should incorporate cases that include both principal claims and counterclaims to improve the ability of the model to handle bidirectional monetary claims. In addition, because the training data reflect cases available up to the time of data collection, the applicability of the model may be time-bounded if relevant laws, appraisal practices, market conditions, or litigation standards subsequently change; accordingly, the reported findings should be interpreted within the period of data collection. If such changes occur, subsequent studies should refresh the dataset with post-change cases and re-evaluate the model, optionally re-applying the post-hoc calibration used in this study to align regression outputs.

The high classification performance observed in this study was largely because most of the collected cases were labeled as granted in part, which may have biased the model toward predicting the majority class. Although data augmentation was applied to increase the number of dismissed and granted in full samples within the training set, the validation and test sets were kept imbalanced to maintain the strictness of the performance evaluation. Future work should collect additional dismissed and granted in full cases to improve the reliability and generalizability of the classification performance.

This study used data collected by a single annotator, which necessarily contain a degree of subjectivity, rather than data gathered in a strictly regularized manner via automated processing rules. Consequently, if a different annotator were to construct the same training set, the result could not be reproduced exactly. To mitigate this limitation, it is necessary to adopt methods that identify the intent and semantic properties of sentences that are semantically equivalent but differently phrased, so that they can be consolidated into a uniform training dataset. In addition, we will clarify the procedure by preparing concise inclusion/exclusion and normalization guidelines and will strengthen reproducibility by adopting double labeling with an agreement (adjudication) protocol in subsequent data collection.

The NLP-based judgment prediction model proposed in this study raises important ethical considerations regarding the legitimacy of employing AI for legal interpretation. Specifically, the data used for model training were extracted from historical court rulings, which may reflect biases embedded in past judicial decisions. Therefore, the proposed model should be regarded not as a determinative basis for decision-making in pre-litigation defect dispute mediation, but rather as an auxiliary reference tool to support rational and informed decision-making. Moreover, ensuring the structural transparency of the model and the interpretability of its outputs is essential to maintain both user trust and accountability in the decision-making process. From this perspective, further research is required to develop ADR governance and processes that appropriately utilize the proposed model. Accordingly, in this study the model is interpreted strictly as an ex-ante advisory tool for pre-litigation ADR and is not intended to substitute for or reflect court-appointed appraisals or evidence generated during litigation. Future work will incorporate post-filing variables (e.g., court-appointed appraisal outcomes) as auxiliary features and examine how pre-appraisal predictions shift once such information becomes available.

Addressing the aforementioned limitations is expected to enhance the utility of the model for supporting decision-making in the pre-litigation dispute resolution stage and may also enable the model to be extended and referenced for practical use in actual court judgment prediction tasks.