2.3.1. Design of Improved Genetic Algorithm

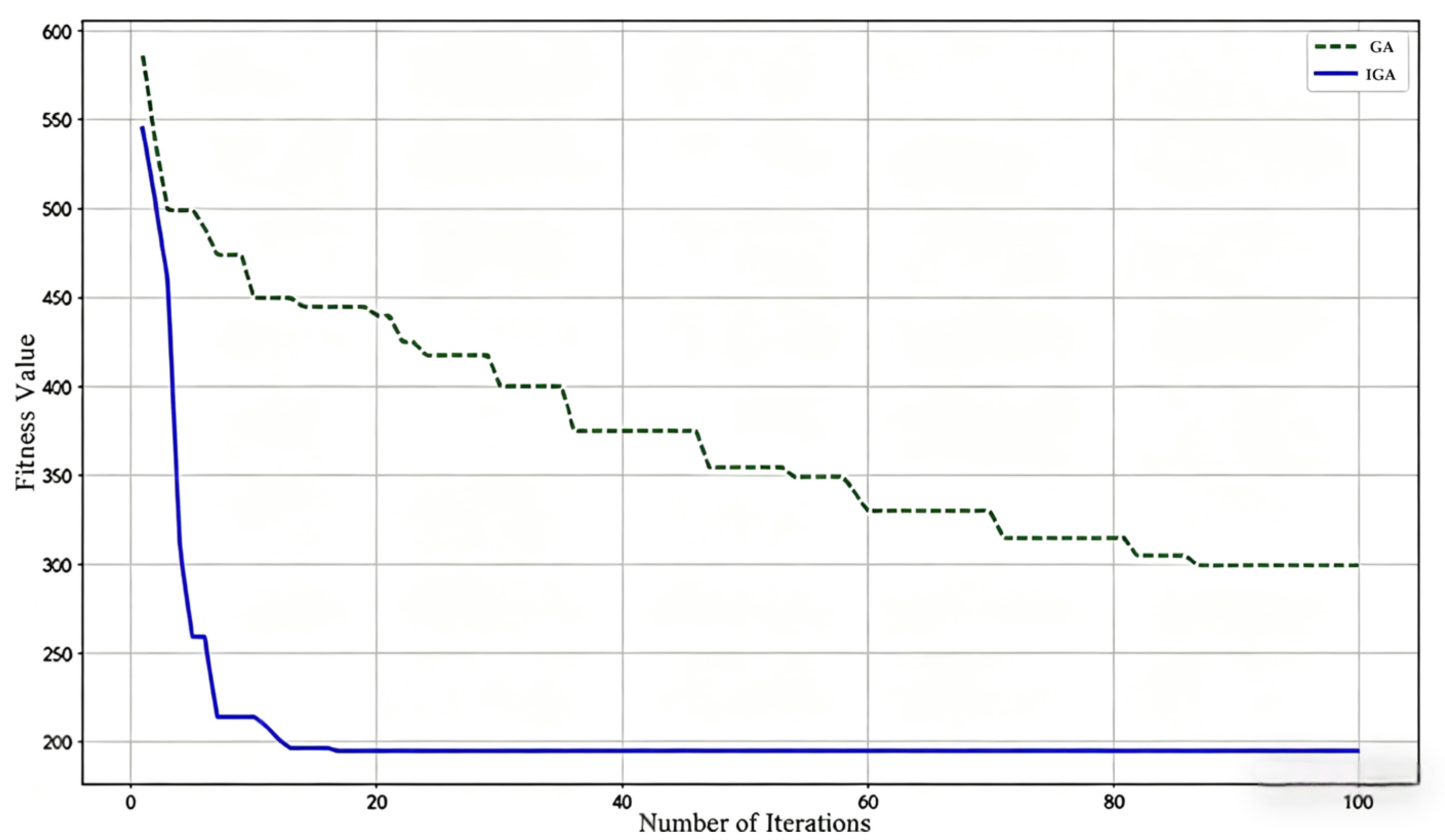

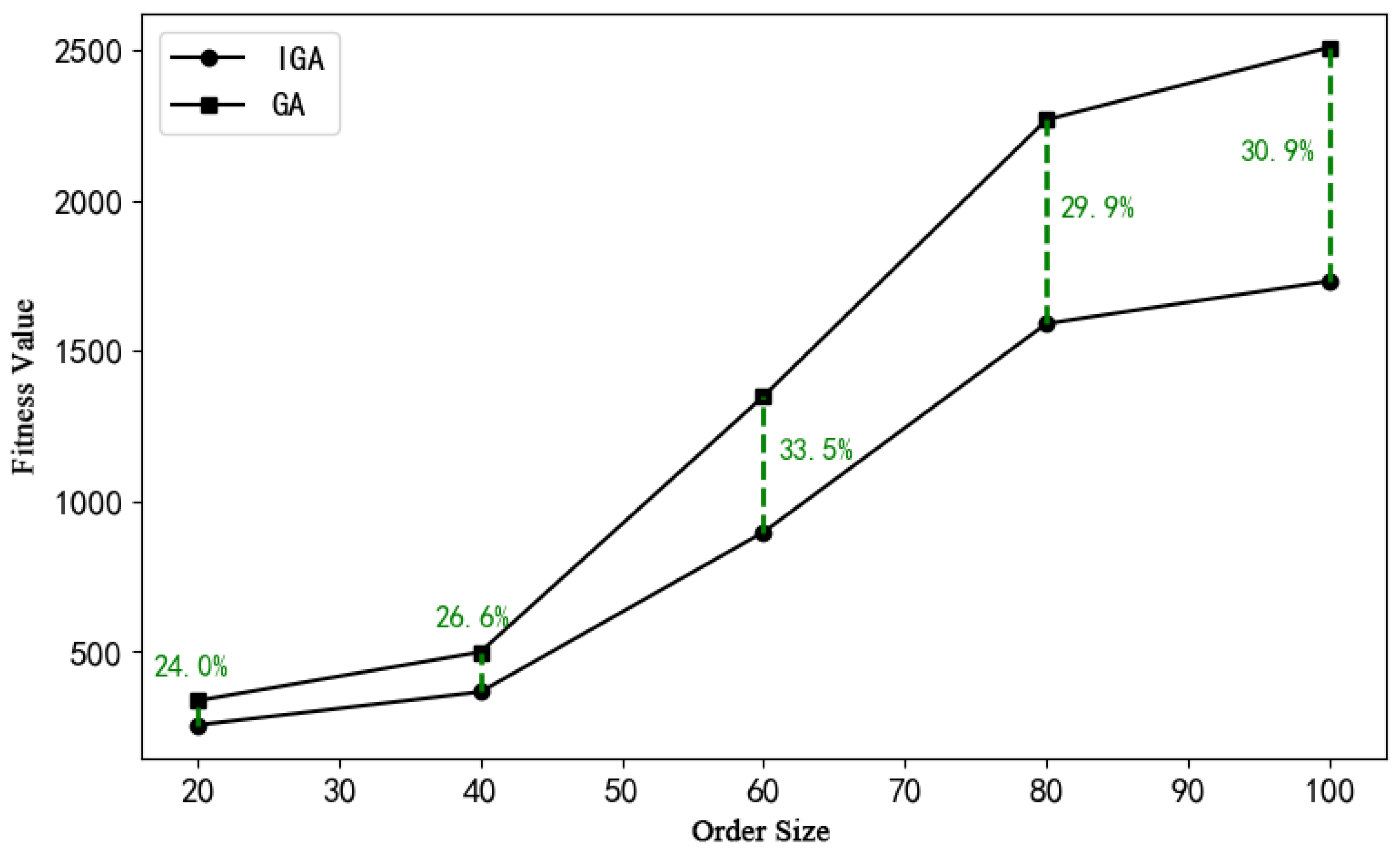

To optimize the task allocation and scheduling routes for four-way shuttles and elevators during collaborative operations, this study designs an improved genetic algorithm based on path reversal mutation and sequence retention crossover, taking into account the operational characteristics of the warehousing system. The algorithm adopts a hierarchical chromosome encoding structure and introduces a weighting method to balance three objectives: energy consumption, time, and load balancing, thereby enhancing the practicality and global optimality of the scheduling strategy.

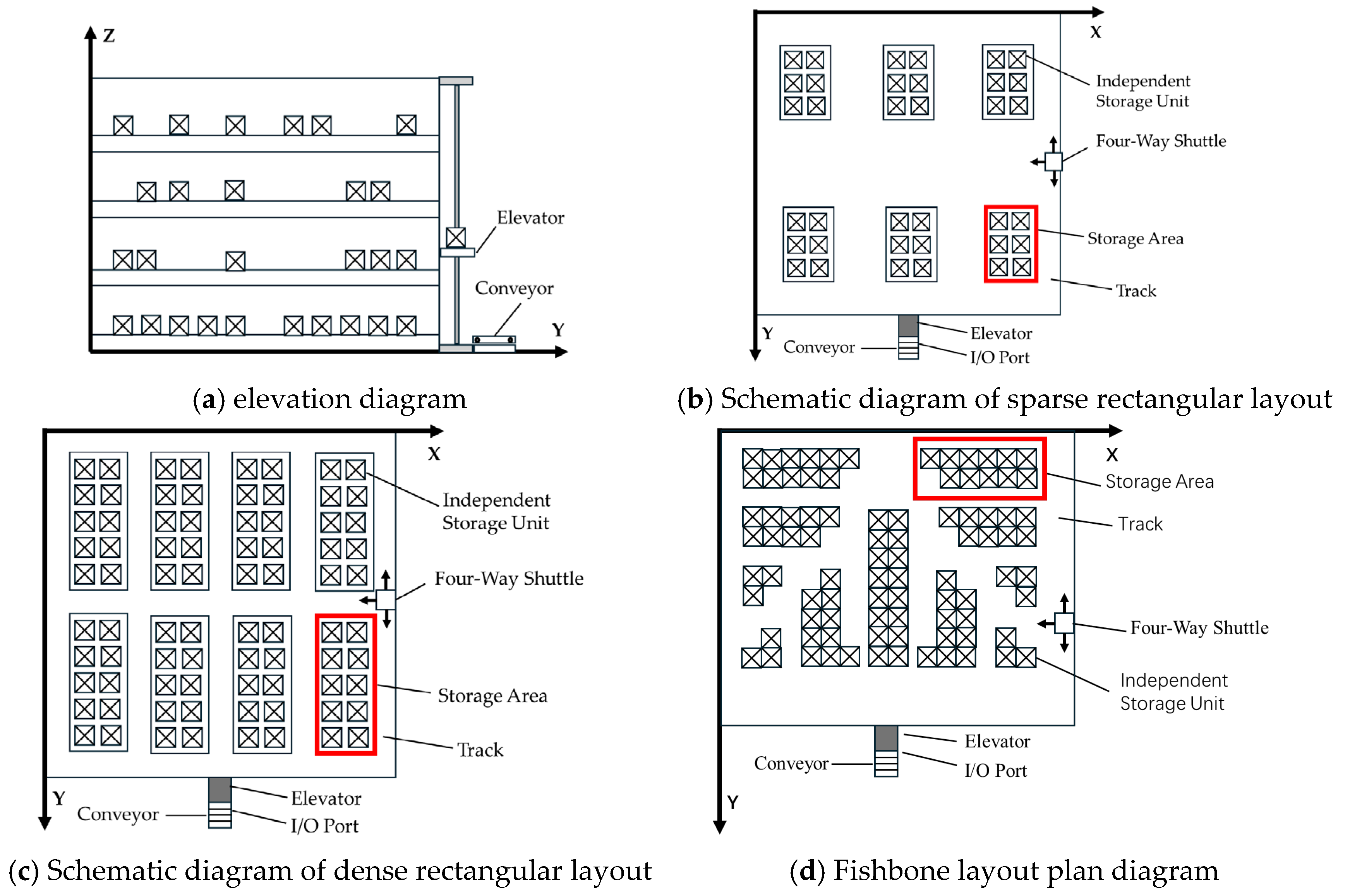

- (1) Initialization of Population and Encoding

Cells containing existing goods are marked as 1, while vacant positions are marked as 0, forming an executable task region map. Based on order information, the system classifies all tasks into two categories:

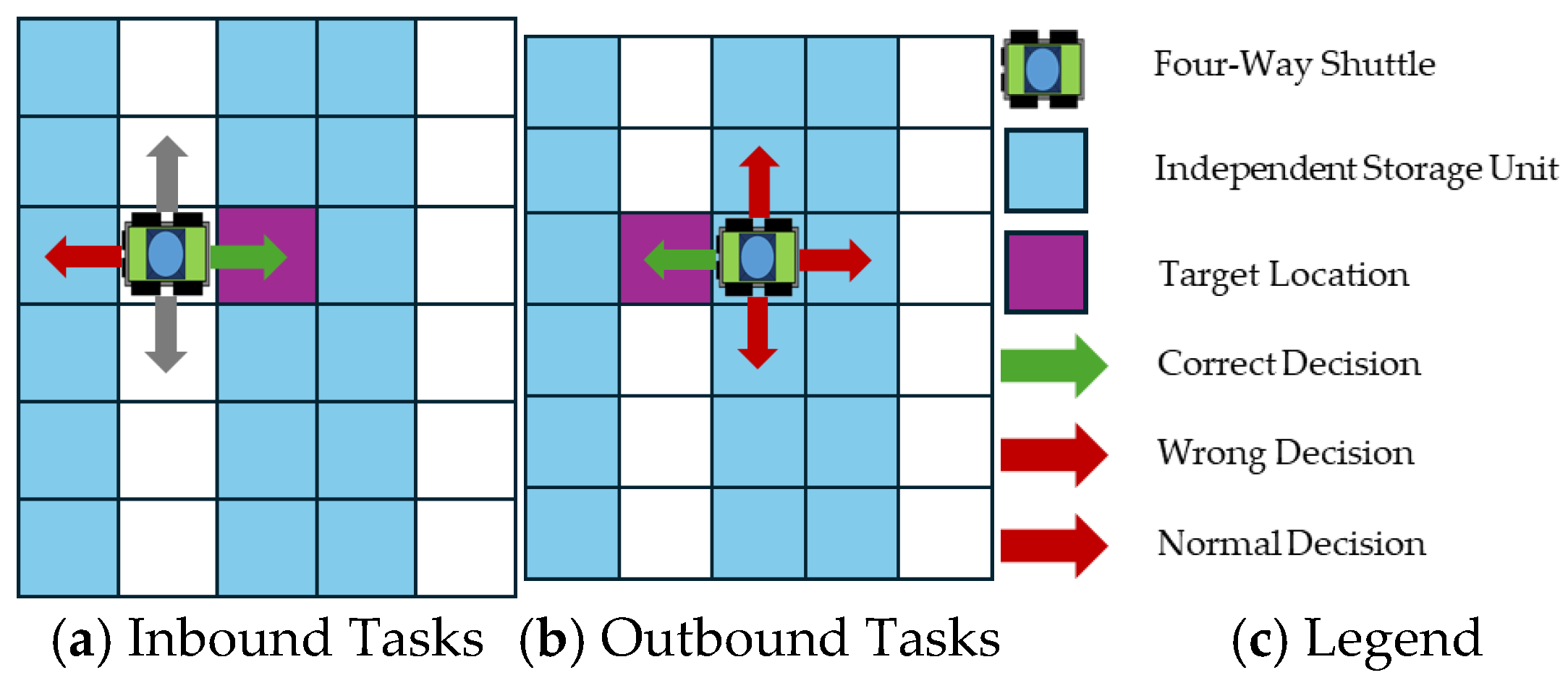

(1) Inbound tasks: Goods need to be transported from the bottom entrance to designated storage locations, and are assigned positive integer identifiers;

(2) Outbound tasks: Goods need to be transported from designated storage locations to the exit and are assigned negative integer identifiers.

According to the collaborative operation logic of four-way shuttles and elevators, this study designs a chromosome structure with $N+1$ layers, where $N$ denotes the number of four-way shuttles. Layers 1 to $N$ represent the order sequence of tasks assigned to each shuttle, while the $(N+1)$-th layer represents the elevator’s operation sequence, which is determined based on the current position of the elevator and the operational relationships from layers 1 to $N$. For example, if the scheduling task includes four inbound and four outbound tasks, to be executed collaboratively by two four-way shuttles and one elevator, the chromosome structure is as shown in Figure 3. In this structure, the first and second layers correspond to the tasks allocated to the two shuttles, and the third layer is the elevator operation sequence generated by the system according to cross-layer transfer requirements.
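The layered encoding can be illustrated with a minimal Python sketch. It assumes that inbound tasks are numbered 1 to 4 and outbound tasks -1 to -4, and that tasks are assigned to shuttles uniformly at random; the function name `build_chromosome` is hypothetical. The elevator layer is left as an empty placeholder because its derivation from the elevator position and cross-layer transfers is not reproduced here.

```python
import random


def build_chromosome(inbound_ids, outbound_ids, num_shuttles, rng):
    """Return one task list (layer) per shuttle.

    Inbound tasks are encoded as positive integers and outbound tasks as
    negative integers. Random assignment is an assumption of this sketch.
    """
    tasks = list(inbound_ids) + [-t for t in outbound_ids]
    rng.shuffle(tasks)
    layers = [[] for _ in range(num_shuttles)]
    for task in tasks:
        layers[rng.randrange(num_shuttles)].append(task)
    return layers


rng = random.Random(0)
shuttle_layers = build_chromosome([1, 2, 3, 4], [1, 2, 3, 4], num_shuttles=2, rng=rng)

# The last layer stands in for the elevator sequence (placeholder only).
chromosome = shuttle_layers + [[]]
```

With two shuttles, `chromosome` has three layers, matching the example of four inbound and four outbound tasks.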

- (2) Fitness Calculation

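To minimize energy consumption, total operation time, and the load balancing index, this study establishes a fitness model that combines the three objectives through weighting coefficients.

The model assigns one weighting coefficient to each of the three objectives (energy consumption, total operation time, and the load balancing index), and the three coefficients are jointly subject to a constraint.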

To accommodate different operational modes, this study adopts two types of weighting strategies:

(1) Energy-Saving Priority Mode:

In scenarios with moderate task density and a focus on cost control, the weighting coefficients for energy consumption and load balancing are appropriately increased, while the weight placed on the time objective is reduced;

(2) Efficiency Priority Mode:

In peak order scheduling or emergency task handling scenarios, the weighting coefficient for minimizing total operation time is increased accordingly.
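The effect of the two weighting strategies can be sketched as follows. This is an illustration only: the weighted-sum form, the requirement that the weights sum to 1, the numeric weights, and the normalization of the objective values to [0, 1] are all assumptions of this sketch and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Weights:
    energy: float
    time: float
    balance: float

    def __post_init__(self):
        # Assumption: the three weights are normalized to sum to 1.
        if abs(self.energy + self.time + self.balance - 1.0) > 1e-9:
            raise ValueError("weights are assumed to sum to 1")


# Hypothetical weight settings for the two operational modes.
ENERGY_SAVING = Weights(energy=0.5, time=0.2, balance=0.3)
EFFICIENCY = Weights(energy=0.2, time=0.6, balance=0.2)


def fitness(energy, time, balance, w):
    """Weighted cost of a schedule; lower is better.

    The three objective values are assumed to be normalized to [0, 1].
    """
    return w.energy * energy + w.time * time + w.balance * balance


# Two hypothetical schedules: (energy, time, load balancing index).
fast_plan = (0.8, 0.2, 0.5)
frugal_plan = (0.3, 0.7, 0.5)
```

Under the energy-saving weights the frugal plan scores lower (better), while under the efficiency weights the fast plan does, which is the intended effect of switching modes.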

- (3) Design of Genetic Operators

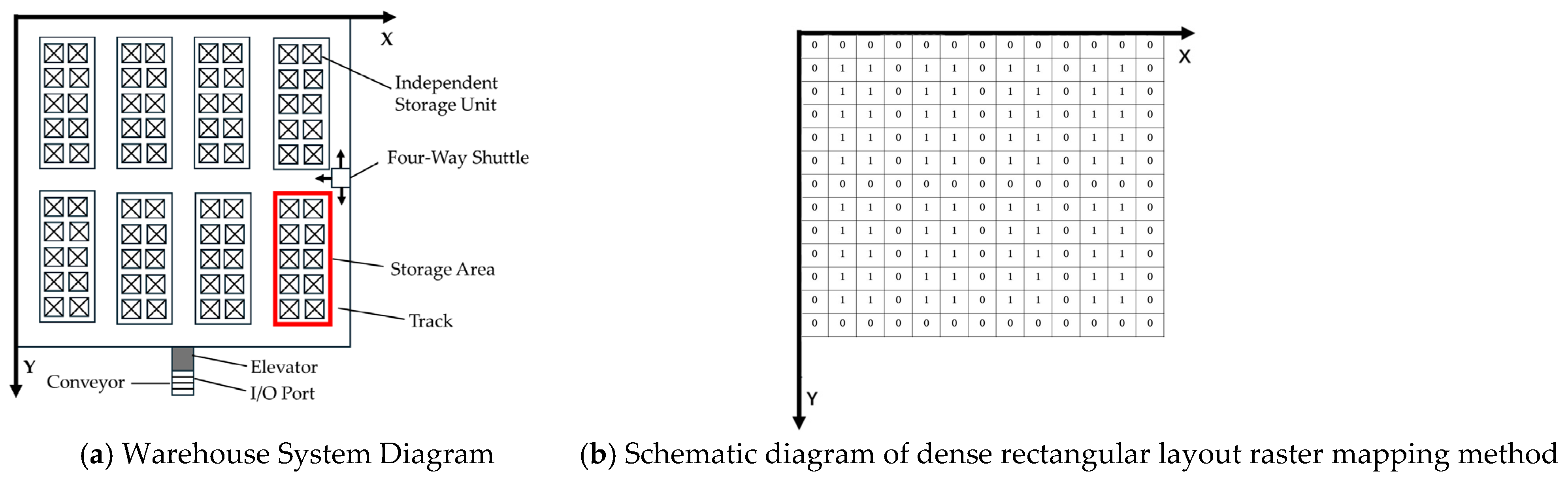

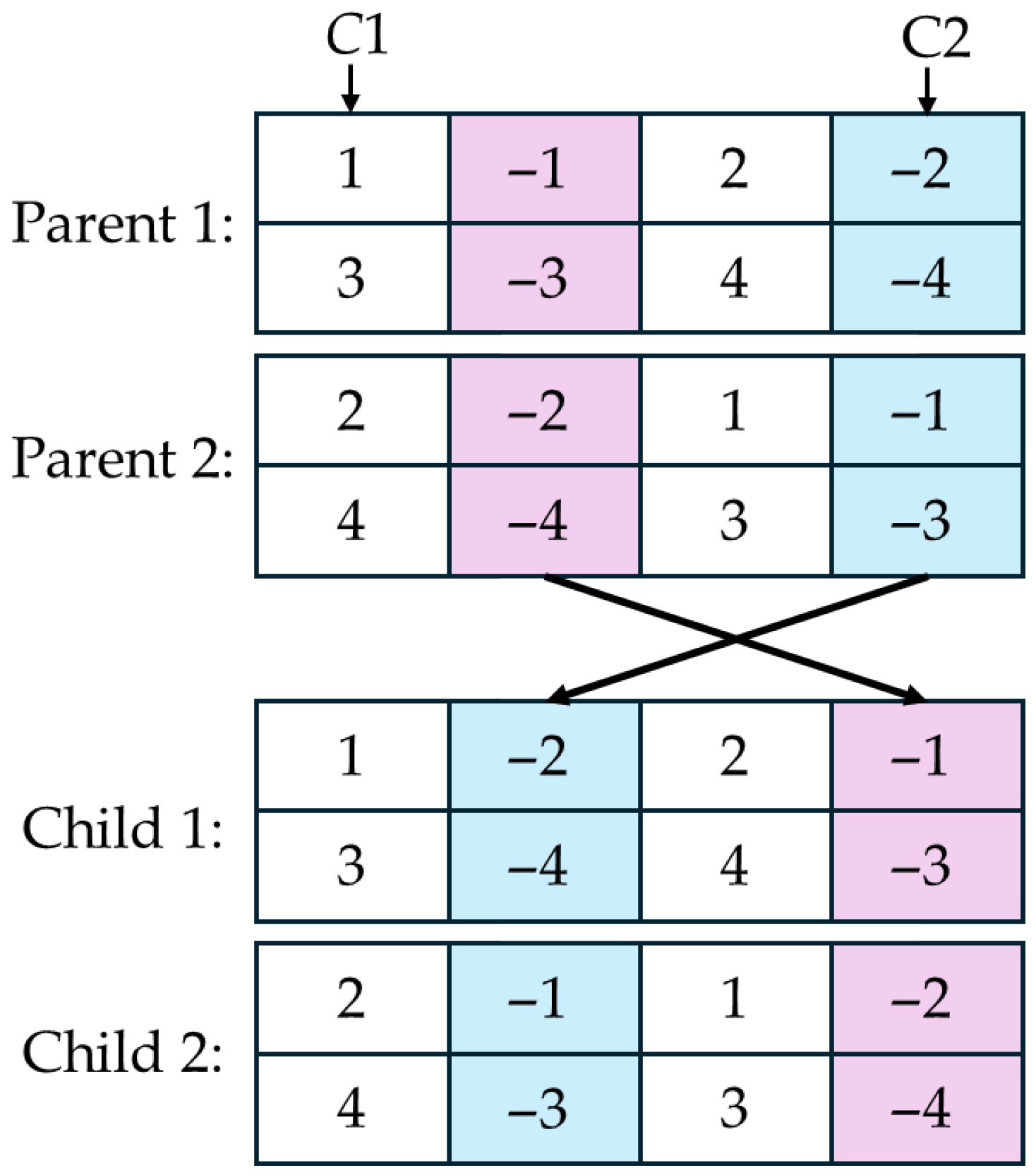

(1) Sequence-Retaining Crossover

To reduce the travel distance and energy consumption of the four-way shuttles, the system operates on combinations of multiple outbound tasks with a single inbound task. Accordingly, a sequence-retaining crossover is adopted, in which crossover operations are conducted on inbound tasks first and then on outbound tasks. The specific steps are as follows:

I. For a chromosome of a given length, two random positions are generated to delimit the segment of the chromosome that participates in crossover; crossover operations are performed on the genes between these two positions;

II. A random number is generated to determine the type of task involved in the crossover: depending on its value, the crossover operation is applied either to inbound tasks or to outbound tasks.

This method effectively enhances the feasibility and rationality of offspring solutions, avoiding decreased fitness due to operation logic errors. Taking the outbound tasks of two four-way shuttles as an example, a schematic diagram of the sequence-retaining crossover operation is shown in Figure 4.
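One possible reading of steps I and II is sketched below. It is not the paper's exact operator: it assumes both parents are flat sequences over the same task identifiers, and that the selected genes inside the segment are re-ordered to follow the second parent. The function name and the 50/50 choice of task type are hypothetical.

```python
import random


def sequence_retaining_crossover(parent_a, parent_b, rng):
    """Re-order one task type inside a random segment of parent_a.

    Genes of the other type, and all genes outside the segment, keep their
    positions, so the child always remains a valid task sequence.
    """
    n = len(parent_a)
    lo, hi = sorted(rng.sample(range(n), 2))    # step I: segment bounds (inclusive)
    sign = 1 if rng.random() < 0.5 else -1      # step II: inbound (+) or outbound (-)

    slots = [i for i in range(lo, hi + 1) if parent_a[i] * sign > 0]
    selected = {parent_a[i] for i in slots}
    donor_order = [g for g in parent_b if g in selected]  # same genes, parent_b's order

    child = list(parent_a)
    for i, gene in zip(slots, donor_order):
        child[i] = gene
    return child


parent_a = [1, -1, 2, -2, 3, -3, 4, -4]
parent_b = [4, -4, 3, -3, 2, -2, 1, -1]
child = sequence_retaining_crossover(parent_a, parent_b, random.Random(0))
```

Because only genes of one type are permuted among their own positions, the offspring never duplicates or loses a task, which is the feasibility property the text attributes to this crossover.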

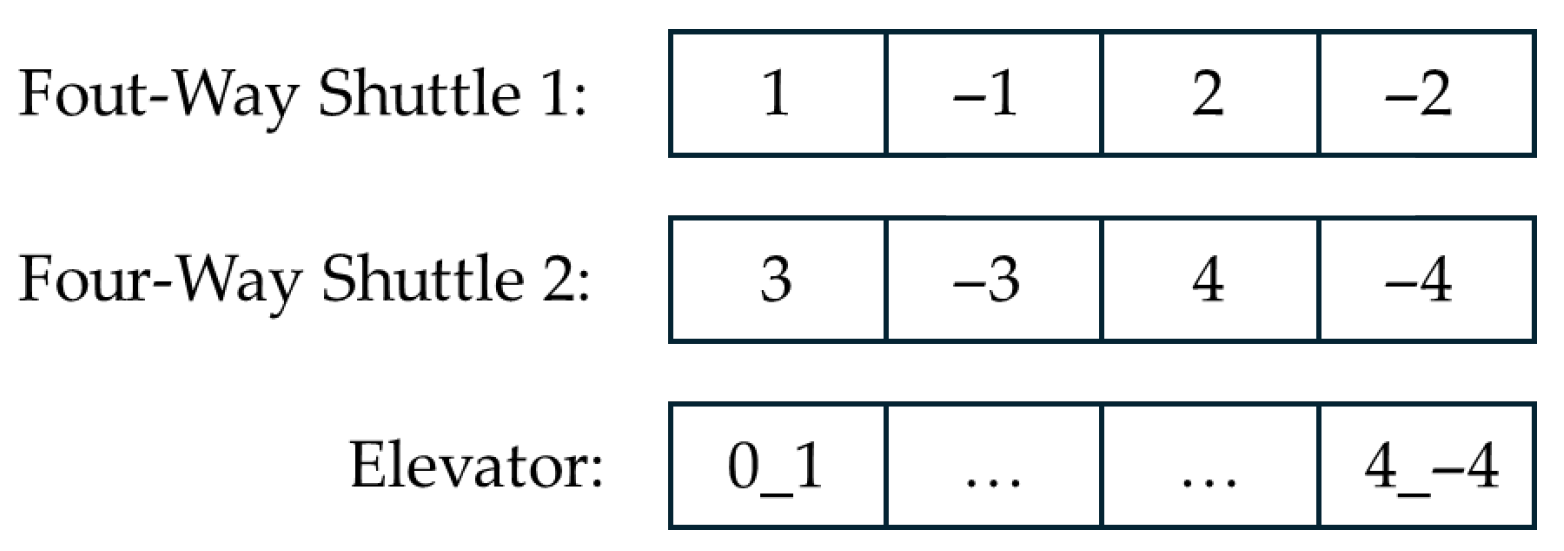

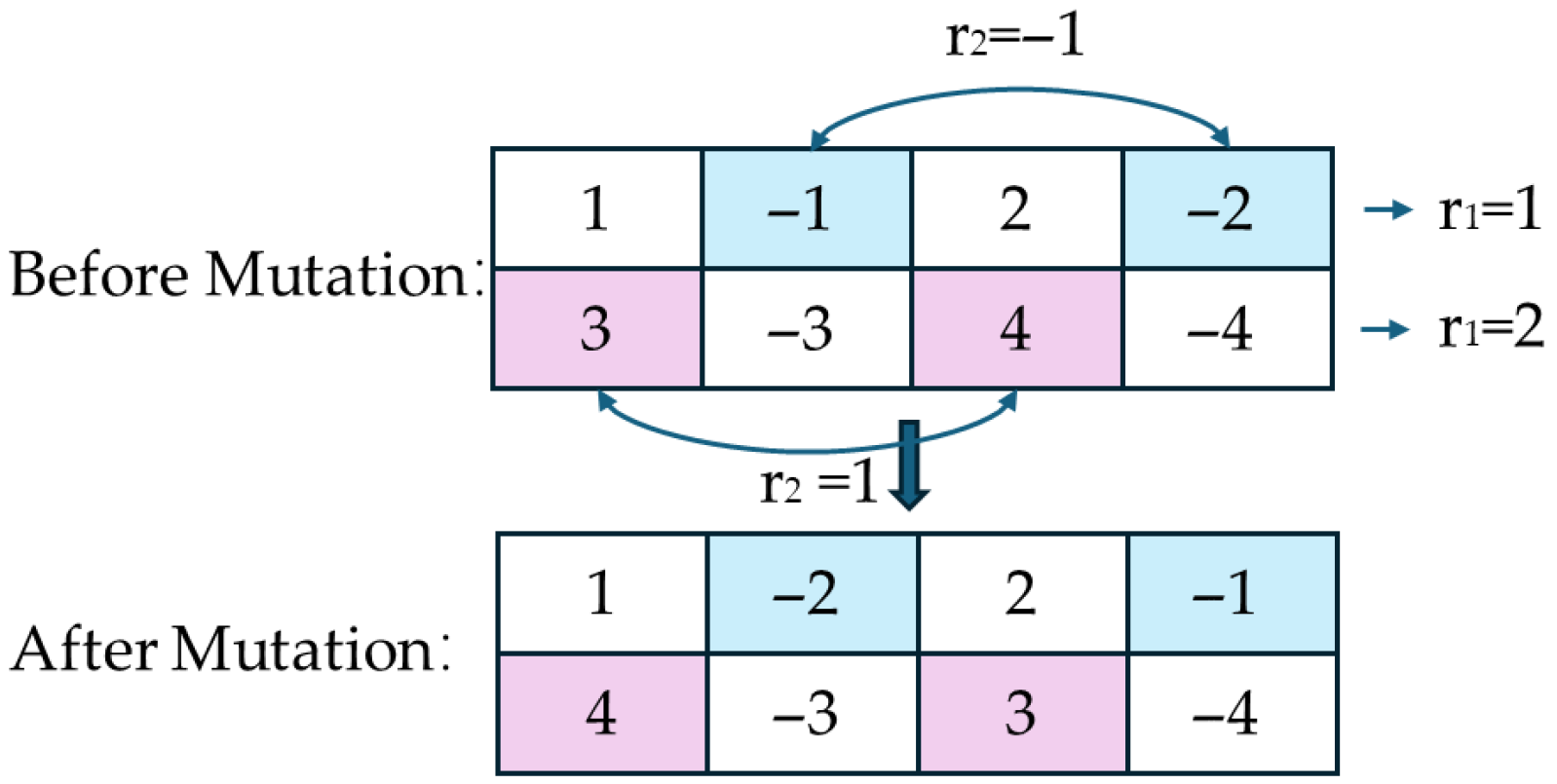

(2) Path Reversal Mutation

The mutation operation is designed to introduce new gene combinations, thereby enhancing chromosome diversity and preventing the algorithm from becoming trapped in local optima. In this study, a local path reversal mutation is employed, in which the task sequence of the four-way shuttle is reversed to simulate the impact of different task orders on scheduling. When there are $N$ four-way shuttles in operation, the specific procedure is as follows:

I. Generate a random positive integer no greater than $N$, i.e., randomly select one of the four-way shuttles to perform the mutation operation.

II. Generate a random integer that is either 1 or −1, where 1 and −1 select the mutation operation on inbound and outbound tasks, respectively.

Through the mutation operation, both the diversity of the search and the overall convergence performance of the algorithm can be improved. An example of this operation on the task sequences of two four-way shuttles is illustrated in Figure 5.
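Steps I and II can be sketched in Python as follows. The sketch assumes that the reversal applies to the sub-sequence of the selected task type within the selected shuttle's layer, leaving tasks of the other type in place; the function name is hypothetical.

```python
import random


def path_reversal_mutation(shuttle_layers, rng):
    """Reverse the order of one task type in one randomly chosen shuttle layer."""
    child = [list(layer) for layer in shuttle_layers]   # do not modify the parent
    k = rng.randrange(len(child))                        # step I: pick a shuttle
    sign = rng.choice((1, -1))                           # step II: 1 inbound, -1 outbound

    slots = [i for i, gene in enumerate(child[k]) if gene * sign > 0]
    reversed_genes = [child[k][i] for i in reversed(slots)]
    for i, gene in zip(slots, reversed_genes):
        child[k][i] = gene
    return child


layers = [[1, -1, 2, -2, 3], [4, -3, -4]]
mutated = path_reversal_mutation(layers, random.Random(0))
```

Each layer keeps exactly the same set of tasks after mutation, so the assignment of tasks to shuttles is unchanged and only the execution order varies.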

- (4) Flowchart of the Improved Genetic Algorithm

To achieve efficient collaborative scheduling of four-way shuttles and elevators in a multi-task environment, this study develops an improved genetic algorithm that integrates a hierarchical encoding structure, sequence-retaining crossover, and path reversal mutation strategies. The optimization process, as shown in Figure 6, consists of six core steps: population initialization, fitness evaluation, selection, crossover, mutation, and updating. The optimal scheduling scheme is obtained through iterative optimization [32].
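The six steps can be arranged into a generic loop such as the one below. The paper does not state the selection or update rules, so binary tournament selection, single-individual elitism, and the mutation rate are assumptions of this sketch. The operators are passed in as functions, and `run_ga` is a hypothetical name.

```python
import random


def run_ga(population, cost, crossover, mutate, generations, rng, mutation_rate=0.2):
    """Minimal GA loop; `population` must hold at least two individuals."""

    def tournament(pop):
        # Selection: the better of two randomly drawn individuals wins.
        a, b = rng.sample(pop, 2)
        return a if cost(a) <= cost(b) else b

    best = min(population, key=cost)                # fitness evaluation
    for _ in range(generations):
        children = []
        while len(children) < len(population) - 1:
            child = crossover(tournament(population), tournament(population), rng)
            if rng.random() < mutation_rate:
                child = mutate(child, rng)
            children.append(child)
        population = children + [best]              # update, keeping the elite
        best = min(population, key=cost)
    return best


# Toy usage: sort a permutation by minimizing the number of misplaced items.
def misplaced(perm):
    return sum(1 for i, g in enumerate(perm) if g != i)


def swap_two(perm, rng):
    i, j = rng.sample(range(len(perm)), 2)
    out = list(perm)
    out[i], out[j] = out[j], out[i]
    return out


rng = random.Random(0)
initial = [rng.sample(range(6), 6) for _ in range(8)]
best = run_ga(initial, misplaced, lambda a, b, r: list(a), swap_two, 30, rng)
```

Because the elite is always carried over, the returned individual is never worse than the best member of the initial population.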

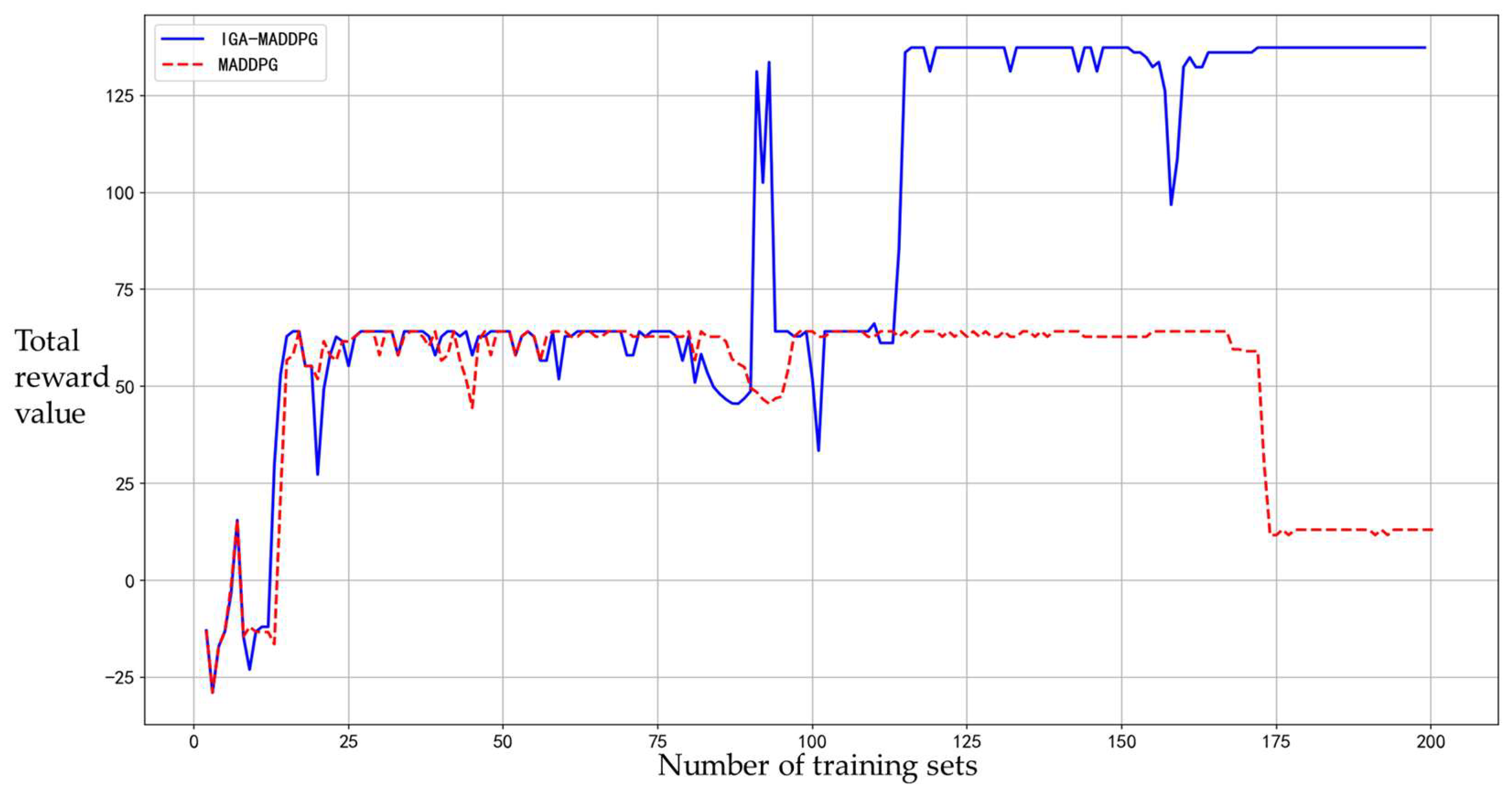

2.3.2. MADDPG Scheduling Algorithm

- (1)

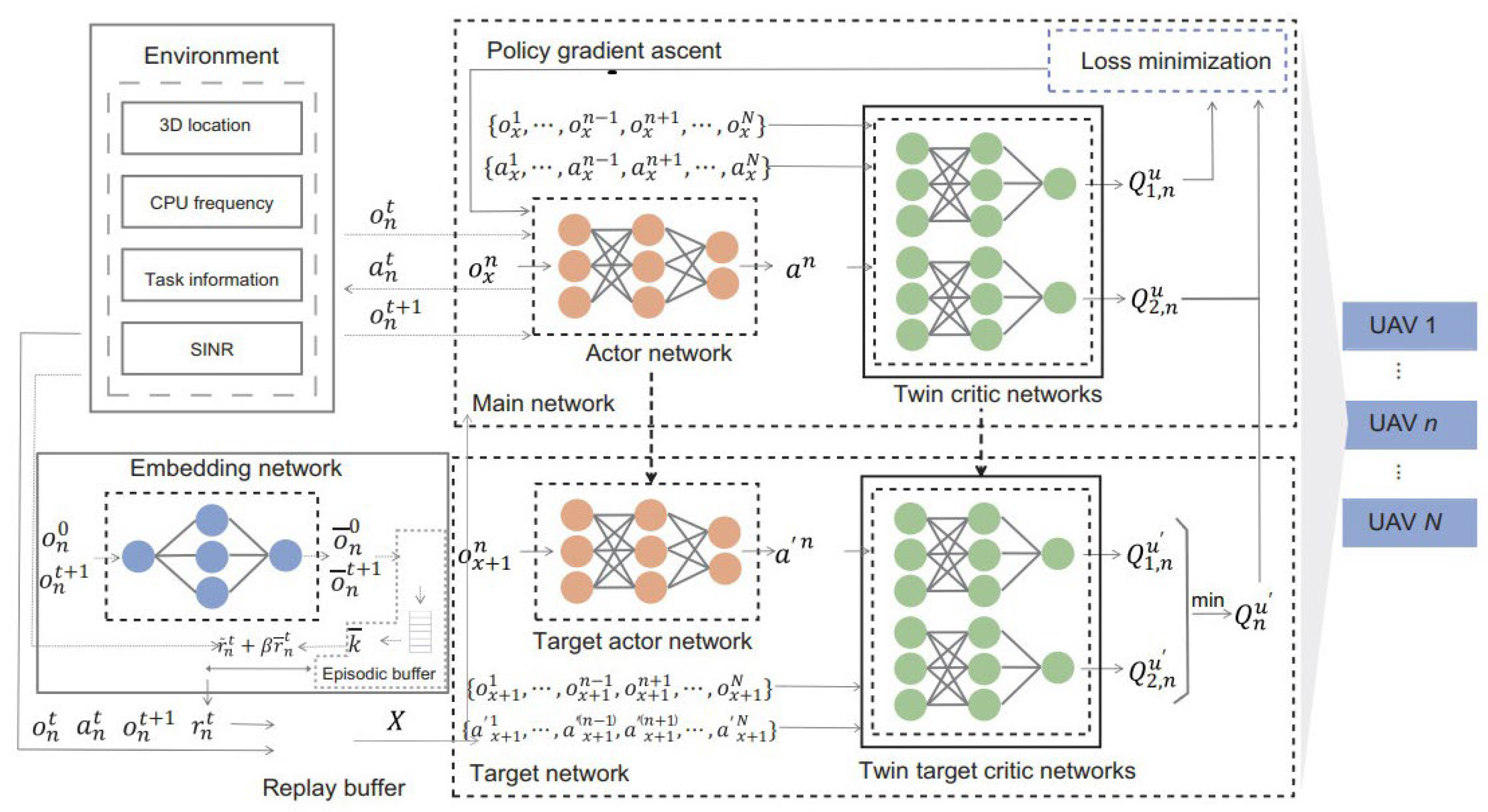

Principle of MADDPG

In a multi-agent system based on the MADDPG algorithm, each agent continuously interacts with the environment to construct and optimize a joint action policy. Specifically, each agent selects an action based on its own local observations, resulting in a joint action set [33]. The environment then provides each agent with an immediate reward based on these joint actions and updates to a new joint state. During this process, agents collect reward information from the environment, calculate cumulative returns, and use these as the basis for adjusting their policies. Through this repeated action–state–reward interactive learning process, agents gradually learn the optimal strategy that maximizes their cumulative return.

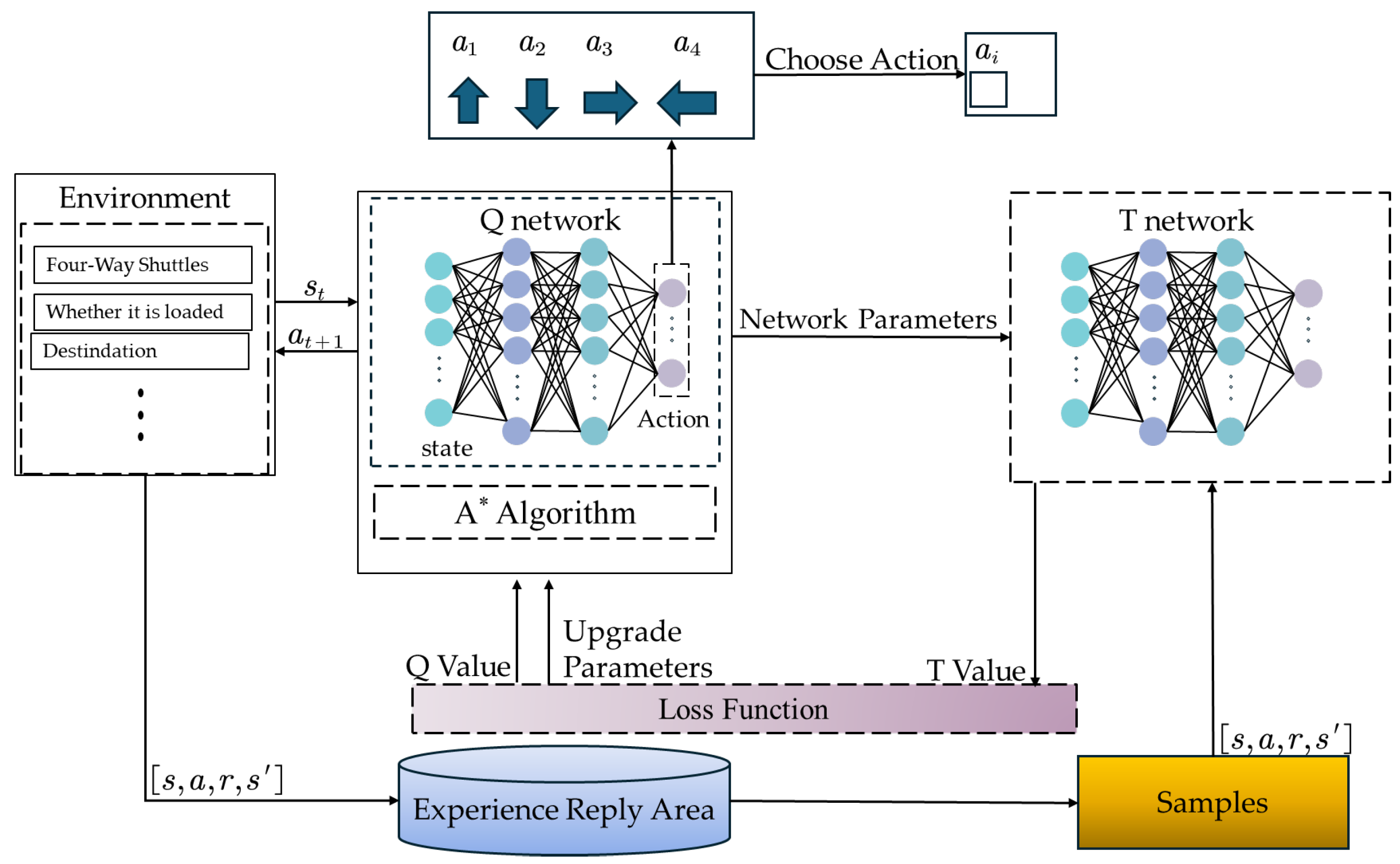

This algorithm trains multiple agents using actor and critic networks. In the actor network, the optimal action decisions are derived by integrating the state–action value function with policy gradients and optimizing the network parameters. In the critic network, the actions from the actor network are evaluated based on the temporal-difference (TD) error. Both the actor and the critic utilize an evaluation network and a target network. The evaluation network is responsible for estimating the state–action value function, and its parameters are continuously updated during training. The target network retains a copy of the evaluation network’s parameters from an earlier time and is not itself trained, so it provides relatively stable target values. The TD error is computed from the outputs of the target and evaluation networks. By minimizing this error, the parameters of the critic network are optimized, allowing the evaluation network to better estimate the state–action value function. The neural network architecture of the MADDPG algorithm is illustrated in Figure 7.
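The role of the target network in the TD error can be sketched with plain Python numbers standing in for network outputs. The function names are hypothetical. In MADDPG the critic's value estimates would be computed from the global state and the joint action of all agents; here they are simply passed in as scalars.

```python
import copy


def td_target(reward, gamma, target_q_next, done):
    """Target value built from the (slow-moving) target network's output."""
    return reward + (0.0 if done else gamma * target_q_next)


def critic_loss(batch, gamma):
    """Mean squared TD error over (reward, q_eval, target_q_next, done) tuples."""
    errors = [td_target(r, gamma, q_next, done) - q_eval
              for r, q_eval, q_next, done in batch]
    return sum(e * e for e in errors) / len(errors)


def sync_target(eval_params):
    """Snapshot the evaluation network's parameters for the target network."""
    return copy.deepcopy(eval_params)


batch = [(1.0, 2.5, 2.0, False), (0.5, 0.4, 3.0, True)]
loss = critic_loss(batch, gamma=0.9)
```

Because the target is built from a snapshot rather than from the network being trained, the regression target does not shift with every gradient step.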

- (2) Design

(1) State Space Design:

For a warehouse system with four-way shuttles and elevators, every shuttle and every elevator is treated as an agent. Each agent constructs its local observation space according to its scheduling objectives, while the critic network uniformly utilizes the global state.

In the state space of the four-way shuttle, the local state vector of each shuttle comprises: the discretized position coordinates; the task queue information currently assigned to the shuttle; the target layer of each task in the task queue; and the number of remaining tasks in the shuttle’s current mission.

In the elevator state space, the local observation of each elevator comprises: the current floor where the elevator is located; the current operational status of the elevator; and the waiting queue, i.e., the list of four-way shuttles requesting service.

During the centralized training phase, the global state used by the critic network comprises: the states of all four-way shuttles; the states of all elevators; the global task pool, which records the ID, type, and status of every order; and the load and energy consumption statistics.

(2) Action Space Design

To accommodate the hybrid optimization objective of scheduling and path adjustment, the action space is defined by equipment type as follows.

For the four-way shuttle, the action comprises: the selection of the current task, i.e., whether to switch the current task; the velocity setting; and whether an elevator request is needed. For the elevator, the action comprises: task selection, i.e., choosing the current service target from the request queue; and the layer-switching action, i.e., whether to proactively move to the target floor to reduce waiting time.

(3) Reward Function Design

The reward function of each four-way shuttle takes into account five terms: the energy consumption penalty, the no-load energy consumption penalty, the task timeliness reward, the load balancing reward of the four-way shuttles, and the conflict penalty. Each of the five terms is scaled by its own weighting coefficient.

The reward function of each elevator includes the following components: the penalty for vertical transport energy consumption; the waiting time penalty; and the full-load task reward. The associated load indicator equals 1 when the elevator is fully loaded and 0 when the elevator is unloaded.

- (3) Optimization Strategy Combining Genetic Algorithm and MADDPG

(1) Collaborative Design

Since a single algorithm often struggles to balance global search capability and local responsiveness, this study develops a hierarchical optimization algorithm that integrates the Genetic Algorithm (GA) with Multi-Agent Deep Deterministic Policy Gradient (MADDPG), fully leveraging the complementary strengths of both approaches. The hierarchical optimization framework consists of a global optimization layer and a local execution optimization layer, detailed as follows:

Global Optimization Layer: The genetic algorithm is employed to generate the initial task allocation and operation sequence based on task information, the initial status of equipment, and the warehouse topology network.

Local Execution Optimization Layer: MADDPG is used to train multi-agent policies, enabling real-time adjustments to task sequences, speeds, and scheduling decisions during execution to enhance robustness and energy performance.

Unlike traditional optimization methods, this work does not merely utilize the genetic algorithm for static optimization; instead, the scheduling schemes produced by the genetic algorithm are further transformed and injected into the experience replay buffer of MADDPG, providing heuristic prior knowledge for reinforcement learning. This experience injection approach improves the training efficiency of MADDPG, facilitates the rapid acquisition of collaborative strategies, and reduces the risk of convergence to local optima.
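The experience injection idea can be sketched with a small replay buffer. How a GA schedule is converted into transitions is not specified here, so the sketch simply assumes that a list of `(state, action, reward, next_state, done)` tuples has already been derived from the schedule, for example by replaying it in a simulator. All names are hypothetical.

```python
import random
from collections import deque, namedtuple

Transition = namedtuple("Transition", "state action reward next_state done")


class ReplayBuffer:
    """Fixed-capacity buffer; the oldest transitions are evicted first."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def add(self, transition):
        self.buffer.append(transition)

    def sample(self, batch_size, rng):
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def inject_prior(buffer, ga_transitions):
    """Seed the buffer with transitions derived from a GA schedule."""
    for transition in ga_transitions:
        buffer.add(transition)
    return len(ga_transitions)


# Hypothetical transitions obtained by replaying a GA schedule.
ga_transitions = [
    Transition(state=(0, 0), action="task_1", reward=1.0, next_state=(0, 1), done=False),
    Transition(state=(0, 1), action="task_2", reward=0.5, next_state=(1, 1), done=False),
    Transition(state=(1, 1), action="task_3", reward=2.0, next_state=(2, 1), done=True),
]
buffer = ReplayBuffer(capacity=1000)
inject_prior(buffer, ga_transitions)
minibatch = buffer.sample(2, random.Random(0))
```

One design consequence of a plain first-in-first-out buffer is that the injected priors are eventually evicted by online experience, so their influence is concentrated in early training.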

(2) System Scheduling Process Design

The system scheduling process is shown in Table 1.

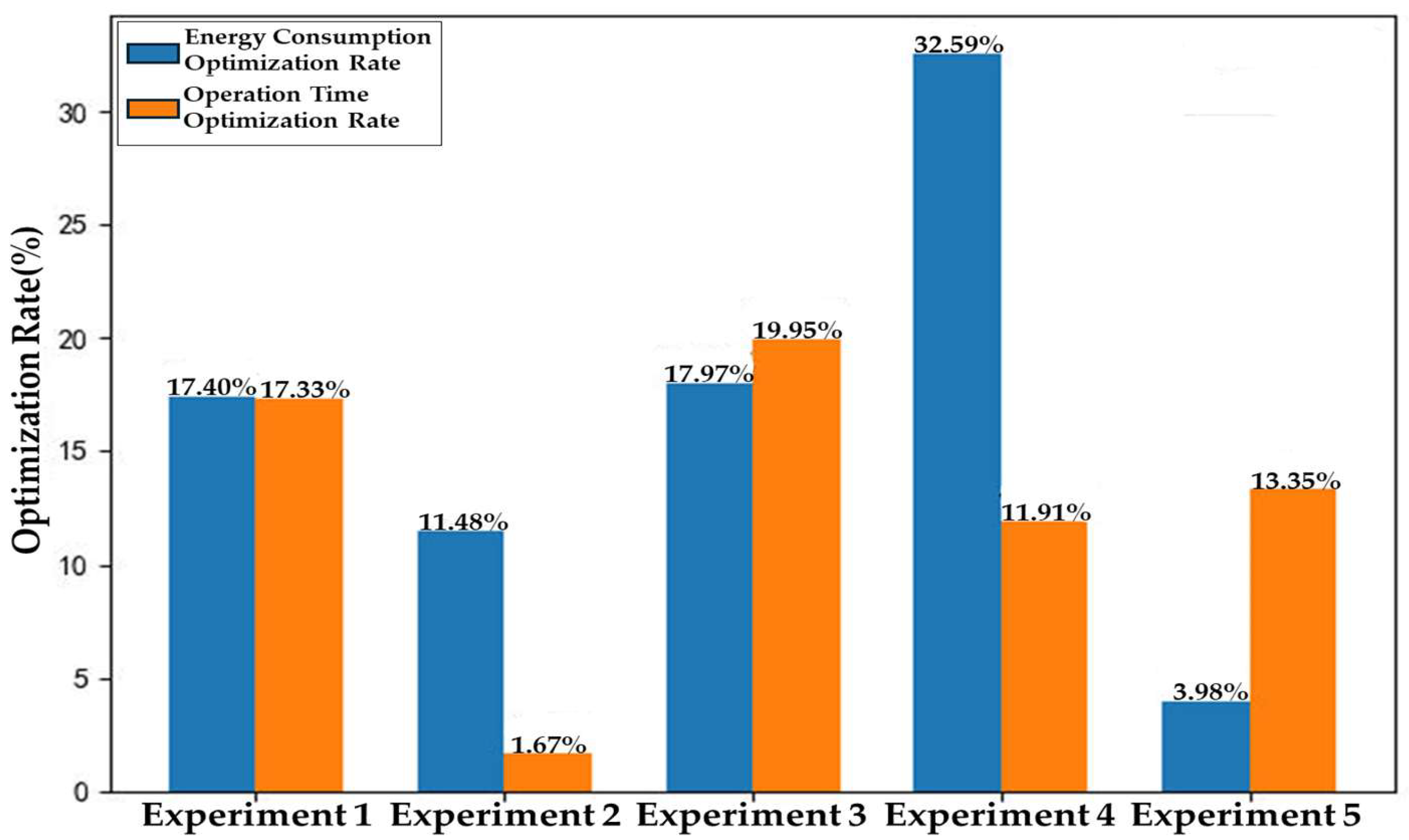

In the scheduling optimization framework proposed in this section, the Improved Genetic Algorithm (IGA) is responsible for global task allocation in the global optimization layer. By employing hierarchical chromosome encoding, sequence-retaining crossover, and path reversal mutation, the IGA generates high-quality initial task allocation schemes, which provide a rational, globally searched basis for the entire scheduling process. The MADDPG algorithm operates at the local execution optimization layer, dynamically adjusting task sequences, speeds, and scheduling decisions based on real-time states. By injecting the scheduling schemes generated by the IGA into the MADDPG experience replay buffer, reinforcement learning can rapidly acquire collaborative strategies and further optimize local scheduling behaviors.

This hierarchical optimization framework integrates global optimization with dynamic responsiveness, harnessing the advantages of both the IGA and MADDPG. It overcomes the limitations of single algorithms in four-way shuttle scheduling scenarios and achieves multi-objective optimization for energy consumption, time, and load balancing. As a result, it addresses challenges such as uneven task allocation, poor adaptability to dynamic environments, high idle rates of equipment, and difficulties in energy optimization in multi-shuttle cooperative operations.

By synergistically optimizing the Improved Genetic Algorithm (IGA) and the Multi-Agent Deep Deterministic Policy Gradient (MADDPG) algorithm, in combination with an A*-guided Deep Q-Network (A*-DQN) path planning method, the proposed scheduling algorithm can significantly reduce the energy consumption of four-way shuttle warehouse systems, lower equipment idle rates, and enhance operational efficiency. Furthermore, the algorithm achieves balanced task allocation, improves the degree of load balancing, and strengthens the system’s adaptability and stability in dynamic environments. This integrated optimization not only enhances the overall system performance but also provides robust support for energy conservation and intelligent operation in warehouse systems.

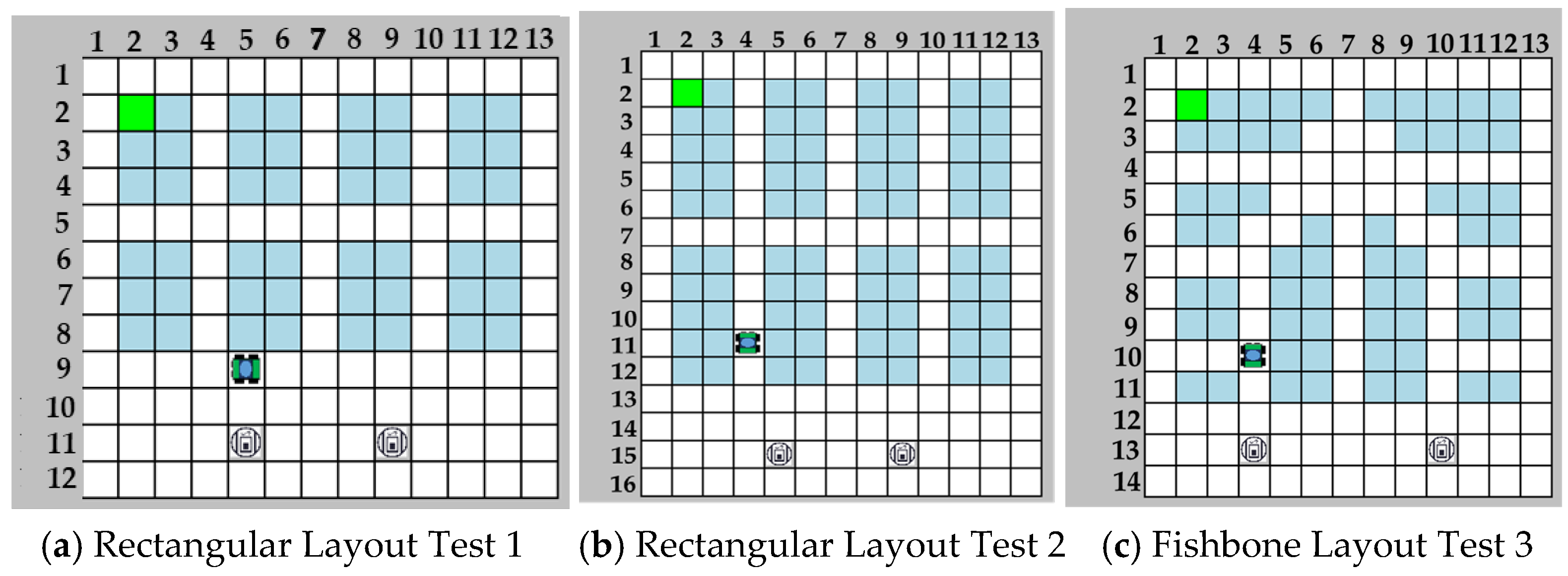

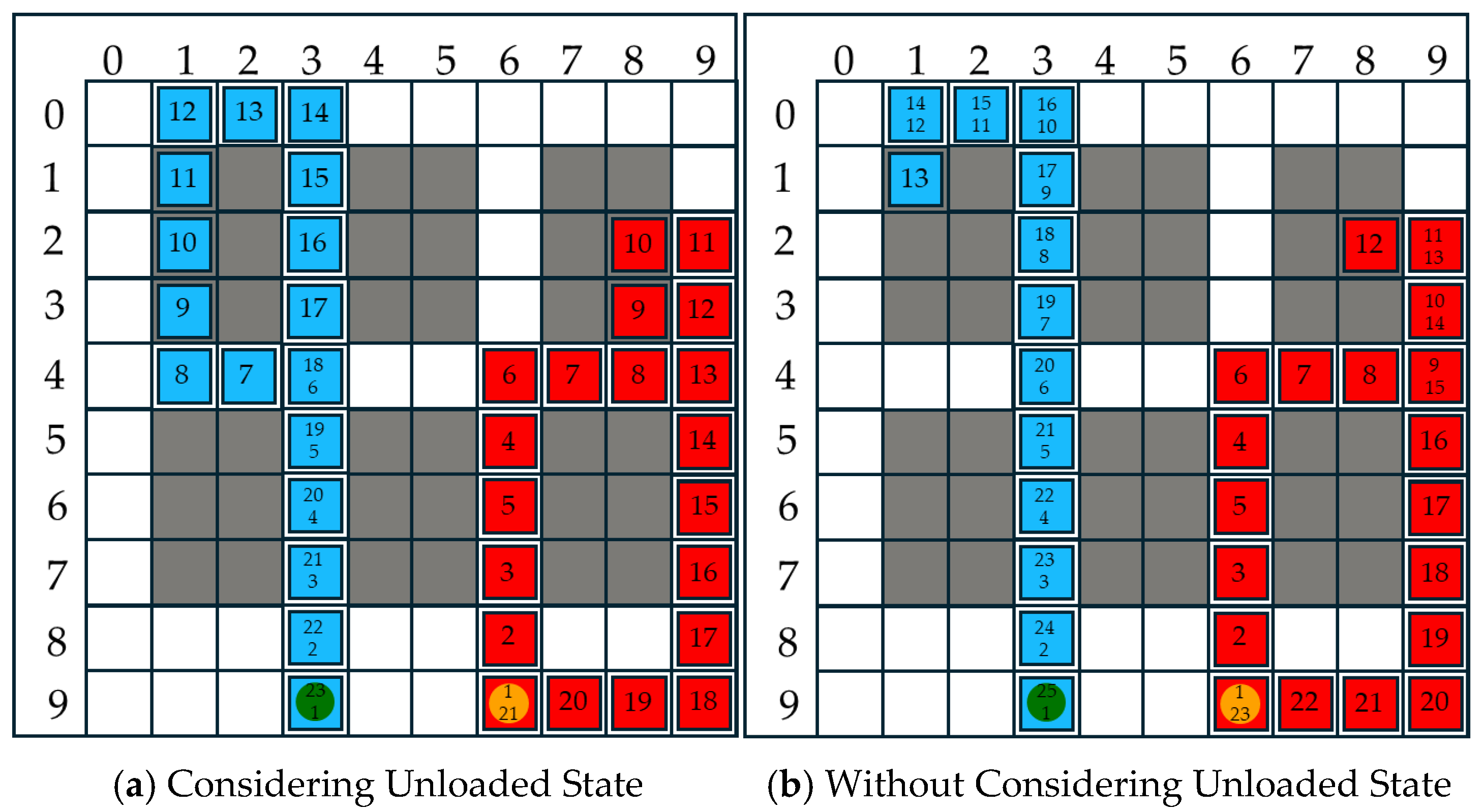

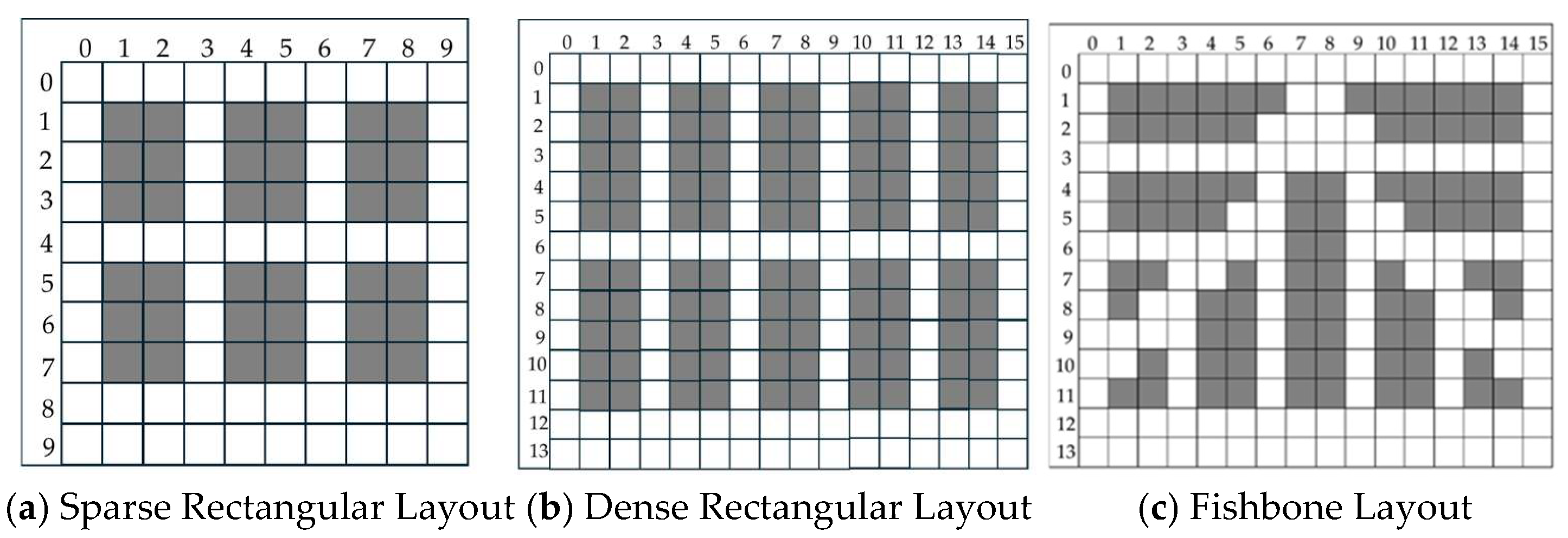

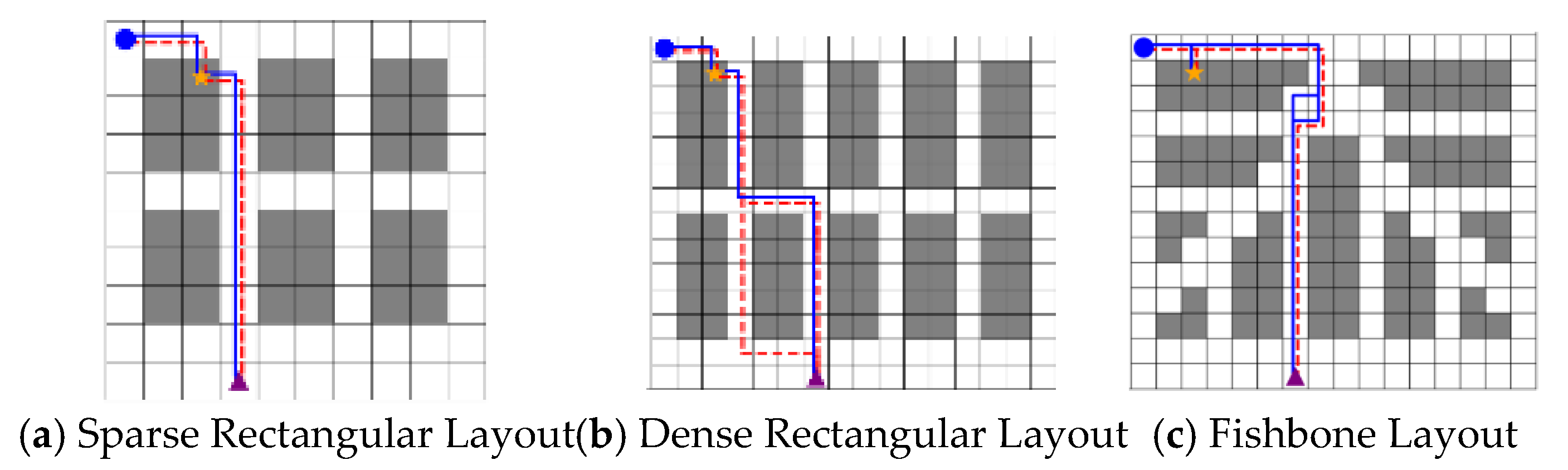

2.3.3. Path Planning Algorithm Based on A*-Guided DQN

- (1) Path Planning Design Based on A* Algorithm

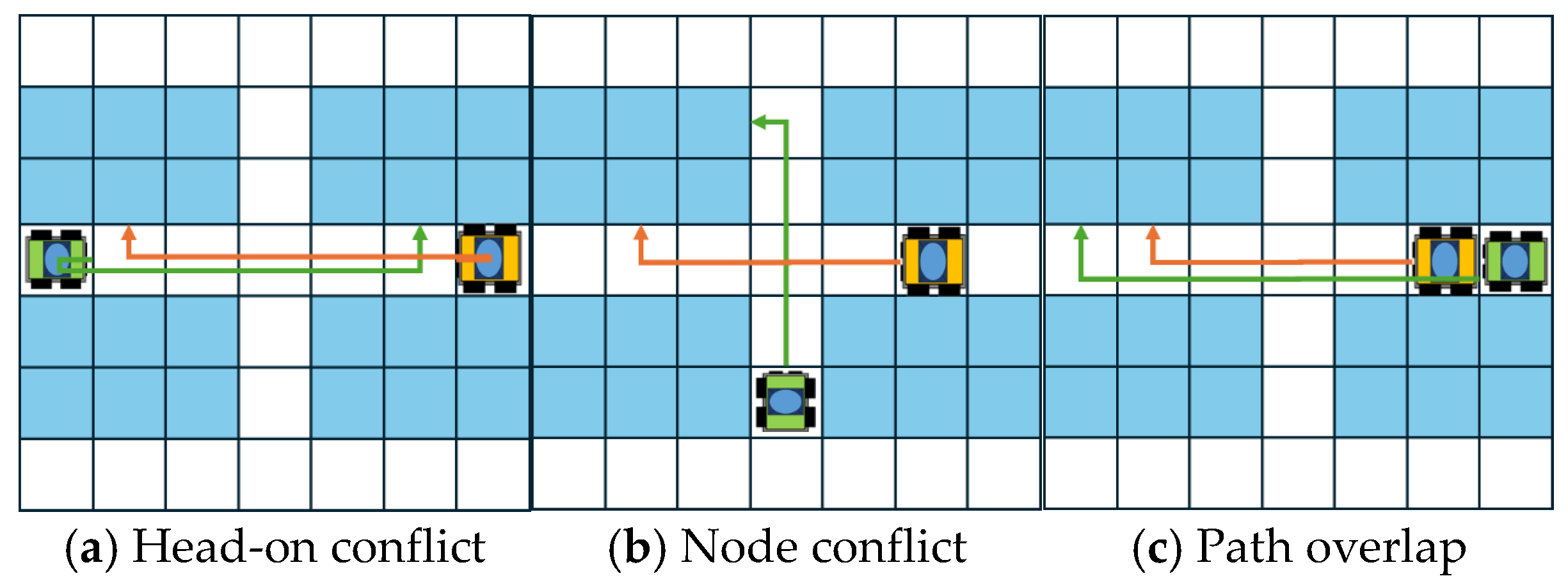

(1) Path Conflicts and Resolution

When multiple four-way shuttles operate on the same level, three types of conflicts may occur, as illustrated in Figure 8. A head-on conflict, shown in Figure 8a, occurs when the angle between the directions of two shuttles is 180 degrees. A node conflict, shown in Figure 8b, arises when the angle between the directions of two shuttles is 90 degrees. A path overlap, shown in Figure 8c, occurs when two shuttles are moving in the same direction, so the angle between them is 0 degrees; if the leading shuttle stops suddenly or turns, the following shuttle may collide with it.
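The three cases above can be distinguished from the angle between the shuttles' direction vectors. The following sketch assumes 2D direction vectors on the grid and a small angular tolerance; the function name and the tolerance value are hypothetical.

```python
import math


def conflict_type(dir_a, dir_b, tol_deg=1e-6):
    """Classify a potential conflict from two 2D direction vectors.

    Returns "head-on" (180 degrees), "node" (90 degrees), "path overlap"
    (0 degrees), or None for any other angle.
    """
    norm = math.hypot(*dir_a) * math.hypot(*dir_b)
    if norm == 0:
        raise ValueError("direction vectors must be non-zero")
    dot = dir_a[0] * dir_b[0] + dir_a[1] * dir_b[1]
    cos_theta = max(-1.0, min(1.0, dot / norm))     # guard against rounding
    theta = math.degrees(math.acos(cos_theta))

    if abs(theta - 180.0) <= tol_deg:
        return "head-on"
    if abs(theta - 90.0) <= tol_deg:
        return "node"
    if abs(theta) <= tol_deg:
        return "path overlap"
    return None
```

For example, `conflict_type((1, 0), (-1, 0))` classifies two shuttles approaching each other along the same aisle, and `conflict_type((1, 0), (0, 1))` two shuttles converging on a crossing.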

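When two four-way shuttles, $i$ and $j$, are operating simultaneously, their direction vectors are $\vec{v}_i$ and $\vec{v}_j$, respectively. The angle between the vectors is calculated as shown in Equation (26). By converting the value to degrees and referring to the definitions above, the conflict type can be determined according to the criteria in Equation (27).

$$\theta = \arccos\left(\frac{\vec{v}_i \cdot \vec{v}_j}{\lVert \vec{v}_i \rVert \, \lVert \vec{v}_j \rVert}\right) \tag{26}$$

In the equation, $\theta$ indicates the angle between the operational direction vectors of the two four-way shuttles, with specific values defined as follows:

$$\text{conflict type} = \begin{cases} \text{head-on conflict}, & \theta = 180^\circ \\ \text{node conflict}, & \theta = 90^\circ \\ \text{path overlap}, & \theta = 0^\circ \end{cases} \tag{27}$$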

To address head-on conflicts encountered during shuttle operations, the system assigns a decision-making priority to each shuttle based on the priority of its current task. When a conflict arises, the shuttle with lower priority must proactively yield according to predefined rules. During the yielding process, if it is feasible for the shuttle to reverse or change lanes, it performs the corresponding maneuver. If yielding in place is not feasible, the system dynamically generates an alternative detour path—subject to grid validity constraints—to enable the shuttle to circumvent the conflict.

When a node conflict occurs between two four-way shuttles, the arrival times of both shuttles at the node are calculated according to Equation (28), based on the distance from each shuttle’s starting point to the node. The difference between the two arrival times is then compared with the minimum time window offset threshold. If the threshold is not met, the moving speed of the lower-priority shuttle must be adjusted.
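The time-window check can be sketched as follows. The sketch assumes that each shuttle travels at constant speed, so that arrival time is distance divided by speed, and that the lower-priority shuttle is slowed just enough to arrive one threshold later than the other. These assumptions and all names are illustrative and do not reproduce Equation (28).

```python
def arrival_time(distance, speed):
    """Arrival time at the node under a constant-speed assumption."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return distance / speed


def time_window_ok(dist_a, speed_a, dist_b, speed_b, min_offset):
    """True if the two arrival times differ by at least the threshold."""
    gap = abs(arrival_time(dist_a, speed_a) - arrival_time(dist_b, speed_b))
    return gap >= min_offset


def adjusted_speed(distance, other_arrival, min_offset):
    """Speed at which the lower-priority shuttle arrives min_offset after the other."""
    return distance / (other_arrival + min_offset)


# Shuttle A (higher priority) is 10 m from the node, shuttle B is 12 m away;
# both move at 2 m/s and the threshold is 2 s (hypothetical values).
ok = time_window_ok(10.0, 2.0, 12.0, 2.0, min_offset=2.0)
new_speed_b = adjusted_speed(12.0, arrival_time(10.0, 2.0), min_offset=2.0)
```

In this example the arrival times are 5 s and 6 s, so the threshold is violated and shuttle B is slowed so that it reaches the node at about 7 s.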

When the paths of the current shuttle and the following shuttle overlap, the minimum safe distance between the two shuttles is calculated according to Equation (29). If the actual distance between the two shuttles is smaller than this value, the minimum safe distance is not met and the following shuttle must decelerate. Besides the minimum safe distance itself, Equation (29) involves a safety factor used to eliminate system errors and the system response time.

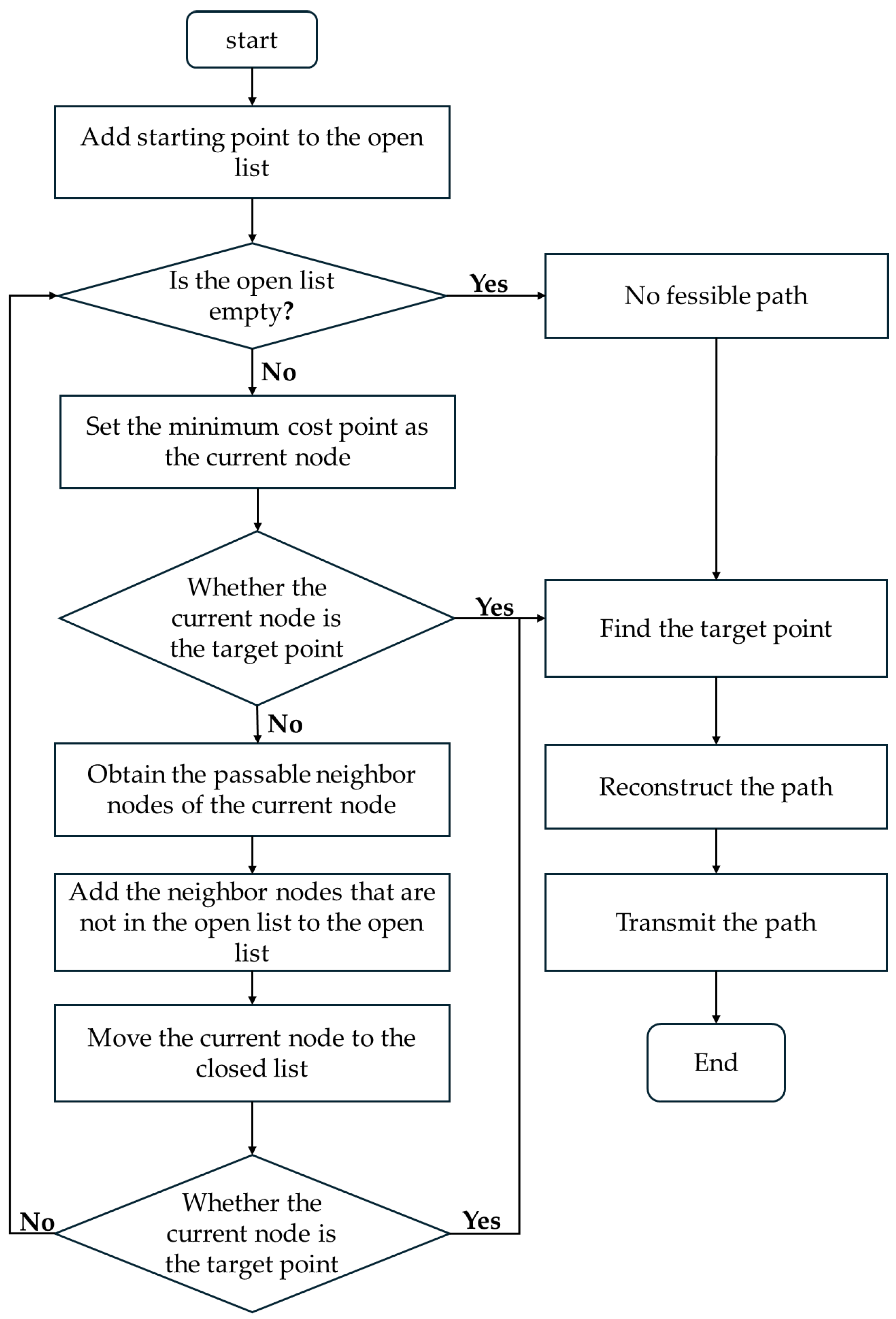

- (2) A* Path Execution Modes

In this study, the A* path execution process is divided into two modes:

(1) Route-Guided Mode

In this approach, a complete path is generated directly during the path planning stage, and the four-way shuttle executes its tasks strictly according to the pre-defined path. This mode offers high computational efficiency and fast execution speed, making it suitable for scenarios with predetermined tasks and static environments [34].

(2) Step-by-Step Guidance

In this mode, only the currently feasible segment of the path is planned. During execution, subsequent path segments are updated in real time according to environmental feedback until the task is completed. This mode demonstrates strong adaptability to environmental changes, enables dynamic obstacle avoidance, and is suitable for highly dynamic and frequently interactive dense warehousing systems.

In consideration of the practical operational characteristics of four-way shuttle-based warehouse systems and to enhance both path safety and system responsiveness, this study adopts the step-by-step guidance strategy as the path execution mode. The specific steps of the A* algorithm employed in this study are illustrated in Figure 9.

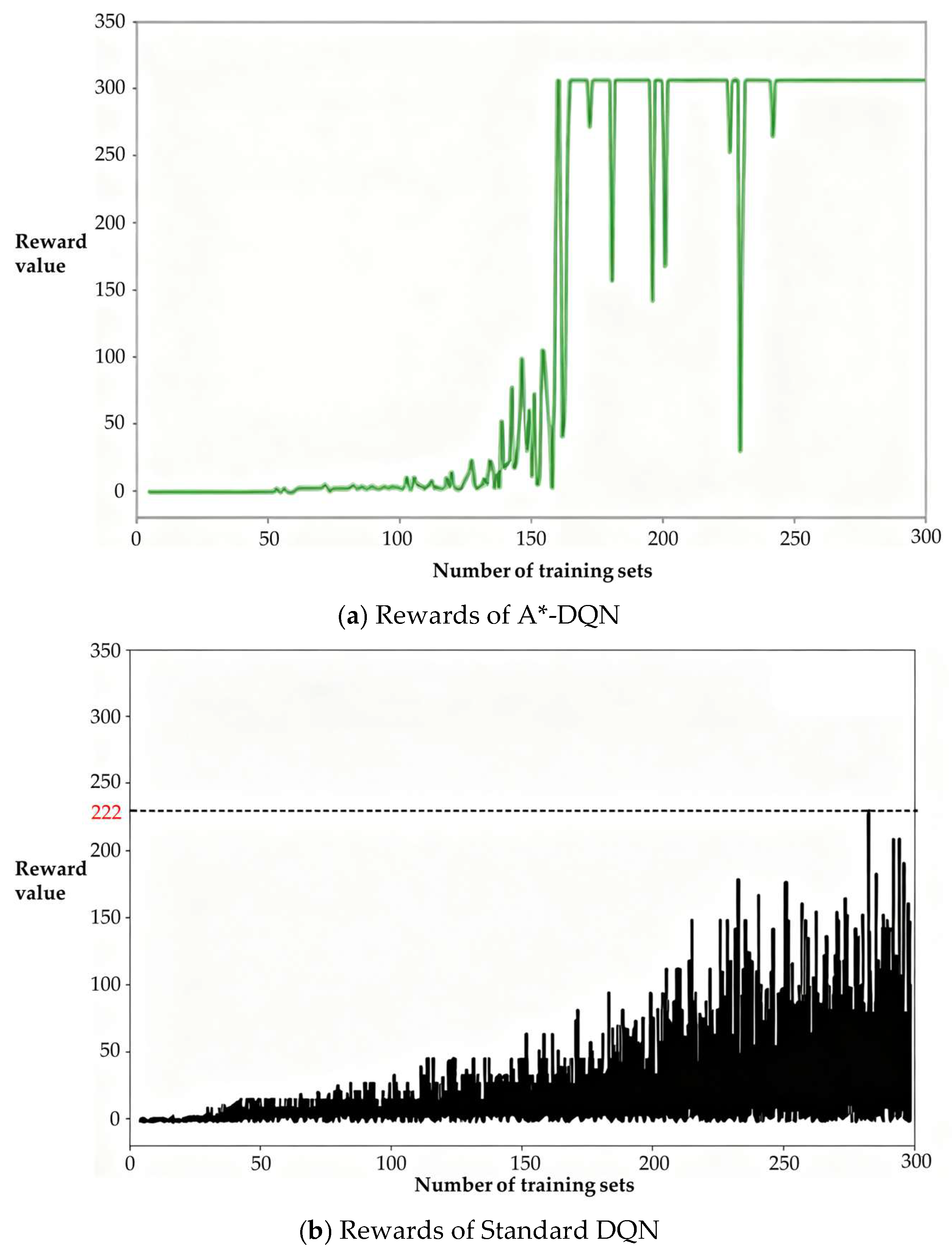

Deep Q-Networks (DQN) typically rely on random exploration in the initial stages, which, in high-dimensional spaces, can lead to inefficient and goal-deviating path behaviors. To improve decision-making efficiency, this study incorporates data from the A* algorithm to guide the decision process. At the early stage of training, the A*-guided DQN decision-making algorithm provides the agent with initial path planning recommendations based on the A* algorithm (see Figure 10).

The design of the A*-guided DQN algorithm is as follows:

(1) Action Selection

By dynamically selecting actions planned by the A* algorithm according to the current state in real time, the agent’s exploration efficiency at the early stage can be significantly improved.

(2) Experience Replay

The paths generated by the A* algorithm are used as experience data for the DQN, enabling the agent to learn effective strategies more rapidly.

(3) Dynamic Adjustment

During the training process, increasing reliance is placed on the strategies learned by the DQN itself.

To enable the transition to neural network-based decision-making, this study adjusts the frequency of neural network decisions through the exploration rate ε: ε is the probability that the neural network makes the decision, and 1 − ε is the probability of using the A* algorithm. The training is designed to allow the neural network to converge and make accurate decisions. Reliance on the A* algorithm is highest in the initial stage, and as training progresses, the proportion of neural network usage is gradually increased to ensure eventual convergence. During the first one-third of the training period, the exploration rate is fixed at 0.8, after which it is gradually increased to 1.
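The schedule described above can be sketched as follows. The text states only that the rate is held at 0.8 for the first third of training and then raised gradually to 1; the linear ramp used here is an assumption, and the function names are hypothetical.

```python
import random


def exploration_rate(step, total_steps, start=0.8, end=1.0):
    """Rate held at `start` for the first third, then ramped linearly to `end`."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    hold = total_steps / 3
    if step <= hold:
        return start
    fraction = min(1.0, (step - hold) / (total_steps - hold))
    return start + (end - start) * fraction


def choose_action(epsilon, dqn_action, a_star_action, rng):
    """Use the network's action with probability epsilon, else the A* action."""
    return dqn_action if rng.random() < epsilon else a_star_action


rng = random.Random(0)
eps = exploration_rate(step=200, total_steps=300)
action = choose_action(eps, dqn_action="up", a_star_action="right", rng=rng)
```

Once the rate reaches 1, `choose_action` always returns the network's action, so the A* guidance is phased out entirely by the end of training.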

Through A* planning guidance, decision fusion, and dynamic adjustment of policy weights, the path learning ability of the four-way shuttle in complex environments is effectively enhanced. The structure of the A*-guided DQN algorithm (A*-DQN) is shown in Figure 11.

The parameters of the current value network are updated by minimizing a loss function. Its terms are the learning rate, the current reward, the discount factor, the next state reached after executing the selected action, and the Q-value of the optimal action selected at that next state.

- (3)

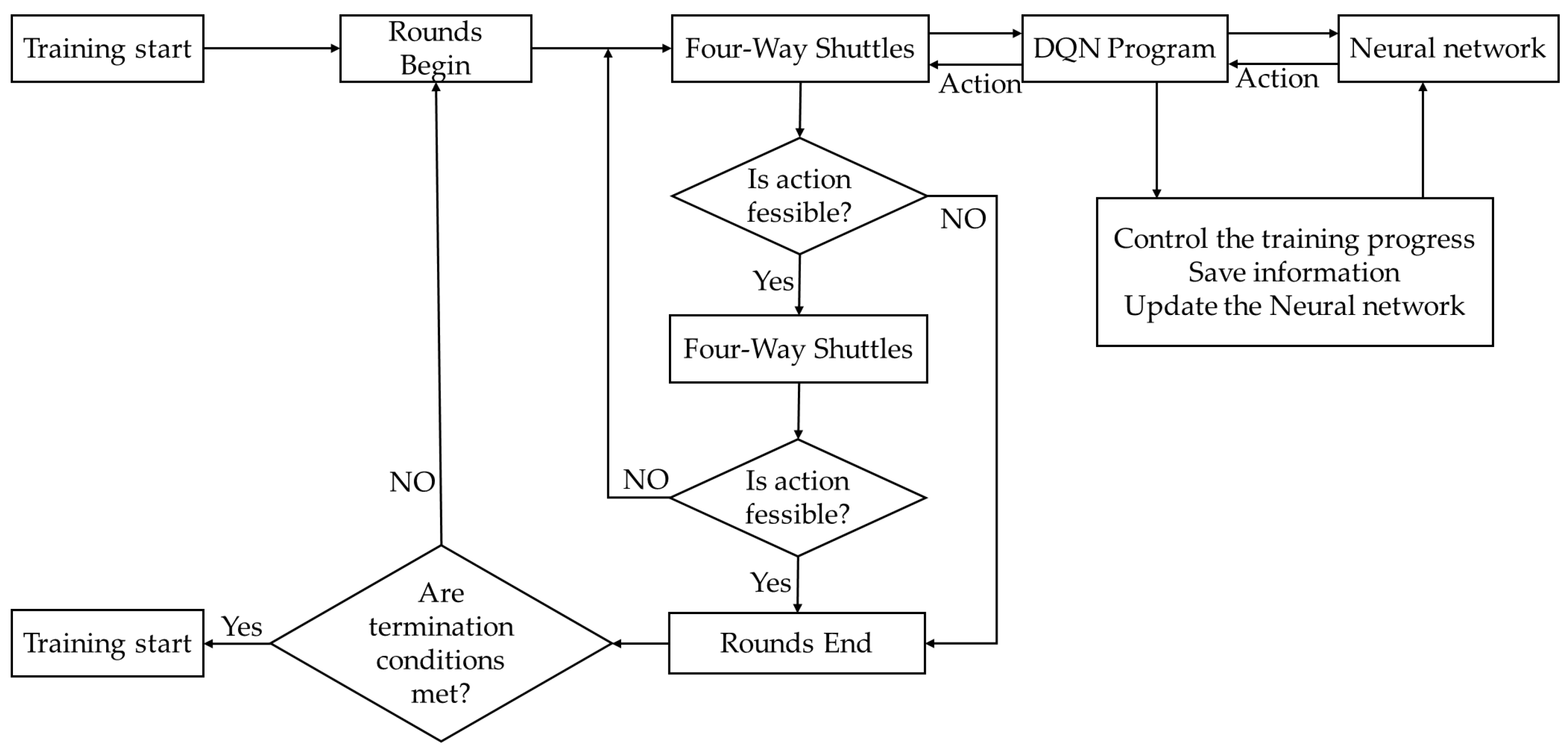

Training Process for Four-Way Shuttle

This study designs a training process tailored for the path planning task of the four-way shuttle, as illustrated in Figure 12.

The procedure of the A*-DQN algorithm is as follows. The agent acquires the current state from the environment and inputs it into the DQN network, which outputs the optimal action. The legality of the action is then determined: if the action is illegal, the episode terminates; otherwise, the action is executed. The system subsequently returns a reward and the next state, forming an experience tuple that is stored in the experience replay buffer. Once the buffer reaches its capacity, mini-batches are sampled to compute the loss function and update the Q-network parameters. At fixed intervals, the parameters of the current Q-network are synchronized to the target network to enhance training stability, and this process repeats until the termination condition is met. The algorithm uses the path generated by the A* algorithm as the initial guiding policy for DQN, thereby leveraging the efficient planning capability of A* to improve exploration efficiency. As training progresses, the proportion of DQN’s own policy usage is gradually increased, ultimately enabling convergence of the path planning policy and improving both the efficiency and accuracy of path planning.

This section focuses on the path planning problem of four-way shuttles in dense warehouse environments and proposes an A*-DQN path optimization algorithm that integrates A* heuristic search with deep reinforcement learning. The algorithm uses the A* path as the initial policy guiding signal to enhance exploration efficiency, and it constructs a four-dimensional state space and a staged reward mechanism, thereby significantly improving the learning efficiency of the model and the quality of the planned paths. At the same time, by incorporating information on the shuttle’s loading status, the model dynamically adjusts path constraints to ensure the feasibility and safety of path planning. Moreover, based on the grid map state space and the staged reward mechanism, the agent is guided to learn the optimal path planning policy, which transforms the path planning problem into a reinforcement learning problem and achieves deep integration of the model and algorithm.

In this section, the scheduling algorithm and path planning are tightly coupled and mutually reinforcing. In terms of path planning, the proposed A*-DQN algorithm introduces a path map switching mechanism by integrating the shuttle’s loading status information, thereby enabling adaptive adjustment of path constraints under different operating conditions. The A* algorithm provides initial path planning suggestions for DQN, accelerating the convergence process of DQN. This integrated approach not only improves the efficiency and accuracy of path planning but also significantly enhances the four-way shuttle’s path learning and obstacle avoidance capabilities in complex layouts and dynamic environments. Within the scheduling model, the results of task allocation provide the A*-DQN algorithm with the task sequence and target location information, enabling A*-DQN to dynamically adjust path planning accordingly. Through rational task allocation, the system reduces ineffective movements and waiting times of the four-way shuttles, while efficient path planning further minimizes path conflicts and detours. The synergistic effect of these two aspects achieves reduced energy consumption and improved operational efficiency. This integrated approach not only enhances the overall system performance but also improves the system’s adaptability and stability in dynamic environments, providing strong support for the intelligent upgrading of warehouse systems.