3.1. Overview

Given a pair of partially overlapping observations, an RGB image of width W and height H and a LiDAR point cloud of N points, the goal of I2P registration is to estimate the relative rigid transformation (a rotation and a translation) between the image and the point cloud. A natural and intuitive approach is to establish 2D–3D correspondences and estimate the relative pose by minimizing the reprojection error over them (Equation (1)), which is defined through the projection function from 3D space to the image plane and the intrinsic matrix of the camera. Assuming accurate correspondences are established, Equation (1) can be iteratively solved by EPnP with RANSAC or Bundle Adjustment [17, 41]. Therefore, the accuracy of the 2D–3D correspondences becomes a crucial factor in determining the performance of I2P registration.
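To make the reprojection objective concrete, the following is a minimal sketch of a pinhole projection and the summed squared reprojection error over a set of 2D–3D correspondences. It is an illustration under stated assumptions, not the paper's exact formulation: the names `project`, `reprojection_error`, `K`, `R_identity`, and `t_zero` are hypothetical, the pose is assumed to map LiDAR coordinates into the camera frame, and points behind the camera are simply skipped.

```python
def project(point, rotation, translation, intrinsics):
    """Project a 3D point to pixel coordinates with a pinhole camera model."""
    # Rigid transformation into the camera frame: p_cam = R * p + t
    p_cam = [sum(rotation[r][c] * point[c] for c in range(3)) + translation[r]
             for r in range(3)]
    if p_cam[2] <= 0:
        return None  # behind the camera, so not observable
    fx, fy = intrinsics[0][0], intrinsics[1][1]
    cx, cy = intrinsics[0][2], intrinsics[1][2]
    return (fx * p_cam[0] / p_cam[2] + cx, fy * p_cam[1] / p_cam[2] + cy)


def reprojection_error(correspondences, rotation, translation, intrinsics):
    """Sum of squared pixel distances over (pixel, point) correspondences."""
    total = 0.0
    for (u, v), point in correspondences:
        projected = project(point, rotation, translation, intrinsics)
        if projected is None:
            continue  # assumption: unobservable points do not contribute
        total += (projected[0] - u) ** 2 + (projected[1] - v) ** 2
    return total


# Hypothetical intrinsics and two synthetic correspondences.
K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
R_identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t_zero = [0.0, 0.0, 0.0]
matches = [((320.0, 240.0), (0.0, 0.0, 5.0)), ((420.0, 240.0), (1.0, 0.0, 5.0))]

error_at_truth = reprojection_error(matches, R_identity, t_zero, K)
error_shifted = reprojection_error(matches, R_identity, [0.1, 0.0, 0.0], K)
```

The error vanishes at the pose that generated the correspondences and grows as the pose is perturbed, which is the property a PnP solver exploits.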

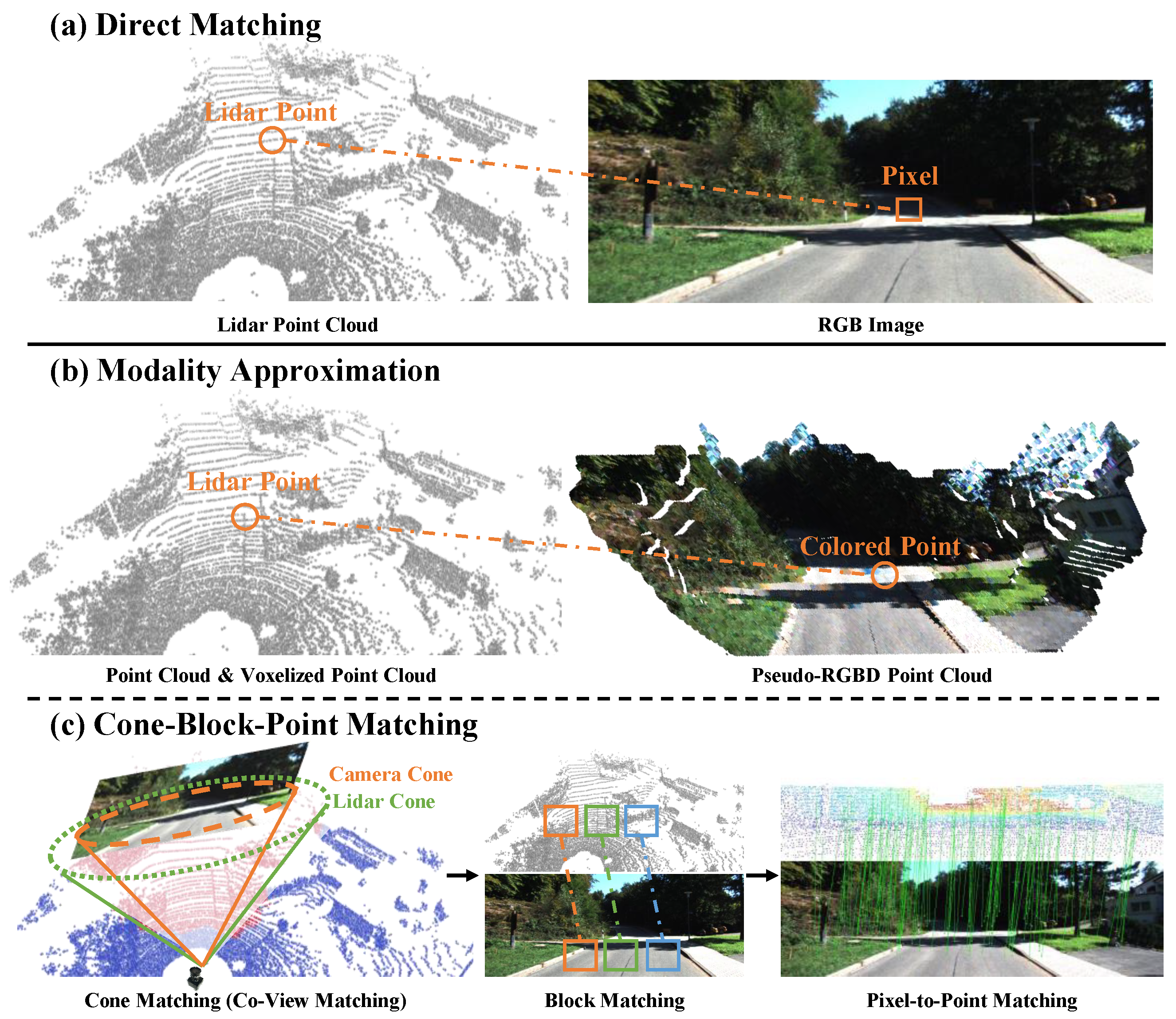

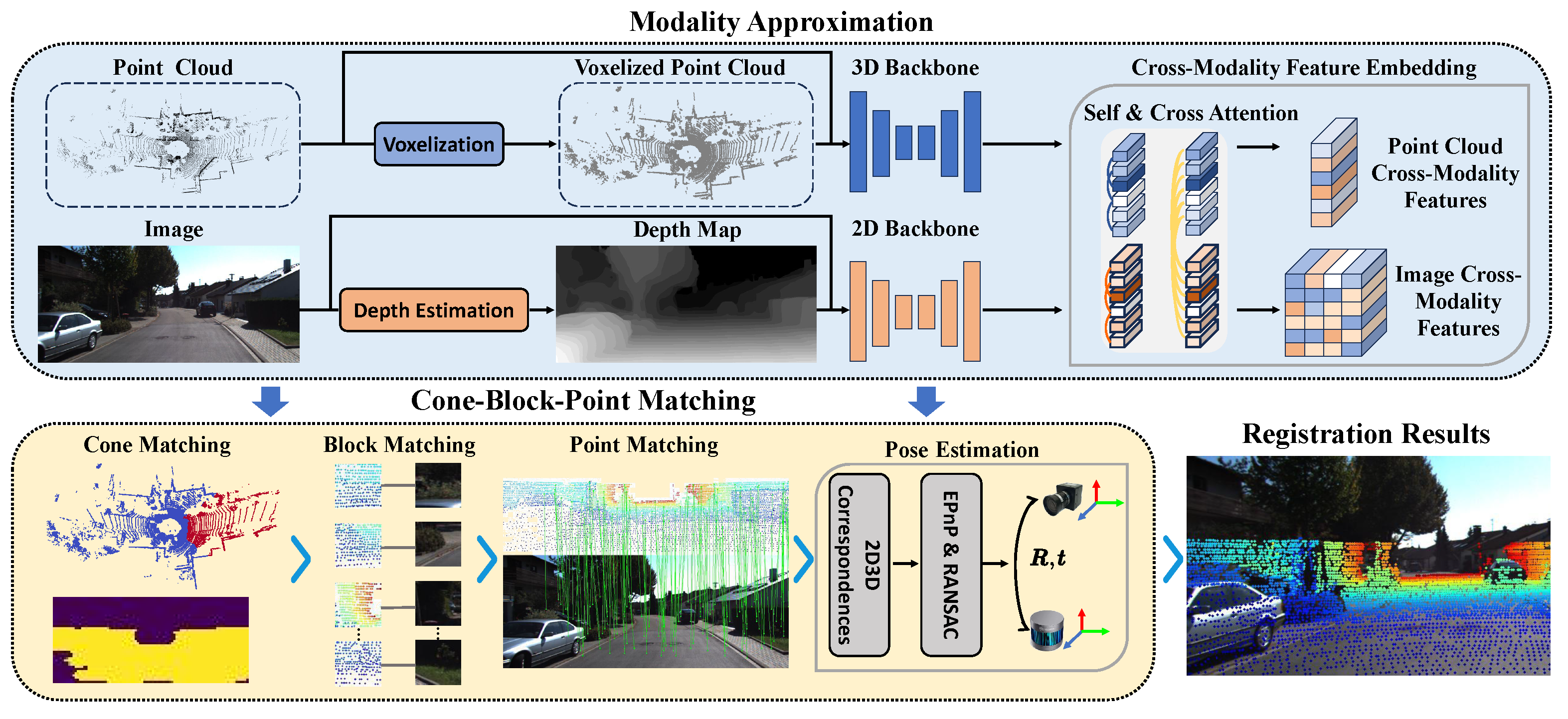

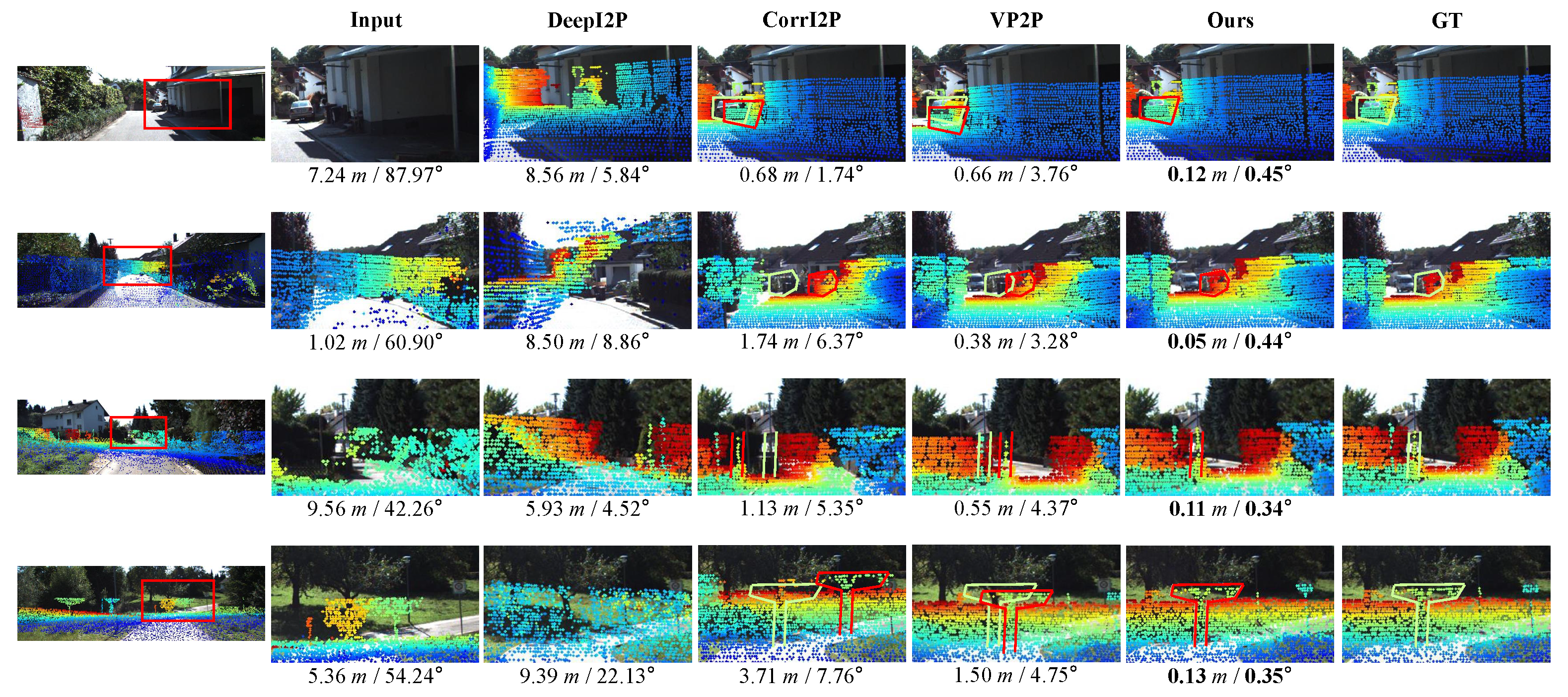

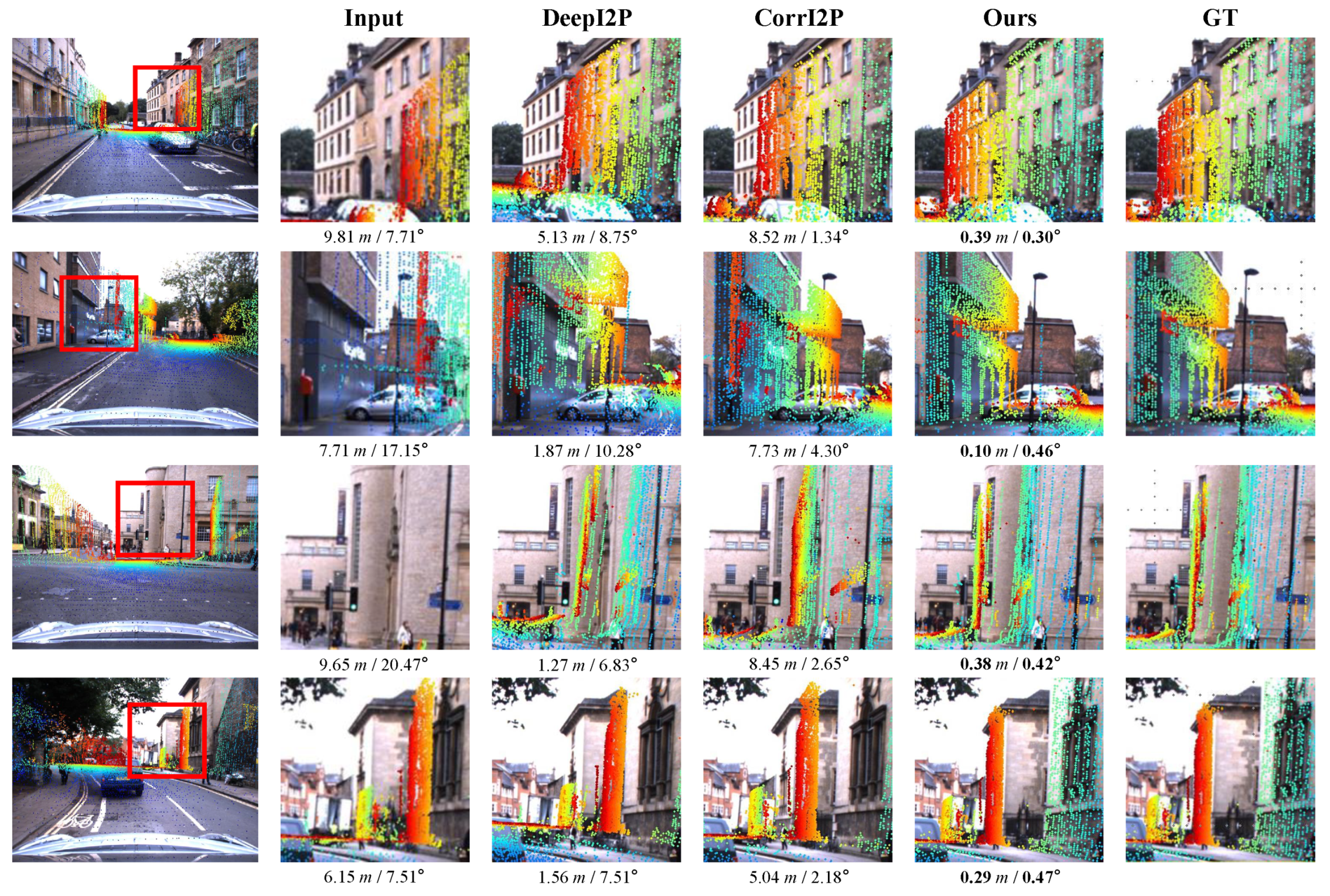

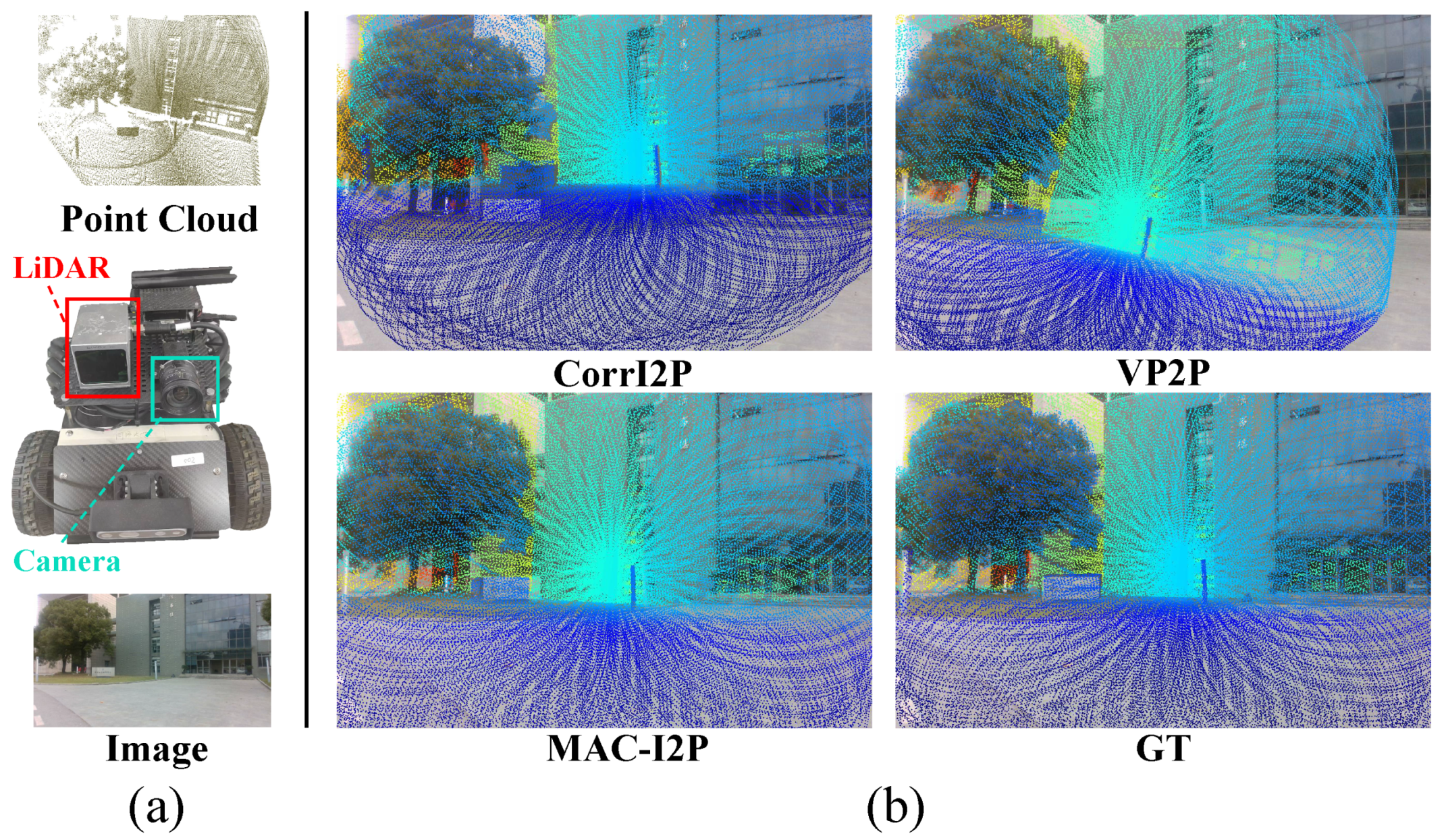

Figure 2 illustrates the overview of our MAC-I2P. To achieve accurate 2D–3D correspondences, MAC-I2P is designed under an “approximation–fusion–matching” architecture. It is composed of a modality approximation module and a cone–block–point matching strategy. The former uses image depth estimation and point cloud voxelization to align images and point clouds in terms of geometric structure and feature representation, respectively, and adopts cross-modal feature embedding to enhance feature repeatability. The latter establishes pixel-to-point correspondences by leveraging the aligned cross-modality features.

3.2. Modality Approximation

Images and point clouds exhibit complementary characteristics in scene representation, as they capture the texture and geometric structures of the scene, respectively. To fully utilize these complementary data, the relative pose between the image and the point cloud needs to be determined. In conditions where the LiDAR and camera cannot be fixed together (e.g., mounted on different robots), such complementarities become a hurdle to registration due to the modality discrepancies between images and point clouds.

To alleviate the modality discrepancies, existing I2P methods usually rely on feature fusion to align the feature spaces of images and point clouds. However, the misalignment in geometric structure between images and point clouds, which is often ignored, limits the learning of cross-modality features. Instead, our modality approximation module generates pseudo-RGBD images and voxelizes the point cloud before feature fusion. This module mitigates the modality discrepancies in both geometric structure and feature representation.

Pseudo-RGBD Images Generation. Directly estimating image depth and projecting point clouds onto the image plane are both viable approaches to recovering the geometric structure of the scene captured by the image. Compared to the latter, the former generates denser depth values, but it is correspondingly more challenging to implement. Fortunately, advances in monocular depth estimation have made this approach feasible, and it is introduced into I2P registration here for the first time. Based on the estimated depth, pseudo-RGBD images are generated. Because a depth estimation module that requires training would increase the network’s burden and could even degrade performance, an off-the-shelf depth estimation model is leveraged to generate the pseudo-RGBD images [42]. Pseudo-RGBD features are then extracted through a 2D backbone [18].

Figure 3 illustrates the similarity between image features and point clouds before and after depth estimation. The warmer the color, the higher the probability of a match with the point cloud. It can be seen that, with the introduction of depth maps, pseudo-RGBD images are more responsive to point clouds.
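As a rough illustration of what a pseudo-RGBD image can look like as a data structure, the sketch below appends an estimated depth value to every RGB pixel. It assumes simple channel-wise concatenation, which the text does not spell out, and it takes the depth map as a given input instead of calling any particular depth estimation model. The name `make_pseudo_rgbd` is hypothetical.

```python
def make_pseudo_rgbd(rgb_image, depth_map):
    """Append a depth channel to every RGB pixel.

    rgb_image: nested list (rows of (r, g, b) tuples).
    depth_map: nested list of the same shape holding one depth per pixel,
               e.g. the output of an off-the-shelf monocular depth model.
    """
    if len(rgb_image) != len(depth_map):
        raise ValueError("image and depth map must have the same height")
    rgbd = []
    for rgb_row, depth_row in zip(rgb_image, depth_map):
        if len(rgb_row) != len(depth_row):
            raise ValueError("image and depth map must have the same width")
        rgbd.append([(r, g, b, d) for (r, g, b), d in zip(rgb_row, depth_row)])
    return rgbd


# Tiny 2 x 2 example with made-up colors and depths.
rgb = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (10, 20, 30)]]
depth = [[1.5, 2.0], [3.25, 4.0]]
rgbd = make_pseudo_rgbd(rgb, depth)
```

Each pixel then carries four channels, so a 2D backbone with a four-channel input can consume appearance and estimated geometry together.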

Point Cloud Voxelization. Through grid partitioning and aggregation, local features of images can be easily obtained. In contrast, extracting local features from unorganized point clouds is relatively complex. To enhance the repeatability of cross-modality features, we voxelize point clouds to give them a grid-like data structure similar to that of images. In this way, local features of point clouds can be represented in a manner similar to image processing. Considering the information loss caused by voxelization, we design a 3D backbone with two branches, a point branch and a voxel branch.

The local and global features of the point cloud are obtained through the point branch [43]. Additionally, the local features of the voxelized point cloud are extracted by the voxel branch [44].

Figure 3 demonstrates that the introduction of the voxel branch gives the point cloud features stronger compatibility with the image, resulting in improved I2P registration performance.
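The grid partitioning idea behind voxelization can be sketched as follows. This is a generic centroid-based voxelization written for illustration. The voxel size, the use of centroids as the aggregated value, and the name `voxelize` are assumptions, not details taken from the voxel branch [44].

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size):
    """Group 3D points by integer voxel index and return each voxel's centroid."""
    buckets = defaultdict(list)
    for x, y, z in points:
        # floor (not int truncation) keeps negative coordinates in the right voxel
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    centroids = {}
    for key, members in buckets.items():
        count = len(members)
        centroids[key] = tuple(sum(p[axis] for p in members) / count
                               for axis in range(3))
    return centroids


cloud = [(0.1, 0.1, 0.1), (0.3, 0.3, 0.3), (1.2, 0.0, 0.0), (-0.1, 0.0, 0.0)]
voxels = voxelize(cloud, voxel_size=0.5)
```

After this step the point cloud is indexed by a regular grid, much like pixels in an image, at the cost of merging points that fall into the same voxel. That loss is the reason a separate point branch is kept.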

Cross-Modality Feature Embedding. To learn more discriminative cross-modality features, the features from the different branches need to be further aligned. Specifically, a symmetric attention-based module is leveraged to fuse them. The cross-modality features of the point cloud are obtained from a feature embedding function for point clouds [43], a set of feature weights, and the cross-modality features computed without the voxel-branch results.

In a similar way, the image cross-modality features are obtained from a feature embedding for images and the feature weights of point clouds.

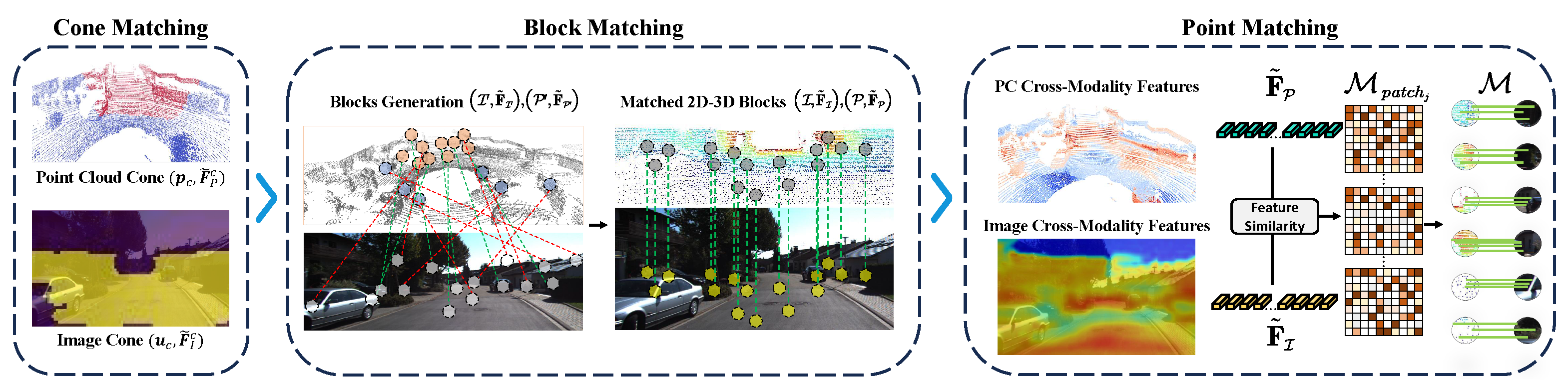

3.3. Cone–Block–Point Matching

After obtaining the cross-modality features of the image and the point cloud, the objective of 2D–3D matching is to establish accurate pixel-to-point correspondences for subsequent pose estimation. Although cross-modality features with stronger repeatability have been learned, inter-modality matching between images and point clouds remains non-trivial. On the one hand, the detection angle of a typical LiDAR is 360°, while the field of view of a camera is usually less than 180°. As a result, the overlapping observation region between the camera and the LiDAR is cone-shaped, and a significant portion of the 3D points cannot be observed by the camera. These invisible points are useless matching candidates and should be filtered out. On the other hand, because the two modalities have different data densities, establishing one-to-one correspondences between pixels and points is difficult. Therefore, to improve the matching quality, a cone–block–point matching strategy is proposed, as illustrated in Figure 4. It progressively refines the matching scale and relaxes strict matching criteria via multiple weak classifiers, leading to accurate pixel-to-point correspondences. First, cone matching detects the matching cone-shaped region between the camera and the LiDAR. Next, block matching picks out the matching blocks of 3D points and 2D pixels. Finally, pixel-to-point correspondences are established by point matching.

Cone Matching. Due to the sparsity of the point cloud, certain regions corresponding to pixels in the image may not be observed by the LiDAR. Additionally, with the incorporation of semantic and geometric features, regions that possess higher similarity in terms of matching are expected to be selected. Hence, it is essential to identify the matching cone-shaped regions for both the image and the point cloud. In practice, the task of determining the matching cone-shaped region is modeled as co-view classification for both points and pixels, and two co-view classifiers are designed, one for points and one for pixels. Considering that this task focuses on the global context, cross-modality features that integrate global characteristics are used as inputs to the two classifiers, which output the co-view scores of the points and of the pixels.

Based on these scores, the co-visible points and pixels, along with their cross-modality features, are identified through two thresholds, one per modality. Specifically, a point or pixel is considered to be within the co-view region if its co-view score is greater than the corresponding threshold.
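The thresholding step can be sketched in a few lines. The scores are taken as given (in the method they come from the co-view classifiers), and the threshold value of 0.5 and the name `select_co_visible` are hypothetical choices for illustration only.

```python
def select_co_visible(scores, features, threshold):
    """Keep the indices and features whose co-view score exceeds the threshold."""
    kept_indices = [i for i, score in enumerate(scores) if score > threshold]
    kept_features = [features[i] for i in kept_indices]
    return kept_indices, kept_features


# Made-up co-view scores and 2-D features for four points.
point_scores = [0.9, 0.2, 0.7, 0.5]
point_features = [[1.0, 0.0], [0.0, 1.0], [0.6, 0.8], [0.8, 0.6]]
point_ids, co_visible_point_features = select_co_visible(
    point_scores, point_features, threshold=0.5)
```

The same function can be applied to pixel scores with a separate threshold. The comparison is strict, matching the "greater than" criterion in the text, so the point scoring exactly 0.5 is discarded.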

Two-Dimensional–Three-Dimensional Block Matching. Once the cone matching is performed, it seems possible to establish 2D–3D matches. However, it is important to note that point clouds have absolute scales, while images suffer from scale ambiguity due to perspective projection. This results in the size of the same object appearing to change in the image as the camera moves, while it remains constant in the point cloud. Due to this serious misalignment, the pixel-to-point matching is not a one-to-one correspondence. To overcome such matching ambiguity, an additional step is introduced between cone matching and point matching: generating the matched blocks of points and pixels.

Image blocks and their features can be obtained through grid partitioning. The generation of point blocks can be modeled as a classification problem. Specifically, a block classifier is constructed for point clouds using the coordinates of image blocks as labels. With the help of this classifier, the classification results can be obtained, in which each entry represents the likelihood of a point matching each image block. The higher the value, the greater the probability of a match. The image block matched by each point is therefore taken as the block with the highest likelihood for that point.

In this way, the point blocks that match the image blocks can be determined. Finally, the point blocks are represented together with their features.
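A minimal sketch of turning per-point block likelihoods into point blocks is given below. It assumes that each point is assigned to its single highest-scoring image block, which is how the text reads; the function names and the score values are hypothetical.

```python
from collections import defaultdict


def assign_points_to_blocks(block_scores):
    """Return, for each point, the index of its highest-scoring image block."""
    return [max(range(len(row)), key=row.__getitem__) for row in block_scores]


def group_into_point_blocks(assignments):
    """Collect the indices of the points assigned to each image block."""
    blocks = defaultdict(list)
    for point_index, block_index in enumerate(assignments):
        blocks[block_index].append(point_index)
    return dict(blocks)


# Rows are points, columns are image blocks (made-up likelihoods).
scores = [[0.1, 0.7, 0.2],
          [0.6, 0.3, 0.1],
          [0.2, 0.5, 0.3],
          [0.05, 0.05, 0.9]]
assignments = assign_points_to_blocks(scores)
point_blocks = group_into_point_blocks(assignments)
```

Points that share an image block end up in the same point block, so later matching only compares a pixel with the points of its own block.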

Dense Pixel-to-Point Correspondences. Cone matching and block matching yield matched point blocks and image blocks, as well as co-visible points and pixels. To achieve more accurate pose estimation, pixel-to-point matching still needs to be established. To improve the matching quality, only the points and pixels that are located in the co-view region and whose blocks are matched are selected, together with their features. The selected points are the intersection between the co-visible points and the matched point blocks and can be computed by Equation (13), where V is the number of point blocks. In a similar way, the selected pixels can be obtained by Equation (14), where K is the number of matched image blocks.

For any pair of a matched point block and image block, the similarity between a point and a pixel is defined as the cosine distance between their cross-modality features. Next, the pixel-to-point correspondences of the block pair can be estimated based on this feature similarity, which has one entry per pixel–point pair in the two blocks. The matching between pixels and point clouds is not strictly one-to-one; that is, a pixel may be matched to multiple points. This kind of ambiguous matching can mislead pose optimization. To avoid the ambiguous matching caused by the imbalance in the number of pixels and points, the correspondences are determined by finding the point with the largest similarity for each pixel. The final 2D–3D correspondences are then obtained by integrating the correspondences of all matched blocks.

With these correspondences and Equation (1), a series of projection equations can be constructed, thereby modeling the I2P registration as a PnP problem. Subsequently, EPnP with RANSAC can be used to optimize the pose iteratively. To provide a more concise and clear explanation of MAC-I2P’s pose estimation process, the forward inference process of MAC-I2P is presented in Algorithm 1.

Algorithm 1: MAC-I2P inference process

3.4. Loss Function

Our MAC-I2P is trained in a metric learning paradigm. The model is expected to perform cone matching, block matching, and pixel–point similarity estimation simultaneously. Therefore, we design a joint loss function that consists of the cone matching loss, the block matching loss, and the point matching loss.

Cone Matching Loss. After the co-view scores of the points and pixels are obtained, the co-view region between the image and the point cloud can be determined from the ground truth pose and the intrinsic matrix. The points and pixels within the co-view region are forced to have higher scores, and those outside it lower scores. To reduce the computational requirements, H pairs of points and pixels are sampled from the co-view region together with their scores. In addition, H pairs of pixels and points in the non-overlapping region are collected together with their scores. Inspired by CorrI2P [12], the cone matching loss is defined over these sampled scores.

Block Matching Loss. The block matching between the point cloud and the image blocks is formulated as a multi-class classification problem. Based on the image scaling factor s, the intrinsic matrix, and the ground truth pose, the matching label for each point can be determined by projecting the 3D points onto the image plane (Equation (21)). For brevity, the conversions between homogeneous and non-homogeneous coordinates in Equation (21) are omitted, and only the first two rows of the projected vector are taken. After the labels are obtained, the loss function for this multi-class classification problem is defined as the cross-entropy over the logits of classifying each point to each image block.
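The label construction and the cross-entropy can be sketched as below. Several details are assumptions made for illustration: the point is taken to be already expressed in the camera frame (the ground truth pose has been applied), the scaling factor is applied to pixel coordinates as a resize factor, blocks are square and indexed row by row, and the point projects inside the image with positive depth. All names are hypothetical.

```python
import math


def block_label(point_cam, intrinsics, scale, block_size, blocks_per_row):
    """Index of the image block that a camera-frame 3D point projects into."""
    x, y, z = point_cam
    # Pinhole projection followed by rescaling to the resized image.
    u = scale * (intrinsics[0][0] * x / z + intrinsics[0][2])
    v = scale * (intrinsics[1][1] * y / z + intrinsics[1][2])
    col = int(u // block_size)
    row = int(v // block_size)
    return row * blocks_per_row + col


def cross_entropy(logits, label):
    """Numerically stable softmax cross-entropy for a single point."""
    peak = max(logits)
    log_sum = peak + math.log(sum(math.exp(l - peak) for l in logits))
    return log_sum - logits[label]


# A 640 x 480 image resized by 0.25 to 160 x 120, split into 40-pixel blocks.
K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
label = block_label((0.0, 0.0, 5.0), K, scale=0.25, block_size=40, blocks_per_row=4)
uniform_loss = cross_entropy([0.0, 0.0, 0.0, 0.0], 0)
confident_loss = cross_entropy([5.0, 0.0, 0.0, 0.0], 0)
```

A point on the optical axis lands at the image center, which here falls in the block at row 1, column 2. The loss decreases as the logit of the labeled block grows relative to the others.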

Point Matching Loss. We aim for the feature distance between matching pixel–point pairs to be significantly lower than that of non-matching pairs. Based on the matching ground truth obtained by Equation (21), a contrastive loss or a triplet loss could naturally be used to supervise the model. To further enhance the discriminative capacity of the model, we instead opt for circle loss [45], which possesses a circular decision boundary. The matching loss is computed from the cosine distances of matched (positive) point–pixel pairs and of unmatched (negative) pairs, with a positive margin and a negative margin for better similarity separation and with separate weighting factors for positive and negative pairs.
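For intuition, a distance-based variant of circle loss can be sketched as follows. This is an adaptation written for illustration, not the exact loss of the paper: the margin values, the scale factor, and the use of the same margins as both the decision margin and the optimum in the self-paced weights are assumptions, and the function names are hypothetical.

```python
import math


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v)))."""
    peak = max(values)
    return peak + math.log(sum(math.exp(v - peak) for v in values))


def circle_loss(pos_dists, neg_dists, pos_margin=0.2, neg_margin=1.8, scale=10.0):
    """Circle-style loss on cosine distances of positive and negative pairs."""
    # Self-paced weights: pairs that already satisfy their margin get weight 0.
    pos_terms = [scale * max(0.0, d - pos_margin) * (d - pos_margin)
                 for d in pos_dists]
    neg_terms = [scale * max(0.0, neg_margin - d) * (neg_margin - d)
                 for d in neg_dists]
    logit = log_sum_exp(pos_terms) + log_sum_exp(neg_terms)
    # Stable softplus: log(1 + exp(logit))
    return max(logit, 0.0) + math.log1p(math.exp(-abs(logit)))


well_separated = circle_loss(pos_dists=[0.1], neg_dists=[1.9])
poorly_separated = circle_loss(pos_dists=[1.0], neg_dists=[0.5])
```

Pairs far from their margin receive larger weights and therefore dominate the gradient, which is the behavior that distinguishes circle loss from a plain contrastive or triplet loss.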

Total Loss. After the cone matching loss, the block matching loss, and the point matching loss are obtained, the joint loss is defined as their weighted sum, controlled by two weight coefficients. Based on this joint loss, our MAC-I2P can simultaneously perform co-view region detection, the selection of matched 2D–3D blocks, and point-to-pixel matching.