1. Introduction

Text-to-SQL (Text2SQL) is a technology that translates natural language questions into structured query language (SQL) queries. The core objective is to simplify database access for users who lack specialized technical expertise, enabling them to retrieve and analyze structured data using plain language. With the rapid growth of data volume and complexity across many industries, Text2SQL plays an increasingly important role in connecting natural language queries with structured databases. This method is widely used in applications such as data analysis and business intelligence. For instance, in the power domain, engineers can utilize Text2SQL to access supply-chain records and assess the operational status of grid equipment. An example query is, “List the projects with the highest failure rate in service in 2024 for the Nanjing Power Supply Company,” which supports the identification of supply-chain problems and informs the optimization of resource allocation.

Early Text2SQL systems were based on rule matching and template filling, but these approaches proved insufficient for complex queries. Subsequent developments introduced deep learning techniques, with encoder–decoder architectures [

1] improving the mapping from natural language to SQL. For example, Seq2SQL breaks down SQL queries into aggregation operations, selected columns, and conditions in the WHERE clause and uses reinforcement learning to optimize the ordering of these conditions [

2]. SQLNet adopts a slot-filling mechanism to explicitly capture dependencies between SELECT and WHERE clauses [

3]. TypeSQL builds on this by adding type annotations, which enhance the system’s ability to recognize rare entities and numerical information [

4]. In recent years, the notable capabilities of LLMs [

5] have accelerated the adoption of LLM-based approaches in Text2SQL research. These methods generally fall into two categories. The first category leverages the in-context learning (ICL) capabilities of LLMs by designing prompts that guide SQL generation, exemplified by zero-shot inference with models such as ChatGPT. The second category focuses on fine-tuning open-source LLMs such as LLaMA [

6] and ChatGLM [

7] on standard datasets like Spider and WikiSQL to improve SQL-generation performance. Representative systems using these strategies include DB-GPT-Hub [

8] and Tool-SQL [

9].

Despite substantial advances in Text2SQL technology in recent years, two major challenges remain for practical deployment in specific domains. The first challenge involves a barrier posed by specialized domain knowledge. Industries such as healthcare and power systems rely heavily on technical terminology. As a result, models must possess both deep domain expertise and precise semantic understanding to accurately interpret user intent and the underlying data structures. For example, in the healthcare domain, the correct interpretation of International Classification of Diseases (ICD) codes, drug names, and diagnostic terms is essential. Similarly, in the power domain, correctly processing equipment identifiers and grid-topology information also requires substantial domain knowledge. However, high-quality training data in these fields usually needs to be annotated by domain experts, and acquiring such data is both expensive and subject to strict privacy constraints. This data scarcity limits the ability to perform domain-specific pretraining for general-purpose language models. As a result, such models often fail to capture domain-specific semantics and structural features, which may cause SQL statements to deviate from user intent and yield inaccurate query results.

The second core challenge concerns the consistency between generated SQL and domain knowledge. In real-world applications, syntactic correctness alone is insufficient. Generated SQL queries must also strictly conform to domain-specific business rules and data constraints, ensuring strong alignment with expert knowledge. Nevertheless, current LLMs often exhibit significant “domain hallucination”. They usually make plausible but inaccurate domain-specific content when producing SQL. For instance, these models may depend on generalized knowledge from their training data instead of the actual schema, leading to the introduction of nonexistent fields or table relationships or to the omission of enterprise-specific data-encoding conventions. Furthermore, even if the generated SQL is syntactically correct, the underlying query logic may conflict with actual business rules, resulting in subtle but potentially serious logical errors. For example, in the finance domain, a model might confuse the rules for calculating “account balance” and “available credit,” leading to fundamentally incorrect results.

To address the challenges, we propose Dynamic Knowledge Adaptation for Domain-Specific Text-to-SQL (DKASQL), a large language model-based approach that integrates dynamic knowledge validation for domain-specific Text2SQL tasks. As research on domain-specific Text2SQL is still in its early stages, this paper first clarifies the task definition for domain-specific Text2SQL and then presents the DKASQL framework in detail.

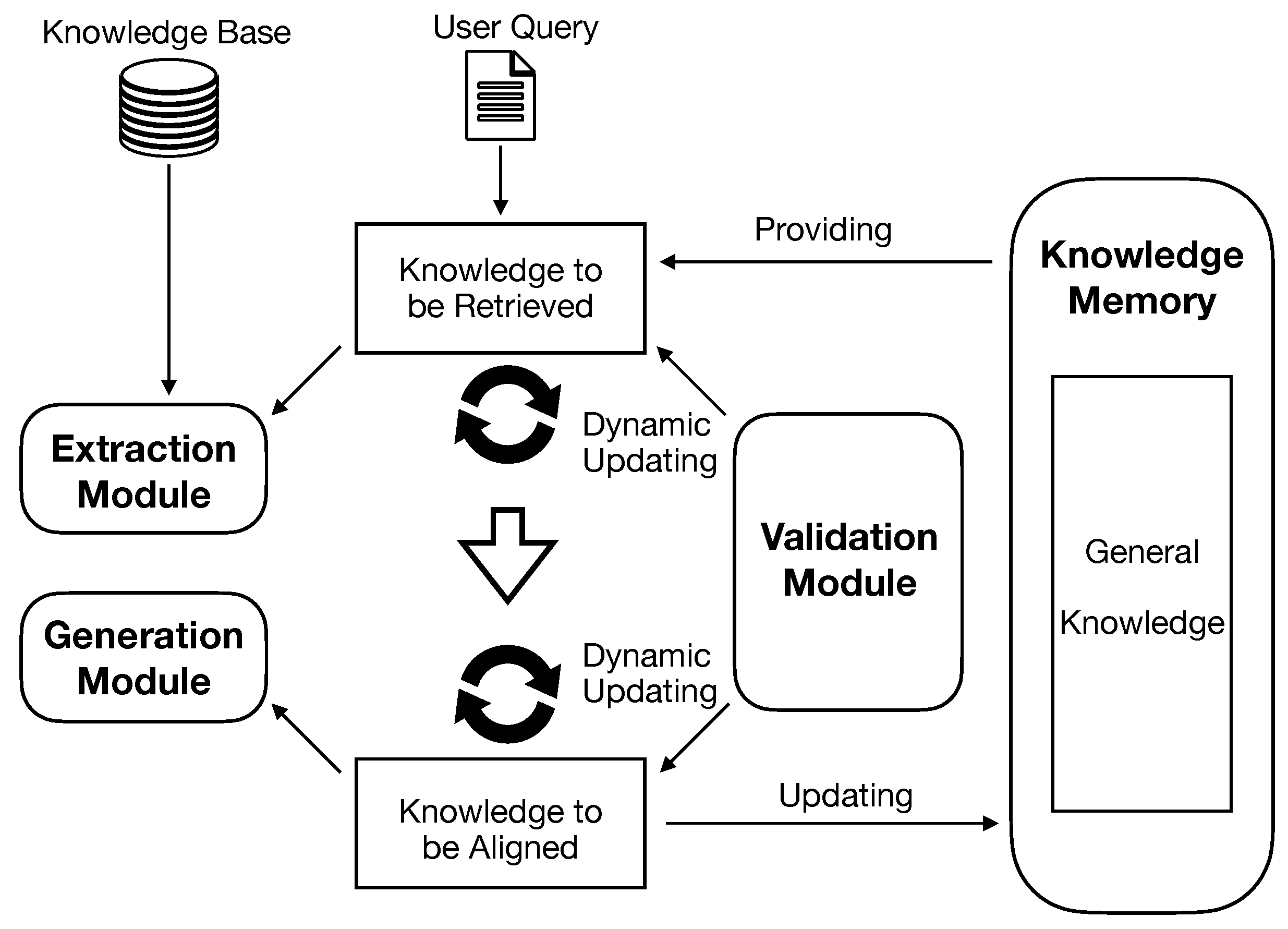

The DKASQL method is composed of three primary modules: an extraction module, a generation module, and a core verification module. The extraction module extracts relevant table structures, field semantics, and domain knowledge bases based on the user’s query, which helps the model understand specialized terminology and data structures. Extraction can mitigate the barrier of domain knowledge and provide a semantic foundation for SQL generation. The generation module takes the user’s natural language question, along with the retrieved domain knowledge, and generates SQL queries that are both syntactically correct and consistent with business logic.

The verification module serves as a quality gatekeeper, playing a critical role in ensuring that the generated results conform to domain knowledge. It fulfills two main functions: (1) verifying the accuracy and completeness of the knowledge retrieved by the extraction module, and (2) evaluating whether the SQL output by the generation module satisfies the requirements for syntax, logic, and consistency with domain knowledge. If the verification module detects issues in extraction or generation, it analyzes the source of errors and dynamically corrects the input to the relevant modules. This forms an iterative “extraction–verification” and “generation–verification” loop, enabling dynamic adaptation and continuous refinement of domain knowledge, which ultimately enhances the accuracy and reliability of SQL generation.

In addition, a knowledge memory module is designed to store reusable knowledge identified during execution, such as typical field formats or common date-field names. This module also supports dynamic updating: after each sample is processed, it automatically identifies and records reusable domain knowledge, reducing redundant retrievals and iterations of the same information, thereby improving overall inference efficiency.

Figure 1 illustrates the DKASQL workflow. Upon receiving a natural language query, the system first engages in a “extraction–verification” loop to dynamically update the domain knowledge to be retrieved and ensure that all relevant information is available. Once knowledge extraction is complete, the system enters the “execution–verification” phase, dynamically aligning the generated SQL with domain knowledge. After processing each sample, the knowledge memory module determines whether any domain knowledge used is generalizable and, if so, adds it to the knowledge memory for future use.

To validate the effectiveness of the proposed framework, we conduct experiments on the open-source multi-domain dataset BIRD [

10] and the ElecSQL dataset for power-grid supply chains. Experimental results show that DKASQL effectively addresses domain-specific Text2SQL challenges, achieving state-of-the-art performance on both datasets. Notably, DKASQL attains comparable or even superior results to much larger models such as GPT-4o mini and DeepSeek-V3, even when using smaller backbone LLMs (7B, 32B parameters). For example, on the ElecSQL dataset, DKASQL improves the ESR by up to +26.9% and the RA by up to +8.8% compared to GPT-4o mini while using smaller backbone models. When built on Qwen2.5-14B, DKASQL achieves a 96.15% ESR and a 15.38% RA, surpassing GPT-4o mini (75.96% ESR, 9.62% RA) and DeepSeek-V3 (71.15% ESR, 13.46% RA). These results highlight the effectiveness of dynamic knowledge adaptation and confirm the potential of DKASQL for domain-specific Text2SQL applications.

The key contributions of our framework are summarized as follows:

We define the task of domain-specific text-to-SQL and analyze the unique challenges it poses, including specialized terminology, data scarcity, and the need for strict alignment with domain-specific business rules.

We propose DKASQL, a novel framework that integrates extraction, generation, and verification modules, enabling dynamic adaptation of domain knowledge during SQL generation.

We design a knowledge memory module that automatically stores reusable domain knowledge, reducing redundant retrievals and improving overall inference efficiency.

We conduct extensive experiments on the BIRD and ElecSQL datasets, demonstrating that DKASQL achieves state-of-the-art performance and even outperforms larger models such as GPT-4o mini and DeepSeek-V3 when using smaller backbone LLMs.

The remainder of this paper is organized as follows.

Section 2 reviews related works.

Section 3 presents the formal definition of the task.

Section 4 describes the DKASQL framework in detail. The experimental settings and results are reported in

Section 5 and

Section 6, respectively. Finally,

Section 7 concludes this paper.

2. Related Works

Early Text2SQL research primarily relied on rule-based approaches, parsing user queries based on syntactic structures to generate corresponding SQL statements [

11]. With the rise of encoder–decoder architectures, researchers began to frame the Text2SQL task as a form of neural machine translation (NMT), drawing on techniques developed in the machine translation community [

12]. For example, many studies leveraged graph neural networks, sequential neural networks, and pretrained Transformer models to encode both user input and database schemas [

13,

14]. They then utilized decoding strategies such as slot filling [

3], graph neural decoding [

15], and autoregressive methods [

12] to process these encoded representations and ultimately generate SQL queries.

With the widespread adoption of LLMs, the research paradigm for Text2SQL has shifted significantly. Owing to their strong performance, LLMs have surpassed earlier methods and become the foundation of current Text2SQL systems. To adapt LLMs for Text2SQL, researchers have primarily adopted two strategies: supervised fine-tuning (SFT) and prompt engineering. Supervised fine-tuning involves training relatively lightweight open-source models on datasets such as Spider and WikiSQL, which enables efficient SQL generation with modest resource requirements [

16,

17]. Prompt engineering, on the other hand, seeks to fully utilize the capabilities of large closed-source models (such as GPT-4 [

18]) by carefully designing and optimizing prompts [

19]. These models have demonstrated remarkable generalization abilities and follow input instructions with high fidelity. Initial work in prompt-based Text2SQL focused on constructing chain-of-thought (CoT) and in-context learning (ICL) mechanisms [

20]. With the improvement of LLMs, new techniques such as self-correction [

21,

22], self-consistency [

23,

24,

25], and multi-agent collaboration [

26] have been introduced, effectively mitigating output instability and further enhancing overall Text2SQL performance.

However, both supervised fine-tuning (SFT) and traditional NMT approaches rely heavily on large, high-quality labeled datasets. In real-world domain-specific scenarios, this data is rarely public or available for training. This lack of data limits the models’ ability to understand unique business terminology, acronyms, and the specific data structures of a given domain. Moreover, prompt engineering with large closed-source LLMs can lead to schema hallucination, where the model invents table or column names not present in the database. This happens because the model, lacking true domain knowledge, fills in missing information based on its general training data, resulting in syntactically correct but functionally incorrect SQL queries that fail to execute. In contrast, DKASQL directly tackles these limitations with two key innovations that fundamentally change the approach to Text2SQL in domain settings. First, DKASQL introduces a dual-cycle validation process. The extraction–verification cycle proactively checks if the retrieved schema items and rules are correct and comprehensive for the user query. The generation–verification cycle then acts as a quality gate, either rejecting or repairing generated SQL queries that conflict with the established business rules and schema. DKASQL also adds a knowledge memory that accumulates reusable patterns, such as field formats and code lists. This memory acts as a dynamic cache, reducing the need for repeated context retrieval.

4. Dynamic Knowledge Adaptation for Domain-Specific Text-to-SQL

To address the challenges of domain-specific text-to-SQL, we propose Dynamic Knowledge Adaptation for Domain-Specific Text-to-SQL (DKASQL), a dynamic knowledge adaptation framework designed to enhance both the model’s comprehension of domain-specific knowledge and the execution reliability of generated SQL queries. DKASQL comprises three main modules: extraction, generation, and verification. The framework operates through iterative “extraction–verification” and “generation–verification” cycles, dynamically refining and updating domain knowledge throughout the process. Furthermore, DKASQL introduces an innovative knowledge memory mechanism to improve computational efficiency by storing and reusing generalizable domain knowledge. In the following section, we first introduce the overall structure of DKASQL and then provide details on each module.

4.1. Overall Structure

DKASQL starts with an extraction module, which gathers pertinent information—including table schemas, field semantics, and constraints—from both the database schema and the domain knowledge base. The verification module then evaluates the sufficiency of the extracted information against the knowledge memory. If deficiencies are identified, the system incrementally supplements missing knowledge until all requirements for SQL generation are fulfilled. After validating the necessary domain knowledge, the generation module synthesizes an initial SQL query based on the user’s input query and the extracted information. The verification module, equipped with SQL execution capabilities, assesses the generated query for semantic correctness and consistency with business rules by executing it against the relevant database. If errors are detected, the system performs root-cause analysis, updates the knowledge base as needed, and iteratively refines the SQL query.

Upon completion of each task, DKASQL assesses whether any of the knowledge components used are generalizable. Reusable knowledge is stored in the knowledge memory module, which reduces redundant extraction and verification in future tasks and further improves overall efficiency. DKASQL provides several advantages over existing approaches. First, dynamic knowledge validation leads to higher accuracy and improved domain adaptation, while the self-correcting process decreases reliance on large amounts of annotated data. Second, by decoupling the knowledge base from the model parameters, DKASQL supports seamless updates and maintains robust performance even in low-resource settings. Finally, the cyclical “extraction–verification–generation” process establishes a closed-loop system that continuously enhances both the quality of SQL generation and the relevance of the domain knowledge base.

4.2. Extraction Module

Unlike previous text-to-SQL approaches that mainly retrieve similar SQL examples to guide generation, DKASQL’s extraction module is tailored to identify and extract task-relevant domain knowledge, such as definitions of business metrics. The accuracy and efficiency of this module are critical, as they directly influence the quality of subsequent SQL generation.

The retrieval process consists of several key steps. First, the target knowledge for retrieval is initialized with the user’s natural language query. Next, the module conducts preliminary parsing to extract keywords and entities and determine the query intent. Using these extracted elements, the system then searches external knowledge sources to obtain relevant information. To enhance robustness, DKASQL adopts a hybrid retrieval strategy that combines vector similarity retrieval (based on BGE-m3 embeddings [

27]) with keyword-based matching. Vector similarity ensures semantic alignment between queries and knowledge items, while keyword matching guarantees precise coverage of domain-specific terminology. Throughout this process, the target knowledge for retrieval is dynamically updated by the verification module to ensure alignment with the task requirements. Formally, the optimization objective of the extraction module is defined as follows:

where

represents the output of the extraction module,

denotes an embedding-based semantic similarity function,

K is the domain knowledge base,

Q is the natural language query,

S is the database schema,

is the feedback from the verification module, and

is the similarity threshold.

4.3. Generation Module

The generation module transforms natural language queries into executable SQL statements. This process begins with the integration of multiple inputs, including the natural language query (Q), the retrieved domain knowledge (), and the general knowledge provided by the knowledge memory module. Based on these inputs, the module generates preliminary SQL queries that comply with SQL syntax rules and the constraints imposed by the database schema (S).

The knowledge utilized in the generation process is initially derived from the database schema and domain knowledge obtained via the extraction module and is subsequently refined through feedback from the verification module. The generation process can be formally represented as follows:

where

indicates the iteratively updated knowledge memory at the

n-th iteration,

denotes the

t-th token in the generated SQL statement

, and

is the optimized objective function. Prompt engineering is employed within the generation module to guide SQL construction. Specific prompt templates are designed to effectively integrate the relevant context and constraints into the generation process. The prompt used in the generation module is as follows:

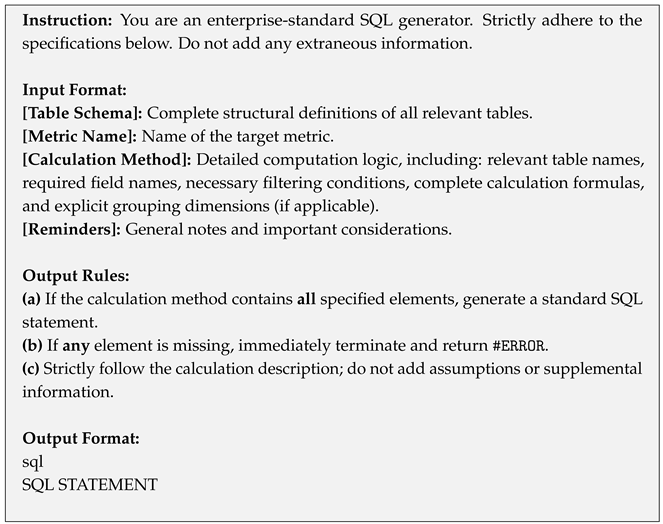

![Applsci 15 11121 i001 Applsci 15 11121 i001]()

Here, the [Reminders] correspond to the common knowledge stored in the knowledge memory module. Details of the knowledge memory module are provided in

Section 4.5.

4.4. Verification Module

The verification module plays a central role in ensuring the accuracy and reliability of the text-to-SQL process by validating and evaluating the outputs of the other modules. Its core functionality consists of two primary components: extraction–verification and generation–verification. In the extraction–verification process, the module dynamically updates the knowledge to be retrieved, thereby ensuring the completeness and correctness of the information provided by the extraction module. In the generation–verification process, it dynamically aligns and audits the knowledge used during SQL generation, rigorously evaluating the quality of the SQL statements produced by the generation module. Through these mechanisms, the verification module systematically identifies potential errors and offers corrections to the domain knowledge required by the other modules, thus driving continual optimization of the entire framework.

4.4.1. Extraction–Verification Process

To assess the completeness and correctness of the information extracted by the extraction module, the verification module first acquires the database schema and relevant external knowledge retrieved from the knowledge base. It then analyzes this information against the requirements of the natural language query. The evaluation is conducted along two dimensions: completeness, which focuses on identifying missing content, and correctness, which focuses on detecting and filtering out erroneous or irrelevant information. Following this analysis, the verification module updates the knowledge to be retrieved, enabling the extraction module to supplement missing information in subsequent iterations and avoid the recurrence of incorrect knowledge. This process is formalized as follows:

where

denotes the retrieved knowledge structure,

represents the verification feedback, and

Q,

K, and

S refer to the natural language query, the knowledge base, and the database schema, respectively.

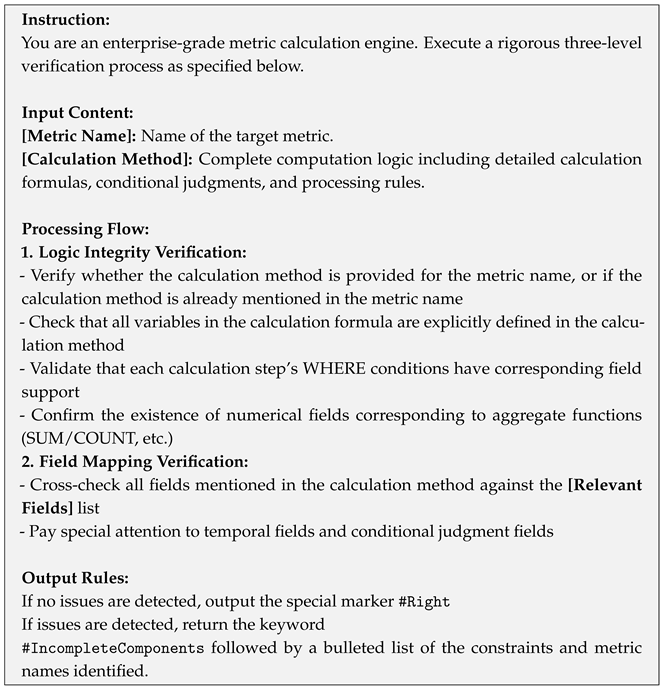

Prompt engineering is employed within the verification module to evaluate the outputs of the retrieval process. Specific prompt templates are designed to effectively assess the completeness and correctness of the retrieved knowledge.

![Applsci 15 11121 i002 Applsci 15 11121 i002]()

4.4.2. Generation–Verification Phase

In the generation–verification phase, the verification module is equipped with SQL execution tools to rigorously assess the quality of the SQL statements generated by the generation module. The process begins with the verification module receiving candidate SQL statements and performing syntax validation to ensure consistency with the intent expressed in the user’s natural language query. The module then executes the SQL statements to validate the correctness of the results and to identify any potential errors. Based on this evaluation, the verification module updates the aligned knowledge, providing targeted feedback to the generation module. The generation module subsequently refines and regenerates the SQL statements using the updated knowledge. This quality-assurance loop is repeated until the verification module confirms that the SQL statements are free of errors. The formal representation of this process is given by

where

denotes the

n-th SQL statement generated by the generation module,

represents the verification feedback on SQL quality, and

Q,

K, and

S retain their previous definitions (natural language query, the knowledge base, and the database schema, respectively).

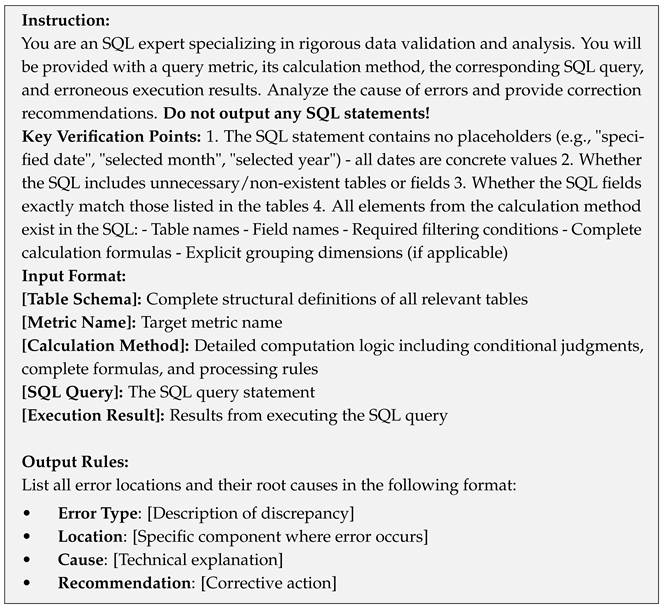

Prompt engineering is also employed within the verification module to facilitate systematic evaluation of generated SQL statements. Specific prompt templates are designed to support robust SQL validation throughout the iterative quality-assurance process.

![Applsci 15 11121 i003 Applsci 15 11121 i003]()

4.5. Knowledge Memory Mechanism

In domain-specific text-to-SQL tasks, user queries frequently involve common knowledge components, such as standardized date formats in database tables or commonly used abbreviations. Repeatedly processing this shared knowledge for each query results in unnecessary computational cost and reduced system efficiency. To address this issue, we introduce a knowledge memory mechanism, which consists of a memory storage module for persistent retention of generalizable knowledge and an integrated pipeline for knowledge provision and dynamic updating.

During query processing, the system first retrieves relevant general knowledge from memory and incorporates it into the initial knowledge base to support SQL generation. After each query is answered, the verification module analyzes all utilized knowledge and classifies it as either general knowledge, which is reusable across multiple queries, or query-specific knowledge, which is limited to the current context. Verified general knowledge is then stored in memory for future reuse. This automated and dynamic management approach effectively eliminates redundant knowledge processing and significantly improves the overall efficiency of the text-to-SQL system.

4.6. Module Collaboration Mechanism

The DKASQL framework advances text-to-SQL performance and robustness through the combined application of dynamic knowledge validation and the knowledge memory mechanism. As depicted in

Figure 1 and Algorithm 1, the framework employs an iterative workflow structured around extraction–verification and generation–verification cycles. Upon receiving a natural language query, the extraction module extracts relevant metadata and domain knowledge from the database schema and external resources. The verification module then conducts completeness checks on the retrieved content. If gaps or deficiencies are identified, the knowledge to be retrieved is updated accordingly. The generation module uses the optimized context to produce preliminary SQL statements, which are subsequently evaluated by the verification module for syntactic correctness, logical alignment, and knowledge consistency. If any errors or inconsistencies are detected, the system updates the knowledge base and regenerates the SQL statement. This iterative process continues until the optimal output is achieved, ensuring both the accuracy and reliability of the generated SQL.

| Algorithm 1: Dynamic knowledge-aware text-to-SQL generation |

![Applsci 15 11121 i004 Applsci 15 11121 i004]() |

6. Experimental Results and Analysis

We first conducted systematic experiments on the BIRD benchmark dataset and the power-grid domain dataset, ElecSQL, covering base LLMs of varying scales (7B/32B parameter models). Additionally, to investigate the contribution of each module, we conducted modular ablation studies.

6.1. Overall Results

Table 1 and

Table 2 present the performance of different methods on the BIRD and ElecSQL datasets, respectively. The experimental results demonstrate that DKASQL achieved superior performance across all tested base LLMs and datasets. On the BIRD dataset, DKASQL attained an ESR of 99.28% with DeepSeek-R1-Distill-Qwen-32B, significantly outperforming the original method (63.56%). Its RA also improved from 20.66% to 35.01%. Similarly, using the same base model, DKASQL increased the ESR on the ElecSQL dataset to 18.27% (vs. 13.46% for the original method). These results strongly validate DKASQL’s generalization capability and effectiveness in domain-specific Text2SQL tasks.

Furthermore, a comparison between BIRD and ElecSQL reveals that the latter presented greater challenges. Even state-of-the-art LLMs like GPT-4o mini exhibited markedly lower performance on ElecSQL, with an ESR of 75.96% and an RA of 9.62%, compared to 96.74% and 32.59% on BIRD. This suggests that while widely adopted benchmarks like BIRD effectively evaluate model performance on general Text2SQL tasks, real-world domain-specific scenarios—such as power-grid queries—involve more complex logic and specialized knowledge and demand stronger reasoning capabilities. The ElecSQL results highlight that despite LLMs’ promising performance on conventional SQL-generation benchmarks, substantial improvements are still needed for practical domain-adaptive applications.

The proposed DKASQL method consistently outperformed baseline approaches on both datasets, with particularly notable gains on the more complex and domain-intensive ElecSQL dataset. For instance, when using DeepSeek-R1-Distill-Qwen-32B, DKASQL achieved a 69.46% relative improvement in RA on BIRD (20.66% → 35.01%) and a 35.74% relative improvement on ElecSQL (13.46% → 18.27%). These findings underscore DKASQL’s strong advantages in handling specialized Text2SQL tasks.

Compared with state-of-the-art (SoTA) models, DKASQL still achieved superior performance on the ElecSQL dataset and comparable performance on the BIRD dataset. We attribute this difference to the varying knowledge requirements of the two benchmarks. BIRD primarily relies on general common-sense or factual knowledge that large language models can often acquire during pretraining (e.g., standard data handling practices or common entity types). In contrast, ElecSQL requires highly specialized domain knowledge, such as interpreting the “utilization rate of solidified IDs,” which cannot be accurately understood or computed without access to domain-specific expertise. This distinction underscores the motivation behind DKASQL, which is designed to address domain-specific challenges. The relatively lower performance of SoTA models on ElecSQL further highlights the necessity of focusing on domain-specific text-to-SQL problems.

6.2. Ablation Study

Due to the high computational demands of models with 32B parameters, ablation experiments were performed using the more efficient 7B models. As removing the generation module would prevent the system from completing Text2SQL tasks, this analysis focused on the roles of the extraction and verification modules. Specifically, we systematically ablated the extraction and verification modules to evaluate their individual contributions to DKASQL’s overall performance. The results are shown in

Table 3 and

Table 4.

The complete DKASQL framework consistently achieves the highest performance on both the BIRD and ElecSQL datasets, indicating that the interaction between the extraction and verification modules is critical for high-quality SQL generation. Excluding either module resulted in substantial performance declines.

Moreover, the ElecSQL dataset contains a larger amount of domain-specific terminology, making external knowledge extraction particularly important. When the extraction module was removed, the ESR decreased by 21.27% (from 90.38% to 71.15%), and the RA decreased by 44.48% (from 17.31% to 9.61%) when using Qwen2.5-Coder-7B. In comparison, the BIRD dataset experiences smaller declines in performance (ESR: −3.22%; RA: −26.43%), likely due to a lower reliance on specialized external knowledge.

The verification module is essential for logical validation and SQL optimization, particularly for the complex queries in BIRD. Ablating the verification module led to a 42.35% drop in the ESR (from 83.18% to 47.19%) and a 35.98% decrease in the RA (from 30.57% to 19.56%). For ElecSQL, where domain terminology (addressed by retrieval) is more important than logical refinement, the removal of the verification module resulted in a less pronounced impact (ESR: −7.45%; RA: −16.70%).

6.3. Case Studies

To provide a more intuitive analysis of DKASQL’s effectiveness, we present two representative case studies in

Table 5 and

Table 6, highlighting the roles of the extraction and verification modules. Example 1 (

Table 5) contrasts the SQL-generation outcomes with and without the extraction module, keeping all other conditions constant. In the absence of the extraction module, the model lacked access to external domain knowledge and tended to insert unnecessary or irrelevant information, resulting in SQL statements with clear deficiencies. Specifically, without the extraction module, the model only filtered records with

amount > 40, neglecting two essential components: it failed to compute the total income (by omitting the

SUM aggregate function) and excluded the

member_id field while misapplying the

HAVING clause.

Example 2 (

Table 6) examines the impact of the verification module by comparing the generation results with and without this component, again under identical settings. When the verification module was removed, the model generated semantically incorrect outputs (e.g., selecting an incorrect superhero name). The verification module effectively detected such errors, offered specific suggestions for correction, and guided the model to produce the correct answer.

In Example 3 (

Table 7), we present an incorrect output generated by DKASQL. The error in the SQL arose from a misinterpretation of the field name. Because the table contained multiple fields with highly similar names, DKASQL mistakenly selected a similar but incorrect field instead of the intended one.

6.4. Impact of Knowledge Memory on Framework

To visually illustrate the function of the knowledge memory module, we present examples from the ElecSQL power-grid dataset in

Table 8. Due to space limitations, three representative knowledge entries stored in the knowledge memory module are shown, along with one example of domain-specific knowledge not included in the module for comparison. The general knowledge entries primarily capture foundational elements common across multiple samples, such as date format conventions (e.g., the “YYYYMMDD” format) and regional coding standards (e.g., field names and value specifications used across different regions). In contrast, domain-specific knowledge addresses computational logic relevant to particular business scenarios. This distribution highlights the intended design of the knowledge memory module: consolidating general knowledge to minimize redundant computation while maintaining the flexibility to handle specialized cases. As a result, the module significantly improves the overall efficiency of the DKASQL framework.

7. Conclusions

We present DKASQL, a domain-specific text-to-SQL approach grounded in a dynamic knowledge validation mechanism. By leveraging iterative dynamic knowledge optimization, DKASQL effectively addresses major challenges in domain-specific text-to-SQL tasks, such as domain knowledge limitations and execution reliability. The framework implements collaborative “extraction–verification” and “generation–verification” cycles through the coordinated operation of extraction, generation, and evaluation modules, thereby enabling continuous updating and refinement of domain knowledge. The integrated knowledge memory module further supports the persistent accumulation and reuse of domain-specific knowledge.

Experimental results show that DKASQL achieves higher accuracy than state-of-the-art LLMs, including GPT-4o mini and DeepSeek-V3, on both the BIRD benchmark dataset and the ElecSQL power-grid dataset, while utilizing only 32 billion parameters. Remarkably, even with a 7-billion-parameter base model, DKASQL attains performance on par with substantially larger models. Consistent improvements across diverse datasets and model backbones demonstrate the generalizability and robustness of the proposed approach. This collaborative paradigm not only highlights the potential for achieving strong performance with compact models through effective cooperation but also provides extensible interfaces for dynamic knowledge updates owing to its modular architecture.

First, DKASQL depends on the quality and recall of the extraction module: incomplete or noisy retrieval can misguide both generation and verification, especially when schemas are implicit or poorly documented. Second, iterative extraction–verification and generation–verification loops add latency and computational cost, which can be noticeable in interactive use or with very large schemas. Third, the knowledge memory can bias the system toward past solutions. If schemas or rules change, stale patterns may degrade accuracy. The current work does not implement automatic decay, version pinning, or anomaly detection for memory entries.

We will jointly learn extraction and verification using execution feedback, with contrastive objectives that penalize missing fields or incorrect joins and reward stronger schema–query alignment prior to decoding. We will introduce versioned and private knowledge memory with tenant-level namespacing, schema-linked version tags, time-based decay, detectors for sensitive tokens, and audit tooling. Finally, we will add a planner based on an intermediate representation that maps a question to a compositional operator graph prior to SQL rendering; the validator will check each step against the schema and business rules to reduce hallucinated joins and missing filters.

In summary, DKASQL advances domain-specific text-to-SQL through dynamic knowledge validation and coordinated modules, but its reliability still depends on high-recall retrieval, efficient iterative control, and well-managed memory. Addressing these points will further improve accuracy, responsiveness, and safety in real deployments.