1. Introduction

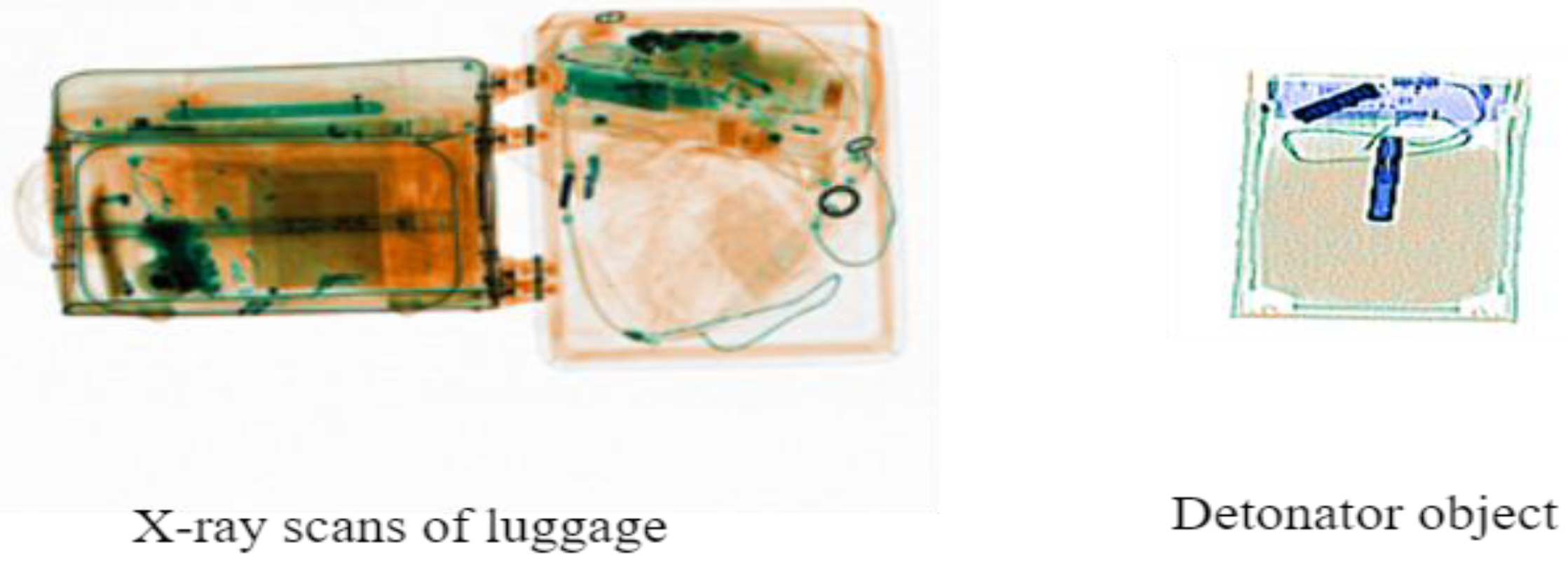

Raw luggage X-ray scans show low contrast, significant material overlap, and high noise levels, which make reliable interpretation difficult. Dual-energy acquisitions help distinguish materials but cause color inconsistencies that bias segmentation algorithms. Therefore, image fusion is essential for highlighting hidden threats, such as detonators. In this study, we use a connotative fusion approach that adds realistic detonator patches into genuine luggage X-rays to simulate believable threat scenarios, thereby improving automated detection without ground-truth annotations. Image fusion is a common technique that combines multiple images into a single, clearer, more informative image. Recently, many fusion algorithms and evaluation metrics have been developed. However, any new image fusion algorithm and its performance gains must be carefully evaluated within its specific application context. For better detonator detection, a multi-image fusion method is employed to enhance low-quality X-ray luggage images affected by poor lighting, low contrast, and potential color discrepancies. Poor lighting results in underexposed or overexposed areas, hiding small objects. Low contrast complicates the differentiation of object boundaries and materials. Color inconsistencies, caused by variable dual-energy imaging, lead to misleading material segmentation, which can hinder pattern recognition and detonator detection.

Our work focuses on connotative image fusion, which combines images to provide complementary information. Unlike spatial fusion, connotative fusion improves image quality and interpretability by preserving meaningful features. This study addresses image fusion in airport security, specifically integrating detonator patches into X-ray luggage images. Our goal is to simulate credible threat scenarios for automated threat detection system development. We use a dataset from HTDS (High Tech Detection Systems) with real radiographic luggage images and relevant object patches. This fusion approach creates visually and structurally coherent synthetic images to enhance the robustness of AI models in security screening. We evaluated the fusion performance and identified key challenges and unresolved issues.

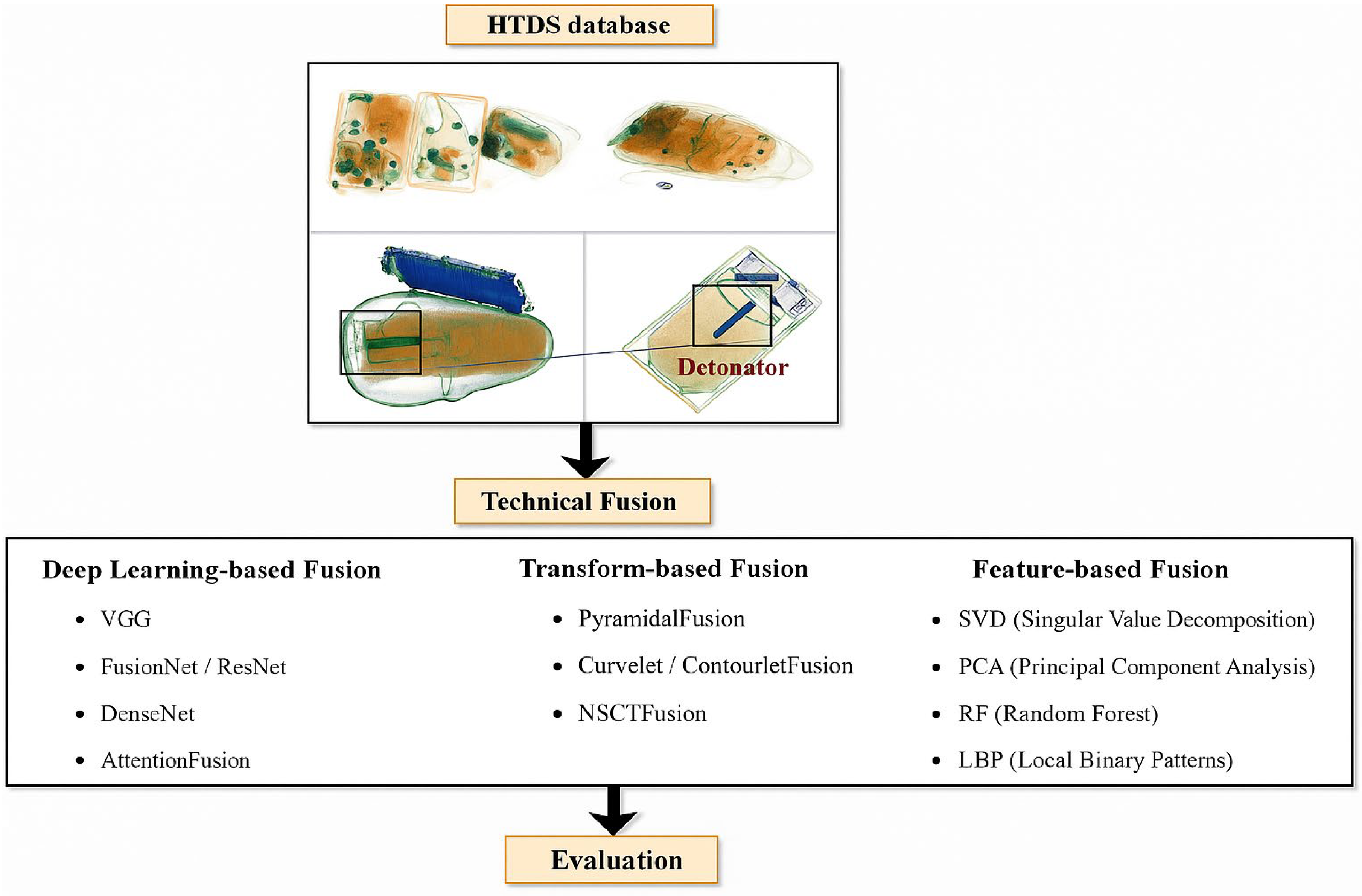

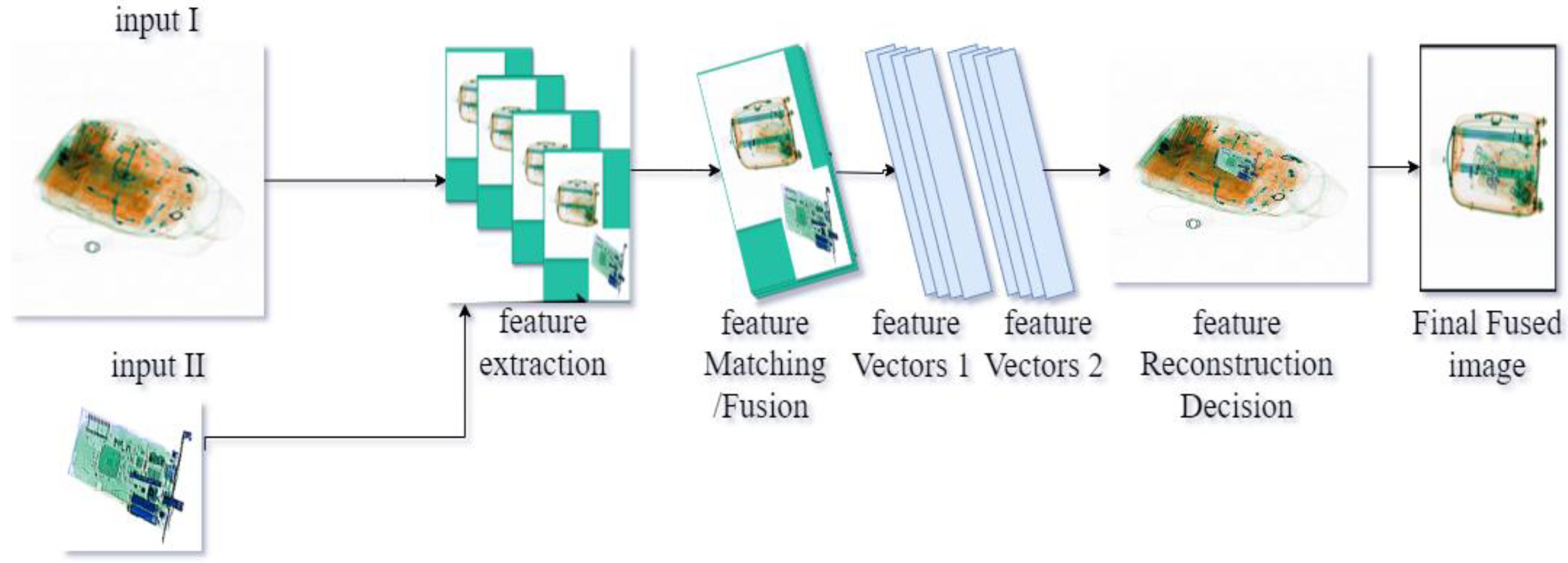

The proposed methodology consists of two main stages. The first stage integrates detonator patches into X-ray baggage scans using a range of image fusion techniques. The second stage evaluates the quality of the fused images.

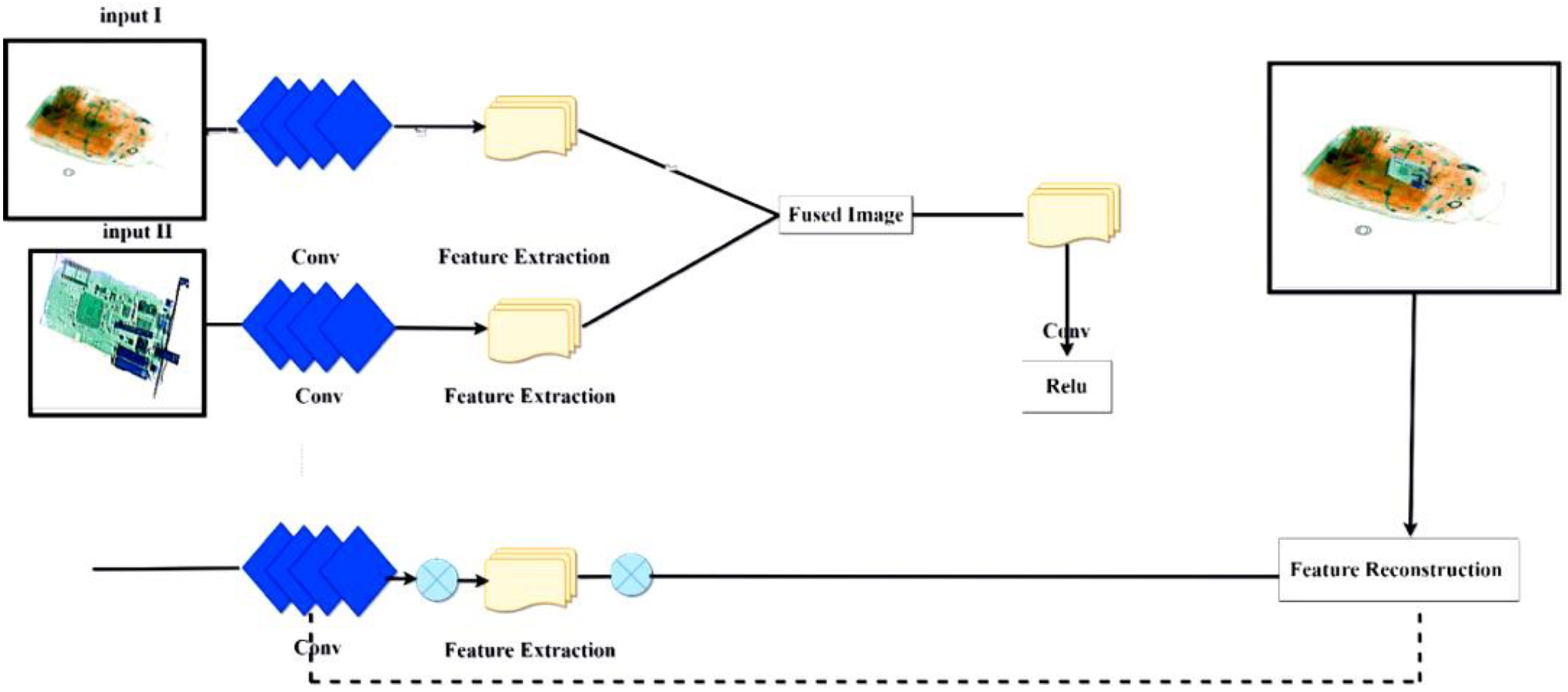

The image fusion techniques include deep learning-based image fusion, transform-based fusion, and feature-level fusion. Deep learning-based image fusion has shown significant promise in multi-image fusion tasks, particularly for enhancing X-ray imagery by preserving critical structural and spectral features, which are key elements for accurately identifying concealed threat objects like detonators. Three major families of methods are generally explored for image fusion, beginning with deep learning-based approaches that leverage convolutional and generative neural networks such as DenseFuse [1,2], FusionGAN [3], and SwinFuse [4,5]. These models are designed to learn cross-modal representations, enabling the seamless and visually coherent fusion of patches into complex radiographic scenes. The DenseFuse network preserves fine structural and edge details, works well on multi-spectral and dual-energy inputs, and uses densely connected layers that improve feature reuse, making fusion more efficient and informative. It enhances structural clarity in low-contrast or noisy X-ray images [1]. FusionGAN pairs a generator that fuses very different images (e.g., severely underexposed and/or noisy inputs) with a discriminator that enforces more realistic and informative outputs [3]. The generator learns to fuse images into a natural-looking, high-quality result while the discriminator tries to distinguish between real and fused images. This design better preserves texture, contrast, and sharpness, and it can be trained with various custom fusion losses. The SwinFuse network uses a self-attention mechanism to extract long-range dependencies and fine features from input images [4,5]. The feature maps provided by Swin blocks are fused using a channel-spatial attention mechanism, and a transformer-based decoder then reconstructs the image. It can capture both global and local contexts that are critical in cluttered X-ray scenes and can fuse X-ray inputs with non-aligned illumination levels or non-uniform exposure. It is important to note that DenseFuse is directly related to convolutional neural network (CNN) architectures, whereas FusionGAN employs a generative adversarial framework with a different backbone but may incorporate a VGG-based perceptual loss, making it only indirectly related to CNNs. In contrast, SwinFuse is grounded in a transformer-based architecture, representing a different design philosophy, and is likewise only indirectly connected to CNN models. DenseFuse has a moderate computational cost, FusionGAN is computationally expensive and less stable due to adversarial optimization, and SwinFuse can capture long-range dependencies, but at the cost of higher memory and compute requirements. To address these limitations, this study investigates the fusion capabilities of VGG-based networks, FusionNet, and AttentionFuse models, focusing on their ability to capture multi-scale features, effectively combine complementary information, and enhance image quality in complex X-ray baggage scans.

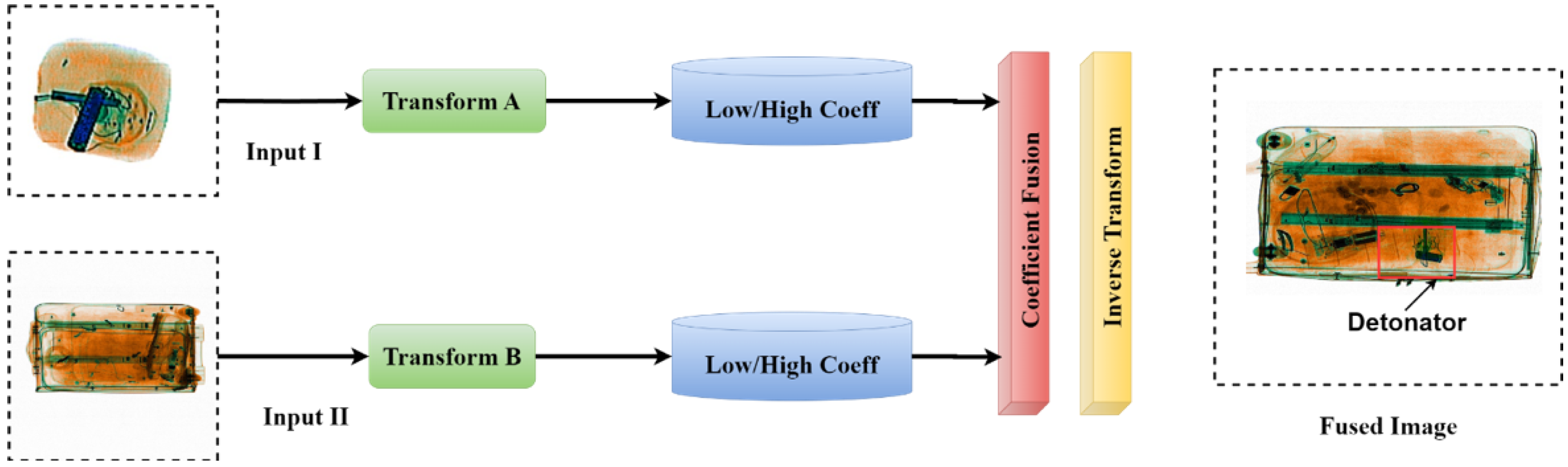

Transform-based methods form the second category, utilizing multi-resolution decomposition techniques, such as wavelet, contourlet, and shearlet transforms [6], to extract and fuse information across various levels of detail. These approaches are effective in preserving both coarse and fine image features, contributing to a more accurate and detailed fusion outcome.
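As a rough illustration of the multi-resolution idea, the following Python sketch decomposes two registered grayscale images into a simplified Laplacian-style pyramid, keeps the stronger detail coefficient at each level, averages the low-frequency base, and reconstructs the fused image. The 2×2 block averaging, nearest-neighbour upsampling, fusion rules, and all function names are assumptions chosen for brevity; they are not the configuration evaluated in this study. Image sides are assumed to be divisible by 2 raised to the number of levels.

```python
import numpy as np

def downsample(img):
    """Halve the resolution by averaging non-overlapping 2x2 blocks."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample(img):
    """Double the resolution by nearest-neighbour replication."""
    return np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)

def build_pyramid(img, levels):
    """Return [detail_0, ..., detail_{levels-1}, base] for a 2-D image."""
    pyramid = []
    current = img.astype(np.float64)
    for _ in range(levels):
        smaller = downsample(current)
        pyramid.append(current - upsample(smaller))  # band-pass detail layer
        current = smaller
    pyramid.append(current)  # low-frequency base layer
    return pyramid

def reconstruct(pyramid):
    """Invert build_pyramid by adding details back from coarse to fine."""
    current = pyramid[-1]
    for detail in reversed(pyramid[:-1]):
        current = upsample(current) + detail
    return current

def fuse_pyramids(img_a, img_b, levels=3):
    """Fuse two same-sized images (assumed rules: max-abs details, mean base)."""
    pa, pb = build_pyramid(img_a, levels), build_pyramid(img_b, levels)
    fused = [np.where(np.abs(da) >= np.abs(db), da, db)
             for da, db in zip(pa[:-1], pb[:-1])]
    fused.append(0.5 * (pa[-1] + pb[-1]))
    return reconstruct(fused)
```

Because each detail layer stores exactly what the downsampling step discards, fusing an image with itself returns that image unchanged, which is a convenient sanity check before trying other fusion rules.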

Finally, feature-based methods employ local visual descriptors like SIFT, SURF, and gray-level co-occurrence matrices (GLCM) [7] to guide the fusion process based on the structural and textural characteristics of the source images. This feature-level guidance ensures that salient visual patterns are retained, supporting the realistic insertion of detonator patches within complex X-ray imagery.

The second stage involves evaluating the quality of the fused images. The image quality assessment is carried out using three no-reference objective metrics. Entropy (EN) measures the informational richness of the fused image by assessing the diversity of intensity values, making it particularly useful for quantifying the preservation of fine details [8]. Standard deviation (SD) indicates global contrast and the amount of detail retained in the image, a key factor in visually distinguishing the inserted detonator from the baggage background [9]. The average gradient (AG) assesses edge sharpness and how well edges are preserved in the fused image; a high AG suggests better definition of boundaries and intensity transitions, which is essential for segmentation and recognition tasks [10]. To ensure a rigorous and unbiased comparison across the different fusion methods, all evaluation metrics are normalized using the min–max normalization technique [11].
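The three metrics and the normalization step can be sketched in a few lines of Python. This is a minimal illustration using commonly used definitions, which are assumptions here because the exact formulas are not reproduced in this section: EN as the Shannon entropy of the 256-bin gray-level histogram, SD as the population standard deviation of pixel intensities, and AG as the mean of sqrt((Δx² + Δy²)/2) over forward differences. Function names are hypothetical.

```python
import numpy as np

def entropy(img, bins=256):
    """Shannon entropy in bits of the gray-level histogram (8-bit range assumed)."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist / hist.sum()
    p = p[p > 0]  # empty bins contribute nothing to the entropy
    return float(-(p * np.log2(p)).sum())

def standard_deviation(img):
    """Population standard deviation of pixel intensities (global contrast)."""
    return float(np.std(img.astype(np.float64)))

def average_gradient(img):
    """Mean magnitude of horizontal and vertical forward differences."""
    f = img.astype(np.float64)
    gx = f[:-1, 1:] - f[:-1, :-1]  # horizontal difference
    gy = f[1:, :-1] - f[:-1, :-1]  # vertical difference
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))

def min_max_normalize(values):
    """Rescale one metric across methods to [0, 1]; constant input maps to zeros."""
    v = np.asarray(values, dtype=np.float64)
    span = v.max() - v.min()
    return np.zeros_like(v) if span == 0 else (v - v.min()) / span
```

In use, each metric would be computed once per fused image, and `min_max_normalize` would then be applied to the list of scores for one metric across all fusion methods, so that the best method on that metric maps to 1 and the worst to 0.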

To summarize, we provide a comprehensive analysis and evaluation of various image fusion techniques designed to combine X-ray images of luggage with detonator images. Our goal is to identify the most effective methods for detonator detection. Notably, our framework works without the need for ground truth supervision, enhancing its applicability in real-world scenarios. The primary objective is to identify the fusion methods that best preserve and enhance the structural and textural details relevant to detonator components. The ideal fusion technique should produce output images that display more distinguishable features. Therefore, the main contributions of this paper are threefold:

- (1) to explore image fusion algorithms grounded in machine learning and deep learning theory, focusing on how these approaches enhance fusion performance through feature extraction, representation learning, and adaptive integration;

- (2) to evaluate eleven image fusion algorithms spanning the spatial domain, frequency domain, and deep learning paradigms, using objective image quality metrics such as EN, SD, and AG;

- (3) to analyze the strengths and limitations of each fusion method, offering insights and recommendations on their suitability based on the combined assessment of three no-reference objective metrics, EN, SD, and AG.

2. Related Work

Image fusion aims to combine data from multiple sources or modalities to create a single, more informative image that is easier for humans or algorithms to interpret. This technique is important in security, surveillance, threat detection, and medical analysis, where it improves visual perception while maintaining essential details. In the domain of baggage security, specialized image fusion techniques have been developed to enhance threat detection in complex and cluttered environments.

Liu et al. [12] provide a comprehensive overview of state-of-the-art image fusion techniques and recommend using statistical comparison tests to evaluate the performance of modern image fusion methods. Li et al. [13] present an extensive review of multi-sensor image fusion, organized into three approaches: pixel-level, feature-level, and decision-level. They also discuss fusion quality assessment metrics, fusion frameworks, and signal processing approaches, such as multiscale transforms, sparse representations, and neural-network or pulse-coupled-neural-network-based methods. Ma et al. [14] introduced a novel image fusion approach that combines gradient transfer with total variation minimization to merge infrared and visible images. The main idea was to preserve the salient structural features (edges and gradients) from both source images while suppressing noise and redundant information. They formulated the fusion task as an optimization problem and employed gradient transfer to retain strong edge information. The total variation regularization ensured smoothness and consistency in the fused image. Quantitative results demonstrated significant improvements over conventional methods. They reported an average EN increase of approximately 10%, reflecting a higher level of information content in the fused images. Additionally, the AG improved by nearly 20%, indicating superior preservation of structural and textural details. Zhang et al. [15] proposed IFCNN (Image Fusion Convolutional Neural Network), a deep learning-based framework designed to fuse infrared and visible images. Unlike traditional hand-crafted or transform-based fusion methods, IFCNN leverages the representation power of convolutional neural networks to learn and extract meaningful features from source images automatically. The architecture is trained to generate fused outputs that retain the complementary information from both modalities.

Li et al. [2] introduced DenseFuse, a deep learning-based image fusion model designed to merge infrared and visible images using a dense concatenation architecture. The key innovation of DenseFuse lies in its use of densely connected convolutional layers, which enhance feature propagation, encourage feature reuse, and improve gradient flow throughout the network. This enables the model to preserve both intensity from infrared images and fine-grained textures from visible images. It achieved a higher entropy compared to unimodal approaches, indicating a significant enhancement in the richness and utility of the fused images. Xie et al. [16] introduced an end-to-end deep learning model that incorporates 3D convolutional neural networks directly into the image fusion process. The novelty of this study lies in extending attention mechanisms, commonly used in 2D image pair fusion networks, to 3D, and in introducing a comprehensive set of loss functions that enhance fusion quality and processing speed while avoiding image degradation. Park et al. [17] proposed CMTFusion, a cross-modal transformer-based fusion algorithm for infrared and visible image fusion. Unlike conventional CNN-only approaches that have difficulties capturing long-range dependencies, this hybrid architecture combines CNNs for local feature extraction with transformer modules for modeling global cross-modal interactions. The multiscale feature maps of infrared and visible images were extracted, and the fusion result was obtained based on the spatial-channel information in refined feature maps using a fusion block. This design achieves superior fusion quality and robustness in complex and challenging environments. Wen et al. [18] introduced a generator with separate encoders for infrared and visible images, utilizing multi-scale convolution blocks. Fusion is performed by a Cross-Modal Differential Features Attention Module (CDAM), which employs spatial and channel attention paths to compute attention weights from differential features, thus reconstructing the fused output progressively. In the specialized field of baggage security, Duan et al. [19] introduced RWSC-Fusion, a region-wise style-controlled fusion network designed for synthesizing and enhancing X-ray images used in security screening. This model focused on localized fusion by applying style control mechanisms to specific regions of interest, namely those containing suspicious or prohibited items, while preserving the overall image consistency. Luminance fidelity and sharp edge details were preserved in critical areas, which is essential for accurate threat identification. Quantitative results demonstrated significant improvements in EN and AG within regions containing threat objects, highlighting the method’s effectiveness in enhancing image quality where it matters most. Zhao et al. [20] proposed MMFuse, a multi-scale fusion algorithm that integrates morphological reconstruction with membership filtering to improve thermal–visible image fusion. By preserving structural details and enhancing contrast, MMFuse operates across multiple scales to effectively combine both high- and low-frequency components, resulting in more comprehensive and informative fused images. Experimental results demonstrated significant gains in EN and AG metrics, reflecting higher information content and sharper edges in the fused outputs.

Shafay et al. [21] introduced a temporal fusion-based multi-scale semantic segmentation framework designed specifically for detecting concealed threats in X-ray baggage imagery. Their method employed a residual encoder–decoder architecture that integrated features across sequential scan frames, allowing for improved visibility of partially occluded or hidden objects. They demonstrated that the model achieved superior detection accuracy and object localization compared to static image-based techniques. Wei et al. [22] proposed a manual segmentation method for dangerous objects in the SIXRay dataset. They also introduced a composition method for augmenting positive samples using affine transformations and hue, saturation, value (HSV) features. This resulted in an algorithm variant called SofterMask RCNN, which improved detection accuracy by 6.2% when used with an augmented image dataset. Dumagpi and Jeong [23] analyzed the impact of generative adversarial network (GAN)-based image augmentation on the performance of threat object detection in X-ray images. They synthesized new X-ray security images by combining threat objects with background X-ray images, which were used to augment the dataset. The experimental results demonstrated that image synthesis is an effective approach to addressing the imbalance problem, reducing the false-positive rate (FPR) by up to 15.3%. Based on the real-world finding that items are randomly stacked in luggage scanning, causing penetration effects and heavily overlapping content, Miao et al. [24] proposed a classification approach called class-balanced hierarchical refinement. This approach uses mid-level features in a weakly supervised manner to accurately localize prohibited items. They report mean Average Precision improvements across all studied classes (gun, knife, wrench, pliers, scissors).

4. Results and Discussion

The proposed approach conducts a comprehensive cross-method evaluation on spatial resolution, contrast preservation, and information retention in fused images.

We utilised MATLAB 2019 for generating the derived images and employed PyTorch 1.5.0 as the machine learning and deep learning framework for model development. The Google Colaboratory environment, equipped with an A100 GPU hardware accelerator, was utilised to minimise runtime. Pre-trained CNNs were trained and tested in the software environment using Python (3.0), scikit-learn (1.5.2), TensorFlow (2.18.0), and Keras (3.6.0).

To verify the significance of the results from the analyzed methods, we conducted a significance test along with a quantitative evaluation of the outcomes.

Table 2 shows the performance of the different fusion techniques used to insert detonator images into dual-energy X-ray scans. Higher values indicate better fusion quality in terms of detail preservation, contrast, and sharpness.

The highest EN is observed in the VGG-based fusion (5.4017), indicating superior retention of global detail, followed by NSCT (5.1666) and AttentionFuse (5.1599). In contrast, RF records the lowest EN (4.8939), suggesting potential information loss. PCA achieves the highest SD (75.4174), implying the most pronounced intensity differentiation between the detonator patch and its background. It is followed by LP (59.5764) and LBP (58.5407), while curvelet/contourlet-based fusion reports the lowest contrast (51.0655). LBP also leads clearly with an AG of 14.2265, which is over 16% higher than that of the next-best method, LP (12.1236), highlighting its superior edge fidelity around the detonator. Other methods, such as PCA and AttentionFuse, exhibit more modest AG values (7.2482 and 7.0257, respectively).

Collectively, these results demonstrate that no single fusion algorithm excels across all evaluation metrics. VGG-based fusion is optimal for maximizing information content, Fusion PCA is best suited for enhancing contrast, and Fusion LBP excels in preserving edge and texture detail. However, for applications requiring a balanced trade-off, such as reliable detection of concealed threats under diverse imaging conditions, Fusion LP offers the most versatile performance, with strong results in EN (5.0128), SD (59.5764), and AG (12.1236).

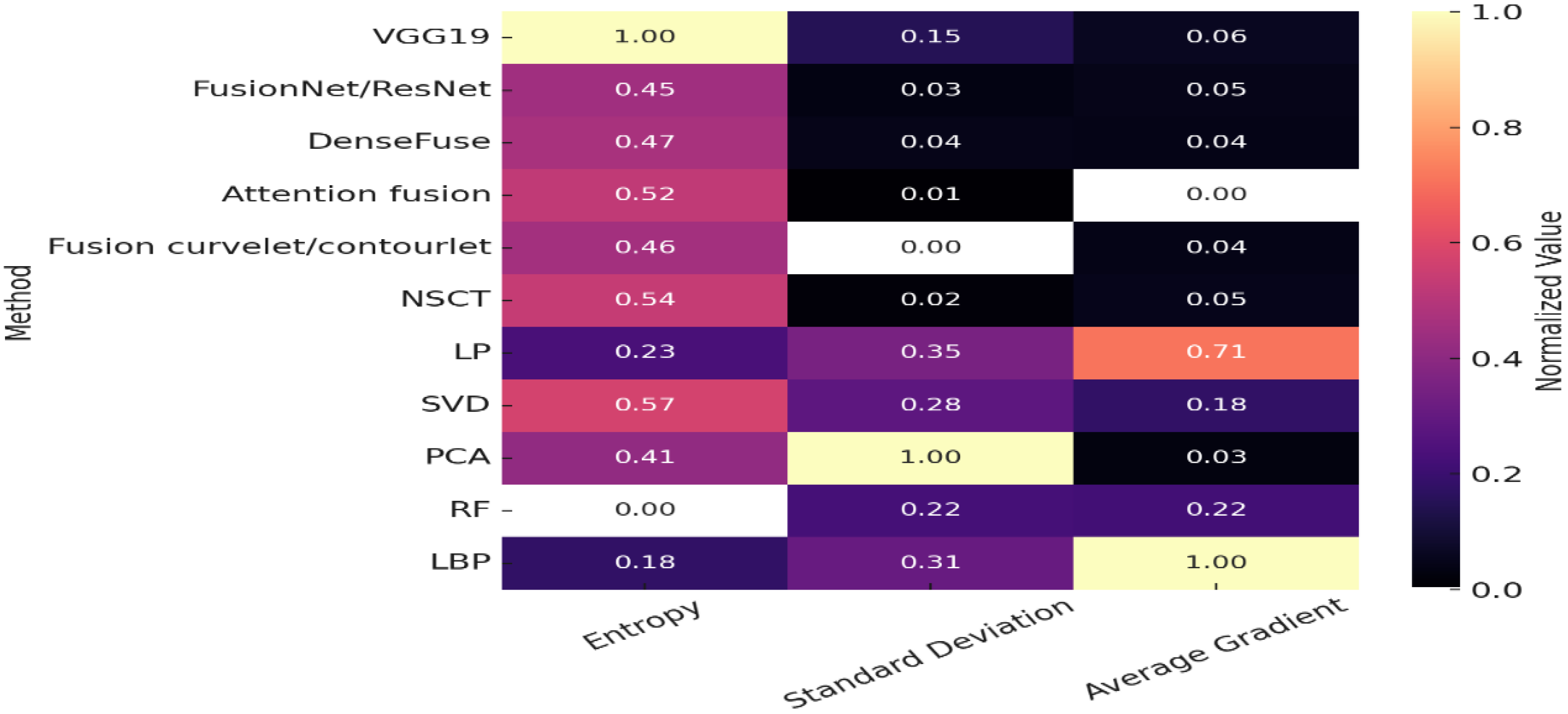

Figure 6 presents a normalized heatmap of the objective fusion metrics across eleven fusion algorithms. The heatmap visualizes the relative performance of various image fusion algorithms, while normalization facilitates direct comparison across metrics with different scales. EN peaks for the VGG-based method (normalized = 1.00), indicating superior retention of global details. SD, a proxy for contrast, is maximized by Fusion PCA (1.00), while Fusion LBP achieves the highest AG score (1.00), reflecting its exceptional preservation of fine textures and edges.

These results indicate that transform-based approaches provide a balanced performance across metrics. In the normalized heatmap, Fusion LP consistently ranks among the top three methods, with normalized scores of 0.23 for EN, 0.35 for SD, and 0.71 for AG and a geometric mean of 0.387, consistent with its strong cross-metric balance. In contrast, NSCT performs well in information retention (high EN) but falls short in both contrast and edge detail; its geometric mean is 0.047. Feature-based methods tend to specialize in specific aspects: PCA emphasizes contrast (high SD) but sacrifices fine detail, as indicated by its low AG score (0.03); its geometric mean is 0.230. Conversely, LBP prioritizes texture preservation with a leading AG score (1.00) but captures less global information (0.18 EN); its geometric mean is 0.382. Deep learning-based models, including VGG, FusionNet/ResNet, DenseFuse, and AttentionFuse, consistently achieve high normalized entropy values (≥0.45), indicating strong global feature retention. However, they generally underperform in contrast and edge detail, with normalized SD and AG scores typically ≤0.07; for example, the geometric mean of VGG is only 0.208 despite its leading EN score. Each method that leads on a single metric (VGG, PCA, and LBP) scores below Fusion LP on the composite geometric mean of the three min–max normalized metrics because of trade-offs with the other metrics.
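The composite score for Fusion LP can be re-derived from the raw values quoted in the text. The short Python check below is a sketch that assumes the per-metric minima and maxima are the extreme values named in the discussion of Table 2 (RF and VGG for EN, curvelet/contourlet and PCA for SD, AttentionFuse and LBP for AG); the variable and function names are hypothetical.

```python
import math

# Raw scores quoted in the text for Fusion LP and for the per-metric extremes
lp = {"EN": 5.0128, "SD": 59.5764, "AG": 12.1236}
lowest = {"EN": 4.8939, "SD": 51.0655, "AG": 7.0257}    # RF, curvelet/contourlet, AttentionFuse
highest = {"EN": 5.4017, "SD": 75.4174, "AG": 14.2265}  # VGG, PCA, LBP

def min_max(value, vmin, vmax):
    """Min-max normalization of one score to the [0, 1] range."""
    return (value - vmin) / (vmax - vmin)

def geometric_mean(scores):
    """n-th root of the product of n non-negative scores."""
    return math.prod(scores) ** (1.0 / len(scores))

normalized = {m: min_max(lp[m], lowest[m], highest[m]) for m in lp}
composite = geometric_mean(list(normalized.values()))
print({m: round(s, 2) for m, s in normalized.items()}, round(composite, 3))
```

Under these assumptions the normalized scores round to 0.23, 0.35, and 0.71, and the geometric mean rounds to 0.387, matching the values reported for Fusion LP. The geometric mean is a natural choice for such a composite because a near-zero score on any one metric pulls the overall score toward zero, which penalizes single-metric specialists.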

The analysis reveals that VGG-based fusion is best suited for applications where maximizing information content is the primary objective. Fusion PCA is ideal for scenarios requiring enhanced contrast, while Fusion LBP and Fusion LP are preferable when preserving edge and texture details is critical. Among all methods, Fusion LP delivers the most balanced performance across entropy, contrast, and gradient metrics, making it the recommended choice for applications that demand a well-rounded fusion of global information, local contrast, and fine structural detail.

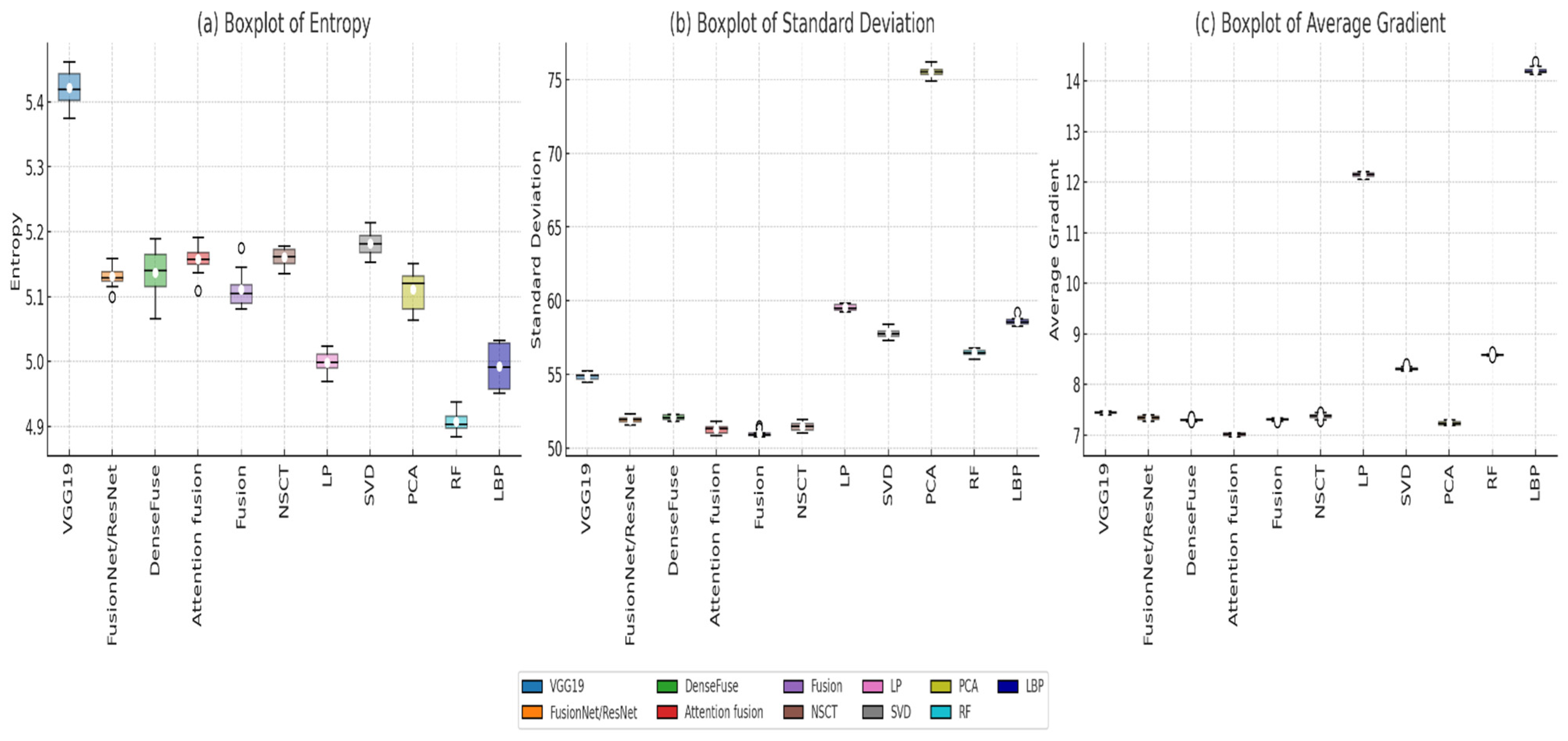

To refine the comparison across diverse fusion strategies, we visualize the overall distribution, central tendency, and variability of each evaluation metric across all eleven image fusion methods (Figure 7). These boxplots provide a comprehensive view of performance spread, highlighting not only the median and interquartile range but also potential outliers and the relative consistency of each method.

The EN distribution (Figure 7a) is tightly concentrated, with a median of approximately 5.125 and an interquartile range (IQR) of [5.058, 5.163], indicating consistent performance across most methods in terms of information preservation. Consistent with the data in Table 2, VGG-based fusion (5.402) emerges as a clear high-end outlier, confirming its dominant performance in retaining global information and structural richness. On the opposite end, Fusion RF (4.894) falls below the lower whisker, suggesting it underperforms in preserving entropy, likely due to its limited capacity to capture diverse image features.

The SD boxplot (Figure 7b) shows a broader performance spread, with a median near 54.707 and an IQR of [51.664, 58.257]. This metric reflects the algorithms’ ability to enhance intensity contrast between the fused image elements. Consistent with the data in Table 2, Fusion PCA (75.417) is a pronounced upper outlier, reinforcing its strength in boosting contrast far beyond the other techniques, which is especially beneficial for applications requiring high visual differentiation between foreground and background. In contrast, Curvelet/Contourlet and NSCT-based fusion methods cluster near the lower whisker (≈51), suggesting a more conservative approach to contrast enhancement, which may lead to smoother but less visually distinct outputs.

AG values are moderately dispersed, with a median of 7.382 and an IQR of [7.324, 8.456], representing variation in how well fine textures and edges are preserved (Figure 7c). Consistent with the data in Table 2, Fusion LBP (14.226) stands out as a significant high-end outlier, highlighting its superior ability to retain edge sharpness and micro-textural details, which is critical in applications where feature clarity is essential, such as object detection. Conversely, AttentionFuse (7.026) lies at the bottom whisker, indicating it delivers the least gradient sharpness, which could be a limiting factor in edge-sensitive tasks.
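The outlier statements above can be checked against the reported quartiles. The sketch below assumes the common boxplot convention in which whiskers extend at most 1.5 × IQR beyond the quartiles (Tukey's rule); the whisker rule actually used for Figure 7 is not stated in this section, so this is an assumption, and the names are hypothetical.

```python
def tukey_fences(q1, q3, k=1.5):
    """Lower and upper fences at k * IQR beyond the first and third quartiles."""
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

# Quartiles and extreme scores as reported in the text
reported = {
    "EN": {"q1": 5.058, "q3": 5.163, "extremes": {"VGG": 5.402, "RF": 4.894}},
    "SD": {"q1": 51.664, "q3": 58.257, "extremes": {"PCA": 75.417}},
    "AG": {"q1": 7.324, "q3": 8.456, "extremes": {"LBP": 14.226}},
}

def flag_outliers(stats):
    """Mark each named score that falls outside the Tukey fences."""
    low, high = tukey_fences(stats["q1"], stats["q3"])
    return {name: (value < low or value > high)
            for name, value in stats["extremes"].items()}

flags = {metric: flag_outliers(stats) for metric, stats in reported.items()}
print(flags)
```

With the 1.5 × IQR convention, the EN fences are roughly 4.90 and 5.32, so both VGG (5.402) and RF (4.894) fall outside them, and PCA and LBP exceed the upper fences for SD and AG, in line with the outliers described above.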

Thus, VGG excels in information content, PCA dominates contrast, and LBP leads in texture and edge preservation. Fusion LP, while not the top performer on any single metric, consistently ranks near the upper quartile across all three, further supporting its role as the most balanced and versatile fusion method among those evaluated.