1. Introduction

In the current digital era, cybersecurity has become a critical challenge for the protection of sensitive infrastructures and distributed information systems. The increasing frequency, complexity, and evasiveness of cyberattacks have exposed the limitations of traditional defense mechanisms, which rely on static rules, blacklists, or signature-based detection, proving ineffective against advanced threats. Furthermore, the massive expansion of the attack surface, driven by emerging technologies such as the Internet of Things (IoT), 5G networks, Web 3.0, and cloud virtualization, has further complicated detection. Although the widespread use of HTTPS improves privacy, it has also created encrypted channels that attackers exploit to evade traditional inspection systems [1].

To address these challenges, the scientific community has strongly adopted artificial intelligence-based approaches. In particular, machine learning (ML) and deep learning (DL) have demonstrated high capability in detecting complex patterns and adapting to dynamic, encrypted, and large-scale data environments [2,3,4,5]. Hybrid models combining unsupervised clustering techniques with deep neural architectures such as recurrent neural networks (RNN) and gated recurrent units (GRU) have gained prominence due to their ability to model both static characteristics and temporal dependencies in network traffic flows [6,7].

In this context, Huo et al. [8] proposed an innovative approach based on a cascaded architecture that integrates an enhanced GRU network with an optimized EFMS-KMeans clustering algorithm, achieving effective anomaly detection without requiring labeled data. Their model estimates dense centroids using electric potential and incorporates traffic predictions as inputs to the clustering process, significantly improving accuracy.

Building upon this proposal, the present study introduces a hybrid deep learning-based approach for network traffic anomaly detection. In the first stage, it employs an unsupervised clustering phase using an Enhanced Fast Mean Shift (EFMS) strategy to improve the initialization of K-Means centroids. Specifically, our modification refines centroid selection by leveraging density estimation of feature space distributions, which increases the stability of clustering results and achieves better separation between normal and anomalous flows compared with conventional K-Means++ initialization. These automatically generated cluster-based labels are subsequently used to train a sequential CNN-GRU model, which captures both spatial and temporal dependencies in network traffic. Unlike previous studies that rely on predefined ground-truth labels or conventional supervised classifiers such as SVM, the proposed approach reduces dependence on manual annotations, enhances adaptability to dynamic encrypted traffic, and improves the robustness of anomaly detection in heterogeneous environments. The methodology includes a feature-engineering process and balancing techniques such as SMOTE to enhance predictive performance in unbalanced datasets.

This architecture is specifically oriented toward detecting malicious traffic in local and metropolitan networks, leveraging real-world firewall logs collected in university campus environments. Unlike studies based on synthetic or industrial datasets, the use of perimeter security devices in academic networks ensures the capture of highly heterogeneous flows spanning administrative, student, and IoT traffic, which enhances the realism and robustness of anomaly-detection experiments. Similarly to the approach of Yılmaz and Daş, who demonstrated the effectiveness of hybrid deep learning models applied directly to campus firewall logs [9], our methodology relies on authentic perimeter records to strengthen detection accuracy while minimizing false alarms in complex network scenarios.

Beyond achieving high accuracy, model explainability is crucial for its adoption in real-world environments. Although deep learning (DL) models offer high performance levels, they are often regarded as “black boxes,” which limits their usefulness in critical domains where decisions must be auditable. While tools such as LIME have been used to interpret deep networks, their effectiveness is reduced in highly nonlinear or heavily imbalanced scenarios, as is common in network traffic analysis. Recent research has explored more robust interpretability approaches, incorporating perturbations tailored to the stochastic nature of traffic, significantly improving the understanding of model predictions [10].

Moreover, modern network environments demand distributed detection models capable of operating in heterogeneous contexts where data cannot always be centralized due to privacy or infrastructure limitations. Liu et al. [11] emphasize the need for resilient distributed architectures that maintain model coherence even under data fragmentation. Similarly, Fotiadou et al. [1] demonstrated that deep learning-based automatic feature extraction can effectively replace traditional manual engineering without sacrificing accuracy, enabling greater system scalability. Complementarily, the systematic review by Mohammadpour et al. [12] highlights the utility of CNNs as key tools for intrusion detection, given their ability to identify complex spatial patterns and their strong performance when integrated into hybrid architectures.

In addition to the integration of hybrid architectures such as the proposed CNN-GRU with EFMS-KMeans, it is essential to address the scarcity of labeled data and the constantly evolving nature of threats in real-world environments. In this regard, strategies such as semi-supervised learning and transfer learning have proven effective in improving detection system performance when annotation availability is limited [13]. Simultaneously, explainable models powered by reinforcement learning techniques are emerging as a promising solution to optimize detection in dynamic, uncertain, and highly variable environments, while also providing interpretability and adaptability [14]. The incorporation of these paradigms reinforces the need to develop intrusion-detection solutions that are not only accurate but also resilient, interpretable, and capable of adapting to the complexities of real-world traffic in distributed infrastructures.

The main contributions of this article are summarized as follows:

Novel hybrid architecture: We propose an architecture that integrates EFMS-KMeans pre-clustering with a sequential CNN-GRU classifier, combining the strengths of unsupervised segmentation with deep sequential modeling. This integration enables the detection of anomalous traffic patterns in heterogeneous and large-scale environments, providing greater robustness against the dynamic nature of encrypted network traffic.

Advanced feature-engineering pipeline: We design a feature-engineering process that extends beyond conventional preprocessing. It includes risk encoding, reputation-based transformations, temporal decomposition, and behavioral ratios. These operations enrich the semantic representation of network flows, enhance the model’s discriminative capability, and reduce false positives.

Handling class imbalance with SMOTE: We incorporate the Synthetic Minority Oversampling Technique (SMOTE) to balance classes in real-world traffic datasets. This step mitigates the tendency of the model to favor the majority class and improves its generalization across diverse network scenarios, ensuring consistent results even when anomalies represent only a small fraction of total traffic.

Empirical validation with real encrypted traffic: We conduct an extensive evaluation using real traces from a corporate firewall, including encrypted HTTPS flows. The results demonstrate high accuracy, scalability, and resilience, highlighting the practical applicability of the proposed approach in contexts where encrypted traffic dominates and traditional inspection mechanisms become insufficient.

Critical discussion and future directions: In addition to reporting performance metrics, we provide a critical discussion of the strengths and limitations of the proposed architecture. We also highlight its potential for integration into real-time monitoring systems and outline future extensions toward lightweight deployments in edge computing environments, which would facilitate adaptive use in corporate and educational scenarios.

The remainder of this article is organized as follows. Section 2 reviews the related work. Section 3 describes the dataset preparation, feature-engineering steps, and the proposed methodology. Section 4 presents the experimental results and provides a detailed discussion of the findings, including the strengths and limitations of the proposed model. Finally, Section 5 concludes the study and outlines promising directions for future research.

2. Related Work

In recent years, anomaly detection in network traffic has increasingly moved toward hybrid architectures that combine supervised and unsupervised approaches, addressing the growing complexity of distributed environments and the widespread use of encrypted protocols such as TLS/HTTPS. These models integrate clustering for unsupervised segmentation with supervised techniques and deep sequential networks capable of capturing both spatial and temporal patterns, thereby improving accuracy and generalization capabilities against emerging threats. In this context, a review of the state of the art highlights the most relevant proposals that employ clustering, classification, and sequential modeling, laying the foundation for justifying hybrid architectures in real and encrypted traffic scenarios.

Intrusion detection and anomalous traffic analysis in networks have evolved toward hybrid architectures that integrate unsupervised clustering techniques with deep learning models. This trend responds to the need to overcome the limitations of purely supervised approaches, which depend on labeled datasets that are often unrepresentative or imbalanced and vulnerable to the emergence of unknown attacks (zero-day attacks). In this regard, multiple studies have demonstrated that the combination of clustering algorithms and sequential models significantly enhances accuracy, robustness, and generalization in heterogeneous real-traffic scenarios [15,16].

An early example of this paradigm is the hybrid proposal based on K-means and CNN-LSTM, where the initial clustering process reduces noise and segments traffic patterns, allowing the sequential model to capture temporal dependencies more effectively. Experimental results showed that the combination of clustering and recurrent neural networks achieved higher precision and recall compared to approaches relying solely on deep learning, validating the added value of this strategy [15]. Furthermore, the integration of K-means with oversampling techniques, particularly SMOTE, has demonstrated high effectiveness in addressing class imbalance, which is intrinsic to network traffic logs where normal flows overwhelmingly outnumber attack traces. In our experiments, this combination not only balanced the dataset but also enhanced the stability of clustering-based labeling, ultimately leading to improved classification performance in the subsequent CNN-GRU model. The combination of clustering with synthetic balancing not only mitigates the bias toward the majority class but also increases the capacity to detect low-frequency attacks, thereby improving the overall sensitivity of the system [16].

Recent research has also emphasized the ability of CNN-GRU-based architectures to simultaneously capture spatial and temporal features in network data. Convolutional layers extract spatial patterns from packet headers and traffic flows, while GRUs model long-term sequential dependencies. This synergy has demonstrated superior performance compared to traditional models and other recurrent variants, particularly in detecting distributed intrusions in large-scale environments. In this regard, works integrating CNN-GRU under federated learning schemes have highlighted the scalability and efficiency of such models, ensuring privacy in decentralized settings without compromising accuracy [17,18].

In parallel, research on EFMS-KMeans has gained importance as an improved alternative to conventional K-means. Its mechanism, based on selecting dense centroids through electric potential estimation, enables the identification of more representative clusters with reduced sensitivity to random initialization. A recent study that combined EFMS-KMeans with GRU demonstrated that this integration not only enhances automatic label generation but also improves the quality of subsequent supervised training, achieving higher anomaly-detection accuracy while reducing false positives [19]. These findings are particularly relevant for encrypted traffic scenarios (TLS/HTTPS), where available features are limited, and robust segmentation becomes critical.

Additionally, comprehensive reviews of K-means variants and alternative clustering techniques reinforce the need to adopt more sophisticated approaches in cybersecurity environments. For instance, studies focused on mean-shift clustering highlight its ability to automatically determine the number of clusters without requiring initial parameters, while other works exploring density-optimized objective functions show significant improvements in detecting outliers and anomalous behaviors in high-dimensional datasets [20,21]. These contributions support the argument that incorporating EFMS-KMeans represents not only a technical extension but also a methodological strategy addressing scalability, heterogeneity, and volume challenges inherent to modern network traffic.

Taken together, these contributions demonstrate that the development of hybrid architectures integrating optimized clustering algorithms such as EFMS-KMeans with deep sequential models like CNN-GRU offers a promising framework for advanced anomaly detection in network traffic. The available empirical evidence confirms that this integration simultaneously addresses noise, class imbalance, encrypted traffic, and scalability, positioning such architectures as a robust and viable alternative for corporate and mission-critical environments.

3. Materials and Methods

The main objective of this section is to describe in detail the methodological framework that supports the proposed EFMS-KMeans + CNN-GRU hybrid architecture for anomaly detection in encrypted network traffic. The design of this section follows three guiding principles: (i) to ensure methodological transparency by clearly defining the mathematical foundations employed; (ii) to highlight the original contributions introduced in this work with respect to the state of the art; and (iii) to provide a structured roadmap of the procedures applied, ranging from clustering and feature engineering to class balancing and sequential deep learning modeling.

From a contribution perspective, this work advances existing research in three aspects. First, it introduces an Enhanced Fast Mean Shift (EFMS)-based initialization strategy for K-Means clustering, designed to obtain stable and representative dense centers without the need for multiple random restarts. Second, it integrates this clustering stage with a supervised CNN-GRU sequential classifier, allowing for robust detection of anomalous encrypted flows. Third, the proposed pipeline incorporates advanced feature engineering and class balancing with SMOTE, enabling better handling of the intrinsic imbalance present in real firewall traffic logs. The following subsections describe each methodological component in detail.

3.1. Clustering and Anomaly Detection Based on EFMS-KMeans

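For the centroid initialization phase in the clustering process, an approach based on dense center estimation using the Enhanced Fast Mean Shift (EFMS) algorithm was implemented. This method identifies regions of the feature space with high point density, computing the local centroid $m(x)$ as the average of the neighboring samples $x_i$ within a radius $r$, according to the following expression:

$$m(x) = \frac{1}{|N_r(x)|} \sum_{x_i \in N_r(x)} x_i \qquad (1)$$

where $N_r(x) = \{x_i : \lVert x_i - x \rVert \le r\}$ denotes the set of samples lying within radius $r$ of $x$.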

Equation (1) follows the general formulation of mean shift-based density estimation widely adopted in the clustering literature [22,23,24,25,26]. In our approach, EFMS extends this principle by incorporating adaptive kernel bandwidth and efficient neighborhood search, improving scalability when applied to large-scale firewall log datasets. This represents the first original contribution of this work.
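The dense-center estimation described above can be illustrated with a minimal sketch. It assumes a flat kernel with a fixed radius, so it does not reproduce the adaptive bandwidth or the accelerated neighborhood search attributed to EFMS; the function names `mean_shift_step` and `dense_center` and the toy data are hypothetical.

```python
import numpy as np

def mean_shift_step(points, x, radius):
    """One flat-kernel mean-shift step: average of the samples within `radius` of x."""
    dists = np.linalg.norm(points - x, axis=1)
    neighbors = points[dists <= radius]
    if len(neighbors) == 0:          # empty neighborhood: keep the current position
        return x
    return neighbors.mean(axis=0)

def dense_center(points, start, radius, max_iter=50, tol=1e-6):
    """Repeat the step from `start` until the shift falls below `tol`."""
    x = np.asarray(start, dtype=float)
    for _ in range(max_iter):
        new_x = mean_shift_step(points, x, radius)
        shift = np.linalg.norm(new_x - x)
        x = new_x
        if shift < tol:
            break
    return x

# Toy data (hypothetical): one dense blob around (5, 5) plus sparse background noise.
rng = np.random.default_rng(0)
blob = rng.normal(loc=[5.0, 5.0], scale=0.3, size=(200, 2))
noise = rng.uniform(0.0, 10.0, size=(20, 2))
points = np.vstack([blob, noise])

center = dense_center(points, start=points[0], radius=1.5)
print(center)  # expected to land close to the blob center
```

Starting the iteration from several samples and merging the nearby end points would yield one dense center per high-density region, which is the role these centers play as seeds in the next step.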

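Once dense centers are obtained, they are used as initialization seeds in the K-Means algorithm, which minimizes the intra-cluster distance objective function:

$$J = \sum_{j=1}^{K} \sum_{x_i \in C_j} \lVert x_i - \mu_j \rVert^2 \qquad (2)$$

where $C_j$ is the set of points assigned to cluster $j$, $\mu_j$ is its centroid, and $K$ is the number of clusters.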

Equation (2) corresponds to the standard K-Means objective function commonly employed in clustering approaches [22,23]. In this work, its novelty lies not in the mathematical definition itself, but in the integration with EFMS-based initialization, which ensures that the centroids are representative of the intrinsic data structure and reduces the internal variability within each cluster. In practice, the optimization is carried out iteratively, alternating between two steps: (i) assignment of each data point to the nearest centroid, and (ii) updating each centroid as the mean of the points assigned to it.

This clustering stage provides a robust unsupervised mechanism for anomaly detection, generating preliminary labels that are later leveraged by the supervised CNN-GRU model. By combining EFMS with K-Means, the methodology achieves a balance between computational efficiency and improved cluster stability, which is particularly relevant in heterogeneous and imbalanced traffic scenarios.

In the developed implementation, this optimization is specifically performed in the following call:

In this line, the fit_predict() method internally executes the iterative minimization process described above, using the dense centers generated by EFMS as initial points to promote faster convergence and a more accurate partitioning of the feature space. The use of dense centers as initialization increases the stability and consistency of the clustering process, reducing the likelihood of convergence to suboptimal local minima and improving the separation between clusters, thereby producing more coherent labels for the subsequent supervised phase based on the CNN-GRU architecture. This synergy between density estimation and iterative optimization of K-Means constitutes a key element for maximizing the performance and reliability of the proposed hybrid approach.
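As an illustration of the two alternating steps, the following NumPy sketch runs the assignment and update iterations from a given set of seed centers. It is not the authors' call: the function name `kmeans_from_seeds`, the seeds, and the toy data are hypothetical. With scikit-learn, passing the dense centers as an array through `KMeans(n_clusters=K, init=seeds, n_init=1)` and then calling `fit_predict` would play the same role.

```python
import numpy as np

def kmeans_from_seeds(X, seeds, max_iter=100, tol=1e-8):
    """Lloyd iterations started from user-supplied seed centroids."""
    centroids = np.asarray(seeds, dtype=float).copy()
    for _ in range(max_iter):
        # (i) assignment: each point goes to its nearest centroid
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # (ii) update: each centroid becomes the mean of its assigned points
        new_centroids = centroids.copy()
        for j in range(len(centroids)):
            members = X[labels == j]
            if len(members) > 0:      # leave an empty cluster's centroid unchanged
                new_centroids[j] = members.mean(axis=0)
        moved = np.linalg.norm(new_centroids - centroids)
        centroids = new_centroids
        if moved < tol:
            break
    # Final assignment and objective value (sum of squared distances)
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    labels = dists.argmin(axis=1)
    inertia = float((dists.min(axis=1) ** 2).sum())
    return labels, centroids, inertia

# Toy data (hypothetical): two well-separated blobs and seeds close to each of them.
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.5, size=(100, 2)),
               rng.normal(6.0, 0.5, size=(100, 2))])
seeds = np.array([[0.5, 0.5], [5.5, 5.5]])

labels, centroids, inertia = kmeans_from_seeds(X, seeds)
print(centroids, inertia)
```

Because the seeds already sit near the dense regions, a single run is used here instead of several random restarts.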

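The anomaly label is defined using the threshold $\tau$ corresponding to the 95th percentile of the distance to the nearest centroid:

$$y_i = \begin{cases} 1 \ (\text{anomalous}), & d_i > \tau \\ 0 \ (\text{normal}), & d_i \le \tau \end{cases}$$

where $d_i = \min_j \lVert x_i - \mu_j \rVert$ is the distance from sample $x_i$ to its nearest centroid and $\tau$ is the 95th percentile of the values $d_i$.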

This technique has been successfully applied in similar models based on GRU [27]. In addition, the definition of the anomaly threshold as the 95th percentile of the distance to the nearest centroid has been widely recognized in the literature as a robust and statistically grounded criterion. This choice ensures that only the most extreme 5% of samples are considered anomalous, thereby achieving a balance between detection sensitivity and false alarm rate. For instance, Patel et al. demonstrated the effectiveness of applying the 95th percentile threshold in clustering-based anomaly detection of financial transactions, where outliers exhibited significantly higher distances to cluster centroids compared to normal instances [28]. Similarly, unsupervised approaches applied to sensor data in marine engines confirm that percentile-based thresholds allow for reliable anomaly isolation in heterogeneous environments without requiring labeled data [29]. Furthermore, recent studies in vehicular network traffic detection reinforce the relevance of percentile-based adaptive thresholds as part of anomaly scoring mechanisms integrated with deep learning models [27]. These consistent findings across domains justify the adoption of the 95th percentile threshold in this work, ensuring methodological rigor and comparability with existing high-impact research.
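The percentile rule can be sketched in a few lines. This is an illustrative example under stated assumptions: the function name `label_by_percentile`, the fixed centroids, and the random data are hypothetical, and the threshold is computed with NumPy's default linear interpolation.

```python
import numpy as np

def label_by_percentile(X, centroids, q=95.0):
    """Flag as anomalous (1) the samples whose nearest-centroid distance exceeds the q-th percentile."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    d_min = dists.min(axis=1)            # distance to the nearest centroid
    tau = np.percentile(d_min, q)        # threshold at the q-th percentile
    labels = (d_min > tau).astype(int)
    return labels, d_min, tau

# Toy data (hypothetical): 1000 samples and two fixed centroids.
rng = np.random.default_rng(2)
X = rng.normal(size=(1000, 3))
centroids = np.array([[0.0, 0.0, 0.0], [3.0, 3.0, 3.0]])

labels, d_min, tau = label_by_percentile(X, centroids)
print(tau, labels.mean())  # roughly 5% of the samples are flagged
```

Note that the rule flags about 5% of the samples by construction, whatever the true anomaly rate of the data.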

3.2. Dimensionality Reduction and Feature Engineering

The data preprocessing included a critical stage of dimensionality reduction and the generation of new derived variables (feature engineering), aimed at optimizing the model’s discriminative capability, reducing redundancy, and accelerating the training of algorithms.

3.2.1. Dimensionality Reduction

Principal Component Analysis (PCA) was applied to project the original data onto a lower-dimensional orthogonal subspace, preserving as much variance as possible from the original set. This technique allows maintaining relevant information while eliminating noise from highly correlated attributes. Mathematically, the transformation is defined as:

$$Z = XW$$

where:

$X \in \mathbb{R}^{n \times d}$ is the original feature matrix.

$W \in \mathbb{R}^{d \times k}$ contains the eigenvectors of the covariance matrix of X, associated with the largest eigenvalues.

$Z \in \mathbb{R}^{n \times k}$ is the reduced projection with $k < d$.

The use of PCA was particularly beneficial for visualizing clustering results and reducing the training time of complex models such as CNN-GRU.
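A minimal sketch of this projection through the eigendecomposition of the covariance matrix is shown below. The function name `pca_project`, the number of retained components, and the synthetic data are assumptions made for illustration; the data are mean-centered before projecting, as is usual for PCA.

```python
import numpy as np

def pca_project(X, k):
    """Project X onto the k eigenvectors of its covariance matrix with the largest eigenvalues."""
    Xc = X - X.mean(axis=0)                    # center each feature
    cov = np.cov(Xc, rowvar=False)             # d x d covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)     # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:k]      # indices of the k largest
    W = eigvecs[:, order]                      # d x k projection matrix
    Z = Xc @ W                                 # n x k reduced representation
    explained = eigvals[order].sum() / eigvals.sum()
    return Z, W, explained

# Toy data (hypothetical): 5 features, 3 of them nearly linear combinations of the other 2.
rng = np.random.default_rng(3)
base = rng.normal(size=(300, 2))
X = np.hstack([base, base @ rng.normal(size=(2, 3)) + 0.01 * rng.normal(size=(300, 3))])

Z, W, explained = pca_project(X, k=2)
print(Z.shape, round(float(explained), 4))
```

The `explained` ratio gives a simple check of how much variance survives the reduction before the projected data are passed on.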

3.2.2. Feature Engineering

Additionally, new variables were derived from the original dataset to capture complex relationships not explicitly represented in raw logs. Some of these correspond to standard metrics widely adopted in network traffic analysis, such as bytes_total and pkt_ratio, which summarize traffic volume and balance between sent and received packets. In contrast, other features were specifically designed within this study. In particular, the distance_to_centroid variable was introduced during the EFMS-KMeans clustering stage, where the Euclidean distance of each data point to its assigned cluster centroid was calculated and used as a predictive attribute. This combination of established traffic descriptors with newly formulated clustering-based features strengthens the representational capacity of the dataset and enhances the robustness of the proposed anomaly-detection architecture. Some of the most relevant incorporated variables were:

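The feature bytes_total represents the total traffic volume in a session, summing the sent bytes $B_{\text{sent}}$ and the received bytes $B_{\text{recv}}$:

$$\text{bytes\_total} = B_{\text{sent}} + B_{\text{recv}}$$

The feature pkt_ratio is the ratio between the sent packets $P_{\text{sent}}$ and the received packets $P_{\text{recv}}$, useful for detecting unbalanced or suspicious sessions:

$$\text{pkt\_ratio} = \frac{P_{\text{sent}}}{P_{\text{recv}}}$$

The feature distance_to_centroid is the Euclidean distance of each point to the centroid of its cluster (calculated after EFMS-KMeans), used as a predictive variable:

$$\text{distance\_to\_centroid}_i = \lVert x_i - \mu_{c_i} \rVert_2$$

where $x_i$ is the feature vector of point i, and $\mu_{c_i}$ is the centroid of the assigned cluster $c_i$.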

This feature-engineering process, aligned with findings from recent studies [30,31], improved the semantic representation of traffic, strengthened the classification stage, and reduced the false positive rate. The new variables were selected after an exploratory analysis that considered their correlation with anomaly labels and their distribution within the global dataset.
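The three derived variables can be computed per session as in the sketch below. The dictionary keys (`bytes_sent`, `bytes_received`, `pkts_sent`, `pkts_received`, `vector`) are hypothetical field names, not the columns of the actual firewall logs, and the small `eps` guard against division by zero is an added assumption.

```python
import math

def derive_features(session, centroid, eps=1e-9):
    """Compute bytes_total, pkt_ratio and distance_to_centroid for one session record."""
    bytes_total = session["bytes_sent"] + session["bytes_received"]
    # eps avoids a division by zero when no packets were received (assumption)
    pkt_ratio = session["pkts_sent"] / (session["pkts_received"] + eps)
    # Euclidean distance between the session's feature vector and its cluster centroid
    distance = math.dist(session["vector"], centroid)
    return {
        "bytes_total": bytes_total,
        "pkt_ratio": pkt_ratio,
        "distance_to_centroid": distance,
    }

# Hypothetical session record and centroid, for illustration only.
session = {
    "bytes_sent": 1200,
    "bytes_received": 800,
    "pkts_sent": 10,
    "pkts_received": 5,
    "vector": (3.0, 4.0),
}
features = derive_features(session, centroid=(0.0, 0.0))
print(features)
```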

Overall, the combination of PCA-based dimensionality reduction and the generation of derived features significantly contributed to the final performance of the EFMS-KMeans + CNN-GRU architecture, particularly by enhancing the segmentation of anomalous traffic and facilitating the detection of complex patterns in encrypted environments.

3.3. Class Balancing with SMOTE

One of the main challenges in detecting malicious traffic is the marked class imbalance inherent to real-world data, where anomalous instances represent a very small fraction compared to legitimate traffic. This disproportion can bias the learning process of supervised models, reducing their sensitivity to critical events and increasing false negative rates.

To mitigate this problem, the Synthetic Minority Over-sampling Technique (SMOTE) was applied, which has been widely validated in cybersecurity and anomaly-detection scenarios [30]. SMOTE generates new synthetic instances of the minority class by controlled interpolation between nearby examples in the feature space, preserving the data distribution without direct duplication or random noise injection. The generation of a new synthetic sample $x_{\text{new}}$ is defined as:

$$x_{\text{new}} = x + \lambda \, (x_{nn} - x)$$

where:

x is a sample of the minority class

$x_{nn}$ is one of its k nearest neighbors

$\lambda \in [0, 1]$ is a random coefficient.

This technique was implemented after preprocessing and before supervised training (CNN-GRU), ensuring a balanced dataset that facilitates the learning of discriminative representations even for rare anomalous patterns. As a result, a substantial improvement was observed in metrics such as recall and F1-score, without sacrificing the overall accuracy of the model. Furthermore, the use of SMOTE is particularly suitable in environments with moderately sequential data, as it does not alter the original semantics of the series while preserving input coherence.
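The interpolation at the core of SMOTE can be sketched as follows. This is a simplified illustration, not the implementation used in the study: the function name `smote_sample`, the neighbor count, and the random minority samples are hypothetical. In practice a library such as imbalanced-learn, whose `SMOTE` class exposes `fit_resample`, would typically be used.

```python
import numpy as np

def smote_sample(minority, k=5, n_new=10, seed=0):
    """Create n_new synthetic points by interpolating minority samples with their neighbors."""
    rng = np.random.default_rng(seed)
    minority = np.asarray(minority, dtype=float)
    n = len(minority)
    k = min(k, n - 1)                                   # cannot have more neighbors than samples
    d = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=2)
    np.fill_diagonal(d, np.inf)                         # a sample is not its own neighbor
    neighbors = np.argsort(d, axis=1)[:, :k]            # indices of the k nearest neighbors
    synthetic = []
    for _ in range(n_new):
        i = rng.integers(n)                             # random minority sample x
        j = neighbors[i, rng.integers(k)]               # one of its k nearest neighbors
        lam = rng.random()                              # random coefficient in [0, 1)
        synthetic.append(minority[i] + lam * (minority[j] - minority[i]))
    return np.array(synthetic)

# Toy minority class (hypothetical): 12 samples with 3 features.
rng = np.random.default_rng(5)
minority = rng.normal(size=(12, 3))
synthetic = smote_sample(minority, k=5, n_new=20)
print(synthetic.shape)
```

Each synthetic point lies on the segment between a minority sample and one of its neighbors, so it stays inside the region already occupied by the minority class.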

3.4. Sequential Modeling with CNN and GRU

To capture spatial and temporal patterns in encrypted network traffic, a hybrid model composed of a one-dimensional convolutional neural network (1D-CNN) followed by a Gated Recurrent Unit (GRU) network was employed. This design allows the detection of local correlations between adjacent features and the modeling of temporal evolution in traffic sessions.

3.4.1. Convolutional Neural Network (CNN)

The convolutional layer extracts local patterns from input sequences using sliding filters. The main operation is the following:

$$y_i^{(k)} = f\left( \sum_{j=0}^{m-1} w_j^{(k)} \, x_{i+j} + b^{(k)} \right)$$

where:

x represents the input sequence,

$w^{(k)}$ are the weights of filter k,

$b^{(k)}$ is the bias,

$f$ is the activation function (ReLU in this case),

$y_i^{(k)}$ is the output of neuron i of filter k,

and m is the kernel size.

This operation is repeated for multiple filters and is followed by a pooling layer to reduce dimensionality and preserve relevant features.
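The sliding-filter operation and the subsequent dimensionality reduction can be illustrated for a single filter on a single-channel sequence. The sketch assumes stride 1, no padding, and max pooling; the function names and the numeric values are hypothetical.

```python
import numpy as np

def conv1d_relu(x, w, b):
    """Slide filter w over x (stride 1, no padding), add the bias and apply ReLU."""
    m = len(w)                                           # kernel size
    out = np.array([np.dot(w, x[i:i + m]) + b for i in range(len(x) - m + 1)])
    return np.maximum(out, 0.0)                          # ReLU activation

def max_pool1d(y, size=2):
    """Non-overlapping max pooling; trailing elements that do not fill a window are dropped."""
    n = len(y) // size
    return y[:n * size].reshape(n, size).max(axis=1)

# Hypothetical input sequence and filter.
x = np.array([0.0, 1.0, 3.0, 2.0, 5.0, 4.0])
w = np.array([1.0, -2.0, 1.0])

feature_map = conv1d_relu(x, w, b=0.0)
pooled = max_pool1d(feature_map, size=2)
print(feature_map)  # [1. 0. 4. 0.]
print(pooled)       # [1. 4.]
```

In a full layer the same computation is repeated for every filter, producing one feature map per filter.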

3.4.2. Gated Recurrent Unit (GRU)

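After feature extraction, a GRU is used to model temporal dependencies. GRUs preserve long-term information using gates that control the information flow. Their operation is governed by the following equations:

$$z_t = \sigma(W_z x_t + U_z h_{t-1})$$

$$r_t = \sigma(W_r x_t + U_r h_{t-1})$$

$$\tilde{h}_t = \tanh\left(W_h x_t + U_h (r_t \odot h_{t-1})\right)$$

$$h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$$

where:

$x_t$ is the input vector at time t,

$h_t$ is the updated hidden state, with $\tilde{h}_t$ its candidate value,

$z_t$ is the update gate,

$r_t$ is the reset gate,

$\sigma$ is the logistic sigmoid and ⊙ denotes the element-wise product,

and W, U are weight matrices learned during training.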

This mechanism enables the model to capture anomalous patterns that emerge in traffic sequences, such as flows with atypical features in their temporal evolution.
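A single GRU time step can be sketched as follows, assuming the common convention $h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t$ and omitting bias terms. The parameter names, dimensions, and random weights are hypothetical and stand in for values that would be learned during training.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def gru_step(x_t, h_prev, params):
    """One GRU step: update gate z, reset gate r, candidate state, new hidden state."""
    z = sigmoid(params["Wz"] @ x_t + params["Uz"] @ h_prev)            # update gate
    r = sigmoid(params["Wr"] @ x_t + params["Ur"] @ h_prev)            # reset gate
    h_cand = np.tanh(params["Wh"] @ x_t + params["Uh"] @ (r * h_prev)) # candidate state
    return (1.0 - z) * h_prev + z * h_cand                             # gated blend

# Hypothetical sizes and random weights, for illustration only.
rng = np.random.default_rng(4)
n_in, n_hidden = 3, 4
params = {
    name: rng.normal(scale=0.5, size=(n_hidden, n_in if name.startswith("W") else n_hidden))
    for name in ("Wz", "Uz", "Wr", "Ur", "Wh", "Uh")
}

h = np.zeros(n_hidden)                       # initial hidden state
for x_t in rng.normal(size=(5, n_in)):       # a sequence of 5 time steps
    h = gru_step(x_t, h, params)
print(h)
```

The final hidden state summarizes the whole sequence and is what a downstream dense layer would consume for classification.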

This approach has proven effective in multiple recent studies focused on encrypted traffic, IoT networks, and distributed detection. For example, in [

32], the efficiency of an optimized CNN-GRU architecture for IIoT environments over edge computing is demonstrated, highlighting its low resource consumption and high malware-detection accuracy. In [

33], a hybrid strategy for anomaly detection in firewall logs is presented, integrating artificially generated data and deep learning techniques such as CNN-GRU, reinforcing its applicability in encrypted traffic analysis. Finally, the study in [

34] validates the use of advanced AI-based methods, including recurrent and convolutional architectures, for automated threat detection in security logs, achieving outstanding precision and robustness. As shown in (

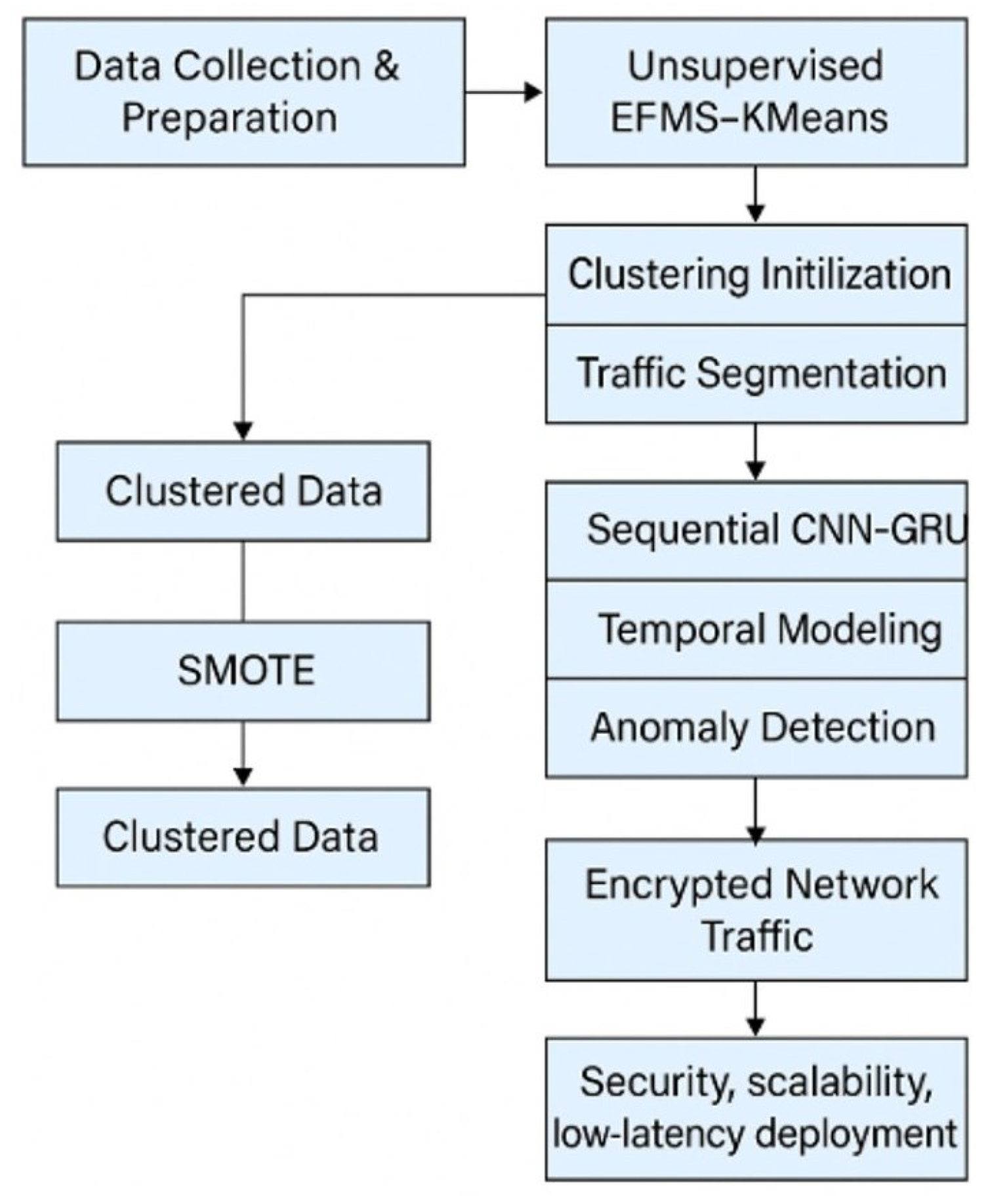

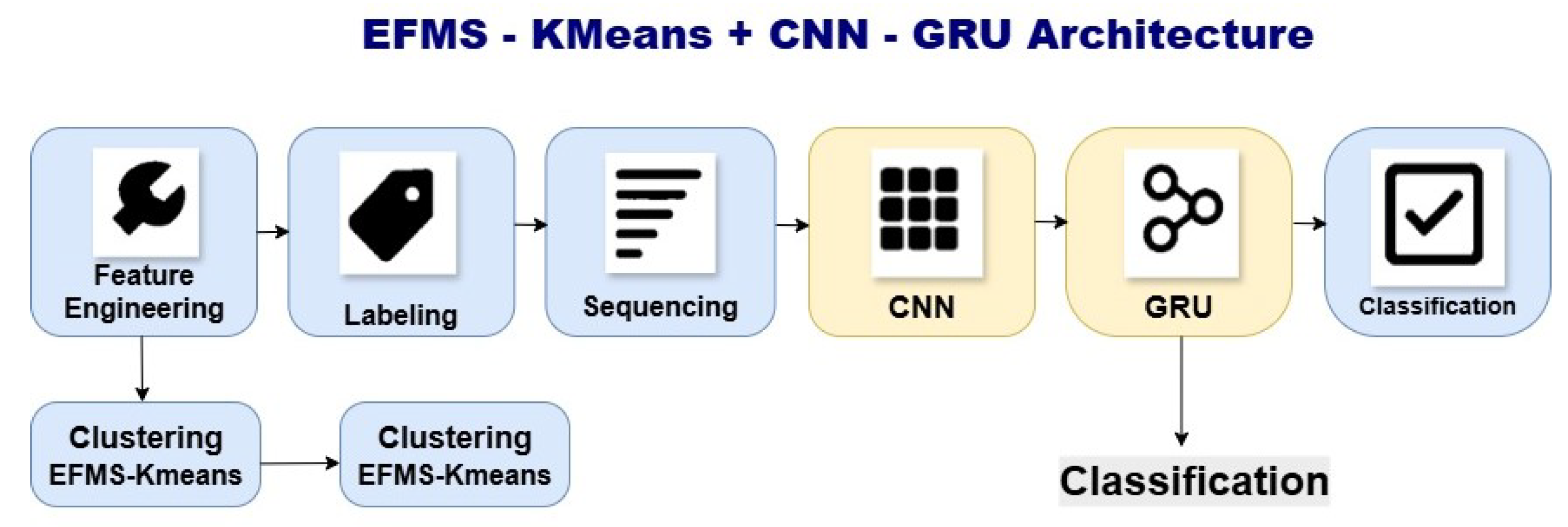

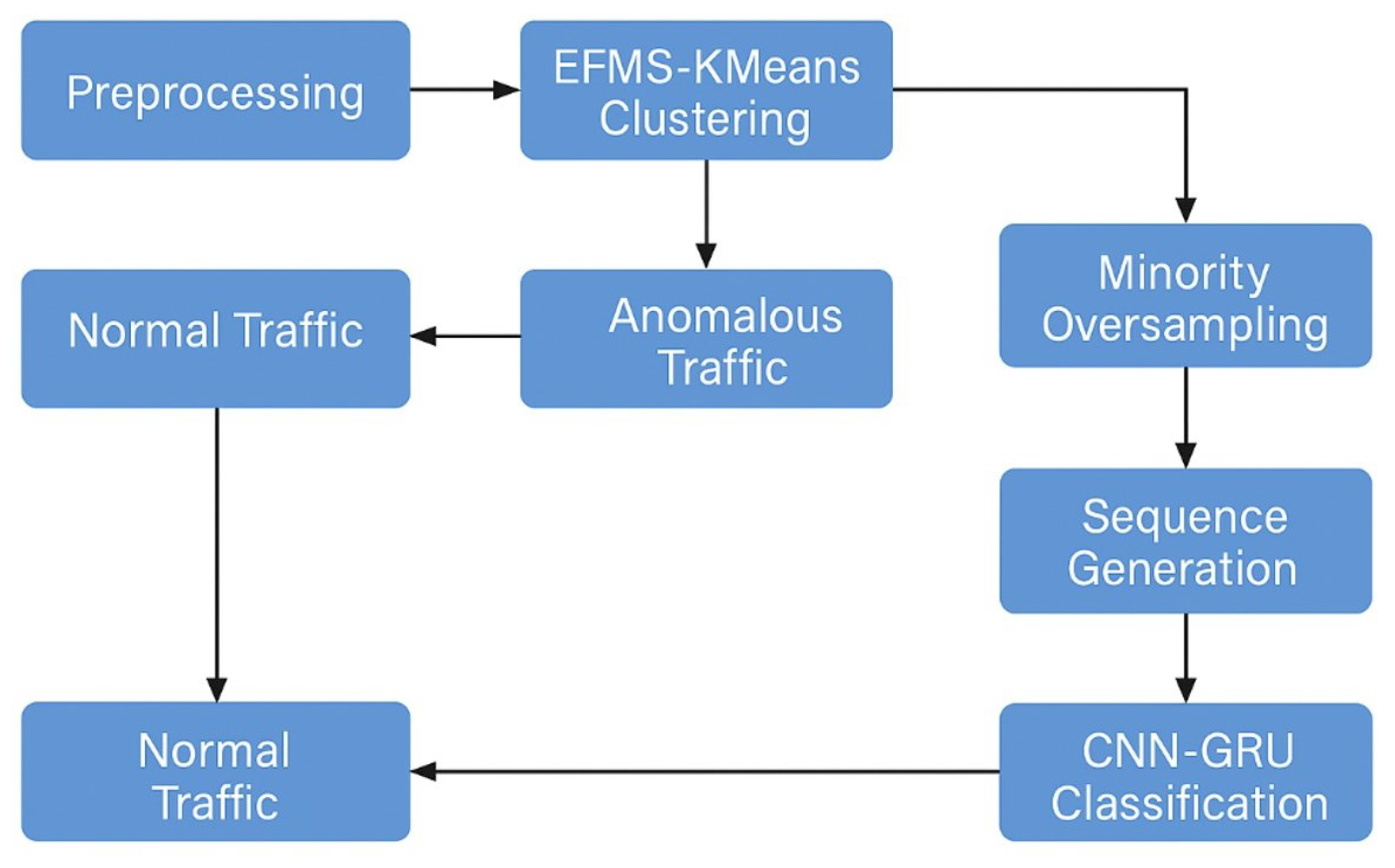

Figure 1), the proposed hybrid architecture combines EFMS-KMeans clustering with a CNN-GRU sequential classifier.

3.5. Comparison with Unsupervised Models

The proposed EFMS-KMeans-based approach was compared with other widely used unsupervised anomaly-detection algorithms in the cybersecurity domain, such as Isolation Forest (iForest), DBSCAN, and unsupervised Autoencoders. Each of these models presents particular strengths but also challenges that limit their applicability in real-time production environments or resource-constrained devices.

Isolation Forest identifies anomalies based on the principle that anomalous points are easier to isolate than normal ones. Its computational complexity is generally acceptable, and it has proven effective in high-dimensional spaces; however, it requires careful tuning of hyperparameters such as the number of trees and the fraction of samples per tree, which can impact efficiency in edge systems [35].

DBSCAN (Density-Based Spatial Clustering of Applications with Noise) is effective for discovering arbitrarily shaped cluster structures and detecting outliers as noisy points. Nevertheless, its performance degrades on large, high-dimensional datasets, and its sensitivity to the parameters ε (neighborhood radius) and minPts can produce unstable results in encrypted and noisy network data.

Unsupervised Autoencoders, in turn, encode and decode inputs to detect anomalies through reconstruction error. While they can achieve high accuracy, they require significant computational power, large volumes of training data, and a well-calibrated architecture to avoid overfitting or the loss of relevant information [36]. Additionally, their interpretability is often limited, which hinders their adoption in security environments where decision traceability is critical.

In contrast, EFMS-KMeans offers high interpretability, low computational cost, and robust centroid initialization through the Enhanced Fast Mean Shift (EFMS) mechanism, reducing sensitivity to initial conditions and improving traffic segmentation. This makes it a strong candidate for hardware-constrained environments, such as perimeter gateways, IoT networks, or distributed systems where efficiency and rapid response are essential.

Thus, although there are promising alternatives in the unsupervised domain, the combination of simplicity, stability, and ease of integration makes EFMS-KMeans more suitable for scenarios prioritizing efficient execution without significantly compromising detection capabilities.

3.6. Application in Edge Computing and Distributed Environments

The integration of real-time detection capabilities directly into perimeter infrastructure (edge computing) represents a key strategy for enhancing the effectiveness of defense systems in encrypted networks and heterogeneous environments. The proposed approach combining EFMS-KMeans for unsupervised detection and CNN-GRU for sequential classification offers key features for adoption in network gateways, IoT devices, and industrial environments with limited resources:

1. Low computational cost. The EFMS-KMeans algorithm, thanks to its linear complexity, enables automatic anomaly detection without requiring supervised training or intensive computation, making it well suited for deployment on perimeter devices such as firewalls or routers. The CNN-GRU model uses lightweight structures (a single convolutional layer and GRU units instead of LSTM), reducing memory and processing requirements without compromising detection quality.

2. Minimal latency and local operation. On-edge inference avoids the latency and the risks associated with transferring data to the cloud. Recent studies have shown that such architectures can achieve latencies below 15 ms, even in distributed infrastructures such as IIoT [32].

3. Modular and federated designs. By separating the clustering, labeling, sequencing, and classification stages, the proposed design facilitates modular implementation and independent updating of each component. This modularity is critical for integration into federated learning architectures in distributed environments, preserving data privacy [32].

4. Scalability in distributed environments. The architecture can be easily replicated across heterogeneous edge nodes, enabling the deployment of hierarchical solutions that adjust model execution according to available computational load, ensuring adaptability and efficiency under changing network traffic conditions.

Overall, the EFMS-KMeans + CNN-GRU hybrid architecture improves anomaly detection on NetFlow-style traffic and aligns with edge deployment constraints through modular design, on-device inference, and reduced reliance on cloud backends. In the literature, self-supervised, NetFlow-based GNN NIDS that exploit edge features report compact models with short per-flow testing times, indicative of real-time feasibility, while edge-deployed CNN-GRU approaches in IIoT emphasize local processing and latency-aware inference under resource constraints [32,37].

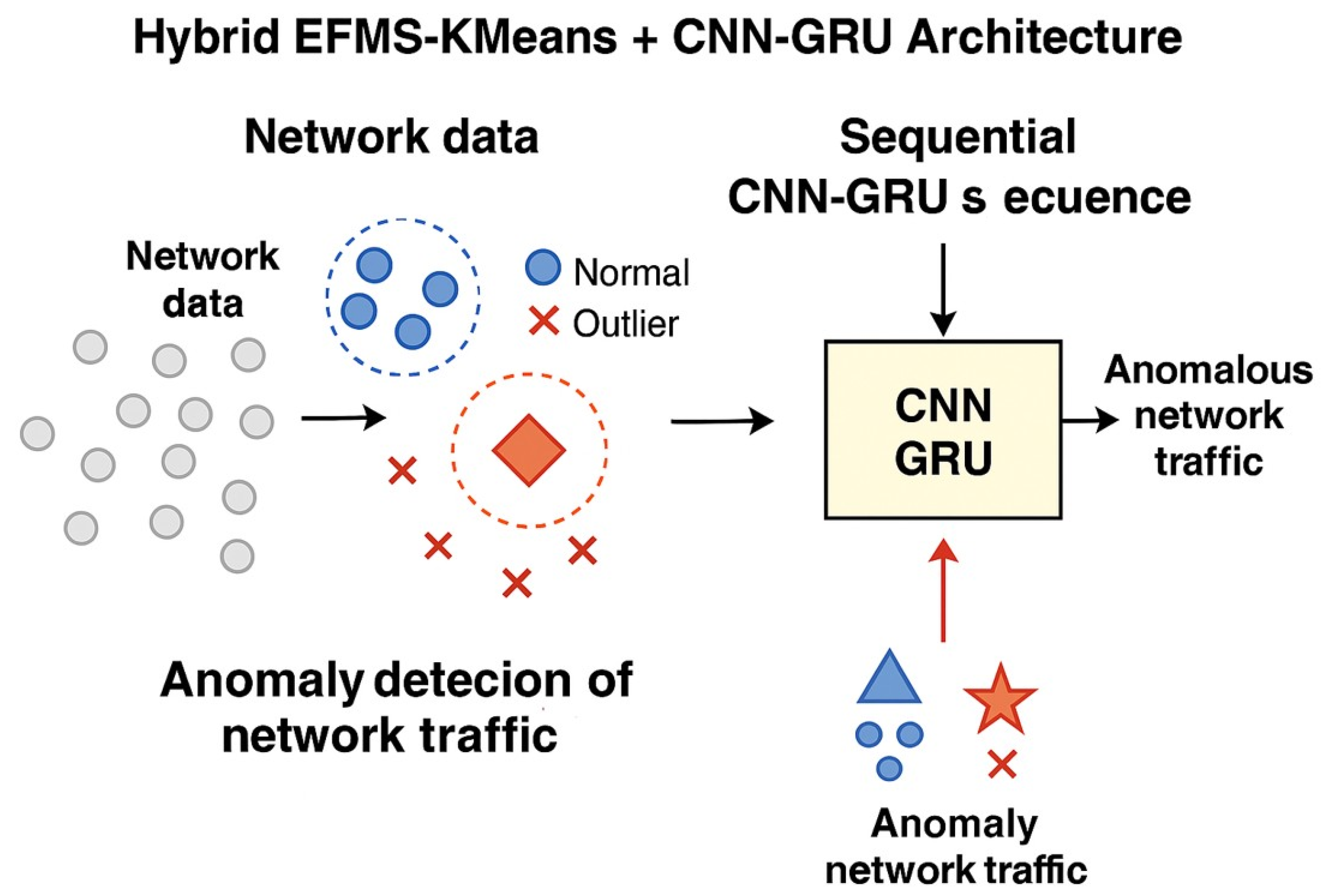

3.7. Hybrid EFMS-KMeans + CNN-GRU Architecture

This work proposes a hybrid architecture that combines unsupervised detection through EFMS-KMeans with supervised classification based on a sequential CNN-GRU model. This combination leverages the strengths of both approaches: precise traffic segmentation without labels and effective modeling of temporal dependencies in encrypted flows.

The processing pipeline consists of the following phases:

1. Feature Engineering: New variables such as pkt_ratio and distance_to_centroid are generated, along with temporal variables derived from timestamps.

2. EFMS-KMeans Clustering: Patterns are identified using density-based initialization with EFMS, which reduces the risk of converging to poor local minima and improves segmentation compared to traditional K-Means [

33].

3. SMOTE Balancing: The minority class is expanded to avoid bias during supervised training.

4. Sequencing: The labeled data are transformed into temporal sequences using sliding windows.

5. CNN-GRU: The CNN extracts local features, while the GRU models long-term dependencies in encrypted sequences [

32,

33,

34].

6. Final Classification: A dense layer with sigmoid activation classifies the sequences as anomalous or normal.
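As a rough illustration of the feature-engineering phase, the sketch below derives pkt_ratio, distance_to_centroid, and two timestamp-based variables for a single flow. The field names `sentpkt`, `rcvdpkt`, and `timestamp`, the definition of pkt_ratio as sent over received packets, and the choice of hour and weekday as temporal variables are assumptions made for illustration; the article does not give these definitions.

```python
import math
from datetime import datetime


def engineer_features(flow, centroid, feature_keys):
    """Return a copy of `flow` with derived variables added (illustrative definitions)."""
    out = dict(flow)
    # Assumed definition: sent packets over received packets (+1 avoids division by zero).
    out["pkt_ratio"] = flow["sentpkt"] / (flow["rcvdpkt"] + 1)
    # Euclidean distance from the flow's feature vector to its cluster centroid.
    vector = [flow[key] for key in feature_keys]
    out["distance_to_centroid"] = math.dist(vector, centroid)
    # Assumed temporal variables derived from the timestamp.
    ts = datetime.fromisoformat(flow["timestamp"])
    out["hour"] = ts.hour
    out["weekday"] = ts.weekday()  # Monday == 0
    return out


# Hypothetical flow record and centroid, used only to exercise the function.
flow = {"sentpkt": 30, "rcvdpkt": 9, "timestamp": "2024-03-04T14:25:00"}
features = engineer_features(flow, centroid=[27.0, 13.0], feature_keys=["sentpkt", "rcvdpkt"])
print(features)
```

In practice the centroid would come from the clustering phase, so distance_to_centroid can only be computed once cluster centers are available.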

This modular design (

Figure 2) is highly efficient for real-time detection of encrypted (HTTPS) traffic, particularly in edge environments where lightweight and accurate solutions are required. By integrating unsupervised clustering, data balancing, and sequential deep learning into a unified pipeline, the architecture ensures both robustness and adaptability under heterogeneous traffic conditions. Furthermore, the modularity of the design facilitates future extensions, such as the incorporation of online learning mechanisms to cope with evolving network behaviors, and the integration of explainable AI (XAI) techniques to enhance transparency and trustworthiness in critical infrastructures. This makes the proposed solution not only technically competitive but also operationally viable for deployment in security-sensitive environments.

The modular design is also depicted in Figure 3. Recent studies report that CNN-GRU outperforms purely recurrent architectures (such as LSTM), offering a favorable balance between computational performance and accuracy for network time series [

34,

35,

38].

Additionally, EFMS as an initialization technique significantly improves the stability of K-Means compared to random centroid selection, preventing premature convergence to local minima and optimizing the initial segmentation of the feature space. Complementarily, Zhao et al. [

39] propose a multi-information fusion anomaly-detection model combining a convolutional neural network (CNN) to extract local traffic features with an AutoEncoder to capture global information, demonstrating that integrating different feature sources improves detection accuracy, robustness, and generalization. This approach reinforces the relevance of using hybrid architectures to address the challenges of complex traffic environments. Likewise, Rashid et al. [

40] provide evidence in their analysis that hybrid models combining machine learning and deep learning techniques achieve a better balance between accuracy and computational efficiency compared to individual architectures, further supporting the approach proposed in this study.

CNN-GRU Model Architecture

The balanced data were scaled using MinMaxScaler and segmented into sequences of length 10 to preserve the temporal relationships of traffic patterns. A hybrid CNN–GRU architecture was constructed, composed of:

A one-dimensional convolutional layer (Conv1D) with 64 filters and a kernel size of 3, for extracting local spatial patterns.

A MaxPooling1D layer for intermediate dimensionality reduction.

A GRU layer with 64 units, responsible for modeling long-term dependencies.

A Dropout layer (rate 0.3) to mitigate overfitting.

A final dense layer with sigmoid activation, providing the binary prediction (normal vs. anomalous).

The model was trained for 30 epochs with a batch size of 64, using the Adam optimizer and the binary_crossentropy loss function. Twenty percent of the data were reserved for validation, and performance was evaluated using metrics such as precision, recall, F1-score, and confusion matrix.
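To make the "lightweight" claim concrete, the following sketch estimates the trainable parameters of the layer stack described above using standard per-layer formulas. Several values are assumptions not stated in the text: an input of 23 features per time step, "valid" convolution padding, a pooling size of 2, and a GRU with two bias vectors per gate (the layout Keras uses when `reset_after=True`). Under different settings the totals would change.

```python
def conv1d_params(in_channels, filters, kernel_size):
    # One kernel of shape (kernel_size, in_channels) plus one bias per filter.
    return kernel_size * in_channels * filters + filters


def gru_params(input_dim, units):
    # Three gate blocks, each with input weights, recurrent weights, and
    # two bias vectors (assumed Keras reset_after=True layout).
    return 3 * (input_dim * units + units * units + 2 * units)


def dense_params(input_dim, outputs):
    return input_dim * outputs + outputs


def summarize(window=10, n_features=23, filters=64, kernel_size=3, pool_size=2, units=64):
    conv_steps = window - kernel_size + 1    # "valid" padding (assumption)
    pooled_steps = conv_steps // pool_size   # pooling size 2 (assumption)
    params = {
        "conv1d": conv1d_params(n_features, filters, kernel_size),
        "gru": gru_params(filters, units),
        "dense": dense_params(units, 1),
    }
    # MaxPooling1D and Dropout have no trainable parameters.
    return {
        "conv_steps": conv_steps,
        "pooled_steps": pooled_steps,
        "params": params,
        "total_params": sum(params.values()),
    }


summary = summarize()
print(summary)
```

Under these assumptions the model has roughly 29.5 thousand trainable parameters, most of them in the GRU layer.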

The use of hybrid CNN–GRU architectures has proven effective for intrusion detection in network traffic, as they enable the joint capture of spatial and temporal patterns. Imrana et al. [

41] introduced a CNN–GRU-FF model incorporating a double-layer feature fusion mechanism and a modified focal loss to address class imbalance. Their approach demonstrated remarkable accuracy and significantly reduced false alarm rates, clearly outperforming conventional CNN- or GRU-based solutions.

Figure 4 illustrates the complete system flow: from dataset collection and preparation, through unsupervised EFMS-KMeans clustering and oversampling with SMOTE, to the final stage, in which the generated sequences are classified by the hybrid CNN–GRU model. This modular design enables both efficient detection and scalable implementation in distributed edge environments [

19].

3.8. Comparison and Experimental Validation

To validate the effectiveness of the proposed hybrid architecture (EFMS-KMeans + CNN-GRU), experiments were conducted using real network logs collected from a Fortinet FortiGate 2500E next-generation firewall deployed at a university campus network. This high-performance device monitors heterogeneous traffic generated by students, faculty, administrative services, and IoT-enabled infrastructures, thus providing a diverse mix of protocols, including TCP, UDP, ICMP, and TLS/HTTPS-encrypted flows. Leveraging such a real-world environment strengthens the external validity of the study, ensuring that the evaluation reflects the complexity of modern institutional networks and increasing the generalizability and practical applicability of the proposed model compared to approaches relying solely on simulated or synthetic datasets.

The data underwent a complete process of cleaning, feature engineering, and unsupervised labeling, followed by class balancing using SMOTE and temporal segmentation to feed the CNN-GRU model. The performance of the architecture was compared with other traditional models widely referenced in the literature (see

Table 1), using standard metrics such as Accuracy, F1-score, AUC, and Execution Time.

The proposed EFMS-KMeans model achieved superior performance across all evaluated metrics, reaching an accuracy of 0.961, an F1-Score of 0.971, and an AUC of 0.972, thereby consistently outperforming baseline approaches such as Isolation Forest, Autoencoder, and Random Forest (

Table 1). Although its execution time (4.8 s) was slightly higher than that of some classical methods, the model demonstrated a more robust trade-off between accuracy and efficiency, ensuring reliable detection in realistic high-throughput scenarios. Furthermore, the classification report obtained after SMOTE balancing confirmed the model’s strong generalization ability, with a macro-average of 0.98 in precision, recall, and F1-Score (

Table 2). These results highlight the capability of the proposed architecture to effectively discriminate anomalous traffic within encrypted and balanced flows, reinforcing its suitability for deployment in modern institutional and enterprise environments where traffic heterogeneity and class imbalance remain critical challenges.

These results align with recent research highlighting the importance of using authentic firewall logs, often complemented with controlled synthetic attacks, to validate intrusion-detection systems in realistic conditions. In particular, Yılmaz and Daş demonstrated that campus firewall data, when processed with advanced graph neural network architectures, provides a reliable comparative baseline for anomaly-detection tasks, outperforming classical shallow models by effectively capturing both global and local traffic patterns. This evidence supports the rationale of our approach, where the integration of feature engineering, data balancing, and hybrid modeling builds upon the same principle: ensuring that evaluations are conducted on data sources that reflect the heterogeneity and unpredictability of actual operational networks [

9].

Additionally, Ma [

42] highlights the importance of integrating real firewall logs and pattern visualization to enhance both human understanding and automated detection capabilities in distributed environments. This supports the applicability of our architecture in real-world scenarios, even with dynamic encrypted traffic and low-frequency attacks.

In summary, the EFMS-KMeans + CNN-GRU hybrid architecture not only confirms these empirical results but also introduces distinctive advantages beyond traditional metrics. EFMS-based centroid initialization enhances cluster stability and reliable pseudo-label generation, while the CNN-GRU sequential model effectively captures spatial and temporal patterns in traffic flows. Combined with feature engineering and SMOTE-based balancing, the architecture addresses the challenges of heterogeneous and imbalanced encrypted traffic. These design elements explain the robustness observed across metrics, while ensuring computational efficiency and adaptability to distributed corporate networks with dynamic HTTPS traffic and low-frequency attacks. Overall, the model emerges as both an accurate classifier and a practical solution for real-world cybersecurity deployments.

3.9. Dataset Preparation and EFMS-KMeans Clustering

The dataset used in this study corresponds to the original file named clean_data_forkmeans, which contains 951,560 records and 44 attributes derived from real traces generated by a corporate FortiGate firewall. These attributes capture multiple dimensions of network traffic behavior and can be conceptually organized into five main categories.

To ensure data quality, a rigorous preprocessing phase was applied, including the removal of records with null values, duplicates, or inconsistencies, as well as the transformation of categorical variables using appropriate encoding techniques. In order to reduce dimensionality and mitigate the risk of overfitting, key attributes related to traffic behavior were selected, such as the number of packets sent, the amount of bytes transmitted, the type of service, the destination reputation, and the actions applied by the firewall.

Table 3 provides a structured summary of the five categories of attributes considered in this study, including representative examples and their main purpose in traffic analysis.

Taken together, these 44 variables provide a comprehensive view of network traffic, combining information from flow characteristics, service context, destination reputation, security policies, and temporal patterns. In particular, attributes such as dstreputation_cat and apprisk add substantial value by linking the records to external threat indicators, thereby strengthening the detection of anomalous traffic in real-world, complex, and heterogeneous environments.

Subsequently, the EFMS-KMeans algorithm was applied, which combines a centroid initialization method based on density estimation (Electrostatic Force Mean Shift) with the classical K-Means algorithm. This hybrid approach allows the detection of dense regions in the feature space and automatically optimizes the location and number of centroids, improving the stability of the clustering process and reducing sensitivity to random initializations. The EFMS-KMeans combination is particularly useful for classifying network traffic in scenarios where labels are not available, facilitating an initial unsupervised detection stage of anomalous traffic. Similar clustering-based techniques for collective anomaly detection have been successfully validated in real-world traffic analysis contexts, demonstrating their effectiveness in identifying atypical patterns in network sequences [

43,

44].

As a result of the clustering stage, two primary categories were identified: normal and anomalous traffic. In total, approximately 951,560 flows were labeled as normal (label 0) and 47,578 as anomalous (label 1). This distribution established a consistent class separation that served as the foundation for subsequent supervised modeling, although the pronounced imbalance between legitimate and anomalous flows had to be corrected before training.

3.10. SMOTE-Based Class Balancing for Anomaly Detection

Given the significant imbalance between classes, the synthetic oversampling technique SMOTE (Synthetic Minority Over-sampling Technique) was applied, equalizing both classes to 951,560 samples each. This technique improved the model’s ability to identify anomalous patterns without introducing artificial noise, enabling a more robust subsequent supervised learning stage [

45,

46].
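The interpolation idea behind SMOTE can be sketched in a few lines. This is a simplified, pure-Python illustration rather than the imbalanced-learn implementation used in the study: each synthetic sample is placed at a random position on the segment between a minority sample and one of its k nearest minority neighbors. The function name, the value of k, and the toy data are assumptions. With the class sizes reported above, equalizing the classes would require 951,560 − 47,578 = 903,982 synthetic anomalous samples.

```python
import math
import random


def smote(minority, n_new, k=5, seed=0):
    """Generate `n_new` synthetic minority samples by neighbor interpolation."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbors of the chosen sample (excluding itself).
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # position along the segment base -> neighbor
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbor)])
    return synthetic


# Toy minority class: the four corners of the unit square.
minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
synthetic = smote(minority, n_new=6, k=2)
print(synthetic)
```

Because every synthetic point lies between two existing minority samples, the new samples stay inside the region already occupied by the minority class.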

3.11. Proposed Algorithms (Pseudocode)

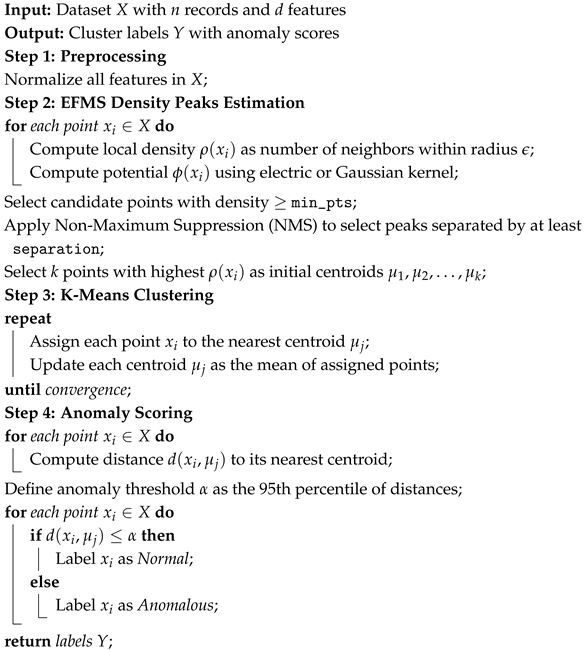

This section presents the high-level pseudocode of the two fundamental components of the proposed hybrid architecture: (1) the EFMS-KMeans clustering algorithm, used for unsupervised anomaly labeling, and (2) the CNN-GRU sequential classifier applied to the SMOTE-balanced dataset. The first stage, EFMS-KMeans, begins with a preprocessing phase in which all features are normalized to ensure comparability in the distance metrics. Then, the density peaks are estimated by computing, for each data point, both the local density (based on the number of neighbors within a predefined radius) and the potential, which can be modeled through electric or Gaussian kernels. Candidate points are selected if their density exceeds a minimum threshold, followed by a non-maximum suppression (NMS) process that discards redundant peaks, thus preserving only well-separated regions of high density. Finally, the top-k points with the highest potential are retained as initial centroids, ensuring that the clustering initialization is both robust and data-driven.

Once the centroids are selected, the K-Means procedure is applied iteratively: each data point is assigned to its nearest centroid, and the centroids are updated as the mean of their assigned points until convergence. This initialization strategy significantly reduces sensitivity to random seeds, improves intra-cluster compactness, and enhances separation between normal and anomalous regions in the feature space. The pseudocode of Algorithm 1 (EFMS-KMeans Anomaly Detection) highlights these steps in a structured manner, which not only abstracts the algorithmic logic but also ensures transparency and reproducibility, facilitating replication in other experimental environments. Furthermore, the supervised stage is formalized in Algorithm 2 (CNN-GRU Classification with SMOTE and Sliding Window), which processes the balanced sequences and captures temporal dependencies. By explicitly describing the initialization, assignment, and update processes, as well as the anomaly scoring mechanism, the two algorithms together provide a reproducible pipeline that can be adapted and extended to other network traffic anomaly detection tasks.
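Since Algorithm 1 is available only as an image, the steps described above can be approximated by the following sketch. It is an interpretation of the prose, not the authors' implementation: the potential uses a Gaussian kernel (one of the two options mentioned), the parameter names `radius`, `min_density`, and `suppress_radius` are hypothetical, and the pairwise distance computation is quadratic in the number of points, so it does not reflect the linear complexity claimed for the actual method.

```python
import math


def density_peak_init(points, k, radius, min_density, suppress_radius):
    """Pick up to k well-separated, high-potential points as initial centroids."""
    candidates = []
    for i, p in enumerate(points):
        dists = [math.dist(p, q) for j, q in enumerate(points) if j != i]
        density = sum(d <= radius for d in dists)            # neighbors within radius
        potential = sum(math.exp(-(d / radius) ** 2) for d in dists)  # Gaussian kernel
        if density > min_density:
            candidates.append((potential, i))
    candidates.sort(reverse=True)
    peaks = []
    for _, i in candidates:  # non-maximum suppression of redundant peaks
        if all(math.dist(points[i], points[j]) > suppress_radius for j in peaks):
            peaks.append(i)
        if len(peaks) == k:
            break
    return [list(points[i]) for i in peaks]


def assign(points, centroids):
    return [min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points]


def kmeans(points, centroids, max_iter=100):
    """Standard assignment/update iterations starting from the given centroids."""
    for _ in range(max_iter):
        labels = assign(points, centroids)
        updated = []
        for c, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == c]
            updated.append([sum(col) / len(members) for col in zip(*members)]
                           if members else old)
        if updated == centroids:
            break
        centroids = updated
    return centroids, assign(points, centroids)


# Toy data: two compact groups of four points each.
points = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0],
          [10.0, 10.0], [10.0, 11.0], [11.0, 10.0], [11.0, 11.0]]
initial = density_peak_init(points, k=2, radius=2.0, min_density=2, suppress_radius=5.0)
final_centroids, labels = kmeans(points, initial)
print(final_centroids, labels)
```

Because the initial centroids are chosen deterministically from the data, repeated runs on the same input give the same clustering, which is the stability property the text attributes to density-based initialization.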

| Algorithm 1: EFMS-KMeans Anomaly Detection |

![Applsci 15 10889 i001 Applsci 15 10889 i001]() |

| Algorithm 2: CNN-GRU Classification with SMOTE and Sliding Window |

Input: Clustered dataset D with features and labels

Output: Predicted class labels for the test set

1: Select relevant features F from D;

2: Normalize features using MinMax scaling;

3: Apply SMOTE to balance class distribution;

4: Define window size w for sequence generation;

5: Create sliding windows of length w over the resampled data to obtain the input sequences and their labels;

6: Split the sequences and labels into training and test sets;

7: Define CNN-GRU model: Conv1D (64 filters, kernel size 3, ReLU); MaxPooling1D; GRU (64 units); Dropout (0.3); Dense output layer (sigmoid);

8: Compile model with Adam optimizer and binary cross-entropy loss;

9: Train model (epochs = 30, batch_size = 64);

10: Evaluate model on test set;

11: return predicted labels
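Steps 2, 4, and 5 of Algorithm 2 can be illustrated with a small pure-Python sketch. The labeling rule used here, where each window inherits the label of its last row, is an assumption, since the article does not state how window labels are derived; the function names and toy data are likewise hypothetical.

```python
def min_max_scale(rows):
    """Scale every feature column to the [0, 1] range."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [(v - lo) / (hi - lo) if hi > lo else 0.0 for v, lo, hi in zip(row, lows, highs)]
        for row in rows
    ]


def make_windows(rows, labels, w):
    """Build overlapping windows of w consecutive rows with one label per window."""
    sequences, targets = [], []
    for start in range(len(rows) - w + 1):
        sequences.append(rows[start:start + w])
        targets.append(labels[start + w - 1])  # assumed rule: label of the last row
    return sequences, targets


# Toy data: 12 flows with 2 features each; only the last flow is anomalous.
rows = [[float(i), float(2 * i)] for i in range(12)]
labels = [0] * 11 + [1]
X, y = make_windows(min_max_scale(rows), labels, w=10)
print(len(X), len(X[0]), y)
```

With 12 rows and a window of 10, three overlapping sequences are produced, each of shape (10, 2), which is the input layout expected by a Conv1D layer.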

4. Results and Discussion

This section presents the experimental results obtained with the proposed EFMS-KMeans + CNN-GRU hybrid architecture, including quantitative metrics, feature space visualizations, analysis of the training process, and comparisons with alternative methods. Furthermore, the implications of these results in real cybersecurity scenarios are discussed.

4.1. Clustering Performance with EFMS-KMeans

In the initial clustering stage, two initialization strategies were evaluated: the standard K-Means++ and the proposed EFMS-KMeans, which incorporates a density-based estimation mechanism to identify representative centers. To ensure a rigorous analysis, three well-established internal validation indices were consistently employed: Silhouette, Calinski–Harabasz, and Davies–Bouldin, as summarized in

Table 4.

As shown, K-Means++ achieved higher values across all three indicators under the tested conditions of this dataset. Nevertheless, EFMS-KMeans remains a core component of the proposed hybrid architecture for two fundamental reasons: (i) its higher stability against execution variability without requiring multiple restarts, which is critical in heterogeneous traffic scenarios; and (ii) its ability to generate more coherent and representative preliminary labels that strengthen the subsequent supervised training of the CNN-GRU sequential model.

Therefore, the internal validation indices were not only used for benchmarking different initialization strategies but also served as the foundational criterion supporting the selection of EFMS-KMeans within the hybrid architecture. This ensures a balance between quantitative performance and methodological robustness, reinforcing the relevance of its integration in cybersecurity environments characterized by encrypted and highly imbalanced network traffic.
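When reading these indices, note that higher values are better for Silhouette and Calinski–Harabasz, whereas lower values are better for Davies–Bouldin. As an illustration of how one of them is computed, the sketch below implements the mean Silhouette coefficient in pure Python; the study itself reports using scikit-learn, and the toy data here are hypothetical.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient over all points (Euclidean distance)."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    scores = []
    for p, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster.
        a = sum(math.dist(p, q) for q in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(math.dist(p, q) for q in other) / len(other)
            for other_lab, other in clusters.items()
            if other_lab != lab
        )
        scores.append((b - a) / (max(a, b) or 1.0))
    return sum(scores) / len(scores)


# Toy one-dimensional data with an obvious two-cluster structure.
points = [[0.0], [1.0], [10.0], [11.0]]
good = silhouette_score(points, [0, 0, 1, 1])
bad = silhouette_score(points, [0, 1, 0, 1])
print(round(good, 4), round(bad, 4))
```

A labeling that follows the natural grouping scores close to 1, while a labeling that mixes the groups yields a negative score.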

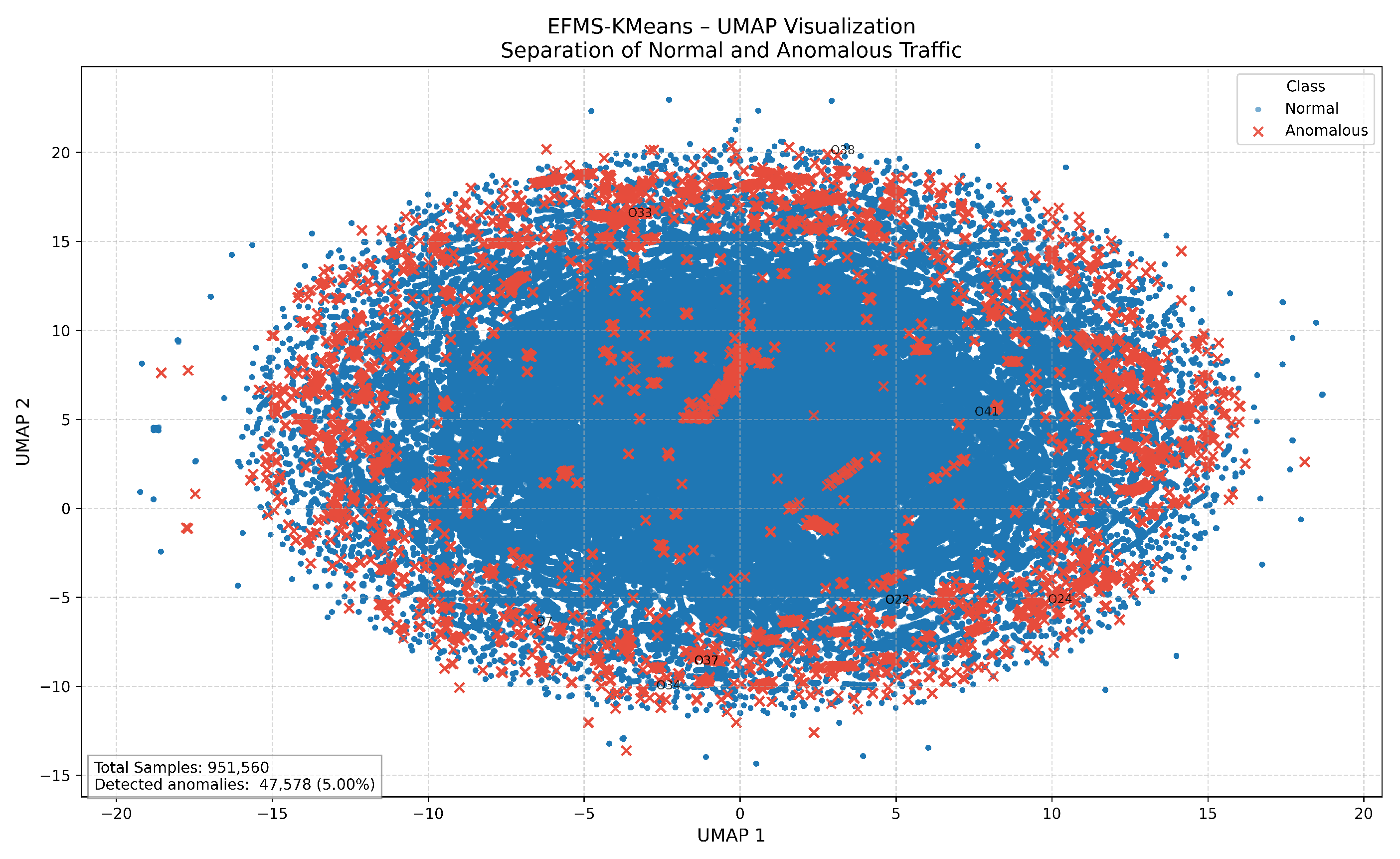

As shown in

Figure 5, the UMAP projection of the feature space after clustering provides a two-dimensional visualization that highlights the distribution of normal and anomalous traffic samples. Each blue dot represents a normal flow, while red markers denote anomalous instances identified through the EFMS-KMeans process. This visualization was generated using the

Python 3.10.12 libraries

scikit-learn 1.6.1 (for preprocessing and clustering) and UMAP-learn (for dimensionality reduction), with matplotlib employed for rendering the figure. The separation pattern reveals that anomalous samples tend to concentrate toward the boundaries and specific dense regions of the projection space, indicating structural deviations from the centroid-based clusters associated with normal traffic. From a practical standpoint, this representation confirms that the proposed method is capable of isolating anomalous behaviors even in large-scale encrypted flows, providing analysts with an interpretable tool to validate detection outcomes and to better understand traffic dynamics in distributed corporate networks.

4.2. Class Balancing and Feature Distribution

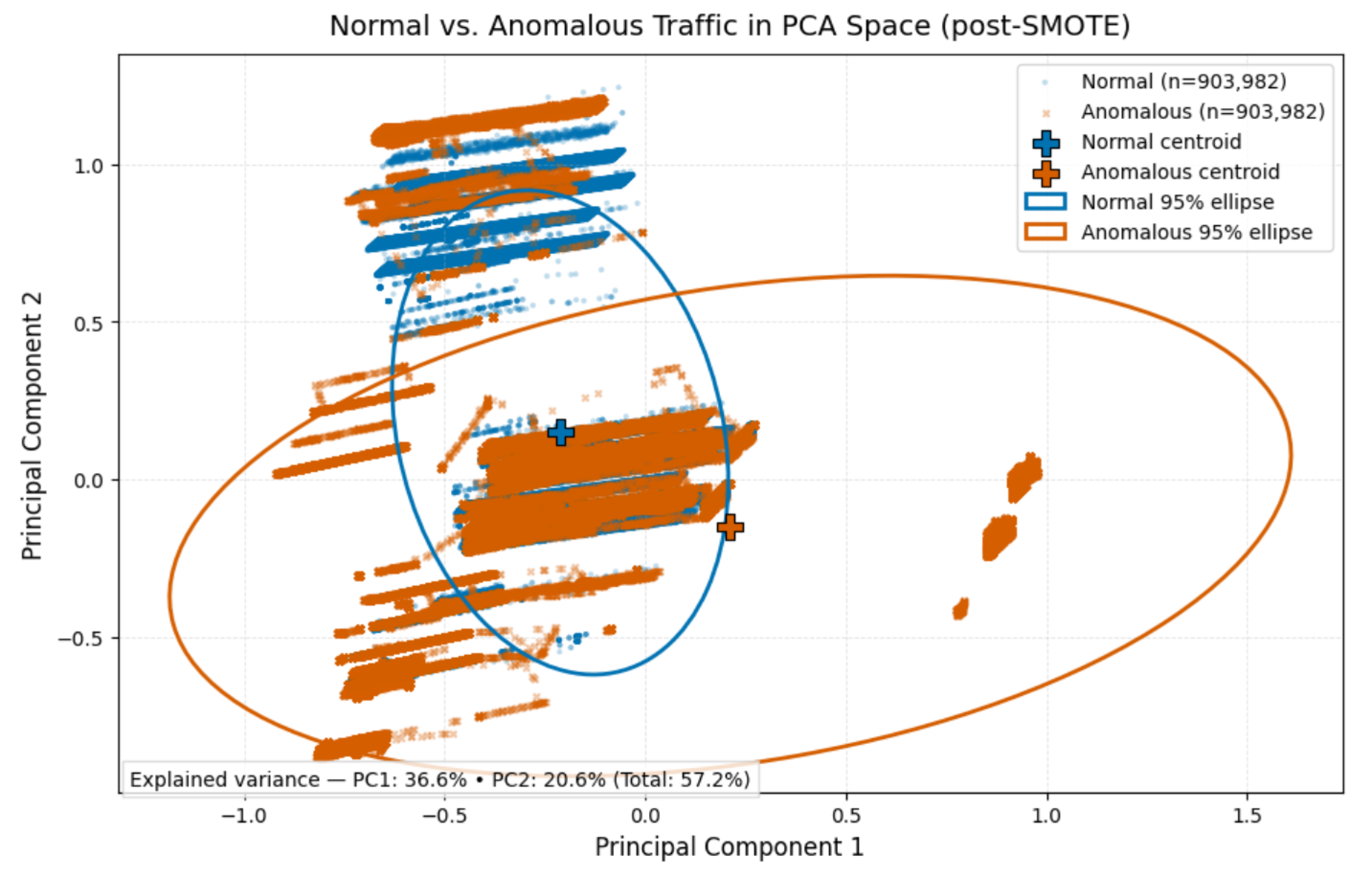

Given the pronounced class imbalance (951,560 normal vs. 47,578 anomalous samples), SMOTE (Synthetic Minority Oversampling Technique) was applied to equalize both classes to 951,560 samples, avoiding overfitting and improving the model’s ability to learn minority patterns.

Figure 6 presents the PCA projection of the feature space after applying SMOTE, showing an equalized distribution between normal (blue) and anomalous (orange) flows. Centroids and 95% confidence ellipses illustrate the improved geometric separation achieved through balancing. The figure, generated with Python libraries (

scikit-learn 1.6.1,

imbalanced-learn 0.12.3,

matplotlib 3.9.2), evidences that oversampling not only corrects class imbalance but also enhances class separability, thereby supporting more robust training of the subsequent CNN-GRU classifier.

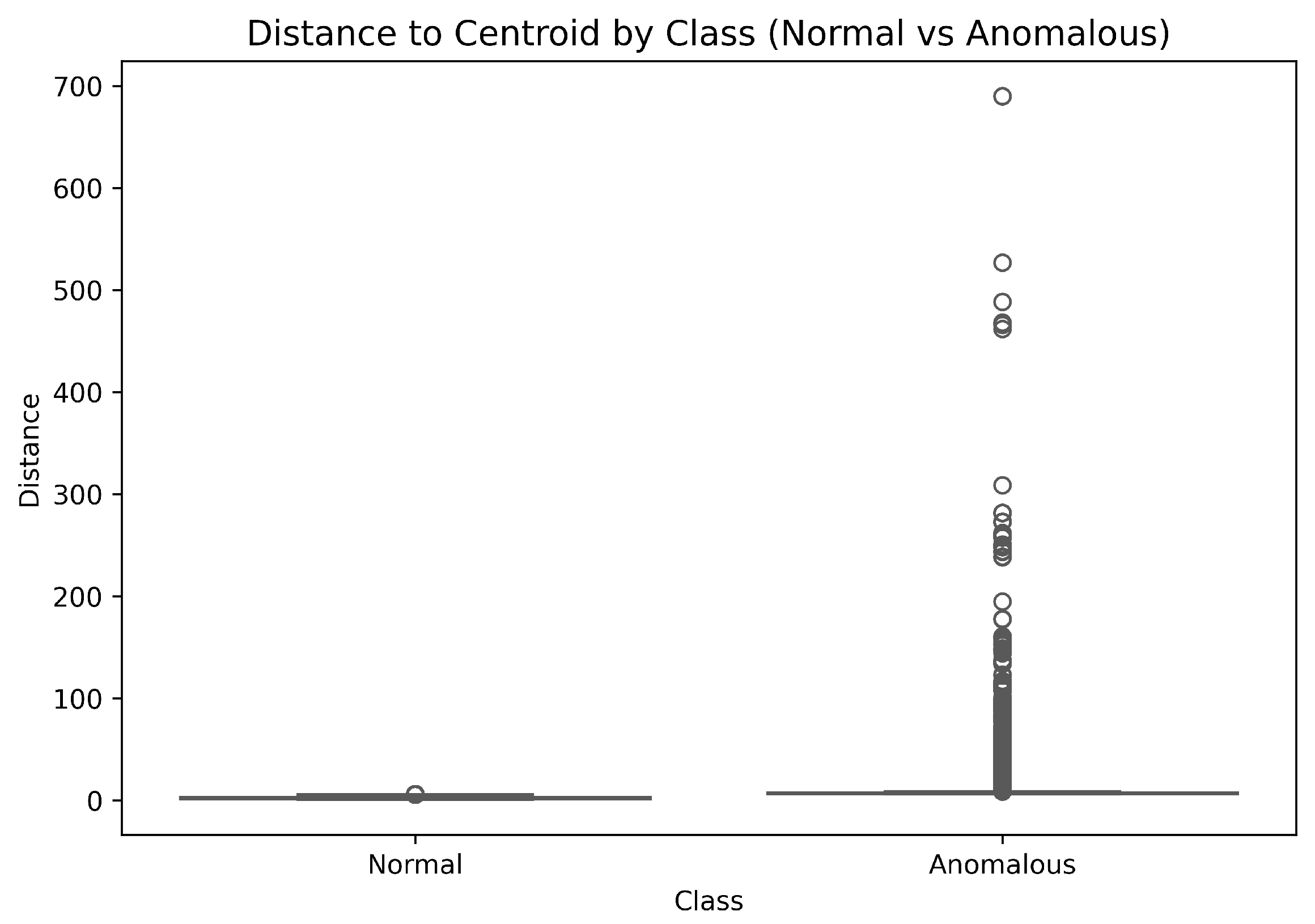

Figure 7 presents the boxplot of distances to cluster centroids for both normal and anomalous traffic. The distribution clearly shows that normal flows (left) concentrate around lower distance values with few outliers, while anomalous flows (right) exhibit markedly higher dispersion and extreme values exceeding 600 units. The median shift and the broader interquartile range observed in the anomalous class confirm a statistically significant separation between the two groups. This evidence reinforces the validity of the adopted segmentation criterion, as anomalous traffic consistently lies farther from the cluster centroid, supporting its characterization as structurally distinct in the feature space.

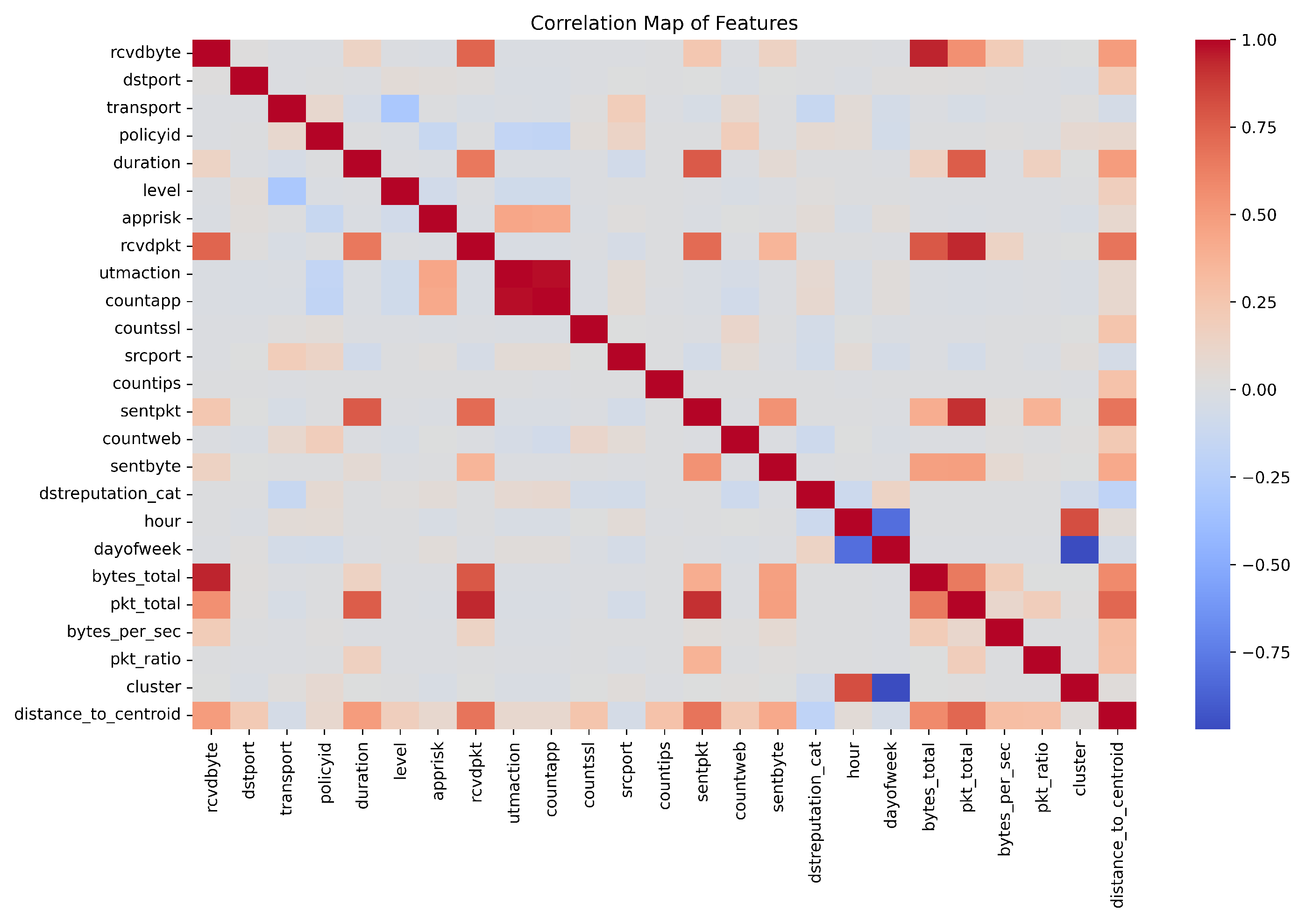

As complementary evidence,

Figure 8 shows the feature-correlation heatmap, which depicts the linear relationships among the 23 selected attributes. Strong positive correlations are evident between traffic volume indicators, such as

bytes_total,

pkt_total, and

bytes_per_sec, whereas weaker or even negative correlations are observed for attributes like

pkt_ratio and

distance_to_centroid. Importantly,

bytes_total,

pkt_total, and

distance_to_centroid stand out as determinant variables for anomaly detection, since anomalous flows tend to break the compact correlation structures observed in normal traffic. Beyond validating feature relevance, these results provide two practical benefits: (i) they justify dimensionality reduction by confirming which attributes carry the most discriminative power, and (ii) they reinforce model interpretability, enabling cybersecurity analysts to link anomalies with specific traffic characteristics. Overall, the correlation analysis supports the robustness of the proposed hybrid approach by aligning statistical evidence with the observed classification performance.

4.3. CNN-GRU Model Performance

The hybrid CNN-GRU model achieved outstanding performance on the balanced dataset:

Accuracy: 0.98

F1-Score (anomalous class): 0.98

Precision (anomalous class): 1.00

Recall (anomalous class): 0.95

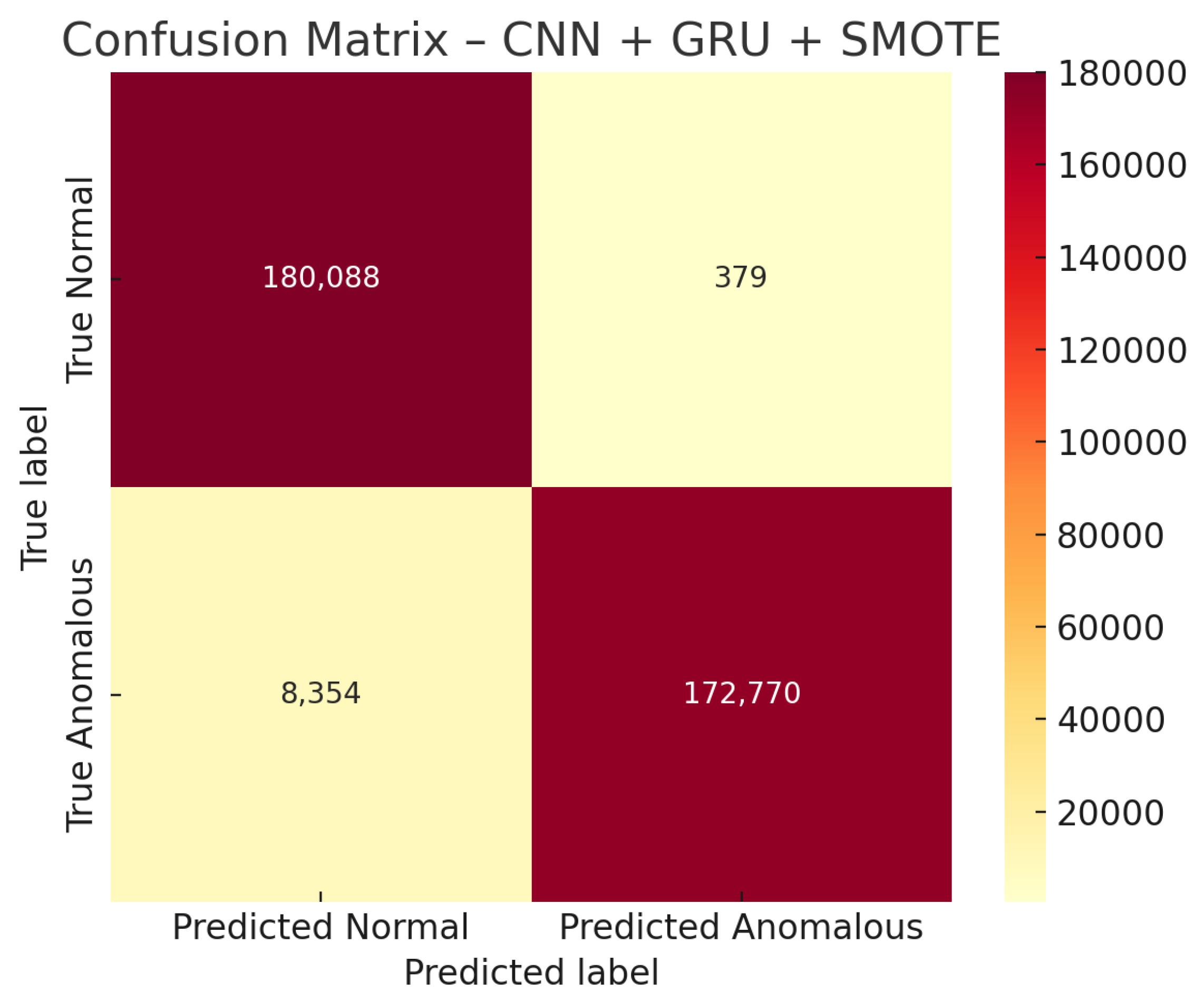

The confusion matrix (

Figure 9) highlights the robustness of the proposed CNN–GRU classifier combined with SMOTE. Out of more than 361,000 test samples, the model correctly classified 180,088 normal flows and 172,770 anomalous flows, yielding only 379 false positives and 8,354 false negatives. This corresponds to a very low false-positive rate (about 0.2%), which minimizes unnecessary alerts, and a false-negative rate of about 4.6%, which matters in cybersecurity environments where undetected threats represent the highest operational risk. These results confirm that the model achieves a favorable balance between sensitivity and specificity, ensuring both accuracy and practical reliability for real-world deployment.
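The headline metrics can be re-derived from the four confusion-matrix counts reported above, which is a useful consistency check. The sketch below applies the standard definitions, treating the anomalous class as positive; only the function and variable names are introduced here.

```python
def binary_metrics(tp, tn, fp, fn):
    """Standard binary classification metrics from confusion-matrix counts."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "false_positive_rate": fp / (fp + tn),
        "false_negative_rate": fn / (fn + tp),
    }


# Counts reported for the test set (anomalous = positive class).
metrics = binary_metrics(tp=172_770, tn=180_088, fp=379, fn=8_354)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Rounded to two decimals, these values agree with the accuracy (0.98), precision (1.00), recall (0.95), and F1-score (0.98) listed for the anomalous class.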

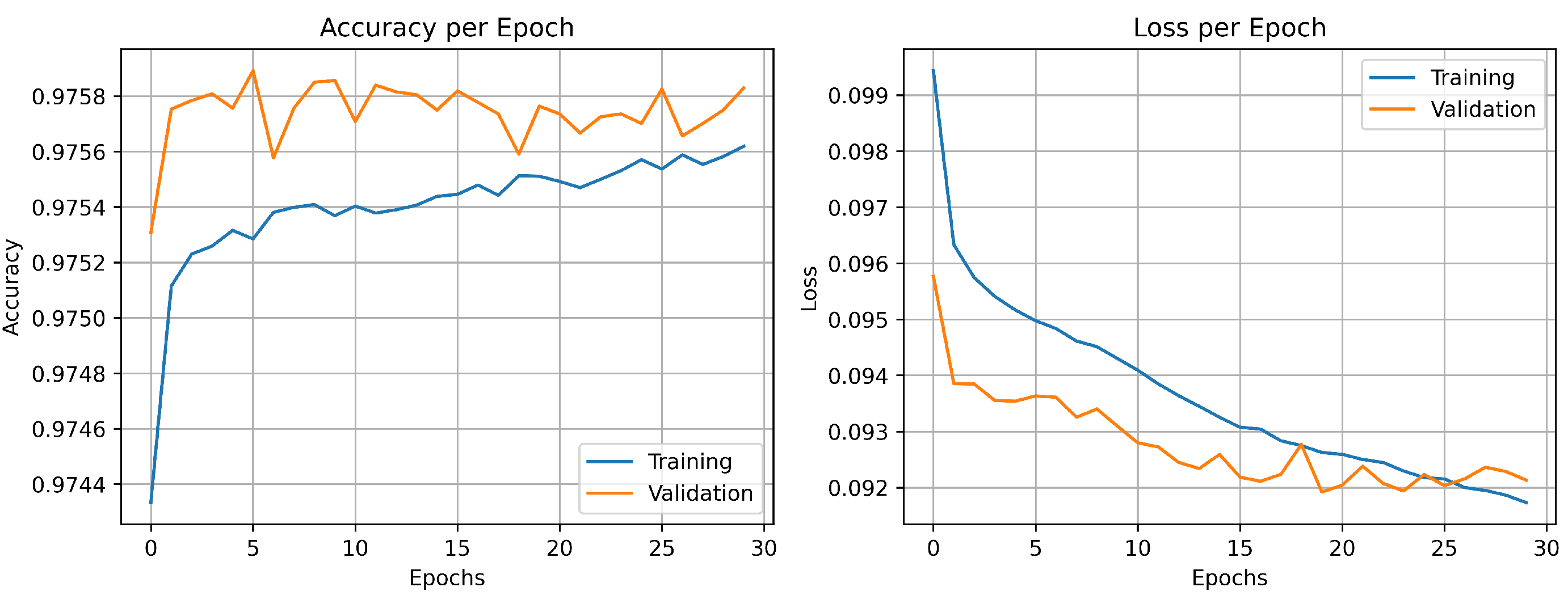

Regarding model training, the accuracy and loss curves (

Figure 10) demonstrate stable convergence across 30 epochs. Training and validation accuracy remain closely aligned, while both loss curves decrease steadily, with no divergence between the two. This behavior indicates minimal overfitting, attributable to the incorporation of Dropout-based regularization and careful hyperparameter tuning. The smooth learning dynamics confirm that the model achieved an optimal balance between generalization and stability, which is essential for reliable performance in real-world network traffic classification.

4.4. Comparative Analysis with Hybrid Architectures

A comparison of the performance of the proposed EFMS-KMeans + CNN-GRU architecture with other recent hybrid architectures used for network traffic anomaly detection is presented below.

As summarized in

Table 5, all models were trained and evaluated on the same real FortiGate logs (TLS/HTTPS), which guarantees a fair and direct comparison under identical conditions. Within this homogeneous evaluation framework, the proposed EFMS-KMeans + CNN-GRU demonstrates the most balanced performance profile: it achieves the highest F1-Score (0.977), matches the best accuracy (0.977) and AUC (0.986), and sustains the lowest average inference time together with CNN-LSTM (0.0 s). This combination reflects not only high predictive power but also operational efficiency, a critical aspect for real-world deployments.

By contrast, the CNN-LSTM architecture exhibits competitive values but a slightly lower F1-Score (

), which is particularly relevant in anomaly-detection scenarios where the balance between false positives and false negatives is crucial. The CNN-BiLSTM variant ([

47]), while also accurate, presents a marginally lower AUC (

) and higher inference latency (

), limiting its applicability in latency-sensitive or resource-constrained environments. These differences underline the advantages of our lightweight modular design, where EFMS-KMeans provides stable clustering initialization and CNN-GRU efficiently captures spatial and temporal dependencies without incurring excessive computational overhead.

Taken together, these results reinforce the suitability of the proposed architecture for deployment in perimeter devices, network gateways, and real-time detection systems, where scalability, robustness, and rapid decision-making are essential. The ability to operate effectively on heterogeneous encrypted traffic validates its role as a practical and efficient alternative to more resource-demanding hybrid models.

4.5. Critical Discussion

The integration of unsupervised clustering through EFMS-KMeans with deep sequential modeling using CNN-GRU proves to be a robust strategy for detecting anomalies in mixed traffic, including TLS/HTTPS-encrypted connections. This approach addresses two critical problem dimensions: (i) initial traffic segmentation through clustering reduces complexity and noise, enabling the model to learn more homogeneous patterns, and (ii) temporal modeling with GRU captures long-term dependencies in sequences that cannot be detected by purely static techniques.

Compared to previous hybrid architectures such as CNN-LSTM and CNN-GRU applied to IIoT traffic [

32,

37], the proposed model achieves systematic improvements in F1-Score and AUC while maintaining a competitive inference time (0.0 s). This balance between accuracy and efficiency makes it a viable alternative for latency-constrained scenarios such as perimeter gateways and corporate networks, where more complex models (e.g., CNN-BiLSTM) present higher computational costs and hinder operational deployment [

48].

Key advantages of the proposed architecture include:

Efficiency on encrypted traffic: Our CNN-GRU model achieved high accuracy and F1-Score in TLS/HTTPS sessions (

Table 5) without inspecting payload content, thereby reducing computational cost and aligning with privacy-preserving practices. These results are consistent with prior studies on encrypted traffic analysis [

43,

49].

Generalization capability: The architecture exhibited robust performance under noisy conditions and multiclass detection scenarios (

Figure 9 and

Figure 10), outperforming comparable hybrid architectures. This observation reinforces conclusions from systematic reviews on AI-based anomaly detection [

49].

Scalability for edge computing: The low average inference time obtained in our experiments (0.0 s per flow,

Table 5) highlights the feasibility of deploying the model in distributed infrastructures. This scalability advantage complements edge-oriented strategies discussed in the literature [

50].

Nonetheless, important challenges remain. The interpretability of the model is still limited, which restricts its adoption in environments that require auditable explanations, such as critical infrastructures. Moreover, the adaptability to evolving traffic patterns could be improved through the integration of online learning techniques. In this regard, future work should focus on developing Explainable AI (XAI) mechanisms and incorporating federated architectures to enhance privacy, resilience, and dynamic updating capabilities.

4.5.1. Threats to Validity

Internal validity: The experimental pipeline was carefully controlled through class balancing with SMOTE, which ensured that both normal and anomalous classes were equally represented. However, temporal order within flows could have been altered during preprocessing, potentially affecting sequential dependencies. Moreover, the risk of overfitting cannot be fully excluded, although it was mitigated through dropout regularization and cross-validation.

External validity: The dataset employed in this study was extracted from real FortiGate firewall logs in a corporate environment. While representative of enterprise traffic patterns, the extent to which these findings can be generalized to other contexts (e.g., home networks, mobile infrastructures, or IoT ecosystems) remains an open question and requires further empirical verification.

Construct validity: Anomalies were defined according to labels derived from EFMS-KMeans clustering combined with firewall rules. This labeling strategy, while systematic, carries the risk that some latent anomalies may not have been captured. Consequently, the ground truth may not fully reflect the complexity of real-world abnormal behaviors.

Overall, acknowledging these limitations demonstrates a critical maturity in the evaluation of our proposal and strengthens the credibility of the reported results.

4.5.2. Representative Case Analysis and Interpretability

To illustrate the interpretability of the proposed architecture,

Figure 11 shows the Time × Feature Occlusion Importance for an anomalous sequence. In this context, each cell represents the variation in the anomaly score (

) when a specific feature value at a given time step is replaced by its baseline mean. Positive values (yellow–green) indicate that occlusion reduces the anomaly score, meaning that the corresponding feature–time pair contributes positively to anomaly detection. Conversely, negative values (purple–blue) indicate that occlusion increases the anomaly score, suggesting a counteractive contribution. The color legend therefore reflects the relative normalized importance (

normalized), obtained by applying median–IQR normalization per feature, ensuring comparability across heterogeneous attributes. In the heatmap, white circles highlight the Top-K most influential cells. These markers serve as a visual aid to emphasize where the model assigns the greatest importance, making it easier to inspect and interpret the areas of highest contribution within the feature–time step space.
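The occlusion procedure described above can be sketched as follows. The scoring function is a toy stand-in for the trained CNN-GRU (a sigmoid over a weighted sum), and the baseline means, weights, and sequence are hypothetical; the median–IQR normalization step is omitted for brevity. The sign convention follows the text: a positive value means that occluding the cell lowers the anomaly score.

```python
import math


def toy_score(sequence, weights=(1.0, 0.0)):
    """Stand-in anomaly score: sigmoid of a weighted sum over all time steps."""
    z = sum(w * x for row in sequence for w, x in zip(weights, row))
    return 1.0 / (1.0 + math.exp(-z))


def occlusion_importance(score_fn, sequence, baseline):
    """Score change when each (time step, feature) cell is set to its baseline mean."""
    reference = score_fn(sequence)
    deltas = []
    for t, row in enumerate(sequence):
        row_deltas = []
        for f in range(len(row)):
            occluded = [list(r) for r in sequence]
            occluded[t][f] = baseline[f]
            # Positive: occlusion reduces the score, so the cell supports the anomaly.
            row_deltas.append(reference - score_fn(occluded))
        deltas.append(row_deltas)
    return deltas


# Hypothetical sequence (3 time steps x 2 features) and per-feature baseline means.
sequence = [[0.0, 5.0], [0.0, 5.0], [4.0, 5.0]]
baseline = [0.0, 5.0]
deltas = occlusion_importance(toy_score, sequence, baseline)

# Most influential cell by absolute effect (the "Top-K" with K = 1).
top_cell = max(
    ((t, f) for t in range(len(deltas)) for f in range(len(deltas[t]))),
    key=lambda cell: abs(deltas[cell[0]][cell[1]]),
)
print(deltas, top_cell)
```

In this toy case only the first feature at the last time step deviates from its baseline, so it is the only cell with a non-zero contribution.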

Figure 11 jointly evaluates when (the temporal step within the sequence) and which feature influences the model’s decision, providing a fine-grained temporal–attributive explanation of anomaly detection by highlighting how specific attributes contribute at different points within the sequence. At time step , features such as countweb, policyid, dstport, and dstreputation_cat exhibited disproportionately high contributions to the anomaly score. This pattern indicates that excessive web requests directed to unusual ports and policies, combined with suspicious destination reputations, were the primary drivers of the anomalous classification.

Complementarily, Table 6 presents the ten most influential features ranked by their absolute normalized importance aggregated across the temporal window. Whereas the heatmap provides a fine-grained view of how each feature contributes at specific time steps, the table highlights which features are globally most relevant within the anomalous sequence. Both perspectives are consistent, as they derive from the same occlusion analysis but emphasize different dimensions: the temporal dynamics (heatmap) versus the overall magnitude of contribution (table). In the heatmap, the most recent time step is displayed at the top, while the table reports the time step at which the absolute effect of each feature reached its maximum.
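The aggregation behind such a ranking can be sketched as follows. This is an illustrative assumption rather than the study's procedure: the text does not state how importance is aggregated across the window, so the sketch sums absolute normalized values per feature; the function name `rank_features`, the toy matrix, and the row-index convention for time steps are likewise hypothetical.

```python
def rank_features(importance, feature_names, top_n=10):
    """Rank features by summed |normalized importance| over the window.

    importance[t][f] is the normalized occlusion importance of feature f
    at time step t (rows are time steps, in row-index order).
    Returns (name, aggregated importance, step of peak |effect|) tuples.
    """
    rows = []
    for f, name in enumerate(feature_names):
        col = [abs(importance[t][f]) for t in range(len(importance))]
        total = sum(col)  # assumption: aggregate by summing absolute values
        peak_step = max(range(len(col)), key=col.__getitem__)
        rows.append((name, total, peak_step))
    rows.sort(key=lambda r: r[1], reverse=True)
    return rows[:top_n]

# Toy normalized importance matrix: 3 time steps x 2 features (invented values).
importance = [
    [0.1, -2.0],
    [0.5, 0.3],
    [3.0, -0.1],
]
ranked = rank_features(importance, ["countweb", "dstport"])
for name, total, peak_step in ranked:
    print(f"{name}: aggregated={total:.2f}, peak at row {peak_step}")
```

Because both the heatmap and the ranking are derived from the same matrix, a feature that dominates individual cells will generally also rank highly after aggregation.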

The joint analysis of Figure 11 and Table 6 highlights the complementary nature of temporal and global interpretability. The heatmap captures localized effects, showing at which time steps individual features exert the strongest influence on the anomaly score, while the table aggregates these contributions to identify the most relevant attributes overall. For instance, countweb appears as a dominant factor in both representations: in the heatmap it exhibits high influence at the most recent steps, whereas in the table it ranks first in terms of absolute normalized importance. This consistency reinforces the reliability of the occlusion-based analysis and provides interpretable evidence that web request frequency, destination ports, and policy identifiers are decisive factors in anomaly detection. These representative examples indicate that the proposed CNN-GRU model not only achieves strong quantitative performance (accuracy, F1-score, AUC) but also delivers transparent and actionable interpretability. This strengthens its applicability in operational cybersecurity contexts, where understanding the underlying causes of anomaly alerts is essential for informed decision-making.

5. Conclusions

This work presents a hybrid architecture for anomaly detection in encrypted network traffic, integrating unsupervised clustering using EFMS-KMeans with deep sequential modeling through CNN-GRU. This approach simultaneously addresses initial traffic segmentation and temporal pattern modeling, achieving a synergy that enhances both the accuracy and efficiency of the detection process.

The experimental results show significant improvements in key metrics over comparable hybrid architectures, with the proposed CNN-GRU achieving an accuracy of 0.977, an F1-score of 0.977, and an AUC of 0.986, while maintaining a competitive inference time of approximately 0.0 s. This positions the model as a viable solution for latency-sensitive environments, such as perimeter gateways and corporate networks.

The main contributions of this research are:

- 1. Efficiency in detecting encrypted traffic: The architecture identifies anomalies without content inspection, ensuring privacy and reducing computational load.

- 2. High generalization capability: The model exhibits robust performance in multiclass and noisy traffic scenarios, surpassing the results of previously reported hybrid approaches.

- 3. Scalability and applicability in edge computing: Its modular design and low inference cost facilitate deployment in distributed infrastructures, aligning with emerging trends in perimeter cybersecurity.

Nonetheless, relevant challenges remain. Interpretability is currently limited to post hoc occlusion analysis of individual sequences, which hinders adoption in contexts that require auditable explanations, such as critical infrastructures. Additionally, the model’s ability to adapt dynamically could be improved through online learning techniques that respond to evolving traffic in real time.

Future work will focus on:

- Integrating explainable AI (XAI) mechanisms to improve model transparency.

- Incorporating federated architectures to preserve privacy and enhance system resilience.

- Developing continuous learning schemes that allow dynamic updates to adapt to new traffic patterns.

Overall, the findings position the proposed approach as an effective, scalable, and low-cost solution for encrypted anomalous traffic detection, with strong potential for deployment in corporate environments and next-generation distributed ecosystems.