Design of a Multi-Method Integrated Intelligent UAV System for Vertical Greening Maintenance

Abstract

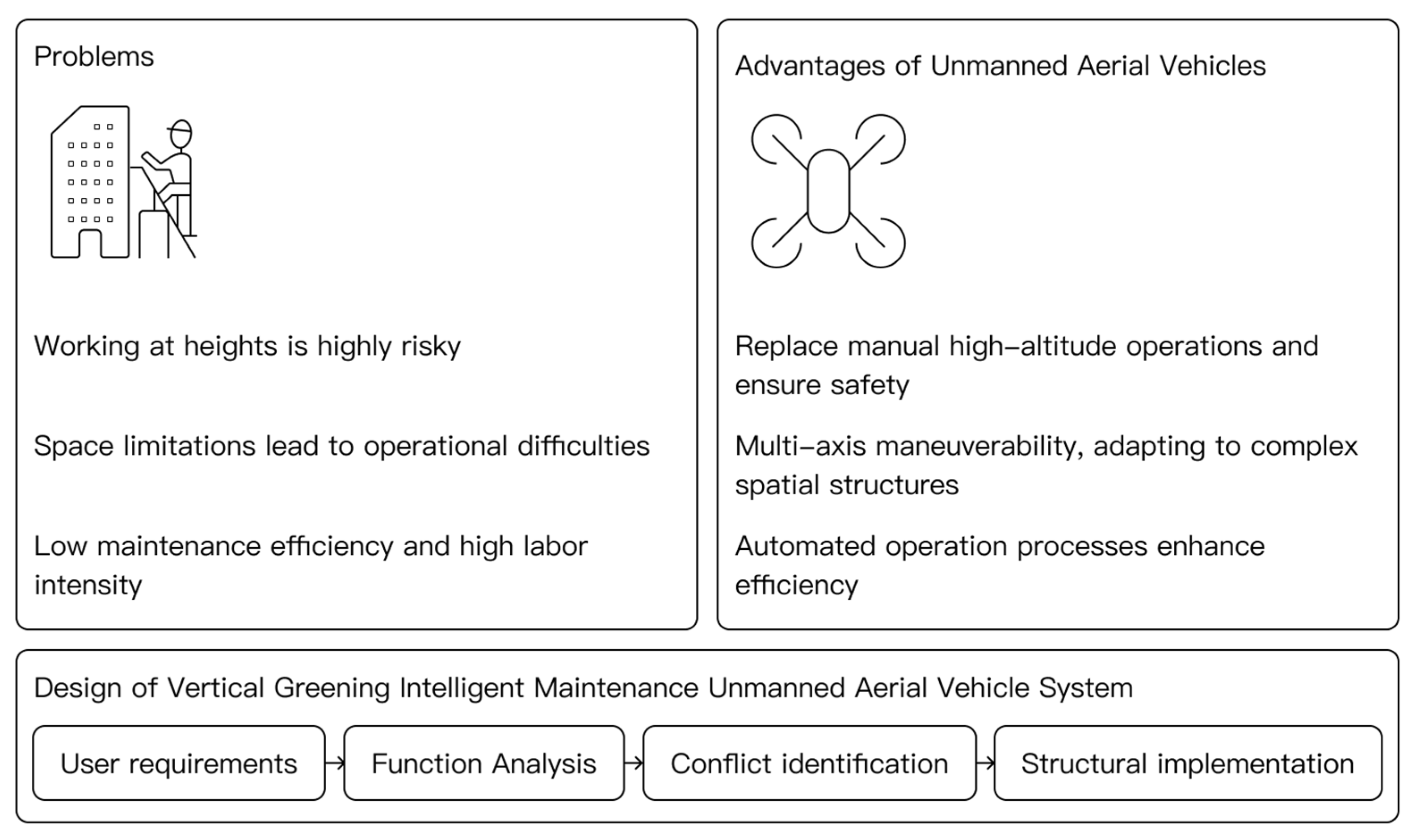

1. Introduction

2. Literature Review

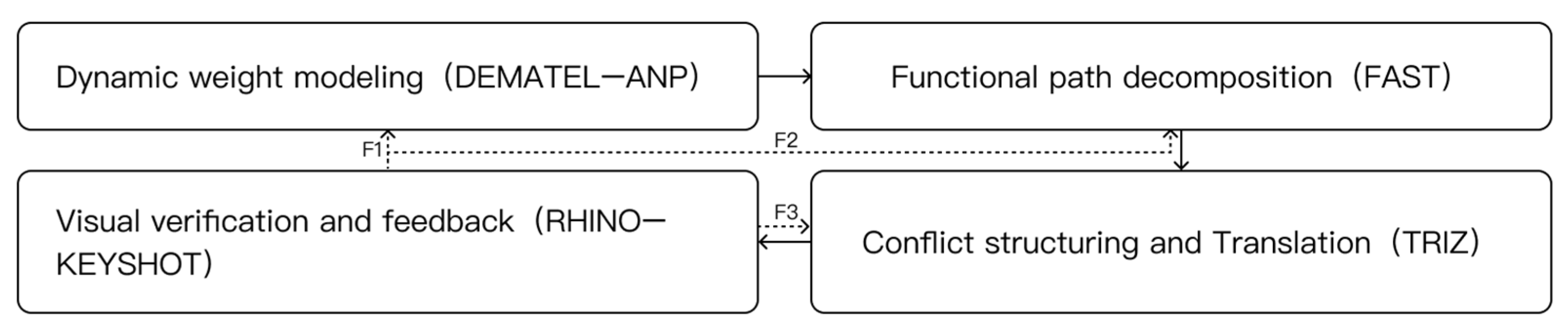

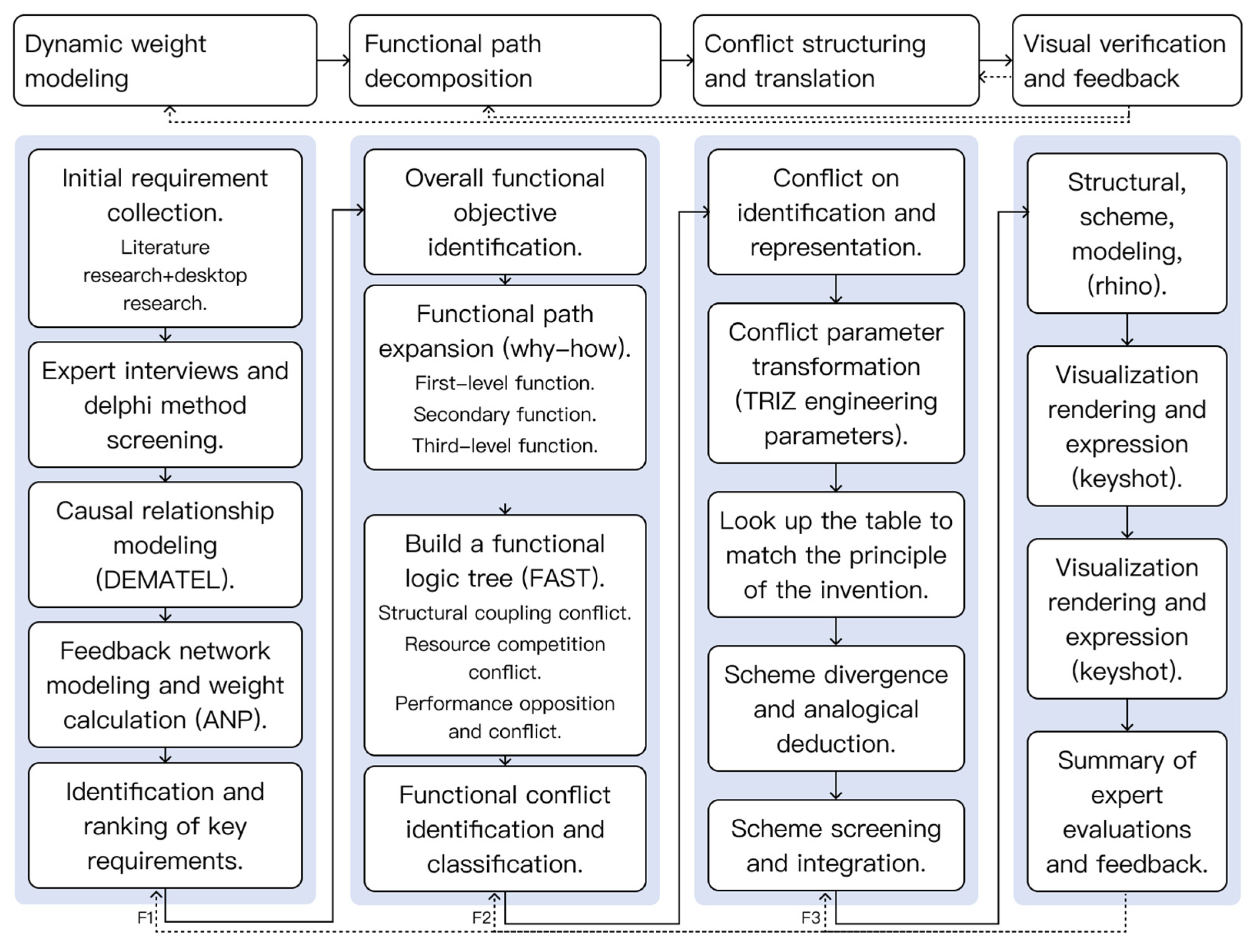

3. Construction of the Methodological Framework

3.1. DEMATEL-ANP Implementation Process and Computational Details

3.1.1. Causal Relationship Modeling Using DEMATEL

3.1.2. ANP Feedback Network and Weight Calculation

3.2. Logic and Implementation Steps of FAST Function Tree Construction

- Define the scope and top function. Phrase all functions as verb–noun pairs; set the system boundary and assumptions.

- Expand Why–How paths. From the top function, iteratively decompose along How (right) and justify by Why (left), checking logical completeness and dependency consistency.

- Structure the function tree. Classify nodes as primary, supporting, and executive; annotate interfaces (signals, materials, energy).

- Conflict localization. Traverse the tree to identify resource competition, spatial/structural coupling, and performance trade-offs; register conflict pairs with their triggering contexts and related KPIs.

- Prioritization. Weight branches and conflicts by DEMATEL-ANP salience to form a conflict register for TRIZ.

3.3. TRIZ-Based Conflict Transformation and Innovation Implementation Path

- Parameter abstraction. Map each conflict to standardized improving and worsening parameters (domain-adapted from the classical set).

- Contradiction matrix and principle matching. Retrieve candidate inventive principles for each parameter pair; where appropriate, also apply separation principles (in time/space/condition) or Su-Field/Standard solutions for interaction-level issues.

- Concept synthesis by analogy. Translate principles into multiple structural/architectural alternatives via case-based reasoning and concept sketching; define operating mechanisms and expected KPI effects.

- Concept screening. Evaluate against engineering constraints (mass/power budgets, reachability and attitude margins, manufacturability, maintainability); down-select via a lightweight Pugh/morphological assessment.
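The screening step above can be illustrated with a minimal Pugh-style tally. This is only a sketch under stated assumptions: the concept names and their ratings are hypothetical placeholders, the four criteria are shorthand for the constraint classes named in the text, and each concept is rated -1/0/+1 against a reference (datum) design.

```python
from typing import Dict, List

# Shorthand for the constraint classes named in the screening step (labels are illustrative).
CRITERIA = ["mass_power", "reachability", "manufacturability", "maintainability"]


def pugh_totals(concepts: Dict[str, Dict[str, int]]) -> Dict[str, int]:
    """Sum the -1/0/+1 ratings of each concept relative to the datum design."""
    totals = {}
    for name, ratings in concepts.items():
        for criterion in CRITERIA:
            if ratings[criterion] not in (-1, 0, 1):
                raise ValueError(f"{name}/{criterion}: rating must be -1, 0 or +1")
        totals[name] = sum(ratings[c] for c in CRITERIA)
    return totals


def down_select(concepts: Dict[str, Dict[str, int]], keep: int = 1) -> List[str]:
    """Return the `keep` best concepts, ties broken alphabetically."""
    totals = pugh_totals(concepts)
    return sorted(totals, key=lambda n: (-totals[n], n))[:keep]


# Hypothetical ratings, for illustration only.
concepts = {
    "concept_A": {"mass_power": 1, "reachability": 0, "manufacturability": -1, "maintainability": 1},
    "concept_B": {"mass_power": -1, "reachability": 1, "manufacturability": 0, "maintainability": 0},
    "concept_C": {"mass_power": 0, "reachability": 1, "manufacturability": 1, "maintainability": 0},
}
shortlist = down_select(concepts, keep=2)
```

An unweighted tally keeps the assessment lightweight; the DEMATEL-ANP weights could be applied per criterion if a finer ranking is needed.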

4. Design Practice

4.1. Demand Modeling and Weighting (Stage D-A: DEMATEL-ANP)

4.1.1. Indicator System: Construction and Validity

4.1.2. DEMATEL: Matrices, Indices, and Causal Plot

- Drivers/high centrality (Q1, C ≥ C̄, H ≥ 0): C8 Payload Capacity (C = 5.243, H = 0.681), C6 Flight Stability (4.903, 0.840), C17 Structural Safety (4.771, 0.050), C2 Precision Spraying (4.536, 0.197), C18 Autonomous Decision-Making (4.490, 0.465), C3 Plant Replacement (4.319, 0.471), C4 Data Transmission (4.225, 0.354), and C1 Automatic Obstacle Avoidance (4.177, 0.749).

- Drivers/low centrality (Q2, C < C̄, H ≥ 0): C9 Facade Adaptation (4.110, 0.789).

- Receivers/high centrality (Q3, C ≥ C̄, H < 0): C14 Operational Safety (4.544, −0.877) and C16 Environmental Safety (4.182, −0.395).

- Receivers/low centrality (Q4, C < C̄, H < 0): C5 Environmental Monitoring (3.931, −0.072), C19 Predictive Maintenance (3.849, −0.702), C15 Material Safety (3.801, −0.107), C10 Human–Machine Dimensional Compatibility (3.781, −0.226), C12 User Interface (3.533, −0.153), C11 Color Harmony (3.451, −0.555), C7 Endurance Time (3.371, −0.467), and C13 Aesthetic Appearance (2.988, −1.044).
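The quadrant assignment can be checked with a short script. The (C, H) pairs are the values listed above; the sketch assumes that the centrality cutoff is the arithmetic mean of the 19 centrality values, which reproduces the grouping reported here but is not stated explicitly in the list.

```python
from statistics import mean

# (centrality C, causality H) for each indicator, as reported in the list above.
INDICATORS = {
    "C1": (4.177, 0.749), "C2": (4.536, 0.197), "C3": (4.319, 0.471),
    "C4": (4.225, 0.354), "C5": (3.931, -0.072), "C6": (4.903, 0.840),
    "C7": (3.371, -0.467), "C8": (5.243, 0.681), "C9": (4.110, 0.789),
    "C10": (3.781, -0.226), "C11": (3.451, -0.555), "C12": (3.533, -0.153),
    "C13": (2.988, -1.044), "C14": (4.544, -0.877), "C15": (3.801, -0.107),
    "C16": (4.182, -0.395), "C17": (4.771, 0.050), "C18": (4.490, 0.465),
    "C19": (3.849, -0.702),
}


def quadrant(c: float, h: float, c_cut: float) -> str:
    """Q1/Q2 are drivers (H >= 0); Q3/Q4 are receivers (H < 0)."""
    high = c >= c_cut
    if h >= 0:
        return "Q1" if high else "Q2"
    return "Q3" if high else "Q4"


# Assumption: the cutoff is the mean centrality over all indicators.
c_cut = mean(c for c, _ in INDICATORS.values())

groups = {}
for code, (c, h) in INDICATORS.items():
    groups.setdefault(quadrant(c, h, c_cut), []).append(code)
```

Under this assumption C9 is the only driver that falls below the cutoff, which matches the Q2 entry above.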

4.1.3. ANP Network and Global Weights

4.1.4. Selection of Core Requirements (Top-6)

4.2. Functional Decomposition and Conflict Localization (Stage F: FAST)

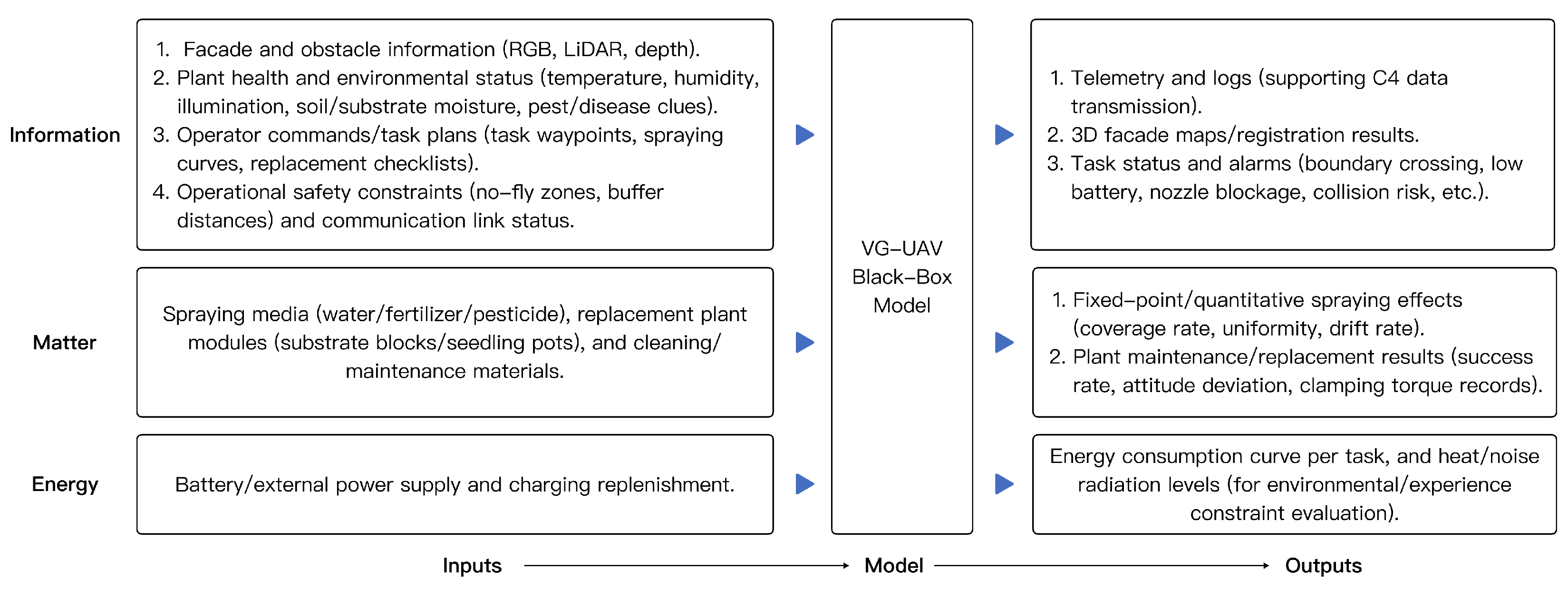

4.2.1. VG-UAV Black-Box Model (Inputs–Model–Outputs)

- Inputs. The left interface comprises three port classes, aligned with Figure 6 and prioritized by the Top-6 weights from Section 4.1 (C1, C6, C2, C17, C18, C3).

  - Information: Facade and obstacle information (RGB, LiDAR, depth); plant health and environmental status (temperature, humidity, illumination, soil/substrate moisture, pest/disease clues); operator commands/task plans (task waypoints, spraying curves, replacement checklists); operational safety constraints (no-fly zones, buffer distances); and communication link status.

  - Matter: Spraying media (water/fertilizer/pesticide), replacement plant modules (substrate blocks/seedling pots), and cleaning/maintenance materials.

  - Energy: Battery/external power supply and charging replenishment.

- Outputs. Right-side deliverables are grouped as follows:

  - Information: Telemetry and logs (supporting C4 data transmission), 3D facade maps/registration results, and task status and alarms (boundary crossing, low battery, nozzle blockage, collision risk, etc.).

  - Matter: Fixed-point/quantitative spraying effects (coverage rate, uniformity, drift rate) and plant maintenance/replacement results (success rate, attitude deviation, clamping torque records).

  - Energy/loss: Energy consumption curve per task, and heat/noise radiation levels (for environmental/experience constraint evaluation).

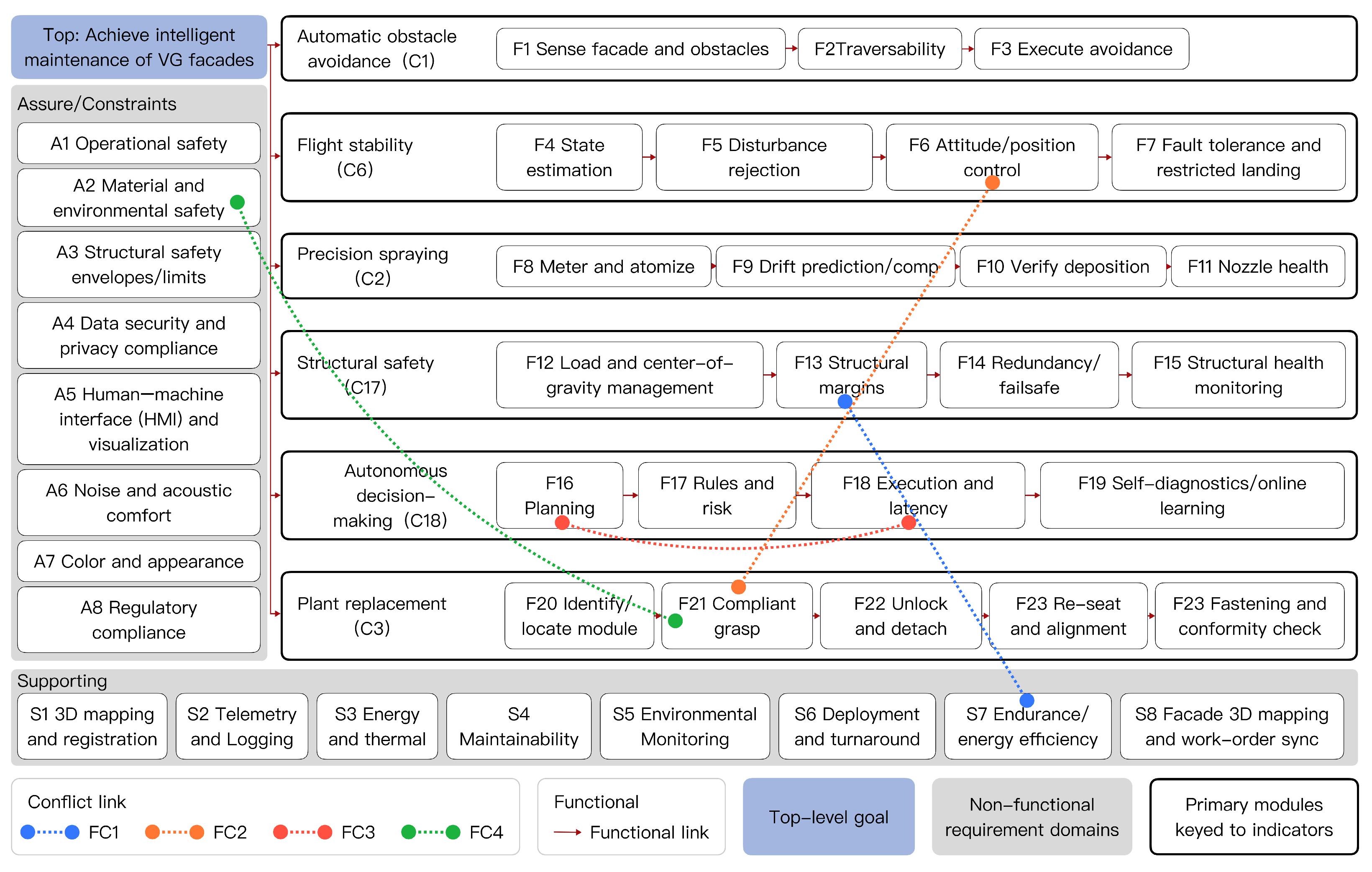

4.2.2. FAST-Based Functional Tree Construction

- Anchor the primary “How” paths with the Top-6 requirements from 4.1: C1 Obstacle Avoidance, C6 Flight Stability, C2 Precision Spraying, C17 Structural Safety, C18 Autonomous Decision-Making, and C3 Plant Replacement.

- Decompose each path into executable subfunctions (F-nodes) along the Why–How axis, preserving task causality and control flow.

- Attach supporting functions (S-nodes) that cross-serve multiple branches, and bind assurance/constraint functions (A-nodes) that cap risk across the whole tree.

- Localize cross-branch conflicts (FC1–FC4) as dashed links to guide downstream TRIZ resolution.

- C1 Automatic Obstacle Avoidance: F1 Sense facade/obstacles → F2 Traversability → F3 Execute avoidance.

- C6 Flight Stability: F4 State estimation → F5 Disturbance rejection → F6 Attitude/position control → F7 Fault tolerance and restricted landing.

- C2 Precision Spraying: F8 Meter and atomize → F9 Drift prediction/compensation → F10 Verify deposition → F11 Nozzle health.

- C17 Structural Safety: F12 Load and center-of-gravity management → F13 Structural margins → F14 Redundancy/failsafe → F15 Structural health monitoring.

- C18 Autonomous Decision-Making: F16 Planning → F17 Rules and risk → F18 Execution and latency → F19 Self-diagnostics/online learning.

- C3 Plant Replacement: F20 Identify/locate module → F21 Compliant grasp → F22 Unlock and detach → F23 Re-seat and alignment → F24 Fastening and conformity check.

- FC1 (blue): S7 Endurance/energy efficiency ↔ F13 Structural margins—lightweighting vs. structural safety.

- FC2 (orange): F21 Compliant grasp ↔ F6 Attitude/position control—manipulator/payload disturbance vs. flight stability.

- FC3 (red): F16 Planning ↔ F18 Execution and latency—autonomy complexity vs. real-time deadlines.

- FC4 (green): F21 Compliant grasp ↔ A2 Material and environmental safety—grasp stiffness vs. botanical compliance.
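The branches and conflict links listed above map naturally onto a small lookup structure. The following sketch encodes only the node codes given in the text; the `owner` helper and the "supporting"/"assurance" labels for S- and A-nodes are illustrative names, not part of the original model.

```python
# How-paths per Top-6 requirement, using the F-node codes from the list above.
BRANCHES = {
    "C1": ["F1", "F2", "F3"],
    "C6": ["F4", "F5", "F6", "F7"],
    "C2": ["F8", "F9", "F10", "F11"],
    "C17": ["F12", "F13", "F14", "F15"],
    "C18": ["F16", "F17", "F18", "F19"],
    "C3": ["F20", "F21", "F22", "F23", "F24"],
}

# Conflict register: each conflict links two nodes of the tree.
CONFLICTS = {
    "FC1": ("S7", "F13"),
    "FC2": ("F21", "F6"),
    "FC3": ("F16", "F18"),
    "FC4": ("F21", "A2"),
}


def owner(node: str) -> str:
    """Return the requirement whose How-path contains the node,
    or a class label for supporting (S) and assurance (A) nodes."""
    for requirement, nodes in BRANCHES.items():
        if node in nodes:
            return requirement
    if node.startswith("S"):
        return "supporting"
    if node.startswith("A"):
        return "assurance"
    raise KeyError(node)


# Which parts of the tree does each conflict connect?
endpoints = {fc: (owner(a), owner(b)) for fc, (a, b) in CONFLICTS.items()}
```

Resolving the endpoints this way shows, for example, that FC2 couples the plant-replacement path (C3) with the flight-stability path (C6), whereas both ends of FC3 lie on the autonomous decision-making path (C18).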

4.3. Conflict Transformation and Concept Generation (Stage T: TRIZ)

4.3.1. Parameterizing the Conflicts

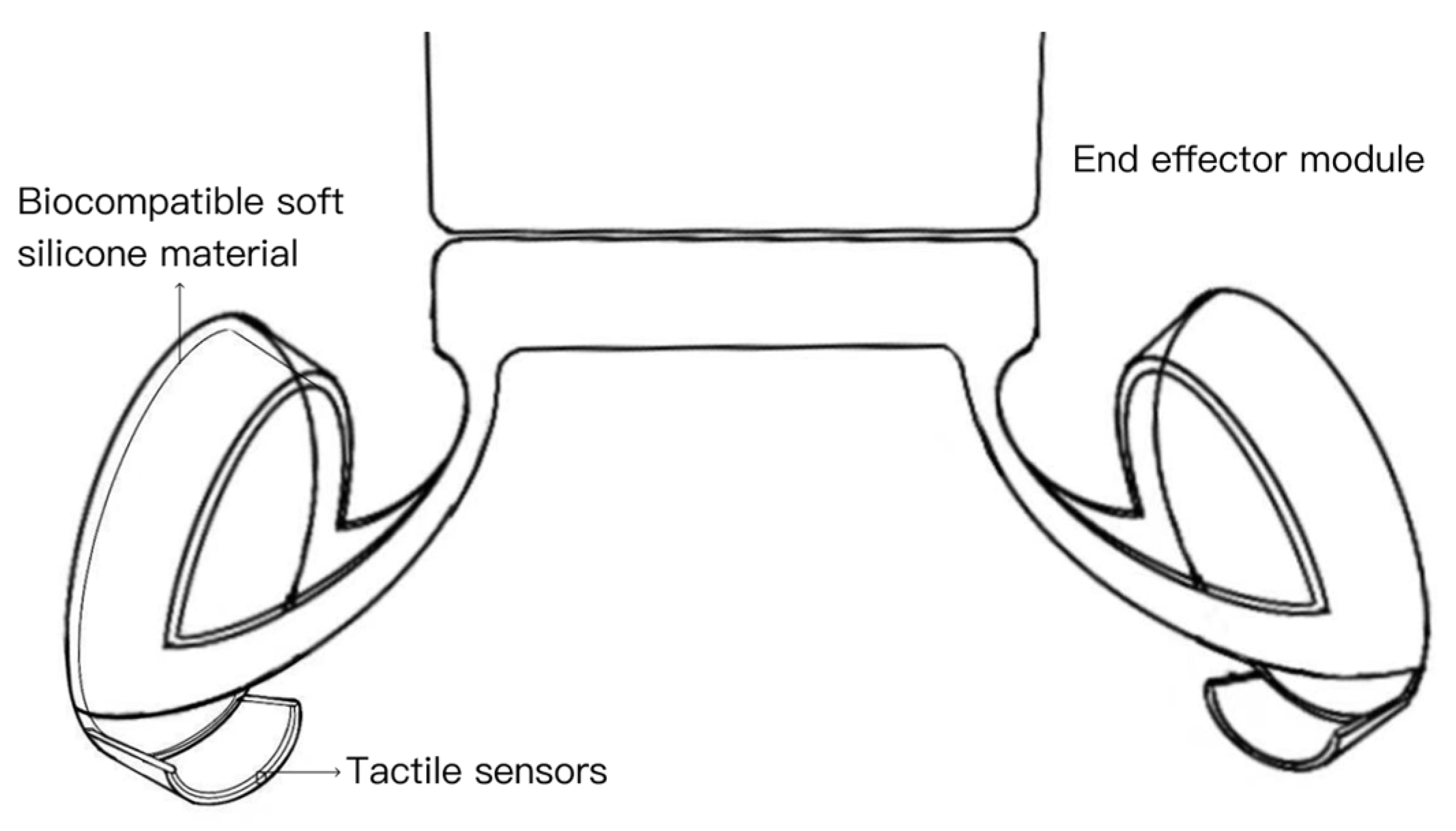

4.3.2. Selected Principles and Concept Generation

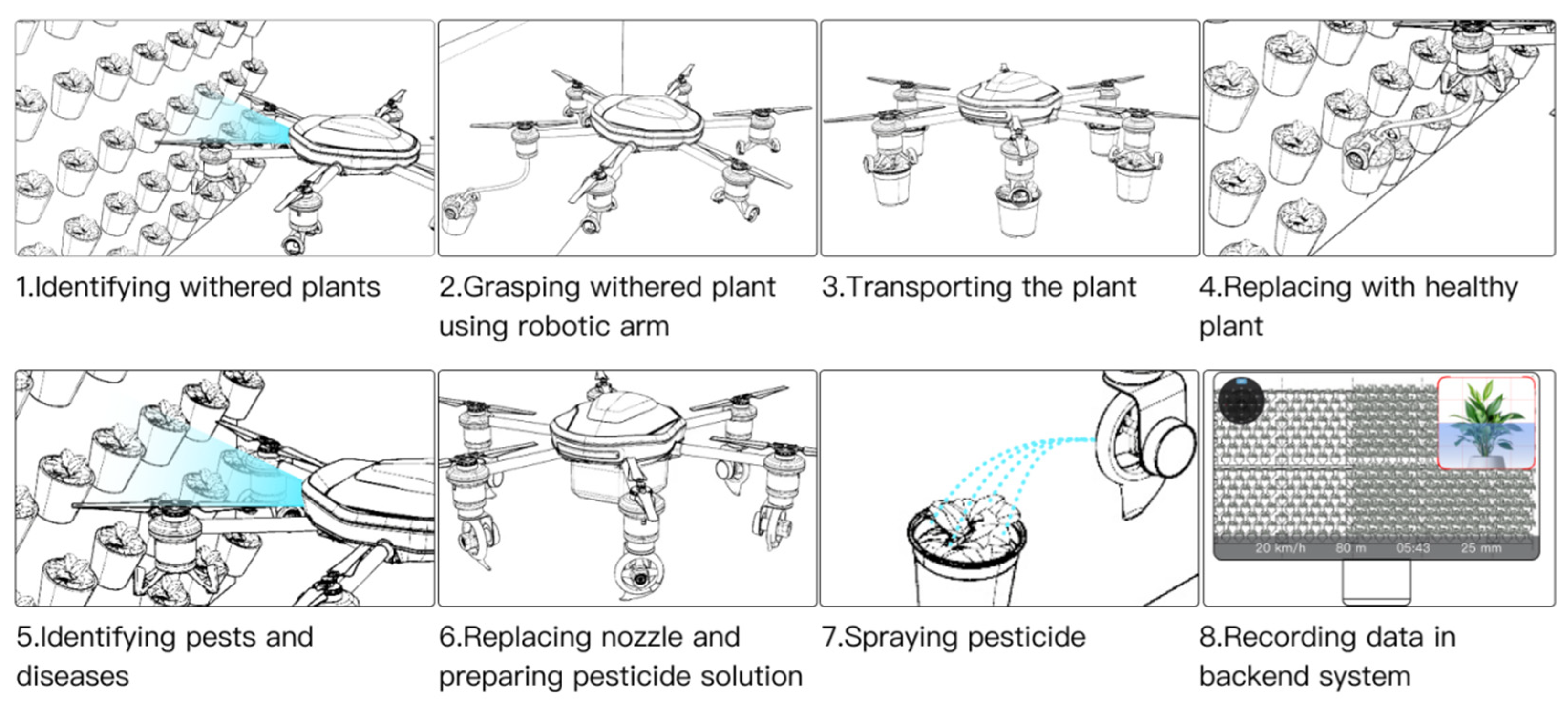

4.4. Design Scheme

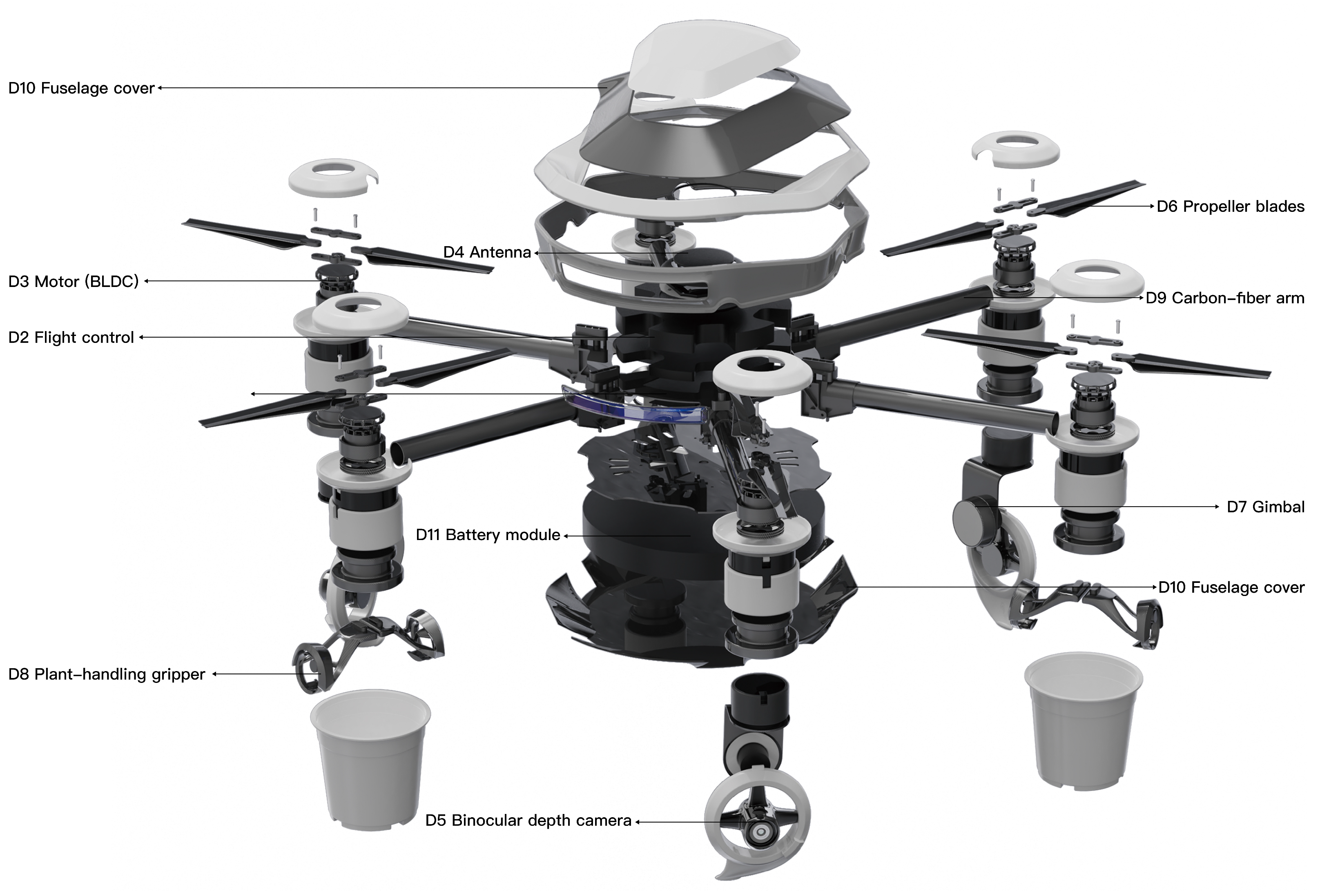

4.4.1. Airframe and Mission Modules (Rhino–KeyShot)

4.4.2. Mission Workflow

4.4.3. Web-Based HMI

4.4.4. Visual System

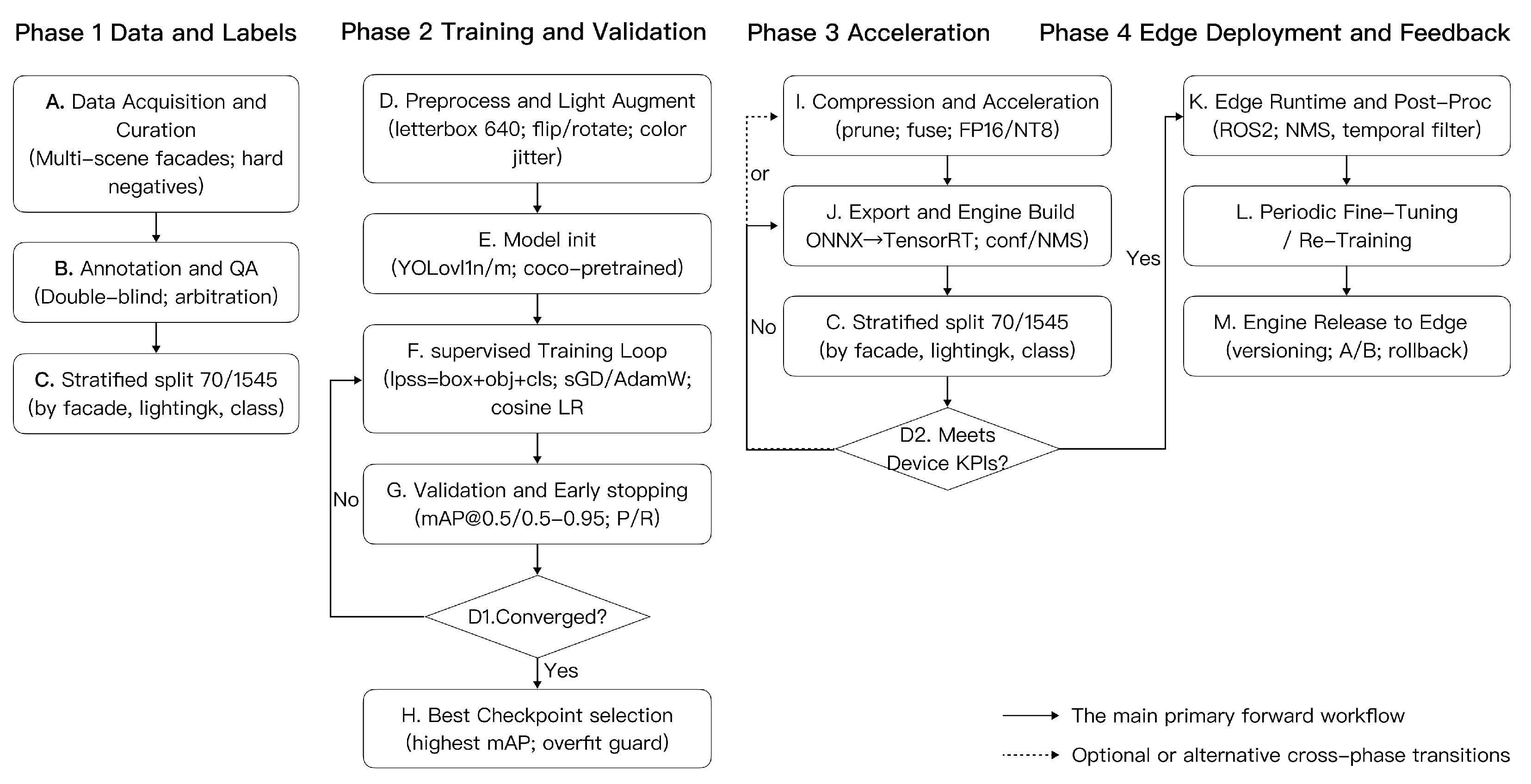

- Data and labels. Curate multi-scene facade imagery with hard negatives; use double-blind annotation + adjudication; apply stratified splits (by facade type, lighting, and class balance) to prevent leakage.

- Training and validation. Initialize YOLOv11-lite weights; run supervised loops with early stopping and stratified K-fold checks; monitor mAP@50/50:95, precision/recall, and latency as co-primary criteria.

- Acceleration. Export ONNX → TensorRT; apply structured pruning and INT8 calibration to meet edge latency on the onboard SoC; verify accuracy drop < 1 pp mAP@50.

- Edge deployment and feedback. Integrate ROS 2 post-processing (NMS, temporal filters); log telemetry/images/QC to the backend for periodic fine-tuning (Step 8 of the operational loop).
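The NMS post-processing step mentioned above can be sketched in plain Python. This is a generic greedy non-maximum suppression over axis-aligned boxes, shown for illustration; it is not the authors' ROS 2 node, and the box format `(x1, y1, x2, y2)` and the 0.5 IoU threshold are assumptions.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping a kept one."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two overlapping detections and one separate detection.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

The second box overlaps the first with an IoU of about 0.68 and is suppressed, so indices 0 and 2 remain.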

4.5. Design Evaluation

4.5.1. Baseline Systems and Selection Rationale

4.5.2. Evaluation Criteria and Scoring Methodology

- Functionality encompasses the core operational capabilities of the system (e.g., obstacle avoidance, precision spraying, plant replacement, data transmission, environmental monitoring).

- Performance covers quantitative operational metrics (e.g., flight stability, endurance time, payload capacity, adaptability to facade geometry).

- User Experience addresses ergonomic and aesthetic factors (e.g., human-factor sizing, visual integration with the environment, user interface usability, overall form appeal).

- Safety includes operational, material, environmental, and structural safety aspects (e.g., fail-safe operation, material reliability, minimal environmental impact, structural integrity under stress).

- Intelligence evaluates autonomous and smart maintenance capabilities (e.g., onboard autonomous decision-making and predictive maintenance functions).

- Criterion-Level Scoring: For each criterion Ci, collect the scores assigned to each scheme by the experts and compute the average score. This yields an average performance score for S1, S2, and S3 on each individual indicator C1–C19.

- Dimension Aggregation: For each scheme, aggregate its criterion scores into the five B-level dimension scores. This is performed by computing a weighted average of the C-level scores within each cluster B1–B5, using the ANP-derived weight of each criterion as the weighting factor. In other words, a scheme’s score on a given dimension (e.g., B1 Functionality) is the sum of its scores on the associated criteria (C1–C5 for Functionality), each multiplied by that criterion’s priority weight (from the ANP limit supermatrix). This produces a weighted mean score for each dimension per scheme.

- Composite Score Calculation: Compute an overall composite score for each scheme by taking a weighted sum of its five dimension scores, using the relative importance weights of B1–B5 as coefficients. This mirrors the ANP cluster weights, thereby emphasizing dimensions in proportion to their importance. The resulting composite score is a single value (out of 5) that reflects the scheme’s overall performance with respect to all evaluated criteria.
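The three scoring steps can be sketched as follows. The function names, the example ratings, and the weights are hypothetical; the sketch also assumes that criterion weights are renormalized within each cluster, so that a dimension score remains a weighted mean on the 1–5 scale.

```python
from statistics import mean
from typing import Dict, List


def criterion_means(expert_scores: Dict[str, List[float]]) -> Dict[str, float]:
    """Step 1: average the expert ratings for each criterion."""
    return {c: mean(v) for c, v in expert_scores.items()}


def weighted_mean(scores: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted mean with weights renormalized over the keys of `scores`."""
    total = sum(weights[k] for k in scores)
    return sum(scores[k] * weights[k] for k in scores) / total


def composite(expert_scores, clusters, criterion_w, cluster_w) -> float:
    """Steps 2 and 3: dimension scores per cluster, then the overall score."""
    c_mean = criterion_means(expert_scores)
    dim = {
        b: weighted_mean({c: c_mean[c] for c in members}, criterion_w)
        for b, members in clusters.items()
    }
    return weighted_mean(dim, cluster_w)


# Hypothetical inputs for one scheme: two clusters, three criteria, two experts.
expert_scores = {"C1": [4, 5], "C2": [3, 3], "C6": [4, 4]}
clusters = {"B1": ["C1", "C2"], "B2": ["C6"]}
criterion_w = {"C1": 0.2, "C2": 0.1, "C6": 0.3}   # placeholder ANP weights
cluster_w = {"B1": 0.5, "B2": 0.5}                # placeholder cluster weights
score = composite(expert_scores, clusters, criterion_w, cluster_w)
```

With these placeholder inputs both dimension scores equal 4.0, so the composite score is also 4.0 out of 5.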

4.5.3. Results and Comparative Analysis

4.6. Analytical Feasibility Envelope

4.6.1. Equations and Definitions

4.6.2. Parameterization and Data Sources

4.6.3. Numerical Substitutions and Compact Results

- Thrust margin (Equation (9)). Using Equation (10) with the per-rotor power and per-rotor disk area (see Table 12), we obtain the thrust per rotor and hence the total thrust in kN, from which the thrust margin follows.

- Hover power and endurance (Equations (10) and (11)). Hover power is expressed in kW and usable energy in kWh, and endurance is evaluated in its minutes form. Results: empty hover 34.7 min; spraying (+250 W) 33.2 min; MTOW hover 19.8 min.

- Crosswind tilt (Equation (12)). For the wind speeds considered, the small-angle condition holds, so the small-angle approximation is good.

- Grasp disturbance (Equation (13)). The disturbance is evaluated from the grasped mass (kg) and the associated length parameter (m).

- Drift upper bound and uniformity (Equation (14)) (h = 2.00 m). Each of the two quantities is evaluated at two operating points.

- Uniformity criterion for effective swath: CV ≤ 35%.

- Near-wall safety (Equation (16)). The required stable sensing distance (in m) is obtained from the reaction distance and the braking distance.
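Two of the substitutions above lend themselves to a quick numerical sketch. The formulas used here are standard textbook forms and are assumptions: endurance in minutes as 60·E/P (usable energy in kWh over power in kW), and stopping distance as reaction distance plus constant-deceleration braking distance. They may differ in detail from Equations (11) and (16), and all input values are illustrative, not the parameters of the paper.

```python
def endurance_minutes(usable_energy_kwh: float, power_kw: float) -> float:
    """Endurance in minutes for a constant power draw (assumed form: 60 * E / P)."""
    if power_kw <= 0:
        raise ValueError("power must be positive")
    return 60.0 * usable_energy_kwh / power_kw


def stopping_distance_m(speed_mps: float, reaction_time_s: float, decel_mps2: float) -> float:
    """Reaction distance v * t_r plus braking distance v^2 / (2 * a)."""
    if decel_mps2 <= 0:
        raise ValueError("deceleration must be positive")
    reaction = speed_mps * reaction_time_s
    braking = speed_mps ** 2 / (2.0 * decel_mps2)
    return reaction + braking


# Illustrative values only (not taken from the paper).
hover = endurance_minutes(3.0, 6.0)           # 3 kWh usable at 6 kW
spraying = endurance_minutes(3.0, 6.0 + 0.25) # same pack with a 250 W pump load added
stop = stopping_distance_m(2.0, 0.5, 4.0)     # 2 m/s approach, 0.5 s latency, 4 m/s^2
```

The first function shows how an added constant load such as the 250 W spraying draw shortens endurance; the second shows why the sensing range must exceed the sum of the reaction and braking distances.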

4.6.4. Conclusions

5. Discussion

5.1. Interpretation of the Main Findings

5.2. Practical Implications for VG and UAV Practitioners

5.3. Methodological Implications and Impacts on the D-A-F-T Framework

5.4. Limitations, Risks, and Scalability

6. Conclusions

6.1. Summary of Findings and Contributions

6.2. Future Work

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| D-A-F-T | DEMATEL-ANP-FAST-TRIZ |

| VG | Vertical Greening |

| UHI | Urban Heat Island |

| UAV | Unmanned Aerial Vehicle |

| ANP | Analytic Network Process |

| DEMATEL | Decision-Making Trial and Evaluation Laboratory |

| FAST | Functional Analysis System Technique |

| TRIZ | Theory of Inventive Problem Solving |

Appendix A. VG-UAV User Requirement Indicator Survey (Round-1)

Appendix A.1. Purpose and Scope

Appendix A.2. Respondent Profile (to Be Completed by Experts)

Appendix A.3. Rating Instructions

Appendix A.4. Indicator Set and Item Wording (R1)

| Dimension | Indicator | Operational Description (Item Wording) | Importance (1–5) | Category Reasonable? (Y/N) | Remarks |

|---|---|---|---|---|---|

| Function | Obstacle avoidance | In complex facade environments, detect and avoid obstacles via multi-sensor fusion and online path planning. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Precision spraying | Deliver water/fertilizer/chemicals at fixed points and doses according to plant water/nutrient needs and micro-plot variation. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Data transmission | Upload operation and environmental parameters to a remote platform in real time to support telemetry and supervision. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Environmental monitoring | Sense micro-environmental factors (temperature, humidity, illumination, etc.) in real time to support decisions. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Facade 3D path planning | Build/update facade point clouds (SLAM) and align with BIM/GIS to anchor ROIs and revisit paths. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| Performance | Flight stability | Maintain stable hover and controllable flight under gusts and boundary-layer effects. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Endurance time | Sustainable operation time per charge or energy-swap cycle. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Payload capacity | Safe carrying limit for task payloads (e.g., nozzle, gripper, tank). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Deployment and turnaround efficiency | Time/steps from arrival to takeoff and from battery swap to relaunch minimized. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Energy efficiency | Energy consumption per unit of task output. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| User experience | Human-factor size/handling | Volume/weight/grip suitable for one-person or small-team carry and deployment. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Color harmonization | Body colorway harmonizes with urban visual context and minimizes visual intrusion. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Aesthetic form | Exterior aligns with contemporary industrial design aesthetics and conveys professional quality. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Noise and acoustic comfort | Overall noise level/spectrum and its impact on the public and operators. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| Safety | Operational safety | Prevention of personnel/environmental risks in high-altitude operations and fail-safe protection. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Material safety | Materials are eco-friendly and non-toxic; comply with industrial safety and sustainability norms. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Environmental safety | Avoid pollution or secondary harm during operations (e.g., control of spray drift/runoff). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Structural safety | Resistance to impact/vibration/fatigue to maintain mechanical integrity. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Data security and privacy compliance | Encryption, access control, and audit trails in line with applicable regulations. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| Intelligence | Autonomous decision-making | Perception-driven autonomous path planning, task allocation, and real-time re-planning. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Maintainability and modularity | Standard interfaces, tool-less quick-release, and accessibility to minimize downtime. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Self-diagnosis and fault tolerance | Health monitoring, redundancy, and graceful degradation to sustain mission continuity. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

Appendix A.5. Open-Ended Question (R1—Proposal Only, Not Scored)

Appendix B. VG-UAV User Requirement Indicator Survey (Round-2)

Appendix B.1. Purpose and Scope

Appendix B.2. Respondent Profile (to Be Completed by Experts)

Appendix B.3. Rating Instructions

Appendix B.4. Consolidated Indicator List and Item Wording (R2)

| Dimension | Indicator | Operational Description (Item Wording) | Importance (1–5) | Category Reasonable? (Y/N) | Remarks |

|---|---|---|---|---|---|

| Function | Autonomous obstacle avoidance | Detect and avoid obstacles in complex facade environments via multi-sensor fusion and online path planning. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Precision spraying | Point/dose-accurate water/fertilizer/chemical delivery per plant needs and micro-plot variation. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Plant replacement | Identify, grasp, and replace modular plants through vision-end-effector coordination. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Data transmission | Real-time upload of operation and environmental parameters to a remote platform (telemetry/supervision). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Environmental monitoring | Real-time sensing of micro-environment (temperature, humidity, illumination) to support decisions. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| Performance | Flight stability | Maintain stable hover and controllable flight under gusts and boundary-layer effects. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Endurance time | Sustainable operating time per charge or energy-swap cycle. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Payload capacity | Safe payload limit for mission modules (e.g., nozzle, gripper, liquid tank). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Facade adaptability | Operational adaptability to diverse facade structures/textures/heights (consolidates goals of facade 3D modeling and spatial registration). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| User experience | Human-factor sizing | Volume/weight/grip suitable for one-person or small-team carry and deployment. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Color harmonization | Body colorway harmonizes with urban visual context to reduce visual intrusion. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | User interface | Clear logic, intuitive layout, and user-friendly interaction at the control terminal. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Aesthetic form | Exterior aligns with contemporary industrial design and conveys professional quality. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| Safety | Operational safety | Prevention of personnel/environmental risks in high-altitude operations; fail-safe protection. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Material safety | Eco-friendly, non-toxic materials compliant with industrial safety and sustainability norms. | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Environmental safety | Avoid pollution or secondary harm during operations (e.g., control of spray drift/runoff). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Structural safety | Resistance to impact/vibration/fatigue; accommodates complex disturbances (partly absorbing reliability concerns of self-diagnosis/fault tolerance). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| Intelligence | Autonomous decision-making | Perception-driven path planning, task allocation, and real-time re-planning (partly absorbing algorithm robustness of self-diagnosis/fault tolerance). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

| | Predictive maintenance | State monitoring/log analytics and data modeling for early detection of equipment and plant anomalies (absorbing maintainability/modularity objectives). | ☐1 ☐2 ☐3 ☐4 ☐5 | ☐Y ☐N | |

Appendix C. VG-UAV User Requirement Indicators—DEMATEL Questionnaire

Appendix C.1. Purpose and Notes

Appendix C.2. Indicator Set and Operational Definitions

| Target (A) | Criterion (B) | Indicator (C, Code) | Operational Description |

|---|---|---|---|

| VG-UAV design goal | Function (B1) | Autonomous obstacle avoidance (C1) | Detect and avoid obstacles in complex facade environments via multi-sensor fusion and online path planning. |

| | | Precision spraying (C2) | Point/dose-accurate water/fertilizer/chemical delivery per plant needs and micro-plot variation. |

| | | Plant replacement (C3) | Identify, grasp, and replace modular plants through vision-end-effector coordination. |

| | | Data transmission (C4) | Real-time upload of operation and environmental parameters to a remote platform (telemetry/supervision). |

| | | Environmental monitoring (C5) | Real-time sensing of micro-environment (temperature, humidity, illumination) to support decisions. |

| | Performance (B2) | Flight stability (C6) | Maintain stable hover and controllable flight under gusts and boundary-layer effects. |

| | | Endurance time (C7) | Sustainable operating time per charge or energy-swap cycle. |

| | | Payload capacity (C8) | Safe payload limit for mission modules (e.g., nozzle, gripper, liquid tank). |

| | | Facade adaptability (C9) | Operational adaptability to diverse facade structures/textures/heights (consolidates goals of facade 3D modeling and spatial registration). |

| | User experience (B3) | Human-factor sizing (C10) | Volume/weight/grip suitable for one-person or small-team carry and deployment. |

| | | Color harmonization (C11) | Body colorway harmonizes with urban visual context to reduce visual intrusion. |

| | | User interface (C12) | Clear logic, intuitive layout, and user-friendly interaction at the control terminal. |

| | | Aesthetic form (C13) | Exterior aligns with contemporary industrial design and conveys professional quality. |

| | Safety (B4) | Operational safety (C14) | Prevention of personnel/environmental risks in high-altitude operations; fail-safe protection. |

| | | Material safety (C15) | Eco-friendly, non-toxic materials compliant with industrial safety and sustainability norms. |

| | | Environmental safety (C16) | Avoid pollution or secondary harm during operations (e.g., control of spray drift/runoff). |

| | | Structural safety (C17) | Resistance to impact/vibration/fatigue; accommodates complex disturbances (partly absorbing reliability concerns of self-diagnosis/fault tolerance). |

| | Intelligence (B5) | Autonomous decision-making (C18) | Perception-driven path planning, task allocation, and real-time re-planning (partly absorbing algorithm robustness of self-diagnosis/fault tolerance). |

| | | Predictive maintenance (C19) | State monitoring/log analytics and data modeling for early detection of equipment and plant anomalies (absorbing maintainability/modularity objectives). |

Appendix C.3. How to Score (Matrix Format)

| | C1 | C2 |

|---|---|---|

| C1 | 0 | 3 |

| C2 | 1 | 0 |
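To show how a filled-in matrix of this kind is typically processed, the sketch below runs a textbook DEMATEL computation on the 2 × 2 example above. It makes several assumptions: rows are read as the influencing indicator and columns as the influenced one, the matrix is normalized by the largest row or column sum (one common variant), and the total-relation matrix is T = N(I − N)⁻¹. The helper names are illustrative, and a real study would first average the matrices of all experts.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def inverse(m: Matrix) -> Matrix:
    """Gauss-Jordan inverse with partial pivoting."""
    n = len(m)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(m)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [v - f * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def dematel(direct: Matrix) -> Tuple[Matrix, List[float], List[float]]:
    """Return the total-relation matrix, centrality (D + R) and causality (D - R)."""
    n = len(direct)
    row_max = max(sum(row) for row in direct)
    col_max = max(sum(direct[i][j] for i in range(n)) for j in range(n))
    scale = max(row_max, col_max)  # assumed normalization variant
    norm = [[v / scale for v in row] for row in direct]
    i_minus_n = [[(1.0 if i == j else 0.0) - norm[i][j] for j in range(n)] for i in range(n)]
    total = mat_mul(norm, inverse(i_minus_n))
    d = [sum(row) for row in total]                                  # influence given
    r = [sum(total[i][j] for i in range(n)) for j in range(n)]       # influence received
    return total, [a + b for a, b in zip(d, r)], [a - b for a, b in zip(d, r)]


total, centrality, causality = dematel([[0, 3], [1, 0]])
```

For the example scores, C1 comes out as a net driver (positive causality) and C2 as a net receiver, with equal centrality for both.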

Appendix C.4. DEMATEL Rating Matrix (C1–C19)

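| | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | C11 | C12 | C13 | C14 | C15 | C16 | C17 | C18 | C19 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| C1 | | | | | | | | | | | | | | | | | | | |

| C2 | | | | | | | | | | | | | | | | | | | |

| C3 | | | | | | | | | | | | | | | | | | | |

| C4 | | | | | | | | | | | | | | | | | | | |

| C5 | | | | | | | | | | | | | | | | | | | |

| C6 | | | | | | | | | | | | | | | | | | | |

| C7 | | | | | | | | | | | | | | | | | | | |

| C8 | | | | | | | | | | | | | | | | | | | |

| C9 | | | | | | | | | | | | | | | | | | | |

| C10 | | | | | | | | | | | | | | | | | | | |

| C11 | | | | | | | | | | | | | | | | | | | |

| C12 | | | | | | | | | | | | | | | | | | | |

| C13 | | | | | | | | | | | | | | | | | | | |

| C14 | | | | | | | | | | | | | | | | | | | |

| C15 | | | | | | | | | | | | | | | | | | | |

| C16 | | | | | | | | | | | | | | | | | | | |

| C17 | | | | | | | | | | | | | | | | | | | |

| C18 | | | | | | | | | | | | | | | | | | | |

| C19 | | | | | | | | | | | | | | | | | | | |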

Appendix D. VG-UAV User Requirement Indicators—ANP Questionnaire

Appendix D.1. Purpose and Notes

Appendix D.2. Instructions and Saaty Scale

Appendix D.3. Element Lists

Appendix D.4. Questionnaire Content

Appendix E

| No. | Parameter Name | Category |

|---|---|---|

| 1 | Weight of Moving Object | Physical |

| 2 | Weight of Stationary Object | Physical |

| 3 | Length of Moving Object | Geometric |

| 4 | Length of Stationary Object | Geometric |

| 5 | Area of Moving Object | Geometric |

| 6 | Area of Stationary Object | Geometric |

| 7 | Volume of Moving Object | Geometric |

| 8 | Volume of Stationary Object | Geometric |

| 9 | Speed | Physical |

| 10 | Force | Physical |

| 11 | Stress/Pressure | Physical |

| 12 | Shape | Geometric |

| 13 | Structural Stability | Capability |

| 14 | Strength | Capability |

| 15 | Action Time (Moving Object) | Capability |

| 16 | Action Time (Stationary Object) | Capability |

| 17 | Temperature | Physical |

| 18 | Illuminance | Physical |

| 19 | Energy Consumption (Moving Object) | Resource |

| 20 | Energy Consumption (Stationary Object) | Resource |

| 21 | Power | Physical |

| 22 | Energy Loss | Resource |

| 23 | Material Loss | Resource |

| 24 | Information Loss | Resource |

| 25 | Time Loss | Resource |

| 26 | Quantity of Substance/Matter | Resource |

| 27 | Reliability | Capability |

| 28 | Measurement Accuracy | Controllability |

| 29 | Manufacturing Precision | Controllability |

| 30 | Harmful Factors (Acting on Object) | Harm |

| 31 | Harmful Factors (Generated by Object) | Harm |

| 32 | Manufacturability | Capability |

| 33 | Operability | Controllability |

| 34 | Maintainability | Capability |

| 35 | Adaptability and Versatility | Capability |

| 36 | Equipment Complexity | Controllability |

| 37 | Detection Complexity | Controllability |

| 38 | Automation Level | Controllability |

| 39 | Productivity | Capability |

Appendix F

| No. | Name | No. | Name |

|---|---|---|---|

| 1 | Segmentation | 21 | Skipping (Reduce Harm Time) |

| 2 | Extraction | 22 | Blessing in Disguise (Harm → Benefit) |

| 3 | Local Quality | 23 | Feedback |

| 4 | Asymmetry | 24 | Mediator (Intermediary) |

| 5 | Combination | 25 | Self-Service |

| 6 | Universality (Diversity) | 26 | Copying |

| 7 | Nesting | 27 | Cheap Substitute |

| 8 | Counterweight (Mass Compensation) | 28 | Mechanical Substitution |

| 9 | Preliminary Anti-Action | 29 | Pneumatic/Hydraulic Structure |

| 10 | Preliminary Action | 30 | Flexible Membrane/Shell |

| 11 | Cushioning (Precaution) | 31 | Porous Materials |

| 12 | Equipotentiality | 32 | Color Changes |

| 13 | Reverse Action | 33 | Homogeneity |

| 14 | Curvature (Surfaceization) | 34 | Discarding and Recovering |

| 15 | Dynamics (Dynamic Features) | 35 | Parameter Changes (Physical/Chemical) |

| 16 | Partial/Excessive Action | 36 | Phase Transition |

| 17 | Dimension Change | 37 | Thermal Expansion |

| 18 | Vibration | 38 | Strong Oxidants |

| 19 | Periodic Action | 39 | Inert Environment |

| 20 | Continuity of Useful Action | 40 | Composite Materials |

References

- Pan, L.; Zheng, X.-N.; Luo, S.; Mao, H.-J.; Meng, Q.-L.; Chen, J.-R. Review on building energy saving and outdoor cooling effect of vertical greenery systems. Chin. J. Appl. Ecol. 2023, 34, 2871–2880.

- Wang, P.; Wong, Y.H.; Tan, C.Y.; Li, S.; Chong, W.T. Vertical Greening Systems: Technological Benefits, Progresses and Prospects. Sustainability 2022, 14, 12997.

- Wu, Q.; Huang, Y.; Irga, P.; Kumar, P.; Li, W.; Wei, W.; Shon, H.K.; Lei, C.; Zhou, J.L. Synergistic Control of Urban Heat Island and Urban Pollution Island Effects Using Green Infrastructure. J. Environ. Manag. 2024, 370, 122985.

- Okwandu, A.C.; Akande, D.O.; Nwokediegwu, Z.Q.S. Green Architecture: Conceptualizing Vertical Greenery in Urban Design. Eng. Sci. Technol. J. 2024, 5, 1657–1677.

- Irga, P.J.; Torpy, F.R.; Griffin, D.; Wilkinson, S.J. Vertical Greening Systems: A Perspective on Existing Technologies and New Design Recommendation. Sustainability 2023, 15, 6014.

- Farrokhirad, E.; Rigillo, M.; Köhler, M.; Perini, K. Optimising Vertical Greening Systems for Sustainability: An Integrated Design Approach. Int. J. Sustain. Energy 2024, 43, 2411831.

- Xu, H.; Yang, Y.; Li, J.; Huang, X.; Han, W.; Wang, Y. A Unmanned Aerial Vehicle System for Urban Management. In Proceedings of the IGARSS 2024—2024 IEEE International Geoscience and Remote Sensing Symposium, Athens, Greece, 7–12 July 2024; pp. 4617–4620.

- Chen, G.; Lin, Y.; Wu, X.; Yue, R.; Chen, W. An Unmanned Aerial Vehicle Based Intelligent Operator for Power Transmission Lines Maintenance. In Proceedings of the 2024 Second International Conference on Cyber-Energy Systems and Intelligent Energy (ICCSIE), Shenyang, China, 17–19 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Tsellou, A.; Livanos, G.; Ramnalis, D.; Polychronos, V.; Plokamakis, G.; Zervakis, M.; Moirogiorgou, K. A UAV Intelligent System for Greek Power Lines Monitoring. Sensors 2023, 23, 8441.

- Forcael, E.; Román, O.; Stuardo, H.; Herrera, R.; Soto-Muñoz, J. Evaluation of Fissures and Cracks in Bridges by Applying Digital Image Capture Techniques Using an Unmanned Aerial Vehicle. Drones 2023, 8, 8.

- Dutta, M.; Gupta, D.; Sahu, S.; Limkar, S.; Singh, P.; Mishra, A.; Kumar, M.; Mutlu, R. Evaluation of Growth Responses of Lettuce and Energy Efficiency of the Substrate and Smart Hydroponics Cropping System. Sensors 2023, 23, 1875.

- Ng, H.T.; Tham, Z.K.; Abdul Rahim, N.A.; Rohim, A.W.; Looi, W.W.; Ahmad, N.S. IoT-Enabled System for Monitoring and Controlling Vertical Farming Operations. Int. J. Reconfigurable Embed. Syst. (IJRES) 2023, 12, 453.

- Aiyetan, A.O.; Das, D.K. Use of Drones for Construction in Developing Countries: Barriers and Strategic Interventions. Int. J. Constr. Manag. 2023, 23, 2888–2897.

- Hu, S.; Xin, J.; Zhang, D.; Xing, G. Research on the Design Method of Camellia Oleifera Fruit Picking Machine. Appl. Sci. 2024, 14, 8537.

- Zhou, H.; Chen, Y.; Zhang, X. Design of Electric Water Heaters Based on QFD-TRIZ. Packag. Eng. 2023, 44, 215–223.

- Huang, J.; Lin, J.; Feng, T. Design of agricultural plant protection UAV based on Kano-AHP. J. Fujian Univ. Technol. 2023, 21, 97–102.

- Su, C.; Li, X.; Jiang, Y.; Li, C. Design of intelligent home health equipment based on DEMATEL-ANP. J. Mach. Des. 2025, 42, 161–167.

- Thakkar, J.J. Decision-Making Trial and Evaluation Laboratory (DEMATEL). In Multi-Criteria Decision Making; Thakkar, J.J., Ed.; Springer: Singapore, 2021; pp. 139–159. ISBN 978-981-334-745-8.

- Zhou, Q.; Tang, F.; Zhu, Y. Research on Product Design for Improving Children’s Sitting Posture Based on DEMATEL-ISM-TOPSIS Method. Furnit. Inter. Des. 2024, 31, 48–55.

- Taherdoost, H.; Madanchian, M. Analytic Network Process (ANP) Method: A Comprehensive Review of Applications, Advantages, and Limitations. J. Data Sci. Intell. Syst. (JDSIS) 2023, 1, 12–18.

- Viola, N.; Corpino, S.; Fioriti, M.; Stesina, F. Functional Analysis in Systems Engineering: Methodology and Applications. In Systems Engineering—Practice and Theory; InTech: London, UK, 2012; ISBN 978-953-51-0322-6.

- Xie, Q.; Liu, Q. Application of TRIZ Innovation Method to In-Pipe Robot Design. Machines 2023, 11, 912.

- Tang, K.; Qian, Y.; Dong, H.; Huang, Y.; Lu, Y.; Tuerxun, P.; Li, Q. SP-YOLO: A Real-Time and Efficient Multi-Scale Model for Pest Detection in Sugar Beet Fields. Insects 2025, 16, 102.

- Zhang, M.; Ye, S.; Zhao, S.; Wang, W.; Xie, C. Pear Object Detection in Complex Orchard Environment Based on Improved YOLO11. Symmetry 2025, 17, 255.

- Zhu, H.; Lin, C.; Liu, G.; Wang, D.; Qin, S.; Li, A.; Xu, J.-L.; He, Y. Intelligent Agriculture: Deep Learning in UAV-Based Remote Sensing Imagery for Crop Diseases and Pests Detection. Front. Plant Sci. 2024, 15, 1435016.

- Holschemacher, D.; Müller, C.; Helbig, M.; Weisel, N. Large-Scale, Rope-Driven Robot for the Automated Maintenance of Urban Green Facades. In State-of-the-art Materials and Techniques in Structural Engineering and Construction, Structural Engineering and Construction, Proceedings of the Fourth European and Mediterranean Structural Engineering and Construction Conference (EURO-MED-SEC-4), Leipzig, Germany, 20–23 June 2022; Holschemacher, K., Quapp, U., Singh, A., Yazdani, S., Eds.; ISEC Press: Fargo, ND, USA, 2022; SUS-12.

- Jamšek, M.; Sajko, G.; Krpan, J.; Babič, J. Design and Control of a Climbing Robot for Autonomous Vertical Gardening. Machines 2024, 12, 141.

- Hattenberger, G.; Bronz, M.; Condomines, J.-P. Evaluation of Drag Coefficient for a Quadrotor Model. Int. J. Micro Air Veh. 2023, 15.

- Weber, C.; Eggert, M.; Udelhoven, T. Flight Attitude Estimation with Radar for Remote Sensing Applications. Sensors 2024, 24, 4905.

- SAMR; SAC. GB/T 43071—2023; Unmanned Aircraft Spray System for Plant Protection. State Administration for Market Regulation (SAMR); Standardization Administration of China (SAC): Beijing, China, 2023. Available online: https://openstd.samr.gov.cn/bzgk/gb/newGbInfo?hcno=DE5EB96756889201A2EBA08F003DB744 (accessed on 6 September 2025).

- Falanga, D.; Kleber, K.; Scaramuzza, D. Dynamic Obstacle Avoidance for Quadrotors with Event Cameras. Sci. Robot. 2020, 5, eaaz9712.

- ISO. ISO 2533:1975; Standard Atmosphere. International Organization for Standardization: Geneva, Switzerland, 1975.

| Phase | Retain | Retain but Revise | Revise/Relocate/Merge (Delete if Necessary) | Stopping Rule |
|---|---|---|---|---|
| Round 1 | Mean ≥ 3.122 and CV ≤ 0.185; median ≥ 4 with IQR ≤ 1 | Meets mean/CV but wording/attribution ambiguous, or median ∈ [3.5, 4) | Mean < 3.122 and/or CV > 0.185, or persistently low consensus after revision | Significant W (p < 0.001) and negligible “new/merge” suggestions |
| Round 2 | Mean ≥ 3.809 and CV ≤ 0.096; median ≥ 4 with IQR ≤ 1 | Thresholds met yet residual ambiguity → refine and keep | Fails tightened cutoffs or consensus remains low → merge/delete | Significant W (p < 0.001) and list stabilized → freeze |
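
The screening rules in the table above can be sketched as a small classifier. This is only an illustration under stated assumptions: the cutoffs are copied from the table, but the function name `screen_item`, the 1–5 rating scale, the use of the sample standard deviation for CV, the quartile method, and the example ratings are all hypothetical. The "wording/attribution ambiguous" condition needs expert judgment and is not modeled.

```python
import statistics

# Mean/CV cutoffs copied from the table above; all other choices are assumptions.
THRESHOLDS = {
    1: {"mean": 3.122, "cv": 0.185},
    2: {"mean": 3.809, "cv": 0.096},
}


def screen_item(scores, round_no):
    """Classify one candidate indicator from its expert ratings."""
    cut = THRESHOLDS[round_no]
    mean = statistics.mean(scores)
    cv = statistics.stdev(scores) / mean            # sample SD assumed
    median = statistics.median(scores)
    q1, _, q3 = statistics.quantiles(scores, n=4)   # default "exclusive" quartiles
    iqr = q3 - q1

    if mean < cut["mean"] or cv > cut["cv"]:
        return "revise_or_merge"
    if median >= 4 and iqr <= 1:
        return "retain"
    if 3.5 <= median < 4:
        return "retain_but_revise"
    return "revise_or_merge"


strong = [5, 5, 4, 5, 4, 5, 4, 5]   # hypothetical expert ratings
weak = [2, 3, 2, 4, 3, 2, 3, 3]     # hypothetical expert ratings

print(screen_item(strong, 1))  # passes the Round 1 cutoffs
print(screen_item(strong, 2))  # CV is above the tighter Round 2 cutoff
print(screen_item(weak, 1))    # mean is below the Round 1 cutoff
```

The same ratings can pass Round 1 and fail Round 2 because the Round 2 CV cutoff is roughly half the Round 1 value, which is the intended tightening effect of the second round.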

| Goal Layer (A) | Criteria Layer (B) | Indicator Layer (C) |
|---|---|---|
| Design of an Intelligent UAV for VG Maintenance | Functional Requirements (B1) | Automatic Obstacle Avoidance (C1); Precision Spraying (C2); Plant Replacement (C3); Data Transmission (C4); Environmental Monitoring (C5) |
| | Performance Requirements (B2) | Flight Stability (C6); Endurance Time (C7); Payload Capacity (C8); Facade Adaptability (C9) |
| | Experience Requirements (B3) | Human–Machine Dimensional Compatibility (C10); Color Harmony (C11); User Interface (C12); Aesthetic Appearance (C13) |
| | Safety Requirements (B4) | Operational Safety (C14); Material Safety (C15); Environmental Safety (C16); Structural Safety (C17) |
| | Intelligent Decision-Making (B5) | Autonomous Decision-Making (C18); Predictive Maintenance (C19) |

| | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | C10 | C11 | C12 | C13 | … |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| C1 | 0.075 | 0.172 | 0.091 | 0.159 | 0.095 | 0.161 | 0.094 | 0.112 | 0.085 | 0.142 | 0.150 | 0.119 | 0.149 | … |
| C2 | 0.095 | 0.089 | 0.120 | 0.090 | 0.167 | 0.110 | 0.146 | 0.146 | 0.076 | 0.080 | 0.088 | 0.079 | 0.150 | … |
| C3 | 0.118 | 0.132 | 0.082 | 0.100 | 0.107 | 0.145 | 0.150 | 0.131 | 0.089 | 0.117 | 0.122 | 0.119 | 0.108 | … |
| C4 | 0.089 | 0.143 | 0.112 | 0.078 | 0.143 | 0.119 | 0.120 | 0.150 | 0.082 | 0.143 | 0.108 | 0.134 | 0.103 | … |
| C5 | 0.088 | 0.143 | 0.097 | 0.082 | 0.069 | 0.115 | 0.083 | 0.084 | 0.100 | 0.094 | 0.107 | 0.086 | 0.073 | … |
| C6 | 0.170 | 0.153 | 0.137 | 0.172 | 0.149 | 0.103 | 0.141 | 0.161 | 0.161 | 0.142 | 0.150 | 0.139 | 0.141 | … |
| C7 | 0.066 | 0.066 | 0.057 | 0.064 | 0.077 | 0.060 | 0.048 | 0.143 | 0.058 | 0.092 | 0.081 | 0.069 | 0.064 | … |
| C8 | 0.092 | 0.167 | 0.186 | 0.151 | 0.153 | 0.113 | 0.170 | 0.122 | 0.140 | 0.158 | 0.175 | 0.107 | 0.161 | … |
| C9 | 0.111 | 0.125 | 0.106 | 0.141 | 0.093 | 0.141 | 0.114 | 0.156 | 0.072 | 0.164 | 0.130 | 0.108 | 0.154 | … |
| C10 | 0.072 | 0.103 | 0.099 | 0.090 | 0.091 | 0.093 | 0.091 | 0.144 | 0.072 | 0.062 | 0.096 | 0.064 | 0.142 | … |
| C11 | 0.048 | 0.075 | 0.089 | 0.071 | 0.087 | 0.094 | 0.073 | 0.092 | 0.060 | 0.079 | 0.050 | 0.132 | 0.082 | … |
| C12 | 0.059 | 0.070 | 0.088 | 0.141 | 0.097 | 0.093 | 0.069 | 0.093 | 0.058 | 0.100 | 0.067 | 0.054 | 0.099 | … |
| C13 | 0.039 | 0.063 | 0.055 | 0.037 | 0.061 | 0.044 | 0.049 | 0.043 | 0.028 | 0.050 | 0.076 | 0.032 | 0.032 | … |
| … | … | … | … | … | … | … | … | … | … | … | … | … | … | … |

| Indicator | Received Influence (R) | Exerted Influence (D) | Prominence (C = D + R) | Relation (H = D − R) |
|---|---|---|---|---|
| C1 | 1.714 | 2.463 | 4.177 | 0.749 |
| C2 | 2.170 | 2.367 | 4.536 | 0.197 |
| C3 | 1.924 | 2.395 | 4.319 | 0.471 |
| C4 | 1.936 | 2.290 | 4.225 | 0.354 |
| C5 | 2.001 | 1.929 | 3.931 | −0.072 |
| C6 | 2.031 | 2.871 | 4.903 | 0.840 |
| C7 | 1.919 | 1.452 | 3.371 | −0.467 |
| C8 | 2.281 | 2.962 | 5.243 | 0.681 |
| C9 | 1.661 | 2.449 | 4.110 | 0.789 |
| C10 | 2.004 | 1.778 | 3.781 | −0.226 |
| C11 | 2.003 | 1.448 | 3.451 | −0.555 |
| C12 | 1.843 | 1.690 | 3.533 | −0.153 |
| C13 | 2.016 | 0.972 | 2.988 | −1.044 |
| C14 | 2.710 | 1.834 | 4.544 | −0.877 |
| C15 | 1.954 | 1.847 | 3.801 | −0.107 |
| C16 | 2.288 | 1.894 | 4.182 | −0.395 |
| C17 | 2.361 | 2.411 | 4.771 | 0.050 |
| C18 | 2.013 | 2.478 | 4.490 | 0.465 |
| C19 | 2.275 | 1.574 | 3.849 | −0.702 |
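
The last two columns of the table above follow from the first two: prominence is the sum of exerted and received influence, and relation is their difference. A minimal sketch for a handful of rows is given below. The function name `prominence_relation` and the cause/effect labeling by the sign of H are illustrative assumptions, and recomputed values can differ from the table by 0.001 because the table entries were rounded from unrounded sums.

```python
# (R, D) pairs copied from the table above for a subset of indicators.
influence = {
    "C1": (1.714, 2.463),
    "C6": (2.031, 2.871),
    "C8": (2.281, 2.962),
    "C13": (2.016, 0.972),
    "C14": (2.710, 1.834),
}


def prominence_relation(received, exerted):
    """Return (prominence, relation) = (D + R, D - R), rounded to 3 decimals."""
    return round(exerted + received, 3), round(exerted - received, 3)


summary = {}
for code, (received, exerted) in influence.items():
    prominence, relation = prominence_relation(received, exerted)
    # Assumed convention: positive relation marks a net "cause" factor.
    role = "cause" if relation > 0 else "effect"
    summary[code] = (prominence, relation, role)
    print(f"{code}: C={prominence:.3f}  H={relation:+.3f}  ({role})")
```

Under this convention C1, C6, and C8 come out as net cause factors, while C13 and C14 are net effect factors.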

| Row\Col | B1 | B2 | B3 | B4 | B5 |
|---|---|---|---|---|---|
| B1 | 0.432 | 0.391 | 0.260 | 0.450 | 0.502 |
| B2 | 0.201 | 0.129 | 0.446 | 0.214 | 0.194 |
| B3 | 0.053 | 0.068 | 0.078 | 0.047 | 0.085 |
| B4 | 0.220 | 0.243 | 0.141 | 0.112 | 0.154 |
| B5 | 0.094 | 0.169 | 0.075 | 0.177 | 0.066 |
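
Each column of the matrix above sums to approximately 1, so it can be treated as column-stochastic. As a rough sketch, and assuming this matrix plays the role of a cluster-level weighted matrix in the ANP step, repeated multiplication (power iteration) yields its limiting priority vector. The function name `limit_priorities`, the tolerance, and the uniform starting vector are illustrative choices, not the authors' stated procedure.

```python
# Entries copied from the matrix above (rows and columns ordered B1..B5).
W = [
    [0.432, 0.391, 0.260, 0.450, 0.502],
    [0.201, 0.129, 0.446, 0.214, 0.194],
    [0.053, 0.068, 0.078, 0.047, 0.085],
    [0.220, 0.243, 0.141, 0.112, 0.154],
    [0.094, 0.169, 0.075, 0.177, 0.066],
]
labels = ["B1", "B2", "B3", "B4", "B5"]
n = len(W)

# Re-normalize columns so rounding in the printed table cannot break stochasticity.
col_sums = [sum(W[i][j] for i in range(n)) for j in range(n)]
W = [[W[i][j] / col_sums[j] for j in range(n)] for i in range(n)]


def limit_priorities(matrix, tol=1e-10, max_iter=1000):
    """Power iteration: apply the matrix repeatedly until the vector stops changing."""
    size = len(matrix)
    w = [1.0 / size] * size
    for _ in range(max_iter):
        nxt = [sum(matrix[i][j] * w[j] for j in range(size)) for i in range(size)]
        if max(abs(a - b) for a, b in zip(nxt, w)) < tol:
            return nxt
        w = nxt
    return w


priorities = limit_priorities(W)
for label, value in zip(labels, priorities):
    print(f"{label}: {value:.3f}")
```

The resulting vector should land close to the primary-criterion weights reported in the weight table (about 0.425, 0.203, 0.060, 0.191, and 0.121 for B1 to B5), which makes this a quick consistency check between the two tables.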

| Primary Criterion | Weight | Secondary Criterion | Weight | Rank |
|---|---|---|---|---|
| Functional Requirements (B1) | 0.425 | C1—Automatic Obstacle Avoidance | 0.166 | 1 |
| | | C2—Precision Spraying | 0.085 | 3 |
| | | C3—Plant Replacement | 0.069 | 6 |
| | | C4—Data Transmission | 0.040 | 11 |
| | | C5—Environmental Monitoring | 0.066 | 7 |
| Performance Requirements (B2) | 0.203 | C6—Flight Stability | 0.092 | 2 |
| | | C7—Endurance Time | 0.035 | 12 |
| | | C8—Payload Capacity | 0.032 | 13 |
| | | C9—Facade Adaptability | 0.045 | 10 |
| User Experience Requirements (B3) | 0.060 | C10—Human–Machine Dimensional Compatibility | 0.029 | 15 |
| | | C11—Color Harmony | 0.011 | 18 |
| | | C12—User Interface | 0.009 | 19 |
| | | C13—Aesthetic Appearance | 0.012 | 17 |
| Safety Requirements (B4) | 0.191 | C14—Operational Safety | 0.059 | 8 |
| | | C15—Material Safety | 0.030 | 14 |
| | | C16—Environmental Safety | 0.021 | 16 |
| | | C17—Structural Safety | 0.081 | 4 |
| Intelligence Requirements (B5) | 0.121 | C18—Autonomous Decision-Making | 0.074 | 5 |
| | | C19—Predictive Maintenance | 0.047 | 9 |

| Code | Indicator | ANP Global Weight | DEMATEL C | DEMATEL H |
|---|---|---|---|---|
| C1 | Obstacle Avoidance | 0.166 | 4.177 | 0.749 |
| C6 | Flight Stability | 0.092 | 4.903 | 0.840 |
| C2 | Precision Spraying | 0.085 | 4.536 | 0.197 |
| C17 | Structural Safety | 0.081 | 4.771 | 0.050 |
| C18 | Autonomous Decision-Making | 0.074 | 4.490 | 0.465 |
| C3 | Plant Replacement | 0.069 | 4.319 | 0.471 |

| Conflict | Improve (Desired ↑/↓) | Worsen (Risk ↑/↓) | Recommended Inventive Principles |
|---|---|---|---|
| FC1 lightweighting vs. structural safety | P1 Weight of moving object ↓, P19 Energy consumption (moving) ↓, P39 Productivity ↑ | P14 Strength ↓, P13 Structural stability ↓, P31 Harmful factors generated by object ↑ | 1 Segmentation, 35 Parameter changes, 40 Composite materials |
| FC2 manipulator/payload disturbance vs. flight stability | P13 Structural stability ↑, P27 Reliability ↑, P33 Operability ↑ | P10 Force/torque disturbance ↑, P31 Harmful factors generated ↑ | 24 Intermediary (mediator), 10 Preliminary action, 15 Dynamics, 28 Mechanics substitution |
| FC3 autonomy complexity vs. real-time deadlines | P38 Automation level ↑, P28 Measurement accuracy ↑, P39 Productivity ↑ | P25 Time loss ↑, P37 Detection complexity ↑/P36 Equipment complexity ↑ | 1 Segmentation (multi-rate/layered), 21 Skipping (anytime), 10 Preliminary action |
| FC4 grasp stiffness vs. botanical compliance | P27 Reliability ↑, P33 Operability ↑ | P30 Harmful factors acting on object ↑, P10 Force ↑ | 30 Flexible shells and thin films, 5 Merging (sensor fusion) |

| Callout | Module/Subsystem | Primary Material(s) | Function/Notes |
|---|---|---|---|
| D1 | Obstacle-avoidance system | Optical glass; electronics | Near-field facade sensing (RGB/ToF/ultrasonic) |
| D2 | Flight control | FR-4 PCB; Al heat spreader; connectors | Autopilot; power conditioning |
| D3 | Motor (BLDC) | Cu windings; steel shaft | Propulsion; sized to mission load |
| D4 | Antenna | Cu trace; ABS radome | RF link; keep clearance from CFRP to avoid detuning |
| D5 | Binocular depth camera | ABS shell; optical glass; Al mount | Depth/pose for landing, alignment, QC logging |
| D6 | Propeller blades | CFRP blades; SS hub fixings | High-stiffness, low-inertia rotors |
| D7 | Gimbal | Al 6061-T6 links; PA12 covers; SS fasteners | Pose stabilization for end-effector/sensors |
| D8 | Plant-handling gripper | PA12 housings; silicone pads (Shore A ≈ 10–25) | Gentle grasp–reseat; loads act on rigid pot rim |
| D9 | Carbon-fiber arm | CFRP tube; metal inserts | Lightweight, high-specific-stiffness arms; root reinforcement |
| D10 | Fuselage cover | CFRP laminate; optional ABS trims | Aerodynamic/protective cover |
| D11 | Battery module | PC/ABS shell; Cu busbars | Primary power supply; quick-release hot-swap |

| Dimension (B) | S1: VG-UAV | S2: Rope-Driven | S3: Climbing |
|---|---|---|---|
| Functionality (B1) | 4.53 | 3.98 | 4.08 |
| Performance (B2) | 4.49 | 4.40 | 4.10 |
| User Experience (B3) | 4.31 | 4.04 | 4.11 |
| Safety (B4) | 4.49 | 4.49 | 4.59 |
| Intelligence (B5) | 4.46 | 4.14 | 4.04 |
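
One way to read the comparison above in a single number is to weight each dimension score by the corresponding primary-criterion weight from the ANP results. The sketch below does this. The weighted-sum aggregation and the function name `composite_score` are assumptions made for illustration only; the source does not state that the schemes were aggregated this way.

```python
# Primary-criterion weights (B1..B5) copied from the ANP weight table.
weights = {"B1": 0.425, "B2": 0.203, "B3": 0.060, "B4": 0.191, "B5": 0.121}

# Dimension scores copied from the comparison table above.
scores = {
    "S1: VG-UAV": {"B1": 4.53, "B2": 4.49, "B3": 4.31, "B4": 4.49, "B5": 4.46},
    "S2: Rope-Driven": {"B1": 3.98, "B2": 4.40, "B3": 4.04, "B4": 4.49, "B5": 4.14},
    "S3: Climbing": {"B1": 4.08, "B2": 4.10, "B3": 4.11, "B4": 4.59, "B5": 4.04},
}


def composite_score(dimension_scores, dimension_weights):
    """Weighted sum of dimension scores (assumed aggregation rule)."""
    return sum(dimension_weights[k] * dimension_scores[k] for k in dimension_weights)


composites = {name: composite_score(s, weights) for name, s in scores.items()}
for name, value in sorted(composites.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name}: {value:.3f}")
```

Under this assumed rule S1 ranks first, mainly because functionality (B1) carries the largest weight and is where S1 leads by the widest margin, while S3 keeps a small edge on safety (B4).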

| Symbol | Name | Acceptance Criterion/Band | Source |
|---|---|---|---|
| μ | Thrust margin | μ ≥ 0.80 at MTOW (equivalently T/W ≥ 1.8) | eCalc suggests a lower-limit thrust-to-weight ratio of ≈1.8 for multirotors; we map this to μ ≥ 0.80 for our envelope (software guideline, not a formal standard). |
| θ | Crosswind tilt | (good); (acceptable) | Hattenberger et al. [28], “Evaluation of drag coefficient for a quadrotor model”: linear bank-angle-speed relation up to ≈9 m·s−1; citing prior work, transition near ≈6° to quadratic drag (≈8–10 m·s−1). Bands above adopted as engineering guidelines. |
| Δφ | Roll angle deviation | Good: Δφ ≤ 2.5°; Acceptable: Δφ ≤ 3°. | Weber, C. et al. [29] main-flight RMSEs ≈ 1.4–2.5° (roll/pitch), turbulent cases up to 5.1°/7.8°. Bands derived as empirical, non-normative limits. |
| CV | Spray CV | CV ≤ 35% | GB/T 43071—2023 [30] (national standard; acceptance threshold). |
| | Sensing → actuation latency | Good ≤ 100 ms; Acceptable ≤ 200 ms (onboard loop). | Falanga, D. et al. [31] event-camera pipeline achieves ≈3.5 ms perception-to-first-command; reliably avoids at . Thresholds here are conservative engineering bounds; supervision-link latency evaluated separately. |
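
The table states that μ ≥ 0.80 is equivalent to T/W ≥ 1.8, which is consistent with defining the thrust margin as μ = T/W − 1. The sketch below encodes that relation and a simple good/acceptable banding helper. The function names, the 1800 N maximum thrust, and the "measured" roll, latency, and spray values are hypothetical inputs chosen only to exercise the bands.

```python
G = 9.81  # m/s^2


def thrust_margin(max_thrust_n, mass_kg):
    """mu = T/W - 1, so T/W >= 1.8 corresponds to mu >= 0.80."""
    return max_thrust_n / (mass_kg * G) - 1.0


def band(value, good_max, acceptable_max):
    """Map a measured value onto the good / acceptable bands of the table."""
    if value <= good_max:
        return "good"
    if value <= acceptable_max:
        return "acceptable"
    return "out of band"


# Hypothetical inputs: 1800 N total maximum thrust at the 95 kg MTOW point.
mu = thrust_margin(1800.0, 95.0)
report = {
    "thrust margin": "pass" if mu >= 0.80 else "fail",
    "roll deviation": band(2.0, 2.5, 3.0),                # degrees, hypothetical
    "latency": band(120.0, 100.0, 200.0),                 # ms, hypothetical
    "spray CV": "pass" if 30.0 <= 35.0 else "fail",       # percent, hypothetical
}

print(f"mu = {mu:.3f}")
for name, result in report.items():
    print(f"{name}: {result}")
```

The crosswind-tilt bands are left out because their numeric limits are not recoverable from the table as printed.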

| Symbol | Unit | Name | Value/Range (Baseline) | Source/Justification (Type) |
|---|---|---|---|---|
| n | - | Rotor count | 6 | Design setting (within 6–8 for heavy-lift peers) |
| D | m | Single-rotor diameter | 1.375 (=54″) | Peer product spec (manufacturer) |
| | m² | Total rotor disk area | | Actuator-disk (momentum) model |
| m | kg | Mass (two points) | 65 (dual-battery empty), 95 (MTOW) | Peer product spec (manufacturer) |
| | kg/m³ | Air density | 1.225 | ISA standard atmosphere |
| | | Air dynamic viscosity | | ISA at 20 °C |
| | m/s² | Gravity | 9.81 | Standard constant |
| | - | Propulsive/rotor efficiency | 0.60–0.70 (baseline 0.65) | Rotor hover FoM (textbook/industry survey) |
| | V | Battery nominal voltage | 52.22 | DB2000 datasheet (manufacturer) |
| | Ah | Battery capacity (dual) | 76 (=2 × 38) | DB2000 datasheet |
| DoD | - | Depth of discharge | 0.80–0.90 (baseline 0.85) | Mission-window practice (battery life trade-off) |
| | - | Battery-powertrain efficiency | 0.90–0.95 (baseline 0.92) | ESC/power distribution white papers (engineering range) |
| | W | Auxiliary power | 60 (vision/link/nav) | System budget consistent with peers |
| | W | Payload power | Spraying: ≈250 (dual pumps at mid-flow); Winch: ≈300 (30 kg, 0.8 m/s) | Peer specs + P = ΔpQ/η (pump) and P = mgv/η (winch) |
| U | m/s | Crosswind speed | 5 and 10 (evaluation points) | Operating subset of 12 m/s wind tolerance |
| | - | Fuselage drag coefficient | 1.2 (bluff-body baseline) | Multirotor wind-tunnel/CFD ranges |
| | m² | Reference frontal area | 1.0 (effective projection when deployed) | Estimated from outer dimensions (standard practice) |
| | kg | Potted-plant mass | 0.5149 | Experimental input (measured) |
| | m | CoG offset due to grasp | 0.02 (upper bound) | End-effector geometry constraint (engineering) |
| | N·m/rad | Roll equivalent stiffness | 2.86 (from 0.05 N·m/deg) | Control stiffness estimate (entry-level identification) |
| d | μm | Representative droplet size (VMD) | 150 and 300 (two points) | Peer spraying system range; ASABE S572.1 terms |
| α | | Drift empirical factor | 0.03 (conservative lower end) | Literature range pick for envelope use |
| h | m | Nozzle-to-canopy height | 2.0 (with sensitivity: 1.5/3.0) | Common operating height in field practice |
| | ms | Latency components | 40/15/80/20 | Peer link and onboard inference scales |
| | - | Near-wall constraints | | Operational rule consistent with peer “safe distance” |
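
To show how the baseline parameters above combine, the following sketch runs a first-order envelope estimate: total disk area, hover power from the actuator-disk (momentum) model, hover endurance, steady crosswind tilt, and the static roll offset caused by an off-center potted plant. All constant and function names are illustrative. The way the two efficiencies are chained, the flat-plate drag balance, and the static torque/stiffness relation are simplifying assumptions and may differ from the authors' actual calculation.

```python
import math

# Baseline values copied from the parameter table above.
N_ROTORS = 6
ROTOR_DIAMETER_M = 1.375
RHO = 1.225                        # air density, kg/m^3
G = 9.81                           # gravity, m/s^2
ETA_ROTOR = 0.65                   # rotor hover efficiency (baseline)
ETA_POWERTRAIN = 0.92              # battery-powertrain efficiency (baseline)
BATTERY_V = 52.22
BATTERY_AH = 76.0
DOD = 0.85
P_AUX_W = 60.0
CD = 1.2
FRONTAL_AREA_M2 = 1.0
PLANT_MASS_KG = 0.5149
COG_OFFSET_M = 0.02
ROLL_STIFFNESS_NM_PER_DEG = 0.05

# Total disk area of n rotors with diameter D.
DISK_AREA_M2 = N_ROTORS * math.pi * ROTOR_DIAMETER_M ** 2 / 4.0


def hover_electrical_power_w(mass_kg, payload_power_w=0.0):
    """Ideal momentum-theory hover power, scaled by both efficiencies (assumed chain)."""
    thrust_n = mass_kg * G
    ideal_w = thrust_n ** 1.5 / math.sqrt(2.0 * RHO * DISK_AREA_M2)
    return ideal_w / (ETA_ROTOR * ETA_POWERTRAIN) + P_AUX_W + payload_power_w


def hover_endurance_min(mass_kg, payload_power_w=0.0):
    """Usable battery energy divided by hover power, in minutes."""
    usable_wh = BATTERY_V * BATTERY_AH * DOD
    return 60.0 * usable_wh / hover_electrical_power_w(mass_kg, payload_power_w)


def crosswind_tilt_deg(mass_kg, wind_speed_ms):
    """Tilt at which the horizontal thrust component balances bluff-body drag."""
    drag_n = 0.5 * RHO * CD * FRONTAL_AREA_M2 * wind_speed_ms ** 2
    return math.degrees(math.atan(drag_n / (mass_kg * G)))


def grasp_roll_offset_deg():
    """Static roll offset: plant weight times CoG offset over roll stiffness."""
    return PLANT_MASS_KG * G * COG_OFFSET_M / ROLL_STIFFNESS_NM_PER_DEG


print(f"disk area: {DISK_AREA_M2:.2f} m^2")
for mass in (65.0, 95.0):
    print(f"{mass:.0f} kg: {hover_electrical_power_w(mass) / 1000:.2f} kW, "
          f"{hover_endurance_min(mass):.1f} min hover")
for wind in (5.0, 10.0):
    print(f"tilt at {wind:.0f} m/s (95 kg): {crosswind_tilt_deg(95.0, wind):.2f} deg")
print(f"roll offset from grasp: {grasp_roll_offset_deg():.2f} deg")
```

With these inputs the grasp-induced roll offset comes out at roughly 2°, which sits inside the "good" roll-deviation band (Δφ ≤ 2.5°) of the acceptance table. Payload power for spraying or winching can be passed through `payload_power_w` to see its effect on endurance.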

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ying, F.; Zhai, B.; Zhao, X. Design of a Multi-Method Integrated Intelligent UAV System for Vertical Greening Maintenance. Appl. Sci. 2025, 15, 10887. https://doi.org/10.3390/app152010887
