Residual Life Prediction of SA-CNN-BILSTM Aero-Engine Based on a Multichannel Hybrid Network

Abstract

1. Introduction

2. Basic Principles

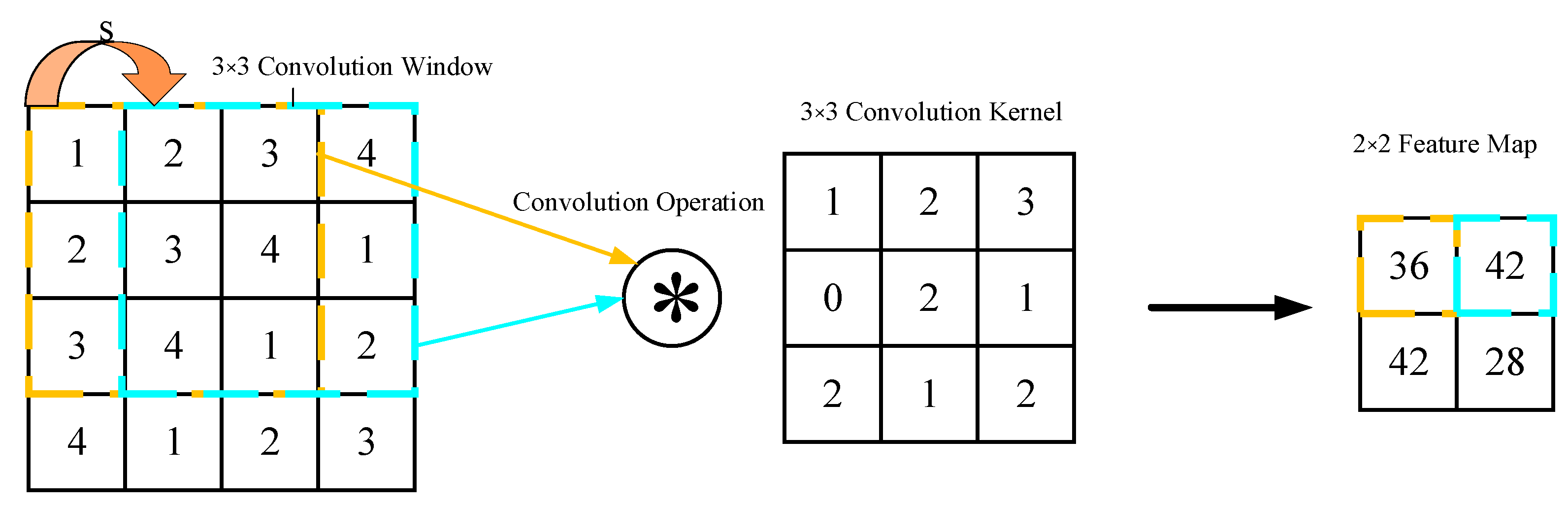

2.1. Convolutional Neural Network

2.1.1. Convolutional Layer
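
Assuming the convolution runs along the time axis (1-D), a kernel of width K spans K consecutive cycles across all sensor channels and slides one step at a time. The following is a minimal NumPy sketch that assumes stride 1, no padding, and input windows shaped (time steps, features). The helper `conv1d_valid` is hypothetical, and the sizes are illustrative rather than the paper's configuration.

```python
import numpy as np

def conv1d_valid(x, w, b):
    """Valid 1-D convolution (cross-correlation, as in deep learning frameworks).

    x: input window, shape (T, C_in), time steps by sensor channels
    w: kernels, shape (K, C_in, F), kernel width by input channels by filters
    b: biases, shape (F,)
    returns: feature map, shape (T - K + 1, F)
    """
    T, _ = x.shape
    K, _, F = w.shape
    out = np.empty((T - K + 1, F))
    for t in range(T - K + 1):
        # Contract the K x C_in patch against every filter at once.
        out[t] = np.tensordot(x[t:t + K], w, axes=([0, 1], [0, 1])) + b
    return out

# Illustrative sizes (assumed): 30 time steps, 14 channels, 64 filters of width 2.
rng = np.random.default_rng(0)
x = rng.standard_normal((30, 14))
w = rng.standard_normal((2, 14, 64))
b = np.zeros(64)
y = conv1d_valid(x, w, b)
print(y.shape)  # (29, 64)
```

Every output step mixes all sensor channels inside its K-cycle span. Stacking several such layers widens the temporal receptive field.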

2.1.2. Activation Layer
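
An activation layer applies an element-wise nonlinearity to the convolution output. This excerpt does not state which activation the model uses, so the rectified linear unit (ReLU) is shown here as a common choice. Treat it as an assumption.

```python
import numpy as np

def relu(z):
    """Rectified linear unit: element-wise max(0, z)."""
    return np.maximum(0.0, z)

def relu_grad(z):
    """Subgradient used in backpropagation: 1 where z > 0, else 0."""
    return (z > 0).astype(z.dtype)

z = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])
print(relu(z).tolist())       # [0.0, 0.0, 0.0, 1.5, 3.0]
print(relu_grad(z).tolist())  # [0.0, 0.0, 0.0, 1.0, 1.0]
```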

2.1.3. Pooling Layer
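
Pooling downsamples each feature map along time and keeps the strongest local response. The sketch below assumes max pooling with a window of 2 and a stride of 2, which is a common default and not a setting confirmed for this model. Trailing steps that do not fill a complete window are dropped.

```python
import numpy as np

def max_pool1d(x, pool=2, stride=2):
    """Max pooling along the time axis.

    x: feature map, shape (T, F); returns shape ((T - pool) // stride + 1, F).
    """
    T, F = x.shape
    n_out = (T - pool) // stride + 1
    out = np.empty((n_out, F), dtype=x.dtype)
    for i in range(n_out):
        start = i * stride
        out[i] = x[start:start + pool].max(axis=0)  # strongest response per filter
    return out

x = np.array([[1.0, 5.0],
              [3.0, 2.0],
              [0.0, 4.0],
              [7.0, 1.0],
              [2.0, 9.0]])
print(max_pool1d(x).tolist())  # [[3.0, 5.0], [7.0, 4.0]]  (last row dropped)
```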

2.2. Bidirectional Long Short-Term Memory

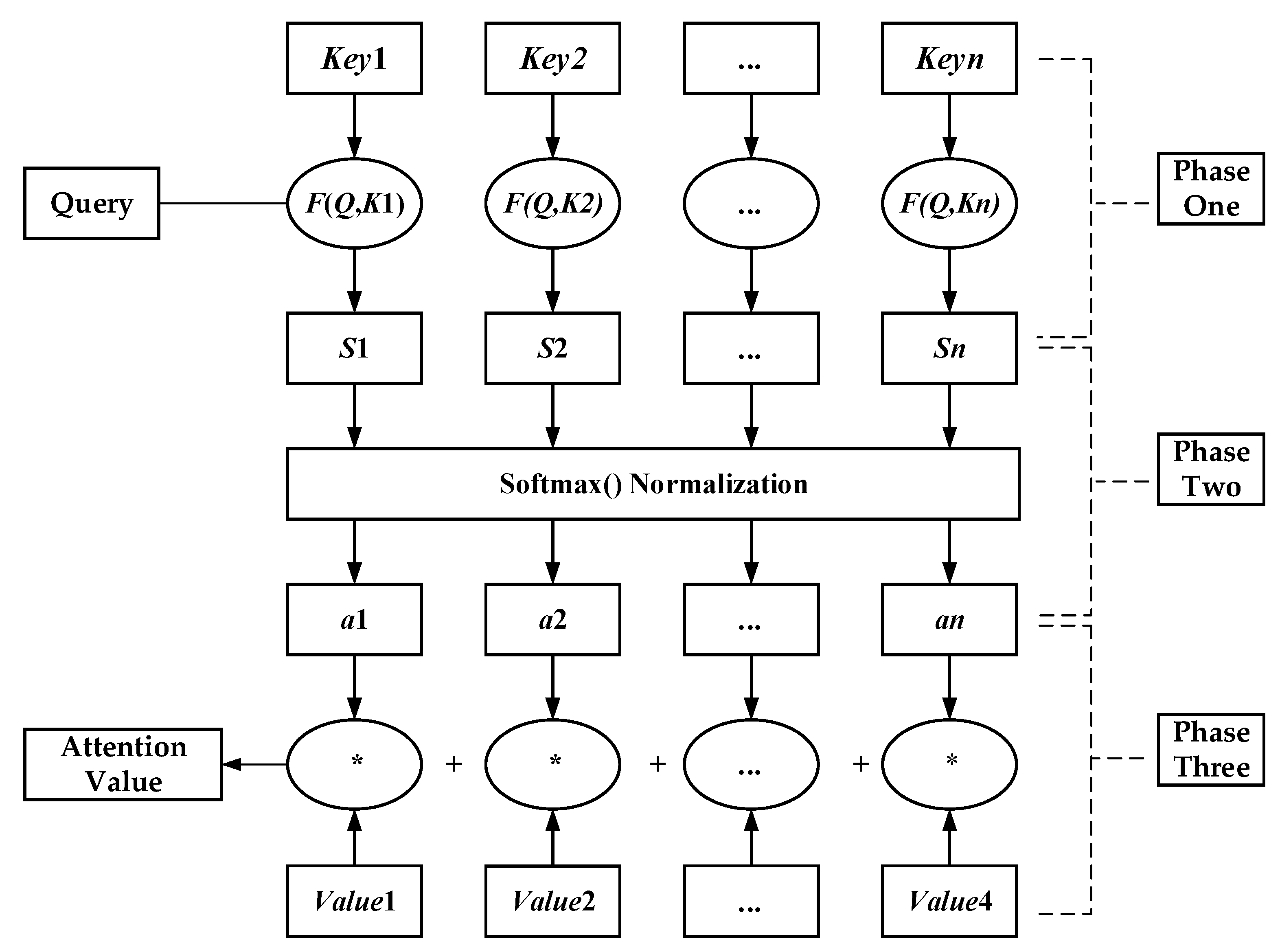
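
A bidirectional LSTM runs one LSTM over the window in forward time order and a second LSTM over the reversed window. It then concatenates the two hidden-state sequences, so each time step carries context from both earlier and later cycles in the window. The NumPy sketch below makes several assumptions:

- a single layer with zero initial states,
- the common input/forget/candidate/output gate layout,
- concatenation as the merge mode.

The hidden size and weight scale are illustrative and are not the paper's settings.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_forward(x, W, U, b):
    """Run a single-layer LSTM over x.

    x: sequence, shape (T, D); W: (D, 4H); U: (H, 4H); b: (4H,)
    Gates are stacked in the (assumed) order input, forget, candidate, output.
    Returns hidden states, shape (T, H).
    """
    T = x.shape[0]
    H = U.shape[0]
    h = np.zeros(H)
    c = np.zeros(H)
    hs = np.empty((T, H))
    for t in range(T):
        z = x[t] @ W + h @ U + b
        i = sigmoid(z[:H])            # input gate
        f = sigmoid(z[H:2 * H])       # forget gate
        g = np.tanh(z[2 * H:3 * H])   # candidate cell state
        o = sigmoid(z[3 * H:])        # output gate
        c = f * c + i * g             # update cell memory
        h = o * np.tanh(c)            # expose gated memory as hidden state
        hs[t] = h
    return hs

def bilstm_forward(x, fwd_params, bwd_params):
    """Concatenate a forward pass with a pass over the time-reversed sequence."""
    h_fwd = lstm_forward(x, *fwd_params)
    h_bwd = lstm_forward(x[::-1], *bwd_params)[::-1]  # re-align to original time order
    return np.concatenate([h_fwd, h_bwd], axis=1)

def init_params(rng, D, H):
    """Small random weights (illustrative initialisation)."""
    return (0.1 * rng.standard_normal((D, 4 * H)),
            0.1 * rng.standard_normal((H, 4 * H)),
            np.zeros(4 * H))

rng = np.random.default_rng(0)
x = rng.standard_normal((30, 14))  # illustrative window: 30 steps x 14 features
out = bilstm_forward(x, init_params(rng, 14, 32), init_params(rng, 14, 32))
print(out.shape)  # (30, 64)
```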

2.3. Attention Mechanism

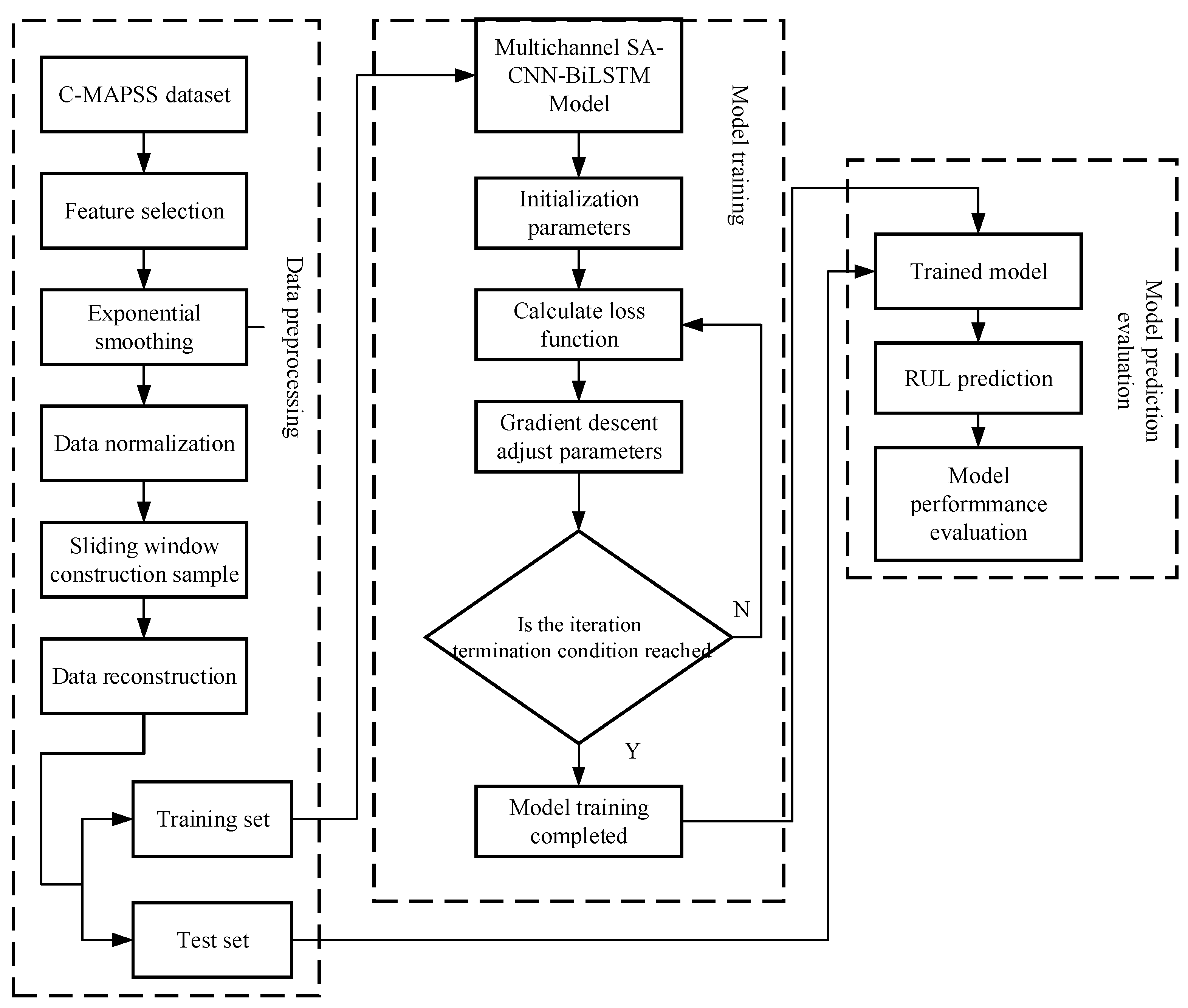
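
The name suggests that "SA" stands for self-attention. If so, a generic form is single-head scaled dot-product attention over time steps: each step scores its similarity to every other step, and those scores weight a sum of value vectors. The following is only a sketch. The single head, the projection sizes, and the layer's position in the network are assumptions, not details given in this excerpt.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(h, Wq, Wk, Wv):
    """Single-head scaled dot-product self-attention over time steps.

    h: sequence features, shape (T, D); Wq, Wk: (D, d_k); Wv: (D, d_v)
    Returns (context, weights) with shapes (T, d_v) and (T, T).
    """
    q, k, v = h @ Wq, h @ Wk, h @ Wv
    scores = q @ k.T / np.sqrt(k.shape[1])  # similarity of every step to every other step
    weights = softmax(scores, axis=-1)      # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(1)
h = rng.standard_normal((30, 64))  # e.g. BiLSTM outputs: 30 steps x 64 features (illustrative)
Wq, Wk, Wv = (0.1 * rng.standard_normal((64, 16)) for _ in range(3))
context, weights = self_attention(h, Wq, Wk, Wv)
print(context.shape, weights.shape)  # (30, 16) (30, 30)
```

You can inspect the rows of `weights` to see which cycles in the window the model relies on most.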

3. Multichannel SA-CNN-BiLSTM Network Model

3.1. Training and Prediction Process of the Remaining Life Prediction Model

3.2. Data Preprocessing

3.2.1. Dataset Description

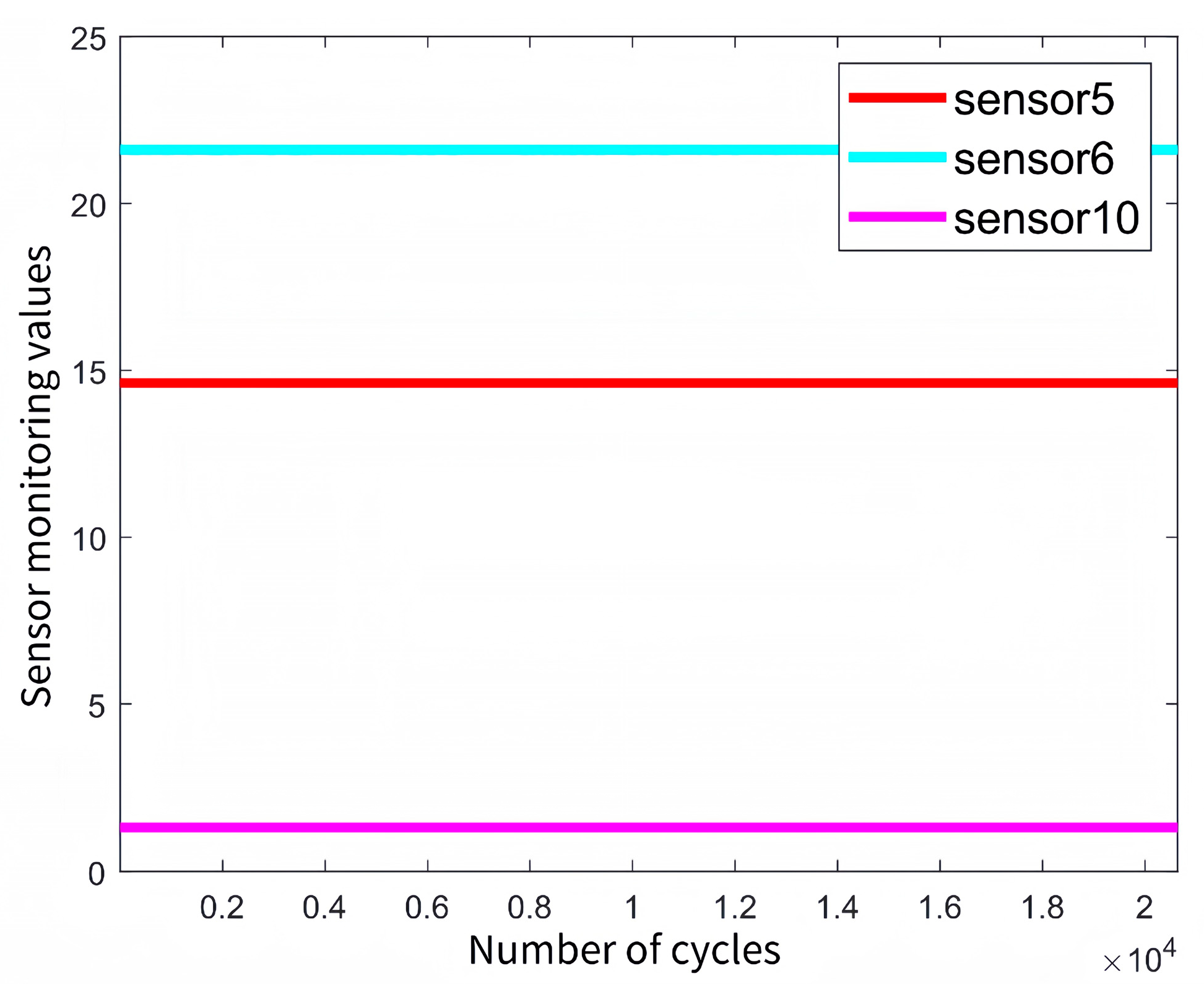

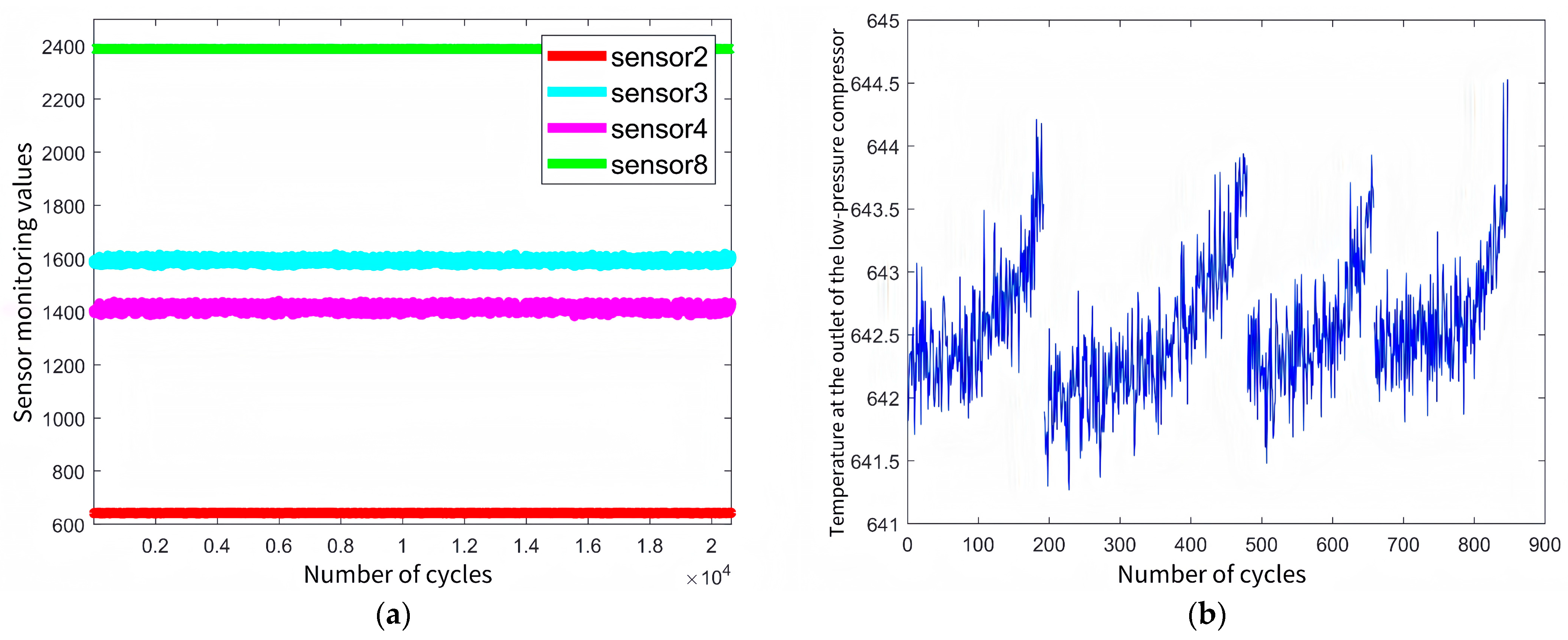

3.2.2. Feature Screening
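
One common screening rule for C-MAPSS-style data drops sensors that stay (nearly) constant over the training set, because they carry no degradation trend. The sketch assumes a standard-deviation threshold. The threshold value, the helper `screen_features`, and the toy data are hypothetical, and the paper may use other criteria (for example trend or correlation with RUL) that are not shown here.

```python
import numpy as np

def screen_features(X, names, min_std=1e-3):
    """Keep only columns whose standard deviation exceeds min_std (assumed threshold).

    X: training matrix, shape (N, P); names: list of P column names.
    Returns the filtered matrix and the list of kept names.
    """
    std = X.std(axis=0)
    keep = std > min_std  # near-constant signals carry no degradation trend
    return X[:, keep], [n for n, k in zip(names, keep) if k]

# Toy data: only the middle column varies.
X = np.array([[1.0, 10.0, 5.0],
              [1.0, 12.0, 5.0],
              [1.0, 15.0, 5.0]])
names = ["sensor_a", "sensor_b", "sensor_c"]  # hypothetical sensor names
X_kept, kept = screen_features(X, names)
print(kept)  # ['sensor_b']
```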

3.2.3. Data Normalization

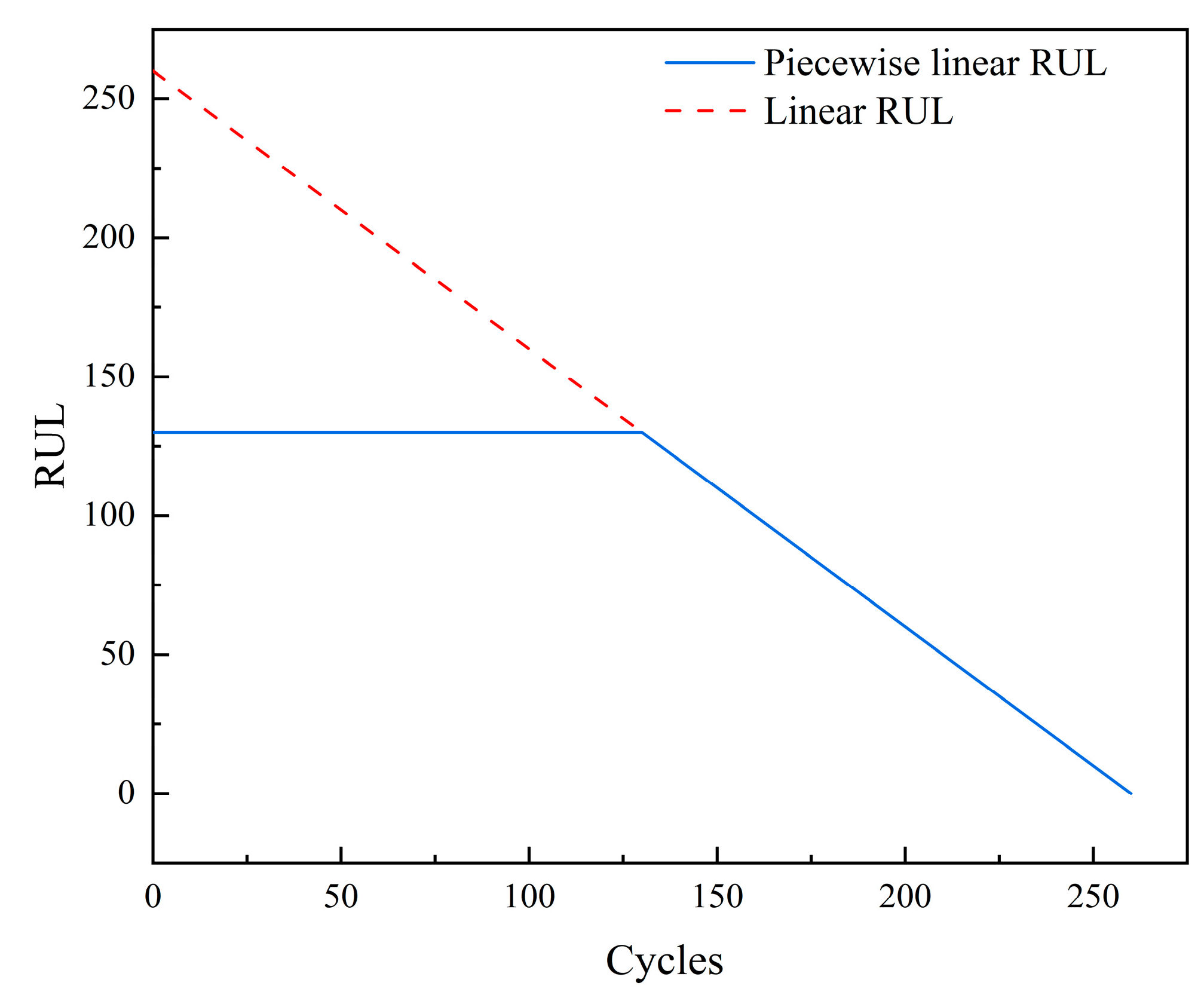
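
Assuming min-max scaling, each feature is mapped by x' = (x − x_min)/(x_max − x_min). The statistics x_min and x_max are computed on the training set only and then reused for the test data, so no test information leaks into training. As a consequence, scaled test values can fall slightly outside [0, 1]. The helper names below are hypothetical.

```python
import numpy as np

def fit_minmax(X_train):
    """Column-wise min and max computed on the training set only."""
    return X_train.min(axis=0), X_train.max(axis=0)

def apply_minmax(X, x_min, x_max, eps=1e-12):
    """Scale each column with training statistics; eps guards against a zero range."""
    return (X - x_min) / np.maximum(x_max - x_min, eps)

X_train = np.array([[2.0, 100.0],
                    [4.0, 300.0],
                    [6.0, 200.0]])
X_test = np.array([[5.0, 250.0]])
lo, hi = fit_minmax(X_train)
print(apply_minmax(X_train, lo, hi).tolist())  # [[0.0, 0.0], [0.5, 1.0], [1.0, 0.5]]
print(apply_minmax(X_test, lo, hi).tolist())   # [[0.75, 0.75]]
```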

3.2.4. Segmented RUL Labeling

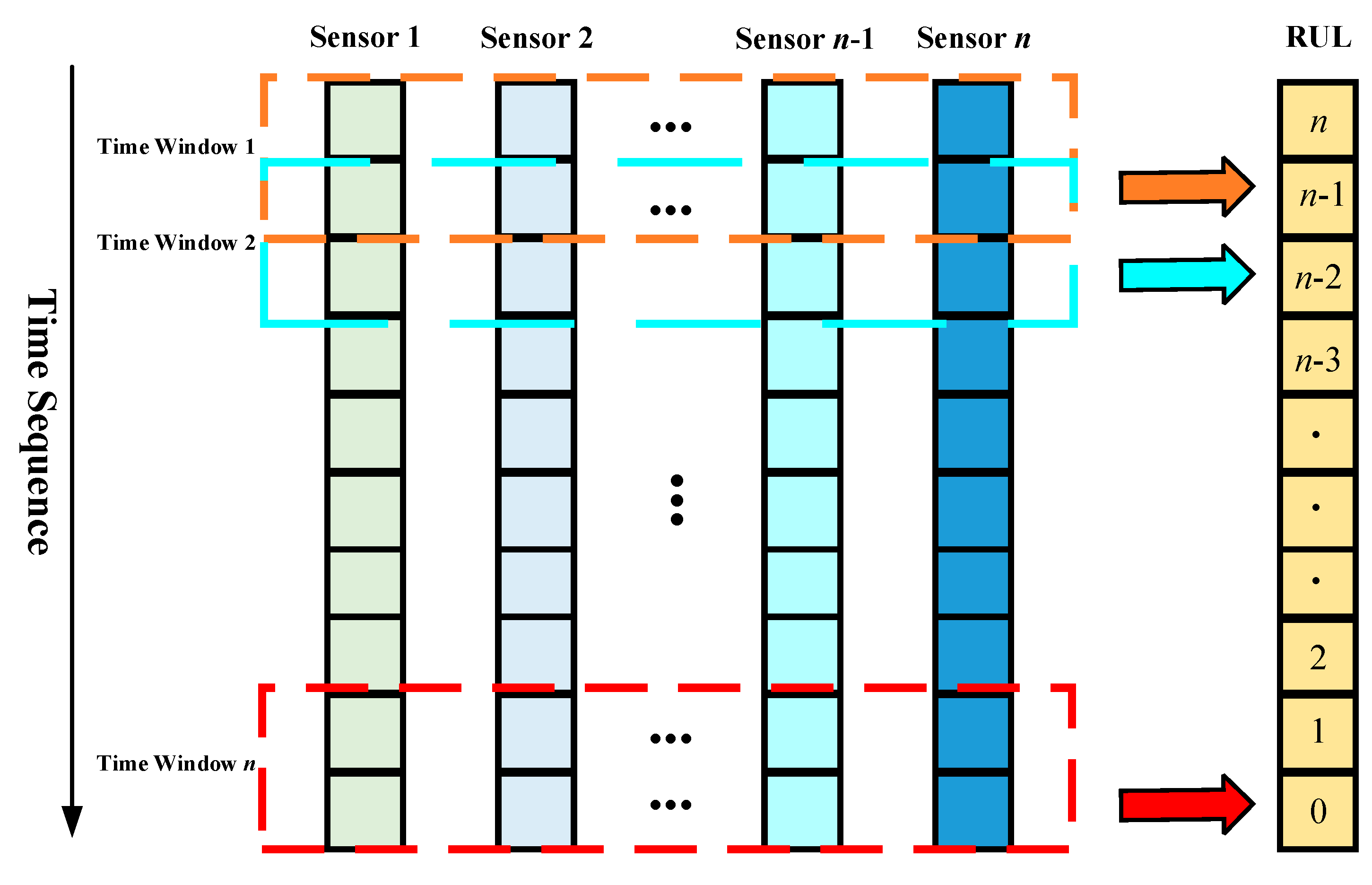
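
Segmented labeling replaces the linearly decreasing RUL with a constant ceiling early in life, on the reasoning that a healthy engine shows no measurable degradation at first. The sketch below assumes a ceiling of 125 cycles. That value is often used with C-MAPSS, but this excerpt does not confirm it. The sketch also labels the final recorded cycle with RUL 0.

```python
import numpy as np

def piecewise_rul(n_cycles, max_rul=125):
    """Segmented (piecewise-linear) RUL labels for one run-to-failure trajectory.

    The linear RUL at cycle t (1-based) is n_cycles - t; early in life the label
    is clipped at max_rul (an assumed ceiling, not confirmed by the source).
    """
    linear = np.arange(n_cycles - 1, -1, -1)  # n_cycles-1, ..., 1, 0
    return np.minimum(linear, max_rul)

labels = piecewise_rul(200)
print(labels[:3].tolist(), labels[-3:].tolist())  # [125, 125, 125] [2, 1, 0]
print(int((labels == 125).sum()))                 # 75
```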

3.2.5. Sliding Time Window
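
Each training sample is a block of Lws consecutive cycles, and Section 4.3 varies Lws. The sketch assumes a stride of 1 and labels each window with the RUL of its last cycle. This excerpt does not state how the paper handles engines shorter than Lws, so the hypothetical helper `sliding_windows` simply returns no windows for them.

```python
import numpy as np

def sliding_windows(X, y, window):
    """Cut one trajectory into overlapping windows of length `window` (stride 1).

    X: features, shape (T, P); y: per-cycle RUL labels, shape (T,).
    Each window is labelled with the RUL of its last cycle.
    Returns arrays of shape (T - window + 1, window, P) and (T - window + 1,).
    """
    T = X.shape[0]
    n = T - window + 1
    if n <= 0:  # trajectory shorter than the window: no samples
        return np.empty((0, window, X.shape[1])), np.empty((0,))
    idx = np.arange(window)[None, :] + np.arange(n)[:, None]  # (n, window) row indices
    return X[idx], y[window - 1:]

X = np.arange(12, dtype=float).reshape(6, 2)  # 6 cycles, 2 features
y = np.array([5, 4, 3, 2, 1, 0])
Xw, yw = sliding_windows(X, y, window=4)
print(Xw.shape, yw.tolist())  # (3, 4, 2) [2, 1, 0]
```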

4. Experimental Validation

4.1. Evaluation Criteria

4.1.1. MAE
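
The original equation is not preserved in this copy. The standard definition, with $\hat{y}_i$ and $y_i$ the predicted and true RUL of the $i$-th of $N$ test engines, is:

$$
\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N}\left|\hat{y}_i - y_i\right|
$$

For example, errors $\hat{y}_i - y_i$ of −3, 5 and 2 cycles give $\mathrm{MAE} = (3 + 5 + 2)/3 \approx 3.33$ cycles.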

4.1.2. RMSE
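
The standard root-mean-square error is shown below, with $\hat{y}_i$ and $y_i$ the predicted and true RUL of the $i$-th of $N$ test engines (the original equation is not preserved in this copy):

$$
\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{y}_i - y_i\right)^2}
$$

Squaring weights large errors more heavily, so $\mathrm{RMSE} \ge \mathrm{MAE}$ always holds. For errors of −3, 5 and 2 cycles, $\mathrm{RMSE} = \sqrt{38/3} \approx 3.56$ cycles.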

4.1.3. Score Evaluation Function
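
On C-MAPSS, the score function is usually the asymmetric exponential penalty from the PHM08 data challenge, and this sketch assumes the paper uses the same constants. Let d_i = ŷ_i − y_i. Each engine's error is penalized as follows, and the penalties are summed over engines:

- early predictions (d_i < 0) cost exp(−d_i/13) − 1;
- late predictions (d_i ≥ 0) cost exp(d_i/10) − 1.

```python
import numpy as np

def phm_score(y_true, y_pred):
    """Asymmetric score, summed over engines (constants 13 and 10 assumed).

    d = predicted RUL - true RUL. Late predictions (d >= 0) cost exp(d / 10) - 1,
    early predictions (d < 0) cost exp(-d / 13) - 1, so an overestimate is
    penalised more than an underestimate of the same size.
    """
    d = np.asarray(y_pred, dtype=float) - np.asarray(y_true, dtype=float)
    penalties = np.where(d < 0, np.exp(-d / 13.0) - 1.0, np.exp(d / 10.0) - 1.0)
    return float(penalties.sum())

print(round(phm_score([50], [40]), 4))  # early by 10 cycles: 1.1581
print(round(phm_score([50], [60]), 4))  # late by 10 cycles: 1.7183
```

The asymmetry reflects maintenance practice: predicting failure too late is more dangerous than replacing a part early. Because the exponential grows quickly, a few large late errors can dominate the total.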

4.2. Experimental Conditions

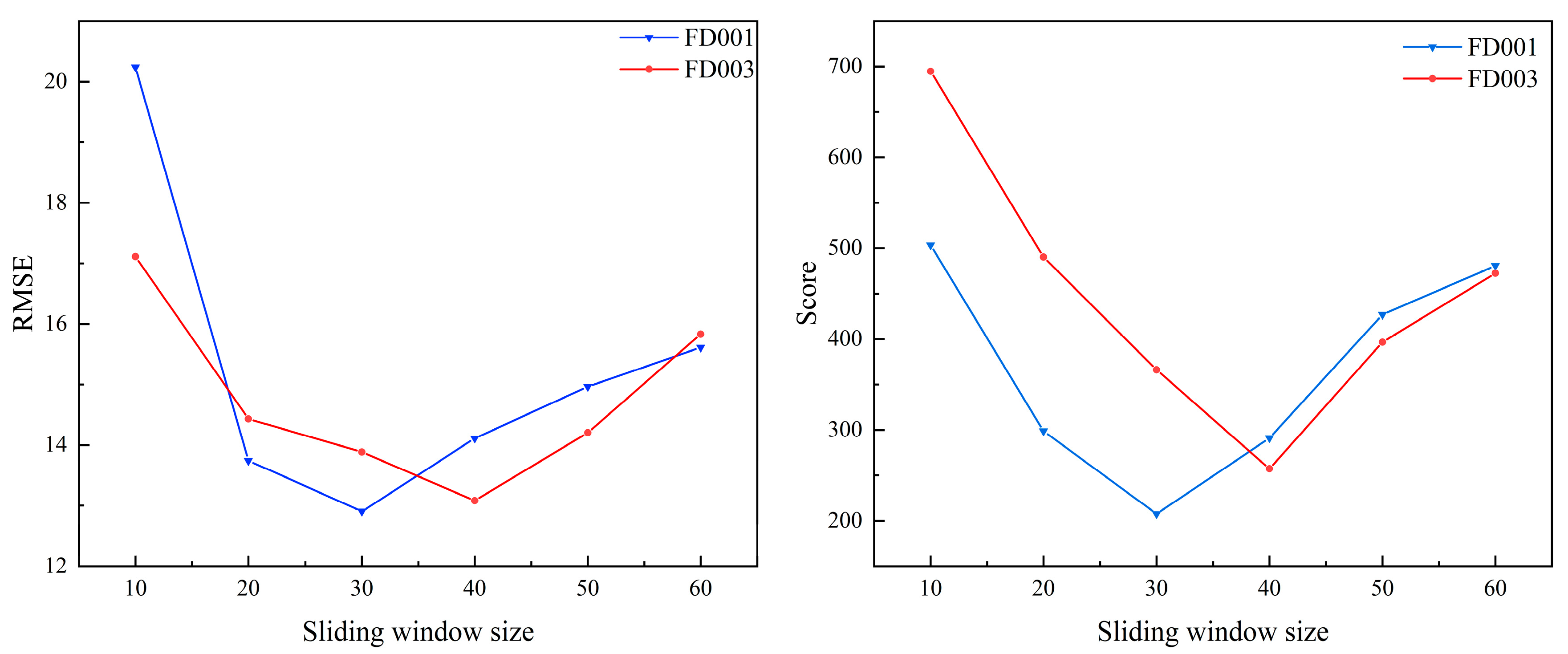

4.3. Analysis of Sliding Window Size Results

4.4. Analysis of Model Hyperparameter Results

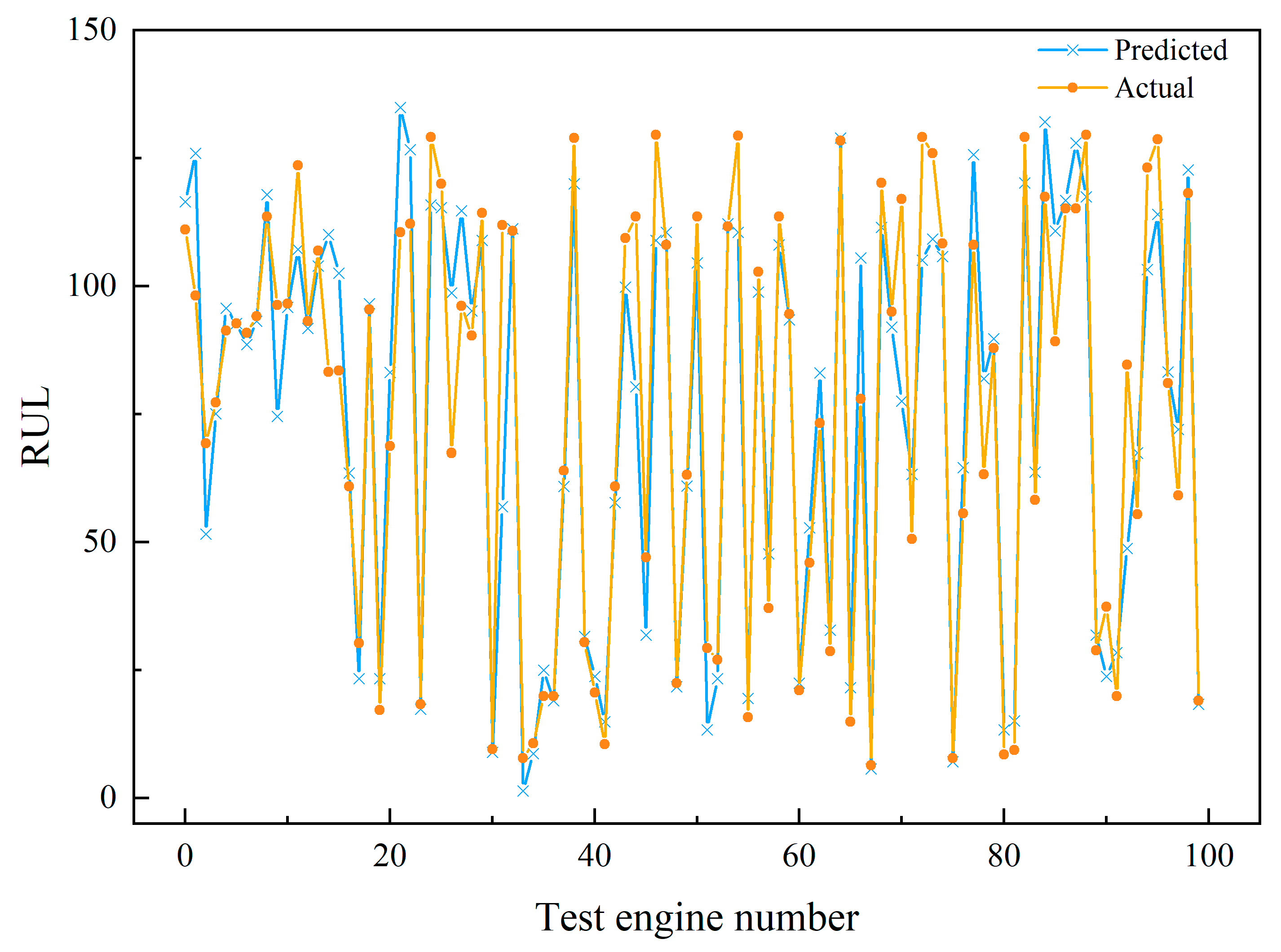

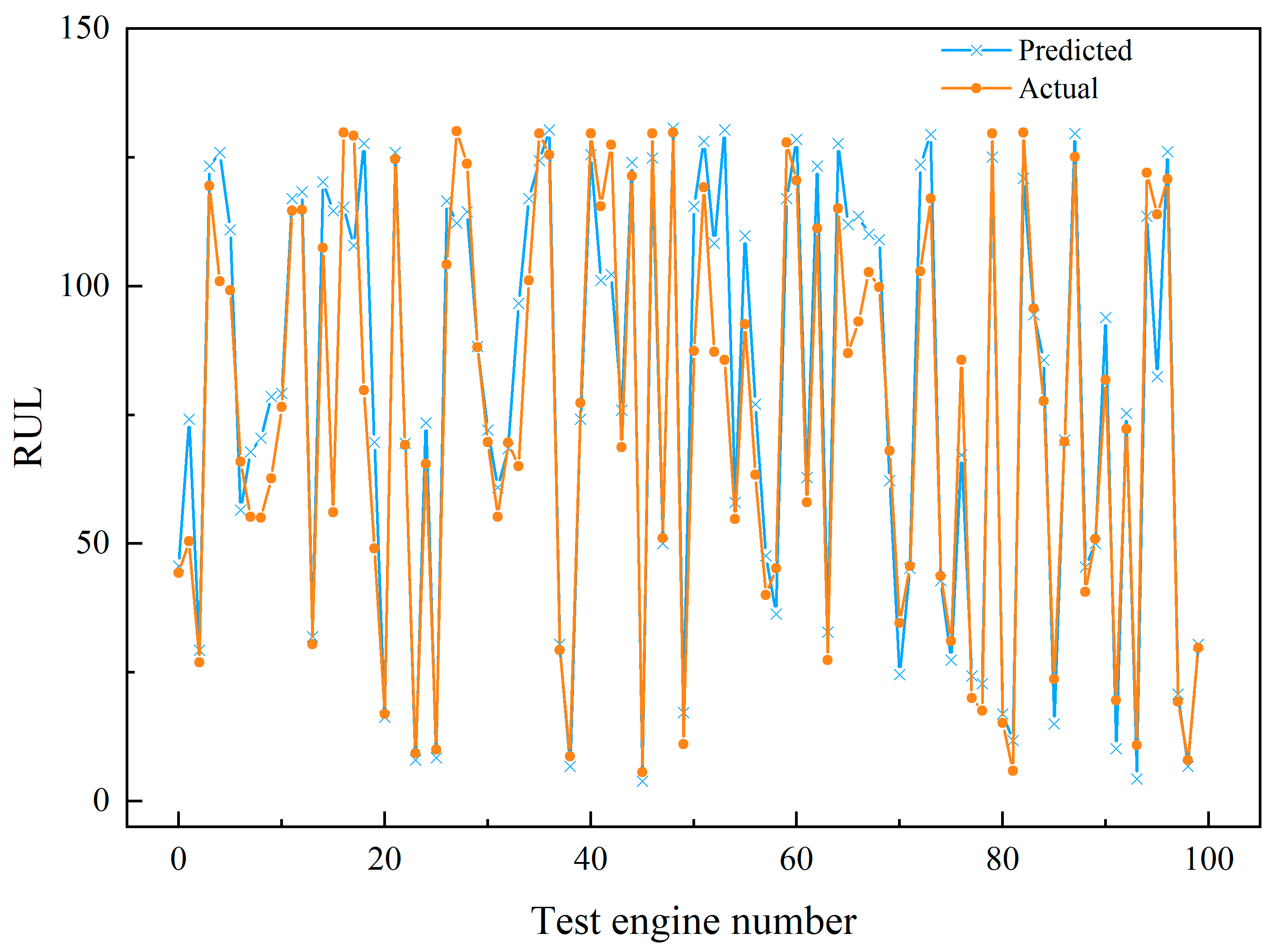

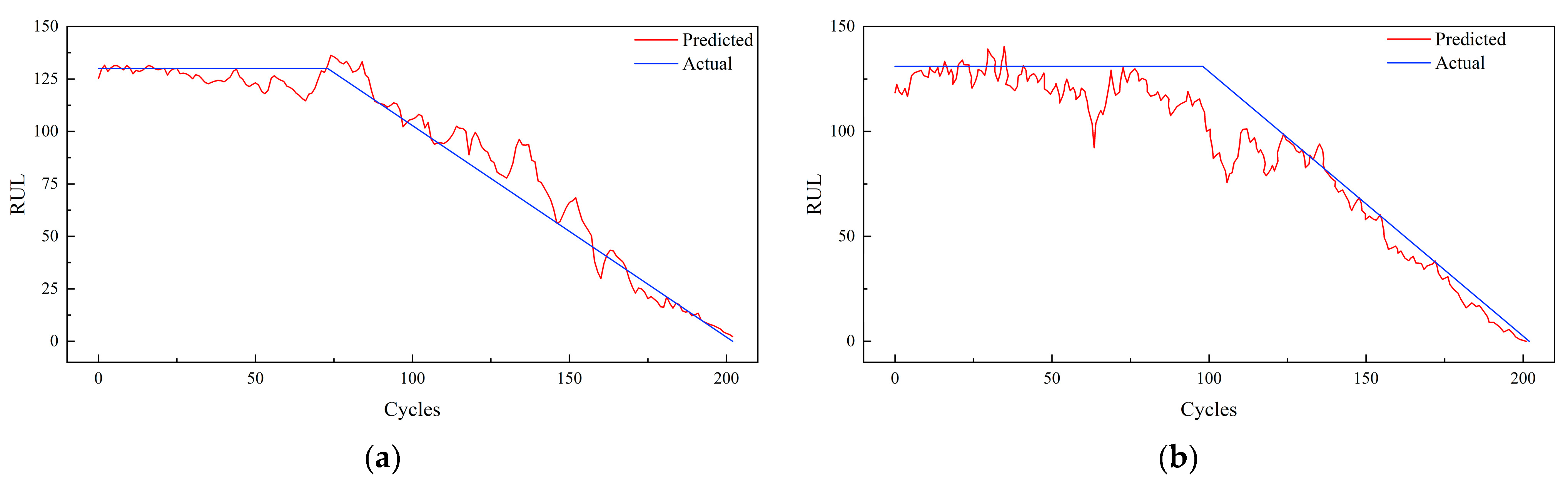

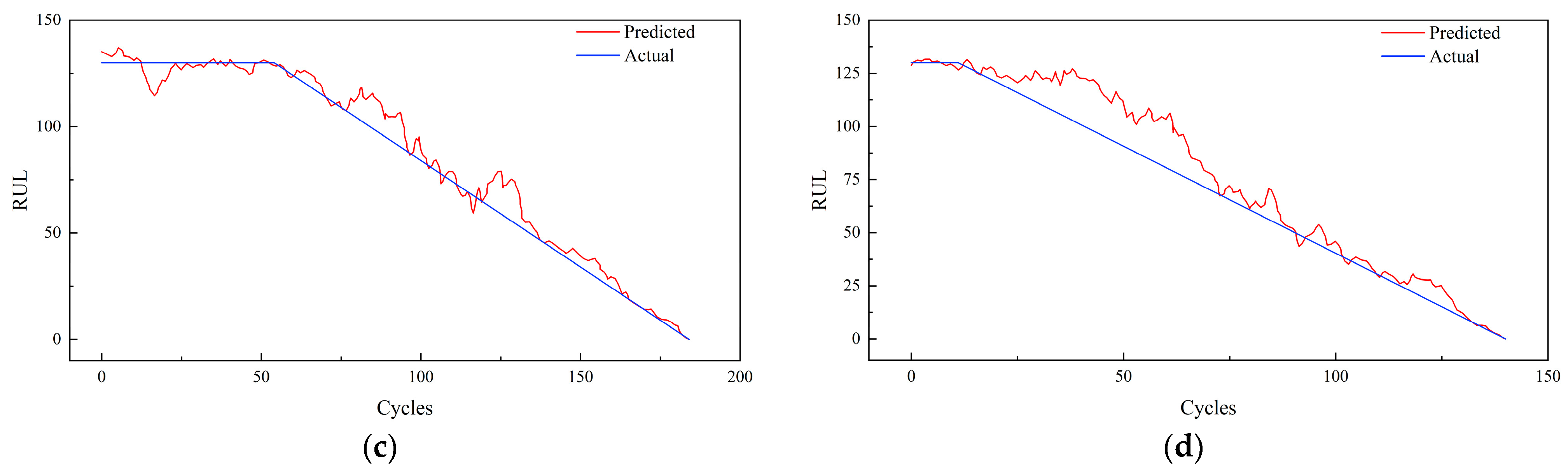

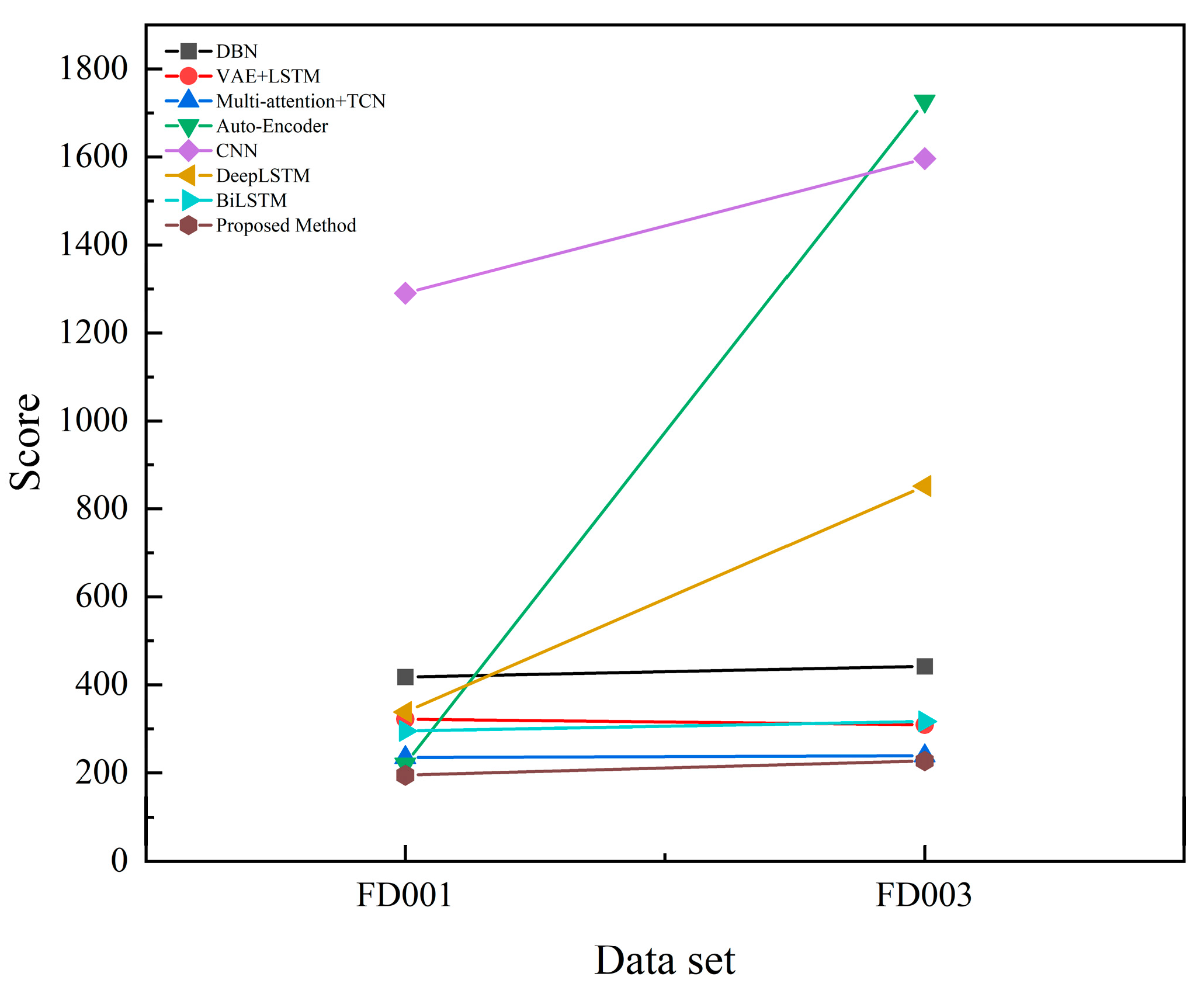

4.5. Experimental Results and Analyses

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chen, X.; Yu, J.; Tang, D.; Wang, Y. Remaining useful life prognostic estimation for aircraft subsystems or components: A review. In Proceedings of the IEEE 2011 10th International Conference on Electronic Measurement & Instruments, Chengdu, China, 16–19 August 2011; pp. 94–98.

2. Nguyen, K.T.; Medjaher, K.; Tran, D.T. A review of artificial intelligence methods for engineering prognostics and health management with implementation guidelines. Artif. Intell. Rev. 2023, 56, 3659–3709.

3. Chiachío, J.; Jalón, M.L.; Chiachío, M.; Kolios, A. A Markov chains prognostics framework for complex degradation processes. Reliab. Eng. Syst. Saf. 2020, 195, 106621.

4. Shi, Y.; Zhu, W.; Xiang, Y.; Feng, Q. Condition-based maintenance optimization for multi-component systems subject to a system reliability requirement. Reliab. Eng. Syst. Saf. 2020, 202, 107042.

5. Sateesh Babu, G.; Zhao, P.; Li, X.-L. Deep convolutional neural network based regression approach for estimation of remaining useful life. In Database Systems for Advanced Applications, Proceedings of the Database Systems for Advanced Applications: 21st International Conference, DASFAA 2016, Dallas, TX, USA, 16–19 April 2016, Proceedings, Part I; Springer: Cham, Switzerland, 2016; pp. 214–228.

6. Yu, S.; Wu, Z.; Zhu, X.; Pecht, M. A domain adaptive convolutional LSTM model for prognostic remaining useful life estimation under variant conditions. In Proceedings of the 2019 Prognostics and System Health Management Conference (PHM-Paris), Paris, France, 2–5 May 2019; pp. 130–137.

7. Li, X.; Ding, Q.; Sun, J.-Q. Remaining useful life estimation in prognostics using deep convolution neural networks. Reliab. Eng. Syst. Saf. 2018, 172, 1–11.

8. Guo, L.; Li, N.; Jia, F.; Lei, Y.; Lin, J. A recurrent neural network based health indicator for remaining useful life prediction of bearings. Neurocomputing 2017, 240, 98–109.

9. Chen, J.; Jing, H.; Chang, Y.; Liu, Q. Gated recurrent unit based recurrent neural network for remaining useful life prediction of nonlinear deterioration process. Reliab. Eng. Syst. Saf. 2019, 185, 372–382.

10. Huang, C.-G.; Huang, H.-Z.; Li, Y.-F. A bidirectional LSTM prognostics method under multiple operational conditions. IEEE Trans. Ind. Electron. 2019, 66, 8792–8802.

11. Al-Dulaimi, A.; Zabihi, S.; Asif, A.; Mohammadi, A. A multimodal and hybrid deep neural network model for remaining useful life estimation. Comput. Ind. 2019, 108, 186–196.

12. Ansari, S.; Ayob, A.; Hossain Lipu, M.S.; Hussain, A.; Saad, M.H.M. Multi-channel profile based artificial neural network approach for remaining useful life prediction of electric vehicle lithium-ion batteries. Energies 2021, 14, 7521.

13. Peng, C.; Chen, Y.; Chen, Q.; Tang, Z.; Li, L.; Gui, W. A remaining useful life prognosis of turbofan engine using temporal and spatial feature fusion. Sensors 2021, 21, 418.

14. Zhao, C.; Huang, X.; Li, Y.; Yousaf Iqbal, M. A double-channel hybrid deep neural network based on CNN and BiLSTM for remaining useful life prediction. Sensors 2020, 20, 7109.

15. Heidelberg, S.B. Journal of Ambient Intelligence and Humanized Computing; Springer: Berlin/Heidelberg, Germany, 2023.

16. Kawakami, K. Supervised Sequence Labelling with Recurrent Neural Networks. Ph.D. Thesis, Technical University of Munich, Munich, Germany, 2008.

17. Gers, F.A.; Schraudolph, N.N.; Schmidhuber, J. Learning Precise Timing with LSTM Recurrent Networks. J. Mach. Learn. Res. 2003, 3, 115–143.

18. Arias Chao, M.; Kulkarni, C.; Goebel, K.; Fink, O. Aircraft engine run-to-failure dataset under real flight conditions for prognostics and diagnostics. Data 2021, 6, 5.

19. Ramasso, E.; Saxena, A. Performance Benchmarking and Analysis of Prognostic Methods for CMAPSS Datasets. Int. J. Progn. Health Manag. 2014, 5, 1–15.

20. Zhang, C.; Lim, P.; Qin, A.K.; Tan, K.C. Multiobjective deep belief networks ensemble for remaining useful life estimation in prognostics. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2306–2318.

21. Su, C.; Li, L.; Wen, Z. Remaining useful life prediction via a variational autoencoder and a time-window-based sequence neural network. Qual. Reliab. Eng. Int. 2020, 36, 1639–1656.

22. Shang, Z.; Zhang, B.; Li, W.; Qian, S.; Zhang, J. Machine remaining life prediction based on multi-layer self-attention and temporal convolution network. Complex Intell. Syst. 2022, 8, 1409–1424.

23. Yu, W.; Kim, I.Y.; Mechefske, C. An improved similarity-based prognostic algorithm for RUL estimation using an RNN autoencoder scheme. Reliab. Eng. Syst. Saf. 2020, 199, 106926.

24. Zheng, S.; Ristovski, K.; Farahat, A.; Gupta, C. Long short-term memory network for remaining useful life estimation. In Proceedings of the 2017 IEEE International Conference on Prognostics and Health Management (ICPHM), Dallas, TX, USA, 19–21 June 2017; pp. 88–95.

25. Wang, J.; Wen, G.; Yang, S.; Liu, Y. Remaining useful life estimation in prognostics using deep bidirectional LSTM neural network. In Proceedings of the 2018 Prognostics and System Health Management Conference (PHM-Chongqing), Chongqing, China, 26–28 October 2018; pp. 1037–1042.

| Dataset | FD001 | FD002 | FD003 | FD004 |
|---|---|---|---|---|
| Operating conditions | 1 | 6 | 1 | 6 |
| Fault modes | 1 | 1 | 2 | 2 |
| Train trajectories | 100 | 260 | 100 | 249 |
| Test trajectories | 100 | 259 | 100 | 248 |
| True RUL values (test set) | 100 | 259 | 100 | 248 |

| Window size | FD001 RMSE | FD001 Score | FD003 RMSE | FD003 Score |
|---|---|---|---|---|
| Lws = 10 | 20.2502 | 503.3330 | 17.1317 | 694.7253 |
| Lws = 20 | 13.7231 | 298.7673 | 14.4360 | 490.3760 |
| Lws = 30 | 12.9073 | 207.5724 | 13.8445 | 366.1153 |
| Lws = 40 | 14.1324 | 291.1356 | 13.0865 | 257.3720 |
| Lws = 50 | 14.9618 | 427.0348 | 14.2448 | 396.6330 |
| Lws = 60 | 15.6623 | 480.7516 | 15.8343 | 472.5729 |
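
The table above can be read programmatically to find which window length minimizes each metric. The values below are transcribed from that table, and nothing else is assumed. Lower is better for both RMSE and Score.

```python
# (RMSE, Score) per window size Lws, transcribed from the window-size table above.
results = {
    "FD001": {10: (20.2502, 503.3330), 20: (13.7231, 298.7673), 30: (12.9073, 207.5724),
              40: (14.1324, 291.1356), 50: (14.9618, 427.0348), 60: (15.6623, 480.7516)},
    "FD003": {10: (17.1317, 694.7253), 20: (14.4360, 490.3760), 30: (13.8445, 366.1153),
              40: (13.0865, 257.3720), 50: (14.2448, 396.6330), 60: (15.8343, 472.5729)},
}

def best_window(table, metric):
    """Window size with the lowest value of the chosen metric (0 = RMSE, 1 = Score)."""
    return min(table, key=lambda lws: table[lws][metric])

for name, table in results.items():
    print(name, "best Lws by RMSE:", best_window(table, 0), "by Score:", best_window(table, 1))
# FD001 best Lws by RMSE: 30 by Score: 30
# FD003 best Lws by RMSE: 40 by Score: 40
```

Both metrics agree within each dataset, but the optimum differs between FD001 and FD003. A single fixed window is therefore a compromise across the two subsets.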

| Model hyperparameters | FD001 RMSE | FD001 Score | FD003 RMSE | FD003 Score |
|---|---|---|---|---|
| F = 32, K = 2 | 13.7407 | 135.9350 | 12.9706 | 267.9601 |
| F = 64, K = 2 | 12.0347 | 67.5300 | 12.7825 | 194.5720 |
| F = 32, K = 3 | 14.3873 | 154.2784 | 13.4312 | 278.8967 |
| F = 64, K = 3 | 15.8711 | 170.6143 | 13.5116 | 345.2449 |

| Method | FD001 MAE | FD001 RMSE | FD001 Score | FD003 MAE | FD003 RMSE | FD003 Score |
|---|---|---|---|---|---|---|
| DBN [20] | 13.46 | 15.21 | 418 | 12.74 | 14.71 | 442 |
| VAE + LSTM [21] | 14.14 | 15.88 | 322 | 12.25 | 14.29 | 309 |
| Multi-attention + TCN [22] | 12.16 | 13.25 | 235 | 11.96 | 13.43 | 239 |
| Auto-Encoder [23] | 12.48 | 13.58 | 220 | 16.28 | 19.16 | 1727 |
| CNN [5] | 16.12 | 18.45 | 1290 | 17.27 | 19.82 | 1596 |
| DeepLSTM [24] | 14.58 | 16.14 | 338 | 14.37 | 16.18 | 852 |
| BiLSTM [25] | 12.35 | 13.65 | 295 | 12.02 | 13.74 | 317 |
| Proposed Method | 11.47 | 12.26 | 195 | 11.76 | 12.78 | 227 |
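
To put the comparison table above in relative terms, the script below computes, for each dataset and metric, how much lower the proposed method's value is than the best (lowest) baseline. All numbers are transcribed from the table. The percentages describe only these reported values and say nothing about statistical significance.

```python
# (MAE, RMSE, Score) per method, transcribed from the comparison table above.
baselines = {
    "DBN":                   {"FD001": (13.46, 15.21, 418),  "FD003": (12.74, 14.71, 442)},
    "VAE + LSTM":            {"FD001": (14.14, 15.88, 322),  "FD003": (12.25, 14.29, 309)},
    "Multi-attention + TCN": {"FD001": (12.16, 13.25, 235),  "FD003": (11.96, 13.43, 239)},
    "Auto-Encoder":          {"FD001": (12.48, 13.58, 220),  "FD003": (16.28, 19.16, 1727)},
    "CNN":                   {"FD001": (16.12, 18.45, 1290), "FD003": (17.27, 19.82, 1596)},
    "DeepLSTM":              {"FD001": (14.58, 16.14, 338),  "FD003": (14.37, 16.18, 852)},
    "BiLSTM":                {"FD001": (12.35, 13.65, 295),  "FD003": (12.02, 13.74, 317)},
}
proposed = {"FD001": (11.47, 12.26, 195), "FD003": (11.76, 12.78, 227)}
metrics = ("MAE", "RMSE", "Score")

def relative_gain(dataset, m):
    """Percentage reduction of metric m versus the best (lowest) baseline on one dataset."""
    best_name = min(baselines, key=lambda name: baselines[name][dataset][m])
    best = baselines[best_name][dataset][m]
    return best_name, 100.0 * (best - proposed[dataset][m]) / best

for ds in proposed:
    for m, label in enumerate(metrics):
        name, gain = relative_gain(ds, m)
        print(f"{ds} {label}: {gain:.2f}% lower than {name}")
# FD001 MAE: 5.67% lower than Multi-attention + TCN
# FD001 RMSE: 7.47% lower than Multi-attention + TCN
# FD001 Score: 11.36% lower than Auto-Encoder
# FD003 MAE: 1.67% lower than Multi-attention + TCN
# FD003 RMSE: 4.84% lower than Multi-attention + TCN
# FD003 Score: 5.02% lower than Multi-attention + TCN
```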

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, Y.; Wen, C.; Xu, W. Residual Life Prediction of SA-CNN-BILSTM Aero-Engine Based on a Multichannel Hybrid Network. Appl. Sci. 2025, 15, 966. https://doi.org/10.3390/app15020966


Chicago/Turabian style: He, Yonghao, Changjun Wen, and Wei Xu. 2025. "Residual Life Prediction of SA-CNN-BILSTM Aero-Engine Based on a Multichannel Hybrid Network" Applied Sciences 15, no. 2: 966. https://doi.org/10.3390/app15020966
