Abstract

Real-time detection of rockfall on slopes is an essential part of a smart worksite, and target detection techniques for rockfall detection have developed rapidly as a result. However, the complex geologic environment of slopes, special climatic conditions, and human factors pose significant challenges to this research. In this paper, we propose LHB-YOLOv8, an enhanced high-speed slope rockfall detection method based on YOLOv8n. First, the LSKAttention mechanism is added to the backbone to improve the model’s ability to balance the processing of global and local information, which enhances its accuracy and generalization ability. Second, to ensure detection accuracy for smaller targets, an additional detection head is added and combined with the other detection heads of different sizes to form a multi-scale feature fusion that improves overall detection performance. Finally, a bidirectional feature pyramid network (BiFPN) is introduced in the neck to reduce the number of parameters and the computational complexity while improving the overall performance of rockfall detection. In addition, we compare the LSKAttention mechanism with other attention mechanisms to verify the effectiveness of the improvements. Compared with the baseline model, our method improves the mean average precision (mAP@0.5) by 4.8% and reduces the number of parameters by 20.2%. Across the different evaluation criteria, LHB-YOLOv8 shows clear advantages, making it suitable for engineering applications and the practical deployment of slope rockfall detection systems.

1. Introduction

With the rapid development of highway networks, the construction of mountain highways has become an important way to connect cities and villages and promote economic development. However, complex geological environments, special climatic conditions, and human factors in mountainous areas have led to the frequent occurrence of rockfall disasters, which bring great threats to driving safety. Especially in steep slope areas, rockfall disasters are unpredictable and sudden, and once they occur, they may cause serious traffic accidents and loss of life and property and even lead to traffic disruption. In 2013, in the State of Colorado, United States, several huge rocks accidentally tumbled down a cliff 4267 m above sea level, some of which weighed more than 100 tons and were the size of a car, causing injuries and deaths. In Taroko National Park, it is estimated that about TWD 20 billion in tourism revenue is lost annually due to fewer tourists as a result of frequent rockfalls. Therefore, how to effectively monitor and detect falling rocks in a timely manner has become an important research topic to ensure highway safety [1].

Existing slope monitoring and rockfall detection techniques mainly rely on traditional sensors, such as geo-radar [2], accelerometers [3], and inclinometers [4]. Aero-photogrammetry, which leverages UAVs with high-resolution cameras, has also become a powerful method for monitoring rockfalls on steep slopes. This technique allows for the detailed mapping of terrain and rock movement, providing critical data for assessing rockfall susceptibility [5]. By capturing precise 3D landscape models, aero-photogrammetry enhances the understanding of rockfall dynamics, which is essential for evaluating the risk in challenging environments. However, these devices are often constrained by high installation costs, complex maintenance, and limited coverage. In addition, complex terrain and environmental conditions can increase the monitoring difficulty and affect the detection accuracy. In recent years, image-based automatic detection technology has developed rapidly, and target detection technology in particular has made significant progress, driven by deep learning. In the field of target detection, the YOLO (You Only Look Once) algorithm [6] has become an important tool for solving various types of target detection problems due to its good detection accuracy and real-time performance. The YOLO series of algorithms greatly improves detection efficiency through its single-stage detection approach, which makes it especially suitable for application scenarios such as highway rockfalls that require real-time detection and response.

With the continuous development of transportation infrastructure construction, the monitoring and early warning of slope rockfall disasters has become a key research direction in transportation safety management worldwide. In recent years, scholars at home and abroad have conducted a large number of studies on slope rockfall monitoring and have made significant progress with respect to different technical paths and means. The existing research can be broadly categorized into monitoring technology based on traditional physical sensors [7] and intelligent detection technology based on computer vision and deep learning [8].

With the development of computer vision technology, image-based intelligent detection has gradually become one of the mainstream directions for slope rockfall monitoring. Compared with traditional physical sensor methods, computer vision detects targets by using cameras and deep learning algorithms, which enables real-time monitoring over wide areas in complex environments and does not require physical contact.

Early image processing methods, such as edge detection and background subtraction [9], were initially applied in rockfall detection, but their performance was not stable enough because of their sensitivity to ambient lighting and background complexity. With the development of deep learning technology, target detection models based on convolutional neural networks (CNNs) [10] have become the mainstream research direction for slope rockfall detection. Cai et al. [11] used the Faster R-CNN model for real-time monitoring of mountainous slopes; trained on a large-scale image dataset, the system achieved satisfactory landslide detection performance with no need to retrain the model with new samples.

François Noël et al. proposed a rockfall impact detection algorithm that objectively extracts the perceived surface roughness, which depends on the size of the impacting rock, from detailed terrain samples. Combined with a rebound model, it allows rockfall simulations to be performed directly on a detailed 3D point cloud and rockfalls to be analyzed from multiple perspectives at the physical level [12]. To address the uncertainty in location and time caused by the different sizes of falling rocks, Jack et al. used the 8987 3D point cloud dataset, where rockfalls occur frequently, to provide more scientific statistics on the timing and location patterns of rockfalls [13]. Josué Briones et al. used bibliometric techniques to analyze the scientific production on rockfall trajectories, reasoned about rockfall trends, and provided a systematic assessment of the risk of rockfall hazards through the use of large-sample statistics [14].

Research on rockfall detection based on computer vision has also made rapid progress. Songge Wang et al. [15] proposed a YOLOX-based rockfall detection model for slopes that operates on video streams, aiming to solve the problems of missed detection, false detection, and trajectory interruption that occur in online deep learning multi-target tracking. The detection phase uses a three-frame differencing method combined with an offline-trained YOLOX model for rock detection, while the tracking phase re-matches the unmatched trajectories based on a combination of data association and moving object detection results. In addition, Wang, Shouxing, et al. [16] optimized the YOLOv5 network for problems such as falling rocks on roadways, achieving competitive results with fewer parameters and simpler training and efficiently identifying scattered falling rocks by leveraging the feature extraction capability of YOLOv5. Meanwhile, Shankar et al. [17] proposed an integrated system based on YOLOv8 detection technology that addresses a variety of terrain safety issues, including debris landslides; the system’s multi-threaded controller ensures concurrent execution so that the video source is analyzed in real time, potential hazards are responded to quickly, and real-time alarms are provided.

Research based on the latest target detection algorithms, such as YOLOv8, has begun to enter the field. YOLOv8 has stronger feature extraction capabilities and higher detection accuracy than its predecessors, especially in multi-scale target detection. Currently, related studies at home and abroad are exploring how to exploit the advantages of YOLOv8 to further improve the accuracy and robustness of slope rockfall detection. For example, some studies have enhanced the model’s ability to recognize small targets by introducing an attention mechanism or a multi-scale feature fusion module while also simulating complex rockfall scenarios through data augmentation and other means to improve the model’s generalization ability. Therefore, we choose to optimize the model using the YOLOv8 framework to further improve the algorithm’s accuracy and feature recognition ability in complex environments.

This paper proposes an effective method for falling rock detection. This method can distinguish falling rocks from other objects and detect them in real time in complex environments. The main contributions are as follows:

- In order to improve the model’s ability to balance the processing of global and local information and to enhance the model’s accuracy and generalization ability, the LSKAttention mechanism is placed in the backbone. By using a large convolutional kernel to capture a larger range of contextual information, it strengthens the model’s perception of global features.

- We add a small-target detection head to the head of the model, improving the capture of smaller rocks. Especially for cases of dense targets and mutual occlusion in complex scenes, it improves the accuracy and inference speed of the model and, thus, performs better in real-time applications.

- In order to reduce the demand for computational resources, the feature pyramid is optimized to better capture multi-scale information and effectively improve model performance by reducing the number of model parameters and computational complexity.

2. Methodology

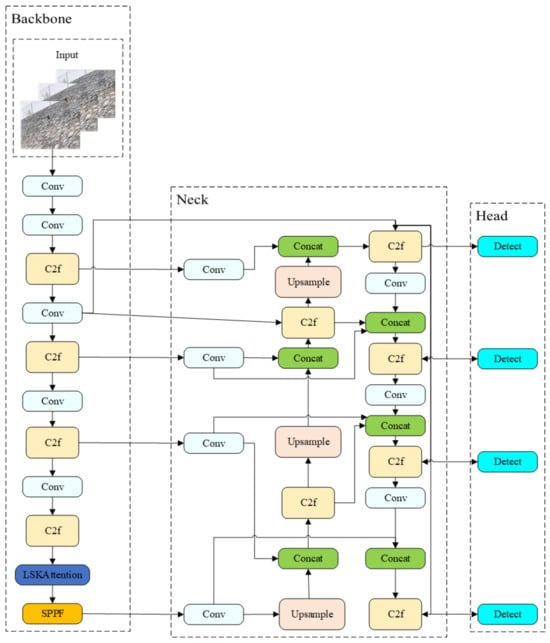

Because few open-source slope rockfall datasets are available, this paper constructs a high-speed slope rockfall dataset to ensure a sufficient number of samples and an adequate training effect. To facilitate future deployment of our model on embedded platforms, the smallest model, YOLOv8n, is chosen. When optimizing the model, therefore, not only should its detection accuracy be improved, but its number of parameters and computation should also be reduced. To this end, the LSKAttention mechanism [18] is chosen to maintain the acquisition of local information while reducing the amount of computation. Then, the BiFPN network structure [19] is integrated so that the model can better understand and interpret multi-scale information while effectively reducing the parameters and computational complexity. Finally, a new detection head is added to the head of the model to enhance its ability to capture more detailed information. The improved network structure is shown in Figure 1.

Figure 1.

Detailed LHB-YOLOv8 framework.

2.1. Dynamic Large Convolutional Kernel Spatial Attention Mechanisms

Attention mechanisms were first used in natural language processing but are now widely used in areas such as computer vision. The core idea is that the model should pay more attention to information that is critical to the task while ignoring unimportant parts when processing the data. Through this selective allocation of attention, the model is able to extract features more efficiently. In visual tasks, the attention mechanism can help the model to better focus on the target region and reduce background interference. For example, the common SE (Squeeze and Excitation) module [20] enhances useful feature channels by adaptively adjusting the channel weights, and the CBAM (Convolutional Block Attention Module) [21] combines spatial and channel attention to further enhance feature expression; other examples include the Global Attention Mechanism (GAM) [22] and Efficient Channel Attention (ECA) [23]. In contrast, the large kernel attention mechanism enhances the model’s ability to perceive global features by using a large convolutional kernel to capture a larger range of contextual information. Compared to the traditional small convolutional kernel, it can focus on key information in a larger region while maintaining computational efficiency. This mechanism performs well when dealing with complex scenes or large targets, especially in tasks such as image segmentation and target detection, where it improves the model’s ability to balance the processing of global and local information and enhances accuracy and generalization. Therefore, the LSKAttention mechanism is placed in the backbone in this paper.

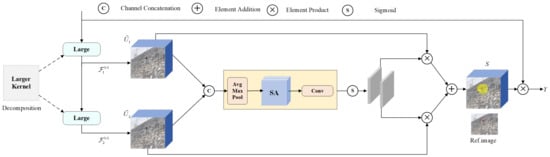

The working principle of LSKAttention is shown in Figure 2. LSKAttention expands the receptive field by using a sequence of depth-wise convolutional kernels of different sizes instead of a single fixed-size convolutional kernel. To avoid an increase in computational complexity, the large convolutional kernel is decomposed into multiple layers of smaller convolutional kernels, each with a different dilation rate. This design allows the receptive field to expand rapidly, capturing contextual information at different scales.

Figure 2.

LSK module.

The kernel size of layer $i$ is $k_i$, and the dilation rate is $d_i$.

The receptive field of each layer ($RF_i$) is based on the receptive field of the previous layer ($RF_{i-1}$) and is a function of the kernel size and dilation rate of the current layer:

$$RF_1 = k_1, \qquad RF_i = d_i\,(k_i - 1) + RF_{i-1}$$
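As a quick numerical check of how the receptive field grows, the sketch below assumes the standard recurrence for stacked stride-1 dilated convolutions, RF_1 = k_1 and RF_i = d_i (k_i - 1) + RF_{i-1}, with a first-layer dilation of 1. The function name and the example kernel sizes and dilation rates are illustrative assumptions, not values reported in this paper.

```python
def receptive_fields(layers):
    """Return the cumulative receptive field after each (kernel, dilation) layer.

    Assumes stride-1 convolutions, so each layer adds dilation * (kernel - 1)
    pixels to the receptive field of the previous layer.
    """
    fields = []
    rf = 1  # a single pixel before any convolution is applied
    for kernel, dilation in layers:
        rf = dilation * (kernel - 1) + rf
        fields.append(rf)
    return fields


# Hypothetical decomposition: a 5x5 kernel (dilation 1) followed by a 7x7 kernel (dilation 3).
print(receptive_fields([(5, 1), (7, 3)]))  # [5, 23]
```

In this hypothetical configuration, two small depth-wise kernels with 5·5 + 7·7 = 74 weights per channel cover the same 23 × 23 field that a single dense depth-wise kernel would need 529 weights to cover, which illustrates why the decomposition keeps the computation low.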

By increasing the size of the convolutional kernel (k) and the dilation rate (d) layer by layer, the receptive field can be expanded rapidly so that long-range contextual information is captured. After features are extracted at multiple scales, the spatial selection mechanism selects the contextual information that suits each region. The specific steps are as follows:

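First, the features extracted through the different convolution kernels are concatenated into a feature matrix ($\tilde{U}$):

$$\tilde{U} = [\tilde{U}_1; \ldots; \tilde{U}_N]$$

Average pooling and maximum pooling are performed on this concatenated feature matrix to obtain the global spatial feature descriptors:

$$SA_{avg} = P_{avg}(\tilde{U}), \qquad SA_{max} = P_{max}(\tilde{U})$$

These pooled features are converted into N spatial attention maps ($\widehat{SA}$), each corresponding to a convolutional kernel, by means of a convolutional layer:

$$\widehat{SA} = F^{2 \to N}\left([SA_{avg}; SA_{max}]\right)$$

These attention maps are normalized using the Sigmoid activation function to generate spatial selection masks ($\widetilde{SA}_i$):

$$\widetilde{SA}_i = \sigma\left(\widehat{SA}_i\right)$$

Finally, the features from the different convolutional kernels are weighted and fused to obtain the final output features (S):

$$S = F\left(\sum_{i=1}^{N} \widetilde{SA}_i \cdot \tilde{U}_i\right)$$

The output feature (Y) is the element-wise product of the input feature (X) and the fused feature (S):

$$Y = X \cdot S$$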
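The spatial selection steps can be traced on a toy example. The sketch below is a deliberately simplified illustration, not the trained module: it assumes one channel per kernel branch (so pooling over the concatenated features reduces to pooling over the branches), replaces the learned convolution that maps the two pooled descriptors to N attention maps with a per-pixel linear mix (`mix_weights`, a hypothetical stand-in), and omits the final fusion convolution.

```python
import math


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def spatial_select(x, branches, mix_weights):
    """Apply a simplified spatial selection to single-channel feature maps.

    x           -- H x W input map (nested lists)
    branches    -- list of N H x W maps, one per convolution kernel
    mix_weights -- N pairs (w_avg, w_max); a 1x1 stand-in for the 2 -> N convolution
    """
    n = len(branches)
    height, width = len(x), len(x[0])
    y = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            stacked = [branch[r][c] for branch in branches]  # concatenated features
            sa_avg = sum(stacked) / n                        # average pooling
            sa_max = max(stacked)                            # maximum pooling
            # One sigmoid-normalized selection mask per branch.
            masks = [sigmoid(wa * sa_avg + wm * sa_max) for wa, wm in mix_weights]
            # Weighted fusion of the branch features (final convolution omitted).
            fused = sum(m * u for m, u in zip(masks, stacked))
            y[r][c] = x[r][c] * fused                        # element-wise product with the input
    return y


# With zero mixing weights every mask is 0.5, so the fused feature is 0.5 * (1 + 3) = 2.
print(spatial_select([[2.0]], [[[1.0]], [[3.0]]], [(0.0, 0.0), (0.0, 0.0)]))  # [[4.0]]
```

In a real network the masks differ from pixel to pixel, which is what lets each spatial location favor the kernel whose receptive field suits it best.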

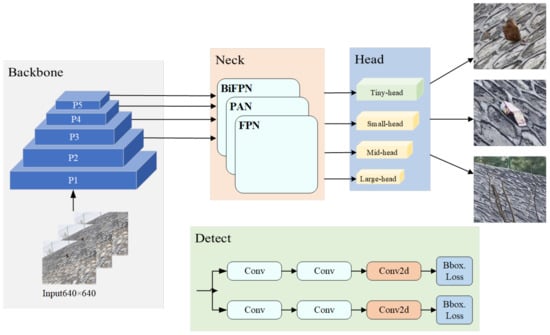

2.2. Enhanced Detection Heads

The objects we detect are rocks falling on high-speed slopes, together with a number of interfering objects of different scales. When arranging the camera, we keep a large field of view, both to prevent fast-falling rocks from leaving the camera’s field of view and to avoid the waste of resources that a dense camera arrangement would cause in later deployment. Therefore, the proportion of the image occupied by falling rocks is usually small. In the YOLOv8 model, the image first undergoes five downsampling operations in the backbone network, which in turn yields five scales of feature maps, denoted as P1–P5. YOLOv8 has only three detection heads, which operate on the features derived from P3, P4, and P5 and detect on feature maps with scales of 80 × 80, 40 × 40, and 20 × 20, respectively. However, these three heads cannot meet the demand for detection accuracy in our high-speed slope rockfall scenarios in complex environments: for the small rocks in the dataset in particular, a lot of feature information is lost.

Therefore, in order to improve detection accuracy for smaller targets, as shown in Figure 3, we add a new detection head in the head of YOLOv8, whose detection feature map is 160 × 160, containing richer feature information about the detected object. The smaller detection head is able to capture details from the high-resolution feature map and effectively recognize small objects in the image while combining with other detection heads of different sizes to form a multi-scale feature fusion and improve the overall detection performance. Especially in complex scenes, it is able to handle the situation of dense targets and mutual occlusion, improving the accuracy and inference speed of the model, thus performing better in real-time applications.

Figure 3.

New detection heads.
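The relationship between the detection heads and their feature-map sizes can be made concrete with a little arithmetic. The sketch below assumes a 640 × 640 network input (consistent with the 80 × 80, 40 × 40, and 20 × 20 maps at strides 8, 16, and 32) and a stride of 4 for the added 160 × 160 head; the function names and the 12-pixel rock are hypothetical.

```python
def head_grids(input_size=640, strides=(4, 8, 16, 32)):
    """Map each detection-head stride to the side length of its feature map."""
    return {stride: input_size // stride for stride in strides}


def cells_covered(object_px, stride):
    """Approximate number of grid cells spanned by an object of the given pixel width."""
    return object_px / stride


print(head_grids())  # {4: 160, 8: 80, 16: 40, 32: 20}
# A hypothetical 12-pixel rock spans 3 cells on the stride-4 map but only 1.5 on the stride-8 map.
print(cells_covered(12, 4), cells_covered(12, 8))  # 3.0 1.5
```

The finer grid gives a small object more cells, and therefore more spatial detail, to be detected from, at the cost of a larger feature map to process.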

2.3. Bidirectional Feature Pyramid

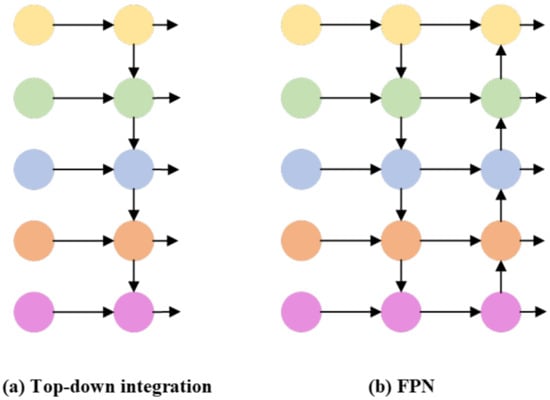

A feature pyramid network (FPN) [24] is a network structure designed for multi-scale target detection, aiming at extracting features from different layers of convolutional neural networks and enhancing the model’s multi-scale detection capability through the flow of information in the upper and lower paths.

In YOLOv8, compared with ordinary top-down feature fusion, FPN enhances the model’s handling of targets of different sizes through multi-scale feature fusion, with a significant improvement in the detection of small targets in particular. Figure 4 compares the structures of top-down fusion and FPN in a sketch. The top-down feature enhancement and lateral connections make YOLOv8 more robust in complex scenes, while efficient real-time performance is maintained by optimizing the FPN structure. FPN not only improves target detection accuracy but also enables YOLOv8 to achieve better generalization ability and adaptability in the multi-scale target detection task. However, as shown in Figure 4, the feature flow in FPN is unidirectional (top-down), which restricts the full fusion of information and prevents simultaneous top-down and bottom-up feature transfer, resulting in a weak capture of details in small targets and complex scenes. In addition, FPN lacks a dynamic weighting mechanism and cannot adjust the weights according to the importance of the features during feature fusion, which limits its performance in multi-scale target detection and complex scenes, especially in small-target detection.

Figure 4.

Schematic comparison of top-down fusion and the FPN structure.

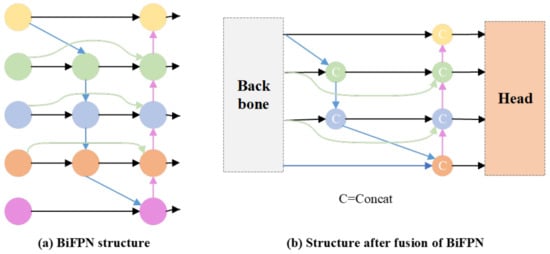

In the detection of high-speed falling rocks, multiple types of rocks may fall simultaneously in complex scenes, and the FPN structure cannot meet our detection needs, so we introduce the bidirectional feature pyramid network (BiFPN). As shown in Figure 5a, its feature flow is bidirectional (top-down and bottom-up) so that feature maps at different levels can be fused more effectively. It introduces a learnable weighting mechanism that dynamically adjusts the weights according to the actual contributions of the feature maps, improving the efficiency of feature fusion and the detection accuracy. The structure combined with our small-target detection head is shown in Figure 5b. BiFPN has fewer parameters and lower computational complexity, which facilitates our subsequent deployment on embedded platforms.

Figure 5.

BiFPN feature fusion method.
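The learnable weighting in BiFPN can be illustrated with the fast normalized fusion rule from the original BiFPN (EfficientDet) formulation, in which each input is scaled by a non-negative weight and the result is divided by the sum of the weights plus a small constant. The sketch below assumes that rule and uses flat lists in place of feature maps; the function name and the sample values are hypothetical, and the exact variant used in this paper's implementation is not stated in the text.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-length feature vectors as sum(w_i * f_i) / (eps + sum(w_j)).

    Weights are clamped at zero (ReLU), so every normalized weight lies in [0, 1].
    """
    clamped = [max(0.0, w) for w in weights]
    total = eps + sum(clamped)
    length = len(features[0])
    return [
        sum(w * f[i] for w, f in zip(clamped, features)) / total
        for i in range(length)
    ]


# Two hypothetical feature vectors; the second contributes three times as much as the first.
fused = fast_normalized_fusion([[1.0, 2.0], [3.0, 4.0]], [1.0, 3.0])
print([round(v, 2) for v in fused])  # [2.5, 3.5]
```

Because the normalization is a plain division rather than a softmax, it adds very little computation, which fits the paper's goal of keeping the neck lightweight.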

3. Dataset Construction

In our study, we conducted simulation experiments on slopes where rockfalls are likely to occur on the Zhangji Expressway (closed section) in Jiangxi Province in order to simulate the occurrence of realistic rockfalls in the future. Through the simulation experiment in the field, we were able to gain a deeper understanding of the scenarios in which rockfalls occur on highway segments and optimize the system accordingly to better adapt to these complex situations. This field experiment provides a practical and intuitive evaluation criterion for evaluating and improving methods and technologies.

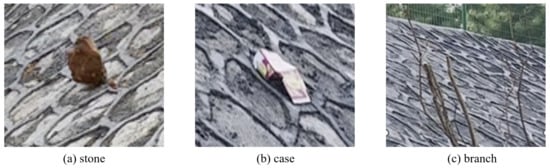

In this paper, we use an iPhone 14 Pro, whose camera has an aperture of f/1.78, as the optical measurement device to capture video from different angles and against different interfering backgrounds. For experimental validation, we considered that, besides falling rocks, tree branches and other moving objects on high-speed slopes could interfere with detection, so we added interference classes to the detection task. The detection types, which represent generalized classes of objects that may be present on the slope, are shown in Figure 6.

Figure 6.

Type of data set.

In order to verify the model’s multi-scale interpretation ability and anti-interference ability, we filmed single large, medium, and small stones rolling in the absence of interference; single large, medium, and small stones rolling in the presence of interference; and multiple types of stones dropped simultaneously with and without interference, as shown in Figure 7. By extracting frames from the videos and excluding the images without falling rocks, we obtained nearly 2500 images of falling rocks on high-speed slopes with a resolution of 1920 × 796.

Figure 7.

Slope rockfall dataset.

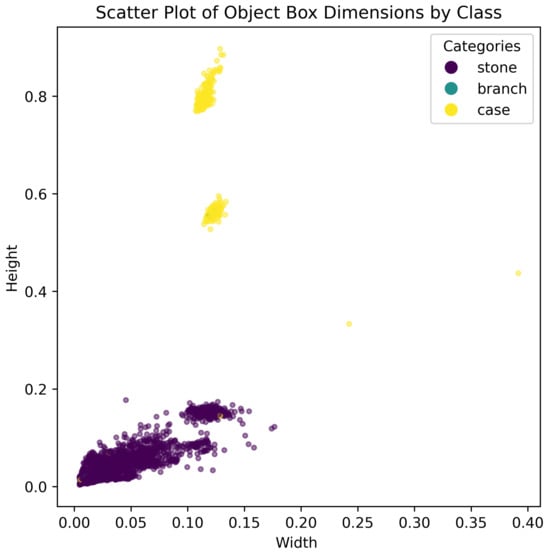

Subsequently, the 2500 images of falling rocks were annotated with the LabelImg tool to construct the dataset, which was divided into training and validation sets in a ratio of 8:2 for model training and evaluation, respectively. The bounding-box size characteristics of the different object classes are shown in Figure 8.

Figure 8.

Scale of different objects in the image.
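An 8:2 division of the annotated images such as the one described above could be reproduced along the following lines. This is a minimal sketch under stated assumptions: the file names, the fixed seed, and the exact count of 2500 are hypothetical, and the text does not say whether the split was random at the frame level.

```python
import random


def split_dataset(items, train_ratio=0.8, seed=0):
    """Shuffle items reproducibly and split them into training and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(round(len(shuffled) * train_ratio))
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for the extracted frames.
frames = [f"frame_{i:04d}.jpg" for i in range(2500)]
train, val = split_dataset(frames)
print(len(train), len(val))  # 2000 500
```

Because consecutive frames from one video are highly similar, splitting by video clip rather than by individual frame may give a stricter estimate of validation performance.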

4. Results and Analysis

In this paper, we use a self-built high-speed slope dataset to evaluate the performance of the model and present the experimental settings, evaluation metrics, ablation experiments, comparisons of the effects of different attention mechanisms, and comparative experiments.

4.1. Experimental Environment

In this paper, YOLOv8n is used as the underlying network model, based on the PyTorch 2.1.0 deep learning framework, and the experimental platform is set up on a Linux server. The detailed configuration of the experimental environment is shown in Table 1. Table 2 shows the hyperparameters used in the experiments; all experiments in this paper use the default hyperparameters.

Table 1.

Configuration and training environment.

Table 2.

Hyperparametric configuration.

4.2. Evaluation Indicators

In order to objectively evaluate the performance of the high-speed slope rockfall detection model, P, R, mAP, F1, and the number of parameters are selected as the experimental evaluation indexes.

Precision: Precision measures how many of the positive samples predicted by the model are truly positive. It is calculated as follows:

$$P = \frac{TP}{TP + FP}$$

where $TP$ (True Positive) represents correctly identified positive samples in the test and $FP$ (False Positive) indicates samples that were incorrectly predicted as positive.

Recall: Recall measures the proportion of all actual positive samples correctly identified as positive. It is calculated as follows:

$$R = \frac{TP}{TP + FN}$$

where $FN$ (False Negative) indicates positive samples incorrectly identified as negative.

mAP: The mAP is the most commonly used aggregate performance metric for target detection tasks and represents the average AP across all categories. AP (Average Precision) is the average precision over a single category. The calculations are expressed as follows:

$$AP = \int_{0}^{1} P(R)\,dR$$

where $P(R)$ is precision expressed as a function of recall, so that AP is the area under the precision-recall curve. mAP then calculates the average AP across all categories. Common threshold settings are mAP@0.5 and mAP@0.5:0.95. It is calculated as follows:

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where $N$ is the number of categories and $AP_i$ is the average precision of category $i$.

The F1 score is the harmonic mean of precision and recall and is used to balance the two. It is calculated as follows:

$$F1 = \frac{2 \times P \times R}{P + R}$$

The F1 score provides a tradeoff between precision and recall, a metric that is especially important when there is a large difference between the two.
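The evaluation metrics can be computed from raw counts and a precision-recall curve as sketched below. The helper names and sample numbers are hypothetical. The IoU function shows the overlap measure that the 0.5 in mAP@0.5 thresholds, and the AP routine uses all-point interpolation with a monotone precision envelope, which is one common convention and may differ in detail from the interpolation used by the evaluation code behind this paper's tables.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Precision, recall, and F1 from true-positive, false-positive, and false-negative counts."""
    p = tp / (tp + fp) if tp + fp else 0.0
    r = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1


def average_precision(recalls, precisions):
    """Area under the precision-recall curve; recalls must be sorted in ascending order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision non-increasing from right to left (monotone envelope).
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_per_class):
    """Average the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class)


p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=2)
print(round(p, 3), round(r, 3), round(f1, 3))  # 0.8 0.8 0.8
print(average_precision([0.5, 1.0], [1.0, 0.5]))  # 0.75
```

Under mAP@0.5, a prediction counts as a true positive when its IoU with a ground-truth box of the same category is at least 0.5; mAP@0.5:0.95 averages the result over IoU thresholds from 0.5 to 0.95.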

4.3. Comparison of the Effects of Different Attention Mechanisms

In order to verify the effect of LSK module replacement with other attentional mechanisms on the accuracy of our model, we replaced the LSK module in the LHB-YOLOv8 model with the currently popular CAFM [25], CBAM, CPCA [26], SEAttention, and SimAM [27] attentional mechanism modules to conduct experimental comparisons. To ensure consistency in the comparison of the effects of different attention mechanisms, this set of experiments was conducted on the LHB-YOLOv8 model, and the hyperparameters of each experiment were kept consistent. Table 3 lists the results of the experiments using the different attention modules on the LHB-YOLOv8 model.

Table 3.

Experimental results for different attention modules.

As shown in Table 3, the LSK attention mechanism in the backbone of the LHB-YOLOv8 model was replaced in turn with each of the other five attention mechanisms. Although the LSK attention mechanism is slightly inferior to the other five in terms of precision, it achieves the highest mean average precision, 89.3%, and the six attention mechanisms are comparable on all other evaluation metrics.

4.4. Ablation Experiments

In this study, we conducted ablation experiments on the self-constructed high-speed slope rockfall dataset to evaluate the contribution of each module in our proposed LHB-YOLOv8 model. Using the original YOLOv8n as the baseline and the same hyperparameters throughout, we evaluated the improvements to the backbone, neck, and detection head separately and in combination. The first experiment is the YOLOv8n baseline, against which the performance improvement of each module is measured. The second experiment adds the LSK module to the YOLOv8 backbone. The third replaces the YOLOv8 neck structure with the BiFPN module. The fourth adds an extra small-target detection head to YOLOv8. The fifth adds the LSK module to the backbone while replacing the neck structure with the BiFPN module. The sixth adds the LSK module to the backbone together with the additional small-target detection head. The seventh replaces the neck structure with the BiFPN module and adds the additional small-target detection head. The last experiment is the full LHB-YOLOv8 model. The experimental results are shown in Table 4.

Table 4.

LHB-YOLOv8 model ablation experiment results.
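The eight ablation runs correspond to every subset of the three proposed modules. As a small illustrative sketch (the labels and function name are hypothetical), the configurations can be enumerated in the same order as the experiments described above:

```python
from itertools import combinations

MODULES = ("LSK", "BiFPN", "small-target head")


def ablation_configs(modules=MODULES):
    """Enumerate every subset of modules, from the bare baseline to the full model."""
    configs = []
    for size in range(len(modules) + 1):
        configs.extend(combinations(modules, size))
    return configs


for number, config in enumerate(ablation_configs(), start=1):
    print(number, " + ".join(config) or "baseline (YOLOv8n)")
```

Enumerating all 2^3 = 8 subsets makes it possible to separate the effect of each module from the effect of its interactions with the others.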

From Table 4, it can be observed that introducing the BiFPN module yields a relative increase in precision of 2.7% and reduces the number of parameters by 33.3%; BiFPN allows information to propagate bidirectionally between the different resolution levels, facilitating the fusion of low- and high-level features. Adding the small-target detection head results in a relative increase in precision of 4.2% and a relative increase in recall of 2.2%, indicating that the newly added detection head effectively enhances the model’s ability to detect small targets. Introducing the LSK module alone changes the precision and the parameter count only slightly, and recall even decreases by 0.6%, but P, R, mAP@0.5, F1, and the parameter count all improve when it is fused with the BiFPN module and the small-target detection head. The BiFPN module combined with the small-target detection head improves the model P, R, and mAP@0.5 by 2.4%, 2.3%, and 2.4%, respectively, while the number of parameters is reduced by 26.7%. Compared to the baseline model (YOLOv8n), the full combination of improved modules increases P, R, and mAP@0.5 by 4.3%, 2.2%, and 4.8%, respectively, and reduces the number of parameters by 20.2%. These results demonstrate the practicality and effectiveness of the improved model.

As shown in Figure 9, comparing the results of rockfall detection, it can be seen that LHB-YOLOv8 is more effective than the baseline model in detecting rockfalls at the same location and detects the rockfalls that are missed by the baseline model, which effectively verifies that the model proposed in this paper has certain advantages over the baseline model.

Figure 9.

Comparison of the detection results of LHB-YOLOv8 and the baseline model.

4.5. Comparative Experiments

To validate the effectiveness of our proposed LHB-YOLOv8 model, we experimented with two versions of the first transformer-based end-to-end real-time detector, RT-DETR [28,29] (v2 and v3) using our dataset. It was also experimentally compared with four state-of-the-art algorithms of the YOLO family (YOLOv5 [30], YOLOv7 [31], YOLOv9 [32], and YOLOv10 [33]) at this stage. To ensure the consistency of the comparison results, the hyperparameters of each experiment were kept consistent, and the precision (P), recall (R), mean average precision (mAP@0.5), F1 score, and number of parameters were used as the evaluation indexes of detection performance. The results of the comparison experiments are shown in Table 5.

Table 5.

Performance comparison of the LHB-YOLOv8 model with other models.

According to Table 5, RT-DETR3 achieves considerable improvements relative to RT-DETR2 in terms of its precision, recall, mean average precision, and F1 score, but it still lags behind our model. Although YOLOv5 performs well in terms of precision and the number of parameters compared to LHB-YOLOv8, it performs much worse in terms of R, mAP@0.5, and F1 score. Compared to YOLOv7, LHB-YOLOv8 reduces the number of parameters by 60% while improving precision by 19.2%, recall by 4.3%, and mAP@0.5 by 13.5%. Compared to YOLOv9, LHB-YOLOv8 reduces the amount of parameters by 7% while improving precision by 7%, recall by 9.9%, mAP@0.5 by 9.3%, and F1 score by 8%. The overall performance of YOLOv10 is good, although it is outperformed by our model.

5. Conclusions

Aiming at the problem of rockfall detection on highway slopes, this paper introduces a new LHB-YOLOv8 model based on YOLOv8. Compared with the original model, the model overcomes the adverse effects of falling rocks of different sizes, shapes, and colors, which are often in motion, and also meets the deployment requirements of embedded devices. Furthermore, it significantly improves the accuracy of rockfall detection on high-speed slopes. First, the LSKAttention module is introduced in the backbone to enable the model to capture information on various types of falling rocks, which effectively retains the global features related to falling rocks and enhances the detection accuracy of the model. Subsequently, the BiFPN structure is used to reconstruct the neck of the original model, which enhances its feature fusion capability while reducing the number of parameters, the computation, and the model size. Finally, the additional detection head improves multi-scale rockfall detection. The experimental results show that the LHB-YOLOv8 model outperforms existing detection methods in terms of real-time performance and detection accuracy. In addition, our model has fewer parameters, and after quantizing and accelerating it to improve the detection speed, it is capable of efficient deployment and application in real high-count scenarios.

In future work, slope rockfall detection can be further explored and refined. First, the model's performance could be improved by tuning hyperparameters, exploring alternative architectures and integration techniques, and extending the dataset, since a more diverse and wider range of data can enhance the model's robustness and generality. Second, in complex terrain such as mountain slopes, adapting to changing weather conditions, especially haze, remains an unsolved problem that significantly affects the effectiveness and accuracy of image-based target detection. Haze seriously interferes with surveillance systems by blurring images, reducing contrast, and obscuring distant targets. The resulting loss of image detail makes it difficult for conventional image processing and target detection algorithms to extract key features, which in turn reduces the accuracy of real-time monitoring. We will therefore investigate how to enhance target detection capabilities and embedded integration in such adverse environments.

Author Contributions

Conceptualization, A.Y. and H.F.; methodology, A.Y.; software, H.F.; validation, A.Y., H.F. and Y.X.; formal analysis, A.Y.; investigation, Y.X.; resources, L.W.; data curation, H.F.; writing – original draft preparation, A.Y. and H.F.; writing – review and editing, A.Y., H.F., Y.X., L.W. and J.S.; supervision, Y.X.; project administration, Y.X.; funding acquisition, A.Y. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the National Natural Science Foundation of China under research project number 61873249.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Conflicts of Interest

Author Anjun Yu was employed by the company Jiangxi Ganyue Expressway Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Lin, Y.W.; Chiu, C.F.; Chen, L.H.; Ho, C.C. Real-time dynamic intelligent image recognition and tracking system for rockfall disasters. J. Imaging 2024, 10, 78.

- Liu, Z.; Yang, Q.; Gu, X. Assessment of pavement structural conditions and remaining life combining accelerated pavement testing and ground-penetrating radar. Remote Sens. 2023, 15, 4620.

- Jing, C.; Huang, G.; Li, X.; Zhang, Q.; Yang, H.; Zhang, K.; Liu, G. GNSS/accelerometer integrated deformation monitoring algorithm based on sensors adaptive noise modeling. Measurement 2023, 218, 113179.

- Fiolleau, S.; Uhlemann, S.; Wielandt, S.; Dafflon, B. Understanding slow-moving landslide triggering processes using low-cost passive seismic and inclinometer monitoring. J. Appl. Geophys. 2023, 215, 105090.

- Cirillo, D.; Zappa, M.; Tangari, A.C.; Brozzetti, F.; Ietto, F. Rockfall analysis from UAV-based photogrammetry and 3D models of a cliff area. Drones 2024, 8, 31.

- Diwan, T.; Anirudh, G.; Tembhurne, J.V. Object detection using YOLO: Challenges, architectural successors, datasets and applications. Multimed. Tools Appl. 2023, 82, 9243–9275.

- Ghazali, M.; Mohamad, H.; Nasir, M.; Aizzuddin, A.; Aiman, M. Slope Monitoring of a Road Embankment by Using Distributed Optical Fibre Sensing Inclinometer. IOP Conf. Ser. Earth Environ. Sci. 2023, 1249, 012004.

- Chen, M.; Cai, Z.; Zeng, Y.; Yu, Y. Multi-sensor data fusion technology for the early landslide warning system. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 11165–11172.

- Xie, T.; Zhang, C.C.; Shi, B.; Chen, Z.; Zhang, Y. Integrating distributed acoustic sensing and computer vision for real-time seismic location of landslides and rockfalls along linear infrastructure. Landslides 2024, 21, 1941–1959.

- Zhao, Y. OmniAL: A unified CNN framework for unsupervised anomaly localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 3924–3933.

- Cai, J.; Zhang, L.; Dong, J.; Guo, J.; Wang, Y.; Liao, M. Automatic identification of active landslides over wide areas from time-series InSAR measurements using Faster RCNN. Int. J. Appl. Earth Obs. Geoinf. 2023, 124, 103516.

- Noël, F.; Cloutier, C.; Jaboyedoff, M.; Locat, J. Impact-detection algorithm that uses point clouds as topographic inputs for 3D rockfall simulations. Geosciences 2021, 11, 188.

- Williams, J.G.; Rosser, N.J.; Hardy, R.J.; Brain, M.J. The importance of monitoring interval for rockfall magnitude-frequency estimation. J. Geophys. Res. Earth Surf. 2019, 124, 2841–2853.

- Briones-Bitar, J.; Carrión-Mero, P.; Montalván-Burbano, N.; Morante-Carballo, F. Rockfall research: A bibliometric analysis and future trends. Geosciences 2020, 10, 403.

- Wang, L.; Wang, S.; Xie, X.; Deng, Y.; Tian, W. An improved method for rockfall detection and tracking based on video stream. In Proceedings of the IET International Radar Conference (IRC 2023), Chongqing, China, 3–5 December 2023.

- Wang, S.; Jiao, H.; Su, X.; Yuan, Q. An Ensemble Learning Approach With Attention Mechanism for Detecting Pavement Distress and Disaster-Induced Road Damage. IEEE Trans. Intell. Transp. Syst. 2024, 25, 13667–13681.

- Shankar, K.; Akash, S.; Gokulakrishnan, K.; Gokulakrishnan, K.J. YOLOv8-Driven Integration of Advanced Detection Technologies for Enhanced Terrain Safety. In Proceedings of the 2024 5th International Conference on Mobile Computing and Sustainable Informatics (ICMCSI), Lalitpur, Nepal, 18–19 January 2024; IEEE: New York, NY, USA, 2024; pp. 344–351.

- Li, Y.; Hou, Q.; Zheng, Z.; Cheng, M.M.; Yang, J.; Li, X. Large selective kernel network for remote sensing object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 16794–16805.

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10781–10790.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Liu, Y.; Shao, Z.; Hoffmann, N. Global attention mechanism: Retain information to enhance channel-spatial interactions. arXiv 2021, arXiv:2112.05561.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11534–11542.

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning scalable feature pyramid architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7036–7045.

- Hu, S.; Gao, F.; Zhou, X.; Dong, J.; Du, Q. Hybrid Convolutional and Attention Network for Hyperspectral Image Denoising. IEEE Geosci. Remote. Sens. Lett. 2024, 21, 5504005.

- Huang, H.; Chen, Z.; Zou, Y.; Lu, M.; Chen, C.; Song, Y.; Zhang, H.; Yan, F. Channel prior convolutional attention for medical image segmentation. Comput. Biol. Med. 2024, 178, 108784.

- Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Online, 18–24 July 2021; pp. 11863–11874.

- Lv, W.; Zhao, Y.; Chang, Q.; Huang, K.; Wang, G.; Liu, Y. RT-DETRv2: Improved baseline with bag-of-freebies for real-time detection transformer. arXiv 2024, arXiv:2407.17140.

- Wang, S.; Xia, C.; Lv, F.; Shi, Y. RT-DETRv3: Real-time End-to-End Object Detection with Hierarchical Dense Positive Supervision. arXiv 2024, arXiv:2409.08475.

- Mahaur, B.; Mishra, K. Small-object detection based on YOLOv5 in autonomous driving systems. Pattern Recognit. Lett. 2023, 168, 115–122.

- Zhao, H.; Zhang, H.; Zhao, Y. YOLOv7-sea: Object detection of maritime UAV images based on improved YOLOv7. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 233–238.

- Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning what you want to learn using programmable gradient information. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2025; pp. 1–21.

- Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).