Resource Scheduling Algorithm for Edge Computing Networks Based on Multi-Objective Optimization

Abstract

1. Introduction

- To assess the computing power of heterogeneous edge nodes, this paper proposes a hybrid computing power measurement method that combines static and dynamic metrics to establish a unified evaluation system, enhancing the matching efficiency between computing nodes and service requirements.

- To facilitate real-time scheduling of microservices for computing power services in edge computing network scenarios, this paper presents a multi-objective optimization model for microservice scheduling in edge computing networks that aims to minimize both latency and energy consumption. The problem is formulated as a multi-objective Markov decision process (MOMDP) and solved efficiently with multi-objective reinforcement learning (MORL) and proximal policy optimization (PPO), enabling dynamic multi-objective resource allocation.

- We conducted extensive simulation experiments to validate the effectiveness and feasibility of the proposed multi-objective optimization-based resource scheduling algorithm for edge computing networks. The results demonstrate that our algorithm outperforms the baseline algorithms in terms of the total reward combining latency and energy consumption, and that it achieves the best Pareto front and the largest Pareto hypervolume among the compared methods.
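The MOMDP formulation in the second contribution can be illustrated with a minimal sketch. It assumes that each scheduling step returns a reward vector of negated latency and negated energy, that a preference vector w (the "preference vector" in the symbol table) collapses this vector into a scalar by linear scalarization, and that PPO then updates the policy with its standard clipped surrogate objective. The function names, the linear scalarization, and the clipping range `eps=0.2` are illustrative assumptions, not the paper's published implementation.

```python
import math
from typing import Sequence


def scalarize(reward_vec: Sequence[float], w: Sequence[float]) -> float:
    """Linear scalarization: preference-weighted sum of per-objective rewards."""
    if len(reward_vec) != len(w):
        raise ValueError("reward vector and preference vector must have equal length")
    return sum(wi * ri for wi, ri in zip(w, reward_vec))


def step_reward(latency: float, energy: float, w: Sequence[float]) -> float:
    """Latency and energy are costs, so they are negated before weighting."""
    return scalarize([-latency, -energy], w)


def ppo_clip_objective(
    logp_new: Sequence[float],
    logp_old: Sequence[float],
    advantages: Sequence[float],
    eps: float = 0.2,  # ASSUMED clipping range, a common default
) -> float:
    """Mean PPO clipped surrogate objective (to be maximized) over a batch."""
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)  # pi_new(a|s) / pi_old(a|s)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        # Pessimistic bound: keep the smaller of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

With w = (0.7, 0.3), a step with latency 2.0 and energy 5.0 scores -(0.7 × 2.0 + 0.3 × 5.0) = -2.9. A probability ratio of 1.5 with a positive advantage of 1.0 is clipped to 1.2, which is what keeps each PPO update close to the previous policy.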

2. Related Work

2.1. Edge Computing Power Scheduling Strategy

2.2. Multi-Objective Optimization

3. Edge Computing Scheduling Algorithms

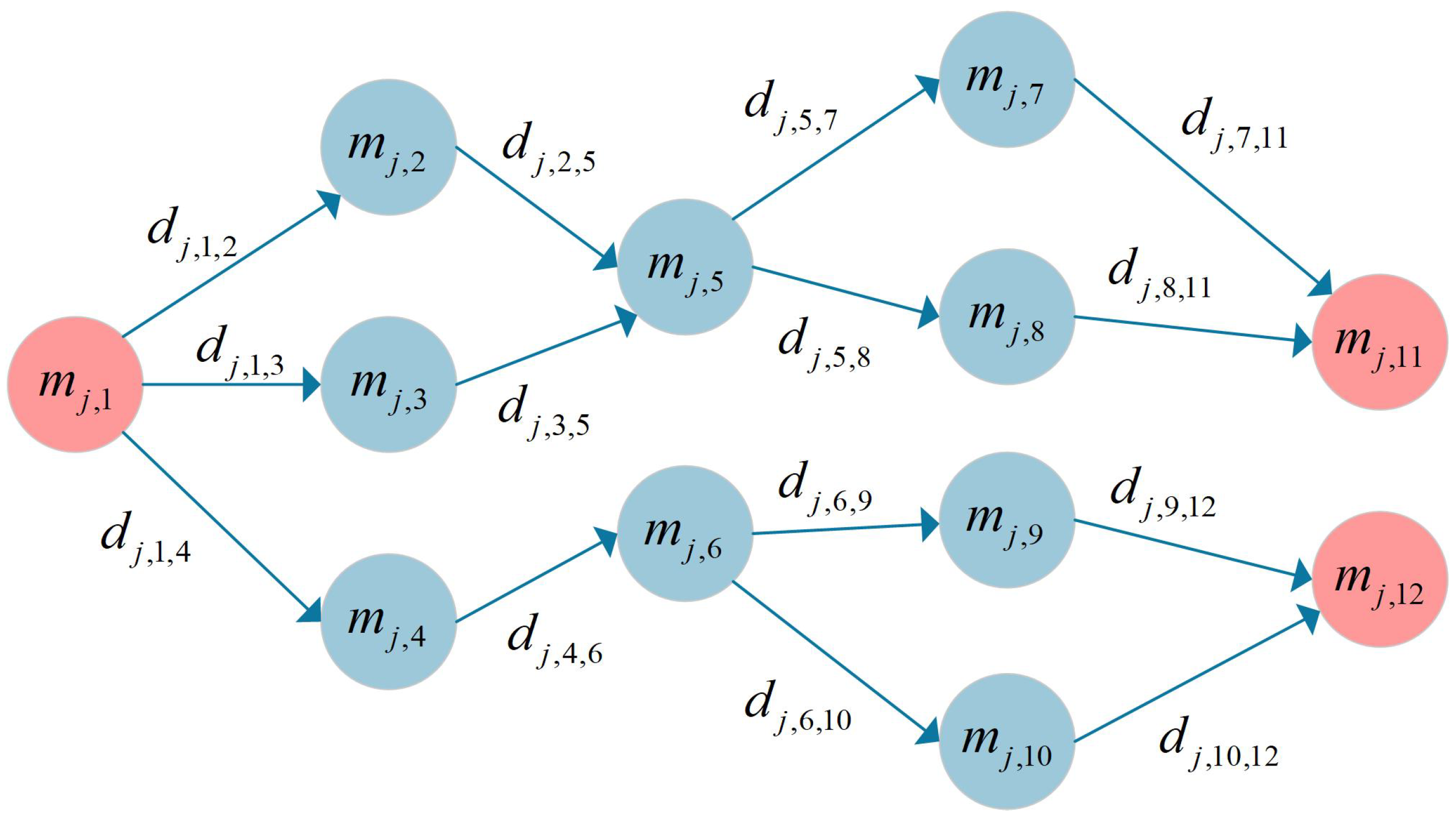

3.1. Microservice Edge Computing Power Network Model

3.2. Hybrid Static–Dynamic Computing Power Measurement

3.3. Multi-Objective Optimization for Resource Scheduling

3.3.1. MOOECN Scheduling Scheme

3.3.2. PPO-Based Scheduling Strategy

3.4. Algorithm Implementation and Analysis

3.4.1. Algorithm Implementation

Algorithm 1 MOOECN

3.4.2. Complexity Analysis

4. Experimentation and Evaluation

- Question 1: How does our multi-objective optimization-based edge computing network resource scheduling algorithm (MOOECN) perform?

- Question 2: How do the different components of MOOECN affect its performance?

- Question 3: How do hyperparameters influence MOOECN?

4.1. Experimental Setup

4.1.1. Simulation Environment

4.1.2. Evaluation Metrics

- Energy consumption: The total energy consumed by the computational tasks during a complete training cycle.

- Latency: The total latency of the computational tasks during a complete training cycle.

- Total reward: The cumulative reward obtained over a complete training cycle.

- Pareto frontier: The set of non-dominated latency–energy trade-offs achieved by the policy under different preferences; no point on the frontier can reduce latency without increasing energy consumption, or vice versa.

- Pareto hypervolume: This metric measures how well the obtained Pareto frontier approximates the optimal one. It is the volume (for two objectives, the area) of the objective space that is dominated by the Pareto frontier and bounded by a reference point; a larger hypervolume indicates a better frontier.
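For two minimization objectives such as latency and energy, both the Pareto frontier and its hypervolume can be computed exactly with one sort and one sweep. The sketch below is a generic illustration: the function names and the reference point are hypothetical, and the reference point and any objective normalization used in the paper are not stated in this section, so the reported hypervolume values are not reproduced here.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (latency, energy), both to be minimized


def pareto_front(points: List[Point]) -> List[Point]:
    """Return the non-dominated points, sorted by increasing latency."""
    front: List[Point] = []
    best_energy = float("inf")
    # After sorting by (latency, energy), a point is non-dominated exactly when
    # its energy is strictly lower than that of every point before it.
    for lat, en in sorted(set(points)):
        if en < best_energy:
            front.append((lat, en))
            best_energy = en
    return front


def hypervolume_2d(points: List[Point], ref: Point) -> float:
    """Area dominated by the frontier and bounded by the reference point."""
    front = [p for p in pareto_front(points) if p[0] < ref[0] and p[1] < ref[1]]
    area = 0.0
    for i, (lat, en) in enumerate(front):
        # Vertical slice from this point's latency to the next point's latency
        # (or to the reference latency for the last point).
        next_lat = front[i + 1][0] if i + 1 < len(front) else ref[0]
        area += (next_lat - lat) * (ref[1] - en)
    return area
```

For the points (1, 4), (2, 2), (3, 3), (4, 1) and the reference point (5, 5), the point (3, 3) is dominated by (2, 2), and the hypervolume is 1 + 6 + 4 = 11.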

4.1.3. Baseline

- Multi-armed bandit-based scheme [49]: This approach formulates the task scheduling problem in edge computing as a contextual multi-armed bandit problem. Each “arm” corresponds to an available edge server or a scheduling action (e.g., local execution, offloading to edge node A/B). At each decision step, the system observes the current task features—such as task size, deadline, and device battery level—as context information and dynamically adjusts its selection policy based on historical rewards (e.g., task completion delay, energy consumption, success rate). This approach exhibits low computational overhead and fast convergence, making it suitable for lightweight edge devices; however, it cannot explicitly model state transitions or optimize long-term cumulative rewards.

- Deep Q-network-based scheme [50]: This scheme formulates task scheduling as a Markov decision process (MDP) and employs a deep Q-network (DQN) to solve for the optimal policy. DQN uses a deep neural network to approximate the Q-function and stabilizes training through experience replay and a target network. This method can handle high-dimensional state spaces and learn strategies that optimize long-term rewards; however, it is sample-inefficient, requiring many environment interactions, and may incur significant training overhead in edge computing environments.

- Greedy algorithm-based scheme [51]: This scheme selects, at each decision step, the action that yields the highest immediate reward based solely on the current state, without considering the impact of future states. For example, it always schedules tasks to the edge node with the current lowest load or the shortest estimated completion time. It is simple to implement and highly responsive, making it suitable for scenarios with stringent real-time requirements. However, due to the lack of consideration of long-term performance, it is prone to getting trapped in local optima and tends to perform unstably, especially in dynamic edge environments with fluctuating workloads or resources.

- Random-based scheme [52]: This scheme selects a scheduling target uniformly at random from all available actions at each time step, without relying on any historical experience or state information. Although seemingly inefficient, it serves as a baseline for evaluating whether other algorithms genuinely outperform random, non-strategic behavior. Moreover, in highly uncertain environments or during the early exploration phase, a random policy helps collect diverse experience data and is commonly used in the initial exploration stage of reinforcement learning algorithms.

- SAC-based approach [53]: This scheme solves the scheduling problem with soft actor-critic (SAC), an off-policy actor-critic deep reinforcement learning algorithm that maximizes the expected cumulative reward together with a policy-entropy bonus, which encourages exploration.

- Heuristic algorithm-based approach [54]: This scheme uses domain-specific rules to rapidly generate approximately optimal scheduling decisions. The rules, crafted from experience, intuition, or problem-specific knowledge, aim to obtain high-quality solutions within a reasonable computation time, which makes the approach especially suitable for computationally hard problems that are difficult to solve exactly (e.g., NP-hard problems). Although it does not guarantee the global optimum, it often performs well in practice and is widely used in combinatorial optimization, scheduling, path planning, resource allocation, and related fields.
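Three of the baselines above reduce to simple action-selection rules, sketched below for illustration. The names `greedy_select`, `random_select`, and `UCB1` are hypothetical, and `UCB1` is a plain, non-contextual bandit used as a simplified stand-in for the contextual multi-armed bandit of [49]: it ignores the task features and balances each arm's mean reward against an exploration bonus.

```python
import math
import random
from typing import List, Sequence


def greedy_select(est_completion_times: Sequence[float]) -> int:
    """Greedy baseline: the node with the lowest estimated completion time now."""
    return min(range(len(est_completion_times)), key=lambda i: est_completion_times[i])


def random_select(num_actions: int, rng: random.Random) -> int:
    """Random baseline: a uniform choice that ignores state and history."""
    return rng.randrange(num_actions)


class UCB1:
    """Non-contextual UCB1 bandit over scheduling actions (a simplification)."""

    def __init__(self, num_actions: int) -> None:
        self.counts: List[int] = [0] * num_actions
        self.values: List[float] = [0.0] * num_actions  # running mean reward per arm
        self.t = 0

    def select(self) -> int:
        self.t += 1
        # Play every arm once before relying on confidence bounds.
        for arm, n in enumerate(self.counts):
            if n == 0:
                return arm
        return max(
            range(len(self.counts)),
            key=lambda a: self.values[a] + math.sqrt(2.0 * math.log(self.t) / self.counts[a]),
        )

    def update(self, action: int, reward: float) -> None:
        # Incremental update of the running mean for the chosen arm.
        self.counts[action] += 1
        self.values[action] += (reward - self.values[action]) / self.counts[action]
```

A typical loop calls `select()`, executes the chosen action, and feeds the observed reward (for example, negative task delay) back through `update()`. Unlike the DQN, SAC, and PPO-based schemes, none of these rules models state transitions, which is the limitation the baseline descriptions point out.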

4.2. Experimental Results

4.2.1. Performance Comparison

4.2.2. Ablation Study

4.2.3. Hyperparameter Analysis

4.3. Evaluation on Real-Life Use Cases

4.3.1. Experimental Environment

4.3.2. Experimental Equipment Information

- Cloud Server: One server equipped with two Intel Xeon Gold 5318Y processors (Intel Corporation, Santa Clara, CA, USA) with a base frequency of 2.1 GHz, six NVIDIA A6000 GPUs (NVIDIA Corporation, Santa Clara, CA, USA), and 8 TB of hard disk storage.

- Edge Servers: Five NVIDIA Jetson AGX Orin Developer Kits (NVIDIA Corporation, Santa Clara, CA, USA). Each unit features a 12-core ARM Cortex-A78AE CPU (Arm Limited, Cambridge, UK) running at 2.0 GHz, 32 GB of LPDDR5 memory, and an integrated Ampere GPU capable of delivering up to 200 TOPS (INT8). Storage is provided via 64 GB microSD cards.

- End Devices: Eight Raspberry Pi 5 boards (Raspberry Pi Ltd, Cambridge, UK). Each board is equipped with a quad-core ARM Cortex-A76 CPU (Arm Limited, Cambridge, UK) running at 2.4 GHz, 8 GB of RAM, and 64 GB of microSD card storage.

4.3.3. Evaluation Metrics

- Average delay: The arithmetic mean of the time interval from the moment a task is submitted by the cloud server to the system until its final execution result is successfully returned to the cloud server, calculated over all successfully completed tasks. This metric reflects the system’s overall responsiveness in processing mixed workloads across heterogeneous edge resources.

- Average energy consumption: The average electrical energy consumed per task, computed as the total energy consumed by all participating edge servers and end devices throughout the entire batch execution period divided by the number of successfully completed tasks. For the five NVIDIA Jetson AGX Orin edge servers, board-level power is read from their built-in power sensors and integrated over the task execution duration. For the eight Raspberry Pi 5 end devices, a calibrated USB power meter records voltage and current during operation; the instantaneous power is computed from these readings and integrated to obtain the energy consumption. This metric measures the system’s energy efficiency in completing individual tasks.

- Task completion rate: This is the percentage of submitted tasks that are successfully completed and return results. A task is considered to have failed if it cannot return a valid result due to reasons such as resource insufficiency or node failure. This metric reflects the robustness and reliability of the scheduling policy in a real-world heterogeneous environment.

- Resource utilization: This is the combined average utilization of CPU and memory resources across all participating nodes (including edge servers and end devices) during task execution. For Jetson nodes, CPU utilization and memory usage are obtained via tegrastats; for Raspberry Pi nodes, the corresponding metrics are collected using the psutil library. The final value is a spatio-temporal average over all nodes and all sampling instants. This metric characterizes how efficiently the system uses heterogeneous computing resources, with a higher value indicating less resource waste.
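Once the power and utilization samples described above have been collected (through the Jetson power sensors, the USB power meter, tegrastats, and psutil), the four metrics reduce to simple post-processing. The sketch below assumes hypothetical log formats (a `TaskRecord` per task, a per-node series of `(timestamp_s, power_W)` samples, and a per-node series of `(cpu_pct, mem_pct)` samples). It uses the trapezoidal rule for the integration the text describes and weights CPU and memory equally in the utilization average. None of these choices is specified in the source.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence, Tuple


@dataclass
class TaskRecord:
    submit_time: float            # s, when the cloud server submits the task
    return_time: Optional[float]  # s, when the result returns; None if the task failed


def energy_joules(samples: Sequence[Tuple[float, float]]) -> float:
    """Integrate (timestamp_s, power_W) samples with the trapezoidal rule."""
    total = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        total += 0.5 * (p0 + p1) * (t1 - t0)
    return total


def summarize(
    tasks: List[TaskRecord],
    power_logs: List[Sequence[Tuple[float, float]]],
    util_logs: List[Sequence[Tuple[float, float]]],
) -> Dict[str, float]:
    """Compute the four real-deployment metrics from already-collected logs."""
    nan = float("nan")
    done = [t for t in tasks if t.return_time is not None]
    n_done = len(done)
    avg_delay = sum(t.return_time - t.submit_time for t in done) / n_done if n_done else nan
    # Energy of all nodes over the whole batch, divided by completed tasks.
    total_energy = sum(energy_joules(series) for series in power_logs)
    avg_energy = total_energy / n_done if n_done else nan
    completion = 100.0 * n_done / len(tasks) if tasks else nan
    # Spatio-temporal average over every node and sampling instant;
    # CPU and memory are weighted equally (an assumption).
    pooled = [(cpu + mem) / 2.0 for series in util_logs for cpu, mem in series]
    utilization = sum(pooled) / len(pooled) if pooled else nan
    return {
        "avg_delay_s": avg_delay,
        "avg_energy_J": avg_energy,
        "completion_rate_pct": completion,
        "utilization_pct": utilization,
    }
```

Failed tasks count toward the completion rate but are excluded from the delay average, which matches the definitions above, where average delay is taken over successfully completed tasks only.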

4.3.4. Experimental Results

4.4. Practical Deployment Challenges and Scalability Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Liu, X.; Sun, C.; Zhou, M.; Wu, C.; Peng, B.; Li, P. Reinforcement Learning-Based Multislot Double-Threshold Spectrum Sensing with Bayesian Fusion for Industrial Big Spectrum Data. IEEE Trans. Ind. Inform. 2021, 17, 3391–3400.

- Na, Z.; Li, B.; Liu, X.; Wang, J.; Zhang, M.; Liu, Y.; Mao, B. UAV-Based Wide-Area Internet of Things: An Integrated Deployment Architecture. IEEE Netw. 2021, 35, 122–128.

- Zhou, W.; Xia, J.; Zhou, F.; Fan, L.; Lei, X.; Nallanathan, A.; Karagiannidis, G.K. Profit Maximization for Cache-Enabled Vehicular Mobile Edge Computing Networks. IEEE Trans. Veh. Technol. 2023, 72, 13793–13798.

- Rajput, K.R.; Kulkarni, C.D.; Cho, B.; Wang, W.; Kim, I.K. EdgeFaaSBench: Benchmarking Edge Devices Using Serverless Computing. In Proceedings of the 2022 IEEE International Conference on Edge Computing and Communications (EDGE), Barcelona, Spain, 11–15 July 2022; pp. 93–103.

- Kiani, A.; Ansari, N. Edge Computing Aware NOMA for 5G Networks. IEEE Internet Things J. 2018, 5, 1299–1306.

- Du, Z.; Li, Z.; Duan, X.; Wang, J. Service Information Informing in Computing Aware Networking. In Proceedings of the 2022 International Conference on Service Science (ICSS), Shenzhen, China, 2–4 July 2022; pp. 125–130.

- Yao, H.; Duan, X.; Fu, Y. A computing-aware routing protocol for Computing Force Network. In Proceedings of the 2022 International Conference on Service Science (ICSS), Zhuhai, China, 13–15 May 2022; pp. 137–141.

- Wu, W.; Zhou, F.; Hu, R.Q.; Wang, B. Energy-Efficient Resource Allocation for Secure NOMA-Enabled Mobile Edge Computing Networks. IEEE Trans. Commun. 2020, 68, 493–505.

- Chen, L.; Fan, L.; Lei, X.; Duong, T.Q.; Nallanathan, A.; Karagiannidis, G.K. Relay-Assisted Federated Edge Learning: Performance Analysis and System Optimization. IEEE Trans. Commun. 2023, 71, 3387–3401.

- Zhao, R.; Zhu, F.; Tang, M.; He, L. Profit maximization in cache-aided intelligent computing networks. Phys. Commun. 2023, 58, 102065.

- Bertsekas, D. Dynamic Programming and Optimal Control: Volume I; Athena Scientific: Nashua, NH, USA, 2012; Volume 4.

- Assila, B.; Kobbane, A.; El Koutbi, M. A Cournot Economic Pricing Model for Caching Resource Management in 5G Wireless Networks. In Proceedings of the 2018 14th International Wireless Communications & Mobile Computing Conference (IWCMC), Limassol, Cyprus, 25–29 June 2018; pp. 1345–1350.

- Ye, Y.; Shi, L.; Chu, X.; Hu, R.Q.; Lu, G. Resource Allocation in Backscatter-Assisted Wireless Powered MEC Networks with Limited MEC Computation Capacity. IEEE Trans. Wirel. Commun. 2022, 21, 10678–10694.

- Dinh, T.Q.; Tang, J.; La, Q.D.; Quek, T.Q.S. Offloading in Mobile Edge Computing: Task Allocation and Computational Frequency Scaling. IEEE Trans. Commun. 2017, 65, 3571–3584.

- Zheng, T.; Wan, J.; Zhang, J.; Jiang, C. Deep reinforcement learning-based workload scheduling for edge computing. J. Cloud Comput. 2022, 11, 3.

- Zhang, Y.; Zhou, Z.; Shi, Z.; Meng, L.; Zhang, Z. Online Scheduling Optimization for DAG-Based Requests Through Reinforcement Learning in Collaboration Edge Networks. IEEE Access 2020, 8, 72985–72996.

- Tuli, S.; Ilager, S.; Ramamohanarao, K.; Buyya, R. Dynamic Scheduling for Stochastic Edge-Cloud Computing Environments Using A3C Learning and Residual Recurrent Neural Networks. IEEE Trans. Mob. Comput. 2022, 21, 940–954.

- ETSI. Mobile Edge Computing (MEC); Framework and Reference Architecture; Technical Report ETSI GS MEC 003 V3.1.1; European Telecommunications Standards Institute (ETSI): Valbonne, France, 2022; Available online: https://www.etsi.org/deliver/etsi_gs/MEC/001_099/003/03.01.01_60/gs_MEC003v030101p.pdf (accessed on 5 October 2025).

- Huawei. Computing Power Network (CPN) White Paper. Technical Report, 2023. Available online: https://e.huawei.com/en/ict-insights (accessed on 5 October 2025).

- Amazon Web Services. AWS Panorama Developer Guide. 2023. Available online: https://docs.aws.amazon.com/panorama/latest/dev/ (accessed on 5 October 2025).

- Ren, J.; Lei, X.; Peng, Z.; Tang, X.; Dobre, O.A. RIS-Assisted Cooperative NOMA with SWIPT. IEEE Wirel. Commun. Lett. 2023, 12, 446–450.

- Lan, D.; Taherkordi, A.; Eliassen, F.; Liu, L.; Delbruel, S.; Dustdar, S.; Yang, Y. Task Partitioning and Orchestration on Heterogeneous Edge Platforms: The Case of Vision Applications. IEEE Internet Things J. 2022, 9, 7418–7432.

- Chen, Z.; Hu, J.; Chen, X.; Hu, J.; Zheng, X.; Min, G. Computation Offloading and Task Scheduling for DNN-Based Applications in Cloud-Edge Computing. IEEE Access 2020, 8, 115537–115547.

- Pu, L.; Chen, X.; Xu, J.; Fu, X. D2D Fogging: An Energy-Efficient and Incentive-Aware Task Offloading Framework via Network-assisted D2D Collaboration. IEEE J. Sel. Areas Commun. 2016, 34, 3887–3901.

- Chen, X.; Jiao, L.; Li, W.; Fu, X. Efficient Multi-User Computation Offloading for Mobile-Edge Cloud Computing. IEEE/ACM Trans. Netw. 2016, 24, 2795–2808.

- Wang, X.; Ning, Z.; Guo, S. Multi-Agent Imitation Learning for Pervasive Edge Computing: A Decentralized Computation Offloading Algorithm. IEEE Trans. Parallel Distrib. Syst. 2021, 32, 411–425.

- Chen, X.; Zhang, J.; Lin, B.; Chen, Z.; Wolter, K.; Min, G. Energy-Efficient Offloading for DNN-Based Smart IoT Systems in Cloud-Edge Environments. IEEE Trans. Parallel Distrib. Syst. 2022, 33, 683–697.

- Gao, H.; Wang, X.; Wei, W.; Al-Dulaimi, A.; Xu, Y. Com-DDPG: Task Offloading Based on Multiagent Reinforcement Learning for Information-Communication-Enhanced Mobile Edge Computing in the Internet of Vehicles. IEEE Trans. Veh. Technol. 2024, 73, 348–361.

- Peng, Q.; Wu, C.; Xia, Y.; Ma, Y.; Wang, X.; Jiang, N. DoSRA: A Decentralized Approach to Online Edge Task Scheduling and Resource Allocation. IEEE Internet Things J. 2022, 9, 4677–4692.

- Phuc, L.H.; Phan, L.A.; Kim, T. Traffic-Aware Horizontal Pod Autoscaler in Kubernetes-Based Edge Computing Infrastructure. IEEE Access 2022, 10, 18966–18977.

- Liu, T.; Ni, S.; Li, X.; Zhu, Y.; Kong, L.; Yang, Y. Deep Reinforcement Learning Based Approach for Online Service Placement and Computation Resource Allocation in Edge Computing. IEEE Trans. Mob. Comput. 2023, 22, 3870–3881.

- Zhou, H.; Jiang, K.; Liu, X.; Li, X.; Leung, V.C.M. Deep Reinforcement Learning for Energy-Efficient Computation Offloading in Mobile-Edge Computing. IEEE Internet Things J. 2022, 9, 1517–1530.

- Yang, Y.; Wang, S. EdgeOPT: A competitive algorithm for online parallel task scheduling with latency guarantee in mobile edge computing. IEEE Trans. Commun. 2024, 72, 7077–7092.

- Khoshvaght, P.; Haider, A.; Rahmani, A.M.; Rajabi, S.; Gharehchopogh, F.S.; Lansky, J.; Hosseinzadeh, M. A Self-Supervised Deep Reinforcement Learning for Zero-Shot Task Scheduling in Mobile Edge Computing Environments. Ad Hoc Netw. 2025, 178, 103977.

- Long, L.; Liu, Z.; Shen, J.; Jiang, Y. SecDS: A security-aware DAG task scheduling strategy for edge computing. Future Gener. Comput. Syst. 2025, 166, 107627.

- Xie, R.; Feng, L.; Tang, Q.; Zhu, H.; Huang, T.; Zhang, R.; Yu, F.R.; Xiong, Z. Priority-aware task scheduling in computing power network-enabled edge computing systems. IEEE Trans. Netw. Sci. Eng. 2025, 12, 3191–3205.

- Mokhtari, A.; Hossen, M.A.; Jamshidi, P.; Salehi, M.A. FELARE: Fair Scheduling of Machine Learning Tasks on Heterogeneous Edge Systems. In Proceedings of the 2022 IEEE 15th International Conference on Cloud Computing (CLOUD), Barcelona, Spain, 10–16 July 2022; pp. 459–468.

- Zhang, M.; Cao, J.; Yang, L.; Zhang, L.; Sahni, Y.; Jiang, S. ENTS: An Edge-native Task Scheduling System for Collaborative Edge Computing. In Proceedings of the 2022 IEEE/ACM 7th Symposium on Edge Computing (SEC), Seattle, WA, USA, 5–8 December 2022; pp. 149–161.

- Ma, T.; Wang, M.; Zhao, W. Task scheduling considering multiple constraints in mobile edge computing. In Proceedings of the 2021 International Conference on Intelligent Computing, Automation and Systems (ICICAS), Chongqing, China, 29–31 December 2021; pp. 43–47.

- Gong, Q.; Xia, Y.; Zou, J.; Hou, Z.; Liu, Y. Enhancing Dynamic Constrained Multi-Objective Optimization with Multi-Centers Based Prediction. IEEE Trans. Evol. Comput. 2025.

- Zheng, X.L.; Wang, L. A Collaborative Multiobjective Fruit Fly Optimization Algorithm for the Resource Constrained Unrelated Parallel Machine Green Scheduling Problem. IEEE Trans. Syst. Man Cybern. Syst. 2018, 48, 790–800.

- Wang, F.; Sun, J.; Gan, X.; Gong, D.; Wang, G.; Guo, Y. A Dynamic Interval Multi-Objective Evolutionary Algorithm Based on Multi-Task Learning and Inverse Mapping. IEEE Trans. Evol. Comput. 2025.

- Li, J.; Shang, Y.; Qin, M.; Yang, Q.; Cheng, N.; Gao, W.; Kwak, K.S. Multiobjective Oriented Task Scheduling in Heterogeneous Mobile Edge Computing Networks. IEEE Trans. Veh. Technol. 2022, 71, 8955–8966.

- Pan, L.; Liu, X.; Jia, Z.; Xu, J.; Li, X. A Multi-Objective Clustering Evolutionary Algorithm for Multi-Workflow Computation Offloading in Mobile Edge Computing. IEEE Trans. Cloud Comput. 2023, 11, 1334–1351.

- Li, L.; Qiu, Q.; Xiao, Z.; Lin, Q.; Gu, J.; Ming, Z. A Two-Stage Hybrid Multi-Objective Optimization Evolutionary Algorithm for Computing Offloading in Sustainable Edge Computing. IEEE Trans. Consum. Electron. 2024, 70, 735–746.

- Al-Bakhrani, A.A.; Li, M.; Obaidat, M.S.; Amran, G.A. MOALF-UAV-MEC: Adaptive Multiobjective Optimization for UAV-Assisted Mobile Edge Computing in Dynamic IoT Environments. IEEE Internet Things J. 2025, 12, 20736–20756.

- Qiu, Q.; Ye, Y.; Li, L.; Xiao, Z.; Lin, Q.; Ming, Z. Joint computation offloading and service caching in Vehicular Edge Computing via a dynamic coevolutionary multiobjective optimization algorithm. Expert Syst. Appl. 2025, 284, 127821.

- Sunny, A. Joint Scheduling and Sensing Allocation in Energy Harvesting Sensor Networks with Fusion Centers. IEEE J. Sel. Areas Commun. 2016, 34, 3577–3589.

- Alipour-Fanid, A.; Dabaghchian, M.; Arora, R.; Zeng, K. Multiuser Scheduling in Centralized Cognitive Radio Networks: A Multi-Armed Bandit Approach. IEEE Trans. Cogn. Commun. Netw. 2022, 8, 1074–1091.

- Nandhakumar, A.R.; Baranwal, A.; Choudhary, P.; Golec, M.; Gill, S.S. EdgeAISim: A toolkit for simulation and modelling of AI models in edge computing environments. Meas. Sens. 2024, 31, 100939.

- Wang, Z.Y.; Pan, Q.K.; Gao, L.; Jing, X.L.; Sun, Q. A cooperative iterated greedy algorithm for the distributed flowshop group robust scheduling problem with uncertain processing times. Swarm Evol. Comput. 2023, 79, 101320.

- Burra, R.; Singh, C.; Kuri, J. Service scheduling for random requests with fixed waiting costs. Perform. Eval. 2022, 155, 102297.

- Liu, T.; Tang, L.; Wang, W.; Chen, Q.; Zeng, X. Digital-Twin-Assisted Task Offloading Based on Edge Collaboration in the Digital Twin Edge Network. IEEE Internet Things J. 2022, 9, 1427–1444.

- Hu, M.; Zhou, M.; Zhang, Z.; Zhang, L.; Li, Y. A novel disjunctive-graph-based meta-heuristic approach for multi-objective resource-constrained project scheduling problem with multi-skilled staff. Swarm Evol. Comput. 2025, 95, 101939.

| Algorithm Name | Algorithm Type | Task Type | Multi-Objective | Dynamic Scheduling | Lightweight |
|---|---|---|---|---|---|
| EDGEVISION [21] | Heuristic Rules + Heterogeneous Resource Abstraction Model | DAG Tasks | 🗸 | ✘ | 🗸 |
| COTDCEG [23] | Greedy + Genetic Algorithm | DNN Tasks | 🗸 | ✘ | 🗸 |
| D2D Fogging [24] | Online Algorithm Based on Stochastic Optimization | Mobility-Aware Tasks | 🗸 | 🗸 | 🗸 |
| EMCOM [25] | Game Theory + Convex Optimization | Mobility-Aware Tasks | 🗸 | ✘ | 🗸 |
| SPSO-GA [27] | RL + Q-learning | DNN Tasks | 🗸 | 🗸 | 🗸 |
| Com-DDPG [28] | MADDPG | IoV Tasks | 🗸 | 🗸 | ✘ |
| DoSRA [29] | Distributed Online RL | Heterogeneous Tasks | 🗸 | 🗸 | 🗸 |
| THPA [30] | Traffic-Aware Algorithm | Microservice Tasks | 🗸 | 🗸 | 🗸 |
| PDQN [31] | DQN + Policy Gradient | Microservice Tasks | 🗸 | 🗸 | 🗸 |
| DRLMC [32] | DQN + Policy Gradient | Mobility-Aware Tasks | 🗸 | 🗸 | ✘ |
| EdgeOPT [33] | Online Competitive Algorithm | Monolithic Tasks | ✘ | 🗸 | 🗸 |
| ZSTS-MEC [34] | SAC + Self-Supervised Learning | General Task Scheduling | 🗸 | 🗸 | ✘ |
| SecDS [35] | Heuristic Algorithm | DAG Tasks | 🗸 | ✘ | 🗸 |
| PATD3 [36] | TD3 | General Task Scheduling | 🗸 | 🗸 | ✘ |

| Symbol | Definition |
|---|---|
| | Set of edge servers |
| | Resource set of edge server i |
| S | Set of computing services |
| | Set of microservices for computing service |
| | The i-th microservice of computing service |
| | Microservice dependency graph of computing service |
| | Set of all nodes in directed acyclic graph j |
| | Set of all edges in directed acyclic graph j |
| | Set of all edges incident to microservice |
| M | Set of tasks pending allocation at time t |
| | Transmission rate of microservice m offloaded to edge server |
| | Transmission delay of microservice m |
| | Energy consumption for offloading microservice m |
| | Total computation energy consumption of microservice m |
| | Computation delay of microservice m |
| | Total delay of microservice m |
| | Total energy consumption of microservice m |
| | Value of the j-th computing power metric at the i-th computing node |
| | Relative importance of the j-th metric at the i-th computing node |
| | Information entropy value of the j-th indicator |
| | Information utility value of the j-th indicator |
| | Weight of the j-th indicator |
| | Comprehensive computing power evaluation value of node i |
| | Comprehensive computing capability of a computing node |
| | Total computational resources |
| | Total storage resources |
| | Remaining resource quantity |
| | Remaining storage space |
| | Computing power quintuple of node i |
| w | Preference vector |
| | State vector of task m offloaded to edge server |
| | State vector of task m offloaded to terminal u |
| | Replay buffer for preference w |
| | Policy parameters for preference w |
| | Binary offloading variable: equal to 1 if task m is offloaded to the edge server and 0 otherwise |
| | Byte size of microservice m |
| | Floating-point operation count of microservice m |
| | Floating-point computing capacity of the edge server |
| | Floating-point computing capacity of the end device |
| | Energy efficiency ratio of the end device |
| | Energy efficiency ratio of the edge server |
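Several rows of the symbol table (the relative importance of the j-th metric at node i, the information entropy and information utility of the j-th indicator, the indicator weight, and the comprehensive evaluation value of node i) match the textbook entropy weight method, which the hybrid static–dynamic computing power measurement of Section 3.2 appears to use to weight its indicators. The sketch below assumes that all indicator values are positive and larger-is-better. The function names and the final weighted-sum score are illustrative assumptions, since the paper's exact formulas are not reproduced in this section.

```python
import math
from typing import List, Sequence


def entropy_weights(x: Sequence[Sequence[float]]) -> List[float]:
    """Entropy weight method over an n-node by m-indicator matrix of positive values."""
    n, m = len(x), len(x[0])
    if n < 2:
        raise ValueError("need at least two computing nodes")
    k = 1.0 / math.log(n)  # normalizes entropy into [0, 1]
    utilities = []
    for j in range(m):
        col = [row[j] for row in x]
        s = sum(col)
        if s <= 0:
            raise ValueError(f"indicator {j} must have a positive column sum")
        p = [v / s for v in col]  # relative importance p_ij
        e = -k * sum(pij * math.log(pij) for pij in p if pij > 0)  # entropy e_j, 0*ln(0) = 0
        utilities.append(max(0.0, 1.0 - e))  # information utility d_j, clamped for rounding
    total = sum(utilities)
    if total < 1e-12:  # every indicator is uninformative: fall back to equal weights
        return [1.0 / m] * m
    return [d / total for d in utilities]  # weight w_j


def node_scores(x: Sequence[Sequence[float]], weights: Sequence[float]) -> List[float]:
    """Composite score per node: weighted sum of its p_ij values (an assumption)."""
    col_sums = [sum(row[j] for row in x) for j in range(len(weights))]
    return [sum(w * row[j] / col_sums[j] for j, w in enumerate(weights)) for row in x]
```

An indicator that takes the same value on every node has maximal entropy and therefore receives (almost) zero weight, so the ranking is driven by the indicators that actually discriminate between heterogeneous nodes.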

| Symbol | Quantity | Value |
|---|---|---|
| | Total number of steps in one training cycle | 100 |
| | Time per step | 1 s |
| U | Number of terminals | 10 |
| | Number of edge servers | 20 |
| | CPU frequency of terminals | 2 ± 0.5 GHz |
| | CPU frequency of edge servers | 4 ± 1 GHz |
| C | Channel bandwidth | 16.6 MHz |
| L | Task size | 0.1 MB–100 MB |
| | Offloading power | 0.01 W |
| | Noise power spectral density | −174 dBm/Hz |
| D | Distance between terminals and edge servers | 50–500 m |
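The simulation parameters above are enough to sketch the per-microservice transmission and computation costs named in the symbol table, using the Shannon capacity formula for the uplink rate. Two ingredients are not given in this section and are labeled as assumptions: the path-loss model (the sketch borrows the widely used macro-cell expression 128.1 + 37.6 log10(d[km]) dB) and the interpretation of the energy efficiency ratio as FLOPs per joule. All function names are hypothetical.

```python
import math
from typing import Tuple

BANDWIDTH_HZ = 16.6e6          # channel bandwidth C from the parameter table
TX_POWER_W = 0.01              # offloading power from the parameter table
NOISE_PSD_DBM_PER_HZ = -174.0  # noise power spectral density from the parameter table


def dbm_to_watts(dbm: float) -> float:
    return 10.0 ** ((dbm - 30.0) / 10.0)


def channel_gain(distance_m: float) -> float:
    """ASSUMED path loss (not from the source): 128.1 + 37.6*log10(d in km) dB."""
    loss_db = 128.1 + 37.6 * math.log10(distance_m / 1000.0)
    return 10.0 ** (-loss_db / 10.0)


def uplink_rate_bps(distance_m: float) -> float:
    """Shannon rate B * log2(1 + P * g / (N0 * B))."""
    noise_w = dbm_to_watts(NOISE_PSD_DBM_PER_HZ) * BANDWIDTH_HZ
    snr = TX_POWER_W * channel_gain(distance_m) / noise_w
    return BANDWIDTH_HZ * math.log2(1.0 + snr)


def offload_cost(
    size_bytes: float,
    flops: float,
    distance_m: float,
    server_flops_per_s: float,
    server_flops_per_joule: float,
) -> Tuple[float, float]:
    """Return (total delay in s, total energy in J) for offloading one microservice."""
    t_tx = 8.0 * size_bytes / uplink_rate_bps(distance_m)  # transmission delay
    e_tx = TX_POWER_W * t_tx                               # offloading energy
    t_comp = flops / server_flops_per_s                    # computation delay
    e_comp = flops / server_flops_per_joule                # ASSUMED: ratio = FLOPs per joule
    return t_tx + t_comp, e_tx + e_comp
```

Here `server_flops_per_s` and `server_flops_per_joule` are inputs the caller must supply, because the table gives CPU frequencies (for example, 4 ± 1 GHz for edge servers) rather than FLOP rates. Over the 50–500 m distance range, the rate falls sharply with distance, so both transmission delay and offloading energy grow for distant terminals.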

| Algorithm | Greedy | Heuristics | MAB | Random | SAC | DQN | MOOECN |
|---|---|---|---|---|---|---|---|
| Pareto Hypervolume | 24.22 | 122.63 | 390.39 | 17.34 | 209.62 | 1323.35 | 1545.55 |

| Algorithm | Greedy | Heuristics | MAB | Random | SAC | DQN |
|---|---|---|---|---|---|---|
| p-value | ≈6 | ≈1 | ≈4 | ≈5 | ≈2 | ≈8 |

| Maximum Task Size | 50 MB | 100 MB | 150 MB |
|---|---|---|---|
| Pareto Hypervolume | 3609.25 | 3303.88 | 2252 |

| Algorithm | Average Delay (s) | Average Energy Consumption (J) | Task Completion Rate (%) | Resource Utilization (%) |
|---|---|---|---|---|
| Random | | | | |
| Greedy | | | | |
| MAB | | | | |
| DQN | | | | |
| SAC | | | | |
| Heuristic | | | | |
| MOOECN | | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, W.; Zhu, J.; Li, X.; Fei, Y.; Wang, H.; Liu, S.; Zheng, X.; Ji, Y. Resource Scheduling Algorithm for Edge Computing Networks Based on Multi-Objective Optimization. Appl. Sci. 2025, 15, 10837. https://doi.org/10.3390/app151910837

Liu W, Zhu J, Li X, Fei Y, Wang H, Liu S, Zheng X, Ji Y. Resource Scheduling Algorithm for Edge Computing Networks Based on Multi-Objective Optimization. Applied Sciences. 2025; 15(19):10837. https://doi.org/10.3390/app151910837

Chicago/Turabian Style: Liu, Wenrui, Jiale Zhu, Xiangming Li, Yichao Fei, Hai Wang, Shangdong Liu, Xiaoyao Zheng, and Yimu Ji. 2025. "Resource Scheduling Algorithm for Edge Computing Networks Based on Multi-Objective Optimization" Applied Sciences 15, no. 19: 10837. https://doi.org/10.3390/app151910837

APA Style: Liu, W., Zhu, J., Li, X., Fei, Y., Wang, H., Liu, S., Zheng, X., & Ji, Y. (2025). Resource Scheduling Algorithm for Edge Computing Networks Based on Multi-Objective Optimization. Applied Sciences, 15(19), 10837. https://doi.org/10.3390/app151910837