1. Introduction

With the rapid development of artificial intelligence and big data technologies, affective computing has become an important research direction in fields such as human-computer interaction, education, healthcare, and public opinion analysis. Compared with sentiment recognition based on a single data source, Multimodal Sentiment Analysis (MSA) jointly exploits text, speech, and visual information to capture more comprehensive and fine-grained emotional cues, which improves recognition accuracy and robustness. However, modalities differ inherently: they are heterogeneous in how they express emotion, their information can be redundant or conflicting, and real-world data commonly suffer from missing modalities and noise. These problems severely limit the performance of multimodal sentiment analysis models.

To alleviate these issues, researchers have recently proposed various cross-modal alignment and fusion methods, including attention-based modality interaction, graph network-based feature modeling, and Transformer-based multimodal pre-training models. These methods have, to some extent, improved complementarity and fusion effectiveness among modalities. Nevertheless, current research still faces two key challenges: (1) the semantic expressive power of non-verbal modalities (such as speech and vision) is limited, so the fusion process depends heavily on the text modality, yet effective mechanisms for enhancing the non-verbal modalities are lacking; (2) traditional fusion methods, which often rely on simple weighting or concatenation, struggle to suppress noise and to selectively complete key information, which ultimately degrades overall sentiment recognition performance.

To address these challenges, this paper proposes a Modality-Enhanced Multimodal Integration Model (MEMMI). The novelty of MEMMI lies in achieving modality enhancement and cross-modal fusion within a unified architecture, which advances existing multimodal sentiment analysis approaches in three respects:

First, a modality enhancement module is designed that leverages the semantic guidance capability of the text modality through a multihead attention mechanism and a dynamic routing strategy, effectively strengthening the feature representations of speech and visual modalities.

Second, a gated fusion mechanism is proposed that selectively injects speech and visual features into the dominant text modality, completing missing information while suppressing noise, in contrast to the simple weighting or concatenation used by prior fusion strategies.

Finally, a combined attention fusion module is constructed and integrated with a multiscale encoder to capture cross-modal semantic interactions at both global and local levels, leading to more robust and efficient sentiment recognition.

The current evaluation, however, is limited to English and Chinese datasets; cross-cultural validation should be explored in future work.

The rest of this paper is organized as follows.

Section 2 reviews related work and highlights existing limitations.

Section 3 presents the proposed MEMMI model in detail.

Section 4 describes the experimental setup, datasets, evaluation metrics, ablation experiments, and experimental results.

Section 5 concludes the paper and outlines future research directions.

2. Related Works

Research on multimodal sentiment analysis has evolved from early machine learning methods based on feature concatenation to complex deep learning models for modality interaction and fusion. These works fall mainly into two groups: methods focused on modality fusion and methods centered on modality representation. In the area of modality fusion, model architectures are often built on attention mechanisms. For example, in 2017, Vaswani et al. proposed the Transformer, which is based on self-attention [1]; it abandoned recurrent and convolutional structures in favor of capturing global dependencies between inputs and outputs, fundamentally changing the paradigm of sequence modeling. Building on this, Tsai et al. created the classic MulT model [2], which uses cross-modal attention to fuse modalities. This design allows it to process unaligned multimodal data directly, significantly improving its performance on such data, and it has been widely adopted by subsequent researchers as a common approach to modality fusion.

Soon after, Rahman et al. used BERT features to design the MAG component [3], which uses a conditional self-attention mechanism to let BERT [4] and XLNet [5] adapt seamlessly to multimodal input during fine-tuning; it became a new approach to modality fusion in the field. Tang et al. proposed a bidirectional dynamic routing mechanism that uses bidirectional attention to capture fine-grained multimodal sentiment, enhancing the extraction of emotional context [6]. Huang et al. introduced a modality binding mechanism and strengthened cross-modal feature interaction with a fine-grained convolutional module, addressing the loss of fine-grained modal features during fusion [7]. Kim et al. proposed the AOBERT model, which avoids the information loss of traditional multimodal methods by processing the text, visual, and speech modalities simultaneously in a single network [8]. Han et al., noting that existing methods do not fully account for the unequal importance of modalities, proposed a pairwise modality fusion framework that uses a gating mechanism to balance the influence of different modalities on sentiment polarity judgment [9]. Cai et al. proposed a unimodal feature extraction network (UFEN) to extract unimodal features with stronger representation capabilities and then introduced a multitask fusion network (MTFN) to improve the correlation and fusion effect among modalities; the model uses multilayer feature extraction, attention mechanisms, and Transformers to mine latent relationships between features [10].

In the area of modality representation, research primarily divides modal information into modality-shared and modality-specific representations. This approach originated with the MISA framework proposed by Hazarika et al. in 2020 [11], which maps each modality’s representation into a shared modality-invariant subspace and a unique modality-specific subspace, learning modality-invariant and modality-specific representations that improve the model’s understanding of different modalities. Subsequently, Wu et al. designed a text-centered shared-private framework for feature fusion that distinguishes shared and private features across the text, visual, and speech modalities [12]. Xu et al. proposed SATI, a multimodal sentiment decoding model based on time-invariant learning, to address excessive noise and information redundancy in the visual modality [13]. This model uses modality-invariant representations to guide interactions between modalities while maintaining the consistency of time-series features. Lai et al. argued that existing modality representation methods in multimodal sentiment analysis do not effectively distinguish and extract the information shared between modalities [14], and designed a deep modality-shared information learning module to guide the model in learning cross-modal shared features. Wang et al., considering the limitations of modality decomposition and the problem of modality heterogeneity, introduced a policy and critic model from reinforcement learning to dynamically adjust the importance of each modality’s specific representation [15]. Zhou et al., building on modality decomposition, constructed a language-focused attractor that strengthens the representation of the language modality by attracting complementary information from other modalities, improving overall performance [16].

Despite the significant progress made by the aforementioned methods in multimodal sentiment analysis, two prominent issues remain. On the one hand, the semantic expressive power of non-verbal modalities (such as speech and vision) is limited, often requiring semantic alignment with the text modality, but existing methods lack effective enhancement mechanisms. On the other hand, the fusion process is still insufficient in handling modality redundancy and noise, which can easily lead to interference from irrelevant information and affect sentiment recognition. Based on this, the Modality-Enhanced Multimodal Integration Model (MEMMI) proposed in this paper aims to strengthen the feature representation of non-verbal modalities and achieve noise suppression and information completion through a semantically guided modality enhancement and a gated fusion mechanism, thereby improving the overall sentiment analysis performance.

3. Methods

3.1. Overall Architecture

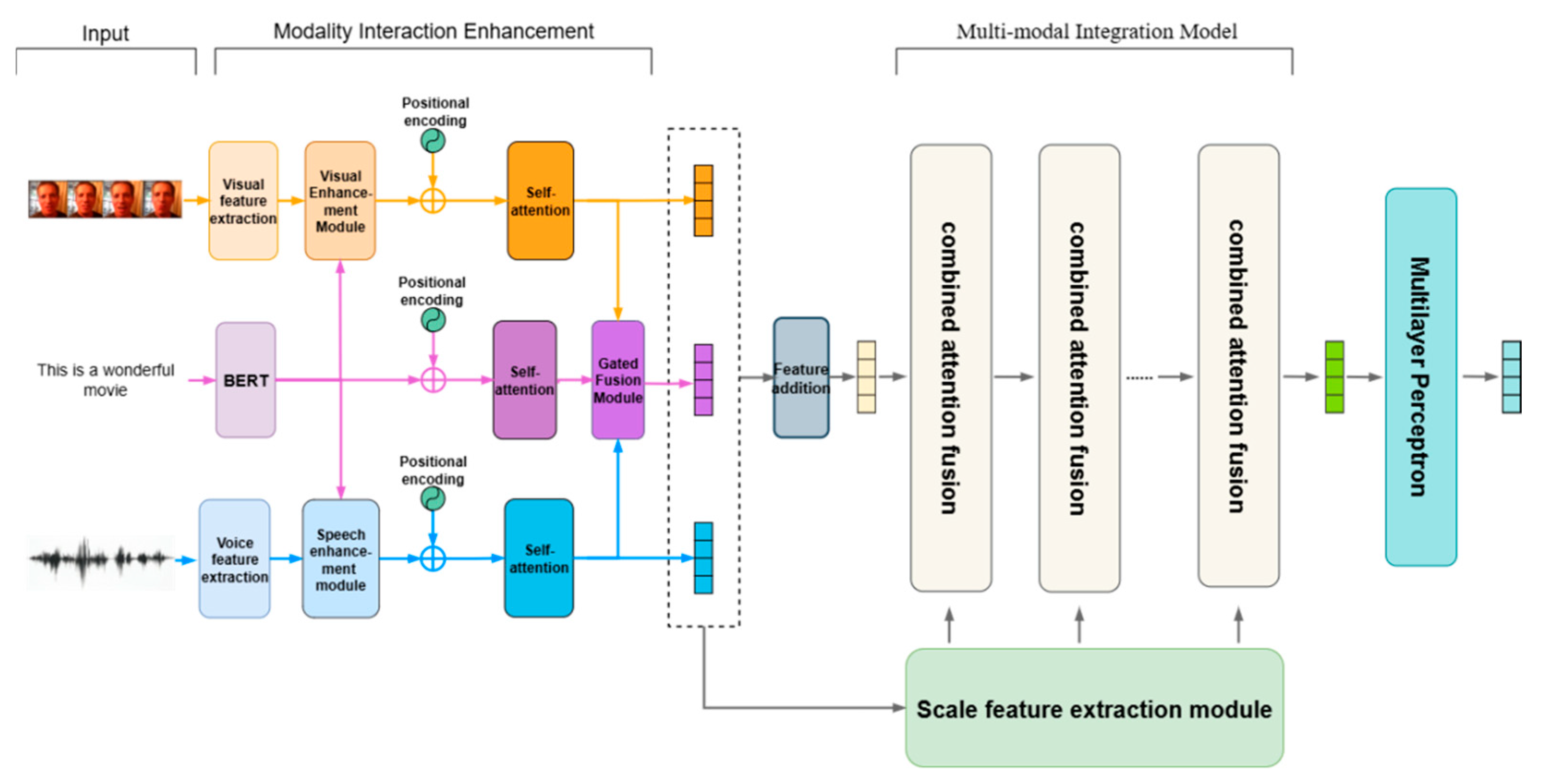

Figure 1 shows the overall architecture of the model and its workflow. The proposed multimodal integrated fusion attention framework primarily consists of two stages: modality interaction enhancement and combined attention fusion. It is capable of effectively processing information from three modalities: text, speech, and vision. First, for feature extraction, BERT-base-uncased is adopted to obtain contextualized textual embeddings, where each utterance is tokenized and represented as the last hidden state of the [CLS] token. Acoustic features are extracted using COVAREP, including pitch, energy, and spectral descriptors, sampled at 100 Hz. Visual features are obtained with OpenFace 2.0, which provides facial action units, gaze, and head pose information. Next, in the modality enhancement stage, the extracted visual and speech features are processed by their respective modality enhancement modules, followed by a self-attention calculation to obtain enhanced visual and speech representations guided by the text modality. Simultaneously, the text modality first undergoes self-attention calculation and then passes through a gated fusion module to inject non-verbal information for information completion. Finally, in the combined attention fusion stage, the three modalities are summed and fed into the combined attention fusion module. This module, with a total of four layers, also uses a multilayer encoder to extract features of different scales from each modality for modeling. The final results are then passed through a multilayer perceptron to obtain the multimodal representation for the sentiment analysis task.

3.2. Feature Extraction and Symbol Definition

In this task, the input data consist of text, visual, and speech modalities. After extraction by their respective feature extractors, the feature sequences of the three modalities can be represented as a triplet $(X_t, X_v, X_a)$, where $X_m \in \mathbb{R}^{T_m \times d_m}$ for $m \in \{t, v, a\}$. Here, $T_m$ is the sequence length and $d_m$ is the vector dimension of modality $m$. The predicted sentiment score $\hat{y} \in [-3, 3]$ is a continuous value; values greater than, equal to, and less than 0 represent positive, neutral, and negative sentiment, respectively.

3.3. Modality Interaction Enhancement Stage

3.3.1. Modality Enhancement Module

In multimodal sentiment analysis, traditional methods often treat the initial features of each modality as fixed inputs for fusion, without interaction between modalities. If the initial unimodal representations are weak or noisy, subsequent fusion may perform poorly. This study therefore designs a modality enhancement module that addresses the insufficient expressive power of unimodal features from the outset. The module’s structure is shown in Figure 2; it leverages the semantic guidance of the text modality to enhance the feature representations of the non-verbal modalities. The core idea is to use a multihead attention mechanism and a dynamic routing iteration mechanism to extract guidance information from the text modality from different perspectives and to dynamically optimize the non-verbal features. Finally, a convolutional fusion generates the final enhanced features.

The multihead attention mechanism uses multiple independent attention heads to compute the similarity between modalities, so that each head captures the relationship between the text and a non-verbal modality from a different perspective. The dynamic routing iteration mechanism performs several iterative similarity calculations within a single attention head and accumulates each result with the historical similarity values. Each update therefore takes earlier association information into account, progressively aligning the non-verbal features with the semantic content of the text. For example, if the text says “happy,” the enhanced speech features may more prominently highlight the positive tone of the voice.

Taking the text-enhanced speech modality as an example, the formulas for a single attention head, with a fixed number of routing iterations, are as follows:

For each iteration, the current similarity between modalities is calculated by Formula (1) using the enhanced speech feature from the previous iteration. The resulting similarity is stored as the historical modality similarity value for the next iteration; in each iteration, the currently calculated similarity is added to the historical similarity.

The softmax function is then applied to obtain the attention weights, which are used for a weighted summation; the result is the enhanced speech feature of the current iteration. In the first iteration there is no historical similarity value, so the input speech feature is simply the original speech modality.
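The iterative procedure described above can be sketched for a single head as follows. This is a minimal pure-Python sketch under stated assumptions, not the authors’ implementation: because the formulas are not reproduced here, it assumes that the speech sequence from the previous iteration acts as the query, that the text sequence supplies both keys and values, that similarities are scaled dot products, and that the learned per-head projections are omitted (identity), so both sequences share one feature dimension. The function name `routed_attention` is hypothetical.

```python
import math


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as nested lists."""
    return [[sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def softmax_rows(a):
    """Row-wise softmax with max subtraction for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        exps = [math.exp(x - m) for x in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def routed_attention(speech, text, iterations):
    """Text-guided enhancement of a speech sequence for ONE attention head (hypothetical sketch).

    speech: T_a x d list of feature vectors; text: T_t x d list of feature vectors.
    Returns a T_a x d enhanced speech sequence.
    """
    scale = 1.0 / math.sqrt(len(text[0]))
    enhanced = speech   # first iteration: no history, so start from the original speech
    history = None      # accumulated (historical) similarity logits
    for _ in range(iterations):
        # Current similarity between the latest enhanced speech (queries) and the text (keys).
        sim = [[s * scale for s in row] for row in matmul(enhanced, transpose(text))]
        # Add the current similarity to the historical similarity.
        if history is None:
            history = sim
        else:
            history = [[h + s for h, s in zip(h_row, s_row)]
                       for h_row, s_row in zip(history, sim)]
        weights = softmax_rows(history)
        # Weighted summation over the text positions gives this iteration's enhanced speech.
        enhanced = matmul(weights, text)
    return enhanced
```

Because the logits accumulate across iterations, the attention distribution of a speech frame tends to sharpen toward the text positions it repeatedly agrees with, which is the intended routing-by-agreement behaviour.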

After all attention heads have been processed, the enhanced speech modality from each head is obtained. These outputs are then concatenated and passed through a one-dimensional convolution for dimensionality reduction while preserving key information. The result after the convolution is the final enhanced speech modality. The formula is as follows:

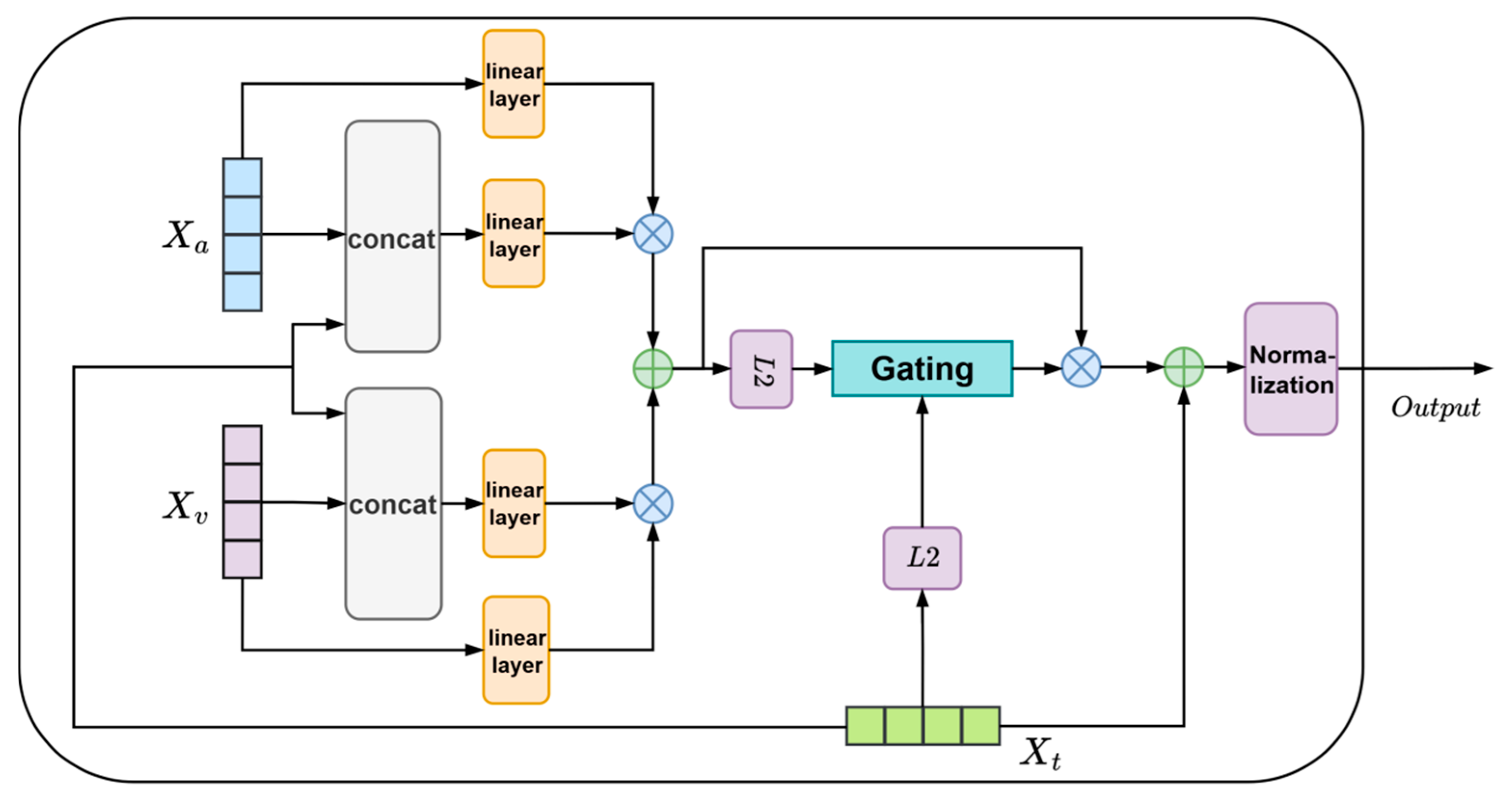
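Combining the per-head outputs can be sketched as below. Assumptions not stated in the text: each head output is a T x d sequence, heads are concatenated along the feature axis, and the one-dimensional convolution slides over the time axis with an odd kernel size and zero “same” padding so that the sequence length is preserved. The names `concat_heads`, `conv1d_same`, and `fuse_heads` are hypothetical.

```python
def concat_heads(head_outputs):
    """Concatenate H head outputs (each T x d) along the feature axis -> T x (H*d)."""
    length = len(head_outputs[0])
    return [[x for head in head_outputs for x in head[t]] for t in range(length)]


def conv1d_same(x, weight, bias):
    """1D convolution over time (cross-correlation, as in most deep learning libraries).

    x: T x C_in; weight: C_out x C_in x K with odd K; bias: C_out.
    Zero padding keeps the output length equal to T. Returns T x C_out.
    """
    length, c_in, k = len(x), len(x[0]), len(weight[0][0])
    pad = k // 2
    out = []
    for t in range(length):
        row = []
        for o, w_out in enumerate(weight):
            acc = bias[o]
            for j in range(k):
                src = t + j - pad
                if 0 <= src < length:  # positions outside the sequence count as zeros
                    acc += sum(w_out[c][j] * x[src][c] for c in range(c_in))
            row.append(acc)
        out.append(row)
    return out


def fuse_heads(head_outputs, weight, bias):
    """Final enhanced feature: concatenate heads, then reduce channels with a 1D convolution."""
    return conv1d_same(concat_heads(head_outputs), weight, bias)
```

With a kernel size of 1, the convolution reduces to a per-time-step linear projection from H*d channels back to d channels; a wider kernel additionally mixes neighbouring time steps.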

3.3.2. Gated Fusion Module

To facilitate interaction between the text and non-verbal modalities while keeping the text modality dominant, this paper introduces a gated mechanism that allows the text modality to integrate information from speech and vision. As shown in Figure 3, the visual and speech features are first each concatenated with the text features. The concatenations are then mapped through a linear layer and used to weight the original linear mappings of the speech and visual features. The two weighted parts are summed to represent the joint contribution of speech and vision to the text. The formulas are:

Here, the linear layer performs the mappings described above, and the summed result is the final joint representation. This design lets the text features guide the extraction of visual and speech features, ensuring that the fused features remain consistent with the text semantics.
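A per-time-step sketch of the joint representation follows. Since the formulas are not reproduced here, it assumes a gate in the style of the MAG component of Rahman et al. [3]: each gate is a ReLU of a linear map over the concatenated [text; non-verbal] vector, and it multiplies the linear mapping of that non-verbal feature element-wise. The parameter names (`W_ga`, `W_gv`, `W_a`, `W_v` and their biases) and the function `joint_nonverbal` are hypothetical.

```python
def linear(w, b, x):
    """Affine map: w is (out x in), b has length out, x has length in."""
    return [sum(wi * xi for wi, xi in zip(row, x)) + bi for row, bi in zip(w, b)]


def relu(v):
    return [max(0.0, a) for a in v]


def joint_nonverbal(text, audio, visual, p):
    """Joint contribution of speech and vision to one text time step (MAG-style assumption).

    text, audio, visual: feature vectors for the same time step (Python lists).
    p: dict of hypothetical parameters.
    """
    gate_a = relu(linear(p["W_ga"], p["b_ga"], text + audio))   # gate from [text; speech]
    gate_v = relu(linear(p["W_gv"], p["b_gv"], text + visual))  # gate from [text; vision]
    proj_a = linear(p["W_a"], p["b_a"], audio)                  # linear mapping of speech
    proj_v = linear(p["W_v"], p["b_v"], visual)                 # linear mapping of vision
    # Element-wise gating of each mapping, then sum the two contributions.
    return [ga * pa + gv * pv for ga, pa, gv, pv in zip(gate_a, proj_a, gate_v, proj_v)]
```

Because the gates are computed from the text concatenated with each non-verbal feature, the text decides how much of each speech or visual dimension passes through.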

To balance the contributions of speech and visual features, a dynamic scaling factor is introduced into the gated mechanism. By computing the L2 norms of the text and fused features and combining them with an adjustable parameter, the module generates a threshold and constrains the scaling factor to lie between 0 and 1. The final enhanced feature is a weighted combination of the fused features and the original text features. Finally, the module applies Layer Normalization and Dropout to the enhanced features, which improves stability and generalization and helps prevent overfitting. The formulas are as follows:

Here, a small constant prevents division by zero, and the adjustable hyperparameter controls the maximum influence of the non-verbal modalities. The final output is the text feature fused with speech and visual information.
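The dynamic scaling step can be sketched as follows. This assumes the same form as the MAG gate of Rahman et al. [3]: the threshold is the ratio of the text norm to the fused-feature norm (plus a small epsilon) multiplied by the adjustable hyperparameter `beta`, the scaling factor is that threshold capped at 1, and the scaled fused features are added to the text features before Layer Normalization. Dropout is omitted because it is the identity at inference time. The names `beta`, `eps`, and `gated_output` are illustrative.

```python
import math


def l2_norm(v):
    return math.sqrt(sum(x * x for x in v))


def scaling_factor(text, fused, beta, eps=1e-6):
    """Threshold = ||text|| / (||fused|| + eps) * beta, capped at 1 (assumed form)."""
    return min(l2_norm(text) / (l2_norm(fused) + eps) * beta, 1.0)


def layer_norm(v, eps=1e-5):
    """Layer normalization without learned scale/shift."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]


def gated_output(text, fused, beta, eps=1e-6):
    """Add the scaled non-verbal contribution to the text features, then normalize."""
    alpha = scaling_factor(text, fused, beta, eps)
    shifted = [t + alpha * h for t, h in zip(text, fused)]
    return layer_norm(shifted)  # Dropout would follow here during training only
```

Capping the factor at 1 means the added non-verbal shift never exceeds `beta` times the norm of the text feature, which keeps the text modality dominant.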

3.4. Combined Attention Fusion Stage

After the modality enhancement is completed, a combined attention fusion module is used to achieve synchronous fusion of the three modality representations. To comprehensively capture the multilevel information of each modality, a scale feature extraction module is designed using an encoder, which can effectively generate multiscale feature representations. This design allows the model to fully focus on and utilize all valuable information within each modality, achieving more efficient multimodal fusion.

3.4.1. Scale Feature Extraction Module

The scale feature extraction module uses Transformer encoder layers to extract modal features. As shown in Figure 4, each layer contains three encoders, used to encode the text, visual, and speech modalities, respectively, and the number of layers in the scale feature extraction module equals the number of layers in the combined attention fusion module. The outputs of the encoders serve as the keys and values of the cross-attention in the corresponding combined attention fusion layer. The formulas are:

Here, the three encoders (one per modality) are defined by their internal parameters, their outputs are the encoded features, and their inputs are the original inputs of the three modalities. By stacking multiple encoder layers, features at different scales are extracted and modeled in the combined attention fusion, which deepens the interaction between modalities and helps address modality heterogeneity.
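The layer-wise pairing between the scale feature extraction module and the fusion layers can be sketched as below. Each modality passes through its own stack of encoder layers, and the output of layer i is kept as the key/value source for fusion layer i. To keep the sketch dependency-free, a neighbour-averaging function stands in for a Transformer encoder layer; it is a hypothetical placeholder chosen only because stacking it visibly widens the receptive field, giving coarser scales at deeper layers. A real implementation would use learned encoder layers, for example PyTorch’s `nn.TransformerEncoderLayer`.

```python
def toy_encoder_layer(seq):
    """Hypothetical stand-in for a Transformer encoder layer: averages each step with its neighbours."""
    length = len(seq)
    out = []
    for t in range(length):
        window = [seq[s] for s in (t - 1, t, t + 1) if 0 <= s < length]
        out.append([sum(col) / len(window) for col in zip(*window)])
    return out


def multiscale_features(inputs, num_layers, layer_fn=toy_encoder_layer):
    """inputs: dict modality -> T x d sequence.

    Returns dict modality -> list of per-layer outputs; entry i is the
    key/value source for combined attention fusion layer i.
    """
    scales = {}
    for name, seq in inputs.items():
        outputs = []
        h = seq
        for _ in range(num_layers):
            h = layer_fn(h)     # each modality has its own encoder stack
            outputs.append(h)   # keep every layer's output, not just the last one
        scales[name] = outputs
    return scales
```

Keeping every intermediate output, rather than only the top of the stack, is what lets each fusion layer attend to features at a different depth (scale).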

3.4.2. Combined Attention Fusion Module

To address the low efficiency of traditional fusion, this paper designs a combined attention fusion module that fuses text, visual, and speech information synchronously within a unified architecture. Specifically, the module sums the enhanced outputs of each modality from the modality interaction enhancement stage to form an initial hybrid representation containing information from all three modalities. This hybrid representation serves as the query for the cross-attention within the combined attention fusion layer. Because it integrates information from all three modalities, it can interact with each individual modality’s representation from a global perspective in the subsequent attention calculations. All modal information is thus taken into account, avoiding the neglect of some modalities that can occur in traditional step-by-step fusion methods.

The structure of the combined attention fusion module is shown in Figure 4. It consists of three major stages: modality-specific encoding, cross-modal interaction, and final fusion. In the encoding stage, the module first uses a linear layer to align the three modal features so that they are comparable in the same feature space. In the cross-modal interaction stage, the three aligned modal features are summed to form the initial fused representation, which is used as the query for cross-attention in the combined attention fusion layer. The module adopts a residual connection structure, and feature expression is enhanced through normalization and a feed-forward network. The results for the three modalities are normalized, summed, and then fed into a multihead self-attention mechanism for further fusion. Finally, a feed-forward layer generates the input representation for the next layer. The specific formulas are as follows:

Here, each attention module has its own internal parameters, and the multihead attention uses 8 heads. The aligned modal features are those produced by the linear alignment layer, and their sum forms the initial query. The formulas use one operator for cross-attention and another for multihead self-attention.

The results of the i-th layer’s cross-attention are computed for each modality, and each summation term is the result of the cross-attention calculation followed by two layers of normalization. LayerNorm and FFN denote layer normalization and a feed-forward layer, respectively, and the final feed-forward output is the output of the i-th layer of the combined attention fusion module.

In the cross-attention mechanism, the fused representation from the previous layer is used as the query, while the feature representations of each modality are used as the keys and values. This design allows the fusion process to adaptively extract relevant information from each modality based on the current fusion state. The multihead self-attention mechanism further enhances the model’s ability to capture complex relationships between features, allowing the final fused representation to integrate information from each modality more comprehensively. The combination of Layer Normalization and the feed-forward network (FFN) stabilizes training and increases the model’s expressive power, and the residual connections ensure effective information flow in the deep network, mitigating the vanishing gradient problem. Through this combined attention fusion mechanism, the model can exploit the complementary information of different modalities while reducing redundancy and noise between them, thereby improving the accuracy and robustness of multimodal sentiment analysis.
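One combined attention fusion layer, and the stacking of several layers, can be sketched as follows. Simplifying assumptions, none of which come from the text: single-head scaled dot-product attention without learned projections (the model itself uses 8 heads), layer normalization without learned parameters, and a parameter-free ReLU standing in for the feed-forward network. All function names are hypothetical. The control flow follows the description: the summed aligned modalities form the first query; in each layer the query cross-attends to every modality’s features for that layer, each branch passes through residual connections with two normalizations, the branches are summed, and self-attention followed by a feed-forward step produces the next layer’s query.

```python
import math


def attention(q, k, v):
    """Single-head scaled dot-product attention; q: Tq x d, k: Tk x d, v: Tk x dv."""
    scale = 1.0 / math.sqrt(len(q[0]))
    out = []
    for qi in q:
        scores = [scale * sum(a * b for a, b in zip(qi, kj)) for kj in k]
        m = max(scores)
        w = [math.exp(s - m) for s in scores]
        z = sum(w)
        out.append([sum(wj * vj[c] for wj, vj in zip(w, v)) / z for c in range(len(v[0]))])
    return out


def add(a, b):
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def layer_norm(x, eps=1e-5):
    """Row-wise layer normalization without learned scale/shift."""
    out = []
    for row in x:
        mean = sum(row) / len(row)
        var = sum((r - mean) ** 2 for r in row) / len(row)
        out.append([(r - mean) / math.sqrt(var + eps) for r in row])
    return out


def ffn(x):
    """Hypothetical parameter-free stand-in for the feed-forward network."""
    return [[max(0.0, r) for r in row] for row in x]


def fusion_layer(query, modality_feats):
    """One combined attention fusion layer (simplified)."""
    summed = None
    for feats in modality_feats:  # text, visual, speech features for this layer
        a = layer_norm(add(query, attention(query, feats, feats)))  # cross-attention + residual
        b = layer_norm(add(a, ffn(a)))                              # FFN + residual
        summed = b if summed is None else add(summed, b)
    return ffn(attention(summed, summed, summed))  # self-attention, then feed-forward


def combined_fusion(aligned, per_layer_feats):
    """aligned: list of T x d aligned modality features; per_layer_feats[i]: K/V sources for layer i."""
    query = aligned[0]
    for extra in aligned[1:]:
        query = add(query, extra)  # initial hybrid query = sum of the aligned modalities
    for feats in per_layer_feats:
        query = fusion_layer(query, feats)
    return query
```

Because the query already mixes all three modalities, every cross-attention branch is conditioned on the global fusion state rather than on a single modality pair.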

3.5. Output and Loss

After the final combined attention fusion block is computed, a multilayer perceptron (MLP) is used to predict the sentiment score. The Mean Absolute Error (MAE) is used as the loss function, with the formula as follows:

Here, the ground-truth label is taken from the dataset, the predicted value is the model output, and Mean denotes averaging over samples; the result is the model’s loss value.
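Writing $y_i$ for the ground-truth label and $\hat{y}_i$ for the prediction of sample $i$ out of $N$, the MAE loss is $\mathcal{L} = \frac{1}{N}\sum_{i=1}^{N} |y_i - \hat{y}_i|$, which can be computed as below. The function name is illustrative; in PyTorch, `torch.nn.L1Loss()` with its default `reduction="mean"` computes the same quantity.

```python
def mae_loss(y_true, y_pred):
    """Mean absolute error between ground-truth labels and model predictions."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

Unlike a squared-error loss, MAE penalizes large errors only linearly, so a few strongly mislabeled clips have less influence on training.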

4. Experiments

4.1. Dataset Preparation

This paper selects the public datasets CMU-MOSEI, CMU-MOSI, and CH-SIMS for experiments.

Table 1 shows the partitioning of the training, validation, and test sets for these datasets. The effectiveness of the proposed framework is validated by comparing the scores on the three datasets.

CMU-MOSI is a dataset created by Zadeh et al. in 2016 [17]. MOSI contains 2199 annotated video clips, all of which are speaker monologues, which ensures data consistency and controllability. Each clip is annotated with a sentiment intensity score on a [−3, +3] scale, where −3 represents very negative and +3 very positive sentiment. This annotation scheme allows researchers to quantify emotional intensity. The dataset has contributed significantly to the development of multimodal sentiment analysis and is one of the most commonly used MSA datasets.

CMU-MOSEI, created by Zadeh et al., is another important dataset in the field of multimodal sentiment analysis [18]. It contains 23,453 annotated video clips from 5000 videos and 1000 different speakers, covering 250 different topics such as product reviews and movie reviews. Like MOSI, it consists of speaker monologues and uses the same sentiment intensity annotation, but its annotation system is more comprehensive. As shown in Figure 5, the CMU-MOSEI annotation system covers two dimensions: sentiment polarity and emotional intensity. Sentiment polarity is represented by a continuous value from −3 (extremely negative) to +3 (extremely positive). For better interpretability, these values are grouped into five categories: Negative (−3, −2), Weakly Negative (−1), Neutral (0), Weakly Positive (+1), and Positive (+2, +3). Emotional intensity is annotated for six basic emotions (anger, disgust, fear, happiness, sadness, and surprise), and the dataset places emphasis on gender balance.

CH-SIMS is a dataset created by Yu et al. that focuses on Chinese MSA [19]. It contains 2281 carefully selected Chinese video clips covering various scenarios and roles. Its data come primarily from popular Chinese variety shows, which offer rich dialogue and interaction, high video quality, and expressive facial expressions and body language. In terms of modality composition, CH-SIMS is similar to the CMU datasets, containing video, speech, and text modalities. However, its annotation system differs: sentiment polarity is annotated in the range [−1, 1] with three categories representing negative, neutral, and positive sentiment. It also provides fine-grained emotional annotations for six basic emotions: happiness, surprise, disgust, fear, sadness, and anger. This allows researchers not only to perform multimodal sentiment analysis but also to analyze the visual, speech, or text information in the videos separately and capture fine-grained emotions in the data.

4.2. Baseline Models

To validate the reliability and effectiveness of the proposed model, this paper compares it with 13 classic baseline models from the field of multimodal sentiment analysis. Among these, MISA [11] represents the modality representation direction, Self-MM [20] belongs to the self-supervised learning direction, and the rest are modality fusion methods. Specifically, these include: TFN [21], which achieves multimodal interaction representation through a tensor outer product; MulT [2], which uses a cross-modal attention mechanism to handle modality misalignment; MAG-BERT [3], which dynamically injects non-verbal features into BERT using a gating mechanism; MISA, which improves robustness by separating modality-invariant and modality-specific spaces; BBFN [9], which uses bidirectional bimodal fusion to balance performance and complexity; MMIM [22], which enhances modality complementarity based on mutual information maximization; Self-MM, which combines predicting missing modalities with contrastive learning for self-supervised optimization; CubeMLP [23], which proposes a cubic MLP structure for cross-modal interaction; ALMT [24], which focuses on the modality alignment problem; KuDA [25], which combines knowledge transfer with domain adaptation to improve generalization; DLF [16], a Disentangled-Language-Focused multimodal representation learning framework that incorporates a feature disentanglement module to separate modality-shared and modality-specific information; CRNet [26], which leverages different acoustic and visual representation subspaces to interact with the linguistic modality; and TMBL [7], a modality-binding learning framework that redesigns the internal structure of the Transformer. These methods cover different approaches to multimodal fusion and provide a comprehensive baseline for evaluating the proposed model.

4.3. Experimental Environment and Parameters

The experiments were run on an NVIDIA GeForce RTX 4090 graphics card with 24 GB of memory. The model was optimized with AdamW and uses four combined attention fusion layers and four scale feature extraction layers. The initial learning rate was 1 × 10⁻⁴. Detailed parameter settings are given in Table 2. Hyperparameters and training: batch_size = 64; epochs = 100; initial learning rate = 1 × 10⁻⁴; optimizer = AdamW (weight_decay = 0.01); dropout after fusion/attention layers = 0.3; dropout in classification FC layers = 0.5; warmup over the first 5% of steps, followed by linear decay. Early stopping is applied with a patience of 10 epochs on the validation loss, and the model with the best validation performance is saved.
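The learning-rate schedule and early-stopping rule listed above can be sketched as follows. Assumptions: the warmup is linear (the text only states its length), the rate decays linearly to zero at the last step, and a checkpoint is saved whenever the validation loss improves. The names `lr_at_step` and `EarlyStopping` are hypothetical; Hugging Face Transformers provides a comparable schedule in `get_linear_schedule_with_warmup`.

```python
import math


def lr_at_step(step, total_steps, base_lr=1e-4, warmup_frac=0.05):
    """Linear warmup over the first warmup_frac of steps, then linear decay to zero."""
    warmup_steps = max(1, int(total_steps * warmup_frac))
    if step < warmup_steps:
        return base_lr * (step + 1) / warmup_steps
    decay_steps = max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, (total_steps - step) / decay_steps)


class EarlyStopping:
    """Stop after `patience` consecutive epochs without a lower validation loss."""

    def __init__(self, patience=10):
        self.patience = patience
        self.best = math.inf
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch; returns True when this epoch is the new best (save a checkpoint)."""
        if val_loss < self.best:
            self.best = val_loss
            self.bad_epochs = 0
            return True
        self.bad_epochs += 1
        return False

    @property
    def should_stop(self):
        return self.bad_epochs >= self.patience
```

With batch_size = 64, `total_steps` would be the number of batches per epoch times the 100 planned epochs; early stopping may end training before the schedule reaches zero.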

Compute cost (measured on a single NVIDIA GeForce RTX 4090, 24 GB): full training time ≈ 2.5 h (MOSI), 6 h (MOSEI), and 8 h (CH-SIMS); peak GPU memory ≈ 18 GB, 20 GB, and 22 GB, respectively. Model size ≈ 14.2 M parameters; forward FLOPs ≈ 8.5 GFLOPs per sample (batch = 1); inference latency ≈ 12 ms per sample (end-to-end, including feature extraction; core MEMMI module ≈ 6–8 ms).

4.4. Evaluation Metrics

To comprehensively evaluate the model’s performance, this paper uses a variety of regression and classification metrics. In the regression task, Mean Absolute Error (MAE) is used to measure the average deviation between predicted and true values, with a lower value indicating higher prediction accuracy. Pearson Correlation Coefficient (Corr) is used to assess the linear correlation between predicted and true values, reflecting the model’s ability to capture sentiment trends. In the classification task, Binary Accuracy (Acc-2), Three-class Accuracy (Acc-3), Five-class Accuracy (Acc-5), and Seven-class Accuracy (Acc-7) are used to evaluate sentiment polarity or intensity at different granularities. Acc-2 includes two forms, Has0-Acc2 and Non0-Acc2, to adapt to different label partitioning methods. Furthermore, the F1 score is introduced to balance precision and recall, which is particularly suitable for sentiment recognition scenarios with imbalanced class distributions.
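The regression and classification metrics can be computed from the continuous predictions as sketched below. The text does not define the exact thresholds, so this follows a widely used convention (for example, in the MMSA toolkit): Has0-Acc2 treats scores ≥ 0 as non-negative and uses all samples, Non0-Acc2 drops samples whose ground truth is exactly 0 and splits at > 0, and Acc-7 clips scores to [−3, 3] and rounds to the nearest integer class. The function names are illustrative.

```python
import math


def mae(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def pearson(x, y):
    """Pearson correlation coefficient (Corr)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def acc2_has0(y_true, y_pred):
    """Negative (< 0) vs. non-negative (>= 0) over all samples."""
    hits = sum((t >= 0) == (p >= 0) for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


def acc2_non0(y_true, y_pred):
    """Negative vs. positive, excluding samples whose label is exactly 0."""
    pairs = [(t, p) for t, p in zip(y_true, y_pred) if t != 0]
    return sum((t > 0) == (p > 0) for t, p in pairs) / len(pairs)


def acc7(y_true, y_pred):
    """Clip to [-3, 3], round to the nearest integer class, and compare."""
    def to_class(v):
        return round(min(3.0, max(-3.0, v)))
    return sum(to_class(t) == to_class(p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

The two Acc-2 variants can disagree. With labels [−2.0, 0.0, 1.4, 2.6] and predictions [−1.6, −0.4, 0.4, 3.4], Has0-Acc2 is 0.75 while Non0-Acc2 is 1.0, because the only polarity disagreement falls on the neutral sample that Non0-Acc2 excludes.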

4.5. Results Analysis

Table 3, Table 4, and Table 5 show the experimental results of the model on the three datasets. The Acc-2 metric is reported as Has0-Acc2/Non0-Acc2, and the F1 metric as Has0-F1/Non0-F1. The results indicate a clear advantage for the proposed model. On the MOSI dataset, it achieves an Acc-7 of 45.91, a substantial improvement over earlier methods such as TFN (34.9) and MulT (40.0). Its binary accuracy and F1 score reach 82.86/84.60 and 82.70/84.56, respectively, with an MAE of 0.73 and a Corr of 0.79; all of these metrics surpass those of every baseline model. On the MOSEI dataset, the advantage is even more pronounced: the proposed model achieves an Acc-7 of 54.17, surpassing the second-best model, ALMT (53.72). Its Acc-2 and F1 scores reach 83.69/86.02 and 83.22/86.01, respectively, MAE drops to 0.53, and Corr reaches 0.78, again the best performance.

Figure 6 shows the Non0-Acc2 scores of the different models. Compared to earlier methods like TFN, MulT, and MAG-BERT, the advantage of the proposed model lies primarily in its enhanced modality interaction capability. While TFN created a unified representation space for multimodal interaction, its static fusion strategy cannot adapt to the changing importance of modalities in different samples. MulT introduced a cross-modal attention mechanism to solve the modality misalignment problem, but its directional cross-attention structure limited comprehensive interaction between modalities. MAG-BERT incorporated non-verbal information through a multimodal adaptive gating mechanism, but its excessive reliance on the text modality led to insufficient robustness. On CH-SIMS, its Corr was only 0.399, while the proposed model achieved 0.594. In contrast, the proposed model, through its modality enhancement and multimodal integrated fusion attention framework, achieves more comprehensive and adaptive modality interaction.

Compared to recent methods, the proposed model also demonstrates advantages. BBFN’s bidirectional bimodal fusion mechanism processes different modalities in parallel, balancing relationship modeling and computational complexity, but struggles to capture the complete relationship among three modalities. Self-MM enhances modality consistency through self-supervised tasks, but its multitask learning framework complicates the training process. The proposed model avoids these limitations by using an iterative self-attention mechanism and a precise gating mechanism to achieve dynamic feature enhancement and noise control, simplifying the training process while improving performance.

CubeMLP’s three-dimensional cubic MLP structure avoids the computational overhead of attention mechanisms, but its fixed projection and transformation methods limit its modeling capability. ALMT focuses on solving the cross-modal alignment problem, but its single strategy struggles to handle the complexity of emotional expression. KuDA combines knowledge transfer with adaptive dominant modality technology, but its reliance on external knowledge increases model complexity. In contrast, the proposed model does not require external knowledge support. By relying solely on its internal modality enhancement and fusion mechanisms, it improves Acc-7 to 45.91 on MOSI, proving the intrinsic effectiveness of the proposed method.

On the CH-SIMS dataset, the proposed model achieves the highest Acc-5 and Acc-3 scores, 41.88 and 66.52, respectively. Its Corr of 0.594 is likewise higher than that of every comparison model. Overall, the proposed model’s advantages stem mainly from the modality enhancement mechanism, the combined attention fusion framework, and the optimized gating mechanism, which together improve multimodal sentiment analysis performance.
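Because CH-SIMS labels lie in [-1, 1], Acc-5 and Acc-3 are commonly computed by mapping scores into fixed intervals rather than by integer rounding. The boundaries below follow the convention of the widely used MMSA evaluation code as we understand it; they are an assumption and should be checked against the evaluation script actually used for the reported numbers. The helper names are illustrative.

```python
from bisect import bisect_left
from typing import Sequence

# Assumed CH-SIMS class boundaries; class i is the half-open interval
# (edges[i], edges[i + 1]] over scores clipped to [-1, 1].
SIMS_EDGES = {
    3: [-1.01, -0.1, 0.1, 1.01],
    5: [-1.01, -0.7, -0.1, 0.1, 0.7, 1.01],
}


def sims_class(score: float, k: int) -> int:
    """Map a CH-SIMS score to a class index in 0..k-1."""
    edges = SIMS_EDGES[k]
    x = min(max(score, -1.0), 1.0)
    # bisect_left gives the first index j with edges[j] >= x,
    # so x in (edges[j - 1], edges[j]] maps to class j - 1.
    return bisect_left(edges, x) - 1


def sims_acc(preds: Sequence[float], labels: Sequence[float], k: int) -> float:
    """Acc-k on CH-SIMS: fraction of samples whose predicted and true classes agree."""
    hits = sum(sims_class(p, k) == sims_class(y, k) for p, y in zip(preds, labels))
    return hits / len(labels)


print(sims_acc([0.8, -0.2, 0.05], [0.6, -0.4, 0.0], 3))  # 1.0
```

With the same inputs, Acc-5 is 2/3, because 0.8 and 0.6 fall on opposite sides of the 0.7 boundary; finer binning therefore penalizes small regression errors that Acc-3 tolerates.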

4.6. Ablation Experiment

To further explore the effectiveness and contribution of each module and to validate the design of the model, this paper conducts four ablation experiments.

4.6.1. Impact of Different Modules

To verify the effectiveness of each module in the model, the performance change after removing each core module was tested on the MOSI and CH-SIMS datasets. The results are shown in Table 6, where MEB stands for Modality Enhancement Block, GFM for Gated Fusion Module, CAF for Combined Attention Fusion, and SFE for Scale Feature Extraction encoder. The notation w/o indicates the removal of the corresponding module.

The experimental results show that removing the combined attention fusion module has the largest impact on model performance: Acc-5 on CH-SIMS decreases by 6.74 percentage points, and Acc-7 on MOSI decreases by 5.65 percentage points. Removing the modality enhancement module, for either the visual or the audio modality, lowers accuracy by about 1 percentage point on both datasets. Removing the scale feature extraction encoder reduces Acc-5 on CH-SIMS by 2.76 percentage points and Acc-7 on MOSI by 1.75 percentage points. These results confirm the central role of the combined attention fusion mechanism and also validate the importance of modality enhancement and scale feature extraction for multimodal sentiment analysis performance.

4.6.2. Impact of Different Modalities

To further investigate the contribution of each modality to overall performance, this paper removes each modality in turn to verify the necessity of multimodal fusion and compares the results with those of the ALMT and CubeMLP models. The results are shown in Table 7, where the proposed model is denoted MEMMI, and V+A, T+V, and T+A denote the settings in which the text, speech, and visual modality, respectively, has been removed.
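One simple way to run the V+A, T+V, and T+A settings is to keep a trained network fixed and zero out the features of the removed modality. The sketch below assumes that zero-filling protocol and a per-modality feature dictionary keyed by the hypothetical names `T`, `A`, and `V`; the paper does not state how modalities were removed, and retraining without the modality is an equally valid alternative.

```python
from typing import Dict, List

MODALITIES = ("T", "A", "V")  # text, audio (speech), visual

# Table 7 labels name the modalities that are KEPT.
SETTINGS = {
    "V+A": "VA",  # text removed
    "T+V": "TV",  # speech removed
    "T+A": "TA",  # visual removed
}


def removed_modalities(kept: str) -> List[str]:
    """Modalities absent from a setting, e.g. 'VA' -> ['T']."""
    return [m for m in MODALITIES if m not in kept]


def mask_modalities(features: Dict[str, List[float]], kept: str) -> Dict[str, List[float]]:
    """Copy `features`, replacing every modality not in `kept` with zeros.

    Zero-filling keeps input shapes unchanged, so the same trained network can
    be evaluated on every setting without modification.
    """
    return {m: (list(vec) if m in kept else [0.0] * len(vec)) for m, vec in features.items()}


feats = {"T": [0.3, -1.2], "A": [0.7], "V": [0.1, 0.4]}
for label, kept in SETTINGS.items():
    print(label, removed_modalities(kept), mask_modalities(feats, kept))
```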

The modality ablation results show that removing the text modality causes a sharp drop in scores for all three models on both datasets, which clearly demonstrates the crucial role of text in multimodal sentiment analysis. By contrast, removing the speech modality or the visual modality causes much smaller drops of similar magnitude, indicating that although both non-verbal modalities are important, their contributions are relatively balanced. The comparison with the ALMT and CubeMLP baselines further shows that, when the text modality is removed, the performance of the proposed model degrades less than that of either baseline.

Figure 7 shows how the Acc-5 and Acc-7 metrics change when different modalities are removed. These trends indicate that the proposed modality enhancement mechanism makes more effective use of the remaining non-verbal information and therefore better maintains model performance when the primary information source is missing.

4.6.3. Impact of Different Fusion Techniques

To analyze the impact of different fusion techniques, this paper compares four multimodal fusion methods on the MOSI dataset, as shown in Table 8. The proposed combined attention fusion module performs best, outperforming the other fusion methods on all evaluation metrics. This indicates that, compared with BBFN’s pairwise modality fusion, MulT’s pairwise cross-modal fusion over three modalities, and TFN’s simple fusion, the proposed combined attention fusion mechanism captures the complex interactions among multiple modalities more effectively.
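The difference between pairwise and combined fusion can be illustrated with plain scaled dot-product attention. In the minimal sketch below, pairwise fusion lets text attend to audio and to visual tokens separately and averages the results, whereas joint fusion lets text attend once over the concatenated tokens of all three modalities, so the softmax weights compete across modalities. This is not the paper's CAF module: learned query/key/value projections, multiple heads, and the way CAF combines global and local attention are omitted, and all function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]


def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention without learned projections."""
    d = len(keys[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))])
    return out


def pairwise_fusion(text: Matrix, audio: Matrix, visual: Matrix) -> Matrix:
    """Text attends to each non-verbal modality separately; results are averaged."""
    a = attention(text, audio, audio)
    v = attention(text, visual, visual)
    return [[(x + y) / 2 for x, y in zip(ra, rv)] for ra, rv in zip(a, v)]


def joint_fusion(text: Matrix, audio: Matrix, visual: Matrix) -> Matrix:
    """Text attends once over the concatenated tokens of all three modalities."""
    memory = text + audio + visual
    return attention(text, memory, memory)


text = [[1.0, 0.0], [0.0, 1.0]]
audio = [[0.5, 0.5]]
visual = [[1.0, 1.0], [0.0, 0.0]]
print(pairwise_fusion(text, audio, visual))
print(joint_fusion(text, audio, visual))
```

In the pairwise variant each modality always receives a fixed share of the output, while in the joint variant a weakly relevant modality can receive little weight, which is one way to read the claim that combined attention captures cross-modal interactions more flexibly.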

4.6.4. Impact of the Number of Scale Feature Extraction Layers

To verify how the features at each scale affect model performance, this ablation experiment selectively enables or disables different encoder layers. The results are shown in Table 9. When all four encoder layers (L1-L4) participate in scale feature modeling, the model achieves the best performance on the key metrics on both datasets. Removing any layer or combination of layers generally degrades performance to varying degrees. For example, using only L1 and L2 yields the lowest performance, and using only L3 and L4 gives an Acc-7 of 44.52 on MOSI and an Acc-5 of 40.88 on CH-SIMS, both noticeably lower than the results obtained with all four layers. Although some three-layer combinations (e.g., L1, L2, L3) match the four-layer configuration on the MOSI MAE metric, they still fall short on the main classification metrics, Acc-7 and Acc-5. This indicates that, although features at different levels may differ slightly in what they contribute, every scale feature extraction layer makes a positive contribution to the model’s overall performance.
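The layer ablation can be organized as an enumeration over subsets of the four encoder layers, with only the enabled layers feeding the scale-feature aggregation. In this minimal sketch the aggregation is a plain average and the helpers `aggregate_scales` and `layer_subsets` are hypothetical; the paper's encoder may combine layers differently, and Table 9 need not cover every subset enumerated here.

```python
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

LAYERS = ("L1", "L2", "L3", "L4")


def aggregate_scales(layer_feats: Dict[str, List[float]], enabled: Sequence[str]) -> List[float]:
    """Average the feature vectors of the enabled encoder layers (assumed aggregation)."""
    if not enabled:
        raise ValueError("at least one layer must be enabled")
    dim = len(layer_feats[enabled[0]])
    return [sum(layer_feats[name][i] for name in enabled) / len(enabled) for i in range(dim)]


def layer_subsets(min_size: int = 2) -> List[Tuple[str, ...]]:
    """All layer combinations with at least `min_size` layers, e.g. ('L1', 'L2')."""
    subsets: List[Tuple[str, ...]] = []
    for k in range(min_size, len(LAYERS) + 1):
        subsets.extend(combinations(LAYERS, k))
    return subsets


feats = {"L1": [0.2, 0.4], "L2": [0.6, 0.0], "L3": [1.0, 1.0], "L4": [0.0, 0.2]}
for subset in layer_subsets():
    print(subset, aggregate_scales(feats, subset))
```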

5. Conclusions

This paper addresses modality heterogeneity, the limited expressive power of non-verbal modalities, and low fusion efficiency in multimodal sentiment analysis by proposing a Modality-Enhanced Multimodal Integration Model (MEMMI), which achieves modality enhancement and efficient fusion within a unified architecture. A semantically guided modality enhancement module improves the feature representations of the non-verbal modalities. A gated fusion mechanism selectively injects speech and visual information, supplementing the text representation while effectively suppressing noise. Finally, a combined attention fusion module and a multiscale feature encoder fully model cross-modal interaction features at both global and local semantic levels. Experiments on three mainstream datasets show that MEMMI surpasses existing methods on all metrics, validating its effectiveness and robustness in the multimodal sentiment recognition task. In addition, ablation experiments verify the crucial role of the combined attention fusion module and the effectiveness of the modality enhancement module, further supporting the rationality and necessity of the proposed method.

Future work will focus on three aspects. First, we will explore more efficient cross-modal pre-training methods to further improve the model’s generalization ability in low-resource scenarios. Second, we will extend MEMMI to broader multilingual and multicultural datasets and improve its scalability for real-time applications. Third, we plan to enhance the explainability and interpretability of the proposed framework by incorporating model-agnostic interpretability techniques and designing visualization methods that show how different modalities and features contribute to the final prediction. These efforts will not only improve the transparency of the model but also facilitate its adoption in real-world applications.