Evolution of Deep Learning Approaches in UAV-Based Crop Leaf Disease Detection: A Web of Science Review

Abstract

1. Introduction

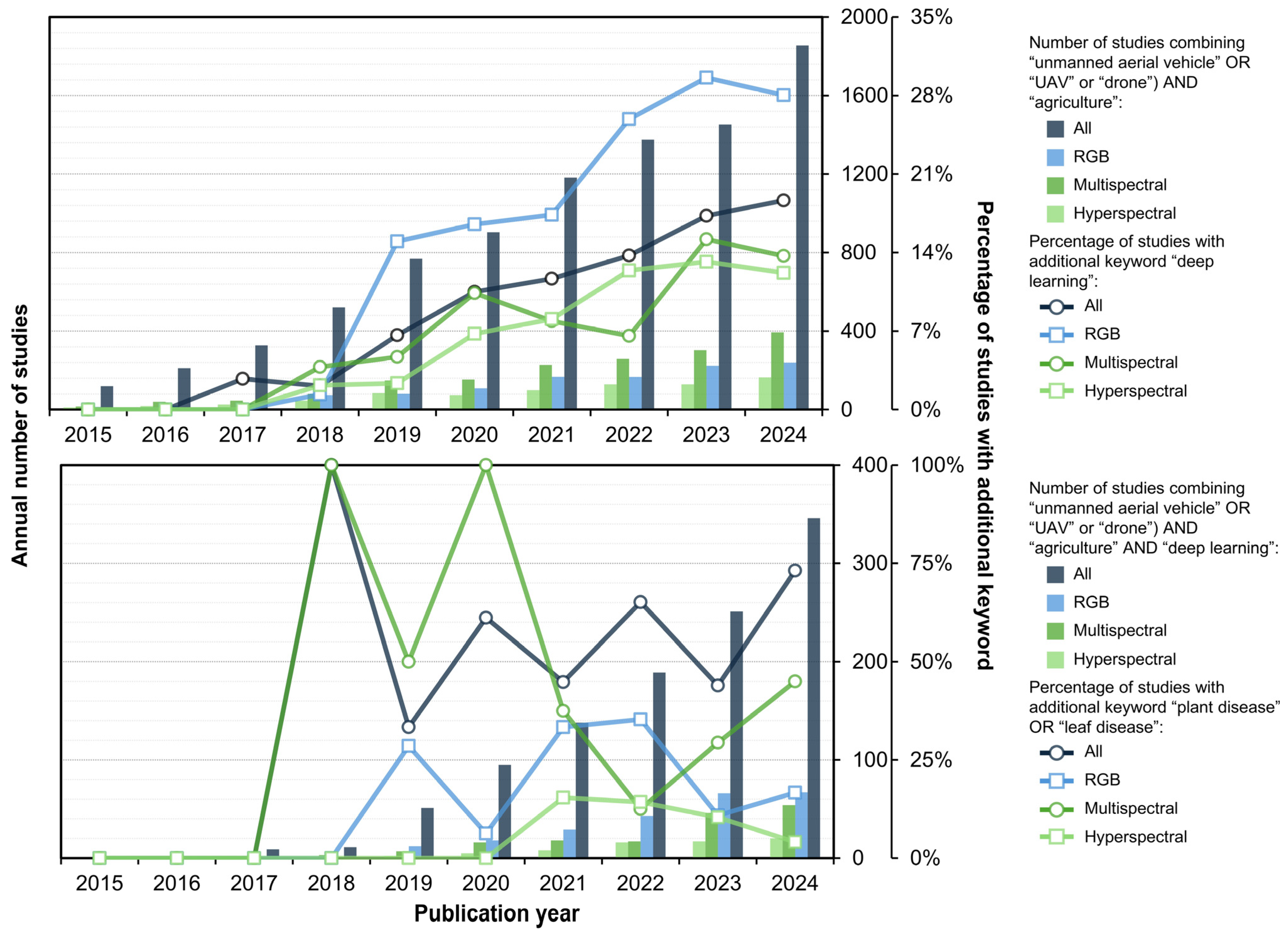

2. Crop Leaf Disease Studies Based on UAVs and Deep Learning Indexed in the Web of Science Core Collection

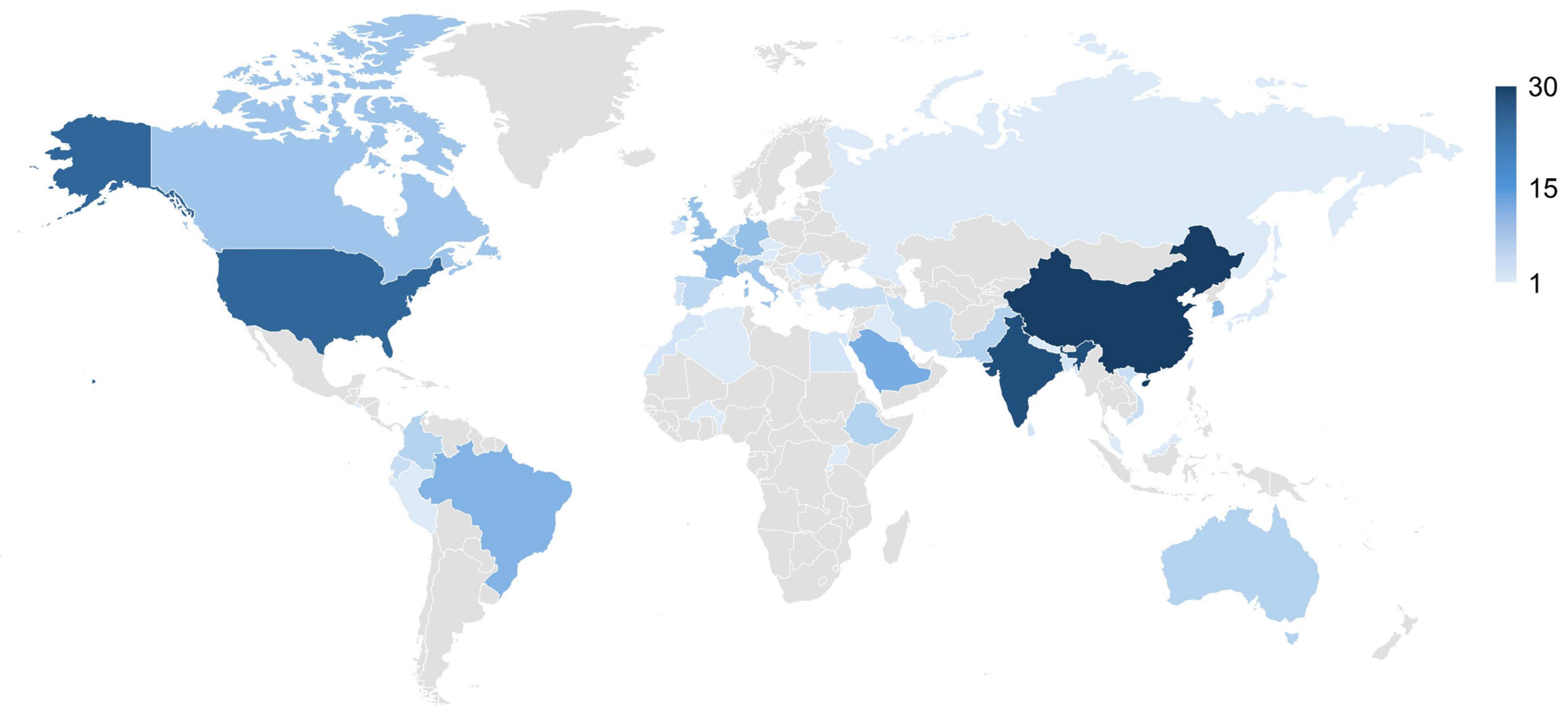

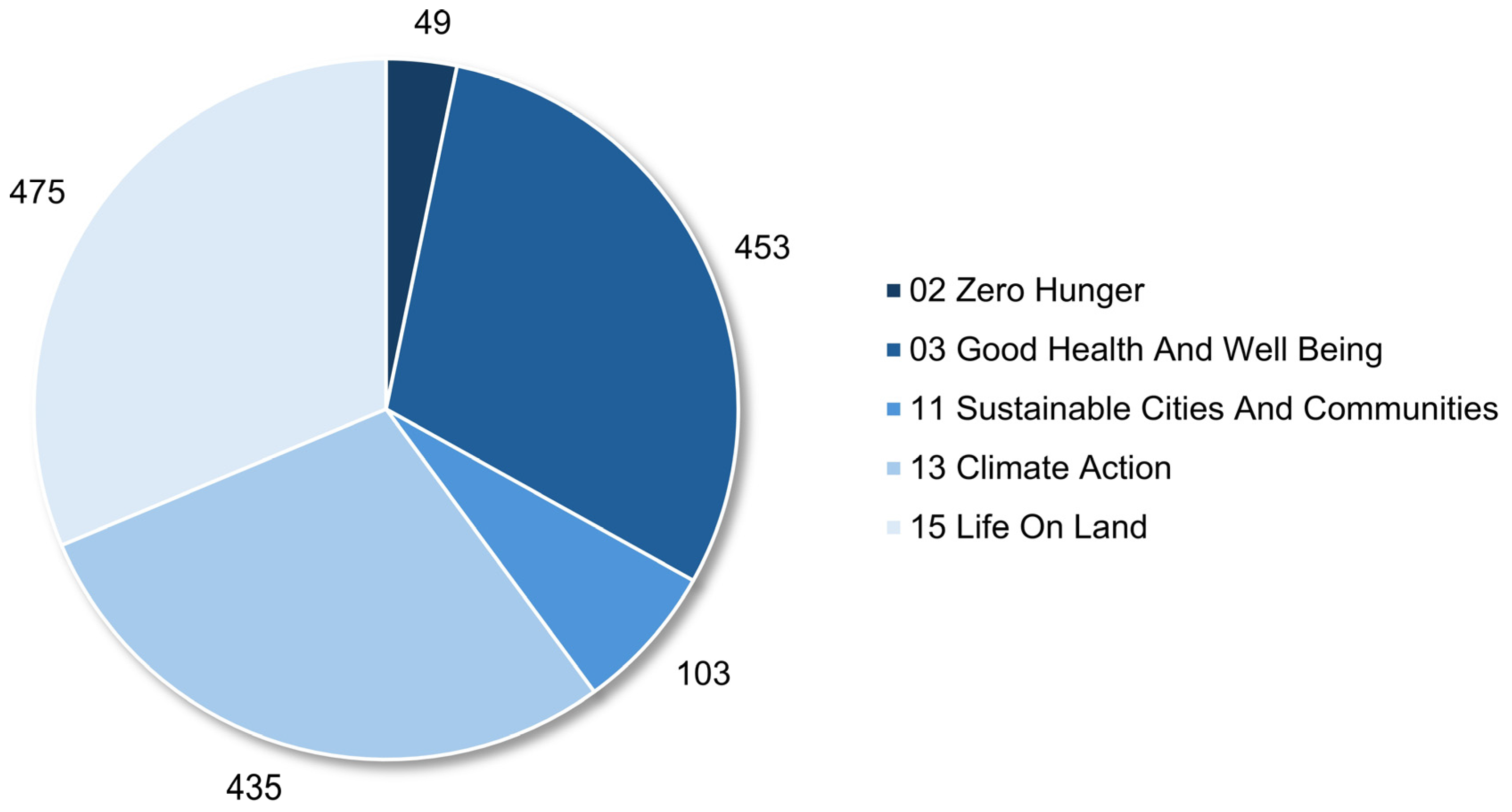

State of Crop Leaf Disease Detection Studies Based on UAVs and Deep Learning on a Global Scale

3. Latest Developments in UAV Aspect of Analyzed Crop Leaf Disease Studies

3.1. Trends in UAV Aspect of Crop Leaf Disease Studies Indexed in Web of Science

3.2. Imaging Sensors in Crop Leaf Disease Studies Indexed in Web of Science

3.3. UAV Platforms in Crop Leaf Disease Studies Indexed in Web of Science

4. Latest Developments in Deep Learning Aspect of Analyzed Crop Leaf Disease Studies

4.1. Deep Learning Algorithms in Crop Leaf Disease Studies Indexed in Web of Science

4.2. Comparative Assessment of Major Deep Learning Approaches in Crop Leaf Disease Studies

4.3. Computational Efficiency of Major Deep Learning Approaches in Crop Leaf Disease Studies

4.4. Analysis of Statistical Metrics Used for Accuracy Assessment

4.5. Advantages and Limitations of Deep Learning Approaches Based on Used Input Crop Leaf Disease Image Datasets

4.6. Potential of Deep Learning Algorithms in Practical Applications for Crop Leaf Disease Detection

5. Conclusions and Future Remarks

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ngugi, H.N.; Akinyelu, A.A.; Ezugwu, A.E. Machine Learning and Deep Learning for Crop Disease Diagnosis: Performance Analysis and Review. Agronomy 2024, 14, 3001.
2. Terentev, A.; Dolzhenko, V.; Fedotov, A.; Eremenko, D. Current State of Hyperspectral Remote Sensing for Early Plant Disease Detection: A Review. Sensors 2022, 22, 757.
3. Wen, T.; Li, J.-H.; Wang, Q.; Gao, Y.-Y.; Hao, G.-F.; Song, B.-A. Thermal Imaging: The Digital Eye Facilitates High-Throughput Phenotyping Traits of Plant Growth and Stress Responses. Sci. Total Environ. 2023, 899, 165626.
4. Ding, W.; Abdel-Basset, M.; Alrashdi, I.; Hawash, H. Next Generation of Computer Vision for Plant Disease Monitoring in Precision Agriculture: A Contemporary Survey, Taxonomy, Experiments, and Future Direction. Inf. Sci. 2024, 665, 120338.
5. Wang, Y.M.; Ostendorf, B.; Gautam, D.; Habili, N.; Pagay, V. Plant Viral Disease Detection: From Molecular Diagnosis to Optical Sensing Technology—A Multidisciplinary Review. Remote Sens. 2022, 14, 1542.
6. Fu, X.; Jiang, D. Chapter 16–High-Throughput Phenotyping: The Latest Research Tool for Sustainable Crop Production under Global Climate Change Scenarios. In Sustainable Crop Productivity and Quality Under Climate Change; Liu, F., Li, X., Hogy, P., Jiang, D., Brestic, M., Liu, B., Eds.; Academic Press: Amsterdam, The Netherlands, 2022; pp. 313–381. ISBN 978-0-323-85449-8.
7. Polymeni, S.; Skoutas, D.N.; Sarigiannidis, P.; Kormentzas, G.; Skianis, C. Smart Agriculture and Greenhouse Gas Emission Mitigation: A 6G-IoT Perspective. Electronics 2024, 13, 1480.
8. Laveglia, S.; Altieri, G.; Genovese, F.; Matera, A.; Di Renzo, G.C. Advances in Sustainable Crop Management: Integrating Precision Agriculture and Proximal Sensing. AgriEngineering 2024, 6, 3084–3120.
9. Yadav, A.; Yadav, K.; Ahmad, R.; Abd-Elsalam, K.A. Emerging Frontiers in Nanotechnology for Precision Agriculture: Advancements, Hurdles and Prospects. Agrochemicals 2023, 2, 220–256.
10. Khan, N.; Ray, R.L.; Sargani, G.R.; Ihtisham, M.; Khayyam, M.; Ismail, S. Current Progress and Future Prospects of Agriculture Technology: Gateway to Sustainable Agriculture. Sustainability 2021, 13, 4883.
11. Wang, D.; Cao, W.; Zhang, F.; Li, Z.; Xu, S.; Wu, X. A Review of Deep Learning in Multiscale Agricultural Sensing. Remote Sens. 2022, 14, 559.
12. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-Based Plant Height from Crop Surface Models, Visible, and near Infrared Vegetation Indices for Biomass Monitoring in Barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.
13. Radočaj, D.; Šiljeg, A.; Plaščak, I.; Marić, I.; Jurišić, M. A Micro-Scale Approach for Cropland Suitability Assessment of Permanent Crops Using Machine Learning and a Low-Cost UAV. Agronomy 2023, 13, 362.
14. Radočaj, D.; Gašparović, M.; Jurišić, M. Open Remote Sensing Data in Digital Soil Organic Carbon Mapping: A Review. Agriculture 2024, 14, 1005.
15. Wilke, N.; Siegmann, B.; Postma, J.A.; Muller, O.; Krieger, V.; Pude, R.; Rascher, U. Assessment of Plant Density for Barley and Wheat Using UAV Multispectral Imagery for High-Throughput Field Phenotyping. Comput. Electron. Agric. 2021, 189, 106380.
16. Maguluri, L.P.; Geetha, B.; Banerjee, S.; Srivastava, S.S.; Nageswaran, A.; Mudalkar, P.K.; Raj, G.B. Sustainable Agriculture and Climate Change: A Deep Learning Approach to Remote Sensing for Food Security Monitoring. Remote Sens. Earth Syst. Sci. 2024, 7, 709–721.
17. Mohsan, S.A.H.; Khan, M.A.; Noor, F.; Ullah, I.; Alsharif, M.H. Towards the Unmanned Aerial Vehicles (UAVs): A Comprehensive Review. Drones 2022, 6, 147.
18. Aslan, M.F.; Durdu, A.; Sabanci, K.; Ropelewska, E.; Gültekin, S.S. A Comprehensive Survey of the Recent Studies with UAV for Precision Agriculture in Open Fields and Greenhouses. Appl. Sci. 2022, 12, 1047.
19. Benincasa, P.; Antognelli, S.; Brunetti, L.; Fabbri, C.A.; Natale, A.; Sartoretti, V.; Modeo, G.; Guiducci, M.; Tei, F.; Vizzari, M. Reliability of NDVI Derived by High Resolution Satellite and UAV Compared to In-Field Methods for the Evaluation of Early Crop N Status and Grain Yield in Wheat. Exp. Agric. 2018, 54, 604–622.
20. Khanal, S.; Kc, K.; Fulton, J.P.; Shearer, S.; Ozkan, E. Remote Sensing in Agriculture—Accomplishments, Limitations, and Opportunities. Remote Sens. 2020, 12, 3783.
21. Kakarla, S.C.; Costa, L.; Ampatzidis, Y.; Zhang, Z. Applications of UAVs and Machine Learning in Agriculture. In Unmanned Aerial Systems in Precision Agriculture: Technological Progresses and Applications; Zhang, Z., Liu, H., Yang, C., Ampatzidis, Y., Zhou, J., Jiang, Y., Eds.; Springer Nature: Singapore, 2022; pp. 1–19. ISBN 978-981-19-2027-1.
22. Mulla, D.J. Satellite Remote Sensing for Precision Agriculture. In Sensing Approaches for Precision Agriculture; Kerry, R., Escolà, A., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 19–57. ISBN 978-3-030-78431-7.
23. Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Deep Learning Techniques to Classify Agricultural Crops through UAV Imagery: A Review. Neural Comput. Appl. 2022, 34, 9511–9536.
24. Kaushalya Madhavi, B.G.; Bhujel, A.; Kim, N.E.; Kim, H.T. Measurement of Overlapping Leaf Area of Ice Plants Using Digital Image Processing Technique. Agriculture 2022, 12, 1321.
25. Jurado, J.M.; López, A.; Pádua, L.; Sousa, J.J. Remote Sensing Image Fusion on 3D Scenarios: A Review of Applications for Agriculture and Forestry. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102856.
26. Qadri, S.A.A.; Huang, N.-F.; Wani, T.M.; Bhat, S.A. Advances and Challenges in Computer Vision for Image-Based Plant Disease Detection: A Comprehensive Survey of Machine and Deep Learning Approaches. IEEE Trans. Autom. Sci. Eng. 2025, 22, 2639–2670.
27. Younesi, A.; Ansari, M.; Fazli, M.; Ejlali, A.; Shafique, M.; Henkel, J. A Comprehensive Survey of Convolutions in Deep Learning: Applications, Challenges, and Future Trends. IEEE Access 2024, 12, 41180–41218.
28. Retallack, A.; Finlayson, G.; Ostendorf, B.; Lewis, M. Using Deep Learning to Detect an Indicator Arid Shrub in Ultra-High-Resolution UAV Imagery. Ecol. Indic. 2022, 145, 109698.
29. Neupane, K.; Baysal-Gurel, F. Automatic Identification and Monitoring of Plant Diseases Using Unmanned Aerial Vehicles: A Review. Remote Sens. 2021, 13, 3841.
30. Tamayo-Vera, D.; Wang, X.; Mesbah, M. A Review of Machine Learning Techniques in Agroclimatic Studies. Agriculture 2024, 14, 481.
31. Khan, A.; Vibhute, A.D.; Mali, S.; Patil, C.H. A Systematic Review on Hyperspectral Imaging Technology with a Machine and Deep Learning Methodology for Agricultural Applications. Ecol. Inform. 2022, 69, 101678.
32. Kuswidiyanto, L.W.; Noh, H.-H.; Han, X. Plant Disease Diagnosis Using Deep Learning Based on Aerial Hyperspectral Images: A Review. Remote Sens. 2022, 14, 6031.
33. Shahi, T.B.; Xu, C.-Y.; Neupane, A.; Guo, W. Recent Advances in Crop Disease Detection Using UAV and Deep Learning Techniques. Remote Sens. 2023, 15, 2450.
34. Telli, K.; Kraa, O.; Himeur, Y.; Ouamane, A.; Boumehraz, M.; Atalla, S.; Mansoor, W. A Comprehensive Review of Recent Research Trends on Unmanned Aerial Vehicles (UAVs). Systems 2023, 11, 400.
35. Ampatzidis, Y.; Partel, V. UAV-Based High Throughput Phenotyping in Citrus Utilizing Multispectral Imaging and Artificial Intelligence. Remote Sens. 2019, 11, 410.
36. Kerkech, M.; Hafiane, A.; Canals, R. Vine Disease Detection in UAV Multispectral Images Using Optimized Image Registration and Deep Learning Segmentation Approach. Comput. Electron. Agric. 2020, 174, 105446.
37. Csillik, O.; Cherbini, J.; Johnson, R.; Lyons, A.; Kelly, M. Identification of Citrus Trees from Unmanned Aerial Vehicle Imagery Using Convolutional Neural Networks. Drones 2018, 2, 39.
38. Selvaraj, M.G.; Vergara, A.; Montenegro, F.; Ruiz, H.A.; Safari, N.; Raymaekers, D.; Ocimati, W.; Ntamwira, J.; Tits, L.; Omondi, A.B.; et al. Detection of Banana Plants and Their Major Diseases through Aerial Images and Machine Learning Methods: A Case Study in DR Congo and Republic of Benin. ISPRS J. Photogramm. Remote Sens. 2020, 169, 110–124.
39. Reedha, R.; Dericquebourg, E.; Canals, R.; Hafiane, A. Transformer Neural Network for Weed and Crop Classification of High Resolution UAV Images. Remote Sens. 2022, 14, 592.
40. Mazzia, V.; Comba, L.; Khaliq, A.; Chiaberge, M.; Gay, P. UAV and Machine Learning Based Refinement of a Satellite-Driven Vegetation Index for Precision Agriculture. Sensors 2020, 20, 2530.
41. Chicco, D.; Jurman, G. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. BMC Genom. 2020, 21, 6.
42. Duarte-Carvajalino, J.M.; Alzate, D.F.; Ramirez, A.A.; Santa-Sepulveda, J.D.; Fajardo-Rojas, A.E.; Soto-Suarez, M. Evaluating Late Blight Severity in Potato Crops Using Unmanned Aerial Vehicles and Machine Learning Algorithms. Remote Sens. 2018, 10, 1513.
43. Robi, S.N.A.M.; Ahmad, N.; Izhar, M.A.M.; Kaidi, H.M.; Noor, N.M. Utilizing UAV Data for Neural Network-Based Classification of Melon Leaf Diseases in Smart Agriculture. Int. J. Adv. Comput. Sci. Appl. IJACSA 2024, 15, 1212–1219.
44. Shah, S.A.; Lakho, G.M.; Keerio, H.A.; Sattar, M.N.; Hussain, G.; Mehdi, M.; Vistro, R.B.; Mahmoud, E.A.; Elansary, H.O. Application of Drone Surveillance for Advance Agriculture Monitoring by Android Application Using Convolution Neural Network. Agronomy 2023, 13, 1764.
45. PlantVillage-Dataset/Raw/Color at Master—spMohanty/PlantVillage-Dataset. Available online: https://github.com/spMohanty/PlantVillage-Dataset/tree/master/raw/color (accessed on 20 March 2025).
46. Zhou, C.; Ye, H.; Hu, J.; Shi, X.; Hua, S.; Yue, J.; Xu, Z.; Yang, G. Automated Counting of Rice Panicle by Applying Deep Learning Model to Images from Unmanned Aerial Vehicle Platform. Sensors 2019, 19, 3106.
47. Tetila, E.C.; Machado, B.B.; Menezes, G.K.; Oliveira, A.D.S.; Alvarez, M.; Amorim, W.P.; de Souza Belete, N.A.; da Silva, G.G.; Pistori, H. Automatic Recognition of Soybean Leaf Diseases Using UAV Images and Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2020, 17, 903–907.
48. Su, J.; Yi, D.; Su, B.; Mi, Z.; Liu, C.; Hu, X.; Xu, X.; Guo, L.; Chen, W.-H. Aerial Visual Perception in Smart Farming: Field Study of Wheat Yellow Rust Monitoring. IEEE Trans. Ind. Inform. 2021, 17, 2242–2249.
49. Amarasingam, N.; Gonzalez, F.; Salgadoe, A.S.A.; Sandino, J.; Powell, K. Detection of White Leaf Disease in Sugarcane Crops Using UAV-Derived RGB Imagery with Existing Deep Learning Models. Remote Sens. 2022, 14, 6137.
50. Shi, Y.; Han, L.; Kleerekoper, A.; Chang, S.; Hu, T. Novel CropdocNet Model for Automated Potato Late Blight Disease Detection from Unmanned Aerial Vehicle-Based Hyperspectral Imagery. Remote Sens. 2022, 14, 396.
51. Günder, M.; Ispizua Yamati, F.R.; Kierdorf, J.; Roscher, R.; Mahlein, A.-K.; Bauckhage, C. Agricultural Plant Cataloging and Establishment of a Data Framework from UAV-Based Crop Images by Computer Vision. GigaScience 2022, 11, giac054.
52. Deng, J.; Zhang, X.; Yang, Z.; Zhou, C.; Wang, R.; Zhang, K.; Lv, X.; Yang, L.; Wang, Z.; Li, P.; et al. Pixel-Level Regression for UAV Hyperspectral Images: Deep Learning-Based Quantitative Inverse of Wheat Stripe Rust Disease Index. Comput. Electron. Agric. 2023, 215, 108434.
53. Noroozi, H.; Shah-Hosseini, R. Cercospora Leaf Spot Detection in Sugar Beets Using High Spatio-Temporal Unmanned Aerial Vehicle Imagery and Unsupervised Anomaly Detection Methods. J. Appl. Remote Sens. 2024, 18, 024506.
54. Alves Nogueira, E.; Moraes Rocha, B.; da Silva Vieira, G.; Ueslei da Fonseca, A.; Paula Felix, J.; Oliveira, A., Jr.; Soares, F. Enhancing Corn Image Resolution Captured by Unmanned Aerial Vehicles with the Aid of Deep Learning. IEEE Access 2024, 12, 149090–149098.
55. Alirezazadeh, P.; Schirrmann, M.; Stolzenburg, F. A Comparative Analysis of Deep Learning Methods for Weed Classification of High-Resolution UAV Images. J. Plant Dis. Prot. 2024, 131, 227–236.
56. Ribeiro, J.B.; da Silva, R.R.; Dias, J.D.; Escarpinati, M.C.; Backes, A.R. Automated Detection of Sugarcane Crop Lines from UAV Images Using Deep Learning. Inf. Process. Agric. 2024, 11, 385–396.
57. Martins, J.A.C.; Hisano Higuti, A.Y.; Pellegrin, A.O.; Juliano, R.S.; de Araújo, A.M.; Pellegrin, L.A.; Liesenberg, V.; Ramos, A.P.M.; Gonçalves, W.N.; Sant’Ana, D.A.; et al. Assessment of UAV-Based Deep Learning for Corn Crop Analysis in Midwest Brazil. Agriculture 2024, 14, 2029.
58. Mora, J.J.; Selvaraj, M.G.; Alvarez, C.I.; Safari, N.; Blomme, G. From Pixels to Plant Health: Accurate Detection of Banana Xanthomonas Wilt in Complex African Landscapes Using High-Resolution UAV Images and Deep Learning. Discov. Appl. Sci. 2024, 6, 377.
59. Zeng, T.; Wang, Y.; Yang, Y.; Liang, Q.; Fang, J.; Li, Y.; Zhang, H.; Fu, W.; Wang, J.; Zhang, X. Early Detection of Rubber Tree Powdery Mildew Using UAV-Based Hyperspectral Imagery and Deep Learning. Comput. Electron. Agric. 2024, 220, 108909.
60. Slimani, H.; Mhamdi, J.E.; Jilbab, A. Deep Learning Structure for Real-Time Crop Monitoring Based on Neural Architecture Search and UAV. Braz. Arch. Biol. Technol. 2024, 67, e24231141.
61. Poblete-Echeverría, C.; Olmedo, G.F.; Ingram, B.; Bardeen, M. Detection and Segmentation of Vine Canopy in Ultra-High Spatial Resolution RGB Imagery Obtained from Unmanned Aerial Vehicle (UAV): A Case Study in a Commercial Vineyard. Remote Sens. 2017, 9, 268.
62. Lee, J.; Sung, S. Evaluating Spatial Resolution for Quality Assurance of UAV Images. Spat. Inf. Res. 2016, 24, 141–154.
63. Zhang, J.; Su, R.; Fu, Q.; Ren, W.; Heide, F.; Nie, Y. A Survey on Computational Spectral Reconstruction Methods from RGB to Hyperspectral Imaging. Sci. Rep. 2022, 12, 11905.
64. Lan, Y. Overview of Precision Agriculture Aviation Technology. In Precision Agricultural Aviation Application Technology; Lan, Y., Ed.; Springer Nature: Cham, Switzerland, 2025; pp. 1–38. ISBN 978-3-031-89917-1.
65. Logavitool, G.; Horanont, T.; Thapa, A.; Intarat, K.; Wuttiwong, K. Field-Scale Detection of Bacterial Leaf Blight in Rice Based on UAV Multispectral Imaging and Deep Learning Frameworks. PLoS ONE 2025, 20, e0314535.
66. Li, L.; Zhang, S.; Wang, B. Plant Disease Detection and Classification by Deep Learning—A Review. IEEE Access 2021, 9, 56683–56698.
67. Sladojevic, S.; Arsenovic, M.; Anderla, A.; Culibrk, D.; Stefanovic, D. Deep Neural Networks Based Recognition of Plant Diseases by Leaf Image Classification. Comput. Intell. Neurosci. 2016, 2016, 3289801.
68. Ang, K.L.-M.; Seng, J.K.P. Big Data and Machine Learning with Hyperspectral Information in Agriculture. IEEE Access 2021, 9, 36699–36718.
69. Elavarasan, D.; Vincent, P.M.D. Crop Yield Prediction Using Deep Reinforcement Learning Model for Sustainable Agrarian Applications. IEEE Access 2020, 8, 86886–86901.
70. Rodriguez-Conde, I.; Campos, C.; Fdez-Riverola, F. Optimized Convolutional Neural Network Architectures for Efficient On-Device Vision-Based Object Detection. Neural Comput. Appl. 2022, 34, 10469–10501.
71. Sultana, F.; Sufian, A.; Dutta, P. A Review of Object Detection Models Based on Convolutional Neural Network. In Intelligent Computing: Image Processing Based Applications; Mandal, J.K., Banerjee, S., Eds.; Springer: Singapore, 2020; pp. 1–16. ISBN 978-981-15-4288-6.
72. Bouacida, I.; Farou, B.; Djakhdjakha, L.; Seridi, H.; Kurulay, M. Innovative Deep Learning Approach for Cross-Crop Plant Disease Detection: A Generalized Method for Identifying Unhealthy Leaves. Inf. Process. Agric. 2025, 12, 54–67.
73. Gálvez-Gutiérrez, A.I.; Afonso, F.; Martínez-Heredia, J.M. On the Usage of Deep Learning Techniques for Unmanned Aerial Vehicle-Based Citrus Crop Health Assessment. Remote Sens. 2025, 17, 2253.
74. Dhruthi, P.A.; Somashekhar, B.M.; Rani, N.S.; Lin, H. Advanced Plant Species Classification with Interclass Similarity Using a Fine-Grained Computer Vision Approach. In Proceedings of the 2024 International Conference on Advances in Modern Age Technologies for Health and Engineering Science (AMATHE), Shivamogga, India, 16–17 May 2024; pp. 1–9.
75. Albanese, A.; Nardello, M.; Brunelli, D. Low-Power Deep Learning Edge Computing Platform for Resource Constrained Lightweight Compact UAVs. Sustain. Comput. Inform. Syst. 2022, 34, 100725.
76. Zhang, Z.; Song, W.; Wu, Q.; Sun, W.; Li, Q.; Jia, L. A Novel Local Enhanced Channel Self-Attention Based on Transformer for Industrial Remaining Useful Life Prediction. Eng. Appl. Artif. Intell. 2025, 141, 109815.
77. Das, A.; Nandi, A.; Deb, I. Recent Advances in Object Detection Based on YOLO-V4 and Faster RCNN. In Mathematical Modeling for Computer Applications; John Wiley & Sons, Ltd: Hoboken, NJ, USA, 2024; pp. 405–417. ISBN 978-1-394-24843-8.
78. Albahli, S. AgriFusionNet: A Lightweight Deep Learning Model for Multisource Plant Disease Diagnosis. Agriculture 2025, 15, 1523.
79. Huang, Z.; Bai, X.; Gouda, M.; Hu, H.; Yang, N.; He, Y.; Feng, X. Transfer Learning for Plant Disease Detection Model Based on Low-Altitude UAV Remote Sensing. Precis. Agric. 2024, 26, 15.
80. Zhang, Y.; Lin, Z.; Chen, Z.; Fang, Z.; Chen, X.; Zhu, W.; Zhao, J.; Gao, Y. SatFed: A Resource-Efficient LEO-Satellite-Assisted Heterogeneous Federated Learning Framework. Engineering 2025, in press.

| Publication Year | Country | Study Area | Imaging Sensors | Vegetation Indices | UAV Platform (Type and Model) | GSD | Reference |
|---|---|---|---|---|---|---|---|
| 2018 | USA | 64.6 ha | Multispectral (4 bands) | NDVI | Fixed wing (senseFly eBee) | 12 cm | [37] |
| 2018 | Colombia | / | Multispectral (4 bands) | NDVI | Multirotor (3DR IRIS+) | / | [42] |
| 2019 | China | / | RGB | / | Multirotor (DJI Matrice 600) | 2.51 cm | [46] |
| 2019 | USA | 5.7 ha | Multispectral (5 bands) | NDVI | Multirotor (DJI Matrice 600) | / | [35] |
| 2020 | France | 3.3 ha | Multispectral (4 bands) | NDVI | Multirotor (Scanopy) | 1 cm | [36] |
| 2020 | DR Congo and Benin | / | Multispectral (5 bands) | 13 indices | Multirotor (DJI Phantom 4 Multispectral) | 3.5–8.0 cm | [38] |
| 2020 | Brazil | / | RGB | / | Multirotor (DJI Phantom 3) | / | [47] |
| 2020 | Italy | 2.5 ha | Multispectral (4 bands) | NDVI | / | 5 cm | [40] |
| 2021 | China | / | Multispectral (5 bands) | OSAVI | Multirotor (DJI Matrice 100) | 1.3 cm | [48] |
| 2022 | France | 4 ha | RGB | / | Multirotor (EagleView Starfury) | / | [39] |
| 2022 | Sri Lanka | 0.75 ha | RGB | / | Multirotor (DJI Phantom 4) | 1.1 cm | [49] |
| 2022 | China | 0.25 ha | Hyperspectral (125 bands) | / | Multirotor (DJI S1000) | 2.5 cm | [50] |
| 2022 | Germany | 0.3 ha | RGB | NGRDI, GLI | Multirotor (DJI Matrice 600) | / | [51] |
| 2023 | Pakistan | / | RGB | / | Multirotor (DJI Mini 2) | / | [44] |
| 2023 | China | 1.36 ha | Hyperspectral (176 bands) | BI, DBSI | Multirotor (DJI Matrice 600) | / | [52] |
| 2024 | Germany | 0.3 ha | Multispectral (6 bands) | 9 indices | Multirotor (DJI Matrice 210) | / | [53] |
| 2024 | USA | / | RGB | / | Multirotor (DJI Matrice 100) | 0.5 cm | [54] |
| 2024 | Germany | 0.77 ha | RGB | / | Multirotor (OktopusXL) | / | [55] |
| 2024 | Brazil | / | RGB | / | Fixed wing (senseFly eBee) | 5.3 cm | [56] |
| 2024 | Brazil | / | RGB | / | Multirotor (DJI Mavic 2 Pro) | 3 cm | [57] |
| 2024 | DR Congo | / | Multispectral (5 bands) | 7 indices | Multirotor (DJI Phantom 4) | 6.5 cm | [58] |
| 2024 | China | 1.6 ha | Hyperspectral (150 bands) | 22 indices | Multirotor (DJI Matrice 600) | 10 cm | [59] |
| 2024 | Malaysia | / | RGB | / | Multirotor (DJI Mavic Air 2s) | / | [43] |
| 2024 | Morocco | / | RGB | / | Multirotor (DJI Mavic 3) | / | [60] |
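
Several of the vegetation indices named in the table above (NDVI, OSAVI, NGRDI, GLI) are simple band ratios. The following is a minimal sketch of their commonly used definitions, not code from any of the reviewed studies. The function names, the zero-denominator guard, and the sample reflectance values are assumptions made for illustration; the OSAVI soil adjustment of 0.16 is the commonly cited default, and individual studies may use other variants.

```python
def _ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator != 0 else 0.0


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - R) / (NIR + R)."""
    return _ratio(nir - red, nir + red)


def osavi(nir, red, soil_factor=0.16):
    """Optimized Soil-Adjusted Vegetation Index: (NIR - R) / (NIR + R + 0.16)."""
    return _ratio(nir - red, nir + red + soil_factor)


def ngrdi(green, red):
    """Normalized Green-Red Difference Index: (G - R) / (G + R). RGB only."""
    return _ratio(green - red, green + red)


def gli(red, green, blue):
    """Green Leaf Index: (2G - R - B) / (2G + R + B). RGB only."""
    return _ratio(2 * green - red - blue, 2 * green + red + blue)


# Hypothetical reflectance values (0 to 1) for a single canopy pixel.
pixel = {"blue": 0.04, "green": 0.10, "red": 0.05, "nir": 0.45}

print("NDVI :", round(ndvi(pixel["nir"], pixel["red"]), 3))
print("OSAVI:", round(osavi(pixel["nir"], pixel["red"]), 3))
print("NGRDI:", round(ngrdi(pixel["green"], pixel["red"]), 3))
print("GLI  :", round(gli(pixel["red"], pixel["green"], pixel["blue"]), 3))
```

NDVI and OSAVI need a near-infrared band, so they apply to the multispectral and hyperspectral rows, while NGRDI and GLI can be derived from RGB imagery alone.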

| Publication Year | Country | Crops | Deep Learning Algorithms | Maximum Accuracy Metrics | Reference |
|---|---|---|---|---|---|
| 2018 | USA | Citrus trees | CNN | F1-score = 96.24% | [37] |
| 2018 | Colombia | Potato | CNN (custom) | / | [42] |
| 2019 | China | Rice | CNN (AlexNet, VGG, Inception-v3, improved R-FCN) | OA = 91.67%, F1-score = 87.4% | [46] |
| 2019 | USA | Citrus trees | CNN (YOLOv3) | F1-score = 99.8% | [35] |
| 2020 | France | Vineyard | CNN (SegNet) | OA = 95.02%, F1-score = 97.66% | [36] |
| 2020 | DR Congo and Benin | Banana plants | CNN (VGG-16) | OA = 97% | [38] |
| 2020 | Brazil | Soybean | CNN (Inception-v3, ResNet, VGG-19) | OA = 99.04% | [47] |
| 2020 | Italy | Vineyard | CNN (RarefyNet) | / | [40] |
| 2021 | China | Wheat | CNN (U-Net) | F1-score = 92% | [48] |
| 2022 | France | Spinach | CNN (ViT, ResNet, EfficientNet) | F1-score = 99.4% | [39] |
| 2022 | Sri Lanka | Sugarcane | YOLOv5, YOLOR, DETR, Faster R-CNN | mAP = 79% | [49] |
| 2022 | China | Potato | Custom CNN, 3D-CNN | OA = 97.33% | [50] |
| 2022 | Germany | Sugar beet, cauliflower | Mask R-CNN | Precision > 95%, recall > 97% | [51] |
| 2023 | Pakistan | Tomato, potato, pepper | EfficientNet-B3 | F1-score = 98.8% | [44] |
| 2023 | China | Wheat | DeepLabv3+, HRNet, OCRNet, U-Net | R² = 0.875 | [52] |
| 2024 | Germany | Sugar beet | Custom hybrid model | F1-score = 78.76% | [53] |
| 2024 | USA | Maize | MuLUT, LeRF, Real-ESRGAN | / | [54] |
| 2024 | Germany | Wheat | MobileNet, ResNet, MobileViT | OA = 89.06%, F1-score = 88.95% | [55] |
| 2024 | Brazil | Sugarcane | U-Net, LinkNet, PSPNet | Dice coefficient = 0.721 | [56] |
| 2024 | Brazil | Maize | FCN, DeepLabv3+, SegFormer | OA = 91.41% | [57] |
| 2024 | DR Congo | Banana | Faster R-CNN, YOLOv8 | F1-score = 98% | [58] |
| 2024 | China | Rubber tree | MSA-CNN | OA = 94.11% | [59] |
| 2024 | Malaysia | Melon | YOLOv8 | mAP = 83.2% | [43] |
| 2024 | Morocco | Beans | YOLOv5, YOLOv8, Faster R-CNN, YOLO-NAS | mAP = 73.7% | [60] |
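
The accuracy metrics in the table above are not directly interchangeable, which is easier to see when they are computed side by side. The sketch below derives overall accuracy (OA), precision, recall, F1-score, the Dice coefficient, and the Matthews correlation coefficient (MCC, discussed in [41]) from one binary confusion matrix. The function name and the confusion-matrix counts are hypothetical and do not come from any reviewed study; mAP and R² are omitted because they require ranked detections and continuous targets, respectively.

```python
import math


def binary_metrics(tp, fp, fn, tn):
    """Compute common accuracy metrics from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    # For a binary mask, the Dice coefficient is algebraically equal to F1.
    dice = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    oa = (tp + tn) / total if total else 0.0
    mcc_denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / mcc_denominator if mcc_denominator else 0.0
    return {"oa": oa, "precision": precision, "recall": recall,
            "f1": f1, "dice": dice, "mcc": mcc}


# Hypothetical counts: 95 diseased and 905 healthy samples (imbalanced classes).
metrics = binary_metrics(tp=90, fp=10, fn=5, tn=895)
for name, value in metrics.items():
    print(f"{name:9s} = {value:.4f}")
```

With these imbalanced counts, OA is noticeably higher than F1 because the many correctly classified healthy samples dominate it, so an OA value from one study and an F1-score from another should be compared with caution.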

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Radočaj, D.; Radočaj, P.; Plaščak, I.; Jurišić, M. Evolution of Deep Learning Approaches in UAV-Based Crop Leaf Disease Detection: A Web of Science Review. Appl. Sci. 2025, 15, 10778. https://doi.org/10.3390/app151910778
