1. Introduction

The proliferation of large language models (LLMs) has marked a new era in content generation, with models like GPT-3 [1], GLM [2], ERNIE 3.0 [3], and Qwen [4] demonstrating remarkable capabilities. Yet this advancement has simultaneously opened a Pandora’s box for the spread of sophisticated disinformation [5]. The ability of these models to produce fluent, coherent, and contextually appropriate text has made it increasingly challenging to distinguish between human-written and machine-generated content [6]. This challenge forms the basis of a fundamental research problem: the detection of fake AI-generated text, which now attracts significant attention across various domains [7,8].

While the detection of fake text is a general problem, its implications become particularly critical in high-stakes domains such as cybersecurity [9]. In this field, cybersecurity threat intelligence (CTI) is a foundational element of modern defense systems, providing actionable knowledge about existing and emerging threats [10]. The authenticity and reliability of CTI are paramount; however, the very LLMs that advance technology can be weaponized by malicious actors to generate convincing but fake CTI at scale [11,12]. When disseminated in open-source communities or intelligence feeds, these fabricated reports can be used to mount sophisticated data poisoning attacks [13]. Such attacks can distort data distributions, mislead security analysts, and ultimately compromise the AI-based detection infrastructures that rely on this data [14]. A notable example occurred in January 2021, when a state-sponsored campaign used fabricated cybersecurity reports to deceive security researchers [15].

Despite the gravity of this threat, the problem of fake CTI has often been overlooked in the broader context of cybersecurity research. Many threat intelligence solutions assume the authenticity of the input CTI by default [16,17]. While some recent studies have begun to address CTI quality and credibility assessment [18,19], there remains a lack of specialized models and, crucially, a lack of public, domain-specific datasets tailored for the task of fake CTI detection. This research gap presents a significant vulnerability in the cybersecurity ecosystem.

This paper addresses this gap by framing the fake CTI problem as a specific and critical instance of the broader fake text detection challenge. We explore the entire lifecycle of a data poisoning attack in this domain, from generation to detection. We propose a systematic approach that first generates realistic fake CTIs using a fine-tuned General Language Model (GLM) and then detects them accurately using an efficient and effective TextCNN-based classification model.

The main contributions of this paper are as follows:

We model and simulate the complete data poisoning attack lifecycle in the CTI domain, from the generation of fake intelligence using a reproducible, parameter-efficient fine-tuned GLM-based scheme (FCTIG) to its subsequent detection.

We construct and will make publicly available a novel, domain-specific dataset for fake CTI detection. This dataset, comprising both real and generated CTIs, provides a valuable resource for future research in this area.

We propose an efficient classification model (FCTICM-TC) based on a BERT-TextCNN architecture, which is tailored to the specific characteristics of CTI data and demonstrates strong performance in distinguishing between authentic and fake intelligence.

Through extensive experiments, we validate the effectiveness of our end-to-end method (FCTIM), confirming its practical value in enhancing the robustness of CTI-driven security systems against disinformation attacks.

2. Related Work

2.1. Advances in Generative Language Models

The landscape of natural language processing has been reshaped by the advent of transformer-based LLMs, beginning with the seminal “Attention Is All You Need” paper [20]. Models like BERT revolutionized language understanding through bidirectional pre-training [21], while generative models like GPT demonstrated an uncanny ability for few-shot learning [1]. Subsequent architectures such as GLM [2], ERNIE [3], and Qwen [4] have further pushed the boundaries of language understanding and generation. The development of efficient fine-tuning techniques like LoRA has made it feasible to adapt these large models for specialized tasks [22], a technique we leverage in our fake CTI generation process.

2.2. Detection of AI-Generated Text

As generative models have grown more powerful, the detection of AI-generated text has emerged as a crucial and active research area. Methodologies can be broadly categorized into two streams. The first, and most common, involves training dedicated classifiers. This often entails fine-tuning pre-trained models like BERT on datasets containing both human and machine text [23]. The second stream focuses on statistical or feature-based methods. These approaches analyze intrinsic properties of the text, such as perplexity, token distributions, or other linguistic artifacts, to identify machine-generated content without the need for extensive training [6,8]. Our work contributes to the first stream by developing a specialized classifier, but we also benchmark our model against several feature-based methods to ensure a comprehensive evaluation.

2.3. Text Classification Architectures

Text classification is a foundational task in NLP, and various neural architectures have been developed for this purpose. Convolutional Neural Networks (CNNs) were successfully adapted for sentence classification by capturing local n-gram features through convolutional filters [24]. Early work demonstrated the power of deep CNNs for this task [25], with subsequent research exploring methods to initialize filters with semantic features to improve performance [26]. Other popular architectures include Recurrent Neural Networks (RNNs), often augmented with attention mechanisms [27], and hybrid models like BiLSTM-CRF combined with CNNs [28]. The BERT-TextCNN architecture we employ in this paper is a hybrid model that aims to combine the deep contextual understanding of BERT with the efficient local feature extraction capabilities of TextCNN, which we argue is particularly suitable for the concise and entity-rich nature of CTI data.

2.4. AI, Disinformation, and Cybersecurity

The intersection of AI and cybersecurity is a dual-use domain [9,12]. While AI can enhance threat detection and response, it can also be exploited by adversaries. The generation of fake CTI is a prime example of such malicious use, falling under the broader umbrella of data poisoning attacks [13,14]. Prior research has confirmed the feasibility of using transformer models to generate plausible fake CTI reports [7,11], thereby validating the threat model that our work is built upon. The unstructured nature of threat intelligence makes it a fertile ground for such attacks [29]. Our research directly confronts this challenge by providing a systematic methodology for both simulating and detecting these advanced, AI-driven disinformation campaigns within the critical domain of cybersecurity.

3. Fake CTI Generation and Data Poisoning Attack

The purposeful generation of cybersecurity analysis texts, a key technique in data poisoning attacks, is employed to produce fake CTIs. This approach not only misleads the training of detection models but also adversely affects their learned recognition patterns. The CTI text generation not only involves extracting or selecting parts of existing texts, but also creatively generates non-existent statements that align with the contextual meaning, ensuring logical coherence in the cybersecurity analysis texts. Utilizing the well-known transformer architecture, a transformer-based generation pre-training general language model (GLM) [

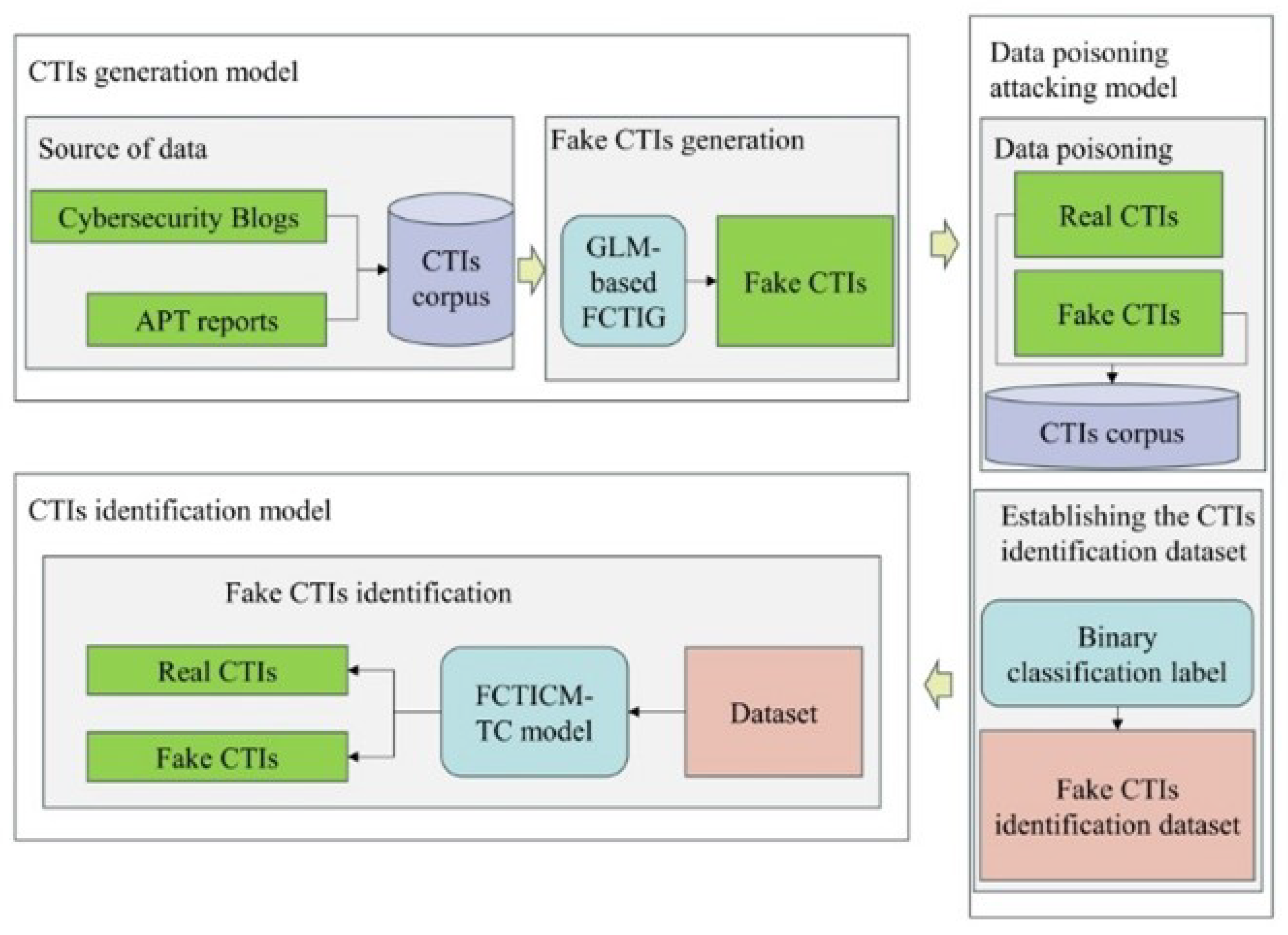

2], and employing guidance from reputable open-source CTIs, a GLM-based fake CTI generation (GLM-based FCTIG) scheme is developed, as shown in

Figure 1. This figure also depicts the infrastructure of the fake CTI generation scheme which comprises two main components, the fake CTI generation model and the fake CTI generation method.

3.1. Fake CTI Generation Method

To generate realistic fake CTIs, our scheme adapts a public, pre-trained General Language Model (GLM) to the cybersecurity domain. This is achieved through a parameter-efficient fine-tuning (PEFT) technique known as Low-Rank Adaptation (LoRA) [22]. Instead of training all model parameters, LoRA introduces small, trainable matrices into the transformer layers, dramatically reducing computational requirements while effectively capturing domain-specific knowledge.

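We fine-tuned the ChatGLM-6B model on our corpus of 5381 authentic CTI reports. The LoRA adapter was configured with a rank $r$ of 8 and a scaling factor $\alpha$ of 16, and the model was trained to maximize the standard language modeling objective of Equation (1):

$$\max_{\Theta}\ \sum_{t=1}^{T} \log P_{\Phi_0 + \Delta\Phi(\Theta)}\left(x_t \mid x_{<t}\right) \tag{1}$$

where $x_1, \dots, x_T$ is a tokenized CTI report, $\Phi_0$ denotes the frozen pre-trained weights, and $\Delta\Phi(\Theta)$ is the low-rank update parameterized by the trainable LoRA matrices $\Theta$. This process yields a specialized generator capable of producing high-fidelity fake CTIs in a fully reproducible, offline manner.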
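The low-rank update described above can be illustrated with a small NumPy sketch. It is a toy under stated assumptions, not the training code: `LoRALinear` is a hypothetical name, the initialisation scale of A is an assumption, and which ChatGLM-6B weight matrices receive adapters is not modelled. It shows the forward pass $xW^\top + (\alpha/r)\,xA^\top B^\top$ with r = 8 and α = 16, and why the number of trainable parameters stays small.

```python
import numpy as np


class LoRALinear:
    """Hypothetical toy layer: frozen weight W plus a trainable low-rank update.

    Forward pass: h = x W^T + (alpha / r) * x A^T B^T, where only A and B are trained.
    """

    def __init__(self, weight: np.ndarray, r: int = 8, alpha: float = 16.0, seed: int = 0):
        d_out, d_in = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight                            # frozen pre-trained matrix, shape (d_out, d_in)
        self.A = rng.normal(0.0, 0.01, size=(r, d_in))  # trainable down-projection (assumed init scale)
        self.B = np.zeros((d_out, r))                   # trainable up-projection, zero at start
        self.scaling = alpha / r                        # 16 / 8 = 2.0 for the values in the text

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out)
        return x @ self.weight.T + self.scaling * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size


# Usage on a 1024 x 1024 projection (size chosen for illustration only)
W = np.random.default_rng(1).normal(size=(1024, 1024))
layer = LoRALinear(W, r=8, alpha=16)
x = np.ones((2, 1024))
print(layer.trainable_params(), W.size)  # 16384 1048576
# Because B starts at zero, the adapted layer initially reproduces the frozen layer.
print(np.allclose(layer(x), x @ W.T))    # True
```

Because B is zero-initialised, fine-tuning starts from the unmodified pre-trained model; in this toy the adapter has 16,384 trainable entries against 1,048,576 in the frozen matrix.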

3.2. Generating Fake CTI Texts

To keep the generated texts on topic, words are sampled with the Top-p method [20]. At generation step $i$, the sampling range is the set $V^{(p)}$, the smallest set of most probable words that satisfies

$$\sum_{x \in V^{(p)}} P(x \mid x_{1:i-1}) \ge p,$$

where $p$ is a pre-set hyper-parameter. The words in $V^{(p)}$ are kept as candidates, and the original probability distribution is renormalized over them according to Equation (2):

$$P'(x \mid x_{1:i-1}) = \begin{cases} \dfrac{P(x \mid x_{1:i-1})}{\sum_{x' \in V^{(p)}} P(x' \mid x_{1:i-1})}, & x \in V^{(p)} \\ 0, & \text{otherwise} \end{cases} \tag{2}$$

A word is then sampled from this recalculated distribution as the output. The process is iterative: each output is fed back into the GLM pre-trained model to generate the next word. By repeating these calculations, the model produces authentic-looking fake CTI texts.
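Equation (2) and the feedback loop can be sketched in NumPy as follows. `top_p_filter`, `generate`, and the toy distribution are hypothetical; `next_token_probs` stands in for the fine-tuned GLM, and p = 0.9 is an illustrative default rather than the value in Table 1.

```python
import numpy as np


def top_p_filter(probs: np.ndarray, p: float) -> np.ndarray:
    """Renormalised distribution of Equation (2): keep the nucleus V^(p), zero the rest."""
    order = np.argsort(probs)[::-1]                   # token ids, most probable first
    cumulative = np.cumsum(probs[order])
    cutoff = int(np.searchsorted(cumulative, p)) + 1  # smallest prefix whose mass reaches p
    nucleus = order[:cutoff]
    filtered = np.zeros_like(probs, dtype=float)
    filtered[nucleus] = probs[nucleus]
    return filtered / filtered.sum()


def generate(seed_ids, next_token_probs, p=0.9, max_new_tokens=20, rng=None):
    """Autoregressive loop: each sampled token is fed back as context for the next step."""
    rng = rng if rng is not None else np.random.default_rng()
    ids = list(seed_ids)
    for _ in range(max_new_tokens):
        probs = next_token_probs(ids)                 # hypothetical stand-in for the fine-tuned GLM
        filtered = top_p_filter(probs, p)
        ids.append(int(rng.choice(len(probs), p=filtered)))
    return ids


# Toy 4-token vocabulary with a fixed next-token distribution
toy_model = lambda ids: np.array([0.5, 0.3, 0.15, 0.05])
print(top_p_filter(toy_model([]), p=0.9))  # token 3 falls outside the nucleus and gets probability 0
```

Smaller values of p shrink the nucleus toward greedy decoding; with p = 0.1 the toy model above always emits its most probable token.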

3.3. Examining Data Poisoning Attacks

Attackers can mount data poisoning attacks at various stages of the CTI lifecycle, for example during CTI storage and processing, or even within the open-source community. In these attacks, they may mislead analysts or even obscure real attack activities by forging the sources of CTIs, altering their content, and deleting genuine data [14].

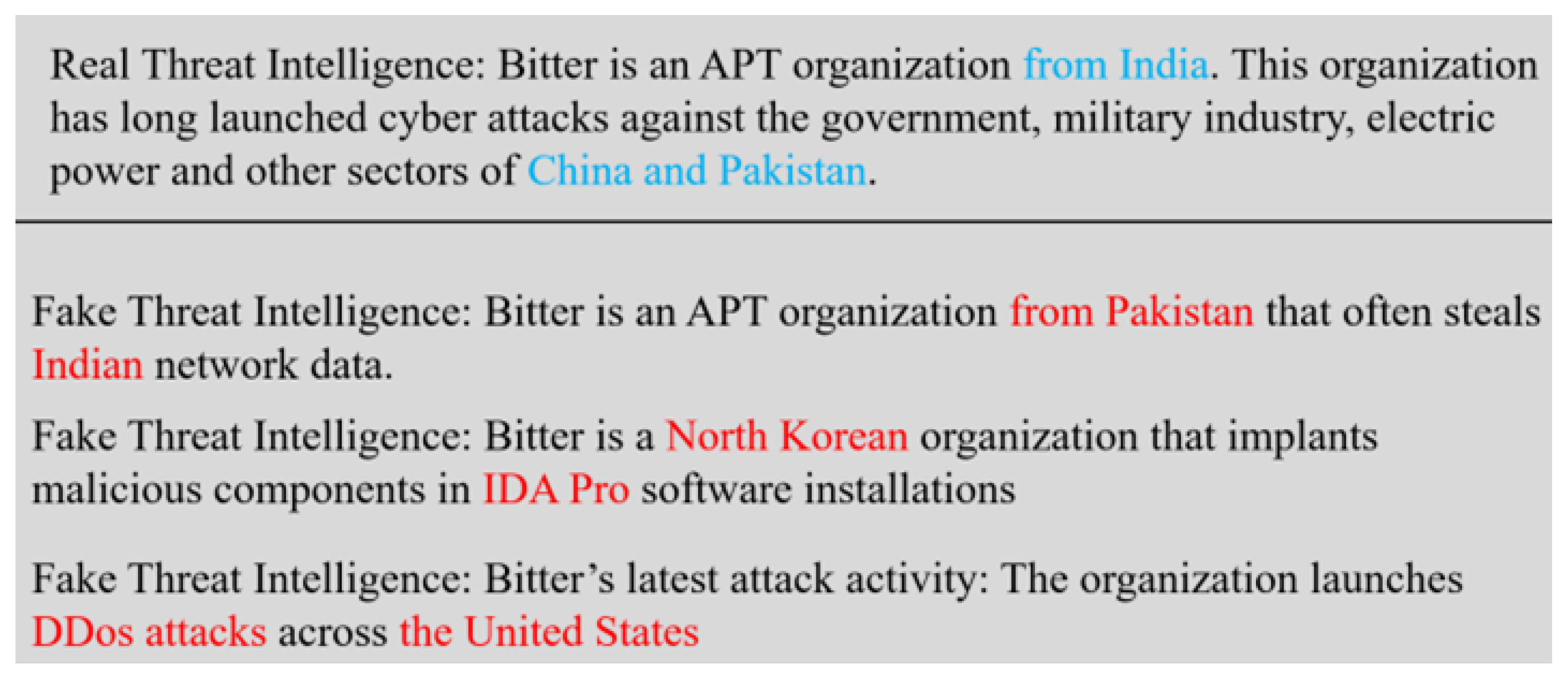

To reveal the mechanism of data poisoning attacks more precisely and to identify fake CTIs effectively, we built a dataset of fake CTI analysis reports from the GLM-based FCTIG scheme described above and real cybersecurity threat analysis reports. First, the real cybersecurity threat analysis reports are truncated, and a fixed-length segment from the beginning of each report text is selected as the generation seed. The seed length was set to 15 words based on a preliminary analysis of our CTI corpus. We observed that the first 10–20 words of a CTI report typically contain the core identifying information, such as the threat actor, malware family, or primary target. We chose 15 words as a balance: it is long enough to give our GLM-based generator sufficient context to produce coherent text, yet short enough to represent a concise piece of intelligence that an attacker could feasibly manipulate to mislead analysts at a glance. Next, the trained GLM-based FCTIG scheme randomly generates fake CTI-like reports. To increase the randomness of the generated texts, each real statement is paired with at least three fake statements. Finally, the fake analysis report statements are labeled ‘0’ and the real analysis report statements are labeled ‘1’, which yields a labeled dataset of cybersecurity threat analysis reports. An example of real cybersecurity threat analysis reports and the corresponding fake CTIs generated by the GLM-based FCTIG scheme (i.e., Figure 1) is shown in Figure 2. As Figure 2 illustrates, even professional analysts find it difficult to distinguish the fake CTIs from the real ones. Some elements are not only misleading but also invalid, contradicting what exists in the real world.
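The seed-and-label procedure above can be summarised in a short Python sketch. `make_seed`, `build_labeled_dataset`, and the stand-in generator are hypothetical names; whitespace tokenisation is an assumption, and exactly three fakes per real statement is used because it matches the 50,615 real and 151,845 fake CTIs reported in Section 6.

```python
from typing import Callable, List, Tuple

SEED_WORDS = 15      # seed length stated in the text
FAKES_PER_REAL = 3   # the text says "at least three"; three matches the counts in Section 6


def make_seed(statement: str, n_words: int = SEED_WORDS) -> str:
    """Keep the first n_words whitespace-separated words of a real CTI statement."""
    return " ".join(statement.split()[:n_words])


def build_labeled_dataset(
    real_statements: List[str],
    generate_fake: Callable[[str], str],  # hypothetical wrapper around the GLM-based FCTIG scheme
    k: int = FAKES_PER_REAL,
) -> List[Tuple[str, int]]:
    dataset: List[Tuple[str, int]] = []
    for statement in real_statements:
        dataset.append((statement, 1))                              # real CTI -> label 1
        seed = make_seed(statement)
        dataset.extend((generate_fake(seed), 0) for _ in range(k))  # fake CTI -> label 0
    return dataset


# Usage with a trivial stand-in generator and a placeholder sentence
fake_gen = lambda seed: seed + " ... (generated continuation)"
real = ["The Bitter group originally from India primarily targets Pakistan and China "
        "and this sample sentence is only a placeholder"]
data = build_labeled_dataset(real, fake_gen)
print([label for _, label in data])  # [1, 0, 0, 0]
```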

As shown in Figure 2, the “Bitter” group, originally from India, primarily targets Pakistan and China. The fake CTIs depicted in Figure 2, however, alter the group’s original location, modify its attack targets, and even insert fabricated details such as IDA Pro malware, a DDoS attack mode, and other forged information. Despite these changes, the fake CTIs generated by the GLM-based FCTIG scheme keep a correct grammatical structure and a logical language flow, with no grammatical errors. This makes it difficult even for seasoned cybersecurity experts to identify flaws or inaccuracies without prior knowledge of the discrepancies, even though the altered content contradicts the actual facts. Attackers can exploit such fabricated CTI reports by strategically distributing similar deceptive content across the open Internet or other platforms, leading cybersecurity analysts to misinterpret and misanalyze real cybersecurity incidents. This form of data poisoning attack can effectively conceal attackers’ true intentions and behaviors. Similar incidents occur frequently, and data poisoning attacks extend to other areas, such as influencing public opinion. Consequently, these fake CTIs can cause unforeseen damage to the cybersecurity world.

4. Fake CTI Identification Model

To accurately detect the fake CTIs resulting from data poisoning attacks, we transform the challenge of distinguishing authentic CTIs from counterfeit CTIs into a text classification problem. Although modern end-to-end transformer architectures are powerful, we deliberately chose a hybrid BERT-TextCNN model. The rationale for selecting TextCNN as the classification head is twofold: (1) Computational efficiency: TextCNN has a significantly lower computational overhead than full transformer-based classifiers, making it more suitable for real-world security applications where inference speed can be critical. (2) Feature extraction suitability: CTI texts are often short and contain key threat indicators as local n-gram patterns (e.g., “malware family X,” “targets Y”), which TextCNN captures well through its convolutional filters. By using a pre-trained BERT for word embeddings, our model leverages the deep contextual understanding of transformers, while the TextCNN layer provides an efficient and effective mechanism for the final classification. Accordingly, we propose a classification model designed specifically for this purpose, the fake CTI classification model based on TextCNN (FCTICM-TC), as depicted in Figure 3. The model has a six-layer architecture comprising word embedding, convolution, activation function, pooling, fully connected, and sigmoid binary classification layers.

The core of our FCTICM-TC model, depicted in Figure 3, is an end-to-end fine-tuning approach. The input text vectors $X$ are first processed by a BERT layer. Critically, unlike methods that use BERT as a static feature extractor [30], our approach unfreezes the BERT parameters during training. This allows the encoder to adapt its contextual representations to the nuances of the CTI domain. The output is a matrix of high-dimensional, task-adapted embeddings, $E$, which serves as a rich input to the subsequent TextCNN layers. Because convolutional neural networks are adept at extracting local correlations from spatial information [31], TextCNN is a particularly suitable architecture for identifying key patterns in CTI texts [25,26,28,32,33]. The FCTICM-TC model is built on this basis, and its TextCNN processing steps are as follows.

First, the output of the BERT word-embedding layer, namely the word-embedding matrix $E$, is convolved. Because each word vector produced by BERT is treated as an indivisible whole, the filters span the full embedding dimension and slide only in the vertical (token) direction, so a one-dimensional convolution suffices for feature extraction. Because the vocabulary and syntax of cybersecurity report texts are complex, three groups of convolutional layers extract features from different perspectives; each group has 256 convolution kernels, with kernel sizes of 2, 3, and 4, respectively. The output of each convolution is passed through a Rectified Linear Unit (ReLU) activation function. Max pooling then reduces the dimensionality of the feature matrix, retaining the primary features and filtering out irrelevant ones, which improves the generalization ability of the model and helps prevent overfitting. Finally, the groups of features are concatenated and integrated through a fully connected layer to obtain high-level features. Denoting the output of the TextCNN by $y$, its computation is expressed in Equations (3) and (4):

$$c_i = \mathrm{MaxPool}\big(\mathrm{ReLU}(\mathrm{Conv}_i(E))\big), \quad i = 1, 2, 3 \tag{3}$$

$$y = \mathrm{FC}\big(\mathrm{Concat}(c_1, c_2, c_3)\big) \tag{4}$$

where $\mathrm{Conv}_i$ represents the $i$-th convolution layer, $\mathrm{MaxPool}$ denotes the max pooling layer, $\mathrm{Concat}$ denotes the concatenation of the three groups of feature matrices, and $\mathrm{FC}$ denotes a fully connected layer.
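To trace Equations (3) and (4) concretely, the following NumPy forward pass is a minimal sketch under several assumptions: random (untrained) weights, a single output logit from the fully connected layer, no padding in the convolutions, and a small embedding size in place of the BERT hidden size. Kernel sizes 2, 3, 4 follow the text (256 filters per group is the default, reduced to 8 in the usage line for speed); all names are hypothetical.

```python
import numpy as np


def conv1d_valid(E: np.ndarray, kernels: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """Slide each kernel along the token axis only (no padding).

    E: (seq_len, emb_dim); kernels: (num_filters, k, emb_dim) -> (seq_len - k + 1, num_filters)
    """
    k = kernels.shape[1]
    windows = np.stack([E[t:t + k] for t in range(E.shape[0] - k + 1)])  # (positions, k, emb_dim)
    return np.einsum("tkd,fkd->tf", windows, kernels) + bias


class TextCNNHead:
    """Hypothetical NumPy forward pass of Equations (3)-(4); weights are random, not trained."""

    def __init__(self, emb_dim: int, kernel_sizes=(2, 3, 4), num_filters: int = 256, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.convs = [
            (rng.normal(0.0, 0.02, size=(num_filters, k, emb_dim)), np.zeros(num_filters))
            for k in kernel_sizes
        ]
        self.fc_w = rng.normal(0.0, 0.02, size=len(kernel_sizes) * num_filters)  # FC to one logit
        self.fc_b = 0.0

    def __call__(self, E: np.ndarray) -> float:
        pooled = []
        for kernels, bias in self.convs:
            feature_map = np.maximum(conv1d_valid(E, kernels, bias), 0.0)  # ReLU(Conv_i(E))
            pooled.append(feature_map.max(axis=0))                        # MaxPool over positions -> c_i
        z = np.concatenate(pooled)                                        # Concat(c_1, c_2, c_3)
        return float(z @ self.fc_w + self.fc_b)                           # FC -> logit y


# Usage: E stands in for a BERT output matrix (32 tokens x 64 dims here, for speed)
E = np.random.default_rng(42).normal(size=(32, 64))
head = TextCNNHead(emb_dim=64, num_filters=8)
y = head(E)
```

A trainable model would express the same structure in a deep learning framework (for example with `torch.nn.Conv1d`), so that BERT and the TextCNN layers can be fine-tuned jointly as described above.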

In Figure 3, the sigmoid function acts as a logistic regression layer: it maps a real-valued input of any magnitude into a value between 0 and 1, so its output can represent the probability of a class in a binary classification task. Since fake CTIs are labeled 0 and true CTIs are labeled 1, the sigmoid output denotes the probability that the input is a true CTI. The sigmoid function is calculated as Equation (5):

$$\hat{p} = \sigma(y) = \frac{1}{1 + e^{-y}} \tag{5}$$

Cross entropy, as a loss function, measures the difference between the predicted probability distribution and the actual probability distribution. We use the cross-entropy loss to train and evaluate the proposed FCTICM-TC model on its ability to detect fake CTIs. The training loss is calculated as Equation (6):

$$\mathcal{L} = -\frac{1}{N}\sum_{i=1}^{N}\Big[t_i \log \hat{p}_i + (1 - t_i)\log\big(1 - \hat{p}_i\big)\Big] \tag{6}$$

where $N$ is the total number of instances, $t_i$ denotes the true label of the $i$-th instance (0 or 1), and $\hat{p}_i$ represents the predicted probability for the $i$-th instance.
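Equations (5) and (6) translate directly into a few lines of NumPy. This is an illustrative sketch with hypothetical function names; the clipping constant `eps` is an assumption added to avoid log(0).

```python
import numpy as np


def sigmoid(y):
    """Equation (5): map a logit y to the probability that the CTI is real (label 1)."""
    return 1.0 / (1.0 + np.exp(-np.asarray(y, dtype=float)))


def binary_cross_entropy(labels, probs, eps: float = 1e-12) -> float:
    """Equation (6): mean binary cross-entropy; probs are clipped to avoid log(0)."""
    t = np.asarray(labels, dtype=float)
    p = np.clip(np.asarray(probs, dtype=float), eps, 1.0 - eps)
    return float(-np.mean(t * np.log(p) + (1.0 - t) * np.log(1.0 - p)))


logits = np.array([2.0, -2.0])  # one confident "real" and one confident "fake" prediction
labels = np.array([1, 0])       # 1 = real CTI, 0 = fake CTI
print(binary_cross_entropy(labels, sigmoid(logits)))
```

In practice, frameworks usually fuse the two steps for numerical stability; PyTorch's `torch.nn.BCEWithLogitsLoss`, for instance, takes logits rather than probabilities.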

5. Fake CTI Mining Method

In the contemporary digital landscape, the efficacy of artificial intelligence-based application systems, particularly in sensitive domains like cybersecurity, depends heavily on the quality and volume of their training data; building such systems typically requires a large amount of training data [9,27]. This dependency, however, exposes a critical vulnerability: attackers can mix counterfeit cybersecurity threat information into the training dataset to distort the data distribution and even perturb the model’s parameters, for example to evade supervision and identification by AI-based network defense systems. Recognizing this emerging threat, this paper proposes a comprehensive framework to both simulate and counteract such malicious activities. The framework integrates the proposed GLM-based FCTIG scheme and the developed FCTICM-TC model across the entire process, including fake CTI generation, the data poisoning attack, and fake CTI identification, and thereby demonstrates the effectiveness of both the fake CTI generation method and the fake CTI identification method. The logical architecture of the experimental program of the fake CTI identification method is shown in Figure 4.

Collectively, the fake CTI identification method is referred to as the FCTICM-TC-based fake CTI mining (FCTICM-TC-based FCTIM) method. The FCTICM-TC-based FCTIM method focuses on various types of cybersecurity analysis reports in the open-source communities.

As shown in Figure 4, the experimental program of the fake CTI identification method consists of three parts: the fake CTI generation module, the data poisoning attack module, and the fake CTI identification module. The fake CTI generation module builds the CTI datasets from open-source data such as cybersecurity blogs and APT analysis reports, and generates fake CTIs with the GLM-based FCTIG scheme. The data poisoning attack module analyzes the differences between authentic and counterfeit CTIs and simulates the data poisoning attack with the generated fake CTIs. The fake CTI identification module identifies the fake CTIs through the FCTICM-TC model. The proposed FCTICM-TC-based FCTIM method is described in Algorithm 1.

The operational workflow of the presented FCTICM-TC-based FCTIM method, as outlined in Algorithm 1, follows a systematic, multi-stage process designed to rigorously simulate and address data poisoning attacks. For each authentic CTI within the source corpus, the process begins with the generative phase: the GLM-based FCTIG scheme is invoked to randomly generate a corresponding set of fake CTI-like texts. Subsequently, the data poisoning attack is simulated by integrating these newly generated items into the original corpus. This crucial step creates a compromised dataset where authentic and counterfeit intelligence coexist. Following this integration, a clear supervisory signal is established by marking each instance in the combined dataset with its ground-truth label. This fully labeled dataset then serves as the primary training resource for our detection model. Finally, the developed FCTICM-TC model is trained on this dataset and then utilized in the validation phase to identify and distinguish between the authentic CTIs and the counterfeit CTIs, thereby evaluating its effectiveness in a realistic data poisoning scenario.

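Algorithm 1: FCTICM-TC-based FCTIM

- Require: CTI corpus $D$, generation quantity $n$ ▹ Input CTI corpus and generation quantity
- Ensure: $R$, $F$ ▹ Output real and fake CTIs
- 1: $R \leftarrow \emptyset$, $F \leftarrow \emptyset$
- 2: $D' \leftarrow D$
- 3: for all $c \in D$ do
- 4: $G \leftarrow \mathrm{FCTIG}(c, n)$ ▹ Generate n fake CTIs using GLM-based FCTIG
- 5: $D' \leftarrow D' \cup G$ ▹ Perform data poisoning by mixing fakes
- 6: for all $g \in G$ do
- 7: $\mathrm{label}(g) \leftarrow 0$
- 8: end for
- 9: $\mathrm{label}(c) \leftarrow 1$
- 10: end for
- 11: for all $d \in D'$ do ▹ Classify with the trained FCTICM-TC model
- 12: if $\text{FCTICM-TC}(d) = 1$ then
- 13: $R \leftarrow R \cup \{d\}$
- 14: else
- 15: $F \leftarrow F \cup \{d\}$
- 16: end if
- 17: end for
- return $R$, $F$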
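Algorithm 1 can be mirrored in plain Python as below. `fctim`, `generate_fakes`, and `train` are hypothetical placeholders for the GLM-based FCTIG scheme and the FCTICM-TC training routine, neither of which is implemented here.

```python
from typing import Callable, List, Tuple

LabeledCTI = Tuple[str, int]


def fctim(
    corpus: List[str],
    n: int,
    generate_fakes: Callable[[str, int], List[str]],            # hypothetical GLM-based FCTIG wrapper
    train: Callable[[List[LabeledCTI]], Callable[[str], int]],  # hypothetical FCTICM-TC trainer
) -> Tuple[List[str], List[str]]:
    poisoned: List[LabeledCTI] = []
    for cti in corpus:
        fakes = generate_fakes(cti, n)                # generate n fake CTIs per authentic CTI
        poisoned.extend((fake, 0) for fake in fakes)  # mix fakes into the corpus, label 0
        poisoned.append((cti, 1))                     # authentic CTI, label 1
    classify = train(poisoned)                        # returns a predictor: text -> 0 or 1
    real, fake = [], []
    for text, _ in poisoned:
        (real if classify(text) == 1 else fake).append(text)
    return real, fake


# Usage with stand-ins: the "generator" tags its output and the "trainer" returns a keyword rule
gen = lambda cti, n: [f"{cti} [variant {i}]" for i in range(n)]
trainer = lambda data: (lambda text: 0 if "[variant" in text else 1)
real, fake = fctim(["report A", "report B"], n=3, generate_fakes=gen, train=trainer)
print(len(real), len(fake))  # 2 6
```

The sketch classifies the same poisoned corpus it trained on only to mirror the structure of Algorithm 1; the experiments evaluate on the held-out split described in Table 3.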

6. Experiment Results and Analysis

Initially, 5381 security blogs and APT cybersecurity analysis reports, i.e., text-format analysis reports, were crawled from official websites such as those of MITRE and Cisco. Using our self-developed CTI mining software [29], 50,615 CTI items were mined from these 5381 reports. These extracted CTIs are treated as authentic. Based on these true CTIs, the fake CTI generation module, shown in the first part of Figure 4, generated 151,845 fake CTIs with the trained and fine-tuned GLM-based FCTIG scheme. Combining the 50,615 authentic CTIs with the 151,845 counterfeit CTIs yields the final experimental CTI dataset. We acknowledge that the 3:1 ratio of fake to real CTIs in our primary dataset does not mirror typical, stealthy data poisoning attacks, in which fake data is sparse. However, this imbalanced ratio was chosen intentionally for a specific experimental purpose: to rigorously evaluate the robustness and discriminative power of the FCTICM-TC model under a challenging, high-noise condition. A scenario with a high volume of deceptive information lets us assess whether the model can still correctly separate fake from real CTIs when fakes dominate the data, which provides a stringent test of its effectiveness. Furthermore, to address real-world applicability, we also conduct experiments on a dataset with a lower, more realistic fake-to-real ratio (approximately 1:1) in Section 6.3.3.

To validate the effectiveness of the proposed fake CTI generation method and CTI identification method, i.e., the models shown in Figure 4, the experiments examine three aspects: (1) the effectiveness of the fake CTI generation method; (2) a visual analysis of the data poisoning attack; and (3) a comparative analysis of fake CTI identification methods. Because the fake CTI analysis reports generated with the GLM are, in essence, a corpus, it is necessary to evaluate both the reliability and truthfulness of the content the corpus describes and its natural language attributes, including logic and syntax. Finally, knowledge graphs are used to evaluate the effect of the data poisoning attack visually.
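The class composition stated above can be checked with a few lines of arithmetic. The check confirms that each authentic CTI is paired with exactly three fakes and that fakes make up 75% of the data, so a trivial classifier that always answers "fake" would already reach 75% accuracy; this is one reason precision, recall, and F1 are more informative than raw accuracy on this dataset.

```python
real_ctis = 50_615
fake_ctis = 151_845

total = real_ctis + fake_ctis
fake_to_real = fake_ctis / real_ctis
fake_share = fake_ctis / total
# A classifier that always answers "fake" would be right on this fraction of samples:
majority_baseline_accuracy = max(real_ctis, fake_ctis) / total

print(total, fake_to_real, fake_share, majority_baseline_accuracy)  # 202460 3.0 0.75 0.75
```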

6.1. Experiment Arrangement

To ensure data integrity and ecological validity, we implemented a robust text cleaning pipeline that preserves critical technical entities (e.g., IPs, CVEs, file paths) by retaining alphanumeric characters and key symbols (., -, /, :, _). The data loading process performs no arbitrary sample deletion, so all experiments were conducted on the full, cleaned dataset.
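The cleaning rule above can be expressed as a single regular expression. This is a minimal sketch assuming ASCII text and whitespace collapsing; `clean_cti_text` is a hypothetical name, and the actual pipeline may differ. Note that the stated symbol set also strips backslashes, so Windows-style paths would be split into separate tokens.

```python
import re

# Anything other than ASCII letters, digits, whitespace, or the symbols . - / : _ becomes a space.
_DISALLOWED = re.compile(r"[^A-Za-z0-9\s.\-/:_]")


def clean_cti_text(text: str) -> str:
    """Hypothetical cleaner that keeps IPs, CVE IDs, and slash-separated paths intact."""
    text = _DISALLOWED.sub(" ", text)
    return " ".join(text.split())  # collapse the whitespace left behind by removed characters


print(clean_cti_text("Sample: host 192.168.1.10:443 dropped C:/Temp/a_b.dll (see CVE-2017-0199)!"))
# Sample: host 192.168.1.10:443 dropped C:/Temp/a_b.dll see CVE-2017-0199
```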

The parameters for our generation and identification experiments are listed in Table 1 and Table 2, respectively. The dataset was shuffled and split into training, validation, and test sets as detailed in Table 3.

6.2. Evaluations of the Proposed

6.2.1. The Effectiveness of the Yielded Fake CTI Corpus

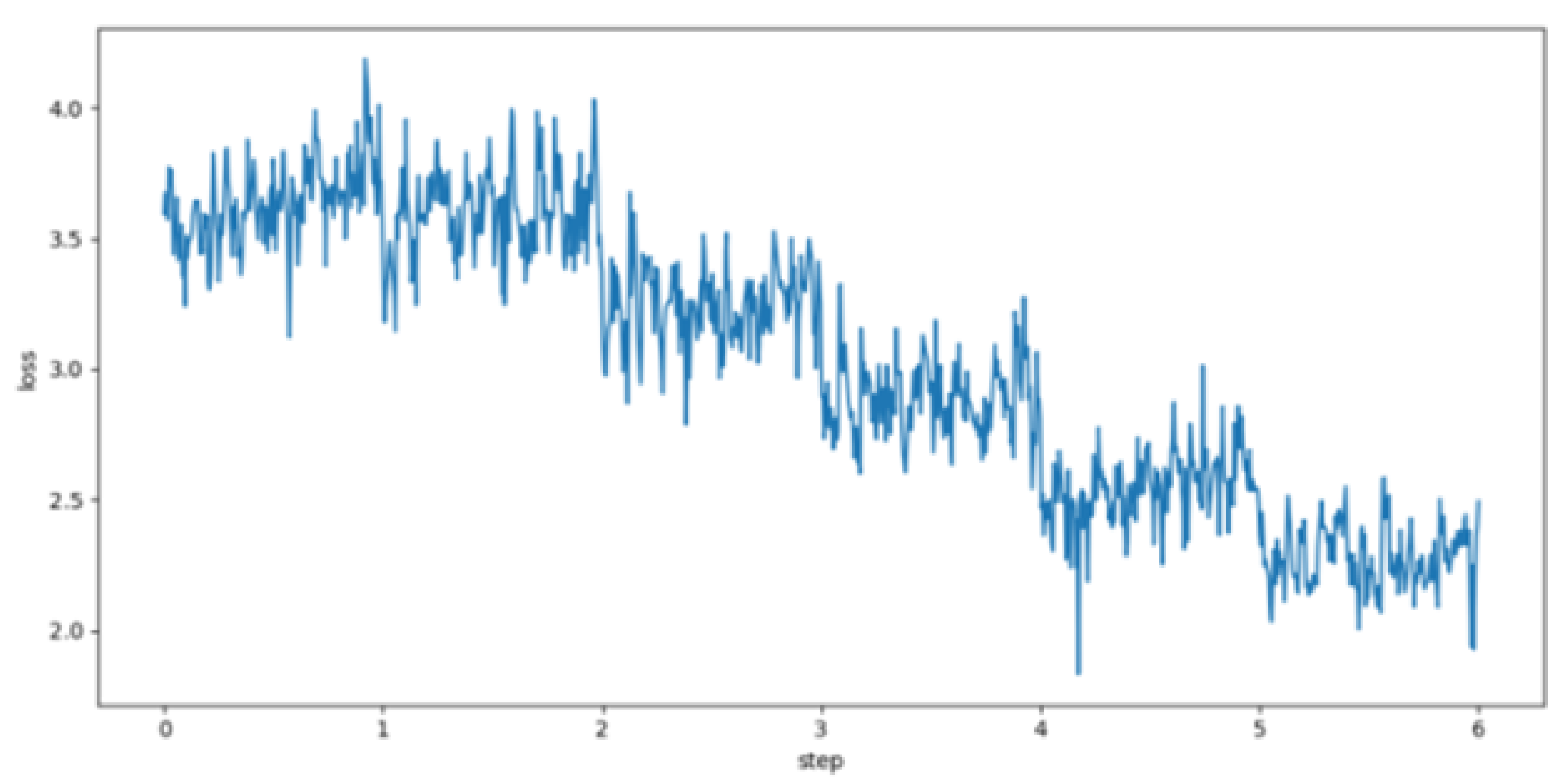

To validate the effectiveness of our training process on the yielded CTI corpus, we tracked the loss value during model training, which was run for 10 epochs to ensure convergence. The training loss curve is shown in Figure 5. The loss started at approximately 3.5, decreased steadily throughout the 10 epochs, and stabilized below 2.0 by the end of training. This indicates that the training process is stable and that it yields a well-fitted GLM-based FCTIG scheme.

6.2.2. The Reliability of the GLM-Based FCTIG Scheme

To evaluate the linguistic quality of the fake CTIs generated by our FCTIG scheme, we employ two standard text generation metrics: BLEU [34] and DISTINCT [35]. We acknowledge that these metrics do not directly measure the “deceptiveness” of the content. Instead, they quantify the fluency and coherence (via similarity to real CTIs, measured by BLEU) and the diversity (measured by DISTINCT) of the generated text. A high degree of linguistic quality is a necessary prerequisite for a text to deceive human experts, so these metrics provide a foundational assessment of our generator’s capabilities.

The BLEU score [34] evaluates text quality by comparing the generated text with reference texts. It is calculated as the geometric mean of the modified n-gram precisions $p_n$, multiplied by a brevity penalty (BP) that penalizes generated texts that are too short, as given in Equation (7):

$$\mathrm{BLEU} = \mathrm{BP}\cdot\exp\left(\sum_{n=1}^{N} w_n \log p_n\right), \qquad \mathrm{BP} = \begin{cases} 1, & c > r \\ e^{\,1 - r/c}, & c \le r \end{cases} \tag{7}$$

$$p_n = \frac{\sum_{C \in \{\mathrm{Candidates}\}} \sum_{g \in C} \min\big(\mathrm{Count}_{C}(g),\, \mathrm{Count}_{R}(g)\big)}{\sum_{C \in \{\mathrm{Candidates}\}} \sum_{g \in C} \mathrm{Count}_{C}(g)}$$

where $N$ is the maximum n-gram order (typically 4), $w_n$ are uniform weights (typically $w_n = 1/N$), $c$ is the total length of the candidate texts, and $r$ is the total length of the reference texts. This metric measures the overall n-gram overlap between the generated text and the real text. Here, the candidates denote the generated CTIs, the references are the real CTIs, $g$ ranges over the $n$-grams of a candidate, and $\mathrm{Count}_{R}(g)$ and $\mathrm{Count}_{C}(g)$ denote the counts of the $n$-gram $g$ in the reference and candidate sets, respectively.
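A compact, dependency-free implementation of Equation (7) makes the clipping and the brevity penalty concrete. It is a sketch that assumes whitespace-tokenised texts and exactly one reference per candidate, and it uses cumulative uniform weights for BLEU-k as an assumption (Table 4 lists the weights actually used); established implementations such as NLTK's `corpus_bleu` additionally support multiple references and smoothing.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu(
    candidates: List[List[str]],
    references: List[List[str]],
    weights: Tuple[float, ...] = (0.25, 0.25, 0.25, 0.25),
) -> float:
    """Corpus-level BLEU of Equation (7), assuming one reference per candidate."""
    c = sum(len(cand) for cand in candidates)   # total candidate length
    r = sum(len(ref) for ref in references)     # total reference length
    if c == 0:
        return 0.0
    log_sum = 0.0
    for n, w in enumerate(weights, start=1):
        if w == 0:
            continue
        clipped = total = 0
        for cand, ref in zip(candidates, references):
            cand_counts, ref_counts = ngram_counts(cand, n), ngram_counts(ref, n)
            clipped += sum(min(cnt, ref_counts[g]) for g, cnt in cand_counts.items())
            total += sum(cand_counts.values())
        if clipped == 0:
            return 0.0                            # a zero precision makes the geometric mean zero
        log_sum += w * math.log(clipped / total)  # w_n * log p_n
    bp = 1.0 if c > r else math.exp(1 - r / c)    # brevity penalty
    return bp * math.exp(log_sum)


# Cumulative BLEU-k with uniform weights 1/k (assumed, see lead-in)
BLEU_WEIGHTS = {k: tuple([1.0 / k] * k) for k in range(1, 5)}
```

For example, `corpus_bleu([["the", "the", "the"]], [["the", "cat", "sat"]], weights=BLEU_WEIGHTS[1])` clips the three matches of "the" to one and returns 1/3.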

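DISTINCT is calculated as Equation (8):

$$\mathrm{DISTINCT}\text{-}n = \frac{\mathrm{Count}_{\mathrm{unique}}(n\text{-gram})}{\mathrm{Count}(n\text{-gram})} \tag{8}$$

where $\mathrm{Count}_{\mathrm{unique}}(n\text{-gram})$ denotes the number of non-duplicate $n$-grams in the generated CTIs and $\mathrm{Count}(n\text{-gram})$ denotes the total number of $n$-grams in the generated CTIs.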
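Equation (8) amounts to a ratio of set size to list size. The sketch below pools n-grams over all generated texts, which is an assumption (averaging per text is another common variant); `distinct_n` is a hypothetical name.

```python
from typing import List


def distinct_n(texts: List[List[str]], n: int) -> float:
    """Equation (8): unique n-grams / all n-grams, pooled over every generated text."""
    grams = [tuple(toks[i:i + n]) for toks in texts for i in range(len(toks) - n + 1)]
    return len(set(grams)) / len(grams) if grams else 0.0


generated = [["apt", "group", "targets", "apt", "group"]]
print(distinct_n(generated, 1), distinct_n(generated, 2))  # 0.6 0.75
```

A value close to 1, such as the 0.943 reported in Table 5, means that very few n-grams are repeated across the generated texts.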

Based on Equation (7), taking the true CTIs and the generated fake CTIs as objects, their n-gram similarity is measured with BLEU to evaluate the quality of the generated fake CTIs. Four BLEU values, BLEU1, BLEU2, BLEU3, and BLEU4, were used; each reflects matching accuracy over word sequences of a different length, with the corresponding n-gram weights shown in Table 4. Table 5 shows the BLEU values and the DISTINCT value for the fake CTIs.

As listed in Table 5, the BLEU1 value of the fake CTIs generated by the GLM-based FCTIG scheme is 0.931, because the words in the generated fake CTIs are essentially the same as those in the true CTIs. The BLEU2 and BLEU3 values are 0.753 and 0.539, respectively, which implies that the phrases in the generated fake CTIs are similar to those of the true ones, while BLEU4 is only 0.263, which indicates that the syntax of the generated fake CTIs only partly matches that of the true CTIs. The DISTINCT value is 0.943, which shows a high level of diversity: the GLM-based FCTIG scheme generates varied words instead of frequently repeating the same ones. Taken together, these indicators suggest that the morphology and syntax of the fake CTIs generated by the GLM-based FCTIG scheme are reasonable.

To evaluate the real-world implications of the generated fake CTIs, we conducted a preliminary assessment. Ten cybersecurity practitioners were asked to label a randomly selected set of 30 CTIs (15 real and 15 fake items). The experts’ average accuracy was 58.7%, only marginally better than random chance (50%). Although the scale of this expert evaluation is limited and cannot yield statistically significant conclusions, the result suggests that CTIs generated by our FCTIG scheme can be deceptive enough to confuse experienced security experts. If such fake CTIs were to spread widely, they could pose a significant danger to cybersecurity operations.

6.2.3. Visual Evaluation for Data Poisoning Attack

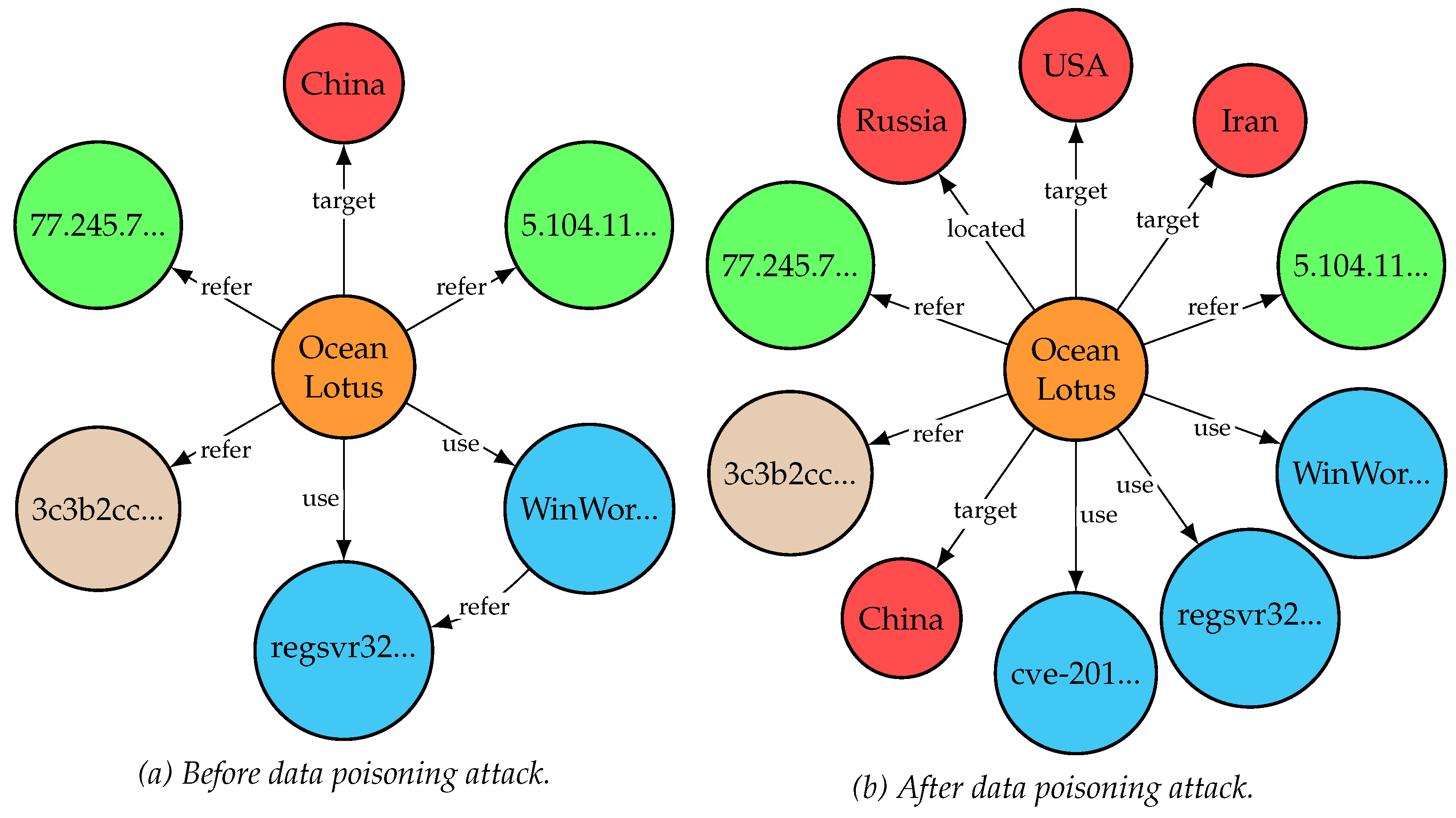

To evaluate how fake CTIs affect the syntax and semantics of cybersecurity analysis reports, we conducted several data poisoning attack experiments. As an example, one experimental result is visualized and analyzed as a knowledge graph, as shown in Figure 6.

Figure 6 displays the changes in the knowledge graph for the technical report on “Ocean Lotus Organization Attack Activity” [15], with and without the data poisoning attack.

As shown in Figure 6, comparing the results with and without the data poisoning attack reveals that the fake CTIs generated by the proposed GLM-based FCTIG scheme introduce a series of counterfeit information. In reality, the primary target of the Ocean Lotus group is China, and the software exploited in its attacks includes “WinWord.exe” and “regsvr32.exe” [33]. After the data poisoning attack, in contrast, the Ocean Lotus group is located in Russia, two additional targets (the United States and Iran) appear, and even a non-existent vulnerability number, “cve-2018-71”, occurs. Such counterfeit CTI information can seriously disrupt the work of cybersecurity analysts, causing authentic and useful information to be overlooked and seriously misleading analysis in the real cybersecurity world. Specifically, these disruptions may manifest in the following ways:

- (1) The injection of fake CTIs diverts attention and resources away from genuine threats. For example, the fabricated inclusion of the United States and Iran as targets could lead organizations in these regions to prioritize countermeasures against non-existent attacks, leaving actual vulnerabilities unaddressed.

- (2) The presence of fake vulnerability numbers such as “cve-2018-71” creates noise in CTI databases, delaying the identification and remediation of real vulnerabilities. Analysts may spend valuable time verifying and investigating counterfeit data, prolonging the exposure window for actual threats.
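The malformed identifier in (2) can, in this particular case, be caught on syntax alone: since 2014 the CVE Program's ID syntax has required a sequence number of at least four digits, so “cve-2018-71” cannot be a valid CVE ID. The sketch below is a minimal, hypothetical pre-filter (`is_well_formed_cve` is our own name, not part of the FCTIM pipeline). It checks format only, so a well-formed but fabricated ID would still pass and would need a lookup against the CVE List or NVD.

```python
import re

# CVE ID syntax: "CVE-", a four-digit year, "-", and a sequence number of
# at least four digits. Case is ignored because extracted text may be lowercased.
CVE_ID = re.compile(r"CVE-[0-9]{4}-[0-9]{4,}", re.IGNORECASE)


def is_well_formed_cve(identifier: str) -> bool:
    """Syntactic check only: a well-formed ID may still not exist."""
    return CVE_ID.fullmatch(identifier.strip()) is not None


candidates = ["CVE-2017-11882", "cve-2018-71", "CVE-2021-44228"]
flagged = [c for c in candidates if not is_well_formed_cve(c)]
print(flagged)  # ['cve-2018-71']
```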

6.3. Validating the FCTICM-TC-Based FCTIM Method

6.3.1. Evaluation Metrics

The performance of all models is evaluated using standard metrics for binary classification: Precision, Recall, and the F1-score. These metrics are derived from the confusion matrix (True Positives, False Positives, True Negatives, and False Negatives). Given their widespread use, their formulas are omitted for brevity.
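Because the results below are reported separately for fake and true CTIs, each metric is computed with one class treated as positive at a time. A minimal sketch under that assumption, with hypothetical labels `"fake"` and `"real"`, hypothetical helper names, and a toy set of five predictions:

```python
from dataclasses import dataclass


@dataclass
class ClassMetrics:
    precision: float
    recall: float
    f1: float


def metrics_from_counts(tp: int, fp: int, fn: int) -> ClassMetrics:
    """Precision, Recall, and F1 for one positive class; 0.0 when undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return ClassMetrics(precision, recall, f1)


def per_class_metrics(y_true, y_pred, labels=("fake", "real")):
    """Treat each label in turn as the positive class."""
    pairs = list(zip(y_true, y_pred))
    result = {}
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        result[label] = metrics_from_counts(tp, fp, fn)
    return result


y_true = ["fake", "fake", "fake", "real", "real"]
y_pred = ["fake", "fake", "real", "real", "fake"]
for label, m in per_class_metrics(y_true, y_pred).items():
    print(f"{label}: P={m.precision:.3f} R={m.recall:.3f} F1={m.f1:.3f}")
```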

In addition to these standard metrics, we introduce a novel metric, Logarithmic Misclassification Impact (LMI), to assess model performance from a practical cybersecurity perspective. Precision and Recall each capture only one type of error, and the F1-score weights False Positives (FPs) and False Negatives (FNs) equally. In fake CTI detection, however, the consequences of misclassification are highly asymmetric: classifying a fake CTI as real (an FP) can lead to wasted resources and misguided actions, posing a much greater risk than rejecting a real CTI as fake (an FN). LMI models this asymmetry explicitly by assigning a higher penalty to FPs through a weighting factor. LMI is calculated as in Equation (9).

LMI is intended to complement the traditional metrics by providing a more domain-relevant measure of a model's practical impact. Its higher weight on FPs reflects the operational cost in cybersecurity: acting on a fake threat (an FP) consumes significantly more resources and is more likely to distract analysts from real attacks than missing a real report in this context (an FN).
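Equation (9) is not reproduced in this section, so the sketch below does not implement LMI. It only illustrates the general idea behind it, a logarithmically scaled misclassification cost in which each FP is weighted more heavily than each FN; the function name, the `log1p` form, and the default weight `w = 2.0` are all assumptions.

```python
import math


def asymmetric_log_cost(fp: int, fn: int, w: float = 2.0) -> float:
    """Hypothetical asymmetric cost, NOT the LMI of Equation (9).

    fp: fake CTIs accepted as real; fn: real CTIs rejected as fake.
    w > 1 makes each FP cost more than each FN; log1p damps large counts
    and keeps the cost at 0 when there are no errors. Lower is better.
    """
    if w <= 0:
        raise ValueError("w must be positive")
    return math.log1p(w * fp + fn)


# Two hypothetical models with the same total number of errors.
cost_many_fp = asymmetric_log_cost(fp=30, fn=10)
cost_many_fn = asymmetric_log_cost(fp=10, fn=30)
print(f"{cost_many_fp:.3f} vs {cost_many_fn:.3f}")
```

With equal error totals, the model that makes more FPs receives the higher (worse) cost; setting `w = 1` removes the asymmetry.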

6.3.2. Effectiveness of the Proposed Method

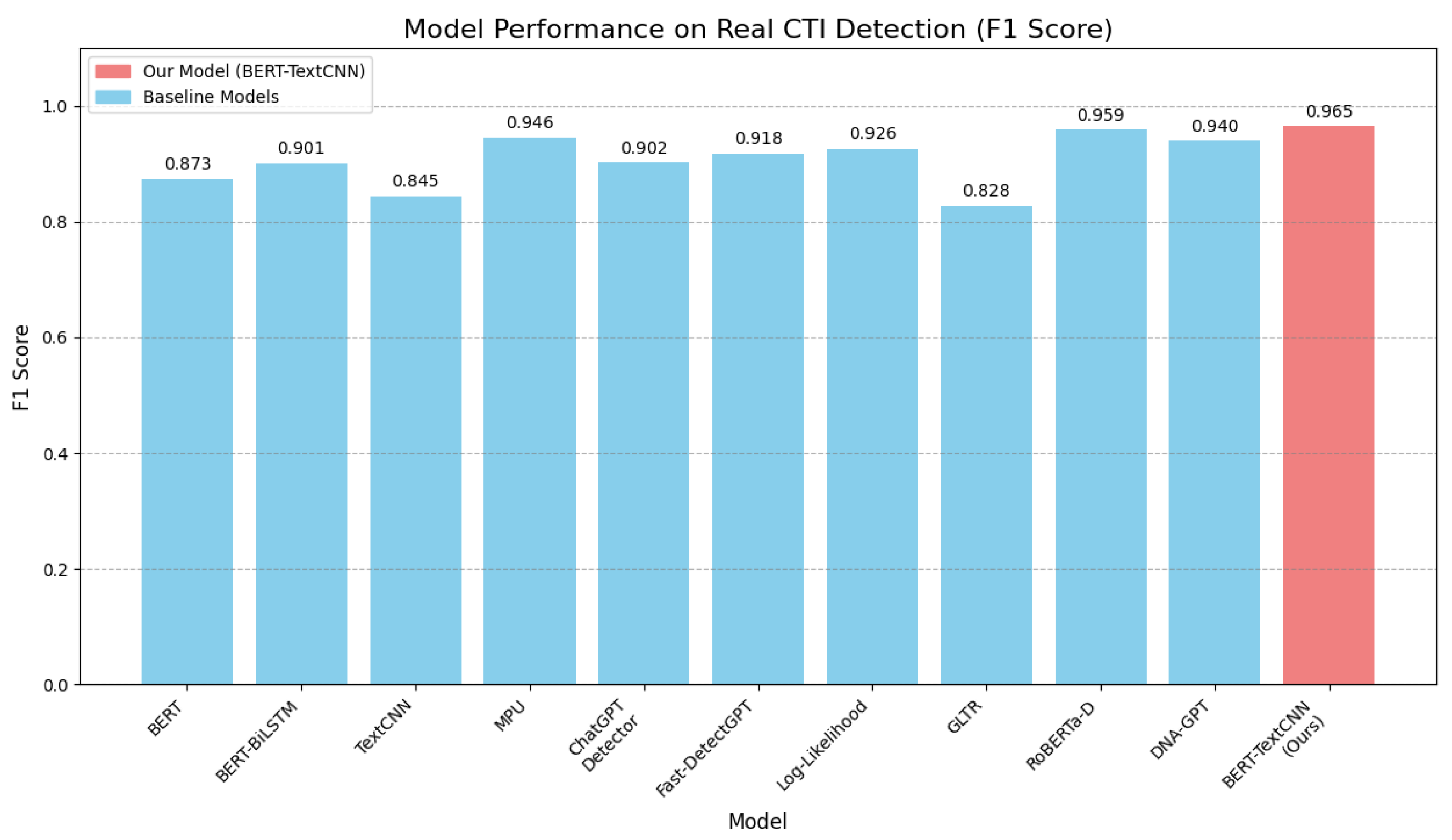

To validate the effectiveness of the proposed FCTICM-TC-based FCTIM method for fake CTI detection, we use a dataset of 151,845 fake CTI-like texts generated by the proposed GLM-based FCTIG scheme and 50,615 real CTIs. We compare the proposed FCTICM-TC model against a wide range of baselines on this dataset: (1) classic deep learning models for text classification (BERT, BERT-BiLSTM, TextCNN); (2) widely used detectors for AI-generated text, including both model-based and feature-based approaches (ChatGPT Detector, Fast-DetectGPT, GLTR, Log-Likelihood, and the recent DNA-GPT [36]); and (3) state-of-the-art (SOTA) fine-tuned models such as MPU [8] and RoBERTa-D [37].

From the results shown in Figure 7 and Figure 8, we observe the following: (1) Compared with the plain BERT model, both BERT-BiLSTM and BERT-TextCNN improve on all of the employed metrics, which indicates that adding BiLSTM or TextCNN layers extracts the textual features of CTIs more effectively than BERT alone. (2) BERT-BiLSTM and BERT-TextCNN trade Precision against Recall. On true CTIs, BERT-TextCNN achieves a 4 percentage point higher Recall than BERT-BiLSTM, but its Precision is 2.1 percentage points lower; correspondingly, BERT-BiLSTM misclassifies fewer fake CTIs as real and therefore attains the higher Recall on fake CTIs. In cybersecurity, the damage incurred by accepting a counterfeit CTI as authentic far outweighs the benefit lost by missing an authentic CTI. Therefore, Precision matters more than Recall on real CTIs, and Recall matters more than Precision on fake CTIs. From this perspective, BERT-BiLSTM has a relative advantage in identifying fake CTIs when the ratio of fake-to-real CTIs is large. (3) Compared with TextCNN alone, BERT-TextCNN improves both Recall on fake CTIs and Precision on true CTIs, indicating that adding the pre-trained BERT model strengthens the capture of fake CTI information. (4) MPU and BERT-TextCNN outperform most of the other models. Compared with MPU, BERT-TextCNN has higher Recall and F1-score on true CTIs but lower Precision; in other words, MPU more often misclassifies true CTIs as fake, which lowers its Recall on true CTIs.
(5) Statistics-based approaches are less effective than model-based approaches on CTIs, likely because fine-tuning adapts a model to a specific domain. (6) The newly introduced SOTA baselines, RoBERTa-D and DNA-GPT, also perform strongly. RoBERTa-D, which builds on a more powerful pre-trained backbone, achieves the highest F1-score on fake CTIs. However, our BERT-TextCNN model achieves higher Precision and a better (lower) LMI score, indicating a better trade-off for practical security applications where minimizing false alarms is critical. The feature-based DNA-GPT outperforms the older statistical methods but is less effective than the fine-tuned models on this specialized CTI task. (7) MPU and BERT-TextCNN both have lower LMI than the other methods, which suggests that their misclassifications cause less harm and that they are better suited to fake CTI detection in realistic scenarios.
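The Precision/Recall trade-off discussed in (2) rests on a property of binary classification: every fake CTI accepted as real lowers Recall on fake CTIs and, for a fixed number of correctly accepted real CTIs, lowers Precision on real CTIs as well. The hypothetical confusion counts below, on a 3:1 fake-to-real test set, illustrate this; `per_class_rates` and both models are illustrative and are not results from Figure 7 or Figure 8.

```python
def per_class_rates(n_fake: int, n_real: int, fake_as_real: int, real_as_fake: int) -> dict[str, float]:
    """Per-class Precision/Recall from the two error counts of a binary confusion matrix."""
    tp_fake = n_fake - fake_as_real  # fake CTIs correctly flagged as fake
    tp_real = n_real - real_as_fake  # real CTIs correctly accepted as real
    return {
        "fake_recall": tp_fake / n_fake,
        "fake_precision": tp_fake / (tp_fake + real_as_fake),
        "real_recall": tp_real / n_real,
        "real_precision": tp_real / (tp_real + fake_as_real),
    }


# Hypothetical counts on a 3:1 fake-to-real test set (300 fake, 100 real).
model_x = per_class_rates(300, 100, fake_as_real=10, real_as_fake=20)
model_y = per_class_rates(300, 100, fake_as_real=25, real_as_fake=8)
for key in model_x:
    print(f"{key:15s} X={model_x[key]:.3f} Y={model_y[key]:.3f}")
```

Model X makes fewer fake-as-real errors, so it leads on both fake Recall and real Precision while trailing on real Recall, the same pattern as BERT-BiLSTM relative to BERT-TextCNN on true CTIs.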

6.3.3. Generalizability of the Proposed Method

To validate the generalizability of the proposed FCTICM-TC-based FCTIM method, we use a second dataset of 4982 counterfeit CTI-like texts and 5381 authentic cybersecurity analysis reports. The fake texts, generated by popular LLMs including ERNIE [3] and Qwen [4], resemble the real data and can simulate attackers performing data poisoning attacks. Moreover, the lower fake-to-real ratio of this dataset is more consistent with real-world scenarios. We run the comparative experiments on these texts with the proposed FCTICM-TC model and the baselines; the results are shown in Table 6 and Table 7.
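The two datasets differ sharply in class balance. The short calculation below uses only the counts stated in Sections 6.3.2 and 6.3.3 to give the fake-to-real ratio, the fake share, and the accuracy a trivial classifier would reach by always predicting the majority class; the helper `class_balance` is our own illustration.

```python
def class_balance(n_fake: int, n_real: int) -> tuple[float, float, float]:
    """Return (fake-to-real ratio, fake share, majority-class accuracy)."""
    total = n_fake + n_real
    fake_share = n_fake / total
    return n_fake / n_real, fake_share, max(fake_share, 1 - fake_share)


datasets = {
    "Section 6.3.2 (GLM-generated fakes)": (151_845, 50_615),
    "Section 6.3.3 (ERNIE/Qwen-generated fakes)": (4_982, 5_381),
}
for name, (n_fake, n_real) in datasets.items():
    ratio, share, majority = class_balance(n_fake, n_real)
    print(f"{name}: fake:real = {ratio:.3f}, fake share = {share:.1%}, "
          f"majority baseline = {majority:.1%}")
```

On the first dataset, which is exactly 3:1, always answering “fake” would already be 75% accurate, which is one reason per-class Precision and Recall (and LMI) are more informative here than overall accuracy.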

From the results in Table 6 and Table 7, we observe the following: (1) TextCNN achieves a Recall of 0.992 on fake CTIs but only 0.809 on true CTIs, which suggests that it captures counterfeit CTI features readily but tends to over-predict the fake class. (2) BERT-TextCNN and MPU still outperform the other methods on the new data. For fake CTI identification, MPU has higher Precision than BERT-TextCNN, but its Recall is 1.1 percentage points lower. Conversely, for true CTI identification, BERT-TextCNN has 2.2 percentage points higher Precision than MPU, but its Recall is 1.9 percentage points lower. That is, when the fake-to-real ratio is lower, BERT-TextCNN achieves both higher Precision on real CTIs and higher Recall on fake CTIs. Following the reasoning in the previous subsection, BERT-TextCNN is therefore better at identifying fake CTIs in this more realistic scenario. (3) BERT-TextCNN achieves the lowest LMI, meaning that the real-world impact of its misclassifications is the smallest among the compared methods, which suggests strong performance in realistic scenarios. (4) Compared with the results in Figure 7 and Figure 8, the performance of each method improves on the new dataset. This may be because CTI reports generated by publicly released LLMs differ more markedly from human-written reports. In summary, the experimental results show that the proposed FCTICM-TC model and the FCTICM-TC-based FCTIM method perform well on the new data and generalize well.

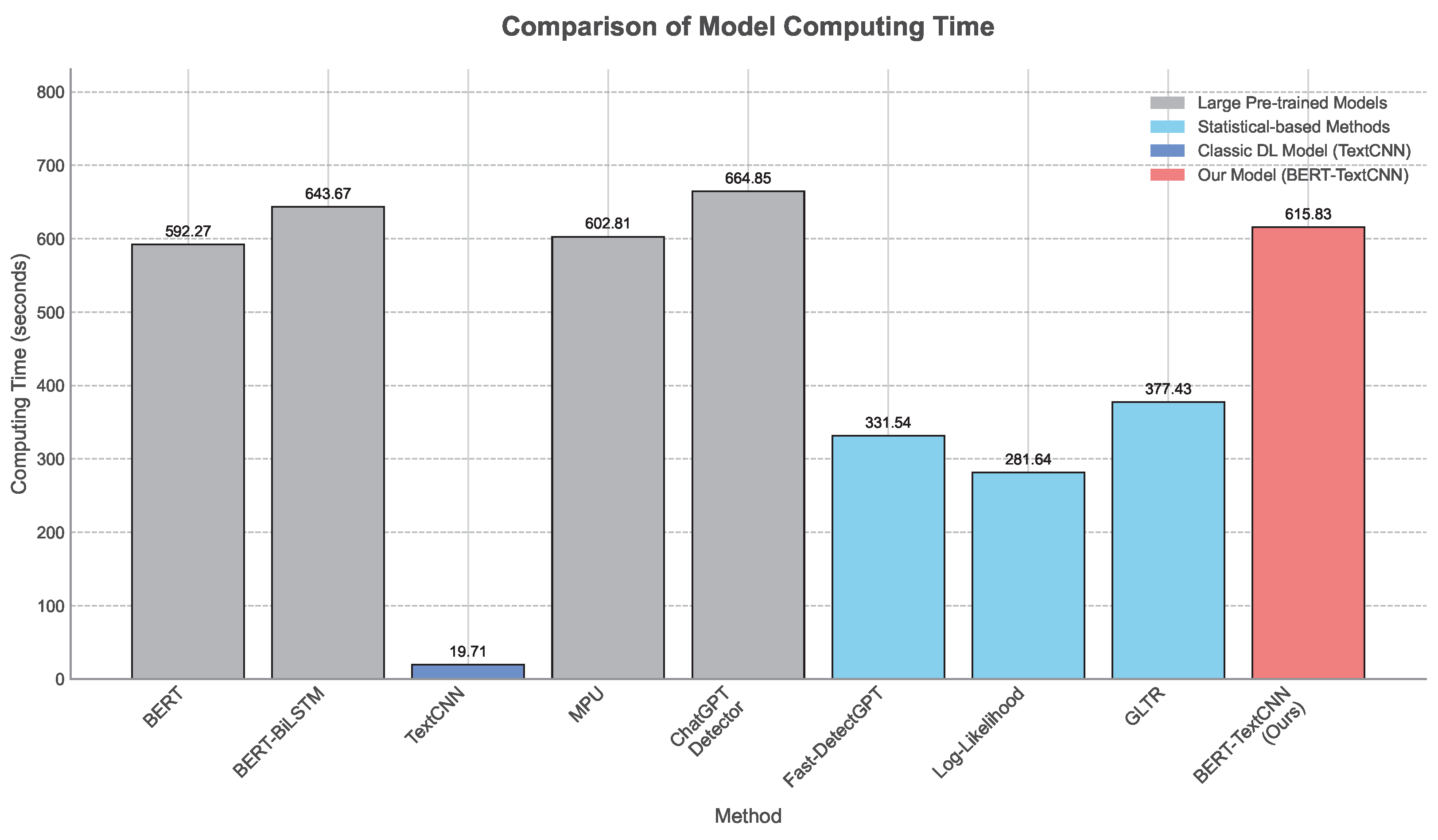

6.3.4. Computational Efficiency of the Proposed Method

To evaluate the computational efficiency of the proposed scheme for fake CTI detection, we measured the inference time of each method on the same hardware (CPU and GPU) and the same dataset. The results are shown in Figure 9.

From the results in Figure 9, we observe the following: (1) Statistics-based methods are visibly faster than most model-based methods, because neural network models typically require far more computation. (2) TextCNN has the lowest inference time, owing to its smaller number of parameters and the lower computational cost of convolutional layers compared with fully connected and Transformer layers. (3) Among the model-based methods, BERT-TextCNN has an inference time comparable to that of BERT and MPU, with no significant difference. In summary, these results suggest that the proposed FCTICM-TC model and the FCTICM-TC-based FCTIM method remain practical for large-scale, real-world data.
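A common way to obtain comparable inference times, sketched below as an assumption rather than the protocol used for Figure 9, is to run untimed warm-up passes and report the median of several timed passes over the same batched inputs. `time_inference` and the stand-in classifier `dummy_predict` are hypothetical names. On a GPU with asynchronous execution (e.g., PyTorch on CUDA), the device should also be synchronized before each clock read, which this framework-agnostic sketch does not do.

```python
import statistics
import time
from typing import Any, Callable, Sequence


def time_inference(predict: Callable[[Sequence[str]], Any], texts: Sequence[str],
                   batch_size: int = 32, warmup: int = 1, repeats: int = 3) -> float:
    """Median wall-clock seconds for `predict` to process all `texts` in batches."""
    if batch_size < 1 or repeats < 1:
        raise ValueError("batch_size and repeats must be positive")
    batches = [texts[i:i + batch_size] for i in range(0, len(texts), batch_size)]
    for _ in range(warmup):  # untimed passes absorb one-off setup costs
        for batch in batches:
            predict(batch)
    runs = []
    for _ in range(repeats):
        start = time.perf_counter()
        for batch in batches:
            predict(batch)
        runs.append(time.perf_counter() - start)
    return statistics.median(runs)


def dummy_predict(batch: Sequence[str]) -> list[int]:
    """Hypothetical stand-in classifier: labels texts by length parity."""
    return [len(text) % 2 for text in batch]


print(f"{time_inference(dummy_predict, ['sample CTI text'] * 1000):.6f} s")
```

Using the median rather than the mean keeps a single slow pass (for example, from background load) from dominating the comparison.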

6.3.5. The Misclassified CTIs Analysis

Although the FCTIM method classifies most CTI reports correctly, a certain proportion are misclassified. To better understand the method's limitations in real-world applications, this section analyzes these misclassified reports in detail and explores the likely reasons behind the errors. Several typical misclassification cases are presented below.

Case 1: “A hacker group claims to have successfully infiltrated the world’s largest national-level power control systems and threatens to launch large-scale destructive attacks within the next 72 h.”

This fake CTI report appears highly alarming and follows the common pattern of cyberattack threats. The mention of national power control systems and the time-sensitive nature of the threat (72 h) create a sense of urgency that makes the report seem more authentic. The model may have been influenced by the severity and immediacy conveyed in the text and therefore incorrectly classified this spurious CTI report as real.

Case 2: “Recent reports suggest that cyber attackers may pose a potential threat to critical infrastructure in several countries.”

Real CTI reports usually contain detailed information, such as the identity of the attackers, the methods used, or the impact of the attack. This authentic report, in contrast, is vague and provides no concrete details about the threat. Its lack of specificity may have caused the model to misclassify it as fake.

Case 3: “After two months of investigation, security experts discovered that a globally renowned company is being subjected to a sustained cyberattack from state-sponsored hackers. The attack methods include infiltrating core servers and using advanced spyware.”

This authentic CTI report describes a typical advanced persistent threat (APT) attack, which aligns with real-world cyberattack scenarios. However, the model misclassified it as fake. One possible explanation is that the description is somewhat generic, lacking distinctive characteristics or unique details that would make it stand out as a specific event. The model may have mistakenly identified it as a generic cyberattack template, leading to its misclassification.
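Cases 2 and 3 point to a lack of concrete detail as a possible cause of errors on authentic reports. One simple way to check this hypothesis across many misclassified reports is to count indicator-like tokens (CVE IDs, IPv4 addresses, file hashes, file names) in each one. The patterns and the `specificity_profile` helper below are hypothetical and not part of FCTIM; the second example sentence is invented for illustration and uses an address from the 203.0.113.0/24 documentation range.

```python
import re

# Hypothetical indicator patterns; production IOC extraction needs far more care.
INDICATOR_PATTERNS = {
    "cve": re.compile(r"\bCVE-[0-9]{4}-[0-9]{4,}\b", re.IGNORECASE),
    "ipv4": re.compile(r"\b(?:[0-9]{1,3}\.){3}[0-9]{1,3}\b"),
    "md5": re.compile(r"\b[0-9a-fA-F]{32}\b"),
    "sha256": re.compile(r"\b[0-9a-fA-F]{64}\b"),
    "filename": re.compile(r"\b[\w-]+\.(?:exe|dll|docx?|js|ps1)\b", re.IGNORECASE),
}


def specificity_profile(text: str) -> dict[str, int]:
    """Count indicator-like tokens per category in a CTI report."""
    return {name: len(pattern.findall(text)) for name, pattern in INDICATOR_PATTERNS.items()}


case_2 = ("Recent reports suggest that cyber attackers may pose a potential "
          "threat to critical infrastructure in several countries.")
specific = ("The sample abuses regsvr32.exe to fetch a payload from "
            "203.0.113.7 and exploits CVE-2017-11882.")
print(specificity_profile(case_2))
print(specificity_profile(specific))
```

Case 1 shows the limit of such a heuristic: it is fake, yet it contains no indicators either, so low specificity may explain some errors but cannot by itself separate real from fake reports.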

6.3.6. Statistical Significance Analysis

To further validate the superiority of the proposed model, we conducted statistical significance tests on the experimental results. Specifically, we performed paired t-tests on the F1-scores obtained on the test sets (shown in Figure 7, Figure 8, Table 6, and Table 7) between our BERT-TextCNN model and each baseline model. The results indicate that our model's improvement over the classic deep learning models (BERT, BERT-BiLSTM, TextCNN) and most of the recent detectors (e.g., ChatGPT Detector, GLTR) is statistically significant, with p-values well below 0.05. Against the SOTA MPU model, the F1-score difference was not always statistically significant, but our model consistently achieved a lower (better) LMI score, indicating superior performance in minimizing the potential harm of misclassification in practical cybersecurity scenarios. Together, these results support the effectiveness and practical value of the proposed FCTICM-TC model.
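A paired t-test needs several matched scores per model. The sketch below assumes F1-scores from repeated runs (for example, different random seeds or cross-validation folds) paired by run; this setup, the scores, and the helper name `paired_t_statistic` are hypothetical. It computes the paired t statistic with the standard library and compares it with the two-sided 5% critical value for 4 degrees of freedom; `scipy.stats.ttest_rel` computes the same statistic together with a p-value.

```python
import math
import statistics


def paired_t_statistic(a: list[float], b: list[float]) -> float:
    """t = mean(d) / (sd(d) / sqrt(n)) for paired differences d = a - b."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("paired differences have zero variance")
    return statistics.mean(diffs) / (sd / math.sqrt(len(diffs)))


# Hypothetical F1-scores from five paired runs (e.g., seeds); not the paper's data.
f1_model = [0.951, 0.948, 0.953, 0.950, 0.949]
f1_baseline = [0.932, 0.935, 0.930, 0.936, 0.931]
t = paired_t_statistic(f1_model, f1_baseline)
T_CRIT_DF4 = 2.776  # two-sided 5% critical value for df = 4 (standard t table)
print(f"t = {t:.2f}, significant at 5%: {abs(t) > T_CRIT_DF4}")
```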

7. Ethical Considerations

We acknowledge the dual-use nature of the research presented in this paper. The GLM-based FCTIG scheme, while developed for the defensive purpose of training a robust detector, demonstrates techniques that could potentially be adapted for malicious use, such as creating disinformation or enabling more sophisticated data poisoning attacks.

Our motivation for developing and detailing this generation methodology is rooted in the principle of “know your enemy.” To build effective defenses, it is crucial to understand and replicate the capabilities of potential attackers. The generated dataset of fake CTIs was a necessary component for training and validating our FCTICM-TC detection model, a common practice in cybersecurity and machine learning security research.

In releasing our code and data, we aim to empower fellow researchers and cybersecurity defenders to develop and benchmark more advanced detection techniques. We believe that the benefits of openly discussing and providing tools for defensive research outweigh the risks, as it fosters a more prepared and resilient security community. We advocate for the responsible use of these resources and encourage further research into robust defenses against AI-generated disinformation in the cybersecurity domain.

8. Conclusions

This paper presents an end-to-end framework for studying fake cybersecurity threat intelligence (CTI) in open-source settings. We (i) introduce a GLM-based generator (FCTIG) to synthesize realistic fake CTIs, (ii) simulate data poisoning by mixing generated and authentic reports, and (iii) develop a BERT–TextCNN classifier (FCTICM-TC) and the integrated FCTIM pipeline to identify fake CTIs.

Extensive experiments show that our generator produces linguistically fluent and diverse fake CTIs and that these texts are deceptively convincing to human practitioners. In a preliminary user study, ten cybersecurity experts achieved accuracy only marginally better than chance when distinguishing real from fake items, highlighting the real-world risk posed by such content. On detection, FCTICM-TC consistently outperforms classic deep learning baselines and most recent detectors, with improvements that are statistically significant (paired t-tests, p < 0.05). Against strong modern baselines (e.g., MPU), our method achieves a lower (better) LMI score, indicating reduced operational harm from misclassification. Meanwhile, its inference cost remains practical (comparable to BERT/MPU and substantially faster than heavier pre-trained pipelines), suggesting suitability for integration into time-sensitive security workflows.

We acknowledge limitations that motivate future work. First, broader validation on third-party CTI corpora and professionally curated feeds (e.g., MISP/OpenCTI) is needed to further assess generalizability. Second, evaluating adversarial robustness—such as paraphrase-level or instruction-level attacks explicitly crafted to evade detectors—remains an important next step. Finally, we plan to engineer a lightweight service (plugin/API) that provides real-time trust scores for incoming CTI within SOC pipelines and sharing platforms, bridging research and deployment.

Author Contributions

Conceptualization, J.Q.; Methodology, J.Q., X.Z. and S.C.; Software, X.Z.; Validation, J.Q. and X.Z.; Writing—original draft preparation, X.Z. and S.C.; Writing—review and editing, X.Z.; Visualization, X.Z.; Supervision, Z.L.; Project administration, J.Q.; Funding acquisition, J.Q. and Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Smart Manufacturing New Model Application Project, Ministry of Industry and Information Technology, grant number ZH-XZ-18004.

Data Availability Statement

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Du, Z.; Qian, Y.; Liu, X.; Ding, M.; Qiu, J.; Yang, Z.; Tang, J. GLM: General language model pretraining with autoregressive blank infilling. arXiv 2021, arXiv:2103.10360.

- Sun, Y.; Wang, S.; Feng, S.; Ding, S.; Pang, C.; Shang, J.; Liu, J.; Chen, X.; Zhao, Y.; Lu, Y.; et al. ERNIE 3.0: Large-scale knowledge enhanced pre-training for language understanding and generation. arXiv 2021, arXiv:2107.02137.

- Bai, J.; Bai, S.; Chu, Y.; Cui, Z.; Dang, K.; Deng, X.; Fan, Y.; Ge, W.; Han, Y.; Huang, F.; et al. Qwen technical report. arXiv 2023, arXiv:2309.16609.

- Naveed, H.; Khan, A.U.; Qiu, S.; Saqib, M.; Anwar, S.; Usman, M.; Akhtar, N.; Barnes, N.; Mian, A. A comprehensive overview of large language models. arXiv 2023, arXiv:2307.06435.

- Guo, B.; Zhang, X.; Wang, Z.; Jiang, M.; Nie, J.; Ding, Y.; Yue, J.; Wu, Y. How close is ChatGPT to human experts? Comparison corpus, evaluation, and detection. arXiv 2023, arXiv:2301.07597.

- Ranade, P.; Piplai, A.; Mittal, S.; Joshi, A.; Finin, T. Generating fake cyber threat intelligence using transformer-based models. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–9.

- Tian, Y.; Chen, H.; Wang, X.; Bai, Z.; Zhang, Q.; Li, R.; Xu, C.; Wang, Y. Multiscale positive-unlabeled detection of AI-generated texts. arXiv 2023, arXiv:2305.18149.

- Li, J.h. Cyber security meets artificial intelligence: A survey. Front. Inf. Technol. Electron. Eng. 2018, 19, 1462–1474.

- Tseng, P.; Yeh, Z.; Dai, X.; Liu, P. Using LLMs to Automate Threat Intelligence Analysis Workflows in Security Operation Centers. arXiv 2024, arXiv:2407.13093.

- Song, Z.; Tian, Y.; Zhang, J.; Hao, Y. Generating fake cyber threat intelligence using the GPT-Neo model. In Proceedings of the 2023 8th International Conference on Intelligent Computing and Signal Processing (ICSP), Xi’an, China, 21–23 April 2023; pp. 920–924.

- Dushyant, K.; Muskan, G.; Annu.; Gupta, A.; Pramanik, S. Utilizing machine learning and deep learning in cybesecurity: An innovative approach. In Cyber Security and Digital Forensics; Wiley: Hoboken, NJ, USA, 2022; pp. 271–293.

- Awan, S.; Luo, B.; Li, F. CONTRA: Defending against poisoning attacks in federated learning. In Proceedings of the Computer Security–ESORICS 2021: 26th European Symposium on Research in Computer Security, Darmstadt, Germany, 4–8 October 2021; Proceedings, Part I 26. Springer: Cham, Switzerland, 2021; pp. 455–475.

- Chen, H.; Zhang, Y.; Cao, Y.; Xie, J. Security issues and defensive approaches in deep learning frameworks. Tsinghua Sci. Technol. 2021, 26, 894–905.

- AnHeng. Suspected to Be a New Activity by Ocean Lotus, the Attack Target Seems to Be Large Chinese Enterprises; Technical Report; AnHeng: Beijing, China, 2021.

- Singh, A.; Kanishka; Dubey, S.K. Analytical Approach Towards Cybersecurity Through AI-Enabled Threat Intelligence. In Proceedings of the 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 14–15 March 2024; pp. 1–6.

- Durai pandian, A.p. Variational Autoencoders using Convolutional neural network for highly advanced cyber threats. In Proceedings of the 2024 IEEE Integrated STEM Education Conference (ISEC), Princeton, NJ, USA, 9 March 2024; pp. 1–6.

- Wu, Z.; Tang, F.; Zhao, M.; Li, Y. KGV: Integrating Large Language Models with Knowledge Graphs for Cyber Threat Intelligence Credibility Assessment. arXiv 2024, arXiv:2408.08088.

- Yang, L.; Wang, M.; Lou, W. An automated dynamic quality assessment method for cyber threat intelligence. Comput. Secur. 2025, 148, 104079.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, L.K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, p. 2.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-rank adaptation of large language models. arXiv 2021, arXiv:2106.09685.

- Dai, Z.; Wang, X.; Ni, P.; Li, Y.; Li, G.; Bai, X. Named entity recognition using BERT BiLSTM CRF for Chinese electronic health records. In Proceedings of the 2019 12th International Congress on Image and Signal Processing, Biomedical Engineering and Informatics (CISP-BMEI), Suzhou, China, 19–21 October 2019; pp. 1–5.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014.

- Conneau, A.; Schwenk, H.; Barrault, L.; Lecun, Y. Very deep convolutional networks for text classification. arXiv 2016, arXiv:1606.01781.

- Li, S.; Zhao, Z.; Liu, T.; Hu, R.; Du, X. Initializing convolutional filters with semantic features for text classification. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 1884–1889.

- Liu, T.; Yu, S.; Xu, B.; Yin, H. Recurrent networks with attention and convolutional networks for sentence representation and classification. Appl. Intell. 2018, 48, 3797–3806.

- Chen, T.; Xu, R.; He, Y.; Wang, X. Improving sentiment analysis via sentence type classification using BiLSTM-CRF and CNN. Expert Syst. Appl. 2017, 72, 221–230.

- Li, Z.; Yu, X.; Wei, T.; Qian, J. Unstructured Big Data Threat Intelligence Parallel Mining Algorithm. Big Data Min. Anal. 2024, 7, 531–546.

- Xiong, A.; Liu, D.; Tian, H.; Liu, Z.; Yu, P.; Kadoch, M. News keyword extraction algorithm based on semantic clustering and word graph model. Tsinghua Sci. Technol. 2021, 26, 886–893.

- Zeng, R.; Liu, H.; Peng, S.; Cao, L.; Yang, A.; Zong, C.; Zhou, G. CNN-based broad learning for cross-domain emotion classification. Tsinghua Sci. Technol. 2022, 28, 360–369.

- Yenigalla, P.; Kar, S.; Singh, C.; Nagar, A.; Mathur, G. Addressing unseen word problem in text classification. In Proceedings of the Natural Language Processing and Information Systems: 23rd International Conference on Applications of Natural Language to Information Systems, NLDB 2018, Paris, France, 13–15 June 2018; Proceedings 23. Springer: Cham, Switzerland, 2018; pp. 339–351.

- Zhang, Y.; Wang, Q.; Li, Y.; Wu, X. Sentiment Classification Based on Piecewise Pooling Convolutional Neural Network. Comput. Mater. Contin. 2018, 56, 285.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. BLEU: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

- Li, J.; Galley, M.; Brockett, C.; Gao, J.; Dolan, B. A diversity-promoting objective function for neural conversation models. arXiv 2015, arXiv:1510.03055.

- Li, J.; Singh, R. DNA-GPT: Detecting AI-Generated Text by Analyzing its Linguistic and Statistical Artifacts. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024; pp. 1021–1032.

- Vasiliev, A.; Chen, W. RoBERTa-D: A Robust Pre-trained Model for AI-Generated Text Detection. arXiv 2024, arXiv:2402.11223.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).