Abstract

Artificial intelligence (AI), particularly large language models (LLMs) such as ChatGPT, has attracted attention for its potential to enhance diagnostic decision-making. This study aimed to compare the implant planning decisions of clinicians from different disciplines with those of ChatGPT and to evaluate the potential integration of AI into clinical decision-making. In this cross-sectional, survey-based study, the implant planning decisions of prosthodontic and oral and maxillofacial surgery residents were compared with each other and with those of ChatGPT 4.0. Fourteen cases were presented to 20 prosthodontics residents, 20 oral and maxillofacial surgery residents, and ChatGPT. Participants selected the option they found most appropriate, and responses were compared case by case using chi-square tests (significance level p < 0.05). No statistically significant differences were found between the two resident groups. However, AI and clinician responses differed significantly in 11 of the 14 scenarios. In the scenarios without significant differences, which involved less complicated cases, human participants and the AI tended to make the same choices. Although AI is advancing in clinical decision-making, its responses to clinical scenarios may be inconsistent: it aligned with clinicians in simple cases but diverged in complex ones. While AI is promising as a decision-support tool, broader studies with more diverse scenarios are needed before it can be integrated into clinical decision-making.

1. Introduction

The use of dental implants has evolved over the years from an experimental approach to a reliable treatment option with high predictability for replacing missing teeth with implant-supported prostheses. Today, it is widely used in daily clinical practice for both complete and partial edentulism. Contemporary implant therapy offers significant functional and biological advantages for many patients compared to traditional fixed or removable prostheses [1].

Advancements and innovations in dental implant systems have enabled successful outcomes. However, the success of dental implants does not depend solely on the properties of the implant material. Detailed surgical plans that take into account the existing bone structure and the type of prosthetic rehabilitation to be performed are critical for success [2]. Comprehensive preoperative planning, both surgical and prosthetic, enables accurate implant placement and restorations that meet functional and aesthetic expectations [2,3].

During diagnosis and treatment planning, the clinician must carefully evaluate both prosthetic and anatomical limitations when selecting an alveolar bone region of sufficient quality for the safe and ideal placement of implants [4]. Implant planning requires the collaboration of many specialists, including radiologists, oral and maxillofacial surgeons, periodontists, and prosthodontists. This multidisciplinary approach is essential for optimal planning; however, it is often time-consuming and does not always yield a single correct approach [5,6].

Recently, artificial intelligence (AI), particularly large language models (LLMs) such as ChatGPT (OpenAI, San Francisco, CA, USA), has attracted attention for its potential to enhance diagnostic decision-making and risk classification [7]. As in other healthcare fields, AI is becoming part of presurgical planning for dental implants [8,9]. Machine learning (ML), deep learning (DL), and artificial neural networks (ANNs), which are subfields of AI, offer a wide range of applications, from the analysis of dental images to treatment planning, optimization of implant design, and outcome prediction [10]. AI-supported systems contribute to challenging clinical tasks such as identifying anatomical structures in cone-beam computed tomography (CBCT) images [11], identifying implant brands [12], evaluating bone volume, and supporting surgical protocols [13]. Furthermore, advanced applications such as predicting implant failure risk [14] and robot-assisted surgical systems [15] demonstrate the potential of this technology. These developments enable personalized, data-driven approaches in implantology, with the potential to make clinical decision-making more reliable and effective [16]. Although recent studies have evaluated AI in implant planning, they have mainly focused on true–false questions [17] or literature-based assessments [18]; the present study differs by comparing AI responses to real clinical scenarios with clinicians’ treatment-planning decisions, providing a more practice-oriented evaluation.

This study aimed to compare the implant treatment planning decisions of prosthodontic and oral and maxillofacial surgery residents with those generated by ChatGPT 4.0. Specifically, the consistency of approaches between the two disciplines, the differences in decision-making between ChatGPT and the resident groups, the clinical criteria prioritized by each group, and the proposed treatment plans were analyzed. The null hypothesis was that the clinical decision-making tendencies of clinicians from different disciplines and those of AI would not differ from each other.

2. Materials and Methods

2.1. Study Design and Ethical Approval

This study compared the decisions made by clinicians from different disciplines in dental implant planning with the responses provided by ChatGPT 4.0 (OpenAI, GPT-4), a large language model-based AI system. The study was conducted with ethical approval from the Non-Interventional Clinical Research Ethics Committee of Ankara University Faculty of Dentistry (Meeting No: [9/38], Date: [5 May 2025]).

2.2. Case Scenario Preparation

Fourteen anonymized real patient cases with pre-implant-surgery panoramic radiographs were selected retrospectively from the patient records of the Department of Prosthodontics at Ankara University. The selection was performed jointly by one prosthodontist and one oral surgeon to represent common clinical scenarios in implantology, ranging from simpler to more complex cases (single-tooth loss, partial edentulism, and full-arch cases). Cases with poor radiographic quality, systemic contraindications, or unclear treatment history were excluded. To facilitate responses from both clinicians and the AI model, tomographic data, interocclusal distance, and other relevant surgical and prosthetic information were provided in written form, while only panoramic radiographs were presented visually. Because cone-beam computed tomography (CBCT) datasets exceeded the upload limits, CBCT images were not provided; instead, all clinically relevant three-dimensional information obtainable from CBCT was summarized in the case descriptions to ensure sufficient data for treatment planning. For standardization, all patients in the case scenarios were assumed to have good systemic health, adequate oral hygiene, and no financial restrictions. Four alternative management options were prepared for each case, drafted by one senior prosthodontist and one oral surgeon with more than 10 years of experience. The options were reviewed for clinical plausibility and to ensure that at least two alternatives represented commonly accepted approaches, while the others reflected less preferred but still clinically possible options (not intentionally wrong or contraindicated). The aim was not to distinguish “correct vs. incorrect” answers but to evaluate the preference tendencies of clinicians and the AI when faced with multiple feasible solutions.
The scenarios covered surgical parameters such as the number, size, location, and position of implants, the need for additional surgical procedures, and the placement protocol, as well as prosthetic factors including prosthesis type and number, location, abutment selection, material preferences, and loading protocols. The relative weight of prosthetic and surgical considerations varied from question to question. The panoramic radiographs, case descriptions, and four treatment options for each case are presented in Figures 1–7.

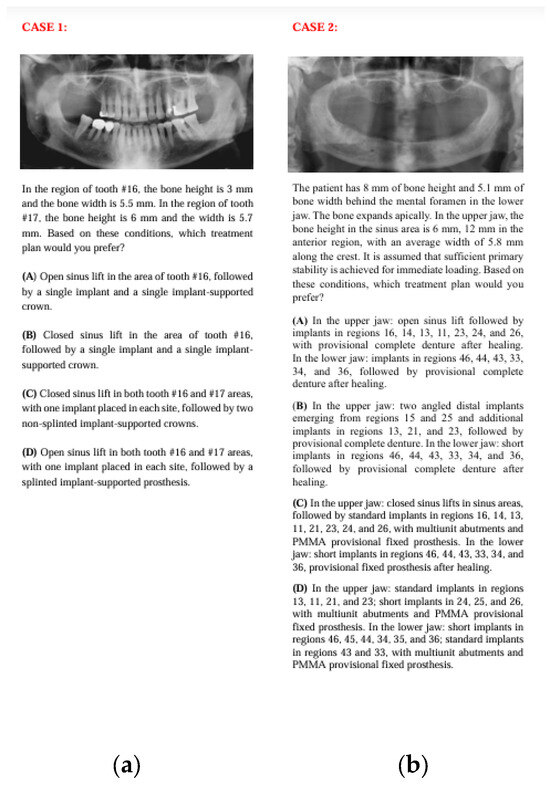

Figure 1.

The panoramic radiographs, descriptions, and four treatment options of Case 1 (a) and Case 2 (b).

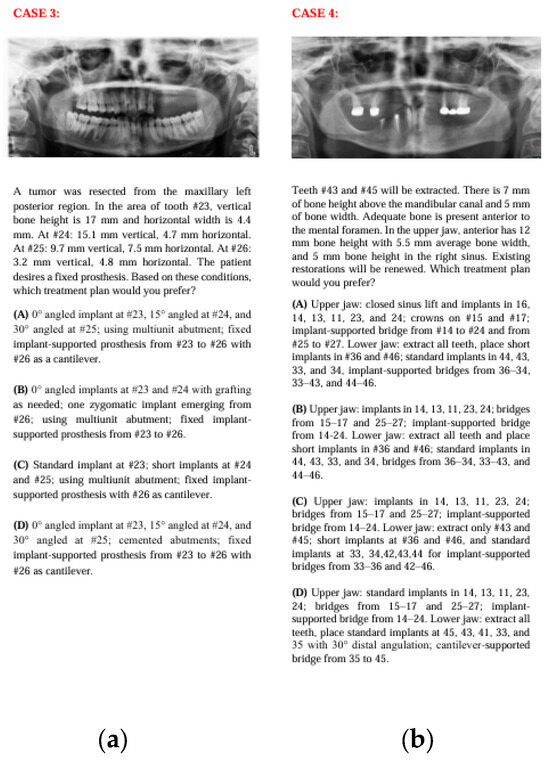

Figure 2.

The panoramic radiographs, descriptions, and four treatment options of Case 3 (a) and Case 4 (b).

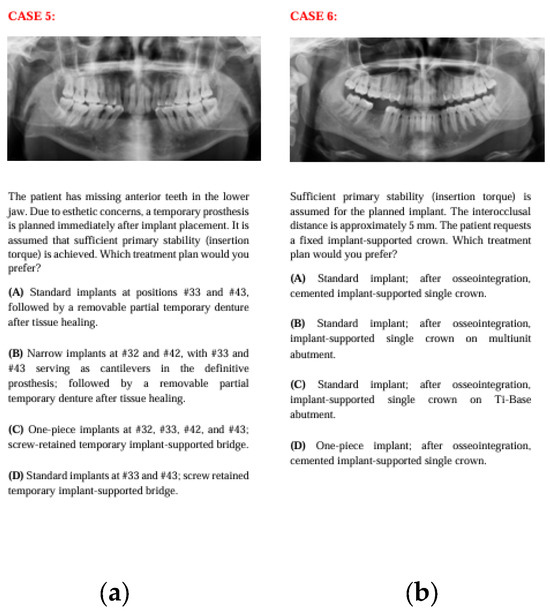

Figure 3.

The panoramic radiographs, descriptions, and four treatment options of Case 5 (a) and Case 6 (b).

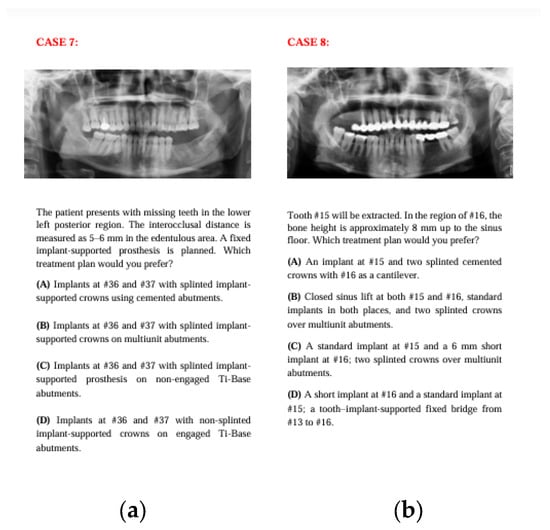

Figure 4.

The panoramic radiographs, descriptions, and four treatment options of Case 7 (a) and Case 8 (b).

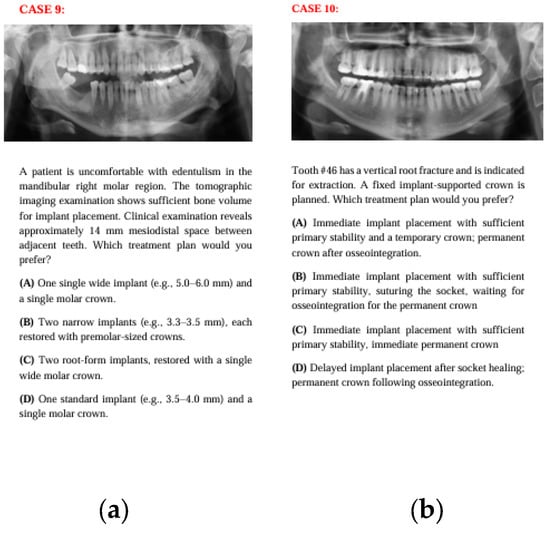

Figure 5.

The panoramic radiographs, descriptions, and four treatment options of Case 9 (a) and Case 10 (b).

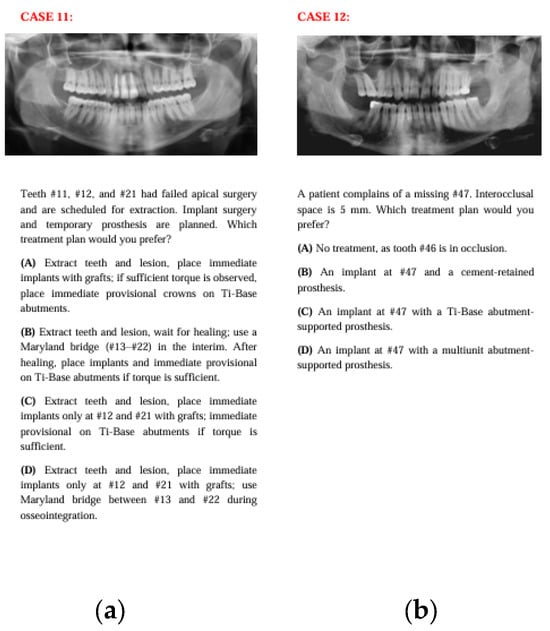

Figure 6.

The panoramic radiographs, descriptions, and four treatment options of Case 11 (a) and Case 12 (b).

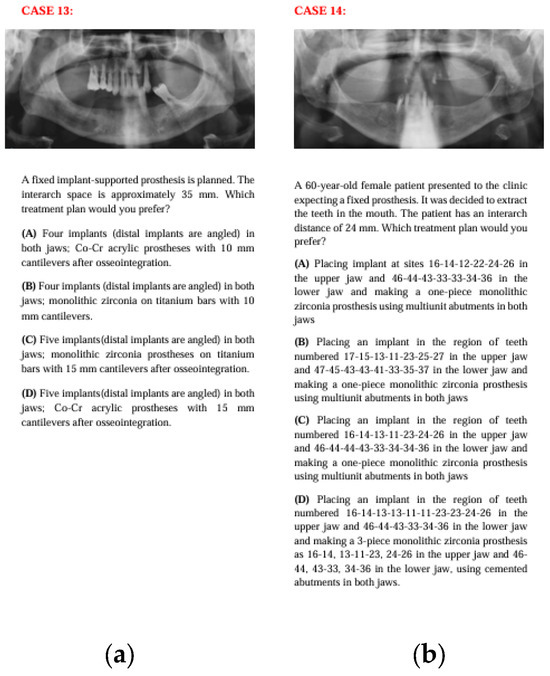

Figure 7.

The panoramic radiographs, descriptions, and four treatment options of Case 13 (a) and Case 14 (b).

2.3. Participant Groups

The prepared case scenarios, along with panoramic radiographs and case descriptions, were presented to three groups:

- 20 residents in the Department of Prosthodontics

- 20 residents in the Department of Oral and Maxillofacial Surgery

- ChatGPT 4.0 AI model

Residents with at least 3 years of experience were selected for the survey.

2.4. Collection of Responses

The 14 case scenarios, including panoramic radiographs and explanatory texts, were shared with participants via an online survey form created on Google Drive (Google LLC, Mountain View, CA, USA). Only invited surgical and prosthodontic residents could access the form, and each participant could respond only once. Four planning options were presented for each case, and participants were asked to select the alternative they found most appropriate. The study’s purpose was explained at the start of the form, and online informed consent was obtained.

ChatGPT 4.0 answered each scenario under the same conditions, using standard prompts, twice daily for ten days, generating 20 AI responses per case. The conversation history was cleared each time, and responses were obtained without additional guidance. Collecting multiple outputs provides a more representative sample of the AI’s behavior, reduces potential bias from any single atypical response, and mirrors the variability of responses across the 40 residents from two specialties. This approach is intended to improve the fairness and generalizability of comparisons between human- and AI-generated answers.

2.5. Data Collection and Analysis

Survey responses were recorded automatically and blinded to participant identity to ensure impartiality. Surgical, prosthodontic, and AI responses were compared case by case. For each case, ChatGPT generated 20 independent responses, all of which were treated as individual data points in the statistical analysis. No aggregation or majority-vote method was applied, so each AI response contributed separately to the evaluation and the variability in the AI’s decision-making was fully captured. Group comparisons were performed with the chi-square test, and agreement between groups was assessed with Cohen’s kappa. Analyses were performed in IBM SPSS Statistics for Windows, Version 22.0 (IBM Corp., Armonk, NY, USA), with the significance level set at p < 0.05.
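The counting rule described above (every AI response is a separate data point, with no majority vote) can be illustrated with a minimal Python sketch. It assumes each session’s answer is stored as a single option letter; the helper names (`option_counts`, `majority_vote`, `modal_share`) and the answer list are hypothetical, not the study’s data or its SPSS workflow.

```python
from collections import Counter

OPTIONS = ("A", "B", "C", "D")  # the four management options offered per case

def option_counts(responses):
    """Count how often each option was chosen; every response is one data point."""
    counts = Counter(responses)
    unexpected = set(counts) - set(OPTIONS)
    if unexpected:
        raise ValueError(f"unexpected options: {sorted(unexpected)}")
    return [counts[opt] for opt in OPTIONS]  # Counter returns 0 for unseen options

def majority_vote(responses):
    """The collapsing step the study did NOT apply: keep only the modal option."""
    return Counter(responses).most_common(1)[0][0]

def modal_share(responses):
    """Fraction of responses agreeing with the modal option (a simple consistency index)."""
    return Counter(responses).most_common(1)[0][1] / len(responses)

# Hypothetical answers from 20 sessions (10 days x 2 per day) for one case.
ai_answers = ["C"] * 17 + ["A"] + ["D"] * 2

print(option_counts(ai_answers))  # [1, 0, 17, 2] -> one row of a contingency table
print(majority_vote(ai_answers))  # C -> all that a majority vote would keep
print(modal_share(ai_answers))    # 0.85
```

Keeping the full count row preserves the three dissenting answers that a majority vote would discard. `modal_share` is an added illustration of response stability, not a measure reported in the study.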

3. Results

The 14 case-based clinical scenarios, supported by panoramic radiographs, were posed to 40 clinicians from two disciplines and to ChatGPT, which was queried twice daily for ten days, yielding 20 AI responses per case. For each scenario, participants selected their preferred treatment option among four alternatives. The response distributions are presented in Table 1.

Table 1.

Case-based Response Distribution for the Two Specialties and AI.

No statistically significant difference was observed between prosthodontic and surgical residents (p > 0.05), apart from natural variability in their choices. In contrast, AI and human responses did not differ significantly in three of the 14 case scenarios but differed significantly in the remaining 11 (p < 0.05).
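The per-case comparisons above were run in SPSS. As a hedged illustration of what a Pearson chi-square test of independence computes for one case, the dependency-free Python sketch below derives expected counts from the row and column totals and converts the statistic to a p-value using closed-form chi-square tail probabilities. The text does not state whether each case was tested as a 2 × 4 table (all residents vs. AI) or a 3 × 4 table (both specialties and AI), so the function accepts any groups-by-options table; the counts shown are invented for illustration and are not taken from Table 1.

```python
import math

def chi2_sf(x, df):
    """Upper-tail probability P(X >= x) for a chi-square variable with df degrees of freedom."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{i=0}^{df/2 - 1} (x/2)^i / i!
        term = math.exp(-x / 2)
        total = term
        for i in range(1, df // 2):
            term *= (x / 2) / i
            total += term
        return total
    # Odd df: erfc(sqrt(x/2)) plus a finite series (empty when df == 1)
    total = math.erfc(math.sqrt(x / 2))
    term = math.sqrt(2 * x / math.pi) * math.exp(-x / 2)
    for r in range(1, (df - 1) // 2 + 1):
        total += term
        term *= x / (2 * r + 1)
    return total

def chi2_independence(table):
    """Pearson chi-square test of independence for a groups x options table of counts.

    Returns (statistic, df, p_value, share_of_cells_with_expected_count_below_5).
    """
    # Drop options nobody chose (and empty groups): they would give zero expected counts.
    cols = [j for j in range(len(table[0])) if any(row[j] for row in table)]
    table = [[row[j] for j in cols] for row in table if any(row[j] for j in cols)]
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    n = sum(row_tot)
    stat, small = 0.0, 0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_tot[i] * col_tot[j] / n
            if expected < 5:
                small += 1
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(cols) - 1)
    return stat, df, chi2_sf(stat, df), small / (len(table) * len(cols))

# Hypothetical counts for one case: rows = groups, columns = options A-D.
residents = [6, 4, 25, 5]  # 40 residents (not the study's data)
ai = [0, 0, 20, 0]         # 20 ChatGPT sessions (not the study's data)
stat, df, p, small_share = chi2_independence([residents, ai])
print(f"chi2 = {stat:.2f}, df = {df}, p = {p:.4f}, cells with E < 5: {small_share:.0%}")
```

The last return value reports the share of cells with expected counts below 5; a commonly cited rule of thumb is that this share should not exceed 20%, and an exact test is often preferred when it does, which is worth checking with 20 responses per group spread over four options.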

Cohen’s kappa was 0.270 between the AI and the prosthodontics group, 0.213 between the AI and the surgical group, and 0.618 between the prosthodontics and surgical groups.
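Cohen’s kappa compares observed agreement with the agreement expected by chance, κ = (p_o − p_e)/(1 − p_e). The text does not specify how the group responses were paired into item-by-item labels (for example, each group’s most frequent option for each of the 14 cases), so the sketch below assumes only that two equally long label sequences are available; the function name and the inputs are illustrative toy values, not study data.

```python
import math
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(labels_a)
    # Observed agreement: share of items given the same label by both raters.
    p_obs = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of each rater's marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_exp = sum(freq_a[k] * freq_b[k] for k in freq_a) / n ** 2
    if p_exp == 1:
        return math.nan  # both raters used one identical label throughout: kappa undefined
    return (p_obs - p_exp) / (1 - p_exp)

# Toy example: four items, the raters disagree on the second one.
print(cohens_kappa(list("AABB"), list("ABBB")))  # 0.5
```

Here p_o = 0.75 and p_e = 0.5, so κ = 0.5: half of the agreement that was possible beyond chance was achieved.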

4. Discussion

Clinical decision-making in implant planning requires consideration of multiple variables, so clinicians often do not agree on a single treatment option. With the increasing integration of AI into various fields, including healthcare, the potential of AI-based language models such as ChatGPT 4.0 to guide clinical decision-making in implant-supported treatment planning warrants exploration.

The null hypothesis of this study was that the clinical decision-making tendencies of AI and of clinicians from different disciplines would not differ significantly from each other. The findings led to the rejection of this hypothesis: although no significant differences were observed between the two clinician groups, AI-generated and human decisions differed significantly in most scenarios.

The survey scenarios were designed to reflect commonly encountered clinical cases that allow more than one treatment plan. Panoramic radiographs were provided to support decision-making, while tomographic data and relevant clinical details were summarized in writing to minimize data load and standardize the evaluation.

To reflect the complexity of clinical decision-making while ensuring structured comparison, responses were limited to four predetermined options per case, and participants selected the one most closely aligned with their own preferred plan. The primary aim was not to identify “right vs. wrong” answers but to compare decision-making patterns; specifically, to assess whether ChatGPT aligns with human clinicians in prioritizing surgical versus prosthetic considerations when multiple valid treatment pathways exist.

The diversity of surgical and prosthetic considerations across questions enabled analysis of prioritization patterns in AI and human decision-making. For example, surgical parameters often took precedence in posterior cases with limited bone support, whereas anterior cases required prioritizing prosthetic and aesthetic considerations. In certain questions, the options represented either conservative or more invasive surgical approaches. Similarly, alternatives regarding abutment type and material selection were included to assess whether clinicians and the AI model would consider potential long-term prosthetic complications. This diversity may help identify which factors clinicians and the AI model prioritize, and it allows the reliability of AI responses to be examined across clinical situations of varying complexity.

ChatGPT sometimes gave different answers to the same case when queried at different times. This is expected, because language models generate responses by sampling from probability distributions rather than by applying fixed rules. Such variability may be acceptable in general conversation, but it is problematic in clinical work, where reliable and reproducible decisions are essential. Further studies are therefore needed to improve the stability and consistency of AI responses before they can be trusted in implant planning.
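To illustrate why repeated queries can return different options, the sketch below samples one of the four options from a softmax distribution controlled by a temperature parameter. This is a deliberate simplification: real LLMs sample text token by token, ChatGPT’s decoding settings were neither controlled nor reported in this study, and the scores in `logits` and the helper names are hypothetical.

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn scores into probabilities; a lower temperature sharpens the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_answers(logits, n, temperature, seed=0):
    """Draw n independent answers, as if each query started a fresh conversation."""
    rng = random.Random(seed)
    probs = softmax(logits, temperature)
    return rng.choices("ABCD", weights=probs, k=n)

# Hypothetical preference scores for options A-D of one case (not measured in this study).
logits = [1.0, 0.2, 1.4, -0.5]

print(softmax(logits))                   # probabilities for A-D, summing to 1
print(sample_answers(logits, 20, 1.0))   # typically a mix of options across sessions
print(sample_answers(logits, 20, 0.01))  # near-greedy: the top-scoring option every time
```

At a moderate temperature the same case yields several different options over 20 sessions, whereas a very low temperature makes the output effectively deterministic; this is one reason why reproducibility depends on decoding settings that end users of a chat interface typically cannot see.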

Since implant treatment planning requires both surgical and prosthetic competence, this study evaluated the two disciplines separately. No significant difference was found between them, which may reflect a shared educational approach, as both groups were trained within the same faculty. Similar studies conducted in other dental faculties may reveal differences in interdisciplinary clinical decision-making; further research is needed to determine this.

A significant difference between human and AI responses was found in 11 of the 14 questions. This may be related to differences in how humans and the AI acquire knowledge and to the varying difficulty of the questions.

In the clinical scenarios without statistically significant differences, human participants and the AI tended to concentrate on the same choices. These scenarios involved less complicated partial edentulism cases, which clinicians frequently encounter; such cases may also be easier for AI to process and may be better represented in the literature.

According to the Cohen’s kappa analysis, agreement between the AI and the prosthodontics group was fair (κ = 0.270), as was agreement between the AI and the oral and maxillofacial surgery group (κ = 0.213). In contrast, agreement between the prosthodontics and oral and maxillofacial surgery groups was substantial (κ = 0.618). These findings suggest that, while residents from different specialties were relatively consistent in their assessments, the AI system showed limited alignment with either clinician group. This discrepancy may highlight the need for further refinement of AI models to better reflect expert clinical judgment, especially across different dental specialties.
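The verbal labels used in this paragraph correspond to the widely used Landis and Koch (1977) benchmark bands. The minimal Python helper below assumes those bands (how values falling exactly on a band boundary are assigned is a choice made here) and maps the three reported kappa values to their labels; the function name is illustrative.

```python
def landis_koch(kappa):
    """Map a kappa value to the Landis and Koch (1977) agreement label."""
    if kappa > 1:
        raise ValueError("kappa cannot exceed 1")
    if kappa < 0:
        return "poor"
    # Upper bound of each band, checked in increasing order.
    bands = [(0.20, "slight"), (0.40, "fair"), (0.60, "moderate"),
             (0.80, "substantial"), (1.00, "almost perfect")]
    for upper, label in bands:
        if kappa <= upper:
            return label

# Kappa values reported in the Results section.
for pair, k in [("AI vs Pros", 0.270), ("AI vs OMFS", 0.213), ("Pros vs OMFS", 0.618)]:
    print(f"{pair}: {k:.3f} -> {landis_koch(k)}")
```

Both AI-versus-resident values fall in the "fair" band, while the between-specialty value falls in the "substantial" band, matching the interpretation given above.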

In Case 1, AI predominantly chose option C, while human participants chose option D. AI’s preference for option C, which involved a closed sinus lift and non-splinted crowns, may indicate that it approaches the issue differently from clinicians in both surgical and prosthetic perspectives. AI, being literature-focused, may have leaned toward minimally invasive surgical procedures and designs closer to the natural dentition. In contrast, human intelligence, based on experience, may have prioritized providing a safer surgical field through open sinus lift and improving biomechanical load distribution by splinting crowns.

In Case 2, although option C was the most preferred in all three groups, the distribution differed significantly between human participants and the AI. The AI consistently chose option C, whereas residents also selected other options. This may be due to the extensive evidence in the literature suggesting that increasing the number of implants enhances treatment success. Additionally, fixed rather than removable temporary restorations may have been considered more advantageous in terms of aesthetics and function.

In Case 3, the AI chose option A, planning tilted implants with a cantilevered fixed prosthesis, whereas the residents chose option B, planning posterior support using grafts and zygomatic implants. The AI thus appeared to avoid complex surgical procedures in favor of minimally invasive methods. Additionally, given the limited literature on zygomatic implants, the AI may have opted for more commonly documented solutions. Human participants, by contrast, may have leaned toward more aggressive yet contemporary surgical methods for certain cases, based on clinical experience.

In Case 4, while AI and human intelligence preferred the same planning for the maxilla, they differed in their approaches for the mandible. AI preferred a more conservative approach by preserving some of the lower teeth, whereas human intelligence, possibly using clinical foresight to prevent potential future complications, opted for a more radical approach, planning for full-arch implant-supported prostheses after extracting all lower teeth. Additionally, AI may have preferred using short implants to avoid cantilevers, while clinicians tended to place distally tilted implants to design prostheses with cantilevers instead.

In Case 7, although option C was the most preferred in all groups, the distribution differed significantly between human participants and the AI. This could be because clinicians varied their abutment choices according to individual experience and clinical variables such as interocclusal space and retention, whereas the AI may have responded solely on the basis of the available data. While the AI may have considered non-splinted crowns advantageous for their natural anatomy in Case 1, in Case 7 it may have preferred splinted crowns in the posterior region to optimize load distribution. This indicates that AI can give different answers to similar clinical cases, raising questions about its reliability in clinical decision-making.

In Case 8, the AI predominantly chose option C, likely favoring a less invasive and faster solution that avoids additional surgery by using short implants instead of sinus lifts. Clinicians, on the other hand, may have favored standard-length implants with sinus lifts, considering them more predictable than short implants in terms of case safety and clinical experience.

In Case 10, although all three groups predominantly recommended immediate loading (option A), some clinicians preferred delayed loading (option B), which led to a statistically significant difference in distribution. The AI may have recommended immediate loading based on primary stability criteria in the literature, whereas these clinicians may have weighed the risk of biological complications and opted for a more traditional loading protocol. This reflects differing priorities among the groups in the clinical decision-making process.

In Case 11, where the case involved anterior esthetics and immediate implantation, clinicians predominantly chose options C and D, while AI chose option A. This may be because clinicians aimed to avoid the risk of placing implants across the entire area in an anterior region with a history of lesions or, guided by clinical intuition, leaned toward treatments with fewer implants or plans like option D that involve waiting for osseointegration to reduce complications. AI, on the other hand, may have leaned toward option A, which aligns with the current literature emphasizing the success of immediate implantation with immediate provisional prostheses in the esthetic zone, aiming to place as many implants as possible.

In Case 12, although option C was the most preferred across all groups, a significant distribution difference was found between human and artificial intelligence. In this question evaluating abutment types in single-implant restorations, clinicians, based on varied clinical experiences, opted for different choices, whereas AI consistently preferred single crown restorations on Ti-base abutments, which are frequently highlighted in the recent literature.

In Case 13, which involved multiple decisions regarding implant number, cantilever length, and prosthetic material for full-arch fixed prostheses, the AI preferred an option with fewer implants and shorter cantilevers using a Ti bar, whereas human participants opted for restorations with more implants and longer cantilevers. This indicates potential differences in how human participants and the AI prioritize factors during surgical and prosthetic planning. That the AI, which tended to increase implant numbers in other cases, opted here for fewer implants with shorter cantilevers raises further questions about its consistency in clinical decision-making.

In Case 14, the AI recommended placing six implants in each arch and fabricating a single-piece monolithic zirconia prosthesis on multi-unit abutments. Clinicians, however, preferred a three-piece prosthesis, considering factors such as long-term use in fully edentulous patients, the feasibility of segmental approaches during later regional surgical or prosthetic interventions, and the reduced technical sensitivity during laboratory procedures compared to screw-retained structures. This difference may reflect a contrast between an AI optimization approach focused on production and durability and a clinical practice that prioritizes patient-centered, feasible, and sustainable solutions.

It should be noted that the AI model relied on text-based decision-making and could not directly assess patient behavior or examination findings, nor apply clinical intuition. While the AI model’s knowledge was limited to online information, the clinicians had access not only to online data but also to printed sources, training materials, clinical experience, and academic publications, which may have influenced their decisions. ChatGPT’s observed tendency toward conservative treatment options may reflect this fundamental divergence from clinicians’ clinical intuition and individualized decision-making. Because large language models such as ChatGPT are trained on accessible literature and similar sources, where conservative approaches are often prioritized, the model is likely to rate options that minimize potential complications as preferable and to adopt this cautious stance as a reference point. This inclination may be balanced in the future through richer integration of patient-specific data and more context-sensitive algorithms.

Although this study evaluated decision-making in implant planning, it has several limitations. First, the scenarios were limited to written case descriptions and panoramic images; complementary clinical data such as 3D radiographic images, clinical photographs, and intraoral examination findings were not included, which may have influenced participants’ planning differently than in real clinical settings. Panoramic radiographs alone do not fully reflect real-world practice, where CBCT imaging and comprehensive clinical examination are essential for accurate treatment planning.

Elgarba et al. [9] reported that CBCT-based AI implant planning achieved quality comparable to that of human experts while being substantially faster and more consistent. In contrast, in the present study, in which ChatGPT received panoramic radiographs and written case summaries, the AI produced more conservative and significantly different decisions in most cases, possibly because of the limited input data. These findings suggest that task-specific models trained on three-dimensional datasets can approach human performance, whereas general-purpose large language models remain more cautious and literature-driven. In addition, this study evaluated only ChatGPT 4.0; future studies comparing different AI models could evaluate AI’s contribution to decision-making in implant planning more comprehensively.

Additionally, due to the limited number of studies in the literature directly comparing AI and human expert preferences in dental implant planning, the findings of this study should be considered exploratory, and larger-scale studies are needed in the future.

5. Conclusions

Although statistical tests showed no significant differences between prosthodontic and surgical residents, some variation in their treatment choices was observed. ChatGPT’s decisions differed significantly from those of the residents in 11 of the 14 scenarios, and its preference for conservative treatments highlights a key difference from clinicians’ individualized decision-making. AI should be viewed as a complementary aid rather than a substitute for expert clinical judgment, and further research with larger and more diverse case sets and multiple AI models is needed to strengthen its role in routine clinical practice.

Author Contributions

Conceptualization, E.A.B. and Ö.N.Ö.; methodology, F.G.; validation, F.G., Ö.N.Ö. and E.A.B.; investigation, E.A.B. and F.G.; resources, F.G.; writing—original draft preparation, E.A.B. and Ö.N.Ö.; writing—review and editing, F.G.; formal analysis, C.Ö.; supervision, F.G. and C.Ö. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Non-Interventional Clinical Research Ethics Committee of Ankara University Faculty of Dentistry (Meeting No: [9/38], Date: [5 May 2025]).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The dataset used and analyzed during the current study is available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| LLMs | Large Language Models |
| ML | Machine Learning |
| DL | Deep Learning |
| ANN | Artificial Neural Networks |
| CBCT | Cone Beam Computed Tomography |
| OMFS | Oral and Maxillofacial Surgery |
| Pros | Prosthodontics |
| Ti | Titanium |
| 3D | Three Dimensional |

References

1. Buser, D.; Sennerby, L.; De Bruyn, H. Modern implant dentistry based on osseointegration: 50 years of progress, current trends and open questions. Periodontol. 2000 2017, 73, 7–21.

2. Bankoğlu, M.; Nemli, S.K. İntraoral implant planlamasında üç boyutlu görüntüleme tekniklerinin kullanımı [Use of three-dimensional imaging techniques in intraoral implant planning]. ADO Klin. Bil. Derg. 2010, 4, 564–573.

3. Mupparapu, M.; Singer, S.R. Implant imaging for the dentist. J. Can. Dent. Assoc. 2004, 70, 32.

4. Dioguardi, M.; Spirito, F.; Quarta, C.; Sovereto, D.; Basile, E.; Ballini, A.; Caloro, G.A.; Troiano, G.; Muzio, L.L.; Mastrangelo, F. Guided dental implant surgery: Systematic review. J. Clin. Med. 2023, 13, 1490.

5. Cheung, M.C.; Hopcraft, M.S.; Darby, I.B. Implant education patterns and clinical practice of general dentists in Australia. Aust. Dent. J. 2019, 64, 273–281.

6. Yoon, T.Y.H.; Muntean, S.A.; Michaud, R.A.; Dinh, T.N. Investigation of dental implant referral patterns among general dentists and dental specialists: A survey approach. Compend. Contin. Educ. Dent. 2018, 39, e13–e16.

7. Zaboli, A.; Brigo, F.; Sibilio, S.; Mian, M.; Turcato, G. Human intelligence versus ChatGPT: Who performs better in correctly classifying patients in triage? Am. J. Emerg. Med. 2024, 79, 44–47.

8. Topol, E.J. High-performance medicine: The convergence of human and artificial intelligence. Nat. Med. 2019, 25, 44–56.

9. Elgarba, B.M.; Fontenele, R.C.; Du, X.; Mureșanu, S.; Tarce, M.; Meeus, J.; Jacobs, R. Artificial intelligence versus human intelligence in presurgical implant planning: A preclinical validation. Clin. Oral Implants Res. 2025, 36, 835–845.

10. Thurzo, A.; Urbanová, W.; Novák, B.; Czako, L.; Siebert, T.; Stano, P.; Mareková, S.; Fountoulaki, G.; Kosnáčová, H.; Varga, I. Where is the artificial intelligence applied in dentistry? Systematic review and literature analysis. Healthcare 2022, 10, 1269.

11. Kurt Bayrakdar, S.; Orhan, K.; Bayrakdar, I.S.; Bilgir, E.; Ezhov, M.; Gusarev, M.; Shumilov, E. A deep learning approach for dental implant planning in cone-beam computed tomography images. BMC Med. Imaging 2021, 21, 86.

12. Michelinakis, G.; Sharrock, A.; Barclay, C.W. Identification of dental implants through the use of implant recognition software (IRS). Int. Dent. J. 2006, 56, 203–208.

13. Mangano, F.G.; Admakin, O.; Lerner, H.; Mangano, C. Artificial intelligence and augmented reality for guided implant surgery planning: A proof of concept. J. Dent. 2023, 133, 104485.

14. Huang, N.; Liu, P.; Yan, Y.; Xu, L.; Huang, Y.; Fu, G.; Lan, Y.; Yang, S.; Song, J.; Li, Y. Predicting the risk of dental implant loss using deep learning. J. Clin. Periodontol. 2022, 49, 872–883.

15. Grischke, J.; Johannsmeier, L.; Eich, L.; Griga, L.; Haddadin, S. Dentronics: Towards robotics and artificial intelligence in dentistry. Dent. Mater. 2020, 36, 765–778.

16. Altalhi, A.M.; Alharbi, F.S.; Alhodaithy, M.A.; Almarshedy, B.S.; Al-Saaib, M.Y.; Al Jfshar, R.M.; Aljohani, A.S.; Alshareef, A.H.; Muhayya, M.; Al-Harbi, N.H. The impact of artificial intelligence on dental implantology: A narrative review. Cureus 2023, 15, e47941.

17. Eraslan, R.; Ayata, M.; Yagci, F.; Albayrak, H. Exploring the potential of artificial intelligence chatbots in prosthodontics education. BMC Med. Educ. 2025, 25, 321.

18. Sadowsky, S.J. Can ChatGPT be trusted as a resource for a scholarly article on treatment planning implant-supported prostheses? J. Prosthet. Dent. 2025, 134, 438–443.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).