1. Introduction

The rapid pace of urbanization and the escalating number of automobiles have rendered urban traffic congestion a ubiquitous challenge. The contradiction between limited road resources and a dense traffic flow has intensified regional congestion. Such congestion results in frequent vehicle idling, which not only extends commuting times but also causes a surge in regional CO2 emissions and fuel consumption. This dual pressure not only degrades the air quality but also increases travel time costs and energy expenditure.

Scholars have long studied urban congestion. Laval [1] analogized traffic flow to a gas and put forward the phase transition theory of traffic flow, which elucidates the complex dynamic characteristics of urban traffic and defines the boundaries of congestion. Relevant research has revealed the relationships between congestion and traffic flow (such as those between the maximum network throughput, congestion levels, and traffic flow normalization methods) and has also highlighted the implications of congestion for sustainable urban development [2].

Research indicates that traffic congestion directly increases vehicular emissions on urban roads, thereby exacerbating air pollution. Vehicles that frequently stop and start in congested areas significantly increase CO2 emissions and particulate matter (PM2.5), further deteriorating the local air quality. Traffic congestion not only raises pollutant emissions but also indirectly affects public health. In 2020, excessive vehicle emissions caused by traffic congestion in China led to over 25,000 deaths [3]. Moreover, the noise generated by congestion, including driver honking, adversely impacts the daily lives of students and local residents.

Beyond environmental impacts, congestion also imposes economic costs by increasing fuel expenses and lost productivity due to longer travel times [4]. According to Ahn et al. [5], fuel consumption increases with the jerk of vehicles. That is, for a given distance, smoother driving results in lower fuel consumption. However, traffic congestion frequently induces unhealthy driving behaviors, such as repeated acceleration and braking. Under identical traffic conditions, variations in driving style can lead to fuel consumption differences of up to 29% [6]. Mohammadnazar et al. [7] demonstrated that, within urban networks, normal driving improves fuel economy by approximately 23% compared with unhealthy driving behavior. Marzet et al. [8] revealed that traffic conditions exert a substantial influence on both driving behavior and vehicle fuel consumption. Experimental results indicate that, relative to congested roads, vehicles operating under favorable traffic conditions achieve up to a 36% improvement in fuel efficiency. Therefore, developing a traffic control system aimed at mitigating congestion is of great significance for both environmental sustainability and economic efficiency.

Traffic light signal control represents an effective means of mitigating traffic congestion. By optimizing signal phase timing plans, researchers have enhanced the operational efficiency of individual intersections. Agand et al. [9] advanced environmental impact mitigation by pioneering a DRL-based traffic signal control framework that integrates a novel reward distribution mechanism, effectively reducing CO2 emissions across diverse vehicle types while simultaneously shortening travel times in urban networks.

In recent years, the coordinated control of multi-intersection signals in large-scale urban networks has emerged as a prominent research direction. The optimization framework for coordinated multi-intersection signal control centers on two primary objectives: maximizing the green wave bandwidth and optimizing traffic performance metrics [10,11]. Substantial recent research on traffic signal coordination has been devoted to Multi-Agent Reinforcement Learning (MARL) methodologies. Elise et al. [12] modeled the road network state space using a Deep Q-Network (DQN) and achieved significant reductions in travel time and queue length through dynamic policy optimization. Wei et al. [13] enhanced the DQN architecture by integrating Graph Convolutional Networks (GCNs) for topological feature extraction, introducing a dual-reward mechanism that jointly optimizes the vehicle waiting time and intersection throughput. Their approach reduced the average waiting time by 40–47% under high-density traffic conditions. Liang et al. [14] proposed a Double Dueling Deep Q-Network (3DQN) framework that employs Convolutional Neural Networks (CNNs) for grid-based traffic scenario representation, consistently reporting performance improvements, including more than a 20% reduction in the average vehicle delay across diverse traffic patterns.

While MARL has shown considerable potential in coordinated multi-intersection traffic signal control, it faces a fundamental challenge known as the curse of dimensionality. Existing mitigation strategies have converged into three primary technical approaches:

- (1) Decentralized Architecture: Chu et al. [15] proposed the Multi-Advantage Actor-Critic (MA2C) algorithm that enables independent agent learning through environmental dynamics modeling. Min et al. [16] achieved 10–25% improvements in traffic throughput metrics via a strategic state-reward system reconfiguration within the Advantage Actor-Critic (A2C) framework.

- (2) Hybrid Hierarchical Approach: Tan et al. [17] developed the Collaborative Deep Reinforcement Learning (Coder) framework, which decomposes complex tasks into single-agent subproblems and achieves large-scale traffic network optimization through the hierarchical aggregation of regional and global agents.

- (3) Collaborative Learning Innovation: Wang et al. [18] developed the Cooperative Double Q-Learning (Co-DQL) algorithm incorporating mean-field approximation, surpassing existing decentralized MARL algorithms across multiple traffic flow metrics [19].

The rapid advancement of Connected Autonomous Vehicle (CAV) technology has propelled intelligent transportation research into a new phase. Ghiasi et al. [20] proposed a hybrid traffic trajectory coordination algorithm leveraging CAV technology, which mitigates stop-and-go oscillations and reduces both fuel consumption and emissions. Arvin et al. [21] combined Adaptive Cruise Control (ACC) with Cooperative Adaptive Cruise Control (CACC) to enhance car-following models, thereby improving traffic efficiency and safety. Lin et al. [22] constructed an MARL-ES (Evolution Strategy) cooperative framework to optimize variable speed limits, significantly improving the throughput under high-density traffic flow conditions. Yu et al. [23] developed a Mixed Integer Linear Programming (MILP) optimization model that improves the traffic capacity while reducing delays, fuel consumption, and CO2 emissions. Guo et al. [24] proposed a Cooperative Traffic and Vehicle control (CoTV) framework, achieving the dual optimization of the travel time and energy consumption across diverse urban networks. Busch et al. [25] designed a DRL-based joint controller, significantly enhancing system efficiency by smoothing vehicle speed profiles.

Previous research has often employed grid networks composed of identical intersections. However, such grid networks fail to capture the complexity of real-world urban networks. Furthermore, an effective intelligent transportation system should not only improve the operational efficiency of the road networks but also encourage safe and sustainable driving behaviors. Accordingly, this research proposes an integrated vehicle–road cooperative control framework, termed the CTS, which leverages the Soft Actor-Critic (SAC) algorithm to achieve the multi-objective optimization of traffic efficiency, emissions, and energy consumption in large-scale urban networks. The main innovations of the CTS include the following:

- (1) Collaborative Control Architecture: A joint control model integrating traffic signals and vehicle speeds is constructed to overcome the limitations of single-dimension control, mitigate congestion, and reduce both carbon emissions and fuel consumption. Ablation studies confirm that joint control outperforms signal-only control in overall performance.

- (2) Action Space Adaptation: The SAC algorithm is enhanced by employing a Beta distribution to address the high estimation bias of Gaussian policies in continuous and bounded action spaces within traffic scenarios.

- (3) Algorithm Generalization Verification: The approach demonstrates strong scalability by reducing average delays compared with traditional DRL methods across scenarios ranging from single intersections to large-scale networks.

2. Traffic Signal Controller System

This research proposes a cooperative control method integrating traffic signals and vehicle speeds. As a core system component, the Traffic Signal Controller (TSC) dynamically adjusts the phase sequence and duration of traffic lights to optimize vehicle flow efficiency in urban networks. This section systematically details the theoretical foundation, the physical model, and the adapted control algorithm underlying the proposed method.

2.1. Traffic Signal Model

Based on the theory of Markov Decision Processes (specifically, the Markov property), this research establishes a state transition model for the traffic system. In the TSC, the traffic signal at each intersection is treated as an agent. The phase of the traffic lights is influenced solely by the previous phase, and the transient changes in vehicle speeds are constrained by the current motion state. Both the traffic signal phase switching and vehicle acceleration/deceleration behaviors can therefore be modeled as Markov processes as follows:

$$P(S_{t+1} \mid S_t) = P(S_{t+1} \mid S_1, \ldots, S_t)$$

where $P$ is the state transition probability function, $P(\cdot \mid \cdot)$ represents the conditional probability, and $S_t$ denotes the state of the system at time $t$.

In traffic systems, the state space of the TSC primarily comprises two components: the current traffic signal phases and the traffic flow state information within the network. According to Laval’s research [1], the traffic congestion and vehicle density in the road network conform to a power-law distribution. Therefore, this research incorporates the vehicle density, queue length, and waiting time of stationary vehicles into the state space.

Table 1 summarizes the state space of the TSC; its dimensions depend on the number of signal phases at the intersection and the number of incoming lanes connected to the traffic signals in the network.

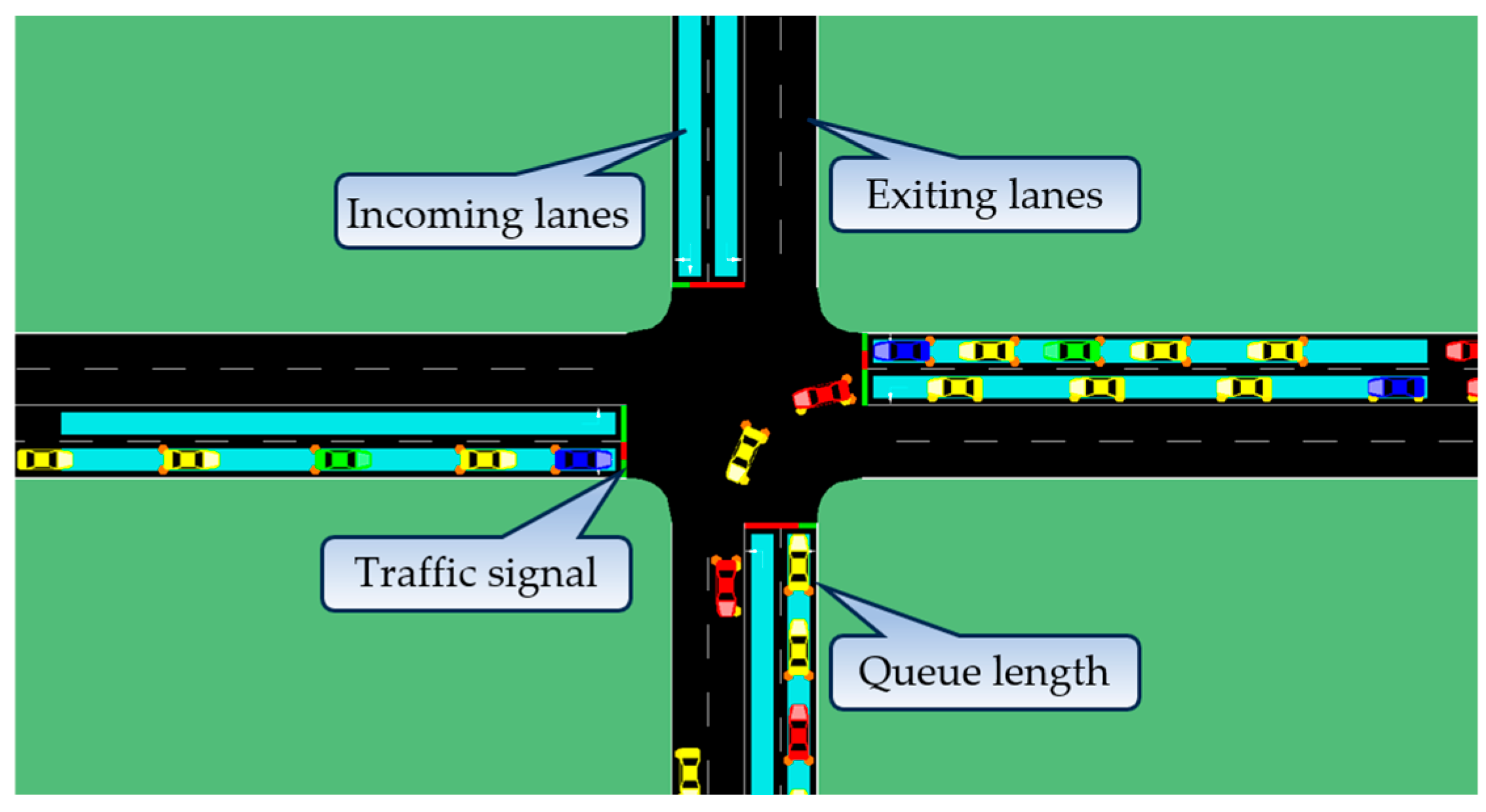

Figure 1 further illustrates the state space of the TSC. In Figure 1, the blue segments indicate incoming lanes.

The Markov Reward Process (MRP) extends the Markov process by adding a reward function $r$ and a discount factor $\gamma$, and is typically represented by the quadruple $(S, P, r, \gamma)$; together, these determine how the agent evaluates and optimizes its policy to achieve its goal. The return $G_t$ represents the total discounted reward the agent receives starting from time $t$, and the formula is

$$G_t = R_t + \gamma R_{t+1} + \gamma^2 R_{t+2} + \cdots = \sum_{k=0}^{\infty} \gamma^k R_{t+k}$$

The state value function evaluates the expected return that an agent can obtain when acting according to the current policy from a given state. According to the Bellman equation, the state value function is expressed as

$$V(s) = \mathbb{E}[G_t \mid S_t = s] = r(s) + \gamma \sum_{s' \in S} P(s' \mid s)\, V(s')$$

where $s$ denotes the current state at time $t$, and $s'$ represents a possible state at the next time step. The value function consists of two parts: the expected immediate reward $r(s)$ and the expected value of the next state, which is the discounted sum of future expected rewards.
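The return and the Bellman recursion for a Markov Reward Process can be checked numerically on a toy chain. The following is a minimal sketch in plain Python; the two-state chain, its transition matrix, and its reward values are invented for illustration and are not taken from the paper's experiments.

```python
def discounted_return(rewards, gamma):
    """Discounted sum of a finite reward sequence: sum_k gamma**k * rewards[k]."""
    g = 0.0
    for reward in reversed(rewards):
        g = reward + gamma * g
    return g


def evaluate_mrp(P, r, gamma, sweeps=500):
    """Iterate V(s) = r(s) + gamma * sum_s' P[s][s'] * V(s') until it settles."""
    n = len(r)
    V = [0.0] * n
    for _ in range(sweeps):
        V = [r[s] + gamma * sum(P[s][t] * V[t] for t in range(n)) for s in range(n)]
    return V


# Hypothetical chain: state 0 = free flow, state 1 = congested (reward -1 per step).
P = [[0.9, 0.1],
     [0.5, 0.5]]
r = [0.0, -1.0]
V = evaluate_mrp(P, r, gamma=0.9)
print(V)
```

In this toy chain the congested state receives the lower value, which is the behavior a delay-based reward is meant to induce.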

The Markov Decision Process (MDP) extends the Markov Reward Process by introducing an action $a$, forming the tuple $(S, A, P, r, \gamma)$. In traffic systems, changing the signal phase or adjusting the vehicle speed is regarded as taking an action $a$. Here, both the state transition function $P$ and the reward function $r$ depend on the specific action $a$. The state transition function $P$ in a Markov Decision Process is

$$P(s' \mid s, a) = P(S_{t+1} = s' \mid S_t = s, A_t = a)$$

where $S_t$ and $A_t$ are random variables representing possible states and actions, respectively. The reward function $r$ is

$$r(s, a) = \mathbb{E}[R_t \mid S_t = s, A_t = a]$$

The action space of the TSC is discrete and bounded. Specifically, within the TSC, the phase sequence and duration of traffic signals constitute the actions of the agents. This research employs a binary-encoded action space for the TSC, where each discrete element corresponds to a specific signal phase. In this binary coding scheme, “0” represents a red light and “1” represents a green light, with a mandatory 2 s yellow light phase inserted between red–green transitions. The TSC executes control decisions at fixed 5 s intervals, establishing a minimum allowable duration of 5 s for both red and green signal phases.
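The timing rules in this paragraph can be illustrated with a short sketch that expands a sequence of binary decisions for one signal into a per-second timeline. The function name and the choice to insert the yellow seconds before the new phase (so that every red or green phase still lasts at least 5 s) are assumptions; the source does not state where the yellow interval is placed.

```python
YELLOW_S = 2    # yellow duration stated in the text
DECISION_S = 5  # decision interval and minimum red/green duration stated in the text


def expand_decisions(decisions):
    """Expand per-interval binary actions (0 = red, 1 = green) for one signal
    into a per-second string made of 'r', 'y', and 'g'."""
    timeline = []
    previous = None
    for action in decisions:
        if action not in (0, 1):
            raise ValueError("actions must be 0 (red) or 1 (green)")
        if previous is not None and action != previous:
            # Assumption: yellow is inserted before the new phase starts,
            # so the new phase still lasts the full decision interval.
            timeline.append("y" * YELLOW_S)
        timeline.append(("g" if action == 1 else "r") * DECISION_S)
        previous = action
    return "".join(timeline)


print(expand_decisions([1, 1, 0, 1]))
```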

For dense urban networks, MARL is more appropriate and is generally modeled using a Markov Game. In this research, the Markov Game is represented by a six-tuple $(N, S, A, P, r, \gamma)$, where $N = \{1, \ldots, n\}$ denotes the set of $n$ agents in the system, and $r_t^i$ represents the reward obtained by agent $i$ at time $t$. The discounted return is

$$G_t^i = \sum_{k=0}^{\infty} \gamma^k r_{t+k}^i$$

The reward of the TSC primarily concerns traffic flow information in the network, including the queue length [26], waiting time [27], and traffic pressure [28]. The reward function mainly depends on the optimization objectives of the experiment. Numerous factors influence traffic congestion, including the maximum road throughput and traffic flow density. These factors are often not directly accessible; therefore, this research selects the travel time of vehicles, which is closely related to congestion, as the primary optimization objective for the TSC. The reward function for a single intersection is defined over the set of incoming lanes at that intersection and the set of all vehicles currently in each lane, using the travel time of each vehicle in its lane.
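A travel-time reward of this kind can be sketched as follows. The exact functional form is not recoverable from the text here, so the negative sum used below, the function name, and the lane identifiers are assumptions chosen only to show how per-lane, per-vehicle travel times would be aggregated for one intersection.

```python
def tsc_reward(travel_times_by_lane):
    """Hypothetical single-intersection reward: the negative total travel time
    of all vehicles currently in the incoming lanes.

    travel_times_by_lane maps a lane id to the list of travel times (seconds)
    of the vehicles currently in that lane.
    """
    return -sum(sum(times) for times in travel_times_by_lane.values())


# Illustrative lane ids and travel times, not data from the paper.
incoming = {"north_in": [12.0, 30.5], "east_in": [], "south_in": [7.5]}
print(tsc_reward(incoming))
```

With this sign convention, shorter travel times yield a larger (less negative) reward, so maximizing the reward minimizes travel time.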

To directly guide agent decision-making and policy optimization, the state-action value function $Q^{\pi}(s, a)$ serves as the core evaluation metric in Reinforcement Learning (RL), quantifying the expected cumulative future reward from taking action $a$ in state $s$ at time $t$ under policy $\pi$:

$$Q^{\pi}(s, a) = \mathbb{E}_{\pi}[G_t \mid S_t = s, A_t = a]$$

The goal of RL is to discover the optimal policy at each state in the environment to maximize the expected reward, while each single agent optimizes the objective function J through its individual policy network.

2.2. Traffic Signal Controller Design

The objective of the TSC is to enhance the overall traffic efficiency within urban networks and achieve a global optimum. Achieving global optimality and multi-agent collaboration entails addressing several challenges, such as competitive and cooperative interactions among agents and the curse of dimensionality [29,30]. Currently, mainstream MARL approaches share the state space among neighboring agents, which strengthens interactions and no longer treats traffic signals in isolation; this facilitates global optimization and better reflects real-world conditions. However, sharing the state space inevitably increases communication costs and computational burdens, which tends to expose the system to the curse of dimensionality. To address these issues, this research designs a dynamic reward mechanism that integrates a global reward signal with individual contribution assessments, maintaining agent autonomy while enabling system-level cooperation. The cooperative reward function combines each agent's individual reward with the global reward.

The weighting factor α balances the individual reward and the global reward. As α approaches 0, agents prioritize local traffic optimization, potentially exacerbating network-wide congestion. Higher α values promote cooperative behavior, benefiting system-level efficiency.
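The role of α can be made concrete with a small sketch. A convex combination is assumed here because it reproduces the described limits (α near 0 favors the local reward, larger α favors the global one); the paper's actual formula may differ, and the function name is hypothetical.

```python
def cooperative_reward(local_reward, global_reward, alpha):
    """Blend an agent's own reward with the network-wide reward.

    Assumption: a convex combination with alpha in [0, 1].
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * local_reward + alpha * global_reward


# Illustrative values: one intersection is doing well locally (-10)
# while the network as a whole is congested (-100).
for alpha in (0.0, 0.25, 1.0):
    print(alpha, cooperative_reward(-10.0, -100.0, alpha))
```

Because each agent only needs a single scalar global reward rather than its neighbors' full states, this kind of coupling keeps the per-agent input size fixed as the network grows.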

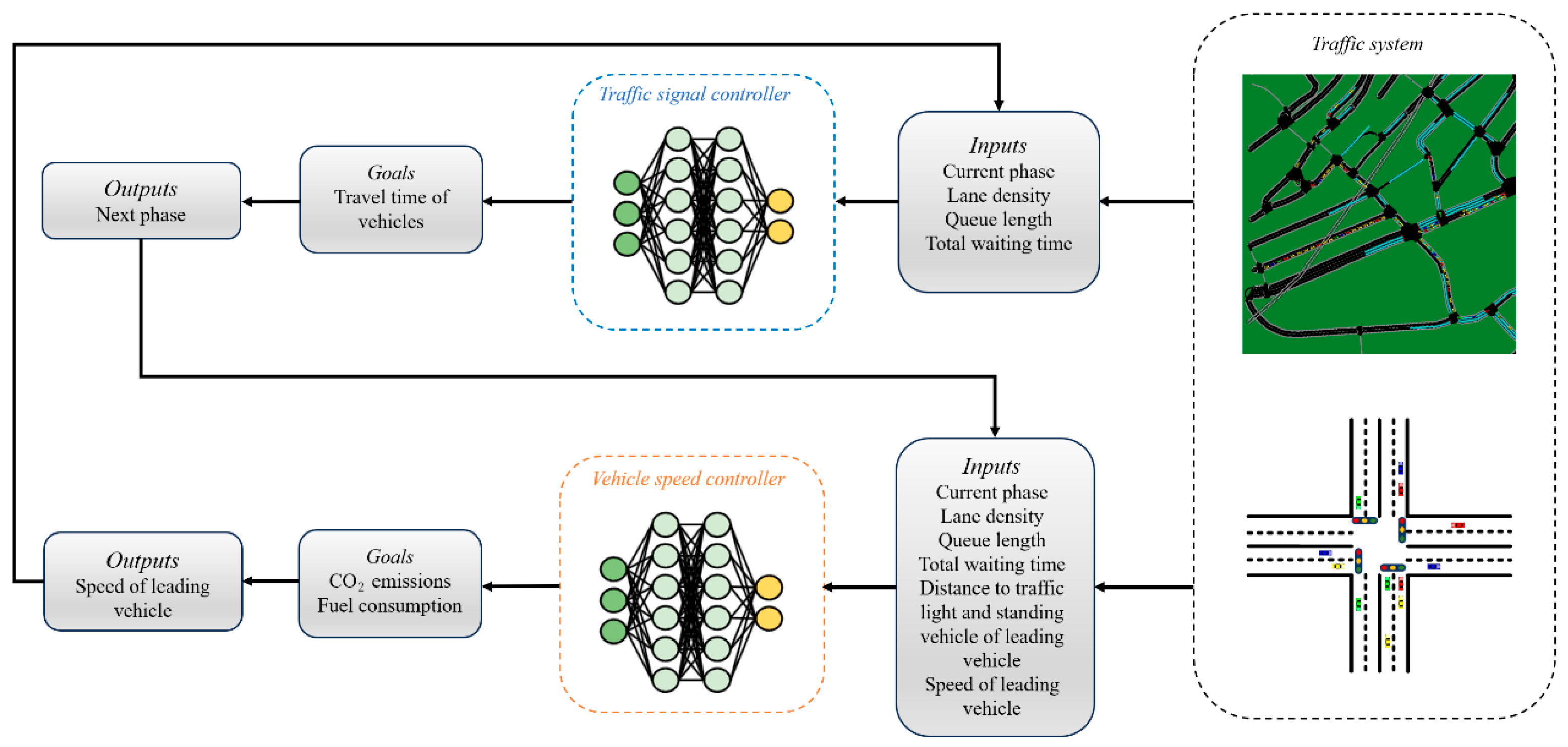

Each intersection (agent) shares a unified global reward function aimed at minimizing the total vehicle travel time, while retaining decentralized decision-making based on real-time local conditions. Compared with state-sharing approaches, our cooperative reward mechanism achieves global optimization with reduced communication costs and computational overhead. The system architecture is illustrated in Figure 2.

2.3. SAC for Traffic Signal Controller

For general DRL algorithms, the learning objective is straightforward: to learn a policy π that maximizes the expected cumulative reward:

$$\pi^{*} = \arg\max_{\pi} \mathbb{E}_{\pi}\left[\sum_{t} r(s_t, a_t)\right]$$

The primary objective of applying deep reinforcement learning (DRL) in intelligent transportation systems is to optimize spatiotemporal resource allocation through reward functions. Nevertheless, the effectiveness of the reward design is critical to algorithmic performance. For example, an excessive emphasis on traffic efficiency may inadvertently increase energy consumption, whereas a narrow focus on environmental metrics could undermine the overall network capacity.

The SAC algorithm is an off-policy algorithm developed for Maximum Entropy Reinforcement Learning [31]. Compared with DDPG, which is also based on the Actor-Critic architecture, the SAC algorithm enhances the exploration capability by maximizing the policy entropy (Equation (12)), which helps address the multi-objective optimization challenges in traffic control:

$$\pi^{*} = \arg\max_{\pi} \mathbb{E}_{\pi}\left[\sum_{t} r(s_t, a_t) + \alpha\, H(\pi(\cdot \mid s_t))\right]$$

where $\alpha$ is a hyperparameter that can be adjusted automatically to weight the policy entropy against the reward, and $H(\pi(\cdot \mid s_t))$ represents the entropy of the agent’s action distribution in state $s_t$. Entropy quantifies the uncertainty of the outcomes of a random variable. In a traffic environment, the larger the entropy of a signal phase, the more random and less predictable the traffic condition under that phase.
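To make the entropy term tangible, the sketch below computes the Shannon entropy of a discrete action distribution and adds it to a reward with a temperature weight. The function names, the example distributions, and the temperature value are illustrative assumptions; SAC itself works with the entropy of a continuous policy and learns the temperature during training.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_regularized_reward(reward, probs, temperature):
    """Reward plus the temperature-weighted entropy of the action distribution."""
    return reward + temperature * entropy(probs)


# A nearly deterministic choice among four signal phases versus a uniform one.
near_deterministic = [0.97, 0.01, 0.01, 0.01]
uniform = [0.25, 0.25, 0.25, 0.25]
print(entropy(near_deterministic), entropy(uniform))
print(entropy_regularized_reward(-5.0, uniform, temperature=0.2))
```

The uniform distribution has the higher entropy, so for equal rewards the regularized objective prefers the policy that keeps exploring.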

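The conventional objective of policy learning is to maximize the expected state value function, as shown in Equation (9). The difference is that SAC adds the expected entropy of the action, $H(\pi(\cdot \mid s_t))$, as a regularization term to the objective function:

$$J(\pi) = \sum_{t} \mathbb{E}_{(s_t, a_t) \sim \pi}\left[r(s_t, a_t) + \alpha\, H(\pi(\cdot \mid s_t))\right]$$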

In time-varying traffic scenarios, there often exists a considerable interval between peak and off-peak periods. If the traffic signal control policy converges prematurely within either phase, it may fail to adapt to subsequent variations in traffic flow. However, by leveraging its entropy-driven exploration mechanism, the SAC enables the control system to actively sustain a dynamic balance within the policy space, thereby mitigating the adaptation degradation caused by the premature convergence.

To achieve continuous control, the SAC reparametrizes the policy network, typically using the Squashed Gaussian policy as the policy function:

$$a = \tanh\left(\mu_{\theta}(s) + \sigma_{\theta}(s) \odot \xi\right), \quad \xi \sim \mathcal{N}(0, I)$$

where, for an n-dimensional continuous action space, the outputs of the neural networks $\mu_{\theta}$ and $\sigma_{\theta}$ are both n-dimensional vectors; $\theta$ are the parameters of the neural networks. Each component of the n-dimensional vector $\xi$ follows a standard normal distribution, i.e., $\xi_i \sim \mathcal{N}(0, 1)$; $\odot$ denotes the Hadamard product, meaning element-wise multiplication. The SAC network architecture proposed in this paper for traffic systems is shown in Figure 3.
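The reparametrized sampling step of a Squashed Gaussian policy can be sketched without any deep learning library. In the sketch below, the mean and standard deviation vectors stand in for the outputs of the policy network; they are plain lists with made-up values, and the function name is hypothetical.

```python
import math
import random


def sample_squashed_gaussian(mu, sigma, rng):
    """Draw one action: tanh(mu + sigma * xi) element-wise, with xi ~ N(0, 1).

    mu and sigma are equal-length lists standing in for network outputs.
    """
    xi = [rng.gauss(0.0, 1.0) for _ in mu]
    return [math.tanh(m + s * x) for m, s, x in zip(mu, sigma, xi)]


rng = random.Random(0)
action = sample_squashed_gaussian(mu=[0.0, 2.5], sigma=[1.0, 0.1], rng=rng)
print(action)
```

Because the noise is drawn independently of the network outputs, the sampled action is a differentiable function of the mean and standard deviation, which is what allows gradients to flow through the sampling step.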

3. Cooperative Traffic Controller System

This section first introduces the Vehicle Speed Controller (VSC) in the Cooperative Traffic Controller System (CTS), then explains the interaction between the TSC and the VSC, and finally presents the CTS proposed in this research.

3.1. Vehicle Speed Controller

The VSC is formulated as an MDP within the traffic environment and employs DRL methods for policy optimization. Studies have shown that the average vehicle speed is a crucial indicator for measuring congestion and traffic flow, and both the average vehicle speed and the factors it reflects (congestion and traffic flow) follow a power-law distribution [2]. By constraining vehicle speeds within a reasonable range, the VSC effectively reduces frequent acceleration and deceleration events, thereby achieving the objectives of energy conservation and emission reduction [32]. Following the setting in [25], this research considers the leading vehicle in each lane as the control target. The detailed design of the state space, action space, and reward function for the VSC is presented as follows:

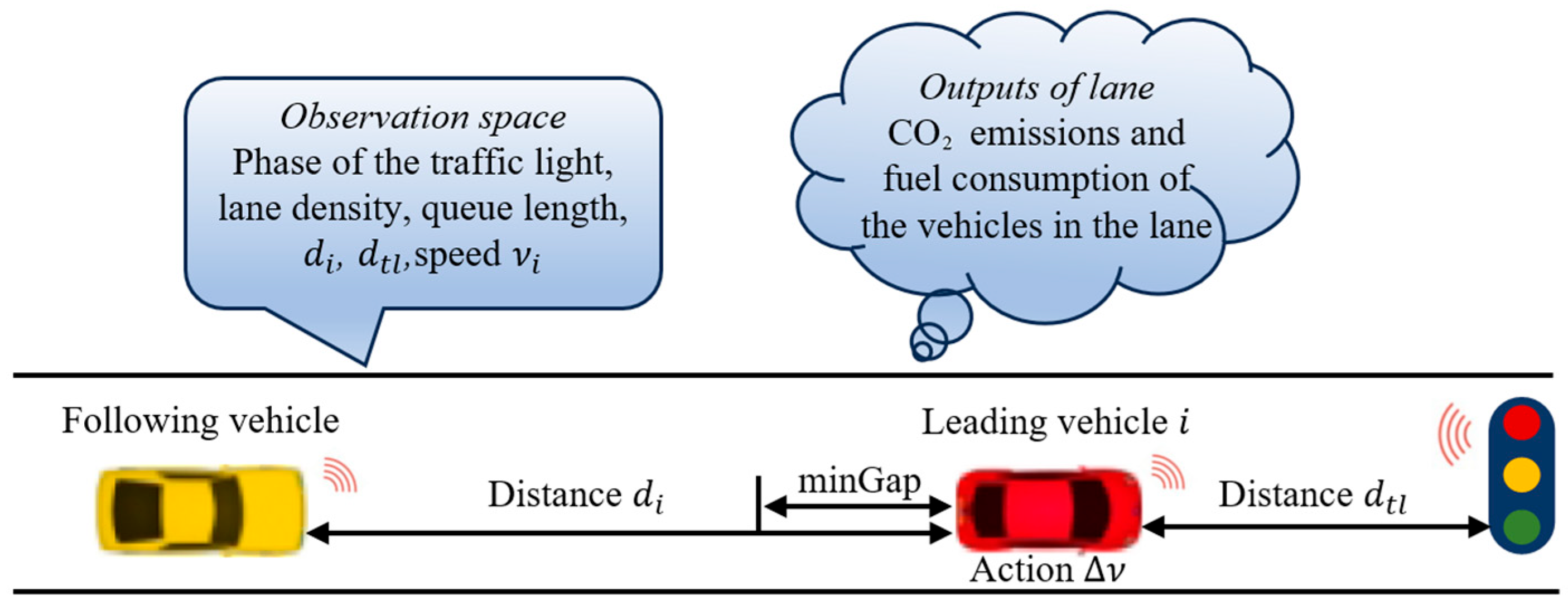

The state space of the VSC includes not only traffic signal phases and vehicle information but also focuses on the leader vehicle in the lanes. The state space of the VSC is shown in Table 2. In Table 2, the lane density, queue length, and total waiting time are used to evaluate the congestion level, while the information of the leader vehicle serves as the input for the VSC.

Similarly to the TSC, the VSC takes an action every 5 s of simulation time to change the speed of the leader vehicle. This action constrains the speed of the leader vehicle to a continuous range, thereby controlling the speed of all following vehicles. From a safety perspective, this research limits the speed to a bounded range determined by three quantities: the current leader vehicle’s speed, the maximum speed limit for the current lane, and a relative margin that is set to 10% in the experiment.
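One way to picture this bounded speed action is a clipping step applied to whatever speed the policy requests. The exact interval is not recoverable from the text, so the sketch assumes a symmetric band around the current speed whose half-width is 10% of the lane speed limit, further clipped to the range from zero to the speed limit; the function and parameter names are hypothetical.

```python
def bounded_target_speed(requested, current, v_max, fraction=0.10):
    """Clip a requested leader speed to an assumed safety band.

    Assumption: the band is [current - delta, current + delta] with
    delta = fraction * v_max, intersected with [0, v_max].
    All speeds are in m/s.
    """
    delta = fraction * v_max
    low = max(0.0, current - delta)
    high = min(v_max, current + delta)
    return min(max(requested, low), high)


# The policy asks for a large jump; the band limits the change per decision step.
print(bounded_target_speed(requested=20.0, current=5.0, v_max=10.0))
```

Limiting the change per 5 s decision step is what prevents the abrupt accelerations and decelerations that the VSC is designed to avoid.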

Since vehicle CO2 emissions and fuel consumption are strongly influenced by the speed and travel time [8], the primary objective of the VSC in this study is to minimize the CO2 emissions and fuel consumption. For safety, the risk of vehicle collisions during travel is evaluated by imposing a minimum safety gap (minGap), as illustrated in Figure 4. If the distance between two consecutive vehicles falls below this threshold, a substantial penalty is applied in the reward function. Furthermore, vehicles in the lane are encouraged to maintain a steady and relatively high speed, which not only contributes to energy conservation and emission reductions but also helps sustain a high level of traffic efficiency. Therefore, the reward function for the VSC (Equation (15)) combines three terms evaluated over V, the set of vehicles in the lane: the CO2 emissions and fuel consumption of each vehicle in the lane, the distance between two adjacent vehicles, and the current speed of each vehicle.

The first term in Equation (15) maximizes the environmental and economic benefits of the vehicle. The second term measures the safety of the vehicle during travel: the smaller the distance between two adjacent vehicles, the greater the penalty. In this experiment, the minGap is set to 2.5 m. The third term encourages vehicles to travel at a higher speed, reducing the travel time.
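The structure of such a three-part reward can be sketched as below. Only the 2.5 m minGap comes from the text; the weights, the penalty scale, the linear form of the gap penalty, and the dictionary field names are all assumptions made for illustration, not the paper's Equation (15).

```python
MIN_GAP_M = 2.5  # minimum safety gap stated in the text


def vsc_reward(vehicles, w_env=1.0, w_safe=1.0, w_speed=1.0, gap_penalty=100.0):
    """Hypothetical VSC reward built from three terms over the vehicles in a lane.

    Each vehicle is a dict with keys 'co2', 'fuel', 'speed', and 'gap'
    ('gap' is the distance to the vehicle ahead, or None for the leader).
    """
    # Environmental and economic term: penalize emissions and fuel use.
    env = -sum(v["co2"] + v["fuel"] for v in vehicles)
    # Safety term: the further a gap falls below minGap, the larger the penalty.
    safety = -sum(
        gap_penalty * (MIN_GAP_M - v["gap"])
        for v in vehicles
        if v["gap"] is not None and v["gap"] < MIN_GAP_M
    )
    # Efficiency term: reward higher speeds.
    speed = sum(v["speed"] for v in vehicles)
    return w_env * env + w_safe * safety + w_speed * speed


lane = [
    {"co2": 2.0, "fuel": 1.0, "speed": 10.0, "gap": None},  # leader
    {"co2": 3.0, "fuel": 1.0, "speed": 8.0, "gap": 2.0},    # follower, too close
]
print(vsc_reward(lane))
```

In practice the three terms have different units and magnitudes, so the weights would need tuning or normalization before the terms can be traded off meaningfully.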

The flowchart of the VSC framework is presented in Figure S1 of the Supporting Information. The process begins by traversing all vehicles in the current lane and selecting the leading vehicle as the control target. Based on the current state space, the controller then determines the appropriate action and immediately verifies its feasibility. This verification step is designed to prevent abrupt changes in the vehicle speed within a short time horizon, which could otherwise increase the risk of traffic accidents.

3.2. SAC with Beta Policy for CTS Design

Figure 5 illustrates the structure of the CTS, which integrates both the TSC and VSC. Within this framework, the traffic light phase serves as the output of the Traffic Signal Controller and simultaneously as the input to the VSC. The output of the VSC, in turn, determines the vehicle queue length and traffic density on the road, thereby influencing the subsequent input to the TSC. Through this mutual interaction, the two controllers form a closed-loop control system.

The standard SAC algorithm uses the Gaussian policy as the policy function, a policy based on the Gaussian distribution for handling continuous action spaces, as shown in Equation (16). The probability density function of the Gaussian distribution is

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x - \mu)^2}{2\sigma^2}\right)$$

where $\mu$ and $\sigma$ are the mean and standard deviation of the Gaussian distribution, respectively. Since the Gaussian distribution is unbounded, the Gaussian policy is better suited to environments with unbounded action spaces. However, the action space of the traffic light is a binary set, so using the Gaussian policy directly to output the action inevitably introduces a bias.

To address the aforementioned problem, one possible solution is to adopt the Squashed Gaussian policy as the policy function, as defined in Equation (14). This approach integrates a squashing function with the Gaussian policy to produce continuous yet bounded actions. Nevertheless, when the optimal action approaches an extreme value, the gradient of the tanh function becomes nearly zero. This can readily result in vanishing gradients, a reduction in policy entropy, and constrained exploration. In high-dimensional settings, where each action dimension undergoes nonlinear compression, the issue of gradient saturation is further exacerbated.
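The saturation effect is easy to verify numerically, since the derivative of tanh(u) is 1 - tanh(u)^2. The short sketch below prints the squashed action and its gradient for a few pre-activation values; the chosen values are arbitrary.

```python
import math


def tanh_grad(u):
    """Derivative of tanh at u, i.e. 1 - tanh(u)**2."""
    return 1.0 - math.tanh(u) ** 2


# As the squashed action approaches the boundary (+1), the gradient collapses.
for u in (0.0, 1.0, 2.0, 3.0, 5.0):
    print(f"u={u:4.1f}  action={math.tanh(u):.6f}  gradient={tanh_grad(u):.6f}")
```

Any learning signal passed back through the squashing function is multiplied by this gradient, so a policy whose optimum sits near the action bound receives very small updates.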

Another approach is to introduce the Beta policy, based on the Beta distribution, as the policy function. The Beta distribution is represented as follows:

$$f(x; \alpha, \beta) = \frac{\Gamma(\alpha + \beta)}{\Gamma(\alpha)\,\Gamma(\beta)}\, x^{\alpha - 1} (1 - x)^{\beta - 1}, \quad x \in [0, 1]$$

where $\alpha$ and $\beta$ are the shape parameters of the Beta distribution, and $\Gamma(\cdot)$ is the Gamma function, which extends the factorial to real numbers. The Beta policy, as a policy function, can map actions from $[0, 1]$ to other bounded intervals through a linear transformation. Thus, Equation (14) is modified so that the action is sampled from the Beta distribution, with $\alpha$ and $\beta$ generated by the policy network from its parameters. The Beta distribution is bounded, and for data within a finite range, it usually provides a more accurate fit. Moreover, the bounded nature and inherent skewness of the Beta distribution facilitate convergence in high-dimensional action tasks. In traffic environments, the vehicle speed constitutes a continuous and bounded action space. As vehicles approach intersections, the policy often needs to select actions near zero or the maximum speed. The Beta distribution naturally accommodates such skewed action distributions, enabling a more precise representation of boundary actions. Even when boundary actions are taken, it preserves relatively high entropy and balanced exploration, thereby accelerating the policy convergence and improving the final performance. Therefore, the Beta policy is more suitable as the policy function for the SAC algorithm in this research.
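Sampling from a Beta policy and mapping the result to a speed range can be sketched with the standard library alone. Here the shape parameters are fixed numbers standing in for policy-network outputs, and the speed bounds are illustrative; the function names are hypothetical.

```python
import random


def sample_beta_action(alpha, beta, low, high, rng):
    """Draw x ~ Beta(alpha, beta) on [0, 1] and map it linearly to [low, high]."""
    x = rng.betavariate(alpha, beta)
    return low + (high - low) * x


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution on [0, 1]."""
    return alpha / (alpha + beta)


rng = random.Random(1)
# A distribution skewed toward the upper bound, e.g. a leader on a clear road.
speeds = [sample_beta_action(5.0, 1.5, 0.0, 13.9, rng) for _ in range(5)]
print(speeds)
print(beta_mean(5.0, 1.5) * 13.9)  # expected speed under this policy
```

Every sample lands inside the interval by construction, so no squashing function is needed and probability mass can concentrate close to either bound.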

5. Experimental Results

This section presents the three main experimental results, highlighting the performance of the proposed CTS. First, an ablation study validates the effectiveness of the CTS against the signal-only TSC. Second, further comparisons confirm the superior performance of the SAC over alternative MARL approaches in the given context. Finally, experiments analyze the performance differences between the Gaussian and Beta policies, as well as the specific effectiveness of the CTS across different intersections in large-scale networks. In the experiments, both the CO2 emission and fuel consumption models for vehicles adopt the default emissions model of SUMO.

5.1. Validity of Cooperative Traffic Controller System

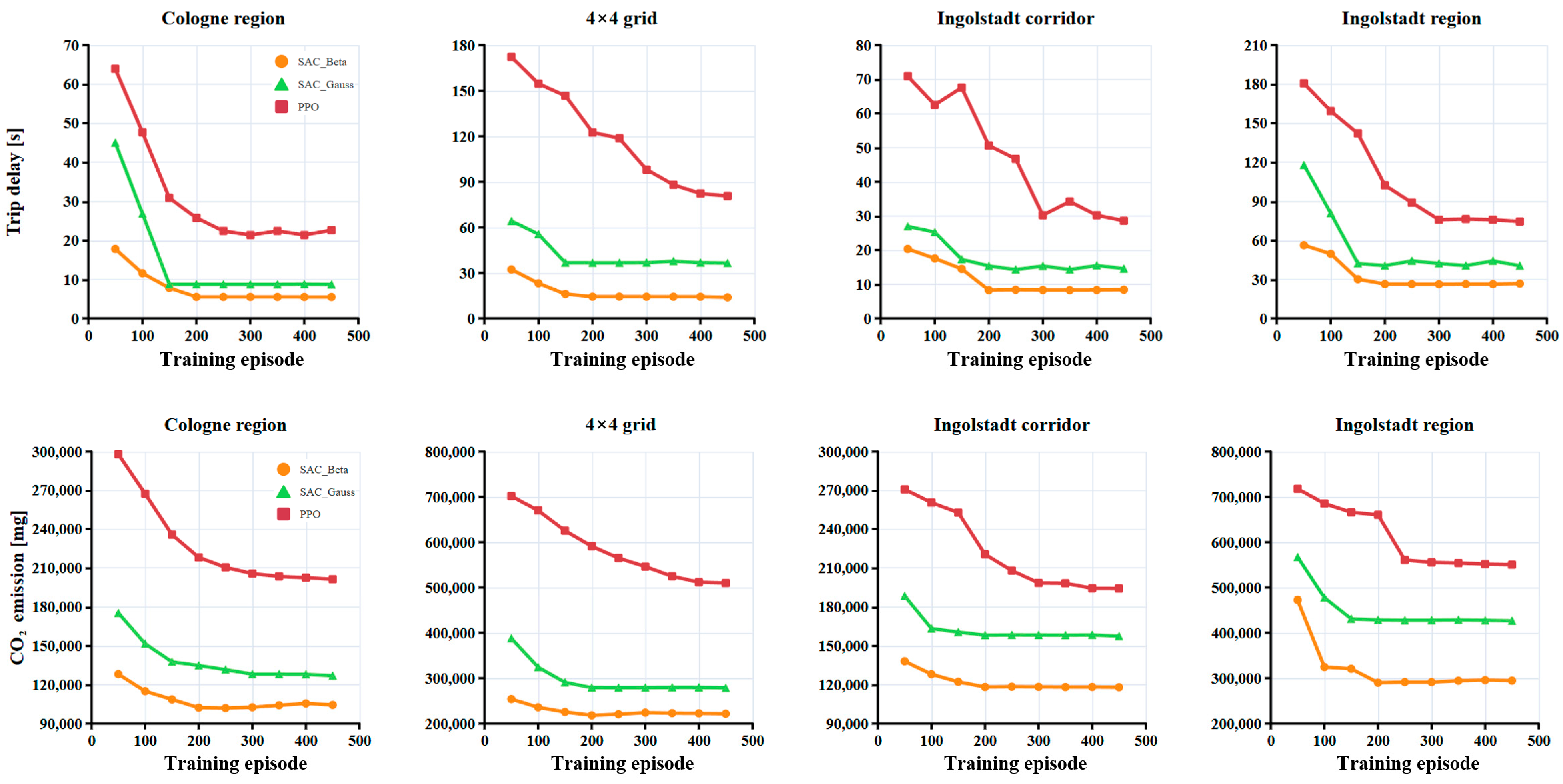

Figure 7 compares traffic conditions under the CTS and TSC. (1) Trip Delay (Figure 7a,b): The CTS consistently achieves a shorter trip delay than the TSC in both the Cologne region and 4 × 4 grid scenarios, regardless of the algorithm (SAC or PPO). While the difference is marginal in the smaller Cologne network (fewer intersections), the CTS demonstrates a significantly better performance in the larger 4 × 4 grid (more intersections). (2) Average CO2 Emissions (Figure 7c,d): The CTS outperforms the TSC in both the Ingolstadt corridor and region. The performance gap is more pronounced in the larger Ingolstadt region (21 intersections) than in the corridor (7 intersections). Under PPO, emissions from the CTS and TSC initially converge but diverge sharply after approximately 200 training episodes. In the regional network, this divergence eventually stabilizes at a gap of about 50,000 mg, which is considerably larger than the gap observed in the corridor. This pronounced disparity arises in part from the CTS’s explicit inclusion of CO2 emissions in its reward function, as well as the larger scale and greater complexity of the regional network.

Figure 7 illustrates the consistently superior performance of the CTS over the TSC across most scenarios, with the extent of the improvement contingent on the scale and complexity of the networks.

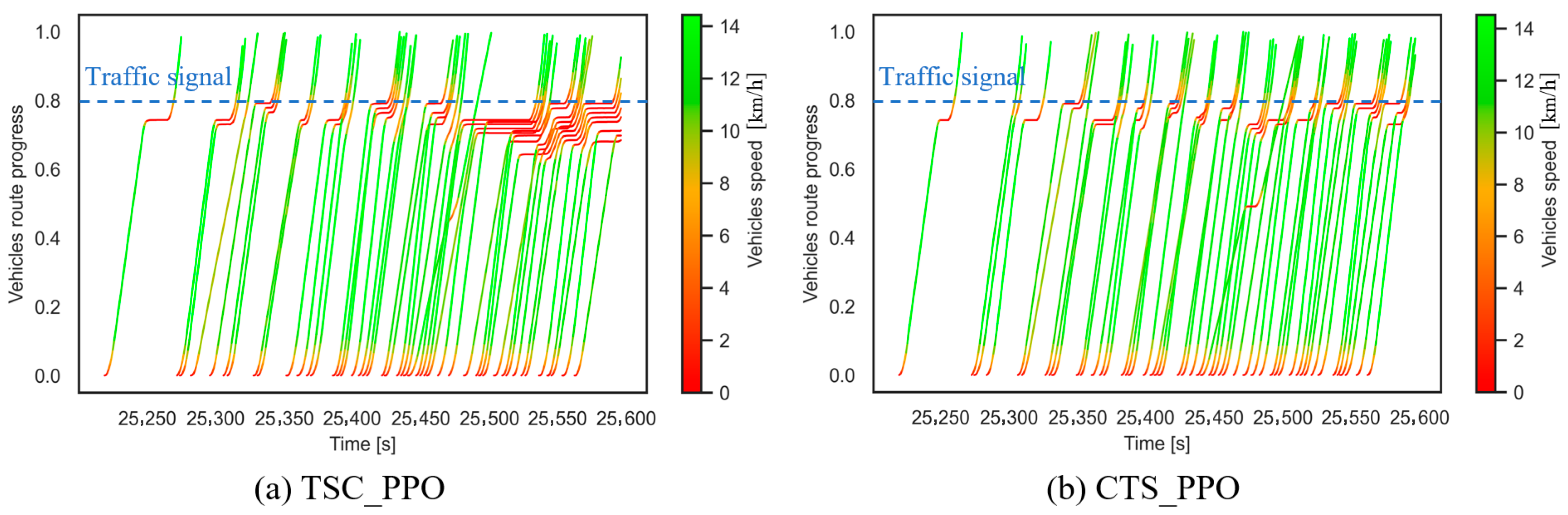

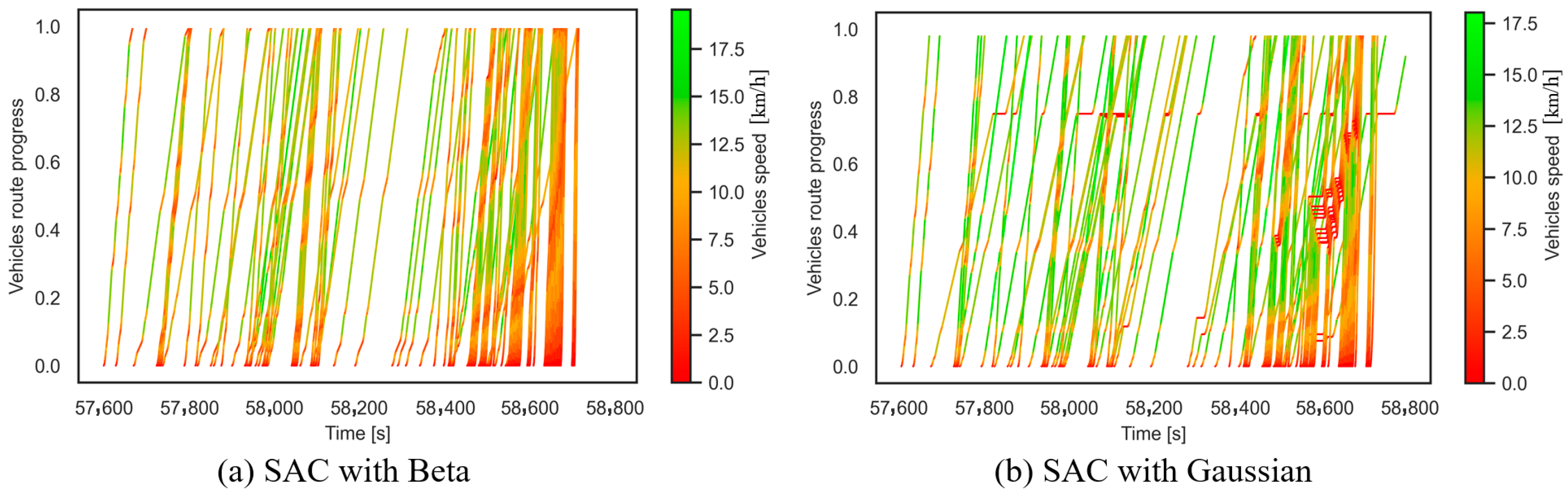

Figure 8a,b depict the speed profiles of traffic flows in the TSC and CTS, respectively, traversing the single intersection shown in Figure 8a. The left vertical axis represents the normalized vehicle progress through the intersection, where zero indicates an entry into the speed-controlled section, and one denotes the passage through the signalized intersection into another lane. Vehicles within the [0, 1] interval are under speed control, with 1 marking the endpoint to account for potential intersection congestion. Each curve represents an individual vehicle’s speed trajectory, color-coded by speed (green: faster, red: slower). The line slope indicates traffic efficiency: steeper slopes denote higher efficiency. A horizontal dark-red segment signifies vehicle congestion. While the slopes in Figure 8a,b are initially comparable, congestion levels diverge in the latter traffic flow phase. The CTS exhibits only minor congestion, with average vehicle waiting times remaining below 10 s. In contrast, the TSC leads to substantially more severe congestion, with waiting times approaching 50 s.

5.2. An Analysis of the Proposed Algorithm

Figure 9 compares the performance of the standard SAC, SAC with Beta policy, and PPO applied to the CTS across diverse scenarios, with each point representing the average value per 50 training cycles. As illustrated, SAC-Beta consistently outperforms the other methods. In smaller-scale networks (Cologne region and Ingolstadt corridor), it achieves vehicle delay times of approximately 10 s and CO2 emissions below 12,000 g.

Conversely, PPO performs less effectively, particularly in larger networks with higher traffic volumes. Its relatively weak exploration mechanism depends heavily on environmental randomness or external exploration strategies, thereby requiring more time to identify optimal actions as the network size increases. For example, in the largest network (Ingolstadt region), SAC-Beta sustains delays below 30 s, saving nearly a minute compared with PPO. Correspondingly, the CO2 emissions of PPO in Ingolstadt are nearly double those of SAC-Beta. A reduced delay directly translates into shorter waiting times and fewer stop–start events. Since frequent acceleration significantly increases CO2 emissions compared to steady driving, the vehicle delay serves as a key determinant of emissions.

Figure 9 demonstrates that the SAC outperforms PPO in the CTS, and the Beta policy is better suited than the Gaussian policy for traffic signals and vehicle speed control. In continuous-action tasks, such as vehicle speed control, SAC-Gauss requires the compression and mapping of the action space. If the optimal action lies near the boundary (e.g., the vehicle reaches its maximum speed), the exploratory capability of the Gaussian distribution may be affected.

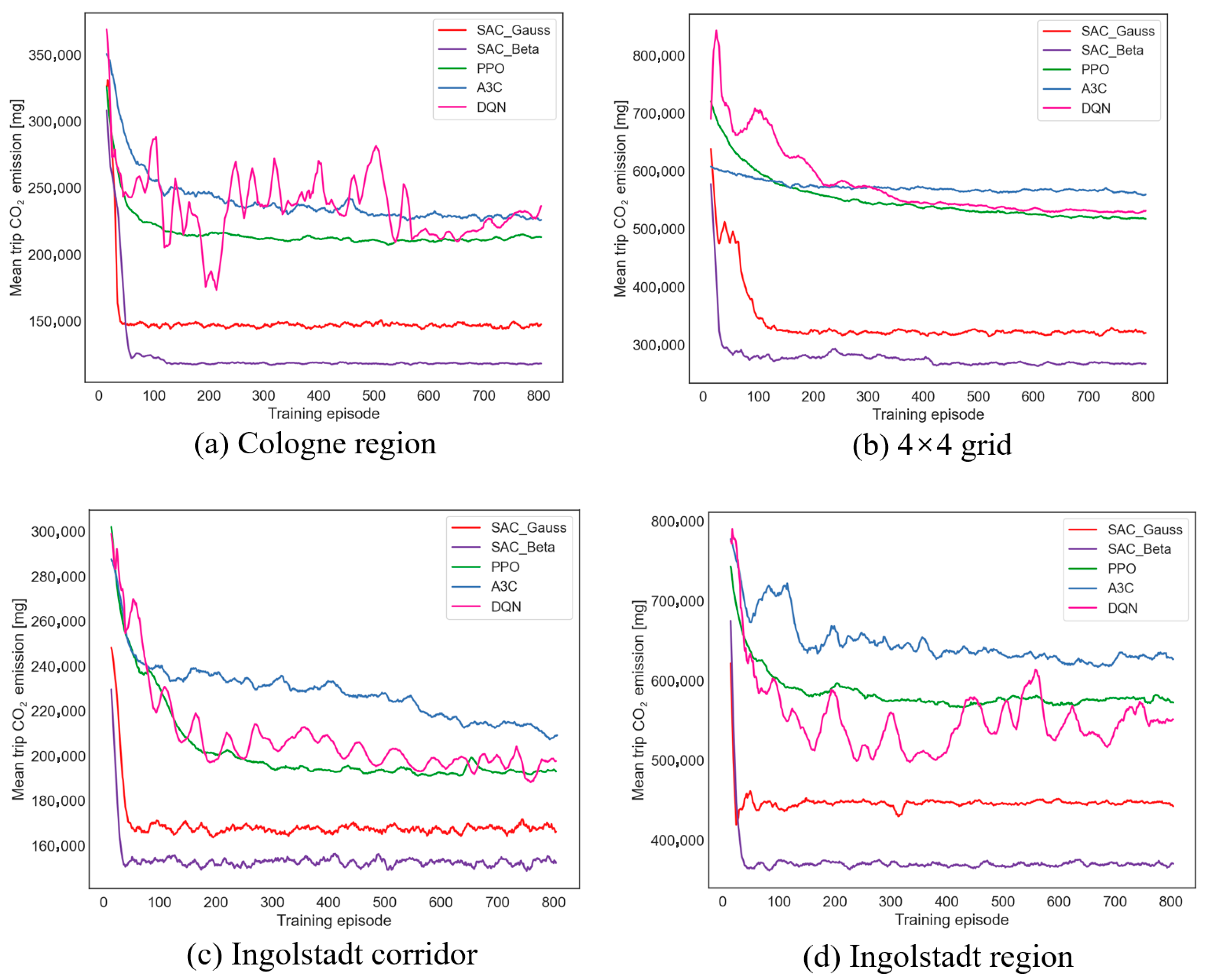

Figure 10 compares CO2 emissions across traffic scenarios for five MARL algorithms in the TSC. Both SAC algorithms substantially outperform the other methods. The SAC achieves the fastest convergence, around 50 training cycles. Owing to entropy regularization, the SAC preserves the training stability and converges more rapidly than traditional Q-learning. PPO ranks second, converging at approximately 200 cycles, consistent with the results in Figure 9. The SAC is an off-policy algorithm that can leverage a replay buffer to repeatedly train on historical interaction data. Learning based on historical traffic flow data is likely the main reason for the rapid convergence of the SAC. In contrast, PPO is an on-policy algorithm, and each policy update relies solely on new data collected under the current policy.
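The off-policy versus on-policy distinction comes down to whether old transitions can be reused. A minimal replay buffer sketch follows; the class and field names are hypothetical, and the paper does not describe its buffer implementation.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of past transitions; the oldest are dropped first."""

    def __init__(self, capacity, seed=None):
        self._data = deque(maxlen=capacity)
        self._rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self._data.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        """Draw a random mini-batch without replacement."""
        return self._rng.sample(list(self._data), batch_size)

    def __len__(self):
        return len(self._data)


buffer = ReplayBuffer(capacity=3, seed=0)
for step in range(5):
    # Placeholder transitions; real states would hold densities, queues, phases.
    buffer.add(state=step, action=0, reward=-1.0, next_state=step + 1, done=False)
batch = buffer.sample(2)
print(len(buffer), batch)
```

An off-policy learner can draw many mini-batches from the same stored transitions, whereas an on-policy learner discards its data after each policy update.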

After convergence, the SAC achieves substantially lower CO2 emissions than the other algorithms, which display only marginal differences among themselves. The performance gaps are most evident in the largest-scale network, the Ingolstadt region. The DQN fails to converge even after 800 training cycles. A3C yields the highest CO2 emissions, followed by PPO. The Beta policy converges the fastest and reduces CO2 emissions by at least 20% relative to the Gaussian policy.

Table 3 summarizes the fuel consumption under the CTS across various scenarios. SAC-Beta consistently exhibits the lowest fuel consumption in the CTS, outperforming SAC-Gaussian. At a single intersection, the performance gap between SAC-Beta and SAC-Gaussian is minimal, with differences below 10% under both systems. This gap widens significantly as the network scale and traffic flow increase, reaching approximately 56%.

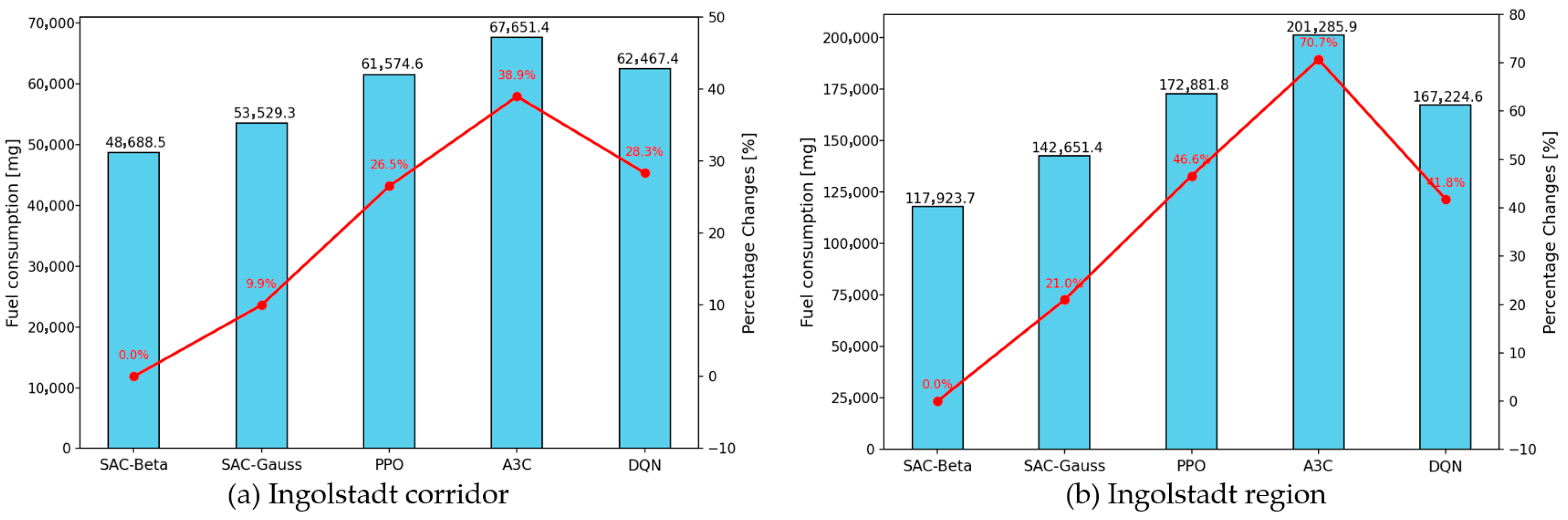

Figure 11 presents the fuel consumption results of the different algorithms after convergence under the TSC. The red polyline in Figure 11 depicts the percentage increase in fuel consumption of the other algorithms relative to SAC-Beta. In the Ingolstadt network, SAC-Beta achieves the best performance, followed by SAC-Gaussian. PPO ranks third, whereas A3C and DQN demonstrate the poorest fuel efficiency.
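The relative measure plotted by the red polyline can be computed as in the short sketch below. The function name `percent_increase_over_baseline` and the fuel values in the usage example are hypothetical placeholders, not results from this study; only the formula (percentage increase relative to SAC-Beta) follows the description above.

```python
def percent_increase_over_baseline(values, baseline_key):
    """Return the percentage increase of each entry relative to the baseline entry."""
    baseline = values[baseline_key]
    return {
        name: 100.0 * (value - baseline) / baseline
        for name, value in values.items()
        if name != baseline_key
    }


# Hypothetical fuel consumption values (arbitrary units), for illustration only.
example_fuel = {"SAC-Beta": 100.0, "SAC-Gaussian": 120.0, "PPO": 150.0}
print(percent_increase_over_baseline(example_fuel, "SAC-Beta"))
```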

The consistency with Figure 9 and Figure 10 confirms that the algorithm performance rankings for the trip delay, CO2 emissions, and fuel consumption are aligned. This alignment is expected, as the CO2 emissions and fuel consumption scale proportionally with the trip delay. The vehicle stop–start frequency and delay time constitute key determinants of both metrics. This correlation, which is also observed in real-world traffic, enhances the reliability of the experimental results. Furthermore, the advantage of SAC-Beta grows with increasing network complexity. This advantage arises from the Beta distribution being inherently bounded, with nonzero density at the boundaries. Particularly for continuous bounded action spaces that require precise control, such as vehicle speed, SAC-Beta does not require action mapping, unlike other algorithms.
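The contrast between a bounded Beta policy and a squashed Gaussian policy can be sketched as follows. This is a minimal illustration under stated assumptions, not the policy networks used in this study: the speed limits `V_MIN` and `V_MAX`, the distribution parameters, and the use of `tanh` squashing for the Gaussian case (the common choice in SAC implementations) are all assumptions. Note that the Beta sample is still rescaled linearly from [0, 1] to the speed range here; what it avoids is the nonlinear squashing step.

```python
import math
import random

V_MIN, V_MAX = 0.0, 50.0  # hypothetical speed bounds in km/h


def sample_speed_beta(alpha, beta, rng):
    """Draw a speed from a Beta policy; the sample already lies in [0, 1]."""
    u = rng.betavariate(alpha, beta)
    return V_MIN + (V_MAX - V_MIN) * u  # linear rescale only


def sample_speed_squashed_gaussian(mean, std, rng):
    """Draw a speed from a Gaussian policy squashed by tanh (assumed mapping)."""
    z = rng.gauss(mean, std)            # unbounded pre-activation
    u = 0.5 * (math.tanh(z) + 1.0)      # nonlinear compression to (0, 1)
    return V_MIN + (V_MAX - V_MIN) * u


def tanh_density_factor(z):
    """Derivative of tanh at z, i.e. 1 - tanh(z)^2, which vanishes near the bounds."""
    return 1.0 - math.tanh(z) ** 2


rng = random.Random(0)
beta_speeds = [sample_speed_beta(5.0, 1.0, rng) for _ in range(1000)]
gauss_speeds = [sample_speed_squashed_gaussian(2.0, 0.5, rng) for _ in range(1000)]
print(max(beta_speeds), max(gauss_speeds))
print(tanh_density_factor(0.0), tanh_density_factor(5.0))
```

In this sketch the squashed Gaussian can only approach the maximum speed as its pre-activation grows large, where the `tanh` derivative is close to zero, whereas the Beta policy places probability mass directly on the bounded interval.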

5.3. Performance Analysis of CTS Under Intersections

Balancing local and global objectives constitutes a significant challenge in MARL. To further evaluate the performance of the SAC-Beta, SAC-Gaussian, and PPO policies within the CTS with respect to this balance, six key intersections in the Ingolstadt region were selected for observation (see Figure S2 in the Supporting Information). These intersections feature diverse geometries (crossroads and T-junctions) and road configurations (single-, double-, and three-lane roads).

Figure 12 and Figure 13 compare the traffic efficiency of the Beta and Gaussian policies at Intersections A and D, respectively. Figure 14 illustrates the speed variation for SAC-Beta and PPO at Intersection F. Traffic efficiency comparisons for the remaining three intersections (B, C, and E) are presented in Figures S3–S5. Collectively, these results demonstrate that the Beta policy significantly outperforms both the Gaussian policy and PPO in traffic efficiency across all six intersections.

Figure 12 illustrates the performance of the Beta and Gaussian policies at Intersection A. The Beta policy predominantly exhibits a smooth green curve, indicating that vehicle speeds remain above 15 km/h while traversing the intersection. In contrast, the Gaussian policy displays fragmented dark-red curves, reflecting frequent vehicle stoppages with delays of up to approximately 100 s.

At Intersection D with heavy traffic flow (Figure 13), both policies predominantly produce red curves, with vehicle speeds frequently dropping below 10 km/h. Nevertheless, SAC-Beta maintains relatively smooth trajectories and avoids congestion despite the low speeds.

Table 4 offers a more detailed interpretation of the traffic conditions illustrated in Figure 13.

At Intersection F (Figure 14), the combination of heavy traffic flow and geometric constraints leads to relatively shallow curve slopes for both the Beta policy and PPO, indicating reduced traffic efficiency. Notably, PPO frequently produces deep-red curves indicative of congestion, whereas the Beta policy largely sustains a congestion-free operation.

Table 5 offers a more detailed interpretation of the traffic conditions illustrated in Figure 14.

Overall, SAC-Beta demonstrates strong adaptability, delivering a substantially superior performance compared with the Gaussian policy and PPO across all six intersections. The efficacy of SAC-Beta at the intersection level enables the joint control system to achieve a high throughput, reduced emissions, and improved energy efficiency across large-scale networks.

6. Conclusions

This research proposes an eco-network control system based on MARL to alleviate urban congestion and environmental pollution. The framework synergistically integrates adaptive signal timing optimization with dynamic speed guidance control, overcoming traditional single-signal control limitations through dual-module coordination. By incorporating Beta distribution-enhanced SAC algorithms, our CTS demonstrates superior performance in traffic efficiency and ecological benefits compared to conventional approaches.

Future research directions include establishing a multi-power vehicle energy consumption model (covering fuel, electric, and hybrid vehicles) and implementing a nonlinear reward allocation strategy to improve system practicality. We also plan to incorporate vehicles with different emission levels into the networks, including light cars, buses, and heavy-duty trucks, and to assign weights according to their emission levels in the reward function. Moreover, since it is difficult to ensure stable V2V and V2X communication for vehicles under different CAV penetration rates, we will verify the practicality of the CTS in mixed CAV traffic flows.
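One possible form of the emission-weighted reward mentioned above is sketched below. This is purely illustrative of the planned direction: the vehicle class names, the weight values in `EMISSION_WEIGHTS`, and the linear weighted sum are hypothetical assumptions, not a design adopted in this work.

```python
# Hypothetical per-class weights; real values would need to be calibrated.
EMISSION_WEIGHTS = {"light_car": 1.0, "bus": 2.0, "heavy_truck": 3.0}


def weighted_emission_reward(vehicles, weights=EMISSION_WEIGHTS):
    """Return a reward equal to the negative weighted sum of per-vehicle CO2.

    vehicles: iterable of (vehicle_class, co2_grams) pairs for one control step.
    """
    return -sum(weights[vehicle_class] * co2 for vehicle_class, co2 in vehicles)


# Illustrative step with made-up emission readings.
step_vehicles = [("light_car", 10.0), ("bus", 5.0), ("heavy_truck", 4.0)]
print(weighted_emission_reward(step_vehicles))
```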