1. Introduction

With the rapid advancement of smart agriculture [1], real-time and accurate detection and pose estimation of corn, one of the world’s most important staple crops [2], has become a critical task. Such capabilities are essential for supporting downstream applications, including growth status monitoring [3] and intelligent harvesting [4]. In particular, when combined with depth maps, oriented object detection provides an effective means of reliable pose estimation. Traditional corn detection approaches typically rely on horizontal bounding box (HBB) annotations. However, because corn naturally grows at an incline, objects often appear tilted in images. Consequently, HBB-based methods fail to tightly enclose the object regions and frequently introduce redundant background information that adversely affects subsequent tasks such as tracking, segmentation, and localization. To overcome this limitation, oriented object detection has been introduced, in which object boundaries are represented more accurately by annotating the four vertices of an oriented bounding box (OBB) [5]. A comparison between HBB and OBB is illustrated in Figure 1. With RGB data alone, only oriented detection of corn can be achieved; by combining depth data with the precise bounding box, the pose of corn can also be estimated. A comparison with pose estimation using stereo images is provided in Section 4.2.2.
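
To make the redundant-background argument concrete, the following minimal sketch compares the area of an oriented box with the area of the horizontal box that encloses it. The box size (200 × 50 pixels) and the 30° tilt are hypothetical values chosen for illustration, not measurements from the dataset.

```python
import math

def obb_corners(cx, cy, w, h, angle_deg):
    """Four corners of a w x h rectangle centred at (cx, cy), rotated by angle_deg."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + x * cos_a - y * sin_a, cy + x * sin_a + y * cos_a) for x, y in half]

def polygon_area(pts):
    """Polygon area via the shoelace formula."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2

def enclosing_hbb_area(pts):
    """Area of the axis-aligned box that encloses all points."""
    xs = [p[0] for p in pts]
    ys = [p[1] for p in pts]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))

def background_fraction(pts):
    """Share of the enclosing HBB that lies outside the oriented box."""
    return 1.0 - polygon_area(pts) / enclosing_hbb_area(pts)

# Hypothetical elongated object tilted by 30 degrees.
tilted = obb_corners(320, 240, 200, 50, 30)
print(f"background share of HBB: {background_fraction(tilted):.2f}")
```

For an elongated object such as this one, well over half of the enclosing HBB is background, whereas an untilted object yields a share of zero.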

Research on oriented object detection [6] and pose estimation [7] in agriculture has received increasing attention, but existing works remain limited in several respects: many rely on outdated detection frameworks, lack integration of 3D information, and seldom address corn specifically. For instance, Song [8] employed R-CNN for detecting oriented corn tassels, while Zhou [9] proposed a YOLOv8-OBB model to detect Zizania. Liu [10] applied YOLOv3 to identify broken corn kernels during mechanical harvesting. In other fields, Wang [11] utilized a diffusion model for oriented object detection in aerial images, while Su [12] proposed MOCA-Net to detect oriented objects in remote sensing images.

Regarding pose estimation, existing methods often suffer from large and complex network architectures. For example, Gené-Mola [13] proposed an apple detection and 3D modeling method using an RGB-D camera, where RGB and depth information were fused to generate point clouds for dimension estimation. Du [14] developed a technique for tomato 3D pose estimation in clustered scenes by identifying key points and processing point clouds to extract centroid and calyx positions. More recently, Gao [15] introduced a stereo-based 3D object detection method for estimating the pose of corn, where joint bounding boxes were predicted and fed into a deep learning model to infer 3D bounding boxes and poses. These approaches rely on complex data modalities, such as point clouds or stereo imagery, which necessitate more sophisticated neural architectures. In contrast, the present work is grounded solely in an object detection framework. In other fields, oriented detection based on unmanned aerial vehicle data [16] is an active research direction. Zhang [17] used point clouds to estimate the pose of industrial parts, and Ausserlechner [18] proposed ZS6D, which uses vision transformers for zero-shot 6D object pose estimation.

For more precise pose estimation, this study introduces a corn pose estimation framework with two major contributions. First, during the annotation stage, orientation labels are designed to capture the natural growth poses of corn, thereby constructing a precisely oriented corn detection dataset that incorporates depth information for pose estimation. Second, at the algorithmic level, this work introduces a lightweight yet precise oriented detection model, YOLOv11 Oriented Corn (YOLOv11OC), specifically tailored for corn.

Recent advances in deep learning-based detection frameworks [

19], including Faster R-CNN [

20], the YOLO series [

21], and Vision Transformers [

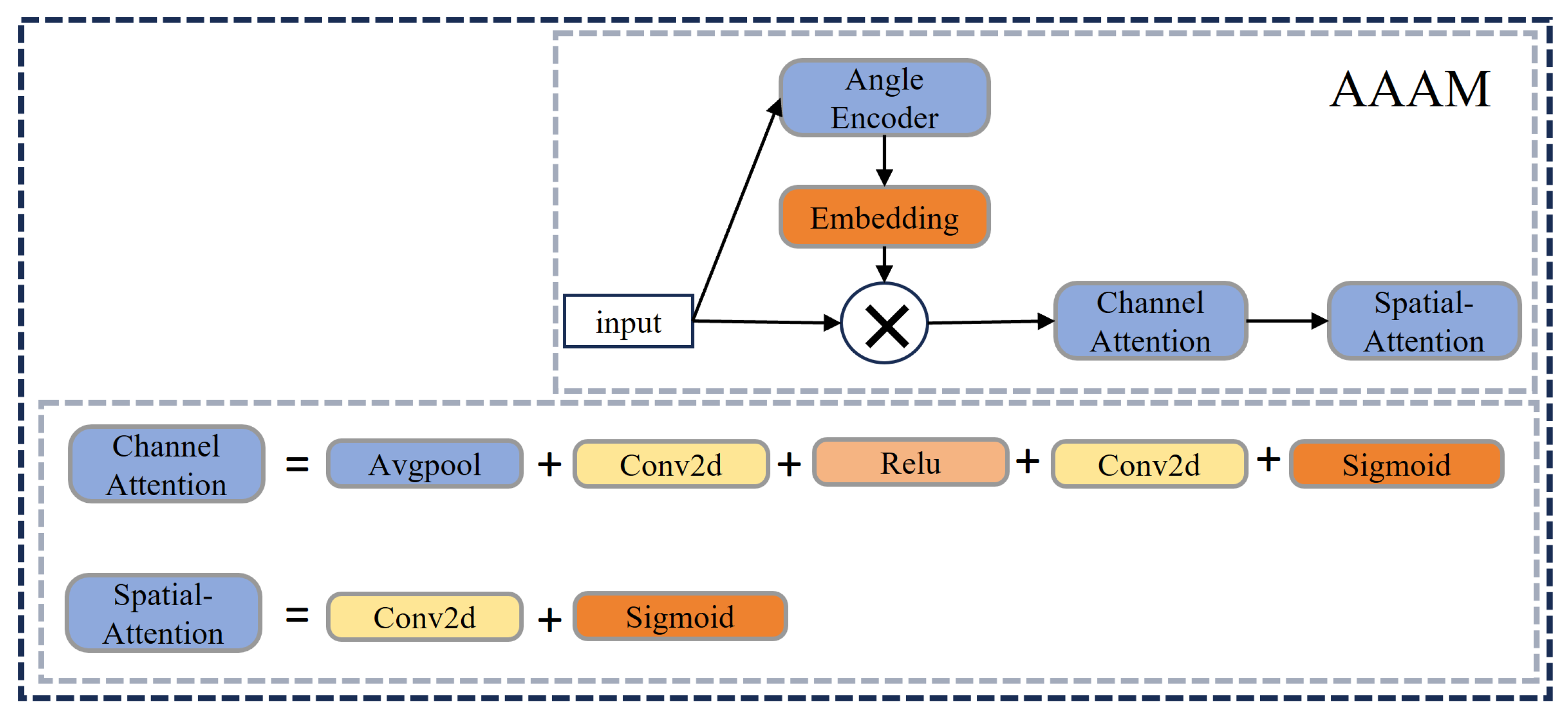

22], have shown remarkable success in general object detection tasks. Among them, the YOLO series has become widely adopted due to its generality and ease of deployment. In this work, the latest YOLOv11 is adopted as the baseline for oriented corn detection. To further improve detection precision, we integrate three modules: the Angle-aware Attention Module (AAM) for angle feature encoding and fusion, the GSConV Inception Network (GSIN) for efficient multi-scale feature extraction with reduced model complexity, and the Cross-Layer Fusion Network (CLFN) to enhance multi-scale object detection through cross-layer feature fusion.

This study presents a corn pose estimation approach based on RGB-D data and OBB detection, specifically designed to overcome the limitations of accuracy in existing corn OBB detection methods. The main contributions of this paper are summarized as follows:

- (1) This work constructs a precise OBB detection dataset for corn. Unlike conventional HBB annotations, the proposed OBB labels are precise and effectively reduce redundant background regions. Furthermore, by combining OBB annotations with depth maps, the pose of corn can be accurately estimated.

- (2) This work proposes a lightweight oriented detection framework YOLOv11OC based on YOLOv11. To achieve both efficiency and accuracy, the framework integrates the GSConV Inception Network to reduce parameter complexity and the Cross-Layer Fusion Network to strengthen multi-scale feature fusion capability.

- (3) This work designs the Angle-aware Attention Module, which encodes orientation information as an attention mechanism, thereby enhancing the model’s ability to perceive and regress object angles more accurately.

4. Discussion

4.1. Main Work

Precision agriculture and smart farming have emerged as transformative paradigms for enhancing crop yield, optimizing resource utilization, and enabling intelligent field management. A central challenge in these domains lies in the precise 3D perception of crops. In the context of corn cultivation, high-precision pose estimation of individual corn is crucial for improving the efficiency of automated planting systems and advancing overall management practices.

Building upon RGB-D data and camera intrinsic parameters, accurate pose estimation can be achieved through precise OBB detection. Unlike conventional HBB, OBBs provide a more faithful representation of object geometry by aligning with the natural orientation of the object. In agricultural scenarios, this orientation-aware representation enables finer delineation of plant boundaries and more reliable spatial alignment. Nevertheless, OBB detection introduces unique challenges, particularly the difficulty of robust angle regression and the increased complexity of annotation preprocessing. Addressing these challenges constitutes the primary focus of this work.

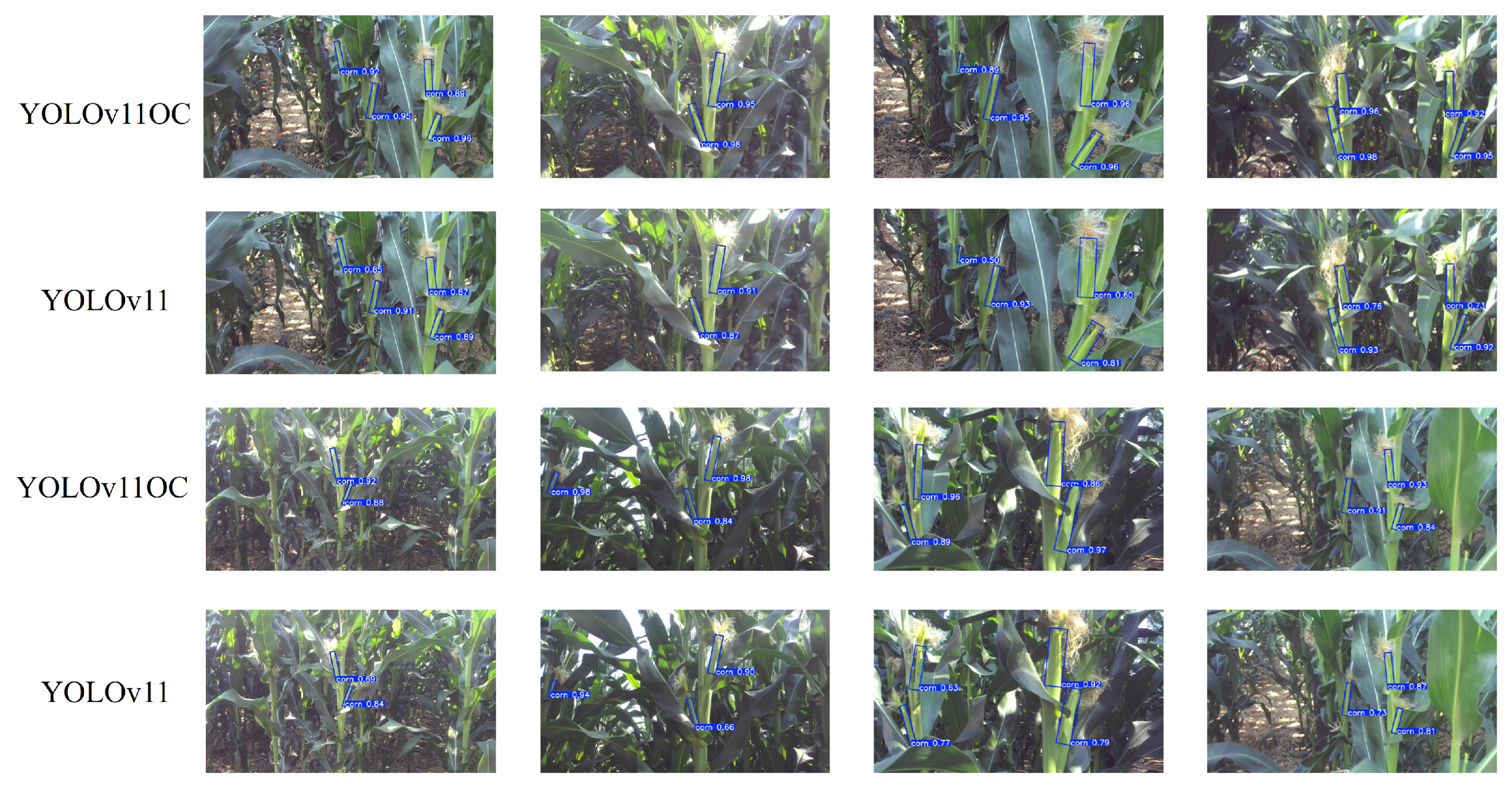

To this end, we proposed YOLOv11OC, an enhanced framework designed specifically for oriented corn detection. This model incorporates three key components to improve YOLOv11’s representational capacity and regression accuracy: the AAM, CLFN, and GSIN. Experimental results confirm that the integration of these modules significantly strengthens orientation-aware object detection. Specifically, the AAM improves the model’s ability to perceive and encode angular information by deriving orientation vectors from OBB corner coordinates. Through dynamic modulation of both spatial and channel attention, AAM achieves more stable angle regression and markedly enhances sensitivity to variations in object orientation.
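
As an illustration of how an orientation vector can be derived from OBB corner coordinates, the sketch below takes the direction of the longer box edge and folds it into a half-plane so that a box and its 180° rotation map to the same vector. This is an assumed formulation for illustration only; the exact encoding used inside the AAM is not specified in this section, and the function name is hypothetical.

```python
import math

def orientation_from_corners(corners):
    """Unit vector and angle (degrees, in [0, 180)) of the longer OBB edge.

    corners: four (x, y) vertices listed consecutively around the box.
    """
    (x0, y0), (x1, y1), (x2, y2), _ = corners
    e1 = (x1 - x0, y1 - y0)  # edge from corner 0 to corner 1
    e2 = (x2 - x1, y2 - y1)  # adjacent edge from corner 1 to corner 2
    ex, ey = e1 if math.hypot(*e1) >= math.hypot(*e2) else e2
    length = math.hypot(ex, ey)
    if length == 0:
        raise ValueError("degenerate box: all edges have zero length")
    ux, uy = ex / length, ey / length
    # A box rotated by 180 degrees is the same box, so keep a single
    # representative direction by folding into the half-plane y >= 0.
    if uy < 0 or (uy == 0 and ux < 0):
        ux, uy = -ux, -uy
    return (ux, uy), math.degrees(math.atan2(uy, ux))

vector, angle = orientation_from_corners([(0, 0), (0, 4), (-1, 4), (-1, 0)])
print(vector, angle)
```

Folding the direction avoids the situation where the same physical box yields two opposite vectors depending on the order in which its corners were annotated.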

The CLFN mitigates the loss of low-level features that typically occurs in traditional FPN structures by introducing a cross-layer fusion mechanism. This design enables more effective integration of semantic information from both low-level and high-level feature maps, thereby enhancing the detection of multi-scale objects. Complementarily, the GSIN employs a multi-kernel convolution fusion strategy in combination with GSConV to maintain a lightweight yet powerful architecture. This module effectively extracts and fuses multi-scale features, compensating for the limitations of single-scale convolution, and is particularly effective in detecting small or edge-blurred objects.
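
The lightweight character of a GSConV-style layer can be seen from a rough weight count. The sketch below assumes the commonly described layout: a dense convolution that produces half of the output channels, followed by a depthwise convolution on that half, with the two halves concatenated and shuffled. The 5 × 5 depthwise kernel and the channel sizes are assumptions for illustration; the layer configuration inside GSIN may differ.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weights in a k x k convolution (bias and normalisation layers omitted)."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return k * k * (c_in // groups) * c_out

def gsconv_params(c_in, c_out, k, dw_k=5):
    """Weights in an assumed GSConV-style layer.

    Dense conv c_in -> c_out/2, then a depthwise dw_k x dw_k conv on that half.
    Concatenation and channel shuffle add no weights.
    """
    if c_out % 2:
        raise ValueError("c_out must be even")
    half = c_out // 2
    dense = conv_params(c_in, half, k)
    depthwise = conv_params(half, half, dw_k, groups=half)
    return dense + depthwise

# Hypothetical layer: 128 -> 256 channels with a 3 x 3 kernel.
standard = conv_params(128, 256, 3)
light = gsconv_params(128, 256, 3)
print(standard, light, round(light / standard, 3))
```

Under these assumptions the layer needs roughly half the weights of a standard convolution with the same input and output widths, because the depthwise branch contributes very few parameters.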

The complementary strengths of these three modules—angle encoding, cross-layer feature fusion, and lightweight multi-scale feature extraction—enable YOLOv11OC to achieve robust and precise OBB detection. This improvement not only advances the accuracy of oriented corn detection but also provides a solid foundation for pose estimation.

On the basis of precise OBB detection, this work further integrates depth maps to estimate the pose of corn. By computing the 3D coordinates of key points—including the midpoints of the bounding box edges, the corner endpoints, and the OBB center—using depth values in conjunction with camera intrinsic parameters, the length, width, yaw, and pitch angles of each corn instance can be derived. To the best of our knowledge, this is the first study to introduce precise OBB detection as a basis for crop pose estimation. This approach establishes a technical foundation for phenotypic analysis and lays the groundwork for future applications in intelligent harvesting and automated crop management.
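
The computation described above can be sketched with the standard pinhole back-projection, X = (u − c_x)·Z / f_x and Y = (v − c_y)·Z / f_y. The sketch uses only the edge midpoints, and its angle conventions are assumptions rather than the definitions used in this work: yaw is taken as the in-image-plane angle of the long axis and pitch as its tilt out of the image plane. The `depth_at` callback and the intrinsic values are likewise hypothetical.

```python
import math

def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) at depth Z into camera coordinates."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)

def midpoint(p, q):
    return tuple((a + b) / 2 for a, b in zip(p, q))

def sub(p, q):
    return tuple(a - b for a, b in zip(p, q))

def norm(v):
    return math.sqrt(sum(a * a for a in v))

def estimate_pose(corners_px, depth_at, intrinsics):
    """Length, width, yaw and pitch from four OBB corners and a depth lookup.

    corners_px: four (u, v) corners listed consecutively around the box.
    depth_at:   function (u, v) -> depth in metres (assumed interface).
    intrinsics: (fx, fy, cx, cy) in pixels.
    """
    fx, fy, cx, cy = intrinsics
    c0, c1, c2, c3 = corners_px

    def to3d(p):
        return backproject(p[0], p[1], depth_at(p[0], p[1]), fx, fy, cx, cy)

    # Each axis joins the 3D midpoints of two opposite box edges.
    axis_a = sub(to3d(midpoint(c2, c3)), to3d(midpoint(c0, c1)))
    axis_b = sub(to3d(midpoint(c3, c0)), to3d(midpoint(c1, c2)))
    long_axis, short_axis = sorted((axis_a, axis_b), key=norm, reverse=True)
    # Remove the sign ambiguity of the axis direction.
    if long_axis[0] < 0 or (long_axis[0] == 0 and long_axis[1] < 0):
        long_axis = tuple(-a for a in long_axis)
    dx, dy, dz = long_axis
    return {
        "length": norm(long_axis),
        "width": norm(short_axis),
        "yaw_deg": math.degrees(math.atan2(dy, dx)),                   # assumed convention
        "pitch_deg": math.degrees(math.atan2(dz, math.hypot(dx, dy))),  # assumed convention
    }

# Hypothetical box on a surface whose depth increases from left to right.
box = [(260, 225), (380, 225), (380, 255), (260, 255)]
pose = estimate_pose(box, lambda u, v: 1.0 + (u - 320) / 600, (600, 600, 320, 240))
print(pose)
```

Because each 3D point depends on a single depth reading, a practical implementation would likely sample depth slightly inside the box or average a small neighbourhood, since pixels on the box boundary may fall on the background.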

4.2. Comparison with Previous Studies

4.2.1. Comparison with Previous Studies of OBB Detection

Table 7 compares the proposed YOLOv11OC with previous studies. This work builds on the latest detection model, YOLOv11. The proposed YOLOv11OC achieved the best mAP among the compared methods and is the first work on oriented corn detection.

4.2.2. Comparison with Previous Studies of Pose Estimation

Table 8 presents a comparison between this work and previous studies. To the best of our knowledge, this is the first attempt in the field of smart agriculture to employ OBB detection with RGB-D data for estimating the pose and shape of corn under occlusion. The proposed method achieves an inference time of about 10 ms per corn instance. In terms of efficiency, it outperforms the approaches of Chen [33] and Gené-Mola [13], which rely on 3D modeling for shape estimation. Furthermore, in both speed and methodological simplicity, it demonstrates superiority over Guo [34], who employed point cloud segmentation.

Compared with our previous work [15], as shown in Table 8, the proposed approach places stricter requirements on the accuracy of annotation and depth acquisition. However, its pose estimation, based on physically interpretable calculations, provides a stronger explanatory foundation. Overall, under conditions of limited data volume and relatively small variability, the proposed method proves to be more suitable and effective than prior approaches.

4.3. Limitations

Experiments show that at the commonly used IoU threshold of 0.5, YOLOv11 and YOLOv11OC exhibit comparable detection accuracy, suggesting that the performance gains of the proposed method are not highly pronounced under standard evaluation settings. This observation underscores the need to construct more challenging datasets containing a higher proportion of small objects, densely packed targets, or severe occlusion, which would better demonstrate the advantages of oriented detection frameworks. From a deep learning model perspective, future work could focus on integrating global context modeling mechanisms, such as Transformer-based architectures, to further improve detection robustness under complex scenarios.
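
The sensitivity of the evaluation to the IoU threshold can be illustrated with a small rotated-IoU calculation. The sketch below intersects two convex boxes with Sutherland-Hodgman clipping; the box size and the 10° angular error are hypothetical values, not results from the experiments.

```python
import math

def obb_corners(cx, cy, w, h, angle_deg):
    """Corners of a rotated rectangle, listed counter-clockwise."""
    a = math.radians(angle_deg)
    cos_a, sin_a = math.cos(a), math.sin(a)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + x * cos_a - y * sin_a, cy + x * sin_a + y * cos_a) for x, y in half]

def polygon_area(pts):
    """Shoelace formula; returns 0 for an empty polygon."""
    s = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        s += x1 * y2 - x2 * y1
    return abs(s) / 2

def inside(p, a, b):
    """True if p lies on or to the left of the directed edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]) >= 0

def intersect(p, q, a, b):
    """Intersection of segment p-q with the infinite line through a and b."""
    dx1, dy1 = q[0] - p[0], q[1] - p[1]
    dx2, dy2 = b[0] - a[0], b[1] - a[1]
    t = ((a[0] - p[0]) * dy2 - (a[1] - p[1]) * dx2) / (dx1 * dy2 - dy1 * dx2)
    return (p[0] + t * dx1, p[1] + t * dy1)

def clip(subject, clipper):
    """Sutherland-Hodgman clipping of a convex polygon by a convex CCW polygon."""
    output = list(subject)
    for i in range(len(clipper)):
        a, b = clipper[i], clipper[(i + 1) % len(clipper)]
        current, output = output, []
        if not current:
            break
        for j in range(len(current)):
            p, q = current[j - 1], current[j]
            if inside(q, a, b):
                if not inside(p, a, b):
                    output.append(intersect(p, q, a, b))
                output.append(q)
            elif inside(p, a, b):
                output.append(intersect(p, q, a, b))
    return output

def rotated_iou(box_a, box_b):
    """IoU of two boxes given as (cx, cy, w, h, angle_deg)."""
    pa, pb = obb_corners(*box_a), obb_corners(*box_b)
    inter = polygon_area(clip(pa, pb))
    return inter / (polygon_area(pa) + polygon_area(pb) - inter)

# Hypothetical ground truth and a prediction that is off by 10 degrees.
iou = rotated_iou((0, 0, 200, 50, 10), (0, 0, 200, 50, 0))
print(f"IoU = {iou:.2f}, pass@0.5 = {iou >= 0.5}, pass@0.75 = {iou >= 0.75}")
```

For an elongated box, a prediction with the correct centre and size but a moderate angular error still counts as a match at a threshold of 0.5 and is rejected at 0.75, which is why differences in angle regression tend to show up only under stricter thresholds.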

Moreover, the present study was conducted using data collected from a single area, season, and growth stage, under relatively stable environmental conditions. While this provides a controlled evaluation setting, it may limit the generalizability of the results, as lighting variations, environmental diversity, and different corn varieties were not considered. Future work should therefore include broader data collection campaigns covering multiple environments, seasons, and corn cultivars, to comprehensively validate the robustness and adaptability of the proposed method.

Furthermore, pose estimation based on OBB detection imposes stringent requirements on both model precision and dataset annotation quality. Reliable results demand high-quality, fine-grained annotations, which are labor-intensive and time-consuming. A promising direction for future research is to leverage auxiliary approaches, such as semantic segmentation, to enable automated, precise labeling. This would reduce annotation effort and further enhance pose estimation performance in agricultural applications.

4.4. Implementation for Precision Agriculture

The proposed system shows promise for deployment in precision agriculture. With 97.6% mAP@0.75 and 94% pose estimation accuracy, it can meet the precision requirements of automated harvesting, where small pose errors are acceptable. Its inference time of about 10 ms further supports real-time operation on harvesting vehicles.

However, the current dataset mainly covers corn near maturity and a single variety, limiting generalization. Future work will expand testing across different growth stages and varieties, and integrate the system with harvesting platforms to verify its practical applicability.

5. Conclusions

This study focused on precise OBB detection for corn pose estimation. To this end, we proposed YOLOv11OC, which integrates three novel modules: AAM for stable and accurate orientation perception, CLFN for enhanced multi-scale feature fusion, and GSIN for lightweight yet effective feature extraction and multi-scale detection.

Experimental results demonstrate that the proposed framework significantly improves oriented detection performance. The YOLOv11n variant achieves competitive accuracy with the smallest parameter size, underscoring its potential for deployment on resource-constrained agricultural platforms. Ablation studies further confirm that AAM yields the most notable performance gain, while CLFN and GSIN both improve accuracy and contribute to parameter reduction. Moreover, results show that while YOLOv11 and YOLOv11OC perform similarly at lower IoU thresholds, the performance gap increases at higher thresholds, reflecting the superior localization precision of YOLOv11OC.

Overall, YOLOv11OC achieves a strong balance between detection precision, efficiency, and robustness. Leveraging these advantages, the proposed framework enables accurate and reliable corn pose estimation, supporting key agricultural applications such as automated harvesting, growth monitoring, and pesticide spraying. With 97.6% mAP@0.75 and 94% pose estimation accuracy, the proposed method satisfies the precision requirements of automated harvesting while maintaining real-time inference speed suitable for on-vehicle deployment. Nonetheless, the current dataset primarily covers corn near maturity and a single variety, which may limit generalization. Future work will extend evaluation across diverse growth stages and varieties, and integrate the system into harvesting machinery to validate its field applicability.

By bridging precise oriented detection with practical agricultural needs, this work contributes to advancing automation, efficiency, and sustainability in smart agriculture.