Joint Learning for Mask-Aware Facial Expression Recognition Based on Exposed Feature Analysis and Occlusion Feature Enhancement

Abstract

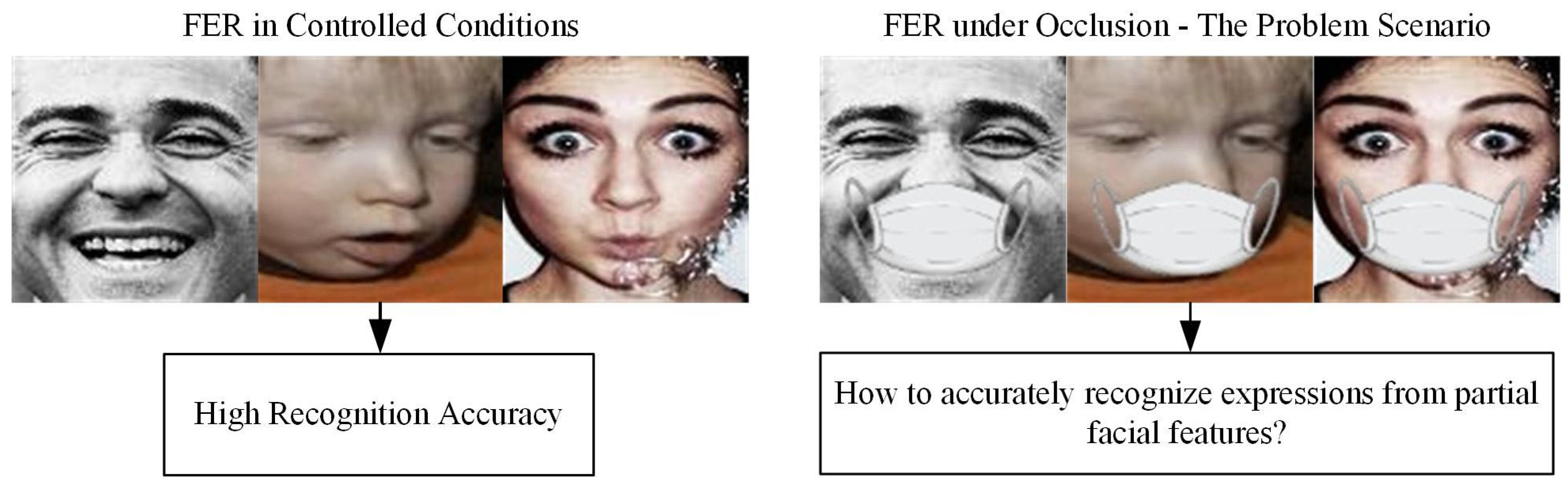

1. Introduction

- (1) We propose a lightweight Facial Occlusion Parsing Module (FOPM) that explicitly estimates occlusion patterns and uses this structural prior to guide feature extraction. This plug-and-play component can be deployed in existing FER systems to improve their robustness to partial occlusion at low computational cost.

- (2) We introduce an efficient, end-to-end trainable framework for occlusion-aware FER that jointly analyzes exposed and occluded facial regions. Its attention mechanisms transfer and enhance features across these regions without compromising inference speed.

- (3) We conduct a thorough evaluation on publicly available FER datasets with masked faces, showing that the proposed method outperforms several recent methods.

2. Related Works

2.1. Occlusion Problem in FER

- Reconstruction-Based Paradigm: The earliest approaches to occlusion in FER followed a reconstruction-based paradigm, restoring occluded facial regions before recognition. These methods typically use generative models such as Generative Adversarial Networks (GANs) [20] or autoencoders to reconstruct complete facial images from partially occluded inputs, on the assumption that standard FER methods can then be applied effectively. Although conceptually straightforward, this approach has significant limitations: reconstruction becomes highly speculative when large facial regions are obscured (e.g., a mask covering more than half of the face), and the resulting artifacts can mislead subsequent recognition. Furthermore, reconstruction quality depends heavily on the pattern and extent of the occlusion, which makes the approach unreliable for real-world applications with diverse occlusion types.

- Holistic Representation Paradigm: The second paradigm moved toward holistic representations built on sparse signal processing and global features that are inherently robust to partial occlusion [21,22]. These approaches assume that discriminative expression information is distributed across the face rather than concentrated in specific regions. By learning robust global representations through techniques such as sparse coding or deep global descriptors, they avoid explicit occlusion detection or reconstruction. However, although they are resilient to occlusion in general object classification, these methods often fail to capture the subtle local variations crucial for fine-grained expression discrimination, particularly when critical expression regions (e.g., the mouth for happiness, the eyes for surprise) are obscured.

- Local Feature Emphasis Paradigm: Recognizing the limitations of global approaches, the field evolved toward local feature emphasis methods that handle occlusion explicitly through region-based processing. This paradigm has several sub-categories. Patch-based methods divide facial images into smaller patches (overlapping or non-overlapping) and use attention mechanisms or weighting schemes to emphasize non-occluded regions [23]; the ACNN framework, for instance, combines patch-based and global-local attention to mitigate occlusion effects. Attention-based methods use learned attention weights to focus dynamically on semantically important, visible regions: the RAN framework [24] introduced relation-attention modules to adaptively capture vital facial regions, while OADN [25] combined landmark-guided attention with regional features to identify non-occluded areas. Landmark-based methods use facial landmarks to guide feature extraction from specific semantic regions, providing structural priors for handling occlusion. Although these methods represent a significant advance, they still treat occlusion as a nuisance to be avoided rather than as a structural element to be modeled explicitly.

- Joint Learning Paradigm (Emerging): The most recent evolution in occlusion-aware FER is toward joint learning frameworks that model occlusion explicitly as an integral part of the recognition process. Rather than merely avoiding or compensating for occlusion, these methods aim to understand the occlusion configuration and use that understanding to guide feature extraction and fusion [26]. This emerging paradigm includes mask-aware specialized methods, which address predictable occlusions such as face masks [6,27] and often use mask detection as an auxiliary task; geometry-appearance fusion methods, which combine geometric facial structure with appearance features for more robust recognition under occlusion [10]; and explicit occlusion modeling approaches, which directly estimate occlusion patterns and use this information to guide adaptive feature processing. Our work advances this last direction.
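To make the joint-learning idea concrete, the sketch below adds an auxiliary occlusion-parsing term to an expression-classification loss. It is a generic multi-task formulation under stated assumptions, not the loss defined in Section 3.5: the per-pixel binary cross-entropy for the occlusion map, the weight `lam = 0.5`, and all function names are hypothetical choices for illustration.

```python
import math


def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample, computed in a numerically stable way."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_z - logits[target]


def binary_cross_entropy(probs, targets, eps=1e-7):
    """Mean per-pixel BCE between predicted occlusion probabilities and a 0/1 occlusion mask."""
    total = 0.0
    for p, t in zip(probs, targets):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(probs)


def joint_loss(expr_logits, expr_label, occ_probs, occ_mask, lam=0.5):
    """Hypothetical joint objective: L = L_expr + lam * L_occ."""
    l_expr = cross_entropy(expr_logits, expr_label)   # main task: expression class
    l_occ = binary_cross_entropy(occ_probs, occ_mask)  # auxiliary task: where is the face occluded?
    return l_expr + lam * l_occ
```

In a real framework both terms would be computed on batched tensors by the training library; the scalar version only shows how the auxiliary occlusion term joins the recognition objective, so that gradients from occlusion parsing shape the shared features.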

2.2. Mask-Aware FER as a Specialized Domain

3. Methods

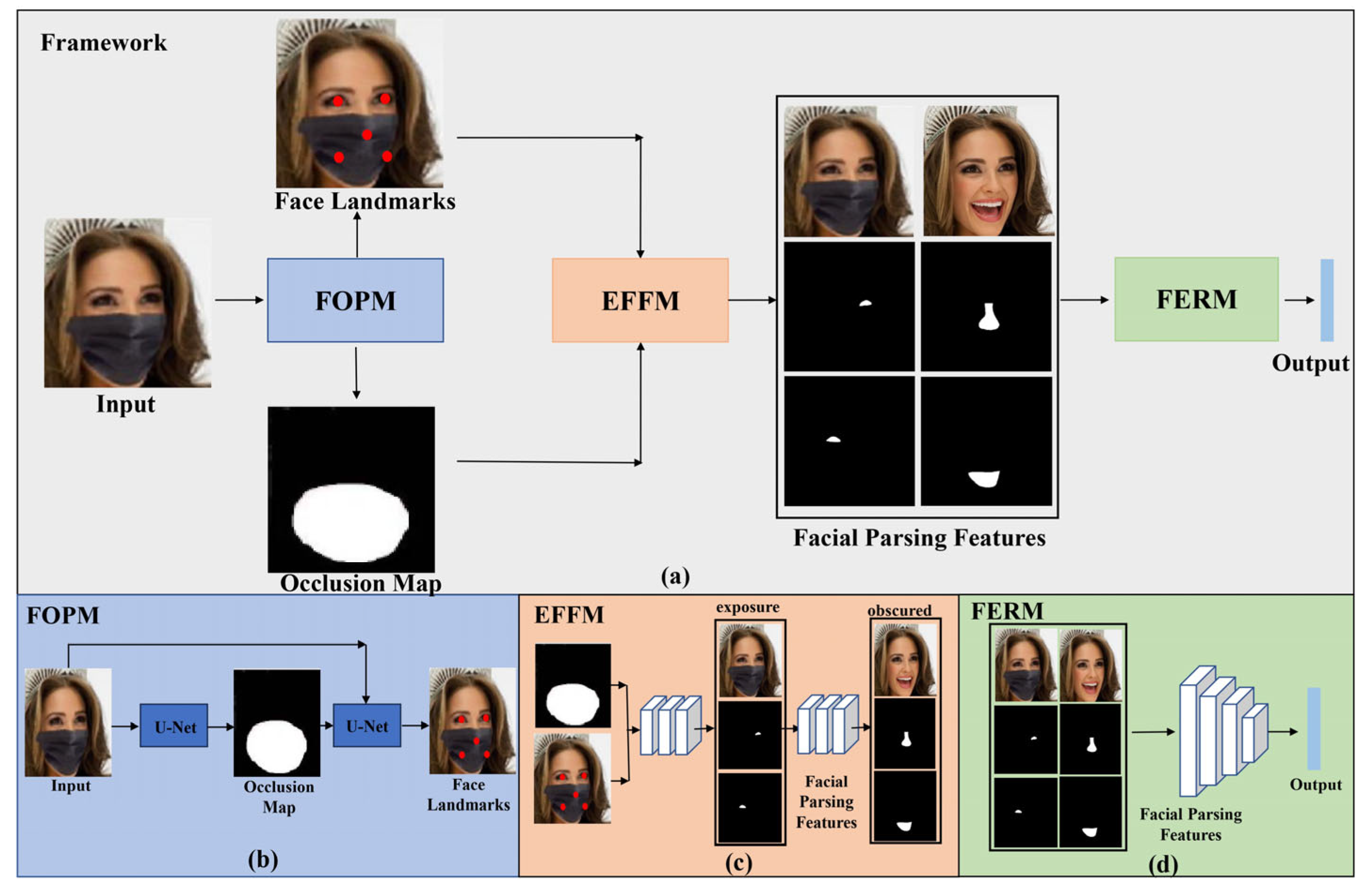

3.1. Framework

3.2. Facial Occlusion Parsing Module

3.3. Expression Feature Fusion Module

3.4. Facial Expression Recognition Module

3.5. Loss Functions

4. Experiments

4.1. Datasets

- (1) RAF-DB (Real-world Affective Faces Database) [36] contains 29,672 facial images. Its single-label basic-expression subset of 15,339 images is annotated with one of seven basic expressions: Surprise, Fear, Disgust, Happiness, Sadness, Anger, and Neutral. We strictly adhered to the official split of this subset: 12,271 images for training and 3068 images for testing.

- (2) FER+ (Face Emotion Recognition Plus) [37], an enhanced version of FER2013, contains 28,315 training images and 3589 testing images, also labeled with seven expression categories.

4.2. Implementation Details

4.2.1. Training Configuration

- (1) Framework & Hardware: Implemented in PyTorch 1.12 [39] on Ubuntu 18.04 with 2 × NVIDIA GeForce RTX 3090 GPUs.

- (2) Optimizer: AdamW [40] with exponential decay rates β1 = 0.9 and β2 = 0.99 and a weight decay of 1 × 10⁻⁴.

- (3) Learning Rate Schedule: The initial learning rate was 0.002 and was multiplied by 0.2 every 6 epochs.

- (4) Training Strategy: Models were trained for at most 300 epochs with a batch size of 16. Early stopping monitored validation accuracy with a patience of 20 epochs, and the model with the best validation accuracy was restored for final evaluation.

- (5) Input Resolution: All input images were resized to 224 × 224 pixels with three color channels (3 × 224 × 224).
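The schedule and stopping rule above can be sketched in plain Python. This is a hedged illustration rather than the authors' training script: it treats the 0.2 decay factor as a multiplier, counts epochs from 0, and the class and function names are hypothetical. In PyTorch the same configuration would typically use `torch.optim.AdamW(params, lr=0.002, betas=(0.9, 0.99), weight_decay=1e-4)` together with `torch.optim.lr_scheduler.StepLR(optimizer, step_size=6, gamma=0.2)`.

```python
def step_lr(epoch, base_lr=0.002, gamma=0.2, step_size=6):
    """Learning rate at a 0-indexed epoch under step decay (multiply by gamma every step_size epochs)."""
    return base_lr * gamma ** (epoch // step_size)


class EarlyStopping:
    """Signals a stop when validation accuracy has not improved for `patience` consecutive epochs."""

    def __init__(self, patience=20):
        self.patience = patience
        self.best_acc = float("-inf")
        self.best_epoch = -1  # epoch whose checkpoint would be restored for final evaluation
        self.bad_epochs = 0

    def step(self, epoch, val_acc):
        """Record one epoch's validation accuracy; return True if training should stop."""
        if val_acc > self.best_acc:
            self.best_acc = val_acc
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

With this schedule the rate after e epochs is 0.002 × 0.2^⌊e/6⌋, e.g. 4 × 10⁻⁴ at epoch 6 and about 2 × 10⁻¹⁰ at epoch 60. A training loop would call `step(epoch, val_acc)` once per epoch, up to the 300-epoch cap, and reload the checkpoint saved at `best_epoch` when it returns True.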

4.2.2. Model Complexity and Efficiency

- (1) The Facial Occlusion Parsing Module (FOPM, including the U-Net): ~3.5 M parameters.

- (2) The Expression Feature Fusion and Recognition Modules (EFFM + FERM): ~6.3 M parameters.
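As a quick sanity check on these figures, the two parts total roughly 9.8 M parameters, which at 4 bytes per 32-bit weight is about 39 MB of weight storage. The helper below is a hypothetical sketch of that arithmetic; it assumes float32 (or float16) weights and ignores activations, buffers, and optimizer state. For an actual PyTorch model, the count is usually obtained with `sum(p.numel() for p in model.parameters())`.

```python
def param_footprint(counts_millions, bytes_per_param=4):
    """Total parameters (millions) and weight storage in MB (1 MB = 10**6 bytes).

    Millions of parameters times bytes per parameter gives megabytes directly.
    """
    total = sum(counts_millions.values())
    return total, total * bytes_per_param


# Approximate counts reported above.
modules = {"FOPM (incl. U-Net)": 3.5, "EFFM + FERM": 6.3}
total_m, fp32_mb = param_footprint(modules)
_, fp16_mb = param_footprint(modules, bytes_per_param=2)
print(f"{total_m:.1f} M parameters, ~{fp32_mb:.1f} MB (fp32), ~{fp16_mb:.1f} MB (fp16)")
```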

4.3. Evaluation Metrics

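- (1) Accuracy measures the overall correctness of predictions:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

- (2) Precision assesses the correctness of positive predictions:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

- (3) Recall evaluates the ability to detect positive samples:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

- (4) F1-Score is the harmonic mean of Precision and Recall, providing a balanced measure, especially under class imbalance:

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

where TP, TN, FP, and FN denote True Positives, True Negatives, False Positives, and False Negatives, respectively.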
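In the seven-class setting these quantities are computed per class (one class treated as positive, the rest as negative) and then averaged. The paper does not state the averaging scheme, so the sketch below assumes macro averaging and derives F1 from the macro-averaged precision and recall, which is consistent with how the Precision, Recall, and F1-Score columns relate in the comparison tables. Function and variable names are hypothetical.

```python
def classification_metrics(cm):
    """Accuracy plus macro-averaged precision, recall, and F1 from a square confusion matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(k))  # multi-class accuracy = trace / total
    precisions, recalls = [], []
    for c in range(k):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(k)) - tp  # predicted c, but the true class differs
        fn = sum(cm[c]) - tp                       # true class c, predicted as something else
        precisions.append(tp / (tp + fp) if tp + fp else 0.0)
        recalls.append(tp / (tp + fn) if tp + fn else 0.0)
    p = sum(precisions) / k
    r = sum(recalls) / k
    f1 = 2 * p * r / (p + r) if p + r else 0.0  # harmonic mean of macro precision and recall
    return {"accuracy": correct / total, "precision": p, "recall": r, "f1": f1}
```

An alternative convention averages the per-class F1 scores instead; the two generally give slightly different numbers, so a paper should state which one it reports.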

4.4. Ablation Study

- (1) Efficacy of FOPM: The FOPM has the largest individual impact: removing it lowers accuracy by 2.33 and 3.05 percentage points on RAF-DB and FER+, respectively. This underscores the importance of explicit occlusion parsing for recovering discriminative features under occlusion.

- (2) Role of EFFM and FERM: The individual contributions of EFFM and FERM are smaller (0.87 and 1.68 points on RAF-DB; 0.81 and 1.43 points on FER+), but both are needed: the full model, which cascades all three modules, achieves the best accuracy on both datasets. This illustrates how the modules work in concert: FOPM identifies usable regions, EFFM analyzes expressive details within these regions, and FERM enhances features weakened by occlusion.
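The leave-one-out drops behind these observations can be recomputed directly from the ablation table, as in the sketch below. Each drop measures a module's contribution while the other two are present, so the drops are not additive against a common baseline (the table contains no variant without all three modules). The dictionary copies the reported accuracies; the names are illustrative.

```python
# Accuracy (%) on (RAF-DB, FER+), copied from the ablation table.
ABLATION = {
    "w/o FOPM": (88.91, 87.13),
    "w/o EFFM": (90.37, 89.37),
    "w/o FERM": (89.56, 88.75),
    "Ours": (91.24, 90.18),
}


def leave_one_out_drops(table, full="Ours"):
    """Accuracy lost (percentage points) when each module is removed from the full model."""
    full_raf, full_ferp = table[full]
    return {
        name: (round(full_raf - raf, 2), round(full_ferp - ferp, 2))
        for name, (raf, ferp) in table.items()
        if name != full
    }


for variant, (d_raf, d_ferp) in leave_one_out_drops(ABLATION).items():
    print(f"{variant}: -{d_raf:.2f} pp (RAF-DB), -{d_ferp:.2f} pp (FER+)")
```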

4.5. Comparison with State-of-the-Art Methods

4.5.1. Implementation Details for Compared Methods

4.5.2. Quantitative Results

- (1) Baseline Performance: On RAF-DB (Table 2), early influential methods such as RAN and SCN achieved accuracies of 86.90% and 87.03%, respectively. They established a foundational performance level but also highlighted the difficulty of handling occlusion. On FER+ (Table 3), RAN and SCN achieved 87.85% and 88.01%, respectively, leaving room for improvement in occlusion handling.

- (2) Progressive Improvements: On RAF-DB, subsequent methods such as CVT (88.14%) and MVT (88.62%), the state of the art in 2021, made significant progress; the rise from about 87% to 88.6% reflects the community's ongoing efforts to handle complex occlusions. PACVT (2023) reached 88.21%, slightly below MVT. On FER+, CVT (88.81%) and MVT (89.22%) pushed accuracy toward 89%, while PACVT reached 88.72%.

- (3) Our Model: On RAF-DB, our model achieves an accuracy of 91.24%, the highest in Table 2, surpassing the previous best (MVT, 88.62%) by 2.62 percentage points. On FER+, it achieves 90.18%, the highest in Table 3, surpassing MVT (89.22%) by 0.96 percentage points.

- (4) Statistical Significance Analysis: To verify that the improvement of our model is statistically significant rather than due to chance, we applied McNemar's test [41], a widely used non-parametric test for comparing paired classification outcomes. The null hypothesis is that our method and the strongest previous model (MVT) have the same error rate. The test was performed on the predictions of both models on RAF-DB and FER+. The resulting *p*-values were below 0.001 on both datasets, so we reject the null hypothesis: the accuracy gain of our model is statistically significant.
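McNemar's test uses only the discordant pairs, i.e., test samples that exactly one of the two models classifies correctly. The sketch below is a minimal illustration under assumptions: the paper reports neither the discordant counts nor whether the chi-square or the exact binomial variant was used, so both are shown, and all function names are hypothetical.

```python
import math


def discordant_counts(y_true, pred_a, pred_b):
    """b: A right and B wrong; c: A wrong and B right."""
    b = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a == t and p != t)
    c = sum(1 for t, a, p in zip(y_true, pred_a, pred_b) if a != t and p == t)
    return b, c


def mcnemar(b, c, exact=False):
    """McNemar's test on discordant counts; returns (statistic, two-sided p-value)."""
    n = b + c
    if n == 0:
        return 0.0, 1.0  # the models never disagree: no evidence of a difference
    if exact:
        # Under H0 the discordant pairs split as Binomial(n, 0.5).
        k = min(b, c)
        tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
        return float(k), min(1.0, 2 * tail)
    # Chi-square statistic with continuity correction, 1 degree of freedom.
    stat = max(abs(b - c) - 1, 0) ** 2 / n
    # Survival function of chi-square(1): P(X > stat) = erfc(sqrt(stat / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))
```

The exact variant is usually preferred when b + c is small; for the thousands of test images in RAF-DB and FER+, the two variants give very similar p-values.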

4.5.3. Generalization Evaluation on Real-World Occlusions

- (1) Performance Gap Highlights Real-World Challenge: All models perform worse on RealMask-FER than on the synthetic masked benchmarks (Table 2 and Table 3). Rather than indicating a failure, this drop reflects the complexity of the RealMask-FER dataset and the domain gap it captures between idealized synthetic occlusions and messy real-world ones.

- (2) Superior Generalization of Our Model: Our model remains the most robust, reaching an accuracy of 89.75% and outperforming the best baseline (MVT [11]) by 1.83 percentage points. This gap suggests that the core ideas of our framework, explicit occlusion parsing and guided feature enhancement, cope with the complexity and variety of real-world occlusions better than methods that rely on implicit attention or multi-branch features alone.

- (3) Effectiveness of Explicit Parsing: Although it was trained solely on synthetically generated data, our model generalizes to real and varied masks. This suggests that the Facial Occlusion Parsing Module (FOPM) learns a generic representation of occlusion as a structural entity rather than memorizing the texture or pattern of a synthetic mask, and that this explicit structural prior helps the model adapt to unseen occlusion patterns.

4.6. Visualization and Discussion

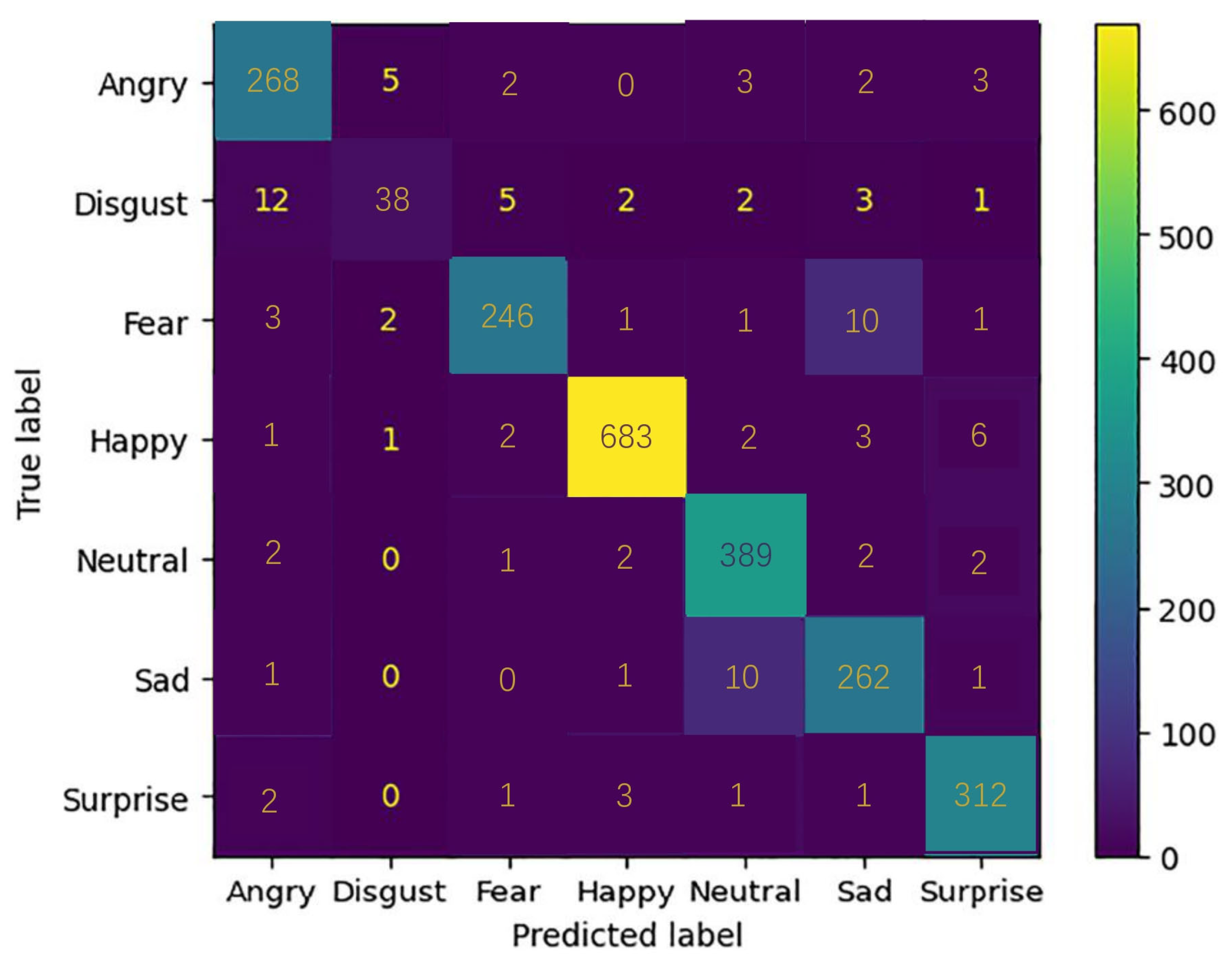

4.6.1. Confusion Matrix Analysis

- (1) High Overall Accuracy: Strong diagonal values across all expressions indicate high per-class recognition accuracy.

- (2) Negative Valence Confusion: Negative-valence emotions (e.g., Sad, Fear, Angry, Disgust) are consistently confused with one another, as shown by significant off-diagonal values among these classes. This is a common challenge in FER because these expressions involve similar facial muscle activations.

- (3) Performance Extremes: The Happy expression shows the strongest diagonal intensity (highest accuracy), confirming that it is recognized robustly even with masks. Conversely, Disgust shows the weakest diagonal intensity and the most dispersed confusion, and is often misclassified as Anger or Sadness.

- (4) Specific Neutral vs. Sad Confusion: Figure 5 shows a distinct, reciprocal confusion between Neutral and Sad. Masks hide key lower-face cues for sadness (e.g., downturned mouth corners), which makes Sad hard to distinguish from Neutral when the model must rely mainly on upper-face features.
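Patterns such as these are usually read off a row-normalized confusion matrix, in which each row (true class) sums to 1 and off-diagonal entries are misclassification rates. The sketch below shows one way to rank the largest confusions; the helper names are hypothetical, and the counts in the usage example are made up for illustration rather than taken from the paper's results.

```python
def row_normalize(cm):
    """Convert counts to per-class rates so that each row (true class) sums to 1."""
    out = []
    for row in cm:
        s = sum(row)
        out.append([v / s if s else 0.0 for v in row])
    return out


def top_confusions(cm, classes, k=3):
    """Largest off-diagonal rates as (true, predicted, rate), most frequent first."""
    rates = row_normalize(cm)
    pairs = [
        (classes[i], classes[j], rates[i][j])
        for i in range(len(classes))
        for j in range(len(classes))
        if i != j
    ]
    return sorted(pairs, key=lambda p: p[2], reverse=True)[:k]


classes = ["Neutral", "Sad", "Happy"]
toy_cm = [[80, 15, 5], [20, 75, 5], [2, 1, 97]]  # hypothetical counts, not the paper's data
for true, pred, rate in top_confusions(toy_cm, classes, k=2):
    print(f"{true} -> {pred}: {rate:.2f}")
```

A reciprocal confusion, like the Neutral/Sad pattern described above, shows up as both (A, B) and (B, A) appearing near the top of this ranking.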

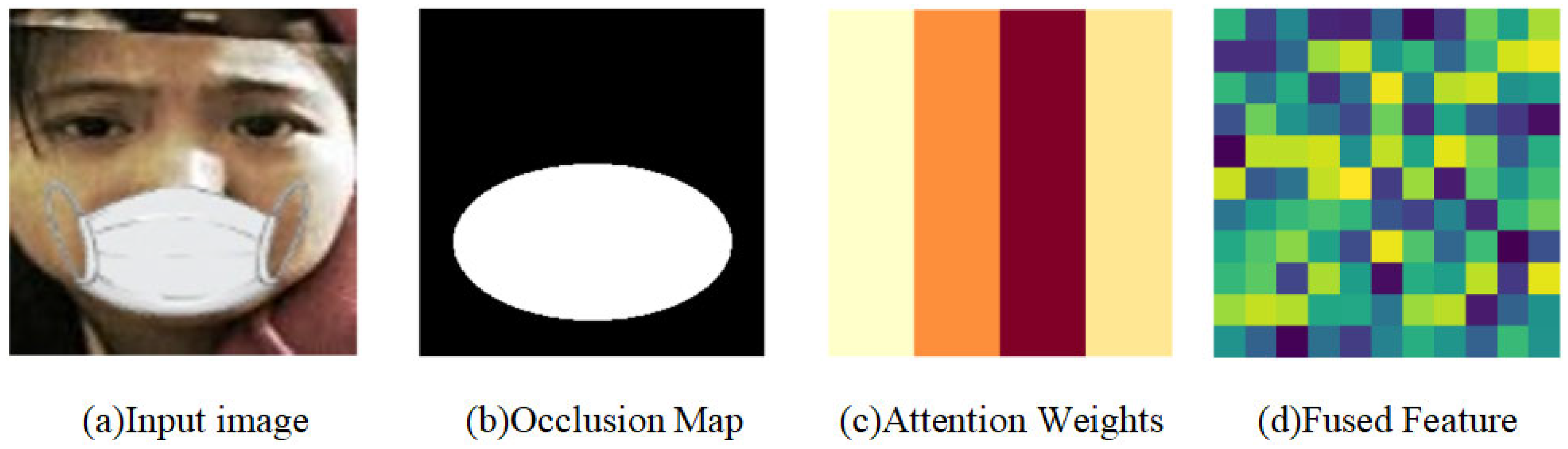

4.6.2. Intermediate Feature Visualization

- (1) Targeted Attention Allocation: The attention map (Figure 6c) shows a clear intensity contrast. Higher weights (darker) concentrate on visible, expression-salient regions such as the eyes and forehead, while occluded regions (e.g., the mouth covered by the mask) are suppressed (lighter).

- (2) Salient Feature Fusion: The fused feature map (Figure 6d) reflects this modulation by the attention weights: strong activations appear mainly over the unoccluded, high-attention regions identified in Figure 6c, while activations over the occluded areas are minimal. This indicates that the model focuses dynamically on reliable visual cues and suppresses noisy information from occluded areas.
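The modulation described above, in which attention weights scale a feature map so that visible regions dominate the fused representation, can be sketched with NumPy. This is a generic spatial-attention illustration, not the EFFM itself: the softmax normalization, the optional occlusion mask used to zero out occluded cells, and all function names are assumptions.

```python
import numpy as np


def spatial_softmax(logits):
    """Normalize an H x W map of attention logits so that the weights sum to 1."""
    z = logits - logits.max()  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()


def attend(features, att_logits, occ_mask=None):
    """Reweight a C x H x W feature map with a spatial attention map.

    occ_mask (optional, H x W, 1 = occluded) zeroes attention on occluded cells and
    renormalizes, standing in for an explicit occlusion prior.
    """
    a = spatial_softmax(att_logits)
    if occ_mask is not None:
        a = a * (1.0 - occ_mask)
        a = a / max(a.sum(), 1e-8)
    fused = features * a[None, :, :]  # broadcast the H x W weights over all channels
    pooled = fused.sum(axis=(1, 2))   # attention-weighted global descriptor of length C
    return a, fused, pooled


# Toy example: uniform logits, lower half of a 2 x 2 map occluded (e.g., by a mask).
a, fused, pooled = attend(np.ones((2, 2, 2)), np.zeros((2, 2)), occ_mask=np.array([[0.0, 0.0], [1.0, 1.0]]))
```

In a learned model the logits come from a small network rather than being fixed; the mask-gating step shows how a parsed occlusion map can force the suppression that Figure 6c exhibits.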

4.7. Additional Analysis on Neutral vs. Sad Confusion

- (1) When a Sad expression was misclassified as Neutral, the model's attention often focused on upper-face regions that appeared neutral rather than on subtle cues that can persist despite the mask (e.g., slight eyebrow lowering, eyelid tension).

- (2) When a Neutral expression was misclassified as Sad, the model sometimes over-interpreted mild upper-face features or the overall context as signs of negative valence.

Potential ways to reduce this confusion include:

- (1) Incorporating finer-grained, attention-guided landmark detection around the eyes and eyebrows.

- (2) Employing contrastive learning to explicitly reduce intra-class variation within Neutral and Sad while increasing the separation between the two classes in the feature space.
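One concrete option for the contrastive direction is the supervised contrastive loss of Khosla et al. (2020), which pulls embeddings that share a label together and pushes embeddings with different labels apart. The NumPy sketch below is an illustrative assumption, not part of the proposed framework; the temperature of 0.1 and the function name are hypothetical choices.

```python
import numpy as np


def supervised_contrastive_loss(z, labels, temperature=0.1):
    """Supervised contrastive loss on a batch of embeddings.

    z: (N, D) embeddings; labels: (N,) integer class ids (e.g., Neutral = 0, Sad = 1).
    """
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # compare in cosine-similarity space
    sim = z @ z.T / temperature
    n = len(labels)
    not_self = ~np.eye(n, dtype=bool)
    sim = sim - sim.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(sim) * not_self               # each anchor is contrasted with all other samples
    log_prob = sim - np.log(exp.sum(axis=1, keepdims=True))
    positives = (labels[:, None] == labels[None, :]) & not_self
    n_pos = positives.sum(axis=1)
    valid = n_pos > 0  # anchors without any positive in the batch are skipped
    mean_log_prob_pos = (log_prob * positives).sum(axis=1)[valid] / n_pos[valid]
    return -mean_log_prob_pos.mean()
```

Added to the classification loss with a small weight, a term like this penalizes Neutral and Sad embeddings that drift into each other's region, which is the separation the second item above calls for.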

4.8. Conclusion of Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Amiri, Z.; Hassanpour, H.; Beghdadi, A. Combining deep features and hand-crafted features for abnormality detection in WCE images. Multimed. Tools Appl. 2024, 83, 5837–5870.

- [2] Khanbebin, S.N.; Mehrdad, V. Improved convolutional neural network-based approach using hand-crafted features for facial expression recognition. Multimed. Tools Appl. 2024, 82, 11489–11505.

- [3] Hu, M.; Wang, H.; Wang, X.; Yang, J.; Wang, R. Video facial emotion recognition based on local enhanced motion history image and CNN-CTS LSTM networks. J. Vis. Commun. Image Represent. 2019, 59, 176–185.

- [4] Duncan, D.; Shine, G.; English, C. Facial emotion recognition in real time. Comput. Sci. 2016, 10, 1–7.

- [5] Jain, N.; Kumar, S.; Kumar, A.; Shamsolmoali, P.; Zareapoor, M. Hybrid deep neural networks for face emotion recognition. Pattern Recognit. Lett. 2018, 115, 101–106.

- [6] Yang, B.; Wu, J.; Ikeda, K.; Hattori, G.; Sugano, M.; Iwasawa, Y.; Matsuo, Y. Face-mask-aware facial expression recognition based on face parsing and vision transformer. Pattern Recognit. Lett. 2022, 164, 173–182.

- [7] Li, Y.; Liu, H.; Liang, J.; Jiang, D. Occlusion-Robust Facial Expression Recognition Based on Multi-Angle Feature Extraction. Appl. Sci. 2025, 15, 5139.

- [8] Kim, J.; Lee, D. Facial Expression Recognition Robust to Occlusion and to Intra-Similarity Problem Using Relevant Subsampling. Sensors 2023, 23, 2619.

- [9] Devasena, G.; Vidhya, V. Twinned attention network for occlusion-aware facial expression recognition. Mach. Vis. Appl. 2025, 36, 23.

- [10] Liang, X.; Xu, L.; Zhang, W.; Zhang, Y.; Liu, J.; Liu, Z. A convolution-transformer dual branch network for head-pose and occlusion facial expression recognition. Vis. Comput. 2023, 39, 2277–2290.

- [11] Li, H.; Sui, M.; Zhao, F.; Zha, Z.; Wu, F. MVT: Mask vision transformer for facial expression recognition in the wild. arXiv 2021, arXiv:2106.04520.

- [12] Zhang, Y.; Li, Z.; Shen, D.; Wang, K.; Li, J.; Xia, C. Information gap based knowledge distillation for occluded facial expression recognition. Image Vis. Comput. 2015, 154, 105365.

- [13] Wang, H.-T.; Lyu, J.-L.; Chien, S.H.-L. Dynamic Emotion Recognition and Expression Imitation in Neurotypical Adults and Their Associations with Autistic Traits. Sensors 2024, 24, 8133.

- [14] Souza, J.M.S.; Alves, C.d.S.M.; Cerqueira, J.d.J.F.; Oliveira, W.L.A.d.; Pires, O.M.; Santos, N.S.B.d.; Wyzykowski, A.B.V.; Pinheiro, O.R.; Almeida Filho, D.G.d.; da Silva, M.O.; et al. Facial Biosignals Time–Series Dataset (FBioT): A Visual–Temporal Facial Expression Recognition (VT-FER) Approach. Electronics 2024, 13, 4867.

- [15] Qi, Y.; Zhuang, L.; Chen, H.; Han, X.; Liang, A. Evaluation of Students’ Learning Engagement in Online Classes Based on Multimodal Vision Perspective. Electronics 2024, 13, 149.

- [16] Zhi, R.; Flierl, M.; Ruan, Q.; Kleijn, W.B. Graph-Preserving Sparse Nonnegative Matrix Factorization with Application to Facial Expression Recognition. IEEE Trans. Syst. Man Cybern. Part B 2010, 41, 38–52.

- [17] Shen, L.; Jin, X. VaBTFER: An Effective Variant Binary Transformer for Facial Expression Recognition. Sensors 2024, 24, 147.

- [18] Xia, B.; Wang, S. Occluded facial expression recognition with stepwise assistance from unpaired non-occluded images. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020; pp. 2927–2935.

- [19] Liu, S.; Agaian, S.; Grigoryan, A. PortraitEmotion3D: A Novel Dataset and 3D Emotion Estimation Method for Artistic Portraiture Analysis. Appl. Sci. 2024, 14, 11235.

- [20] Lu, Y.; Wang, S.; Zhao, W.; Zhao, Y. WGAN-based robust occluded facial expression recognition. IEEE Access 2019, 7, 93594–93610.

- [21] Wright, J.; Yang, A.Y.; Ganesh, A.; Sastry, S.S.; Ma, Y. Robust face recognition via sparse representation. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 31, 210–227.

- [22] Selma, T.; Masud, M.M.; Bentaleb, A.; Harous, S. Inference Analysis of Video Quality of Experience in Relation with Face Emotion, Video Advertisement, and ITU-T P.1203. Technologies 2024, 12, 62.

- [23] Li, Y.; Zeng, J.; Shan, S.; Chen, X. Occlusion aware facial expression recognition using CNN with attention mechanism. IEEE Trans. Image Process. 2018, 28, 2439–2450.

- [24] Wang, K.; Peng, X.; Yang, J.; Meng, D.; Qiao, Y. Region attention networks for pose and occlusion robust facial expression recognition. IEEE Trans. Image Process. 2020, 29, 4057–4069.

- [25] Ding, H.; Zhou, P.; Chellappa, R. Occlusion-Adaptive Deep Network for Robust Facial Expression Recognition. In Proceedings of the 2020 IEEE International Joint Conference on Biometrics (IJCB), Houston, TX, USA, 28 September–1 October 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 1–9.

- [26] Zhu, M.; Shi, D.; Zheng, M.; Sadiq, M. Robust facial landmark detection via occlusion-adaptive deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; IEEE Computer Society: Washington, DC, USA, 2019; pp. 3486–3496.

- [27] Yang, B.; Jianming, W.; Hattori, G. Face mask aware robust facial expression recognition during the COVID-19 pandemic. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 240–244.

- [28] Castellano, G.; De Carolis, B.; Macchiarulo, N. Automatic emotion recognition from facial expressions when wearing a mask. In Proceedings of the 14th Biannual Conference of the Italian SIGCHI Chapter, Bolzano, Italy, 11–13 July 2021; ACM: New York, NY, USA, 2021; pp. 1–5.

- [29] Araluce, J.; Bergasa, L.M.; Gómez-Huélamo, C.; Barea, R.; López-Guillén, E.; Arango, F.; Pérez-Gil, Ó. Integrating OpenFace 2.0 toolkit for driver attention estimation in challenging accidental scenarios. In Proceedings of the Advances in Physical Agents II: Proceedings of the 21st International Workshop of Physical Agents (WAF 2020), Alcalá de Henares, Spain, 19–20 November 2020; Springer: Cham, Switzerland, 2021; pp. 274–288.

- [30] Shaikh, M.A.; Al-Rawashdeh, H.S.; Sait, A.R.W. Deep Learning-Powered Down Syndrome Detection Using Facial Images. Life 2025, 15, 1361.

- [31] Arabian, H.; Abdulbaki Alshirbaji, T.; Chase, J.G.; Moeller, K. Emotion Recognition beyond Pixels: Leveraging Facial Point Landmark Meshes. Appl. Sci. 2024, 14, 3358.

- [32] Zhu, C.; Li, X.; Li, J.; Dai, S. Improving robustness of facial landmark detection by defending against adversarial attacks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; IEEE Computer Society: Washington, DC, USA, 2021; pp. 11751–11760.

- [33] Wang, K.; Peng, X.; Yang, J.; Lu, S.; Qiao, Y. Suppressing uncertainties for large-scale facial expression recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; IEEE Computer Society: Washington, DC, USA, 2020; pp. 6897–6906.

- [34] Ma, F.; Sun, B.; Li, S. Robust facial expression recognition with convolutional visual transformers. arXiv 2021, arXiv:2103.16854.

- [35] Liu, C.; Hirota, K.; Dai, Y. Patch attention convolutional vision transformer for facial expression recognition with occlusion. Inf. Sci. 2023, 619, 781–794.

- [36] Li, S.; Deng, W.; Du, J. Reliable crowdsourcing and deep locality preserving learning for expression recognition in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; IEEE Computer Society: Washington, DC, USA, 2017; pp. 2852–2861.

- [37] Barsoum, E.; Zhang, C.; Ferrer, C.C.; Zhang, Z. Training deep networks for facial expression recognition with crowd-sourced label distribution. In Proceedings of the ACM International Conference on Multimodal Interaction, Tokyo, Japan, 12–16 November 2016; ACM: New York, NY, USA, 2016; pp. 279–283.

- [38] King, D.E. Dlib-ml: A machine learning toolkit. J. Mach. Learn. Res. 2009, 10, 1755–1758.

- [39] Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Proceedings of the Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019; pp. 8024–8035.

- [40] Llugsi, R.; El Yacoubi, S.; Fontaine, A.; Lupera, P. Comparison between Adam, Adamax and AdamW optimizers to implement a weather forecast based on neural networks for the Andean city of Quito. In Proceedings of the 2021 IEEE Fifth Ecuador Technical Chapters Meeting (ETCM), Cuenca, Ecuador, 12–15 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

- [41] McNemar, Q. Note on the sampling error of the difference between correlated proportions or percentages. Psychometrika 1947, 12, 153–157.

- [42] Susmaga, R. Confusion matrix visualization. In Proceedings of the Intelligent Information Processing and Web Mining: Proceedings of the International IIS: IIPWM ’04 Conference, Zakopane, Poland, 17–20 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 107–116.

Ablation study: accuracy with each module removed (√ = included, × = removed).

| Methods | FOPM | EFFM | FERM | RAF-DB | FER+ |
|---|---|---|---|---|---|
| w/o FOPM | × | √ | √ | 88.91% | 87.13% |
| w/o EFFM | √ | × | √ | 90.37% | 89.37% |
| w/o FERM | √ | √ | × | 89.56% | 88.75% |
| Ours | √ | √ | √ | 91.24% | 90.18% |

Table 2. Comparison with state-of-the-art methods on RAF-DB.

| Methods | Year | Accuracy | Precision | Recall | F1-Score | Training Protocol |
|---|---|---|---|---|---|---|
| RAN | 2018 | 86.90% | 86.45% | 86.20% | 86.32% | Retrained on our masked data |
| SCN | 2020 | 87.03% | 86.88% | 86.70% | 86.79% | Retrained on our masked data |
| CVT | 2020 | 88.14% | 87.92% | 87.65% | 87.78% | Retrained on our masked data |
| MVT | 2021 | 88.62% | 88.40% | 88.15% | 88.27% | Retrained on our masked data |
| PACVT | 2023 | 88.21% | 87.95% | 87.73% | 87.84% | Retrained on our masked data |
| Ours | 2025 | 91.24% | 91.05% | 90.88% | 90.96% | Trained on our masked data |

Table 3. Comparison with state-of-the-art methods on FER+.

| Methods | Year | Accuracy | Precision | Recall | F1-Score | Training Protocol |
|---|---|---|---|---|---|---|
| RAN | 2018 | 87.85% | 87.50% | 87.20% | 87.35% | Retrained on our masked data |
| SCN | 2020 | 88.01% | 87.75% | 87.52% | 87.63% | Retrained on our masked data |
| CVT | 2020 | 88.81% | 88.55% | 88.30% | 88.42% | Retrained on our masked data |
| MVT | 2021 | 89.22% | 89.00% | 88.75% | 88.87% | Retrained on our masked data |
| PACVT | 2023 | 88.72% | 88.45% | 88.20% | 88.32% | Retrained on our masked data |
| Ours | 2025 | 90.18% | 89.95% | 89.70% | 89.82% | Trained on our masked data |

Generalization to real-world occlusions (RealMask-FER).

| Methods | Year | Accuracy | Precision | Recall | F1-Score | Training Protocol |
|---|---|---|---|---|---|---|
| MVT | 2021 | 87.92% | 87.65% | 87.40% | 87.52% | Retrained on our masked data |
| PACVT | 2023 | 87.30% | 87.02% | 86.75% | 86.88% | Retrained on our masked data |
| Ours | 2025 | 89.75% | 89.50% | 89.25% | 89.37% | Trained on our masked data |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hou, H.; Sun, X. Joint Learning for Mask-Aware Facial Expression Recognition Based on Exposed Feature Analysis and Occlusion Feature Enhancement. Appl. Sci. 2025, 15, 10433. https://doi.org/10.3390/app151910433

Hou H, Sun X. Joint Learning for Mask-Aware Facial Expression Recognition Based on Exposed Feature Analysis and Occlusion Feature Enhancement. Applied Sciences. 2025; 15(19):10433. https://doi.org/10.3390/app151910433

Chicago/Turabian Style: Hou, Huanyu, and Xiaoming Sun. 2025. "Joint Learning for Mask-Aware Facial Expression Recognition Based on Exposed Feature Analysis and Occlusion Feature Enhancement" Applied Sciences 15, no. 19: 10433. https://doi.org/10.3390/app151910433

APA Style: Hou, H., & Sun, X. (2025). Joint Learning for Mask-Aware Facial Expression Recognition Based on Exposed Feature Analysis and Occlusion Feature Enhancement. Applied Sciences, 15(19), 10433. https://doi.org/10.3390/app151910433