Sustainable Conservation of Embroidery Cultural Heritage: An Approach to Embroidery Fabric Restoration Based on Improved U-Net and Multiscale Discriminators

Abstract

1. Introduction

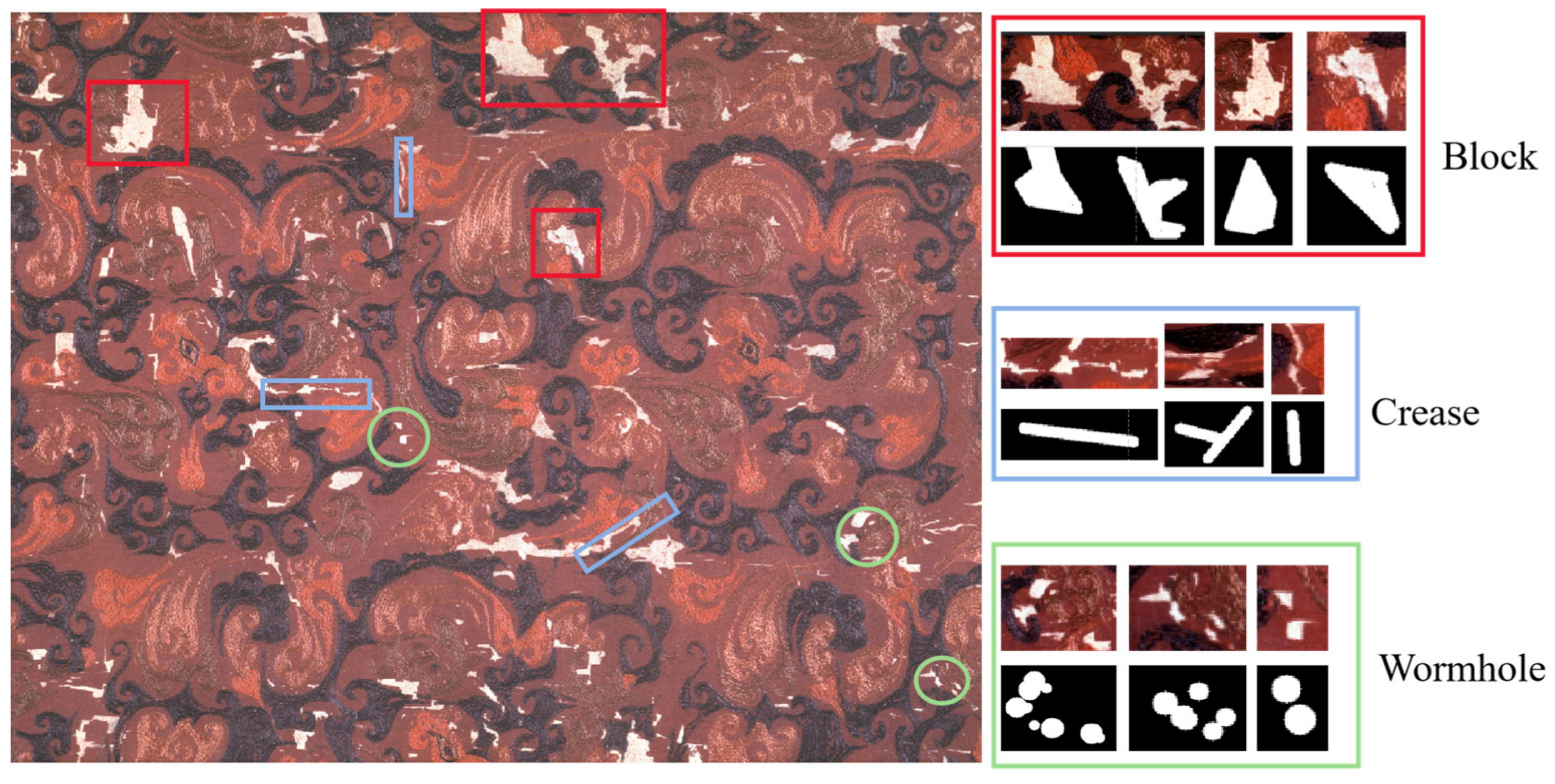

- The proposed approach supports arbitrary combinations of three mask types—wormhole, crease, and block. During training, irregular masks are dynamically combined at random to enhance the model’s robustness in handling diverse real-world damage patterns, thereby preventing overfitting to any specific type of degradation.

- An enhanced generative adversarial network is employed as the restoration model. The generator incorporates a U-Net architecture to mitigate issues arising from the limited sample size in embroidery image data. Through collaborative optimization with a multi-scale discriminator, the model achieves more accurate reconstruction of complex structural and textural details, which is essential for restoring intricate embroidery patterns.

- A hybrid loss function combining adversarial loss, pixel-level L1 loss, perceptual loss, and style loss is introduced for joint optimization. This composite loss is crucial for reconstructing the unique textural patterns and stylistic features of embroidery, encouraging the model to generate visually realistic and detailed images.

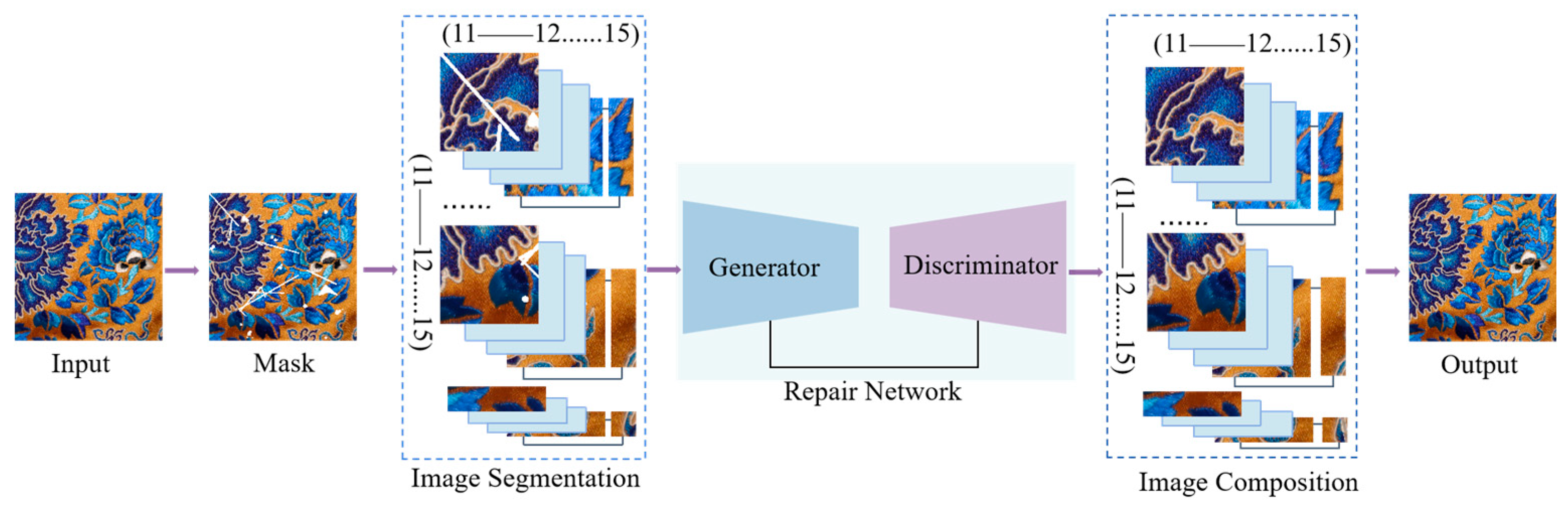

- A complete workflow for embroidery image restoration is designed, covering mask generation, image segmentation, image inpainting, and image recomposition. An eye-tracking experiment is also conducted to quantitatively evaluate the visual authenticity of the restoration results. In the quantitative comparison, the proposed method achieves the highest PSNR (28.64 dB) and SSIM (0.9074) among the compared methods.
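The random combination of wormhole, crease, and block masks described in the first contribution can be illustrated with a minimal sketch. The shapes below (scattered discs, a thin straight line, a rectangle), their size ranges, and all function names are assumptions made for illustration; the section does not specify how each mask type is actually drawn.

```python
import random


def _empty(h, w):
    return [[0] * w for _ in range(h)]


def block_mask(h, w, rng):
    """Assumed shape: one rectangular hole of random size and position."""
    mask = _empty(h, w)
    bh, bw = rng.randint(h // 8, h // 4), rng.randint(w // 8, w // 4)
    top, left = rng.randint(0, h - bh), rng.randint(0, w - bw)
    for y in range(top, top + bh):
        for x in range(left, left + bw):
            mask[y][x] = 1
    return mask


def wormhole_mask(h, w, rng, holes=6):
    """Assumed shape: a few small discs scattered over the image."""
    mask = _empty(h, w)
    for _ in range(holes):
        cy, cx = rng.randrange(h), rng.randrange(w)
        r = rng.randint(2, max(2, min(h, w) // 16))
        for y in range(max(0, cy - r), min(h, cy + r + 1)):
            for x in range(max(0, cx - r), min(w, cx + r + 1)):
                if (y - cy) ** 2 + (x - cx) ** 2 <= r * r:
                    mask[y][x] = 1
    return mask


def crease_mask(h, w, rng, thickness=2):
    """Assumed shape: a thin straight line running from left to right."""
    mask = _empty(h, w)
    y0, y1 = rng.randrange(h), rng.randrange(h)
    for x in range(w):
        y = round(y0 + (y1 - y0) * x / max(1, w - 1))
        for dy in range(-thickness, thickness + 1):
            if 0 <= y + dy < h:
                mask[y + dy][x] = 1
    return mask


GENERATORS = {"wormhole": wormhole_mask, "crease": crease_mask, "block": block_mask}


def random_combined_mask(h=256, w=256, rng=None):
    """Pick a random non-empty subset of mask types and OR them together.

    Returns (mask, chosen) where mask[y][x] == 1 marks a damaged pixel.
    """
    rng = rng or random.Random()
    chosen = rng.sample(sorted(GENERATORS), rng.randint(1, len(GENERATORS)))
    combined = _empty(h, w)
    for name in chosen:
        part = GENERATORS[name](h, w, rng)
        for y in range(h):
            for x in range(w):
                combined[y][x] |= part[y][x]
    return combined, chosen
```

Calling `random_combined_mask()` once per training sample would give each image a different damage pattern, which is the behaviour the contribution describes; a seeded `random.Random` makes the result reproducible.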

2. Methods

2.1. Generative Adversarial Network Architecture

2.2. Generator Structure

2.3. Discriminator Structure

2.4. Loss Function

3. Repair Framework

3.1. Repair Process

3.2. Mask Generation

3.3. Image Segmentation and Combination

3.3.1. Image Segmentation

3.3.2. Image Combination

4. Experiments

4.1. Data Collection and Experimental Setup

4.2. Qualitative Comparison

4.3. Ablation Experiment

4.4. Overall Restoration Effect Evaluation

4.5. Practical Application

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GAN | Generative Adversarial Network |
| SSIM | Structural Similarity Index Measure |
| PSNR | Peak Signal-to-Noise Ratio |
| BSCB | Bertalmio-Sapiro-Caselles-Ballester Model |
| AE | Autoencoder |
| CNN | Convolutional Neural Network |
| CA | Contextual Attention |
| LeNet-5 | LeNet-5 Convolutional Neural Network |
| U-Net | U-Shaped Network |
| GatedConv | Gated Convolution |
| PatchGAN | Patch-based Generative Adversarial Network |
| LeakyReLU | Leaky Rectified Linear Unit |
| VGG16 | Visual Geometry Group 16-layer Convolutional Neural Network |


| Parameter | Default Value | Value for Epoch ≥ 300 |
|---|---|---|
| adv_weight | 1.0 | unchanged |
| pixel_weight | 10.0 | unchanged |
| perceptual_weight | 0.1 | 0.15 |
| style_weight | 100.0 | unchanged |
| optimizer_D.lr | 0.0001 | reduced to 5% of the initial value (0.000005) |
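The schedule in this table can be written down as a short sketch. The weight values and the epoch-300 switch are taken from the table; the function names, the dictionary keys, and the assumption that the hybrid loss is a plain weighted sum of the four terms are illustrative and not confirmed by this section.

```python
def loss_weights(epoch):
    """Return the loss weights listed in the table for a given epoch."""
    weights = {"adv": 1.0, "pixel": 10.0, "perceptual": 0.1, "style": 100.0}
    if epoch >= 300:
        # Only the perceptual weight changes after epoch 300.
        weights["perceptual"] = 0.15
    return weights


def discriminator_lr(epoch, initial_lr=1e-4):
    """Discriminator learning rate: 5% of the initial value from epoch 300."""
    return initial_lr * 0.05 if epoch >= 300 else initial_lr


def total_generator_loss(terms, epoch):
    """Assumed combination: weighted sum of the four loss terms.

    `terms` maps "adv", "pixel", "perceptual" and "style" to scalar losses.
    """
    weights = loss_weights(epoch)
    return sum(weights[name] * terms[name] for name in weights)
```

For example, with all four terms equal to 1.0 the sum is dominated by the style term (weight 100.0), which shows how strongly this configuration weights style relative to the adversarial term.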

| Parameter/Configuration | Details |
|---|---|
| Dataset split | Training: 90%, Testing: 10% |
| Resolution | 256 × 256 |
| Optimizer | Adam |
| β1 | 0.5 |
| β2 | 0.999 |
| Learning rate | Initial: 2 × 10⁻⁴, Discriminator: 1 × 10⁻⁴ |
| Learning rate adjustment | ReduceLROnPlateau (factor = 0.5, patience = 25) |
| Batch size | 16 |
| Experimental Server | |
| CPU | 16 vCPU Intel(R) Xeon(R) Gold 6430 |
| GPU | RTX 4090 (24 GB) |
| Operating System | Ubuntu 20.04 |
| Python Version | 3.8 |
| CUDA Version | 11.4 |
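The learning rate adjustment row can be made concrete with a simplified, framework-free sketch. ReduceLROnPlateau is the name of a scheduler in PyTorch and of a callback in Keras; this section does not state which framework was used, so the class below (`PlateauScheduler`, a hypothetical name) only mimics the core rule with the listed factor and patience. It omits the improvement threshold, cooldown, and minimum learning rate that library implementations provide, and the choice of monitored metric is an assumption.

```python
class PlateauScheduler:
    """Simplified plateau rule: multiply the learning rate by `factor`
    once the monitored loss has failed to improve for more than
    `patience` consecutive epochs."""

    def __init__(self, lr=2e-4, factor=0.5, patience=25):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, metric):
        """Record one epoch's monitored loss and return the current lr."""
        if metric < self.best:
            self.best = metric
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        if self.bad_epochs > self.patience:
            self.lr *= self.factor
            self.bad_epochs = 0
        return self.lr
```

Starting from 2 × 10⁻⁴, a loss that stays flat is tolerated for 25 epochs and the rate is halved on the next one, so a long plateau produces a stepwise decay rather than a single drop.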

| Method | PSNR | SSIM |
|---|---|---|
| CE | 28.33 dB | 0.9001 |
| PConv | 22.74 dB | 0.8495 |
| GConv | 25.11 dB | 0.8822 |
| Ours | 28.64 dB | 0.9074 |
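For readers unfamiliar with the PSNR column, the standard definition is PSNR = 10 · log10(MAX² / MSE), where MSE is the mean squared error between the reference and restored images and MAX is the largest possible pixel value. The sketch below applies that definition to flat lists of pixel values; the function name and the 8-bit default of 255 are assumptions, and the authors' evaluation code may differ, for example in colour handling.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Peak signal-to-noise ratio in dB for two equal-length pixel lists."""
    if not reference or len(reference) != len(restored):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, restored)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because the scale is logarithmic, the 0.31 dB gap between the top two rows corresponds to roughly a 7% lower mean squared error, not a 1% one.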

| Method | PSNR | SSIM |
|---|---|---|
| Group 1 | 27.72 dB | 0.9055 |
| Group 2 | 27.76 dB | 0.9038 |
| Group 3 | 27.94 dB | 0.9043 |
| Complete model | 28.18 dB | 0.9066 |

| Indicator | Average | Standard Deviation | Minimum | Maximum | Threshold |
|---|---|---|---|---|---|
| IoU | 0.040 | 0.020 | 0.015 | 0.062 | <0.30 |
| BAI | 0.086 | 0.027 | 0.057 | 0.116 | <0.20 |
| VSD | 0.889 | 0.038 | 0.832 | 0.930 | <0.10 |
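This section does not define how the indicators are computed. Assuming IoU carries its usual meaning of intersection over union between two binary regions (for instance a thresholded gaze heatmap and the repaired area, which is a guess rather than something stated here), a minimal sketch looks as follows. BAI and VSD are not sketched because their definitions cannot be recovered from this section.

```python
def iou(region_a, region_b):
    """Intersection over union of two binary 2-D grids of equal shape.

    Any truthy cell counts as part of the region. Returns 0.0 when both
    regions are empty.
    """
    a = {(y, x) for y, row in enumerate(region_a) for x, v in enumerate(row) if v}
    b = {(y, x) for y, row in enumerate(region_b) for x, v in enumerate(row) if v}
    union = a | b
    return len(a & b) / len(union) if union else 0.0
```

Under that reading, a value near zero means the two regions barely overlap, while 1.0 means they coincide exactly.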

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Q.; Jiang, C.; Lu, Z.; Liu, X.; Jiang, K.; Liu, F. Sustainable Conservation of Embroidery Cultural Heritage: An Approach to Embroidery Fabric Restoration Based on Improved U-Net and Multiscale Discriminators. Appl. Sci. 2025, 15, 10397. https://doi.org/10.3390/app151910397
