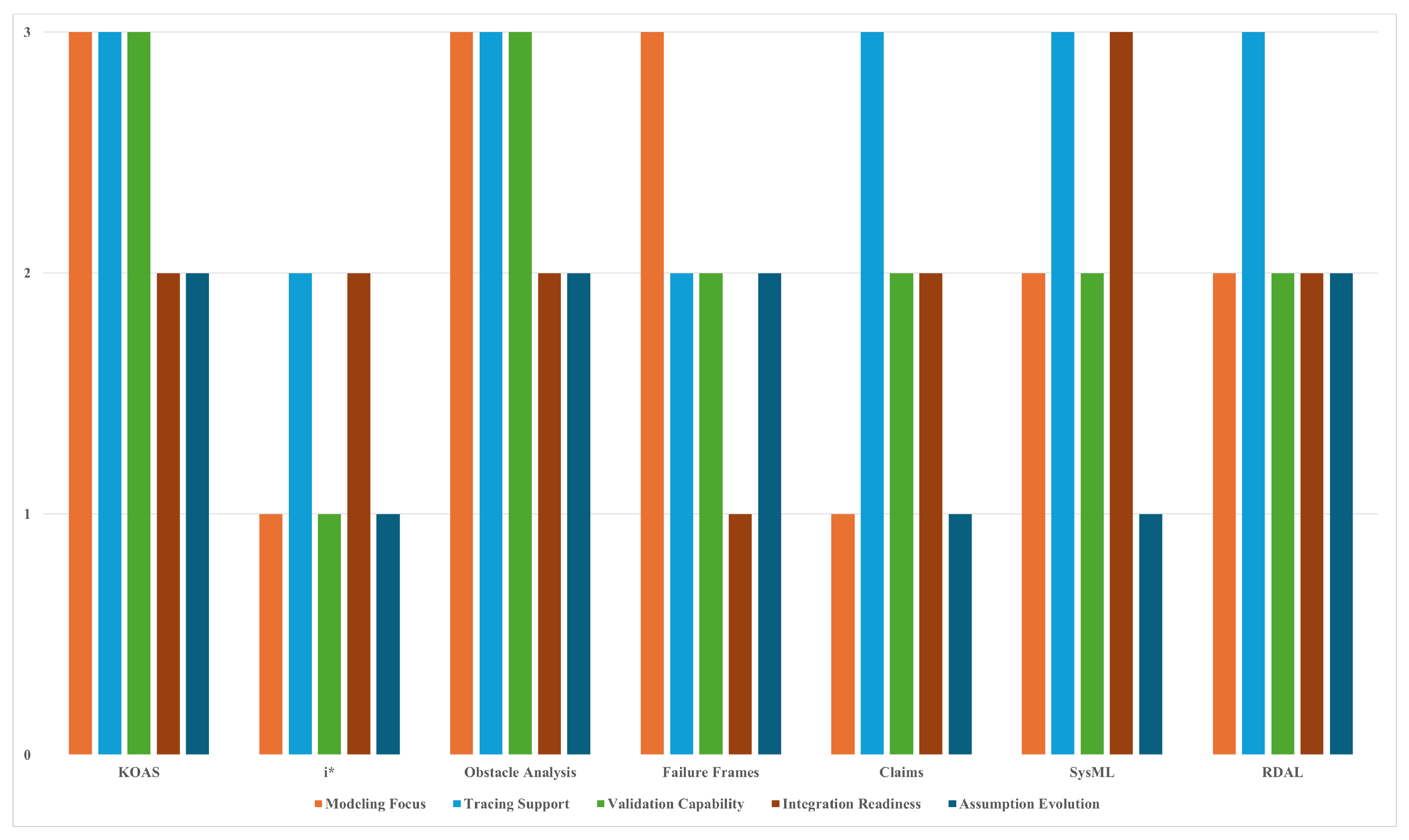

This section presents the results of a comparative evaluation of seven modeling approaches with respect to their support for representing and managing environmental assumptions. The evaluation is structured around the five dimensions introduced earlier (Modeling Focus, Tracing Support, Validation Capability, Integration Readiness, and Support for Assumption Evolution), which are applied consistently across all frameworks using the sUAS case study scenario.

For each framework, we provide a descriptive analysis of how it addresses the five dimensions, supported by evidence from the literature and example models. A scoring scheme on a four-point scale (0 = not supported, 1 = weak, 2 = moderate, 3 = strong) summarizes each framework’s performance. Following the individual evaluations, a consolidated comparative table highlights cross-framework patterns and trade-offs, which are then discussed in a synthesis subsection.
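The scoring scheme can be made concrete with a small Python sketch. It encodes the four-point scale as an ordered enumeration and checks that each framework is rated on exactly the five dimensions. The names `Support`, `DIMENSIONS`, and `score_profile` are hypothetical and do not come from the evaluation itself.

```python
from enum import IntEnum


class Support(IntEnum):
    """Four-point ordinal scale used to rate each dimension."""
    NOT_SUPPORTED = 0
    WEAK = 1
    MODERATE = 2
    STRONG = 3


# The five evaluation dimensions, in the order used in this section.
DIMENSIONS = (
    "Modeling Focus",
    "Tracing Support",
    "Validation Capability",
    "Integration Readiness",
    "Support for Assumption Evolution",
)


def score_profile(ratings: dict[str, int]) -> dict[str, Support]:
    """Check that a framework is rated on exactly the five dimensions and
    convert each raw score to the ordinal scale.

    Raises ValueError for missing or unknown dimensions, and for scores
    outside 0..3 (Support(...) rejects values that are not enum members).
    """
    missing = set(DIMENSIONS) - set(ratings)
    unknown = set(ratings) - set(DIMENSIONS)
    if missing or unknown:
        raise ValueError(f"missing: {sorted(missing)}, unknown: {sorted(unknown)}")
    return {dim: Support(ratings[dim]) for dim in DIMENSIONS}
```

For example, the weak rating that SysML receives for assumption evolution corresponds to `Support.WEAK`. An ordered integer enumeration keeps scores comparable (`Support.STRONG > Support.MODERATE`) while rejecting values outside the scale.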

4.3. Obstacle Analysis

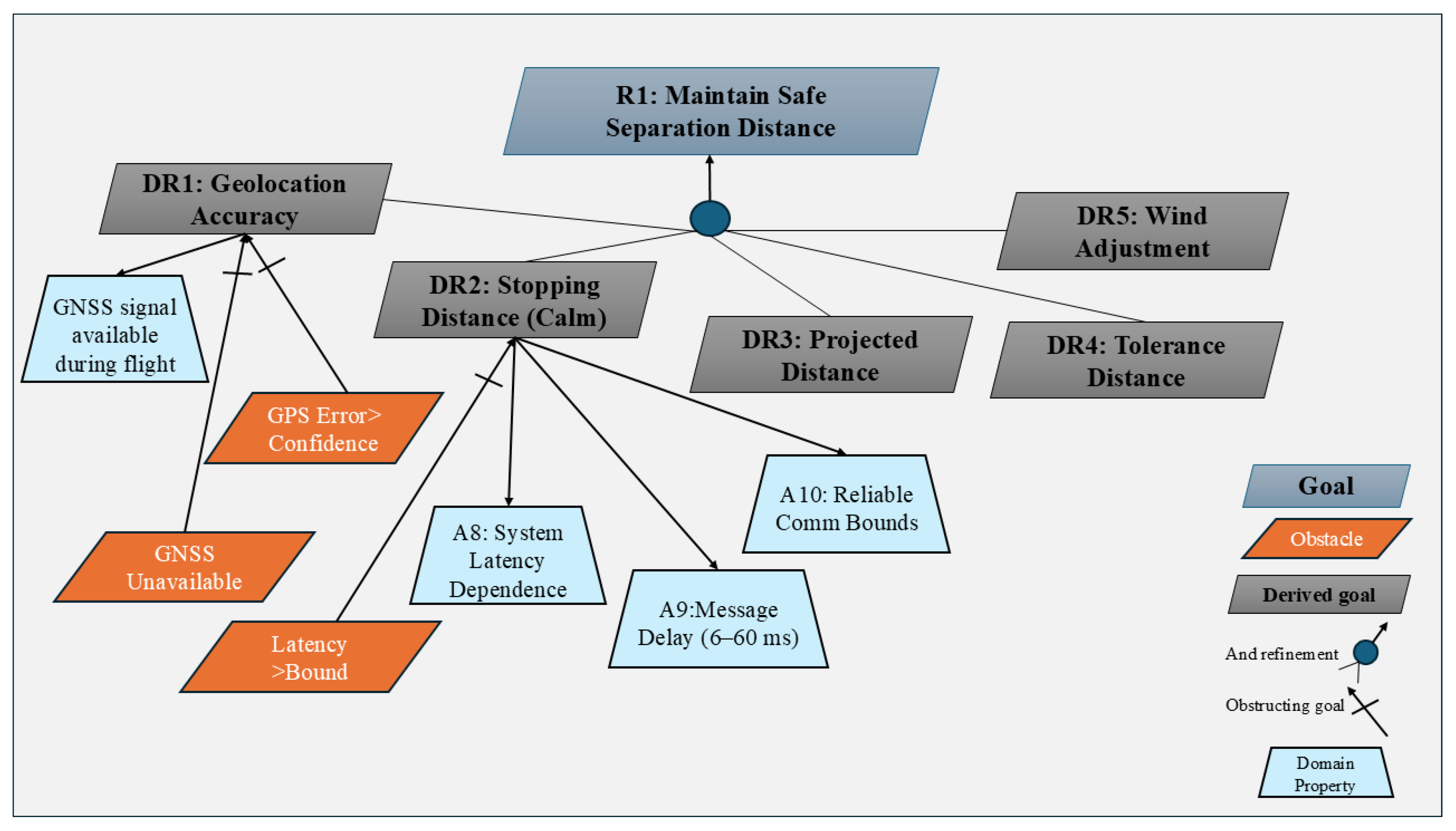

In Obstacle Analysis, environmental assumptions are captured primarily through the identification of obstacles, i.e., conditions that may prevent goals from being satisfied [37]. Assumptions are therefore modeled negatively, in terms of their possible violations, rather than as explicit positive invariants. In the sUAS scenario, for example, the assumption “GNSS signal is available during flight” would be reflected indirectly through the obstacle “Severe ionospheric disturbance blocks GNSS signal.” This modeling focus provides a systematic means of identifying vulnerabilities in goals but makes it less straightforward to represent assumptions that hold under normal conditions.
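The negative encoding can be stated in linear temporal logic. The following is a sketch using the usual obstruction and domain-consistency conditions from goal-oriented obstacle analysis; the predicates Flying and GNSSAvailable are illustrative assumptions rather than definitions from the cited work. Here the obstacle O is exactly the negation of the assumption A, and a more specific obstacle such as the ionospheric disturbance above would be one cause that entails O.

```latex
\begin{align*}
A &\triangleq \Box\,(\mathit{Flying} \rightarrow \mathit{GNSSAvailable})
  && \text{assumption (positive invariant)}\\
O &\triangleq \Diamond\,(\mathit{Flying} \wedge \neg\mathit{GNSSAvailable})
  && \text{obstacle (negative form)}\\
O &\equiv \neg A
  && \text{since } \neg\Box(p \rightarrow q) \equiv \Diamond(p \wedge \neg q)\\
\{O, \mathit{Dom}\} &\models \neg A, \qquad \mathit{Dom} \not\models \neg O
  && \text{obstruction and domain consistency}
\end{align*}
```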

Tracing support in Obstacle Analysis is strong, as obstacles are explicitly linked to the goals they obstruct, and their propagation through goal refinement structures can be analyzed systematically [41]. This enables clear impact analysis when assumptions fail, since every obstacle can be traced to the goals and requirements it threatens. Validation capability is also a key strength: obstacles can be formalized and analyzed through temporal logic, theorem proving, and model checking, allowing rigorous reasoning about system robustness under assumption failures [53]. However, the detailed formalization this requires can entail high modeling effort.
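The upward propagation used in such impact analysis can be sketched as a breadth-first walk over the goal refinement structure. In this Python sketch the goal names, the obstruction link, and the dictionaries `PARENTS` and `OBSTRUCTS` are hypothetical. The sketch also ignores OR-refinements, so it reports every ancestor goal as potentially threatened, which over-approximates the impact.

```python
from collections import deque

# Hypothetical sUAS goal model: each goal lists the parent goal(s) it
# contributes to in the refinement structure.
PARENTS: dict[str, list[str]] = {
    "Maintain[PositionKnown]": ["Achieve[WaypointReached]", "Avoid[GeofenceBreach]"],
    "Achieve[WaypointReached]": ["Achieve[MissionCompleted]"],
    "Avoid[GeofenceBreach]": ["Maintain[SafeFlight]"],
    "Achieve[MissionCompleted]": [],
    "Maintain[SafeFlight]": [],
}

# Hypothetical obstruction links: obstacle -> goals it directly obstructs.
OBSTRUCTS: dict[str, list[str]] = {
    "SevereIonosphericDisturbance": ["Maintain[PositionKnown]"],
}


def threatened_goals(obstacle: str) -> list[str]:
    """Breadth-first walk up the refinement structure from the obstructed goals.

    Conservative: every ancestor is reported, even where an OR-refinement
    might offer an alternative way to satisfy it.
    """
    frontier = deque(OBSTRUCTS.get(obstacle, []))
    seen: set[str] = set()
    while frontier:
        goal = frontier.popleft()
        if goal in seen:
            continue
        seen.add(goal)
        frontier.extend(PARENTS.get(goal, []))
    return sorted(seen)
```

Calling `threatened_goals("SevereIonosphericDisturbance")` returns all five goals in the hypothetical model, because the obstructed navigation goal feeds both the mission and the safety branches.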

Integration readiness is limited, as Obstacle Analysis has been mainly supported through research prototypes and extensions of KAOS-based tools such as Objectiver [48]. Interoperability with MBSE toolchains remains underdeveloped, making it challenging to connect obstacle models directly with system architectures or simulation environments. With respect to assumption evolution, Obstacle Analysis is primarily reactive: new or changing assumptions are handled by introducing corresponding obstacles and adapting the mitigation strategies linked to them [41]. While this provides a systematic process for dealing with assumption violations at design time, there is no built-in support for runtime monitoring or automated evolution. A summary of the scores and justifications for Obstacle Analysis across the five evaluation dimensions is presented in Table 4.

4.4. Failure Frames

In the Failure Frames approach, environmental assumptions are modeled explicitly through failure conditions that describe ways in which the system’s operating context may deviate from expectations [54,55]. Unlike Obstacle Analysis, which models the violation of goals, failure frames emphasize the interaction between assumptions about the environment and the system’s responsibilities, framing requirements in terms of how the system should respond to environmental failures. In the sUAS scenario, for example, the violation of the assumption “GNSS signal is available during flight” can be expressed as a failure frame that requires the system to adopt fallback behavior such as switching to inertial navigation.
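The required response in this failure frame can be sketched as a guard that checks the environmental assumption and selects a fallback when it fails. The Python sketch below is illustrative only: `GnssStatus`, `select_nav_source`, and the four-satellite threshold are assumptions, not part of the Failure Frames method or of any flight software.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class NavSource(Enum):
    GNSS = "gnss"
    INERTIAL = "inertial"


@dataclass
class GnssStatus:
    has_fix: bool
    satellites: int


# Hypothetical threshold: four satellites are the minimum for a 3D fix.
MIN_SATELLITES = 4


def gnss_assumption_holds(status: Optional[GnssStatus]) -> bool:
    """Environmental assumption: GNSS signal is available during flight."""
    return status is not None and status.has_fix and status.satellites >= MIN_SATELLITES


def select_nav_source(status: Optional[GnssStatus]) -> NavSource:
    """Required system response when the assumption is violated:
    fall back to inertial navigation."""
    return NavSource.GNSS if gnss_assumption_holds(status) else NavSource.INERTIAL
```

A missing status (`None`) is treated the same as a lost fix, so the fallback is selected whenever the assumption cannot be confirmed.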

The modeling focus is strong, as failure frames make assumptions explicit and systematically associate them with specific failure classes (e.g., omission, commission, timing, and value failures). This classification provides a structured way to anticipate assumption breakdowns and ensures that environmental conditions are consistently represented. Tracing support is also strong, since failure frames connect environmental assumptions directly to goals, requirements, and mitigation behaviors, allowing systematic analysis of the impact of assumption violations. Validation capability is rated as moderate: while failure frames enable structured reasoning about the completeness of failure handling, their lack of formal semantics and automated verification limits them compared to approaches like KAOS or Obstacle Analysis. As such, validation relies on qualitative argumentation and systematic coverage checks rather than model checking.
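Because failure frames pair each assumption with a fixed set of failure classes, the systematic coverage check mentioned above can be mechanized as a simple cross-product. This Python sketch assumes a hypothetical table `HANDLED` that maps (assumption, failure class) pairs to handling requirements; it reports the pairs for which no handling has been specified yet. It is not a feature of any Failure Frames tool.

```python
from itertools import product

# Failure classes named in the text.
FAILURE_CLASSES = ("omission", "commission", "timing", "value")

# Hypothetical environmental assumptions from the sUAS scenario.
ASSUMPTIONS = (
    "GNSS signal is available during flight",
    "Ambient temperature remains below 35 °C",
)

# Hypothetical handling requirements, keyed by (assumption, failure class).
HANDLED: dict[tuple[str, str], str] = {
    ("GNSS signal is available during flight", "omission"): "Switch to inertial navigation",
    ("GNSS signal is available during flight", "value"): "Cross-check GNSS position against inertial estimate",
}


def coverage_gaps(
    assumptions: tuple[str, ...], handled: dict[tuple[str, str], str]
) -> list[tuple[str, str]]:
    """Return every (assumption, failure class) pair with no handling requirement."""
    return [pair for pair in product(assumptions, FAILURE_CLASSES) if pair not in handled]
```

Here the GNSS assumption still lacks handling for commission and timing failures, and the temperature assumption has none at all, so `coverage_gaps(ASSUMPTIONS, HANDLED)` reports six gaps.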

Integration readiness is limited. Failure frames have been demonstrated in safety-critical domains such as avionics, but tool support is ad hoc and not well integrated into MBSE toolchains. Finally, support for assumption evolution is largely reactive: analysts can update or extend failure frames when new assumptions or failure modes are identified, but the method does not provide mechanisms for runtime monitoring or automated updates. The evaluation scores and justifications for Failure Frames across the five comparison dimensions are summarized in Table 5.

4.5. Claims

The Claims approach models environmental assumptions as part of an argumentation structure that justifies specific system properties [14]. Claims are supported by evidence and connected to underlying assumptions that must hold for the claim to remain valid.

In the sUAS scenario, an assurance case might include the following elements:

Claim: “The sUAS battery will not overheat during the mission.”

Assumption (context): “Ambient temperature remains below 35 °C.”

Argument: “Battery safety depends on thermal margins tested under operational conditions.”

Evidence: “Laboratory test data demonstrating battery performance below 35 °C.”

Here, the environmental assumption about ambient temperature is treated as a contextual condition that underpins the claim. If the assumption fails (e.g., during a heatwave), the validity of the claim may be undermined. Unlike Failure Frames, which categorize failures explicitly, Claims analysis emphasizes reasoning about why a requirement is believed to be satisfied and recording the assumptions that underpin this reasoning.
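The dependency between the claim and its context assumption can be sketched as a small data structure that reports which assumptions fail under observed conditions. The Python classes and the condition key `ambient_temp_c` are hypothetical; this is neither GSN notation nor the interface of any assurance-case tool, and such a check is not part of the Claims approach itself, which relies on manual revision.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ContextAssumption:
    text: str
    # Predicate over observed operating conditions.
    holds: Callable[[dict[str, float]], bool]


@dataclass
class Claim:
    text: str
    argument: str
    evidence: list[str]
    assumptions: list[ContextAssumption] = field(default_factory=list)

    def undermined_by(self, conditions: dict[str, float]) -> list[str]:
        """Return the context assumptions that fail under `conditions`."""
        return [a.text for a in self.assumptions if not a.holds(conditions)]


battery_claim = Claim(
    text="The sUAS battery will not overheat during the mission.",
    argument="Battery safety depends on thermal margins tested under operational conditions.",
    evidence=["Laboratory test data demonstrating battery performance below 35 °C."],
    assumptions=[
        ContextAssumption(
            text="Ambient temperature remains below 35 °C.",
            # Hypothetical condition key; exactly 35 °C already violates "below 35 °C".
            holds=lambda c: c["ambient_temp_c"] < 35.0,
        )
    ],
)
```

During a heatwave, for instance, `battery_claim.undermined_by({"ambient_temp_c": 38.5})` returns the temperature assumption, signaling that the argument no longer holds as written.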

Table 6 presents the evaluation of Claims against the five comparison dimensions. This approach scores moderately on modeling focus, as assumptions are captured as contextual warrants rather than as first-class modeling constructs. Tracing support is strong, as claims, arguments, evidence, and their assumptions are systematically linked. Validation capability is moderate: argument structures enable qualitative validation, but formal rigor depends on integration with external verification methods. Integration readiness is also moderate: while assurance case tools such as SEURAT and ASCE provide strong support within safety analysis and certification, integration with broader MBSE workflows remains limited. Support for assumption evolution is weak, as there are no built-in mechanisms for updating or versioning assumptions when environmental conditions change. Updates are possible only through manual revisions to the assurance case.

4.6. SysML

In SysML, environmental assumptions are not modeled as first-class constructs but are usually represented informally in requirement diagrams, parametric constraints, or textual annotations [15,44,56]. The modeling focus is therefore only moderate, since assumptions must be represented indirectly, e.g., via requirements, constraint blocks, or annotations. While this is less explicit than in goal-oriented approaches, SysML’s extensibility through stereotypes and profiles still allows assumptions to be captured systematically. In the sUAS scenario, for example, the assumption “GNSS signal is available during flight” may appear as a requirement note or a constraint on navigation accuracy, but SysML lacks a dedicated construct for differentiating assumptions from requirements or design specifications.

Tracing support in SysML is moderate, as requirement diagrams, allocation relationships, and parametric links allow some connections to be established between assumptions, requirements, and system elements. However, because assumptions are not explicit, traceability requires disciplined modeling practices and often depends on conventions or extensions defined by the project team. Validation capability is also moderate: parametric diagrams can support consistency checking and integration with simulation tools, but formal semantics for verifying environmental assumptions are limited [15]. This restricts the depth of reasoning compared to goal-oriented approaches.
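The disciplined convention described above can be enforced with a simple check over exported model data. This Python sketch assumes a user-defined «assumption» stereotype and a hypothetical export format of named elements and trace links; it is not the API of Cameo Systems Modeler, IBM Rhapsody, or any other SysML tool. It lists assumptions that are not linked to any «requirement» element.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    name: str
    stereotypes: frozenset[str]


@dataclass(frozen=True)
class Trace:
    source: str
    target: str
    kind: str  # e.g. "trace", "refine", "deriveReqt"


def untraced_assumptions(elements: list[Element], traces: list[Trace]) -> list[str]:
    """Names of elements stereotyped «assumption» that have no link, in
    either direction, to an element stereotyped «requirement»."""
    stereo = {e.name: e.stereotypes for e in elements}
    linked: set[str] = set()
    for t in traces:
        for a, b in ((t.source, t.target), (t.target, t.source)):
            if "assumption" in stereo.get(a, frozenset()) and "requirement" in stereo.get(b, frozenset()):
                linked.add(a)
    return sorted(n for n, s in stereo.items() if "assumption" in s and n not in linked)


# Hypothetical sUAS model fragment.
model = [
    Element("GNSSAvailable", frozenset({"assumption"})),
    Element("AmbientTempBelow35C", frozenset({"assumption"})),
    Element("NavigationAccuracy", frozenset({"requirement"})),
]
links = [Trace("NavigationAccuracy", "GNSSAvailable", "trace")]
```

With this fragment, `untraced_assumptions(model, links)` flags only `AmbientTempBelow35C`, the assumption that no requirement refers to.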

SysML scores higher on integration readiness. It is natively aligned with MBSE practices, widely supported by industrial tools such as Cameo Systems Modeler and IBM Rhapsody, and can interoperate with requirement management (DOORS), simulation (Simulink), and safety analysis environments [57]. However, support for assumption evolution is weak. While assumptions can be updated through model edits or requirement changes, SysML does not offer systematic mechanisms for proactive monitoring or automated propagation of assumption changes across models. The evaluation scores and justifications for SysML across the five dimensions are summarized in Table 7.

4.7. RDAL

RDAL was introduced to strengthen requirements specification in MBSE environments by providing a structured language for defining requirements and linking them to system models [16]. Environmental assumptions are expressed as requirements, constraints, or contextual conditions but are not first-class constructs within the language. In the sUAS scenario, for example, an assumption and its associated requirement could be specified in RDAL as follows:

This illustrates how RDAL allows engineers to capture assumptions in requirement form, link them to functional requirements, and enrich them with annotations such as risk or confidence levels. These extensions make assumptions more visible and analyzable, though they remain embedded in requirement constructs rather than being treated as first-class entities.
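The structure described above, an assumption written in requirement form, linked to a functional requirement and annotated with risk and confidence, can be mirrored in a short Python sketch. This is not RDAL syntax; the `Requirement` class, the `Level` scale, and the rule that flags high-risk or low-confidence assumptions are hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Level(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Requirement:
    ident: str
    text: str
    is_assumption: bool = False
    risk: Optional[Level] = None
    confidence: Optional[Level] = None
    depends_on: list[str] = field(default_factory=list)


def fragile_dependencies(reqs: list[Requirement]) -> list[tuple[str, str]]:
    """(requirement, assumption) pairs where a functional requirement depends
    on an assumption annotated as high-risk or low-confidence."""
    by_id = {r.ident: r for r in reqs}
    pairs = []
    for r in reqs:
        if r.is_assumption:
            continue
        for dep in r.depends_on:
            a = by_id.get(dep)
            if a is not None and a.is_assumption and (
                a.risk is Level.HIGH or a.confidence is Level.LOW
            ):
                pairs.append((r.ident, a.ident))
    return pairs


spec = [
    Requirement("A1", "GNSS signal is available during flight.",
                is_assumption=True, risk=Level.HIGH, confidence=Level.MEDIUM),
    Requirement("R1", "The sUAS shall navigate to each mission waypoint.",
                depends_on=["A1"]),
]
```

Because assumptions share the requirement type and differ only by the `is_assumption` flag, the sketch also reflects the limitation noted in the text: the distinction rests on an annotation rather than a first-class construct.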

The modeling focus is therefore moderate, as RDAL allows explicit documentation of assumptions through requirement statements but lacks native constructs to distinguish them from system requirements. Tracing support is stronger: RDAL enables links between requirements, system model elements, and verification activities, so assumptions expressed as requirements can be systematically connected to design artifacts and test cases. Validation capability is also moderate: RDAL specifications can be linked to verification methods, enabling checks against system behavior, but the language does not provide formal semantics for reasoning about assumptions.

Integration readiness is one of RDAL’s strengths, since it was designed as an Eclipse-based profile and integrates with SysML and MBSE toolchains. This makes it possible to manage assumptions alongside requirements, architectures, and simulations within a consistent environment. With respect to assumption evolution, RDAL supports traceable updates when requirements change, but this process is reactive and design-time only. There is no explicit support for runtime monitoring of assumptions or proactive adaptation when assumptions evolve. The evaluation scores and justifications for RDAL across the five comparison dimensions are presented in Table 8.

Table 9 provides a comparative overview of how each of the seven modeling approaches represents environmental assumptions in practice. While all frameworks include some mechanism to encode assumptions, they differ significantly in modeling constructs, emphasis, and explicitness. For example, KAOS and Obstacle Analysis support systematic treatment of environmental constraints through domain properties and obstacle conditions, respectively, and provide structured reasoning about their potential violations. In contrast, frameworks like i* and Claims capture assumptions more implicitly, often through actor dependencies or justificatory elements in argumentation models, with validation relying heavily on external evidence or tools. SysML provides moderate support for explicit representation by allowing assumptions to be defined through user-defined stereotypes or profile extensions within standard requirement constructs, while RDAL offers dedicated modeling elements with targeted annotations that link assumptions directly to requirements and risks, and allow metadata such as confidence levels to be specified. At a glance, KAOS and Obstacle Analysis provide the strongest support for explicit assumption modeling, i* and Claims remain weakest, and RDAL and SysML occupy a middle ground. Their relative positioning, however, shifts once additional dimensions such as traceability, validation, integration, and assumption evolution are considered.

Table 10 and Figure 2 synthesize the evaluation results across the seven frameworks. To complement this comparison, Figure 3 provides a radar-style visualization of the same results, where each axis corresponds to an evaluation dimension and each polygon represents a framework. As an illustration of how the scoring was applied, SysML was assigned a score of 1 for assumption evolution because, while assumptions can be captured via parametric constraints and analyzed in simulations, explicit constructs for runtime adaptation are absent.
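Each polygon in such a radar chart is obtained by placing one score per dimension on equally spaced axes. The Python sketch below computes the polygon vertices for one framework on the 0 to 3 scale; the function name `radar_vertices` is hypothetical, it assumes no particular plotting library, and it encodes no scores from the evaluation.

```python
import math


def radar_vertices(scores: list[int], max_score: int = 3) -> list[tuple[float, float]]:
    """Map one framework's scores (one per dimension) to the vertices of its
    radar polygon. Axis 0 points straight up; the remaining axes follow
    clockwise at equal angles; a score of max_score lies on the unit circle."""
    n = len(scores)
    vertices = []
    for i, s in enumerate(scores):
        if not 0 <= s <= max_score:
            raise ValueError(f"score {s} outside 0..{max_score}")
        angle = math.pi / 2 - 2 * math.pi * i / n
        r = s / max_score
        vertices.append((r * math.cos(angle), r * math.sin(angle)))
    return vertices
```

For example, `radar_vertices([3, 0, 0, 0, 0])` places the first vertex at approximately (0, 1) and collapses the other four to the origin, whereas uniform strong scores yield a regular pentagon on the unit circle.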

KAOS, Obstacle Analysis, and RDAL emerge as the strongest overall, though with different emphases. KAOS integrates assumptions as domain properties and obstacles, structurally tied to goals and agents. Its formal semantics enable rigorous validation, and its traceability to requirements is strong, although integration into MBSE workflows remains limited. Obstacle Analysis supports explicit modeling of conditions that obstruct goals and provides systematic resolution tactics, but its integration with broader toolchains and lifecycle support is weaker. RDAL provides native constructs for assumptions, complete with metadata such as risk and confidence levels, and explicit links to requirements and rationale. It also supports structured updating and versioning of assumptions, though this remains largely manual, and its industrial adoption and toolchain integration are weaker than those of SysML.

SysML scores highest in integration readiness, reflecting its widespread use in MBSE toolchains. Assumptions can be captured through stereotypes, tagged values, and parametric constraints, enabling traceability across requirements and design. However, effective validation typically requires significant customization or external analysis tools, and its evolution support is weak. Failure Frames emphasize distinguishing between system and environmental assumption violations, providing structured within-frame traceability and qualitative validation support, but they remain poorly integrated with mainstream MBSE environments. The i* framework and the Claims approach provide the weakest support for explicit assumption handling. i* embeds assumptions indirectly in actor dependencies and softgoals, offering moderate traceability but little validation or evolution support. Claims frameworks capture assumptions as contextual warrants in assurance arguments, achieving strong traceability across claims, arguments, and evidence, but offering only qualitative validation and minimal evolution support.

Overall, no single framework excels across all dimensions. KAOS, Obstacle Analysis, and RDAL provide the most comprehensive modeling and analysis capabilities, SysML offers the strongest MBSE integration, while i* and Claims remain limited in explicitness but valuable for early-phase socio-technical analysis and assurance reasoning. These findings suggest that combining complementary frameworks or extending existing ones may be necessary to ensure assumptions remain visible, analyzable, and evolvable in safety-critical systems.

The comparative results summarized in Table 10 and visualized in Figure 3 reveal a diverse landscape of strengths and weaknesses across the seven modeling frameworks. KAOS and Obstacle Analysis provide the strongest explicit constructs and systematic reasoning for handling environmental assumptions, while RDAL offers dedicated modeling elements with traceability and metadata but has less industrial adoption and weaker support for evolution. By contrast, i* and Claims handle assumptions more implicitly and provide limited support for validation and lifecycle management. SysML stands out in integration readiness, reflecting its central role in MBSE, and can capture assumptions through stereotypes or profiles, though explicit representation and evolution support remain limited without extensions. These findings suggest that framework selection should consider both the criticality of environmental assumptions and the engineering context. In particular, safety-critical domains may benefit from combining the explicit modeling strengths of goal- or requirements-oriented frameworks with the integration advantages of SysML.