Dual-Stream Transformer with LLM-Empowered Symbol Drift Modeling for Health Misinformation Detection

Abstract

1. Introduction

- Construction of a Transformer-based sequential modeling network for click behavior to simulate the evolution of user interests and content click dynamics.

- Design of a multimodal propagation potential perception module that integrates text, image, audio, and user bullet comment information to enhance content-level propagation potential assessment.

- Joint incorporation of propagation potential factors and click sequence features to optimize recommendation ranking, achieving precise recommendations driven by both interest and popularity.

2. Related Work

2.1. CTR Prediction and Temporal Modeling Methods

2.2. Multimodal Content Understanding and Propagation Modeling

2.3. Content Popularity and Propagation Potential Modeling in Recommendation Systems

3. Materials and Method

3.1. Data Collection

3.2. Data Preprocessing and Data Augmentation

3.3. Proposed Method

3.3.1. Overall

3.3.2. Symbol-Sensitive Lexicon and Drift Score Extraction

3.3.3. Symbol-Aware Text GNN

3.3.4. Multimodal Fusion Module for Image–Text Alignment

4. Results and Discussion

4.1. Experimental Setup and Evaluation Metrics

4.1.1. Evaluation Metrics

4.1.2. Baseline Models

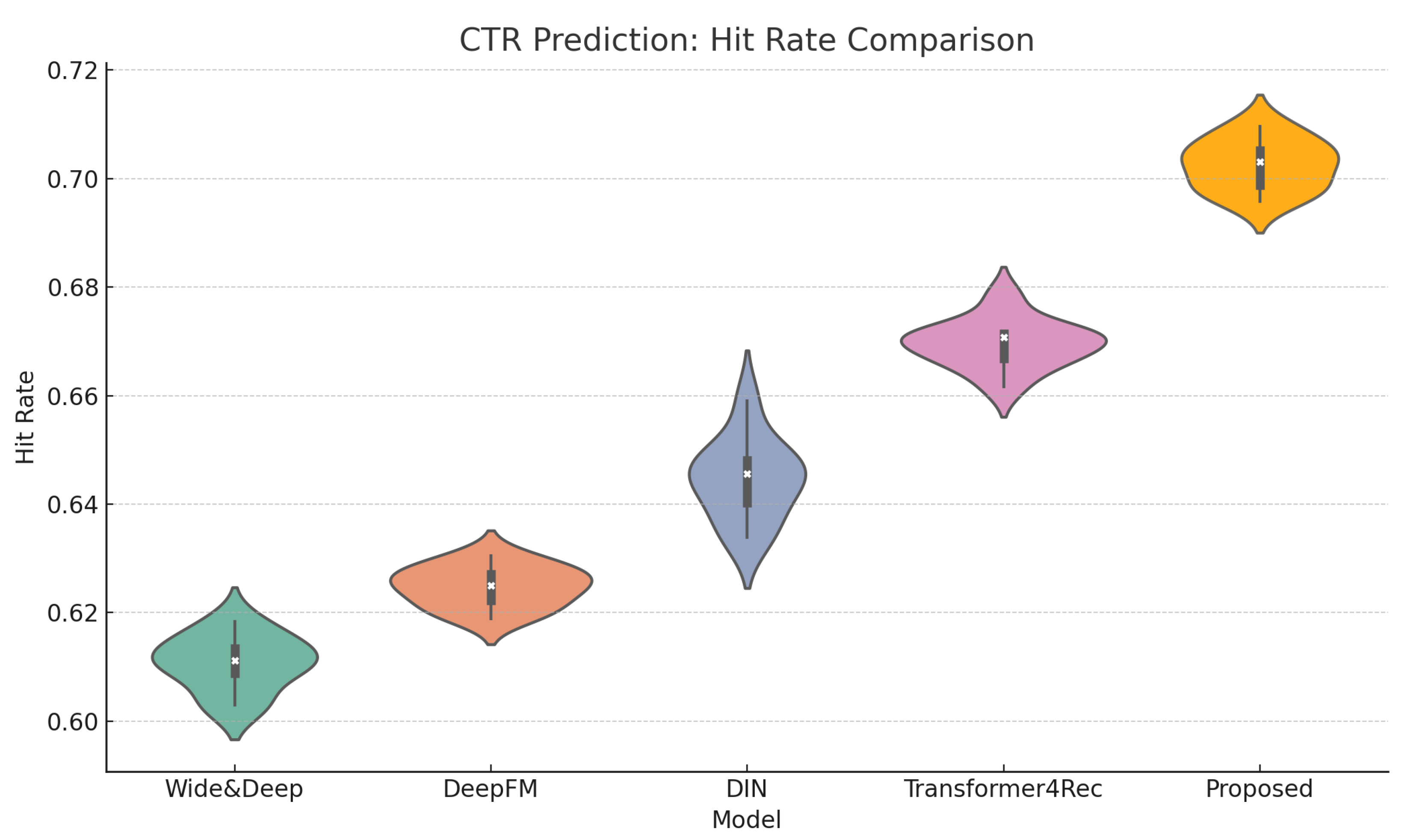

4.2. CTR Prediction Baselines vs. Proposed Model

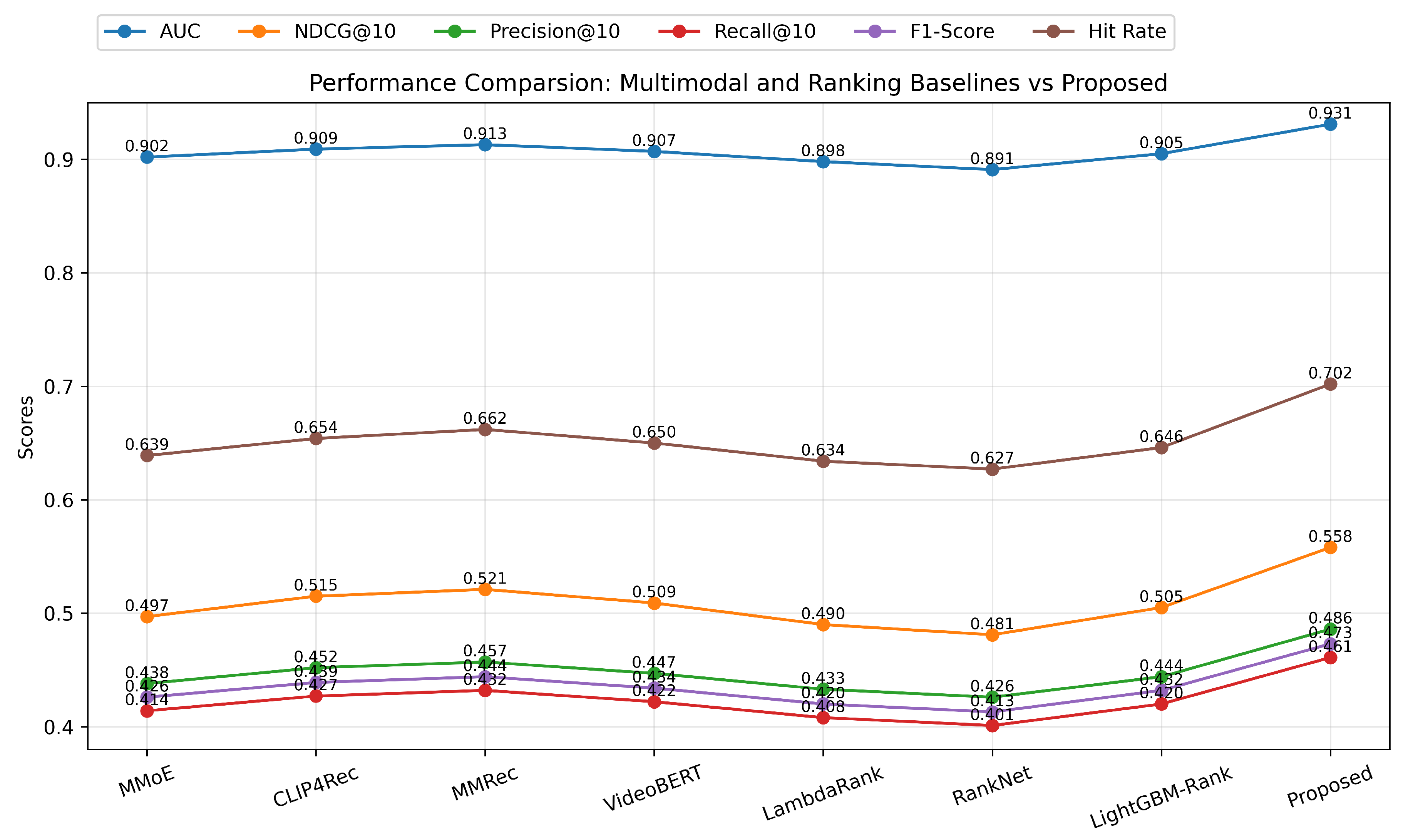

4.3. Multimodal and Ranking Baselines vs. Proposed Model

4.4. Comparison of Ranking-Based Baselines and the Proposed Framework

4.5. Ablation Study of the Proposed Framework

4.6. Sensitivity Analysis of Key Hyperparameters

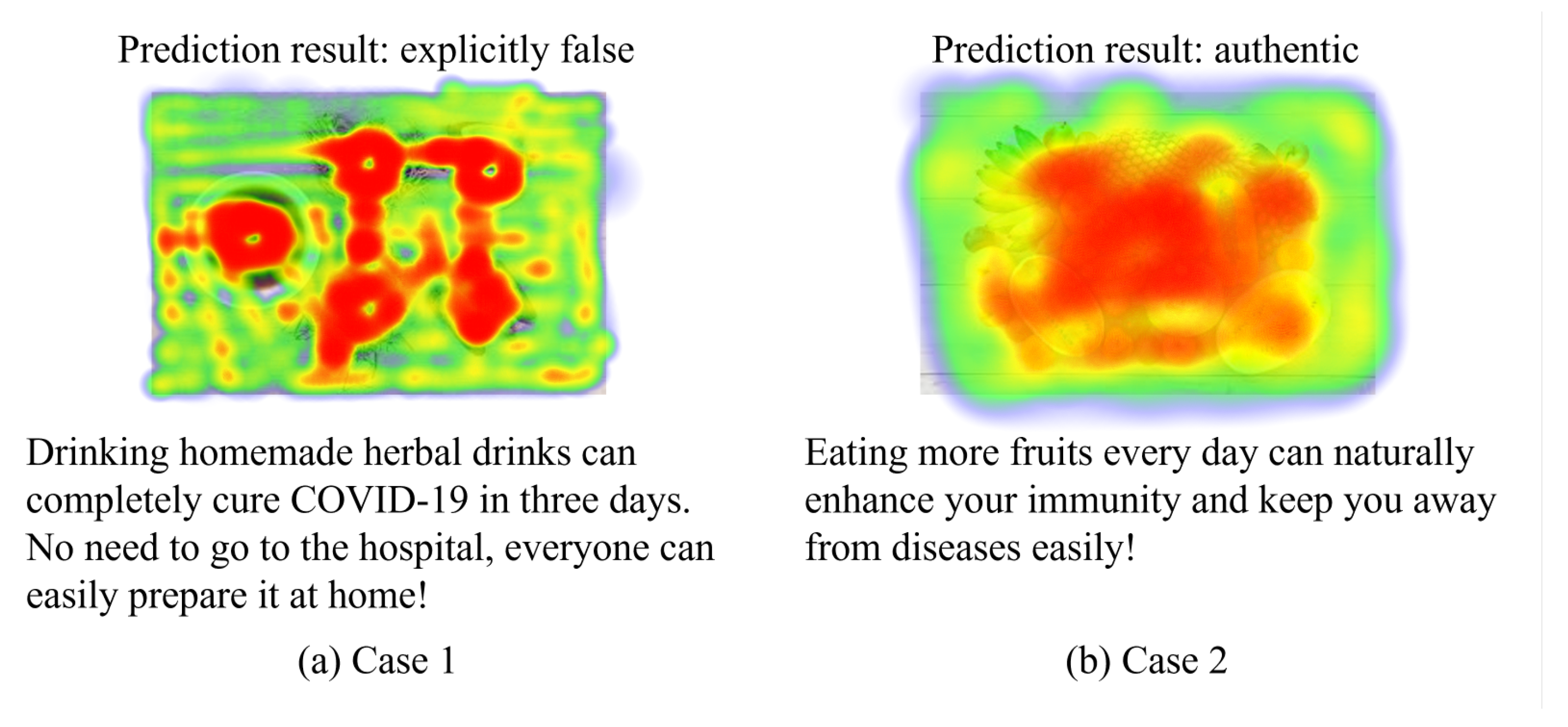

4.7. Case Study

5. Discussion

5.1. Practical Application Analysis

5.2. Computational Complexity Analysis

5.3. Error Analysis

5.4. Limitation and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Algorithm A1: Symbol-Aware Text GNN with hyperparameters

Algorithm A2: Drift-aware bidirectional cross-attention and contrastive alignment

Input: text token matrix; image region matrix; global vectors; drift score; projection heads; fusion MLP; temperature; drift-aware attention bias; contrastive drift weight

Output: fused vector; losses
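
The body of Algorithm A2 is not reproduced here, so the following is only a minimal sketch of how its listed inputs could fit together, not the authors' implementation. It assumes per-token drift scores that add a bias `beta * drift` to the attention logits whenever text tokens act as keys, and a symmetric InfoNCE loss in which each text-image pair is weighted by `1 + lam * drift`. The function names (`fuse`, `drift_weighted_info_nce`), the parameter names (`beta`, `lam`, `tau`), and both functional forms are hypothetical. The projection heads are omitted, and the fusion MLP is replaced by a plain concatenation.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attend(queries, keys, values, key_bias):
    """Scaled dot-product attention with an additive per-key logit bias."""
    scale = math.sqrt(len(keys[0]))
    attended = []
    for q in queries:
        weights = softmax([dot(q, k) / scale + b for k, b in zip(keys, key_bias)])
        attended.append([sum(w * v[c] for w, v in zip(weights, values))
                         for c in range(len(values[0]))])
    return attended

def mean_pool(rows):
    return [sum(col) / len(rows) for col in zip(*rows)]

def fuse(text_tokens, image_regions, token_drift, beta):
    # Image -> text: drifted tokens get a logit bonus of beta * drift (assumed form).
    img_ctx = cross_attend(image_regions, text_tokens, text_tokens,
                           [beta * s for s in token_drift])
    # Text -> image: no bias, since a per-query constant cancels in the softmax.
    txt_ctx = cross_attend(text_tokens, image_regions, image_regions,
                           [0.0] * len(image_regions))
    # Stand-in for the fusion MLP: concatenate the two pooled context vectors.
    return mean_pool(txt_ctx) + mean_pool(img_ctx)

def normalize(v):
    norm = math.sqrt(dot(v, v)) or 1.0
    return [x / norm for x in v]

def drift_weighted_info_nce(text_globals, image_globals, post_drift, tau, lam):
    """Symmetric InfoNCE; each pair's loss is scaled by 1 + lam * drift (assumed)."""
    t = [normalize(v) for v in text_globals]
    g = [normalize(v) for v in image_globals]
    n = len(t)
    total, weight_sum = 0.0, 0.0
    for i in range(n):
        t2i = softmax([dot(t[i], g[j]) / tau for j in range(n)])[i]
        i2t = softmax([dot(g[i], t[j]) / tau for j in range(n)])[i]
        w = 1.0 + lam * post_drift[i]
        total += w * -0.5 * (math.log(t2i) + math.log(i2t))
        weight_sum += w
    return total / weight_sum
```

In this sketch a larger `beta` pulls image regions toward high-drift tokens, and a larger `lam` makes misaligned high-drift posts dominate the contrastive loss. Whether the paper uses these exact forms cannot be confirmed from the algorithm header alone.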

References

1. Chen, A.; Wei, Y.; Le, H.; Zhang, Y. Learning by teaching with ChatGPT: The effect of teachable ChatGPT agent on programming education. Br. J. Educ. Technol. 2024; early view.

2. Chen, J.; Sun, B.; Li, H.; Lu, H.; Hua, X.S. Deep ctr prediction in display advertising. In Proceedings of the 24th ACM international conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 811–820.

3. Zhao, W.; Zhang, J.; Xie, D.; Qian, Y.; Jia, R.; Li, P. AIBox: CTR prediction model training on a single node. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 319–328.

4. Wang, Q.; Liu, F.; Zhao, X.; Tan, Q. A CTR prediction model based on session interest. PLoS ONE 2022, 17, e0273048.

5. Yin, H.; Cui, B.; Chen, L.; Hu, Z.; Zhou, X. Dynamic user modeling in social media systems. ACM Trans. Inf. Syst. TOIS 2015, 33, 1–44.

6. Zhou, C.; Bai, J.; Song, J.; Liu, X.; Zhao, Z.; Chen, X.; Gao, J. Atrank: An attention-based user behavior modeling framework for recommendation. In Proceedings of the AAAI conference on artificial intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

7. Lan, L.; Geng, Y. Accurate and interpretable factorization machines. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; Volume 33, pp. 4139–4146.

8. Wu, S.; Li, Z.; Su, Y.; Cui, Z.; Zhang, X.; Wang, L. GraphFM: Graph factorization machines for feature interaction modeling. arXiv 2021, arXiv:2105.11866.

9. Yi, Y.; Zhou, Y.; Wang, T.; Zhou, J. Advances in Video Emotion Recognition: Challenges and Trends. Sensors 2025, 25, 3615.

10. Shaikh, M.B.; Chai, D.; Islam, S.M.S.; Akhtar, N. Multimodal fusion for audio-image and video action recognition. Neural Comput. Appl. 2024, 36, 5499–5513.

11. Kraprayoon, J.; Pham, A.; Tsai, T.J. Improving the robustness of DTW to global time warping conditions in audio synchronization. Appl. Sci. 2024, 14, 1459.

12. Zhang, Y.; Wu, J.; Sun, L.; Yang, G. Contrastive Learning-Based Cross-Modal Fusion for Product Form Imagery Recognition: A Case Study on New Energy Vehicle Front-End Design. Sustainability 2025, 17, 4432.

13. Zhang, L.; Zhang, Y.; Ma, X. A new strategy for tuning ReLUs: Self-adaptive linear units (SALUs). In Proceedings of the ICMLCA 2021; 2nd International Conference on Machine Learning and Computer Application, Shenyang, China, 17–19 December 2021; pp. 1–8.

14. Bai, J.; Geng, X.; Deng, J.; Xia, Z.; Jiang, H.; Yan, G.; Liang, J. A comprehensive survey on advertising click-through rate prediction algorithm. Knowl. Eng. Rev. 2025, 40, e3.

15. Zhou, X.; Chen, S.; Ren, Y.; Zhang, Y.; Fu, J.; Fan, D.; Lin, J.; Wang, Q. Atrous Pyramid GAN Segmentation Network for Fish Images with High Performance. Electronics 2022, 11, 911.

16. Zhang, W.; Han, Y.; Yi, B.; Zhang, Z. Click-through rate prediction model integrating user interest and multi-head attention mechanism. J. Big Data 2023, 10, 11.

17. He, L.; Chen, H.; Wang, D.; Jameel, S.; Yu, P.; Xu, G. Click-through rate prediction with multi-modal hypergraphs. In Proceedings of the 30th ACM International Conference on Information & Knowledge Management, Virtual Event, QLD, Australia, 1–5 November 2021; pp. 690–699.

18. Deng, J.; Shen, D.; Wang, S.; Wu, X.; Yang, F.; Zhou, G.; Meng, G. ContentCTR: Frame-level live streaming click-through rate prediction with multimodal transformer. arXiv 2023, arXiv:2306.14392.

19. Deng, K.; Woodland, P.C. Multi-head Temporal Latent Attention. arXiv 2025, arXiv:2505.13544.

20. Blondel, M.; Fujino, A.; Ueda, N.; Ishihata, M. Higher-order factorization machines. In Proceedings of the 30th International Conference on Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016.

21. Guo, H.; Tang, R.; Ye, Y.; Li, Z.; He, X. DeepFM: A factorization-machine based neural network for CTR prediction. arXiv 2017, arXiv:1703.04247.

22. Zhou, G.; Zhu, X.; Song, C.; Fan, Y.; Zhu, H.; Ma, X.; Yan, Y.; Jin, J.; Li, H.; Gai, K. Deep interest network for click-through rate prediction. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1059–1068.

23. de Souza Pereira Moreira, G.; Rabhi, S.; Lee, J.M.; Ak, R.; Oldridge, E. Transformers4rec: Bridging the gap between nlp and sequential/session-based recommendation. In Proceedings of the 15th ACM Conference on Recommender Systems, Amsterdam, The Netherlands, 27 September–1 October 2021; pp. 143–153.

24. Yang, Y.; Zhang, L.; Liu, J. Temporal user interest modeling for online advertising using Bi-LSTM network improved by an updated version of Parrot Optimizer. Sci. Rep. 2025, 15, 18858.

25. Wei, Y.; Wang, X.; Nie, L.; He, X.; Hong, R.; Chua, T.S. MMGCN: Multi-modal graph convolution network for personalized recommendation of micro-video. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 1437–1445.

26. Li, T.; Yang, X.; Ke, Y.; Wang, B.; Liu, Y.; Xu, J. Alleviating the inconsistency of multimodal data in cross-modal retrieval. In Proceedings of the 2024 IEEE 40th International Conference on Data Engineering (ICDE), Utrecht, The Netherlands, 13–16 May 2024; pp. 4643–4656.

27. Kim, W.; Son, B.; Kim, I. Vilt: Vision-and-language transformer without convolution or region supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 5583–5594.

28. Gan, Z.; Li, L.; Li, C.; Wang, L.; Liu, Z.; Gao, J. Vision-language pre-training: Basics, recent advances, and future trends. Comput. Graph. Vis. 2022, 14, 163–352.

29. Singh, A.; Hu, R.; Goswami, V.; Couairon, G.; Galuba, W.; Rohrbach, M.; Kiela, D. Flava: A foundational language and vision alignment model. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 15638–15650.

30. Sun, C.; Myers, A.; Vondrick, C.; Murphy, K.; Schmid, C. Videobert: A joint model for video and language representation learning. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7464–7473.

31. Li, M.; Xu, R.; Wang, S.; Zhou, L.; Lin, X.; Zhu, C.; Zeng, M.; Ji, H.; Chang, S.F. Clip-event: Connecting text and images with event structures. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 16420–16429.

32. Woo, J.; Chen, H. Epidemic model for information diffusion in web forums: Experiments in marketing exchange and political dialog. SpringerPlus 2016, 5, 66.

33. Xu, Z.; Qian, M. Predicting popularity of viral content in social media through a temporal-spatial cascade convolutional learning framework. Mathematics 2023, 11, 3059.

34. Nguyen, P.T.; Huynh, V.D.B.; Vo, K.D.; Phan, P.T.; Le, D.N. Deep Learning based Optimal Multimodal Fusion Framework for Intrusion Detection Systems for Healthcare Data. Comput. Mater. Contin. 2021, 66, 2555–2571.

35. Song, Y.; Elkahky, A.M.; He, X. Multi-rate deep learning for temporal recommendation. In Proceedings of the 39th International ACM SIGIR Conference on Research and Development in Information Retrieval, Pisa, Italy, 17–21 July 2016; pp. 909–912.

36. Dean, S.; Dong, E.; Jagadeesan, M.; Leqi, L. Recommender systems as dynamical systems: Interactions with viewers and creators. In Proceedings of the Workshop on Recommendation Ecosystems: Modeling, Optimization and Incentive Design, Vancouver, BC, Canada, 26–27 February 2024.

37. Sangiorgio, E.; Di Marco, N.; Etta, G.; Cinelli, M.; Cerqueti, R.; Quattrociocchi, W. Evaluating the effect of viral posts on social media engagement. Sci. Rep. 2025, 15, 639.

38. Munusamy, H.; C, C.S. Multimodal attention-based transformer for video captioning. Appl. Intell. 2023, 53, 23349–23368.

39. Fedus, W.; Zoph, B.; Shazeer, N. Switch transformers: Scaling to trillion parameter models with simple and efficient sparsity. J. Mach. Learn. Res. 2022, 23, 5232–5270.

40. Cheng, H.T.; Koc, L.; Harmsen, J.; Shaked, T.; Chandra, T.; Aradhye, H.; Anderson, G.; Corrado, G.; Chai, W.; Ispir, M.; et al. Wide & deep learning for recommender systems. In Proceedings of the 1st Workshop on Deep Learning for Recommender Systems, Boston, MA, USA, 15 September 2016; pp. 7–10.

41. Ma, J.; Zhao, Z.; Yi, X.; Chen, J.; Hong, L.; Chi, E.H. Modeling task relationships in multi-task learning with multi-gate mixture-of-experts. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 1930–1939.

42. Luo, H.; Ji, L.; Zhong, M.; Chen, Y.; Lei, W.; Duan, N.; Li, T. Clip4clip: An empirical study of clip for end to end video clip retrieval and captioning. Neurocomputing 2022, 508, 293–304.

43. Zhou, X. Mmrec: Simplifying multimodal recommendation. In Proceedings of the 5th ACM International Conference on Multimedia in Asia Workshops, Tainan, Taiwan, 6–8 December 2023; pp. 1–2.

44. Burges, C.J. From ranknet to lambdarank to lambdamart: An overview. Learning 2010, 11, 81.

45. Lyu, J.; Ling, S.H.; Banerjee, S.; Zheng, J.; Lai, K.L.; Yang, D.; Zheng, Y.P.; Bi, X.; Su, S.; Chamoli, U. Ultrasound volume projection image quality selection by ranking from convolutional RankNet. Comput. Med. Imaging Graph. 2021, 89, 101847.

46. Ke, G.; Meng, Q.; Finley, T.; Wang, T.; Chen, W.; Ma, W.; Ye, Q.; Liu, T.Y. Lightgbm: A highly efficient gradient boosting decision tree. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

| Platform | Period | Modality | Authentic | Misleading | False | Total |
|---|---|---|---|---|---|---|
| | January 2023–December 2024 | Text + Image | 2350 | 2120 | 1980 | 6450 |
| Xiaohongshu | January 2023–December 2024 | Text + Image | 2180 | 2040 | 1950 | 6170 |
| Total | — | Text + Image | 4530 | 4160 | 3930 | 12,620 |
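
As a quick consistency check on the dataset table, the per-platform counts can be summed and turned into class shares. The snippet below is a small sketch using only the numbers in the table. The key `platform_1` is a placeholder, because the name of the first platform is missing from the table as extracted.

```python
# Counts copied from the dataset table. "platform_1" is a placeholder key:
# the first platform's name does not survive in the source table.
counts = {
    "platform_1": {"authentic": 2350, "misleading": 2120, "false": 1980},
    "Xiaohongshu": {"authentic": 2180, "misleading": 2040, "false": 1950},
}

def class_totals(table):
    """Sum each label's count across all platforms."""
    totals = {}
    for row in table.values():
        for label, n in row.items():
            totals[label] = totals.get(label, 0) + n
    return totals

totals = class_totals(counts)
grand_total = sum(totals.values())
shares = {label: n / grand_total for label, n in totals.items()}

for label, n in totals.items():
    print(f"{label:<10} {n:>5}  {shares[label]:.1%}")
print(f"{'total':<10} {grand_total:>5}")
```

The sums reproduce the Total row (4530, 4160, 3930, and 12,620), and the three classes come out at roughly 36%, 33%, and 31% of all samples, so the dataset is close to balanced.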

| Model | AUC | NDCG@10 | Precision@10 | Recall@10 | F1-Score | Hit Rate | Inference Latency (ms) | Memory Usage (GB) |
|---|---|---|---|---|---|---|---|---|
| Wide&Deep [40] | 0.882 | 0.468 | 0.415 | 0.392 | 0.403 | 0.611 | 12 | 1.2 |
| DeepFM [21] | 0.894 | 0.482 | 0.429 | 0.406 | 0.417 | 0.628 | 18 | 1.5 |
| DIN [22] | 0.903 | 0.501 | 0.442 | 0.418 | 0.430 | 0.644 | 27 | 2.1 |
| Transformer4Rec [23] | 0.914 | 0.523 | 0.459 | 0.434 | 0.446 | 0.667 | 35 | 2.8 |
| Proposed | 0.931 † | 0.558 † | 0.486 † | 0.461 † | 0.473 † | 0.702 † | 42 | 3.4 |
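
The F1-Score column can be cross-checked against the Precision@10 and Recall@10 columns. The sketch below assumes that the reported F1 is the harmonic mean of those two columns. The table values are consistent with that reading, but the definition itself is not stated in this extract.

```python
# (precision@10, recall@10, reported F1) copied from the table above.
rows = {
    "Wide&Deep": (0.415, 0.392, 0.403),
    "DeepFM": (0.429, 0.406, 0.417),
    "DIN": (0.442, 0.418, 0.430),
    "Transformer4Rec": (0.459, 0.434, 0.446),
    "Proposed": (0.486, 0.461, 0.473),
}

def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Absolute gap between the recomputed and the reported F1 for each model.
gaps = {}
for name, (p, r, reported) in rows.items():
    recomputed = f1_score(p, r)
    gaps[name] = abs(recomputed - reported)
    print(f"{name:<16} recomputed={recomputed:.4f} reported={reported:.3f}")
```

Every recomputed value agrees with the reported one to within rounding at the third decimal place.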

| Model | AUC | NDCG@10 | Precision@10 | Recall@10 | F1-Score | Hit Rate | Inference Latency (ms) | Memory Usage (GB) |
|---|---|---|---|---|---|---|---|---|
| MMoE [41] | 0.902 | 0.497 | 0.438 | 0.414 | 0.426 | 0.639 | 25 | 2.0 |
| CLIP4Rec [42] | 0.909 | 0.515 | 0.452 | 0.427 | 0.439 | 0.654 | 33 | 2.6 |
| MMRec [43] | 0.913 | 0.521 | 0.457 | 0.432 | 0.444 | 0.662 | 36 | 2.9 |
| VideoBERT [30] | 0.907 | 0.509 | 0.447 | 0.422 | 0.434 | 0.650 | 38 | 3.0 |
| LambdaRank [44] | 0.898 | 0.490 | 0.433 | 0.408 | 0.420 | 0.634 | 8 | 0.9 |
| RankNet [45] | 0.891 | 0.481 | 0.426 | 0.401 | 0.413 | 0.627 | 10 | 1.0 |
| LightGBM-Rank [46] | 0.905 | 0.505 | 0.444 | 0.420 | 0.432 | 0.646 | 15 | 1.2 |
| Proposed | 0.931 † | 0.558 † | 0.486 † | 0.461 † | 0.473 † | 0.702 † | 42 | 3.4 |

| Model | AUC | NDCG@10 | Precision@10 | Recall@10 | F1-Score | Hit Rate | Inference Latency (ms) | Memory Usage (GB) |
|---|---|---|---|---|---|---|---|---|
| LambdaRank [44] | 0.898 | 0.490 | 0.433 | 0.408 | 0.420 | 0.634 | 8 | 0.9 |
| RankNet [45] | 0.891 | 0.481 | 0.426 | 0.401 | 0.413 | 0.627 | 12 | 1.1 |
| LightGBM-Rank [46] | 0.905 | 0.505 | 0.444 | 0.420 | 0.432 | 0.646 | 15 | 1.3 |
| Proposed | 0.931 † | 0.558 † | 0.486 † | 0.461 † | 0.473 † | 0.702 † | 42 | 3.4 |

| Configuration | AUC | NDCG@10 | Precision@10 | Recall@10 | F1-Score | Hit Rate |
|---|---|---|---|---|---|---|
| Full model (Symbol drift + Text GNN + Cross-attn + Contrastive) | 0.931 | 0.558 | 0.486 | 0.461 | 0.473 | 0.702 |
| – Symbol drift module | 0.919 | 0.536 | 0.468 | 0.444 | 0.456 | 0.683 |
| – Text GNN (use pooled BERT text only) | 0.915 | 0.529 | 0.462 | 0.438 | 0.450 | 0.676 |
| – Cross-attention (late concat only) | 0.917 | 0.532 | 0.465 | 0.440 | 0.452 | 0.678 |
| – Contrastive alignment loss | 0.920 | 0.540 | 0.470 | 0.446 | 0.458 | 0.687 |
| – Both drift and contrastive | 0.908 | 0.517 | 0.452 | 0.428 | 0.440 | 0.662 |
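
To make the ablation table easier to read, the AUC lost by removing each component can be computed directly from the rows. The snippet below is a small sketch over the reported AUC values only. The dictionary keys are shortened labels chosen for illustration.

```python
full_auc = 0.931  # Full model, from the ablation table

# AUC after removing each component (shortened, illustrative labels).
ablated_auc = {
    "symbol drift module": 0.919,
    "Text GNN": 0.915,
    "cross-attention": 0.917,
    "contrastive alignment loss": 0.920,
    "drift + contrastive": 0.908,
}

# AUC drop relative to the full model, rounded to the table's precision.
drops = {name: round(full_auc - auc, 3) for name, auc in ablated_auc.items()}

for name, drop in sorted(drops.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<28} -{drop:.3f}")
```

Among single-component removals, dropping the Text GNN costs the most AUC (0.016), followed by cross-attention (0.014), the symbol drift module (0.012), and the contrastive loss (0.011). Removing drift and contrastive alignment together costs 0.023, which equals the sum of their individual drops.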

| Hyperparameter value | AUC | NDCG@10 | Precision@10 | Recall@10 | F1-Score | Hit Rate |
|---|---|---|---|---|---|---|
| 0.00 | 0.902 | 0.495 | 0.437 | 0.412 | 0.424 | 0.638 |
| 0.25 | 0.915 | 0.520 | 0.454 | 0.429 | 0.442 | 0.661 |
| 0.50 | 0.923 | 0.539 | 0.471 | 0.445 | 0.458 | 0.683 |
| 1.00 | 0.931 | 0.558 | 0.486 | 0.461 | 0.473 | 0.702 |
| 2.00 | 0.918 | 0.528 | 0.462 | 0.436 | 0.449 | 0.672 |

| Hyperparameter value | AUC | NDCG@10 | Precision@10 | Recall@10 | F1-Score | Hit Rate |
|---|---|---|---|---|---|---|
| 0.00 | 0.901 | 0.492 | 0.434 | 0.410 | 0.422 | 0.636 |
| 0.25 | 0.914 | 0.518 | 0.452 | 0.428 | 0.440 | 0.659 |
| 0.50 | 0.922 | 0.537 | 0.468 | 0.443 | 0.456 | 0.681 |
| 1.00 | 0.931 | 0.558 | 0.486 | 0.461 | 0.473 | 0.702 |
| 2.00 | 0.916 | 0.526 | 0.459 | 0.435 | 0.447 | 0.670 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, J.; Fu, Z.; Jiang, C.; Li, M.; Zhan, Y. Dual-Stream Transformer with LLM-Empowered Symbol Drift Modeling for Health Misinformation Detection. Appl. Sci. 2025, 15, 9992. https://doi.org/10.3390/app15189992
