Few-Shot Fine-Grained Image Classification with Residual Reconstruction Network Based on Feature Enhancement

Abstract

1. Introduction

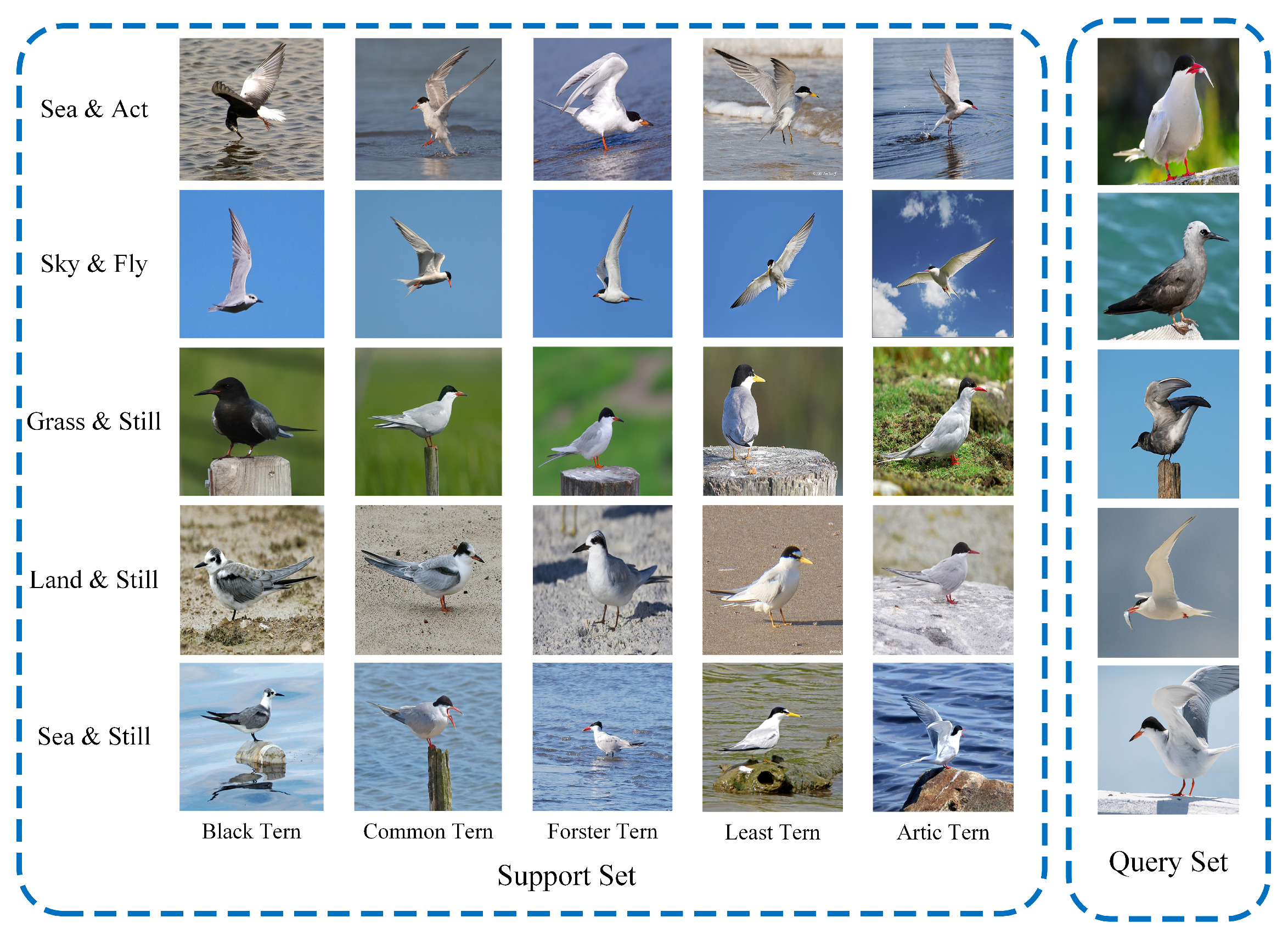

- (1) We propose the Feature Enhancement Attention Module (FEAM), which integrates multi-scale convolutions with attention mechanisms. FEAM fuses features from the main branch and the side branch, enabling the model to perceive the overall feature distribution while responding accurately to feature variations in different local regions.

- (2) We introduce the Feature Refinement Module and the Residual Reconstruction Module. The Feature Refinement Module combines self-attention with feature fusion to aggregate information from different feature channels and to capture subtle, discriminative fine-grained details. Building on these refined representations, the Residual Reconstruction Module applies residual learning to make full use of the enhanced features, improving the model’s ability to separate categories that differ only slightly from one another while varying considerably within each category.

- (3) Experiments on three widely used fine-grained benchmarks, CUB-200-2011, Stanford Dogs, and Stanford Cars, demonstrate the effectiveness and robustness of the proposed method. Detailed ablation studies and comparisons with recent methods show that the proposed components contribute significantly to addressing the challenges of few-shot fine-grained image classification (FS-FGIC).

2. Proposed Method

2.1. The Proposed Algorithm

2.2. Feature Enhancement Attention Module
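
The contributions list describes FEAM only at a high level: multi-scale convolutions combined with attention, fusing a main branch with a side branch. The NumPy sketch below shows one plausible way such a module could be wired; it is not the authors' implementation. The kernel sizes (1, 3, 5), the random depthwise weights standing in for learned parameters, the squeeze-and-excitation style channel gate (after Hu et al. [18]), and the soft selection `gate * main + (1 - gate) * side` (in the spirit of attentional feature fusion [20]) are all assumptions, and `depthwise_conv2d` and `feam_sketch` are hypothetical names.

```python
import numpy as np


def depthwise_conv2d(x, kernels):
    """Same-padded depthwise 2D cross-correlation.

    x: (C, H, W) feature map; kernels: (C, k, k) with odd k, one kernel per channel.
    """
    c, h, w = x.shape
    k = kernels.shape[-1]
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H x W
    out = np.zeros_like(x, dtype=float)
    for i in range(k):
        for j in range(k):
            # Add the contribution of kernel tap (i, j) for every pixel at once.
            out += kernels[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def feam_sketch(main, side, kernel_sizes=(1, 3, 5), seed=0):
    """Hypothetical FEAM-style fusion of a main-branch and a side-branch feature map.

    main, side: (C, H, W) arrays. Random weights are placeholders for learned ones.
    """
    rng = np.random.default_rng(seed)
    c = side.shape[0]
    hidden = max(c // 2, 1)
    # Side branch: depthwise convolutions at several receptive-field sizes, summed.
    multi = sum(
        depthwise_conv2d(side, rng.normal(scale=1.0 / k, size=(c, k, k)))
        for k in kernel_sizes
    )
    # SE-style channel gate computed from both branches (squeeze, reduce, expand).
    squeezed = (main + multi).mean(axis=(1, 2))  # (C,)
    w_reduce = rng.normal(scale=0.1, size=(hidden, c))
    w_expand = rng.normal(scale=0.1, size=(c, hidden))
    gate = sigmoid(w_expand @ np.maximum(w_reduce @ squeezed, 0.0))[:, None, None]
    # Soft selection: each channel mixes the two branches with weights gate and 1 - gate.
    return gate * main + (1.0 - gate) * multi
```

For example, `feam_sketch(main, side)` with two `(8, 5, 5)` arrays returns an `(8, 5, 5)` map. Because the gate lies in (0, 1) per channel, every output value is a convex combination of the main-branch value and the multi-scale side-branch value at that position.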

2.3. Feature Refinement Module
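
The contributions list states that the Feature Refinement Module combines self-attention with feature fusion to aggregate information from different feature channels. As a rough illustration only, the sketch below implements a generic channel self-attention: channel-to-channel affinities computed from the flattened feature map, a softmax over channels, and a residual fusion `x + gamma * mixed`. The scaling by the square root of H·W, the fixed `gamma`, and the function names are assumptions; the paper's module may be structured differently.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def channel_self_attention(x, gamma=0.5):
    """Hypothetical channel self-attention with residual fusion.

    x: (C, H, W). Each output channel is the input channel plus gamma times a
    softmax-weighted mixture of all input channels.
    """
    c, h, w = x.shape
    flat = x.reshape(c, h * w)                    # (C, N) with N = H * W
    energy = flat @ flat.T / np.sqrt(h * w)       # (C, C) channel affinities
    attn = softmax(energy, axis=-1)               # each row sums to 1
    mixed = (attn @ flat).reshape(c, h, w)        # aggregate information across channels
    return x + gamma * mixed                      # fuse with the input via a residual sum
```

Two properties make the behavior easy to check: with `gamma=0` the input passes through unchanged, and if all channels are identical, each attention-weighted mixture equals that shared channel, so the output is `(1 + gamma) * x`.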

2.4. Residual Feature Similarity and Reconstruction Module
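
Contribution (2) says the Residual Reconstruction Module applies residual learning to the enhanced features, and the comparison tables build on FRN [9] and BiFRN [23], which score a query by how well its spatial features can be reconstructed from a class's support features via ridge regression. The sketch below pairs that FRN-style closed-form reconstruction, `Q S^T (S S^T + λI)^-1 S`, with a plain residual sum of base and enhanced features. The residual sum, the default `lam=0.1`, and all function names are assumptions for illustration, not the paper's exact formulation (FRN, for instance, learns its regularization strength rather than fixing it).

```python
import numpy as np


def residual_features(base, enhanced):
    """Hypothetical residual step: enhanced features refine, not replace, the base features."""
    return base + enhanced


def ridge_reconstruct(query, support, lam=0.1):
    """FRN-style ridge-regression reconstruction.

    query: (r, d) spatial feature vectors of one query image.
    support: (m, d) spatial feature vectors pooled from one class's support images.
    Returns Q S^T (S S^T + lam I)^-1 S, shape (r, d).
    """
    m = support.shape[0]
    gram = support @ support.T + lam * np.eye(m)  # (m, m), symmetric positive definite
    # Solve a linear system instead of inverting: weights = Q S^T gram^-1, shape (r, m).
    weights = np.linalg.solve(gram, support @ query.T).T
    return weights @ support


def reconstruction_distances(query, class_supports, lam=0.1):
    """Mean squared reconstruction error of the query for each class (lower = closer)."""
    return np.array([
        np.mean((query - ridge_reconstruct(query, s, lam)) ** 2)
        for s in class_supports
    ])


def classify(query, class_supports, lam=0.1):
    """Predict the class whose support features reconstruct the query best."""
    return int(np.argmin(reconstruction_distances(query, class_supports, lam)))
```

The design choice inherited from FRN is that similarity is measured by reconstruction error rather than by distance to a single prototype, so a query can match a class through any combination of that class's local support features.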

2.5. Metric Module

3. Experiments

3.1. Experimental Setup

3.2. Comparative Experiments

3.3. Visualization

3.4. Ablation Experiments

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- [1] Wei, X.S.; Wang, P.; Liu, L.; Shen, C.; Wu, J. Piecewise classifier mappings: Learning fine-grained learners for novel categories with few examples. IEEE Trans. Image Process. 2019, 28, 6116–6125.

- [2] Li, Y.; Chen, L.; Li, W.; Wang, N. Few-shot fine-grained classification with rotation-invariant feature map complementary reconstruction network. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5608312.

- [3] Yang, M.; Bai, X.; Wang, L.; Zhou, F. HENC: Hierarchical embedding network with center calibration for few-shot fine-grained SAR target classification. IEEE Trans. Image Process. 2023, 32, 3324–3337.

- [4] Sun, X.; Xv, H.; Dong, J.; Zhou, H.; Chen, C.; Li, Q. Few-shot learning for domain-specific fine-grained image classification. IEEE Trans. Ind. Electron. 2020, 68, 3588–3598.

- [5] Li, X.; Yang, X.; Ma, Z.; Xue, J.H. Deep metric learning for few-shot image classification: A review of recent developments. Pattern Recognit. 2023, 138, 109381.

- [6] Snell, J.; Swersky, K.; Zemel, R. Prototypical Networks for Few-Shot Learning. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS’17), Long Beach, CA, USA, 4–9 December 2017; Curran Associates Inc.: Red Hook, NY, USA, 2017; pp. 4080–4090.

- [7] Sung, F.; Yang, Y.; Zhang, L.; Xiang, T.; Torr, P.H.S.; Hospedales, T.M. Learning to compare: Relation network for few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018; pp. 1199–1208.

- [8] Zhang, C.; Cai, Y.; Lin, G.; Shen, C. DeepEMD: Few-shot image classification with differentiable earth mover’s distance and structured classifiers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12203–12213.

- [9] Wertheimer, D.; Tang, L.; Hariharan, B. Few-shot classification with feature map reconstruction networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8012–8021.

- [10] Li, X.; Song, Q.; Wu, J.; Zhu, R.; Ma, Z.; Xue, J.H. Locally-enriched cross-reconstruction for few-shot fine-grained image classification. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 7530–7540.

- [11] Li, X.; Guo, Z.; Zhu, R.; Ma, Z.; Guo, J.; Xue, J.H. A simple scheme to amplify inter-class discrepancy for improving few-shot fine-grained image classification. Pattern Recognit. 2024, 156, 110736.

- [12] Wu, J.; Chang, D.; Sain, A.; Li, X.; Ma, Z.; Cao, J.; Guo, J.; Song, Y.Z. Bi-directional ensemble feature reconstruction network for few-shot fine-grained classification. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 6082–6096.

- [13] Ruan, X.; Lin, G.; Long, C.; Lu, S. Few-shot fine-grained classification with spatial attentive comparison. Knowl.-Based Syst. 2021, 218, 106840.

- [14] Huang, X.; Choi, S.H. Sapenet: Self-attention based prototype enhancement network for few-shot learning. Pattern Recognit. 2023, 135, 109170.

- [15] Ma, Z.X.; Chen, Z.D.; Zhao, L.J.; Zhang, Z.C.; Luo, X.; Xu, X.S. Cross-layer and cross-sample feature optimization network for few-shot fine-grained image classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 4136–4144.

- [16] Li, X.; Ding, S.; Xie, J.; Yang, X.; Ma, Z.; Xue, J.H. CDN4: A cross-view Deep Nearest Neighbor Neural Network for fine-grained few-shot classification. Pattern Recognit. 2025, 163, 111466.

- [17] Sun, Z.; Zheng, W.; Guo, P.; Wang, M. TST_MFL: Two-stage training based metric fusion learning for few-shot image classification. Inf. Fusion 2025, 113, 102611.

- [18] Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- [19] Zhang, Y.; Ye, M.; Zhu, G.; Liu, Y.; Guo, P.; Yan, J. FFCA-YOLO for small object detection in remote sensing images. IEEE Trans. Geosci. Remote Sens. 2024, 62, 5611215.

- [20] Dai, Y.; Gieseke, F.; Oehmcke, S.; Wu, Y.; Barnard, K. Attentional feature fusion. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual, 5–9 January 2021; pp. 3560–3569.

- [21] Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- [22] Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

- [23] Wu, J.; Chang, D.; Sain, A.; Li, X.; Ma, Z.; Cao, J.; Guo, J.; Song, Y.Z. Bi-directional feature reconstruction network for fine-grained few-shot image classification. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2821–2829.

- [24] Wah, C.; Branson, S.; Welinder, P.; Perona, P.; Belongie, S.J. The Caltech-UCSD Birds-200-2011 Dataset. 2011. Available online: https://authors.library.caltech.edu/records/cvm3y-5hh21 (accessed on 9 September 2025).

- [25] Krause, J.; Stark, M.; Deng, J.; Fei-Fei, L. 3D object representations for fine-grained categorization. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 2–8 December 2013; pp. 554–561.

- [26] Khosla, A.; Jayadevaprakash, N.; Yao, B.; Fei-Fei, L. Novel dataset for fine-grained image categorization: Stanford Dogs. In Proceedings of the First Workshop on Fine-Grained Visual Categorization (FGVC), IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Colorado Springs, CO, USA, June 2011.

- [27] Zhu, Y.; Liu, C.; Jiang, S. Multi-attention meta learning for few-shot fine-grained image recognition. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, Yokohama, Japan, 11–17 July 2020; pp. 1090–1096.

- [28] Ye, H.J.; Hu, H.; Zhan, D.C.; Sha, F. Few-shot learning via embedding adaptation with set-to-set functions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 8808–8817.

- [29] Doersch, C.; Gupta, A.; Zisserman, A. CrossTransformers: Spatially-aware few-shot transfer. Adv. Neural Inf. Process. Syst. 2020, 33, 21981–21993.

- [30] Lee, S.; Moon, W.; Heo, J.P. Task discrepancy maximization for fine-grained few-shot classification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 5331–5340.

- [31] Li, X.; Li, Z.; Xie, J.; Yang, X.; Xue, J.H.; Ma, Z. Self-reconstruction network for fine-grained few-shot classification. Pattern Recognit. 2024, 153, 110485.

- [32] Yang, S.; Li, X.; Chang, D.; Ma, Z.; Xue, J.H. Channel-Spatial Support-Query Cross-Attention for Fine-Grained Few-Shot Image Classification. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, Australia, 28 October–1 November 2024; pp. 9175–9183.

- [33] Muhammad, M.B.; Yeasin, M. Eigen-CAM: Class activation map using principal components. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.

| Method | CUB 1-Shot | CUB 5-Shot | Dogs 1-Shot | Dogs 5-Shot | Cars 1-Shot | Cars 5-Shot |
|---|---|---|---|---|---|---|

| ProtoNet (NeurIPS’17) * [6] | 64.82 ± 0.23 | 64.82 ± 0.23 | 46.66 ± 0.21 | 46.66 ± 0.21 | 50.88 ± 0.23 | 66.07 ± 0.21 |

| CTX (NeurIPS’20) * [29] | 72.61 ± 0.21 | 86.23 ± 0.14 | 57.86 ± 0.21 | 73.59 ± 0.16 | 66.35 ± 0.21 | 82.25 ± 0.14 |

| FRN (CVPR’21) † [9] | 75.64 ± 0.21 | 89.39 ± 0.12 | 60.72 ± 0.22 | 79.07 ± 0.15 | 68.37 ± 0.21 | 87.51 ± 0.11 |

| FRN+TDM(-noise) (CVPR’22) * [30] | 76.55 ± 0.21 | 90.33 ± 0.11 | 62.68 ± 0.22 | 79.59 ± 0.15 | 71.16 ± 0.21 | 89.55 ± 0.10 |

| BiFRN (AAAI’23) † [23] | 78.56 ± 0.20 | 91.81 ± 0.11 | 64.89 ± 0.22 | 81.51 ± 0.14 | 76.30 ± 0.20 | 91.63 ± 0.09 |

| SRM (PR’24) † [31] | 73.53 ± 0.21 | 87.81 ± 0.13 | 58.09 ± 0.21 | 77.47 ± 0.15 | 66.07 ± 0.21 | 84.90 ± 0.13 |

| AFRN (PR’24) † [11] | 75.99 ± 0.23 | 89.25 ± 0.12 | 60.16 ± 0.22 | 78.73 ± 0.15 | 68.08 ± 0.22 | 87.38 ± 0.12 |

| TDM+CSCAM (MM’24) † [32] | 79.89 ± 0.20 | 92.49 ± 0.11 | 65.22 ± 0.22 | 82.45 ± 0.14 | 78.34 ± 0.20 | 92.08 ± 0.09 |

| Ours | 82.00 ± 0.19 | 93.06 ± 0.10 | 70.16 ± 0.21 | 85.21 ± 0.13 | 80.48 ± 0.19 | 93.46 ± 0.08 |

| Method | CUB 1-Shot | CUB 5-Shot | Dogs 1-Shot | Dogs 5-Shot | Cars 1-Shot | Cars 5-Shot |
|---|---|---|---|---|---|---|

| ProtoNet (NeurIPS’17) * [6] | 81.59 ± 0.19 | 91.99 ± 0.10 | 73.81 ± 0.21 | 87.39 ± 0.12 | 85.46 ± 0.19 | 95.08 ± 0.08 |

| CTX (NeurIPS’20) * [29] | 80.39 ± 0.20 | 91.01 ± 0.11 | 73.22 ± 0.22 | 85.90 ± 0.13 | 85.03 ± 0.19 | 92.63 ± 0.11 |

| FRN (CVPR’21) † [9] | 84.30 ± 0.18 | 93.34 ± 0.10 | 76.76 ± 0.21 | 88.74 ± 0.12 | 88.01 ± 0.17 | 95.75 ± 0.07 |

| FRN+TDM(-noise) (CVPR’22) * [30] | 84.97 ± 0.18 | 93.83 ± 0.09 | 77.94 ± 0.21 | 89.54 ± 0.12 | 88.80 ± 0.16 | 97.02 ± 0.06 |

| BiFRN (AAAI’23) † [23] | 85.50 ± 0.18 | 94.73 ± 0.09 | 76.55 ± 0.21 | 88.22 ± 0.12 | 90.28 ± 0.14 | 97.45 ± 0.06 |

| SRM (PR’24) † [31] | 82.46 ± 0.19 | 93.71 ± 0.09 | 75.23 ± 0.20 | 88.84 ± 0.11 | 84.06 ± 0.19 | 96.07 ± 0.07 |

| AFRN (PR’24) † [11] | 84.78 ± 0.18 | 93.49 ± 0.09 | 78.23 ± 0.21 | 89.18 ± 0.16 | 89.18 ± 0.16 | 96.25 ± 0.07 |

| TDM+CSCAM (MM’24) † [32] | 85.78 ± 0.18 | 94.42 ± 0.09 | 76.35 ± 0.21 | 88.94 ± 0.11 | 90.34 ± 0.15 | 96.53 ± 0.08 |

| Ours | 86.42 ± 0.17 | 94.87 ± 0.08 | 78.46 ± 0.20 | 90.06 ± 0.11 | 90.92 ± 0.14 | 97.62 ± 0.05 |
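
The accuracies in the comparison tables are reported as mean ± interval. In few-shot classification this is conventionally the mean over many randomly sampled test episodes together with the half-width of a 95% confidence interval, but the text here does not state the number of episodes or the exact formula. The sketch below therefore assumes the common normal-approximation convention, 1.96 · s / √n with the sample standard deviation s; `mean_and_ci95` and `format_result` are hypothetical helper names.

```python
import math
import statistics


def mean_and_ci95(episode_accuracies):
    """Mean accuracy and 95% confidence-interval half-width over test episodes.

    Assumed convention: normal approximation 1.96 * s / sqrt(n) with the sample
    standard deviation s (some codebases use the population standard deviation).
    """
    n = len(episode_accuracies)
    if n < 2:
        raise ValueError("need at least two episodes")
    mean = statistics.fmean(episode_accuracies)
    half_width = 1.96 * statistics.stdev(episode_accuracies) / math.sqrt(n)
    return mean, half_width


def format_result(mean, half_width):
    """Render a result in the tables' 'xx.xx ± y.yy' style."""
    return f"{mean:.2f} ± {half_width:.2f}"
```

Calling `format_result(*mean_and_ci95(accs))` on a list of per-episode accuracies (in percent) produces a string in the same form as the table cells. The half-width shrinks with the square root of the number of episodes, which is why narrow intervals such as ± 0.08 require evaluating on a large number of episodes.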

| Baseline | RFSR | CASA | FEAM | CUB 1-Shot | CUB 5-Shot | Cars 1-Shot | Cars 5-Shot |
|---|---|---|---|---|---|---|---|

| ✓ |  |  |  | 78.56 ± 0.20 | 91.81 ± 0.11 | 76.30 ± 0.20 | 91.63 ± 0.09 |

| ✓ | ✓ | 79.10 ± 0.20 | 92.39 ± 0.10 | 75.82 ± 0.20 | 91.55 ± 0.10 | ||

| ✓ | ✓ | 80.21 ± 0.20 | 92.44 ± 0.10 | 75.92 ± 0.21 | 90.64 ± 0.10 | ||

| ✓ | ✓ | 81.48 ± 0.19 | 92.76 ± 0.10 | 80.12 ± 0.19 | 92.65 ± 0.08 | ||

| ✓ | ✓ | ✓ | 80.35 ± 0.19 | 92.44 ± 0.10 | 76.69 ± 0.20 | 91.74 ± 0.09 | |

| ✓ | ✓ | ✓ | 80.82 ± 0.19 | 92.89 ± 0.10 | 79.39 ± 0.19 | 93.25 ± 0.08 | |

| ✓ | ✓ | ✓ | ✓ | 82.00 ± 0.19 | 93.06 ± 0.10 | 80.48 ± 0.19 | 93.46 ± 0.08 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Zhang, H.; Zhang, W. Few-Shot Fine-Grained Image Classification with Residual Reconstruction Network Based on Feature Enhancement. Appl. Sci. 2025, 15, 9953. https://doi.org/10.3390/app15189953
