1. Introduction

Road infrastructure is fundamental to economic growth, social mobility, and overall quality of life. Global road length is estimated at approximately 39.7 million km [1]. In most regions, the share of paved roads ranges between 60 and 80% [2], although sizable gaps remain in parts of Asia and Africa. OpenStreetMap (OSM) data indicate that about 47% of roads in Germany are asphalted [3]. Because OSM data are incomplete, this figure should be regarded as a lower bound; actual coverage is likely higher. Public investment in land transport infrastructure (rail, road, and waterways) typically amounts to ~0.1–3% of Gross Domestic Product (GDP), and in most countries roads account for the majority of this expenditure. For example, Poland has averaged ~0.7% of GDP since 2014, with 75–80% directed to roads [4,5].

Among defects of asphalt pavements, cracks are both ubiquitous and critical [6]. They serve as initiation points for more severe failures (potholes, raveling, premature layer degradation), driving life-cycle costs upward and elevating safety risks. Reliable, timely crack detection is therefore essential for preventive maintenance planning and sustainable asset management.

Against this backdrop, computer vision and deep learning have become central to automated inspection pipelines, increasingly coupled with unmanned aerial vehicles (UAVs) and vehicle-mounted acquisition systems [7,8,9,10,11]. Contemporary studies span both detection and pixel-level segmentation. Reported detection systems include members of the You Only Look Once (YOLO) and Faster R-CNN families, often augmented with attention mechanisms; high mean average precision (mAP) values (e.g., ~91.48%) and even crack-width estimation with ~10% error have been documented [12,13]. Pixel-level semantic segmentation provides finer localization on heterogeneous and noisy backgrounds. Improvements to fully convolutional network (FCN) and U-Net-like architectures via spatial/channel attention or enhanced decoders have yielded higher Intersection over Union (IoU) and F1-score values [14,15]. To address data scarcity, synthetic datasets have been explored [16], while multimodal fusion (e.g., UAV imagery with Light Detection and Ranging (LiDAR)) has demonstrated advantages on complex surfaces [17]. Survey papers note the growing prevalence of convolutional neural network (CNN) and Transformer-based pixel segmentation while emphasizing persistent challenges: dependence on high-quality annotations, limited cross-surface generalization, and sensitivity to capture conditions (illumination changes, perspective, shadows) [18,19,20].

In recent years, most works on asphalt crack segmentation have relied on CNN-based architectures enhanced with targeted decoder or attention improvements. For instance, modifications of DeepLabV3+ with multi-level attention modules have been shown to generalize better than U-Net on complex asphalt backgrounds, although such solutions require substantial architectural changes and careful hyperparameter tuning [21]. Specialized networks such as MixCrackNet employ deformable convolutions, weighted loss functions, and other techniques to increase robustness against noise, but these improvements come at the cost of greater architectural complexity and computational overhead [22]. Research on lightweight variants is also emerging; however, such models are often trained and tested on controlled datasets of small image fragments captured at close range, which limits their transferability to field conditions with greater variation in perspective, illumination, and surface texture [23].

In addition to general-purpose CNN and Transformer backbones, several crack-specific architectures have been proposed. DeepCrack employs a hierarchical CNN with multi-scale deep supervision to capture fine crack structures; it achieves high accuracy on benchmark datasets but is limited to relatively low-resolution imagery and small training sets [24]. CrackFormer and its extensions introduce Transformer-based modules tailored for thin-structure extraction and deliver state-of-the-art results on public benchmarks, but their high computational demands and reliance on lower-resolution inputs constrain their applicability to large-scale, high-resolution field monitoring [25].

In parallel, Transformer-based approaches and modern benchmark architectures have gained attention. Enhanced versions of Swin-UNet for road crack segmentation have demonstrated improved contour continuity, while studies on SegFormer report advantages over classical CNNs on specific datasets. Nevertheless, these models generally require larger datasets and computational resources, and are frequently evaluated on images obtained very close to the surface (or on small tiles), which does not always reflect operational monitoring conditions. Recent initiatives involving UAV-based datasets (e.g., DronePavSeg) and encoder–decoder designs such as ConvNeXt-UPerNet underscore a trend toward heavier architectures that are better suited to large-scale datasets and structured acquisition protocols [26,27,28,29].

Another important direction is multimodality and the use of external priors. Fusion of UAV imagery with low-cost LiDAR (intensity/height) or the use of hyperspectral data has been shown to significantly improve segmentation performance on asphalt cracks but requires additional sensors, synchronization, and more complex data acquisition pipelines. This indirectly demonstrates that supplementary information beyond raw RGB can help models focus on relevant regions; however, conventional approaches for obtaining such information increase system cost and complicate deployment [17,30].

Across this body of work, several recurring limitations can be identified. Many methods rely on substantial architectural modifications, such as complex attention modules, deformable convolutions, or customized decoder designs, which increase model complexity and computational cost. Others depend on close-range imagery or small input sizes, limiting their transferability to large-scale monitoring scenarios. Performance is often sensitive to dataset scale and annotation quality, while high computational demands make deployment in real-world infrastructure monitoring challenging. These limitations motivate the use of auxiliary prior information in the form of a crack-probability map. In the present study, the probability map is produced by an ensemble of classification models operating at multiple spatial scales (including lightweight CNNs and Transformer architectures) and then injected as a fourth input channel to the segmentation network. In contrast to work conducted under standardized laboratory conditions, the evaluation relies on field imagery (UAV and action cameras) without controlled distance or illumination, aligning the methodology with real-world monitoring scenarios. The primary objective is to quantify how integrating this fourth channel improves crack segmentation and to evaluate the role of Transformer-based classifiers within the ensemble relative to lightweight CNN alternatives. The novelty of this work lies in showing that ensemble-derived probability maps can systematically enhance the segmentation accuracy of asphalt pavement cracks under real-world conditions while keeping computational costs nearly unchanged. Because the probability map is treated as an external, modular prior, the approach is interpretable and can complement or replace built-in attention mechanisms while remaining practical for large-scale infrastructure monitoring. Performance is benchmarked against three-channel baselines, a reference model (YOLO11x-seg), and two recent Transformer-based segmentation architectures (SegFormer B2 and SwinUNet Tiny), enabling conclusions on accuracy, robustness, and computational practicality for deployment.

The remainder of the paper is organized as follows:

Section 2 details the methodology (dataset, models, and ensemble construction),

Section 3 presents the results with quantitative and qualitative comparisons of three- and four-channel configurations, and

Section 4 provides the discussion and conclusions.

2. Methods

2.1. Dataset Description

The dataset was constructed using asphalt pavement images obtained from two open-access sources: UAV flights [31] and the EdmCrack600 dataset, collected with an action camera mounted on a passenger vehicle [32]. The UAV imagery (117 images in total) was acquired with a DJI Mini 2 (SZ DJI Technology Co., Ltd., Shenzhen, China) drone at varying altitudes under sunny conditions, capturing top-down views with high spatial resolution. The action camera imagery (818 images in total) was recorded using a GoPro Hero 7 Black (GoPro Inc., San Mateo, CA, USA) at an oblique 45° angle during real traffic, including challenging conditions such as the presence of other vehicles, roadside vegetation, snow and ice, shadows, and wet pavement with sun glare. All original images were captured at a resolution of 1920 × 1080 pixels.

The dataset was then processed to create uniform training samples. Images were cropped into square tiles of 1080 × 1080 pixels and subsequently resized to 1280 × 1280 pixels; cropping to a square first avoids aspect-ratio distortion during resizing while preserving crack details. In cases where cracks spanned the entire image width, frames were split into two tiles to enlarge the dataset. The final dataset comprised 935 images in total, with 702 allocated to the training set and 233 to the validation set. To prevent data leakage, tiles derived from the same source frame were always kept within the same subset.
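The tiling and leakage-free split described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the placement of the crops (one centred crop, or a left-aligned and a right-aligned crop when a frame is split in two), the helper names `square_tiles` and `group_split`, and the 25% validation fraction (close to 233/935) are all hypothetical. The key point is that the split is performed over source frames, not over tiles.

```python
import random
from collections import defaultdict


def square_tiles(width, height, two_tiles=False):
    """Return (left, top, right, bottom) boxes for square crops of side min(width, height).

    Assumption: one centred crop by default, or a left-aligned and a
    right-aligned crop when a crack spans the full frame width.
    """
    side = min(width, height)
    top = (height - side) // 2
    if two_tiles:
        return [(0, top, side, top + side), (width - side, top, width, top + side)]
    left = (width - side) // 2
    return [(left, top, left + side, top + side)]


def group_split(tile_to_frame, val_fraction=0.25, seed=0):
    """Split tiles so that all tiles cut from one source frame land in the same subset."""
    groups = defaultdict(list)
    for tile, frame in tile_to_frame.items():
        groups[frame].append(tile)
    frames = sorted(groups)
    random.Random(seed).shuffle(frames)  # shuffle frames, never individual tiles
    val_frames = set(frames[: round(len(frames) * val_fraction)])
    train = [t for f in frames if f not in val_frames for t in groups[f]]
    val = [t for f in frames if f in val_frames for t in groups[f]]
    return train, val


# Hypothetical example: frame "f2" was split into two tiles.
tiles = {"f1_a": "f1", "f2_a": "f2", "f2_b": "f2", "f3_a": "f3", "f4_a": "f4"}
train, val = group_split(tiles, val_fraction=0.25)
```

For a 1920 × 1080 frame the centred crop is (420, 0, 1500, 1080); each crop would then be resized to 1280 × 1280 with any image library.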

For segmentation tasks, ground truth annotations were prepared in the form of binary masks (PNG format), with each pixel labeled either “crack” or “background.” After annotation, the segmentation masks were exported in PNG format. For YOLO11x-seg, these masks were further converted using the OpenCV (Open Computer Vision Library) [

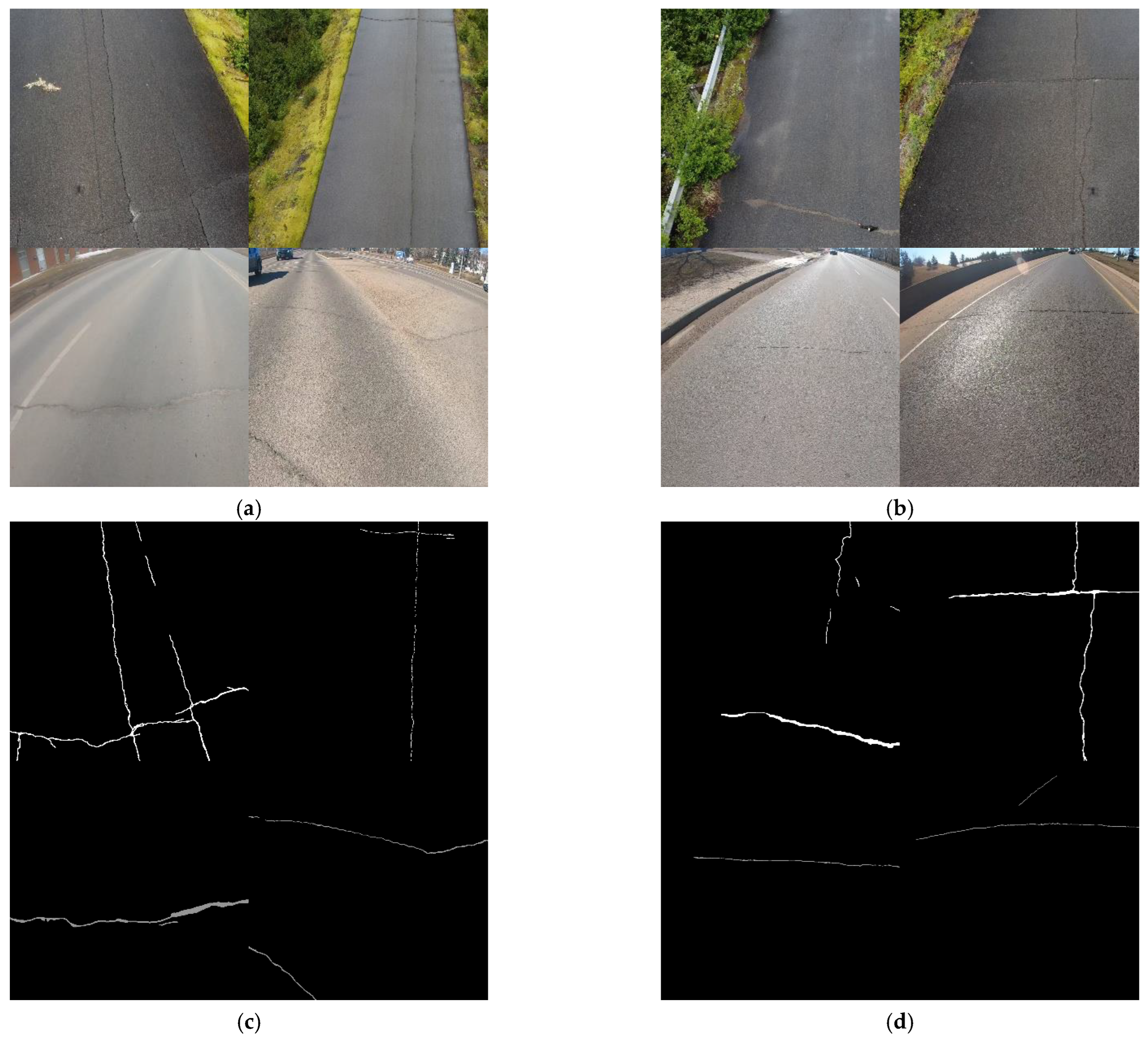

22], which identified all contour points of the masks. The coordinates of these points were normalized by dividing them by the width and height of the image and then stored in a list for subsequent use. Examples of training and validation images together with their corresponding masks are illustrated in

Figure 1.
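A minimal sketch of the mask-to-polygon conversion, assuming the Ultralytics segmentation label layout (one line per object: class index followed by normalized x y pairs) and `cv2.findContours` with `RETR_EXTERNAL` and `CHAIN_APPROX_NONE` to keep every contour point. The function names and the three-point minimum are illustrative assumptions, not the authors' implementation; OpenCV is imported inside the wrapper so that the normalization step can be used on its own.

```python
import numpy as np


def contours_to_yolo_lines(contours, width, height, class_id=0, min_points=3):
    """Convert OpenCV-style contours (N, 1, 2) into normalized YOLO-seg label lines."""
    lines = []
    for contour in contours:
        pts = np.array(contour, dtype=float).reshape(-1, 2)  # copy, columns are x, y
        if len(pts) < min_points:  # a polygon needs at least three vertices
            continue
        pts[:, 0] /= width   # x normalized by image width
        pts[:, 1] /= height  # y normalized by image height
        coords = " ".join(f"{v:.6f}" for v in pts.ravel())
        lines.append(f"{class_id} {coords}")
    return lines


def mask_to_yolo_lines(mask):
    """Extract all outer contour points of a binary mask with OpenCV."""
    import cv2  # imported here so contours_to_yolo_lines has no OpenCV dependency

    binary = (np.asarray(mask) > 0).astype(np.uint8)
    found = cv2.findContours(binary, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)
    contours = found[-2]  # OpenCV 3 returns 3 values, OpenCV 4 returns 2
    h, w = binary.shape
    return contours_to_yolo_lines(contours, w, h)
```

`CHAIN_APPROX_NONE` matches the description of keeping all contour points; `CHAIN_APPROX_SIMPLE` would produce much shorter label files for long, straight crack segments.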

For classification tasks, additional image patches of 32 × 32, 64 × 64, and 128 × 128 pixels were generated. Crack patches were selected only if at least 10% of their pixels belonged to the crack class, and an equal number of crack-free patches was added to maintain class balance. In total, 51,494 training patches and 17,244 validation patches were produced, evenly distributed between the two classes.
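The patch-labeling rule above can be illustrated with a short sketch. It assumes a non-overlapping grid, a mask with values in {0, 1}, "crack-free" meaning zero crack pixels, and that patches with a crack share between 0 and 10% are discarded as ambiguous; none of these details are stated in the text, and the helper names are hypothetical.

```python
import numpy as np


def label_patches(mask, size, min_crack_fraction=0.10):
    """Scan a {0,1} crack mask with non-overlapping size x size windows.

    Returns top-left corners of crack patches (crack share >= threshold)
    and of crack-free patches (no crack pixels). Patches in between are
    skipped as ambiguous (an assumption of this sketch).
    """
    h, w = mask.shape
    crack, clean = [], []
    for y in range(0, h - size + 1, size):
        for x in range(0, w - size + 1, size):
            share = mask[y:y + size, x:x + size].mean()
            if share >= min_crack_fraction:
                crack.append((y, x))
            elif share == 0:
                clean.append((y, x))
    return crack, clean


def balance(crack, clean, seed=0):
    """Randomly subsample both lists to the same length for a 50/50 class split."""
    rng = np.random.default_rng(seed)
    k = min(len(crack), len(clean))

    def pick(items):
        idx = rng.choice(len(items), size=k, replace=False)
        return [items[i] for i in idx]

    return pick(crack), pick(clean)
```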

2.2. Segmentation Models

Several representative segmentation architectures were employed to address the crack segmentation task:

U-Net [33]: a symmetric encoder–decoder network with skip connections that transfer information from early layers to the corresponding decoding blocks. This design helps reconstruct fine crack boundaries and improves accuracy on small datasets.

Efficient Neural Network (ENet) [34]: a compact real-time segmentation architecture with a large encoder and a small decoder. Its PReLU activations, which learn the slope applied to negative inputs, improve the model’s capacity to represent negative values and reduce information loss.

High-Resolution Network (HRNet) [35]: a network that maintains high-resolution representations throughout the processing pipeline, with parallel branches at multiple resolutions that repeatedly exchange information. This enables the accurate capture of thin cracks against complex asphalt textures.

DeepLabV3+ [36]: a model that integrates an Atrous Spatial Pyramid Pooling (ASPP) module for multi-scale feature extraction with a decoder for boundary refinement. Dilated (atrous) convolutions enlarge the receptive field without reducing spatial resolution, which makes the network robust to textural variations.

All four models are widely available in open-source toolchains, can be modified to accept a fourth input channel by adjusting only the first convolutional layer, and were trained under a unified optimization setup (Section 2.3). Taken together, they cover a broad spectrum of accuracy–efficiency trade-offs (from real-time to high-capacity settings), allowing an informative analysis of whether the probability map consistently improves segmentation quality across architectures and how such gains relate to computational constraints relevant for field deployment.

2.3. Training of Segmentation Models

All segmentation models were trained with consistent hyperparameters to ensure comparability across architectures. The Adam optimizer was employed with an initial learning rate of 0.0001, and BCEWithLogitsLoss, which is well suited to binary pixel-level classification, was selected as the loss function. Batch size varied from 4 to 16 depending on GPU memory constraints. To mitigate overfitting, an early stopping mechanism was applied, halting training if validation loss or IoU did not improve for 50 consecutive epochs.
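The early stopping rule can be sketched as a small framework-independent helper. The sketch assumes that "did not improve" means that neither validation loss nor validation IoU reached a new best value, which also matches the two checkpointing criteria (saved by loss and saved by IoU) used in the results; the class name and `min_delta` parameter are hypothetical.

```python
class EarlyStopping:
    """Stop when neither validation loss nor IoU has improved for `patience` epochs."""

    def __init__(self, patience=50, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_iou = float("-inf")
        self.epochs_without_improvement = 0

    def step(self, val_loss, val_iou):
        """Record one epoch; return True if training should stop."""
        improved = False
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            improved = True  # a checkpoint "saved by loss" would be written here
        if val_iou > self.best_iou + self.min_delta:
            self.best_iou = val_iou
            improved = True  # a checkpoint "saved by IoU" would be written here
        if improved:
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience
```

In a training loop, `if stopper.step(val_loss, val_iou): break` would be called once per epoch after validation.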

The hyperparameters used for segmentation model training are summarized in Table 1.

The training was conducted on a personal workstation with the following specifications:

CPU: AMD Ryzen 5 5600

RAM: 32 GB

GPU: Nvidia RTX 4060 Ti

OS: Ubuntu 22.04

Table 2 presents the training results of segmentation models with three-channel input, allowing for a direct comparison of baseline architectures under identical settings.

In summary, DeepLabV3+ attains the highest IoU (up to 0.5697 when saved by IoU). U-Net shows very high precision (≈0.93) but low recall (≈0.23–0.37), which depresses IoU. ENet reaches moderate IoU (up to 0.3063) with a lightweight design, while HRNet underperforms on this dataset (IoU ≈ 0.07–0.08). These observations motivate selecting DeepLabV3+ as the baseline backbone for evaluating probability-map variants in Section 2.7.

2.4. Evaluation Metrics

All models were evaluated using consistent metrics, with additional comparisons against the original YOLO11x-seg, SegFormer B2, and SwinUNet Tiny models. The models in this study were assessed using the following metrics: precision, recall, F1-score, IoU, and loss [37].

In semantic segmentation, precision quantifies the ratio of correctly predicted pixels of the target class to the total number of pixels predicted as that class:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

where TP (True Positive) denotes the number of pixels correctly classified as belonging to the target class, and FP (False Positive) represents the number of pixels incorrectly assigned to that class.

Recall reflects the model’s ability to identify all relevant pixels of the target class. It is defined as the fraction of correctly detected positive pixels out of all actual positive pixels:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where FN (False Negative) indicates the number of pixels from the target class that were not detected.

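To balance precision and recall, the F1-score is used. It is the harmonic mean of these two metrics, providing a single measure that accounts for both false positives and false negatives:

$$F1 = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$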

Another widely adopted metric is the Intersection over Union (IoU), also known as the Jaccard index, which evaluates the spatial agreement between the predicted segmentation mask and the ground truth mask:

$$\mathrm{IoU} = \frac{\mathrm{Intersection}}{\mathrm{Union}} = \frac{TP}{TP + FP + FN}$$

where Intersection corresponds to the number of pixels shared by both masks, and Union is the total number of pixels present in at least one of them (either predicted or ground truth).

These metrics collectively assessed both localization accuracy and completeness of crack detection.
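The pixel-level definitions above translate directly into a few array operations. This sketch assumes that predictions are obtained by applying a sigmoid to the network logits (consistent with training via BCEWithLogitsLoss) and thresholding at 0.5, and that counts are pooled over all pixels passed in; whether the reported values were averaged per image or pooled over the validation set is not stated, so both the threshold and the pooling are assumptions.

```python
import numpy as np


def logits_to_mask(logits, threshold=0.5):
    """Sigmoid + threshold, matching a model trained with BCEWithLogitsLoss."""
    return 1.0 / (1.0 + np.exp(-np.asarray(logits, dtype=float))) > threshold


def segmentation_metrics(pred, target, eps=1e-12):
    """Pixel-level precision, recall, F1-score, and IoU for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    tp = np.sum(pred & target)    # crack pixels predicted as crack
    fp = np.sum(pred & ~target)   # background pixels predicted as crack
    fn = np.sum(~pred & target)   # crack pixels that were missed
    precision = tp / (tp + fp + eps)  # eps avoids 0/0 on empty masks
    recall = tp / (tp + fn + eps)
    f1 = 2 * precision * recall / (precision + recall + eps)
    iou = tp / (tp + fp + fn + eps)   # Intersection / Union
    return {"precision": float(precision), "recall": float(recall),
            "f1": float(f1), "iou": float(iou)}


metrics = segmentation_metrics([[1, 1, 0, 0]], [[1, 0, 1, 0]])
```

With one true positive, one false positive, and one false negative, precision, recall, and F1 are all 0.5 while IoU is 1/3, which shows why IoU is the stricter of the overlap measures.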

2.5. Classification Models

To generate probability maps, a broad spectrum of models was investigated, covering both classical CNNs and Transformer-based architectures: AlexNet, MobileNetV2, MobileNetV3, ShuffleNet V2, Swin Transformer (Swin-Tiny), and Vision Transformer (ViT Tiny).

AlexNet [38]: one of the pioneering deep CNNs, comprising five convolutional layers (some followed by max pooling) and three fully connected layers, with ~60 million parameters and 650,000 neurons. ReLU activations improved gradient propagation, while Dropout in the fully connected layers reduced overfitting by randomly deactivating neurons during training.

MobileNetV2/V3 [39,40]: lightweight networks optimized for mobile and low-power devices. MobileNetV2 introduces inverted residual blocks with linear bottlenecks (no ReLU activation at the compressed stages), built from depthwise separable convolutions with an expansion factor, allowing efficient feature retention. MobileNetV3 builds upon this with neural architecture search (NAS), the hard-swish activation function, and Squeeze-and-Excitation (SE) blocks for dynamic channel recalibration, achieving an improved trade-off between accuracy and efficiency.
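The efficiency of depthwise separable convolutions comes from factorizing one k × k convolution into a per-channel k × k filter followed by a 1 × 1 channel-mixing convolution. The short calculation below compares weight counts (biases ignored); the channel width of 128 is a hypothetical example, not a MobileNetV2 layer size.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a k x k convolution mapping c_in to c_out channels (bias ignored)."""
    return k * k * c_in * c_out


def depthwise_separable_params(k, c_in, c_out):
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


standard = standard_conv_params(3, 128, 128)         # 147,456 weights
separable = depthwise_separable_params(3, 128, 128)  # 17,536 weights
ratio = standard / separable                          # roughly 8.4x fewer weights
```

The ratio approaches k² (here 9) as the channel count grows, which is why this factorization is central to both MobileNet versions.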

ShuffleNet V2 [41]: an efficient CNN optimized for mobile devices, evolving from ShuffleNet V1 [42]. While V1 relied on group convolutions and channel shuffle operations to reduce complexity, V2 replaced the group convolutions because of their memory access cost and introduced the channel split operation. At the start of each block, channels are divided into two branches: one remains unchanged, while the other undergoes convolutional transformations; the branches are then concatenated and their channels shuffled. This structure enhances information exchange at reduced computational cost. Multiple variants (0.5×, 1.0×, 1.5×, 2.0×) were proposed to balance accuracy and speed.
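The channel split and channel shuffle operations can be shown on a (C, H, W) array. This numpy sketch follows the stride-1 unit described above; the convolutional branch is replaced by a hypothetical stand-in function (`branch`) because only the data movement is being illustrated.

```python
import numpy as np


def channel_split(x):
    """Split a (C, H, W) feature map into two halves along the channel axis."""
    half = x.shape[0] // 2
    return x[:half], x[half:]


def channel_shuffle(x, groups):
    """Interleave channels of a (C, H, W) map across `groups` groups."""
    c, h, w = x.shape
    if c % groups:
        raise ValueError("channel count must be divisible by groups")
    return x.reshape(groups, c // groups, h, w).transpose(1, 0, 2, 3).reshape(c, h, w)


def basic_unit(x, branch):
    """Stride-1 ShuffleNet V2 unit: split, transform one half, concatenate, shuffle."""
    keep, transform = channel_split(x)
    out = np.concatenate([keep, branch(transform)], axis=0)
    return channel_shuffle(out, groups=2)


x = np.arange(6, dtype=float).reshape(6, 1, 1)
y = basic_unit(x, branch=lambda t: t * 10)  # stand-in for the 1x1 / 3x3 DW / 1x1 branch
```

After the shuffle, the output channels alternate between the untouched half (0, 1, 2) and the transformed half (30, 40, 50), so the next unit's split mixes information from both branches.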

ViT Tiny [43]: one of the first models to apply a standard Transformer encoder directly to images. Images are divided into fixed-size patches (e.g., 16 × 16), linearly projected into vectors, and processed by a Transformer encoder similar to those used in NLP. This enables the learning of global dependencies without convolutions. The Tiny variant uses a smaller embedding dimension and fewer attention heads, and therefore far fewer parameters, making it suitable for resource-limited tasks, although on small datasets it still benefits strongly from pretraining.
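The patch embedding step of ViT can be sketched in numpy: an image is cut into non-overlapping patches, each patch is flattened, and a linear projection maps it to a token. The 192-dimensional embedding matches the usual ViT-Tiny width; the random projection matrix is a hypothetical stand-in for learned weights, and the class token and position embeddings are omitted. Whether the 32 × 32 crack patches were resized before entering ViT Tiny is not stated in the text.

```python
import numpy as np


def patchify(image, patch):
    """Cut an (H, W, C) image into non-overlapping patches, flattened row by row.

    Returns an array of shape (num_patches, patch * patch * C).
    """
    h, w, c = image.shape
    if h % patch or w % patch:
        raise ValueError("image size must be a multiple of the patch size")
    x = image.reshape(h // patch, patch, w // patch, patch, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, patch * patch * c)


rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))           # e.g. one 32 x 32 RGB crack patch
tokens = patchify(image, 16)              # 4 tokens of 16 * 16 * 3 = 768 values each
projection = rng.normal(size=(768, 192))  # hypothetical learned linear embedding
embeddings = tokens @ projection          # (4, 192) sequence fed to the encoder
```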

Swin-Tiny [44]: a hierarchical Transformer with shifted windows. Instead of attending over the entire image globally, it computes self-attention within fixed-size local windows and shifts the window partition between successive blocks to enable cross-window communication. This design scales efficiently to larger images while capturing both fine local details (e.g., hairline cracks) and global structural patterns.
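The regular and shifted window partitions can be sketched with numpy reshapes and a cyclic roll by half a window, which is how the shift is commonly implemented. This is an illustrative sketch only: the attention computation and the mask that prevents attention across wrapped-around regions are omitted.

```python
import numpy as np


def window_partition(x, window):
    """Split an (H, W, C) map into (num_windows, window, window, C) local windows."""
    h, w, c = x.shape
    x = x.reshape(h // window, window, w // window, window, c)
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, window, window, c)


def shifted_window_partition(x, window):
    """Cyclically shift the map by half a window before partitioning."""
    shift = window // 2
    rolled = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(rolled, window)


x = np.arange(16).reshape(4, 4, 1)
regular = window_partition(x, 2)          # first window covers values 0, 1, 4, 5
shifted = shifted_window_partition(x, 2)  # first window covers values 5, 6, 9, 10
```

The first shifted window straddles the borders of four regular windows, which is what lets information flow between neighbouring windows in successive blocks.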

2.6. Training of Classification Models

The classification models were trained on balanced sets of patches (with and without cracks). The AdamW optimizer was used with a learning rate of 0.0001, with BCELoss as the loss function. A batch size of 64 ensured stable and efficient convergence. An early stopping strategy monitored loss and F1-score to avoid overfitting. Both randomly initialized and pretrained Transformer-based models were tested, with the pretrained variants showing substantially better performance on the relatively small dataset.

The performance of the classification models was evaluated on balanced datasets of patches containing cracks and non-crack regions. The results of training are summarized in Table 3, which reports the values of loss, accuracy, precision, recall, and F1-score for all architectures considered.

A review of the results shows that among convolutional architectures, MobileNetV2 achieved the most stable and competitive performance across all metrics (accuracy = 0.906, F1-score = 0.903). Both MobileNetV3-Large and ShuffleNetV2 also demonstrated strong results, slightly trailing behind MobileNetV2. In contrast, AlexNet, while historically important, performed considerably worse, highlighting its limitations compared to more recent lightweight CNNs.

When comparing Transformer-based approaches, it is evident that pretrained models significantly outperformed randomly initialized counterparts. For instance, Swin-Tiny Pretrained and ViT-Tiny Pretrained attained accuracy levels of 0.932 and 0.920, respectively, with F1-scores above 0.92, surpassing all CNN-based models. This finding underscores the effectiveness of transfer learning in scenarios with limited training data.

Overall, the best-performing models were Swin-Tiny Pretrained and ViT-Tiny Pretrained, confirming the suitability of Transformer-based architectures, particularly when pretrained weights are utilized, for crack versus non-crack classification tasks.

2.7. Ensemble Construction

The primary aim of this study is to rigorously evaluate the effectiveness of using an auxiliary (fourth) input channel—composed of crack-probability maps generated by an ensemble of binary classification models—for improving segmentation accuracy. In addition, the study assesses the advisability of incorporating Transformer-based classifiers into the ensemble in comparison with lightweight CNN alternatives.

At the first stage, 54 datasets were generated: six for each classification model. These six datasets resulted from three patch scales (32 × 32, 64 × 64, 128 × 128) combined with two model checkpoints (saved by loss and F1 criteria). Each dataset was used to train the DeepLabV3+ segmentation model, allowing the evaluation of probability maps produced by classification architectures. The IoU metric served as the primary comparison criterion.
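The text does not specify how patch-level classifier outputs are turned into a pixel-level probability map. The sketch below shows one plausible reading, stated as an assumption: the image is scanned with a non-overlapping grid at the chosen patch size, every pixel receives the crack probability of its patch, and the map is stacked with RGB as a fourth channel. The `classify` callable is hypothetical and stands for any trained patch classifier returning a probability.

```python
import numpy as np


def probability_map(image, classify, patch):
    """Fill each patch x patch block of an (H, W, 3) image with its crack probability."""
    h, w = image.shape[:2]
    prob = np.zeros((h, w), dtype=np.float32)
    for y in range(0, h, patch):
        for x in range(0, w, patch):
            block = image[y:y + patch, x:x + patch]  # border blocks may be smaller
            prob[y:y + patch, x:x + patch] = classify(block)
    return prob


def to_four_channels(rgb, prob):
    """Stack an (H, W, 3) image in [0, 1] with an (H, W) map into (H, W, 4)."""
    return np.concatenate([rgb.astype(np.float32), prob[..., None]], axis=-1)


image = np.zeros((64, 64, 3))
image[:, 32:] = 1.0  # bright right half stands in for a "crack" region
prob = probability_map(image, classify=lambda b: float(b.mean() > 0.5), patch=32)
four = to_four_channels(image, prob)
```

For 1280 × 1280 inputs, all three patch sizes (32, 64, 128) divide the image evenly, so no border handling would be needed in practice.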

Table 4 reports results for all classification models, while Table 5 and Table 6 present the best results along with representative cases from Transformer-based models. To avoid redundancy, only the better of the loss and F1 checkpoints is reported for each configuration, and results are ranked in descending order of IoU. The analysis revealed that MobileNetV2 consistently achieved the highest scores across all patch sizes (32 × 32, 64 × 64, 128 × 128), while Transformer architectures (ViT Tiny, Swin Tiny) generally underperformed in generating accurate probability maps.

The comparative analysis highlights that, although Transformer-based models achieved superior results during the classification training phase, their probability maps were notably less effective for crack segmentation than those of CNN-based models. Furthermore, CNN architectures consistently demonstrated faster inference, with substantially reduced processing time per image, which makes them more practical for large-scale applications.

At the second stage, based on the results in Table 4, MobileNetV2 was chosen as the baseline for ensemble construction due to its superior IoU performance. Additional tests combined it with the next three best models at the 32 × 32 and 64 × 64 scales. The aim was to determine whether architectural diversity could reduce false predictions and improve stability. The best performance at this stage was obtained with the combination of 32 × 32 MobileNetV2 and 64 × 64 MobileNetV2, which consistently achieved the highest IoU and recall among the tested ensembles. The corresponding results are reported in Table 7.

The third stage examined whether incorporating models trained on larger patches (128 × 128) could further enhance performance. Building on the best two-model ensemble identified in Table 7, the top three models at the 128 × 128 scale were added sequentially. As summarized in Table 8, the optimal configuration was achieved with the ensemble of 32 × 32 MobileNetV2 + 64 × 64 MobileNetV2 + 128 × 128 MobileNetV2, where the 128 × 128 model was selected based on the F1-score checkpoint. This three-model combination provided the highest overall IoU performance at this stage.

At the final stage, the best-performing three-model ensemble identified in Table 8 was extended by incorporating an additional 32 × 32 model. For this purpose, the second- to fourth-highest-ranked models from Table 4 at the 32 × 32 scale were sequentially tested as candidates. This approach was intended to evaluate whether increasing model diversity at the finest resolution could further improve the accuracy of the generated probability maps. The detailed results of these experiments are presented in Table 9.

According to Table 9, the final ensemble comprised:

32 × 32 MobileNetV2 (loss)

32 × 32 ShuffleNet V2 × 1.0 (loss)

64 × 64 MobileNetV2 (loss)

128 × 128 MobileNetV2 (F1)

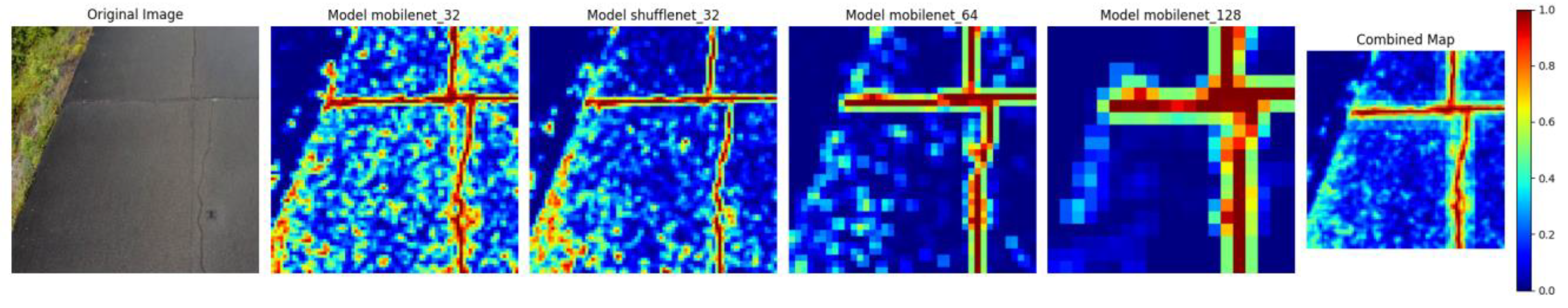

Figure 2 shows the probability maps produced by each model in the ensemble, along with the combined probability map indicating the presence of a crack.

As illustrated, pairing two classifiers on 32 × 32 patches with a pair of classifiers on larger patches (64 × 64 and 128 × 128) markedly reduced false positives while maintaining fine detail in the resulting probability maps.
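How the four per-model maps are merged into the combined map is not specified in the text. A minimal sketch, assuming an unweighted pixel-wise mean (optional weights are shown as a hypothetical extension), illustrates why agreement across scales suppresses isolated false positives: a region flagged by only one classifier is averaged down, while a region flagged by all of them keeps a high probability.

```python
import numpy as np


def ensemble_map(maps, weights=None):
    """Pixel-wise (optionally weighted) mean of same-sized probability maps."""
    stack = np.stack(maps, axis=0)  # (num_models, H, W)
    return np.average(stack, axis=0, weights=weights)


# Illustrative 1 x 2 maps in the order: 32x32 MobileNetV2, 32x32 ShuffleNet V2,
# 64x64 MobileNetV2, 128x128 MobileNetV2. The left pixel is a false positive
# of a single model; the right pixel is confirmed by all four.
maps = [np.array([[1.0, 1.0]]), np.array([[0.0, 1.0]]),
        np.array([[0.0, 1.0]]), np.array([[0.0, 1.0]])]
combined = ensemble_map(maps)
```

The combined values are 0.25 for the isolated detection and 1.0 for the confirmed one; alternatives such as a product or minimum would suppress disagreement even more strongly.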

Based on the probability maps generated by the ensemble, all considered segmentation models were additionally retrained with a four-channel input (RGB + probability map). For this purpose, only the first convolutional layer of each network was modified to accept four channels, with the weights of the new channel initialized as the mean of the RGB filters; no other architectural changes were introduced. Detailed numerical results and comparisons are presented in Section 3, providing a comprehensive evaluation of ensemble effectiveness.
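The first-layer modification can be sketched on a raw weight array of shape (out_channels, in_channels, kh, kw), the layout used by PyTorch convolutions. The sketch assumes that the pretrained RGB filters are kept unchanged and that only the added fourth input channel is initialized with their mean; in PyTorch the same operation would be applied to the first convolution's `weight` tensor (e.g., with `torch.cat` and `Tensor.mean`) before copying it into a new four-channel layer. The function name is hypothetical.

```python
import numpy as np


def expand_first_conv(weight):
    """(out, 3, kh, kw) RGB filters -> (out, 4, kh, kw) with the RGB mean appended."""
    if weight.shape[1] != 3:
        raise ValueError("expected RGB filters with 3 input channels")
    extra = weight.mean(axis=1, keepdims=True)  # one averaged filter per output channel
    return np.concatenate([weight, extra], axis=1)


w_rgb = np.arange(2 * 3 * 1 * 1, dtype=float).reshape(2, 3, 1, 1)
w_rgbp = expand_first_conv(w_rgb)  # fourth channel holds [1.0, 4.0] for the two filters
```

Initializing from the mean keeps the new channel's initial response on the same scale as the RGB channels, so the pretrained network is not disrupted at the start of fine-tuning.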

3. Results

Based on the multi-stage evaluation detailed in Section 2.7, the final ensemble consisted of four models: 32 × 32 MobileNetV2, 64 × 64 MobileNetV2, 128 × 128 MobileNetV2 (F1 checkpoint), and 32 × 32 ShuffleNetV2. Using this ensemble, all segmentation architectures were retrained with RGB + probability input and compared against their RGB (three-channel) baselines on the same validation split. For benchmarking, Transformer-based segmentation models (SegFormer B2, SwinUNet Tiny) and YOLO11x-seg were also included. This setup enabled a direct assessment of three- vs. four-channel configurations in comparison with both Transformer-based architectures and a widely used object-detection-driven baseline.

Tables 10–14 summarize validation precision, recall, F1-score, IoU, and loss for each model in two settings (best checkpoint selected by loss and by IoU) and report the relative change (Δ%) from three-channel to four-channel inputs. Overall, the four-channel configuration improves segmentation quality across all CNN architectures, most clearly in IoU. For example, DeepLabV3+ improves IoU from 0.5697 to 0.6062 (Δ +6.41%) when selected by IoU, with consistent gains in precision, recall, and F1-score; ENet shows larger relative gains (e.g., IoU +59.48% when selected by IoU); and the U-Net gains depend strongly on the checkpointing criterion (IoU +1.44% to +23.72%). The very large relative increases observed for HRNet stem from its low three-channel baselines. Among Transformer-based models, SegFormer B2 and SwinUNet Tiny achieve IoU values of 0.2719 and 0.5649, respectively, which remain below the best-performing CNN-based four-channel configurations. As a reference, YOLO11x-seg attains an IoU of 0.4987, also lower than DeepLabV3+.
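The Δ% values are relative changes with respect to the three-channel baseline. The helper below is only a worked check of that arithmetic, reproducing the DeepLabV3+ IoU figure quoted above; the second call uses rounded HRNet-like values (baseline ≈ 0.08, four-channel ≈ 0.64) to show how a weak baseline inflates relative gains.

```python
def relative_change(baseline, new):
    """Relative change in percent: (new - baseline) / baseline * 100."""
    if baseline == 0:
        raise ZeroDivisionError("relative change is undefined for a zero baseline")
    return (new - baseline) / baseline * 100.0


deeplab_delta = relative_change(0.5697, 0.6062)  # about +6.41%
weak_baseline_delta = relative_change(0.08, 0.64)  # about +700%
```

An absolute IoU gain of 0.0365 yields a modest Δ% for DeepLabV3+, while an absolute gain of 0.56 on a baseline near 0.08 yields a seven-fold relative increase, which is why the HRNet percentages should be read together with its absolute values.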

The remainder of this section presents consolidated, metric-wise comparisons for all architectures (3- vs. 4-channel inputs) and a concise qualitative illustration of typical segmentation improvements achieved with the additional probability map.

Table 10 reports validation precision for each segmentation architecture under two configurations: baseline three-channel (RGB) input and four-channel (RGB + probability map) input. Results are reported for the best checkpoint selected by loss and by IoU, with YOLO11x-seg, SegFormer B2, and SwinUNet Tiny included as external benchmarks.

Adding the probability map increases precision for all reported models. DeepLabV3+ improves by +4.05% (saved by loss) and +2.09% (saved by IoU), ENet by +6.63%/+4.21%, and U-Net by +2.18%/+3.03%; U-Net also attains the highest absolute precision among all configurations (0.9512 when saved by loss). HRNet exhibits very large relative gains (+977%/+649%) due to extremely low three-channel baselines; its four-channel precision (≈0.79–0.80) falls within the range of the other CNNs. The Transformer-based models also show high precision, with SegFormer B2 achieving 0.9247 (loss) and 0.8940 (IoU) and SwinUNet Tiny reaching 0.8418 (loss) and 0.8165 (IoU), but they do not surpass the best CNN results: U-Net exceeds them in both its three- and four-channel configurations, and the probability map widens this margin. The reference YOLO11x-seg precision (0.6428) is lower than that of all four-channel CNN-based models and the Transformer benchmarks.

Table 11 lists validation recall for each architecture with three-channel (RGB) and four-channel (RGB + probability map) inputs under two checkpointing criteria (saved by loss/by IoU). Δ% denotes the relative change from the three-channel baseline.

Incorporating the probability map leads to clear recall improvements for CNN-based models. The gains are moderate for DeepLabV3+ (+5.46% when saved by loss, +6.29% when saved by IoU) and marginal for U-Net under the IoU criterion (+0.11%), while ENet and HRNet exhibit the largest relative increases (+110.73%/+72.43% and +142.22%/+268.03%, respectively) due to low three-channel baselines. Among the Transformer-based benchmarks, SwinUNet Tiny reaches a recall of 0.6100 (loss) and 0.6485 (IoU), whereas SegFormer B2 attains considerably lower values of 0.2816 (loss) and 0.4395 (IoU); neither surpasses the top-performing four-channel CNNs such as HRNet and DeepLabV3+. The YOLO11x-seg reference (≈0.708) is comparable to the three-channel DeepLabV3+ but remains below its four-channel configuration.

Table 12 reports the F1-score values for the three- and four-channel models. In contrast to precision and recall, here the improvements appear more consistent across nearly all architectures. The relative gains range from modest (+1.65% for U-Net, IoU) to very substantial (over +67% for ENet, loss; and +518% for HRNet, IoU).

Adding the probability map improves the harmonic mean of precision and recall across most CNN-based models. DeepLabV3+ shows consistent but moderate gains (+4.87%/+4.32%), ENet achieves large relative increases (+67.17%/+40.62%), and the U-Net gains depend on the checkpointing criterion (+19.98%/+1.65%). HRNet again exhibits very large relative growth due to weak three-channel baselines, reaching competitive values in its four-channel form. The Transformer-based models (SegFormer B2, SwinUNet Tiny), evaluated with RGB input only, reach F1 ≈ 0.57–0.72 but do not surpass the top CNN results with probability-map integration. The YOLO11x-seg baseline (0.6461) remains below the stronger configurations.

Table 13 summarizes the IoU metrics. Here, the integration of the probability map yields particularly strong gains, with HRNet and ENet showing the most dramatic relative improvements (over +700% and +95%, respectively). Even in stronger baselines such as DeepLabV3+, the additional channel still contributes to a measurable increase (+7.40% by loss, +6.41% by IoU).

Probability maps yield systematic improvements in overlap accuracy for all CNN-based models. DeepLabV3+ increases by +7.40% (loss) and +6.41% (IoU), ENet by +95.42%/+59.48%, and U-Net by +23.72%/+1.44%. HRNet again shows very large relative gains, surpassing 0.64 in its four-channel configuration. SegFormer B2 and SwinUNet Tiny reach IoU values of 0.27–0.56 with RGB input but remain below the strongest CNN configurations with probability-map integration. YOLO11x-seg attains an IoU of 0.4987, also lower than four-channel DeepLabV3+.

Table 14 presents the validation losses. In most cases, the loss values increase slightly after adding the probability map. However, the highest value recorded among the CNN-based configurations (0.0252) remains very low in absolute terms.

Validation losses show mixed trends. For DeepLabV3+ and HRNet, the additional channel increases the loss slightly (+12.31%/+37.14%), but absolute values remain very low (<0.023). ENet records a minor reduction in loss when selected by loss (−9.28%), while U-Net stays within a narrow range (−3.03%/+12.70%). SegFormer B2 achieves the lowest absolute loss (≈0.0126–0.0158), while SwinUNet Tiny reports higher values (>0.23), possibly reflecting sensitivity to dataset scale. These results indicate that even when validation loss rises slightly, the overlap-based metrics (IoU/F1) consistently improve with the probability map.

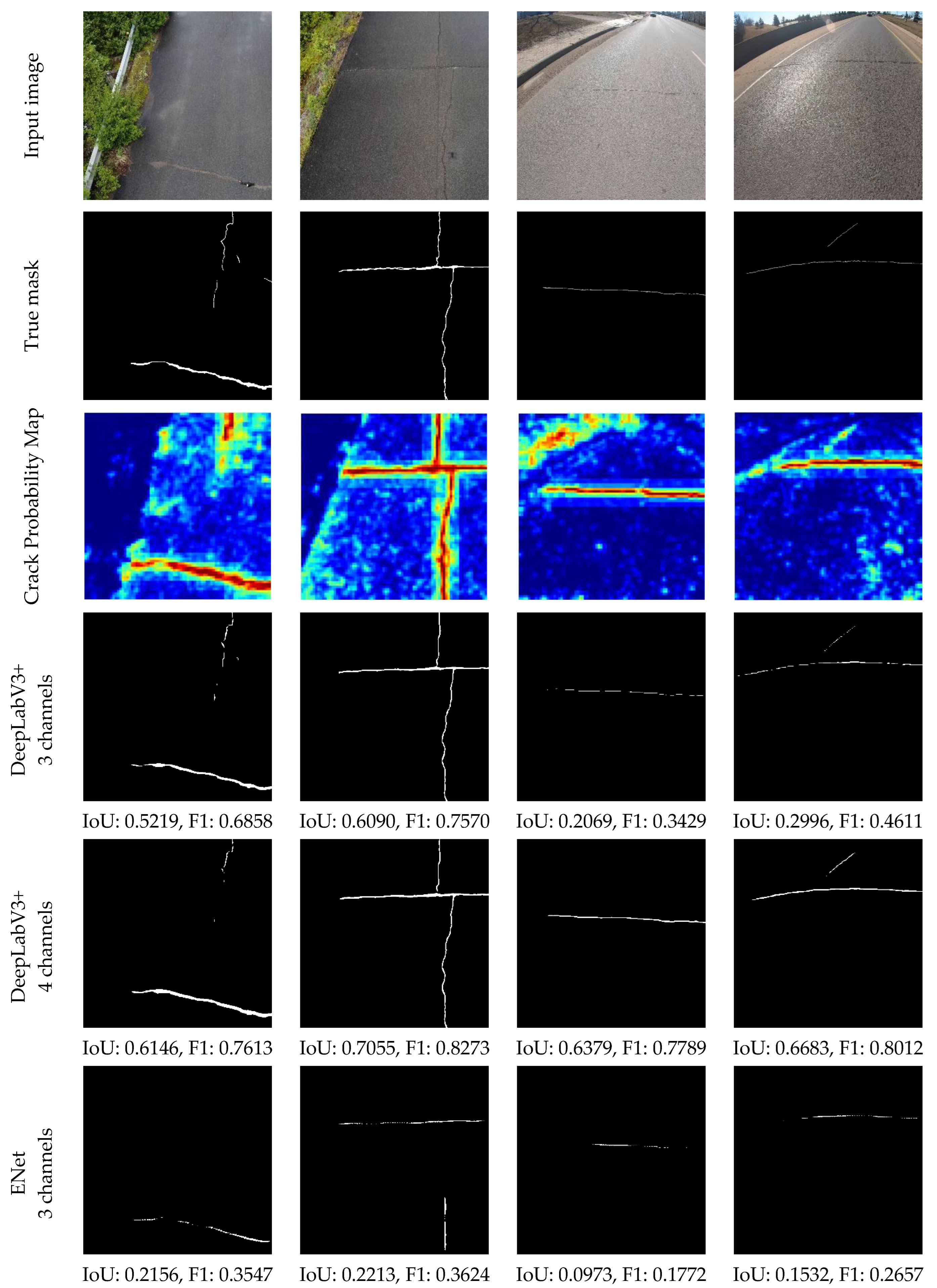

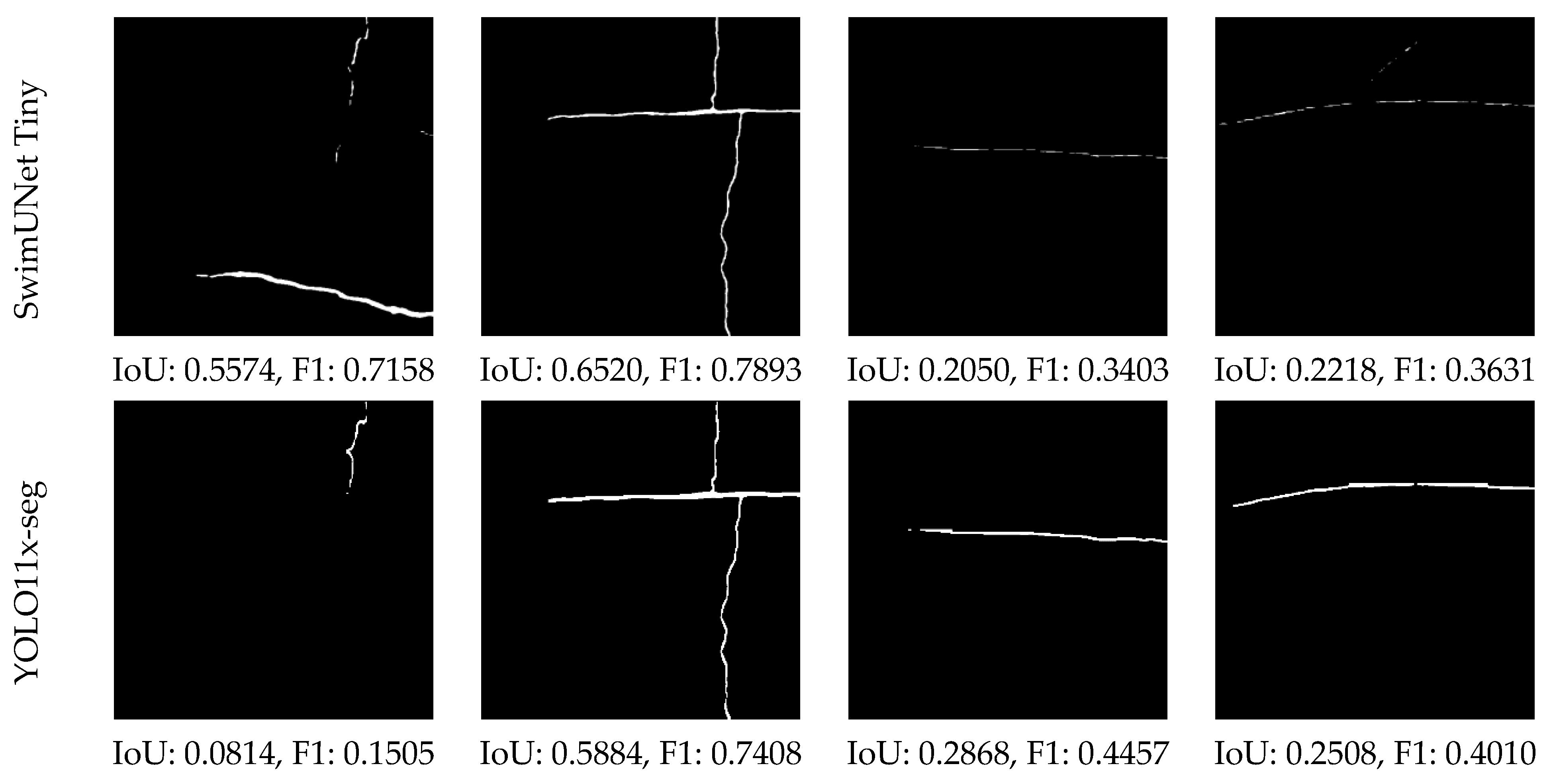

Figure 3 presents qualitative examples of crack segmentation, comparing baseline three-channel models with their four-channel counterparts, where the additional channel corresponds to the probability map generated by the classification ensemble. The examples include both drone-captured and action camera images, enabling an assessment of model robustness across different acquisition conditions.

As illustrated, only DeepLabV3+ consistently produces accurate crack delineations across both types of images, showing high alignment with the ground truth. Other architectures, particularly when processing action camera inputs, exhibit weaker baseline performance; however, the integration of the probability map systematically improves the coverage and continuity of predicted masks. HRNet demonstrates the most notable relative gains, with its weak three-channel baseline transformed into competitive four-channel outputs. U-Net and ENet also benefit, albeit to different extents, confirming that the proposed strategy is adaptable across diverse CNN families.

Figure 3 thus reinforces the quantitative findings from Tables 10–14: The probability map enhances both detection sensitivity and boundary consistency while limiting fragmented predictions. Although Transformer-based models such as SegFormer B2 and SwinUNet Tiny achieve strong precision and recall without modification, they do not surpass the best four-channel CNNs, such as DeepLabV3+. Importantly, all four-channel CNN configurations outperform the YOLO11x-seg reference under identical validation conditions.

Overall, the results highlight that incorporating ensemble-derived probability maps as an auxiliary channel provides a robust and computationally efficient means of improving crack segmentation, offering a practical complement or alternative to heavier state-of-the-art architectures.

4. Discussion

The proposed approach integrates a crack probability map, generated by an ensemble of classification models, as a fourth input channel for segmentation networks. The final ensemble used to produce the probability maps comprised four classifiers: MobileNetV2 models operating on 32 × 32, 64 × 64, and 128 × 128 patches (the 128 × 128 model using its F1-selected checkpoint) and a ShuffleNetV2 (x1_0) model operating on 32 × 32 patches. All segmentation models were then retrained with four-channel input (RGB + probability map) and compared against their three-channel baselines on the same validation split. YOLO11x-seg was included as a benchmarking reference, and SegFormer B2 and SwinUNet Tiny were added as Transformer-based baselines.
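The fusion step can be sketched as follows. This is a simplified, hypothetical illustration with several assumptions:

- Each ensemble member classifies non-overlapping square patches of its own size.
- Member outputs are averaged per pixel.
- The resulting map is appended to RGB as the fourth channel.

The actual tiling, overlap, and fusion rule of the ensemble are not specified in this section. All function names, and the stand-in classifier, are illustrative.

```python
from typing import Callable, List, Sequence, Tuple

Pixel = Tuple[float, float, float]     # normalized (R, G, B)
Image = List[List[Pixel]]              # rows x columns
ProbMap = List[List[float]]            # per-pixel crack probability
Classifier = Callable[[Image], float]  # hypothetical: patch -> P(crack)


def tile_probability_map(image: Image, patch: int, clf: Classifier) -> ProbMap:
    """Classify non-overlapping patch x patch tiles and paint each tile with its score.

    Edge tiles are simply cropped here; a real pipeline might pad or resize them.
    """
    h, w = len(image), len(image[0])
    prob = [[0.0] * w for _ in range(h)]
    for y0 in range(0, h, patch):
        for x0 in range(0, w, patch):
            tile = [row[x0:x0 + patch] for row in image[y0:y0 + patch]]
            score = clf(tile)
            for y in range(y0, min(y0 + patch, h)):
                for x in range(x0, min(x0 + patch, w)):
                    prob[y][x] = score
    return prob


def ensemble_probability_map(image: Image, members: Sequence[Tuple[int, Classifier]]) -> ProbMap:
    """Average the per-pixel maps of several (patch_size, classifier) members."""
    maps = [tile_probability_map(image, patch, clf) for patch, clf in members]
    h, w = len(image), len(image[0])
    return [[sum(m[y][x] for m in maps) / len(maps) for x in range(w)] for y in range(h)]


def stack_fourth_channel(image: Image, prob: ProbMap) -> List[List[Tuple[float, ...]]]:
    """Append the probability map to RGB, giving an (R, G, B, P) input per pixel."""
    return [[(*px, p) for px, p in zip(img_row, prob_row)]
            for img_row, prob_row in zip(image, prob)]


# Toy usage: a 4 x 4 image with a dark vertical "crack" in column 0
def dark_pixel_detector(tile: Image) -> float:
    """Stand-in classifier: 1.0 if the tile contains any dark pixel, else 0.0."""
    return 1.0 if any(px[0] < 0.2 for row in tile for px in row) else 0.0


image = [[(0.1, 0.1, 0.1) if x == 0 else (0.8, 0.8, 0.8) for x in range(4)] for _ in range(4)]
prob = ensemble_probability_map(image, [(2, dark_pixel_detector), (4, dark_pixel_detector)])
print(prob[0])  # [1.0, 1.0, 0.5, 0.5]
four_channel = stack_fourth_channel(image, prob)
print(four_channel[0][3])  # (0.8, 0.8, 0.8, 0.5)
```

Members with different patch sizes disagree at tile boundaries, and averaging turns that disagreement into intermediate probabilities rather than hard decisions. This softness is what the segmentation network receives as its prior.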

4.1. Quantitative Summary

Across Tables 10–14, the four-channel configuration yields systematic improvements. For IoU, gains range from moderate for DeepLabV3+ (from 0.5697 to 0.6062; Δ +6.41%) to large relative increases for ENet (e.g., +59.48% when the checkpoint is selected by IoU) and HRNet (very high relative gains due to extremely low three-channel baselines). F1-score improves for most architectures, with stable gains for DeepLabV3+ and pronounced improvements for ENet; HRNet exhibits the largest relative rise because of its weak baseline. Precision consistently increases across models, while recall also improves, with effect sizes varying by architecture. Although the validation loss often rises slightly after adding the fourth channel, absolute values remain very low (maximum around 0.0252), and these local optimization effects do not offset the overlap-based gains (IoU/F1). For reference, YOLO11x-seg reaches IoU 0.4987, while Transformer-based baselines (SegFormer B2, SwinUNet Tiny) exceed several CNN results but remain below the best four-channel DeepLabV3+ and HRNet configurations.
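The relative changes quoted in this section follow the usual percentage-change formula. The short sketch below is illustrative, not the authors' evaluation code. It reproduces the DeepLabV3+ IoU figure and shows why weak baselines such as HRNet's produce very large relative gains: the same absolute improvement is divided by a much smaller number.

```python
def relative_gain(baseline: float, improved: float) -> float:
    """Relative change in percent: (improved - baseline) / baseline * 100."""
    if baseline == 0:
        raise ValueError("relative gain is undefined for a zero baseline")
    return (improved - baseline) / baseline * 100.0


# DeepLabV3+ IoU reported above: 0.5697 -> 0.6062
print(f"{relative_gain(0.5697, 0.6062):+.2f}%")  # +6.41%

# Hypothetical values: the same absolute gain of 0.05 on a weak vs. a strong baseline
print(f"{relative_gain(0.05, 0.10):+.0f}%")  # +100%
print(f"{relative_gain(0.50, 0.55):+.0f}%")  # +10%
```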

4.2. Comparison with Integrated Attention Mechanisms

The proposed probability map can be regarded as a complementary strategy to conventional built-in attention modules rather than a direct replacement. Unlike mechanisms such as Squeeze-and-Excitation Networks (SE-Nets) [45] or the Convolutional Block Attention Module (CBAM) [46], which learn to reweight features implicitly during training, the probability map provides an explicit and interpretable prior that highlights the regions most likely to contain cracks. This design offers several advantages:

Modularity, as classification and segmentation models can be trained, updated, or replaced independently.

Computational flexibility, since classification and segmentation can be executed on different devices, enabling scalable deployment.

Minimal architectural modifications, as only the first convolutional layer is adapted to four channels, leaving the segmentation backbone unchanged.

Screening capability, as the probability map can itself act as a lightweight screening tool, allowing segmentation to be skipped when no cracks are detected.
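Adapting the first convolution, as described in the list above, amounts to widening its weight tensor from three to four input channels. The sketch below uses plain nested lists to stay framework-agnostic. The mean-of-RGB initialization for the new channel is an assumed heuristic, because this section does not state how the extra channel's weights were initialized.

```python
from typing import List

Kernel = List[List[float]]        # k x k spatial weights
ConvWeight = List[List[Kernel]]   # [out_channels][in_channels][k][k]


def add_input_channel(weight: ConvWeight) -> ConvWeight:
    """Widen a first-layer convolution from C to C + 1 input channels.

    Existing (e.g. pretrained RGB) kernels are copied unchanged; the kernel for the
    new probability-map channel is initialized as the mean of the existing kernels.
    This initialization is an assumed heuristic, not the study's documented choice.
    """
    widened: ConvWeight = []
    for filters in weight:  # one list of input-channel kernels per output channel
        n_in, k = len(filters), len(filters[0])
        mean_kernel = [[sum(filters[c][i][j] for c in range(n_in)) / n_in
                        for j in range(k)] for i in range(k)]
        copied = [[row[:] for row in kernel] for kernel in filters]
        widened.append(copied + [mean_kernel])
    return widened


# Toy usage: one output filter with 1 x 1 kernels for R, G, B
rgb_weight = [[[[1.0]], [[2.0]], [[3.0]]]]
print(add_input_channel(rgb_weight))  # [[[[1.0]], [[2.0]], [[3.0]], [[2.0]]]]
```

In a framework such as PyTorch, the same idea would typically mean constructing a new `torch.nn.Conv2d` with `in_channels=4` and copying the pretrained weights into its first three input slices. The rest of the backbone stays unchanged.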

At the same time, certain trade-offs exist. Segmentation accuracy becomes sensitive to the quality of the classification ensemble, false activations may propagate to the segmentation stage, and the two-stage pipeline introduces additional inference overhead compared to end-to-end attention. Nevertheless, the explicit nature of the probability prior makes the approach transparent and adaptable, providing a degree of interpretability and modularity that built-in attention mechanisms cannot directly offer.

4.3. Qualitative Observations

The examples in Figure 3 corroborate the quantitative findings. Four-channel models produce cleaner masks with fewer fragmented regions and better agreement with the ground truth. DeepLabV3+ remains robust across both drone and vehicle-mounted action-camera imagery. HRNet benefits markedly from the additional channel relative to its weak three-channel baseline. For U-Net, the effect is limited and depends on the checkpoint selection criterion.

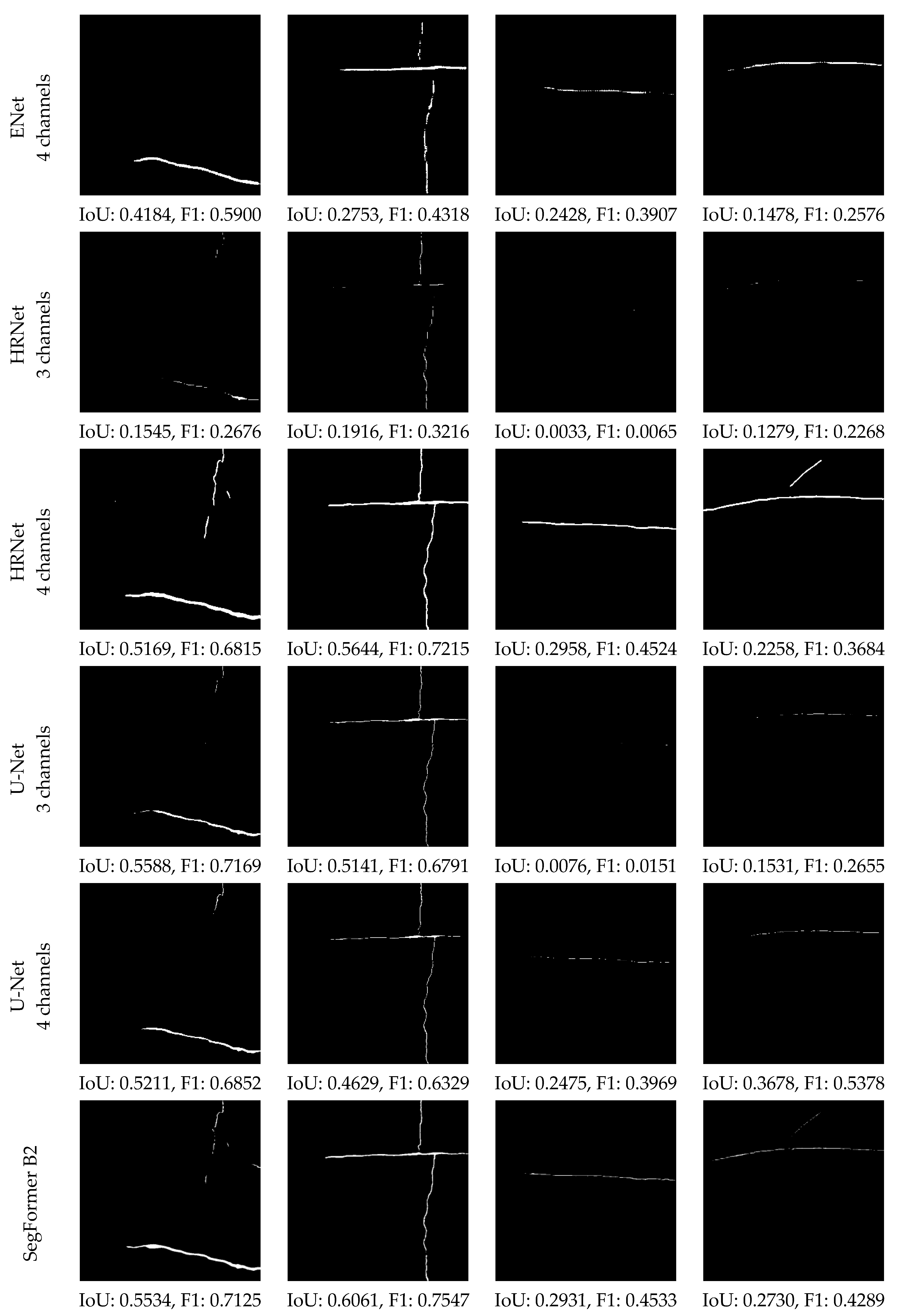

In addition to the successful examples, several challenging cases are illustrated in Figure 4, using DeepLabV3+ as the segmentation backbone.

The first case demonstrates cracks located in a hard shadow cast by a vehicle, which were not detected by either the ensemble or the segmentation model. In the second example, motion blur caused by UAV movement led to missed detection of partially blurred cracks, although sharp cracks were correctly identified; notably, cracks partially covered with sand were successfully detected, and vegetation did not produce false positives. In the third case, the ensemble generated a noisy probability map that highlighted all cracks, but the segmentation network failed to fully utilize this information, resulting in discontinuities in the predicted mask. The fourth case involves low-contrast cracks filled with dirt, where the ensemble identified only a short fragment with low confidence, while the segmentation model captured approximately half of the crack pattern.

To complement the qualitative examples in

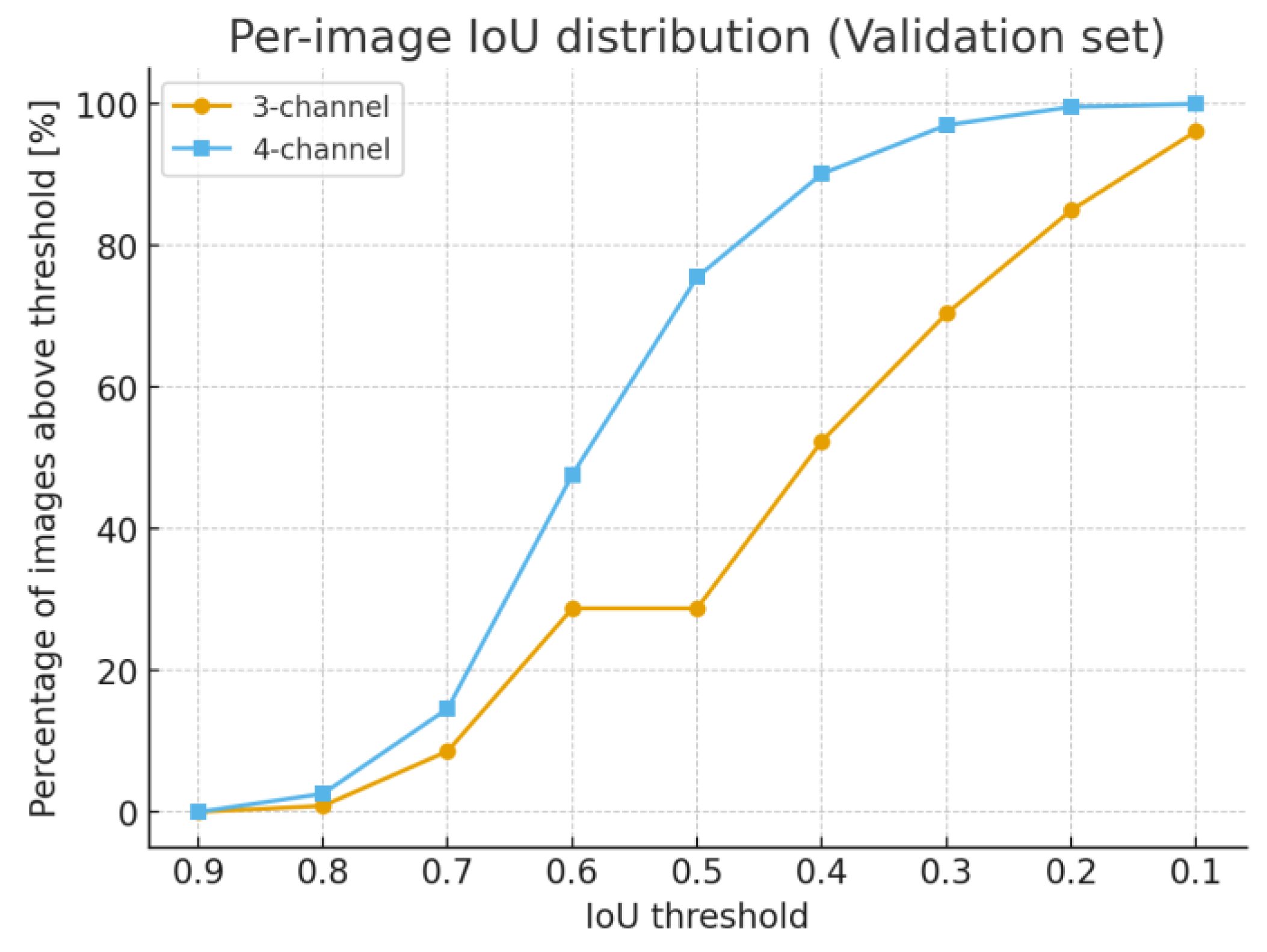

Figure 4, we also conducted a quantitative failure case analysis.

Figure 5 presents the cumulative distribution of per-image IoU scores on the validation set for three-channel and four-channel models. The four-channel configuration consistently achieves a higher proportion of high-IoU predictions, confirming the stability of the proposed approach. Using IoU < 0.4 as a threshold for failure, 21 images (≈9% of the validation set) were identified as low-quality cases. Their distribution across categories is summarized in Table 15: 11 involved low-contrast cracks, 5 were caused by false activations in the probability maps, 4 by shadows, and 2 by noisy probability maps.
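The two quantities used in this failure analysis, an empirical cumulative distribution of per-image IoU and a count of images below a cutoff, can be computed as in the sketch below. The scores are synthetic placeholders, not the study's data, and the function names are illustrative.

```python
from bisect import bisect_right
from typing import Dict, List, Sequence


def empirical_cdf(ious: Sequence[float], grid: Sequence[float]) -> List[float]:
    """Share of images whose per-image IoU is <= each grid value."""
    ordered = sorted(ious)
    return [bisect_right(ordered, g) / len(ordered) for g in grid]


def failure_summary(ious: Sequence[float], cutoff: float = 0.4) -> Dict[str, float]:
    """Count images with IoU strictly below the failure cutoff and their share."""
    failures = sum(1 for v in ious if v < cutoff)
    return {"failures": failures, "share": failures / len(ious)}


# Synthetic per-image IoUs for illustration only (not the study's data)
ious = [0.10, 0.35, 0.50, 0.70, 0.90]
print(empirical_cdf(ious, [0.4, 0.8]))  # [0.4, 0.8]
print(failure_summary(ious))            # {'failures': 2, 'share': 0.4}
```

Applied to the reported counts, 21 failures out of 233 validation images give a share of about 0.090, matching the ≈9% quoted above. When two CDF curves are compared, the curve lying lower at a given IoU has fewer images at or below that quality level.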

This quantitative assessment confirms that failure cases are not isolated but occur systematically under challenging acquisition conditions. Moreover, they highlight the dual role of ensemble activations: Sufficiently confident false activations may propagate into segmentation and cause spurious detections, while strong true activations do not always guarantee successful segmentation. These insights reinforce the importance of ensemble calibration, adaptive fusion strategies, and more diverse training data to further improve robustness.

4.4. Computational Aspects

The ensemble that delivers the best probability maps yields the highest IoU at a practical processing time of approximately 0.6–0.7 s/image (≈0.63 s/image for the best four-model configuration, with alternatives at ≈0.59 and 0.72 s/image). On the classification side, CNN-based models infer substantially faster than their pretrained Transformer counterparts, which supports the choice of CNNs as the ensemble backbone for large-scale or field deployments.

An additional observation concerns the relative performance of Transformer-based classifiers compared with lightweight CNNs. Despite their strong results on large-scale vision benchmarks, pretrained Transformer backbones underperformed in our setting. Three factors likely explain this outcome. First, Transformers are known to require very large training datasets to exploit their self-attention capacity effectively, whereas our patch-level datasets (32 × 32, 64 × 64, 128 × 128) were comparatively limited. Second, the architectural complexity of Transformers may be excessive for the relatively simple binary task of classifying patches as crack or non-crack, incurring higher computational overhead without proportional accuracy gains. Third, Transformers operate on patch tokens (e.g., 16 × 16 pixels) and relate them through self-attention, either globally or within windows (e.g., 7 × 7 tokens). This favors capturing broader context but may overlook very fine local structures inside each patch. By contrast, the small convolutional kernels of lightweight CNNs (e.g., 3 × 3 filters in MobileNet or ShuffleNet) are inherently suited to emphasizing local textures and thin crack patterns. Together, these factors plausibly explain why CNNs produced more reliable probability maps in this study, making them preferable for small-data regimes and real-time applications.
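The third factor can be made concrete with simple arithmetic. The sketch assumes a ViT-style embedding with non-overlapping 16 × 16 patches, a common configuration but not necessarily the one used by the classifiers here. Under that assumption, a 32 × 32 crack patch collapses into only four tokens. A 3 × 3 convolution with stride 1 and padding 1, by contrast, keeps a response at every pixel. The layer settings are illustrative.

```python
def patch_tokens(side: int, patch: int) -> int:
    """Number of non-overlapping patch tokens for a square input (ViT-style embedding)."""
    if side % patch:
        raise ValueError("input side must be divisible by the patch size")
    return (side // patch) ** 2


def conv_output_positions(side: int, kernel: int = 3, stride: int = 1, padding: int = 1) -> int:
    """Spatial positions produced by one convolution over a square input."""
    out = (side + 2 * padding - kernel) // stride + 1
    return out * out


for side in (32, 64, 128):
    print(side, patch_tokens(side, 16), conv_output_positions(side))
# 32 4 1024 / 64 16 4096 / 128 64 16384
```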

4.5. Practical Implications for Deployment

From a practical standpoint, the proposed approach is attractive for deployment. UAV and vehicle-mounted imagery can be processed with minimal hardware modification: Classification can run on one device, segmentation on another. This modularity supports integration into pavement management systems, where the method can simplify adoption since it does not substantially increase computational complexity. In practice, deployment could rely on existing servers, accepting a modest increase in processing time, or alternatively on minimal digital infrastructure upgrades that distribute classification and segmentation tasks across separate devices. The ensemble itself may also serve as a lightweight, standalone screening tool on low-power hardware such as entry-level laptops or GPUs, enabling preliminary assessments of pavement condition before full segmentation is performed. This flexibility enables scalable inspection pipelines without requiring expensive multimodal sensors or large investments in new hardware.
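A minimal sketch of the screening mode mentioned above follows. The gating rule and both default values are hypothetical choices for illustration. The rule sends an image to segmentation only when at least a minimum fraction of its pixels have an ensemble crack probability at or above a threshold. The study does not specify how screening decisions would be made.

```python
from typing import Callable, List, Optional, TypeVar

T = TypeVar("T")
ProbMap = List[List[float]]


def screen_then_segment(prob_map: ProbMap, segment: Callable[[], T],
                        threshold: float = 0.5, min_fraction: float = 0.001) -> Optional[T]:
    """Run the (expensive) segmentation step only if the ensemble flags cracks.

    threshold and min_fraction are hypothetical tuning parameters. Returns None
    for images that are screened out.
    """
    total = sum(len(row) for row in prob_map)
    flagged = sum(1 for row in prob_map for p in row if p >= threshold)
    if total == 0 or flagged / total < min_fraction:
        return None  # screened out: no crack evidence, segmentation is skipped
    return segment()


# Toy usage with a stand-in segmentation call
def fake_segmentation() -> str:
    return "mask"


print(screen_then_segment([[0.1, 0.2], [0.0, 0.3]], fake_segmentation))  # None
print(screen_then_segment([[0.9, 0.2], [0.0, 0.3]], fake_segmentation))  # mask
```

Passing segmentation in as a callable keeps the classifier and the segmentation network decoupled, so each can run on a different device, as described above.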

4.6. Limitations and Potential Improvements

Despite the overall improvement in segmentation quality, this study has some limitations:

Segmentation quality is sensitive to the reliability of the probability maps; occasional false activations from the ensemble can propagate to the four-channel input and locally reduce model confidence.

Generalization was assessed under specific acquisition conditions; broader validation on diverse pavement types and capture setups is warranted.

Not all architectures exploit the additional channel equally well; some (e.g., U-Net) may require tailored integration (e.g., attention mechanisms, adaptive channel fusion, or deeper insertion points for the probability map).

Final performance can depend on the checkpoint selection criterion (loss vs. IoU), suggesting that systematic analysis of selection strategies is beneficial.

Direct comparison with crack-specific architectures (e.g., DeepCrack, CrackFormer) was not feasible due to low-resolution datasets and high computational requirements, though they remain important references for future work.

A more detailed examination of these aspects highlights their implications. First, the dataset consisted of 935 images (702 training, 233 validation), captured in relatively narrow acquisition settings (sunny UAV flights and action camera recordings during winter). While sufficient to demonstrate feasibility, this limited coverage restricts the generalization of trained models to other pavement types (e.g., concrete, composite, or different asphalt mixes) and environmental conditions such as nighttime, rain, or strong shadows. Second, the effectiveness of segmentation is tied to the reliability of the probability maps: localized false activations (e.g., shadows, vegetation, or debris) can reduce confidence in the four-channel input. Third, the improvements obtained with probability maps are not uniform across segmentation backbones: Stronger architectures tend to show moderate yet stable gains, while weaker baselines benefit more substantially, in some cases surpassing initially stronger models. Finally, model selection based on different checkpointing criteria (loss vs. IoU) led to noticeable variations in outcomes, underscoring sensitivity to training dynamics.

Addressing these issues through ensemble refinement, dataset expansion (including synthetic augmentation and domain adaptation), broader validation campaigns, and architecture-level adaptation would strengthen both accuracy and robustness. In addition, semi-supervised annotation and generative approaches to dataset enrichment may extend applicability and help unlock the potential of Transformer-based classifiers, complementing the strengths of lightweight CNNs.

5. Conclusions

Incorporating an ensemble-derived probability map as a fourth channel is an effective and minimally invasive means to improve crack segmentation on asphalt imagery. The proposed method delivers consistent gains in IoU, F1-score, precision, and recall across multiple architectures and surpasses the YOLO11x-seg reference under identical validation conditions. While Transformer-based segmentation models have shown strong potential in recent literature, in this study the best-performing four-channel CNN configurations (DeepLabV3+, HRNet) achieved superior results across most metrics. The extent of improvement, however, varies by backbone: Stronger models generally gain moderately, while weaker baselines benefit more substantially, in some cases exceeding initially stronger configurations. These findings suggest that modern CNN backbones, when combined with ensemble-derived probability maps, can offer more robust generalization on relatively small, heterogeneous datasets, making them particularly attractive for practical infrastructure monitoring. Given its moderate computational cost and compatibility with common backbones, the method is well suited to practical inspection pipelines (both ground and aerial).

Future research directions include:

Refining and calibrating the classification ensemble to reduce false activations.

Exploring alternative integration strategies for the probability map (e.g., attention-based fusion, adapter layers, or multi-layer injection rather than input-only).

Extending evaluation to additional surface types and acquisition regimes.

Analyzing the effect of checkpoint selection strategies on final performance.

Assessing probability-map integration for Transformer-based segmentation models.

Using generative AI for dataset expansion and developing pre-trained models tailored to road and infrastructure inspection.

Overall, this study demonstrates that probability maps derived from lightweight classifier ensembles can serve as a scalable solution for automated pavement crack segmentation, bridging the gap between academic research and deployable systems for road management.