Abstract

The early detection of fire and smoke is essential for mitigating human casualties, property damage, and environmental impact. Traditional sensor-based and vision-based detection systems frequently exhibit high false alarm rates, delayed response times, and limited adaptability in complex or dynamic environments. Recent advances in deep learning and computer vision have enabled more accurate, real-time detection through the automated analysis of flame and smoke patterns. This paper presents a comprehensive review of deep learning techniques for fire and smoke detection, with a particular focus on convolutional neural networks (CNNs), object detection frameworks such as YOLO and Faster R-CNN, and spatiotemporal models for video-based analysis. We examine the benefits of these approaches in terms of improved accuracy, robustness, and deployment feasibility on resource-constrained platforms. Furthermore, we discuss current limitations, including the scarcity and diversity of annotated datasets, susceptibility to false alarms, and challenges in generalization across varying scenarios. Finally, we outline promising research directions, including multimodal sensor fusion, lightweight edge AI implementations, and the development of explainable deep learning models. By synthesizing recent advancements and identifying persistent challenges, this review provides a structured foundation for the design of next-generation intelligent fire detection systems.

1. Introduction

Fires, whether wildfires, structural fires, or industrial fires, are highly destructive and often long-lasting, and have therefore always threatened human life, property, and the environment. In recent decades, climate change, urban sprawl, and inadequate forest management strategies have increased their frequency and intensity. In 2020 alone, wildfires worldwide scorched about 400 million hectares, an area larger than the Indian subcontinent, devastating ecosystems and displacing populations [1]. Moreover, fires claim over 180,000 lives every year, mostly through burns and smoke inhalation, which calls for prompt and accurate early detection [2].

The rising occurrence of fires and fire-related deaths highlights the shortcomings of conventional fire monitoring systems and creates considerable opportunities for smart, rapid fire detection systems. Heat detectors, smoke detectors, flame detectors, and satellite remote sensing have long been important technologies in fire prevention and response. However, many of these systems still have limited capabilities in real-world situations. Ionization and photoelectric smoke detectors can be highly susceptible to false-alarm sources such as dust, steam, or cooking vapors [3], and in most applications thermal sensors [4] fail when the heat source is not in their immediate vicinity or changes rapidly. Satellites provide wide-area coverage, but satellite-based detection is often delayed by coarse temporal resolution and hampered by cloud cover during severe weather [5].

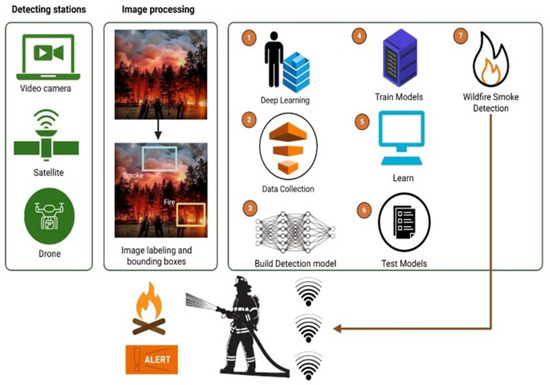

Even traditional vision-based fire detection systems, which rely on hand-crafted features such as color and motion, have faced significant challenges in robustness and generalizability across diverse environmental conditions [6]. These limitations, coupled with rapid advances in artificial intelligence (AI) and, in particular, deep learning (DL), have driven a paradigm shift in fire and smoke detection research. DL architectures—including convolutional neural networks (CNNs), recurrent neural networks (RNNs), and spatiotemporal models—have demonstrated superior performance in computer vision tasks such as object recognition, segmentation, and action detection [7,8]. By leveraging these capabilities, DL enables more reliable, real-time, and flexible fire detection in complex environments [9], as it can automatically learn hierarchical representations of subtle visual features such as smoke, flames, and related confounding patterns. Figure 1 illustrates the general end-to-end workflow for wildfire smoke detection using DL, encompassing data acquisition from cameras, satellites, or drones, model training, and real-time alert generation.

Figure 1.

This diagram illustrates the end-to-end process of wildfire smoke detection using deep learning. Detection begins with image data collected from sources such as video cameras, satellites, and drones. These images are preprocessed, including labeling with bounding boxes that mark smoke and fire regions. The labeled data are then used for model development, which comprises data collection, model building, training, and testing. Once trained, the detection model can accurately identify wildfire smoke in real time. Alerts are then sent to emergency responders, enabling early action to prevent fire spread and minimize damage.
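The alerting stage at the end of this pipeline is where false alarms become costly. One simple way to suppress single-frame spurious detections is to require that a detection persist over several frames before responders are notified. The following is a minimal Python sketch under stated assumptions: a detector already produces one smoke confidence per frame, and the class name `PersistenceAlarm` and its parameters `n`, `k`, and `threshold` are hypothetical, with illustrative defaults that are not taken from any surveyed system.

```python
from collections import deque


class PersistenceAlarm:
    """Hypothetical k-of-n alert rule on per-frame detector confidences.

    An alert fires only when at least `k` of the last `n` frames have a
    confidence at or above `threshold`. Defaults are illustrative only.
    """

    def __init__(self, n=10, k=6, threshold=0.5):
        if not 0 < k <= n:
            raise ValueError("require 0 < k <= n")
        self.k = k
        self.threshold = threshold
        self.window = deque(maxlen=n)  # keeps only the most recent n decisions

    def update(self, score):
        """Add one frame's smoke confidence; return True if an alert should be sent."""
        self.window.append(score >= self.threshold)
        return sum(self.window) >= self.k


# Usage: isolated positive frames are not enough until k of the last n agree.
alarm = PersistenceAlarm(n=5, k=3, threshold=0.5)
decisions = [alarm.update(s) for s in [0.9, 0.1, 0.8, 0.2, 0.7]]
print(decisions)  # [False, False, False, False, True]
```

Larger `n` and `k` trade detection latency for fewer nuisance alerts, since the rule needs at least `k` positive frames before it can fire.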

Recent technological advances have further accelerated the integration of DL into practical fire and smoke detection systems. The widespread availability of visual sensors, including RGB, infrared, and drone-mounted cameras, has facilitated the creation of richer datasets [10], while modern DL frameworks (e.g., TensorFlow, PyTorch) and high-performance GPUs have lowered development barriers [11]. Concurrently, edge computing has enabled real-time inference even in resource-constrained settings [12]. Within this context, CNNs remain central for fire classification and localization [13], with extensions such as Faster R-CNN enhancing both detection accuracy and bounding-box precision [14]. Lightweight architectures, such as YOLOv4-tiny, support low-latency UAV-based wildfire monitoring [15], while hybrid CNN–LSTM and 3D CNN models capture spatiotemporal dynamics, improving early detection capabilities [10,16,17]. Furthermore, multimodal fusion approaches that combine RGB, infrared, and thermal imagery have demonstrated superior robustness compared to unimodal systems, reducing false positives and improving overall detection performance [17,18,19].

Despite these advancements, several challenges remain for real-world deployment. The limited availability of large, annotated, and diverse datasets constrains model generalization, as most public datasets contain fewer than 10,000 samples and lack coverage of night-time, urban, or foggy conditions [17,20]. Consequently, models remain susceptible to misclassifying fire-like distractors, such as sunlight, headlights, fog, or steam [21]. High computational demands further constrain deployment, necessitating optimization strategies such as pruning, quantization, and knowledge distillation to enable effective operation on embedded platforms [12,22]. Latency is also critical, as emergency response applications often require inference times below 100 ms, whereas current embedded implementations may exceed one second per frame [15]. Additionally, the inherent “black-box” nature of DL raises accountability concerns, motivating the use of interpretability techniques such as Grad-CAM [21,23], though standardized solutions are still lacking. Finally, integration into emergency infrastructures, compliance with regulatory standards, and the effective management of deployed models remain underexplored [24], limiting the transition of DL-based fire detection from research prototypes to operational systems.
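Of the optimization strategies mentioned above, quantization is the easiest to illustrate. The sketch below shows symmetric, per-tensor post-training quantization of float weights to signed 8-bit integers, so each weight occupies one byte instead of the four used by float32. It is a simplified illustration assuming a single scale per tensor; production toolchains typically add calibration data, per-channel scales, and integer inference kernels, and the function names here are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: w ~= q * scale, with q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q, scale):
    """Map the integer codes back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.031]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
# Rounding error per weight is at most half a quantization step.
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, max_err <= scale / 2)
```

Whether such a model then meets a latency budget such as 100 ms depends on whether the target hardware executes integer arithmetic efficiently, not on the storage savings alone.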

1.1. Critical Review of Existing Surveys

Several recent surveys have comprehensively examined the progression of fire and smoke detection methods, highlighting the transition from traditional handcrafted approaches to modern AI-driven frameworks. Gaur et al. [25] traced this evolution in video-based detection, contrasting the efficiency but limited robustness of feature-based techniques with the higher accuracy yet data-intensive requirements of deep learning models. A similar perspective was provided by Jin et al. [26], who emphasized CNN-, RNN-, and hybrid architectures for video recognition, detection, and segmentation, while also presenting benchmarks with datasets such as VisiFire, BoWFire, and FASDD. Complementary to this, Gragnaniello et al. [27] proposed a scenario-based taxonomy, re-annotating existing datasets to evaluate methods across different fire sizes and activity levels, thereby offering a more reliable benchmarking framework. Collectively, these reviews underline how deep learning has reshaped video-based fire detection by improving recognition accuracy and enabling more systematic evaluation protocols.

Other studies have focused on broader applications of machine learning and deep learning across multiple fire monitoring contexts. Alkhatib et al. [28] reviewed the role of ML algorithms, ranging from supervised to reinforcement learning, in forest fire detection, prediction, and mapping using sensor networks, UAV imagery, and remote sensing data. Ghali and Akhloufi [29] and Vasconcelos et al. [30] extended this scope to remote sensing and wildland fire monitoring, synthesizing advances in CNNs, lightweight models, and Vision Transformers, while also mapping key datasets such as BoWFire, FLAME, CorsicanFire, and FLAME2. Yang et al. [31] reinforced this by documenting the evolution of satellite-based datasets (e.g., Landsat, MODIS, VIIRS, Sentinel) and integrating them with modern DL architectures like ConvLSTMs and transformers for large-scale wildfire monitoring. In parallel, Sulthana et al. [32] provided insights into sensing technologies beyond vision, covering smoke, heat, flame, and gas detectors, as well as IoT-enabled multi-sensor systems for real-time detection. More focused analyses, such as those of Özel et al. [33] and Khan et al. [34], categorized image- and AI-based techniques for forest and surveillance contexts, respectively, emphasizing the role of multimodal data and UAV/UGV platforms. Finally, Diaconu [35] offered a scientometric perspective, mapping research trends across more than 2300 publications and synthesizing classical ML methods alongside contemporary DL approaches.

In contrast, the present review offers a more comprehensive and integrative perspective, encompassing traditional sensor-based methods, machine learning techniques, and deep learning approaches, while systematically contextualizing their application across scenarios ranging from indoor surveillance to UAV- and satellite-based wildfire monitoring. Furthermore, this work emphasizes the significance of edge AI, lightweight architectures, and multimodal fusion strategies—areas that are under-represented in prior reviews but critical for practical, real-time deployment. To situate our contribution clearly, Table 1 provides a structured comparison of existing state-of-the-art surveys with the present work, highlighting distinctions in scope, focus, and methodological coverage.

Table 1.

Comparison of existing fire and smoke detection surveys and our work.

1.2. Main Contributions

The contributions of this research are as follows:

- The paper provides a comprehensive compendium of deep learning architectures for fire and smoke detection, including CNNs, YOLO, Faster R-CNN, and LSTM-based spatiotemporal models, covering classification, object detection, segmentation, and hybrid systems. It further reports their effectiveness in terms of accuracy, speed, and suitability for real-time deployment, especially under resource constraints.

- It provides a detailed comparative assessment of public datasets grouped by detection focus (fire, smoke, and combined) and discusses data imbalance, limited variability, and the lack of diverse annotation types, thereby clarifying the data bottleneck that constrains model training and evaluation.

- It examines real-world deployment contexts, such as urban, forest, tunnel, marine, and drone-based settings, highlights the importance of edge AI and lightweight models for practical deployment, and shows how environmental, contextual, and infrastructural constraints shape detection performance and model design.

- It identifies key open challenges, including high false alarm rates, limited interpretability (the difficulty of explaining why a model made a given decision), and poor generalization to unseen or changing environments. It then proposes research directions to address them, namely multimodal sensor fusion, federated learning, synthetic data, and explainable AI, which can guide the design of current and next-generation fire detection systems.

The paper is organized as follows. Section 1 presents the introduction, outlining the motivation for early fire and smoke detection and the limitations of traditional approaches in terms of responsiveness, accuracy, and adaptability. Section 2 describes the methodology adopted for this review, detailing the systematic search strategy, inclusion and exclusion criteria, and analytical procedures. Section 3 provides an overview of traditional fire and smoke detection technologies, such as sensor-based and rule-based methods, and discusses their operational limitations in dynamic environments. Section 4 focuses on deep learning models, highlighting various architectures (e.g., CNNs, RNNs, YOLO, hybrid models), their training requirements, and comparative advantages for fire and smoke detection tasks. Section 5 presents a detailed review of publicly available datasets used in fire and smoke detection research, examining their scale, annotation formats, image modalities, and the influence of dataset characteristics on model performance. Section 6 introduces a taxonomy of detection types, classifying approaches based on environmental conditions (e.g., forest, indoor, urban) and technical parameters (e.g., image modality, real-time constraints). Section 7 discusses deployment strategies, with a particular focus on edge AI systems for real-time fire and smoke detection in resource-constrained environments. Section 8 outlines key open challenges—such as dataset bias, model generalizability, and false positives—while Section 9 identifies promising directions for future research. Finally, Section 10 concludes the paper by summarizing the main findings and offering recommendations to guide the development of robust, scalable, and intelligent fire and smoke detection systems.

2. Review Methodology

In this section, we describe the review methodology adopted to investigate the current landscape of deep learning-based techniques for early fire and smoke detection. A systematic and structured approach was implemented to ensure a thorough examination of relevant literature, reduce potential biases, and support a critical synthesis of emerging trends, methods, datasets, and application areas. This section details the search strategy, inclusion and exclusion criteria, and analytical procedures employed to derive insights from the selected studies, with particular emphasis on assessing model performance, deployment feasibility, and real-world applicability.

2.1. Systematic Literature Review Methodology

To identify the relevant literature for this review on deep learning approaches to early fire and smoke detection, a comprehensive keyword-driven search strategy was adopted. The search utilized terms such as “early fire detection,” “smoke detection,” “wildfire detection,” “deep learning for fire recognition,” “CNN fire detection,” “YOLO fire detection,” “real-time fire detection,” and “edge AI for fire monitoring.” To reflect the breadth of application scenarios and deployment environments, additional domain-specific keywords were incorporated, including “forest fire detection,” “urban fire surveillance,” “indoor smoke detection,” “smart surveillance for fire safety,” “autonomous fire monitoring,” “IoT-based fire detection,” and “UAV fire detection.” The literature search was conducted across several major academic databases, including IEEE Xplore, ACM Digital Library, Scopus, Web of Science, SpringerLink, ScienceDirect, and Wiley Online Library. To ensure the inclusion of the most up-to-date research, only peer-reviewed journal articles, conference proceedings, and high-quality technical reports published between 2020 and 2025 were considered. The review specifically targeted studies employing machine learning (ML) or deep learning (DL) techniques for fire or smoke detection across various settings, including forests, buildings, industrial sites, vehicles, and public infrastructure.
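The search strategy above does not state how the keywords were combined. One widely used convention for database searches is to OR together synonyms within a concept group and AND the groups together. The hypothetical helper below sketches that convention; the grouping shown is an illustrative assumption rather than the query actually used in this review, and each database's own field syntax (for example, title/abstract restrictions or the 2020 to 2025 date filter) would still need to be added.

```python
def build_query(*term_groups):
    """OR the terms inside each group, then AND the groups together."""
    clauses = []
    for group in term_groups:
        quoted = " OR ".join(f'"{term}"' for term in group)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


# Illustrative grouping only, not the review's actual query.
query = build_query(
    ["early fire detection", "smoke detection", "wildfire detection"],
    ["deep learning", "CNN", "YOLO"],
)
print(query)
```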

2.2. Inclusion/Exclusion Criteria

Table 2 outlines the inclusion and exclusion criteria employed during the study selection process to ensure the relevance, quality, and scientific rigor of the literature reviewed. The inclusion criteria prioritize research that explicitly applies machine learning (ML) or deep learning (DL) techniques to fire and/or smoke detection across a range of environments, including forests, residential and commercial buildings, industrial facilities, and transportation systems. Eligible studies encompassed theoretical model development, empirical performance evaluations, and real-world deployment scenarios. Conversely, exclusion criteria were defined to eliminate studies that were not directly relevant, lacked methodological robustness, or were outdated. This filtering aimed to ensure that only contributions advancing the understanding, development, or implementation of early AI-driven fire and smoke detection systems were considered in the review.

Table 2.

Inclusion/exclusion criteria for selecting studies on early fire and smoke detection.

2.3. Data Analysis

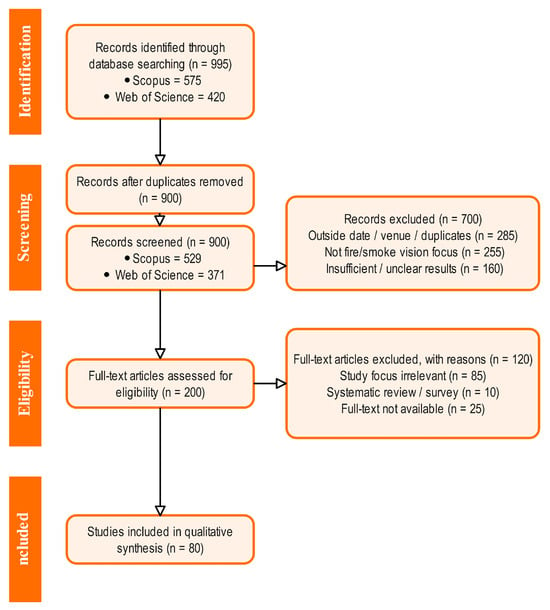

Following the search and selection process (see Figure 2), a total of 80 relevant studies were identified and analyzed for this review. These comprised 69 peer-reviewed journal articles, eight conference proceedings, and three preprints retrieved from arXiv. The selected literature spans various application areas within the domain of early fire and smoke detection, including forest monitoring, urban fire safety, indoor detection systems, industrial hazard surveillance, transportation networks, and smart infrastructure. The predominance of journal articles among the selected works reflects the growing scientific maturity and rigor of research in this area. This distribution highlights the interdisciplinary and rapidly evolving nature of deep learning-based fire and smoke detection research. It also establishes a solid foundation for synthesizing methodological frameworks, evaluating practical deployment scenarios, and identifying research gaps—particularly in less explored contexts such as indoor industrial facilities or constrained edge AI environments.

Figure 2.

PRISMA flow diagram.

3. Traditional Fire and Smoke Detection Methods

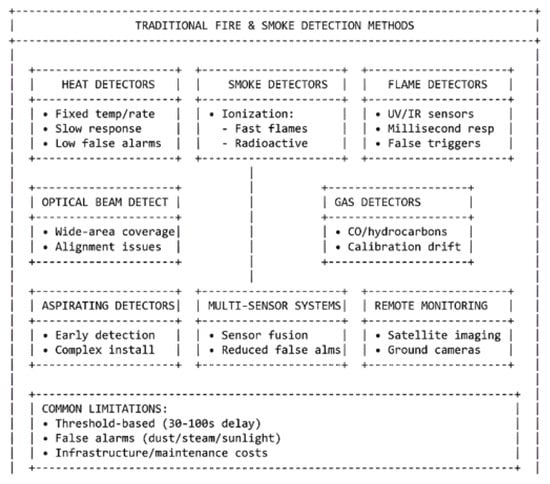

Traditional fire and smoke detection has historically relied on physical sensors and human observation, targeting heat, smoke particles, gas emissions, and flame radiation. Among the earliest devices, heat detectors are simple and cost-effective but activate only after a significant temperature rise, resulting in delayed response [36]. Smoke detectors, which became widespread in the 1970s, enhanced sensitivity through ionization or photoelectric principles; however, they are susceptible to nuisance alarms from dust, steam, or cooking vapors [5,37]. More sophisticated systems, such as optical beam detectors and aspirating smoke detectors (ASDs), expanded coverage and detection sensitivity, particularly in large-scale or critical infrastructures, though they introduced additional challenges related to calibration, maintenance, and cost [38,39]. Flame detectors, leveraging ultraviolet (UV) or infrared (IR) signatures, enable rapid fire ignition detection but remain prone to false alarms triggered by lightning, welding arcs, or environmental interference [40]. Gas sensors, capable of detecting combustion gases before flames emerge, often lack specificity and are affected by calibration drift [41].

To enhance reliability, multi-sensor detectors that integrate smoke, heat, flame, and gas sensing modalities have been developed, with performance guided by international standards such as EN54 and EN14604 [42,43]. At a larger scale, satellite-based remote sensing platforms—such as AVHRR, MODIS, and VIIRS—enable thermal anomaly monitoring for wildfire detection, while ground-based camera networks provide visual surveillance in high-risk areas. Nevertheless, these remote monitoring methods are limited by low temporal resolution, susceptibility to cloud cover, and reliance on continuous human or algorithmic oversight [44,45]. Fundamentally, traditional fire detection systems operate on threshold-based measurements of environmental changes, which restrict their responsiveness to incipient fires and limit overall situational awareness.
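To make the threshold-based logic concrete, the sketch below models a hypothetical multi-sensor detector that raises an alarm only when at least two sensing channels exceed fixed thresholds, with the heat channel also using a rate-of-rise test. All names and threshold values are illustrative assumptions, not values from EN54, EN14604, or any product. The point is structural: each channel is a hard cutoff, so a slowly developing fire that stays below every threshold goes unnoticed, while a nuisance source can still trip individual channels.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    temp_c: float                 # air temperature at the detector
    temp_rise_c_per_min: float    # rate of temperature rise
    obscuration_pct_per_m: float  # optical smoke obscuration
    co_ppm: float                 # carbon monoxide concentration


# Illustrative thresholds only (hypothetical, not taken from any standard).
FIXED_TEMP_C = 60.0
RATE_OF_RISE_C_PER_MIN = 8.0
OBSCURATION_PCT_PER_M = 3.0
CO_PPM = 50.0


def triggered_channels(r):
    """Return the set of sensing channels whose threshold is exceeded."""
    channels = set()
    if r.temp_c >= FIXED_TEMP_C or r.temp_rise_c_per_min >= RATE_OF_RISE_C_PER_MIN:
        channels.add("heat")
    if r.obscuration_pct_per_m >= OBSCURATION_PCT_PER_M:
        channels.add("smoke")
    if r.co_ppm >= CO_PPM:
        channels.add("gas")
    return channels


def multi_sensor_alarm(r, min_channels=2):
    """Alarm only when enough independent channels agree."""
    return len(triggered_channels(r)) >= min_channels


steam = Reading(temp_c=28, temp_rise_c_per_min=1, obscuration_pct_per_m=5, co_ppm=2)
smoldering = Reading(temp_c=30, temp_rise_c_per_min=2, obscuration_pct_per_m=4, co_ppm=80)
print(multi_sensor_alarm(steam), multi_sensor_alarm(smoldering))  # False True
```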

The limitations of conventional approaches—including delayed alarms, frequent false positives [46], sensitivity to environmental noise, and high infrastructure costs—pose significant challenges for both safety and resource management. Threshold-based designs often detect fires only after substantial development, while nuisance triggers erode public trust and impose unnecessary burdens on emergency responders [5,44]. These constraints underscore the necessity for intelligent systems capable of learning complex spatiotemporal patterns, adapting to diverse conditions, and integrating multiple modalities. Deep learning (DL) and computer vision techniques address these gaps by enabling faster detection, higher accuracy, and enhanced contextual understanding, thereby forming the foundation for next-generation fire and smoke detection solutions.

Figure 3 provides an overview of traditional fire and smoke detection techniques.

Figure 3.

Overview of several traditional fire and smoke detection methods, including heat detectors, smoke detectors, flame detectors, optical beam detectors, gas detection, aspirating detectors, multi-sensor systems, and remote monitoring. The figure also summarizes the capabilities, strengths, and typical limitations of each, such as delayed response, false alarms, and maintenance problems.

4. Deep Learning Techniques for Fire and Smoke Detection

Research on automated fire and smoke detection has increasingly leveraged deep learning [47], with the earliest contributions relying on relatively simple CNN classifiers. Frizzi et al. [48] introduced a six-layer CNN to identify smoke and flames in indoor and outdoor surveillance video. Subsequent work adopted deeper backbones such as VGG19 and ResNet50 [49], which improved robustness across challenging lighting conditions and variable outdoor scenes [50,51]. To integrate temporal cues, CNNs were extended with recurrent modules. For instance, a CNN+LSTM framework captured spatiotemporal dynamics from flame flicker and smoke motion, improving early fire recognition in video streams [52].

Two-stage detectors provided higher accuracy at the expense of computational cost. Faster R-CNN was applied to fire and smoke imagery, demonstrating precise localization in general vision benchmarks [53]. Its application in industrial factory environments further showed near-perfect recognition for indoor smoke events [54]. To stabilize predictions across frames, Bayesian fusion approaches combined Faster R-CNN with LSTM, enhancing reliability in video-based detection [55]. Complementary strategies also explored classification-focused backbones, such as EfficientNet with DeepLabv3+, which reduced false alarms in outdoor smoke monitoring [56], and hybrid ensembles of AlexNet, ResNet, and Inception that improved recognition in building-scale datasets [57].
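The sketch below is not the method of [55]; it illustrates only the general idea of accumulating evidence across frames with a standard recursive Bayesian update in log-odds form. It assumes that frames are conditionally independent given the true state and that each per-frame probability was produced under an uninformative 0.5 prior, so its log-odds can be treated as a log-likelihood ratio. Both assumptions are simplifications, and the function name and prior value are hypothetical.

```python
import math


def logit(p):
    return math.log(p / (1.0 - p))


def fuse_frames(frame_probs, prior=0.01, eps=1e-6):
    """Recursive Bayesian fusion of per-frame fire probabilities in log-odds form.

    Assumes conditionally independent frames and per-frame probabilities
    calibrated under a 0.5 prior, so logit(p) acts as a log-likelihood ratio.
    """
    log_odds = logit(prior)
    for p in frame_probs:
        p = min(max(p, eps), 1.0 - eps)  # avoid infinite log-odds at 0 or 1
        log_odds += logit(p)             # add this frame's evidence
    return 1.0 / (1.0 + math.exp(-log_odds))


print(round(fuse_frames([0.9]), 3))      # one confident frame: 0.083
print(round(fuse_frames([0.9] * 5), 3))  # five consistent frames: 0.998
```

Because consecutive video frames are strongly correlated in practice, the independence assumption makes this update overconfident; real systems usually temper the per-frame evidence or model temporal dependence explicitly.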

One-stage detectors, particularly YOLO variants, gained popularity due to their favorable balance between speed and accuracy. YOLOv5 enabled real-time wildfire monitoring with UAVs [58], while improved variants trained on forest fire datasets achieved high mAP scores in large-scale monitoring [59]. Hybrid approaches, such as YOLO combined with U-Net segmentation, further reduced false positives in wildfire scenarios by refining smoke plume localization [60]. Recent comparative studies have benchmarked YOLOv5, YOLOv6, YOLOv7, YOLOv8, and YOLO-NAS on datasets such as Foggia, demonstrating the versatility of YOLO-based pipelines [61]. Additional extensions include ensemble YOLO models for UAV wildfire detection [62] and attention-enhanced YOLOv7 incorporating CBAM and BiFPN for UAV smoke imagery [63].
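One-stage detectors such as YOLO typically emit many overlapping candidate boxes per object, which are pruned by non-maximum suppression (NMS) before evaluation or alerting. The sketch below is plain greedy NMS for a single class; boxes are assumed to be in (x1, y1, x2, y2) pixel format, and the 0.5 IoU threshold is an illustrative default. Individual YOLO versions and frameworks implement their own variants of this step (and some recent designs, such as YOLOv10, aim to remove it), so this should not be read as any specific version's code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy single-class NMS; returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop remaining boxes that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_thresh]
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```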

Transformers and attention-based architectures have also been investigated. DETR-style models combined with CNN backbones achieved high recall for small-object smoke detection in outdoor datasets [64]. Grad-CAM applied to ResNet and EfficientNet improved interpretability and trust in wildfire smoke recognition from tower-mounted surveillance [65]. Swin Transformer integrated with guillotine feature pyramid networks facilitated robust smoke detection in forest monitoring under complex backgrounds [66]. Hierarchical transformer networks have been introduced for smoke video recognition, maintaining high accuracy while reducing computational complexity [67], and Swin Transformer combined with Mask R-CNN enabled the simultaneous detection and segmentation of smoke and fire in mixed wildfire and non-fire scenes [68].
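Grad-CAM, mentioned above for interpretability, weights each feature map of a chosen convolutional layer by the spatially averaged gradient of the class score with respect to that map, sums the weighted maps, and applies a ReLU. The NumPy sketch below implements only that final computation; it assumes the activations and gradients have already been extracted from a deep learning framework (for example, via layer hooks), and the function name and shapes are illustrative. Upsampling the map to the input resolution for overlay is omitted.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Compute a Grad-CAM heatmap from one layer's activations and gradients.

    activations, gradients: arrays of shape (K, H, W) for a single image,
    where gradients are d(class score)/d(activations).
    """
    weights = gradients.mean(axis=(1, 2))              # alpha_k: pooled gradients, shape (K,)
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A_k, shape (H, W)
    cam = np.maximum(cam, 0.0)                         # ReLU keeps positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam             # scale to [0, 1] for overlay


# Toy example: channel 0 supports the "smoke" score, channel 1 opposes it.
acts = np.zeros((2, 2, 2))
acts[0, 0, 0] = 1.0
acts[1, 1, 1] = 1.0
grads = np.stack([np.ones((2, 2)), -np.ones((2, 2))])
heat = grad_cam(acts, grads)
print(heat)
```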

Multimodal learning strategies further enhanced detection performance. Multi-modal CNNs fusing RGB and infrared UAV imagery improved pixel-level accuracy in wildfire smoke recognition [69], while Inception-based preprocessing with CNNs provided additional gains in both indoor and outdoor datasets [70]. Modified ResNet backbones improved mAP by nearly 15% in forest fire monitoring [71], and RGB-infrared fusion with transformer architectures achieved over 90% precision and recall in urban park monitoring [72]. Hybrid ConvNeXt–transformer models with multi-scale fusion modules reported superior accuracy on benchmark datasets such as USTC_SmokeRS and E_SmokeRS, advancing remote-sensing fire detection [73].
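Multimodal approaches differ in where the modalities are combined. The simplest option, early fusion, stacks co-registered RGB and infrared frames into a single multi-channel input so that the first convolution sees both. The sketch below assumes the two images are already spatially aligned and of the same size, which in practice requires sensor calibration and registration; it is not the fusion scheme of any particular cited work, several of which fuse at the feature or decision level instead. The function name and per-image IR normalization are illustrative choices.

```python
import numpy as np


def early_fuse(rgb, ir):
    """Stack an RGB image and a co-registered IR image into one 4-channel array.

    rgb: (H, W, 3) uint8 array; ir: (H, W) array of raw thermal intensities.
    Returns a float32 array of shape (H, W, 4) with every channel in [0, 1].
    """
    if rgb.shape[:2] != ir.shape:
        raise ValueError("RGB and IR images must be registered to the same size")
    rgb_f = rgb.astype(np.float32) / 255.0
    ir_f = ir.astype(np.float32)
    # Min-max normalize IR per image (illustrative; a fixed sensor range also works).
    ir_f = (ir_f - ir_f.min()) / (ir_f.max() - ir_f.min() + 1e-8)
    return np.concatenate([rgb_f, ir_f[..., np.newaxis]], axis=-1)


rgb = np.random.randint(0, 256, size=(4, 4, 3), dtype=np.uint8)
ir = np.linspace(290.0, 600.0, 16).reshape(4, 4)  # toy thermal values
fused = early_fuse(rgb, ir)
print(fused.shape, fused.dtype)
```

A network consuming this input needs a first layer with four input channels, so weights pretrained on three-channel RGB images must be adapted, which is one reason many multimodal designs fuse later in the network.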

Recent benchmarking efforts have focused on the latest YOLO generations. YOLOv8 consistently demonstrated the best speed–accuracy trade-off on wildfire datasets relative to YOLOv9, YOLOv10, and YOLOv11 [74]. GA-optimized YOLOv5 variants showed strong performance for indoor smoke detection on constrained datasets [75]. Large-scale experiments with YOLOv9–v11 identified YOLOv11n as achieving the optimal balance of recall and precision on the D-Fire dataset [76], while YOLOv11-DH3 introduced deformable convolutions and optimized IoU loss, enhancing detection precision and recall in both indoor and outdoor settings [77]. YOLOv11x, trained with optimized loss functions, reached state-of-the-art precision and recall on wildfire imagery [78]. Additional contemporary pipelines include YOLOv8 for UAV smoke detection [79,80] and semi-occluded fire detection for indoor disaster management [81].
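Because the YOLO comparisons above are reported in terms of precision and recall, it helps to recall how detections are usually counted: each prediction, in descending confidence order, is matched to at most one still-unmatched ground-truth box whose IoU meets a threshold. The sketch below shows that greedy matching for a single class; the 0.5 threshold and function names are illustrative, and benchmark toolkits differ in details such as how crowd or "difficult" annotations are handled.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(preds, gts, iou_thresh=0.5):
    """Greedy one-to-one matching; preds are (box, score). Returns (tp, fp, fn)."""
    matched = set()
    tp = fp = 0
    for box, _ in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, iou_thresh
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is None:
            fp += 1  # no unmatched ground truth overlaps enough
        else:
            matched.add(best_j)
            tp += 1
    fn = len(gts) - len(matched)  # ground-truth boxes nobody detected
    return tp, fp, fn


def precision_recall(tp, fp, fn):
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [((1, 1, 11, 11), 0.9), ((50, 50, 60, 60), 0.8)]
counts = match_detections(preds, gts)
print(counts, precision_recall(*counts))
```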

Beyond YOLO, other architectures have contributed significantly to advancing fire detection. Ensembles combining ResNeXt-50 [82], EfficientNet-B4, and YOLOv5 achieved notable improvements in multiclass fire and smoke datasets [83]. Capsule neural networks optimized with the adapted golden search optimizer (AGSO) further increased accuracy beyond standard CNN baselines [84]. Risk-aware frameworks such as MM-SERNet incorporated multimodal fusion with repulsion loss to jointly detect smoke and assess fire risk levels [85]. Progression-aware CNNs were introduced to monitor fire growth stages in industrial and construction contexts, providing detection aligned with evacuation planning [86]. The CICLOPE project utilized dense CNN ensembles for large-scale wildfire monitoring, achieving extremely high accuracy [87]. Improved YOLOv8 frameworks demonstrated superior detection of flames, sparks, and smoke across industrial, indoor, and forest settings [88]. Collectively, these studies demonstrate the progression from initial CNN-based classifiers [48,50] to sophisticated multimodal, transformer-based, and hybrid architectures designed for robust, real-time fire and smoke detection across a wide range of environments.
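The simplest way to combine classifiers in an ensemble is soft voting: averaging the class probabilities predicted by each member, optionally with weights. The sketch below assumes every model outputs probabilities over the same ordered class list (here a hypothetical fire/smoke/neutral labeling). It does not reproduce the ensembles cited above, and fusing detector outputs such as YOLOv5 boxes additionally requires box-level merging, which is not shown.

```python
def soft_vote(prob_lists, weights=None):
    """Weighted average of per-model class-probability vectors."""
    if weights is None:
        weights = [1.0] * len(prob_lists)
    total = sum(weights)
    n_classes = len(prob_lists[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, prob_lists)) / total
        for c in range(n_classes)
    ]


CLASSES = ["fire", "smoke", "neutral"]  # hypothetical label order
model_a = [0.6, 0.3, 0.1]
model_b = [0.2, 0.6, 0.2]
fused = soft_vote([model_a, model_b])
print(CLASSES[max(range(len(fused)), key=fused.__getitem__)])  # smoke
```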

More broadly, it is important to situate fire and smoke detection research within the advances of state-of-the-art computer vision. Modern object detectors (e.g., anchor-free one-stage families and transformer-based detectors such as YOLOv8/YOLOv11, RT-DETR/DETR/Deformable-DETR) define the accuracy–latency frontier on COCO-style benchmarks and offer design choices (query-based decoding, dynamic head, and strong multi-scale feature fusion) that map directly to wildfire use-cases with small, low-contrast targets. Segmentation models (Mask R-CNN, DeepLabv3+, and recent foundation models such as SAM for promptable segmentation) enable plume delineation and pixel-level supervision that can reduce false positives in cluttered backgrounds. Advances in backbones (ConvNeXt, EfficientNetV2, Vision Transformers and hierarchical variants like Swin) improve representation quality under illumination and texture shifts common to outdoor monitoring, while video architectures (SlowFast, Video Swin, and TimeSformer) provide temporally coherent features for early-smoke dynamics. Open-vocabulary and grounding approaches (e.g., OWL-ViT, Grounding-DINO) suggest pathways for rare-event generalization and rapid class expansion without exhaustive relabeling. From a learning perspective, self-supervised pretraining (e.g., MAE-style) and test-time/domain adaptation can mitigate dataset shift across regions, sensors (RGB/IR), and seasons; and for deployment, model compression via distillation, pruning, and quantization remains essential for edge constraints. We, therefore, recommend that future fire/smoke systems explicitly leverage these SOTA components (pretraining on ImageNet/COCO [89], multi-scale transformer heads, promptable segmentation for pseudo-labeling, and temporal models for stability) and report standardized metrics (e.g., mAP50:95, calibration, robustness under weather/occlusion) alongside public code/checkpoints to enhance reproducibility and comparability.
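Since the paragraph above recommends reporting mAP50:95, the sketch below spells out what that number averages: average precision (AP) computed at ten IoU thresholds from 0.50 to 0.95 in steps of 0.05, then averaged. It handles a single class and a single image and uses all-point interpolation of the precision-recall curve; the COCO evaluator instead uses 101-point interpolation and aggregates over images and classes, so values from this sketch may differ slightly. Function names are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thresh):
    """All-point interpolated AP for one class; preds are (box, score) pairs."""
    if not gts:
        return 0.0
    matched, hits = set(), []
    for box, _ in sorted(preds, key=lambda p: p[1], reverse=True):
        best_j, best_iou = None, iou_thresh
        for j, gt in enumerate(gts):
            overlap = iou(box, gt)
            if j not in matched and overlap >= best_iou:
                best_j, best_iou = j, overlap
        if best_j is not None:
            matched.add(best_j)
        hits.append(best_j is not None)
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / k)
        recalls.append(tp / len(gts))
    # Make precision non-increasing in recall (the interpolation step).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def map_50_95(preds, gts):
    """Mean of AP over IoU thresholds 0.50, 0.55, ..., 0.95."""
    thresholds = [round(0.50 + 0.05 * i, 2) for i in range(10)]
    return sum(average_precision(preds, gts, t) for t in thresholds) / len(thresholds)


gt = [(0, 0, 10, 10)]
pred = [((1, 0, 11, 10), 0.9)]  # IoU = 90 / 110, about 0.82
print(map_50_95(pred, gt))
```

A box that is "found" at IoU 0.5 but poorly aligned scores well on mAP50 and noticeably lower on mAP50:95, which is why the stricter metric is more informative for localizing diffuse smoke plumes.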

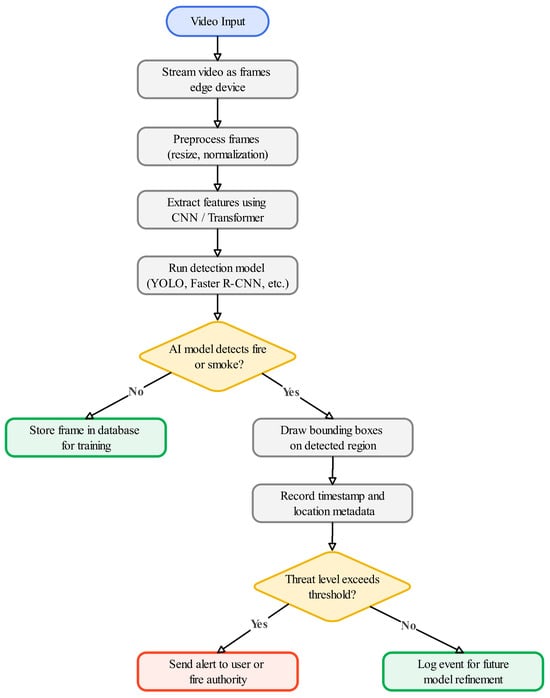

Table 3 provides a comprehensive overview of the current state of the art in deep learning approaches for fire and smoke detection, outlining the evolution of models, datasets, and performance indicators. The flowchart in Figure 4 illustrates how the surveyed methods can be positioned within a unified processing pipeline and highlights the methodological divergence across model families, distinguishing CNN-based classifiers, two-stage detectors, one-stage detectors, and transformer-based architectures.

Table 3.

Comparative analysis of deep learning models for fire and smoke detection.

Figure 4.

Conceptual flow of deep learning architectures applied to fire and smoke detection.

5. Datasets Analysis for Fire and Smoke Detection

The performance of deep learning-based fire and smoke detection depends considerably on the quality and availability of training data. Accordingly, several publicly available datasets have been developed to support more effective recognition models across a variety of environmental contexts and data modalities. These datasets differ in their primary focus (fire only, smoke only, or both), data type (still images, video clips, or infrared), annotation type, and scene complexity. For clarity and ease of comparison, we group the datasets into four categories: fire-only detection, smoke-only detection, fire and smoke detection, and flame and smoke detection (see Table 4).
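Differences in annotation type also extend to bounding-box formats, which must be reconciled when datasets are combined. Two widely used conventions are the YOLO text format, which stores a class index followed by the box center, width, and height normalized by the image size, and the Pascal VOC convention of absolute corner coordinates (xmin, ymin, xmax, ymax) in pixels. Which convention each dataset in Table 4 uses should be checked per dataset; the converters below are a minimal sketch that handles only the box coordinates and ignores clipping and the off-by-one pixel conventions some tools apply.

```python
def yolo_to_voc(xc, yc, w, h, img_w, img_h):
    """Normalized (x_center, y_center, width, height) -> pixel (xmin, ymin, xmax, ymax)."""
    return (
        (xc - w / 2) * img_w,
        (yc - h / 2) * img_h,
        (xc + w / 2) * img_w,
        (yc + h / 2) * img_h,
    )


def voc_to_yolo(xmin, ymin, xmax, ymax, img_w, img_h):
    """Pixel (xmin, ymin, xmax, ymax) -> normalized (x_center, y_center, width, height)."""
    return (
        (xmin + xmax) / 2 / img_w,
        (ymin + ymax) / 2 / img_h,
        (xmax - xmin) / img_w,
        (ymax - ymin) / img_h,
    )


voc_box = yolo_to_voc(0.5, 0.5, 0.2, 0.4, img_w=100, img_h=200)
print(voc_box)  # approximately (40, 60, 60, 140)
```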

5.1. Fire-Only Detection Datasets

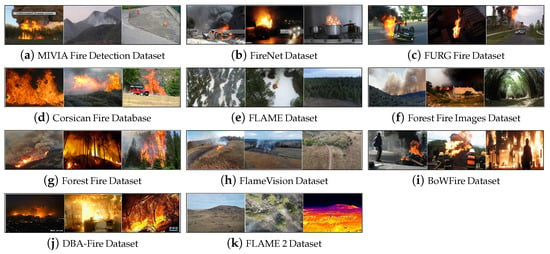

A diverse array of publicly available datasets has been curated to advance research in fire detection, encompassing a broad spectrum of environmental settings, imaging modalities, and annotation formats (see Figure 5). The MIVIA Fire Detection Dataset provides video sequences of both real fire and non-fire events, including visually similar distractors such as smoke, clouds, and red-colored objects [99]. The FireNet dataset combines fire and non-fire videos with still images to enable training and testing under varied conditions [100]. The FURG Fire dataset offers annotated video scenarios of wildfires, controlled burns, and indoor fires, supporting evaluation under dynamic conditions [101]. The Corsican Fire Database contributes multimodal RGB–NIR imagery and video for multispectral research in fusion and segmentation [102].

Figure 5.

Publicly available fire detection datasets that provide diverse environments, modalities (RGB, thermal, and NIR), and types of annotations (classification, detection, and segmentation).

The FLAME dataset captures real wildfire events with high-resolution RGB and thermal imagery, enabling both classification and segmentation tasks [103]. Similarly, the Forest Fire Images dataset provides image collections divided into fire and no-fire categories for classification in forest settings [104], while the Forest Fire Dataset includes both classification and object detection subsets for wildfire imagery [105,106]. The FlameVision dataset, compiled from online wildfire videos, supports both image classification and object detection [107]. Smaller-scale datasets such as BoWFire [108] and DBA-Fire [109] extend coverage to mixed indoor and outdoor scenarios, with annotations distinguishing flames, smoke, and distractors. Finally, the FLAME2 dataset introduces synchronized RGB and infrared drone-captured videos with expert annotations and supplementary metadata, enabling multimodal analysis of controlled burns [110].

5.2. Smoke-Only Detection Datasets

Several specialized datasets have been previously developed to facilitate the advancement of deep learning models for smoke detection in visually complex environments (see Figure 6). These datasets differ in scale, origin, and annotation granularity, serving as critical benchmarks for algorithm performance assessment. The MIVIA Smoke Dataset provides video recordings containing both smoke and challenging non-smoke distractors such as clouds, sunsets, and lens reflections [99]. The Video Smoke Detection Dataset combines smoke and no-smoke videos with multiple image subsets, supplying diverse labeled instances for training and evaluation [111]. The FIgLib dataset, derived from the HPWREN camera network, includes long-term footage from wide-area mountaintop cameras and offers stable smoke/no-smoke annotations across multiple remote sites [112]. The SKLFS dataset contributes a large corpus of synthetic and real smoke images for training robust recognition models [113], while the DSDF dataset emphasizes difficult visual conditions such as fog, motion blur, and smoke-like patterns to challenge detection algorithms [114]. Finally, the Nevada Smoke Detection Benchmark (Nemo) dataset provides images with bounding box annotations derived from the AlertWildfire network, covering multiple U.S. states and ensuring consistent annotation quality through expert re-labeling [115].

Figure 6.

Publicly available smoke detection datasets with various environments and annotations.

5.3. Fire and Smoke Detection Datasets

Similarly, multiple comprehensive datasets have been created to facilitate joint fire and smoke detection tasks using deep learning (see Figure 7). These datasets cover a wide range of formats, sources, and annotation schemes, providing valuable resources for training, evaluation, and benchmarking under complex real-world scenarios. Deep Quest AI’s Fire Flame Dataset provides labeled images across fire, smoke, and neutral classes [116], while the VisiFire Dataset contributes video clips categorized into fire, smoke, other, and forest smoke [117]. The D-Fire dataset offers annotated images with bounding boxes for fire and smoke in YOLO format [118]. FireSense is tailored for heritage site protection and provides flame-positive and smoke-positive videos alongside negative samples [119]. FiSmo combines images and videos from multiple online and simulated sources for fire–smoke analysis [120], whereas DFS provides real-life annotated images categorized into fire, smoke, and others [121].

Figure 7.

Publicly available fire and smoke datasets with multi-class labels, environmental diversity, and imaging modalities.

Larger-scale resources include the ForestFireSmoke dataset with balanced fire, smoke, and normal categories [122], the FASDD dataset with diverse imagery collected from surveillance, drones, and satellites [123], and the Domestic-Fire-and-Smoke dataset that emphasizes early-stage fire and smoke events in everyday environments [124]. Other contributions include the Fire and Smoke dataset captured in varied environments with smartphone cameras [125], ONFIRE videos with ignition time annotations [126], and the PYRONEAR2024 dataset that compiles historical wildfire events across Europe and the US [127]. Additional benchmarks such as MS-FSDB [128], the Wildfire dataset [129,130], and the WIT-UAS thermal dataset collected by drones [131] further extend the range of conditions, modalities, and tasks for joint fire and smoke detection.
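Because several of these datasets (e.g., D-Fire, and DBA-Fire in Table 4) distribute labels in YOLO format, a short sketch of that convention may help when converting annotations between tools. It assumes the common YOLO text layout of one object per line, `class_id x_center y_center width height`, with coordinates normalized by image width and height. The mapping of class indices to fire and smoke is dataset-specific and is not assumed here, and the function names are hypothetical.

```python
from typing import List, Tuple

PixelBox = Tuple[int, float, float, float, float]  # (class_id, x1, y1, x2, y2) in pixels


def yolo_line_to_xyxy(line: str, img_w: int, img_h: int) -> PixelBox:
    """Convert one 'class cx cy w h' line (normalized to [0, 1]) to pixel corner coordinates."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    cls = int(parts[0])
    # Scale normalized centre/size back to pixels using the image dimensions.
    cx, w = float(parts[1]) * img_w, float(parts[3]) * img_w
    cy, h = float(parts[2]) * img_h, float(parts[4]) * img_h
    return cls, cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2


def parse_yolo_labels(text: str, img_w: int, img_h: int) -> List[PixelBox]:
    """Parse a whole label file; blank lines (e.g., an empty file for a negative image) are skipped."""
    return [yolo_line_to_xyxy(ln, img_w, img_h) for ln in text.splitlines() if ln.strip()]
```

For example, the line `1 0.5 0.5 0.25 0.5` on a 200 × 100 image maps to class 1 with corners (75, 25) and (125, 75).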

5.4. Flame and Smoke Detection Datasets

As illustrated in Figure 8, various datasets have been curated to enable the simultaneous detection of flame and smoke, thereby addressing the challenges posed by overlapping visual cues in real-world environments. These datasets are particularly pertinent to domains such as industrial safety, indoor surveillance, and environmental monitoring. The KMU Fire and Smoke Dataset comprises video recordings capturing indoor and outdoor short-range flames, indoor and outdoor smoke, wildfire smoke, and moving objects that visually mimic fire or smoke [132]. The RISE (Recognizing Industrial Smoke Emissions) Dataset represents a large-scale video benchmark compiled from multiple industrial sites, featuring annotated clips with smoke presence labels to support environmental monitoring and industrial emission detection [133].

Figure 8.

Representative samples from flame and smoke detection datasets designed for mixed-cue scenarios across indoor, outdoor, and industrial environments.

Table 4.

Summary of fire and smoke detection datasets.

| Dataset Class | Name | Year | Data Type | Samples | Environment |
|---|---|---|---|---|---|
| Fire Dataset | MIVIA Fire Detection [99] | 2015 | Video | 31 videos | Indoor/Outdoor |
| | FURG Fire [101] | 2015 | Video | Multiple annotated videos | Wildfire/Indoor/Controlled |
| | Corsican Fire [102] | 2016 | Video | Multimodal dataset | Forest/NIR Visible |
| | BoWFire [108] | 2017 | Images | 466 images | Urban/Industrial |
| | FireNet [100] | 2019 | Video | 62 videos, 160 images | Indoor/Outdoor |
| | FLAME [103] | 2022 | Images | 39,375 (train), 8617 (test), 2003 masks | Wildfire/Outdoor |
| | Forest Fire Images [104] | 2022 | Images | 5050 images | Forest |
| | Forest Fire Dataset [105,106] | 2023 | Images | 2974 (classification) + 1690 (detection) | Forest |
| | FlameVision [107] | 2023 | Video | 8600 images | Wildfire/Outdoor |
| | DBA-Fire [109] | 2023 | Images | 3905 images (YOLO format) | Indoor/Outdoor |
| | FLAME 2 [110] | 2023 | Video | 7 RGB-IR video pairs + metadata | Wildfire/Outdoor |
| Smoke Dataset | Video Smoke Detection [111] | 2004 | Video | ∼80K images | Outdoor |
| | MIVIA Smoke [99] | 2015 | Video | 149 videos (∼35 h) | Outdoor |
| | DSDF [114] | 2020 | Images | 18,413 images | Outdoor/Lab Simulations |
| | FIgLib [112] | 2020 | Images | 24,800 high-res images | Outdoor/Wildfire |
| | SKLFS [113] | 2022 | Images | 36,104 images | Synthetic/Real |
| | Nemo dataset [115] | 2022 | Images | 2859 images | Wildfire/Outdoor |
| Fire and Smoke Dataset | FireSense [119] | 2010 | Video | 49 videos | Urban/Heritage sites |
| | VisiFire [117] | 2015 | Video | 40 video clips | Outdoor/Forest/Urban |
| | FiSmo [120] | 2017 | Images | ∼9K images + videos | Emergency/Social Media |
| | Fire Flame [116] | 2019 | Images | 3000 images | Mixed |
| | Fire & Smoke [125] | 2020 | Images | 100 images | Indoor/Outdoor/Urban |
| | D-Fire [118] | 2021 | Images | 21,000+ images | Outdoor/Mixed |
| | Domestic-Fire-and-Smoke-Dataset [124] | 2021 | Images | 5000+ images | Indoor and Outdoor |
| | DFS [121] | 2022 | Images | 9462 images | Mixed |
| | ForestFireSmoke [122] | 2023 | Images | 14,300 images | Forest |
| | FASDD [123] | 2023 | Images | 10,000 images | Various |
| | ONFIRE Dataset [126] | 2023 | Video | 322 videos | Urban/Wildfire |
| | Wildfire Dataset [129,130] | 2023 | Images | 2701 images | Forest |
| | WIT-UAS [131] | 2023 | Images | 1691 IR images (2062 labeled) | Wildfire |
| | PYRONEAR2024 [127] | 2024 | Images | 150,000 images, 150,000 annotations | Wildfire/Outdoor |
| | MS-FSDB [128] | 2024 | Images | 12,518 high-res images | Various |
| Flame and Smoke Dataset | KMU [132] | 2011 | Video | 308.1 MB video | Indoor/Outdoor/Wildfire |
| | RISE [133] | 2020 | Video | 12,567 video clips | Industrial/Environmental |

6. Detection Scenarios and Taxonomy

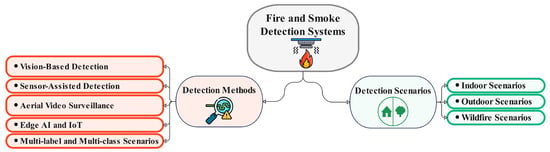

Fire and smoke detection systems must operate under a wide variety of difficult and complex real-world conditions. Their performance is heavily context-dependent, where context encompasses environmental characteristics, sensor modalities, image variations, and system-level operational constraints. Recent research has emphasized the value of context-adapted detection for improving robustness, mitigating false alarms, and providing effective early warning [134,135]. This section presents a hierarchy of fire and smoke detection scenarios that draws on the current literature and real-world deployments (see Figure 9).

Figure 9.

Classification of fire and smoke detection systems across detection methods and scenarios.

6.1. Scenario Classification

Fire and smoke detection must be tailored to each field condition, as different environments present distinct visual and behavioral challenges. In indoor scenarios, such as homes, businesses, warehouses, and industrial facilities, artificial lighting, reflections, airflow, and limited camera angles introduce visual noise that complicates detection. Convolutional neural network models, for example, have shown promising performance on fire surveillance videos, where early flame detection is crucial to prevent escalation [134,136].

Outdoor detection systems in urban environments, such as road intersections, transit centres, and construction areas, must cope with changing ambient light, dynamic movement patterns, and weather effects (e.g., rain and fog), and must clearly distinguish fire from visually similar phenomena such as exhaust or clouds. Because these similarities can reduce detection reliability, detection algorithms need a way to separate genuine fire cues from look-alike cues in the scene. Classification models for these settings are commonly benchmarked on datasets such as BoWFire [108] and FASDD [123] to assess and improve their discrimination performance [135].

Wildfire and forest monitoring is even more difficult owing to irregular terrain, dense foliage with low visibility, and remote locations. Detection platforms include fixed observation towers, unmanned aerial vehicles, and satellites, each of which must detect faint smoke signatures or temperature changes rapidly and accurately. Datasets such as FLAME [103], PYRONEAR2024 [127], and FLAME 2 [110] provide substantial multimodal data, including visible-spectrum, infrared, and meteorological data, and have enabled high-accuracy detection and segmentation in these challenging natural environments [112,127,137].

6.2. Taxonomy by Detection Method

Fire and smoke detection methods can also be categorized by detection approach, each offering specific benefits in particular situations. Vision-based detection uses RGB, infrared (IR), or multispectral images processed by deep learning architectures such as YOLO, Faster R-CNN, various CNN variants, and Vision Transformers (ViT). Vision-based methods are the most widely used because of their flexibility, low cost, and compatibility with conventional cameras [103,116], with applications demonstrated on datasets such as FlameVision [107] and FireNet [100]. Sensor-assisted detection combines visual images with input from gas, temperature, or humidity sensors to increase confidence in low-visibility environments, which has substantially reduced false positives in industrial or foggy settings [138]. Aerial video surveillance uses UAVs to monitor large or inaccessible areas, employing thermal or multispectral imaging together with GPS data. Datasets such as FLAME 2 [110] and WIT-UAS [131] support the development of real-time wildfire detection and tracking systems for emergency response [69]. Edge AI and Internet of Things (IoT) implementations run compact models on small hardware such as the NVIDIA Jetson Nano, ESP32, and Raspberry Pi, enabling low-power, low-latency detection where power and connectivity are limited [139].
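As an illustration of sensor-assisted detection, the following sketch shows a simple weighted late-fusion rule that combines a vision model's fire probability with normalized gas and temperature readings. The sensor types, weights, normalization ranges, and alarm threshold are hypothetical values chosen for illustration, not calibrated settings from the cited studies; in practice they would be tuned on field data or replaced by a learned fusion model.

```python
from dataclasses import dataclass


@dataclass
class SensorReading:
    co_ppm: float  # carbon monoxide concentration (hypothetical gas sensor)
    temp_c: float  # ambient temperature in degrees Celsius


def normalize(value: float, low: float, high: float) -> float:
    """Map a raw reading onto [0, 1]; `low` is an assumed baseline, `high` an assumed saturation level."""
    if high <= low:
        raise ValueError("high must exceed low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


def fused_fire_score(vision_prob: float, reading: SensorReading,
                     w_vision: float = 0.6, w_gas: float = 0.25, w_temp: float = 0.15) -> float:
    """Weighted late fusion of a vision probability with normalized sensor evidence (weights sum to 1)."""
    gas = normalize(reading.co_ppm, low=10.0, high=100.0)   # hypothetical CO range
    temp = normalize(reading.temp_c, low=30.0, high=70.0)   # hypothetical temperature range
    return w_vision * vision_prob + w_gas * gas + w_temp * temp


def should_alarm(vision_prob: float, reading: SensorReading, threshold: float = 0.5) -> bool:
    """Trigger only when the fused evidence reaches the (hypothetical) alarm threshold."""
    return fused_fire_score(vision_prob, reading) >= threshold
```

With these assumed settings, a frame scored 0.7 by the vision model alone (for example, sunlight glare) under baseline sensor readings fuses to about 0.42 and does not trigger an alarm, whereas a weaker visual score of 0.5 accompanied by saturated CO and temperature readings fuses to 0.7 and does.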

6.3. Multi-Label and Multi-Class Scenarios

In real-world settings, fire detection systems typically face scenes that are more complex than a single active flame or smoke plume. Fire-like distractors such as reflections, exhaust, fog, or sunlight glare are often present. In such environments, traditional binary classification (fire or no-fire) tends to fail, inflating false alarm rates and undermining detection reliability. To address these shortcomings, recent years have seen a proliferation of research on multi-label and multi-class classification. New datasets, such as DFS [121], FASDD [123], and ForestFireSmoke [122], provide rich annotations that characterize multiple concurrent phenomena. These annotations support models that can independently detect and distinguish fire, smoke, and other visually similar elements within the same frame. Such multi-label approaches are expected to improve the generalization and robustness of detection systems, especially in diverse and ambiguous operational contexts. Table 5 summarizes the principal annotated visual conditions that state-of-the-art fire and smoke detection models are now expected to recognize.

Table 5.

Common visual conditions in multi-label and multi-class fire detection datasets.
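The practical difference between multi-class and multi-label outputs can be seen in a small sketch: a softmax head must pick exactly one class per image, whereas independent sigmoid outputs, trained with per-label binary cross-entropy, can report fire and smoke together while rejecting distractors. The label set, logits, and per-class thresholds below are hypothetical and serve only to illustrate the idea.

```python
import math
from typing import Dict, List

CLASSES = ["fire", "smoke", "fog", "glare"]  # hypothetical label set


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def multiclass_predict(logits: List[float]) -> str:
    """Multi-class: exactly one label per image (argmax of the softmax)."""
    probs = softmax(logits)
    return CLASSES[probs.index(max(probs))]


def multilabel_predict(logits: List[float], thresholds: Dict[str, float]) -> List[str]:
    """Multi-label: each label is decided independently, so fire and smoke can co-occur."""
    return [c for c, z in zip(CLASSES, logits) if sigmoid(z) >= thresholds[c]]


def multilabel_bce(logits: List[float], targets: List[float]) -> float:
    """Mean binary cross-entropy over labels, computed in the stable logits form."""
    total = 0.0
    for z, y in zip(logits, targets):
        total += max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
    return total / len(logits)
```

With logits `[2.0, 1.5, -3.0, -2.0]` and a threshold of 0.5 for every label, the softmax head returns only `"fire"`, while the sigmoid head returns both `"fire"` and `"smoke"`.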

7. Real-World Deployment and Edge AI

The deployment of fire and smoke detection systems at the edge spans diverse real-world environments, including dense forests, maritime settings, agricultural fields, and underground tunnels. Each context imposes distinct operational constraints, requiring tailored system architectures to ensure robustness and reliability (see Figure 10). While significant progress has been made, most studies remain limited to controlled experimental conditions, raising questions about scalability, reproducibility, and generalization under dynamic real-world scenarios.

Figure 10.

Deployment environments for fire detection models and supporting technologies.

Forests and wildland areas represent one of the most active domains for edge AI deployment, where unmanned aerial vehicles (UAVs) [140] are used for wide-area monitoring and rapid response. These platforms combine mobility with high-resolution coverage and onboard inference, enabling early detection of smoke plumes and flame activity in remote environments [92,141,142,143,144,145,146,147,148]. However, reproducibility remains limited by the variability of UAV hardware, flight conditions, and sensor payloads, making it challenging to benchmark results across studies. Moreover, performance often deteriorates under occlusions, adverse weather, or at night, where small plumes are difficult to distinguish from clouds or fog. Tunnel environments provide another important application domain, where embedded processors support continuous monitoring in confined, low-visibility conditions. Real-time inference in such settings can reduce response times and improve safety in transportation or industrial tunnels [149,150,151]. Nonetheless, methodological limitations include the lack of standardized tunnel benchmarks, meaning models trained in one type of infrastructure may not transfer reliably to others with different geometries, ventilation systems, or lighting conditions. False positives caused by dust, headlights, or exhaust remain common in practical deployments.

In urban and industrial facilities, energy-efficient edge AI systems are integrated into buildings, factories, and warehouses. Devices ranging from microcontrollers to single-board computers can operate independently or as distributed sensor networks, providing localized hazard detection [152,153,154,155]. While this modularity offers flexibility, reproducibility across deployments is hindered by the diversity of hardware–software combinations. Additionally, existing studies often evaluate performance in short pilot trials; long-term durability under continuous industrial operations, electromagnetic interference, and variable connectivity is rarely documented. In agriculture, UAVs and ground-based platforms are deployed to monitor controlled burns and spontaneous ignitions across large open fields. Edge AI enables adaptation to dynamic environmental factors such as wind, altitude, and illumination, facilitating early detection in challenging conditions [156,157]. Still, models validated in specific agricultural contexts may fail in others due to differences in crop types, soil reflectance, or seasonal vegetation cover. This lack of reproducibility across geographies underscores the need for diverse and standardized agricultural datasets.

Maritime and offshore installations rely on embedded fire detection systems integrated into shipboard operations. Edge AI facilitates onboard processing despite vibration, reflective surfaces, and intermittent connectivity [158,159]. Yet, most reported systems are evaluated under laboratory or port conditions rather than in extended deployments at sea. Reproducibility is hindered by limited public datasets representing maritime environments, and false alarms caused by reflections or engine exhaust remain unresolved challenges. Satellite-based fire detection represents an emerging frontier, with lightweight models embedded into payloads to process multispectral data in orbit. These platforms extend monitoring coverage to remote regions [158,160]. However, reproducibility is constrained by the proprietary nature of satellite datasets and the scarcity of open benchmarks. Furthermore, latency introduced by onboard processing, energy constraints, and downlink bottlenecks complicate real-world performance assessments. Finally, general-purpose fire detection platforms have been proposed as modular frameworks that can operate across multiple domains. By combining configurable hardware with optimized lightweight models, these platforms promise cross-domain adaptability [85,161,162]. Nonetheless, most evaluations emphasize laboratory demonstrations rather than comparative field trials, raising concerns about how well such systems generalize under heterogeneous deployment constraints.

Table 6 synthesizes contemporary edge AI fire and smoke detection platforms, systematically classifying them by deployment scenario, model architecture, hardware configuration, and environmental domain. While the table highlights architectural diversity, the broader literature reveals recurring methodological gaps such as reliance on small or synthetic datasets, limited reproducibility across platforms, and insufficient testing under adverse real-world conditions. Addressing these challenges remains essential for advancing edge AI systems from proof-of-concept prototypes to reliable large-scale deployments.

Table 6.

Edge AI fire detection platforms: deployment contexts, models, and hardware characteristics.

8. Open Challenges

Although DL has achieved substantial progress in early fire and smoke detection, numerous challenges and limitations remain. Effectively addressing these issues is crucial to ensure that detection systems are reliable, efficient, and ethically deployable in real-world environments. This section highlights the primary concerns related to dataset quality, false alarm mitigation, computational efficiency, model interpretability, and integration with existing safety standards.

- Dataset Limitations: A critical challenge in developing robust fire and smoke detection models lies in the scarcity of large-scale, high-quality, and diverse datasets with comprehensive annotations. Existing repositories are often limited in size and fail to encompass essential conditions such as nighttime visibility, dense urban environments, or extreme weather, which restrict model generalization and hinder consistent benchmarking across architectures [165,167]. Advances in this area increasingly rely on collaborative efforts to construct standardized, multimodal datasets—including RGB, infrared, and thermal imagery—that capture diverse environmental conditions and support comprehensive evaluation.

- High False Alarm Rates: Deep learning-based detection systems remain prone to misclassifying confounding factors—such as sunlight glare, vehicle headlights, fog, or steam—as fire, resulting in elevated false alarm rates that compromise trust and emergency response effectiveness [30,167,169,170]. Addressing this issue has driven research toward the integration of multimodal sensor data and the development of sophisticated preprocessing and anomaly detection techniques, enabling models to better distinguish between true fire events and visual distractors.

- Computational and Resource Constraints: High-performing architectures, including Faster R-CNN and 3D CNN+LSTM models, require substantial computational resources, limiting their deployment on UAVs, IoT devices, and other edge platforms [165,168,172]. The design of lightweight neural networks, combined with hardware acceleration through TPUs, FPGAs, or other specialized processors, has become a central strategy for maintaining detection accuracy while achieving real-time performance in resource-constrained environments.

- Interpretability and Trustworthiness: The black-box nature of deep learning models presents a significant obstacle in safety-critical applications such as fire detection, where errors can have severe consequences [30,172]. Efforts to incorporate interpretability directly into model architectures and to develop standardized evaluation protocols for explainable AI have emerged as key avenues for enhancing trustworthiness and facilitating regulatory compliance.

- Integration, Standards, and Ethics: Many current systems lack evaluation within operational emergency-response pipelines and do not fully comply with international safety standards such as EN 54. Ethical concerns—including privacy, data security, and potential misuse of surveillance technologies—further complicate deployment [13,168,172]. Progress in this domain depends on coordinated efforts among AI researchers, emergency-response authorities, and policymakers to establish regulatory frameworks, operational standards, and ethical guidelines that support safe and responsible implementation.
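One simple way to suppress transient confounders, such as a passing headlight or a brief glint of sunlight, is temporal persistence: an alarm is raised only if a per-frame detector flags fire in at least k of the last n frames. The sketch below assumes a per-frame fire score in [0, 1] from some upstream model; the class name and the default values of k, n, and the score threshold are hypothetical. It is a generic post-processing heuristic, not a method proposed in the cited works.

```python
from collections import deque


class PersistenceFilter:
    """Raise an alarm only when at least k of the last n frames are flagged as fire."""

    def __init__(self, k: int = 8, n: int = 10, score_threshold: float = 0.5):
        if not 1 <= k <= n:
            raise ValueError("require 1 <= k <= n")
        self.k = k
        self.score_threshold = score_threshold
        # Sliding window of per-frame decisions; the oldest entry drops out automatically.
        self.window: deque = deque(maxlen=n)

    def update(self, frame_score: float) -> bool:
        """Add one frame's fire score and return whether the alarm should be active."""
        self.window.append(frame_score >= self.score_threshold)
        return sum(self.window) >= self.k
```

The design trades latency for reliability: a genuine fire cannot trigger an alarm sooner than k frames after it first appears, so k and n would need to be chosen with the camera frame rate and the required response time in mind.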

9. Future Directions

To overcome the aforementioned challenges, several promising research directions can enhance the robustness, efficiency, and trustworthiness of DL-based fire and smoke detection systems:

- Multimodal Sensor Fusion: Integrating heterogeneous data sources such as RGB, infrared, thermal, LiDAR, and environmental sensors can reduce false alarms and increase resilience across diverse conditions. Future work should prioritize lightweight, synchronized fusion frameworks optimized for edge deployment [173]. Popular frameworks like TensorFlow (v2.12.0), PyTorch (v2.0.1), and PyTorch Lightning (v2.0.2) can be combined with specialized toolkits (e.g., OpenVINO or NVIDIA TensorRT) to deploy multimodal fusion models on resource-constrained hardware. Research should also investigate transformer-based fusion architectures that natively combine different modalities.

- Adaptive and Lifelong Learning: Because fire dynamics and environmental conditions evolve, models must adapt continuously to new scenarios. Lifelong and continual learning methods can mitigate catastrophic forgetting while improving generalization. Libraries such as Avalanche, a continual learning framework for PyTorch (v2.0.1), and regularization methods such as Elastic Weight Consolidation (EWC) can be leveraged to implement adaptive systems [174]. Integrating online learning into UAV- and IoT-based monitoring pipelines would allow models to evolve with changing environments.

- Federated and Distributed Learning: Federated learning enables collaborative training across distributed devices without centralizing sensitive data, thereby improving dataset diversity, ensuring privacy, and reducing communication overhead [175]. Frameworks such as TensorFlow Federated, PySyft, or NVIDIA Clara FL can be applied to large-scale surveillance networks for real-time wildfire monitoring. Future systems should investigate hybrid approaches that combine federated learning with edge-cloud orchestration to achieve scalability and robustness.

- Synthetic Data and Data Augmentation: Generating synthetic data through physics-based simulators (e.g., FARSITE, Fire Dynamics Simulator) or deep generative models such as generative adversarial networks (GANs) and variational autoencoders (VAEs) can mitigate dataset scarcity [176]. Combining this with advanced augmentation strategies in libraries like Albumentations or AugLy allows the modeling of rare and hazardous fire scenarios. Such synthetic pipelines enhance robustness under conditions that are unsafe or difficult to capture in practice, and can serve as benchmarks for stress-testing models.

- Lightweight Architectures and Edge AI: Future detection systems should prioritize efficiency-focused architectures such as MobileNetV3, YOLO-tiny variants, ShuffleNet, and lightweight Vision Transformers (e.g., MobileViT) [139]. When combined with hardware accelerators such as NVIDIA Jetson, Google Coral TPU, or Intel Movidius, these designs can achieve real-time inference on UAVs, IoT devices, and embedded systems. Tools like TensorRT, ONNX Runtime, and OpenVINO can be employed for model compression, quantization, and hardware-aware optimization.

- Explainable and Transparent AI: Developing inherently interpretable models and standardized explanation methods is essential for trust and regulatory compliance. Beyond visualization techniques such as Grad-CAM, SHAP, and LIME, research should focus on architectures with built-in interpretability (e.g., attention visualization in transformers, prototype-based models) [177]. Toolkits such as Captum (PyTorch) or InterpretML can provide explainability features during both training and deployment phases. Such methods can support certification and accountability in safety-critical applications.

- Context-Aware and Attention-Based Models: Embedding semantic reasoning and advanced attention mechanisms (e.g., CBAM, SE-blocks, or transformer-based attention) enables better discrimination between real fire events and distractors such as fog, reflections, or smoke-like patterns [178]. Context-aware architectures should integrate spatiotemporal modeling and scene understanding, potentially using frameworks like Detectron2 or MMDetection for modular experimentation. These methods promise higher robustness in cluttered and dynamic real-world environments.
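To make the federated learning direction concrete, the sketch below shows federated averaging (FedAvg), a widely used aggregation rule in which a server averages client model parameters weighted by each client's number of local training samples. It is a plain-Python illustration under simplifying assumptions (parameters stored as flat lists keyed by name, and all clients sharing one architecture); the frameworks named above additionally handle client communication, scheduling, and local training, which are omitted here.

```python
from typing import Dict, List, Tuple

Params = Dict[str, List[float]]  # parameter name -> flattened values


def fed_avg(client_updates: List[Tuple[Params, int]]) -> Params:
    """Federated averaging: weight each client's parameters by its share of training samples."""
    if not client_updates:
        raise ValueError("no client updates")
    total = sum(n for _, n in client_updates)
    if total <= 0:
        raise ValueError("clients reported no training samples")
    template, _ = client_updates[0]
    global_params = {name: [0.0] * len(vals) for name, vals in template.items()}
    for params, n in client_updates:
        share = n / total
        for name, vals in params.items():
            acc = global_params[name]  # KeyError if a client's architecture differs
            if len(vals) != len(acc):
                raise ValueError(f"shape mismatch for {name!r}")
            for i, v in enumerate(vals):
                acc[i] += share * v
    return global_params
```

For example, a client holding `w = [1.0, 2.0]` trained on 1 sample and a client holding `w = [3.0, 4.0]` trained on 3 samples aggregate to `[2.5, 3.5]`, so sites with more local fire imagery pull the global model further toward their parameters.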

10. Conclusions

This review has shown how deep learning can significantly improve early-stage fire and smoke detection. It examined models in current use, including CNNs, YOLO variants, Faster R-CNN, and spatiotemporal models (e.g., 3D CNNs combined with LSTMs), which can detect fire within practical response times and with high precision, depending on the scenario (e.g., metropolitan centres, suburban areas, and rural or forested regions). In addition, the use of diverse data modalities (e.g., RGB, thermal, and infrared) and of compact, fast models such as Fire-YOLOv5 and EFA-YOLO allows early-detection systems to run on low-power devices in challenging environments.

However, significant issues remain. One is the lack of large, high-quality, and diverse image datasets, which calls into question whether early reported successes will hold in real-world applications. Another is false positives, which arise when flame detection models respond to fog or strong sunlight. Furthermore, deep learning models typically require substantial computing resources, so continued improvements in model size and efficiency are needed for deployment on smaller devices. Finally, the complexity of these models calls for transparency mechanisms (e.g., Grad-CAM) to increase trust in their outputs, especially when decisions must be made in time-critical emergencies.

Unlike prior reviews that have predominantly concentrated on handcrafted techniques, video-based detection, or limited subsets of datasets and models, this study offers a more comprehensive perspective. It integrates sensor-based, machine learning, and deep learning methodologies into a cohesive framework; proposes a structured taxonomy of datasets organized into multiple categories; and systematically explores deployment contexts spanning forests, tunnels, urban environments, agriculture, maritime domains, and satellite monitoring. Additionally, it places emphasis on edge AI and lightweight architectures for real-time implementation—an area that has been under-represented in previous surveys. These facets underscore the novelty and distinctive contribution of the present work.

The central premise of this review asserts that the challenge of achieving reliable early detection of fire and smoke can be effectively addressed only through a comprehensive integration of methodologies, datasets, and deployment strategies, rather than through incremental advancements in isolated models. By leveraging multimodal sensing, lightweight model architectures, dataset augmentation, federated learning, and explainable AI, the field can attain both predictive accuracy and operational practicality. This approach reconceptualizes the issue as a systems-level challenge, necessitating innovation across technical, infrastructural, and ethical domains.

Moving forward, future studies could develop better methods for combining data sources and imaging modalities to increase accuracy while reducing false positives. Synthetic dataset generation and federated learning could also help address data scarcity. Compact, adaptable models are essential for deployment on drones, satellites, and IoT devices. There is also an urgent need for concrete standards and published ethical guidelines to promote safe and responsible use of these technologies. Addressing these challenges will help deep learning-based fire detection systems become practical tools that can save lives and limit the impact of fire incidents. This review provides a foundation for researchers and developers to build such systems with greater practical relevance.

Author Contributions

Conceptualization, A.E., S.E., P.D. and S.S.; data curation, A.E. and S.E.; formal analysis, A.E., S.E., P.D. and S.S.; funding acquisition, A.E.; methodology, A.E., S.E., P.D. and S.S.; project administration, A.E., S.E., P.D. and S.S.; supervision, A.E., S.E., P.D. and S.S.; validation, A.E., S.E., P.D. and S.S.; visualization, A.E., S.E., P.D. and S.S.; writing—original draft, A.E. and S.E.; writing—review and editing, A.E., S.E., P.D. and S.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No new data were created or analyzed during this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| BiFPN | Bi-directional Feature Pyramid Network |
| CBAM | Convolutional Block Attention Module |
| CNN | Convolutional Neural Network |
| DL | Deep Learning |
| DNN | Deep Neural Network |
| Faster R-CNN | Faster Region-based Convolutional Neural Network |
| GPU | Graphics Processing Unit |
| Grad-CAM | Gradient-weighted Class Activation Mapping |
| IR | Infrared |
| IoT | Internet of Things |
| KD | Knowledge Distillation |
| LSTM | Long Short-Term Memory |
| mAP | mean Average Precision |
| ML | Machine Learning |
| NIR | Near-Infrared |
| R-CNN | Region-based Convolutional Neural Network |
| RGB | Red, Green, Blue |
| RNN | Recurrent Neural Network |
| TPU | Tensor Processing Unit |
| UAV | Unmanned Aerial Vehicle |
| ViT | Vision Transformer |
| WSOL | Weakly Supervised Object Localization |
| YOLO | You Only Look Once |

References

- Bowman, D.M.J.S.; Balch, J.K.; Artaxo, P.; Bond, W.J.; Carlson, J.M.; Cochrane, M.A.; D’Antonio, C.M.; DeFries, R.S.; Doyle, J.C.; Harrison, S.P.; et al. Fire in the earth system. Science 2009, 324, 481–484.

- World Health Organization. Burns. Available online: https://www.who.int/news-room/fact-sheets/detail/burns (accessed on 18 September 2025).

- Geetha, S.; Abhishek, C.S.; Akshayanat, C.S. Machine vision based fire detection techniques: A survey. Fire Technol. 2021, 57, 591–623.

- Brenner, M.; Siqueira, H.V.; Gonçalves, L.M.G.; Pereira, A.S. RGB-D and thermal sensor fusion: A systematic literature review. IEEE Access 2023, 11, 82410–82442.

- Roy, D.P.; Boschetti, L.; Justice, C.O.; Ju, J. The collection 5 MODIS burned area product—Global evaluation by comparison with the MODIS active fire product. Remote Sens. Environ. 2008, 112, 3690–3707.

- Wang, H.; Zhou, L.; Wang, L. Miss detection vs. false alarm: Adversarial learning for small object segmentation in infrared images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

- Wang, L.; Zheng, Y.; Yu, Y.; Liu, L.; Lu, H. A deep learning-based experiment on forest wildfire detection in machine vision course. IEEE Access 2023, 11, 32671–32681.

- Zhang, L.; Zhang, Q.; Zhao, R. Progressive dual-attention residual network for salient object detection. IEEE Trans. Circuits Syst. Video Technol. 2022, 32, 5902–5915.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI), Savannah, GA, USA, 2–4 November 2016.

- Xu, H.; Yu, Y.; Su, Y.; Zhang, Y.; Tang, Y.; Zhang, C. Light-YOLOv5 in complex fire scenarios. Remote Sens. 2022, 14, 1330.

- Muhammad, K.; Ahmad, J.; Lv, Z.; Bellavista, P.; Yang, P.; Baik, S.W. Efficient deep CNN-based fire detection and localization in video surveillance applications. IEEE Trans. Syst. Man Cybern. Syst. 2018, 49, 1419–1434.

- Sucuoğlu, H.S.; Böğrekci, İ.; Demircioğlu, P. Real time fire detection using faster R-CNN model. Int. J. 3D Print. Technol. Digit. Ind. 2019, 3, 220–226.

- Zhao, M.; Barati, M. A real-time fault localization in power distribution grid for wildfire detection through deep convolutional neural networks. IEEE Trans. Ind. Appl. 2021, 57, 4316–4326.

- Dewangan, A.; Krishna, K.M.; Krishna, P.V.; Tripathi, R.; Krishna, C.M. FIgLib & SmokeyNet: Dataset and deep learning model for real-time wildland fire smoke detection. Remote Sens. 2022, 14, 1007.

- Torabian, M.; Pourghassem, H.; Mahdavi-Nasab, H. Fire detection based on fractal analysis and spatio-temporal features. Fire Technol. 2021, 57, 2583–2614.

- Nosseir, A.E.; Kashani, A.; Ghelfi, P.; Bogoni, A. OFS-embedded smart composites: OFDR distributed sensing for structural condition and operation monitoring in spacecraft propellant tank. In Proceedings of the 29th International Conference on Optical Fiber Sensors, Alexandria, VA, USA, 13–17 May 2025; Volume 13639, pp. 1573–1576.

- Zhou, Z.; Liu, X.; Li, Y.; Zheng, Y.; Xu, H. High-performance fire detection framework based on feature enhancement and multimodal fusion. J. Saf. Sci. Resil. 2025, 7, 100212.

- MIVIA. Fire Detection Dataset. Available online: https://mivia.unisa.it/datasets/video-analysis-datasets/fire-detection-dataset/ (accessed on 18 September 2025).

- Ansmann, A.; Baars, H.; Engelmann, R.; Althausen, D.; Haarig, M.; Seifert, P.; Ohneiser, K.; Düsing, S.; Wandinger, U. CALIPSO aerosol-typing scheme misclassified stratospheric fire smoke: Case study from the 2019 Siberian wildfire season. Front. Environ. Sci. 2021, 9, 769852.

- Li, Y.; Liu, Q.; Gao, X.; Zhang, Y.; Zhang, X. Real-time early indoor fire detection and localization on embedded platforms with fully convolutional one-stage object detection. Sustainability 2023, 15, 1794.

- Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 618–626.

- Nosseir, A.E.; Ghelfi, P.; Bogoni, A.; Hassan, M. Design and prototyping of a smart propellant tank for spacecraft. In Proceedings of the 75th International Astronautical Congress (IAC), Baku, Azerbaijan, 14–18 October 2024.

- Gaur, A.; Singh, A.; Kumar, A.; Kumar, A.; Kapoor, K. Video flame and smoke based fire detection algorithms: A literature review. Fire Technol. 2020, 56, 1943–1980.

- Jin, C.; Wang, T.; Alhusaini, N.; Zhao, S.; Liu, H.; Xu, K.; Zhang, J. Video fire detection methods based on deep learning: Datasets, methods, and future directions. Fire 2023, 6, 315.

- Gragnaniello, D.; Greco, A.; Sansone, C.; Vento, B. Fire and smoke detection from videos: A literature review under a novel taxonomy. Expert Syst. Appl. 2024, 255, 124783.

- Alkhatib, R.; Sahwan, W.; Alkhatieb, A.; Schütt, B. A brief review of machine learning algorithms in forest fires science. Appl. Sci. 2023, 13, 8275.

- Ghali, R.; Akhloufi, M.A. Deep learning approaches for wildland fires remote sensing: Classification, detection, and segmentation. Remote Sens. 2023, 15, 1821.

- Vasconcelos, R.N.; Franca Rocha, W.J.S.; Costa, D.P.; Duverger, S.G.; Santana, M.M.M.; Cambui, E.C.B.; Ferreira-Ferreira, J.; Oliveira, M.; Barbosa, L.S.; Cordeiro, C.L. Fire detection with deep learning: A comprehensive review. Land 2024, 13, 1696.

- Yang, S.; Huang, Q.; Yu, M. Advancements in remote sensing for active fire detection: A review of datasets and methods. Sci. Total Environ. 2024, 943, 173273.

- Sulthana, S.F.; Wise, C.T.A.; Ravikumar, C.V.; Anbazhagan, R.; Idayachandran, G.; Pau, G. Review study on recent developments in fire sensing methods. IEEE Access 2023, 11, 90269–90282.

- Özel, B.; Alam, M.S.; Khan, M.U. Review of modern forest fire detection techniques: Innovations in image processing and deep learning. Information 2024, 15, 538.

- Khan, R.A.; Bajwa, U.I.; Raza, R.H.; Anwar, M.W. Beyond boundaries: Advancements in fire and smoke detection for indoor and outdoor surveillance feeds. Eng. Appl. Artif. Intell. 2025, 142, 109855.

- Diaconu, B.M. Recent advances and emerging directions in fire detection systems based on machine learning algorithms. Fire 2023, 6, 441.

- Lee, J.-G.; Jun, S.; Cho, Y.-W.; Lee, H.; Kim, G.B.; Seo, J.B.; Kim, N. Deep learning in medical imaging: General overview. Korean J. Radiol. 2017, 18, 570–584.

- Goldammer, J.G.; Kashparov, V.; Zibtsev, S.; Robinson, S. Best Practices and Recommendations for Wildfire Suppression in Contaminated Areas, with Focus on Radioactive Terrain. Global Fire Monitoring Center (GFMC): Freiburg, Basel, Kyiv, INIS-UA–21O0045; 2014.

- Fonollosa, J.; Solórzano, A.; Marco, S. Chemical sensor systems and associated algorithms for fire detection: A review. Sensors 2018, 18, 553.

- Wikipedia. Aspirating Smoke Detector. Available online: https://en.wikipedia.org/wiki/Aspirating_smoke_detector (accessed on 18 September 2025).

- Wikipedia. Flame Detector. Available online: https://en.wikipedia.org/wiki/Flame_detector (accessed on 18 September 2025).

- Wiśnios, M.; Paciorek, M.; Szulim, R.; Poźniak, K. Identifying characteristic fire properties with stationary and non-stationary fire alarm systems. Sensors 2024, 24, 2772.

- EN 54; Fire Detection and Fire Alarm Systems. European Committee for Standardization: Brussels, Belgium, 2011.

- Kiwa. EN 14604 Certification Smoke Alarm Devices. Kiwa.com, 11 April 2023. Available online: https://www.kiwa.com/en/services/certification/en-14604-certification-smoke-alarm-devices/ (accessed on 18 September 2025).

- Giglio, L.; Randerson, J.T.; van der Werf, G.R. Analysis of daily, monthly, and annual burned area using the fourth-generation global fire emissions database (GFED4). J. Geophys. Res. Biogeosci. 2013, 118, 317–328.

- Honary, R.; Shelton, J.; Kavehpour, P. A review of technologies for the early detection of wildfires. ASME Open J. Eng. 2025, 4, 040803.

- Lee, Y.; Shim, J. False positive decremented research for fire and smoke detection in surveillance camera using spatial and temporal features based on deep learning. Electronics 2019, 8, 1167.

- Zhang, B.; Li, Y. Research of deep learning-based fire and smoke detection using adaptive attention. In Proceedings of the SPIE, 6th International Conference on Optical and Photonic Engineering (icOPEN), Chongqing, China, 23 January 2024; Volume 13575, p. 135752B.

- Frizzi, S.; Kaabi, R.; Bouchouicha, M.; Ginoux, J.M.; Rovetta, A. Convolutional neural network for video fire and smoke detection. In Proceedings of the 14th International Conference on Control, Automation, Robotics and Vision (ICARCV), Phuket, Thailand, 13–15 November 2016; pp. 1057–1062.

- Sathishkumar, V.E.; Cho, J.; Subramanian, M.; Naren, O.S. Forest fire and smoke detection using deep learning-based learning without forgetting. Fire Ecol. 2023, 19, 9.

- Khan, A.; Hassan, B.; Khan, S.; Ahmed, R.; Abuassba, A. DeepFire: A novel dataset and deep transfer learning benchmark for forest fire detection. Mob. Inf. Syst. 2022, 2022, 5358359.

- Sousa, M.J.; Moutinho, A.; Almeida, M. Wildfire detection using transfer learning on augmented datasets. Expert Syst. Appl. 2020, 142, 112975.

- Ghani, R.F. Robust real-time fire detector using CNN and LSTM. In Proceedings of the IEEE Student Conference on Research and Development (SCOReD), Selangor, Malaysia, 2–4 December 2019.

- Chaoxia, C.; Shang, W.; Zhang, F. Information-guided flame detection based on Faster R-CNN. IEEE Access 2020, 8, 58923–58932.

- Li, L.; Liu, F.; Ding, Y. Real-time smoke detection with Faster R-CNN. In Proceedings of the 2021 2nd International Conference on Artificial Intelligence and Information Systems, Chongqing, China, 28–30 May 2021; pp. 1–5.

- Kim, B.; Lee, J. A Bayesian network-based information fusion combined with DNNs for robust video fire detection. Appl. Sci. 2021, 11, 7624.

- Khan, S.; Muhammad, K.; Hussain, T.; Del Ser, J.; Cuzzolin, F.; Bhattacharyya, S.; Akhtar, Z.; de Albuquerque, V.H.C. DeepSmoke: Deep learning model for smoke detection and segmentation in outdoor environments. Expert Syst. Appl. 2021, 182, 115125.

- Jia, Y.; Chen, W.; Yang, M.; Wang, L.; Liu, D.; Zhang, Q. Video smoke detection with domain knowledge and transfer learning from deep convolutional neural networks. Optik 2021, 240, 166947.

- Shahriar Sozol, M.S.; Mondal, M.R.H.; Thamrin, A.H. Indoor fire and smoke detection based on optimized YOLOv5. PLoS ONE 2025, 20, e0322052.