2. Wu Dialect Speech Data Processing

2.1. Methodology

The study adopts a documented, literature-grounded methodology that specifies where, when, how, and under what speaking conditions Wu-dialect recordings were made, and how the data were curated for recognition experiments.

2.1.1. Study Sites, Period, and Regional Coverage

Recordings and collections span 2023–2025 and cover core Wu regions, including Suzhou, Shanghai, Ningbo, Wenzhou, and Lishui (see Table 2). On-site recordings were conducted indoors in a speech laboratory at Nanjing University of Posts and Telecommunications under controlled acoustics (background noise < 30 dB). They used a Rode NT1-A condenser microphone and a Focusrite Scarlett 2i2 audio interface (manufacturer: Focusrite Audio Engineering Ltd., High Wycombe, UK) and were stored as 16 kHz, 16-bit mono WAV files. No outdoor “street” recordings were included, in order to control background noise and reverberation. The complementary continuous-speech subset was sourced from public online platforms (e.g., local news, interviews, and podcasts) to reflect natural usage in the wild while respecting platform policies.

2.1.2. Speaking Tasks and Topic Design

Two complementary speaking conditions were used to balance scripted (controlled) and spontaneous (naturalistic) speech.

Scripted reading (WXDPC-1) involved reading isolated words, short sentences, and structural contrast sentences designed to control phoneme–tone coverage and to facilitate alignment. Topics emphasized high-frequency daily lexicon—numbers, time expressions, place names, kinship terms, and action verbs—and culturally salient items to ensure representative coverage.

Spontaneous continuous speech (WXDPC-2) comprised interviews, conversational podcasts, short reportage, and local storytelling. These recordings preserve free speech with authentic speaking rates and discourse phenomena. The content spans everyday communication, regional expressions, and narrative discourse. This pairing follows common practice in dialect ASR corpora, which couple clean, balanced prompts with in-the-wild speech for generalization [10,15].

2.1.3. Participants and Ethics

Twenty native Wu speakers were recruited with balanced gender (50/50) and three age bands (18–30, 31–59, ≥60), distributed across the five regions above to capture intra-dialect diversity. All participants provided written informed consent. Web-sourced materials were limited to publicly available content and processed in accordance with intellectual-property requirements.

2.1.4. Annotation Protocol and Quality Assurance

Forced alignment was combined with manual verification so that each audio segment was paired with its exact transcript and region label. Quality checks excluded samples with abnormal speaking rates, semantic deviations, or unclear articulation; problematic items were re-recorded under supervision. A double-annotation-with-arbitration scheme was applied to ~20% of the samples, yielding Cohen’s κ = 0.82 at the phoneme level and κ = 0.85 at the word level, both above 0.8 and thus indicating high inter-annotator agreement. These procedures follow reliability standards commonly adopted in low-resource dialect corpora [10].

2.1.5. Preprocessing and Feature Preparation

Standard low-resource ASR preprocessing was used: pre-emphasis, endpoint detection (short-term energy + zero-crossing rate), and framing with Hamming windowing, prior to extracting MFCCs and i-vectors for downstream modeling [12,16]. This pipeline is widely reported to improve robustness for dialect recognition with limited data [17].

2.1.6. Rationale and Literature Support

Combining scripted and spontaneous speech provides both clean phonetic coverage and ecological validity, a design shown to stabilize training and reduce error variance as corpus size and diversity increase [10,15]. The preprocessing choices above are standard and effective for complex phonological systems [12,16]. This section consolidates these procedures in one place; the detailed operational steps are given in the subsequent subsections.

2.2. Construction and Basic Introduction of the Wu Dialect Speech Corpus (WXDPC)

In this study, corpus construction is a core element of the Wu dialect speech recognition system, because it determines the system’s recognition performance and generalization ability. The goal of corpus construction is to ensure that the selected data are representative and cover as many natural language phenomena as possible, so that they effectively support the training of the deep learning model. To achieve this goal, we followed the principles and steps below:

1. Data Collection

Data collection was carried out in two ways: first, through manual field recording, and second, by collecting Wu-dialect speech data from online platforms (such as videos and podcasts). All data underwent strict screening to remove noise and interfering sounds.

2. Data Annotation

During annotation, we combined manual annotation with automatic alignment to ensure that each audio file corresponds accurately to its text and region label.

3. Data Quality Control

For each speech sample, we performed quality checks to ensure that the recordings were clear, free of noise, and produced at a natural speaking rate consistent with real-world speech.

All audio data underwent multiple rounds of review and annotation-consistency checks to ensure high data quality. For annotation consistency, this study used a “double annotation + arbitration” mechanism: approximately 20% of the samples were independently annotated by two annotators, and agreement metrics were calculated. Cohen’s κ was 0.82 at the phoneme level and 0.85 at the word level, both above 0.8, indicating a high level of agreement and supporting the reliability of the corpus annotation.
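For reference, Cohen’s κ compares observed agreement p_o with the agreement p_e expected by chance from each annotator’s label frequencies, κ = (p_o − p_e)/(1 − p_e). The following is a minimal sketch, assuming the two annotators’ labels have already been aligned item by item (e.g., one phoneme label per aligned segment); the function name and the toy labels are hypothetical and are not taken from the corpus.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labelled the same items (hypothetical helper)."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label sequences")
    n = len(labels_a)
    # Observed agreement: fraction of items given identical labels.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: product of the two annotators' marginal label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_e == 1.0:
        return 1.0  # both annotators used one identical label everywhere
    return (p_o - p_e) / (1 - p_e)

# Toy phoneme labels from two annotators for ten aligned segments (illustrative only).
ann1 = ["b", "p", "b", "d", "t", "d", "g", "k", "g", "b"]
ann2 = ["b", "p", "b", "d", "d", "d", "g", "k", "g", "p"]
print(round(cohens_kappa(ann1, ann2), 3))  # 0.753
```

Here the annotators agree on 8 of 10 items (p_o = 0.8), but because some agreement would occur by chance (p_e = 0.19), κ is lower than the raw agreement rate.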

The basic information of the Wu Dialect Speech Corpus is presented in Table 2. All data collection procedures strictly followed the ethical review process, and written consent was obtained from all speakers for research purposes.

2.3. Establishment of the WXDPC-1 Wu Dialect Isolated Speech Corpus

WXDPC-1 is the core subset of the Wu Dialect Speech Corpus constructed in this study. It primarily collects isolated words and short sentences through on-site manual recording. The goal is to provide high-quality speech resources for building standard phoneme mappings, training basic acoustic models, and analyzing regional phonetic differences in Wu dialects. The establishment process includes multiple stages: determining survey items, selecting speakers, designing corpus content, conducting recordings, and organizing data. These steps ensure that the collected speech data are authentic, representative, and suitable for modeling [18].

During the corpus survey and design stage, the research team extensively consulted materials such as The Grand Dictionary of Modern Chinese Dialects, Dialect Survey Manual for the Lower Yangtze River Region, elementary school textbooks from Wu-speaking areas, and subtitle corpora from television programs. Combined with interviews with Wu dialect speakers, the team developed a set of survey items covering three types of corpus materials: basic vocabulary, commonly used colloquial expressions, and structural contrast sentences. Specific items include numbers, place names, time expressions, kinship terms, spatial expressions, action verbs, interjections, and culturally distinctive short sentences.

The overall corpus design followed the principles of “frequency priority, comprehensive coverage, and clear structure” to meet the extensive phoneme–tone combination requirements of speech recognition training. In total, approximately 18,000 isolated-speech items were finalized [10]. The number and content of items were adjusted slightly across speaker groups according to their dialect subregions, to enhance the naturalness and regional adaptability of the corpus. The consonant and vowel inventories are shown in Table 3 and Table 4.

For speaker recruitment, this study followed the principle of three-dimensional balance across gender, age, and region, selecting a total of 20 speakers from core Wu dialect areas such as Suzhou, northern Zhejiang, and Shanghai. Gender was balanced, with equal numbers of male and female speakers (50/50). Age covered three groups: youth (18–30 years), middle-aged (31–59 years), and elderly (60 years and above). Geographically, the speakers were distributed across five representative regions (Suzhou, Shanghai, Ningbo, Wenzhou, and Lishui), ensuring the diversity and balance of the speech samples. This multidimensional balance helps reduce bias from any single group and makes the corpus more representative of the characteristics of Wu dialect. During recruitment, the research team pre-assessed each speaker’s proficiency in Mandarin, ability to control speaking rate, and clarity of expression, to ensure stable recording quality and representative pronunciation. All participants signed an Informed Consent for Audio Data Usage, ensuring the legality and ethical compliance of using the recordings for research purposes.

Although this study recruited only 20 speakers, their distribution covers key Wu dialect areas such as Suzhou, northern Zhejiang, and Shanghai, preserving regional diversity within a limited scale. We emphasize that the goal of this study is not to cover all Wu dialect speakers, but to provide a representative, small-scale, high-quality dataset that supports cross-regional acoustic modeling. Similar small-scale corpora have been used in dialect speech recognition research, which suggests that this design is feasible for academic research.

The recording work was carried out in the speech lab of Nanjing University of Posts and Telecommunications, with environmental noise controlled below 30 dB. A Rode NT1-A condenser microphone paired with a Focusrite Scarlett 2i2 audio interface was used, and recordings were captured and segmented using Audacity software (version 3.4.2). The technical parameters were uniformly set to a sampling rate of 16 kHz, 16-bit quantization depth, and mono WAV format. Each recording was named and organized according to its entry number to facilitate subsequent annotation and processing. To ensure the quality of speech samples, each recording was initially monitored on-site by technicians, and samples with overly fast speech, semantic deviations, or unclear articulation were immediately re-recorded. After completion, all audio data were centrally stored on a server with backup copies archived. The final WXDPC-1 dataset contains approximately 21 h of recorded speech, with a total size of 5.10 GB, serving as the foundational corpus resource for subsequent acoustic modeling and semantic feedback optimization in this study.
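Because every WXDPC-1 file is expected to share the same technical parameters (16 kHz, 16-bit, mono WAV), a simple conformance check can be run over the archive before annotation. The sketch below uses only Python’s standard `wave` module; the helper names `check_wav_format` and `make_silent_wav` are hypothetical, and the silent test file is synthetic rather than corpus audio.

```python
import io
import wave

# Target format reported for WXDPC-1 recordings.
EXPECTED_RATE_HZ = 16000
EXPECTED_SAMPLE_WIDTH_BYTES = 2  # 16-bit quantization
EXPECTED_CHANNELS = 1            # mono

def check_wav_format(source):
    """Return (conforms, duration_s) for a WAV path or binary file-like object."""
    with wave.open(source, "rb") as wav:
        conforms = (
            wav.getframerate() == EXPECTED_RATE_HZ
            and wav.getsampwidth() == EXPECTED_SAMPLE_WIDTH_BYTES
            and wav.getnchannels() == EXPECTED_CHANNELS
        )
        duration_s = wav.getnframes() / wav.getframerate()
    return conforms, duration_s

def make_silent_wav(seconds, rate=EXPECTED_RATE_HZ, channels=EXPECTED_CHANNELS):
    """Build an in-memory 16-bit WAV of silence, for demonstration only."""
    buf = io.BytesIO()
    with wave.open(buf, "wb") as wav:
        wav.setnchannels(channels)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * channels * int(seconds * rate))
    buf.seek(0)  # rewind so the buffer can be read back
    return buf

print(check_wav_format(make_silent_wav(0.5)))              # (True, 0.5)
print(check_wav_format(make_silent_wav(0.5, rate=44100)))  # (False, 0.5)
```

Summing `duration_s` over all conforming files gives a quick cross-check of the total recorded duration reported for the subset.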

All speech data were obtained with the participants’ written informed consent and comply with relevant ethical requirements. The corpus is released under the following terms:

Data License: The speech data in this study are released under the CC BY-NC 4.0 license, which allows researchers to use, share, and adapt the data for non-commercial purposes with attribution.

Data Sharing: The speech data, annotation information, and model code can be obtained by contacting the corresponding author via email, so that other researchers can replicate and verify the results of this study.

2.4. Preliminary Data Processing

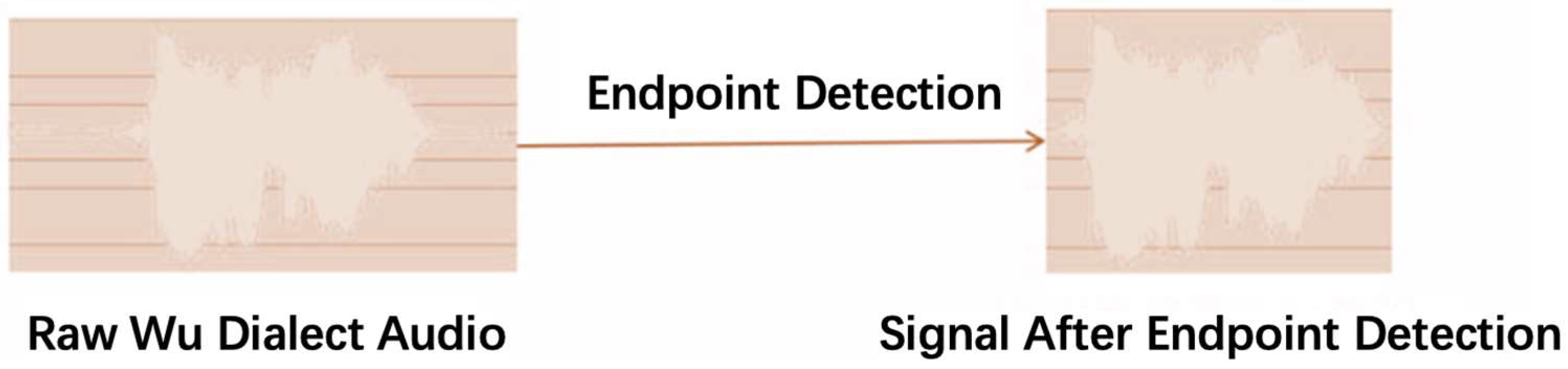

To ensure that the collected Wu dialect speech data meet the input requirements of subsequent acoustic modeling and speech recognition, the preprocessing stage applied pre-emphasis, endpoint detection, framing, and windowing. These methods have been widely validated in low-resource speech recognition, particularly for dialect recognition in phonologically complex languages. For endpoint detection, short-term energy and zero-crossing rate decisions were combined to remove non-speech interference and extract valid speech segments. The digitization process of speech signals is shown in Figure 1.

2.4.1. Pre-Emphasis Processing

Before features are extracted, pre-emphasis is applied to the speech signal [19]. This first-order high-pass filter boosts the high-frequency components of the original signal, compensating for the attenuation of high-frequency energy in the speech waveform.

The transfer function of the pre-emphasis filter is

$$H(z) = 1 - \alpha z^{-1}$$

where $z^{-1}$ denotes a one-sample delay and $\alpha$ is the pre-emphasis coefficient, set to 0.97, a widely used value in speech recognition research [12]. In this study, pre-emphasis was applied uniformly to all speech samples, which helps the acoustic model resolve consonants and other high-frequency components more clearly [12].

Pre-emphasis is implemented as a difference equation. If the $n$-th sample of the input signal is $x(n)$ and the output is $y(n)$, then:

$$y(n) = x(n) - \alpha x(n-1)$$
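The difference equation y(n) = x(n) − αx(n−1) with α = 0.97 can be sketched in a few lines of plain Python. The function name is hypothetical, and passing the first sample through unchanged (since x(−1) does not exist) is an assumed boundary convention, not one stated in the text.

```python
def pre_emphasis(x, alpha=0.97):
    """Apply y(n) = x(n) - alpha * x(n - 1); the first sample is passed through unchanged."""
    if not x:
        return []
    y = [float(x[0])]  # no previous sample exists for n = 0
    for n in range(1, len(x)):
        y.append(x[n] - alpha * x[n - 1])
    return y

# A constant (DC) signal is almost entirely suppressed after the first sample,
# while a rapidly alternating (high-frequency) signal is amplified.
print([round(v, 2) for v in pre_emphasis([1.0, 1.0, 1.0, 1.0])])  # [1.0, 0.03, 0.03, 0.03]
print([round(v, 2) for v in pre_emphasis([1.0, -1.0, 1.0])])      # [1.0, -1.97, 1.97]
```

The two calls illustrate the high-pass behaviour of H(z) = 1 − αz⁻¹: low-frequency content is attenuated and high-frequency content is emphasized.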

2.4.2. Endpoint Detection

The next step is endpoint detection, which removes leading and trailing silence as well as non-speech interference from each speech clip, locating the true start and end points of pronunciation. Endpoint detection used a joint decision algorithm based on short-time energy and zero-crossing rate, with thresholds set within the ranges recommended in prior studies [16]; speech-segment boundaries are determined by analyzing frame-level energy variation and the number of waveform zero crossings. This process not only reduces the data size but also increases the density of useful information during model training [16].

The signal before and after endpoint detection is shown in Figure 2. Endpoint detection trims the ineffective silent segments, saving computational resources for subsequent analysis.
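The joint energy/zero-crossing decision can be illustrated with a classic dual-threshold scheme: frames with high short-time energy form the speech core, the boundaries are extended while energy stays above a lower threshold, and a few more frames are added while the zero-crossing rate stays high (to keep weak unvoiced onsets such as fricatives). This is a minimal sketch under stated assumptions: the function name and all threshold values are hypothetical and illustrative, not the values used in this study, and the input is assumed to be normalized to [−1, 1].

```python
import math

def detect_endpoints(x, frame_len=400, hop=160, energy_high=1.0,
                     energy_low=0.1, zcr_thresh=0.3, max_zcr_frames=5):
    """Dual-threshold endpoint detection on short-time energy and zero-crossing rate.

    Returns (start_sample, end_sample) of the detected speech region, or None if no
    frame reaches the high energy threshold. All thresholds are illustrative.
    """
    frames = [x[i:i + frame_len] for i in range(0, len(x) - frame_len + 1, hop)]
    if not frames:
        return None
    # Short-time energy and zero-crossing rate of every frame.
    energy = [sum(s * s for s in f) for f in frames]
    zcr = [sum((a >= 0) != (b >= 0) for a, b in zip(f, f[1:])) / (frame_len - 1)
           for f in frames]
    # Stage 1: the speech core spans the frames whose energy reaches the high threshold.
    core = [i for i, e in enumerate(energy) if e >= energy_high]
    if not core:
        return None
    start, end = core[0], core[-1]
    # Stage 2: extend outward while energy stays above the low threshold.
    while start > 0 and energy[start - 1] >= energy_low:
        start -= 1
    while end < len(frames) - 1 and energy[end + 1] >= energy_low:
        end += 1
    # Stage 3: extend a few more frames while the zero-crossing rate stays high.
    for _ in range(max_zcr_frames):
        if start > 0 and zcr[start - 1] >= zcr_thresh:
            start -= 1
        else:
            break
    for _ in range(max_zcr_frames):
        if end < len(frames) - 1 and zcr[end + 1] >= zcr_thresh:
            end += 1
        else:
            break
    return start * hop, end * hop + frame_len

# Synthetic clip at 16 kHz: 0.1 s silence, 0.2 s of a 440 Hz tone, 0.1 s silence.
rate = 16000
signal = ([0.0] * 1600
          + [math.sin(2 * math.pi * 440 * n / rate) for n in range(3200)]
          + [0.0] * 1600)
print(detect_endpoints(signal))  # (1280, 5040)
```

The returned boundaries are frame-aligned, so they can extend slightly beyond the true tone boundaries (samples 1600 to 4800) by up to one frame.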

2.4.3. Framing and Windowing

After endpoint detection, the research team applied framing and windowing to the speech signals. Because speech is a non-stationary signal, it can only be regarded as quasi-stationary over short durations (approximately 10–30 ms). To extract temporal features, each speech segment in this study was divided into frames of 25 ms (400 samples) with a frame shift of 10 ms (160 samples), so that consecutive frames overlap by 15 ms (240 samples), which improves temporal resolution [20]. Each frame was weighted with a Hamming window to reduce spectral leakage caused by frame-edge discontinuities and to stabilize frequency-domain features. The schematic diagram of framing and windowing is shown in Figure 3.
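With 25 ms frames and a 10 ms shift at 16 kHz, a signal of N samples yields 1 + ⌊(N − 400)/160⌋ complete frames, i.e., 98 frames per second of speech. The sketch below shows framing plus the standard symmetric Hamming window w(n) = 0.54 − 0.46 cos(2πn/(N − 1)); the function names are hypothetical, and dropping trailing samples that do not fill a whole frame is an assumed convention (toolkits differ in how they treat the signal edges).

```python
import math

SAMPLE_RATE = 16000
FRAME_LEN = 400    # 25 ms at 16 kHz
FRAME_SHIFT = 160  # 10 ms at 16 kHz

def hamming(n_points):
    """Symmetric Hamming window w(n) = 0.54 - 0.46 cos(2*pi*n / (N - 1))."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * n / (n_points - 1))
            for n in range(n_points)]

def frame_and_window(x, frame_len=FRAME_LEN, shift=FRAME_SHIFT):
    """Split x into overlapping frames and weight each frame with a Hamming window.

    Trailing samples that do not fill a complete frame are dropped.
    """
    if len(x) < frame_len:
        return []
    window = hamming(frame_len)
    num_frames = 1 + (len(x) - frame_len) // shift
    return [[x[i * shift + n] * window[n] for n in range(frame_len)]
            for i in range(num_frames)]

one_second = [1.0] * SAMPLE_RATE
frames = frame_and_window(one_second)
print(len(frames))  # 98 = 1 + (16000 - 400) // 160
```

The window tapers each frame to 0.08 at both edges, which is what suppresses the discontinuities that would otherwise cause spectral leakage.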

2.5. Data Interpretation Methods and Motivation for Method Selection

In this study, our data interpretation method relies on a deep understanding of the diversity and complexity of Wu dialects. To effectively handle the regional variations in Wu dialects, we adopted a deep learning-based multi-level feature extraction and modeling strategy. By using acoustic features such as MFCC (Mel-Frequency Cepstral Coefficients) and i-vectors, we are able to extract the time-frequency features from speech signals, capturing fine-grained phonetic variations in Wu dialects, including phonemes, tones, and prosody. Additionally, considering the significant regional differences within Wu dialects, we introduced geographical region labels and integrated these labels with acoustic features through the GeoDNN-HMM model, further enhancing the model’s recognition accuracy. This approach enables the model to account for phonetic variations within dialects, addressing the speech differences between different regions of Wu dialect.

The motivation for selecting this method lies in the fact that the diversity of Wu dialects makes traditional single-model approaches inadequate for handling their complex linguistic phenomena. By combining Deep Neural Networks (DNN) and Hidden Markov Models (HMM), we are able to model the complex relationships between phonemes and speech signals effectively, while leveraging transfer learning to improve the model’s generalization ability in low-resource dialect regions. Through the two-level phoneme mapping, we are able to model the phonemes of Wu dialects in greater detail, addressing issues such as tones and variant pronunciations that traditional methods struggle to handle.

During encoding and decoding, we set several key parameters to balance efficient feature extraction against accurate decoding. First, in the preprocessing phase, thresholds on short-term energy and zero-crossing rate control the extraction of valid speech segments, ensuring the quality of the training data. Second, in decoding, the beam size and lattice beam determine how aggressively competing hypotheses are pruned: wider beams retain more alternatives, which helps preserve the correct syllable and phoneme sequences for the complex pronunciation patterns of Wu dialects, at the cost of slower decoding. Finally, during training, we applied regularization techniques (such as L2 regularization) and dropout to prevent overfitting, ensuring the model’s stability and generalization under multi-region and multi-dialect conditions.
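Beam pruning keeps only the partial hypotheses whose accumulated cost lies within a fixed margin (the beam) of the current best one; Kaldi-style decoders expose this margin as `--beam`, and apply a related margin, `--lattice-beam`, when deciding which alternative paths to keep in the output lattice. The sketch below illustrates only the beam criterion at a single decoding step. The function name, the hypothesis strings, and their costs are hypothetical.

```python
def beam_prune(hypotheses, beam):
    """Keep hypotheses whose cost lies within `beam` of the best (lowest) cost.

    hypotheses maps a partial transcription to its accumulated cost, taken here as a
    negative log-likelihood, so lower is better.
    """
    if not hypotheses:
        return {}
    best = min(hypotheses.values())
    return {h: c for h, c in hypotheses.items() if c <= best + beam}

# Hypothetical partial hypotheses at one frame of decoding.
active = {"ngou li": 10.0, "ngou lie": 12.5, "ou li": 25.0}
print(sorted(beam_prune(active, beam=5.0)))   # ['ngou li', 'ngou lie']
print(sorted(beam_prune(active, beam=20.0)))  # ['ngou li', 'ngou lie', 'ou li']
```

A narrow beam speeds up decoding but risks discarding the correct path early; a wider beam is safer for ambiguous Wu syllable boundaries but explores more hypotheses.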

These methods and threshold settings were chosen in light of the characteristics of Wu dialects. By combining geographical region labels, transfer learning, and fine-grained phoneme modeling, we address the main challenges of automatic Wu dialect recognition; in regions with pronounced dialectal differences in particular, the robustness and accuracy of the model improved substantially. In summary, the combined strategy of two-level phoneme mapping, regional label modeling, and transfer learning is grounded in recent low-resource dialect recognition literature. It addresses both dialectal variation and data limitations, and it outperformed single methods in our experiments, which supports the rationale and empirical basis of the method selection.

2.6. Construction of the WXDPC-2 Wu Dialect Continuous Speech Corpus

WXDPC-2 is a continuous speech sub-corpus of Wu dialects constructed in this study, with materials primarily collected from dialect-related videos, podcasts, and dubbing content on online platforms. Considering the scarcity of resources in certain Wu dialect regions (such as Wenzhou-Taizhou and Jinhua-Lishui), the study utilized web crawling techniques to automatically capture publicly available content containing Wu dialect speech with subtitles, which was then converted into speech–text data suitable for model training.

The speech materials were sourced from multiple mainstream content platforms, including Bilibili, Ximalaya FM, Douyin (TikTok China), and selected WeChat official account audio columns. The chosen videos mainly consist of local news reports, short drama dubbing, and hometown interviews, all recorded in authentic Wu dialect by native speakers. The content covers folk stories, daily conversations, and regional expressions, and shows strong colloquial and regional characteristics [21].

During the data screening phase, an automatic speech transcription model was employed to rapidly recognize the speech content, which was then compared with the subtitle text for consistency. Manual verification was conducted to remove samples mixed with Mandarin or other dialects. For the retained samples, metadata such as speech duration, publisher’s regional tag, and corresponding text were further extracted to build a complete “Speech–Text–Region” triplet structure, followed by unified indexing and organization.
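The text does not state how the automatic transcript was compared with the subtitle. One common choice is the character error rate (CER), i.e., the Levenshtein distance between the two character strings divided by the subtitle length. The sketch below assumes that choice and a hypothetical acceptance threshold of 0.3; samples that pass are packaged as a “Speech–Text–Region” triplet. All function names, the threshold, and the example strings are illustrative assumptions.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (substitutions, insertions, deletions)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            curr.append(min(prev[j] + 1,               # deletion
                            curr[j - 1] + 1,           # insertion
                            prev[j - 1] + (r != h)))   # substitution or match
        prev = curr
    return prev[-1]

def cer(reference, hypothesis):
    """Character error rate of hypothesis against a non-empty reference (spaces ignored)."""
    ref = reference.replace(" ", "")
    hyp = hypothesis.replace(" ", "")
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)

def keep_sample(subtitle, asr_output, region, audio_path, max_cer=0.3):
    """Return a speech-text-region triplet if subtitle and ASR output agree, else None."""
    if cer(subtitle, asr_output) > max_cer:
        return None
    return {"audio": audio_path, "text": subtitle, "region": region}

print(cer("今朝天气好", "今朝天气老好"))                                # 0.2
print(keep_sample("今朝天气好", "今天天气很好", "Shanghai", "clip_001.wav"))  # None
```

Samples rejected by such a filter would then go to the manual check for Mandarin or other-dialect content described above, rather than being discarded automatically.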

Ultimately, approximately 9200 continuous speech sentences were collected, with a total duration of 28 h and a total size of 11.2 GB. The samples feature natural content, authentic speaking rates, and broad coverage, providing robust data support for improving the generalization of Wu dialect recognition systems across diverse regions and contexts. All data are used strictly for academic research, in compliance with platform usage policies and intellectual property regulations.

3. Deep Learning-Based Wu Dialect Speech Recognition Model

3.1. Monophone and Triphone Models

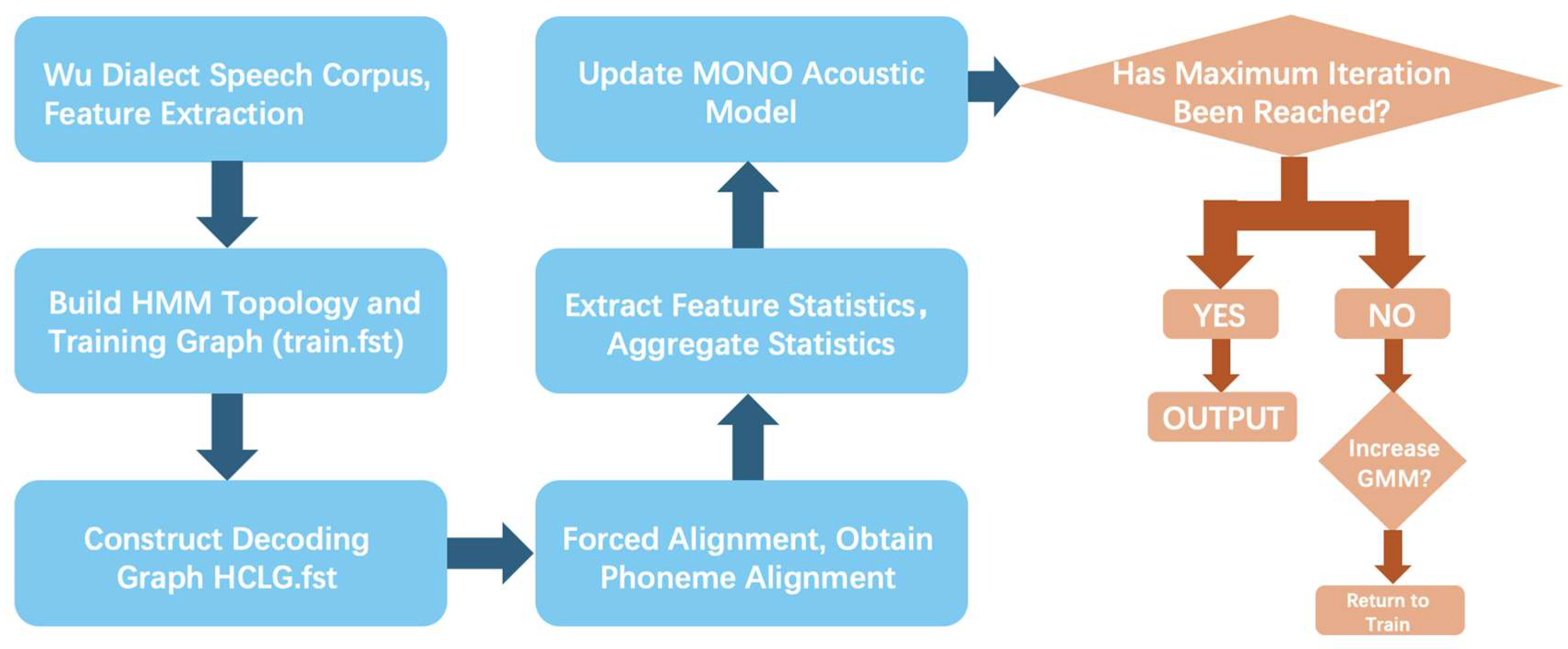

3.1.1. Monophone Model Training Process

The monophone model uses phonemes as the basic modeling units, without considering contextual dependencies; training relies solely on the acoustic features of the current phoneme. Wu dialect has distinctive phonological characteristics: its initials exhibit a clear voiced–voiceless distinction, while its finals feature complex structures such as nasalization, stops, and entering tones. These characteristics must be fully considered in acoustic modeling [22].

To train the Wu dialect monophone model, MFCCs and other acoustic features that carry prosodic and tonal information are first extracted from the annotated speech corpus. These features are then frame-aligned with the corresponding time boundaries of the speech signal. Next, a Hidden Markov Model (HMM) is initialized from the feature files, and the output distribution of each phoneme state is modeled with a Gaussian Mixture Model (GMM) [23].

Once the model is initialized, forced alignment is applied to obtain precise phoneme boundaries, generating frame-level phoneme labels for more accurate training data. The model parameters are iteratively refined based on updated alignments and statistical information, ultimately producing the Wu dialect monophone acoustic model (Mono model).
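The forced-alignment step can be illustrated with a simplified Viterbi alignment: given per-frame log-likelihoods for the states of the known transcript, in order, each frame either stays in the current state or advances to the next, and the best-scoring monotonic path gives the frame-level labels. This is a minimal sketch, not the toolkit’s implementation: it assumes a plain left-to-right chain, ignores transition probabilities, and uses a hypothetical function name and toy scores.

```python
import math

def forced_align(loglik):
    """Viterbi forced alignment of T frames to a left-to-right chain of S states.

    loglik[t][s] is the log-likelihood of frame t under state s, with states listed
    in transcript order. Each frame stays in the current state or advances by one;
    transition scores are ignored (uniform). Returns the state index of every frame.
    """
    T, S = len(loglik), len(loglik[0])
    if T < S:
        raise ValueError("need at least one frame per state")
    NEG = -math.inf
    score = [[NEG] * S for _ in range(T)]
    back = [[0] * S for _ in range(T)]
    score[0][0] = loglik[0][0]  # the alignment must start in the first state
    for t in range(1, T):
        for s in range(S):
            stay = score[t - 1][s]
            advance = score[t - 1][s - 1] if s > 0 else NEG
            if advance > stay:
                score[t][s], back[t][s] = advance + loglik[t][s], s - 1
            else:
                score[t][s], back[t][s] = stay + loglik[t][s], s
    # Backtrack from the final state at the final frame.
    path = [S - 1]
    for t in range(T - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]

# Toy scores: frames 0-1 fit state 0, frames 2-3 state 1, frames 4-5 state 2.
good, bad = 0.0, -10.0
loglik = [[good, bad, bad], [good, bad, bad], [bad, good, bad],
          [bad, good, bad], [bad, bad, good], [bad, bad, good]]
print(forced_align(loglik))  # [0, 0, 1, 1, 2, 2]
```

In iterative training, the GMM parameters are re-estimated from such frame labels and the alignment is recomputed with the updated model, which is the refinement loop described above.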

Figure 4 illustrates the training process of the Wu dialect monophone model.

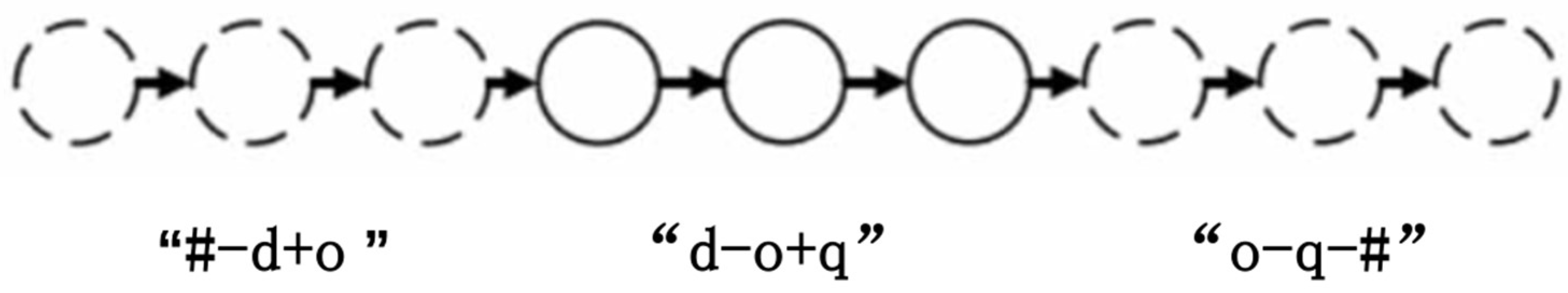

3.1.2. Triphone Model Training Process

Compared with the monophone model, the triphone model captures the contextual information of speech more effectively, which makes it particularly suitable for languages such as Wu dialect, with its complex phonetic system, high proportion of homophones, and prominent pronunciation variation. In triphone modeling, each phoneme is modeled as the central phoneme together with its preceding and following neighbors, which jointly form the modeling unit [24].

For example, when processing the syllable “doq,” the triphone modeling units take the forms “#−d+o,” “d−o+q,” and “o−q+#,” where “#” marks a syllable boundary. This approach represents the coarticulation effects and speech-stream variations that occur during pronunciation. The triphone model training process is shown in Figure 5.
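Expanding a phone sequence into context-dependent units is mechanical. The sketch below uses the left−centre+right notation of the “doq” example, written with ASCII hyphens, and pads the sequence with “#” as the boundary symbol; the function name is hypothetical.

```python
def to_triphones(phones, boundary="#"):
    """Expand a phone sequence into left-centre+right triphone labels."""
    padded = [boundary] + list(phones) + [boundary]
    return [f"{padded[i - 1]}-{padded[i]}+{padded[i + 1]}"
            for i in range(1, len(padded) - 1)]

print(to_triphones(["d", "o", "q"]))  # ['#-d+o', 'd-o+q', 'o-q+#']
```

Because every phone is paired with each of its observed neighbors, the number of distinct units grows rapidly with the inventory size. This data sparsity is what the decision-tree state tying described next is designed to control.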

The triphone training process is as follows. First, the Kaldi train_deltas.sh script accumulates context-dependent statistics from the acoustic features (with delta and delta–delta coefficients appended), and the phones are clustered to generate a set of phonetic questions. Next, decision trees are built with these questions to cluster the context-dependent states [25]. Subsequently, based on the resulting tree, the phonemes in the training corpus undergo context expansion, state tying, and alignment conversion to establish context-dependent pronunciation models with a more robust decision-tree structure [26].

During model training, the above process is iteratively repeated. In each iteration, statistics are recalculated, and the model parameters are updated according to the new clustering structure and alignment results. Once the predefined number of iterations is reached, the final Wu Dialect Triphone Acoustic Model (Tri1) is produced.
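The decision-tree step chooses, at each node, the phonetic question whose yes/no split most increases the data log-likelihood when each side is modeled by its own Gaussian. The sketch below illustrates that criterion in one dimension with single Gaussians and toy statistics. It is a simplified assumption, not Kaldi’s actual implementation (which works on multi-dimensional diagonal-covariance statistics). Function names, context statistics, and questions are all hypothetical.

```python
import math

def gaussian_loglik(stats, var_floor=1e-3):
    """Log-likelihood of pooled 1-D data under its own maximum-likelihood Gaussian.

    stats = (count, sum_x, sum_x2).
    """
    n, s, s2 = stats
    if n == 0:
        return 0.0
    mean = s / n
    var = max(s2 / n - mean * mean, var_floor)  # floor guards against zero variance
    return -0.5 * n * (math.log(2 * math.pi * var) + 1.0)

def accumulate(xs):
    return (len(xs), sum(xs), sum(v * v for v in xs))

def add_stats(a, b):
    return tuple(x + y for x, y in zip(a, b))

def split_gain(stats_by_context, question):
    """Log-likelihood gain from splitting contexts by a yes/no phonetic question.

    stats_by_context: {left_context_phone: (count, sum_x, sum_x2)}
    question: set of phones for which the answer is 'yes'.
    """
    yes = no = (0, 0.0, 0.0)
    for phone, st in stats_by_context.items():
        if phone in question:
            yes = add_stats(yes, st)
        else:
            no = add_stats(no, st)
    parent = add_stats(yes, no)
    return gaussian_loglik(yes) + gaussian_loglik(no) - gaussian_loglik(parent)

# Toy 1-D feature values for the central phone "o" after four left contexts.
contexts = {"b": accumulate([1.0, 1.2, 0.8]), "d": accumulate([1.1, 0.9, 1.0]),
            "p": accumulate([-1.0, -1.2, -0.8]), "t": accumulate([-0.9, -1.1, -1.0])}
questions = {"voiced": {"b", "d"}, "labial": {"b", "p"}}
best = max(questions, key=lambda q: split_gain(contexts, questions[q]))
print(best)  # voiced
```

In this toy data the voicing question separates the two clusters of feature values, so it yields a much larger gain than the place-of-articulation question. This mirrors why voicing-related questions matter for Wu initials.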

3.2. Construction of Wu Dialect Secondary Mapping (DNN-HMM-2)

Unlike standard context-dependent triphone modeling, which relies solely on phoneme combinations, the two-level phoneme mapping introduces a “speech feature category” layer during modeling, such as distinctions for voicing, aspirated vs. unaspirated initials, and final categories. This grouping helps avoid data sparsity, because low-frequency or rare phonemes can be modeled more stably through shared mapping labels. Compared with traditional triphone modeling, the two-level phoneme mapping reduces the parameter sparsity caused by excessive context combinations while aligning better with the phonological structure of Wu dialects. This approach has been reported to be effective in recent low-resource speech recognition research.

In traditional acoustic modeling, the mapping is primarily based on a one-to-one correspondence between phonemes and syllables or between phonemes and speech units. However, in Wu dialect speech recognition tasks, due to its unique phonetic phenomena—such as the voicing contrast in initials, voiced plosives, entering tone codas, aspiration features, and abundant coda variations—simply using standard phonemes as output layer labels often fails to effectively distinguish these fine-grained pronunciation differences. This challenge becomes even more pronounced when dealing with a large number of heterophonic words, stress differences, and syllable mergers, where traditional mapping strategies struggle to capture the complex relationships among these variations.

To address this issue, this study introduces a context-based secondary mapping strategy, which abstracts a more stable recognition target set from the original Wu dialect pinyin or phoneme inventory. This target set is constructed by re-partitioning Wu dialect phonemes according to common phonetic variants. For instance, for words like “ngou2li1ta3n5” (“milk candy”), which contain voiced coda vowels, a direct phoneme mapping may lead to boundary ambiguity between “ngou” and “li.” By establishing a finer-grained state label set, the secondary mapping can align acoustic model states with lexical units more reliably, enhancing recognition robustness [27].

Table 5 presents examples of the mapping between common Wu dialect phonetic spellings and the corresponding secondary labels constructed in this study.

Hypothesis 2 anticipates that by introducing the two-level phoneme mapping mechanism, the model will be better equipped to handle these complex pronunciation phenomena. Existing research has shown that this multi-level mapping strategy demonstrates significant advantages in low-resource speech recognition. We verified this hypothesis through experiments, and the results showed that the two-level phoneme mapping achieves higher accuracy in the recognition of initial consonants and final vowels in Wu dialects.

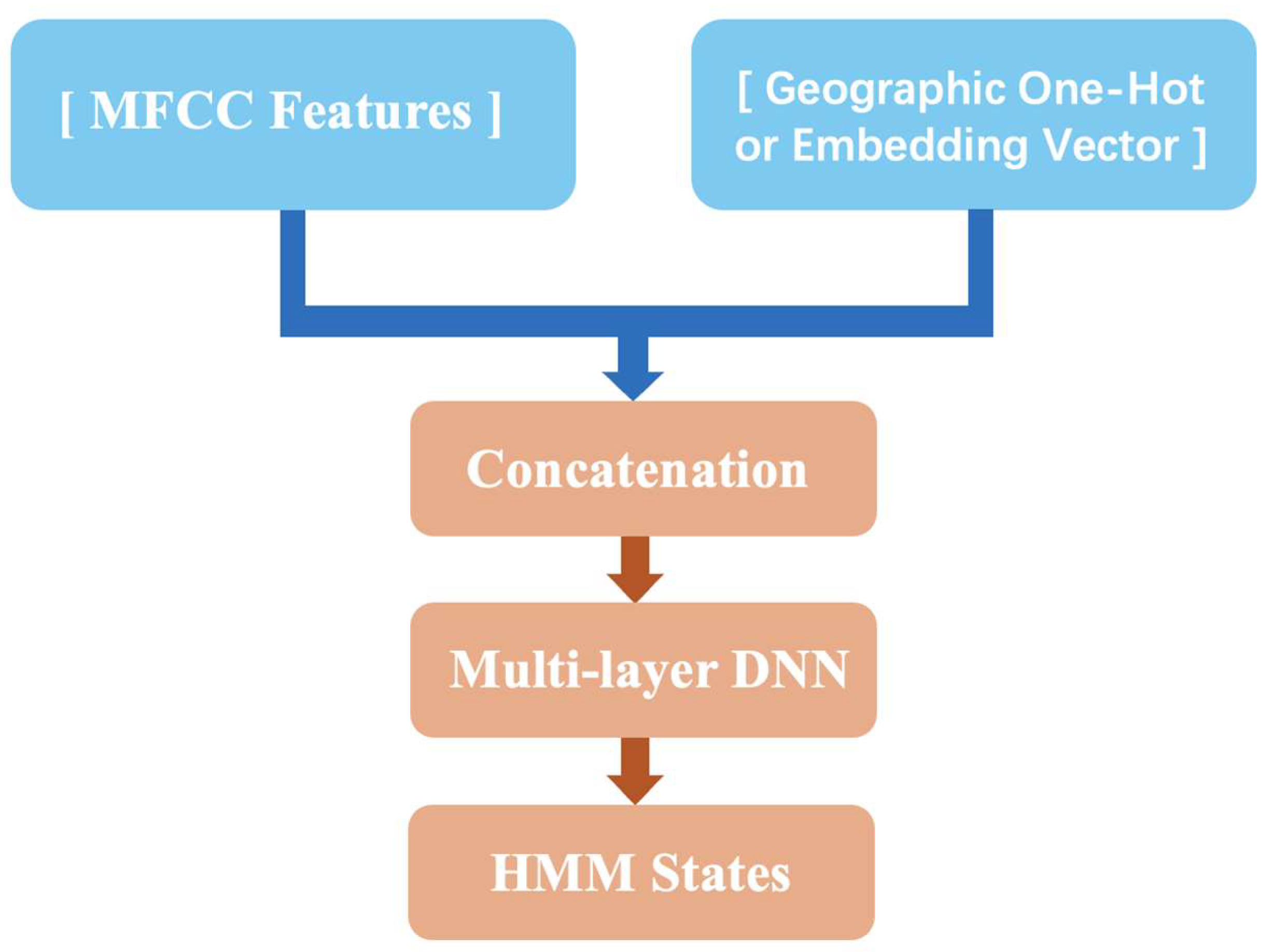

3.3. Regional Phonetic Feature Modeling Mechanism

Significant regional phonetic variations exist within the Wu dialect, with different subregions (e.g., Suzhou-Shanghai, Wenzhou-Taizhou, Jinhua-Lishui) showing structural differences in aspects such as voicing of initials, coda formation of finals, and tonal patterns. For instance, the word “things” is pronounced as “ton1-ssi1” in Shanghainese, whereas in Wenzhou dialect it is closer to “tʰeu1-syi1”. The two differ markedly in tonal contours, usage of voiced sounds, and sandhi patterns. Traditional unified modeling strategies often fail to account for such interregional variations, leading to a drop in recognition performance for non-central dialects (e.g., Wenzhou).

To address these differences, this study introduces a regional phonetic feature modeling mechanism that explicitly incorporates geographic linguistic differences into the model architecture. Prior work [8] indicates that geographical region labels improve model performance in complex dialect environments, and that, particularly during cross-region transfer, regional information can substantially improve recognition accuracy in low-resource areas. Specifically, during feature extraction, each speech sample is annotated with its geographic region label (e.g., Suzhou, Ningbo, Wenzhou), and this label is embedded as an auxiliary feature during acoustic model training. This allows the model to capture region-specific phonetic variation during parameter learning, retaining shared acoustic modeling capacity while preserving regional distinctions, and thereby improves its representation of multi-regional Wu speech [28].
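The text states that the region label is embedded as an auxiliary feature but does not give the encoding. A minimal assumption is a one-hot region vector appended to every acoustic frame of an utterance, as sketched below. The region list follows the five regions of Section 2.1.1, while the function names and the 13-dimensional toy MFCC frames are hypothetical.

```python
REGIONS = ["Suzhou", "Shanghai", "Ningbo", "Wenzhou", "Lishui"]

def region_one_hot(region, regions=REGIONS):
    """One-hot vector identifying the speaker's region."""
    if region not in regions:
        raise ValueError(f"unknown region: {region}")
    return [1.0 if r == region else 0.0 for r in regions]

def append_region_label(frames, region, regions=REGIONS):
    """Concatenate the utterance-level region vector to every acoustic frame."""
    code = region_one_hot(region, regions)
    return [list(frame) + code for frame in frames]

mfcc_frames = [[0.1] * 13, [0.2] * 13]  # two hypothetical 13-dim MFCC frames
augmented = append_region_label(mfcc_frames, "Wenzhou")
print(len(augmented[0]))  # 18 = 13 MFCC dims + 5 region dims
print(augmented[0][13:])  # [0.0, 0.0, 0.0, 1.0, 0.0]
```

Feeding this one-hot vector into the network’s first linear layer is equivalent to adding a learned per-region embedding. The shared acoustic weights therefore stay common to all regions while each region receives its own offset.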

In the experimental phase, we designed two comparative settings, with and without geographic labels, and conducted recognition tests across multiple Wu dialect locations. The model incorporating regional information achieved significant improvements in recognition accuracy for non-central dialects such as Wenzhou-Taizhou and Jinhua-Lishui, demonstrating the effectiveness and broad applicability of this mechanism in complex dialect speech recognition tasks [29]. The regional phonetic feature modeling framework for Wu dialect recognition is illustrated in Figure 6.

3.4. Regional Clustering and Transfer Learning Strategy

Although this study collected data covering multiple Wu dialect regions, there remains an imbalance in the amount of speech data across regions. Suzhou and Shanghai dialects have relatively sufficient data, while sub-dialect regions such as Wenzhou and Lishui suffer from limited training samples. To enhance model robustness under such data constraints, this paper introduces unsupervised clustering and transfer learning strategies, optimizing the training process according to the data distribution characteristics.

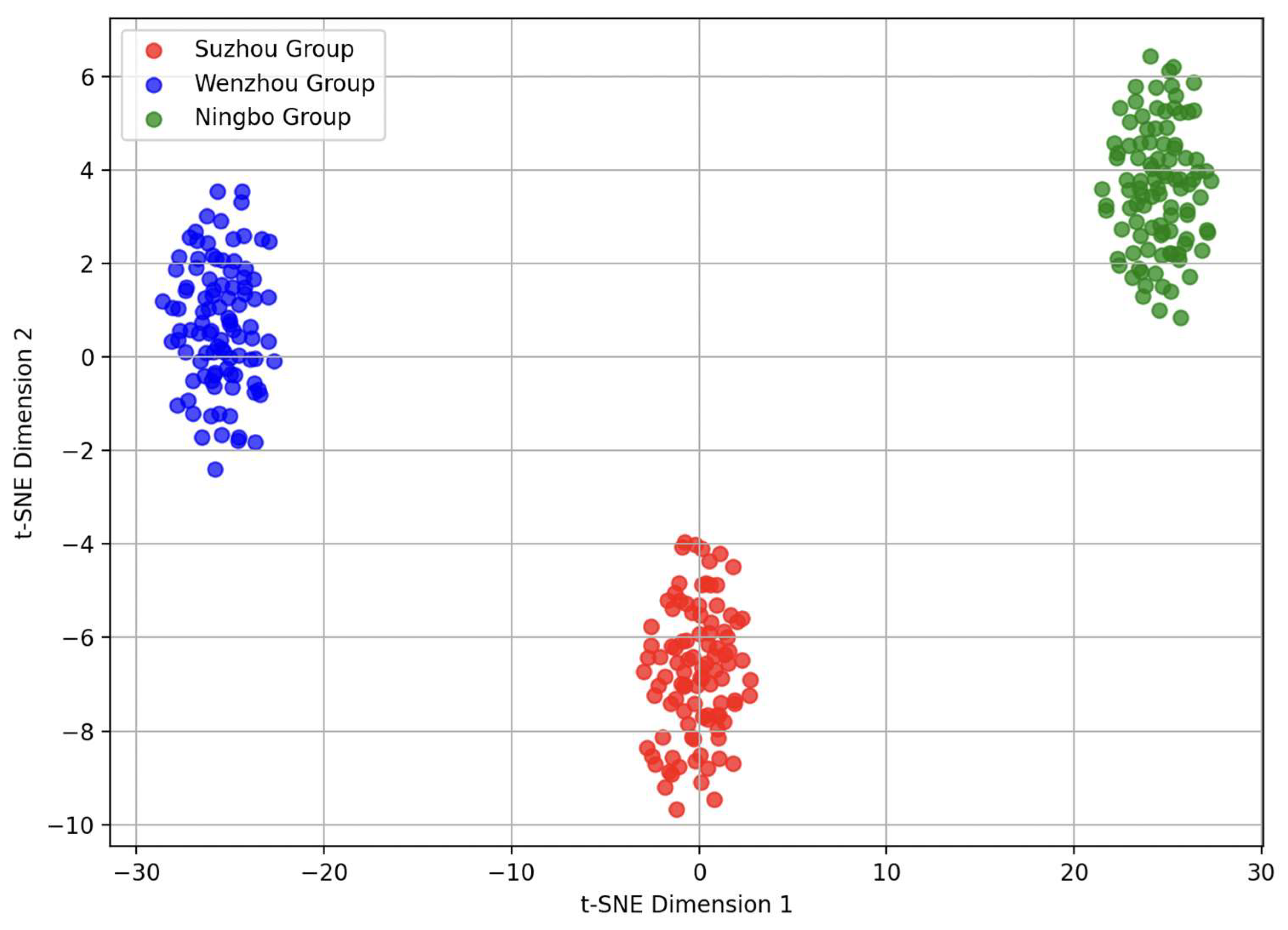

First, in the data preparation stage, each speech sample is represented by MFCC features and i-vectors, which provide its global acoustic representation. An unsupervised clustering algorithm (e.g., K-means or a Gaussian Mixture Model) is then applied to all speech samples to form a set of “natural regions in the speech feature space.” Preliminary analysis shows that the clustering results correlate with the actual geographical distribution of the dialects, so they can be regarded as an implicit phonetic regional segmentation [14].
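The K-means option named above can be sketched with Lloyd’s algorithm over fixed-length utterance vectors. This is a minimal sketch under stated assumptions: the 2-D points stand in for real MFCC/i-vector summaries and are hypothetical, and the initial centroids are fixed for reproducibility, whereas practical setups typically use random or k-means++ initialization and several restarts.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def kmeans(points, init_centroids, iterations=20):
    """Plain K-means (Lloyd's algorithm) from the given centroids.

    Returns (assignments, centroids).
    """
    centroids = [list(c) for c in init_centroids]
    assignments = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: nearest centroid for every utterance vector.
        assignments = [min(range(len(centroids)),
                           key=lambda k: squared_distance(p, centroids[k]))
                       for p in points]
        # Update step: move each centroid to the mean of its members (keep it if empty).
        new_centroids = []
        for k, c in enumerate(centroids):
            members = [p for p, a in zip(points, assignments) if a == k]
            if members:
                new_centroids.append([sum(m[d] for m in members) / len(members)
                                      for d in range(len(c))])
            else:
                new_centroids.append(c)
        if new_centroids == centroids:
            break  # converged: centroids no longer move
        centroids = new_centroids
    return assignments, centroids

# Hypothetical 2-D utterance summaries forming two acoustic groups.
points = [[0.0, 0.1], [0.2, 0.0], [0.1, 0.2], [5.0, 5.1], [5.2, 4.9], [4.9, 5.0]]
assignments, centroids = kmeans(points, init_centroids=[points[0], points[3]])
print(assignments)  # [0, 0, 0, 1, 1, 1]
```

Comparing such cluster assignments with the speakers’ recorded regions is one simple way to check the reported correlation between acoustic clusters and geography.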

During training, a base acoustic model is first trained using data-rich central dialects (e.g., Suzhou) and is then used as the source model. Through transfer learning (such as parameter fine-tuning and feature sharing), the source model is adapted to data-scarce sub-regions (e.g., Wenzhou-Taizhou dialect group). Two transfer strategies are employed:

1. Freezing the lower-layer shared parameters and fine-tuning only the output layer.

2. Adjusting intermediate-layer structures based on the regional clustering results to achieve finer-grained, region-adaptive modeling.
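Freezing layers amounts to applying parameter updates only to the layers selected for adaptation, which is equivalent to giving frozen layers a learning rate of zero. The toy sketch below shows one gradient-descent step under that rule. The layer names, weights, and gradients are hypothetical, and a real DNN-HMM model would obtain gradients from backpropagation on target-region data.

```python
def fine_tune_step(params, grads, lr, trainable):
    """One gradient-descent step that updates only the layers named in `trainable`.

    params, grads: {layer_name: [float, ...]}; frozen layers are copied unchanged.
    """
    return {
        name: ([w - lr * g for w, g in zip(weights, grads[name])]
               if name in trainable else list(weights))
        for name, weights in params.items()
    }

# Hypothetical source model trained on a data-rich region (e.g., Suzhou).
source = {"hidden1": [0.5, -0.2], "hidden2": [0.1, 0.3], "output": [0.7, -0.4]}
grads = {"hidden1": [1.0, 1.0], "hidden2": [1.0, 1.0], "output": [1.0, -1.0]}

# Strategy 1: freeze the lower shared layers and adapt only the output layer.
adapted = fine_tune_step(source, grads, lr=0.1, trainable={"output"})
# Strategy 2 (sketch): additionally unfreeze an intermediate layer.
adapted_mid = fine_tune_step(source, grads, lr=0.1, trainable={"hidden2", "output"})
print(adapted["hidden1"])  # [0.5, -0.2]  (frozen layer unchanged)
```

Keeping the lower layers fixed limits the number of parameters estimated from scarce target-region data, which is the mechanism by which this strategy reduces overfitting.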

By incorporating regional clustering and transfer learning, the model not only substantially improves recognition performance for resource-scarce dialect regions but also effectively suppresses overfitting and noise interference, showing stronger generalization and robustness in multi-regional Wu dialect recognition tasks, as shown in Figure 7.

To ensure a fair comparison, a “no transfer” baseline was set up in which training was conducted independently on the target-region data. Compared with this baseline, the transfer learning model reduced the average CER by approximately 3.5% and the average SER by approximately 2.8% for the Wenzhou and Jinhua-Lishui (Jinli) dialects, indicating that the transfer mechanism alleviated the modeling difficulties under low-resource conditions, as shown in Figure 8. This design is consistent with the experimental approach in [9].

3.5. Regional-Aware Modeling Framework (GeoDNN-HMM)

Although end-to-end architectures (such as Transformer and Conformer) have achieved success in mainstream speech recognition in recent years, this study chooses a DNN-HMM hybrid model for practical reasons. First, the Wu dialect corpus is limited in size, and end-to-end models, which rely heavily on large data volumes, are prone to overfitting on it. Second, the DNN-HMM architecture supports explicit phoneme alignment, which makes regional modeling with geographical labels more interpretable. Third, Kaldi's DNN-HMM training pipeline is well established, which makes it easy to verify the model quickly in low-resource, multi-region experiments. Similar points are made in [8,9], which argue that hybrid models offer better robustness and stability under low-resource conditions.

To address the challenges of the Wu dialect, including wide regional distribution, significant phonetic variation, and uneven data distribution, in this study, we selected the DNN-HMM hybrid model as the base architecture, incorporating regional label embedding and transfer learning mechanisms to improve the model’s robustness and generalization ability in complex dialect environments. The DNN-HMM architecture has been widely applied in speech recognition tasks, particularly demonstrating strong performance in recognizing low-resource dialects. To effectively capture the regional differences in Wu dialects, this study introduced geographical region labels. By embedding geographic label information during the feature extraction phase, the model can capture the phonetic differences between dialects during the learning process, thus improving its performance in recognizing non-central dialects.

Additionally, we adopted a transfer learning mechanism to improve recognition performance in data-scarce regions (such as Wenzhouhua and Jinlihua). By transferring model parameters from the central dialects to these low-resource dialect regions, we mitigated the problem of insufficient samples, and we further strengthened the transfer learning process with the transfer regularization strategy proposed in [14].

The overall architecture of the proposed framework is shown in Figure 3. It consists of three core components:

1. Regional Label Embedding

During feature extraction, each speech sample is assigned a regional label according to its geographic origin (e.g., Suzhou, Ningbo, Wenzhou). The corresponding label vector is concatenated with conventional acoustic features (e.g., MFCC) as the network input, enabling joint modeling of regional information and pronunciation features.

2. Unsupervised Speech Clustering

In the early training phase, i-vector representations are extracted for all speech samples, and K-means clustering is applied to partition their distribution without supervision, forming several latent “speech regions.” This clustering reveals regional cohesion in the speech feature space and provides the basis for the subsequent transfer learning strategies.

3. Transfer Learning Optimization

A base acoustic model is first trained on high-resource central dialects (e.g., the Su-Hu group). This model then serves as the source network, and its parameters are transferred and fine-tuned for target regions with fewer samples (e.g., the Wen-Tai or Jin-Li groups), which mitigates the modeling difficulties caused by data scarcity.
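Appending a regional label vector to every acoustic frame can be sketched as follows. The sketch assumes a one-hot encoding over a hypothetical four-region inventory and 13-dimensional MFCC frames; the text does not state whether the label vector is one-hot or a learned embedding, and the names `REGIONS`, `region_one_hot`, and `append_region_label` are illustrative.

```python
# Hypothetical region inventory; the real label set follows the corpus metadata.
REGIONS = ["Suzhou", "Ningbo", "Wenzhou", "Jin-Li"]


def region_one_hot(region, regions=REGIONS):
    """One-hot vector for a region name (assumed encoding)."""
    vec = [0.0] * len(regions)
    vec[regions.index(region)] = 1.0
    return vec


def append_region_label(frames, region, regions=REGIONS):
    """Concatenate the utterance's region vector onto every acoustic frame."""
    r = region_one_hot(region, regions)
    return [list(frame) + r for frame in frames]
```

Each 13-dimensional frame of a Wenzhou utterance becomes a 17-dimensional input whose last four values are `[0.0, 0.0, 1.0, 0.0]`. Because the label is constant within an utterance, it shifts the network's input rather than describing any frame-level acoustics.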

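The final feature representation fed into the network is formulated as:

$$\tilde{\mathbf{x}}_t = \mathbf{x}_t \oplus \mathbf{r}$$

where $\mathbf{x}_t$ denotes the acoustic feature frame at time $t$, $\mathbf{r}$ is the regional label vector, and $\oplus$ represents the concatenation operation. During training, the network optimizes not only the conventional cross-entropy loss but also a transfer regularization term:

$$\mathcal{L} = \mathcal{L}_{\mathrm{CE}} + \lambda \left\lVert \boldsymbol{\theta}_{\mathrm{tgt}} - \boldsymbol{\theta}_{\mathrm{src}} \right\rVert_2^2$$

where $\boldsymbol{\theta}_{\mathrm{tgt}}$ represents the parameters of the target regional model, $\boldsymbol{\theta}_{\mathrm{src}}$ represents the source model parameters, and $\lambda$ controls the intensity of the transfer regularization.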
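The regularized objective adds to the cross-entropy loss a penalty on how far the target-region parameters drift from the source-model parameters. The sketch below assumes the penalty is the squared L2 distance between flattened parameter vectors (an L2-SP-style term). The exact form used in [14] may differ, and the function name is hypothetical.

```python
def transfer_regularized_loss(ce_loss, target_params, source_params, lam):
    """Cross-entropy plus lam * squared L2 distance to the source parameters.

    target_params, source_params: flattened parameter lists of equal length.
    A larger lam keeps the target-region model closer to the source model.
    """
    if len(target_params) != len(source_params):
        raise ValueError("parameter vectors must have the same length")
    penalty = sum((t - s) ** 2 for t, s in zip(target_params, source_params))
    return ce_loss + lam * penalty
```

For example, with a cross-entropy of 1.0, target parameters `[1.0, 2.0]`, source parameters `[0.0, 0.0]`, and `lam = 0.1`, the total loss is 1.0 + 0.1 × 5 = 1.5. Setting `lam = 0` recovers plain fine-tuning.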

To build the DNN-HMM model, the following hyperparameters were tuned.

1. Network structure

The model uses three hidden layers with 1024, 512, and 256 neurons, respectively, which balances expressive capacity against computational cost. A ReLU activation is applied in each hidden layer to capture nonlinear features.

2. Optimizer and learning rate

The Adam optimizer was adopted with an initial learning rate of 0.001, and a learning rate decay strategy was applied during training to stabilize convergence and limit overfitting.

3. Regularization

Dropout (rate = 0.2) was applied at each layer together with L2 regularization (coefficient = 0.01), following prior practice in low-resource speech recognition [10,12], which further improved the model's generalization ability.

4. Training settings

The batch size was set to 256 for stable and reasonably fast convergence, and training ran for 50 epochs so that the model converged consistently across the different datasets.

These hyperparameters were selected empirically to obtain the best performance of the DNN-HMM model on the Wu dialect recognition task.
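The reported settings can be collected in one configuration object. In this sketch every value comes from the text except the decay schedule: the text does not specify the decay rule, so an exponential per-epoch decay with factor 0.95 is assumed purely for illustration. The class name is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DnnHmmConfig:
    """Hyperparameters reported for the DNN-HMM model (decay rule assumed)."""
    hidden_sizes: tuple = (1024, 512, 256)
    activation: str = "relu"
    optimizer: str = "adam"
    initial_lr: float = 1e-3
    lr_decay: float = 0.95  # ASSUMED per-epoch factor; not reported in the text
    dropout: float = 0.2
    l2_coef: float = 0.01
    batch_size: int = 256
    epochs: int = 50

    def lr_at(self, epoch):
        """Learning rate for a 0-based epoch under the assumed exponential decay."""
        return self.initial_lr * self.lr_decay ** epoch


cfg = DnnHmmConfig()
```

Keeping the settings in a frozen dataclass makes each experimental run's configuration explicit and immutable, which helps when comparing the transfer and no-transfer groups.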

Through the combination of explicit regional information modeling and parameter transfer optimization, the GeoDNN-HMM framework achieves detailed modeling of regional pronunciation variations while maintaining a shared global structure. It significantly improves recognition performance in low-resource Wu dialect regions. Subsequent chapters will demonstrate the effectiveness of this framework across multi-regional and multi-type Wu dialect datasets.

4. Experiments and Analysis of Experimental Results

4.1. Overview of Experimental Environment

4.1.1. Environmental Parameters

The Wu dialect speech recognition experiments were conducted on Ubuntu 22.04.2 LTS. The experimental platform is equipped with an NVIDIA GeForce RTX 3060 GPU and a 12th Gen Intel® Core™ i5 processor, which provide GPU-accelerated parallel computation for model training. This configuration was sufficient for the regional speech modeling and transfer learning experiments in this study.

4.1.2. Toolkit and Platform Selection

The experiments use the Kaldi toolkit as the primary framework for building the speech recognition system, combined with a custom DNN-HMM architecture and feature fusion modules to model the regional characteristics of Wu dialects. The Kaldi platform offers the following advantages [15]:

1. FST-based decoding and graph construction:

Kaldi provides a powerful FST-based decoding and graph construction module with flexible support for pinyin conversion, pronunciation dictionaries, and HCLG graphs, which suits Wu dialect pinyin and triphone systems.

2. Complete scripting and data flow:

Kaldi includes a comprehensive set of training and decoding scripts covering the full pipeline from feature extraction and model training to final recognition, which facilitates the rapid construction of region-aware modeling systems.

3. Flexible modular extensibility:

Kaldi allows users to customize data structures and network architectures, enabling the integration of various deep neural networks (such as TDNN and BLSTM) and of external features such as geographic labels. This makes it a suitable foundation for implementing the regional embedding and transfer learning mechanisms proposed in this study [30].

4. High-performance computing framework:

Kaldi supports multithreading and GPU-accelerated computation, which suits batch processing of high-dimensional features such as MFCC, i-vector, and fMLLR-transformed features and meets the training demands of the speech corpora used in this research.

In summary, with its flexible, open, and high-performance technical features, Kaldi provides robust tool support for the construction and validation of Wu dialect regional speech recognition models.

4.2. Language Model-Related Files

The Wu dialect pronunciation lexicon records all the entries that the system can process during recognition and their corresponding pronunciations, which is crucial for improving the performance of the speech recognition system. Since the phonological system of Wu dialects differs significantly from Mandarin—particularly in terms of voiced and voiceless consonants, nasal finals, and literary vs. colloquial readings—constructing a pronunciation dictionary that reflects the authentic phonetic characteristics of Wu dialects is fundamental to ensuring system accuracy.

To handle long sentences and compound words in Wu dialect continuous speech recognition, the annotated text was first segmented into words. Because Wu dialects have their own vocabulary, we combined an open-source word segmentation tool with a custom lexicon so that the segmentation follows actual Wu word boundaries, which in turn allows an accurate pronunciation to be generated for each entry.
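Lexicon-driven segmentation can be illustrated with forward maximum matching, a classic dictionary-based method. The paper does not name its segmentation tool (tools such as jieba accept a custom dictionary through `jieba.load_userdict`), so this is a substitute technique shown only to make the idea concrete. The three-entry lexicon of Shanghainese words is a hypothetical example.

```python
def fmm_segment(text, lexicon, max_len=None):
    """Forward maximum matching: at each position, take the longest lexicon word.

    Characters not covered by any lexicon entry are emitted as
    single-character tokens.
    """
    if max_len is None:
        max_len = max((len(w) for w in lexicon), default=1)
    tokens, i = [], 0
    while i < len(text):
        # Try the longest candidate first, shrinking down to one character.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in lexicon:
                tokens.append(piece)
                i += size
                break
    return tokens


wu_lexicon = {"阿拉", "今朝", "白相"}  # hypothetical custom Wu entries
```

With this lexicon, `fmm_segment("阿拉今朝去白相", wu_lexicon)` yields `["阿拉", "今朝", "去", "白相"]`. Without the custom entries, a Mandarin-oriented dictionary would have no way to recognize dialect words such as 白相.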

Because Wu dialect resources are limited, no large-scale standard pronunciation lexicon is available for direct download. This study therefore applied Optical Character Recognition (OCR) to extract pronunciation entries from the Modern Wu Dialect Dictionary and other locally published materials. This approach preserves the regional variation of Wu dialect pronunciations and grounds the lexicon in authoritative published sources. To maintain lexicon quality, the raw dictionary entries were proofread and standardized with a unified annotation format and a consistent Wu dialect pinyin scheme (based on the Jiangnan Wu Pinyin Scheme).

In addition, approximately 5000 entries were manually checked after construction, focusing on polyphonic words, variant readings, and syllable boundary issues, to further improve the consistency and accuracy of the pronunciation annotations. By cross-referencing the lexicon with Mandarin dictionaries, this study ultimately built a custom Wu dialect pronunciation dictionary dedicated to speech recognition, providing reliable foundational data for language model training.
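Merging proofread entries into a Kaldi-style `lexicon.txt` (one `word phone1 phone2 ...` line per pronunciation) and flagging polyphonic words for manual review can be sketched as below. The function name is hypothetical, and the phone strings in the usage example are placeholders, not actual Jiangnan Wu Pinyin transcriptions.

```python
from collections import defaultdict


def build_lexicon(entries):
    """Merge (word, pronunciation) pairs into Kaldi-style lexicon lines.

    Pronunciations are whitespace-separated phone strings. Exact duplicates
    (after whitespace normalisation) are dropped; words that keep more than
    one distinct pronunciation are returned separately for manual checking.
    """
    prons = defaultdict(list)
    for word, pron in entries:
        pron = " ".join(pron.split())  # normalise spacing from OCR output
        if pron not in prons[word]:
            prons[word].append(pron)
    lines = [f"{w} {p}" for w in sorted(prons) for p in prons[w]]
    polyphonic = sorted(w for w, ps in prons.items() if len(ps) > 1)
    return lines, polyphonic


# Placeholder phone strings for illustration only.
raw = [("阿拉", "a la"), ("阿拉", "a  la"), ("行", "gh ang"), ("行", "y in")]
lines, to_review = build_lexicon(raw)
```

Here the duplicated entry for 阿拉 collapses into a single line, while 行, which keeps two readings, lands in `to_review`. This mirrors the manual check of polyphonic words and variant readings described above.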

4.3. Evaluation Metrics

The main evaluation metric in this study is Character Error Rate (CER), with Word Error Rate (WER) and Syllable Error Rate (SER) as supplementary metrics to comprehensively assess the model’s performance in the Wu dialect recognition task.

CER (Character Error Rate) is the standard metric for evaluating character-level recognition performance and is widely used in speech recognition, particularly for low-resource languages and complex dialects. It is calculated as:

$$\mathrm{CER} = \frac{S + D + I}{N} \times 100\%$$

where:

N is the total number of characters in the reference transcription,

S is the number of substituted characters,

D is the number of deleted (missed) characters, and

I is the number of inserted (extra) characters.
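In practice, S + D + I is obtained as the Levenshtein (edit) distance between the reference and the recognized sequence. The sketch below computes (S + D + I) / N for any token sequences, so passing character strings gives CER, while syllable or word lists give SER or WER. It is a generic illustration with a hypothetical function name, not the scoring script used in the study (Kaldi ships its own `compute-wer` tool).

```python
def error_rate(reference, hypothesis):
    """(S + D + I) / N via Levenshtein alignment of two token sequences.

    Pass strings for CER (characters), syllable lists for SER,
    or word lists for WER. Multiply by 100 for a percentage.
    """
    n, m = len(reference), len(hypothesis)
    if n == 0:
        raise ValueError("reference must be non-empty")
    prev = list(range(m + 1))  # empty reference prefix vs hypothesis[:j]: j insertions
    for i in range(1, n + 1):
        curr = [i] + [0] * m  # reference[:i] vs empty hypothesis: i deletions
        for j in range(1, m + 1):
            sub = prev[j - 1] + (reference[i - 1] != hypothesis[j - 1])
            # substitution/match, deletion, insertion
            curr[j] = min(sub, prev[j] + 1, curr[j - 1] + 1)
        prev = curr
    return prev[m] / n
```

For example, recognizing 阿拉今早去白相 for the reference 阿拉今朝去白相 is one substitution over seven characters, a CER of about 14.3%.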

Because the phonological system of Wu dialects is highly variable and contains many homophones and variant pronunciations, CER reflects the model's character-level recognition accuracy more precisely. Compared with SER and WER, CER gives a more complete picture of the model's ability to recognize complex characters and words, especially those involving multisyllabic and compound syllables.

Syllable Error Rate (SER) and Word Error Rate (WER) are also common evaluation metrics in speech recognition. SER measures errors at the syllable level, but for a complex phonological system such as that of Wu, syllable-level errors do not fully reflect the model's overall recognition performance. WER suits conventional word-level recognition, but it depends on how the text is segmented into words, and it may not capture the phonetic variation and precise character alignment of dialects such as Wu, which have many multisyllabic words as well as variant pronunciations and tone sandhi.

Therefore, CER was chosen as the primary evaluation metric because it reflects Wu dialect recognition performance at the character level and captures the model's handling of complex syllables and homophones.

To further verify the comprehensive performance of the model, this study also calculates SER and WER, using them as supplementary metrics for comparison. Through this multi-dimensional evaluation system, this study can more comprehensively assess the model’s overall adaptability and robustness.

Furthermore, all experiments were speaker-independent: the training and test sets contain different speakers. Specifically, the training set consisted of speakers A, B, C, and D, while the test set was drawn from a separate speaker, E. Because the test speaker never appears in training, the results measure performance on an unseen speaker and are not inflated by speaker overlap.

To further improve the reliability of the experimental results, this study also used a cross-validation strategy. The dataset was divided into K subsets (K-fold cross-validation), and each subset served in turn as the validation set while the remaining data were used for training. This allows the model's performance to be assessed under different data splits and reduces errors caused by an uneven data partition.
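The K-fold procedure can be sketched as an index generator. The value of K is not reported, so it is a parameter here, and folds are formed over shuffled utterance indices. If speaker independence must also hold inside cross-validation, folds should instead be formed by speaker (for example with scikit-learn's `GroupKFold`). The function name is hypothetical.

```python
import random


def kfold_indices(n_items, k, seed=0):
    """Yield (train_idx, val_idx) pairs for K-fold cross-validation.

    Indices are shuffled once, then dealt into k disjoint, near-equal folds;
    each fold serves once as the validation set.
    """
    if not 2 <= k <= n_items:
        raise ValueError("k must be between 2 and n_items")
    order = list(range(n_items))
    random.Random(seed).shuffle(order)
    folds = [order[f::k] for f in range(k)]
    for f in range(k):
        val = sorted(folds[f])
        train = sorted(i for g in range(k) if g != f for i in folds[g])
        yield train, val


splits = list(kfold_indices(10, 5))
```

Every item appears in exactly one validation fold, and the model's score is then averaged over the K runs.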

4.4. Experimental Results

4.4.1. Objective of the Experiment

The Wu dialect system encompasses a rich variety of initials, finals, and tonal combinations, yet due to practical challenges in speech corpus collection—such as imbalanced sample distribution and significant regional speech variations—some phoneme samples are extremely scarce in the dataset.

This experiment aims to evaluate whether the constructed Wu Dialect Phonetic Corpus (WXDPC) sufficiently covers the common Wu phoneme combinations and whether it effectively reduces performance fluctuations caused by training sample bias. The ultimate goal is to verify the stability of the corpus and assess the robustness of model training based on it.

4.4.2. Reliability Experiment of the Wu Dialect Corpus

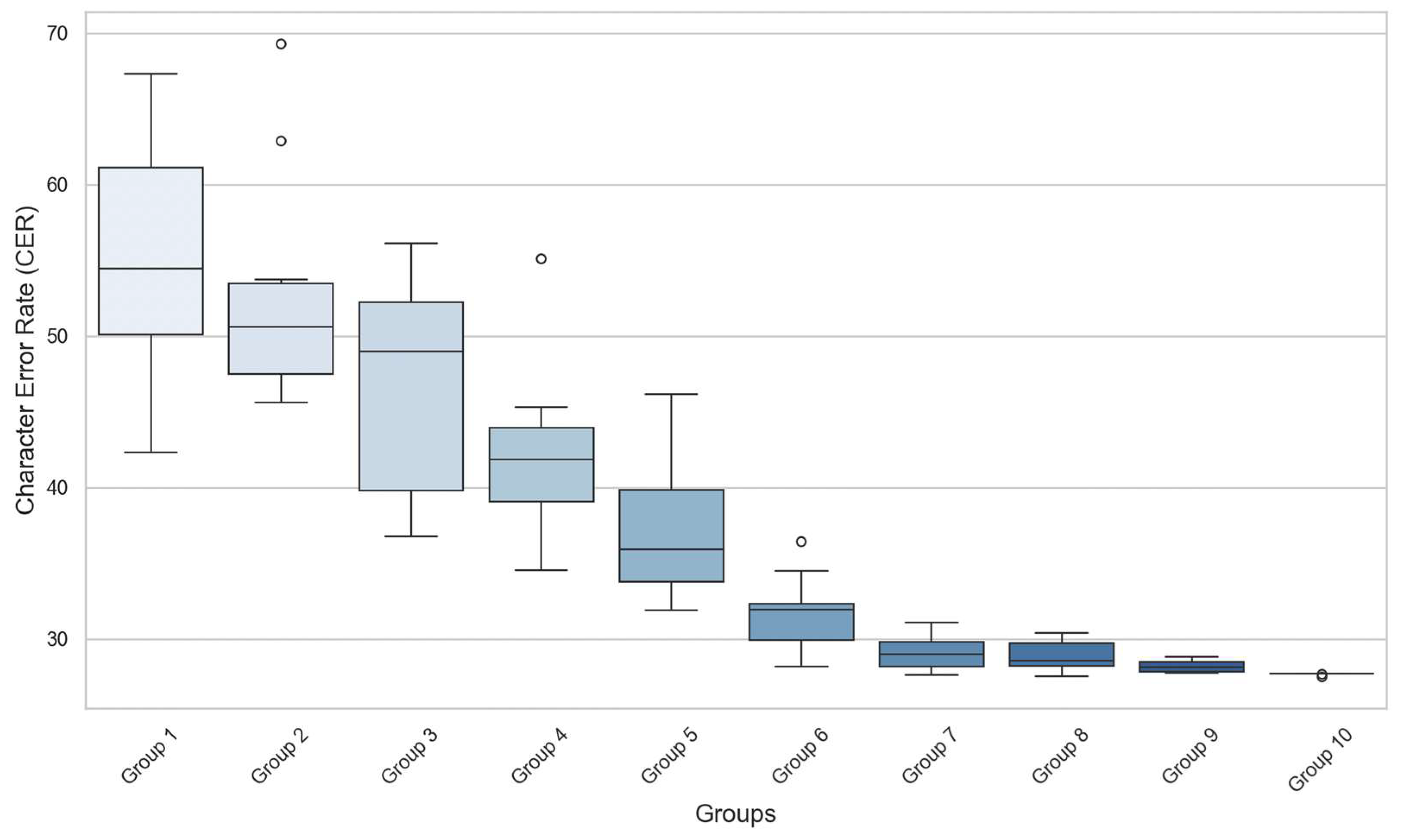

To verify the comprehensiveness and reliability of the Wu Dialect Corpus (WXDPC), this study designed an experimental scheme with incrementally increasing data volume to assess how the amount of training data affects model performance. The results show that as the data volume increases, the Character Error Rate (CER) gradually decreases and recognition performance continuously improves. This is consistent with the findings of [25], which showed that as the corpus grows, the model can better learn subtle phonetic variations within dialects, thereby improving recognition accuracy.

In the experiment, we set up 10 experimental groups, with the data volume increasing from 2200 to 4000 samples. As the number of training samples increases, both CER and Syllable Error Rate (SER) decrease significantly, and the variance of CER across repeated runs also decreases notably. According to [8], increasing the training data reduces overfitting and enhances the model's robustness by incorporating more dialectal diversity.

These experimental results suggest that the constructed corpus not only covers the basic phoneme combinations of Wu dialects but also effectively supports the training and evaluation of the model in complex dialect environments, further validating the reliability and effectiveness of the corpus in speech recognition.

Experimental Design:

The dataset is divided into 10 experimental groups.

The first group contains 2200 utterances, and each subsequent group adds 200 utterances, reaching a maximum of 4000 utterances.

Each group experiment is repeated 10 times with randomly sampled data to reduce the influence of random factors such as data sampling and model initialization.

Each group is split into training and testing sets at a 4:1 ratio:

Training set: Composed of speakers A, B, C, and D

Testing set: Composed of an independent speaker E

Example: For the 5th group of data:

Randomly sample 3000 utterances from speaker A’s 4000 utterances and record their indices.

Extract the utterances with the same indices from speakers B, C, and D, resulting in a training set of 12,000 utterances (3000 from each of the four training speakers).

Randomly select 3000 utterances from speaker E as the testing set.
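The grouping and sampling procedure can be summarized in a few lines. This hypothetical helper assumes that every speaker recorded the same 4000 indexed prompts (as implied by drawing "the same indices" from speakers B, C, and D), and it draws the test speaker's utterances independently.

```python
import random

GROUP_SIZES = [2200 + 200 * g for g in range(10)]  # Groups 1..10: 2200, 2400, ..., 4000


def sample_group(n_per_speaker, train_speakers, test_speaker, pool_size=4000, seed=0):
    """Draw one repetition of one group (hypothetical helper).

    The same randomly chosen utterance indices are used for every training
    speaker; the test speaker's indices are sampled independently.
    Returns lists of (speaker, utterance_index) pairs.
    """
    rng = random.Random(seed)
    shared = sorted(rng.sample(range(pool_size), n_per_speaker))
    train = [(spk, idx) for spk in train_speakers for idx in shared]
    test_idx = sorted(rng.sample(range(pool_size), n_per_speaker))
    test = [(test_speaker, idx) for idx in test_idx]
    return train, test


# Group 5: 3000 utterances per speaker, giving 12,000 training and 3000 test utterances.
train, test = sample_group(GROUP_SIZES[4], ["A", "B", "C", "D"], "E")
```

Changing `seed` per repetition gives the 10 random draws per group. Because four training speakers share one index set, the training/test ratio stays at 4:1 for every group size.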

The Wu dialect acoustic model is built based on the DNN-HMM architecture.

Monophone Model (Mono): Extract MFCC features and time-aligned frame labels from the annotated speech data. Initialize the HMM-GMM model and perform multiple rounds of forced alignment and training until convergence.

Triphone Model (GeoDNN-HMM): Incorporate context-dependent phoneme features during model training, and apply decision tree clustering and state tying to construct context-expanded acoustic units. This improves the model's ability to adapt to regional speech differences and to generalize to low-resource dialects.
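The context expansion behind the triphone stage can be illustrated by rewriting a phone sequence as left-centre+right units in HTK-style notation. This shows only the expansion step: Kaldi represents context internally through its context-dependency tree rather than string labels, and the subsequent decision-tree clustering and state tying are not shown. The phone symbols are placeholders.

```python
def to_triphones(phones, boundary="sil"):
    """Expand a phone sequence into 'left-centre+right' triphone labels.

    The utterance edges use `boundary` as their missing context.
    """
    padded = [boundary] + list(phones) + [boundary]
    return [
        f"{padded[i - 1]}-{padded[i]}+{padded[i + 1]}"
        for i in range(1, len(padded) - 1)
    ]
```

For example, `to_triphones(["n", "o", "ng"])` gives `["sil-n+o", "n-o+ng", "o-ng+sil"]`. The number of distinct triphones grows quickly, which is why rare contexts are tied by decision-tree clustering in a low-resource corpus.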

Through this design, we can analyze the impact of data volume on Wu dialect recognition performance and verify whether the corpus sufficiently covers the essential phoneme combinations. The experimental grouping is shown in Table 6.

This experiment consists of 10 experimental groups, each undergoing 10 repeated training and testing cycles, and the Character Error Rate (CER, %) was recorded for every run. The results are presented in Table 7, which also lists the mean, standard deviation, and variance for each group to evaluate the stability of the model outputs.

From Table 7, it can be observed that as the group number increases from Group 1 to Group 10, corresponding to the gradual expansion of the training corpus from 2200 to 4000 utterances, recognition accuracy rises continuously while the Character Error Rate (CER) steadily decreases. Specifically, the average CER of Group 1 is 55.10%, whereas that of Group 10 drops to 27.70%. The trend curve shows that the improvement becomes less pronounced after Group 8, indicating that the model is approaching saturation and that further gains from additional samples are limited. In other words, as the corpus expands, the model's convergence stabilizes and the marginal benefit of additional data gradually diminishes.

Furthermore, the changes in standard deviation and variance provide additional evidence of improved model stability. In particular, Groups 9 and 10 have standard deviations of 0.392 and 0.066 and variances of 0.154 and 0.004, respectively, showing that the model's performance is highly stable, with very small error ranges, when trained on larger datasets, as shown in Figure 9.

However, in the groups with smaller data volumes (Groups 1 to 4), the decrease in CER was accompanied by large standard deviations, reflecting the instability of the model in the early stages. From Group 1 to Group 4, as the data volume increased from 2200 to 2800 samples, CER decreased from 55.10% to 41.97%, a clear downward trend, but the standard deviations remained relatively large at 7.87%, 7.68%, 7.29%, and 5.97%, indicating that training results on these datasets were unstable. We speculate that this fluctuation may be due to overfitting caused by the small data size, imbalance in the training set, and noise introduced in the data preprocessing stage. In addition, the limited training samples may have made it difficult for the model to learn generalizable features. These results suggest that although increasing the data volume effectively improves recognition accuracy, the model remains unstable during training under low-resource conditions.

In summary, the Wu dialect corpus training experiments validate the comprehensiveness and reliability of the constructed dataset. The substantial decrease in CER and the very low error variance at larger data volumes indicate that the model captures the diverse and complex acoustic features of Wu dialects and trains stably and robustly on this corpus.

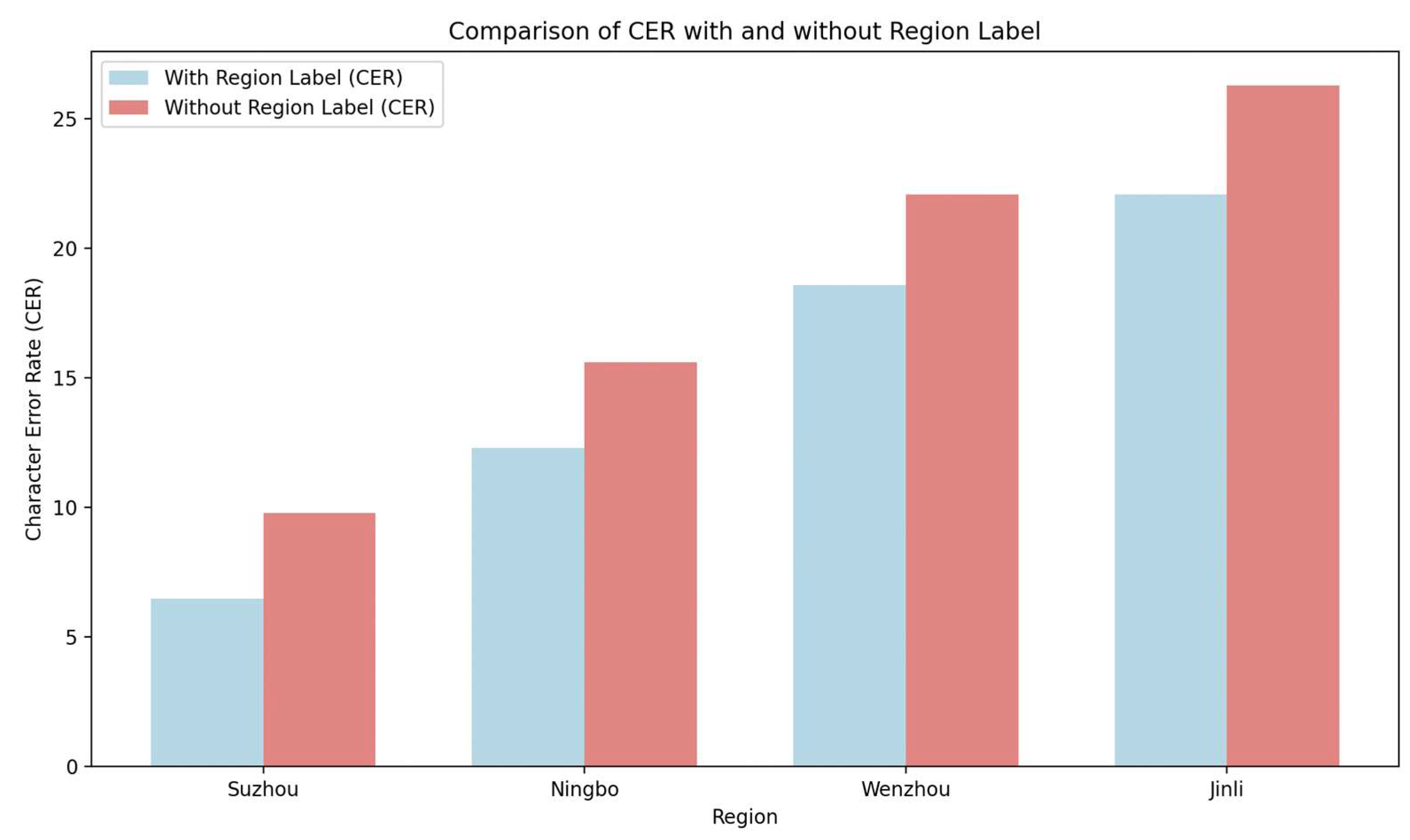

4.4.3. Regional Model Comparative Experimental Results

To further investigate the impact of geographical region labels on Wu dialect speech recognition, this study designed two control experiments: one with regional information and one without. The model with regional labels significantly improved recognition accuracy across the Wu dialect regions, and the improvement was most pronounced in non-central dialect areas (such as Wenzhouhua and Jinlihua). Prior work [8] has also verified the role of regional information in enhancing multi-dialect recognition, and this study further demonstrates the effectiveness of the regional label embedding mechanism for Wu dialect recognition, as reflected in the significant decrease in CER and SER.

In the first experiment, geographical region labels were incorporated during training, while in the second experiment no regional labels were used. The primary goal of these two groups was to evaluate how well the region-aware model handles intra-dialectal variation in Wu, particularly in non-central dialect areas (such as the Wenzhou and Jin-Li dialects). The performance changes brought by regional labels for the Suzhou, Ningbo, Wenzhou, and Jin-Li dialects are shown in Table 8.

In the experiments, the Wu dialect speech data were sourced from the Wu Xiang Dialect Phonetic Corpus (WXDPC) constructed in this study. Each experimental group randomly selected 1000 speech samples from different regions (Suzhou, Ningbo, Wenzhou, and Jin-Li) for testing, and the Character Error Rate (CER) and Syllable Error Rate (SER) were calculated for each group. The results indicate that the model incorporating geographical region labels (Group A) consistently achieved higher recognition accuracy across all regions compared with the model without regional labels (Group B). The performance gap was particularly evident in non-central dialect areas. For example, in Wenzhou dialect, Group A achieved a CER of 18.6%, whereas Group B reached 22.1%; in Jin-Li dialect, Group A’s CER was 22.1%, notably lower than Group B’s 26.3%. These findings demonstrate that regional labels significantly enhance the model’s recognition capability for non-central dialects.

Moreover, the Syllable Error Rate (SER) results follow the same trend as CER, as shown in Figure 10. For the Wenzhou and Jin-Li dialects, the SERs of the Group A model were 15.2% and 18.7%, respectively, compared with 19.1% and 21.5% for Group B. These results further indicate that incorporating geographical labels allows the model to adapt better to regional variation, thereby improving recognition accuracy, particularly in complex dialect environments.

In the experiments, the performance improvement for Wenzhouhua and Jinlihua after introducing regional labels was particularly large, with both CER and WER decreasing notably compared with the no-transfer baseline model. Wenzhouhua has strong liquid assimilation and tone sandhi, while Jinlihua exhibits more entering tone finals and tone variation. Because these phonetic features are relatively regular, the transfer learning model can use the regional labels to learn these patterns, demonstrating strong cross-region adaptability. The regional labels give the model an explicit, dialect-specific cue for these features, further enhancing recognition accuracy.

However, although recognition of Wenzhouhua and Jinlihua improved noticeably compared with other dialect regions, the model still makes errors on some complex phonetic phenomena. For example, CER and WER remain relatively high in cases of homophone variation and syllable fusion. These errors arise mainly from the following aspects:

Subtle phonetic differences: Although Wenzhouhua and Jinlihua have regular phonological features, the pronunciation of certain words still varies considerably between speakers and localities. For example, syllable merging or fast speech can make some pronunciations sound like homophones, causing the model to misrecognize them.

Insufficient training data: Although this study used transfer learning to mitigate the shortage of data, training data for certain specific vocabulary in Jinlihua and Wenzhouhua remain insufficient. Particularly where the corpus is limited and syllable combinations are complex, the model still struggles to recognize these words accurately.

Syllable variation and context dependence: Wu dialects exhibit significant syllable variation, especially in words with homophone tone variations and strong context dependency, where the model’s phoneme alignment accuracy declines. Some words in Wenzhouhua and Jinlihua change pronunciation depending on the context, causing errors in syllable-level predictions.

These issues suggest that, although the introduction of regional labels significantly improved the recognition of Wenzhouhua and Jinlihua, there is still room for improvement in handling phonetic variation, homophone variation, and syllable changes. Future research could add annotated data for these regions and improve speech alignment and phoneme-level pronunciation prediction for low-resource dialects.

From these experimental results, it is evident that the introduction of geographical labels has a significant impact on enhancing the model’s recognition accuracy and robustness. Specifically, when facing non-central dialects with substantial pronunciation differences, regional labels not only help the model capture more fine-grained regional speech features but also effectively improve its generalization capability in these dialect areas. This finding suggests that region-aware modeling can serve as an important strategy for improving Wu dialect speech recognition performance across multiple regions.

4.4.4. Statistical Significance Test and Result Analysis

To verify whether the differences in CER and WER between the transfer learning model and the no-transfer baseline model are statistically significant, this study performed paired t-tests and computed confidence intervals. The paired t-test examines whether the performance difference between the two models under the same experimental conditions is significant, while the confidence intervals quantify the uncertainty of each model's CER and WER estimates.

In the paired t-test, we compared the transfer learning model and the no-transfer baseline model in terms of CER and WER. The transfer learning model outperformed the baseline model in CER and WER for low-resource dialects such as Wenzhouhua and Jinlihua, and the difference was statistically significant at the conventional threshold of p < 0.05. This threshold was chosen because it is the most widely accepted criterion for statistical significance in the social sciences and computational linguistics, as recommended by Cohen (1988), and it is widely adopted in speech recognition research [9,14]. This indicates that the transfer learning method effectively improves recognition performance for low-resource dialects, especially where speech data are scarce.
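The paired t statistic is computed from the per-pair differences d between the two models' error rates: t = mean(d) / (s_d / √n), with n − 1 degrees of freedom. This is a dependency-free sketch of that standard formula with a hypothetical function name. `scipy.stats.ttest_rel(a, b)` returns the same statistic together with a two-sided p-value. The usage values below are invented for illustration, not results from this study.

```python
import math
import statistics


def paired_t_statistic(a, b):
    """Return (t, df) for a paired t-test on matched samples a and b.

    d = a - b; t = mean(d) / (stdev(d) / sqrt(n)), using the sample
    standard deviation; df = n - 1.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    d = [x - y for x, y in zip(a, b)]
    sd = statistics.stdev(d)
    if sd == 0:
        raise ValueError("differences have zero variance; t is undefined")
    return statistics.mean(d) / (sd / math.sqrt(len(d))), len(d) - 1


# Hypothetical paired CERs (%): baseline vs transfer model on the same test splits.
t, df = paired_t_statistic([22.0, 23.0, 21.0, 22.5], [18.0, 19.5, 18.0, 19.0])
```

Pairing matters because both models are scored on the same splits. Differencing removes the split-to-split variation that an unpaired test would count as noise.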

Furthermore, we calculated 95% confidence intervals for both CER and WER to verify the reliability and stability of the results. The transfer learning model achieved a CER of 18.6% with a confidence interval of [17.5%, 19.7%], while the baseline model's CER was 22.1% with a confidence interval of [21.0%, 23.3%]. For WER, the transfer learning model achieved 14.3% [13.5%, 15.0%], while the baseline model reached 18.7% [17.8%, 19.5%]. For both metrics, the transfer learning model has lower error rates and slightly narrower intervals, and its intervals do not overlap with those of the baseline, which further supports the effectiveness of transfer learning in low-resource dialects.
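A t-based confidence interval for a mean error rate over repeated runs is mean ± t_crit · s / √n. The text does not state how its intervals were computed, so this is one common construction, not necessarily the authors'. The critical value is passed in explicitly: it is about 2.262 for 95% with n = 10 runs, and `scipy.stats.t.ppf(0.975, n - 1)` computes it in general. The function name and example values are hypothetical.

```python
import math
import statistics


def mean_confidence_interval(values, t_crit):
    """Two-sided CI for the mean: mean ± t_crit * s / sqrt(n).

    t_crit is the critical value for the chosen confidence level with
    n - 1 degrees of freedom (about 2.262 for 95% when n = 10).
    """
    n = len(values)
    if n < 2:
        raise ValueError("need at least two values")
    m = statistics.mean(values)
    half = t_crit * statistics.stdev(values) / math.sqrt(n)
    return m - half, m + half


lo, hi = mean_confidence_interval([1.0, 2.0, 3.0], t_crit=4.303)  # 95%, df = 2
```

The interval is symmetric about the mean, and its width shrinks with √n. This is why intervals computed from few runs, as in the small-data groups, are wide.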

Through the combined analysis of paired t-tests and confidence intervals, we confirm the significant advantage of the transfer learning model in both CER and WER, particularly for Wenzhouhua and Jinlihua, where both metrics decreased significantly. The results show that transfer learning not only improves the recognition accuracy of low-resource dialects but also enhances the model's robustness and generalization ability, as shown in Table 9 and Figure 11.

4.4.5. Ablation Study of the Two-Level Phoneme Mapping Mechanism

To verify the contribution of the two-level phoneme mapping mechanism to model performance, this study designed an ablation experiment that removes the mechanism from the model, using Suzhouhua as the representative dialect for comparison. Suzhouhua was chosen because it is representative of the phonological features of Wu and its phonetic system is relatively standardized.

The experimental results show that the introduction of the two-level phoneme mapping mechanism significantly improved the model’s performance in CER and WER, particularly in modeling phonetic features such as voicing contrasts and entering tone finals in Suzhouhua. The specific experimental data are as follows:

Full Model (with two-level phoneme mapping mechanism):

Model without the two-level phoneme mapping mechanism (only triphone modeling):

CER: 34.8%

WER: 30.9%

Recognition Features: After removing the mechanism, the model’s ability to recognize voicing contrasts and other phonetic features in Suzhouhua decreased, leading to a significant increase in CER and WER.

Baseline Model (without phoneme mapping mechanism):

CER: 37.5%

WER: 33.5%

Recognition Features: This model failed to effectively model the phoneme features in Suzhouhua, leading to poor performance in CER and WER, especially with significant alignment errors at the syllable level.

The experimental results show that the two-level phoneme mapping mechanism substantially improves syllable-level phoneme recognition in Suzhouhua: after the mechanism is removed, CER and WER deteriorate markedly. This indicates that the two-level phoneme mapping mechanism plays a crucial role in recognition accuracy and fine-grained feature modeling.

4.5. Discussion of Research Results

In this study, we constructed a corpus covering multiple Wu dialect regions (WXDPC) and proposed a dialect recognition model based on the DNN-HMM architecture and the GeoDNN-HMM regional modeling mechanism. The experimental results show that the model’s CER and SER decreased significantly as the amount of training data increased, particularly for non-central dialects such as Wenzhouhua and Jinlihua. This finding supports Hypothesis 1, which posits that a multi-region corpus can significantly improve the model’s ability to recognize non-central dialects.
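For reference, the two metrics in the paragraph above can be computed as follows. This is a minimal sketch assuming that SER denotes sentence error rate (the share of utterances containing at least one error) and that CER is the corpus-level character edit distance divided by the number of reference characters. The Wu sentences in the test are illustrative only.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance (substitutions + deletions + insertions) between two sequences."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,             # deletion of a reference character
                curr[j - 1] + 1,         # insertion of a hypothesis character
                prev[j - 1] + (r != h),  # substitution (cost 1) or match (cost 0)
            )
        prev = curr
    return prev[-1]


def cer(refs, hyps):
    """Corpus-level character error rate: total edits / total reference characters."""
    edits = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    return edits / sum(len(r) for r in refs)


def ser(refs, hyps):
    """Sentence error rate: fraction of hypotheses that differ from their reference."""
    wrong = sum(1 for r, h in zip(refs, hyps) if r != h)
    return wrong / len(refs)
```

Because Python strings iterate by character, Chinese transcripts can be passed directly; WER is obtained by applying `edit_distance` to word lists instead of strings.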

Hypothesis 1: By constructing a Wu dialect corpus (WXDPC) covering multiple regions, the recognition performance for non-central dialects (such as Wenzhouhua and Jinlihua) can be significantly improved.

We experimentally validated this hypothesis: CER and SER for dialect regions such as Wenzhouhua and Jinlihua decreased significantly, indicating that a multi-region corpus effectively enhances recognition performance for non-central dialects. Prior work [8] also supports this, showing that introducing geographical region labels allows a model to handle phonetic variation caused by geographic differences.
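One common way to make an acoustic model region-aware is to append a one-hot region code to every input feature frame. The sketch below illustrates that idea under the assumption that the region label is injected at the input layer; it is not necessarily how GeoDNN-HMM embeds its labels. The region inventory (taken from the collection sites listed in Section 2.1.1) and the function names are hypothetical.

```python
# Hypothetical region inventory, based on the collection sites in Section 2.1.1.
REGIONS = ["Suzhou", "Shanghai", "Ningbo", "Wenzhou", "Lishui"]


def one_hot_region(region):
    """Return a one-hot vector identifying `region` within REGIONS."""
    vec = [0.0] * len(REGIONS)
    vec[REGIONS.index(region)] = 1.0
    return vec


def append_region_label(frames, region):
    """Concatenate the one-hot region code to every acoustic feature frame.

    frames: list of per-frame feature vectors (e.g. filterbank or MFCC values).
    """
    code = one_hot_region(region)
    return [list(frame) + code for frame in frames]


# Two hypothetical 2-dimensional frames from a Wenzhou utterance.
augmented = append_region_label([[0.1, 0.2], [0.3, 0.4]], "Wenzhou")
```

Conditioning every frame on the same region code lets shared hidden layers learn region-specific offsets without training a separate model per region.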

Hypothesis 2: The two-level phoneme mapping mechanism can better capture fine-grained features of Wu dialects, such as voicing contrasts and entering tone finals, thereby reducing recognition error rates.

In validating Hypothesis 2, the experimental results showed that the two-level phoneme mapping significantly improved the model’s recognition accuracy on phonetic phenomena unique to Wu dialects, such as voicing contrasts, entering tone finals, and variant pronunciations. This result is consistent with [26], which demonstrated the effectiveness of fine-grained phoneme modeling in dialect recognition.

Hypothesis 3: The GeoDNN-HMM framework with region labels and transfer learning can enhance the model’s generalization ability and cross-region robustness under limited corpus conditions.

The experimental results confirmed Hypothesis 3: in low-resource dialect areas such as Wenzhouhua and Jinlihua, the transfer learning mechanism significantly improved the model and reduced the impact of limited training samples. Studies [8,14] likewise suggest that transfer learning can effectively leverage central-region corpora to improve recognition of low-resource dialects, which is consistent with Hypothesis 3.
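The transfer learning idea of reusing central-region training can be sketched as copying source-model parameters and then fine-tuning with the lower layers frozen. This is a toy illustration in which plain Python lists stand in for network weights; the layer names, the choice of which layers to freeze, and the plain SGD update are assumptions, not details reported in this study.

```python
def init_from_source(source_params):
    """Copy source-model parameters (e.g. trained on central Wu regions) into a target model."""
    return {name: list(values) for name, values in source_params.items()}


def fine_tune_step(params, grads, frozen, lr):
    """Apply one SGD step, updating only layers whose names are not in `frozen`."""
    for name, grad in grads.items():
        if name in frozen:
            continue  # frozen lower layers keep the weights learned on the source regions
        params[name] = [w - lr * g for w, g in zip(params[name], grad)]
    return params


# Hypothetical two-layer model: freeze "hidden1", adapt "output" to the low-resource dialect.
source = {"hidden1": [0.5, -0.2], "output": [1.0, 0.0]}
target = init_from_source(source)
fine_tune_step(target, {"hidden1": [0.1, 0.1], "output": [0.5, -0.5]}, frozen={"hidden1"}, lr=0.1)
```

Freezing the lower layers limits the number of parameters estimated from the small target-dialect sample, which is one way transfer learning reduces the impact of limited data.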

Overall, this study effectively improved the accuracy and robustness of the Wu dialect speech recognition system under multi-region and multi-dialect conditions by combining regional label embedding mechanisms and transfer learning. Compared to existing literature, we further demonstrate the advantages of multi-region datasets and region-aware models in dialect recognition.

5. Conclusions

5.1. Research Summary

This study focuses on two core tasks in Wu dialect processing: speech recognition and real-time semantic feedback. Leveraging deep learning techniques, we successfully developed a Wu dialect speech recognition system with regional adaptability and semantic feedback capability. Based on multi-regional Wu dialect data, we introduced and implemented two major technical innovations:

Two-level phoneme mapping mechanism: by constructing a hierarchical mapping from phonemes to Wu dialect words and then to Mandarin words, we improved the model’s phoneme-to-semantics alignment.

Regional variation modeling mechanism: by incorporating geographical region labels and transfer learning strategies, the system significantly improved recognition performance in non-central Wu dialect regions.
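The two-level mapping summarized above can be illustrated as two chained lookups: a phone sequence is segmented into Wu dialect words, which are then mapped to Mandarin words. The sketch assumes a greedy longest-match segmentation and uses a tiny hypothetical lexicon with approximate IPA-style phones; the system's actual mapping tables and decoding strategy are not reproduced here.

```python
# Level 1: phone sequence -> Wu dialect word (illustrative Shanghainese entries only).
PHONE_TO_WU = {
    ("n", "oŋ"): "侬",
    ("a", "ʔ", "l", "a"): "阿拉",
    ("tɕ", "in", "ts", "ɔ"): "今朝",
}
# Level 2: Wu dialect word -> Mandarin word.
WU_TO_MANDARIN = {"侬": "你", "阿拉": "我们", "今朝": "今天"}


def map_phones(phones):
    """Greedy longest-match decoding of a phone sequence through both mapping levels."""
    max_len = max(len(key) for key in PHONE_TO_WU)
    wu_words, i = [], 0
    while i < len(phones):
        # Try the longest candidate span first, then shorter ones.
        for size in range(min(max_len, len(phones) - i), 0, -1):
            key = tuple(phones[i:i + size])
            if key in PHONE_TO_WU:
                wu_words.append(PHONE_TO_WU[key])
                i += size
                break
        else:  # no lexicon entry matched at position i
            raise ValueError(f"no lexicon entry starting at position {i}")
    # Words without a Mandarin equivalent are passed through unchanged.
    mandarin = [WU_TO_MANDARIN.get(word, word) for word in wu_words]
    return wu_words, mandarin
```

Keeping the Wu word layer explicit means dialect-specific vocabulary is recognized first and only then normalized to Mandarin, rather than forcing phones directly onto Mandarin words.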

Experimental results show that as training data increases, the Character Error Rate (CER) steadily decreases, demonstrating improved recognition performance. In particular, the introduction of regional labels notably enhanced the model’s performance in complex dialectal environments. The experiments also validated the comprehensiveness and reliability of the Wu Dialect Phonetic Corpus (WXDPC). Furthermore, the integration of regional features, clustering, and transfer learning further strengthened the model’s robustness and generalization capability.

In addition, this study implemented a real-time semantic feedback system, enabling the model not only to recognize Wu dialect speech efficiently but also to provide semantic feedback promptly after recognition. This functionality provides technical support for dialect preservation, educational promotion, and the digitalization of local culture. Through these contributions, the study advances Wu dialect protection and language technology, while also offering practical insights for the development of dialect speech recognition systems.

5.2. Future Work

Although this study has made some progress in Wu dialect recognition and the semantic feedback system, there are still some limitations that should be addressed in future research.

First, the corpus is relatively small, particularly with respect to the diversity of Wu dialects it covers. Although the existing WXDPC corpus spans multiple Wu dialect regions, the internal differences within Wu dialects are vast, and the current corpus cannot fully represent the Wu dialect group as a whole. Future work will need to expand the corpus through more extensive speech data collection, covering additional low-resource dialect regions and thereby enhancing the model’s generalization ability and robustness. A larger corpus will help capture the phonological differences and dialectal variation present in Wu dialects.

Second, this study relies heavily on simulated or web-scraped data, especially in the WXDPC-2 dataset, part of which was obtained through automatic web scraping. Although these data are rich, they may contain noise and non-standard speech, which can reduce recognition accuracy. To further improve the speech recognition system, future research should focus on collecting more high-quality natural speech, particularly from real-life scenarios and dialectal conversations. This will help mitigate the impact of noisy and simulated data and improve the system’s adaptability to natural speech.

Finally, although this study enhanced the model’s performance through transfer learning and regional label modeling, the model’s robustness in noisy or spontaneous speech has not been fully validated. Wu dialect speech has complex phonetic features, and its recognition is highly sensitive to environmental noise. Future research needs to improve performance in noisy environments, particularly for overlapping speech and speech with a low signal-to-noise ratio. Noise-robustness methods such as data augmentation or self-supervised learning could be considered to further improve the model’s adaptability to complex acoustic environments.
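As an example of the data augmentation mentioned above, additive noise can be mixed into clean recordings at a controlled signal-to-noise ratio. The sketch assumes equal-rate, single-channel sample lists (for instance, 16 kHz audio as used for the on-site recordings); `power` and `mix_at_snr` are hypothetical helpers, not functions from any toolkit.

```python
import math
import random


def power(signal):
    """Mean power (average squared amplitude) of a list of samples."""
    return sum(x * x for x in signal) / len(signal)


def mix_at_snr(clean, noise, snr_db):
    """Add `noise` to `clean`, scaled so that the mixture has the requested SNR in dB."""
    if len(noise) < len(clean):
        raise ValueError("noise must be at least as long as the clean signal")
    noise = noise[:len(clean)]
    # SNR_dB = 10 * log10(P_clean / (scale**2 * P_noise)); solve for scale.
    scale = math.sqrt(power(clean) / (power(noise) * 10 ** (snr_db / 10)))
    return [c + scale * n for c, n in zip(clean, noise)]


# One second of a 440 Hz tone at 16 kHz, mixed with seeded Gaussian noise at 10 dB SNR.
rng = random.Random(0)
clean = [math.sin(2 * math.pi * 440 * t / 16000) for t in range(16000)]
noise = [rng.gauss(0.0, 1.0) for _ in range(16000)]
noisy = mix_at_snr(clean, noise, snr_db=10.0)
```

Sampling `snr_db` from a range (for example, 0 to 20 dB) for each training utterance exposes the model to both mild and severe noise conditions.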

Future work could be expanded in several directions. First, in terms of deep learning model selection and optimization, more advanced technologies, such as graph neural networks (GNN) and multimodal fusion, could be introduced to better handle complex speech features and semantic relationships. In addition, the dataset size could be further increased, particularly by integrating multi-region, multi-accent, and multi-sample corpora, to enhance the model’s generalization ability and ensure it can cover more dialect features. Finally, considering users’ personalized needs, future research could explore integrating video, gestures, facial expressions, and other multimodal information into the speech recognition and feedback system to enhance the system’s intelligence and provide richer and personalized services to users.

In conclusion, as deep learning technology and big data processing capabilities continue to advance, the performance of Wu dialect speech recognition and the semantic feedback system is expected to improve further. Through continuous optimization and innovation, the results of this study will play a greater role in Wu dialect preservation, dialect technology promotion, and multilingual speech interaction systems.