CNN-Based Road Event Detection Using Multiaxial Vibration and Acceleration Signals

Abstract

1. Introduction

Convolutional Neural Networks (CNNs)

2. Related Works

Motivation and Scope

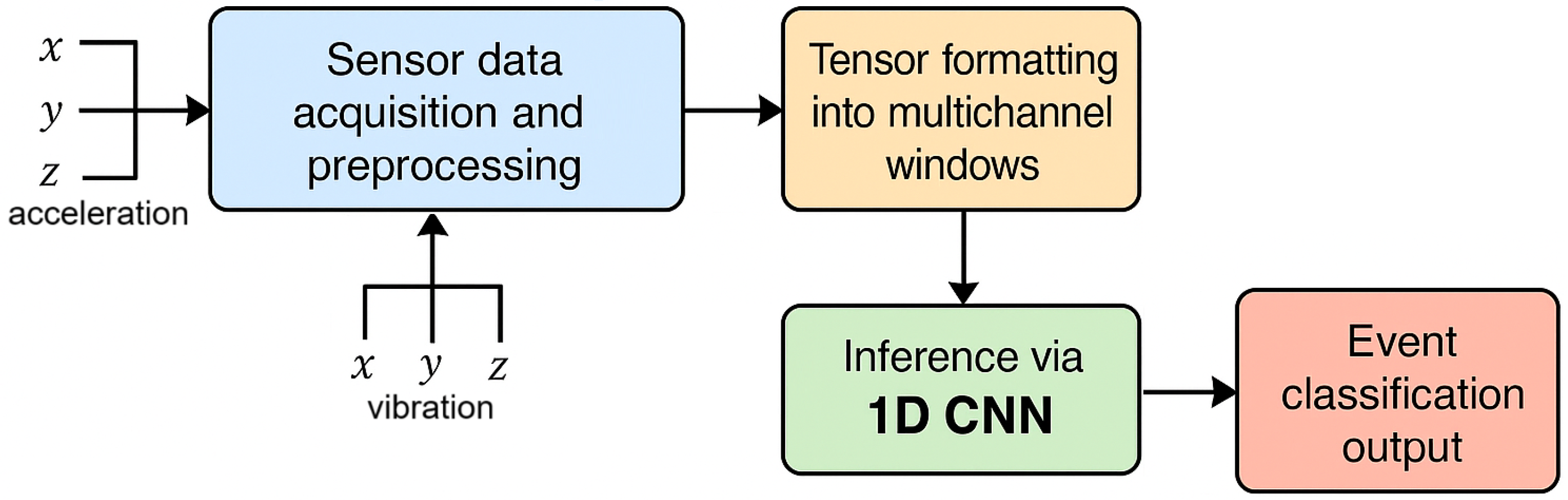

3. The Proposed Algorithm

3.1. Data Acquisition and Preprocessing

3.2. Tensor Formatting

3.3. Road Event Classes and Annotation Criteria

3.4. CNN Model Training

3.5. Event Classification

3.6. Design Rationale and Advantages

4. Results

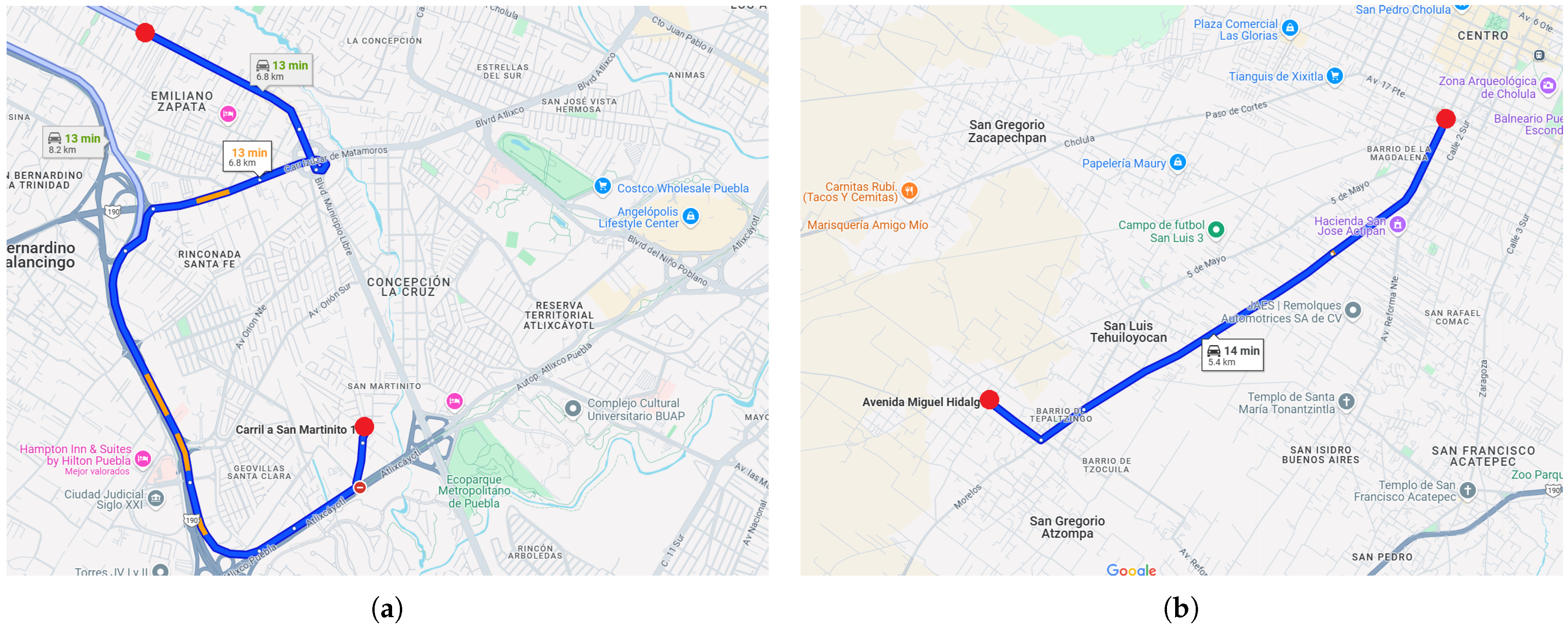

4.1. Dataset Description

- urban_trip_puebla_1.json: Outbound trajectory through the central urban area.

- urban_trip_puebla_2.json: Return trajectory along the same urban route.

- urban_trip_puebla_3.json: Outbound trajectory through suburban and peripheral areas.

- urban_trip_puebla_4.json: Return trajectory along the same suburban route.

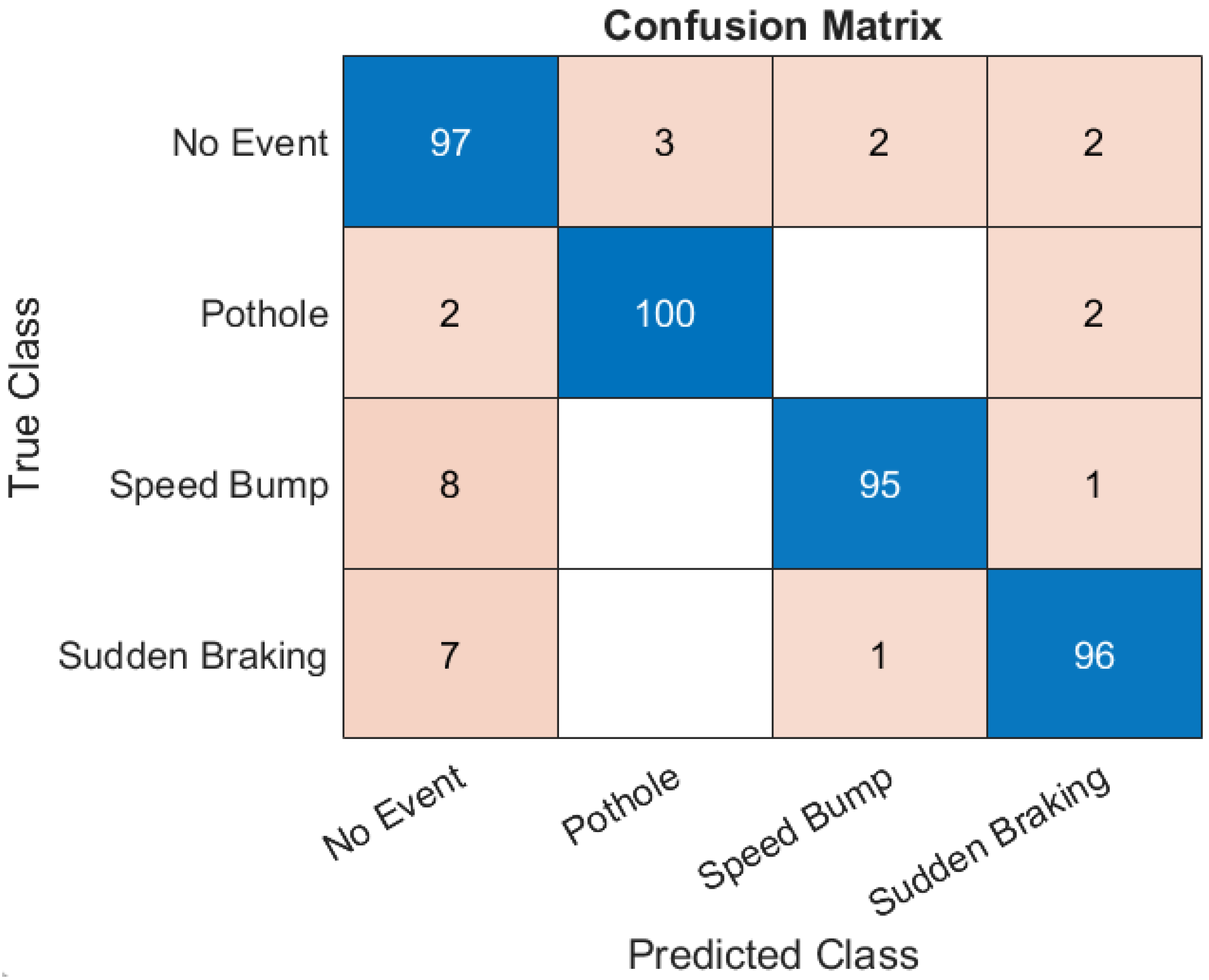

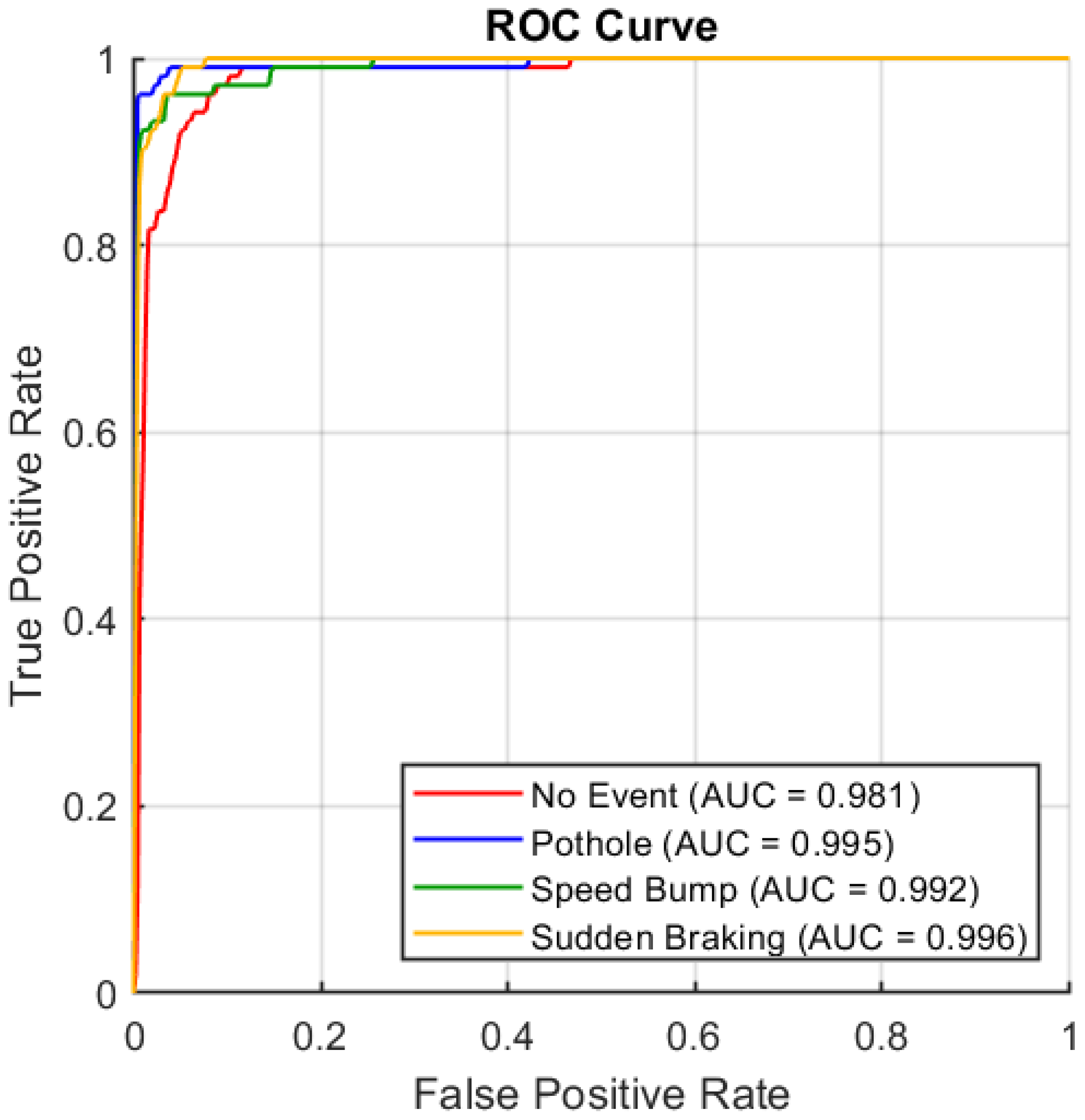

4.2. Quantitative Analysis

- Global Accuracy: 93.51%;

- Macro-averaged Precision: 0.9356;

- Macro-averaged Recall: 0.9351;

- Macro-averaged F1-Score: 0.9352.

- End-to-end learning: Our CNN-based approach directly learns discriminative spatio-temporal features from raw multiaxial sensor data, eliminating the need for manual feature extraction or domain-specific preprocessing.

- Efficiency and deployability: The model architecture is lightweight and optimized for constant-time inference, making it feasible for real-time deployment on embedded and automotive-grade platforms with limited computational resources.

4.3. Computational Complexity

5. Current Scope and Limitations

Future Work

- Real-Time Embedded Deployment: Extend the current offline implementation into a real-time pipeline running on embedded hardware platforms. This involves profiling the model and optimizing its inference time and power consumption for deployment in automotive or mobile systems.

- Hybrid Architectures: Explore combining CNNs with sequential models such as LSTMs or attention-based modules to better capture temporal dependencies and improve performance on complex, overlapping events.

- Multimodal Sensor Fusion: Integrate additional signals such as gyroscope, GPS, and visual input to improve context awareness and classification accuracy in ambiguous or noisy conditions.

- Dynamic Windowing: Implement adaptive windowing techniques that adjust the input length based on signal characteristics or the detected onset of an event, improving responsiveness to both short- and long-duration events.

- Cross-Domain Evaluation: Test the model on real-world datasets from different cities, road surfaces, and vehicle types to evaluate and improve generalization across scenarios.

6. Conclusions

- The proposed CNN model achieved a macro-averaged F1 score of 0.9352 and a global accuracy of 93.51% across four event categories. It demonstrated balanced precision and recall for both frequent and rare events.

- Class-specific performance remained consistently high; for example, pothole detection reached a precision and recall of 0.9711, while sudden braking maintained an F1 score of 0.9314, demonstrating the model’s ability to generalize across event types.

- Compared to a rule-based baseline, the CNN model performed better, with an F1 score of 0.9352 versus 0.8400 and a recall of 0.9351 versus 0.7800. It also handles diverse sensor inputs and outputs probabilistic event predictions suitable for real-time thresholding and integration with downstream systems.

- The proposed architecture remains lightweight enough to support potential deployment in embedded systems, offering an efficient solution for on-device inference in vehicular environments.
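The probabilistic output mentioned above lends itself to simple confidence thresholding. The following is a minimal sketch of that idea, not the authors' implementation: the class order, the `decide` helper, the fallback to "No Event", and the 0.6 threshold are all illustrative assumptions.

```python
import math

# Class names taken from the results table; the index order is an assumption.
CLASSES = ["No Event", "Pothole", "Speed Bump", "Sudden Braking"]


def softmax(logits):
    """Convert raw network outputs into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def decide(logits, threshold=0.6):
    """Return the predicted label, or "No Event" when confidence is low.

    The threshold value is hypothetical and would need tuning on
    validation data.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < threshold:
        return "No Event"
    return CLASSES[best]


# A confident window versus an ambiguous one.
confident = decide([0.1, 3.0, 0.2, 0.1])
ambiguous = decide([1.0, 1.1, 1.0, 0.9])
```

Raising the threshold trades recall for precision, which is one way a downstream system could tune alert frequency without retraining the model.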

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Layer | Configuration/Parameters | Output Shape |
|---|---|---|
| Input Layer | (channels × time steps × depth) | |
| Conv Layer 1 | 32 filters, kernel , stride , padding = same | |
| Batch Norm 1 + ReLU | — | |
| Max Pooling 1 | Pool | |
| Conv Layer 2 | 64 filters, kernel , stride , padding = same | |
| Batch Norm 2 + ReLU | — | |
| Max Pooling 2 | Pool | |
| Dropout | Rate = 0.5 | |
| Fully Connected | Dense layer (num_classes) | num_classes |
| Softmax | Class probability output | num_classes |
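The output shapes for the layer stack above follow mechanically once the input size, strides, and pooling sizes are fixed. The sketch below traces them under loudly stated assumptions: the table does not give kernel, stride, or pool values, so the stride of (1, 1), the pool of (1, 2), the 3 × 128 × 1 input, and the `trace_shapes` helper itself are hypothetical. Only the filter counts (32 and 64) and the four output classes come from the text.

```python
import math


def conv_same(size, stride):
    """With 'same' padding the output length is ceil(input / stride),
    independent of the kernel size."""
    return math.ceil(size / stride)


def max_pool(size, pool):
    """Non-overlapping pooling without padding: floor(input / pool)."""
    return size // pool


def trace_shapes(axes, steps, depth, stride=(1, 1), pool=(1, 2), num_classes=4):
    """List (layer name, output shape) pairs for the two-block CNN.

    stride and pool are assumed values, not taken from the table.
    Batch norm, ReLU, dropout, and softmax leave shapes unchanged,
    so they are omitted from the trace.
    """
    shapes = [("Input Layer", (axes, steps, depth))]
    h, w = axes, steps
    for i, filters in enumerate((32, 64), start=1):
        h, w = conv_same(h, stride[0]), conv_same(w, stride[1])
        shapes.append((f"Conv Layer {i}", (h, w, filters)))
        h, w = max_pool(h, pool[0]), max_pool(w, pool[1])
        shapes.append((f"Max Pooling {i}", (h, w, filters)))
    shapes.append(("Fully Connected", (num_classes,)))
    return shapes


# Hypothetical input: 3 sensor axes, 128 time steps, depth 1.
for name, shape in trace_shapes(3, 128, 1):
    print(f"{name:16s} {shape}")
```

Pooling only along the time axis is a deliberate choice in this sketch: with few sensor axes, pooling across them would collapse that dimension after one or two layers.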

| Class | TP | FP | FN | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|
| No Event | 94 | 13 | 10 | 0.8785 | 0.9038 | 0.8910 |
| Pothole | 101 | 3 | 3 | 0.9711 | 0.9711 | 0.9711 |
| Speed Bump | 99 | 6 | 5 | 0.9429 | 0.9519 | 0.9474 |
| Sudden Braking | 95 | 5 | 9 | 0.9500 | 0.9135 | 0.9314 |
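The per-class and macro-averaged metrics can be recomputed from the TP, FP, and FN counts in the table above. The sketch below uses the standard definitions; the helper name `prf` and the variable names are illustrative, and computing accuracy as total TP over total samples assumes each test window carries exactly one label.

```python
# Per-class counts copied from the table above: (TP, FP, FN).
counts = {
    "No Event": (94, 13, 10),
    "Pothole": (101, 3, 3),
    "Speed Bump": (99, 6, 5),
    "Sudden Braking": (95, 5, 9),
}


def prf(tp, fp, fn):
    """Return (precision, recall, F1) for one class."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


per_class = {name: prf(*c) for name, c in counts.items()}

# Macro averaging: unweighted mean of the per-class values.
n = len(per_class)
macro_precision = sum(p for p, _, _ in per_class.values()) / n
macro_recall = sum(r for _, r, _ in per_class.values()) / n
macro_f1 = sum(f for _, _, f in per_class.values()) / n

# Single-label accuracy: correct predictions over all samples (TP + FN per class).
total_tp = sum(tp for tp, _, _ in counts.values())
total_samples = sum(tp + fn for tp, _, fn in counts.values())
accuracy = total_tp / total_samples

print(f"macro P={macro_precision:.4f} R={macro_recall:.4f} F1={macro_f1:.4f}")
print(f"accuracy={accuracy:.4f}")
```

Rounded to four decimals, the macro averages and the accuracy computed this way agree with the values reported in Section 4.2.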

| Method | Precision | Recall | F1-Score | Accuracy | Probabilistic |
|---|---|---|---|---|---|
| Rule-based approach | 0.9200 | 0.7800 | 0.8400 | 0.9100 | No |
| CNN (proposed) | 0.9356 | 0.9351 | 0.9352 | 0.9351 | Yes |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aguilar-González, A.; Medina Santiago, A. CNN-Based Road Event Detection Using Multiaxial Vibration and Acceleration Signals. Appl. Sci. 2025, 15, 10203. https://doi.org/10.3390/app151810203
