1. Introduction

The ongoing digitalization of industrial manufacturing, driven by the adoption of cyber–physical systems, industrial IoT, and automated control infrastructure, has led to a massive increase in the availability of machine-generated data [1]. While this development offers new opportunities for monitoring, diagnosing, and optimizing production systems, it also introduces significant challenges for analysis: industrial data are often high-volume, heterogeneous, noisy, and context-dependent [2,3]. This data-driven paradigm, commonly termed smart manufacturing, leverages analytics to orchestrate production and supply chains in real time [4].

Visual analytics (VA), “the science of analytical reasoning facilitated by interactive visual interfaces” [5], recognizes that analysis is not a routine process that can be fully automated [6]. It relies on the expertise, initiative, and domain knowledge of analysts, supported by visual representations and computational methods. Interaction is central to this process, enabling flexible, iterative exploration [7]. VA helps uncover trends, patterns, and anomalies. However, to be effective, VA must deliver precise, relevant, and interpretable information [8] that is tailored to the user’s mental model and task context.

In many industrial settings, analysts are domain experts (e.g., engineers, operators, or managers) who may not be specialists in data analysis or visualization. This creates a gap: while VA provides powerful interactive methods, it often struggles to capture and integrate the contextual knowledge and reasoning processes of these experts. Stoiber et al. [9] highlight that domain experts need systems that go beyond displaying data; such systems must also provide guidance and incorporate knowledge to support decision-making in complex environments.

In response to this gap, the concept of knowledge-assisted visual analytics (KAVA) was introduced [10,11,12,13]. KAVA extends classical VA by systematically integrating explicit knowledge, such as rules, ontologies, provenance information, or domain constraints, into interactive visualization and computational analysis. Explicit knowledge in this context is tacit knowledge that has been extracted into a structured form; it can be formalized, stored, and reused, which distinguishes it from tacit knowledge that remains tied to human expertise. By externalizing tacit knowledge and embedding explicit knowledge into the analytical workflow, KAVA enables context-aware, explainable, and more reusable VA solutions [9,11,12].

Several studies have demonstrated that KAVA can improve interpretability, support the onboarding of new users, and guide analysis workflows across different domains, ranging from biomedical data analysis (e.g., Ordino and KAVAGait) to general-purpose visualization environments such as Tableau and Advizor [9]. However, despite these promising advances, the systematic study of KAVA in industrial manufacturing remains limited. Industrial contexts are characterized by heterogeneous data sources, domain-specific tasks, and high demands for reliability and interoperability, which makes them an ideal yet challenging testbed for KAVA approaches.

This motivated our survey, which aimed to outline the state of the art of KAVA in industrial manufacturing and explore how knowledge, data, visualization, and interaction are addressed within existing systems and where gaps or best practices can be identified. We aimed to obtain a comprehensive understanding of the conceptual and technical building blocks relevant to the domain.

To this end, we conducted a systematic literature review following the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) methodology [14,15] to ensure a transparent, comprehensive, and structured review process. The following research questions served as the foundation for this review:

- RQ1: How is explicit knowledge modeled? Which knowledge modeling approaches are commonly used for knowledge representation in industrial manufacturing VA?
- RQ2: Which visualization and interaction methods are used for externalizing explicit knowledge in industrial manufacturing?
- RQ3: How are machine learning (ML) methods integrated into KAVA in industrial manufacturing?

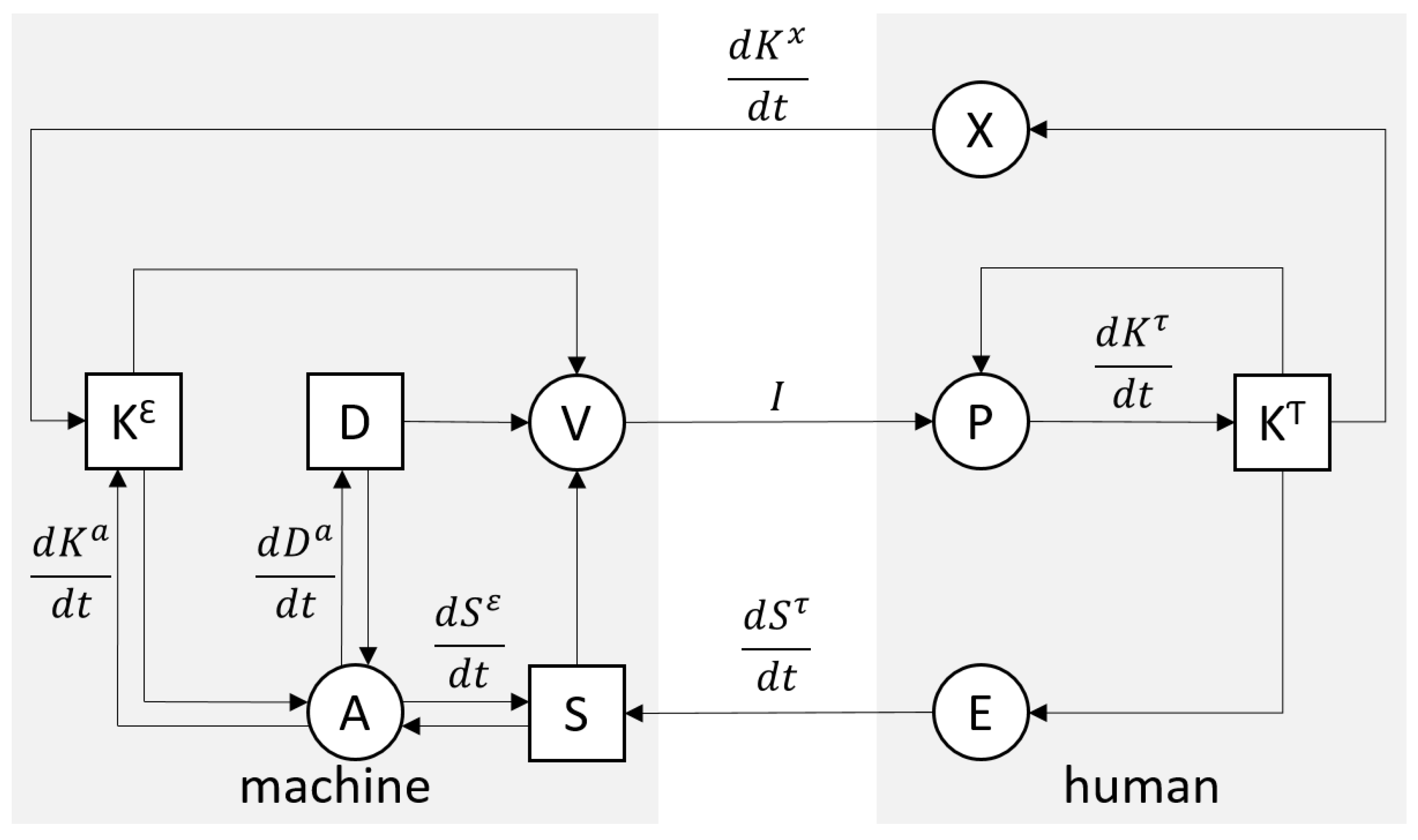

To systematically address these questions, we grounded our analysis in the conceptual foundation provided by the “knowledge-assisted visual analytics (KAVA) model”, as introduced by Federico et al. [

11] and Wagner [

12]. This model opens the possibility to frame KAVA as an

iterative process of knowledge generation, representation, application, and refinement. It emphasizes that knowledge in VA systems is not static—it evolves through interaction, feedback, and usage. Based on this model, as displayed in

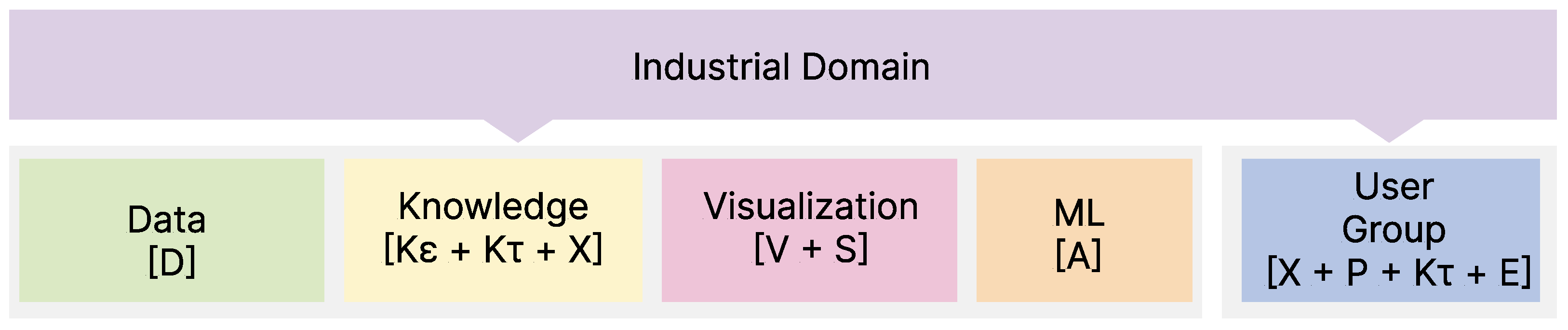

Figure 1, we identified a set of functional building blocks, illustrated in

Figure 2, that structure our literature review and allow us to examine KAVA systems along consistent and conceptually meaningful dimensions (see

Section 4):

The industrial domain (e.g., automotive or packaging production) shapes every component of the KAVA model [11]. It plays a cross-cutting role, for example, in guiding data selection, choosing visualization techniques and interaction concepts, formalizing explicit knowledge, automating analysis, and determining how users apply and externalize their tacit knowledge. The building blocks, summarized in Figure 2, include the following:

- Data: The types of data (e.g., time-dependent and tabular data) described in the examined studies.
- Knowledge: The storage mechanisms used to model knowledge (e.g., text, ontologies, and taxonomies), as well as the process, type, and origin of the knowledge gathered in this survey.
- Visualization: Visualization and interaction techniques (e.g., line plots and node–link diagrams).
- Machine learning: The machine learning (ML) methods used, e.g., supervised, unsupervised, and semi-supervised ML.
- User group: The main user group (e.g., engineers and managers) interacting with the KAVA system, contributing tacit knowledge, and steering exploration.

Contributions: By analyzing the literature along these dimensions, we provide a systematic review of existing KAVA methods, focusing on how explicit human domain knowledge is modeled and visualized in existing systems, uncovering gaps, and offering insights for future research and applications in industrial manufacturing. To summarize, our contributions are as follows:

- We provide a structured overview of research on industrial manufacturing and KAVA (see Section 2);
- We present our categorization along the main building blocks of KAVA (see Section 4);
- We contextualize the major ongoing challenges of KAVA in industrial manufacturing (see Section 5).

2. Background and Related Work

This article combines three thematic areas of data analytics research: interactive visual interfaces through visual analytics (VA) [5], knowledge externalization and knowledge-based analysis [16], and data-driven approaches for industrial manufacturing [2].

Before Section 4 systematically investigates the overlap of all three areas, we review related work in each pairwise combination of these adjacent fields below.

There is a growing body of literature applying VA to industrial manufacturing data, and four surveys describe this field. Zhou et al. [17] carried out a systematic survey in 2019 that reviews the visualization technologies used to analyze data throughout the manufacturing lifecycle. They categorize these technologies into two primary areas: “replacement,” which employs immersive tools such as virtual reality (VR), augmented reality (AR), and mixed reality (MR) to replicate and digitally enhance physical processes, and “creation,” which uses visualization to drive innovation in design, production, testing, and maintenance. The survey covers various sectors, including the automotive, energy, and food processing sectors, and discusses challenges such as integrating diverse data types, ensuring data security, achieving real-time processing, and designing user-centered interfaces. Cibulski et al. [18] retrospectively analyzed and compared 13 visualization research projects in the Austrian and German manufacturing industry. Moreover, Schreck et al. [3] highlighted the role of VA in optimizing industrial processes through computational analysis and interactive visualization. Their paper provides an overview of goals, concepts, and existing solutions for industrial applications, discusses the main data types and challenges in data acquisition, and presents further challenges such as heterogeneity, data ownership, and governance.

In contrast, Wang et al. [19] focused on visualization and VA methods for multimedia data in industrial settings. They introduced a taxonomy based on data types that categorizes research by visualization techniques, interaction methods, and specific application areas. They highlighted the need for integrated frameworks to manage diverse data formats.

As the above surveys do not address the role of knowledge or KAVA methods, we extend their contributions by focusing on how experts’ domain knowledge can be integrated into VA to support effective decision-making in industrial manufacturing environments.

While VA approaches integrate industrial domain knowledge through user interaction, previous research has also directly integrated explicit knowledge into industrial analytics. In particular, knowledge graphs have become established in recent years as a central method for externalizing domain expertise in a structured, machine-readable format. In a recent review, Xiao et al. [20] discussed the role of process knowledge graphs in manufacturing and their significance for transforming process knowledge and the knowledge of domain experts and engineers into formalized graph structures that enable, for example, computer-aided process planning. The authors emphasized that such graphs allow for the storage and reuse of knowledge and can also make implicit relationships between processes visible, which supports scalable knowledge externalization in industrial scenarios. Furthermore, Buchgeher et al. [21] systematically investigated the application of knowledge graphs in the production and manufacturing domain. They showed that knowledge fusion and semantic integration are common use cases; more broadly, the focus is often on externalizing heterogeneous domain knowledge into unified, structured representations. Although numerous technical contributions already exist, the study indicated that empirical validation and industrial implementation have so far been limited. This suggests that knowledge externalization still faces practical challenges, particularly concerning usability and adoption in industrial applications.

The central role of knowledge in VA has been highlighted in many theoretical works [22]. Models of the VA process, such as the knowledge generation model by Sacha et al. [23], typically treat knowledge as both the input and the final output of interactive visual analysis. These process models anchor knowledge solely in the human space, describing it as tacit knowledge that is only available to humans through their cognitive processes. Building on earlier calls to make knowledge directly available for VA [24,25], Federico et al. [11] introduced the conceptual model of KAVA, which additionally considers explicit knowledge that resides in the machine space and is available to visualization and analytical methods. Some works have dealt with explicit knowledge in the context of their VA topic. Battle and Ottley [26,27] reviewed 41 papers to categorize various definitions of “insight”, a term often used for the output of VA. They observed that “incorporating user domain knowledge appears to be a consistent challenge within visualization research”, discussed how insights can be externalized in VA approaches, and concluded that knowledge links between findings, data, and domain knowledge, such as a knowledge graph, are the most promising conceptualization. Li et al. [28] interviewed 19 knowledge graph practitioners regarding the visualization aspects of their current work and derived VA challenges and opportunities for knowledge graphs. Ceneda et al. [29] analyzed 53 VA approaches with respect to how they provide guidance to users, e.g., by recommending potentially interesting parts of large scatterplot matrices or networks. The use of explicit knowledge for guidance is conceptualized in an extension of the KAVA model [9]. Explicit knowledge can also be externalized while building ML models using VA approaches such as visual interactive labeling [30]. In this regard, a survey of 52 approaches [31] compared the importance of different knowledge types but did not discuss how knowledge would be reintegrated as explicit knowledge. Among these works, only the 2017 survey by Federico et al. [11] directly covers KAVA approaches. It reviewed 32 KAVA approaches with respect to their support for the knowledge process and type and the origin of knowledge. Our work provides a follow-up review with a thematic focus on industrial manufacturing.

3. Scope and Methodology

Our survey focused on KAVA in industrial manufacturing, covering research published from 2014 to 2024. We followed the standardized search and reporting guidelines of the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) [14,15] to ensure a transparent and structured approach (Figure 3). We first conducted an exploratory pre-selection phase to refine the search criteria and scope. Based on this, we developed a search string and adapted it to the syntax and indexing of each selected database to download the studies. Each stage of the screening process (title screening, abstract reading, and full-paper review) was conducted independently by at least two reviewers. Any disagreements were resolved by consulting a third reviewer, who served as a tie-breaker. Two reviewers categorized the papers, discussed their results, and agreed on the final categorization.

3.1. Search Strategy

During the ideation phase, a structured keyword search was performed across six key academic databases: ACM Digital Library, IEEE Xplore, SpringerLink, Google Scholar, SAGE, and Eurographics. First, we collected keywords matching our research questions and criteria, such as “Data Visualization”, “Industry”, “Knowledge”, “Smart Factory”, “Smart Manufacturing”, and “Visual Analytics”, along with their variants and synonyms. After agreeing on the keywords, we carried out initial experiments with variants of the search terms, as described in the following subsection.

3.1.1. Search Term Pre-Selection Phase

Initially, we performed experimental searches on the databases to estimate the expected number of results, restricted to papers published between 2014 and 2024. The search process used Boolean operators (AND, OR, NOT) to narrow the results and wildcards (e.g., “Visuali?ation”) to capture spelling variations. We aimed for a query that would yield 1000–1300 results on Google Scholar, a range we considered to balance the need for a comprehensive pool of sources with a manageable scope.
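To illustrate the wildcard semantics we intended, the following minimal Python sketch translates a search term containing `?` and `*` into a regular expression. It assumes that `?` stands for exactly one character and `*` for any word continuation; each database implements wildcards in its own way, so this is not a model of any particular search engine, and the function name `wildcard_to_regex` is hypothetical.

```python
import re


def wildcard_to_regex(term: str) -> "re.Pattern[str]":
    """Translate a search term with '?' and '*' wildcards into a regex.

    Assumed semantics (illustrative only): '?' matches exactly one word
    character, '*' matches zero or more word characters, and the term must
    match whole words, ignoring case.
    """
    parts = []
    for ch in term:
        if ch == "?":
            parts.append(r"\w")          # exactly one character, e.g. 'z' or 's'
        elif ch == "*":
            parts.append(r"\w*")         # truncation: any word continuation
        else:
            parts.append(re.escape(ch))  # everything else is matched literally
    return re.compile(r"\b" + "".join(parts) + r"\b", re.IGNORECASE)


if __name__ == "__main__":
    spelling = wildcard_to_regex("Visuali?ation")
    for text in ("Data Visualization", "Data Visualisation", "Visualiation"):
        print(f"{text!r}: {bool(spelling.search(text))}")
```

Under these assumptions, “Visuali?ation” covers both the American and British spellings but not a misspelling that drops the variable letter.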

The chosen search string from the pre-selection phase was as follows:

| (“Data Visualization” OR “Data Visualisation”) AND “Dashboard” AND (“Visual Analytics” OR “Visual Analysis”) AND Knowledge AND (Smart Manufacturing OR Smart Factory OR Smart Production OR Industry*) |

We manually screened the titles of the retrieved resources to identify domains that fall outside our scope of industrial manufacturing and should therefore be explicitly excluded (e.g., urban planning and smart cities, education, business, healthcare, and energy sectors). Based on these insights, we defined domains outside industrial settings as an exclusion criterion and adapted our final search strategy accordingly.

3.1.2. Finalized Search Strategy and Screening Criteria

After the pre-selection phase, we decided on the following search string, which was further adjusted to match the syntax of each specific database:

| (“Data Visualization” OR “Data Visualisation”) AND “Dashboard” AND (“Visual Analytics” OR “Visual Analysis”) AND Knowledge AND (Smart Manufacturing OR Smart Factory OR Smart Production OR Industry) -cit -busin -energ -health -educ -student |
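The finalized query can be read as a conjunction of five concept groups combined with a set of excluded stems. The sketch below evaluates that reading against a single title or abstract. It is illustrative only and rests on two assumptions of ours: unquoted multi-word terms such as Smart Manufacturing are treated as phrases, and each minus-prefixed stem (e.g., `-busin`) removes any record containing a word that starts with that stem. Actual database engines may interpret both differently, and all names in the code are hypothetical.

```python
import re

# Stems from the "-cit -busin ..." part of the query; prefix semantics are assumed.
EXCLUDED_STEMS = ("cit", "busin", "energ", "health", "educ", "student")

# Each inner tuple is an OR-group; all groups are combined with AND.
REQUIRED_GROUPS = (
    ("Data Visualization", "Data Visualisation"),
    ("Dashboard",),
    ("Visual Analytics", "Visual Analysis"),
    ("Knowledge",),
    ("Smart Manufacturing", "Smart Factory", "Smart Production", "Industry"),
)


def contains_phrase(text: str, phrase: str) -> bool:
    """Case-insensitive whole-phrase match."""
    pattern = r"\b" + re.escape(phrase) + r"\b"
    return re.search(pattern, text, re.IGNORECASE) is not None


def is_excluded(text: str, stems=EXCLUDED_STEMS) -> bool:
    """True if any word in the text starts with an excluded stem."""
    words = re.findall(r"\w+", text.lower())
    return any(word.startswith(stem) for word in words for stem in stems)


def matches_query(text: str) -> bool:
    """AND over the OR-groups, then apply the NOT-style exclusions."""
    all_groups_hit = all(
        any(contains_phrase(text, term) for term in group)
        for group in REQUIRED_GROUPS
    )
    return all_groups_hit and not is_excluded(text)
```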

Applying this search strategy to the databases retrieved a total of 1352 papers. To ensure quality, we considered only peer-reviewed venues. Established publishers such as Springer, ACM, and IEEE enforce peer review as a publication standard; for other outlets, we verified the peer-review status by consulting the venue’s editorial information or preface.

After removing duplicate entries, 1318 papers remained for the screening procedure, which comprised title, abstract, and full-paper reading steps. To ensure the relevance and quality of the included studies, progressively stricter inclusion and exclusion criteria were applied at each screening stage, as detailed in Table 1. While multiple authors contributed to the overall screening process, each stage was carried out independently by two authors who reviewed the same set of papers. If their decisions did not align, a third reviewer acted as a tie-breaker. To minimize bias and maintain transparency, we adhered to the principles of the PRISMA checklist, which we considered more suitable for our review than a formal bias scoring system.
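The deduplication and dual-review procedure can be summarized in a few lines of Python. This is a simplified sketch, not the tooling we actually used: duplicates are assumed to be detected via normalized titles, reviewers are modeled as hypothetical include/exclude functions, and the third reviewer is consulted only when the first two disagree.

```python
import re
from typing import Callable, List

Decision = Callable[[str], bool]  # hypothetical reviewer: title -> include?


def normalize_title(title: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    no_punct = re.sub(r"[^\w\s]", "", title.lower())
    return " ".join(no_punct.split())


def deduplicate(titles: List[str]) -> List[str]:
    """Keep the first occurrence of each normalized title."""
    seen = set()
    unique = []
    for title in titles:
        key = normalize_title(title)
        if key not in seen:
            seen.add(key)
            unique.append(title)
    return unique


def screen_stage(titles: List[str], reviewer_a: Decision,
                 reviewer_b: Decision, tie_breaker: Decision) -> List[str]:
    """Two independent decisions per paper; a third reviewer resolves conflicts."""
    kept = []
    for title in titles:
        a, b = reviewer_a(title), reviewer_b(title)
        include = a if a == b else tie_breaker(title)
        if include:
            kept.append(title)
    return kept
```

In this reading, `screen_stage` would be applied three times (titles, abstracts, full texts), with progressively stricter reviewer criteria at each stage.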

4. Categorization and Results

After completing the screening process, 13 papers were selected for fine-grained categorization. The analysis was structured around six main entity groups, as illustrated in Figure 2 and Table 2, namely data [D] (as defined by Schreck et al. [3]), knowledge (as in Federico et al. [11]), and visualization [V, S] (according to the Data Visualization Catalogue [32]). In addition, we report visualization techniques that are not covered by the Data Visualization Catalogue but are described in the sources; these are labeled “as reported” in Table 2.

Furthermore, the industrial domain, user group, and machine learning [A] were identified as entity groups during the categorization process.

For ML, we defined supervised, unsupervised, and semi-supervised methods as the main categories.

4.1. Industrial Domain and User Group

KAVA systems can only succeed if they match the needs of their intended users. This requires understanding who the users are, what expertise they have, and which roles they play in analysis and decision-making. Identifying these groups also means considering the industrial domains in which they work, since domain processes and data environments strongly shape system requirements. The following paragraphs therefore examine the user groups addressed in the reviewed publications and the industrial domains in which they operate.

Understanding the intended user groups of KAVA systems is essential for evaluating how well these systems support real-world manufacturing workflows. Across the 13 reviewed publications, operators and managers are the most frequently addressed groups, each appearing in 6 of the 13 works (see Table 3).

Operators typically execute shop-floor tasks and interact with monitoring, control, or annotation interfaces, with systems emphasizing usability, real-time feedback, and contextual visualizations [33,34,35].

Managers focus on high-level oversight, decision-making, and performance monitoring, where visualizations aggregate data and highlight KPIs or process summaries [36,37].

Process engineers are identified in three papers. They are involved in designing, optimizing, and troubleshooting production processes. The systems for this group often support detailed process modeling and knowledge integration [35,38,39].

Machine engineers are also mentioned in three works; their responsibilities include configuring and maintaining equipment. The visual tools for this group often focus on sensor data, machine states, and fault diagnosis [35,39,40].

Domain experts appear in three papers. These users contribute specialized knowledge, often in the form of annotations, rules, or ontologies. Their input is crucial for knowledge modeling and system interpretability [41,42,43].

Analysts are also present in three papers. They are typically data scientists or engineers responsible for performing data-driven analyses. The systems supporting analysts often include advanced filtering, modeling, and visualization capabilities [38,39,44].

While user groups define the roles and responsibilities that KAVA systems must support, their needs cannot be fully understood without considering the industrial domain in which they operate. The reviewed publications address four recurring domains, summarized in Table 4: the manufacturing industry (discrete production of items that can be counted, touched, and seen individually), the process industry (the production of bulk materials through chemical, physical, or biological processes), heavy industry and machinery, and the automotive sector. The following paragraphs summarize how these domains are represented in the reviewed publications.

The manufacturing industry and process industry are the most frequently represented domains, each appearing in 5 of the 13 reviewed works (see Table 4). Works in the manufacturing industry typically focus on discrete production environments, such as assembly lines, component fabrication, and smart factory setups. Examples include ontology-based systems for monitoring and planning [34,39]. The process industry, on the other hand, includes continuous production environments such as the cement, chemical, or energy sectors. These systems often emphasize sensor integration, process modeling, and fault detection [35,37,41].

The automotive sector is represented in two papers [36,38], with a focus on vehicle manufacturing and battery module assembly. These works emphasize multi-stakeholder collaboration and knowledge contextualization.

Heavy industry is addressed in one paper [40], focusing on large-scale equipment and infrastructure.

4.2. Data

Data drive the analytical processes of KAVA systems. We adopt the data-type taxonomy defined by Schreck et al. [3], which distinguishes between tabular, time-dependent, spatial, textual, graph/network, and multimedia data (e.g., image, audio, and video). Based on this classification, we categorize the 13 reviewed publications.

The analysis reveals that tabular, time-dependent, spatial, and textual data dominate the literature (see Table 5).

Tabular data underpin most approaches, supporting performance diagnostics and anomaly detection in sensor logs and production metrics [36,39].

Time-dependent data are used for temporal analyses, such as anomaly detection in multivariate crane time series and trend analysis of production quality [37,40].

Spatial data inform contextualized visualizations via 3D factory models and shop-floor layouts [34,38].

Textual data enable the extraction of domain knowledge from manuals and support thesaurus-guided annotation [35,45].

Graph and network data are used in only three papers, for visualizing process dependencies and modeling relationships between annotated cases [36,44].

Multimedia data appear in two publications, supporting the CAD-based annotation of battery modules and metadata-driven visualizations based on image inputs [38,43].

After identifying the data types in the reviewed publications, we now consider how these data are represented visually.

4.3. Visualization

Visualization techniques are a core component of KAVA systems, serving as the interface between data, knowledge, and user interpretation. To understand how visual representations are employed in industrial manufacturing contexts, we categorized the reviewed publications based on the types of visualizations that they use. These were grouped into six main subcategories, namely graphs/plots, tables, diagrams, maps, others, and as reported (in source); the last captures visualization types not listed in standard catalogs but explicitly mentioned in the papers. The order of the subcategories in Table 6 reflects their frequency of occurrence across the reviewed works.
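Ordering categories by how many papers use them amounts to counting each category at most once per paper and sorting by that count. The sketch below illustrates this with hypothetical paper identifiers and toy assignments, not the actual data behind Table 6; breaking ties alphabetically is our own assumption.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def rank_by_paper_frequency(
    assignments: Dict[str, Iterable[str]]
) -> List[Tuple[str, int]]:
    """Count in how many papers each category occurs and sort descending."""
    counts: Counter = Counter()
    for categories in assignments.values():
        counts.update(set(categories))  # a paper counts once per category
    # Highest count first; alphabetical order breaks ties.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    toy = {  # hypothetical toy data, not the reviewed corpus
        "paper_a": ["graphs/plots", "diagrams", "graphs/plots"],
        "paper_b": ["graphs/plots", "tables"],
        "paper_c": ["diagrams", "maps"],
    }
    print(rank_by_paper_frequency(toy))
```

Counting per paper rather than per occurrence keeps a single paper with many line graphs from inflating that category.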

Across the reviewed literature, line graphs emerged as the most frequently used chart type, appearing in 7 of the 13 papers. For instance, Alm et al. [34] and Kaupp et al. [39] used line graphs to display sensor trends and performance metrics over time. Bar charts were the second most common chart type, featured in six papers; for example, Xu et al. [36] and Behringer et al. [42] (see Figure 4) used them to compare categorical values or summarize aggregated data.

Scatterplots were employed in five studies [33,40,43,44,45] to explore variable relationships and detect anomalies.

Other common visualization types included histograms, radial bar charts, box-and-whisker plots, and radar charts, each appearing in two publications [36,38,40,41,42]. These supported tasks such as distribution analysis (e.g., Eirich et al. [38]) and multivariate comparisons (e.g., Zhang et al. [41]). Less commonly used were stacked bar charts, area graphs, stream graphs, stacked area graphs, and parallel coordinate plots, each found in only one paper [33,34,40].

Table-based visualizations also played a role in supporting temporal and density-based analyses. Calendars were used in two papers [33,36], and heat maps appeared in one paper [33]. For example, Wu et al. [33] used calendar views to visualize maintenance schedules and heat maps to indicate when faults occur.

In the diagram category, network diagrams appeared in seven papers, which are listed in detail in Table 6. They were used to represent relationships between entities; for example, [35,36] used them to visualize process flows or semantic structures. Some of these diagrams could also be considered flowcharts, which depict system logic or task sequences, as in [36,39,42,44].

Among the map visualizations, connection maps were used in one paper [38] to illustrate the spatial layouts of manufacturing stations.

In the other category, donut charts appeared in three papers [33,38,41], often used to represent categorical distributions or knowledge maturity levels. Pie charts were used in two papers [33,43], and circle packing was mentioned once [33] to visualize hierarchical data compactly. One paper used a Nightingale rose chart to show stall distribution [40].

The “as reported” category includes domain-specific or custom visualizations not listed in the catalogs. Three-dimensional models were used in three papers [34,36,41] to support spatial reasoning and digital twin representations. Two-dimensional product models were used in two papers [38,43]. Further custom visualizations, such as radial heat maps, icicle plots, and Marey’s graphs, were each found in only one publication [36,37,38].

Visualization alone does not suffice for complex analysis in industrial manufacturing. KAVA builds on visual exploration by embedding explicit domain knowledge such as rules, ontologies, and structured annotations directly into the analytic workflow. This allows visual patterns to be interpreted within their industrial context and transformed into actionable insights. The following section investigates how explicit knowledge is modeled and applied in KAVA systems.

4.4. Knowledge

Explicit knowledge is understood as formalized and structured domain knowledge that can be shared, reused, and reasoned with [11]. It plays a central role in embedding domain expertise and guiding analytic processes. To examine how explicit knowledge is integrated into VA for industrial manufacturing, we adopt the categorization framework proposed by Federico et al. [11], which conceptualizes knowledge as flowing through different transformation pathways, called KAVA processes. An overview of how these pathways, knowledge types, origins, and storage mechanisms are represented across the reviewed systems is provided in Table 7.

These pathways describe how knowledge is created, transformed, and applied in KAVA systems by linking tacit knowledge (K^τ), explicit knowledge (K^ε), data (D), analysis (A), and visualization (V). They aim to show the dynamic interplay between human and machine in knowledge-assisted analytics. Following Federico et al. [11], we distinguish six pathways in the surveyed literature; the “Simulation” pathway is omitted because none of the reviewed papers reported it.

4.4.1. Data Analysis

Explicit knowledge is automatically obtained from data through analytic methods such as clustering, anomaly detection, or classification. For instance, Xu et al. [36] apply outlier detection algorithms (an unsupervised greedy algorithm and semi-supervised k-nearest neighbor graphs), while Liu et al. [40] use unsupervised ML models such as Isolation Forest (iForest), k-nearest neighbors (KNN), Local Outlier Factor (LOF), and One-Class Support Vector Machine (OCSVM) to flag anomalies. Similarly, Wu et al. [33] use Pearson correlation coefficients for feature extraction and Gaussian Mixture Models for real-time equipment condition monitoring. Kaupp et al. [39,44] combine multiple outlier detection algorithms, such as ARIMA models or autoencoders.
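To make the idea of composing several detectors concrete, the following sketch combines two deliberately simple stand-in detectors, a z-score rule and a k-nearest-neighbor distance rule, by voting. It is only an illustration of the composition principle under our own assumptions (detectors, thresholds, voting rule, and toy readings); it does not reproduce ARIMA, autoencoders, LOF, or the implementations of the cited systems.

```python
import math
from typing import Callable, List, Sequence

# A detector maps a univariate series to one anomaly flag per reading.
Detector = Callable[[Sequence[float]], List[bool]]


def zscore_detector(threshold: float = 2.0) -> Detector:
    """Flag readings whose absolute z-score exceeds the threshold."""
    def detect(values: Sequence[float]) -> List[bool]:
        n = len(values)
        mean = sum(values) / n
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
        if std == 0:
            return [False] * n  # constant series: nothing stands out
        return [abs(v - mean) / std > threshold for v in values]
    return detect


def knn_distance_detector(k: int = 2, threshold: float = 1.0) -> Detector:
    """Flag readings whose distance to their k-th nearest neighbor is large."""
    def detect(values: Sequence[float]) -> List[bool]:
        flags = []
        for i, v in enumerate(values):
            dists = sorted(abs(v - w) for j, w in enumerate(values) if j != i)
            flags.append(dists[k - 1] > threshold)
        return flags
    return detect


def compose(detectors: List[Detector], min_votes: int) -> Detector:
    """A reading is anomalous if at least `min_votes` detectors flag it."""
    def detect(values: Sequence[float]) -> List[bool]:
        votes = [d(values) for d in detectors]
        return [sum(v[i] for v in votes) >= min_votes for i in range(len(values))]
    return detect


if __name__ == "__main__":
    readings = [10.0, 10.2, 9.9, 10.1, 10.0, 15.0, 9.8, 10.3]  # toy sensor values
    ensemble = compose([zscore_detector(), knn_distance_detector()], min_votes=2)
    print([i for i, flag in enumerate(ensemble(readings)) if flag])
```

Requiring agreement (`min_votes=2`) trades sensitivity for fewer false alarms; a lower vote threshold would instead surface anything a single detector finds.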

4.4.2. Knowledge Visualization

Explicit knowledge is directly visualized, enabling users to internalize structured knowledge. Xu et al. [36] represent knowledge with Marey’s graphs that are updated in real time via brushing. Node–link diagrams are used to display semantic networks [35], production halls [38], and entity graphs [45]. Behringer et al. [42] display numeric data quality scores as color-coded bars and pre-defined rules as labels within their dashboard (see Figure 4). Kaupp et al. [39,44] display human-annotated abnormal cases with their contexts and rules as glyphs on their dashboard; clicking a glyph reveals more details. They also introduce an interactive user interface for creating such human-annotated abnormal cases. The simplest way to visualize knowledge is to display thresholds, as in Cruz et al. [37]. Other papers, such as Liu et al. [40], do not explicitly describe how explicit human knowledge is visualized; in their work, however, the knowledge changes the specification of the visualization, resulting in different visualizations. Berges et al. [43] embed expert knowledge into visualizations by generating customized views of each machine that display only the sensors actually installed on it, as defined in the ontology. User-defined constraints (e.g., time ranges, limits, and aggregations) are then reflected in dynamically adapted query forms and tailored charts. Zhang et al. [41] visualize explicit human knowledge by displaying expert-defined rules and thresholds in 3D digital twin views, using color codes and progress bars.
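Displaying expert-defined thresholds as color codes or bars, as several of the systems above do, essentially maps each value onto a band defined by explicit knowledge. The sketch below is hypothetical: the `ThresholdRule` class, the two-threshold traffic-light scheme, the signal name, and the text bar are our illustrative choices, not the visual encoding of any reviewed system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ThresholdRule:
    """Hypothetical expert rule: warn and alarm limits for one signal."""
    signal: str
    warn: float
    alarm: float

    def band(self, value: float) -> str:
        """Map a value to a color band (higher values are assumed worse)."""
        if value >= self.alarm:
            return "red"
        if value >= self.warn:
            return "amber"
        return "green"


def text_bar(value: float, maximum: float, width: int = 20) -> str:
    """Render a progress-style bar, clipping the fill ratio to [0, 1]."""
    filled = round(width * min(max(value / maximum, 0.0), 1.0))
    return "#" * filled + "-" * (width - filled)


if __name__ == "__main__":
    rule = ThresholdRule(signal="spindle_temp_C", warn=70.0, alarm=85.0)
    for reading in (62.0, 74.5, 90.0):
        print(f"{rule.signal}={reading:5.1f} [{text_bar(reading, 100.0)}] {rule.band(reading)}")
```

Keeping the rule as a separate object, rather than hard-coding limits into the chart, is what allows the same explicit knowledge to drive both alerts and visual encodings.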

4.4.3. Direct Externalization

Tacit knowledge is made explicit through direct user input. A frequent approach is annotation and labeling. For example, Wu et al. [33] and Xu et al. [36] allow users to highlight or label data samples via brushing, thereby explicitly marking normal or abnormal cases. Alm et al. [34] describe annotating documents, photos, and structured metadata, while Eirich et al. [38] support annotating CAD images by drawing rectangles in their interface. Another approach is rule and threshold specification. Cruz et al. [37] and Behringer et al. [42] report explicitly setting thresholds for alerts. Liu et al. [40] allow experts to edit rule files and manually exclude false anomalies. Berges et al. [43] employ ontology-driven query forms in which users select sensors and constraints (e.g., time ranges, limits, and aggregations) that are directly embedded into the visualization output. More advanced systems support interactive decision adjustments. Zhang et al. [41] provide a 3D digital twin interface in which experts define targets and thresholds and can override algorithm outputs. These manual adjustments and expert opinions are recorded for later reuse, although the paper does not detail the integration mechanism. Similarly, Kaupp et al. [39,44] allow the creation of Context-Infused Cases (CICs), in which anomalies are annotated with structured rules and contextual descriptions that can be reapplied in subsequent analyses. Finally, some approaches rely on the manual entry of domain knowledge, as in Han et al. [35], where domain experts can adjust a knowledge graph. However, this adjustment is not made in the UI but in the database before the knowledge graph is constructed.
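To make reusable externalized knowledge more concrete, the following Python sketch shows one possible way to store an expert annotation together with a structured, declarative rule so that it can be serialized and reapplied to new data. It is loosely inspired by the Context-Infused Cases described above, but the names `Condition`, `AnnotatedCase`, and `reapply`, the field layout, and the operator set are hypothetical and do not reproduce the schema of any reviewed system.

```python
import json
import operator
from dataclasses import asdict, dataclass, field
from typing import Dict, List

# Comparison operators allowed in a stored rule (an illustrative assumption).
OPERATORS = {">": operator.gt, ">=": operator.ge, "<": operator.lt,
             "<=": operator.le, "==": operator.eq}


@dataclass(frozen=True)
class Condition:
    """One declarative condition, e.g. temperature > 80.0."""
    feature: str
    op: str
    value: float

    def holds(self, sample: Dict[str, float]) -> bool:
        return OPERATORS[self.op](sample[self.feature], self.value)


@dataclass
class AnnotatedCase:
    """Hypothetical record of an expert annotation plus its reusable rule."""
    label: str
    context: str
    conditions: List[Condition] = field(default_factory=list)
    author: str = "unknown"

    def matches(self, sample: Dict[str, float]) -> bool:
        # A case fires only if it has conditions and all of them hold.
        return bool(self.conditions) and all(c.holds(sample) for c in self.conditions)


def reapply(cases: List[AnnotatedCase], sample: Dict[str, float]) -> List[str]:
    """Return the labels of all stored cases whose rule fires on a new sample."""
    return [case.label for case in cases if case.matches(sample)]


overheating = AnnotatedCase(
    label="abnormal: overheating at idle",
    context="Spindle temperature rises although the spindle is not turning.",
    conditions=[Condition("temperature", ">", 80.0),
                Condition("spindle_speed", "==", 0.0)],
    author="operator_1",
)

# Because the rule is stored as data, the whole case serializes to JSON.
stored = json.dumps(asdict(overheating))
print(reapply([overheating], {"temperature": 85.0, "spindle_speed": 0.0}))
```

Storing conditions as data rather than as code (for example, as lambdas) is a design choice that keeps cases serializable and inspectable; a real system would additionally need provenance information and validation of the stored rules.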

4.4.4. Interaction Mining

Tacit knowledge is inferred when the system goes beyond applying explicit user inputs and instead derives more general rules or models from interaction patterns. For example, in Wu et al. [33], analysts brush over process data to mark normal samples. These brushed labels are not only stored as annotations but also used by the system to infer normal and abnormal ranges through correlation analysis and Gaussian Mixture Models, thereby incorporating expert input into predictive models. Similarly, Xu et al. [36] allow users to brush over time-series anomalies, and the system interprets these selections to update its outlier detection results in real time. In Kaupp et al. [39,44], users manually mark unusual patterns on performance dashboards. Beyond simply storing these annotations, the system formalizes them into structured anomaly cases and trains predictive models of machine behavior. Behringer et al. [42] also employ a form of interaction mining in their data quality monitoring framework: when domain experts repeatedly adjust thresholds for quality dimensions such as completeness or accuracy, the system learns from these adjustments and automatically updates its domain-specific rules.
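The brushing-based inference described for Wu et al. [33] can be illustrated with a deliberately simplified Python sketch: it fits a single Gaussian to the values an analyst brushed as normal and derives a normal range of mean ± k standard deviations. This replaces the Gaussian Mixture Models and correlation analysis of the original work with a one-component model, and the choice k = 3 is an assumption.

```python
import statistics
from typing import List, Sequence, Tuple


def infer_normal_range(brushed: Sequence[float], k: float = 3.0) -> Tuple[float, float]:
    """Fit one Gaussian to the samples an analyst brushed as normal and
    return the interval mean +/- k * (sample) standard deviation."""
    if len(brushed) < 2:
        raise ValueError("at least two brushed samples are needed")
    mu = statistics.fmean(brushed)
    sigma = statistics.stdev(brushed)
    return mu - k * sigma, mu + k * sigma


def flag_outside(values: Sequence[float], normal_range: Tuple[float, float]) -> List[int]:
    """Return the indices of values that fall outside the inferred normal range."""
    low, high = normal_range
    return [i for i, v in enumerate(values) if not low <= v <= high]


# Values the analyst brushed as normal (illustrative numbers).
brushed_normal = [10.0, 10.2, 9.8, 10.1, 9.9]
normal_range = infer_normal_range(brushed_normal)  # approx. (9.53, 10.47)
print(flag_outside([10.0, 11.5, 9.0, 10.3], normal_range))  # [1, 2]
```

Each new brush would simply re-run the fit, which is what lets expert input feed back into detection; a mixture model becomes necessary when normal behavior has several modes, for example, different operating states.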

4.4.5. Intelligent Data Analysis

Existing explicit knowledge is reused to improve automatic analysis methods. For example, Ameri et al. [45] employ a thesaurus-driven entity extractor in which domain-specific vocabularies guide the identification of manufacturing capabilities in unstructured data. Kaupp et al. [39,44] reuse previously defined Context-Infused Cases (CICs) to retrain their anomaly detection algorithms to achieve more accurate and context-aware fault identification. Similarly, Liu et al. [40] combine anomaly detection results from unsupervised learning models with expert-defined decision-tree rules.
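A minimal Python sketch can illustrate how automatic detection and expert rules might be combined in the spirit of Liu et al. [40]. As assumptions, a simple z-score detector stands in for their unsupervised models, and expert knowledge is expressed as flat predicate rules rather than a decision tree; the rules and data are hypothetical.

```python
import statistics
from typing import Callable, Dict, List

Sample = Dict[str, float]
Rule = Callable[[Sample], bool]


def zscore_flags(samples: List[Sample], feature: str, threshold: float = 2.0) -> List[bool]:
    """Stand-in unsupervised detector: flag samples whose z-score exceeds the threshold."""
    values = [s[feature] for s in samples]
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    if sd == 0:
        return [False] * len(values)
    return [abs(v - mean) / sd > threshold for v in values]


def combine(samples: List[Sample], model_flags: List[bool],
            confirm_rules: List[Rule], exclude_rules: List[Rule]) -> List[bool]:
    """Merge model output with expert rules; exclusion rules take precedence."""
    decisions = []
    for sample, flagged in zip(samples, model_flags):
        if any(rule(sample) for rule in exclude_rules):
            decisions.append(False)    # expert marked this situation as benign
        elif any(rule(sample) for rule in confirm_rules):
            decisions.append(True)     # expert rule flags it regardless of the model
        else:
            decisions.append(flagged)  # otherwise keep the detector's decision
    return decisions


# Nine steady samples and one pressure spike during a start-up phase (mode 1).
samples = [{"pressure": 5.0, "mode": 0.0} for _ in range(9)]
samples.append({"pressure": 9.0, "mode": 1.0})

flags = zscore_flags(samples, "pressure")      # only the spike is flagged
is_startup: Rule = lambda s: s["mode"] == 1.0  # hypothetical exclusion rule
print(combine(samples, flags, confirm_rules=[], exclude_rules=[is_startup]))
```

Letting exclusion rules override the model mirrors the manual exclusion of false anomalies mentioned earlier, but the precedence order is itself a design assumption.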

4.4.6. Guidance

Explicit knowledge is reused by the system to suggest or adapt visualization specifications. For example, Eirich et al. [38] encode ontological models of production processes, which the system uses to suggest suitable visualization types and to provide dashboards tailored to different user groups. Similarly, Zhang et al. [41] integrate expert-defined rules and sensor-specific thresholds into their digital twin dashboards so that color-coded decision panels, progress bars, and alerts dynamically adapt based on these encoded constraints.
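Knowledge-based guidance of this kind can be sketched in Python as a lookup from ontology concepts to candidate chart types, filtered by machine and user group. The mini-ontology, the concept-to-chart table, and the per-role limits below are hypothetical stand-ins for the ontological models described by Eirich et al. [38]; a real system would query an ontology rather than a dictionary.

```python
from typing import Dict, List

# Hypothetical stand-in for an ontology: each sensor is typed by a concept
# and linked to the machine on which it is installed.
ONTOLOGY: Dict[str, Dict[str, str]] = {
    "spindle_temperature": {"concept": "TimeSeriesMeasurement", "machine": "M1"},
    "tool_id": {"concept": "CategoricalAttribute", "machine": "M1"},
    "station_position": {"concept": "SpatialLocation", "machine": "M1"},
    "coolant_flow": {"concept": "TimeSeriesMeasurement", "machine": "M2"},
}

# Assumed mapping from ontology concepts to ranked candidate chart types.
CHART_RULES: Dict[str, List[str]] = {
    "TimeSeriesMeasurement": ["line chart", "horizon graph"],
    "CategoricalAttribute": ["bar chart"],
    "SpatialLocation": ["floor-plan view"],
}

# Assumed number of alternatives shown to each user group.
ROLE_LIMITS: Dict[str, int] = {"operator": 1, "analyst": 3}


def suggest_views(machine: str, role: str) -> Dict[str, List[str]]:
    """Suggest chart types for every sensor the ontology places on the given machine."""
    limit = ROLE_LIMITS.get(role, 1)
    return {
        sensor: CHART_RULES.get(meta["concept"], ["table"])[:limit]
        for sensor, meta in ONTOLOGY.items()
        if meta["machine"] == machine
    }


print(suggest_views("M1", "operator"))
```

Keeping the concept-to-chart table as data means that domain experts could revise the guidance without touching visualization code.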

Beyond these transformation processes, the reviewed works also reflect differences in the types of knowledge addressed. Operational knowledge refers to how users interact with the system, such as brushing, filtering, or dashboard configuration [36]. Domain declarative knowledge encompasses facts, vocabularies, and semantic structures, often represented through ontologies or thesauri [34,35]. Domain procedural knowledge captures rules, workflows, or decision logic, such as maintenance schedules or quality thresholds [33,37].

Explicit knowledge also enters KAVA systems from different origins. Pre-design knowledge is formalized before system development, for instance, via expert interviews or domain standards [34]. Design-stage knowledge is embedded by developers, for example, through predefined rules or ontology schemas [38]. Post-design knowledge is generated dynamically during system use. Sources include automated analysis [40]; single-user contributions, such as the manual specification of error cases and diagnostic rules [44]; and multi-user collaboration, which produces shared knowledge bases [35].

Finally, storage mechanisms determine how explicit knowledge persists and is reused. Ontologies, RDF knowledge graphs [35,40], and relational databases [39] are the most common storage mechanisms. Some systems also store structured diagnostic cases and rule sets for future sessions [44]. Others use cloud infrastructures [41] or modular repositories that allow multiple users to contribute knowledge collaboratively and to expand and refine the stored content over time [42].

While explicit knowledge forms the conceptual backbone of KAVA systems, enabling users to contextualize, interpret, and structure information, many reviewed works further extend these capabilities with data-driven methods. In particular, data mining and machine learning (ML) techniques are central to automating the extraction of knowledge from large-scale industrial data. Since ML plays a recurring and critical role across several transformation pathways, we next provide a dedicated analysis of its use.

4.5. Machine Learning

The integration of data mining and machine learning (ML) techniques supports tasks such as anomaly detection [36], classification [45], clustering [40], and optimization [41], often in combination with visual interfaces that enhance interpretability and user control. To understand how ML is applied in the reviewed literature, we categorized the papers by the type of learning approach used. Table 8 presents this categorization.

Unsupervised learning is the most frequently used approach, appearing in 6 of the 13 papers (e.g., [39,40,44,45]). Semi-supervised learning follows with two mentions [33,36], while supervised learning is used in one paper [44]. Zhang et al. [41] describe a hybrid intelligent optimization system that combines evolutionary algorithms, reinforcement learning, and human-in-the-loop decision support. Unlike conventional supervised or unsupervised learning approaches, this system relies on both data-driven models and expert knowledge. It also allows users to interact with the system and provide feedback that helps them make better decisions in complex, changing environments.

Notably, nearly half of the reviewed papers (6 of 13) either did not specify their ML approach or did not incorporate ML techniques at all.

5. Discussion

Having surveyed the existing literature on KAVA in industrial manufacturing, we now discuss and synthesize our findings, highlighting recurring patterns in how the reviewed papers model and visualize knowledge. We also examine methodological challenges and open questions across our six core building blocks: user group, industrial domain, visualization, knowledge, data, and machine learning.

5.1. Main Findings

5.1.1. Wide Variations in User Group and Industrial Domain

Across the 13 surveyed papers, most approaches serve two primary audiences: operators, who require shop-floor visualizations for monitoring and contextual views to react quickly to alerts, and managers, who require high-level summaries of key performance indicators (KPIs). However, these roles are rarely specified in detail. Other stakeholders, such as process engineers, machine engineers, data analysts, and broadly defined domain experts, work with separate purpose-built interfaces (e.g., workflow editors, ontology management tools, or custom filtering modules) rather than the shared dashboards used by operators and managers. Most systems are situated in the manufacturing and process industries, with fewer examples from the automotive sector or heavy industry.

5.1.2. Heavy Use of Domain-Specific Visualization Techniques

Most reviewed KAVA systems rely on general-purpose visualization types, with line graphs, bar charts, and scatterplots among the most frequently used. In addition, simple network diagrams and flowcharts show connections between elements or process steps. Several papers employ domain-specific visualizations where standard charts are either inadequate or less commonly used than more specialized formats. For instance, Marey’s graphs excel at visualizing temporal dependencies [36]; other papers introduce radial heat maps [37], icicle plots [38], or custom 2D/3D models of factory floors and products, or specialized diagrams, when spatial context is critical [34,36,38,41,43].

Two key insights emerge: First, the heavy reliance on standard visualization types points to a need for reusable visualization templates that can be parameterized for different manufacturing tasks rather than rebuilding similar views from scratch in each project. Second, although many systems store and apply explicit knowledge (rules, thresholds, ontologies, text documents, etc.), almost none of them include a declarative mapping from those knowledge models to visualization specifications. In practice, many visualization types and thresholds are still hard-coded rather than being automatically suggested or configured based on the underlying domain knowledge represented in the system.
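To illustrate what such a declarative mapping could look like, the Python sketch below turns stored threshold rules into a Vega-Lite-style layered chart specification instead of hard-coding threshold lines into each view. The rule format (`feature`, `value`, optional `color`) and the data source name `sensor_data` are assumptions; only rules that refer to the plotted feature become visual marks.

```python
import json
from typing import Any, Dict, List


def rules_to_spec(feature: str, time_field: str,
                  rules: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Map threshold rules to a layered Vega-Lite-style spec:
    one line layer for the measurement plus one dashed rule mark per threshold."""
    layers: List[Dict[str, Any]] = [{
        "mark": "line",
        "encoding": {
            "x": {"field": time_field, "type": "temporal"},
            "y": {"field": feature, "type": "quantitative"},
        },
    }]
    for rule in rules:
        if rule["feature"] != feature:
            continue  # thresholds on other features do not belong in this chart
        layers.append({
            "mark": {"type": "rule", "color": rule.get("color", "red"),
                     "strokeDash": [4, 4]},
            "encoding": {"y": {"datum": rule["value"]}},
        })
    return {
        "$schema": "https://vega.github.io/schema/vega-lite/v5.json",
        "data": {"name": "sensor_data"},  # hypothetical named data source
        "layer": layers,
    }


knowledge_base = [
    {"feature": "temperature", "value": 80, "color": "orange"},
    {"feature": "temperature", "value": 95},
    {"feature": "pressure", "value": 6.5},
]
print(json.dumps(rules_to_spec("temperature", "timestamp", knowledge_base), indent=2))
```

When an expert edits a threshold in the knowledge base, regenerating the specification updates the chart without changing visualization code, which is the kind of reuse that hard-coded configurations do not offer.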

5.1.3. Challenges in Storing, Visualizing, and Validating Knowledge

Our review shows that explicit knowledge in current KAVA systems falls mainly into two archetypes: (1) declarative knowledge, defined as vocabularies (e.g., taxonomies and ontologies), and (2) procedural knowledge, defined as rules (e.g., thresholds and if–then logic). In practice, most systems define and load a fixed set of terms or rules at design time and then allow individual users to add or adjust labels, thresholds, or configurations and/or to annotate data on a dashboard (e.g., by brushing or drawing rectangles) during a session. Only a few works feed these user edits back into their analytical pipeline for later reuse, for example, by retraining models or updating rules. However, some systems may implement such knowledge persistence without reporting it in the publication. Even where knowledge persists, regardless of whether contributions are distributed evenly or unevenly among users, the question of input quality remains: when multiple users annotate the same data, how can we ensure that their labels are reliable? Possible solutions include computing inter-annotator agreement metrics, applying majority-voting schemes, or assigning confidence scores to validate annotations.
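These validation strategies can be combined in a few lines. The Python sketch below aggregates labels from several annotators by majority vote, reports the share of annotators who agree with the winning label as a simple confidence score, and leaves ties or low-agreement items unresolved so that they can be routed back to experts. The 0.6 agreement threshold is an assumption, not a recommendation from the reviewed works.

```python
from collections import Counter
from typing import Dict, List, Optional, Tuple


def aggregate_labels(annotations: Dict[str, List[str]],
                     min_agreement: float = 0.6) -> Dict[str, Tuple[Optional[str], float]]:
    """Majority vote per item with the winning label's share as confidence.

    Returns item -> (label, agreement); label is None for ties, empty items,
    or agreement below min_agreement, i.e., items that need expert review."""
    result: Dict[str, Tuple[Optional[str], float]] = {}
    for item, labels in annotations.items():
        if not labels:
            result[item] = (None, 0.0)
            continue
        counts = Counter(labels)
        top_label, top_count = counts.most_common(1)[0]
        agreement = top_count / len(labels)
        tied = sum(1 for c in counts.values() if c == top_count) > 1
        result[item] = (None if tied or agreement < min_agreement else top_label,
                        agreement)
    return result


votes = {
    "sample_1": ["normal", "normal", "abnormal"],
    "sample_2": ["abnormal", "normal"],
    "sample_3": ["abnormal", "abnormal", "abnormal"],
}
for item, (label, agreement) in aggregate_labels(votes).items():
    print(item, label, round(agreement, 2))
```

Here, `sample_2` stays unresolved because of the tie, which is exactly the kind of conflicting input that would need an expert decision.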

When it comes to storage, most platforms either keep ontologies and taxonomies in dedicated RDF or graph databases (e.g., the Neo4j graph database, as in Han et al. [35]) or store rule tables and configuration files in relational databases, flat files, or other data stores (e.g., Hadoop or MongoDB, as in Wu et al. [33]). Some systems use cloud-based repositories to store and process industrial data streams, as in Zhang et al. [41], although aspects such as multi-user contributions or collaborative knowledge management are not addressed. Data silos rarely interoperate: ontology updates do not propagate to relational rule tables, and user-generated annotations often vanish at the end of a session. This limitation is also emphasized by Wagner’s KAVA model, which notes that current systems do not distinguish between single- and multi-user settings and provide no clear specification of how explicit knowledge is stored, propagated, or validated, even though misleading knowledge can corrupt a system [47].

Knowledge visualization takes several forms. Thresholds and rule boundaries are most commonly drawn as lines or colored bands on time-series plots; categorical vocabularies appear as labels or color encodings on bar charts; and individual annotations appear as glyphs or icons on scatterplots and network diagrams. A number of systems embed richer structures, such as semantic networks and process flows rendered as node–link diagrams, or expert rules and progress indicators overlaid on 3D digital twins.

5.1.4. Limited Integration of Machine Learning

ML techniques appear in roughly half of the reviewed systems, primarily as unsupervised models (e.g., for anomaly detection) and semi-supervised methods (e.g., for outlier detection), followed by supervised or mixed approaches. However, over half of the studies either omit ML entirely or do not report how the models were integrated with the knowledge and visualization layers. This limited integration is consistent with findings from the broader visual analytics community. Bernard et al.’s [30] visual-interactive labeling (VIAL) process explicitly examines the interplay between active learning and visual-interactive labeling, demonstrating both the potential and the challenges of aligning model-centered ML techniques with user-centered visualization. Their work highlights that, although ML can guide labeling and knowledge generation, its integration with KAVA systems often remains ad hoc, hard-coded, or absent. This further supports our observation that systematic ML integration into KAVA remains an open challenge despite its wider adoption in Industry 4.0 contexts.

5.2. Open Challenges and Future Directions

While notable progress has been made in applying KAVA research to industrial contexts, our comprehensive review of the selected papers and related literature indicates that several challenges remain to be addressed.

5.2.1. Heterogeneity of Explicit Knowledge and Data

In the surveyed approaches, we encountered a variety of explicit knowledge, including basic rule tables and ontologies, as well as standards documents, process and architecture diagrams, machine-generated insights, and various user annotations.

Another challenge is posed by the heterogeneity of data: while tabular and time-dependent data are almost omnipresent, 9 of the 13 approaches incorporate an additional data category, such as spatial, textual, graph, or multimedia data. This corroborates the results of earlier surveys on VA for industry [3,17,19] regarding data heterogeneity due to ongoing digitalization.

Future research should support a broad spectrum of knowledge and data types. A unified storage layer is needed to organize and version resources, propagate updates, and enable “what-if” simulations on updated rules or models.

Standardized representations would improve interoperability across KAVA systems, ease integration with industrial IT infrastructures, and support the reuse and exchange of knowledge across organizations and sectors.
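A unified, versioned storage layer with what-if support could start as small as the following Python sketch, which keeps every committed version of a set of threshold rules, lets analysts compare how many alerts a proposed change would trigger before committing it, and supports rollback. The class `VersionedRuleStore` and its methods are hypothetical; a production system would persist versions in a database and would cover ontologies and models as well as thresholds.

```python
from typing import Dict, List, Sequence

Rules = Dict[str, float]  # feature -> upper alert threshold (assumed rule format)


class VersionedRuleStore:
    """Hypothetical in-memory store that versions threshold rules."""

    def __init__(self, initial: Rules) -> None:
        self._history: List[Rules] = [dict(initial)]

    @property
    def version(self) -> int:
        return len(self._history) - 1

    @property
    def current(self) -> Rules:
        return dict(self._history[-1])

    @staticmethod
    def _count_alerts(rules: Rules, data: Dict[str, Sequence[float]]) -> Dict[str, int]:
        # An alert is raised for every value above the feature's threshold.
        return {f: sum(v > t for v in data.get(f, [])) for f, t in rules.items()}

    def what_if(self, changes: Rules,
                data: Dict[str, Sequence[float]]) -> Dict[str, Dict[str, int]]:
        """Compare alert counts under current and proposed rules without committing."""
        proposed = {**self._history[-1], **changes}
        return {"current": self._count_alerts(self._history[-1], data),
                "proposed": self._count_alerts(proposed, data)}

    def commit(self, changes: Rules) -> int:
        """Store the changed rules as a new version and return its number."""
        self._history.append({**self._history[-1], **changes})
        return self.version

    def rollback(self) -> int:
        """Discard the latest version (the initial version is always kept)."""
        if len(self._history) > 1:
            self._history.pop()
        return self.version


store = VersionedRuleStore({"temperature": 80.0})
history = {"temperature": [70.0, 85.0, 90.0, 78.0]}
print(store.what_if({"temperature": 88.0}, history))
```

Keeping the full history, rather than overwriting rules in place, is what makes both the what-if comparison and the rollback straightforward.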

5.2.2. Higher Level of Automation

A major challenge in KAVA research is achieving a higher level of automation across data processing, knowledge modeling, knowledge externalization, and knowledge generation. In this regard, Alm et al. [34] state that manual formalization is inconvenient and easily neglected by users due to competing priorities. Therefore, a higher level of automation is needed for detecting context as well as for annotating and linking data. Federico et al. [11] propose deriving explicit knowledge from user interactions as a route to automating knowledge externalization. Other authors also identify automation opportunities, such as AI-assisted pipeline generation based on user feedback [39], the use of large language models (LLMs) to extract knowledge and automate the construction of process knowledge graphs (PKGs) [20], or the automatic alignment of entities across different knowledge graphs to fully exploit their capabilities [42].

This presents notable directions for future research: developing adaptive pipelines that combine explicit knowledge with automated inference to keep visualizations and dashboards continuously updated, validating and aligning knowledge models from streaming data and user interactions, and maintaining traceability at scale. Further opportunities include leveraging large language models and domain-specific reasoning tools to extract and formalize knowledge from heterogeneous sources, as well as developing automated methods to assess the quality of user-generated inputs. Beyond knowledge extraction, LLMs hold particular promise for semi-automated knowledge graph generation, which could reduce the manual effort of formalization, improve scalability, and enable cross-domain interoperability of industrial knowledge assets. Advancing these areas could lead to KAVA systems that not only externalize and visualize knowledge but also evolve dynamically with changing industrial contexts.

5.2.3. Effective User Interfaces

While automated data analysis approaches are attractive in terms of time and cost, VA approaches provide opportunities beyond these. In particular, KAVA systems need to address data analysis problems that cannot be solved fully automatically, and they must distribute tasks effectively between users and automated methods. Cibulski et al. [18] warn that overly complex dashboards can overwhelm users and thus be ineffective. They recommend visualization onboarding [9] and guidance [48] because of their great potential to lower the barrier to adoption and reduce users’ manual effort. We therefore encourage further research on such approaches, as well as on automatically recommending suitable visualizations.

5.2.4. Validation of Human Annotations

In KAVA, human input is essential but prone to error, which makes its validation critical in industrial and manufacturing contexts, where incorrect data can lead to security risks or unplanned maintenance. This raises the questions of how to ensure that user-provided input is correct, how to manage conflicting input from multiple users, and how to incorporate confidence measures into the labeling process [9,11,25]. In addition, the interview study by Li et al. [28] identifies data quality as the most common challenge (reported by 15 of 19 interviewees). In this regard, crowd-sourcing research has investigated how to manage distributed human input with techniques for task design, quality control, and the validation of user-generated data [49]. Recent work by Liu et al. addresses this challenge with a VA approach that involves experts in the labeling process within crowd-sourced settings. Their learning-from-crowds model supports the identification and correction of uncertain labels, e.g., through majority voting and interactive visualizations, progressively improving annotation quality. This work demonstrates how combining ML with human validation can enhance data quality while reducing manual effort [50]. Although crowd-sourcing generally involves large groups of non-experts, we encourage KAVA research that learns from its techniques to validate annotations in industrial settings.

5.2.5. Long-Term Studies and Real-World Scenarios

An open challenge is the lack of real-world evaluations of the proposed models and systems. Many tools show promising results in controlled and limited settings; however, their effectiveness in long-term industrial use is largely unknown. Several authors highlight this issue and call for studies to address this gap. For example, Xu et al. [36] note that the planned deployment of their system in real production lines will allow its long-term usage to be studied. Similarly, Wu et al. [33] intend to conduct long-term studies with quantitative measurements and end-user feedback to further improve their system. Behringer et al. [42] and Kaupp et al. [44] call for validating their prototypes and models in extended, real-world scenarios, while Buchgeher et al. [21] point out the limited validation of existing solutions across many industrial sectors and applications. Cibulski et al. [18] also observed in their survey that none of the solutions with the highest technology readiness level had been evaluated. Yet such studies are essential to advance KAVA by assessing its practical impact, adaptability, and scalability across dynamic manufacturing domains. As suggested by Federico et al. [11], novel evaluation methods could be conceptualized that use the changes and flows of explicit knowledge as a unit of measurement for studying the effectiveness of VA environments. Advancing this conceptual framework represents an important avenue for future research. Beyond individual case studies, there is a pressing need for longitudinal evaluation frameworks that assess the adoption of KAVA in industrial settings over extended periods. Such frameworks could capture the evolving interplay of human, organizational, and technological factors and provide structured evidence on adaptability, scalability, and sustained value creation in real-world manufacturing environments.

5.2.6. Generalizability

A recurring open challenge in VA is the limited generalizability of the proposed systems across domains and datasets (e.g., [19]), since tools are mostly developed for and tailored to specific use cases, which makes it difficult to transfer findings to other scenarios. For example, Eirich et al. [38] acknowledge this limitation and state that the generalizability of their approach remains to be investigated in broader applications, while Wu et al. [33] point out that their interactive techniques for user-steerable outlier detection could potentially be applied to more general usage scenarios. However, the majority of interviewed knowledge graph practitioners from different application domains stated that domain-specific challenges stand in the way of generalized solutions [28]. Research is needed to determine how, at least in manufacturing, commonalities can provide a basis for generalization. Recently, Subramonyam et al. [31] published a review that discusses the existing literature and provides recommendations on how to address this challenge. Among other points, the authors discuss how dependencies, such as dataset-specific assumptions or configuration constraints, are often insufficiently documented in research papers, which reduces the reproducibility and, consequently, the generalizability of research findings. They argue that more transparent reporting and more explicit reasoning about design choices are necessary to support the generalization and reuse of research outcomes. We therefore advocate generalizability and documentation standards to support the broader applicability of KAVA.

5.2.7. Methodological Limitations

The exclusion of gray literature, such as industrial reports and white papers, was a deliberate choice to ensure the reproducibility and quality of the included studies. However, this decision may have caused us to miss relevant insights from industrial practice, and including such sources could have increased the number of papers in the systematic literature review. A further limitation concerns the vocabulary of our search strategy: by focusing on terms such as “data visualization,” “dashboard,” “visual analytics,” “visual analysis,” “knowledge,” and “smart manufacturing,” we may have excluded relevant work that uses alternative terminology (e.g., guidance, provenance, rule-based, ontology-driven, knowledge graph, explicit knowledge, or human-in-the-loop). While we retained this search string for consistency, we explicitly acknowledge this risk and provide the full database-specific queries, including all limits and language restrictions, in Supplementary Material S1 to ensure transparency and reproducibility.

Including gray literature may be beneficial for future work, provided that alternative mechanisms are applied to ensure its quality. In addition, while dual independent screening was conducted at each stage, no formal inter-reviewer agreement metrics (e.g., Cohen’s kappa) were calculated. Disagreements were resolved through a tie-breaker process to maintain consistency. Incorporating formal agreement metrics in future work could further strengthen methodological rigor.
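For reference, Cohen’s kappa for two screeners is κ = (p_o − p_e)/(1 − p_e), where p_o is the observed share of agreeing decisions and p_e is the agreement expected by chance from each screener’s label frequencies. The Python sketch below computes it for include/exclude decisions; the example decisions are invented for illustration and are not data from this review.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters who labeled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same, non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items with identical decisions.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of both raters' marginal label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[k] * freq_b[k] for k in set(freq_a) | set(freq_b)) / (n * n)
    if p_e == 1.0:
        # Both raters used one and the same category throughout; kappa is
        # undefined here, and we return 1.0 by convention.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


# Hypothetical screening decisions for six candidate papers.
reviewer_1 = ["include", "include", "exclude", "exclude", "include", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "exclude", "include", "include"]
print(round(cohens_kappa(reviewer_1, reviewer_2), 3))  # 0.333
```

In this example, the reviewers agree on four of six papers (p_o ≈ 0.667), but because each includes half of the papers, chance agreement is already p_e = 0.5, so κ is only about 0.33.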

6. Conclusions

This review of 13 KAVA systems in industrial manufacturing investigated how explicit knowledge is modeled and stored, how it is externalized through visualization and interaction, and how ML is integrated into these processes.

In answering RQ1, the analysis shows that explicit knowledge is most frequently represented as ontologies, taxonomies, knowledge graphs, and rule sets, often enriched by user-provided annotations and threshold specifications. These resources are stored in RDF or graph databases, relational tables, or flat files. However, interoperability between formats is limited. While several systems retain post-design contributions, such as annotated anomaly cases or updated ontologies, others keep them only temporarily, leading to inconsistent long-term availability. Concerns about the reliability of user-generated input are discussed in the literature, though none of the reviewed works systematically evaluate annotation quality.

Addressing RQ2, the surveyed systems predominantly employ line graphs, bar charts, and scatterplots, often enhanced with thresholds, annotations, and filters derived from explicit knowledge. Explicit knowledge is visualized in various ways. Some systems visualize it directly: for example, Marey’s graphs update in real time through brushing, and node–link diagrams display semantic networks, production halls, or entity graphs. Dashboards show numeric quality scores as color-coded bars and predefined rules as labels. Human-annotated abnormal cases are displayed, together with their contextual rules, as glyphs that can often be clicked for more details, and interactive interfaces allow for the creation of such annotated cases. The simplest approach is displaying thresholds directly. In other systems, explicit knowledge alters the visualization specification, leading to different outputs without itself being displayed. Expert knowledge can also define customized views; for instance, ontologies specify which sensors belong to a machine, and user-defined rules and constraints adapt queries and charts dynamically. In other cases, expert-defined rules and thresholds are displayed in 3D digital twin views using color codes and progress bars. Network diagrams and flowcharts show relationships and process logic. More specialized approaches employ radial heat maps, icicle plots, and custom 2D/3D product models when the spatial or hierarchical context is critical. Despite these integrations of knowledge, most configurations remain hard-coded. No reviewed system offers a fully declarative mapping from knowledge models to visualization specifications, which restricts automation and reuse.

With respect to RQ3, 6 of the 13 works incorporate ML, most often using unsupervised methods, followed by semi-supervised, supervised, and hybrid approaches. In some cases, outputs from these models are used to generate rules or are refined through human-in-the-loop processes. The remaining systems either do not integrate ML or do not specify their approach, and only a few detail how such models interact with knowledge representation and visualization, limiting reproducibility and transferability.

Across these three areas, recurring challenges, drawn from both the reviewed studies and related work, include the heterogeneity of knowledge and data types and the absence of unified storage that supports interoperability; limited automation in linking knowledge to visualizations and in externalizing knowledge; the need for interfaces that support varied user roles; the validation and reuse of human-generated annotations; a lack of long-term, real-world evaluations; and limited applicability beyond the specific industrial contexts studied. Progress in these areas will require integrated knowledge repositories that propagate updates, mechanisms for validating and reusing user contributions, tighter coupling of knowledge models with visualization specifications, and systematic assessments in operational manufacturing environments. These steps would improve the adaptability, reusability, and long-term impact of KAVA systems in industrial analytics.

Author Contributions

Conceptualization: A.J.B., S.G., J.V., C.S., and A.R.; Investigation: A.J.B., S.G., J.V., C.S., A.R., and M.W.; Methodology: A.J.B., S.G., J.V., C.S., A.R., and M.W.; Writing—original draft: A.J.B., and S.G.; Writing—review & editing: A.J.B., S.G., J.V., C.S., A.R., M.W., W.A., T.S., and J.S.; Supervision: W.A., and T.S.; Funding acquisition: M.W. All authors unanimously agree that both Adrian Böck and Stefanie Größbacher are first authors of the publication and may therefore be listed as first authors (jointly or individually). All authors have read and agreed to the published version of the manuscript.

Funding

The financial support provided by the Austrian Federal Ministry of Labour and Economy, the National Foundation for Research, Technology and Development, and the Christian Doppler Research Association is gratefully acknowledged.

Conflicts of Interest

Author Josef Suschnigg was employed by the company Pro2Future. Jan Vrablicz was also employed by Georg Fischer. All authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| IoT | Internet of Things |

| KAVA | Knowledge-Assisted Visual Analytics |

| VA | Visual Analytics |

| ML | Machine Learning |

References

- Posada, J.; Toro, C.; Barandiaran, I.; Oyarzun, D.; Stricker, D.; De Amicis, R.; Pinto, E.B.; Eisert, P.; Dollner, J.; Vallarino, I. Visual Computing as a Key Enabling Technology for Industrie 4.0 and Industrial Internet. IEEE Comput. Graph. Appl. 2015, 35, 26–40. [Google Scholar] [CrossRef]

- Tambare, P.; Meshram, C.; Lee, C.C.; Ramteke, R.J.; Imoize, A.L. Performance Measurement System and Quality Management in Data-Driven Industry 4.0: A Review. Sensors 2021, 22, 224. [Google Scholar] [CrossRef]

- Schreck, T.; Mutlu, B.; Streit, M. Visual Data Science for Industrial Applications. In Digital Transformation: Core Technologies and Emerging Topics from a Computer Science Perspective; Springer: Berlin/Heidelberg, Germany, 2023; pp. 447–471. [Google Scholar] [CrossRef]

- Wang, B.; Tao, F.; Fang, X.; Liu, C.; Liu, Y.; Freiheit, T. Smart Manufacturing and Intelligent Manufacturing: A Comparative Review. Engineering 2021, 7, 738–757. [Google Scholar] [CrossRef]

- Thomas, J.J.; Cook, K.A. Illuminating the Path: The Research and Development Agenda for Visual Analytics; National Visualization and Analytics Ctr: Richland, WA, USA, 2005. [Google Scholar]

- Wegner, P. Why Interaction is More Powerful Than Algorithms. Commun. ACM 1997, 40, 80–91. [Google Scholar] [CrossRef]

- Spence, R. Information visualization: Design for interaction, 2nd ed.; Pearson/Prentice Hall: Munich, Germany, 2007. [Google Scholar]

- Kielman, J.; Thomas, J.; May, R. Foundations and Frontiers in Visual Analytics. Inf. Vis. 2009, 8, 239–246. [Google Scholar] [CrossRef]

- Stoiber, C.; Ceneda, D.; Wagner, M.; Schetinger, V.; Gschwandtner, T.; Streit, M.; Miksch, S.; Aigner, W. Perspectives of visualization onboarding and guidance in VA. Vis. Inform. 2022, 6, 68–83. [Google Scholar] [CrossRef]

- Wagner, M.; Rind, A.; Thür, N.; Aigner, W. A knowledge-assisted visual malware analysis system: Design, validation, and reflection of KAMAS. Comput. Secur. 2017, 67, 1–15.

- Federico, P.; Wagner, M.; Rind, A.; Amor-Amoros, A.; Miksch, S.; Aigner, W. The Role of Explicit Knowledge: A Conceptual Model of Knowledge-Assisted Visual Analytics. In Proceedings of the 2017 IEEE Conference on Visual Analytics Science and Technology (VAST), Phoenix, AZ, USA, 3–6 October 2017; pp. 92–103.

- Wagner, M. Integrating Explicit Knowledge in the Visual Analytics Process. Ph.D. Thesis, TU Wien, Vienna, Austria, 2017.

- Wagner, M.; Slijepcevic, D.; Horsak, B.; Rind, A.; Zeppelzauer, M.; Aigner, W. KAVAGait: Knowledge-Assisted Visual Analytics for Clinical Gait Analysis. IEEE Trans. Vis. Comput. Graph. 2018, 25, 1528–1542.

- Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. BMJ 2021, 372, n71.

- Page, M.J.; Moher, D.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ 2021, 372, n160.

- Zupan, B.; Holmes, J.H.; Bellazzi, R. Knowledge-Based Data Analysis and Interpretation. Artif. Intell. Med. 2006, 37, 163–165.

- Zhou, F.; Lin, X.; Liu, C.; Zhao, Y.; Xu, P.; Ren, L.; Xue, T.; Ren, L. A Survey of Visualization for Smart Manufacturing. J. Vis. 2019, 22, 419–435.

- Cibulski, L.; Schmidt, J.; Aigner, W. Reflections on Visualization Research Projects in the Manufacturing Industry. IEEE Comput. Graph. Appl. 2022, 42, 21–32.

- Wang, Y.; Zhu, Z.; Wang, L.; Sun, G.; Liang, R. Visualization and Visual Analysis of Multimedia Data in Manufacturing: A Survey. Vis. Inform. 2022, 6, 12–21.

- Xiao, Y.; Zheng, S.; Shi, J.; Du, X.; Hong, J. Knowledge graph-based manufacturing process planning: A state-of-the-art review. J. Manuf. Syst. 2023, 70, 417–435.

- Buchgeher, G.; Gabauer, D.; Martinez-Gil, J.; Ehrlinger, L. Knowledge Graphs in Manufacturing and Production: A Systematic Literature Review. IEEE Access 2021, 9, 55537–55554.

- Bernard, J. The Human-Data-Model Interaction Canvas for Visual Analytics. arXiv 2025, arXiv:2505.07534.

- Sacha, D.; Stoffel, A.; Stoffel, F.; Kwon, B.C.; Ellis, G.; Keim, D.A. Knowledge Generation Model for Visual Analytics. IEEE Trans. Vis. Comput. Graph. 2014, 20, 1604–1613.

- Chen, M.; Ebert, D.; Hagen, H.; Laramee, R.S.; van Liere, R.; Ma, K.L.; Ribarsky, W.; Scheuermann, G.; Silver, D. Data, Information, and Knowledge in Visualization. IEEE Comput. Graph. Appl. 2009, 29, 12–19.

- Wang, X.; Jeong, D.H.; Dou, W.; Lee, S.W.; Ribarsky, W.; Chang, R. Defining and Applying Knowledge Conversion Processes to a Visual Analytics System. Comput. Graph. 2009, 33, 616–623.

- Battle, L.; Ottley, A. What Exactly Is an Insight? A Literature Review. In Proceedings of the IEEE Visualization and Visual Analytics (VIS), Melbourne, Australia, 21–27 October 2023; pp. 91–95.

- Battle, L.; Ottley, A. What Do We Mean When We Say “Insight”? A Formal Synthesis of Existing Theory. IEEE Trans. Vis. Comput. Graph. 2024, 30, 6075–6088.

- Li, H.; Appleby, G.; Brumar, C.D.; Chang, R.; Suh, A. Knowledge Graphs in Practice: Characterizing Their Users, Challenges, and Visualization Opportunities. IEEE Trans. Vis. Comput. Graph. 2024, 30, 584–594.

- Ceneda, D.; Gschwandtner, T.; Miksch, S. A Review of Guidance Approaches in Visual Data Analysis: A Multifocal Perspective. Comput. Graph. Forum 2019, 38, 861–879.

- Bernard, J.; Zeppelzauer, M.; Sedlmair, M.; Aigner, W. VIAL: A Unified Process for Visual Interactive Labeling. Vis. Comput. 2018, 34, 1189–1207.

- Subramonyam, H.; Hullman, J. Are We Closing the Loop Yet? Gaps in the Generalizability of VIS4ML Research. IEEE Trans. Vis. Comput. Graph. 2024, 30, 672–682.

- Ribecca, S. The Data Visualisation Catalogue. 2020. Available online: https://datavizcatalogue.com/home_list.html (accessed on 12 January 2024).

- Wu, W.; Zheng, Y.; Chen, K.; Wang, X.; Cao, N. A Visual Analytics Approach for Equipment Condition Monitoring in Smart Factories of Process Industry. In Proceedings of the 2018 IEEE Pacific Visualization Symposium (PacificVis), Kobe, Japan, 10–13 April 2018; pp. 140–149.

- Alm, R.; Aehnelt, M.; Hadlak, S.; Urban, B. Annotated Domain Ontologies for the Visualization of Heterogeneous Manufacturing Data. In Proceedings of the 17th International Conference on Human Interface and the Management of Information, Los Angeles, CA, USA, 2–7 August 2015. Part I: Information and Knowledge Design.

- Han, H.; Fu, D.; Jia, H. Construction of Knowledge Graphs Related to Industrial Key Production Processes for Query and Visualization. In Proceedings of the 2023 Chinese Intelligent Systems Conference, Ningbo, Zhejiang, China, 14–15 October 2023; Jia, Y., Zhang, W., Fu, Y., Wang, J., Eds.; Springer: Singapore, 2023; pp. 855–863.

- Xu, P.; Mei, H.; Ren, L.; Chen, W. ViDX: Visual Diagnostics of Assembly Line Performance in Smart Factories. IEEE Trans. Vis. Comput. Graph. 2017, 23, 291–300.

- Cruz, A.B.; Sousa, A.; Cardoso, Â.; Valente, B.; Reis, A. Smart Data Visualisation as a Stepping Stone for Industry 4.0—A Case Study in Investment Casting Industry. In Proceedings of the Robot 2019: Fourth Iberian Robotics Conference, Porto, Portugal, 20–22 November 2019; Silva, M.F., Luís Lima, J., Reis, L.P., Sanfeliu, A., Tardioli, D., Eds.; Springer: Cham, Switzerland, 2020; pp. 657–668.

- Eirich, J.; Jäckle, D.; Sedlmair, M.; Wehner, C.; Schmid, U.; Bernard, J.; Schreck, T. ManuKnowVis: How to Support Different User Groups in Contextualizing and Leveraging Knowledge Repositories. IEEE Trans. Vis. Comput. Graph. 2023, 29, 3441–3457.

- Kaupp, L.; Nazemi, K.; Humm, B. An Industry 4.0-Ready Visual Analytics Model for Context-Aware Diagnosis in Smart Manufacturing. In Proceedings of the 2020 24th International Conference Information Visualisation (IV), Melbourne, Australia, 7–11 September 2020; pp. 350–359.

- Liu, J.; Zheng, H.; Jiang, Y.; Liu, T.; Bao, J. Visual Analytics Approach for Crane Anomaly Detection Based on Digital Twin. In Proceedings of the Cooperative Design, Visualization, and Engineering: 19th International Conference, CDVE 2022, Virtual Event, 25–28 September 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–12.

- Zhang, K.; Xu, Q.; Liu, C.; Chai, T. Intelligent decision-making system for mineral processing production indices based on digital twin interactive visualization. J. Vis. 2024, 27, 421–436.

- Behringer, M.; Hirmer, P.; Villanueva, A.; Rapp, J.; Mitschang, B. Enhancing Data Trustworthiness in Explorative Analysis: An Interactive Approach for Data Quality Monitoring. SN Comput. Sci. 2024, 5, 465.

- Berges, I.; Ramírez-Durán, V.J.; Illarramendi, A. Facilitating Data Exploration in Industry 4.0. In Proceedings of the Advances in Conceptual Modeling: ER 2019 Workshops FAIR, MREBA, EmpER, MoBiD, OntoCom, and ER Doctoral Symposium Papers, Salvador, Brazil, 4–7 November 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 125–134.

- Kaupp, L.; Nazemi, K.; Humm, B. Context-Aware Diagnosis in Smart Manufacturing: TAOISM, An Industry 4.0-Ready Visual Analytics Model. In Integrating Artificial Intelligence and Visualization for Visual Knowledge Discovery; Springer International Publishing: Cham, Switzerland, 2022; pp. 403–436.

- Ameri, F.; Bernstein, W. A Thesaurus-guided Framework for Visualization of Unstructured Manufacturing Capability Data. In Proceedings of the APMS 2017 International Conference Advances in Production Management Systems, Hamburg, Germany, 3–7 September 2017; IFIP Advances in Information and Communication Technology.

- Behringer, M.; Hirmer, P.; Villanueva, A.; Rapp, J.; Mitschang, B. Unobtrusive Integration of Data Quality in Interactive Explorative Data Analysis. In Proceedings of the 25th International Conference on Enterprise Information Systems—Volume 2: ICEIS, Prague, Czech Republic, 24–26 April 2023; INSTICC. SciTePress: Setúbal, Portugal, 2023; pp. 276–285.

- Wagner, M. Knowledge-Assisted Visual Analytics for Time-Oriented Data. Ph.D. Thesis, TU Wien, Vienna, Austria, 2017.

- Ceneda, D.; Gschwandtner, T.; Miksch, S. You Get by with a Little Help: The Effects of Variable Guidance Degrees on Performance and Mental State. Vis. Inform. 2019, 3, 177–191.

- Bhatti, S.S.; Gao, X.; Chen, G. General framework, opportunities and challenges for crowdsourcing techniques: A Comprehensive survey. J. Syst. Softw. 2020, 167, 110611.

- Liu, S.; Chen, C.; Lu, Y.; Ouyang, F.; Wang, B. An Interactive Method to Improve Crowdsourced Annotations. IEEE Trans. Vis. Comput. Graph. 2019, 25, 235–245.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).