Web-Based Platform for Quantitative Depression Risk Prediction via VAD Regression on Korean Text and Multi-Anchor Distance Scoring

Abstract

1. Introduction

2. Related Works

2.1. Language-Based Mental Health Detection Studies

2.2. Multimodal and Quantitative Emotion Analysis Studies

3. Methodology

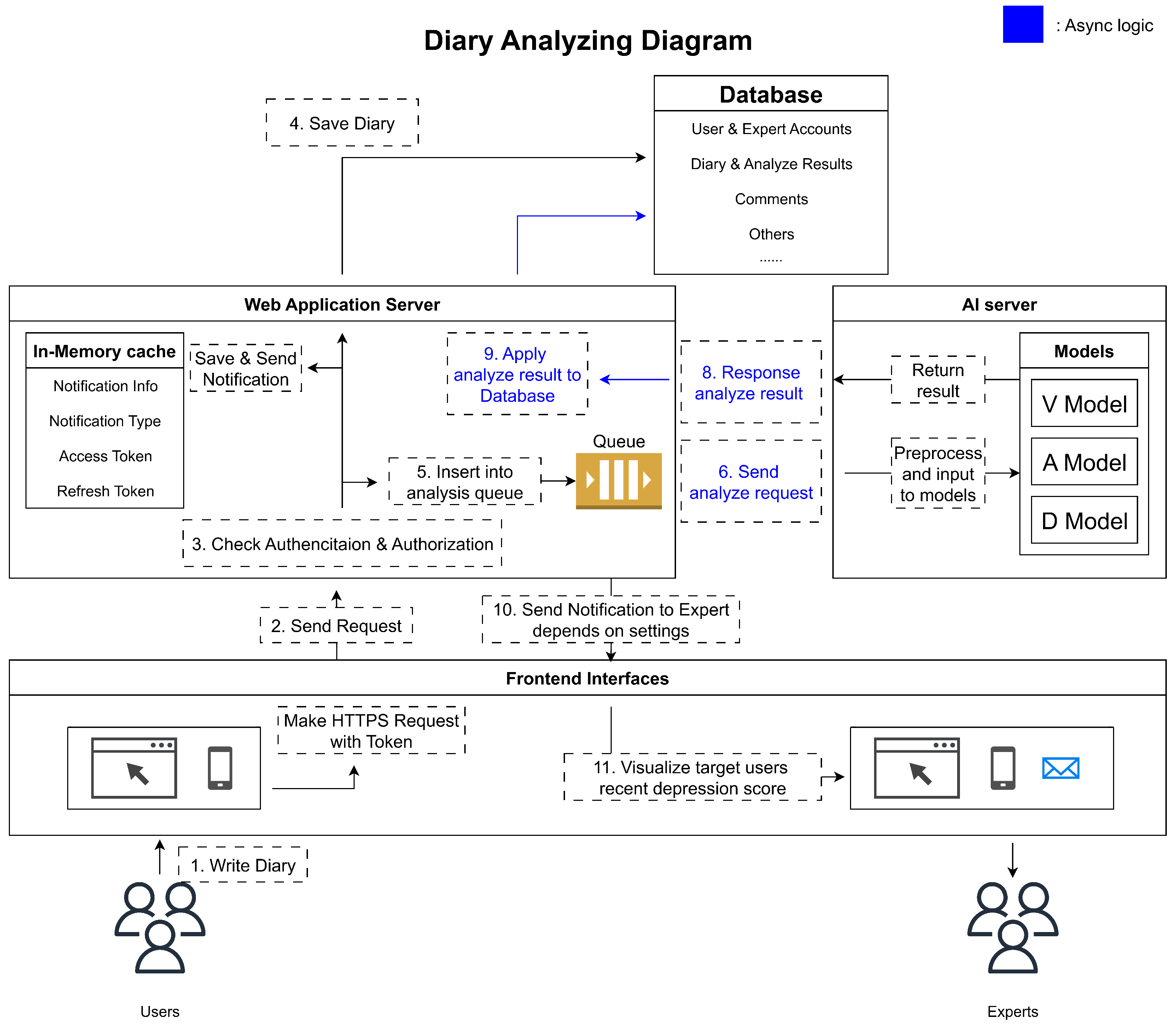

3.1. Platform Architecture

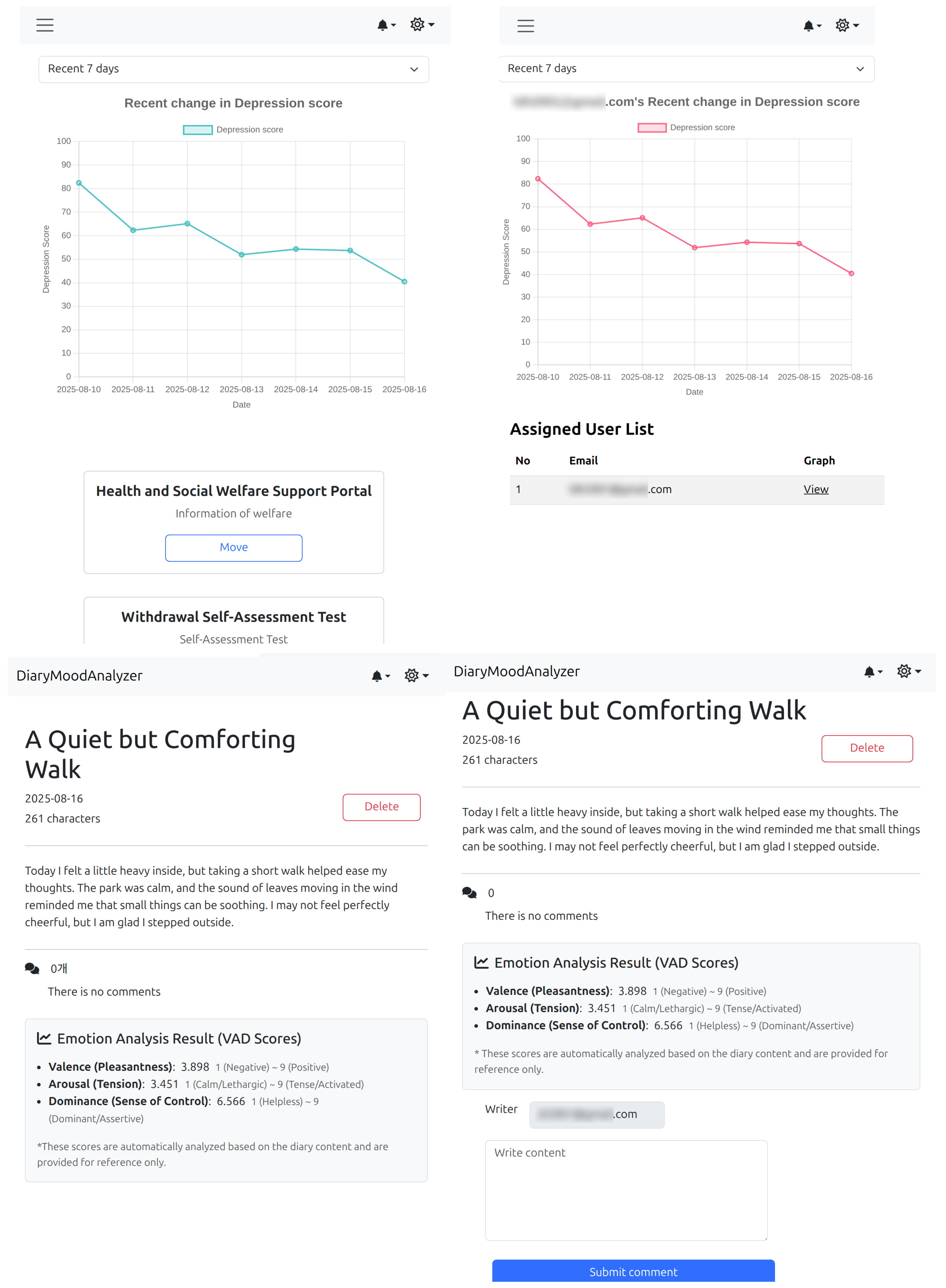

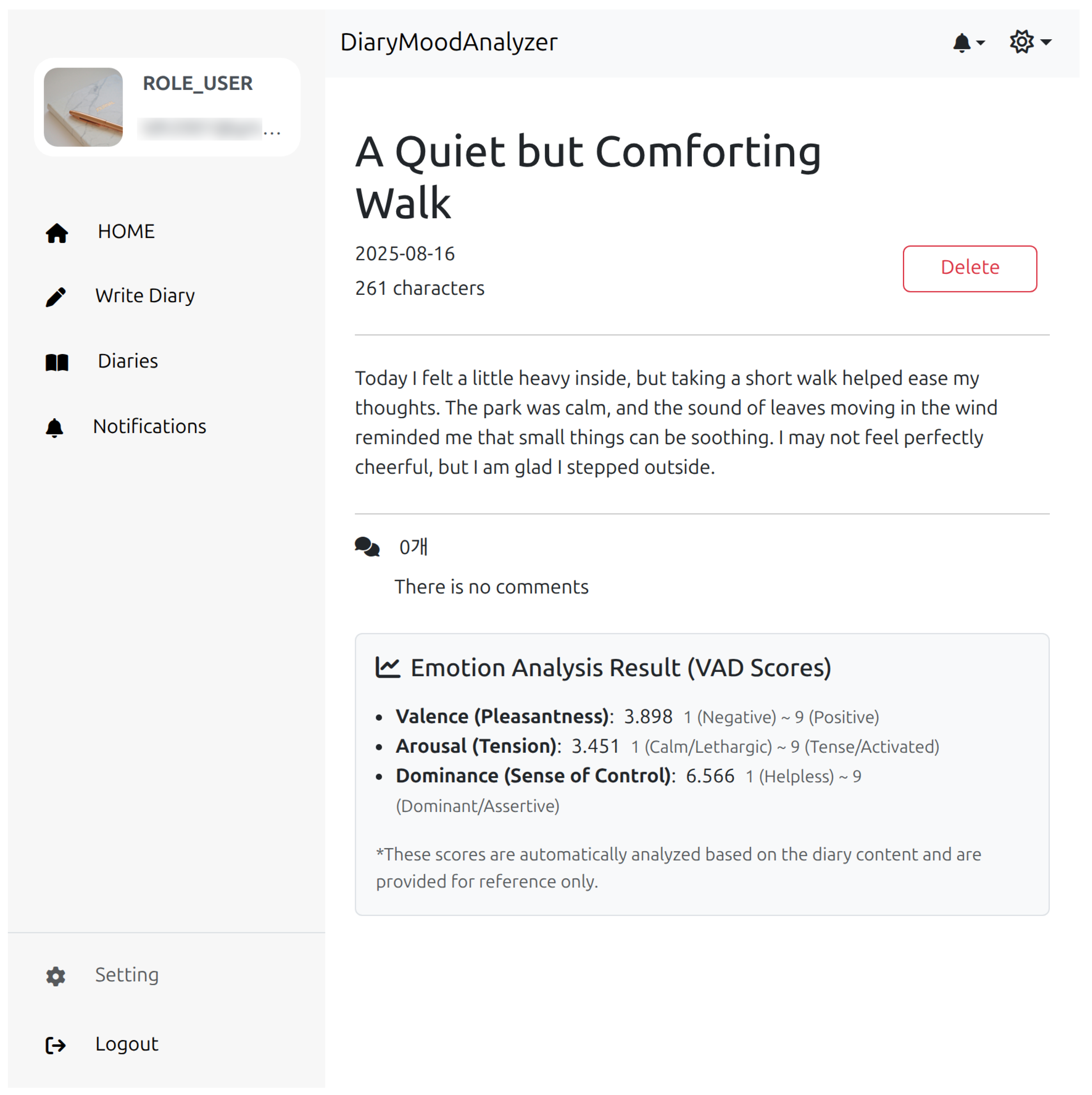

3.2. User Interface Overview

3.3. Dataset and Preprocessing

3.4. Model Design

3.4.1. VAD Vector Regression Model

3.4.2. Depression Score Calculation via Multi-Anchor Euclidean Distance

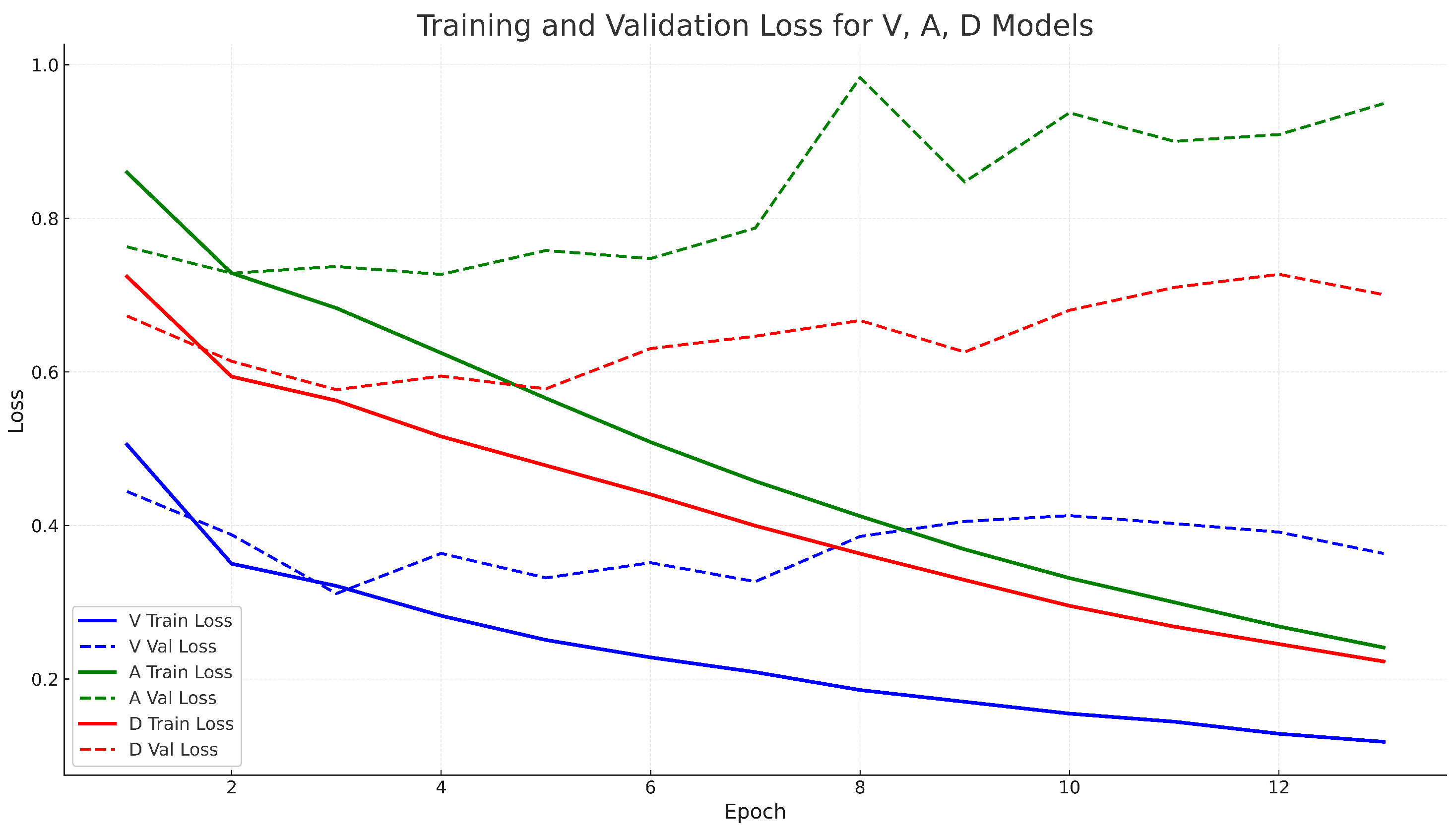

3.5. Training Setup

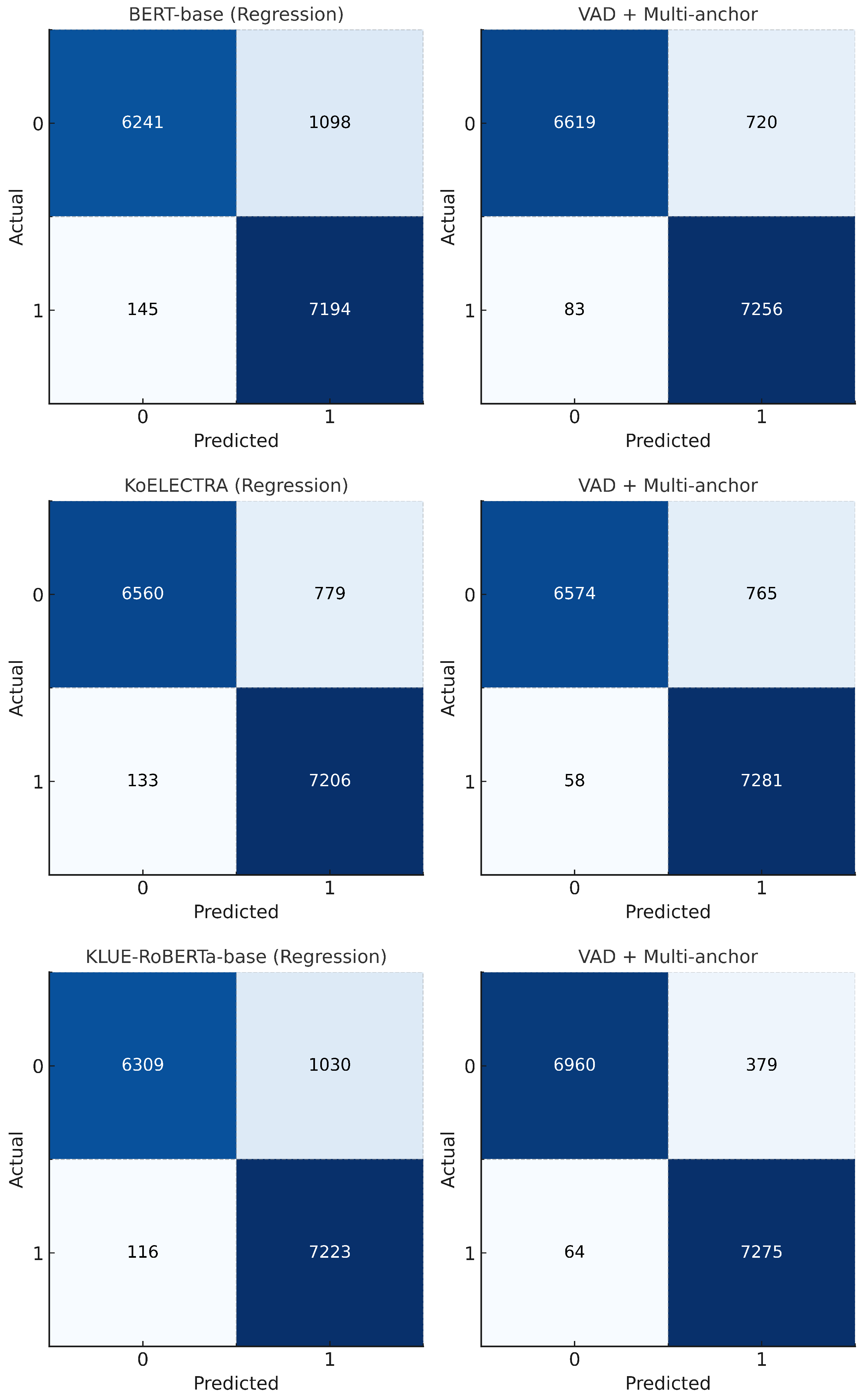

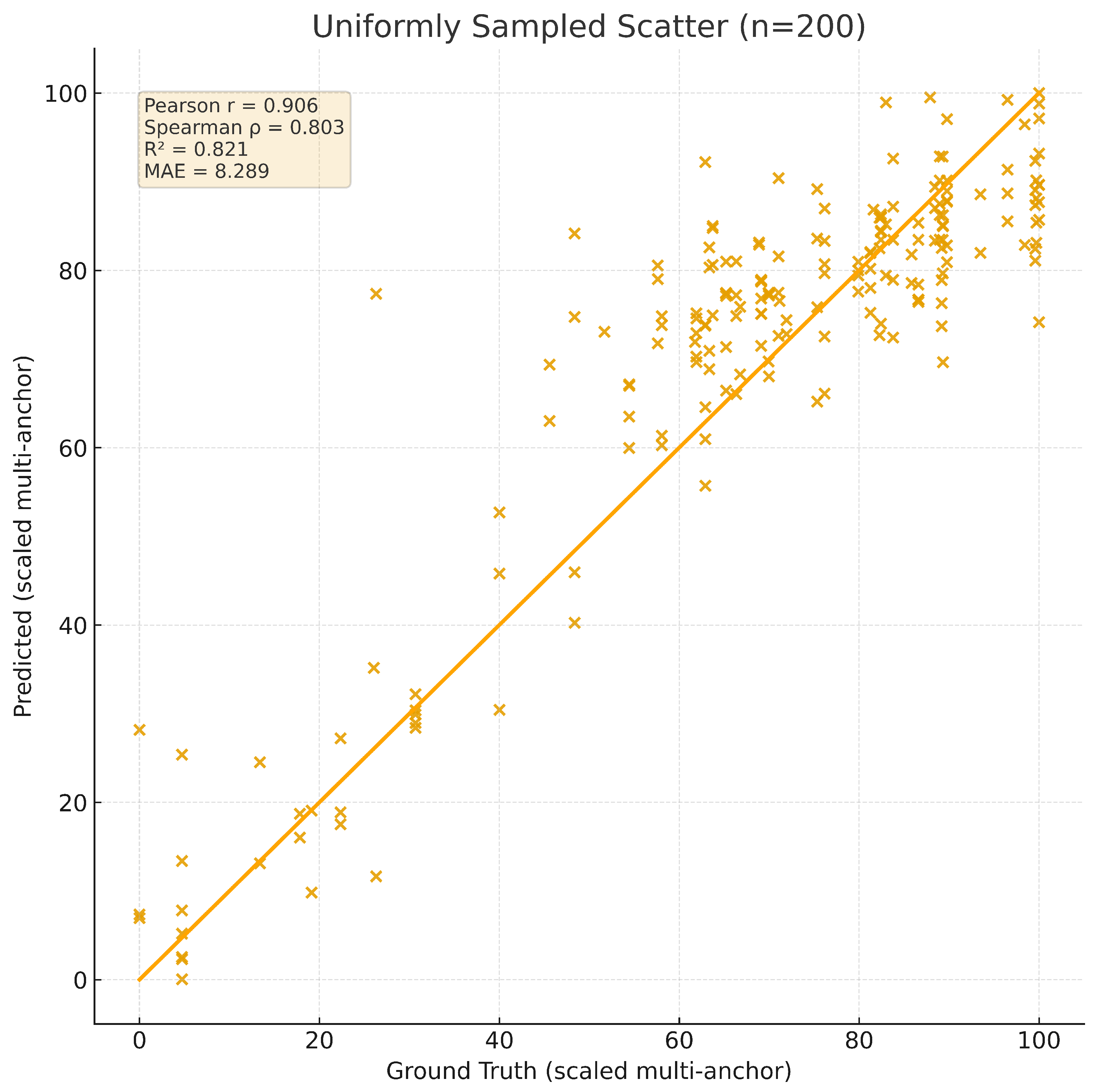

4. Experiments

5. Discussion and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Complete Mapping of Korean Emotions

| Emotion Label (Korean) | Code | NRC Word | V | A | D |
|---|---|---|---|---|---|
| anger (분노) | E10 | anger | 2.336 | 7.920 | 6.256 |
| grunting (툴툴대는) | E11 | grunting | 2.584 | 7.616 | 4.848 |
| frustrated (좌절한) | E12 | frustrated | 1.640 | 6.208 | 3.040 |
| annoyed (짜증내는) | E13 | annoyed | 1.832 | 7.264 | 3.760 |
| defensive (방어적인) | E14 | defensive | 5.336 | 6.120 | 6.424 |
| malicious (악의적인) | E15 | malicious | 2.416 | 7.120 | 5.856 |
| impatient (안달하는) | E16 | impatient | 3.000 | 6.664 | 4.432 |
| disgusting (구역질 나는) | E17 | disgusting | 1.248 | 7.368 | 2.960 |
| angry (노여워하는) | E18 | angry | 1.976 | 7.640 | 5.832 |
| annoying (성가신) | E19 | annoying | 1.656 | 7.824 | 3.784 |
| sadness (슬픔) | E20 | sadness | 1.416 | 3.304 | 2.312 |
| disappointed (실망한) | E21 | disappointed | 1.568 | 4.776 | 2.928 |
| sorrowful (비통한) | E22 | sorrowful | 1.392 | 4.376 | 2.304 |
| regretful (후회되는) | E23 | regretful | 2.336 | 4.840 | 2.504 |
| depressed (우울한) | E24 | depressed | 1.192 | 4.560 | 2.088 |
| numb (마비된) | E25 | numb | 1.864 | 4.360 | 3.816 |
| pessimistic (염세적인) | E26 | pessimistic | 1.704 | 4.152 | 2.888 |
| tearful (눈물이 나는) | E27 | tearful | 2.664 | 5.000 | 2.472 |
| discouraged (낙담한) | E28 | discouraged | 2.760 | 3.432 | 1.360 |
| jaded (환멸을 느끼는) | E29 | jaded | 3.040 | 5.480 | 4.200 |
| anxiety (불안) | E30 | anxiety | 2.168 | 7.920 | 3.736 |
| afraid (두려운) | E31 | afraid | 1.092 | 6.408 | 2.884 |
| stressed out (스트레스 받는) | E32 | stressed out | 2.000 | 5.344 | 3.168 |
| vulnerable (취약한) | E33 | vulnerable | 2.584 | 4.920 | 2.960 |
| confused (혼란스러운) | E34 | confused | 2.760 | 6.200 | 2.432 |
| baffled (당혹스러운) | E35 | baffled | 2.208 | 5.800 | 3.712 |
| skeptical (회의적인) | E36 | skeptical | 2.656 | 5.000 | 4.616 |
| worried (걱정스러운) | E37 | worried | 1.752 | 7.592 | 4.160 |
| cautious (조심스러운) | E38 | cautious | 4.760 | 4.560 | 5.136 |
| nervous (초조한) | E39 | nervous | 2.668 | 7.560 | 2.704 |
| hurt (상처) | E40 | hurt | 1.496 | 7.184 | 3.328 |
| jealous (질투하는) | E41 | jealous | 2.384 | 7.840 | 3.760 |
| betrayed (배신당한) | E42 | betrayed | 1.976 | 7.152 | 3.464 |
| isolated (고립된) | E43 | isolated | 2.768 | 3.848 | 3.040 |
| shocked (충격 받은) | E44 | shocked | 3.336 | 7.184 | 4.000 |
| poor, needy (가난한, 불우한) | E45 | poor, needy | 3.064 | 4.236 | 2.242 |
| victimized (희생된) | E46 | victimized | 1.920 | 6.152 | 3.184 |
| resentful (억울한) | E47 | resentful | 1.960 | 5.864 | 3.592 |
| distressed (괴로워하는) | E48 | distressed | 2.144 | 7.168 | 3.592 |
| abandoned (버려진) | E49 | abandoned | 1.368 | 4.848 | 2.040 |
| embarrassed (당황) | E50 | embarrassed | 2.472 | 5.480 | 3.120 |
| isolated (고립된(당황한)) | E51 | isolated | 2.768 | 3.848 | 3.040 |
| self conscious (남의 시선을 의식하는) | E52 | self conscious | 5.664 | 5.160 | 5.256 |
| lonely (외로운) | E53 | lonely | 3.000 | 2.808 | 2.904 |
| inferiority complex (열등감) | E54 | inferiority complex | 3.896 | 5.944 | 3.584 |
| guilty (죄책감의) | E55 | guilty | 2.080 | 7.160 | 3.816 |
| ashamed (부끄러운) | E56 | ashamed | 2.248 | 5.704 | 2.824 |
| repulsive (혐오스러운) | E57 | repulsive | 2.168 | 7.200 | 4.400 |
| pathetic (한심한) | E58 | pathetic | 1.704 | 4.712 | 2.112 |
| confused (혼란스러운(당황한)) | E59 | confused | 2.760 | 6.200 | 2.432 |
| joy (기쁨) | E60 | joy | 8.840 | 7.592 | 7.352 |
| grateful (감사하는) | E61 | grateful | 8.664 | 3.824 | 5.480 |
| trusting (신뢰하는) | E62 | trusting | 7.856 | 5.064 | 7.000 |
| comfortable (편안한) | E63 | comfortable | 8.416 | 2.304 | 4.784 |
| satisfied (만족스러운) | E64 | satisfied | 8.672 | 5.080 | 6.480 |
| excited (흥분) | E65 | excited | 8.264 | 8.448 | 6.672 |
| relaxed (느긋) | E66 | relaxed | 7.920 | 1.360 | 4.244 |
| relief (안도) | E67 | relief | 7.752 | 3.224 | 4.848 |
| excited (신이 난) | E68 | excited | 8.264 | 8.448 | 6.672 |
| confident (자신하는) | E69 | confident | 7.120 | 3.592 | 6.784 |
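The V, A, and D columns are expressed on the 1–9 scale, and Appendix B mentions a linear map from the lexicon scale to the model/output scale. The following is a minimal sketch of such a map, not the authors' code. It assumes the lexicon scores lie in [0, 1]; that source range is not stated in this appendix, so `LEXICON_RANGE` is an assumption, as are all function and variable names.

```python
from typing import Tuple

# ASSUMPTION: source range of the lexicon scores (not stated in the appendix).
LEXICON_RANGE: Tuple[float, float] = (0.0, 1.0)
# Target range used by the V, A, and D columns of the table.
MODEL_RANGE: Tuple[float, float] = (1.0, 9.0)


def rescale(x: float,
            src: Tuple[float, float] = LEXICON_RANGE,
            dst: Tuple[float, float] = MODEL_RANGE) -> float:
    """Linearly map x from the src interval onto the dst interval."""
    (s_lo, s_hi), (d_lo, d_hi) = src, dst
    return d_lo + (x - s_lo) * (d_hi - d_lo) / (s_hi - s_lo)


def rescale_vad(vad, src=LEXICON_RANGE, dst=MODEL_RANGE):
    """Apply the same linear map elementwise to a (V, A, D) triple."""
    return tuple(rescale(c, src, dst) for c in vad)


# A few rows copied from the table, keyed by emotion code.
EMOTION_VAD = {
    "E10": (2.336, 7.920, 6.256),  # anger
    "E24": (1.192, 4.560, 2.088),  # depressed
    "E60": (8.840, 7.592, 7.352),  # joy
}

# Inverting the map gives the lexicon-scale values implied by the assumed
# range; mapping them forward again recovers the table row.
unit = rescale_vad(EMOTION_VAD["E10"], src=MODEL_RANGE, dst=LEXICON_RANGE)
back = rescale_vad(unit)
```

Keeping the two ranges as parameters means the same helper still applies if the lexicon turns out to use a different interval; only `LEXICON_RANGE` would change.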

Appendix B. Constants and Data-Derived Statistics

| Symbol/Setting | Value | Usage (Eq./Sec.) | Determination/Procedure |
|---|---|---|---|
| | 3.1036 | Equations (1) and (2) | Mean of valence on the training split (1–9 scale). |
| | 2.1210 | Equations (1) and (2) | Std. of valence on the training split (1–9 scale). |
| | 5.76297 | Equations (1) and (2) | Mean of arousal on the training split (1–9 scale). |
| | 1.590486 | Equations (1) and (2) | Std. of arousal on the training split (1–9 scale). |
| | 3.8244 | Equations (1) and (2) | Mean of dominance on the training split (1–9 scale). |
| | 1.3897869 | Equations (1) and (2) | Std. of dominance on the training split (1–9 scale). |
| | 0.7892 | Equation (4) | Minimum, computed on ground-truth VAD vectors over the dataset. |
| | 9.7557 | Equation (4) | Maximum, computed on ground-truth VAD vectors over the dataset. |
| | 40 | Equation (6) | Fixed threshold for the binary at-risk label in the main experiments; may be tuned on validation if noted. |
| B | 10 | Equations (8) and (9) | Number of equal-width bins on the 1–9 scale for computing sample weights. |
| | | Section 3.3 | Linear map from the lexicon scale to the model/output scale, applied elementwise. |
| Split ratio | 4:1:1 | Section 3.3 | Train/validation/test split; all statistics are estimated on the training split only. |
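The constants in this table can be wired together as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the equations themselves are not reproduced in this appendix, so the z-score form of the standardization, the mean aggregation over anchors, the inverted 0–100 min-max scaling, the `>=` comparison at the threshold, and the example anchor set are all hypothetical. Only the numeric constants are taken from the table.

```python
import math

# Training-split statistics from Appendix B (1-9 scale): valence, arousal, dominance.
MEAN = (3.1036, 5.76297, 3.8244)
STD = (2.1210, 1.590486, 1.3897869)
D_MIN, D_MAX = 0.7892, 9.7557   # min/max constants listed for Equation (4)
THRESHOLD = 40.0                # fixed at-risk threshold listed for Equation (6)


def standardize(vad):
    """ASSUMED z-score form: (x - mean) / std for each dimension."""
    return tuple((x - m) / s for x, m, s in zip(vad, MEAN, STD))


def destandardize(z):
    """Inverse of standardize: back to the 1-9 scale."""
    return tuple(v * s + m for v, m, s in zip(z, MEAN, STD))


def depression_score(vad, anchors):
    """HYPOTHETICAL scoring: mean Euclidean distance to the anchors,
    min-max scaled with D_MIN/D_MAX, inverted so that a smaller distance
    gives a higher score, and clipped to [0, 100]."""
    d = sum(math.dist(vad, a) for a in anchors) / len(anchors)
    scaled = (d - D_MIN) / (D_MAX - D_MIN)
    return 100.0 * min(1.0, max(0.0, 1.0 - scaled))


def at_risk(score, threshold=THRESHOLD):
    """ASSUMED comparison direction: scores at or above the threshold are flagged."""
    return score >= threshold


# HYPOTHETICAL anchor set: rows E20 (sadness) and E24 (depressed) of Appendix A.
ANCHORS = [(1.416, 3.304, 2.312), (1.192, 4.560, 2.088)]

low = depression_score((8.840, 7.592, 7.352), ANCHORS)   # joy row (E60)
high = depression_score((1.392, 4.376, 2.304), ANCHORS)  # sorrowful row (E22)
```

Under these assumptions the joy row lands below the threshold and the sorrowful row above it; the exact values depend on the anchor set and aggregation rule, which this sketch does not claim to match.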

References

1. Park, S.M.; Joo, M.J.; Lim, J.H.; Jang, S.Y.; Park, E.C.; Ha, M.J. Association between hikikomori (social withdrawal) and depression in Korean young adults. J. Affect. Disord. 2025, 380, 647–654.
2. Leigh-Hunt, N.; Bagguley, D.; Bash, K.; Turner, V.; Turnbull, S.; Valtorta, N.; Caan, W. An overview of systematic reviews on the public health consequences of social isolation and loneliness. Public Health 2017, 152, 157–171.
3. Wong, J.C.M.; Wan, M.J.S.; Kroneman, L.; Kato, T.A.; Lo, T.W.; Wong, P.W.C.; Chan, G.H. Hikikomori phenomenon in East Asia: Regional perspectives, challenges, and opportunities for social health agencies. Front. Psychiatry 2019, 10, 512.
4. Goldman, N.; Khanna, D.; El Asmar, M.L.; Qualter, P.; El-Osta, A. Addressing loneliness and social isolation in 52 countries: A scoping review of national policies. BMC Public Health 2024, 24, 1207.
5. Muris, P.; Ollendick, T.H. Contemporary hermits: A developmental psychopathology account of extreme social withdrawal (Hikikomori) in young people. Clin. Child Fam. Psychol. Rev. 2023, 26, 459–481.
6. Ogawa, T.; Shiratori, Y.; Midorikawa, H.; Aiba, M.; Sugawara, D.; Kawakami, N.; Arai, T.; Tachikawa, H. A survey of changes in the psychological state of individuals with social withdrawal (hikikomori) in the context of the COVID pandemic. COVID 2023, 3, 1158–1172.
7. Lin, P.K.; Andrew; Koh, A.H.; Liew, K. The relationship between Hikikomori risk factors and social withdrawal tendencies among emerging adults—An exploratory study of Hikikomori in Singapore. Front. Psychiatry 2022, 13, 1065304.
8. Lin, T.; Heckman, T.G.; Anderson, T. The efficacy of synchronous teletherapy versus in-person therapy: A meta-analysis of randomized clinical trials. Clin. Psychol. Sci. Pract. 2022, 29, 167.
9. Giovanetti, A.K.; Punt, S.E.; Nelson, E.L.; Ilardi, S.S. Teletherapy versus in-person psychotherapy for depression: A meta-analysis of randomized controlled trials. Telemed. E-Health 2022, 28, 1077–1089.
10. Fernandez, E.; Woldgabreal, Y.; Day, A.; Pham, T.; Gleich, B.; Aboujaoude, E. Live psychotherapy by video versus in-person: A meta-analysis of efficacy and its relationship to types and targets of treatment. Clin. Psychol. Psychother. 2021, 28, 1535–1549.
11. Yamazaki, S.; Ura, C.; Inagaki, H.; Sugiyama, M.; Miyamae, F.; Edahiro, A.; Ito, K.; Iwasaki, M.; Sasai, H.; Okamura, T.; et al. Social isolation and well-being among families of middle-aged and older hikikomori people. Psychogeriatrics 2024, 24, 145–147.
12. Mehrabian, A. Analysis of the big-five personality factors in terms of the PAD temperament model. Aust. J. Psychol. 1996, 48, 86–92.
13. Kannan, K.D.; Jagatheesaperumal, S.K.; Kandala, R.N.; Lotfaliany, M.; Alizadehsanid, R.; Mohebbi, M. Advancements in machine learning and deep learning for early detection and management of mental health disorder. arXiv 2024, arXiv:2412.06147.
14. Olawade, D.B.; Wada, O.Z.; Odetayo, A.; David-Olawade, A.C.; Asaolu, F.; Eberhardt, J. Enhancing mental health with Artificial Intelligence: Current trends and future prospects. J. Med. Surgery Public Health 2024, 3, 100099.
15. Nag, P.K.; Bhagat, A.; Priya, R.V.; Khare, D.K. Emotional intelligence through artificial intelligence: NLP and deep learning in the analysis of healthcare texts. In Proceedings of the 2023 International Conference on Artificial Intelligence for Innovations in Healthcare Industries (ICAIIHI), Raipur, India, 29–30 December 2023; IEEE: Piscataway, NJ, USA, 2023; Volume 1, pp. 1–7.
16. Zhang, T.; Schoene, A.M.; Ji, S.; Ananiadou, S. Natural language processing applied to mental illness detection: A narrative review. NPJ Digit. Med. 2022, 5, 46.
17. Zhang, Z.; Zhu, J.; Guo, Z.; Zhang, Y.; Li, Z.; Hu, B. Natural Language Processing for Depression Prediction on Sina Weibo: Method Study and Analysis. JMIR Ment. Health 2024, 11, e58259.
18. Mansoor, M.A.; Ansari, K.H. Early detection of mental health crises through artificial-intelligence-powered social media analysis: A prospective observational study. J. Pers. Med. 2024, 14, 958.
19. Gavalan, H.S.; Rastgoo, M.N.; Nakisa, B. A BERT-Based Summarization approach for depression detection. arXiv 2024, arXiv:2409.08483.
20. Saffar, A.H.; Mann, T.K.; Ofoghi, B. Textual emotion detection in health: Advances and applications. J. Biomed. Inform. 2023, 137, 104258.
21. Teferra, B.G.; Rueda, A.; Pang, H.; Valenzano, R.; Samavi, R.; Krishnan, S.; Bhat, V. Screening for Depression Using Natural Language Processing: Literature Review. Interact. J. Med. Res. 2024, 13, e55067.
22. Zhong, Z.; Wang, Z. Intelligent Depression Prevention via LLM-Based Dialogue Analysis: Overcoming the Limitations of Scale-Dependent Diagnosis through Precise Emotional Pattern Recognition. arXiv 2025, arXiv:2504.16504.
23. Qin, W.; Chen, Z.; Wang, L.; Lan, Y.; Ren, W.; Hong, R. Read, diagnose and chat: Towards explainable and interactive LLMs-augmented depression detection in social media. arXiv 2023, arXiv:2305.05138.
24. Li, H.; Zhang, R.; Lee, Y.C.; Kraut, R.E.; Mohr, D.C. Systematic review and meta-analysis of AI-based conversational agents for promoting mental health and well-being. NPJ Digit. Med. 2023, 6, 236.
25. Cui, X.; Gu, Y.; Fang, H.; Zhu, T. Development and Evaluation of LLM-Based Suicide Intervention Chatbot. Front. Psychiatry 2025, 16, 1634714.
26. Wang, Y.; Wang, Y.; Xiao, Y.; Escamilla, L.; Augustine, B.; Crace, K.; Zhou, G.; Zhang, Y. Evaluating an LLM-Powered Chatbot for Cognitive Restructuring: Insights from Mental Health Professionals. arXiv 2025, arXiv:2501.15599.
27. Hadjar, H.; Vu, B.; Hemmje, M. TheraSense: Deep Learning for Facial Emotion Analysis in Mental Health Teleconsultation. Electronics 2025, 14, 422.
28. Zhang, Z.; Zhang, S.; Ni, D.; Wei, Z.; Yang, K.; Jin, S.; Huang, G.; Liang, Z.; Zhang, L.; Li, L.; et al. Multimodal sensing for depression risk detection: Integrating audio, video, and text data. Sensors 2024, 24, 3714.
29. Gimeno-Gómez, D.; Bucur, A.M.; Cosma, A.; Martínez-Hinarejos, C.D.; Rosso, P. Reading between the frames: Multi-modal depression detection in videos from non-verbal cues. In Proceedings of the European Conference on Information Retrieval, Glasgow, UK, 24–28 March 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 191–209.
30. Park, S.; Kim, J.; Ye, S.; Jeon, J.; Park, H.Y.; Oh, A. Dimensional emotion detection from categorical emotion. arXiv 2019, arXiv:1911.02499.
31. Mitsios, M.; Vamvoukakis, G.; Maniati, G.; Ellinas, N.; Dimitriou, G.; Markopoulos, K.; Kakoulidis, P.; Vioni, A.; Christidou, M.; Oh, J.; et al. Improved text emotion prediction using combined valence and arousal ordinal classification. arXiv 2024, arXiv:2404.01805.
32. Fatima, A.; Li, Y.; Hills, T.T.; Stella, M. DASentimental: Detecting depression, anxiety, and stress in texts via emotional recall, cognitive networks, and machine learning. Big Data Cogn. Comput. 2021, 5, 77.
33. Buechel, S.; Hahn, U. EmoBank: Studying the impact of annotation perspective and representation format on dimensional emotion analysis. arXiv 2022, arXiv:2205.01996.
34. AI Hub. Emotional Dialogue Corpus. 2022. Available online: https://aihub.or.kr/aihubdata/data/view.do?dataSetSn=86 (accessed on 13 January 2025).
35. Mohammad, S.M. NRC VAD Lexicon v2: Norms for valence, arousal, and dominance for over 55k English terms. arXiv 2025, arXiv:2503.23547.
36. Park, S.; Moon, J.; Kim, S.; Cho, W.I.; Han, J.; Park, J.; Song, C.; Kim, J.; Song, Y.; Oh, T.; et al. KLUE: Korean language understanding evaluation. arXiv 2021, arXiv:2105.09680.
37. Vaiouli, P.; Panteli, M.; Panayiotou, G. Affective and psycholinguistic norms of Greek words: Manipulating their affective or psycho-linguistic dimensions. Curr. Psychol. 2023, 42, 10299–10309.
38. Kuppens, P.; Tuerlinckx, F.; Russell, J.A.; Barrett, L.F. The relation between valence and arousal in subjective experience. Psychol. Bull. 2013, 139, 917.
39. Nandy, R.; Nandy, K.; Walters, S.T. Relationship between valence and arousal for subjective experience in a real-life setting for supportive housing residents: Results from an ecological momentary assessment study. JMIR Form. Res. 2023, 7, e34989.
40. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Minneapolis, MN, USA, 2–7 June 2019; Volume 1, pp. 4171–4186.
41. Park, J. KoELECTRA: Pretrained ELECTRA Model for Korean. 2020. Available online: https://github.com/monologg/KoELECTRA (accessed on 13 June 2025).

| Data Source | Sentences | Preprocessing Method | Notes |
|---|---|---|---|
| Emotional dialogue corpus (AI Hub) [34] | 58,269 (87.54%) | First utterance selection; emotion-to-VAD mapping | VAD scores derived from NRC-VAD Lexicon |
| EmoBank [33] (translated and verified) | 8,290 (12.46%) | Korean translation with manual verification | Original VAD scores preserved |
| Total | 66,559 (100%) | | |
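Appendix B lists a 4:1:1 train/validation/test split and B = 10 equal-width bins on the 1–9 scale for sample weights. The following minimal sketch applies both to the 66,559 sentences counted in the table. The seeded shuffle, the floor rounding of split sizes, and the inverse-frequency weight formula are assumptions; Equations (8) and (9) are not reproduced here, so the weighting shown is only one plausible reading.

```python
import random
from collections import Counter


def split_indices(n, ratios=(4, 1, 1), seed=0):
    """Shuffle range(n) with a fixed seed and cut it by the given ratios.
    Any remainder from integer division goes to the last (test) split."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    total = sum(ratios)
    n_train = n * ratios[0] // total
    n_val = n * ratios[1] // total
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def bin_index(value, n_bins=10, lo=1.0, hi=9.0):
    """Equal-width bin on [lo, hi]; the top edge falls into the last bin."""
    width = (hi - lo) / n_bins
    return min(n_bins - 1, max(0, int((value - lo) / width)))


def inverse_frequency_weights(values, n_bins=10):
    """HYPOTHETICAL weighting: 1 / (count of the sample's bin),
    normalised so that the weights average to 1."""
    bins = [bin_index(v, n_bins) for v in values]
    counts = Counter(bins)
    raw = [1.0 / counts[b] for b in bins]
    mean_raw = sum(raw) / len(raw)
    return [w / mean_raw for w in raw]


# Split the full corpus size from the table.
train, val, test = split_indices(66_559)

# Weights would be computed from training-split labels only; toy values here.
weights = inverse_frequency_weights([1.5, 1.6, 8.9])
```

With floor rounding, 66,559 sentences split into 44,372 / 11,093 / 11,094; the actual split sizes used in the study are not given in this section.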

| Section | Metric | Valence (V) | Arousal (A) | Dominance (D) |
|---|---|---|---|---|
| VAD Regression | MSE | 1.0261 | 1.7010 | 0.9478 |
| | MAE | 0.6446 | 1.0147 | 0.7129 |
| | Pearson | 0.8843 | 0.5842 | 0.7220 |

| Section | Metric | Value |
|---|---|---|
| Multi-anchor (Regression) | MSE | 152.0647 |
| | MAE | 8.9024 |
| | Pearson | 0.8735 |
| Multi-anchor (Classification) | Accuracy | 0.9825 |
| | Precision | 0.9733 |
| | Recall | 0.9715 |
| | F1-Score | 0.9724 |
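The metrics reported above follow standard definitions. The sketch below implements them in plain Python, assuming the binary at-risk label is obtained by thresholding the depression score at 40 as listed in Appendix B. The function names and the `>=` comparison are assumptions. The last line checks that the reported F1-score is the harmonic mean of the reported precision and recall.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def binary_prf(score_true, score_pred, threshold=40.0):
    """Binarise both score lists at the threshold (ASSUMED: >= means at-risk)
    and return (precision, recall, F1)."""
    t = [s >= threshold for s in score_true]
    p = [s >= threshold for s in score_pred]
    tp = sum(a and b for a, b in zip(t, p))
    fp = sum((not a) and b for a, b in zip(t, p))
    fn = sum(a and (not b) for a, b in zip(t, p))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Consistency check on the reported classification row: rounds to 0.9724.
reported_f1 = 2 * 0.9733 * 0.9715 / (0.9733 + 0.9715)
```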

| No. | Model (Method) | MAE (Depression Score) | Pearson r | F1-Score (Binary) |
|---|---|---|---|---|
| 1 | SVR (VAD + Multi-anchor) | 13.52 | 0.6412 | 0.835 |
| 2 | BERT-base [40] (Simple Regression) | 12.24 | 0.7268 | 0.92 |
| 3 | BERT-base [40] (VAD + Multi-anchor) | 10.1975 | 0.8442 | 0.95 |
| 4 | KoELECTRA [41] (Simple Regression) | 11.43 | 0.7722 | 0.94 |
| 5 | KoELECTRA [41] (VAD + Multi-anchor) | 10.1642 | 0.8310 | 0.9590 |
| 6 | KLUE-RoBERTa-base [36] (Simple Regression) | 10.3516 | 0.7770 | 0.94 |
| 7 | KLUE-RoBERTa-base [36] (VAD + Multi-anchor) | 8.9024 | 0.87 | 0.97 |
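To make the comparison concrete, the relative MAE reduction from simple regression to VAD + multi-anchor scoring can be computed per backbone from the rows above. The snippet only restates the table's numbers; the dictionary layout and the function name are illustrative.

```python
# (simple regression MAE, VAD + multi-anchor MAE) per backbone, from the table.
MAE_BY_BACKBONE = {
    "BERT-base": (12.24, 10.1975),
    "KoELECTRA": (11.43, 10.1642),
    "KLUE-RoBERTa-base": (10.3516, 8.9024),
}


def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline


reductions = {
    name: relative_reduction(simple, multi)
    for name, (simple, multi) in MAE_BY_BACKBONE.items()
}

for name, pct in reductions.items():
    print(f"{name}: {pct:.1f}% lower MAE with VAD + multi-anchor")
```

This gives reductions of roughly 16.7%, 11.1%, and 14.0% for BERT-base, KoELECTRA, and KLUE-RoBERTa-base respectively.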

| No. | Sentence | Valence (Δ) | Arousal (Δ) | Dominance (Δ) | Dep. Score (Δ) |
|---|---|---|---|---|---|
| (1) | I’m sick, but my children don’t care about me—just about money. I feel betrayed. | 1.96 (−0.02) | 7.13 (−0.02) | 3.39 (−0.07) | 71.64 (0.60) |
| (2) | Something truly joyful happened during the holidays. | 8.66 (−0.18) | 7.59 (0.00) | 6.65 (−0.70) | 5.52 (5.52) |
| (3) | I feel much more at ease now that my retirement is approaching. | 8.66 (0.24) | 2.85 (0.55) | 4.77 (−0.01) | 21.00 (−1.34) |
| (4) | My wife has been abroad for a long time, and I feel very depressed—like I’ve been left behind. | 1.84 (0.65) | 4.67 (0.11) | 2.62 (0.53) | 97.90 (−2.09) |
| (5) | Why did I have to get this illness and keep going to the hospital? I’m so sick and tired of it. | 1.93 (0.10) | 7.38 (0.12) | 3.77 (0.01) | 67.51 (−1.35) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lim, D.; Lee, K.; Jo, J.; Lim, H.; Bae, H.; Kang, C. Web-Based Platform for Quantitative Depression Risk Prediction via VAD Regression on Korean Text and Multi-Anchor Distance Scoring. Appl. Sci. 2025, 15, 10170. https://doi.org/10.3390/app151810170
