1. Introduction

Contemporary industrial systems have expanded rapidly, and the objects they control are becoming higher-dimensional, more strongly nonlinear, and subject to more complex operational objectives. This shift places substantially higher demands on control system design. Reinforcement learning (RL) has emerged as a particularly promising solution because it does not require complete a priori knowledge of the environment’s dynamics. This capability enables robotic systems, automated equipment, and other intelligent agents to adapt and optimize their behavior autonomously in dynamic, uncertain environments, improving operational efficiency, reducing resource expenditure, and supporting more sophisticated task execution. In domains such as large language models (LLMs), embodied intelligence, and autonomous driving, RL has proven instrumental in developing more flexible and responsive solutions that drive technological innovation.

The conceptual foundations of RL can be traced back to the mid-20th century, when Minsky [1] first formalized the notions of “reinforcement” and “reinforcement learning”. His seminal work emphasized the critical role of reward–punishment mechanisms in learning and identified trial and error as the fundamental paradigm of RL.

From a methodological perspective, dynamic programming provided essential mathematical tools for RL. Bellman’s 1960 formulation of dynamic programming [2,3] not only established the theoretical framework for solving Markov Decision Processes (MDPs) but also introduced the Bellman equation, which remains the cornerstone of modern RL algorithms. Subsequent work on policy iteration further refined the MDP solution framework. However, these early developments remained largely theoretical until the introduction of Q-learning [4]. Q-learning made practical applications possible by learning optimal action policies directly from experience, without an explicit model of the reward function or the state transition dynamics. As a model-free approach that provably converges to the optimal action values in finite MDPs (given sufficient exploration and appropriately decaying learning rates), Q-learning represented a watershed moment in RL research.

The 21st century witnessed transformative advances with the integration of deep learning. In 2013, DeepMind’s pioneering work [5] combined deep neural networks with Q-learning to create Deep Q-Networks (DQNs), effectively addressing the challenge of representing high-dimensional state spaces. This innovation spawned numerous advanced algorithms, including DDQN [6], TRPO [7], PPO [8], DDPG [9], A3C [10], SAC [11], TD3 [12], and others [13,14,15], collectively propelling RL into mainstream artificial intelligence research and applications.

While deep neural networks have significantly enhanced the ability of RL agents to handle complex decision-making tasks, conventional RL methods require continuous interaction with the environment to collect experience during training. This exploration is often costly in real-world applications. Agents typically require millions of interactions to learn effective policies, which is particularly problematic in domains such as robotic control and autonomous driving, where such extensive interaction is not only cost-prohibitive but also poses significant safety risks. Furthermore, the randomness of environmental interaction, combined with frequent policy updates during exploration, can make gradient-based training unstable.

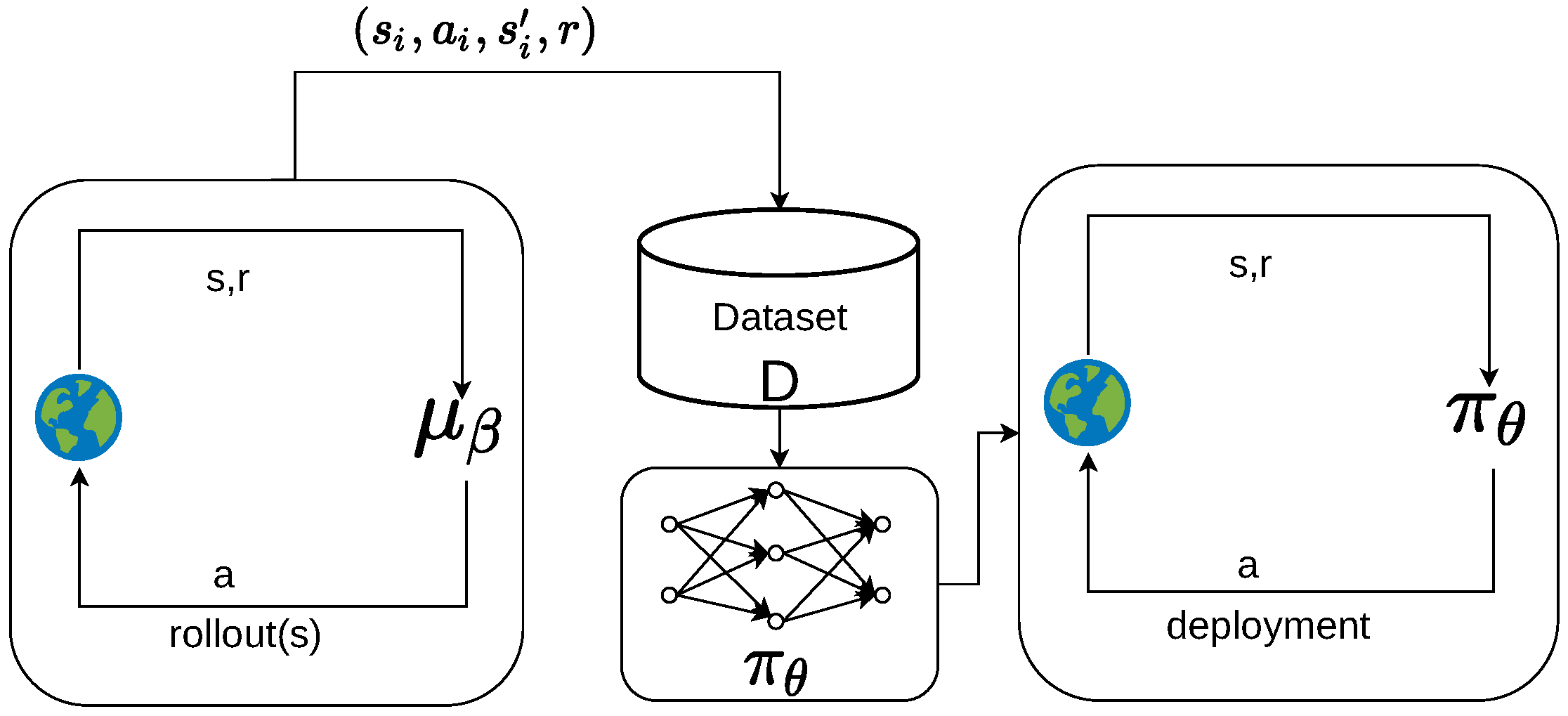

Offline reinforcement learning (Figure 1), also known as batch reinforcement learning, has emerged as a promising research direction to address these challenges. This paradigm learns policies exclusively from pre-collected datasets, eliminating the need for online interaction with the environment. Its principal advantages are threefold. First, training on a fixed dataset substantially improves training stability. Second, eliminating real-time interaction allows historical data to be fully exploited, which is particularly valuable in high-cost or safety-critical applications (e.g., healthcare, autonomous vehicles, and robotics). Finally, offline RL enables policy optimization on large-scale offline datasets, mitigating the sample inefficiency of traditional RL while improving data utilization.

However, offline RL introduces unique challenges. Because it relies solely on pre-collected data, standard RL methods suffer from distributional shift during policy optimization: the learned policy may select out-of-distribution (OOD) actions that the dataset does not cover. Q-learning exacerbates this issue through extrapolation error, overestimating the values of OOD actions. This overestimation triggers a destructive feedback loop: the policy increasingly selects overestimated OOD actions, and bootstrapping on these actions further amplifies the Q-function’s errors, ultimately causing significant degradation in policy performance.

Prior research in offline RL has typically addressed this problem through one of four approaches. 1. Policy constraints [12,16,17,18,19,20]: restricting the policy from selecting actions that deviate substantially from the offline data distribution. Although effective in preventing OOD action selection and the resulting Q-value overestimation, this approach makes policy learning critically dependent on dataset quality. 2. Uncertainty estimation [21,22]: adjusting the policy according to estimated uncertainty (e.g., in the policy or value function) to avoid decisions in high-uncertainty regions. Practical implementations often underestimate uncertainty, which can increase OOD action selection. 3. Regularization [19,23,24]: adding regularization terms without imposing explicit policy constraints. Being generally less conservative than policy constraints, this approach often achieves better performance in practice. 4. Trajectory optimization [25,26]: framing RL as a sequence generation task with multiple state–action anchor points along each trajectory. Although this formulation in principle avoids distributional shift, it demands substantial computational resources and suffers from slow inference.

In most prior work, the policy is parameterized as a Gaussian distribution whose mean and (often diagonal) covariance are output by a neural network. In offline RL, however, datasets are frequently collected by a mixture of diverse behavior policies. Consequently, the true underlying behavior policy may exhibit complex characteristics such as strong multimodality, significant skewness, or intricate dependencies between action dimensions, which a standard diagonal Gaussian policy cannot adequately model [27]. This limited representational capacity can severely constrain the expressiveness of the learned policy. As a result, offline RL methods that rely on this restrictive parameterization, particularly those that use policy regularization, tend to converge to suboptimal policies and may perform slightly worse than alternative approaches.
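The following minimal sketch illustrates this limitation in one dimension. It is a hypothetical example, not taken from the experiments: a dataset mixes two behavior policies that choose the actions $-1$ and $+1$, and a single Gaussian is fitted to it by maximum likelihood. The fitted Gaussian places its highest density at $0$, an action that neither behavior policy ever takes.

```python
import math
import statistics


def fit_gaussian(actions):
    """Maximum-likelihood fit of a 1-D Gaussian: sample mean and population std."""
    mean = statistics.fmean(actions)
    std = statistics.pstdev(actions, mean)
    return mean, std


def gaussian_pdf(x, mean, std):
    """Density of N(mean, std^2) evaluated at x."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


# Hypothetical bimodal dataset: half of the transitions come from a behavior
# policy that pushes left (-1), the other half from one that pushes right (+1).
actions = [-1.0] * 50 + [1.0] * 50
mean, std = fit_gaussian(actions)

density_at_gap = gaussian_pdf(0.0, mean, std)   # action never taken in the data
density_at_mode = gaussian_pdf(1.0, mean, std)  # action actually taken in the data
print(f"mean={mean:.2f} std={std:.2f}")
print(f"p(0)={density_at_gap:.3f} p(+1)={density_at_mode:.3f}")
```

A model that can represent multimodal distributions, such as a diffusion model, can instead place probability mass at both data modes.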

Owing to their strong generative modeling performance, diffusion models have increasingly been adopted as policy networks in recent studies, as exemplified by Diffuser [28] and Diffusion Policy [29]. However, these methods train the policy solely by behavioral cloning without directly optimizing it, so their performance depends critically on the scale of the neural network, and their real-time efficiency is often limited. In contrast, Diffusion-QL, like our approach, uses the Q-function for policy improvement to learn the optimal policy. Nevertheless, its training process requires frequent sampling, which substantially increases computational overhead. Notably, if the action distribution of the dataset is modeled as a perturbed optimal policy distribution, then, based on prior studies [30], the later stages of the denoising process have the most significant impact on sample quality, and the noise prediction in these final denoising steps can be viewed as estimating the perturbation noise of the optimal policy distribution. This insight enables highly efficient sampling during training with only a small number of diffusion steps.

Building on these observations, we propose DQS, a novel reinforcement learning algorithm that models the dataset’s action distribution as a noise-injected optimal policy distribution and employs a diffusion (score-based) model for policy regularization. Specifically, DQS uses a multilayer perceptron (MLP)-based Denoising Diffusion Probabilistic Model (DDPM) as its policy. The training objective comprises two components: 1. a behavioral cloning term, which keeps the policy aligned with the empirical action distribution of the training data, and 2. a policy optimization term, in which the Q-function guides the diffusion model’s noise prediction during the later stages of denoising. Crucially, this framework enables highly efficient training because only a few denoising steps are required during sampling.

In summary, this paper contributes DQS, an improved offline RL algorithm that leverages the strong distribution-learning capability of diffusion models for precise policy regularization while using Q-learning to guide noise prediction for suboptimal actions toward optimal actions. Comprehensive evaluation on the D4RL offline RL benchmark demonstrates that DQS achieves performance comparable or superior to recent offline RL baselines.

2. Preliminaries

This section presents the formal definition of Markov Decision Processes, the use of weighted regression for policy improvement, and the mathematical foundations of diffusion models, which together establish the theoretical basis for the method developed in Section 3.

2.1. Offline Reinforcement Learning

A Markov Decision Process (MDP) is formally defined as a tuple $(\mathcal{S}, \mathcal{A}, P, r, \gamma)$. $\mathcal{S}$ is the state space, the set of all possible states. $\mathcal{A}$ is the action space, the set of all possible actions. $P(s' \mid s, a)$ is the state transition probability function, which specifies the probability of transitioning to state $s'$ from state $s$ when taking action $a$; it satisfies $\sum_{s' \in \mathcal{S}} P(s' \mid s, a) = 1$ for all $(s, a) \in \mathcal{S} \times \mathcal{A}$. $r(s, a)$ is the reward function, which defines the immediate reward received upon taking action $a$ in state $s$. $\gamma \in [0, 1)$ is the discount factor, which weights the importance of immediate versus future rewards.

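Markov Decision Processes constitute the theoretical foundation of reinforcement learning, providing a mathematical framework for modeling the interaction between an intelligent agent and its environment. The primary objective in reinforcement learning is to learn an optimal policy $\pi^*$ by maximizing the expected cumulative discounted return, denoted by $J(\pi)$:

$$J(\pi) = \mathbb{E}_{\tau \sim \pi}\left[\sum_{t=0}^{\infty} \gamma^{t}\, r(s_t, a_t)\right] \tag{1}$$

Here, $\tau = (s_0, a_0, s_1, a_1, \ldots)$ denotes a trajectory (a sequence of state–action pairs) generated by following policy $\pi$.

Specifically, the action–value function $Q^{\pi}(s, a)$ is defined as the expected cumulative discounted return obtained by taking action $a$ in state $s$ and thereafter following policy $\pi$:

$$Q^{\pi}(s, a) = \mathbb{E}_{\pi}\left[R_t \mid s_t = s,\, a_t = a\right] \tag{2}$$

where $R_t = \sum_{k=0}^{\infty} \gamma^{k}\, r(s_{t+k}, a_{t+k})$ is the cumulative discounted return from timestep $t$.

The action–value function $Q^{\pi}$ satisfies the Bellman equation, which relates the value of a state–action pair to the expected value of the subsequent state–action pair:

$$Q^{\pi}(s, a) = r(s, a) + \gamma\, \mathbb{E}_{s' \sim P(\cdot \mid s, a),\, a' \sim \pi(\cdot \mid s')}\left[Q^{\pi}(s', a')\right] \tag{3}$$

Maximizing the reinforcement learning objective $J(\pi)$ is equivalent to maximizing the expected action value $\mathbb{E}_{s_0,\, a_0 \sim \pi(\cdot \mid s_0)}\left[Q^{\pi}(s_0, a_0)\right]$.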
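As an illustration of the Bellman equation, the sketch below evaluates a fixed policy on a hypothetical two-state, two-action MDP by applying the Bellman backup repeatedly until the Q-values stop changing. The MDP, its rewards, and the policy are invented for illustration. Because the backup is a $\gamma$-contraction, the iteration converges to the unique $Q^{\pi}$ that satisfies the Bellman equation.

```python
# Hypothetical 2-state, 2-action MDP, used only to illustrate the Bellman equation.
STATES = (0, 1)
ACTIONS = (0, 1)
GAMMA = 0.9

# P[s][a] maps next states s' to P(s' | s, a); R[s][a] is the reward r(s, a).
P = {
    0: {0: {0: 1.0}, 1: {1: 1.0}},
    1: {0: {0: 1.0}, 1: {0: 0.5, 1: 0.5}},
}
R = {
    0: {0: 0.0, 1: 1.0},
    1: {0: 0.0, 1: 2.0},
}
# A fixed stochastic policy pi(a | s) to be evaluated.
PI = {
    0: {0: 0.5, 1: 0.5},
    1: {0: 0.2, 1: 0.8},
}


def bellman_backup(q):
    """Apply Q(s, a) <- r(s, a) + gamma * E_{s' ~ P, a' ~ pi}[Q(s', a')] once."""
    new_q = {}
    for s in STATES:
        new_q[s] = {}
        for a in ACTIONS:
            expected_next = sum(
                p * sum(PI[s2][a2] * q[s2][a2] for a2 in ACTIONS)
                for s2, p in P[s][a].items()
            )
            new_q[s][a] = R[s][a] + GAMMA * expected_next
    return new_q


def evaluate_policy(tol=1e-10):
    """Iterate the backup from Q = 0 until successive iterates differ by less than tol."""
    q = {s: {a: 0.0 for a in ACTIONS} for s in STATES}
    while True:
        q_next = bellman_backup(q)
        delta = max(abs(q_next[s][a] - q[s][a]) for s in STATES for a in ACTIONS)
        q = q_next
        if delta < tol:
            return q


Q = evaluate_policy()
for s in STATES:
    print(s, {a: round(v, 3) for a, v in Q[s].items()})
```

At the returned fixed point, one more backup leaves the Q-values unchanged up to the tolerance, which is exactly the statement of the Bellman equation for $\pi$.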

In contrast to online reinforcement learning, offline reinforcement learning optimizes the policy using only a static dataset $\mathcal{D}$ of previously collected transitions, without any interaction with the environment. This dependency introduces significant challenges, including distribution shift (or covariate shift) and extrapolation error, because the learned policy may select actions outside the distribution of the dataset’s behavior policy $\mu$. To address these challenges, a common approach in offline RL is to constrain the learned policy to stay close to the behavior policy $\mu$ that generated $\mathcal{D}$ while concurrently optimizing for high Q-values.

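This objective is formally expressed as maximizing the expected Q-value under the behavior policy’s state distribution $d_{\mu}(s)$, penalized by the KL divergence between the learned policy $\pi$ and the behavior policy $\mu$:

$$\max_{\pi}\; \mathbb{E}_{s \sim d_{\mu}(s)}\left[\mathbb{E}_{a \sim \pi(\cdot \mid s)}\left[Q_{\phi}(s, a)\right] - \frac{1}{\beta}\, D_{\mathrm{KL}}\left(\pi(\cdot \mid s) \,\|\, \mu(\cdot \mid s)\right)\right] \tag{4}$$

Here, $Q_{\phi}$ denotes the learned action–value function parameterized by $\phi$, $d_{\mu}(s)$ is the stationary state distribution induced by the behavior policy $\mu$, and $D_{\mathrm{KL}}$ represents the Kullback–Leibler divergence. The term $D_{\mathrm{KL}}(\pi \,\|\, \mu)$ acts as a regularizer, scaled by $1/\beta$ (where $\beta > 0$ is a hyperparameter), that penalizes deviations of the learned policy $\pi$ from the behavior policy $\mu$.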

2.2. Policy Improvement via Weighted Regression

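The optimal policy $\pi^*$ for the objective in Equation (4) is derived using Lagrange multipliers, yielding [17]

$$\pi^*(a \mid s) = \frac{1}{Z(s)}\, \mu(a \mid s) \exp\left(\beta\, Q_{\phi}(s, a)\right) \tag{5}$$

where $Z(s) = \int_{\mathcal{A}} \mu(a \mid s) \exp\left(\beta\, Q_{\phi}(s, a)\right) \mathrm{d}a$ is the partition function. This expression for $\pi^*$ represents a policy improvement step.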
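For a single state with finitely many actions, Equation (5) can be checked numerically. The sketch below uses a hypothetical three-action example (the values of `mu`, `q`, and `beta` are invented), computes $\pi^*$ in closed form, and compares the regularized objective of Equation (4) at $\pi^*$ against two alternatives: the behavior policy itself and a greedy policy that always takes the highest-Q action. The discrete setting is a simplification of the continuous action spaces considered in the text.

```python
import math


def kl_regularized_objective(pi, mu, q, beta):
    """E_{a~pi}[Q(s, a)] - (1 / beta) * KL(pi || mu) for one state with discrete actions."""
    expected_q = sum(p * qa for p, qa in zip(pi, q))
    kl = sum(p * math.log(p / m) for p, m in zip(pi, mu) if p > 0)
    return expected_q - kl / beta


def optimal_policy(mu, q, beta):
    """pi*(a | s) = mu(a | s) * exp(beta * Q(s, a)) / Z(s)."""
    unnormalized = [m * math.exp(beta * qa) for m, qa in zip(mu, q)]
    z = sum(unnormalized)  # partition function Z(s)
    return [u / z for u in unnormalized]


# Hypothetical single-state example with three discrete actions.
mu = [0.6, 0.3, 0.1]  # behavior policy mu(a | s)
q = [1.0, 2.0, 3.0]   # Q_phi(s, a)
beta = 1.0

pi_star = optimal_policy(mu, q, beta)
best = kl_regularized_objective(pi_star, mu, q, beta)
print("pi* =", [round(p, 3) for p in pi_star])
print("objective at pi*:", round(best, 4))
print("objective at mu:", round(kl_regularized_objective(mu, mu, q, beta), 4))
print("objective greedy:", round(kl_regularized_objective([0.0, 0.0, 1.0], mu, q, beta), 4))
```

At $\pi^*$ the objective equals $\frac{1}{\beta}\log Z(s)$. Larger $\beta$ moves $\pi^*$ toward the greedy policy, and smaller $\beta$ moves it toward $\mu$.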

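Directly sampling from $\pi^*$ requires explicit modeling of the behavior policy $\mu$. This poses a considerable challenge, particularly in continuous action spaces, because $\mu$ can be highly complex or multimodal. Previous methods [17] address this challenge by projecting the optimal policy $\pi^*$ onto a parameterized policy class $\pi_{\theta}$. This projection is typically accomplished by minimizing the KL divergence between $\pi^*$ and $\pi_{\theta}$:

$$\arg\min_{\theta}\; \mathbb{E}_{s \sim d_{\mu}(s)}\left[D_{\mathrm{KL}}\left(\pi^*(\cdot \mid s) \,\|\, \pi_{\theta}(\cdot \mid s)\right)\right] = \arg\max_{\theta}\; \mathbb{E}_{(s, a) \sim \mathcal{D}}\left[\frac{1}{Z(s)} \log \pi_{\theta}(a \mid s) \exp\left(\beta\, Q_{\phi}(s, a)\right)\right] \tag{6}$$

This approach is typically referred to as weighted regression, or advantage-weighted regression when $Q$ is replaced by the advantage $A(s, a) = Q(s, a) - V(s)$, where $\exp(\beta\, Q_{\phi}(s, a))$ serves as the regression weight.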
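A minimal sketch of the weighted-regression step in Equation (6), under simplifying assumptions: a single state, scalar actions, and a Gaussian policy with fixed standard deviation. In that case, maximizing the weighted log-likelihood over the mean reduces to a weighted average of the dataset actions. The actions, the Q-values, and the use of the mean Q-value as the baseline $V(s)$ are hypothetical. With $\beta = 0$ the update is plain behavior cloning; as $\beta$ grows, the fitted mean moves toward the highest-value action in the data.

```python
import math


def regression_weights(q_values, beta):
    """exp(beta * A(s, a)) with advantage A = Q - V, taking V as the mean Q-value."""
    v = sum(q_values) / len(q_values)
    return [math.exp(beta * (q - v)) for q in q_values]


def weighted_gaussian_mean(actions, weights):
    """argmax over m of sum_i w_i * log N(a_i; m, sigma^2) for fixed sigma: the weighted mean."""
    return sum(w * a for w, a in zip(weights, actions)) / sum(weights)


# Hypothetical dataset actions for one state and their critic values Q(s, a).
actions = [-1.0, 0.0, 1.0, 1.2]
q_values = [0.0, 0.5, 2.0, 2.1]

fitted_means = {
    beta: weighted_gaussian_mean(actions, regression_weights(q_values, beta))
    for beta in (0.0, 1.0, 10.0)
}
for beta, m in fitted_means.items():
    print(f"beta={beta:>4}: fitted mean = {m:.3f}")
```

The fitted mean always stays within the range of actions present in the dataset, which is how weighted regression keeps the policy close to $\mu$ while still favoring high-value actions.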

2.3. Diffusion Models

Diffusion models [31,32] are a prominent class of deep generative models that, inspired by non-equilibrium thermodynamics, learn to reconstruct data from noise iteratively. These models have achieved notable performance in various generation tasks, including image, audio, and video synthesis. The framework comprises two fundamental phases: the forward process and the reverse process.

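Forward Process: The forward process, also known as the diffusion phase, gradually transforms an original data sample $x_0$ into pure Gaussian noise over $T$ discrete timesteps. This transformation is represented as

$$q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\, \sqrt{1 - \beta_t}\, x_{t-1},\, \beta_t \mathbf{I}\right), \qquad x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon \tag{7}$$

Here, $\beta_t$ denotes the noise intensity at step $t$, $\epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})$ is standard normal noise, and $\bar{\alpha}_t = \prod_{i=1}^{t} \alpha_i$, with $\alpha_i = 1 - \beta_i$, is the scaling factor at timestep $t$.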
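The closed-form expression $x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon$ means that $x_t$ can be sampled from $x_0$ in a single step rather than by iterating over $t$ steps. The sketch below assumes a linear $\beta$ schedule from $10^{-4}$ to $0.02$ with $T = 1000$, the values used in the original DDPM work. These settings are illustrative only and are not those of DQS, which uses just a few diffusion steps and would therefore need a schedule whose $\bar{\alpha}_T$ is still close to zero.

```python
import math
import random


def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Noise intensities beta_1..beta_T spaced linearly (assumed schedule)."""
    if T == 1:
        return [beta_end]
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]


def alpha_bars(betas):
    """Scaling factors alpha_bar_t = prod_{i<=t} (1 - beta_i), for t = 1..T."""
    result, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        result.append(prod)
    return result


def q_sample(x0, t, abar, rng):
    """Draw x_t = sqrt(abar_t) * x0 + sqrt(1 - abar_t) * eps for 1-indexed t; return (x_t, eps)."""
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    a = abar[t - 1]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * e for x, e in zip(x0, eps)]
    return x_t, eps


betas = linear_beta_schedule(1000)
abar = alpha_bars(betas)
x_t, eps = q_sample([0.5, -0.3], t=500, abar=abar, rng=random.Random(0))
print(f"alpha_bar_1={abar[0]:.4f}, alpha_bar_T={abar[-1]:.2e}")
```

Because $\bar{\alpha}_t$ decreases monotonically toward zero, $x_T$ is essentially pure Gaussian noise at the end of the chain.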

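Reverse Process: The reverse process is the central component of diffusion models; it learns to gradually reconstruct the data $x_{t-1}$ from noisy observations $x_t$. This parameterized Markov chain is realized by a neural network, with the transition given by

$$p_{\theta}(x_{t-1} \mid x_t) = \mathcal{N}\left(x_{t-1};\, \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}}\, \epsilon_{\theta}(x_t, t)\right),\, \sigma_t^2 \mathbf{I}\right) \tag{8}$$

In this formulation, $\epsilon_{\theta}$ denotes the parameterized noise prediction network, and $\sigma_t^2$ represents the noise variance.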
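A single reverse transition can be written out directly. Its mean is $\left(x_t - \frac{\beta_t}{\sqrt{1 - \bar{\alpha}_t}}\, \epsilon_{\theta}\right) / \sqrt{\alpha_t}$. The sketch below assumes the common choice $\sigma_t^2 = \beta_t$ and treats the noise prediction as a given vector; in DQS it would come from the MLP $\epsilon_{\theta}$. The three-step schedule is hypothetical. As a sanity check, if the predicted noise at $t = 1$ equals the noise actually used to produce $x_1$ from $x_0$, the reverse mean recovers $x_0$ exactly.

```python
import math
import random


def alpha_bars(betas):
    """alpha_bar_t = prod_{i<=t} (1 - beta_i), for t = 1..T."""
    result, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        result.append(prod)
    return result


def p_sample_step(x_t, t, betas, abar, eps_pred, rng):
    """One reverse step x_t -> x_{t-1} (t is 1-indexed).

    Mean: (x_t - beta_t / sqrt(1 - abar_t) * eps_pred) / sqrt(alpha_t).
    Variance: sigma_t^2 = beta_t (an assumed, commonly used choice); no noise at t = 1.
    """
    beta_t = betas[t - 1]
    alpha_t = 1.0 - beta_t
    coef = beta_t / math.sqrt(1.0 - abar[t - 1])
    mean = [(x - coef * e) / math.sqrt(alpha_t) for x, e in zip(x_t, eps_pred)]
    if t == 1:
        return mean
    sigma_t = math.sqrt(beta_t)
    return [m + sigma_t * rng.gauss(0.0, 1.0) for m in mean]


# Sanity check with a hypothetical 3-step schedule and an "oracle" noise prediction.
betas = [0.1, 0.2, 0.3]
abar = alpha_bars(betas)
x0 = [0.5, -1.0]
eps = [0.3, -0.7]
x1 = [math.sqrt(abar[0]) * x + math.sqrt(1.0 - abar[0]) * e for x, e in zip(x0, eps)]
recovered = p_sample_step(x1, 1, betas, abar, eps, random.Random(0))
print(recovered)
```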

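The neural network training objective is expressed as

$$\mathcal{L}(\theta) = \mathbb{E}_{t \sim \mathcal{U}\{1, \ldots, T\},\, x_0,\, \epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})}\left[\left\| \epsilon - \epsilon_{\theta}\left(\sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,\, t\right) \right\|^2\right] \tag{9}$$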

3. Diffusion-Q Synergy

As the control requirements of intelligent systems grow increasingly complex (e.g., dynamic gait regulation in quadrupedal robots and multimodal decision-making in autonomous vehicles), the limitations of conventional control methods applied to high-dimensional, nonlinear, and time-varying systems have become more pronounced. To address these challenges, we propose DQS, a novel offline reinforcement learning algorithm based on the diffusion model.

This method exploits the progressive distribution-fitting capability of diffusion models to perform behavioral cloning of the policy contained in the dataset. Furthermore, theoretical analysis establishes a mathematical equivalence between the reverse denoising process of the diffusion model and the policy learning process. This equivalence ensures that the learned policy $\pi_{\theta}$ remains aligned with the dataset’s behavior policy while the Q-learning-guided noise prediction network $\epsilon_{\theta}$ generates improved denoised actions.

3.1. Diffusion Policy Cloning

To clearly distinguish between two distinct temporal processes, the following notation is adopted: a subscript (e.g., the $t$ in $a_t$) denotes a discrete timestep of the diffusion process, whereas a superscript denotes a timestep within the reinforcement learning (RL) trajectory.

In RL, a policy $\pi(a \mid s)$ is formally defined as a conditional probability distribution over actions given a specific state. A diffusion model, as a generative model, can likewise be viewed as a conditional probability distribution: it describes how a final output (e.g., an action $a$) is generated from initial random noise, conditioned on a given state $s$, through an iterative reverse denoising process. Given the demonstrated ability of diffusion models to accurately model and generate complex data distributions, we posit that they can be used directly to parameterize policies in RL.

In this context, $\pi_{\theta}(a \mid s)$ represents the learned policy, and the reverse process of the diffusion model gives the likelihood of generating a clean sample $a_0$ given the state $s$ and the final noisy sample $a_T$.

A mathematical equivalence is established between the conditional generation process of the diffusion model and the process of RL policy optimization. Consequently, the standard reinforcement learning objective may be reformulated as follows:

Here, $\theta$ represents the set of learnable parameters, and $\pi^*$ denotes the optimal policy.

Remark 1.

$\pi^*$ is defined as the policy that maximizes $J(\pi)$: if $\pi_{\theta}$ matches $\pi^*$, i.e., $\pi_{\theta} = \pi^*$, then $J(\pi_{\theta})$ attains its maximum value. Therefore, minimizing the discrepancy between $\pi_{\theta}$ and $\pi^*$ can serve as a surrogate objective for maximizing $J(\pi_{\theta})$, as expressed in Equation (12).

Remark 2.

Within the framework presented in Equation (10), the policy improvement process of reinforcement learning is modeled as the reverse denoising trajectory of the diffusion model, in which a clean sample $a_0$ is generated from noise $\epsilon$. This formulation requires two conditions: 1. the diffusion model must have sufficient capacity to approximate arbitrary policy distributions, a capability previously demonstrated in ref. [33]; and 2. the optimal policy $\pi^*$ must be known or reliably estimable. In offline RL, however, $\pi^*$ is typically unknown and must therefore be inferred from a dataset generated by a suboptimal behavior policy, denoted by $\mu$. To apply

definition to the objective function,

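Following the methodology established in [31], the diffusion model’s training objective (Equation (9)) relates to the state-conditioned noise prediction error:

$$\mathcal{L}_{\mathrm{bc}}(\theta) = \mathbb{E}_{(s, a) \sim \mathcal{D},\, t \sim \mathcal{U}\{1, \ldots, T\},\, \epsilon \sim \mathcal{N}(\mathbf{0}, \mathbf{I})}\left[\left\| \epsilon - \epsilon_{\theta}\left(\sqrt{\bar{\alpha}_t}\, a + \sqrt{1 - \bar{\alpha}_t}\, \epsilon,\, s,\, t\right) \right\|^2\right]$$

Thus, minimizing $\mathcal{L}_{\mathrm{bc}}$ is equivalent to minimizing the diffusion model’s noise prediction error.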
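The behavior-cloning objective, a noise prediction error conditioned on the state, can be estimated by Monte Carlo sampling over a batch. The sketch below is written in plain Python for clarity. `eps_model` is a hypothetical stand-in for the MLP noise predictor $\epsilon_{\theta}(a_t, s, t)$, and the schedule and dataset are invented. A real implementation would compute the same quantity in an autodiff framework so that the loss can be differentiated with respect to $\theta$. An oracle predictor that knows the clean action drives the loss to zero, whereas a predictor that always outputs zero does not.

```python
import math
import random


def bc_noise_loss(batch, eps_model, abar, rng):
    """Monte Carlo estimate of E ||eps - eps_model(a_t, s, t)||^2 over (s, a) pairs.

    For each pair, a diffusion step t ~ U{1..T} and noise eps ~ N(0, I) are drawn,
    and a_t = sqrt(abar_t) * a + sqrt(1 - abar_t) * eps is formed in closed form.
    """
    T = len(abar)
    total = 0.0
    for s, a in batch:
        t = rng.randint(1, T)  # randint is inclusive on both ends
        eps = [rng.gauss(0.0, 1.0) for _ in a]
        sa, sn = math.sqrt(abar[t - 1]), math.sqrt(1.0 - abar[t - 1])
        a_t = [sa * ai + sn * e for ai, e in zip(a, eps)]
        pred = eps_model(a_t, s, t)
        total += sum((e - p) ** 2 for e, p in zip(eps, pred))
    return total / len(batch)


# Hypothetical 3-step schedule and a tiny dataset mapping states to actions.
abar = [0.9, 0.5, 0.1]
data = {(0.0,): [0.3, -0.2], (1.0,): [-0.5, 0.8]}
batch = list(data.items())


def oracle_eps(a_t, s, t):
    """Inverts the forward process using the true action, so it predicts eps exactly."""
    a = data[s]
    sa, sn = math.sqrt(abar[t - 1]), math.sqrt(1.0 - abar[t - 1])
    return [(x - sa * ai) / sn for x, ai in zip(a_t, a)]


oracle_loss = bc_noise_loss(batch, oracle_eps, abar, random.Random(0))
zero_loss = bc_noise_loss(batch, lambda a_t, s, t: [0.0] * len(a_t), abar, random.Random(0))
print(oracle_loss, zero_loss)
```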

3.2. Q-Learning-Guided Noise Prediction

Remark 3.

The Q-learning-guided objective can be reformulated by using the KL divergence to quantify the dissimilarity between the optimal policy and the denoised action distribution for a given state, thereby guiding the noise prediction for suboptimal actions. However, as with Equation (12), the optimal policy $\pi^*$ is unknown, so its relationship to other components must be determined. As shown in the preliminaries (Equations (5) and (6)), connections have been established between the behavior policy $\mu$ and the optimal policy $\pi^*$, as well as between the parameterized policy $\pi_{\theta}$ and $\pi^*$. Correspondingly, a relationship between the denoising model and the optimal policy can be derived. Building on the weighted regression formulation in Equation (6), it follows that

In offline reinforcement learning, weighted regression constrains the policy to remain close to $\mu$ while using Q-values as regression weights to direct policy optimization. Similarly, the Q-learning-guided term in the objective function adjusts the direction of noise prediction while preserving the overall noise prediction trajectory (which is ensured by the behavior cloning term), thereby moving the policy closer to the optimal policy. Consequently, the Q-learning-guided objective is simplified by adapting the weighted regression methodology:

where

functions as a normalization factor for the Q-function.

Substituting Equation (14) into the Q-learning-guided objective gives

As indicated in Equation (17), this objective ultimately reduces to evaluating the generated (denoised) actions with the Q-network $Q_{\phi}$. This process adjusts the diffusion model’s parameters $\theta$ so that the policy yields higher expected Q-values. Because the objective is defined through the Q-function, accurate Q-value estimation is critical. To mitigate overestimation, double Q-learning is employed for Q-function estimation. Specifically, two Q-networks, $Q_{\phi_1}$ and $Q_{\phi_2}$, independently estimate action values, and each is updated by minimizing the squared error between its estimate and a bootstrapped target value. This reduces overestimation bias and improves the stability of policy optimization. Furthermore, target networks are used to stabilize training. These networks, updated by an exponential moving average (EMA), serve as slowly updated copies of the online networks for computing Q-value targets. The objective function for Q-learning is formulated as

After simplification, the complete optimization objective is expressed as

This section presents the derivation of the policy optimization term as a formulation that maximizes the expected Q-value of denoised actions. This derivation is predicated upon weighted regression under the premise of an unknown optimal policy. Through the application of double Q-learning and target networks, the stability and accuracy of Q-value estimation are enhanced. The final optimization objective integrates both the noise prediction error and Q-function-guided policy optimization, thereby ensuring behavior cloning while simultaneously adjusting the direction of noise prediction to approximate the optimal policy.
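One way to realize the final objective is sketched below under explicitly assumed details. Following the description of optimized actions in this section, a dataset action is noised to a small diffusion step `k`, and only the last `k` reverse steps are run to obtain $\hat{a}$. The Q-term is then normalized by the batch mean of $|Q|$; this common scale-invariance choice is an assumption here, not the paper’s stated normalization. The step count `k`, the weight `eta`, the schedule, and the callables are hypothetical. The plain-Python version only evaluates the loss; training would require computing it in an autodiff framework so that gradients reach $\theta$ through both terms.

```python
import math
import random


def late_stage_denoise(a, s, k, betas, abar, eps_model, rng):
    """Noise a dataset action to step k, then run reverse steps k, k-1, ..., 1.

    Only the late (small-t) part of the chain is used; sigma_t^2 = beta_t is assumed.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in a]
    x = [math.sqrt(abar[k - 1]) * ai + math.sqrt(1.0 - abar[k - 1]) * e for ai, e in zip(a, eps)]
    for t in range(k, 0, -1):
        beta_t = betas[t - 1]
        coef = beta_t / math.sqrt(1.0 - abar[t - 1])
        eps_hat = eps_model(x, s, t)
        x = [(xi - coef * ei) / math.sqrt(1.0 - beta_t) for xi, ei in zip(x, eps_hat)]
        if t > 1:
            x = [xi + math.sqrt(beta_t) * rng.gauss(0.0, 1.0) for xi in x]
    return x


def compound_policy_loss(bc_loss, q_hat, eta):
    """bc_loss - eta * mean(Q(s, a_hat)) / mean(|Q(s, a_hat)|).

    In a real implementation the |Q| normalizer would be treated as a constant (no gradient).
    """
    mean_q = sum(q_hat) / len(q_hat)
    scale = sum(abs(q) for q in q_hat) / len(q_hat)
    return bc_loss - eta * mean_q / max(scale, 1e-8)


# Example: a higher average Q-value for the denoised actions lowers the loss.
print(compound_policy_loss(0.5, [2.0, 2.0], eta=1.0))
print(compound_policy_loss(0.5, [1.0, -1.0], eta=1.0))
```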

3.3. Algorithm Details

To help readers follow the algorithm, its main steps are outlined below [34]:

- 1. Initialization Phase: The noise prediction network $\epsilon_{\theta}$ and the two Q-networks $Q_{\phi_1}$ and $Q_{\phi_2}$ are constructed and initialized, along with their corresponding target networks. The offline dataset $\mathcal{D}$ is then loaded to obtain state–action–next-state–reward tuples $(s, a, s', r)$ as training samples.

- 2. Critic Training Phase: Candidate actions $a'$ are generated for the next states $s'$. The target Q-values are computed from the Bellman equation using the target networks. The loss function (Equation (18)) is the mean squared error between the current Q-values predicted by $Q_{\phi_1}$ and $Q_{\phi_2}$ and their target values, and backpropagation is used to update the parameters $\phi_1$ and $\phi_2$ of the two Q-networks, improving the accuracy of value estimation.

- 3. Policy Training Phase: Policy training comprises two components:

  - (a) Behavior Cloning Term: Starting from a dataset action $a$, an intermediate noisy action $a_t$ is generated by adding noise at a randomly sampled timestep $t$. The noisy action, the state $s$, and the timestep $t$ are fed to the noise prediction network to obtain the predicted noise $\epsilon_{\theta}(a_t, s, t)$. The loss is the mean squared error between the actual noise $\epsilon$ and the predicted noise for the current state $s$ and timestep $t$.

  - (b) Policy Improvement Term: Optimized actions $\hat{a}$ are generated by the denoising process, starting from noisy samples derived from the dataset actions $a$. Policy gradient signals are constructed by evaluating the Q-values $Q_{\phi}(s, \hat{a})$ for state $s$ and the optimized action $\hat{a}$.

  The compound loss function (Equation (19)) combines these two components, and gradient descent is used to update the parameters $\theta$ of the noise prediction network.

- 4. Target Network Update: An exponential moving average (EMA) is used to perform soft updates of the target network parameters ($\phi_1'$, $\phi_2'$, and $\theta'$). This gradual update strategy stabilizes training by mitigating the instability caused by high-variance target values, thereby promoting steady improvement in model performance.
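The critic update and the target-network update can be sketched in a few lines. The sketch assumes the clipped double-Q form used by TD3, in which the target takes the minimum of the two target critics, and a terminal flag `done` that stops bootstrapping at episode ends; neither detail is specified above. `tau` is a hypothetical EMA rate, and parameters are represented as flat lists of floats purely for illustration.

```python
def critic_targets(rewards, dones, q1_next, q2_next, gamma):
    """y = r + gamma * (1 - done) * min(Q1'(s', a'), Q2'(s', a')) for each transition."""
    return [
        r + gamma * (1.0 - d) * min(q1, q2)
        for r, d, q1, q2 in zip(rewards, dones, q1_next, q2_next)
    ]


def critic_loss(q1_pred, q2_pred, targets):
    """Sum of the mean squared errors of both online critics against the shared targets."""
    n = len(targets)
    mse1 = sum((q - y) ** 2 for q, y in zip(q1_pred, targets)) / n
    mse2 = sum((q - y) ** 2 for q, y in zip(q2_pred, targets)) / n
    return mse1 + mse2


def ema_update(target_params, online_params, tau):
    """Soft update: theta' <- (1 - tau) * theta' + tau * theta, element-wise."""
    return [(1.0 - tau) * tp + tau * p for tp, p in zip(target_params, online_params)]


# Hypothetical batch of two transitions (the second one is terminal).
targets = critic_targets(rewards=[1.0, 0.5], dones=[0.0, 1.0],
                         q1_next=[2.0, 4.0], q2_next=[3.0, 1.0], gamma=0.9)
loss = critic_loss(q1_pred=[2.5, 0.5], q2_pred=[2.8, 0.0], targets=targets)
new_target = ema_update([0.0, 1.0], [1.0, 1.0], tau=0.005)
print(targets, loss, new_target)
```

A small `tau` makes the target networks trail the online networks slowly, which keeps the bootstrapped targets from shifting abruptly between updates.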

Gradient clipping is applied during both policy training and critic training to ensure training stability. The pseudocode of the complete procedure is given in Algorithm 1.

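Algorithm 1 DQS

Require: Initialize the score-based model $\epsilon_{\theta}$; the parameters $\phi_1$ and $\phi_2$ of the action evaluation model $Q_{\phi}$; the parameters $\phi_1'$ and $\phi_2'$ of the target action evaluation model $Q_{\phi'}$; and load the dataset $\mathcal{D}$.

1: for each gradient step do

2: Sample a mini-batch of transitions $(s, a, r, s')$ from $\mathcal{D}$

3: // Training Critic

4: Sample $a'$ for $s'$ with the diffusion policy

5: Update $\phi_1$ and $\phi_2$ by (18)

6: // Training Policy

7: Add noise to $a$ to get $a_t$

8: Recover $\hat{a}$ by the reverse denoising process

9: Update $\theta$ by (19)

10: // Update Target Network

11: Update $\phi_1'$ and $\phi_2'$ by EMA

12: Update $\theta'$ by EMA

13: end for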

4. Experimental Evaluation

The proposed method was systematically evaluated on the D4RL benchmark [35], a widely recognized standard in offline reinforcement learning. To comprehensively validate the algorithm’s performance in intelligent control, the standard Gym environments were selected as the evaluation platform. This suite comprises three representative locomotion tasks: Hopper-v2, Walker2d-v2, and HalfCheetah-v2. Additionally, the Kitchen-v2 environment was included to assess the algorithm’s capability in complex manipulation tasks. Each task presents distinct challenges in biomechanical control, simulating different robotic locomotion and manipulation paradigms.

The Hopper-v2 environment models the control dynamics of a monopedal robotic system, analogous to the locomotion observed in kangaroos. This task requires the precise regulation of torque at the agent’s articulated joints to achieve stable forward hopping while concurrently maintaining dynamic equilibrium. The primary control objective is the joint optimization of forward velocity and postural stability to prevent instability or falls.

In the Walker2d-v2 environment, the control of a bipedal robotic system is simulated, emulating the mechanical principles of human ambulation. Effective control in this environment necessitates the coordinated application of torque across multiple leg joints to generate and sustain stable gait patterns. The central control challenge lies in the simultaneous maximization of forward velocity and preservation of upright balance.

The HalfCheetah-v2 environment simulates a planar, cheetah-inspired robot with a front leg and a back leg. Successful performance in this task demands sophisticated inter-limb coordination to achieve high-speed running gaits. The primary control objective is to maximize horizontal velocity through the optimal distribution of torque across all actuated joints.

The Kitchen environment introduces a new dimension of evaluation by simulating a robotic manipulation scenario with multiple articulated objects. This environment tests the agent’s ability to perform sequential manipulation tasks, such as opening microwave doors, moving kettles, and turning knobs, requiring precise motor control and long-term task planning. The key challenge lies in coordinating high-dimensional continuous control while maintaining object interactions and avoiding collisions in a constrained workspace.

4.1. Comparison with Other Methods

Baselines

To establish a comprehensive performance benchmark, the proposed approach was systematically evaluated against several representative baselines from distinct methodological categories.

Within regularization-based offline reinforcement learning, the baselines include Conservative Q-Learning (CQL) [23], TD3+BC [12], DQL [36], AWR [17], SAC [11], BEAR [37], BCQ [16], and Implicit Q-Learning (IQL) [19]. Within the model-based offline RL paradigm, Model-Based Offline Planning (MBOP) [38] was considered. Additionally, a comparative analysis was performed against trajectory prediction approaches, specifically Decision Transformer (DT) [25], Trajectory Transformer (TT) [26], and Diffuser [28].

The reported performance metrics for baseline methods are derived from either (1) the optimal results published in their respective original papers, or (2) the standardized evaluations conducted by ref. [

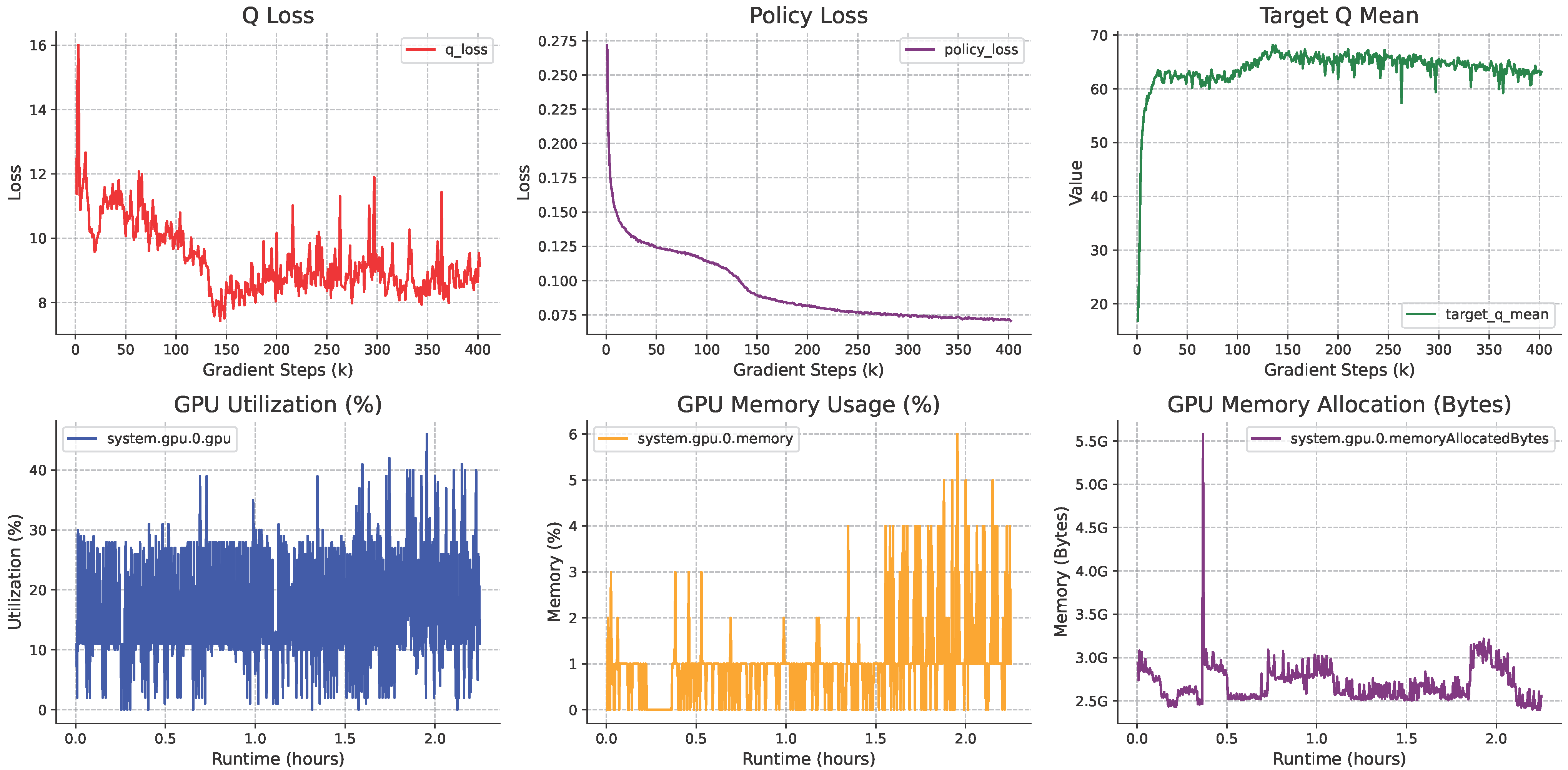

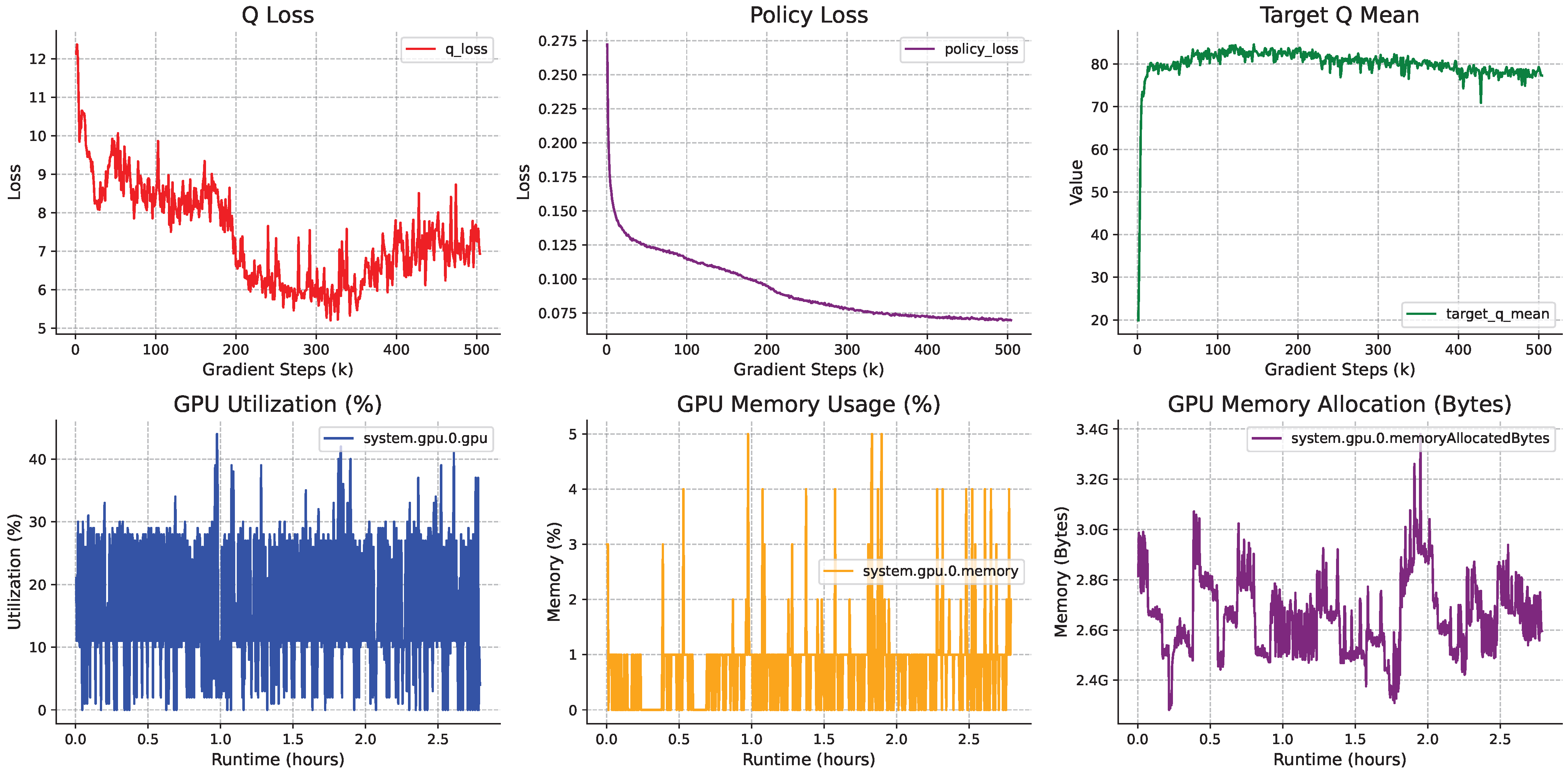

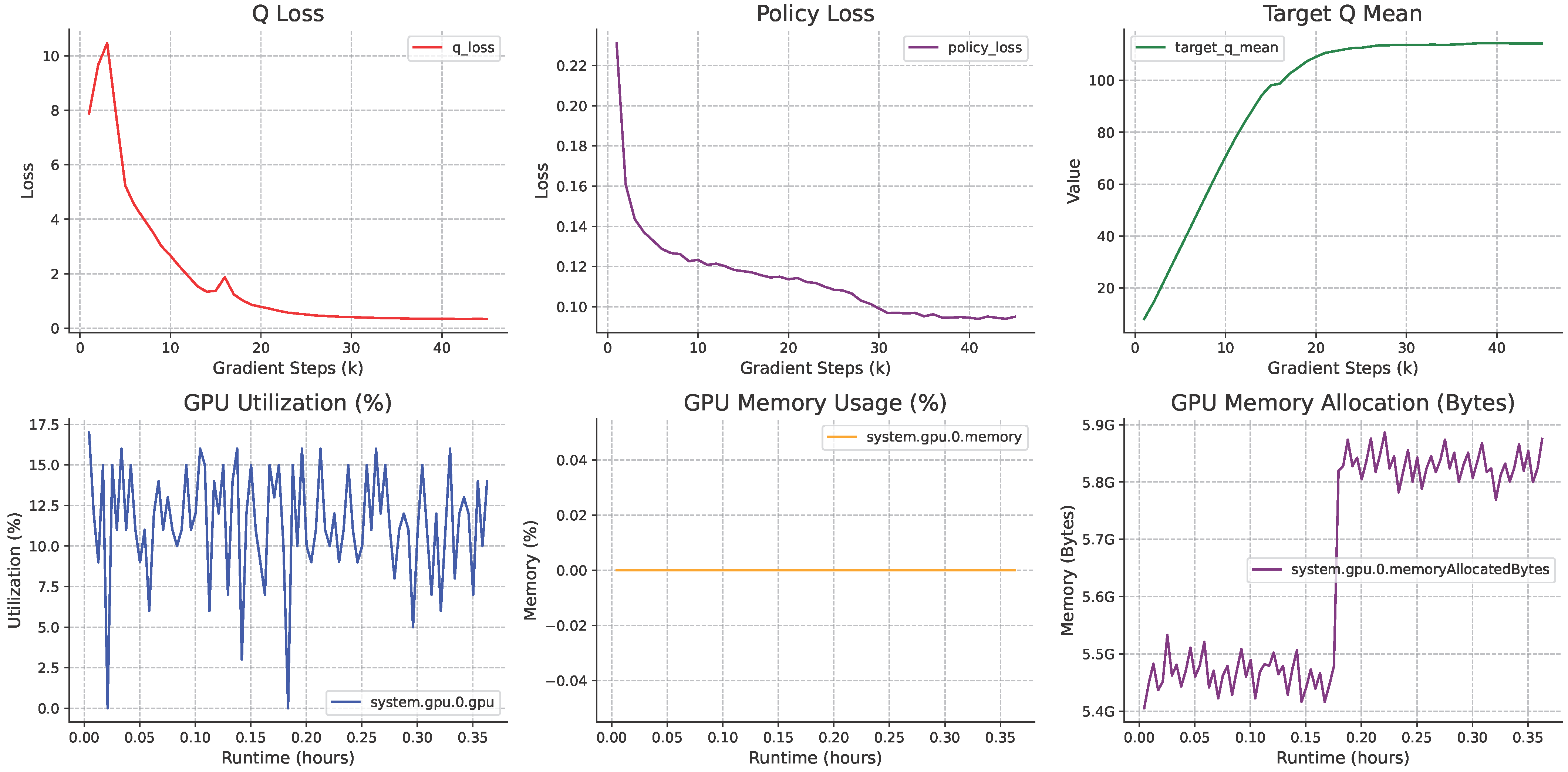

35]. Our experimental results (see

Table 1) were evaluated across five random seeds and 150 episodes, with performance variance quantified using standard deviation to ensure statistical reliability. This ensures a fair and consistent comparison framework. We employed permutation testing to assess statistical significance, with the detailed implementation and hyperparameters of our method provided in

Appendix A,

Table A1. Furthermore, the training details of our method are comprehensively described in

Figure A1,

Figure A2,

Figure A3 and

Figure A4.

Our method’s weaker performance relative to DQL [36] on the Gym tasks might stem from the Q-function guiding the denoising process only during its later stages; this late-stage-only guidance could be detrimental to effective motor control. Conversely, our method’s superior performance on the Kitchen tasks could be attributed to the absence of Q-function guidance during the earlier stages of denoising, which appears to be less critical or even counterproductive for this task.

Table 2 compares the computational efficiency of DQS with that of several baseline algorithms on an NVIDIA GeForce RTX 4090 GPU using two metrics: training time and inference time. Training time is defined as the time required to complete one million training steps, and inference time is measured over 150 inference episodes.

4.2. Impact of Hyperparameters

The number of denoising steps, denoted as

t, within diffusion models is a critical hyperparameter that significantly influences both the quality of generated samples and the efficiency of the inference process. Generally, increasing the number of denoising steps facilitates the progressive refinement of the output through more meticulous noise removal, thereby yielding samples characterized by enhanced fidelity and realism. Each successive step applies subtle adjustments to the generated data, with the cumulative effect leading to a sharper final result. However, each additional denoising step necessitates a distinct forward pass through the model, consequently increasing the total generation time. Thus, selecting the number of denoising steps involves a fundamental trade-off between generation quality and computational efficiency. In applications such as control systems where real-time performance is paramount, minimizing the value of

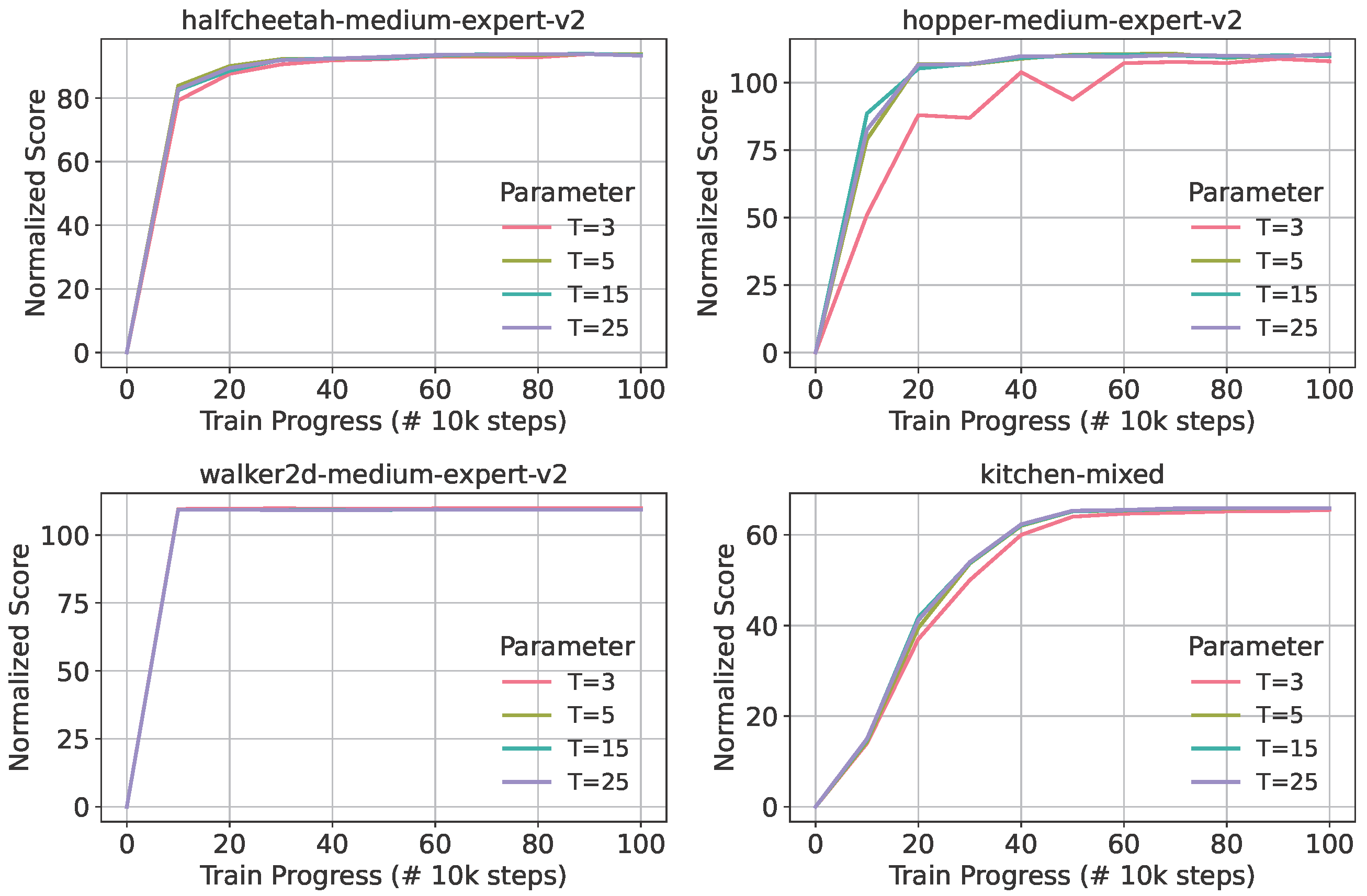

t becomes essential. As depicted in

Figure 2, empirical analysis conducted in this study indicates that setting

achieves an optimal balance between sample quality and real-time performance requirements.
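The cost side of this trade-off is easy to see in a sketch of the full sampling chain: starting from Gaussian noise, each reverse step calls the noise network once, so inference time grows linearly with the number of denoising steps. The zero-noise model, the constant $\beta$ schedule (used here only for counting network calls), and the action clipping range $[-1, 1]$ are assumptions for illustration.

```python
import math
import random


def sample_action(s, betas, eps_model, action_dim, rng, low=-1.0, high=1.0):
    """Run the full reverse chain a_T -> a_0 and count noise-network evaluations.

    sigma_t^2 = beta_t is assumed; the final action is clipped to [low, high].
    """
    abar, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        abar.append(prod)
    x = [rng.gauss(0.0, 1.0) for _ in range(action_dim)]  # a_T ~ N(0, I)
    calls = 0
    for t in range(len(betas), 0, -1):
        beta_t = betas[t - 1]
        eps_hat = eps_model(x, s, t)
        calls += 1
        coef = beta_t / math.sqrt(1.0 - abar[t - 1])
        x = [(xi - coef * ei) / math.sqrt(1.0 - beta_t) for xi, ei in zip(x, eps_hat)]
        if t > 1:
            x = [xi + math.sqrt(beta_t) * rng.gauss(0.0, 1.0) for xi in x]
    return [min(high, max(low, xi)) for xi in x], calls


def zero_noise_model(x, s, t):
    """Hypothetical placeholder for eps_theta that always predicts zero noise."""
    return [0.0] * len(x)


for num_steps in (2, 5, 20):
    action, calls = sample_action(s=None, betas=[0.1] * num_steps, eps_model=zero_noise_model,
                                  action_dim=3, rng=random.Random(num_steps))
    print(f"{num_steps} denoising steps: {calls} network calls")
```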

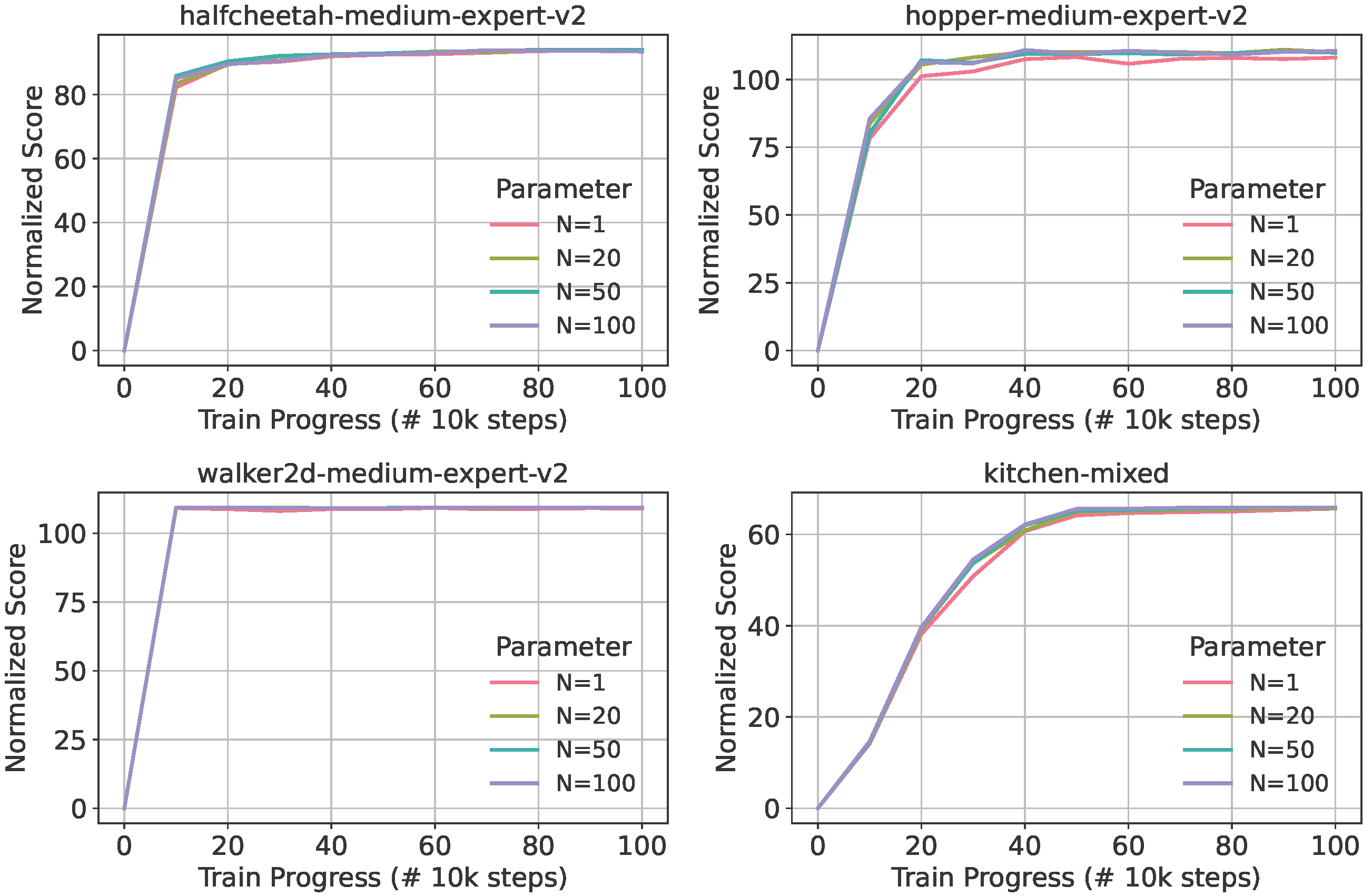

The candidate action parameter $N$ specifies the number of candidate actions generated for a given state $s$. These candidates are evaluated by the learned critic $Q_{\phi}$ before the final action is resampled from them. This parameter likewise affects both generation quality and computational efficiency. In principle, exploring multiple action candidates allows the model to select better actions, potentially avoiding suboptimal local solutions and improving overall output quality; this is analogous to conducting a broader search in the action space. Although generating $N$ candidate actions demands more computation than generating a single action, the candidates can be generated in parallel, so $N$ affects generation speed less than $t$ does. Consequently, $N$ can be chosen more flexibly. As demonstrated in Figure 3, the experimental results identify the value of $N$ that best reconciles generation quality with real-time constraints.
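Selecting among the $N$ candidates can be sketched as follows. In practice the candidates would be produced in one batched call of the diffusion policy and scored by $Q_{\phi}$; here both are replaced by plain lists of hypothetical values. Whether the final action is chosen greedily or resampled with probability proportional to $\exp(Q / \text{temperature})$ is an assumption of this sketch, so both options are shown, and the temperature value is hypothetical.

```python
import math
import random


def select_action(candidates, q_values, temperature=None, rng=None):
    """Choose one of N candidate actions using critic scores Q(s, a_i).

    temperature=None: greedy argmax over the candidates.
    temperature > 0: resample with probability proportional to exp(Q / temperature).
    """
    if temperature is None:
        best = max(range(len(candidates)), key=lambda i: q_values[i])
        return candidates[best]
    q_max = max(q_values)  # subtracting the max keeps exp() from overflowing
    weights = [math.exp((q - q_max) / temperature) for q in q_values]
    rng = rng if rng is not None else random.Random()
    return rng.choices(candidates, weights=weights, k=1)[0]


# Hypothetical N = 4 candidates (2-D actions) and their critic scores.
candidates = [[0.1, 0.2], [0.5, -0.3], [0.9, 0.0], [-0.4, 0.7]]
q_values = [1.0, 2.5, 2.4, 0.3]
greedy = select_action(candidates, q_values)
sampled = select_action(candidates, q_values, temperature=0.5, rng=random.Random(0))
print(greedy, sampled)
```

Lower temperatures approach the greedy choice, while higher temperatures approach uniform sampling among the candidates.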

4.3. Ablation Study

This section presents a comprehensive analysis validating the superior quantitative performance of Diffusion-Q Synergy (DQS) compared to other policy-constrained methods on D4RL benchmark tasks. Ablation studies were conducted to examine the two principal components of DQS: (1) the utilization of diffusion models as expressive policies and (2) Q-learning-guided denoising of suboptimal actions. Regarding the policy component, behavioral cloning comparisons were performed between the diffusion model and prevalent Transformer architectures. With respect to the Q-learning component, policy improvement was evaluated against BCQ methods.

As evidenced in Table 3, diffusion-based behavioral cloning (Diffusion-BC) outperforms the baseline approaches. This outcome validates the greater expressiveness of the diffusion policy and its enhanced ability to fit data distributions in control domains. The performance advantage of DQS over Diffusion-BC confirms that Q-learning-guided denoising of suboptimal actions provides additional policy improvement.

Table 3 further reveals that the Q-learning component of DQS yields more significant policy improvement than baseline methods, such as BCQ. Collectively, these results demonstrate the synergistic interaction between DQS’s dual components, which culminates in superior overall performance.

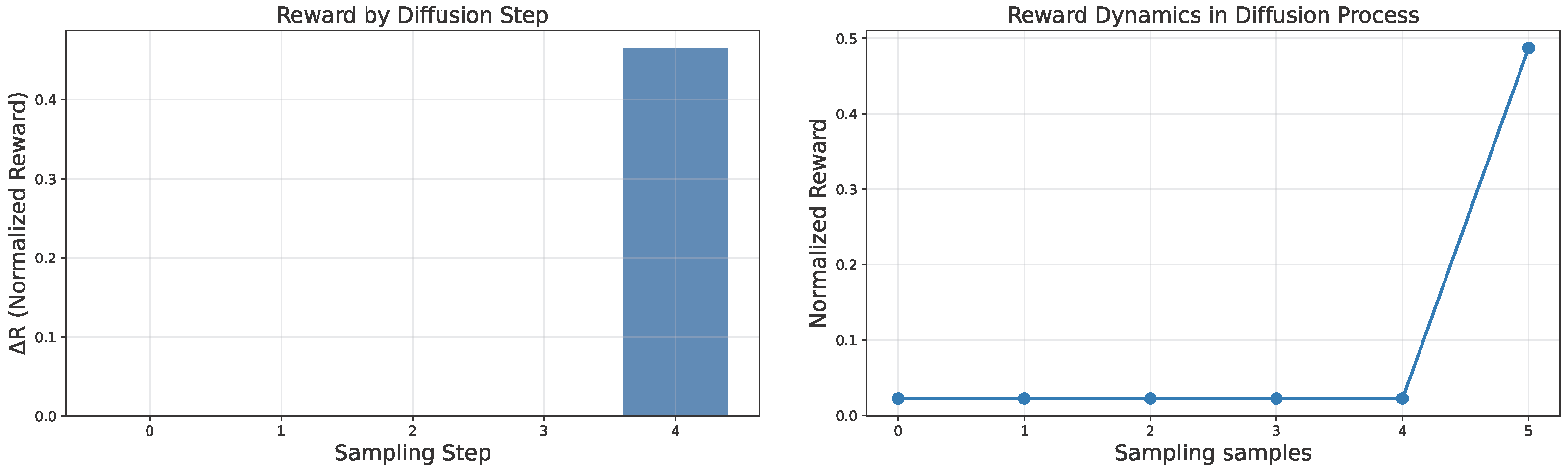

We systematically evaluated the actions generated at each denoising step under a fixed sampling horizon ($T = 5$) in the experimental environment. As depicted in Figure 4, these actions are referred to as “Sampling samples”. Our analysis reveals that the action output of the diffusion policy depends predominantly on the final sampling step. Importantly, applying guidance at this final sampling stage substantially improves the quality of the generated actions.