Abstract

Artificial Intelligence (AI) is reshaping higher education by enabling personalized learning (PL) and enhancing teaching and learning practices. To examine global research trends, pedagogical paradigms, equity and sustainability considerations, instructional strategies, learning outcomes, and interdisciplinary collaboration, this study systematically reviewed 29 articles published between January 2020 and December 2024 in Social Sciences Citation Index (SSCI) Q1 journals (the top 25% of their category by Journal Impact Factor), retrieved from the Web of Science database. Results indicate that AI-PL research is concentrated in Asia, particularly China, and is situated predominantly within education and computer science. Quantitative designs prevail, often complemented by qualitative insights, and supervised machine learning is the most common algorithm type. While constructivist principles implicitly guide most studies, explicit theoretical grounding improves AI-pedagogy alignment and educational outcomes. AI shows potential to enhance instructional approaches such as project-based learning (PBL), STEAM, gamification, and Universal Design for Learning (UDL), and to foster higher-order skills, yet uncritical use may undermine learner autonomy. Systematic attention to equity and Sustainable Development Goal (SDG)-related objectives remains limited. Emerging interdisciplinary collaborations show promise but are not yet institutionalized, which constrains integrative system design. These findings underscore the need for stronger theoretical framing, alignment of AI with pedagogical and societal imperatives, and professional development to enhance educators’ AI literacy. Coordinated efforts among academia, industry, and policymakers are essential to develop scalable, context-sensitive AI solutions that advance inclusive, adaptive, and transformative higher education.

1. Introduction

Personalized Learning (PL) represents a transformative shift in higher education, aiming to accommodate the diverse needs, preferences, and goals of individual learners. Rooted in the principles of learner-centered education, PL tailors instructional strategies, content delivery, and assessment methods to align with students’ unique learning trajectories [1,2]. By moving beyond one-size-fits-all instruction, PL promotes engagement, inclusivity, and academic success through technology-driven customization [3,4]. Artificial Intelligence (AI) plays a critical enabling role in realizing this vision, offering dynamic capabilities such as adaptive feedback, real-time performance monitoring, and intelligent content recommendation [5,6].

Through techniques such as machine learning, deep learning, and natural language processing, AI-driven systems support the personalization of educational content and learner pathways [7,8]. For example, distance eTeaching and eLearning (DTL), an intelligent ubiquitous learning system, integrates AI technologies, including context-aware behavior analysis, adaptive recommendation algorithms, and personalized learning path generation, to deliver individualized support in real time [9]. This system illustrates how AI can support seamless learning across physical and digital environments while enhancing self-regulated learning and engagement. AI-driven adaptive learning platforms can also provide real-time analytics and responsive content delivery, enabling instructors to refine instructional strategies continuously based on learner progress and preferences [1,10]. Such tools are being applied across a wide range of domains, from STEM education to the humanities, allowing educators to design more inclusive and context-sensitive learning environments [11]. Intelligent tutoring systems and personalized dashboards, for instance, help track individual trajectories, fostering not only academic improvement but also self-regulation and learner autonomy [3,12]. By addressing key limitations of traditional instruction, such as lack of differentiation and limited scalability, AI applications can enhance the responsiveness, equity, and sustainability of educational systems [5,13]. In this way, AI-based applications can both increase personalization and efficiency and widen equitable access to learning in increasingly diverse educational settings.

Despite the growing body of research on AI in PL, significant gaps remain in the literature, underscoring the need for a systematic review to consolidate and synthesize current findings. Previous reviews have focused predominantly on technical or domain-specific aspects of AI, often neglecting its interdisciplinary applications and pedagogical implications [14,15]. For instance, Fariani et al. [14] emphasize cognitive impacts but overlook the socio-emotional and ethical dimensions of AI integration in education. Moreover, existing reviews rarely consider how AI-supported PL aligns with broader educational aims such as social equity, inclusion, and United Nations Sustainable Development Goal 4 (SDG 4, quality education), especially in underserved or non-Western contexts [16]. Evidence on higher-order learning outcomes, such as critical thinking, creativity, and ethical reasoning, also remains fragmented [17,18]. In addition, limited attention has been given to emerging pedagogical paradigms, such as socioformation or Universal Design for Learning (UDL), which emphasize social co-construction, cultural relevance, and learner agency [19,20,21]. This review addresses these gaps by systematically examining articles published between January 2020 and December 2024 in Social Sciences Citation Index (SSCI) Q1 journals (the top 25% of their category by Journal Impact Factor) indexed in the Web of Science (WoS) database, focusing on recent developments in AI-enabled PL in higher education. The review goes beyond descriptive mapping to explore critical dimensions, including pedagogical orientations, social development implications, instructional innovation strategies, higher-order outcomes, and interdisciplinary collaboration. By consolidating insights from top-tier journals, this study highlights emerging trends, best practices, and unresolved challenges in the field. In doing so, it not only advances scholarly understanding but also informs the development of innovative, evidence-based strategies to enhance personalized learning in diverse educational contexts.

To achieve these objectives, the study proposes a multidimensional analytical framework, addressing the following research questions:

RQ1: Which countries dominate research on AI-driven personalized learning in higher education? Which research methods, sample sizes, data sources, recurring themes, and AI algorithm types are most commonly used? How does this research landscape differ across high-, medium-, and low-impact studies?

RQ2: What pedagogical paradigms or learning theories underpin the implementation of AI in personalized learning (e.g., behaviorism, constructivism, connectivism, socioformation)? Are these models explicitly stated or implicitly embedded in the studies?

RQ3: To what extent do the reviewed studies address social equity, accessibility, and sustainable development goals (such as SDG 4)? How do these considerations shape the application of AI for personalized learning, particularly in underserved regions or populations?

RQ4: What types of innovative instructional strategies—such as project-based learning (PBL), STEAM, gamification, Universal Design for Learning (UDL), or socioformative projects—are integrated into AI-driven personalized learning approaches? How does AI support or enhance these strategies?

RQ5: Do the studies report improvements in higher-order skills (e.g., critical thinking, creativity, ethical awareness, emotional regulation) and academic learning outcomes (e.g., test scores, engagement, completion rates)? How are these outcomes measured and interpreted?

RQ6: To what extent do the studies demonstrate interdisciplinary or transdisciplinary collaboration (e.g., education + computer science, psychology + engineering)? How does such collaboration influence research design, implementation, and findings?

2. Methodology

This systematic literature review (SLR) was conducted and reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA 2020) guidelines [22]. The review protocol was prospectively registered in the International Prospective Register of Systematic Reviews (PROSPERO; Registration No.: CRD420251133713). The PRISMA 2020 flow diagram illustrates the study selection process (Figure 1). The review was organized into three stages (planning, conducting, and reporting) to provide a rigorous and structured synthesis of research on Artificial Intelligence (AI) applications in Personalized Learning (PL) within higher education.

Figure 1.

Flowchart of study selection.

2.1. Search Strategy and Inclusion Criteria

The review targeted peer-reviewed journal articles published between 1 January 2020 and 31 December 2024, a period of rapid development and adoption of AI technologies in higher education. The Web of Science (WoS) Core Collection was selected as the sole database because of its comprehensive coverage of high-impact, peer-reviewed journals in the social sciences, particularly those indexed in SSCI Q1, which focuses the review on influential research relevant to the pedagogical and social aspects of AI in higher education [23]. We recognize that relying on a single database may limit the breadth of the review; future research could incorporate additional databases, such as Scopus, to improve comprehensiveness and reduce potential bias arising from a single data source.

The search strategy combined Boolean operators and keywords related to three domains: AI technologies (i.e., “artificial intelligence” OR “AI” OR “AI based” OR “automated grad*” OR “automated tutor” OR “automated scor*” OR “machine intelligence” OR “machine learning” OR “intelligent support” OR “intelligent virtual reality” OR “intelligent agent*” OR “intelligent system” OR “intelligent tutor*”), personalized learning (i.e., “personalized learning” OR “personalized e-learning” OR “PL” OR “personalized online learning” OR “personal*” OR “adaptive learning system*” OR “adaptive system*” OR “adaptive educational system*” OR “adaptive testing”), and higher education (i.e., “higher education” OR “college” OR “undergrad*” OR “graduate” OR “postgrad*” OR “university” OR “sophomore” OR “course” OR “freshman” OR “tertiary” OR “post-secondary education”).
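The three keyword domains can be assembled into a single query string programmatically. The sketch below is a hypothetical illustration, not the authors' script: it assumes the WoS advanced-search Topic field tag (`TS=`) and assumes that the three domains were joined with AND, neither of which the text states. The function names are illustrative.

```python
# Hypothetical sketch: build a WoS advanced-search string from the three keyword
# domains listed above. The TS= (Topic) tag and AND between domains are assumptions.

AI_TERMS = [
    "artificial intelligence", "AI", "AI based", "automated grad*",
    "automated tutor", "automated scor*", "machine intelligence",
    "machine learning", "intelligent support", "intelligent virtual reality",
    "intelligent agent*", "intelligent system", "intelligent tutor*",
]
PL_TERMS = [
    "personalized learning", "personalized e-learning", "PL",
    "personalized online learning", "personal*", "adaptive learning system*",
    "adaptive system*", "adaptive educational system*", "adaptive testing",
]
HE_TERMS = [
    "higher education", "college", "undergrad*", "graduate", "postgrad*",
    "university", "sophomore", "course", "freshman", "tertiary",
    "post-secondary education",
]


def or_block(terms: list[str]) -> str:
    """Quote each term and join the terms with OR inside one Topic (TS=) clause."""
    quoted = " OR ".join(f'"{term}"' for term in terms)
    return f"TS=({quoted})"


def build_query(*domains: list[str]) -> str:
    """Combine the per-domain OR blocks with AND (assumed, not stated in the text)."""
    return " AND ".join(or_block(domain) for domain in domains)


if __name__ == "__main__":
    print(build_query(AI_TERMS, PL_TERMS, HE_TERMS))
```

Generating the string from term lists keeps the reported keywords and the executed query in sync, which makes the search easier to audit or to adapt to another database's syntax.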

The inclusion and exclusion criteria are summarized below:

Included

- Peer-reviewed empirical articles published in SSCI Q1 journals

- English-language articles published between 2020 and 2024

- Studies with explicit focus on AI-based personalized learning in higher education

Excluded

- Review articles, proceedings papers, and retracted papers

- Early access articles

- Non-English publications

- Studies outside higher education or not involving AI as a core tool

- Studies without clear empirical application of PL

- Articles from SSCI Q2–Q4 journals

2.2. Study Selection Process

The study selection followed four stages: Identification, Screening, Eligibility, and Inclusion.

2.2.1. Identification

A total of 210 articles were retrieved from the WoS Core Collection. During the initial metadata export, 61 records (reviews, proceedings papers, and retracted papers) were excluded using the WoS metadata filters “Document Type” and “Publication Status.” Proceedings papers were excluded because they frequently do not undergo the same rigorous peer review as journal articles, are subject to abbreviated review timelines, and often report preliminary findings whose validity has yet to be established.

2.2.2. Screening

In the subsequent phase, the titles and abstracts of the remaining 149 records were screened, and 29 records were eliminated because they were Early Access items or were written in languages other than English. Early Access articles were omitted to preserve the consistency of the dataset and the reproducibility of all downstream analyses. Although these manuscripts have usually completed peer review, they typically lack complete bibliographic metadata (volume, issue, and pagination), their citation metrics remain volatile, and cross-database synchronization is often delayed. To avoid such inconsistencies, only formally published articles with definitive volume, issue, and page numbers were retained. Likewise, non-English publications were excluded to ensure uniform interpretation of content and consistent methodological appraisal across the sample.

2.2.3. Eligibility

The eligibility phase involved a full-text evaluation of the remaining 120 articles, of which 91 were excluded because they were not related to AI in higher education (HE), were non-empirical, did not address AI in PL in HE, lacked clear descriptions of practical applications, were published in journals without a Journal Impact Factor (IF), or were published in Q2, Q3, or Q4 journals. The Q1 filter was applied at the eligibility stage rather than during initial screening to enable a multidimensional assessment of content quality and topic relevance.

2.2.4. Inclusion

The final set comprised 29 empirical studies published in SSCI Q1 journals (based on the 2023 JCR category rankings, which use 2022 data). Restricting inclusion to Q1 journals was intended to focus the review on the highest-quality publications. The overall process, including the number of articles excluded at each stage, is summarized in the PRISMA flow diagram (Figure 1).
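The record counts reported for the identification, screening, and eligibility stages can be checked for internal consistency with simple arithmetic. The sketch below uses only the numbers stated in Sections 2.2.1 to 2.2.4; the stage labels are descriptive and the function name is illustrative.

```python
# Check that the records left after each selection stage equal those entering the next.
# (label, records entering the stage, records excluded at the stage), from the text.
STAGES = [
    ("Identification: WoS Core Collection export", 210, 61),
    ("Screening: titles and abstracts", 149, 29),
    ("Eligibility: full-text assessment", 120, 91),
]
INCLUDED = 29


def check_flow(stages, included):
    """Return the remaining count after each stage; raise ValueError if the chain breaks."""
    remaining = []
    for i, (label, entering, excluded) in enumerate(stages):
        left = entering - excluded
        # The next stage's input (or the final inclusion count) must match what is left.
        expected_next = stages[i + 1][1] if i + 1 < len(stages) else included
        if left != expected_next:
            raise ValueError(f"{label}: {entering} - {excluded} = {left}, expected {expected_next}")
        remaining.append(left)
    return remaining


print(check_flow(STAGES, INCLUDED))  # [149, 120, 29]
```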

2.3. Quality Assessment

To ensure the inclusion of only high-quality research, a quality assessment was performed on the 29 selected studies using a seven-criterion framework [14]: (1) clarity of research objectives, (2) inclusion of a comprehensive literature review, (3) clear presentation of related work to position the study within the current body of research, (4) detailed description of the methodology or model architecture, (5) presentation of clear research results, (6) alignment of conclusions with research objectives, and (7) recommendations for future work. Two independent reviewers scored each criterion as 0 or 1, and any discrepancies were resolved through discussion and consensus with a third, senior reviewer. Only studies scoring 7/7 were retained for analysis. This assessment helped ensure that the selected studies were methodologically robust and contributed meaningful insights into AI in PL within higher education.
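A minimal sketch of the scoring rule, assuming each reviewer records every criterion as 0 or 1. The article resolves discrepancies through discussion and consensus with a senior reviewer; here that step is simplified, as an assumption, to adopting the senior reviewer's score on disputed items. All names and scores are hypothetical.

```python
N_CRITERIA = 7  # the seven quality criteria listed above


def disagreements(rev_a, rev_b):
    """Return 0-based indices of criteria on which the two reviewers disagree."""
    return [i for i, (a, b) in enumerate(zip(rev_a, rev_b)) if a != b]


def resolve(rev_a, rev_b, senior):
    """Keep agreed scores; on disputed items, adopt the senior reviewer's score.

    This simplifies the consensus discussion described in the text.
    """
    if not (len(rev_a) == len(rev_b) == len(senior) == N_CRITERIA):
        raise ValueError("every reviewer must score all seven criteria")
    return [a if a == b else s for a, b, s in zip(rev_a, rev_b, senior)]


def is_retained(final_scores):
    """Retain a study only if it meets every criterion (total score 7/7)."""
    return sum(final_scores) == N_CRITERIA


# Hypothetical example: the reviewers disagree on criterion (3) only.
reviewer_a = [1, 1, 1, 1, 1, 1, 1]
reviewer_b = [1, 1, 0, 1, 1, 1, 1]
senior = [1, 1, 1, 1, 1, 1, 1]

print(disagreements(reviewer_a, reviewer_b))  # [2]
final = resolve(reviewer_a, reviewer_b, senior)
print(is_retained(final))  # True
```

Because retention requires a perfect total, any single unresolved 0 removes a study, which is why disputed items are routed to a third reviewer rather than averaged.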

2.4. Data Extraction, Impact Stratification, and Synthesis

Data were extracted to answer six research questions, focusing on the research background, pedagogical models, social development dimensions, instructional innovation strategies, higher-order outcomes, and disciplinary composition. Thematic analysis was conducted using an inductive coding approach [24], which allowed themes to emerge from the data rather than imposing preconceived categories [25]. The coding process comprised familiarization, code generation, theme identification, and sub-theme development. The process was collaborative: the primary researcher conducted the analysis, and the co-authors validated the findings. This approach supported a robust and comprehensive interpretation of the data, providing insights into the current state of AI applications in personalized learning and identifying both emerging trends and gaps in the existing literature.

To examine variation in influence across studies, this review additionally stratified studies by impact using citation data from the Web of Science Core Collection, since citation count is a widely recognized indicator of academic influence [26]. Because all included studies were published in SSCI Q1 journals, journal-based metrics offered limited differentiation. Impact groups were therefore based on citation distribution quartiles: high-impact (≥36 citations, top 25%), medium-impact (5–35 citations), and low-impact (<5 citations, bottom 25%). Citation data were retrieved on 2 August 2025. A corresponding comparison table and subgroup analysis are provided in Section 3.
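The grouping rule can be expressed as a small classification function. This is a sketch that hard-codes the reported cut-offs, since the text does not state how the quartile boundaries were computed from the 29 citation counts; the citation values in the example are hypothetical, not data from the reviewed studies.

```python
from collections import Counter

# Cut-offs as reported in the text.
HIGH_MIN = 36  # high impact: 36 or more citations (top 25%)
LOW_MAX = 5    # low impact: fewer than 5 citations (bottom 25%)


def impact_group(citations: int) -> str:
    """Map a study's WoS citation count to its impact stratum."""
    if citations >= HIGH_MIN:
        return "high"
    if citations < LOW_MAX:
        return "low"
    return "medium"  # 5-35 citations


# Hypothetical citation counts for illustration only.
hypothetical_citations = [120, 40, 36, 35, 12, 5, 4, 0]
groups = [impact_group(c) for c in hypothetical_citations]
print(Counter(groups))
```

Fixed cut-offs make the boundaries explicit (for example, a study with exactly 36 citations is high-impact and one with exactly 5 is medium-impact), which is why ties near the quartile boundaries can yield unequal group sizes.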

3. Results

3.1. Impact-Based Grouping of Studies

To capture heterogeneity in scholarly influence, the 29 included studies were classified into three citation-based impact groups: high-impact (≥75th percentile), medium-impact (25th–75th percentile), and low-impact (<25th percentile). This grouping enables comparative analysis across countries, disciplines, methodological designs, thematic emphases, and AI algorithm applications. The classification yielded 8 high-impact studies, 15 medium-impact studies, and 6 low-impact studies (Table 1).

Table 1.

Synthesis of Key Findings from 29 Studies.

This stratification provides a structured lens for evaluating whether certain geographical regions, disciplinary orientations, or methodological approaches are more strongly associated with higher scholarly visibility. In the following subsections, results are organized into three thematic areas—(1) Countries and Disciplines, (2) Research Methods, Sample Sizes, and Data Sources, and (3) Research Themes and Types of AI Algorithms—to facilitate comparison across impact levels.

3.1.1. Countries and Disciplines

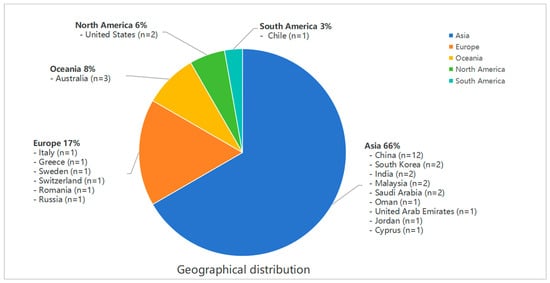

Overall, the analysis of the 29 reviewed articles reveals a clear geographical pattern in AI research for personalized learning (PL) in higher education, with contributions concentrated in Asia (Figure 2). Notably, 24 of the reviewed studies (82.8%) are authored by researchers based in Asian countries. Among these, China accounts for 12 articles (41.4% of all studies), followed by South Korea, India, Malaysia, and Saudi Arabia with two articles each. Additional Asian contributions come from Oman, the United Arab Emirates, Jordan, and Cyprus, each represented by one study [30,47]. Outside Asia, Europe is moderately represented, with six studies from countries including Italy, Greece, Sweden, Switzerland, Romania, and Russia [36,40]. Oceania is represented exclusively by three studies from Australia, North America by two studies from the United States, and South America by one study from Chile [48,52]. This distribution highlights Asia’s dominance in AI-PL research while indicating growing interest on other continents.

Figure 2.

Geographical distribution of included articles.

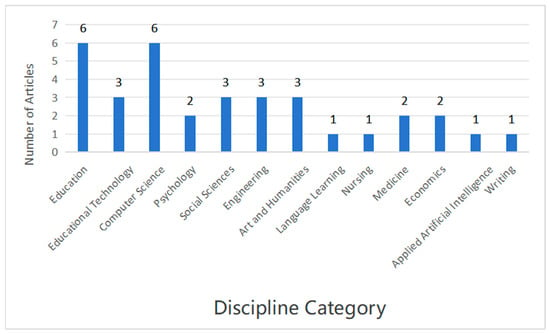

The reviewed studies span a total of 13 academic disciplines, emphasizing the interdisciplinary nature of AI-PL research in higher education (Figure 3). Education and Computer Science dominate, each accounting for 6 articles (20.7%). Education-focused research explores the pedagogical impacts of AI, such as how AI enhances learning accessibility and improves teaching strategies [7,37]. Studies in computer science focus on the development and optimization of AI tools and algorithms, including natural language processing (NLP) and predictive analytics [29,45]. Additional disciplines include Educational Technology (3 articles, 10.3%), which explores AI-powered learning management systems [27], and Engineering (3 articles, 10.3%), which focuses on hybrid systems and adaptive learning tools [43]. Disciplines such as Psychology, Social Sciences, and Art and Humanities each contribute 2–3 articles, demonstrating growing interest in AI’s broader cognitive and social impacts [38,51]. Less represented fields include Nursing and Language Learning, with one article each, underscoring the need for greater exploration of AI’s role in specialized domains [8].

Figure 3.

Examined disciplines in the included studies.

Grouping the included studies by impact level reveals notable patterns in geographical distribution and disciplinary focus. High-impact studies were conducted predominantly in China (n = 3) and Malaysia (n = 2), with additional contributions from India, South Korea, Oman, the United Arab Emirates, Saudi Arabia, Russia, and Sweden. Their disciplinary foci were concentrated in Education (n = 3), Educational Technology (n = 2), and Computer Science (n = 2), alongside emerging work in STEM, arts, business, and information management. These studies tend to report more substantial educational benefits, such as improved personalized learning outcomes and innovative AI applications in higher education. Medium-impact studies showed broader geographic and disciplinary diversity; they were largely authored in China (n = 9) and Australia (n = 2) and led by Computer Science (n = 6) and Engineering (n = 3), but also included Psychology, Humanities, Mathematics, Management, Education, and Economics, suggesting that AI-PL adoption is spreading across multiple fields and regions. Low-impact studies were the most geographically and disciplinarily heterogeneous: the United States (n = 2) contributed the largest share, and disciplinary orientations included interdisciplinary research (n = 2), Engineering, Writing Studies, Language Learning, Applied AI, Computer Science, Social Sciences, and Education. This pattern suggests exploratory or emerging efforts whose educational effectiveness may be context-specific or constrained by limited methodological rigor.

3.1.2. Research Methods, Sample Sizes, and Data Sources

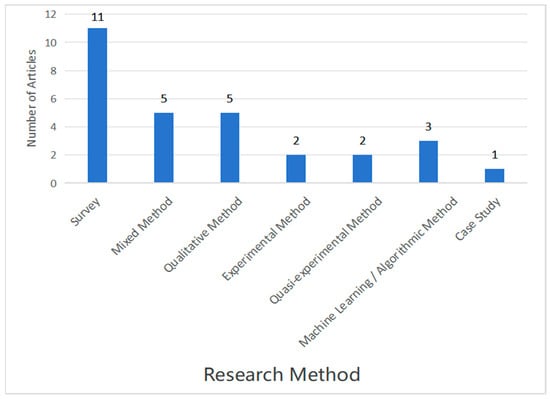

Figure 4 shows the methods commonly used in studies on AI-driven personalized learning in higher education. Quantitative methods dominate, appearing in 18 of the 29 reviewed articles (62.1%). Among these, survey-based research is the most frequent sub-method (11 articles); these studies use structured surveys or questionnaires to gather large-scale empirical data from diverse participant groups [7,28]. Experimental and quasi-experimental approaches are used in four studies to evaluate the impact of AI interventions in controlled or semi-controlled settings [35,46], and computational analytic methods, such as machine learning and predictive modeling, are used in three, reflecting the increasing integration of big data into educational research [45]. Qualitative methods are adopted in six articles (20.7%), relying on interviews or thematic analysis to explore nuanced participant experiences [50]. Mixed-methods approaches are used in five studies (17.2%), combining surveys with interviews or computational analyses to provide a holistic understanding of AI’s educational applications [27,38].
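The percentages reported in this section are shares of the 29 included studies, rounded to one decimal place. The helper below is a sketch with illustrative names; it recomputes the methodological shares from the raw counts and checks that the reported counts add up.

```python
TOTAL_STUDIES = 29  # number of included studies


def share(count: int, total: int = TOTAL_STUDIES) -> float:
    """Return count as a percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


designs = {"quantitative": 18, "qualitative": 6, "mixed methods": 5}
quantitative_subtypes = {"survey": 11, "experimental/quasi-experimental": 4, "computational": 3}

# The reported counts partition the full sample and the quantitative subset.
assert sum(designs.values()) == TOTAL_STUDIES
assert sum(quantitative_subtypes.values()) == designs["quantitative"]

for name, n in designs.items():
    print(f"{name}: {n} ({share(n)}%)")
```

The same helper reproduces the other shares in this section, for example 24 Asian-authored studies (82.8%) or 17 survey-based data sources (58.6%).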

Figure 4.

Frequency of research methodologies.

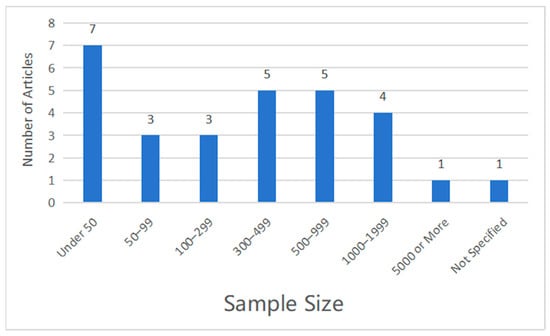

The reviewed studies vary considerably in sample size, reflecting diverse research designs and objectives (Figure 5). Seven studies (24.1%) employ small samples of fewer than 50 participants, often in qualitative or exploratory investigations aimed at capturing detailed insights [33,44]. Moderate samples of 300 to 999 participants account for 10 studies (34.5%), reflecting efforts to balance generalizability with logistical feasibility [7,28]. Large samples of 1000 to 1999 participants are employed in four studies (13.8%), often leveraging secondary data or machine learning techniques for predictive modeling [27,32]. One study features a dataset of more than 5000 participants, illustrating AI’s capacity to analyze large-scale data [43]. Notably, one article does not report an explicit sample size, relying instead on publicly available datasets for algorithm testing [39]. This diversity highlights the methodological adaptability of AI-PL research in addressing various educational challenges.

Figure 5.

Distribution of sample size.

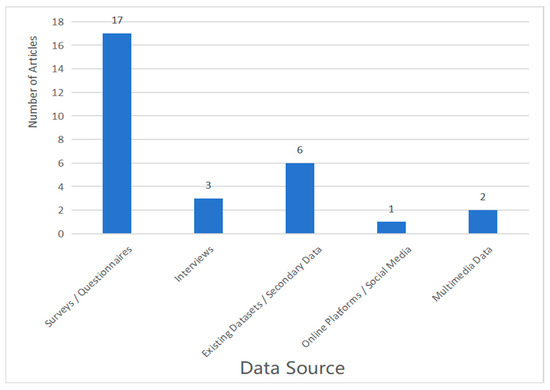

Figure 6 shows that a wide range of data sources is employed across the 29 studies. Surveys and questionnaires are the most prevalent, appearing in 17 articles (58.6%); these instruments provide large-scale, structured data for analyzing learner behavior and perceptions [7,28]. Existing datasets or secondary data are used in six articles (20.7%), particularly for machine learning and big data analyses [36,39]. Interviews are used in three studies (10.3%) to capture in-depth qualitative insights into participants’ experiences and perceptions of AI tools [44,50]. Multimedia data, such as teaching videos or student-generated drawings, are employed in two studies (6.9%) to explore non-traditional data types in education [37,52]. One study uses social media and online platform data, reflecting an emerging trend of analyzing user-generated content for learning analytics [29]. This variety of data sources demonstrates the adaptability of AI-PL research in capturing both quantitative and qualitative dimensions of personalized learning.

Figure 6.

Distribution of data source.

Comparing research methods, sample sizes, and data sources across impact levels reveals both convergences and divergences that reflect prevailing methodological trends and shape the resulting findings. Across all groups, survey-based approaches were the most frequently employed, underscoring the prevalence of self-reported data in AI-supported personalized learning research. In the high-impact group, surveys were dominant (n = 4), complemented by mixed methods, machine learning experiments, algorithmic development, qualitative methods, and quasi-experiments (each n = 1). Corresponding data sources were primarily surveys and questionnaires (n = 7), with occasional use of online platform or social media datasets, indicating a focus on structured, quantifiable measures. Medium-impact studies also favored surveys (n = 6) but demonstrated greater methodological variety, including qualitative approaches (n = 3), experiments (n = 2), mixed methods (n = 2), and case studies, machine learning, and algorithm development (each n = 1). Their data sources likewise prioritized surveys and questionnaires (n = 8), supplemented by secondary datasets (n = 4), existing institutional records (n = 4), and interviews (n = 2), reflecting an expansion toward more varied evidence and richer contextual insights. Low-impact studies displayed the highest proportion of mixed-method designs relative to group size (n = 2), along with isolated uses of machine learning, algorithm development, quasi-experiments, qualitative methods, and surveys. Their data sources were distributed among existing data (n = 2), secondary datasets (n = 2), surveys (n = 2), and smaller shares of interviews and multimedia resources, indicating exploratory or heterogeneous approaches with less emphasis on standardized metrics.

Sample size patterns further highlight methodological trends. High-impact studies showed a polarized pattern, with both medium-to-large samples (300–499: n = 2; 500–999: n = 2; 1000–1999: n = 2) and very small samples (under 50: n = 2), suggesting that impactful studies may succeed either through large-scale validation or highly targeted, in-depth investigation. Medium-impact studies spanned the full range from under 50 to over 5000 participants, with 300–499 most common (n = 3), reflecting flexibility in study scale and design. Low-impact studies skewed toward small samples (under 50: n = 3), potentially limiting generalizability and statistical power.

3.1.3. Research Themes and Types of AI Algorithms

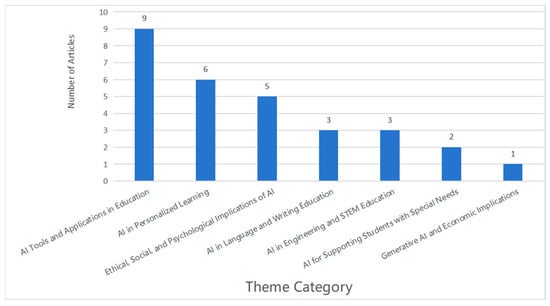

Seven themes emerge from the reviewed studies, reflecting the broad focus of AI-PL research (Figure 7). The most prevalent theme, AI Tools and Applications in Education, is represented by nine articles (31.0%) that explore practical implementations such as learning management systems and AI-powered feedback tools [29,39]. AI in Personalized Learning, addressed in six articles (20.7%), investigates how AI customizes educational experiences to meet individual learner needs [7,27]. Ethical and social implications, such as data privacy and emotional responses to AI, are discussed in five articles (17.2%), reflecting growing attention to the challenges AI poses in education [30,38]. Discipline-specific themes include AI in STEM Education and AI in Language Learning (three articles each), while two studies address Supporting Students with Special Needs [41]. The emerging theme of Generative AI emphasizes innovative applications and points to future directions for the field [40].

Figure 7.

Distribution of research themes.

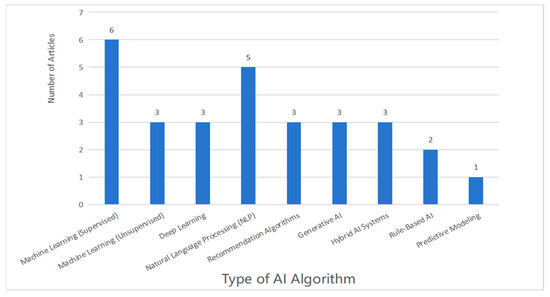

As depicted in Figure 8, supervised machine learning is the most frequently used algorithm type, underpinning six studies (20.7%). It is employed predominantly for tasks such as predicting student performance and categorizing learner behaviors [29,35]. Natural Language Processing (NLP) is the second most prevalent, used in five studies (17.2%) for applications such as language acquisition and writing assistance [7,8]. Unsupervised learning and deep learning each appear in three articles, reflecting their role in clustering and feature extraction tasks [28,39]. Generative AI and hybrid systems are also represented by three studies each, focusing on dynamic content creation and the integration of multiple AI methods [38,40], and recommendation algorithms likewise appear in three studies (one per impact group). Rule-based systems and predictive modeling appear in fewer studies, suggesting opportunities for further exploration of their use in personalized learning and outcome prediction [37,51]. This algorithmic diversity reflects the range of approaches the field uses to address complex challenges in personalized learning.

Figure 8.

Distribution of AI algorithm types.

Across impact levels, research themes and AI algorithms differ in focus and technical sophistication, reflecting shifting priorities and technological trajectories in AI-mediated personalized learning. High-impact studies concentrated on AI tools and applications in education (n = 5) and AI in personalized learning (n = 2), with one study addressing ethical, social, and psychological implications. This concentration suggests that highly cited research prioritizes practical and scalable educational applications while occasionally considering broader societal impacts. Medium-impact studies covered a wider thematic spectrum, including ethical, social, and psychological implications of AI (n = 3), AI in personalized learning (n = 3), AI tools and applications in education (n = 2), AI in engineering and STEM education (n = 2), AI for supporting students with special needs (n = 2), and AI in language and writing education (n = 2). This diversity indicates a balance between applied studies and exploratory research addressing emerging educational contexts and inclusivity challenges. Low-impact studies most often examined AI tools and applications in education (n = 2), supplemented by single studies in engineering/STEM, personalized learning, language and writing education, and ethical/social/psychological domains, reflecting smaller-scale, less thematically cohesive investigations.

Regarding AI algorithms, high-impact studies most frequently employed natural language processing (NLP) (n = 2) and deep learning (n = 2), alongside recommendation algorithms, supervised learning, generative AI, and rule-based systems (each n = 1). The use of advanced AI techniques aligns with their emphasis on innovation and impactful educational interventions. Medium-impact studies exhibited greater algorithmic diversity, with supervised learning (n = 4), unsupervised learning (n = 3), hybrid systems (n = 2), and generative AI (n = 2) leading the list, plus recommendation algorithms, deep learning, rule-based AI, and NLP (each n = 1). This variety reflects exploratory experimentation with different AI methods to address diverse pedagogical challenges. Low-impact studies were dominated by NLP (n = 2), with isolated applications of supervised learning, recommendation algorithms, predictive modeling, and hybrid systems, indicating narrower algorithmic exploration and more limited methodological sophistication.
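The stratum-level counts above can be cross-checked against the overall shares reported for Figure 8. The Python sketch below is a minimal tally that assumes each of the 29 studies is coded under exactly one primary algorithm type; the stratum sizes implied by these counts (8 high-, 15 medium-, and 6 low-impact studies) sum to 29, which is consistent with that assumption. The dictionary keys are illustrative labels rather than the review's own coding scheme, and "rule-based systems" and "rule-based AI" are merged under one key.

```python
from collections import Counter

# Per-stratum algorithm counts as reported in the text.
# Assumption: each study is counted under one primary algorithm type.
HIGH = {"nlp": 2, "deep_learning": 2, "recommendation": 1, "supervised": 1,
        "generative": 1, "rule_based": 1}
MEDIUM = {"supervised": 4, "unsupervised": 3, "hybrid": 2, "generative": 2,
          "recommendation": 1, "deep_learning": 1, "rule_based": 1, "nlp": 1}
LOW = {"nlp": 2, "supervised": 1, "recommendation": 1, "predictive_modeling": 1,
       "hybrid": 1}

N_STUDIES = 29  # size of the reviewed corpus


def overall_distribution(*strata):
    """Sum per-stratum counts; return {algorithm: (count, percent of corpus)}."""
    totals = Counter()
    for stratum in strata:
        totals.update(stratum)  # Counter.update adds counts from a mapping
    return {algo: (n, round(100 * n / N_STUDIES, 1)) for algo, n in totals.items()}


dist = overall_distribution(HIGH, MEDIUM, LOW)

# Print algorithms from most to least frequent.
for algo, (n, pct) in sorted(dist.items(), key=lambda kv: -kv[1][0]):
    print(f"{algo:20s} {n:2d}  {pct:5.1f}%")
```

Run as written, the tally reproduces the 20.7% (supervised learning) and 17.2% (NLP) shares and shows that recommendation algorithms, which the overall summary does not list, also account for three studies.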

3.2. Pedagogical Paradigms or Learning Theories

Across the 29 studies, theoretical grounding was heterogeneous. Roughly one-third of the articles explicitly named a pedagogical or learning theory to frame AI-supported personalized learning, including Activity Theory [37], Constructivism and allied approaches such as design thinking and competency-based education [40], Generative Learning Theory [10], Felder–Silverman learning styles [48], post-humanism/distributed cognition [32], student-centered and project/task-based learning [44], and motivational/learning frameworks such as Basic Psychological Needs/Self-Determination and I-PACE [51]. In these studies, theory typically informed concrete design choices—for example, generative learning guided the embedding of summarizing/organizing/reflecting strategies in AI environments [10], Activity Theory structured the analysis of learner–tool–community interactions in AI-assisted settings [37], and student-centered/PBL rationalized adaptive feedback and task sequencing.

By contrast, about half of the corpus relied on implicit pedagogical assumptions. Many papers operationalized constructivist/socioconstructivist or connectivist logics—e.g., “learn–practice–feedback” cycles and adaptive guidance [33,36], personal learning environments [29], or the 3P model as an interpretive lens for students’ engagement [7]—without naming a formal pedagogy. Several studies adopted a student-centered discourse or emphasized personalization and collaboration [35], yet still treated pedagogy as background rather than a primary design driver. A smaller subset anchored AI-PL primarily in technology-adoption frameworks—TAM, UTAUT, TPB, TRI, and related IS models [27,28,30,31,42]. While these models robustly explain usage intentions and acceptance, they function more as behavioral than pedagogical theories and seldom articulate how instructional strategies (e.g., scaffolding, feedback, social co-construction) are to be structured.

Two notable gaps emerge. First, classical behaviorism was virtually absent, and connectivism appeared mostly implicitly rather than as an explicit design principle. Second, socioformation, which foregrounds social co-construction and quality-of-life outcomes, was only implicitly aligned via needs-based perspectives [51], with no studies explicitly operationalizing socioformative design. Overall, the field skews toward constructivist family logics (often implicit) or adoption/acceptance lenses (explicit), with relatively few studies that explicitly translate a pedagogical paradigm into implementable AI design rules for personalization. This pattern underscores a continuing need for research that (i) makes pedagogical commitments explicit, (ii) links those commitments to AI functionalities (e.g., recommendation, feedback, collaboration orchestration), and (iii) evaluates impacts on higher-order outcomes in a theoretically coherent manner.

3.3. Sustainable Development and Equity

The reviewed studies show limited but varied engagement with sustainable development goals (SDGs), equity, and accessibility, with only a minority explicitly addressing these dimensions in design or evaluation. Using the operational categories (A–E) defined for this review, the distribution is as follows: 1 study explicitly mentions an SDG (Category A), 7 studies explicitly foreground equity or inclusion (B), 8 studies raise implicit ethical or fairness concerns without operationalizing them (C), 10 studies give little or no attention to such issues (D), and 3 studies make only indirect connections to broader social or educational benefits (E).
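The category shares can be made explicit with a short calculation. This Python sketch simply restates the reported counts (the single-letter keys mirror Categories A–E, and the comments paraphrase their definitions) and checks that they partition the 29-study corpus; the helper name `share` is hypothetical.

```python
# Reported counts per operational category for the 29 reviewed studies.
CATEGORY_COUNTS = {
    "A": 1,   # explicitly mentions an SDG
    "B": 7,   # explicitly foregrounds equity or inclusion
    "C": 8,   # implicit ethical/fairness concerns, not operationalized
    "D": 10,  # little or no attention to these issues
    "E": 3,   # only indirect links to broader social or educational benefits
}

TOTAL = sum(CATEGORY_COUNTS.values())
if TOTAL != 29:
    raise ValueError(f"categories should partition the 29-study corpus, got {TOTAL}")


def share(*cats):
    """Combined count and percentage (one decimal) for one or more categories."""
    n = sum(CATEGORY_COUNTS[c] for c in cats)
    return n, round(100 * n / TOTAL, 1)


for cat in CATEGORY_COUNTS:
    n, pct = share(cat)
    print(f"Category {cat}: {n:2d} studies ({pct:4.1f}%)")

print("Explicit engagement (A + B):", share("A", "B"))
print("Little or indirect attention (D + E):", share("D", "E"))
```

Under this calculation, explicit engagement (A + B) covers 8 of 29 studies (27.6%), the minority referred to in the text, while Categories D and E together cover 13 studies (44.8%).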

First, the explicitly inclusion-oriented studies (B) illustrate concrete ways in which social aims can shape AI-PL design. For example, Ou, Stöhr [32] integrate AI-language tools to support dyslexic, ADHD, autistic, and L2 learners, pairing technical design with recommendations for institutional policies on privacy and bias. Zingoni, Taborri [41] create a machine-learning classifier explicitly for students with dyslexia, framing it as an assistive, accessibility-focused intervention. Similarly, Țală, Muller [40] propose AI pathways customized for learners with impairments, grounded in UNESCO/OECD guidelines, while Chang [43] designs an inclusive course recommender serving over 5600 students across diverse disciplines. These studies reframe AI features from pure optimization toward assistive personalization, evaluate representativeness in sample selection, and couple technical innovation with policy-oriented recommendations, demonstrating a deliberate alignment between AI affordances and inclusion goals.

Second, studies in the implicit/ethical cluster (C) surface concerns relevant to equity but stop short of embedding them as design requirements. For instance, Chan and Hu [7] highlight student fears that AI might “widen the gap between rich and poor,” Bouteraa, Bin-Nashwan [30] focus on academic-integrity risks, and Zhong, Luo [51] discuss AI dependency and mental-health vulnerabilities in particular subgroups. Although these contributions shape the discourse on responsible deployment by advocating integrity policies, balanced usage, and ethical safeguards, they seldom quantify accessibility outcomes or integrate adaptive features designed to mitigate disadvantage. As such, they occupy a middle ground between equity awareness and concrete, equity-driven innovation.

Finally, a plurality of studies (Categories D and E) either omit social-development considerations or address them only indirectly. Category D (n = 10) papers prioritize technical performance, adoption, or pedagogical efficacy without explicit attention to SDGs, equity, or the digital divide [27,28,39]. Category E (n = 3), including Kong, Ning [44] and Wang, Aguilar [49], links AI to improved teaching quality or student decision-making, implying potential societal benefits but without operationalizing equity in the design. This concentration on functionality within relatively well-resourced contexts raises the risk that AI interventions, if transferred to underserved settings without adaptation, may fail to reduce or could even exacerbate existing disparities.

3.4. Instructional Innovation Strategies

Across the reviewed literature, explicit integration of innovative instructional strategies—such as project-based learning (PBL), STEAM programs, socioformative projects, design thinking, gamification, or Universal Design for Learning (UDL)—is relatively uncommon. Only a minority of works adopt sustained, pedagogy-driven designs, including project-based or socioformative approaches [38,42,44] and design-thinking frameworks [40]. Other innovative practices appear in gamified micro-learning contexts [35,38], AI-assisted writing pedagogies [30,47,49], and inclusion-oriented practices drawing on UDL principles [32,41]. The majority of studies, however, focus on adaptive pathways and recommendation systems without embedding them into a comprehensive pedagogical redesign [33,36,39,43].

Where innovative strategies are adopted, AI predominantly functions as a scaffolding and orchestration mechanism rather than replacing the pedagogy itself. Reported supports include intelligent task recommendation, adaptive sequencing, formative assessment automation, group formation, affective state detection, and AI-generated content. For instance, dialogue-based project support guided learners through multi-stage PBL tasks [44], while AI-driven grouping algorithms and tailored resource recommendations enhanced collaborative creativity in socioformative contexts [38]. In AI-assisted writing, large language models provided real-time feedback, stylistic refinement, and idea generation [8,49], whereas gamification designs leveraged AI to adapt challenge levels and feedback loops [35]. Inclusive learning systems applied classification and recommendation models to match instructional resources to the needs of students with learning difficulties [32,41]. Nevertheless, many technically sophisticated studies [39,45] do not reconfigure classroom activity structures and rarely provide detailed task scripts, assessment rubrics, or collaboration protocols. As a result, AI in personalized learning currently serves more to support existing pedagogical practices than to catalyze comprehensive instructional transformation.
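To make the idea of adapting challenge levels concrete, the following is a minimal, hypothetical Python sketch of a rule-based staircase: the level rises after a run of correct responses and falls after an error. The class name, thresholds, and level range are illustrative assumptions; this is not the algorithm used in [35] or in any other reviewed study, which may rely on learned models rather than fixed rules.

```python
from dataclasses import dataclass, field


@dataclass
class ChallengeAdapter:
    """Hypothetical rule-based staircase for adjusting a challenge level.

    The level rises by one after `up_after` consecutive correct responses and
    falls by one after any incorrect response, clamped to [min_level, max_level].
    """
    level: int = 1
    min_level: int = 1
    max_level: int = 5
    up_after: int = 2
    _streak: int = field(default=0, init=False, repr=False)  # consecutive correct answers

    def record(self, correct: bool) -> int:
        """Update the level from one response and return the new level."""
        if correct:
            self._streak += 1
            if self._streak >= self.up_after:
                self.level = min(self.level + 1, self.max_level)
                self._streak = 0
        else:
            self.level = max(self.level - 1, self.min_level)
            self._streak = 0
        return self.level


# Usage: a learner answers four items correctly, misses one, then succeeds again.
adapter = ChallengeAdapter()
levels = [adapter.record(c) for c in [True, True, True, True, False, True]]
print(levels)  # [1, 2, 2, 3, 2, 2]
```

A fixed rule of this kind is easy for instructors to inspect and explain, which supports pedagogical alignment; a data-driven model could replace it, at some cost to transparency.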

3.5. Impacts of AI on Personalized Learning Outcomes and Higher-Order Skills

Only a small subset of studies provides objective evidence of academic performance gains through experimental or quasi-experimental designs. Specifically, AI-assisted interventions produced significant pre–post improvements in multiple subjects [35] and enhanced artwork appreciation as measured by rubric-based evaluation [33]. Similarly, in writing instruction, AI support was associated with improved product quality, though researchers also warned of potential risks to personal expression [49]. Complementing these findings, learning-analytics studies linked AI-mediated behaviors, such as producing cognitively substantive forum posts, to higher final grades, suggesting that AI-enhanced engagement can predict academic achievement [29].

In contrast, a larger body of research reports perceived gains in higher-order skills, such as critical thinking, creativity, collaboration, and self-directed learning, typically measured through surveys, interviews, or qualitative analysis [8,10,32,34,38,44,46]. While these findings highlight promising learner experiences, they often lack standardized performance metrics or longitudinal validation. Moreover, many studies emphasize system-level outcomes, including algorithmic accuracy (e.g., RMSE, NDCG) or adoption intentions, rather than direct educational impact [27,28,36,43,48]. Additionally, risk-oriented investigations caution that reliance on generative AI may undermine originality, integrity, and critical-thinking skills, underscoring the need for ethical guidelines and metacognitive scaffolds [7,37,51].
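Because several studies report system-level proxies rather than learning gains, it may help to spell out what two of the cited metrics compute. The Python sketch below uses common textbook definitions: RMSE summarizes the size of prediction errors (for example, predicted versus observed grades), and NDCG@k scores how closely a recommender's ranking matches an ideal ordering by relevance. The function names and toy values are hypothetical, and the reviewed studies may use variants (e.g., exponential gain in NDCG, or an ideal ranking computed over all relevant items rather than only the returned list).

```python
import math


def rmse(predicted, actual):
    """Root-mean-square error between predicted and observed values."""
    if not predicted or len(predicted) != len(actual):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


def dcg(relevances):
    """Discounted cumulative gain with linear gain: sum of rel_i / log2(i + 1), i from 1."""
    return sum(rel / math.log2(rank + 1) for rank, rel in enumerate(relevances, start=1))


def ndcg_at_k(ranked_relevances, k):
    """NDCG@k: DCG of the system's top-k divided by DCG of the ideal top-k ordering.

    The ideal ordering is built from the same list of relevance labels,
    i.e. it assumes every relevant item appears somewhere in the ranked list.
    """
    ideal_dcg = dcg(sorted(ranked_relevances, reverse=True)[:k])
    return dcg(ranked_relevances[:k]) / ideal_dcg if ideal_dcg > 0 else 0.0


# Hypothetical toy data: predicted vs. observed grades on a 0-5 scale,
# and graded relevance (0-3) of five recommended resources in ranked order.
print(round(rmse([3.0, 2.5, 4.0], [3.0, 3.0, 3.5]), 3))
print(round(ndcg_at_k([3, 1, 2, 0, 3], k=5), 3))
```

Neither number refers to learning: a recommender can rank resources exactly as its relevance labels dictate (NDCG@k = 1.0) without any evidence that learners who followed those recommendations learned more, which is why such metrics function as proxies rather than educational outcomes.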

Taken together, the literature reveals an uneven evidence base: there is emerging evidence of academic achievement gains in targeted contexts and of self-perceived skill development across several domains, but much of the research remains indirect, relying on perceptions or system proxies. Consequently, the transformative potential of AI for fostering higher-order learning outcomes has yet to be demonstrated through rigorous, longitudinal, and transfer-sensitive evaluations.

3.6. Interdisciplinary and Transdisciplinary Collaboration

The extent of interdisciplinary engagement across the reviewed studies varied markedly, ranging from genuine transdisciplinary integration to superficial disciplinary juxtaposition. In more advanced cases, education researchers and computer scientists collaborated in co-design processes that embedded pedagogical principles directly into computational models, thereby shaping both system architecture and instructional design [36,41,52]. Such studies exemplify how transdisciplinary practice can generate research designs that are simultaneously pedagogically valid and technically robust. By contrast, a larger proportion of investigations displayed only limited interdisciplinarity, where diverse disciplinary expertise was present but operationalized in parallel rather than integrative ways—for example, reporting algorithmic performance alongside learner surveys without demonstrating cross-domain synthesis [27,38,40]. At the minimal end, some projects remained confined within a single disciplinary lens, either technical or educational, offering little evidence of cross-field interaction [44].

The depth of collaboration demonstrably shaped research implementation and outcomes. Stronger interdisciplinary integration produced methodologically richer studies, combining psychometric assessment, system validation, and classroom observation to enhance ecological validity and pedagogical relevance [10,43]. These designs not only improved the interpretability of AI-driven personalization but also situated findings within authentic educational contexts. Conversely, studies with weaker collaboration tended to yield fragmented insights, where technical performance metrics lacked pedagogical translation or educational implications remained under-theorized [28,35]. Overall, while interdisciplinary participation is increasingly visible, sustained transdisciplinary co-design—where disciplinary boundaries are transcended to produce genuinely integrative frameworks—remains the exception rather than the norm.

4. Discussion

This discussion synthesizes core findings in the literature from SSCI Q1 journals (top 25% most-cited in social sciences) published between January 2020 and December 2024 in the Web of Science database, focusing on AI-personalized learning (AI-PL) integration in higher education. The review mapped global trends, pedagogical paradigms, sustainability and equity considerations, instructional innovations, learning outcomes, and interdisciplinary collaboration, offering a comprehensive account of the field’s trajectory. Results reveal a strong geographical concentration in Asia, particularly China, alongside disciplinary dominance of education and computer science. Methodologically, quantitative designs and supervised learning algorithms prevail, while explicit theoretical grounding and systematic engagement with equity remain limited. Although AI demonstrates clear potential to enhance academic outcomes, higher-order skills, and innovative strategies such as PBL, STEAM, and gamification, risks of cognitive disengagement and diminished autonomy emerge when pedagogical and ethical safeguards are absent. Furthermore, interdisciplinary collaboration is expanding but remains fragmented, constraining the design of integrative, human-centered AI systems. Collectively, these patterns highlight both the transformative promise and unresolved challenges of AI adoption in higher education.

4.1. Countries and Disciplines

High-impact studies were predominantly conducted in China and Malaysia, reflecting strong research momentum in these regions, particularly within Education, Educational Technology, and Computer Science. Medium-impact studies showed broader geographic and disciplinary diversity, suggesting that AI-PL adoption is spreading across multiple fields and regions, though with somewhat less pronounced impact on measurable learning outcomes. Low-impact studies appear to represent exploratory or emerging efforts whose educational effectiveness may be context-specific or constrained by limited methodological rigor. The dominance of Asia in AI-PL research reflects the region’s significant investment in educational technology and innovation, with China accounting for almost half of the reviewed studies [29,35]. The geographical concentration of AI-PL research in China reflects a confluence of policy-driven initiatives, infrastructural advantages, and academic ecosystem dynamics. First, given China’s substantial investment in AI research and its strategic emphasis on integrating advanced technologies into educational frameworks, AI-driven education has become a robust domestic focus [35,38]. For instance, under government guidance, AI technology industrialization has increased markedly, and the Chinese government has used various forms of support to foster enterprise innovation in AI technology, reducing redundancy in education and enhancing the effectiveness of artificial intelligence in the educational domain [53]. Second, as the world’s largest higher education system, with 44.3 million students [54], China has a structural advantage for data-driven AI applications, providing training datasets unparalleled in Western contexts. Third, differences between Eastern and Western academic ecosystems profoundly influence the distribution of research directions.
Chinese researchers may prefer to publish in high-ranking journals, potentially because of a research assessment system in which Q1 journal papers carry significant weight in university research evaluations, especially at “Double First-Class” universities [55].

Meanwhile, countries like South Korea, India, and Malaysia demonstrate their growing capacity for impactful contributions, leveraging AI to address unique educational challenges. However, limited representation from regions like North America and South America highlights disparities in resource allocation and technological infrastructure [48]. Europe’s moderate presence, particularly from Italy and Sweden, underscores a focus on ethical and multidisciplinary AI applications [32,41]. The scattered distribution outside Asia indicates that while there is global engagement with AI in personalized learning, the efforts are not yet as concentrated or extensive as those in Asia. Overall, the global distribution of authors signifies an expanding and diversified effort to integrate AI into personalized learning across higher education. However, the regional imbalance highlights the need for increased collaboration and knowledge exchange between dominant regions like Asia and other parts of the world to foster a more balanced and inclusive advancement of AI-driven personalized learning. For instance, China’s AI systems, motivated by government policies, have significantly reformed education by integrating AI into curricula and mandating partnerships between AI companies and educational institutions [56]. Conversely, Europe’s adherence to the General Data Protection Regulation (GDPR) emphasizes data privacy, influencing AI deployment in education to prioritize user consent and data protection [57]. Contrasting China’s centralized AI systems with Europe’s GDPR-compliant models can yield transferable insights, such as how to balance innovation with privacy, to guide global AI integration in education.

The interdisciplinary nature of AI-PL research is evident in contributions from 13 distinct fields. Education and computer science dominate, reflecting their foundational role in developing and applying AI technologies for personalized learning. This dominance is unsurprising, given that education provides the pedagogical framework necessary for implementing AI-driven personalized learning strategies. Education research prioritizes accessibility and learner engagement, examining how AI tools address diverse learning needs [7,37]. Conversely, computer science offers the technical expertise required to develop and refine AI algorithms and systems, which advances the technical infrastructure, such as predictive models and adaptive algorithms [29,45]. By integrating perspectives from psychology, social sciences, and educational technology, these studies address the complex interplay between technology and human factors in learning processes [42,51]. For example, one study demonstrates how AI tools can influence students’ creativity and emotional engagement, highlighting the importance of considering psychological dimensions in the design and implementation of AI-driven educational tools [38]. This interdisciplinary approach not only enriches the research but also ensures that AI applications are holistic and considerate of diverse learner needs. Engineering-focused studies, for instance, are pivotal in developing advanced course recommendation systems and adaptive learning technologies that enhance the personalization of learning experiences [43,48]. Similarly, research in the arts explores innovative ways to use AI to foster creativity and engagement, thereby broadening the scope of personalized learning beyond traditional academic subjects [33]. Social Sciences research plays a critical role in addressing the ethical and societal implications of AI in education, ensuring that AI applications are fair, responsible, and beneficial to all stakeholders [30]. 
Despite this breadth, areas like nursing and language education remain underexplored, signaling opportunities for expanding research into specialized domains where AI could address unique challenges [8,44]. At the same time, fostering greater geographical diversity is critical to ensure a truly inclusive research landscape. Underrepresented regions such as Africa and South America bring unique cultural and educational contexts that could enrich the understanding of AI applications. Expanding collaborative networks and ensuring equitable resource distribution would not only enhance diversity but also generate innovative approaches to AI integration, ultimately advancing the global sustainability and inclusivity of personalized learning.

4.2. Research Methods, Sample Sizes, and Data Sources

Impact patterns indicate that high-impact studies tend to balance methodological rigor with sufficient sample sizes to achieve robust, generalizable findings, while medium- and low-impact studies explore broader methodological diversity but may face limitations in scale or consistency, highlighting an ongoing trend toward methodological refinement in AI-enhanced personalized learning research. The methodological approaches in AI-PL research reveal a strong emphasis on quantitative studies, particularly survey-based research. This approach accounts for over half of the reviewed studies, likely because of the scalability and generalizability that quantitative methods offer, allowing researchers to survey large samples and identify significant patterns in learner behaviors and outcomes [7,28]. Computational methods, such as machine learning and predictive analytics, are also prevalent, enabling researchers to analyze large datasets and uncover trends in personalized learning outcomes [39,45]. However, the relatively limited application of experimental and quasi-experimental designs highlights a gap in rigorous causal investigations. These designs are critical for assessing the effectiveness of AI interventions under controlled conditions [35,46]. Qualitative methods, though less common, provide valuable insights into learners’ and educators’ experiences with AI, often complementing quantitative data to offer a richer understanding of the technology’s impact [32,44]. By integrating quantitative and qualitative data, mixed-methods studies provide a more holistic understanding of AI’s role in personalized learning, capturing both statistical trends and individual experiences [27,38]. This approach is particularly valuable in addressing the multifaceted challenges of AI integration, ensuring that technological advancements are aligned with pedagogical goals and learner needs.
Future research should adopt mixed-method approaches to balance empirical rigor with contextual depth, addressing the complexities of AI integration in education.

The diversity in sample sizes across the reviewed studies reflects the flexibility of AI-PL research in addressing various research objectives. Small sample sizes, typically involving fewer than 50 participants, are commonly used in exploratory or pilot studies to gain detailed insights into specific educational settings [33,44]. These smaller studies provide rich, nuanced understandings of individual learner experiences and the effectiveness of AI tools in personalized learning settings, thereby offering depth that large-scale quantitative studies may overlook. Moderate sample sizes, ranging from 300 to 999 participants, are the most prevalent, striking a balance between feasibility and generalizability [30,31]. Large-scale studies, leveraging datasets with over 1000 participants, exemplify the potential for big data analytics to enhance personalized learning [27,34]. The presence of a mega-scale study with over 5000 participants highlights the emerging trend of leveraging big data analytics in personalized learning research [43]. Such studies utilize advanced machine learning techniques and predictive modeling to analyze large datasets, enabling the identification of significant trends and the development of scalable AI solutions. This approach not only enhances the statistical robustness of findings but also facilitates the application of AI tools across extensive educational contexts, promoting scalability and adaptability.

The reviewed studies highlight a predominant reliance on surveys and secondary datasets as primary data sources. Surveys, utilized in 17 studies, are particularly effective for capturing large-scale learner perspectives on AI-PL systems, emphasizing subjective experiences and usability metrics [7,28]. Surveys and questionnaires facilitate the collection of structured, standardized data, enabling robust statistical analyses which inform the effectiveness of AI interventions in diverse learning environments [30,31]. Secondary datasets, used in six studies, demonstrate the growing adoption of machine learning techniques to analyze pre-existing data, such as academic performance metrics and behavioral logs [36,39]. By utilizing pre-collected data, researchers can conduct large-scale analyses without the logistical challenges of primary data collection, thereby enhancing the depth and breadth of their investigations. Conversely, interviews and multimedia data are less frequently employed, appearing in three and two studies, respectively, but provide valuable qualitative insights into learner interactions and experiences [37,44]. Social media data, utilized in only one study, reflects an emerging trend of leveraging digital platforms for real-time educational analytics [29]. This trend aligns with the increasing digitization of education, where understanding online behaviors and interactions can provide valuable insights into the effectiveness and adoption of AI tools in real-world settings.

4.3. Research Themes and Types of AI Algorithms

Thematic trends in AI-PL research highlight its multidimensional nature, with seven dominant themes emerging from the reviewed studies. High-impact research tends to concentrate on high-value educational applications employing advanced AI algorithms, medium-impact studies explore both thematic and algorithmic diversity, and low-impact studies display a narrower focus and less innovative algorithmic use, revealing clear trends in both research priorities and technological approaches within AI-enhanced personalized learning. AI Tools and Applications in Education, the most prevalent theme, focuses on practical implementations like learning management systems, recommendation algorithms, and adaptive feedback mechanisms [28,29]. The studies within this category often focus on the integration of AI into classroom activities, its effectiveness in supporting learning outcomes, and its potential to transform instructional design. These tools demonstrate AI’s capacity to optimize teaching strategies and improve learning achievement. AI in Personalized Learning, a closely related theme, emphasizes tailoring educational experiences to individual needs, leveraging adaptive learning systems to provide customized feedback and resources [7,27]. This theme encompasses studies investigating AI-driven adaptive learning systems, personalized feedback mechanisms, and the customization of curricula to address diverse learner profiles. Ethical and social considerations have also gained traction, with studies exploring issues such as algorithmic bias, data privacy, and emotional impacts on learners [30,38]. The broader impacts of AI integration, including ethical concerns, psychological effects on learners, and the socio-cultural implications of widespread AI adoption in education, also constitute a prominent theme. Emerging themes, such as AI in STEM and Language Education, highlight discipline-specific applications but remain underexplored relative to broader themes [8,36].
Niche themes, including Generative AI and Supporting Students with Special Needs, reflect innovative directions but require further investigation to ensure equitable AI integration [40,41]. The trends indicate a progression towards more sophisticated, integrative, and ethically conscious approaches to AI, highlighting the dynamic and multifaceted nature of AI’s role in transforming higher education.

The reviewed studies reveal the extensive application of diverse AI algorithms, showcasing the field’s adaptability in addressing educational challenges. Supervised machine learning emerges as the most used algorithm, particularly for tasks involving predictive analytics and learner classification [29,35]. This aligns with the goal of many studies to enhance educational outcomes through data-driven decision-making and tailored interventions. Natural Language Processing (NLP) is also prominent, facilitating applications in language education and academic writing by offering real-time feedback and automated content analysis [7,8]. Furthermore, emerging algorithms, such as deep learning and hybrid systems, represent a shift towards more sophisticated models capable of analyzing unstructured data and integrating multiple functions [38,39]. Generative AI tools, while less common, demonstrate potential for dynamic content creation and personalized learning materials [40,42]. The incorporation of recommendation algorithms, generative AI, and hybrid AI systems signals a move towards more interactive and personalized learning environments, where AI not only predicts outcomes but also generates tailored content and integrates multiple AI techniques for enhanced functionality. However, the limited use of rule-based AI and predictive modeling suggests that these approaches remain niche, potentially because their reliance on predefined rules and specific data requirements may limit their flexibility and scalability in diverse educational contexts [37,51]. The increasing reliance on advanced algorithms, such as deep learning and generative AI, highlights the field’s commitment to innovation while underscoring the need for rigorous evaluations of their educational impact.

4.4. Pedagogical Paradigms in AI-Supported Personalized Learning

Pedagogical paradigms in AI-supported personalized learning are predominantly implicit and constructivist in orientation, while explicitly theorized and innovative frameworks remain underutilized. First, the implicit constructivist mainstream lacks clarity and depth. Most studies operationalize personalization via adaptive sequencing, feedback loops, or self-regulatory mechanisms without explicitly articulating their pedagogical underpinnings [7,29,33,34,35]. Such designs often equate PL with efficiency gains and user engagement while under-specifying instructional mechanisms (e.g., types of scaffolding, modes of social orchestration). As a result, they tend to focus on proximal indicators, such as learner perceptions or platform usage. Although these implementations imply a learner-centered, constructivist logic, their lack of explicit theoretical framing reduces transparency and replicability, making cross-study synthesis of instructional mechanisms more difficult.

Second, explicitly theorized studies articulate richer objectives and achieve closer alignment between pedagogy and AI design. A smaller group of studies explicitly adopts and operationalizes frameworks such as Activity Theory, Generative Learning Theory, post-humanism, Human-Centered AI, design thinking, and competency-based education [10,32,37,40,43]. This explicit theory–design mapping makes it clearer how specific AI functionalities, such as feedback timing, recommendation logic, or collaborative task structuring, serve pedagogical goals, which in turn enables richer evaluation of higher-order learning outcomes such as creativity and ethical reasoning.

Third, notable gaps remain in inclusion-oriented paradigms and in theory–tool integration. The literature rarely incorporates socioformation or Universal Design for Learning in an explicit, systematic manner. A partial exception is Zhong, Luo [51], whose implicit use of Basic Psychological Needs theory gestures toward socioformative goals. When inclusion is considered (e.g., the focus of Zingoni, Taborri [41] on dyslexia support), it is treated primarily as assistive technology rather than as part of a theoretically framed inclusive pedagogy. Similarly, while adoption and acceptance models (e.g., TAM, UTAUT, TPB) are used to explain user engagement, they are seldom integrated with learning theories that specify how personalization should be pedagogically enacted [27,28,30,31,42]. This disconnect limits the field’s capacity to address broader educational aims, particularly in underserved or non-Western contexts, and to design AI-supported PL that is both inclusive and pedagogically robust.

Future research on pedagogical paradigms in AI-supported personalized learning should prioritize explicit theorization and operationalization of instructional frameworks. Rather than relying on implicit constructivist assumptions, systems should deliberately embed paradigms such as socioformation, Universal Design for Learning (UDL), or generative learning, aligning adaptive algorithms with concrete pedagogical objectives—for example, incorporating collaborative knowledge-building analytics to scaffold social learning processes [58]. In parallel, adoption models like UTAUT must be paired with robust educational theories such as design thinking or activity theory, thereby enabling evaluation not only of user acceptance but also of underlying learning mechanisms and reflective practices [59]. Finally, AI-PL systems should be assessed against inclusive and higher-order goals—critical thinking, creativity, equity, and emotional regulation—rather than performance metrics alone. For instance, a UDL-informed AI tutor could integrate multimodal resources and scaffolded challenges tailored to neurodiverse learners, while systematically measuring growth in autonomy, engagement, and decision-making [60].

4.5. Sustainable Development and Equity

The corpus reveals a persistent disconnect between the technical and learning-outcome focus of most AI-PL research and the broader social objectives of sustainable development and educational equity. Explicit integration of frameworks such as the United Nations Sustainable Development Goals, notably SDG 4 (quality education), remains rare, and only a small number of studies place equity considerations at the core of their design or evaluation. Where equity and inclusion are made explicit, the literature offers promising yet limited examples. Research targeting accessibility, such as the work of Zingoni, Taborri [41] on dyslexia support; Ou, Stöhr [32] on adaptive AI learning tools for students with reading difficulties, ADHD, autism, or second-language needs; and Țală, Muller [40] on custom AI approaches aligned with UNESCO/OECD guidelines, demonstrates how personalization can shift from performance optimization toward assistive and socially responsive functions. These works highlight practical mechanisms, including adaptive scaffolds, multimodal delivery, and institutional policy integration, that can broaden participation. However, such efforts remain a minority, are often confined to narrow user groups or institutional settings, and rarely translate SDG targets into measurable educational outcomes such as retention rates or equitable attainment.

Most studies emphasize academic and technical indicators such as model accuracy, learner engagement, and adoption rates, whereas equity and public-good considerations receive only peripheral attention. Studies focused on prediction, recommendation, or adoption frequently omit disaggregated analyses by socio-economic status, disability, or geographic location, limiting the ability to assess distributive impacts [27,28,39,45]. Ethical or fairness issues are occasionally acknowledged [7,30,51], yet such concerns rarely translate into design safeguards or targeted evaluation. Even when the digital divide is mentioned [10,38,47], the discussion is typically brief and lacks empirical measurement in underserved contexts. The result is a body of work that is methodologically sophisticated in technical validation but underdeveloped in assessing whether AI-PL advances equity-related outcomes.

Advancing AI-supported personalized learning requires positioning sustainable development and equity at the center of research design and evaluation [61,62]. This entails embedding SDG-aligned indicators, such as equitable participation, retention, and inclusive attainment, into study frameworks, with systematic disaggregation of outcomes by socio-economic status, disability, language proficiency, and geography [63]. Participatory and co-design approaches with marginalized learners, as illustrated in Zingoni, Taborri [41] and Ou, Stöhr [32], are essential to ensure contextual relevance and to align innovation with the lived realities of target groups. Equally important are longitudinal and multi-site field trials in resource-constrained settings, which can establish scalability and external validity and uncover unintended distributional effects. For example, explicitly setting equity targets, co-developing AI tools with underserved learners, and tracking both achievement and access patterns across multiple terms would enable AI-PL initiatives to function not merely as technological advances but as drivers of inclusive and sustainable educational transformation [64].
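To make the proposed disaggregation of outcomes more concrete, the following is a minimal sketch that computes a retention rate separately for each learner subgroup and reports the gap between the best- and worst-served groups. It rests on stated assumptions: the `LearnerRecord` fields, the subgroup labels, and the toy data are hypothetical and are not drawn from any reviewed study, and a real analysis would additionally need adequate subgroup sample sizes and uncertainty estimates.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LearnerRecord:
    # Hypothetical record: a subgroup label (e.g. disability status) and one outcome.
    learner_id: str
    group: str
    retained: bool


def retention_by_group(records):
    """Return {group: retention rate}, computed separately for each subgroup."""
    totals = defaultdict(int)
    retained = defaultdict(int)
    for r in records:
        totals[r.group] += 1
        if r.retained:
            retained[r.group] += 1
    return {g: retained[g] / totals[g] for g in totals}


def equity_gap(rates):
    """Difference between the highest and lowest subgroup retention rates."""
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Illustrative toy data only; these are not results from any reviewed study.
    records = [
        LearnerRecord("a1", "no_disability", True),
        LearnerRecord("a2", "no_disability", True),
        LearnerRecord("a3", "no_disability", True),
        LearnerRecord("a4", "no_disability", False),
        LearnerRecord("b1", "disability", True),
        LearnerRecord("b2", "disability", False),
    ]
    rates = retention_by_group(records)
    print(rates)              # {'no_disability': 0.75, 'disability': 0.5}
    print(equity_gap(rates))  # 0.25
```

An aggregate retention rate for these six learners (4 of 6) would hide the 25-point gap between the two subgroups, which is the kind of distributive effect the disaggregated indicators are meant to surface. The same pattern extends to other outcomes (e.g. attainment) and other grouping variables (e.g. language proficiency or geography), and can be repeated per term for longitudinal tracking.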

4.6. Instructional Innovation Strategies

The integration of AI into personalized learning shows a marked divergence between studies adopting innovative instructional strategies (such as PBL, STEAM, and gamification) and those retaining conventional, teacher-led approaches, with the former generally demonstrating greater pedagogical depth and learner engagement. Studies employing innovative strategies often describe richer, multi-dimensional learning experiences in which AI acts as both a scaffolding and an orchestration mechanism. For example, AI-supported project-based designs facilitated complex task decomposition, collaborative grouping, and adaptive feedback loops that sustained inquiry and creativity over extended periods [38,44]. In gamification contexts, AI-driven adaptivity enhanced challenge–skill balance, promoted motivation, and enabled real-time personalization [35]. STEAM-oriented applications leveraged AI for dynamic resource recommendation and cross-disciplinary content integration, aligning technological affordances with creative problem-solving goals [40]. Such studies typically provided at least partial documentation of instructional design, activity sequencing, and AI–pedagogy alignment, yielding more compelling evidence of enhanced engagement, skill development, and learner autonomy compared with control or baseline conditions.

By contrast, research that retained traditional instructional paradigms—often characterized by lecture-based delivery or individual drill-and-practice—tended to integrate AI as a peripheral enhancement rather than as a co-driver of pedagogical change. In these cases, AI tools were frequently limited to automating feedback, recommending resources, or providing surface-level adaptivity without reconfiguring the underlying learning model [36,39,45]. Although such approaches occasionally reported efficiency gains or modest improvements in test performance, they rarely demonstrated broader competencies such as collaboration, creativity, or critical thinking. Moreover, the absence of detailed implementation protocols and the lack of authentic, problem-based tasks limited the capacity of these studies to show transformative learning effects. This contrast underscores that AI’s potential to amplify personalized learning is most fully realized when paired with thoughtfully designed, innovative instructional strategies rather than appended to conventional formats.

Moving beyond surface-level adaptivity, future studies need to investigate how AI can be systematically embedded in the design and enactment of innovative pedagogies. This entails not only using AI to automate feedback or sequence content but also aligning it with deeper instructional redesigns, such as AI-supported project scaffolds that foster long-term inquiry, or adaptive gamification systems that sustain learner motivation across diverse contexts [65]. For example, a socioformative project could leverage AI to dynamically group learners based on evolving competencies and generate tailored collaborative tasks, thereby addressing both cognitive growth and social equity [66]. By advancing such models, future work can better demonstrate how AI contributes to transformative learning outcomes that extend beyond efficiency or test performance.
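The competency-based grouping in the socioformative example can be illustrated with a simple heuristic. The sketch below is an assumption for illustration, not a method reported in the reviewed studies: it takes hypothetical competency scores, ranks learners, and deals them into groups in serpentine ("snake") order so that each group mixes stronger and weaker learners. Re-running it whenever scores are updated gives a crude form of dynamic regrouping; generating the tailored collaborative tasks is outside its scope.

```python
def snake_groups(scores, n_groups):
    """Split learners into n_groups mixed-competency groups.

    scores: dict mapping a (hypothetical) learner id to a competency score,
    where a higher score means a stronger learner. Learners are ranked by
    score and dealt out in serpentine order (0, 1, ..., n-1, n-1, ..., 1, 0,
    ...) so that group score totals stay roughly balanced.
    """
    if n_groups < 1:
        raise ValueError("n_groups must be at least 1")
    # Rank learners from strongest to weakest (ties keep insertion order).
    ranked = sorted(scores, key=lambda lid: scores[lid], reverse=True)
    groups = [[] for _ in range(n_groups)]
    for i, learner in enumerate(ranked):
        round_idx, pos = divmod(i, n_groups)
        # Reverse the dealing direction on every other round (the "snake").
        idx = pos if round_idx % 2 == 0 else n_groups - 1 - pos
        groups[idx].append(learner)
    return groups


if __name__ == "__main__":
    # Hypothetical competency scores on a 0 to 1 scale.
    scores = {"ana": 0.9, "ben": 0.8, "cy": 0.6, "dee": 0.5, "eli": 0.3, "fay": 0.2}
    print(snake_groups(scores, 2))  # [['ana', 'dee', 'eli'], ['ben', 'cy', 'fay']]
```

With these scores the two groups total 1.7 and 1.6, so neither concentrates all of the strongest learners. Serpentine assignment is chosen here only because it is simple and transparent; a deployed system would likely also weigh social and equity criteria (e.g. avoiding isolating a single learner from an underrepresented group), which this sketch does not model.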

4.7. Impacts of AI on Personalized Learning Outcomes and Higher-Order Skills

AI-driven personalized learning has shown positive effects on both academic performance and higher-order skills, particularly in studies employing rigorous designs. Experimental and quasi-experimental studies report significant gains in domain-specific performance: for instance, mathematics, language, and social science scores improved significantly post-intervention [35], while AI-supported art systems improved rubric-assessed artwork quality [33]. In writing contexts, AI-assisted tools improved content quality, although they raised concerns about originality [49]. Additionally, learning-analytics research shows that students who engage in cognitively substantive AI-facilitated discussions, as identified by machine-learning classifiers of forum posts, tend to achieve higher final grades [29]. Many studies also document perceived development of higher-order capacities, such as critical thinking, creativity, metacognition, self-direction, and emotional regulation, through qualitative and survey-based designs [8,10,32,34,38,44,46]. These findings reflect students’ subjective experience of enhanced cognitive and emotional engagement, but they are limited by reliance on self-report and the absence of performance-based validation.

However, alongside these benefits, several studies warn of detrimental effects arising from over-reliance on AI. Learners may experience diminished critical thinking, loss of originality, or weakened cognitive autonomy [7,37,40]. Psychologically, continuous monitoring and frequent AI assessments may heighten performance anxiety, and the lack of human presence may further contribute to emotional disengagement [38]. For teachers, a key barrier is adapting to AI-driven learning environments without sufficient training and guidance on how to integrate AI tools effectively into their teaching [10]. Moreover, AI-driven instruction can reduce teacher–student interaction, making it harder for educators to provide the emotional and psychological support essential for effective learning [38]. Evidence from cognitive neuroscience points to a related risk: Javadi, Emo [67] showed that when individuals followed external guidance rather than planning autonomously, hippocampal and lateral prefrontal activations were markedly reduced, indicating diminished engagement of higher-order cognitive networks. By analogy, excessive reliance on AI in learning may similarly attenuate neural involvement in critical thinking, problem-solving, and creative reasoning, which could help explain the cognitive disengagement described above. Complementary literature emphasizes the risk of cognitive offloading, whereby habitual reliance on AI reduces independent decision-making and analytical thinking [68].

Crucially, future inquiry should design studies that intentionally position AI as a thought partner rather than a cognitive crutch. For example, an intervention could embed “ask-don’t-solve” AI interactions, in which learners must critique AI-generated suggestions before adopting them, within PBL units, measuring both creative problem-solving outcomes and metacognition. Such approaches align with curricular frameworks that advocate scaffolding deep thinking before AI use, thereby safeguarding higher-order cognitive engagement [69]. Additionally, longitudinal mixed-methods studies should assess whether these interventions sustain critical thinking and emotional regulation over time. In doing so, the literature can shift from describing AI’s surface benefits to demonstrating how AI can cultivate deep learning and higher-order capacities when embedded in balanced, ethically framed learning designs.

4.8. Interdisciplinary and Transdisciplinary Collaboration

The landscape of disciplinary collaboration across the reviewed literature is varied, revealing both promising integrative models and largely parallel disciplinary efforts. Several projects exemplify deep interdisciplinarity. For instance, Zingoni, Taborri [41] combine psychology, engineering, and special-education expertise to co-design machine-learning tools tailored for students with dyslexia, ensuring both technological precision and pedagogical accessibility. Similarly, Dann, O’Neill [52] integrate educational psychology and computer science to develop microskill-assessment systems that provide real-time feedback on teaching behaviors, a design that would be difficult to achieve without cross-domain synergy. In such collaborations, integration shapes study design directly: educational researchers define the learning constructs, engineers operationalize those constructs algorithmically, and iterative testing embeds the findings in authentic instructional practice. These arrangements not only strengthen the rigor of system design but also deepen interpretation, anchoring educational inquiry in robust computational frameworks while drawing on cognitive and behavioral theories. Conversely, many studies feature multidisciplinary authorship without detailing integrative mechanisms, reducing interdisciplinary contributions to parallel interpretations rather than co-constructed insights [27,38].