1. Introduction

Deep clustering is a rapidly evolving field within deep learning, and it is particularly impactful in computer vision because its performance approaches that of supervised frameworks, notably supervised classification [1, 2]. We use deep clustering to denote methods that train at least one neural component with a clustering-dependent objective: either (i) end-to-end schemes that update a deep encoder jointly with in-loop cluster assignments (the assigner may be classical, such as the spherical k-means [3] used in ProPos [4], or neural), or (ii) multi-stage schemes that keep a pretrained encoder fixed and train a neural clustering head on its features (such as [2]). Deep clustering methods excel at grouping high-dimensional and complex data into clusters based on similarity without prior knowledge of labels. By leveraging deep neural networks for representation learning, deep clustering discovers meaningful patterns, enhancing performance and applicability in scenarios where manual labeling is impractical.

One of the most straightforward ways to categorize deep clustering methods is into two families: parametric methods and non-parametric methods. Parametric methods fix the number of clusters, K, before training, which can lead to overclustering or underclustering when the true number of clusters is unknown, thus degrading clustering quality [5, 6]. In contrast, non-parametric methods adjust the number of clusters dynamically during training, which aligns more closely with real-world scenarios.

In this context, state-of-the-art (SOTA) models such as DeepDPM [6] and DIVA [7] have demonstrated the effectiveness of an alternating framework in which a feature extractor and a clustering model are trained in turn. This approach improves performance by iteratively refining both the representation and the clustering, and these models therefore represent a significant step forward in the development of non-parametric deep clustering techniques.

The quality of deep clustering is closely tied to the representation learning technique used. While DeepDPM and DIVA perform well on standard metrics, they rely on traditional self-supervised techniques: an autoencoder with a reconstruction loss for DeepDPM, a VAE for DIVA, and a clustering loss for both. Consequently, for large-scale and complex datasets such as ImageNet-50 [8], they adopt a two-step approach in which their models are built on top of the self-supervised model MoCo [9], which uses a contrastive learning framework to generate robust data representations. This separation, however, forfeits the advantages of an end-to-end approach. Integrating a more performant representation learning framework that can also learn the number of clusters in alternation therefore remains an open challenge.

To address these limitations, we propose AutoProPos, an extension of the SOTA deep parametric clustering model ProPos [4]. Like ProPos, AutoProPos belongs to the end-to-end family. AutoProPos incorporates our Clustering Supervisor Module (CLS) in an alternating framework to dynamically adjust the number of clusters, K, needed for ProPos training, which makes it non-parametric. Unlike previous works grounded in the Dirichlet process mixture (DPM) field, our CLS employs a model selection framework: a parametric clustering model is run over a range of specified K values, and the best clustering configuration is selected using an unsupervised metric. Model selection-based frameworks determine the optimal number of clusters K for various classical parametric algorithms, such as spherical k-means [3], KMeans [10], and spectral clustering [11], which makes the K prediction adaptable to different data manifolds. In our case, ProPos produces a uniform representation on a unit hypersphere, a spherical setting rarely explored by DPM-based clustering works (see, e.g., [12]). A common disadvantage of both model selection-based frameworks and DPM-based clustering works is their potential computational cost on high-dimensional data. In CLS, we tackle this issue by remapping ProPos’s latent space into a lower-dimensional space with autoencoders and by training on a subset of the dataset, as further explained in Section 4.1. Thus, our method is efficient and scalable.

Finally, our CLS module selects the optimal K for the directional latent data produced by ProPos based on the silhouette score and our novel index, the Silhouette Uniformity Index (SUI), designed to find the optimal cluster configuration for balanced datasets.

Our contributions can be summarized as follows: (1) We developed the Clustering Supervisor Module or CLS, a scalable module that automatically determines the optimal number of clusters during ProPos training. (2) We introduced the Silhouette Uniformity Index or SUI, a novel metric to evaluate the number of clusters in balanced datasets. (3) Our approach achieved SOTA and competitive results across various datasets against both parametric and non-parametric deep clustering models.

3. Preliminaries: ProPos

As previously introduced, ProPos [4] is a parametric deep clustering model for image clustering that learns its latent representation using a non-contrastive, prototype-scattering framework. The model employs k-means clustering and introduces two new loss functions: the Prototype Scattering Loss (PSL), which separates the clusters, and Positive Sampling Alignment (PSA), which improves within-cluster compactness. This section gives a brief overview of its framework, explaining the architecture, the loss functions, and the training methodology.

3.1. ProPos Architecture

ProPos [4] involves three principal encoders: the online network, the target network, and the predictor network. The weights of the online and predictor networks are updated directly by backpropagating the losses defined in Sections 3.2 and 3.3. As stated by the original ProPos authors, the design follows a BYOL-style online–target–predictor scheme [17]: the online and target networks produce instance representations, and the predictor aligns the online representation with the target one to mitigate representational collapse. The parameters of the target network are updated as a moving average of the parameters of the online network:

$$\theta' \leftarrow m\,\theta' + (1 - m)\,\theta,$$

where $m$ is the momentum hyperparameter, and $\theta$ and $\theta'$ denote the parameters of the online and target networks, respectively.
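The momentum update can be illustrated with a short Python sketch. It is a minimal illustration that assumes parameters are held as plain lists of floats; the function name `ema_update` is hypothetical. A PyTorch implementation would typically apply the same rule in place to each pair of online and target parameter tensors.

```python
from typing import List


def ema_update(target: List[float], online: List[float], m: float) -> List[float]:
    """Return theta' <- m * theta' + (1 - m) * theta, element-wise.

    `target` holds theta' (target network), `online` holds theta (online network).
    Plain lists stand in for real parameter tensors (assumption of this sketch).
    """
    if not 0.0 <= m <= 1.0:
        raise ValueError("momentum m must lie in [0, 1]")
    if len(target) != len(online):
        raise ValueError("parameter lists must have the same length")
    return [m * t + (1.0 - m) * o for t, o in zip(target, online)]


# With m close to 1, the target parameters drift slowly toward the online ones.
target = [0.0, 1.0]
online = [1.0, 1.0]
target = ema_update(target, online, m=0.99)
```

A large momentum keeps the target network a slowly varying average of past online networks, which is what makes it a stable source of targets in BYOL-style training.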

3.2. Prototype Scattering Loss (PSL)

By partitioning the target network’s representations into K clusters, we can compute the cluster centers of both the target and the online network representations, which the authors call prototypes [4]. The PSL loss is subsequently defined as follows:

Here, the networks’ respective prototypes,

and

, are computed within a mini-batch,

B, as follows:

where

is the cluster assignment posterior probability. It is important to note that PSL is computed after a warmup epoch because the learned representations, and thus the cluster centers, may not be well defined during the early stages of training. During this initial period, only PSA is computed.

The PSL loss can be viewed as two distinct components: prototypical alignment, given by the first term, and prototypical uniformity, given by the second term. Prototypical alignment stabilizes the update of the prototypes by enforcing alignment between the two views, and prototypical uniformity enforces a uniform distribution of each prototype set on the unit hypersphere, which maximizes the inter-cluster distance.
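The exact PSL and prototype equations are those of ProPos [4]. As a rough illustration only, the NumPy sketch below assumes that prototypes are posterior-weighted mini-batch means projected onto the unit hypersphere and uses an InfoNCE-style loss over prototypes. Its negative diagonal logit plays the role of prototypical alignment, and its log-sum-exp term plays the role of prototypical uniformity. The temperature `tau`, the function names, and whether the positive pair is included in the denominator are assumptions, not the original definition.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Project each row onto the unit hypersphere."""
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)


def batch_prototypes(z: np.ndarray, posterior: np.ndarray) -> np.ndarray:
    """Posterior-weighted cluster centers of a mini-batch (ASSUMED form).

    z: (B, d) embeddings of one network; posterior: (B, K) assignment probabilities.
    Returns a (K, d) array of unit-norm prototypes.
    """
    return l2_normalize(posterior.T @ z)


def psl_like_loss(mu_online: np.ndarray, mu_target: np.ndarray, tau: float = 0.5) -> float:
    """InfoNCE-style loss over K prototypes (illustrative, not the exact ProPos PSL).

    -log softmax decomposes into -logits[k, k] (alignment of prototype k across the
    two views) plus the log-sum-exp over row k (pushes different prototypes apart).
    """
    logits = mu_online @ mu_target.T / tau                 # (K, K) scaled cosine similarities
    logits = logits - logits.max(axis=1, keepdims=True)    # stabilize the exponentials
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))


rng = np.random.default_rng(0)
z_online = l2_normalize(rng.normal(size=(8, 4)))
z_target = l2_normalize(z_online + 0.01 * rng.normal(size=(8, 4)))
labels = np.array([0, 0, 1, 1, 2, 2, 2, 0])
posterior = np.eye(3)[labels]  # hard (one-hot) assignments, e.g., from spherical k-means
loss = psl_like_loss(batch_prototypes(z_online, posterior),
                     batch_prototypes(z_target, posterior))
```

Because the prototypes of the two views are compared at the cluster level rather than the instance level, samples from the same cluster are never pushed apart by this term.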

3.3. Positive Sampling Alignment (PSA)

PSA aims to improve within-cluster compactness by aligning neighboring examples around one augmented view with another view. By sampling neighboring examples from a Gaussian distribution using the reparameterization trick, the following is obtained:

where

denotes the identity matrix. This method extends the instance alignment by considering neighboring samples:

By ensuring that neighboring examples are from the same cluster, PSA avoids class collision issues [28] and enhances within-cluster compactness.
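A minimal NumPy sketch of the sampling and alignment step is given below. It assumes that the positive neighbor is drawn as the target embedding plus isotropic Gaussian noise scaled by a hypothetical `sigma`, and that alignment is a BYOL-style squared distance between normalized vectors; the precise PSA loss is defined in ProPos [4], and all names here are illustrative.

```python
import numpy as np


def l2_normalize(x: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    return x / np.maximum(np.linalg.norm(x, axis=1, keepdims=True), eps)


def sample_positive(v_target: np.ndarray, sigma: float, rng: np.random.Generator) -> np.ndarray:
    """Reparameterization trick: draw v_target + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(v_target.shape)
    return v_target + sigma * eps


def psa_like_loss(pred_online: np.ndarray, v_target: np.ndarray, sigma: float,
                  rng: np.random.Generator) -> float:
    """Squared distance between normalized predictor outputs and normalized,
    Gaussian-perturbed target embeddings, averaged over the batch (ASSUMED form)."""
    v_tilde = sample_positive(v_target, sigma, rng)
    diff = l2_normalize(pred_online) - l2_normalize(v_tilde)
    return float(np.mean(np.sum(diff ** 2, axis=1)))


rng = np.random.default_rng(0)
v = rng.normal(size=(16, 8))
loss_exact = psa_like_loss(v, v, sigma=0.0, rng=rng)  # no perturbation: plain instance alignment
loss_noisy = psa_like_loss(v, v, sigma=0.5, rng=rng)  # aligns with a sampled neighbor instead
```

Setting `sigma` to zero recovers ordinary instance alignment, which makes the role of the sampled neighbors explicit.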

3.4. Training ProPos

ProPos is optimized using an expectation–maximization (EM) framework with the following steps.

In the E-step, the cluster assignment posterior used by PSL is estimated by applying spherical k-means clustering to the dataset projected through the target network. This estimate is updated every r epochs, with r fixed in our paper.

In the M-step, to compute PSL and PSA, two augmented views of the images in the mini-batch are passed into the online and target networks. The PSL and PSA losses are then combined into the objective function:

$$\mathcal{L} = \mathcal{L}_{\mathrm{PSA}} + \lambda\,\mathcal{L}_{\mathrm{PSL}},$$

where $\lambda$ balances the two loss components. The online and predictor networks are then updated by backpropagation and the target network by the moving average, and the whole process is repeated until the maximum number of epochs is reached.
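The schedule and objective can be summarized in a few lines of Python. This is a sketch under stated assumptions: the function names are hypothetical, the E-step is assumed to run on epochs divisible by r, and the warmup rule follows the statement in Section 3.2 that only PSA is computed before PSL is enabled.

```python
def is_e_step_epoch(epoch: int, r: int) -> bool:
    """True on epochs where cluster assignments are re-estimated (every r epochs)."""
    if r <= 0:
        raise ValueError("r must be positive")
    return epoch % r == 0


def propos_objective(loss_psa: float, loss_psl: float, lam: float,
                     epoch: int, warmup_epochs: int) -> float:
    """L = L_PSA + lambda * L_PSL; only PSA is used during the warmup period."""
    if epoch < warmup_epochs:
        return loss_psa
    return loss_psa + lam * loss_psl


# Illustrative numbers only (not results from the paper).
schedule = [e for e in range(10) if is_e_step_epoch(e, r=3)]  # [0, 3, 6, 9]
warmup_loss = propos_objective(0.8, 2.0, lam=0.1, epoch=0, warmup_epochs=1)
full_loss = propos_objective(0.8, 2.0, lam=0.1, epoch=5, warmup_epochs=1)
```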

ProPos remains a parametric deep clustering model: it assumes a fixed K and does not explicitly regulate cluster distribution uniformity during training. Section 4 introduces AutoProPos, which augments ProPos with a lightweight clustering supervisor (CLS) that alternates with the ProPos loop to select K using a cosine silhouette computed in a reduced latent subspace and to promote uniform cluster distributions via the Silhouette Uniformity Index (SUI); the final assignments remain those of ProPos’ spherical k-means.

4. Our Method: AutoProPos

The AutoProPos framework combines the strengths of ProPos [4] with our newly developed Clustering Supervisor Module (CLS) to enable dynamic clustering without knowing the number of clusters, K, in advance. This section starts with an overview of the entire framework (Section 4.1), showing how ProPos and CLS work together to refine latent space representations and dynamically adjust K. The CLS module is then presented (Section 4.2), explaining its role in analyzing ProPos latent representations to determine the optimal number of clusters. This includes the well-known average silhouette score as a metric for assessing clustering quality (Section 4.2.1) and our novel metric, the Silhouette Uniformity Index (Section 4.2.3), designed to select a set of balanced clusters. Finally, we describe the iterative process of the CLS (Section 4.2.4), which involves training autoencoders, computing the previously presented metrics, and inferring the number of clusters using our Mean K and Max K strategies. The implementation is available at: https://github.com/Cyrilkt/AutoProPos, accessed on 15 July 2025.

4.1. Framework Overview

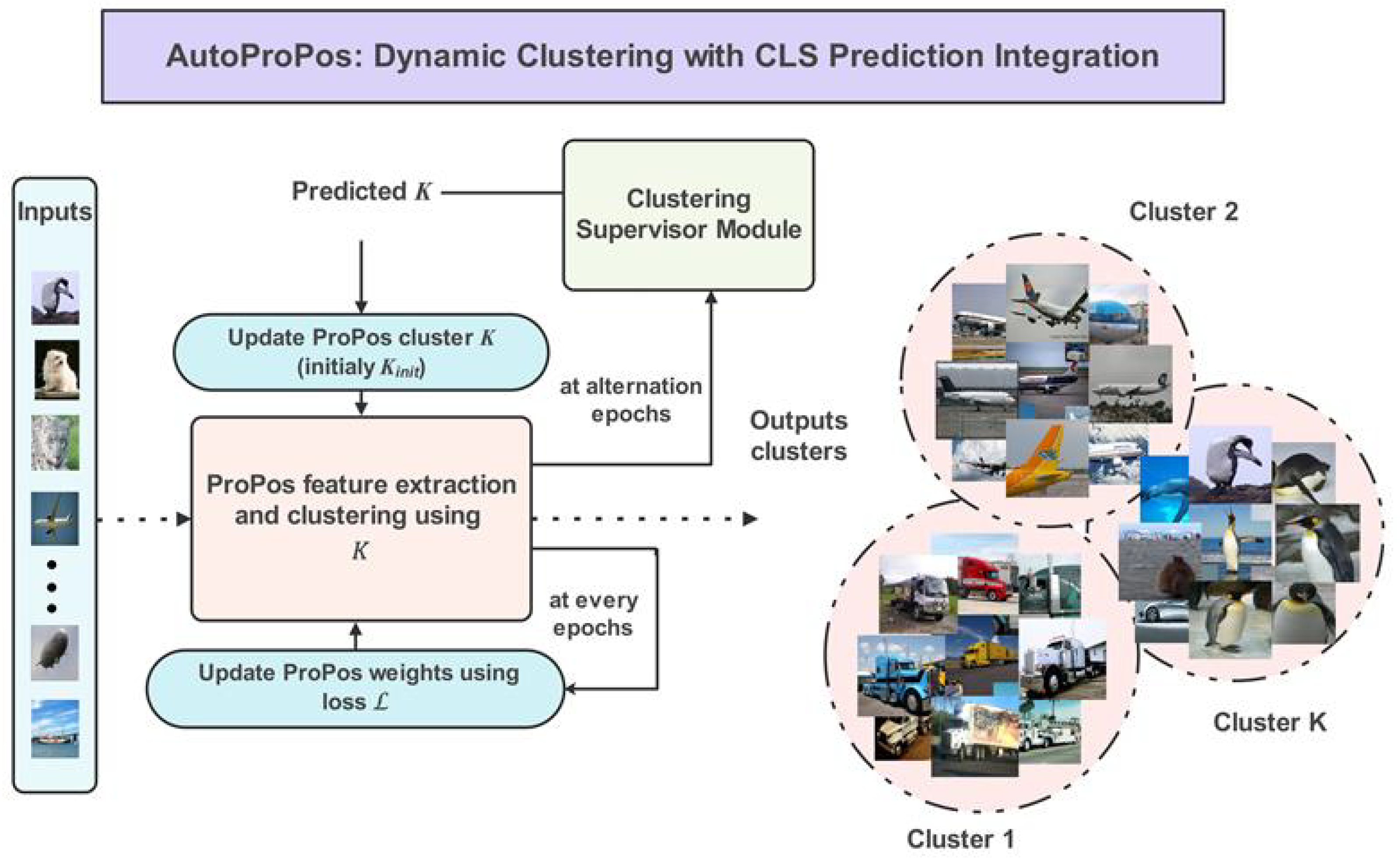

Figure 1 outlines the training pipeline of the AutoProPos framework, showing the sequence of operations from the initial setup to the refinement stages. In AutoProPos, we first train ProPos [4] with an initial estimate of the number of clusters to refine the latent space representations based on this cluster count. During alternation epochs, the training dataset is projected through the target network to generate latent representations. Our Clustering Supervisor Module (CLS) analyzes these representations and provides a new K to ProPos, which continues training with this updated count. This iterative process is repeated until we determine that the cluster count has stabilized. SOTA non-parametric deep clustering works [6, 7] alternate between training an autoencoder and a clustering module, where the clustering module also infers the final assignment of data to clusters. In contrast, our CLS only determines the number of clusters: the final sample-to-cluster assignments used to optimize ProPos remain those produced by the spherical k-means E-step (Section 3.4). This focus allows CLS to infer K from a subset of the data, which significantly reduces its computational cost and keeps the training time of AutoProPos close to that of ProPos.
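The alternation can be sketched as a small driver loop. The three callables (`train_propos`, `project`, `cls_predict_k`) are hypothetical hooks standing in for ProPos training, projection through the target network, and CLS, and the stopping rule (stop as soon as CLS returns the current K) is an assumption; the text only states that alternation stops once the count has stabilized.

```python
from typing import Callable, List, Sequence


def run_alternations(
    train_propos: Callable[[int], None],
    project: Callable[[], Sequence],
    cls_predict_k: Callable[[Sequence, int], int],
    k_init: int,
    max_alternations: int = 10,
) -> List[int]:
    """Alternate ProPos training and CLS until K stops changing.

    Returns the history of K values, starting with k_init.
    """
    k = k_init
    history = [k]
    for alternation in range(max_alternations):
        train_propos(k)                              # ProPos epochs with the current K
        latents = project()                          # target-network features of the training set
        new_k = cls_predict_k(latents, alternation)  # CLS returns only K, not assignments
        history.append(new_k)
        if new_k == k:
            break                                    # count judged stable (assumed rule)
        k = new_k
    return history


# Toy usage with stubbed components.
predictions = iter([12, 10, 10, 10])
trained_with: List[int] = []
history = run_alternations(
    train_propos=trained_with.append,
    project=lambda: [],
    cls_predict_k=lambda latents, alternation: next(predictions),
    k_init=5,
)
```

Note that CLS only feeds back an integer; the per-sample assignments stay inside `train_propos`, mirroring the separation described above.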

4.2. Clustering Supervisor Module (CLS)

4.2.1. Average Silhouette Score

The average silhouette score, $\bar{s}$, quantifies how well each object has been classified in its cluster compared with other clusters. Like the per-sample silhouette score $s$, it ranges from −1 to 1: a high $\bar{s}$ indicates a tight, well-separated cluster structure, while a low $\bar{s}$ means that the clusters are less distinct, with potential overlap between adjacent clusters. For a sample $x$ in cluster $C_i$, the silhouette score can be written as follows:

$$s(x) = \frac{b(x) - a(x)}{\max\{a(x),\, b(x)\}},$$

where $a$ is the mean intra-cluster distance, defined as follows:

$$a(x) = \frac{1}{|C_i| - 1}\sum_{y \in C_i,\, y \neq x} d(x, y),$$

and $b$ is the mean distance to the nearest other cluster, defined as follows:

$$b(x) = \min_{j \neq i} \frac{1}{|C_j|}\sum_{y \in C_j} d(x, y).$$

Here, $C_i$ is the data belonging to cluster $i$, and the distance function $d$ is the cosine distance, $d(x, y) = 1 - \frac{x^\top y}{\lVert x\rVert\,\lVert y\rVert}$. The average silhouette score is then calculated as follows:

$$\bar{s} = \frac{1}{n}\sum_{x} s(x),$$

where $n$ is the number of clustered data points.
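The silhouette definitions above translate directly into a short NumPy sketch with cosine distance. It is an illustrative implementation (function names are hypothetical), using the usual convention that samples in singleton clusters get a score of 0. For real workloads, scikit-learn's `silhouette_samples(X, labels, metric="cosine")` should compute the same per-sample values.

```python
import numpy as np


def cosine_distance_matrix(x: np.ndarray) -> np.ndarray:
    """Pairwise cosine distances d(x, y) = 1 - cos(x, y)."""
    xn = x / np.linalg.norm(x, axis=1, keepdims=True)
    d = 1.0 - xn @ xn.T
    np.fill_diagonal(d, 0.0)   # remove round-off on the diagonal
    return np.clip(d, 0.0, 2.0)


def silhouette_samples_cosine(x: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Per-sample silhouette s(x) = (b - a) / max(a, b) with cosine distance."""
    d = cosine_distance_matrix(x)
    clusters = np.unique(labels)
    if len(clusters) < 2:
        raise ValueError("the silhouette needs at least two clusters")
    s = np.zeros(len(x))
    for idx in range(len(x)):
        own = labels == labels[idx]
        n_own = int(own.sum())
        if n_own == 1:
            continue  # singleton cluster: s = 0 by convention
        a = d[idx, own].sum() / (n_own - 1)  # d[idx, idx] = 0, so only the count excludes x
        b = min(d[idx, labels == c].mean() for c in clusters if c != labels[idx])
        denom = max(a, b)
        s[idx] = 0.0 if denom == 0 else (b - a) / denom
    return s


x = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
labels = np.array([0, 0, 1, 1])
s_bar = silhouette_samples_cosine(x, labels).mean()  # average silhouette score
```

The O(n²) distance matrix is one reason to evaluate the silhouette on a subset of the data, as CLS does.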

4.2.2. Internal CVIs Beyond Silhouette

A wide range of internal cluster validity indices (CVIs) has been proposed in the literature (e.g., measures trading off separation and compactness, density-based criteria, or prototype-stability scores). Among the most widely used are Calinski–Harabasz (CH) [29], which favors partitions with strong between-cluster separation relative to within-cluster compactness, and Davies–Bouldin (DB) [30], which penalizes large within-cluster scatter and weak inter-cluster separation. These indices are frequently used as general-purpose, partition-level criteria in automatic clustering.

Scope of this work. AutoProPos employs only silhouette, together with our Silhouette Uniformity Index (SUI). We do not implement or evaluate CH, DB, or other CVIs in this study. Our choice is driven by the fact that SUI operates on per-sample silhouette scores to penalize non-uniform clusters, whereas CH/DB are partition-level summaries; directly substituting them would change the CLS design and its Max-K/Mean-K selection rule.

4.2.3. Silhouette Uniformity Index (SUI)

Our proposed metric, SUI, is defined as follows. For the i-th cluster, we define a probability mass function computed from the shifted and normalized silhouette scores of its samples. Given the silhouette scores grouped by cluster, this function is obtained by shifting the scores so that they are positive and then normalizing them to form proper probability distributions; finally, the scores within each cluster are sorted in descending order. The normalized score for a cluster is given as follows:

where

is the silhouette score for the

j-th sample in the

i-th cluster,

C is a constant corresponding to the minimum silhouette score across the set of clusters, and

is a small constant to ensure a normalized score that is defined and non-null (further discussion on

in

Supplementary Section S4.2). The probability mass function

can be defined for four cases, as follows:

where

is the number of data points belonging to cluster

i, and

is the total number of samples in the largest cluster.

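The SUI is then calculated using the generalized Jensen–Shannon divergence [31] of the n cluster probability mass functions $P_1, \dots, P_n$:

$$\mathrm{SUI} = H(M) - \frac{1}{n}\sum_{i=1}^{n} H(P_i),$$

where the mixture $M$ of the $n$ cluster probability mass functions is defined as follows:

$$M = \frac{1}{n}\sum_{i=1}^{n} P_i,$$

and the entropy $H$ is given as follows:

$$H(P) = -\sum_{j} P(j)\,\log P(j).$$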

Owing to the properties of the Jensen–Shannon divergence, SUI ranges from 0 to 1, with 0 indicating perfect similarity between our probabilities and 1 indicating a complete lack of uniformity. Given how these probabilities are defined, SUI assesses both proportion and silhouette similarities across clusters: it quantifies how far each cluster’s silhouette-value distribution lies from the prototype distribution obtained by averaging across clusters, so a lower SUI indicates homogeneous silhouette profiles and discourages the concentration of assignments in a single cluster. SUI therefore aims to identify the best cluster configuration for datasets with balanced numbers of data points and evenly distributed clusters.
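The NumPy sketch below illustrates the computation of a SUI-like score from per-sample silhouette scores. Several details are assumptions because they are not fully specified in this section. The scores are shifted by the global minimum C plus a small `eps`, normalized within each cluster, sorted in descending order, and zero-padded to the size of the largest cluster, which is one possible reading of the role of the largest cluster in the case definition. The divergence is also divided by log(n) so that the value stays in [0, 1]. The function name `sui_sketch` is hypothetical.

```python
import numpy as np


def entropy(p: np.ndarray) -> float:
    """Shannon entropy (natural log), ignoring zero-probability entries."""
    p = p[p > 0]
    return float(-(p * np.log(p)).sum())


def sui_sketch(silhouettes: np.ndarray, labels: np.ndarray, eps: float = 1e-6) -> float:
    """Generalized Jensen-Shannon divergence between per-cluster silhouette PMFs.

    ASSUMPTIONS (not the paper's exact definition): global-minimum shift plus eps,
    per-cluster normalization, descending sort, zero-padding to the largest cluster,
    and division by log(n) to map the result into [0, 1].
    """
    clusters = np.unique(labels)
    n = len(clusters)
    if n < 2:
        raise ValueError("SUI needs at least two clusters")
    c = silhouettes.min()                                   # shift constant C
    groups = [np.sort(silhouettes[labels == k])[::-1] for k in clusters]
    n_max = max(len(g) for g in groups)
    pmfs = np.zeros((n, n_max))
    for i, g in enumerate(groups):
        shifted = g - c + eps                               # strictly positive scores
        pmfs[i, : len(g)] = shifted / shifted.sum()         # zero-padded PMF of cluster i
    mixture = pmfs.mean(axis=0)                             # M = (1/n) * sum_i P_i
    jsd = entropy(mixture) - np.mean([entropy(p) for p in pmfs])
    return float(jsd / np.log(n))


balanced = sui_sketch(np.array([0.5, 0.4, 0.5, 0.4]), np.array([0, 0, 1, 1]))
skewed = sui_sketch(np.array([0.5, 0.4, 0.45, 0.42, 0.1]), np.array([0, 0, 0, 0, 1]))
```

With zero-padding, a small cluster concentrates its mass on fewer positions than a large one, so cluster-size imbalance raises the score even when silhouette values are similar.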

4.2.4. Description of the Framework

As detailed in Algorithm A1 (provided at the end of the paper), the CLS framework involves an iterative process of training N autoencoders, computing the average silhouette score and SUI, and selecting the optimal number of clusters using our Mean K and Max K approach.

First, N autoencoders are trained on the ProPos latent projections of a subset of the dataset, D. Our autoencoders are trained by masking a randomly sampled ratio r of the ProPos latent features and training the autoencoders to reconstruct the entire latent vector. This approach, inspired by MAE [32], provides an easy-to-compute but effective self-supervisory task. Unlike MAE, which applies the mean squared error to images in pixel space, we use a cosine dissimilarity loss between the reconstructed and original ProPos latent components.
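The masking and the training objective can be sketched as follows; the autoencoder itself is omitted. In this sketch, zero is assumed to be the mask value, a separate mask is drawn for each sample, and the names `mask_features` and `cosine_dissimilarity_loss` are hypothetical. Only the idea (mask a ratio r of latent features, then score the reconstruction against the full latent vector with a cosine dissimilarity) comes from the text.

```python
import numpy as np


def mask_features(z: np.ndarray, ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Zero out a random subset (fraction `ratio`) of feature dimensions in each row.

    Zero as the mask value and per-sample masks are assumptions of this sketch.
    """
    n, d = z.shape
    n_masked = int(round(ratio * d))
    masked = z.copy()
    for row in range(n):
        idx = rng.choice(d, size=n_masked, replace=False)
        masked[row, idx] = 0.0
    return masked


def cosine_dissimilarity_loss(recon: np.ndarray, target: np.ndarray, eps: float = 1e-12) -> float:
    """Mean of 1 - cos(recon_i, target_i); the target is the full, unmasked latent vector."""
    dots = (recon * target).sum(axis=1)
    norms = np.linalg.norm(recon, axis=1) * np.linalg.norm(target, axis=1)
    return float(np.mean(1.0 - dots / np.maximum(norms, eps)))


rng = np.random.default_rng(0)
z = rng.normal(size=(4, 10))              # stand-in for ProPos latent features
z_masked = mask_features(z, ratio=0.3, rng=rng)
# An autoencoder would map z_masked to a reconstruction; the loss compares it with z.
```

A cosine loss ignores vector length, which matches the hyperspherical geometry of the ProPos latent space better than a squared error would.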

Once the autoencoders are trained, their latent representations are clustered using spherical k-means over a specified range of candidate K values. This range reflects prior beliefs about the interval in which the true number of clusters might lie. We show in Supplementary Section S4.2 that the number of clusters inferred using AutoProPos is stable even for a large cluster candidate interval.
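For illustration, a plain Lloyd-style spherical k-means and a sweep over candidate K values can be written in NumPy as below. This is a sketch rather than the implementation used in the paper. It uses random initialization from the data with a fixed seed and lets empty clusters keep their previous center, and the names `spherical_kmeans` and `sweep_k` are hypothetical.

```python
from typing import Dict, Iterable, Tuple

import numpy as np


def spherical_kmeans(x: np.ndarray, k: int, n_iter: int = 100,
                     seed: int = 0) -> Tuple[np.ndarray, np.ndarray]:
    """Lloyd-style spherical k-means: cosine-similarity assignment, unit-norm centroids."""
    rng = np.random.default_rng(seed)
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    centers = x[rng.choice(len(x), size=k, replace=False)]
    for _ in range(n_iter):
        labels = np.argmax(x @ centers.T, axis=1)
        new_centers = centers.copy()                 # empty clusters keep their center
        for j in range(k):
            total = x[labels == j].sum(axis=0)
            norm = np.linalg.norm(total)
            if norm > 0:
                new_centers[j] = total / norm
        converged = np.allclose(new_centers, centers)
        centers = new_centers
        if converged:
            break
    labels = np.argmax(x @ centers.T, axis=1)        # assignments for the final centers
    return labels, centers


def sweep_k(x: np.ndarray, k_values: Iterable[int], seed: int = 0) -> Dict[int, np.ndarray]:
    """Cluster the same data once per candidate K and return the labels for each K."""
    return {k: spherical_kmeans(x, k, seed=seed)[0] for k in k_values}


x = np.array([[1.0, 0.01], [1.0, -0.01], [0.01, 1.0], [-0.01, 1.0], [0.7, 0.7]])
candidates = sweep_k(x, k_values=range(2, 4))
```

Each entry of `candidates` can then be scored with the average silhouette and SUI.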

For each encoder

i, we pick the top

sets of clusters based on the criterion of having the highest average silhouette score. Among these top candidates, we select the best-fitted

by choosing the set that has the lowest SUI score.

where

.
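The two-stage choice for a single encoder (shortlist by average silhouette, then pick the lowest SUI) can be expressed compactly in Python. The dictionary layout and the name `select_k_for_encoder` are hypothetical. The example values are made up to show that a candidate with a very low SUI is still rejected if its silhouette does not make the shortlist.

```python
from typing import Dict, Tuple


def select_k_for_encoder(scores: Dict[int, Tuple[float, float]], top_k: int) -> Tuple[int, float]:
    """Pick K for one encoder.

    scores maps each candidate K to (average silhouette, SUI). The top_k candidates
    with the highest average silhouette are shortlisted, and the one with the
    lowest SUI among them is returned as (K, SUI).
    """
    ranked = sorted(scores.items(), key=lambda item: item[1][0], reverse=True)
    shortlist = ranked[:top_k]
    best_k, (_, best_sui) = min(shortlist, key=lambda item: item[1][1])
    return best_k, best_sui


# Made-up scores for four candidate K values.
scores = {5: (0.30, 0.20), 10: (0.35, 0.05), 15: (0.40, 0.10), 20: (0.20, 0.01)}
chosen = select_k_for_encoder(scores, top_k=2)
```

Here K = 20 has the lowest SUI overall, but its silhouette is too low to be shortlisted, so K = 10 is chosen.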

The optimal number of clusters, K, is then selected using two strategies:

Max K: during the first alternation, we select the maximum

K from the top

candidates identified based on their SUI scores:

where

.

Mean K: for subsequent alternations, we calculate the mean

K from the top

candidates, also selected based on their SUI scores:

where

. The resulting

K is then rounded to the nearest integer.

The Max K strategy is used during the first alternation because this alternation occurs in the early training epochs of ProPos, when the latent representation is still immature, which tends to cause K to be underestimated. Choosing the maximum K among the retained candidates helps reduce this problem. Conversely, the Mean K strategy is used in subsequent alternations to make the predicted K more robust to the stochasticity of the latent spaces produced by the autoencoders. We also use SUI scores to select the latent-space candidates with the most uniform clusterings, which best represent a balanced data distribution.
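The Max K and Mean K rules can be sketched as follows, given one (K, SUI) pair per autoencoder. The number of retained encoders `top_m`, the function name, and rounding halves upward are assumptions of this sketch. Python's built-in `round` rounds halves to the nearest even integer, so `math.floor(x + 0.5)` is used here instead.

```python
import math
from typing import List, Tuple


def aggregate_k(per_encoder: List[Tuple[int, float]], top_m: int, strategy: str) -> int:
    """Combine the per-encoder choices (K_i, SUI_i) into a single K.

    The top_m encoders with the lowest SUI are kept; "max" returns their largest K
    (first alternation), "mean" their average K rounded half up (later alternations).
    """
    if not 1 <= top_m <= len(per_encoder):
        raise ValueError("top_m must be between 1 and the number of encoders")
    best = sorted(per_encoder, key=lambda pair: pair[1])[:top_m]
    ks = [k for k, _ in best]
    if strategy == "max":
        return max(ks)
    if strategy == "mean":
        return math.floor(sum(ks) / len(ks) + 0.5)
    raise ValueError(f"unknown strategy: {strategy!r}")


# Made-up per-encoder results: (chosen K, its SUI).
per_encoder = [(10, 0.05), (12, 0.07), (9, 0.30), (11, 0.06)]
k_first = aggregate_k(per_encoder, top_m=3, strategy="max")
k_later = aggregate_k(per_encoder, top_m=3, strategy="mean")
```

Filtering by SUI before aggregating means an encoder whose clustering is badly unbalanced (here the one proposing K = 9) does not influence either rule.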

6. Results

We present AutoProPos’s performance in comparison to that of previous parametric and non-parametric methods, as well as an ablation study under multiple training configurations.

6.1. Classical and Deep Non-Parametric Methods

As depicted in Table 1, we evaluate AutoProPos against nine non-parametric clustering methods, which we categorize into two groups: deep clustering models transitioning to non-parametric, and models that do not follow this transition. The evaluation uses three well-known metrics: Normalized Mutual Information (NMI), the Adjusted Rand Index (ARI), and clustering accuracy (ACC), as defined in Supplementary Section S1.
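Clustering accuracy is commonly computed as the best accuracy over one-to-one mappings between predicted clusters and ground-truth classes; the paper’s exact definition is in Supplementary Section S1. The pure-Python sketch below uses brute force over permutations, which is only practical for a handful of clusters. It zero-pads the count matrix so that the predicted and true numbers of clusters may differ, as can happen with non-parametric methods. Larger problems are usually solved with the Hungarian algorithm, for example `scipy.optimize.linear_sum_assignment`.

```python
from itertools import permutations
from typing import Sequence


def clustering_accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Best accuracy over one-to-one cluster-to-class mappings (brute force).

    The count matrix is zero-padded to a square, so unmatched clusters or classes
    simply contribute no correct samples.
    """
    classes = sorted(set(y_true))
    clusters = sorted(set(y_pred))
    size = max(len(classes), len(clusters))
    cls_index = {c: j for j, c in enumerate(classes)}
    clu_index = {c: i for i, c in enumerate(clusters)}
    # counts[i][j]: number of samples in predicted cluster i with true class j
    counts = [[0] * size for _ in range(size)]
    for t, p in zip(y_true, y_pred):
        counts[clu_index[p]][cls_index[t]] += 1
    best = max(sum(counts[i][perm[i]] for i in range(size)) for perm in permutations(range(size)))
    return best / len(y_true)


acc_relabelled = clustering_accuracy([0, 0, 1, 1, 2, 2], [1, 1, 0, 0, 2, 2])
acc_merged = clustering_accuracy([0, 0, 1, 1, 2, 2], [0, 0, 0, 0, 1, 1])
```

The second call shows the penalty for underclustering: merging two classes into one cluster caps the accuracy at 4/6.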

The methods were tested on four datasets: MNIST, Fashion-MNIST, STL-10, and ImageNet-50. The performance metrics for all models except Deep Plug and Play [25] were obtained from DIVA [7]. For Deep Plug and Play, we used the highest results reported in its paper, which used SCAN [5] as a baseline.

To ensure a fair comparison between AutoProPos and the other methods, we adhered to the standard train–test splits, ran our model five times on each dataset, and report the mean and standard deviation on the test set. For STL-10, we used both the labeled training set and the unlabeled set for training. Because no implementation repository is available for Deep Plug and Play, we report its STL-10 performance without a mean ± standard deviation.

As shown in Table 1, AutoProPos demonstrates competitive performance against SOTA methods. On MNIST, it outperforms the second-best model by 2% on the tested metrics. On Fashion-MNIST, it achieves the highest ACC, 0.74. On STL-10 and ImageNet-50, it obtains the second-best NMI and ARI scores while surpassing the next-best model in ACC by 11% and 7%, respectively.

Additionally, Table 2 presents the number of clusters K inferred by our method and compares it with that of five known methods on the presented datasets. AutoProPos infers the exact K for MNIST and STL-10 and comes close for Fashion-MNIST and ImageNet-50. Although DeepDPM and Deep Plug and Play (SCAN) offer the closest predictions for the latter two datasets, AutoProPos outperforms them in overall clustering performance.

6.2. Deep Clustering Transitioning to Non-Parametric and Parametric Methods

In Table 3, AutoProPos is compared with sixteen parametric deep clustering methods on the previously established metrics. Deep Plug and Play is also included due to its methodological proximity to AutoProPos. Consequently, the table is divided into two groups: parametric deep clustering methods and models transitioning to non-parametric. We excluded the clustering methods TSP [18] and TEMI [2] from our comparison because they use models pretrained on large datasets, which gives them an unfair advantage over our method.

We evaluate model performance on three datasets: CIFAR-20, ImageNet-10, and ImageNet-Dogs. Following previous studies (e.g., [1, 4]), the training and test sets are merged for both the training and evaluation phases of AutoProPos. NMI, ARI, and ACC results for all presented methods, except for ProPos on ImageNet-10 and ImageNet-Dogs, are sourced from ProPos [4], SPICE [1], and Deep Plug and Play [25]. For fair comparison, ProPos was retrained on ImageNet-10 and ImageNet-Dogs with an input size of 224 × 224 × 3, using the configurations in Supplementary Sections S2 and S3. Both ProPos and AutoProPos were run five times, and the best performance was selected.

Although parametric methods have the advantage of knowing the ground-truth K, AutoProPos demonstrates competitive performance on the tested datasets. It outperforms previous methods on CIFAR-20 and ImageNet-Dogs, and on ImageNet-10 it closely matches ProPos, with a 0.3% gap in ACC.

6.3. Ablation Study

Here, we present further analyses of AutoProPos that highlight its key components and demonstrate its robustness while keeping the training time similar to that of ProPos [4].

Table 4 shows the performance of AutoProPos on the Fashion-MNIST dataset with the previously described training configuration. For each ablation, we present the mean and standard deviation of five runs.

We compared the CLS prediction on the ProPos latent space with the prediction obtained by first projecting it using an autoencoder. The latter produced more accurate K values, demonstrating the autoencoder’s ability to map the ProPos latent space towards a representation analyzable via CLS. However, it also produced a high prediction standard deviation, likely due to the stochasticity of the latent representation induced during autoencoder training. We mitigated this in the full method by training multiple encoders and using the Mean K strategy.

We also analyzed the prediction of K using only the average silhouette score and only the SUI score with a one-encoder training setup. We found that, alone, they overestimated and underestimated the K value, respectively. Their combination, as depicted in the full method, provided better results. The average silhouette score helps select well-defined cluster configurations, while the SUI index regulates the final configuration choice.

Moreover, as shown in Table 4, using only the Mean K strategy already produces good results; adding the initial Max K step in the full method further improves overall performance and robustness.

Table 5 demonstrates AutoProPos’s robustness to the initial number of clusters. We trained the model on ImageNet-10 with initial values corresponding to both underclustering and overclustering and observed stable results across the tested metrics.

Finally, Table 6 compares the training times of AutoProPos and ProPos, both trained on 4× NVIDIA RTX A5000 (24 GB) GPUs under Ubuntu 22.04 with PyTorch 2.1.2 (CUDA 12.1); notably, the CLS module uses a single GPU. Training AutoProPos added at most 2 h to the training time on the tested datasets.

Further insights, including extended ablation results, hyperparameter studies, and t-SNE visualizations of the latent space evolution, which further demonstrate the robustness and usefulness of our approach, are available in Supplementary Section S4.