Research on BBHL Model Based on Hybrid Loss Optimization for Fake News Detection

Abstract

1. Introduction

- (1)

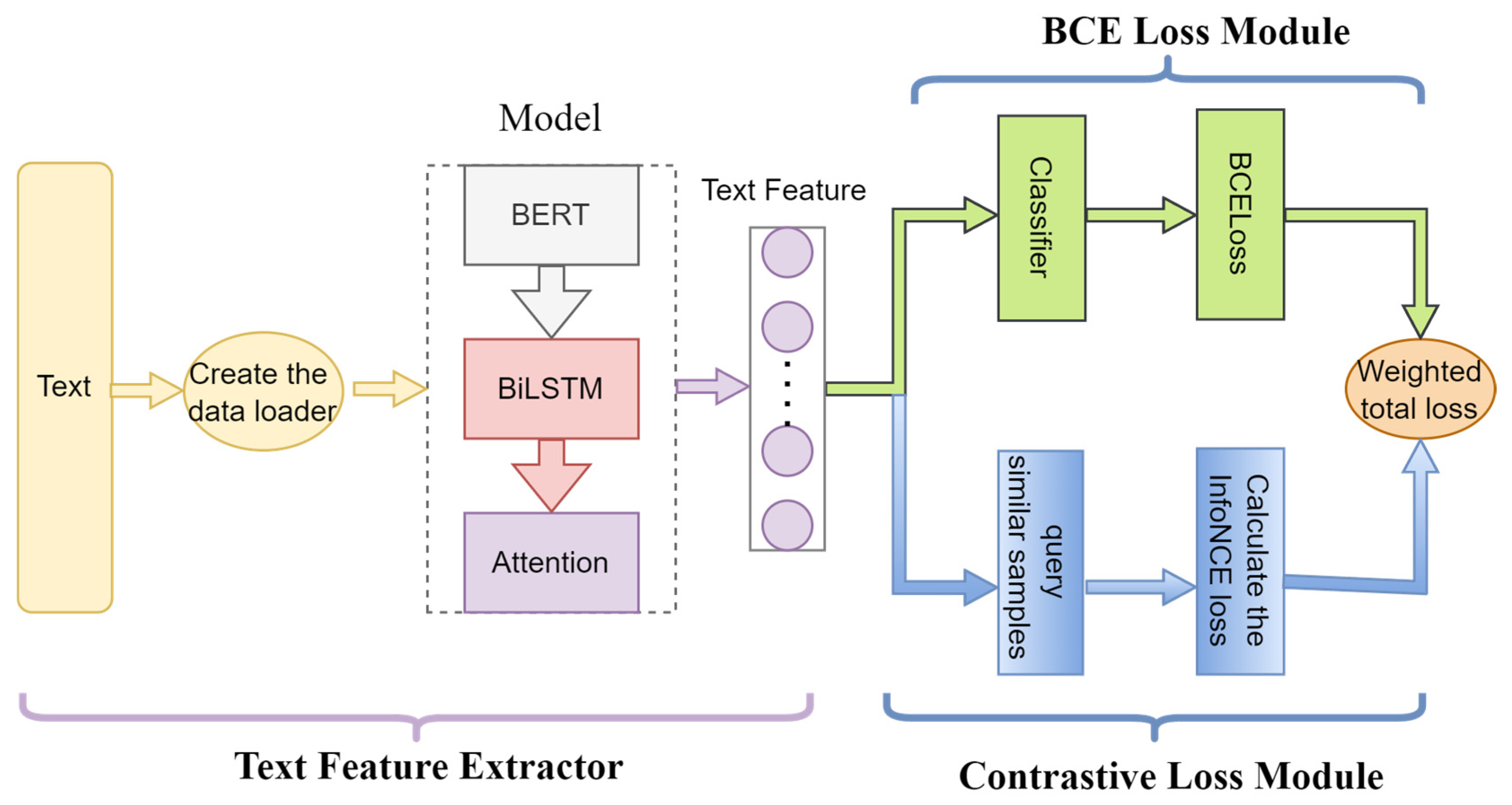

- The feature extraction module integrates BERT, Bi-LSTM, and an attention mechanism. BERT is responsible for capturing deep semantics, Bi-LSTM models temporal dependencies, and the attention mechanism focuses on key information, enabling multi-level extraction of text features [14];

- (2)

- The hybrid loss function innovatively fuses BCE loss and contrastive loss through weighted summation. The former ensures classification accuracy, while the latter enhances feature discriminability by narrowing the feature distance between samples of the same class and widening that between samples of different classes;

- (3)

- The model architecture has modal scalability, allowing flexible integration of feature extractors for images, videos, etc., to adapt to multi-scenario requirements. The main contributions of this paper are as follows:

- The proposed BBHL model is a general framework for fake news detection. The feature extraction part can be easily replaced by different models specifically designed for feature extraction, thereby adapting to diverse task requirements and data modalities, and achieving continuous optimization of model performance and scenario generalization.

- In the model optimization part, contrastive loss is used as an auxiliary term and combined with the main BCE loss by weighted summation, so that the two terms jointly guide training and improve the generalization ability of the model.

- Experiments demonstrate that the proposed BBHL model can effectively identify fake news and perform well when tested on multiple large-scale real-world datasets.
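
The weighted fusion of BCE loss and contrastive loss described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the combination is assumed to take the form `bce_weight * L_BCE + (1 - bce_weight) * L_con`, the contrastive term is assumed to follow the supervised contrastive formulation of [29], and the function names and default values (`bce_weight=0.7`, `temperature=0.1`) are hypothetical.

```python
import math


def bce_loss(probs, labels, eps=1e-12):
    """Mean binary cross-entropy over predicted probabilities of the positive class."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


def _normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def supervised_contrastive_loss(features, labels, temperature=0.1):
    """Supervised contrastive loss over one batch of feature vectors.

    For each anchor, samples with the same label are positives; every other
    sample in the batch appears in the softmax denominator.
    """
    feats = [_normalize(f) for f in features]
    n = len(feats)
    total, anchors = 0.0, 0
    for i in range(n):
        positives = [j for j in range(n) if j != i and labels[j] == labels[i]]
        if not positives:
            continue  # an anchor without positives contributes nothing
        sims = {j: sum(a * b for a, b in zip(feats[i], feats[j])) / temperature
                for j in range(n) if j != i}
        m = max(sims.values())  # subtract the max for numerical stability
        log_denom = m + math.log(sum(math.exp(s - m) for s in sims.values()))
        total += -sum(sims[j] - log_denom for j in positives) / len(positives)
        anchors += 1
    return total / anchors if anchors else 0.0


def hybrid_loss(probs, features, labels, bce_weight=0.7, temperature=0.1):
    """Weighted sum of BCE and contrastive loss (the weighting form is assumed)."""
    l_bce = bce_loss(probs, labels)
    l_con = supervised_contrastive_loss(features, labels, temperature)
    return bce_weight * l_bce + (1.0 - bce_weight) * l_con
```

With this form, features that cluster by class lower the contrastive term while leaving the BCE term unchanged, which is the sense in which the auxiliary loss shapes the feature space rather than the decision itself.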

2. Related Work

2.1. Fake News Detection Methods

- (1) Attention mechanism: Highlighting important associations by dynamically assigning weights. For example, the TDEDA model designs text-visual bidirectional attention, where text features guide visual features to focus on key regions (such as the facial expressions of people in news images), and visual features feed back into text features to enhance scene description [10];

- (2) Knowledge graph assistance: Introducing external knowledge to compensate for the lack of modal information. For instance, the ERIC-FND model links Wikipedia entities, fusing entities such as “celebrities” and “institutions” in news with background knowledge to improve semantic understanding [21];

- (3) Contrastive learning: Enhancing fusion effects by aligning cross-modal feature spaces. For example, the BMR method adopts multi-view contrastive learning, forcing the feature representations of text, image patterns, and image semantics to converge in a shared space [22].
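
To make the idea of "dynamically assigning weights" concrete, the following sketch shows one direction of text-guided attention over image-region features. It is a generic scaled dot-product illustration, not the TDEDA architecture: the function names, the use of a single text vector as the query, and the toy vectors in the usage note are all assumptions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]


def text_guided_attention(text_vec, region_vecs):
    """Weight image-region vectors by their scaled dot product with a text vector.

    Returns the attention weights and the weighted sum of the region vectors.
    """
    scale = math.sqrt(len(text_vec))
    scores = [sum(t * r for t, r in zip(text_vec, region)) / scale
              for region in region_vecs]
    weights = softmax(scores)
    dim = len(region_vecs[0])
    attended = [sum(w * region[d] for w, region in zip(weights, region_vecs))
                for d in range(dim)]
    return weights, attended
```

For example, calling `text_guided_attention([1.0, 0.0], [[2.0, 0.0], [0.0, 2.0], [0.5, 0.5]])` gives the largest weight to the first region, because it is the one most aligned with the text vector. A bidirectional variant would repeat the same step with the roles of text and image swapped.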

2.2. Application of Hybrid Loss Functions in Fake News Detection

- (1) KL divergence constraint: The MVACLNet model uses KL divergence to limit the distribution difference between virtual samples and real samples in virtual augmented contrastive learning, improving the model’s resistance to adversarial attacks by 20% [5];

- (2) Reconstruction loss: The GAMC model combines the reconstruction loss of a graph autoencoder with contrastive loss to achieve unsupervised fake news detection, outperforming traditional unsupervised methods by 4.49% in accuracy on the PolitiFact dataset [8];

- (3) Evidential theory loss: The MDF-FND model designs a dynamic fusion loss based on Dempster-Shafer evidential theory, which adaptively adjusts weights according to modal quality (e.g., text clarity, image resolution) and outperforms fixed-weight fusion on noisy data [28].
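
As background for the evidential approach in item (3), the sketch below implements Dempster's rule of combination for two sources over the hypotheses "fake" and "real". It only illustrates the underlying theory; it is not the MDF-FND loss, and the mass values assigned to the text and image branches are hypothetical.

```python
from itertools import product

FAKE = frozenset({"fake"})
REAL = frozenset({"real"})
EITHER = FAKE | REAL  # mass placed here expresses uncertainty


def dempster_combine(m1, m2):
    """Combine two mass functions (dict: frozenset -> mass) with Dempster's rule."""
    combined = {}
    conflict = 0.0
    for (a, wa), (b, wb) in product(m1.items(), m2.items()):
        inter = a & b
        if inter:
            combined[inter] = combined.get(inter, 0.0) + wa * wb
        else:
            conflict += wa * wb  # mass assigned to contradictory hypotheses
    if conflict >= 1.0:
        raise ValueError("sources are in total conflict; the rule is undefined")
    # Renormalize so the remaining masses sum to one.
    return {k: v / (1.0 - conflict) for k, v in combined.items()}


# Hypothetical evidence: a confident text branch and a low-quality image branch.
text_mass = {FAKE: 0.7, REAL: 0.1, EITHER: 0.2}
image_mass = {FAKE: 0.2, REAL: 0.2, EITHER: 0.6}
fused = dempster_combine(text_mass, image_mass)
```

Because the image branch places most of its mass on `EITHER`, it has little influence on the fused result, which stays close to the text branch. This is the intuition behind letting modal quality control the fusion weights.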

3. Methodology

3.1. Problem Statement

3.2. Model Overview

3.3. Text Preprocessing

3.4. Text Feature Extractor

3.5. BCE Loss Module

3.6. Contrastive Loss Module

4. Materials and Methods

4.1. Datasets

4.2. Baseline Model

4.3. Model Parameters

4.4. Evaluation Metrics

5. Results

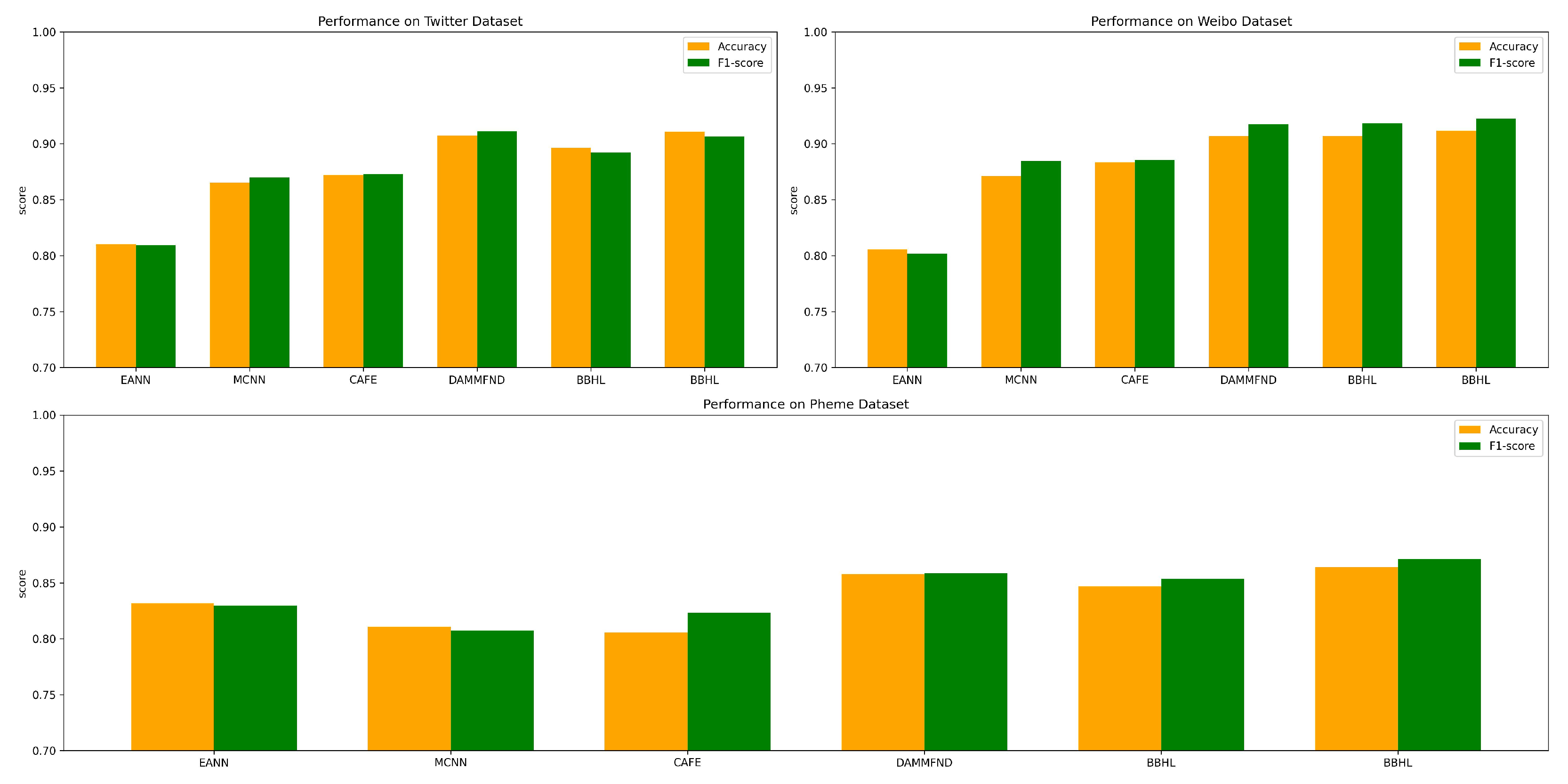

5.1. Overall Performance Comparison

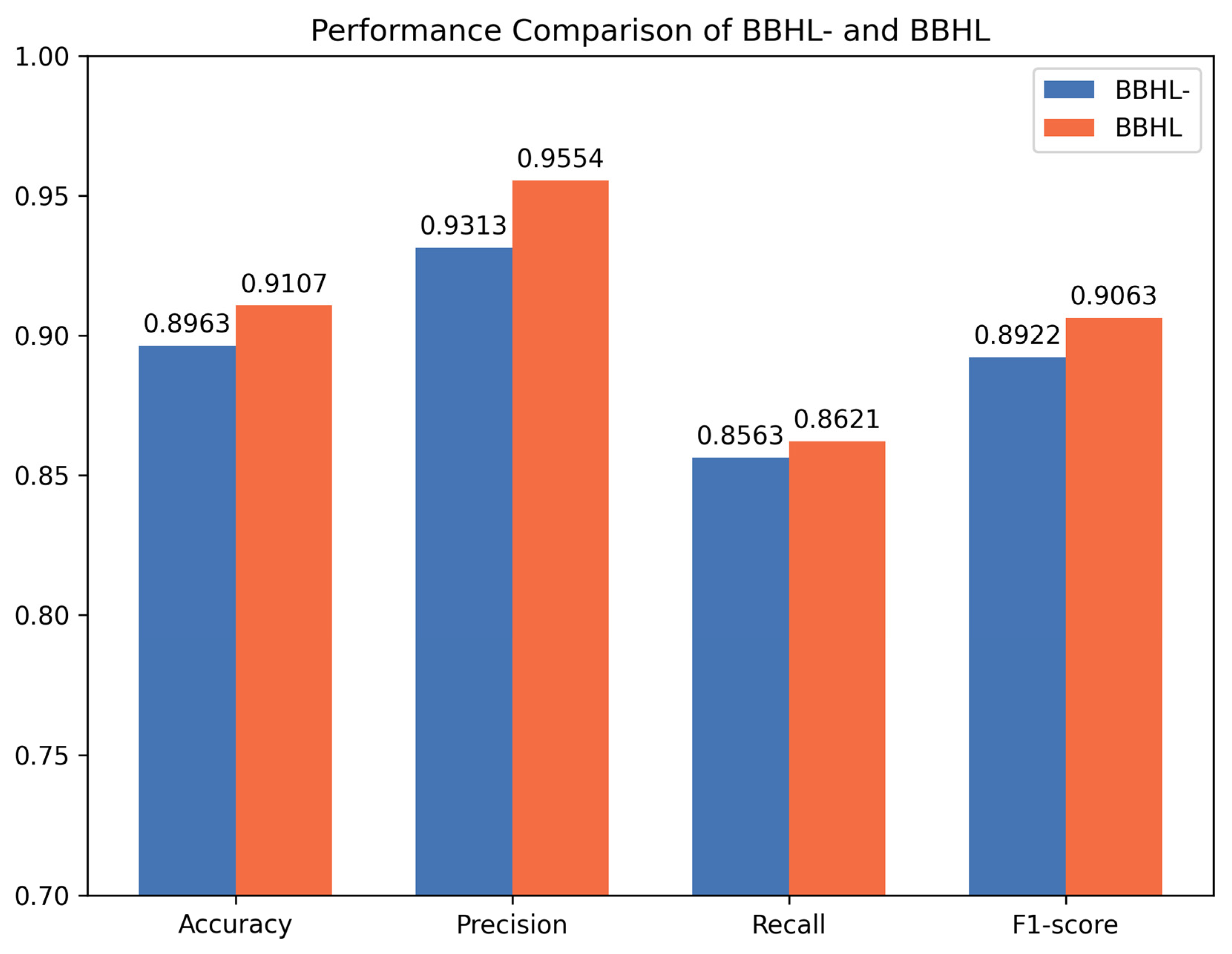

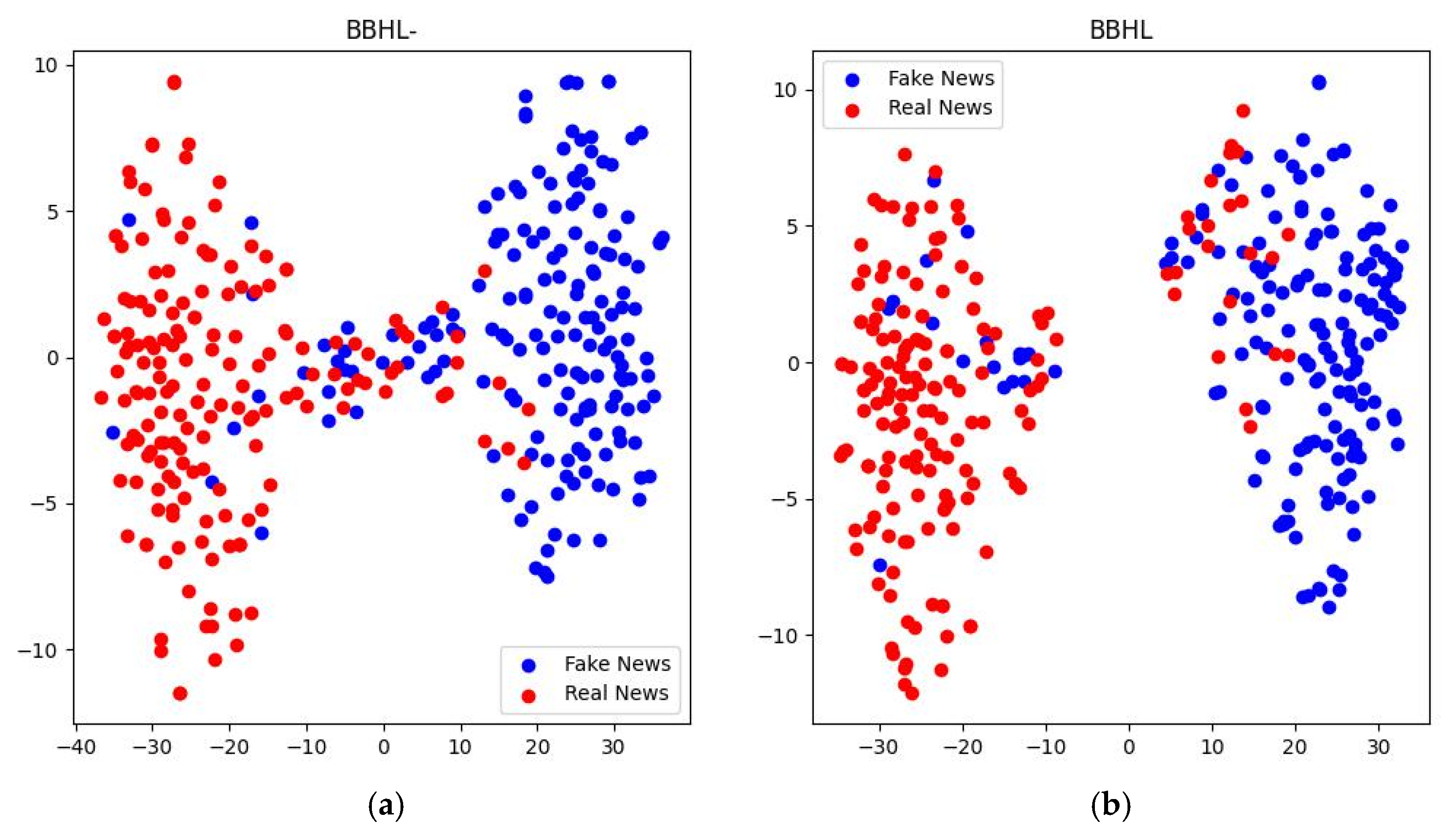

5.2. Ablation Experiments

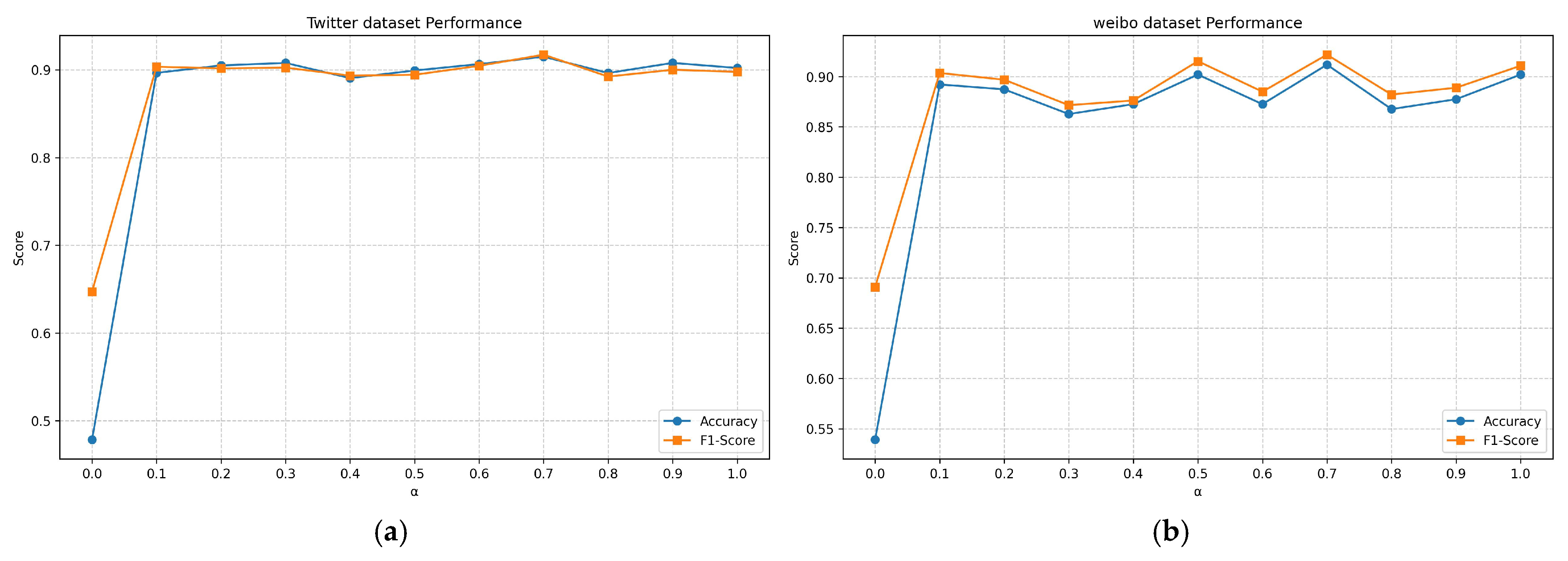

5.3. BCE Loss Weight and Temperature Value Setting

5.4. Convergence Analysis

5.5. Discussion on the Necessity of Multimodal Extension

6. Conclusions

6.1. Method Reflection

6.2. Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Zhang, L.; Zhang, X.; Zhou, Z.; Zhang, X.; Wang, S.; Yu, P.S.; Li, C. Early Detection of Multimodal Fake News via Reinforced Propagation Path Generation. IEEE Trans. Knowl. Data Eng. 2025, 37, 613–625.

- [2] Allcott, H.; Gentzkow, M. Social media and fake news in the 2016 election. J. Econ. Perspect. 2017, 31, 211–234.

- [3] Garg, S.; Sharma, D.K. Linguistic features based framework for automatic fake news detection. Comput. Ind. Eng. 2022, 172, 108432.

- [4] Ge, W.; Hong, Z.; Luo, Y. Online detection of Weibo rumors based on Naive Bayes algorithm. In Proceedings of the 2020 Asia-Pacific Conference on Image Processing, Electronics and Computers, Dalian, China, 14–16 April 2020; pp. 22–25.

- [5] Liu, X.; Pang, M.; Li, Q.; Zhou, J.; Wang, H.; Yang, D. MVACLNet: A Multimodal Virtual Augmentation Contrastive Learning Network for Rumor Detection. Algorithms 2024, 17, 199.

- [6] Liu, Z.; Wei, Z.; Zhang, R. Rumor detection based on convolutional neural network. J. Comput. Appl. 2017, 37, 3053–3056+3100.

- [7] Li, L.; Cai Gu Pan, J. Weibo rumor event detection method based on C-GRU. J. Shandong Univ. (Eng. Sci.) 2019, 49, 102–106+115.

- [8] Yin, S.; Zhu, P.; Wu, L.; Gao, C.; Wang, Z. GAMC: An Unsupervised Method for Fake News Detection Using Graph Autoencoder with Masking. Proc. AAAI Conf. Artif. Intell. 2024, 38, 347–355.

- [9] Khattar, D.; Goud, J.S.; Gupta, M.; Varma, V. MVAE: Multimodal Variational Autoencoder for Fake News Detection. In Proceedings of the World Wide Web Conference, San Francisco, CA, USA, 13–17 May 2019; pp. 2915–2921.

- [10] Han, H.; Ke, Z.; Nie, X.; Dai, L.; Slamu, W. Multimodal Fusion with Dual-Attention Based on Textual Double-Embedding Networks for Rumor Detection. Appl. Sci. 2023, 13, 4886.

- [11] Hao, R.; Luo, H.; Li, Y. Multi-Modal Fake News Detection Enhanced by Fine-Grained Knowledge Graph. IEICE Trans. Inf. Syst. 2025, E108D, 604–614.

- [12] Hua, J.; Cui, X.; Li, X.; Tang, K.; Zhu, P. Multimodal fake news detection through data augmentation-based contrastive learning. Appl. Soft Comput. 2023, 136, 110125.

- [13] Wang, J.; Qian, S.; Hu, J.; Hong, R. Positive Unlabeled Fake News Detection via Multi-Modal Masked Transformer Network. IEEE Trans. Multimed. 2024, 26, 234–244.

- [14] Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- [15] Lauw, H.W.; Lee, M.L.; Lim, E.P. Deep learning for fake news detection: A survey. AI Open 2022, 3, 133–155.

- [16] Xue, J.; Wang, Y.; Tian, Y.; Li, Y.; Shi, L.; Wei, L. Detecting fake news by exploring the consistency of multimodal data. Inf. Process. Manag. 2021, 58, 102610.

- [17] Zhou, X.; Wu, J.; Zafarani, R. SAFE: Similarity-Aware MultiModal Fake News Detection. arXiv 2020, arXiv:2003.04981.

- [18] Asghar, M.Z.; Habib, A.; Habib, A.; Khan, A.; Ali, R.; Khattak, A. Exploring deep neural networks for rumor detection. J. Ambient. Intell. Hum.-Comput. Interact. 2021, 12, 4315–4333.

- [19] He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- [20] Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- [21] Cao, B.; Wu, Q.; Cao, J.; Liu, B.; Gui, J. External Reliable Information-enhanced Multimodal Contrastive Learning for Fake News Detection. Proc. AAAI Conf. Artif. Intell. 2025, 39, 31–39.

- [22] Ying, Q.; Hu, X.; Zhou, Y.; Qian, Z.; Zeng, D.; Ge, S. Bootstrapping Multi-View Representations for Fake News Detection. Proc. AAAI Conf. Artif. Intell. 2023, 37, 5384–5392.

- [23] Chen, J.; Jia, C.; Zheng, H.; Chen, R.; Fu, C. Is Multi-Modal Necessarily Better? Robustness Evaluation of Multi-Modal Fake News Detection. IEEE Trans. Netw. Sci. Eng. 2023, 10, 3144–3158.

- [24] Sun, L.; Rao, Y.; Lan, Y.; Xia, B.; Li, Y. HG-SL: Jointly Learning of Global and Local User Spreading Behavior for Fake News Early Detection. Proc. AAAI Conf. Artif. Intell. 2023, 37, 5248–5256.

- [25] Silva, A.; Han, Y.; Luo, L.; Karunasekera, S.; Leckie, C. Propagation2Vec: Embedding partial propagation networks for explainable fake news early detection. Inf. Process. Manag. 2021, 58, 102618.

- [26] Su, P.; Peng, Y.; Vijay-Shanker, K. Improving BERT Model Using Contrastive Learning for Biomedical Relation Extraction. arXiv 2021, arXiv:2104.13913.

- [27] Cui, W.; Shang, M. MIGCL: Fake news detection with multimodal interaction and graph contrastive learning networks. Appl. Intell. 2025, 55, 78.

- [28] Lv, H.; Yang, W.; Yin, Y.; Wei, F.; Peng, J.; Geng, H. MDF-FND: A dynamic fusion model for multimodal fake news detection. Knowl.-Based Syst. 2025, 317, 113417.

- [29] Khosla, P.; Teterwak, P.; Wang, C.; Sarna, A.; Tian, Y.; Isola, P.; Maschinot, A.; Liu, C.; Krishnan, D. Supervised Contrastive Learning. Adv. Neural Inf. Process. Syst. 2020, 33, 18661–18673.

- [30] Song, C.; Tu, C.; Yang, C.; Chen, H.; Liu, Z.; Sun, M. CED: Credible Early Detection of Social Media Rumors. arXiv 2018, arXiv:1811.04175.

- [31] Yuan, C.; Ma, Q.; Zhou, W.; Han, J.; Hu, S. Jointly embedding the local and global relations of heterogeneous graph for rumor detection. In Proceedings of the 19th IEEE International Conference on Data Mining, Beijing, China, 8–11 November 2019.

- [32] Zubiaga, A.; Liakata, M.; Procter, R.; Hoi, G.W.S.; Tolmie, P.; Masuda, N. Analysing How People Orient to and Spread Rumours in Social Media by Looking at Conversational Threads. PLoS ONE 2016, 11, e0150989.

- [33] Wang, Y.; Ma, F.; Jin, Z.; Yuan, Y.; Xun, G.; Jha, K.; Su, L.; Gao, J. EANN: Event Adversarial Neural Networks for Multi-Modal Fake News Detection. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, London, UK, 19–23 August 2018; pp. 849–857.

- [34] Chen, Y.; Li, D.; Zhang, P.; Sui, J.; Lv, Q.; Tun, L.; Shang, L. Cross-modal ambiguity learning for multimodal fake news detection. In Proceedings of the ACM Web Conference 2022, Lyon, France, 25–29 April 2022; pp. 2897–2905.

- [35] Lu, W.; Tong, Y.; Ye, Z. DAMMFND: Domain-Aware Multimodal Multi-view Fake News Detection. Proc. AAAI Conf. Artif. Intell. 2025, 39, 559–567.

- [36] van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

| Dataset | Source | Rumors (items) | Non-Rumors (items) |
|---|---|---|---|
| Weibo Dataset | Crawled from the False Information Reporting Platform of Sina Weibo | 1538 | 1849 |
| Twitter Dataset | All from tweets on the Twitter platform | 579 | 576 |
| Pheme Dataset | Derived from tweets related to 9 breaking news events on the Twitter platform | 1067 | 1067 |

| Dataset | Method | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|---|
| | EANN | 0.8103 | 0.8128 | 0.8091 | 0.8094 |
| | MCNN | 0.8652 | 0.8798 | 0.8698 | 0.8698 |
| | CAFE | 0.8721 | 0.8834 | 0.8631 | 0.8729 |
| | DAMMFND | 0.9073 | 0.9158 | 0.9068 | 0.9113 |
| | BBHL- | 0.8963 | 0.9313 | 0.8563 | 0.8922 |
| | BBHL | 0.9107 | 0.9554 | 0.8621 | 0.9063 |
| | EANN | 0.8058 | 0.8002 | 0.8038 | 0.8016 |
| | MCNN | 0.8712 | 0.8762 | 0.8932 | 0.8846 |
| | CAFE | 0.8834 | 0.8756 | 0.8932 | 0.8854 |
| | DAMMFND | 0.9068 | 0.9132 | 0.9218 | 0.9176 |
| | BBHL- | 0.9069 | 0.9068 | 0.9304 | 0.9185 |
| | BBHL | 0.9118 | 0.9145 | 0.9304 | 0.9224 |
| Pheme | EANN | 0.8318 | 0.8302 | 0.8295 | 0.8298 |
| Pheme | MCNN | 0.8107 | 0.7981 | 0.8173 | 0.8076 |
| Pheme | CAFE | 0.8056 | 0.8345 | 0.8124 | 0.8233 |
| Pheme | DAMMFND | 0.8578 | 0.8534 | 0.8634 | 0.8584 |
| Pheme | BBHL- | 0.8469 | 0.8563 | 0.8512 | 0.8537 |
| Pheme | BBHL | 0.8641 | 0.8651 | 0.8780 | 0.8715 |
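
The four reported metrics can be computed from predictions as in the sketch below. It uses the standard binary definitions with one class treated as positive; whether the paper treats rumors as the positive class or averages over both classes is not stated here, so that choice, like the function names, is an assumption.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1_score(precision, recall),
    }
```

As a consistency check against the table, `f1_score(0.9554, 0.8621)` evaluates to roughly 0.906, which agrees with the F1 of 0.9063 reported for BBHL in the first block of rows up to rounding of the published precision and recall.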

| Dataset | Accuracy | Precision | Recall | F1 |
|---|---|---|---|---|
| | 0.93 ± 0.02 (0.91–0.95) | 0.94 ± 0.02 (0.92–0.96) | 0.91 ± 0.04 (0.87–0.95) | 0.93 ± 0.03 (0.90–0.96) |
| | 0.90 ± 0.02 (0.88–0.92) | 0.92 ± 0.02 (0.90–0.94) | 0.89 ± 0.03 (0.86–0.92) | 0.90 ± 0.02 (0.88–0.92) |
| Pheme | 0.85 ± 0.02 (0.83–0.87) | 0.85 ± 0.02 (0.83–0.87) | 0.87 ± 0.02 (0.85–0.89) | 0.86 ± 0.02 (0.84–0.88) |
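
The parenthesized ranges in this table are numerically equal to the mean minus and plus the reported deviation. A summary in that format could be produced from repeated runs as sketched below. The run scores are hypothetical, and the use of the sample standard deviation is an assumption, since the number of runs and the exact estimator are not given here.

```python
import statistics


def summarize_runs(scores):
    """Return mean, sample standard deviation, and the (mean - std, mean + std) range."""
    mean = statistics.mean(scores)
    std = statistics.stdev(scores)  # sample standard deviation (n - 1 denominator)
    return mean, std, (mean - std, mean + std)


def format_summary(scores):
    """Format run scores as 'mean ± std (low–high)' with two decimals."""
    mean, std, (low, high) = summarize_runs(scores)
    return f"{mean:.2f} ± {std:.2f} ({low:.2f}–{high:.2f})"


# Hypothetical accuracy values from five independent runs.
example_runs = [0.91, 0.93, 0.95, 0.92, 0.94]
example_summary = format_summary(example_runs)
```

Reporting the spread alongside the mean makes it easier to judge whether small differences between methods exceed run-to-run variation.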


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tang, M.; Zhang, J.; Bu, X.; Wang, J.; Luo, P. Research on BBHL Model Based on Hybrid Loss Optimization for Fake News Detection. Appl. Sci. 2025, 15, 10028. https://doi.org/10.3390/app151810028
