A Tunnel Secondary Lining Leakage Recognition Model Based on an Improved TransUNet

Abstract

1. Introduction

2. CBAM-TransUNet Water Leakage Detection Model

2.1. Overall Architecture of the Water Leakage Identification Model

2.2. Convolutional Block Attention Module

2.2.1. Channel Attention Mechanism
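CBAM's channel attention, as described by Woo et al., works in four steps. It pools each channel spatially with both average and max pooling, passes the two descriptors through a shared two-layer MLP with reduction ratio r, adds the two outputs, and applies a sigmoid to get one weight per channel. The following is a minimal pure-Python sketch of that computation on a toy feature map, not the authors' implementation. It assumes a single image (no batch axis) and omits MLP bias terms. The hand-picked weights `w0` and `w1` are hypothetical placeholders for trained parameters.

```python
import math
from typing import List

Matrix = List[List[float]]      # one H x W channel
FeatureMap = List[Matrix]       # C x H x W


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def shared_mlp(v: List[float], w0: Matrix, w1: Matrix) -> List[float]:
    """W1 @ ReLU(W0 @ v); w0 is (C/r) x C and w1 is C x (C/r)."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, v))) for row in w0]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w1]


def channel_attention(f: FeatureMap, w0: Matrix, w1: Matrix) -> FeatureMap:
    """Rescale each channel by sigmoid(MLP(avg-pool) + MLP(max-pool))."""
    avg = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in f]
    mx = [max(map(max, ch)) for ch in f]
    logits = [a + m for a, m in zip(shared_mlp(avg, w0, w1), shared_mlp(mx, w0, w1))]
    weights = [sigmoid(z) for z in logits]
    return [[[wc * x for x in row] for row in ch] for wc, ch in zip(weights, f)]


# Toy example: C = 2 channels, reduction ratio r = 2 (hidden size 1).
f = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 0.0], [0.0, 0.0]]]
w0 = [[1.0, 1.0]]          # hypothetical weights, not trained values
w1 = [[1.0], [-1.0]]
out = channel_attention(f, w0, w1)
```

The sigmoid is applied per channel, so an informative channel (here channel 0) is kept almost unchanged while the others are scaled toward zero.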

2.2.2. Spatial Attention Mechanism
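In CBAM, the spatial branch follows channel attention. It pools across channels at each pixel (average and max), convolves the resulting two-channel map with a single k × k filter under zero padding, and applies a sigmoid to obtain one weight per pixel. The original CBAM paper uses k = 7. Below is a minimal pure-Python sketch assuming a single image and no bias term. The `kernel` argument is a hypothetical parameter holding the two k × k filter slices. The toy call uses a 1 × 1 kernel only so the arithmetic is easy to check.

```python
import math
from typing import List

Matrix = List[List[float]]
FeatureMap = List[Matrix]  # C x H x W


def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def spatial_attention(f: FeatureMap, kernel: List[Matrix]) -> FeatureMap:
    """Rescale every pixel of f by sigmoid(conv([avg_c(f); max_c(f)]))."""
    c, h, w = len(f), len(f[0]), len(f[0][0])
    # Channel-wise pooling produces two H x W descriptor maps.
    avg = [[sum(f[k][i][j] for k in range(c)) / c for j in range(w)] for i in range(h)]
    mx = [[max(f[k][i][j] for k in range(c)) for j in range(w)] for i in range(h)]
    pooled = [avg, mx]
    k = len(kernel[0])   # odd kernel size (7 in CBAM)
    pad = k // 2         # "same" zero padding keeps the H x W size
    attn = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for m in range(2):  # the two pooled maps act as input channels
                for di in range(k):
                    for dj in range(k):
                        y, x = i + di - pad, j + dj - pad
                        if 0 <= y < h and 0 <= x < w:
                            s += kernel[m][di][dj] * pooled[m][y][x]
            attn[i][j] = sigmoid(s)
    return [[[attn[i][j] * f[ch][i][j] for j in range(w)] for i in range(h)]
            for ch in range(c)]


# Toy example: 2 channels on a 2 x 2 grid with a 1 x 1 kernel.
f = [[[1.0, 0.0], [0.0, 0.0]], [[3.0, 0.0], [0.0, 0.0]]]
out = spatial_attention(f, [[[1.0]], [[1.0]]])
```

Because the same pixel weight multiplies every channel, this branch decides *where* to attend, complementing the channel branch that decides *what* to attend to.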

2.3. Encoder of the Water Leakage Identification Model

2.4. Decoder of the Water Leakage Recognition Model

3. Construction of the Tunnel Water Leakage Dataset

3.1. Collection of Tunnel Water Leakage Images

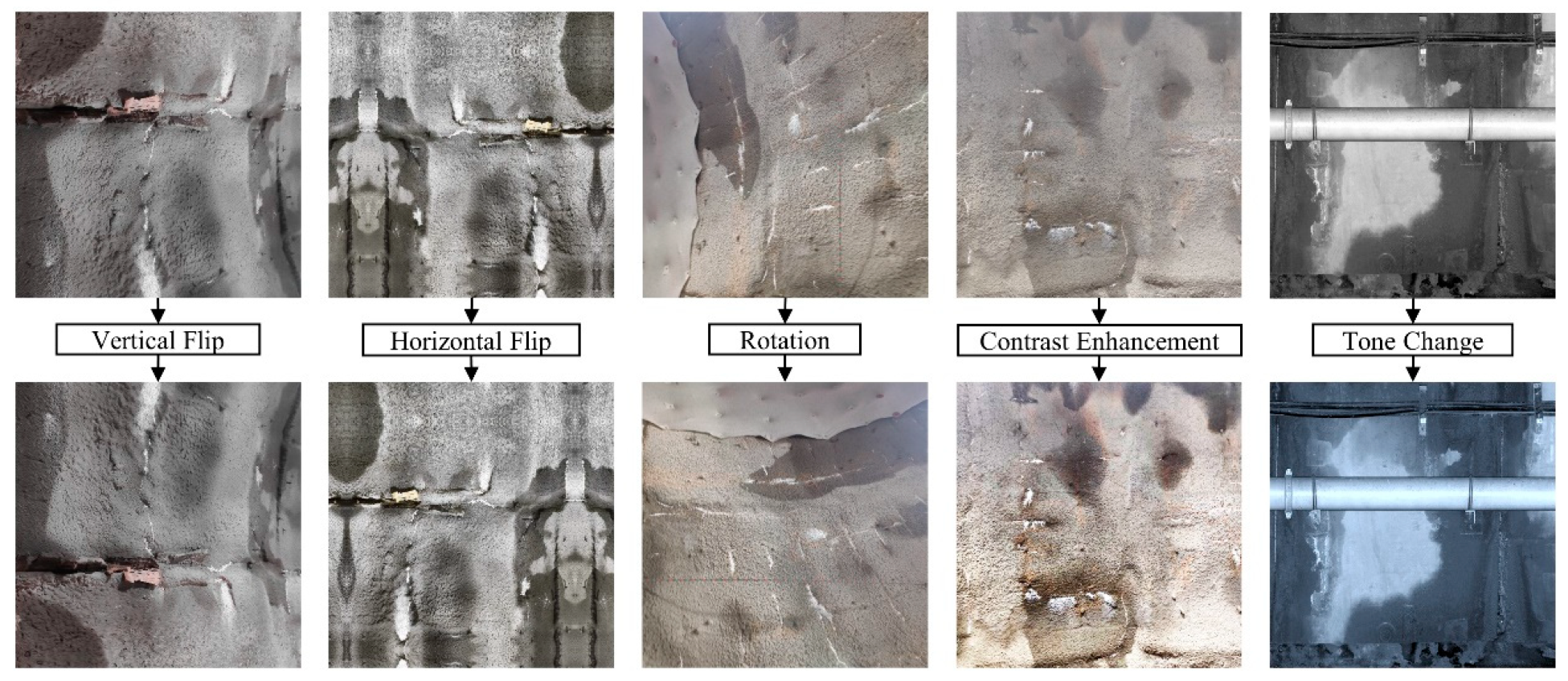

3.2. Data Enhancement Method

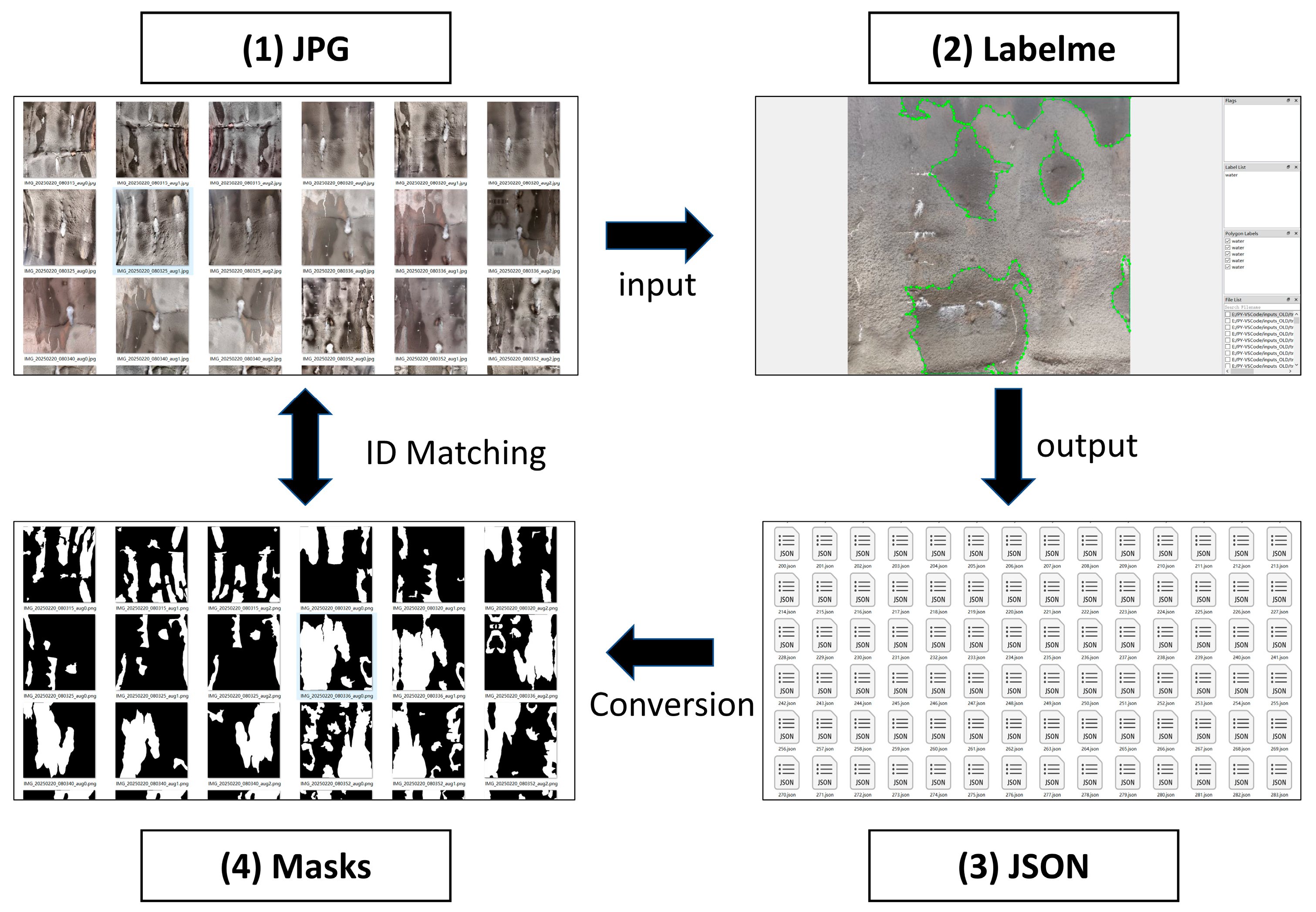

3.3. Image Annotation

4. Model Training

4.1. Training Environment

4.2. BCE-Dice Loss Function
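This section does not state the exact weighting of the two terms. The sketch below therefore assumes the common form L = bce_weight · BCE + dice_weight · (1 - soft Dice), with soft Dice computed directly from predicted probabilities. `bce_weight`, `dice_weight`, and `eps` are hypothetical parameters with illustrative defaults, not values from this model. Predictions are clamped away from 0 and 1 before taking logarithms.

```python
import math
from typing import Sequence


def bce_dice_loss(probs: Sequence[float], targets: Sequence[float],
                  bce_weight: float = 1.0, dice_weight: float = 1.0,
                  eps: float = 1e-7) -> float:
    """Weighted sum of mean binary cross-entropy and soft Dice loss.

    probs:   predicted leakage probabilities, one per pixel.
    targets: ground-truth labels (1 = leakage, 0 = background).
    """
    if len(probs) != len(targets) or not probs:
        raise ValueError("probs and targets must be non-empty and equal length")
    # Clamp so that log() never sees exactly 0.
    p = [min(max(x, eps), 1.0 - eps) for x in probs]
    bce = -sum(t * math.log(q) + (1 - t) * math.log(1 - q)
               for q, t in zip(p, targets)) / len(p)
    # Soft Dice: overlap of probabilities with the mask, smoothed by eps.
    inter = sum(q * t for q, t in zip(probs, targets))
    dice = (2 * inter + eps) / (sum(probs) + sum(targets) + eps)
    return bce_weight * bce + dice_weight * (1.0 - dice)


perfect = bce_dice_loss([1.0, 0.0, 1.0, 0.0], [1, 0, 1, 0])  # close to 0
uncertain = bce_dice_loss([0.5, 0.5], [1, 0])                # about ln 2 + 0.5
```

The BCE term gives dense per-pixel gradients, while the Dice term measures overlap over the whole mask and is less dominated by the large background area, which is the usual motivation for combining them on thin or small leakage regions. In a training framework, BCE is normally computed from logits (for example with PyTorch's `BCEWithLogitsLoss`) for numerical stability. The clamping here is a simpler stand-in.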

4.3. Evaluation Indicators

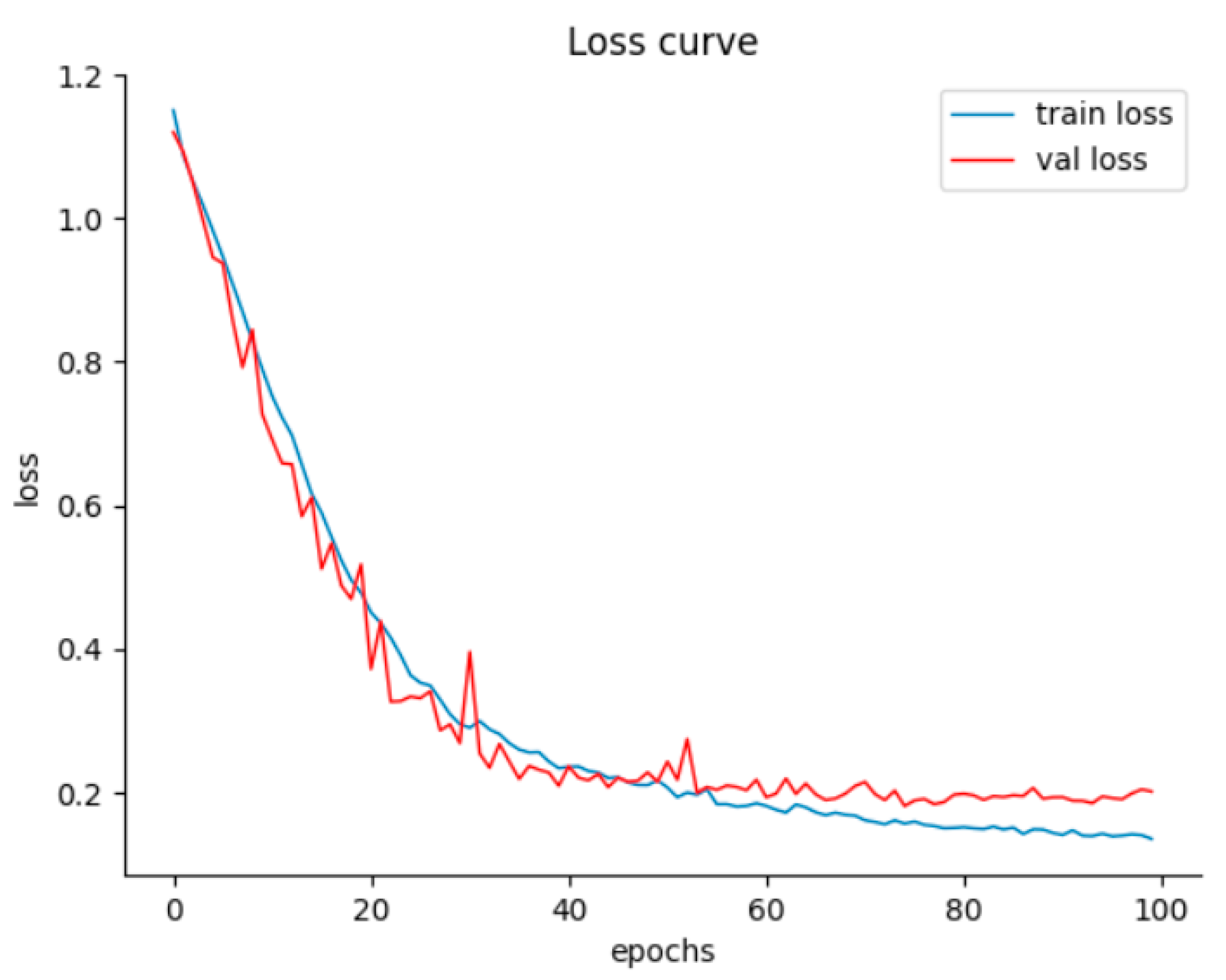

5. Analysis of Training Results

5.1. Performance Analysis of the CBAM-TransUNet Model

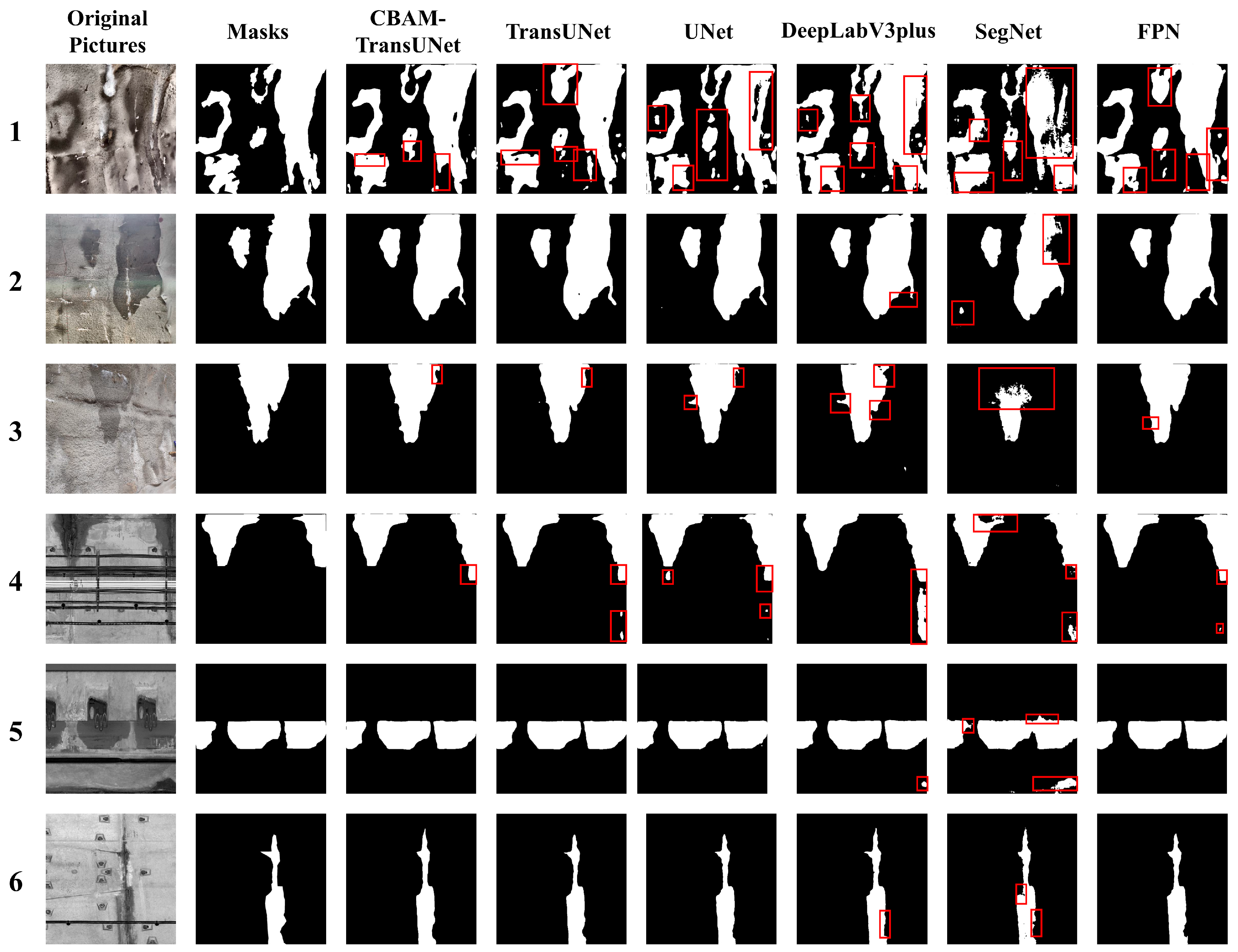

5.2. Comparison of Results of Various Models

5.3. Analysis of Visual Segmentation Results

6. Ablation Experiments

6.1. Analysis of Ablation Experiment Results

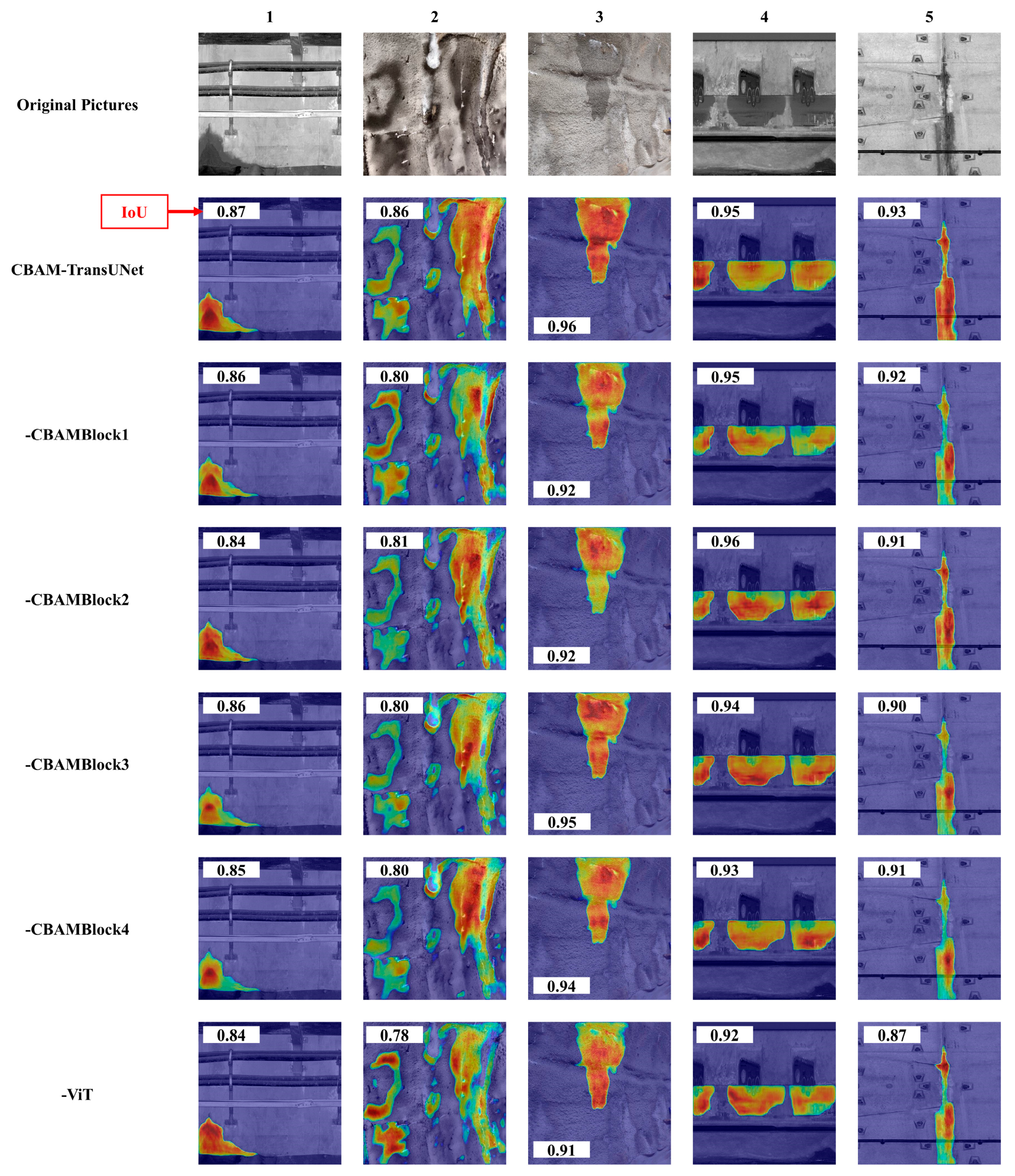

6.2. Analysis of Heatmaps from Ablation Experiments

6.2.1. Heatmap Analysis of Image 1: Corner-Type Leakage

6.2.2. Heatmap Analysis of Image 2: Mixed-Type Leakage

6.2.3. Heatmap Analysis of Image 3: Area-Type Leakage

6.2.4. Heatmap Analysis of Image 5: Linear Leakage

7. Discussion

7.1. Limitations of the Dataset and Annotations

7.2. Constraints on Computational Resources and Real-Time Performance

7.3. Improvement of Model Interpretability and Functionality

7.4. Limitations in Experimental Designs

8. Conclusions

(1) This paper proposes CBAM-TransUNet, a model for tunnel lining leakage detection. Trained on the constructed mixed leakage dataset, it achieves average values of 0.8143 (IoU), 0.8433 (Dice), 0.9518 (recall), 0.8482 (precision), 0.9837 (accuracy), 0.9746 (AUC), 0.8568 (MCC), and 0.8970 (F1-score). Experiments show that the model generalizes well and remains robust in complex and diverse scenarios. These include other components on the lining surface, rough textures, surface contamination, leakage traces that are hard to distinguish from the background, and partially occluded traces.

(2) To validate the core modules, the ablation experiments progressively removed the CBAMs and the ViT module from CBAM-TransUNet. IoU and F1-score decrease at every step. The variant with all CBAMs and the ViT module removed performs worse than the full CBAM-TransUNet on every metric, and its IoU falls from 0.8143 to 0.7508, a relative decrease of 7.79%. The other metrics also decrease in relative terms: Dice by 1.03%, recall by 2.40%, precision by 6.20%, accuracy by 0.96%, and F1-score by 4.53%. These results confirm that both modules are necessary.

(3) Analysis of Score-CAM heatmaps for different leakage patterns shows that CBAM-TransUNet performs stably on corner-type, linear, overall area-type, and mixed-type tunnel leakage. For these four categories, the IoU differs by 3.44%, 6.45%, 5.20%, and 9.30%, respectively, before and after ablation. Compared with the heatmaps of the ablated variants, those generated by CBAM-TransUNet cover leakage areas more completely. Their high-activation regions align more closely with the true leakage boundaries and show stronger spatial continuity. This indicates that the model characterizes the spatial distribution of the different leakage forms more accurately.
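Item (3) relies on Score-CAM heatmaps. The sketch below is a rough illustration of how Score-CAM (Wang et al., 2020) builds such a map, following the outline of its algorithm. It masks the input with each min-max-normalized activation map and scores every masked input with the model. It then converts the scores to channel weights with a softmax and takes the ReLU of the weighted sum of activation maps. This section does not say which layer or target score the authors used. The sketch assumes the activation maps are already at input resolution, whereas real implementations upsample them. It also uses a hypothetical `score_fn` in place of the segmentation network's leakage score.

```python
import math
from typing import Callable, List

Matrix = List[List[float]]


def normalize(a: Matrix) -> Matrix:
    """Min-max scale a map to [0, 1]; a constant map becomes all zeros."""
    lo = min(map(min, a))
    hi = max(map(max, a))
    span = hi - lo
    return [[(v - lo) / span if span > 0 else 0.0 for v in row] for row in a]


def score_cam(image: Matrix, activations: List[Matrix],
              score_fn: Callable[[Matrix], float]) -> Matrix:
    """Score-CAM saliency map, assuming activations already match the image size."""
    # 1. Mask the input with each normalized activation map and score it.
    scores = []
    for act in activations:
        mask = normalize(act)
        masked = [[x * m for x, m in zip(xr, mr)] for xr, mr in zip(image, mask)]
        scores.append(score_fn(masked))
    # 2. Softmax over channels, shifted by the max for numerical stability.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # 3. ReLU of the weighted sum of activation maps.
    h, w = len(image), len(image[0])
    return [[max(0.0, sum(wk * act[i][j] for wk, act in zip(weights, activations)))
             for j in range(w)] for i in range(h)]


image = [[1.0, 1.0], [1.0, 1.0]]
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
# Hypothetical target score: the model's response at the top-left pixel.
cam = score_cam(image, acts, lambda x: x[0][0])
```

Score-CAM weights each channel by how much the masked input alone raises the target score, so it needs no gradients. This is one reason it is popular for inspecting segmentation networks.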

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model Name | IoU | Dice | Recall | Precision | Accuracy | Specificity | AUC | MCC | F1-Score |
|---|---|---|---|---|---|---|---|---|---|
| CBAM-TransUNet | 0.8143 | 0.8433 | 0.9518 | 0.8482 | 0.9837 | 0.9866 | 0.9746 | 0.8568 | 0.8970 |
| TransUNet | 0.7756 | 0.8157 | 0.9397 | 0.8160 | 0.9855 | 0.9882 | 0.9733 | 0.8477 | 0.8726 |
| Swin-Unet | 0.8079 | 0.8226 | 0.9488 | 0.8112 | 0.9871 | 0.9859 | 0.9512 | 0.8457 | 0.8747 |
| UNet | 0.7508 | 0.8346 | 0.9290 | 0.7956 | 0.9742 | 0.9842 | 0.9684 | 0.8309 | 0.8564 |
| DeepLabV3plus | 0.6802 | 0.7992 | 0.9302 | 0.7193 | 0.9811 | 0.9831 | 0.9681 | 0.8065 | 0.8112 |
| SegNet | 0.6622 | 0.7859 | 0.9222 | 0.7039 | 0.9790 | 0.9813 | 0.9311 | 0.7934 | 0.7983 |
| BiSeNetV2 | 0.6111 | 0.7316 | 0.8535 | 0.6874 | 0.9760 | 0.9809 | 0.9251 | 0.7511 | 0.7614 |
| FPN | 0.7996 | 0.8296 | 0.9560 | 0.7471 | 0.9840 | 0.9852 | 0.9710 | 0.8351 | 0.8387 |
| DoubleUNet | 0.6948 | 0.8111 | 0.9449 | 0.7277 | 0.9826 | 0.9844 | 0.9696 | 0.8180 | 0.8221 |
| NestedUNet | 0.6944 | 0.8098 | 0.9461 | 0.7253 | 0.9813 | 0.9826 | 0.9626 | 0.8172 | 0.8211 |
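The comparison table reports standard pixel-level metrics for the leakage class. The sketch below shows one common way to compute them from a single confusion matrix (TP, FP, FN, TN). It assumes all denominators are non-zero. AUC is omitted because it requires the continuous prediction scores rather than thresholded counts. For one pooled confusion matrix, Dice and F1 are algebraically identical (both equal 2TP / (2TP + FP + FN)). Because the table lists different Dice and F1 values, the two were presumably aggregated differently (for example, averaged per image). This section does not state the aggregation scheme.

```python
import math
from typing import Dict


def segmentation_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Pixel-level binary segmentation metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)                 # also called sensitivity
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    iou = tp / (tp + fp + fn)               # Jaccard index
    dice = 2 * tp / (2 * tp + fp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {"IoU": iou, "Dice": dice, "Recall": recall, "Precision": precision,
            "Accuracy": accuracy, "Specificity": specificity, "MCC": mcc, "F1": f1}


# Hypothetical counts for one image, used only to exercise the formulas.
m = segmentation_metrics(tp=80, fp=20, fn=10, tn=890)
```

Because leakage usually covers a small fraction of each image, accuracy and specificity are dominated by background pixels. This is consistent with the table, where those two metrics are close to 0.98 for every model while IoU varies far more.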

| Step | Module Removed (Cumulative) | IoU | Dice | Recall | Precision | Accuracy | F1-Score |
|---|---|---|---|---|---|---|---|
| 0 | None (full CBAM-TransUNet) | 0.8143 | 0.8433 | 0.9518 | 0.8482 | 0.9837 | 0.8970 |
| 1 | Skip-CBAM1 | 0.7970 | 0.7748 | 0.9164 | 0.8567 | 0.9709 | 0.8848 |
| 2 | Skip-CBAM2 | 0.7922 | 0.8073 | 0.9081 | 0.8579 | 0.9729 | 0.8818 |
| 3 | Skip-CBAM3 | 0.7733 | 0.7685 | 0.9533 | 0.8039 | 0.9850 | 0.8711 |
| 4 | Deep-CBAM | 0.7646 | 0.7971 | 0.9326 | 0.7979 | 0.9841 | 0.8589 |
| 5 | ViT | 0.7508 | 0.8346 | 0.9290 | 0.7956 | 0.9742 | 0.8564 |
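The percentages quoted in item (2) of the Conclusions are relative decreases, (full - ablated) / full, rather than absolute differences; the absolute IoU drop from 0.8143 to 0.7508 is 6.35 percentage points. The short check below recomputes them from steps 0 and 5 of the ablation table. To two decimals it gives 7.80% for IoU and 0.97% for accuracy, versus the quoted 7.79% and 0.96%, so those two values appear to have been truncated rather than rounded; the other four match. The check also verifies that IoU and F1-score fall at every ablation step.

```python
from typing import Dict, List

# Step 0 (full CBAM-TransUNet) and step 5 (all CBAMs and the ViT removed),
# copied from the ablation table.
FULL = {"IoU": 0.8143, "Dice": 0.8433, "Recall": 0.9518,
        "Precision": 0.8482, "Accuracy": 0.9837, "F1-Score": 0.8970}
ABLATED = {"IoU": 0.7508, "Dice": 0.8346, "Recall": 0.9290,
           "Precision": 0.7956, "Accuracy": 0.9742, "F1-Score": 0.8564}

# Per-step values for the two metrics that decline monotonically.
IOU_BY_STEP = [0.8143, 0.7970, 0.7922, 0.7733, 0.7646, 0.7508]
F1_BY_STEP = [0.8970, 0.8848, 0.8818, 0.8711, 0.8589, 0.8564]


def relative_decrease(full: Dict[str, float], ablated: Dict[str, float]) -> Dict[str, float]:
    """Percentage drop of each metric relative to the full model's value."""
    return {k: 100.0 * (full[k] - ablated[k]) / full[k] for k in full}


def strictly_decreasing(xs: List[float]) -> bool:
    return all(a > b for a, b in zip(xs, xs[1:]))


drops = relative_decrease(FULL, ABLATED)
for name, pct in drops.items():
    print(f"{name}: {pct:.3f}%")
```

Precision, recall, Dice, and accuracy do not decline monotonically across the intermediate steps; for example, precision is slightly higher after steps 1 and 2 than in the full model. The monotonic check is therefore limited to IoU and F1-score.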

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, Z.; Wan, L.; Wu, Y.; Song, R.; Shao, S.; Wu, H. A Tunnel Secondary Lining Leakage Recognition Model Based on an Improved TransUNet. Appl. Sci. 2025, 15, 10006. https://doi.org/10.3390/app151810006

Li Z, Wan L, Wu Y, Song R, Shao S, Wu H. A Tunnel Secondary Lining Leakage Recognition Model Based on an Improved TransUNet. Applied Sciences. 2025; 15(18):10006. https://doi.org/10.3390/app151810006

Chicago/Turabian Style: Li, Zelong, Li Wan, Yimin Wu, Renjie Song, Shuai Shao, and Haiping Wu. 2025. "A Tunnel Secondary Lining Leakage Recognition Model Based on an Improved TransUNet" Applied Sciences 15, no. 18: 10006. https://doi.org/10.3390/app151810006

APA Style: Li, Z., Wan, L., Wu, Y., Song, R., Shao, S., & Wu, H. (2025). A Tunnel Secondary Lining Leakage Recognition Model Based on an Improved TransUNet. Applied Sciences, 15(18), 10006. https://doi.org/10.3390/app151810006