A Deep Reinforcement Learning Framework for Multi-Fleet Scheduling and Optimization of Hybrid Ground Support Equipment Vehicles in Airport Operations

Abstract

1. Introduction

1.1. Airport Ground Support Scheduling for Fuel and Electric Fleets

1.2. Modeling and Optimization for Hybrid Fleet Operations

1.3. Reinforcement Learning in Fleet Scheduling and Management

- An end-to-end deep reinforcement learning (DRL) framework is proposed for dispatching hybrid fleets consisting of both electric and fuel-powered airport ground support vehicles. By abstracting the complex operational environment into a Markov Decision Process (MDP), the framework improves the adaptability of electric vehicle (EV) scheduling under hybrid energy constraints.

- The framework supports large-scale and multi-type vehicle fleets, capturing the diverse operational demands of airport ground services. This design enhances the model’s scalability and applicability to real-world scheduling scenarios.

- A multi-objective coordination mechanism is embedded within the DRL model to dynamically balance task execution and energy replenishment. The model jointly optimizes service punctuality, fleet utilization, carbon emission reduction, and grid load smoothing, enabling intelligent and sustainable hybrid operations in airports.

2. Methodology

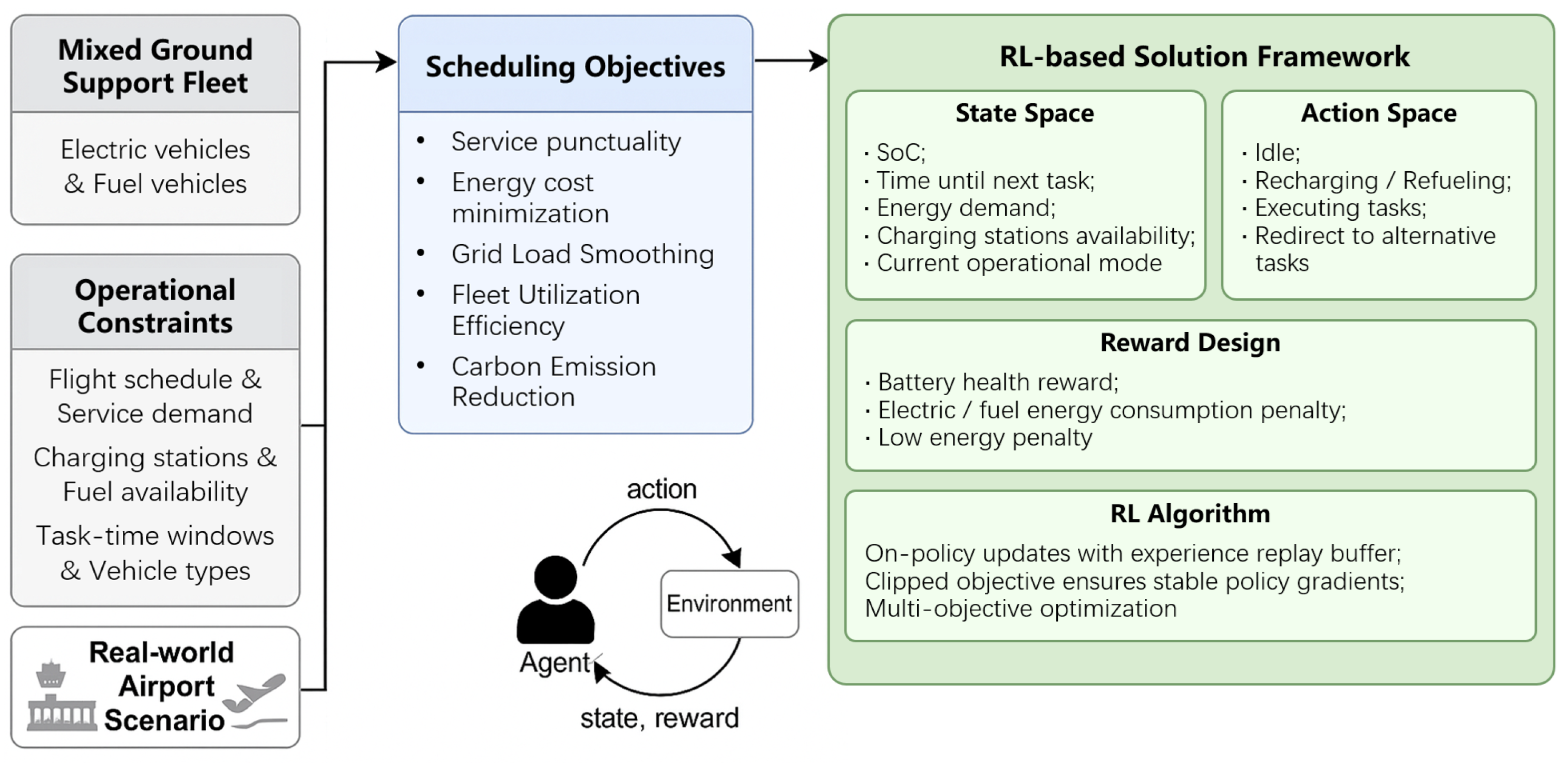

Overview

3. Deep Reinforcement Learning Method for Hybrid Fleet Scheduling Problem

3.1. Environment and State

- For electric vehicles:

  - current battery level;
  - time until the vehicle’s next assigned task;
  - estimated energy demand for the upcoming task;
  - availability status of charging stations;
  - current operational mode (e.g., charging, idle, working).

- For fuel-powered vehicles:

  - current fuel level;
  - time until the vehicle’s next assigned task;
  - estimated fuel demand for the upcoming task;
  - refueling station availability;
  - current operational mode (e.g., refueling, idle, working).
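A minimal sketch of how the per-vehicle features listed above might be packed into a fixed-length observation vector for the policy network. The `VehicleState` and `Mode` names, the vehicle-type flag, the one-hot encoding of the operational mode, and the normalization by a hypothetical 60-minute horizon are illustrative assumptions, not the paper's implementation.

```python
from dataclasses import dataclass
from enum import Enum

class Mode(Enum):
    # Operational modes from the state definition; REPLENISHING stands for
    # charging (EVs) or refueling (fuel-powered vehicles).
    IDLE = 0
    WORKING = 1
    REPLENISHING = 2

@dataclass
class VehicleState:
    is_electric: bool
    energy_level: float        # battery SoC or fuel level, assumed normalized to [0, 1]
    time_to_next_task: float   # minutes until the next assigned task
    task_energy_demand: float  # estimated demand of the upcoming task, same scale as energy_level
    station_available: bool    # charging or refueling station availability
    mode: Mode

def encode(state: VehicleState, horizon_min: float = 60.0) -> list[float]:
    """Flatten one vehicle's state; horizon_min is a hypothetical clipping horizon."""
    mode_one_hot = [1.0 if state.mode is m else 0.0 for m in Mode]
    return [
        1.0 if state.is_electric else 0.0,  # vehicle-type flag
        state.energy_level,
        min(state.time_to_next_task, horizon_min) / horizon_min,
        state.task_energy_demand,
        1.0 if state.station_available else 0.0,
        *mode_one_hot,
    ]

ev = VehicleState(True, 0.45, 30.0, 0.12, True, Mode.IDLE)
print(encode(ev))  # [1.0, 0.45, 0.5, 0.12, 1.0, 1.0, 0.0, 0.0]
```

Sharing one layout between electric and fuel-powered vehicles, with a type flag, is one way to let a single policy network serve the whole hybrid fleet.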

3.2. Agent and Action

- Direct the vehicle to remain inactive or return to the standby area in preparation for subsequent tasks.

- Initiate the vehicle’s energy replenishment process:

  - electric vehicles: move to the nearest charging point and begin recharging;
  - fuel-powered vehicles: proceed to the designated fueling area for refueling.

- Execute the vehicle’s currently assigned service task.

- Redirect the vehicle to an alternative task type, such as high-priority towing in the case of airtugs.
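The four actions form a small discrete action space. The sketch below encodes them with hypothetical names (`IDLE`, `REPLENISH`, `TASK1`, `TASK2`) and adds a feasibility mask so that the alternative-task action cannot be chosen for a vehicle that does not support it, mirroring the task2 support check in Algorithm 1. Masking is an assumed design choice here; the text does not say whether infeasible actions are masked or penalized.

```python
from enum import IntEnum

class Action(IntEnum):
    IDLE = 0       # remain inactive or return to the standby area
    REPLENISH = 1  # charge (EV) or refuel (fuel-powered vehicle)
    TASK1 = 2      # execute the currently assigned service task
    TASK2 = 3      # switch to an alternative task, e.g. towing

def action_mask(supports_task2: bool) -> list[bool]:
    """Feasibility mask over Action; only the task2 support check is modeled here."""
    mask = [True] * len(Action)
    mask[Action.TASK2] = supports_task2
    return mask

def masked_greedy(logits: list[float], mask: list[bool]) -> Action:
    """Highest-scoring feasible action. A PPO policy would instead sample
    from a softmax restricted to the feasible actions."""
    feasible = [i for i, ok in enumerate(mask) if ok]
    return Action(max(feasible, key=lambda i: logits[i]))

# Hypothetical baggage tractor without towing capability: TASK2 is excluded.
print(masked_greedy([0.1, 0.3, 0.2, 0.9], action_mask(False)).name)  # REPLENISH
```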

3.3. Reward

- the sets of electric and fuel-powered vehicles, respectively;

- the current battery level (state of charge, SoC) of electric vehicle v at time t;

- the desired battery level (e.g., 60%) at which the quadratic battery reward is maximized;

- a scaling factor that sets the sensitivity of the battery reward shape;

- the normalized electricity and fuel consumption of vehicle v at time t;

- an indicator function equal to 1 when its condition is true and 0 otherwise;

- the remaining energy (SoC or fuel) of vehicle v at time t;

- the safety threshold for low battery or fuel levels;

- the weighting coefficients that balance the reward components.
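Because the reward equation itself is not reproduced in this section, the sketch below shows one plausible way to combine the listed components: a quadratic battery term peaked at the desired SoC and shaped by the scaling factor s, a penalty on normalized energy consumption, and a penalty for each vehicle whose remaining energy falls below the safety threshold. The functional form `1 - ((soc - target) / s) ** 2`, the default weights, and the 0.2 threshold are assumptions for illustration, not the authors' formulation; other objectives such as punctuality are omitted.

```python
def battery_reward(soc, soc_target=0.6, s=1.0):
    """Assumed quadratic shaping term: equals 1 at soc == soc_target and
    decreases on both sides; s > 1 widens the peak, s < 1 narrows it."""
    return 1.0 - ((soc - soc_target) / s) ** 2

def step_reward(ev_socs, ev_use, fuel_use, remaining, threshold=0.2,
                weights=(1.0, 0.5, 2.0), soc_target=0.6, s=1.0):
    """Hypothetical per-step reward built from the components listed above.

    ev_socs:   SoC of each electric vehicle, in [0, 1]
    ev_use:    normalized electricity consumption of each electric vehicle
    fuel_use:  normalized fuel consumption of each fuel-powered vehicle
    remaining: remaining energy (SoC or fuel fraction) of every vehicle
    """
    w_batt, w_use, w_low = weights
    r_batt = sum(battery_reward(b, soc_target, s) for b in ev_socs)
    r_use = sum(ev_use) + sum(fuel_use)
    n_low = sum(1 for e in remaining if e < threshold)  # sum of indicator terms
    return w_batt * r_batt - w_use * r_use - w_low * n_low

r = step_reward(ev_socs=[0.6, 0.3], ev_use=[0.1, 0.2], fuel_use=[0.3],
                remaining=[0.6, 0.3, 0.15])
print(round(r, 2))  # -0.39
```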

3.4. PPO Algorithm Architecture

Algorithm 1 PPO for Airport Ground Service Vehicle Scheduling Optimization

```
for episode = 1 to MAX_EPISODES do
    # Reset all vehicle states
    for t = 1 to T do
        # Policy network generates actions
        # Select actions for all vehicles
        # Environment executes actions
        for each vehicle type do
            for each vehicle q do
                if a_q = charge then
                    d ← compute_distance_to_charger(D)
                    charge_time ← (P.max_charge − q.battery) / P.charge_rate
                    q.schedule(charge, d, charge_time)
                elif a_q = task1 then
                    assign_nearest_task(F)
                    d ← compute_task_distance(D)
                    q.schedule(task1, d, P.task1_time)
                elif a_q = task2 then
                    if q supports task2 then
                        assign_towing_task(F)
                        d ← compute_special_distance(D)
                        q.schedule(task2, d, P.task2_time)
                    end if
                end if
            end for
        end for
        # State transition and reward calculation
        update_all_vehicles()
        # Check episode termination
        # Store experience for PPO
        Store transition
        if update_condition then
            PPO.update(collected_rollouts)
        end if
    end for
end for
```
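A minimal runnable sketch of the environment-execution block of Algorithm 1. The `Params` and `Vehicle` classes, the Euclidean `nearest_distance` helper, the coordinates, and all parameter values are hypothetical stand-ins for the paper's `P`, `D`, and `F` structures; only the charge-time expression (P.max_charge − q.battery)/P.charge_rate is taken from the algorithm. For brevity the sketch flattens the loop over vehicle types into a single loop over the fleet and models only battery charging, not refueling.

```python
import math
from dataclasses import dataclass, field

@dataclass
class Params:
    """Hypothetical stand-in for P in Algorithm 1; all values are illustrative."""
    max_charge: float = 40.0   # usable battery capacity, kWh
    charge_rate: float = 0.5   # kWh gained per minute (roughly a 30 kW charger)
    task1_time: float = 15.0   # minutes for a regular service task
    task2_time: float = 25.0   # minutes for a towing task

@dataclass
class Vehicle:
    name: str
    pos: tuple[float, float]
    battery: float = 40.0          # current energy, kWh
    supports_task2: bool = False   # e.g. True for airtugs
    plan: list = field(default_factory=list)

    def schedule(self, kind: str, distance: float, duration: float) -> None:
        """Append (activity, travel distance, duration), analogous to q.schedule."""
        self.plan.append((kind, distance, duration))

def nearest_distance(pos, points) -> float:
    """Distance to the closest point; Euclidean distance is an assumption."""
    return min(math.dist(pos, p) for p in points)

def execute_actions(fleet, actions, chargers, tasks, towing_tasks, P):
    """Apply one action per vehicle: 'idle', 'charge', 'task1' or 'task2'."""
    for q in fleet:
        a = actions[q.name]
        if a == "charge":
            d = nearest_distance(q.pos, chargers)
            charge_time = (P.max_charge - q.battery) / P.charge_rate
            q.schedule("charge", d, charge_time)
        elif a == "task1":
            d = nearest_distance(q.pos, tasks)
            q.schedule("task1", d, P.task1_time)
        elif a == "task2" and q.supports_task2:
            d = nearest_distance(q.pos, towing_tasks)
            q.schedule("task2", d, P.task2_time)
        # 'idle', or 'task2' on a vehicle without towing support: nothing scheduled

P = Params()
ev = Vehicle("ev1", (0.0, 0.0), battery=10.0)
tug = Vehicle("tug1", (3.0, 4.0), supports_task2=True)
execute_actions([ev, tug], {"ev1": "charge", "tug1": "task2"},
                chargers=[(0.0, 1.0)], tasks=[(5.0, 5.0)],
                towing_tasks=[(3.0, 0.0)], P=P)
print(ev.plan)   # [('charge', 1.0, 60.0)]
print(tug.plan)  # [('task2', 4.0, 25.0)]
```

Here the EV needs (40 − 10)/0.5 = 60 minutes to reach full charge, while the tug travels 4 distance units to its towing job.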

4. Experiments

4.1. Dataset and Pre-Processing

4.2. Performance Metrics

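- Electric Vehicle Carbon Emission: For each electric vehicle v, carbon emission is estimated from its electricity consumption. Given the energy usage $E_{v,t}$ (in kWh) of vehicle v at time step t, the emission is computed as $C^{\mathrm{e}}_{v,t} = \alpha_{\mathrm{e}} E_{v,t}$, where $\alpha_{\mathrm{e}}$ is the carbon intensity coefficient of the electricity grid (e.g., kg CO2 per kWh).

- Fuel-Powered Vehicle Carbon Emission: For fuel-powered vehicles, the emission is calculated from the consumed fuel volume $F_{v,t}$ (in liters) and the fuel-specific carbon factor as $C^{\mathrm{f}}_{v,t} = \alpha_{\mathrm{f}} F_{v,t}$, where $\alpha_{\mathrm{f}}$ is the emission factor for fuel combustion (e.g., kg CO2 per liter).

- Total Carbon Emission: The overall carbon footprint of the system at time t is the sum of both components over the electric fleet $\mathcal{V}_{\mathrm{e}}$ and the fuel-powered fleet $\mathcal{V}_{\mathrm{f}}$: $C_t = \sum_{v \in \mathcal{V}_{\mathrm{e}}} C^{\mathrm{e}}_{v,t} + \sum_{v \in \mathcal{V}_{\mathrm{f}}} C^{\mathrm{f}}_{v,t}$.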
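The emission definitions above amount to a factor-weighted sum over the fleet at each time step. In this sketch the grid intensity of 0.5 kg CO2/kWh is a hypothetical placeholder (real values depend on the local grid mix) and 2.7 kg CO2 per liter is an approximate figure for diesel; neither value is taken from the paper.

```python
# Illustrative factors only; neither value comes from the paper.
GRID_INTENSITY = 0.5   # kg CO2 per kWh (hypothetical grid mix)
DIESEL_FACTOR = 2.7    # kg CO2 per liter (approximate figure for diesel)

def fleet_emission(ev_kwh, fuel_liters, alpha_e=GRID_INTENSITY, alpha_f=DIESEL_FACTOR):
    """Return (EV emission, fuel emission, total) in kg CO2 for one time step.

    ev_kwh:      electricity used by each electric vehicle (kWh)
    fuel_liters: fuel burned by each fuel-powered vehicle (liters)
    """
    ev_part = sum(alpha_e * e for e in ev_kwh)
    fuel_part = sum(alpha_f * f for f in fuel_liters)
    return ev_part, fuel_part, ev_part + fuel_part

ev_part, fuel_part, total = fleet_emission([4.0, 6.0], [2.0])
print(f"EV {ev_part:.1f} kg, fuel {fuel_part:.1f} kg, total {total:.1f} kg")
# EV 5.0 kg, fuel 5.4 kg, total 10.4 kg
```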

5. Results and Discussion

5.1. Performance Comparison

5.2. Sensitivity to Reward Weights

5.3. Carbon Emission Evolution and Gantt Chart in Different Fleet Configurations

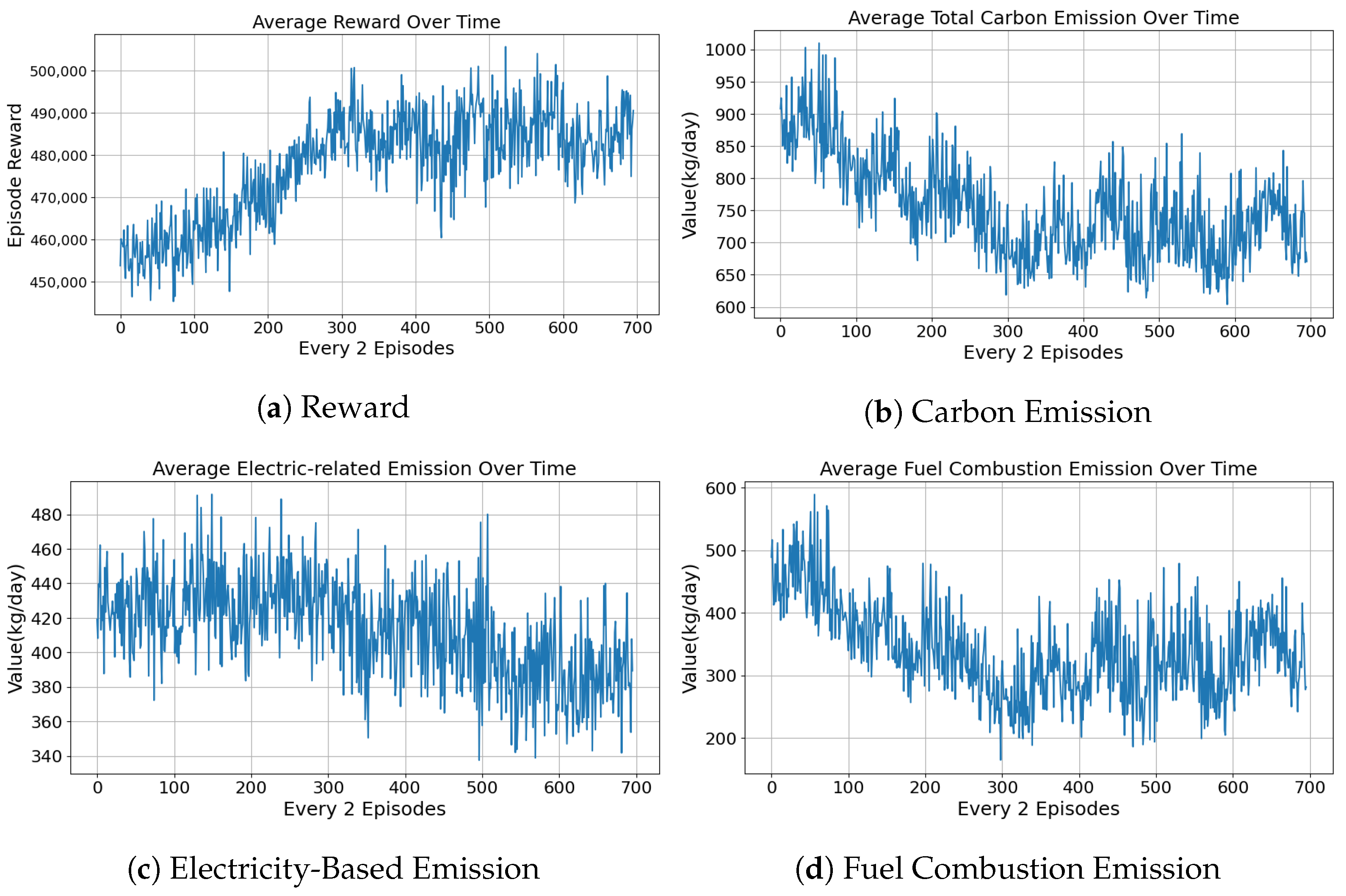

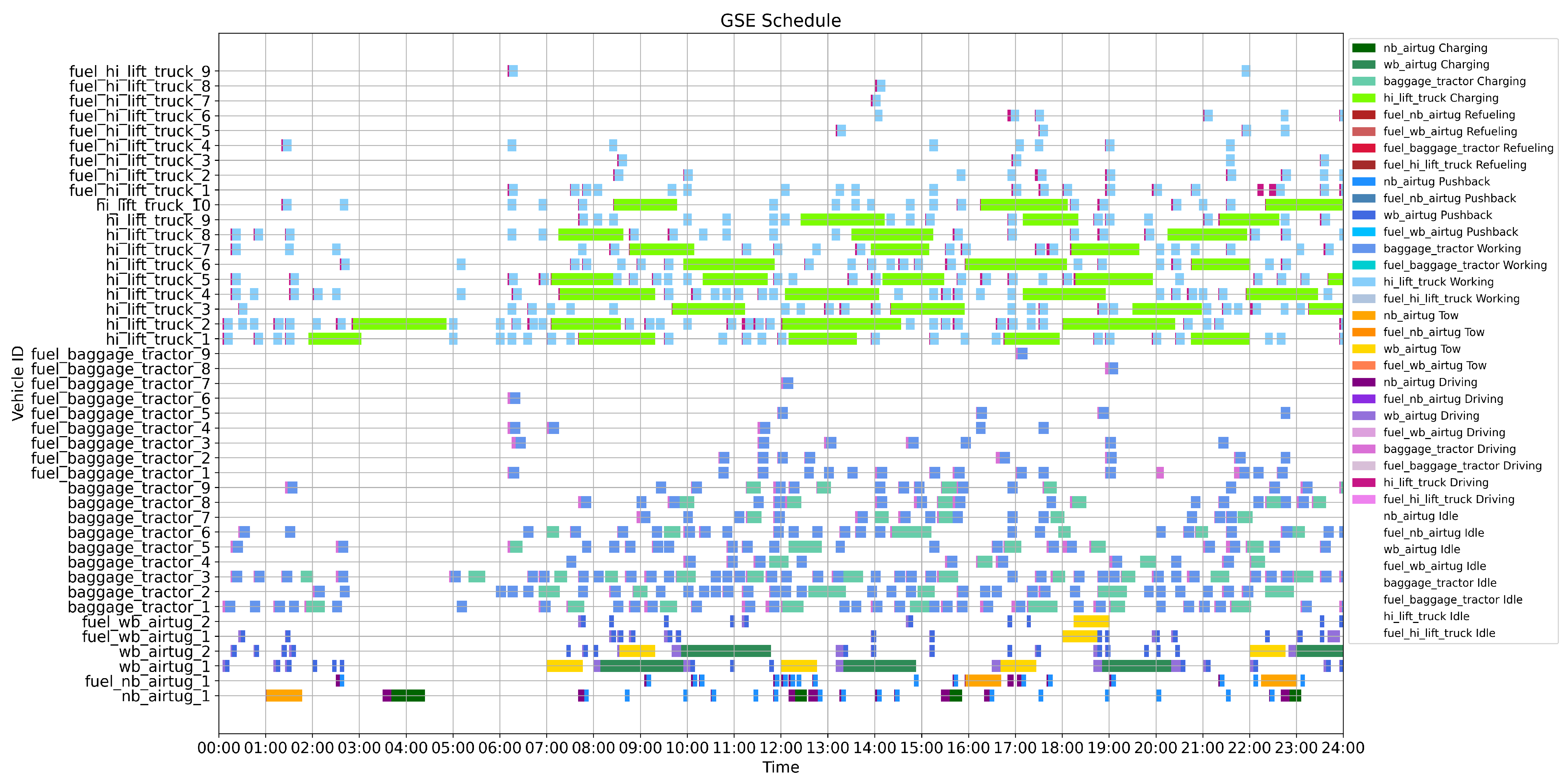

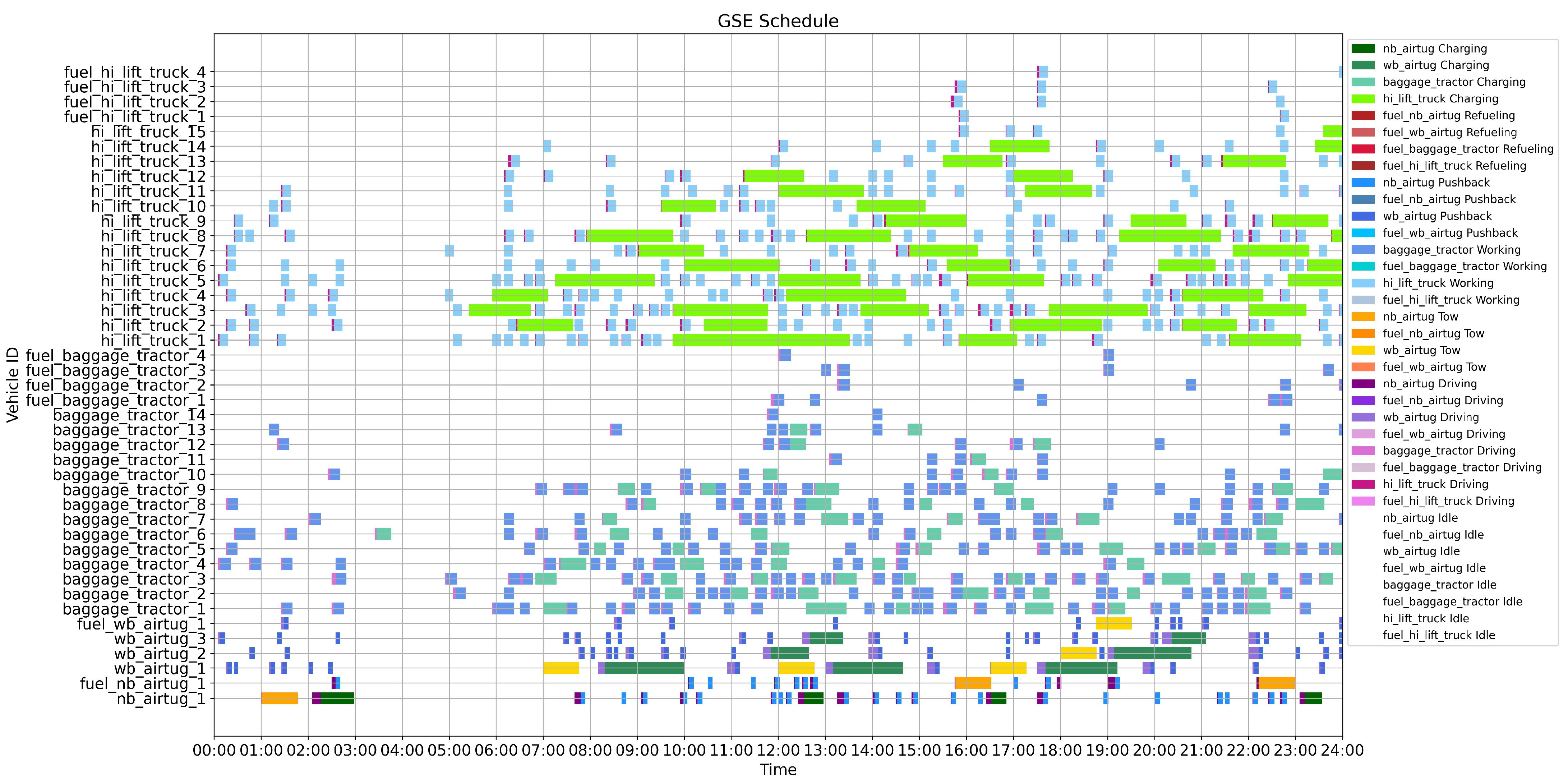

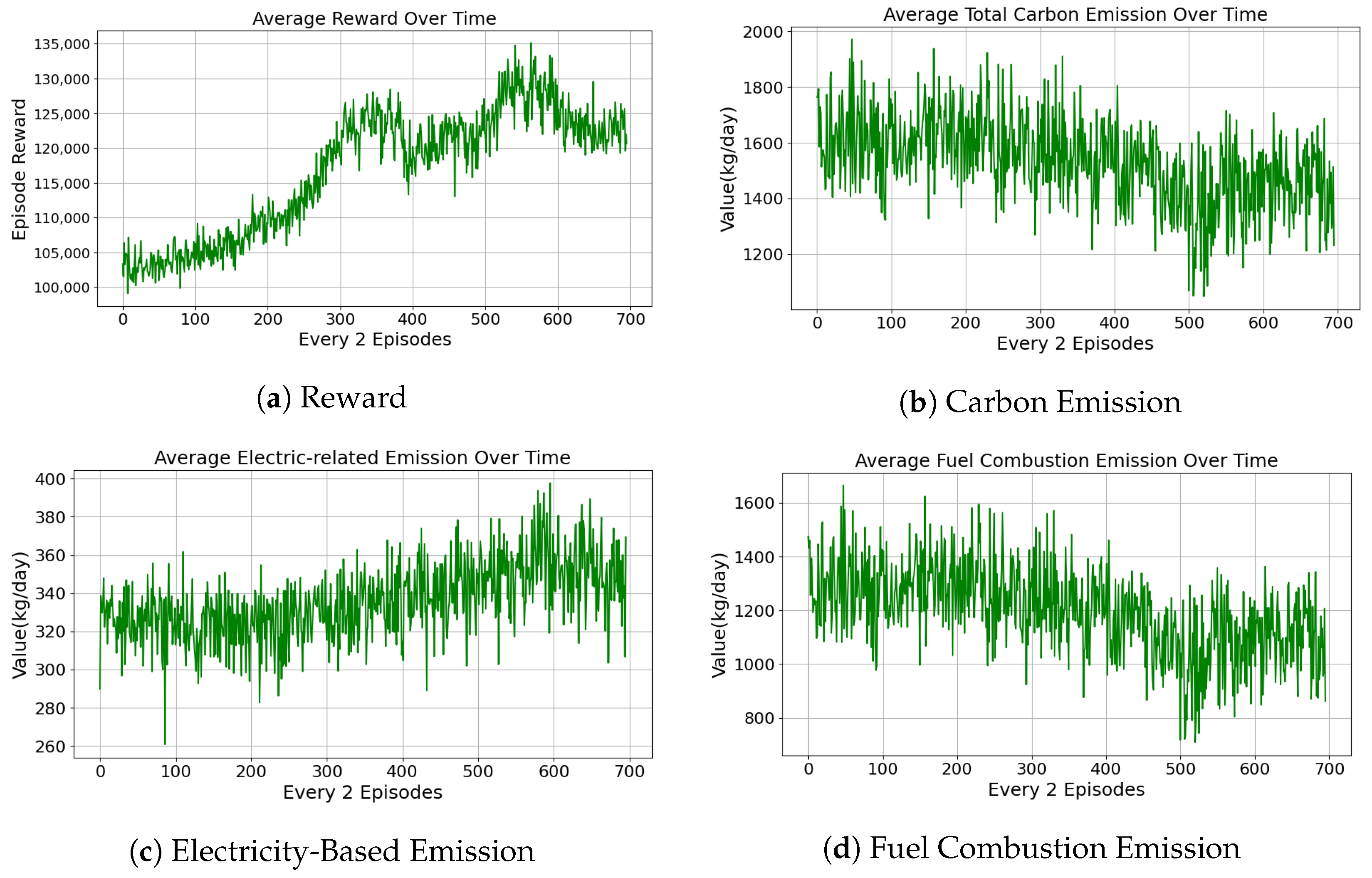

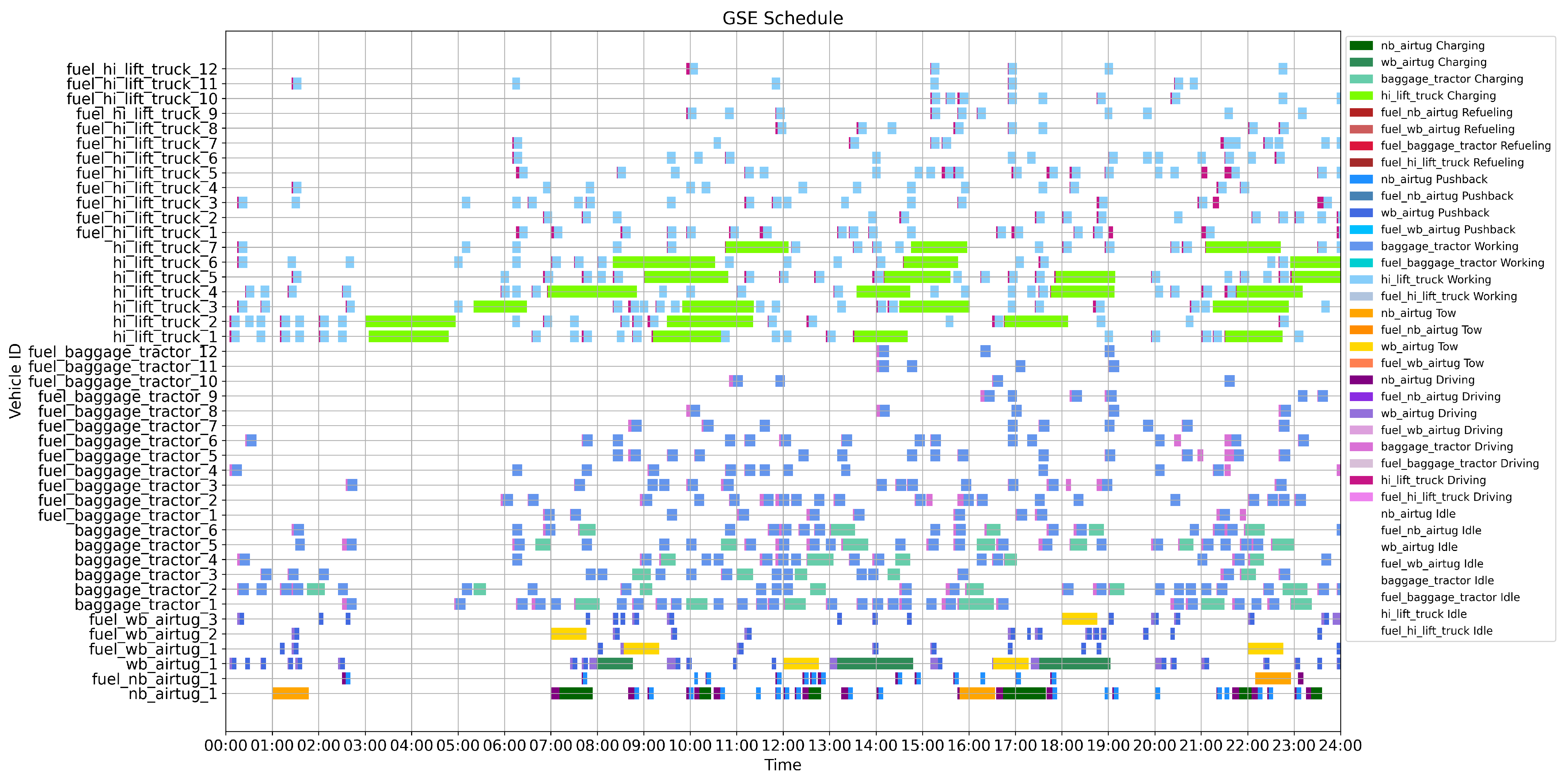

5.3.1. 50% Electric Fleet

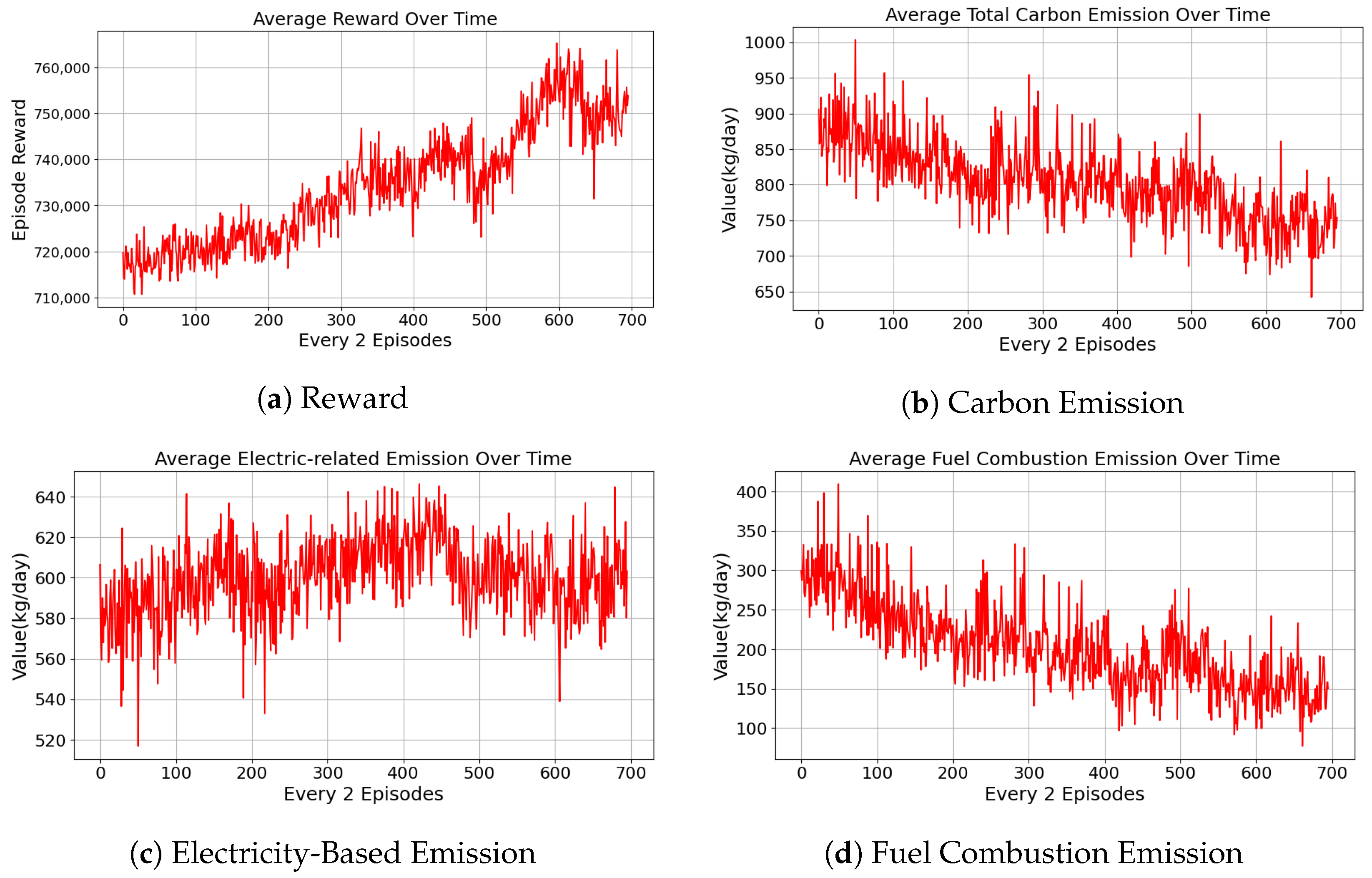

5.3.2. 80% Electric Fleet

5.3.3. 30% Electric Fleet

5.3.4. Comparative Analysis Across Electrification Scenarios

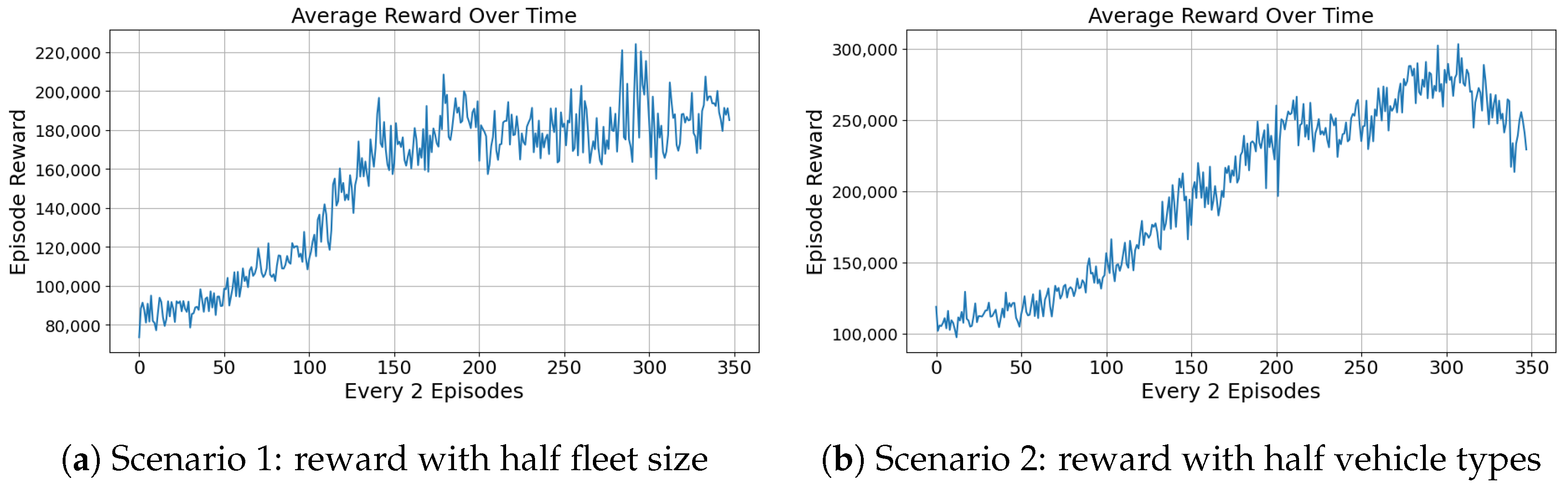

5.4. Operational Performance Under Different Fleet Compositions

5.5. Energy Consumption Patterns Across Fleet Compositions

5.6. Scalability Evaluation

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Scaling Factor s for | | | |
|---|---|---|---|
| 1.2 | 817.1 (+2.5%) | 815.8 (+2.6%) | 798.9 (+4.6%) |
| 1.0 | 808.1 (+3.5%) | 837.8 (0.0%) | 820.1 (+2.1%) |
| 0.8 | 826.9 (+1.3%) | 908.3 (−8.4%) | 1007.8 (−20.3%) |
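The percentages in this table appear to be relative changes with respect to the s = 1.0 entry in the middle column (837.8), with a positive sign for values below that baseline; this reading is inferred from the numbers rather than stated in the text. The short check below recomputes every entry under that assumption.

```python
BASELINE = 837.8  # s = 1.0, middle column (the entry marked 0.0%)

table = {
    1.2: [817.1, 815.8, 798.9],
    1.0: [808.1, 837.8, 820.1],
    0.8: [826.9, 908.3, 1007.8],
}

def rel_change(value: float, baseline: float = BASELINE) -> float:
    """Percent reduction relative to the baseline (positive = below baseline)."""
    return 100.0 * (baseline - value) / baseline

for s, row in table.items():
    print(s, [f"{rel_change(v):+.1f}%" for v in row])
```

Every recomputed value matches the percentage printed in the table to one decimal place.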

| Scenario | DR (%) | RT (min) | EU (%) | FU (%) |
|---|---|---|---|---|
| 50% EV fleet | 4.5 | 1.71 | 48.3 | 11.3 |
| 30% EV fleet | 5.5 | 1.82 | 54.1 | 15.7 |
| 80% EV fleet | 5.4 | 1.76 | 42.5 | 10.1 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, F.; Zhou, M.; Xing, Y.; Wang, H.-W.; Peng, Y.; Chen, Z. A Deep Reinforcement Learning Framework for Multi-Fleet Scheduling and Optimization of Hybrid Ground Support Equipment Vehicles in Airport Operations. Appl. Sci. 2025, 15, 9777. https://doi.org/10.3390/app15179777


Wang, Fengde, Miao Zhou, Yingying Xing, Hong-Wei Wang, Yichuan Peng, and Zhen Chen. 2025. "A Deep Reinforcement Learning Framework for Multi-Fleet Scheduling and Optimization of Hybrid Ground Support Equipment Vehicles in Airport Operations" Applied Sciences 15, no. 17: 9777. https://doi.org/10.3390/app15179777
