A Lightweight Instance Segmentation Model for Simultaneous Detection of Citrus Fruit Ripeness and Red Scale (Aonidiella aurantii) Pest Damage

Abstract

1. Introduction

1.1. Related Work

1.2. Motivation

1.3. Contribution

2. Materials and Methods

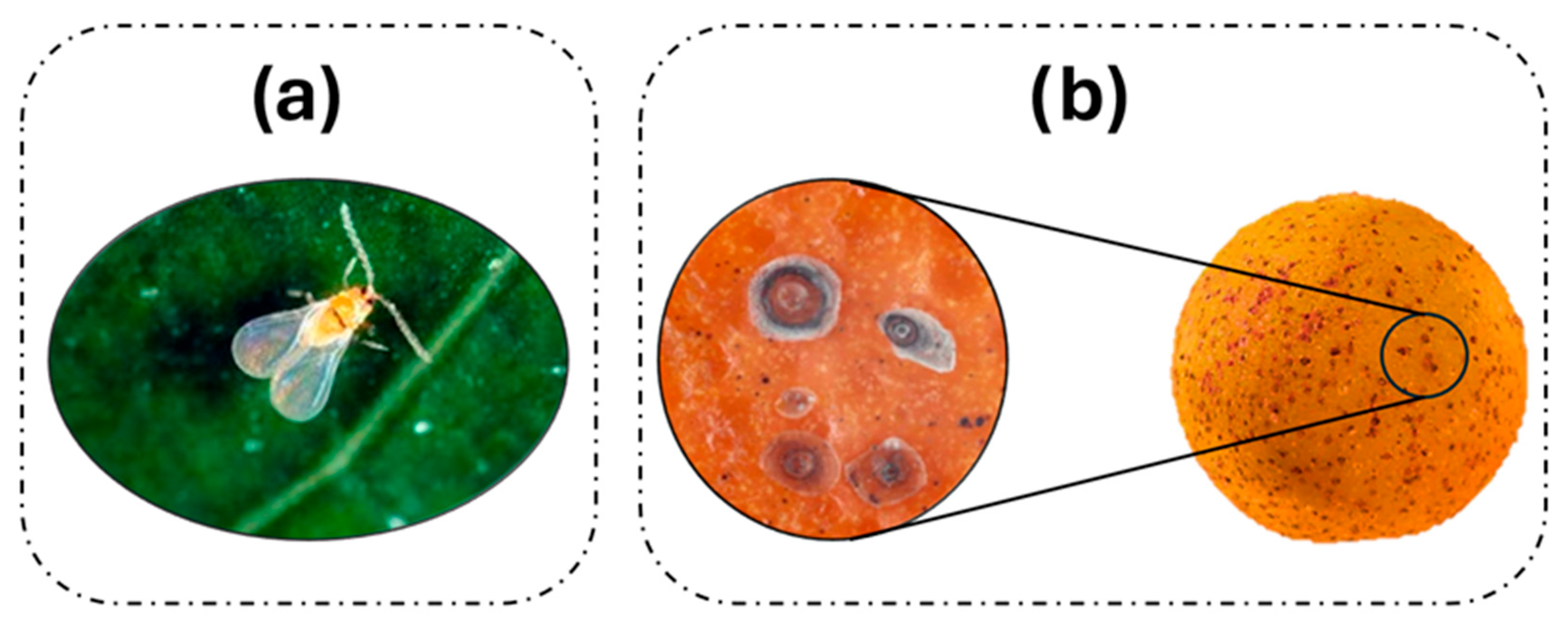

2.1. Red Scale (Aonidiella aurantii (Maskell) (Hemiptera: Diaspididae))

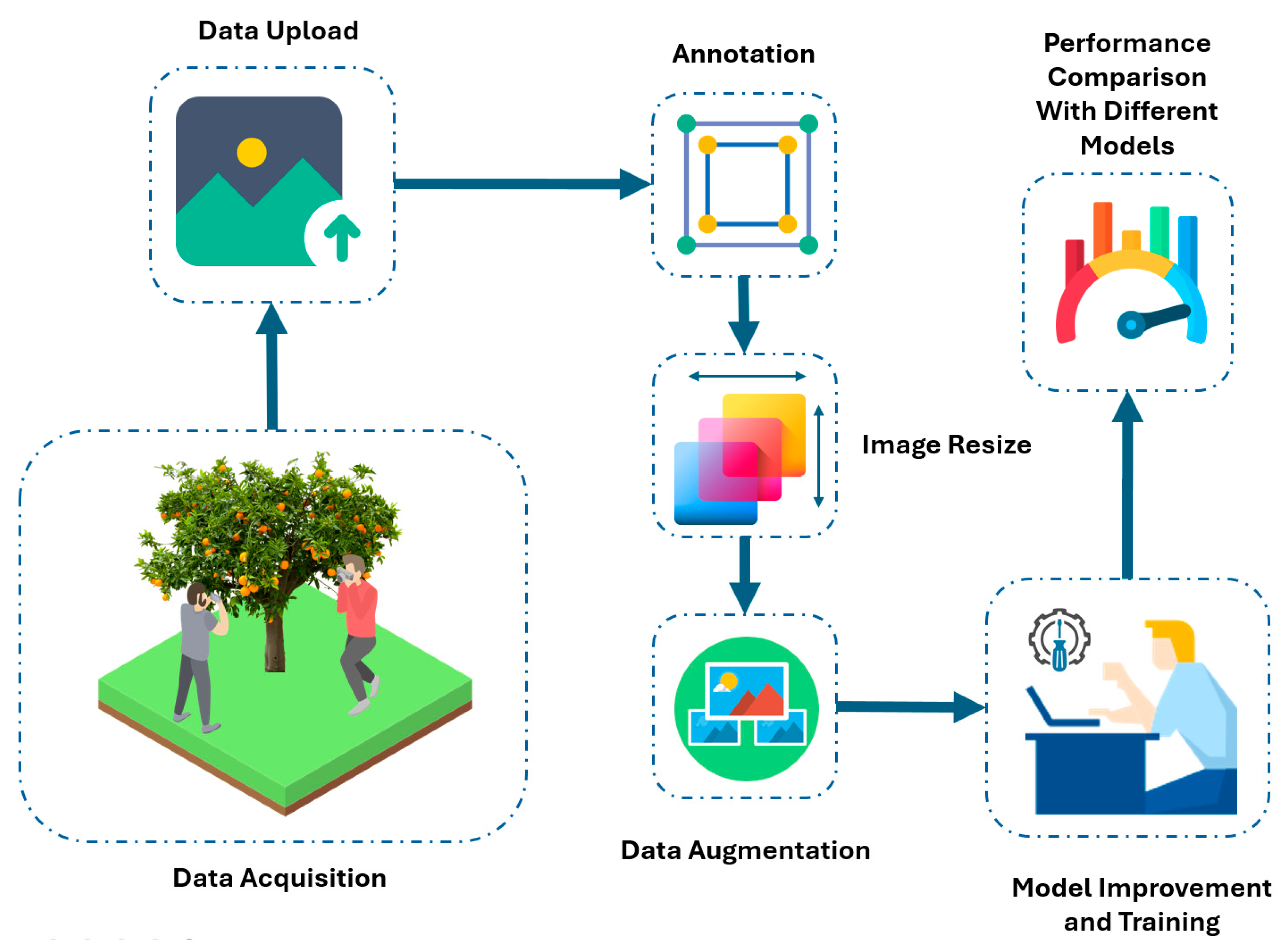

2.2. Data Acquisition

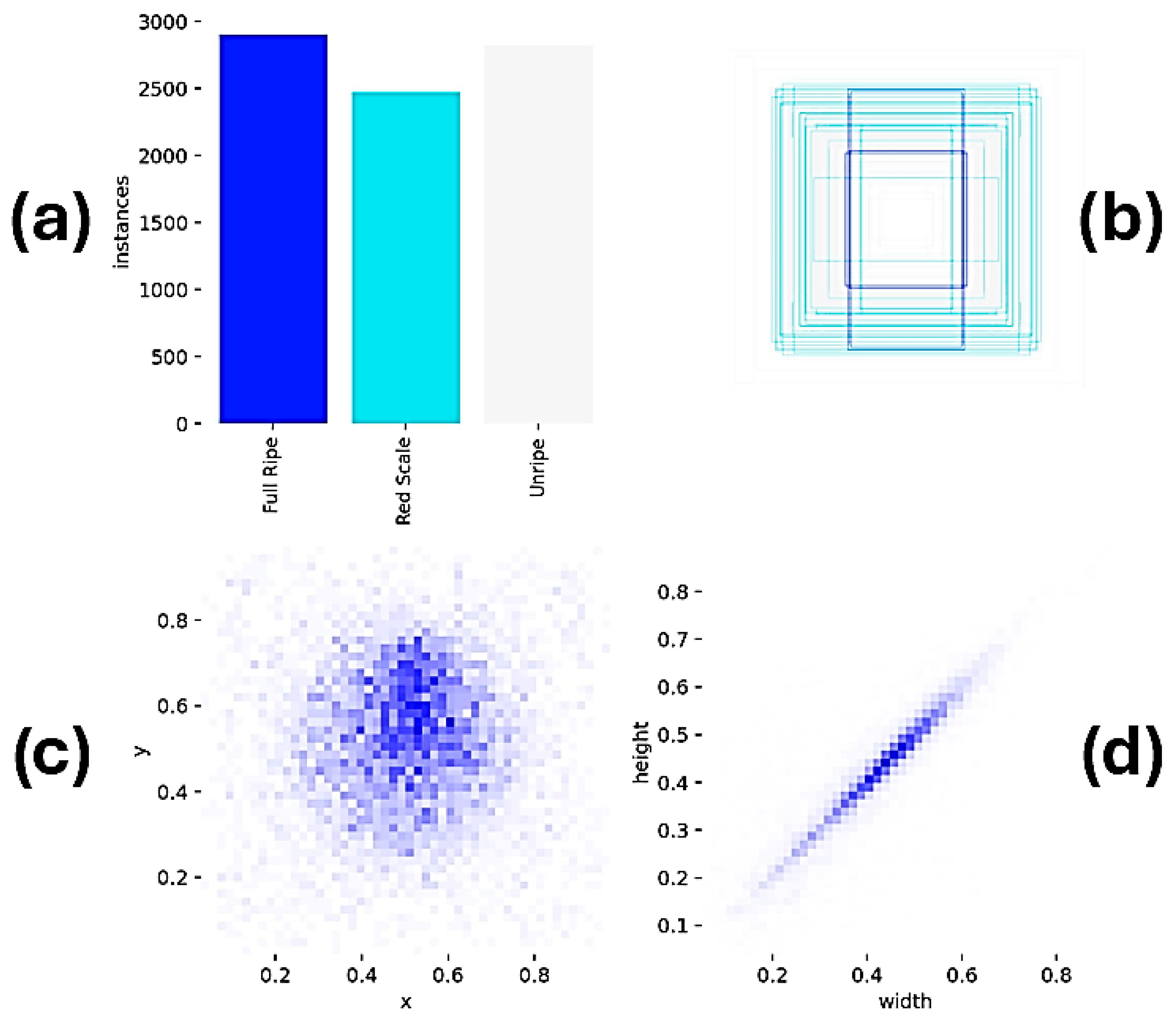

2.3. Data Preprocessing, Annotation, and Augmentation

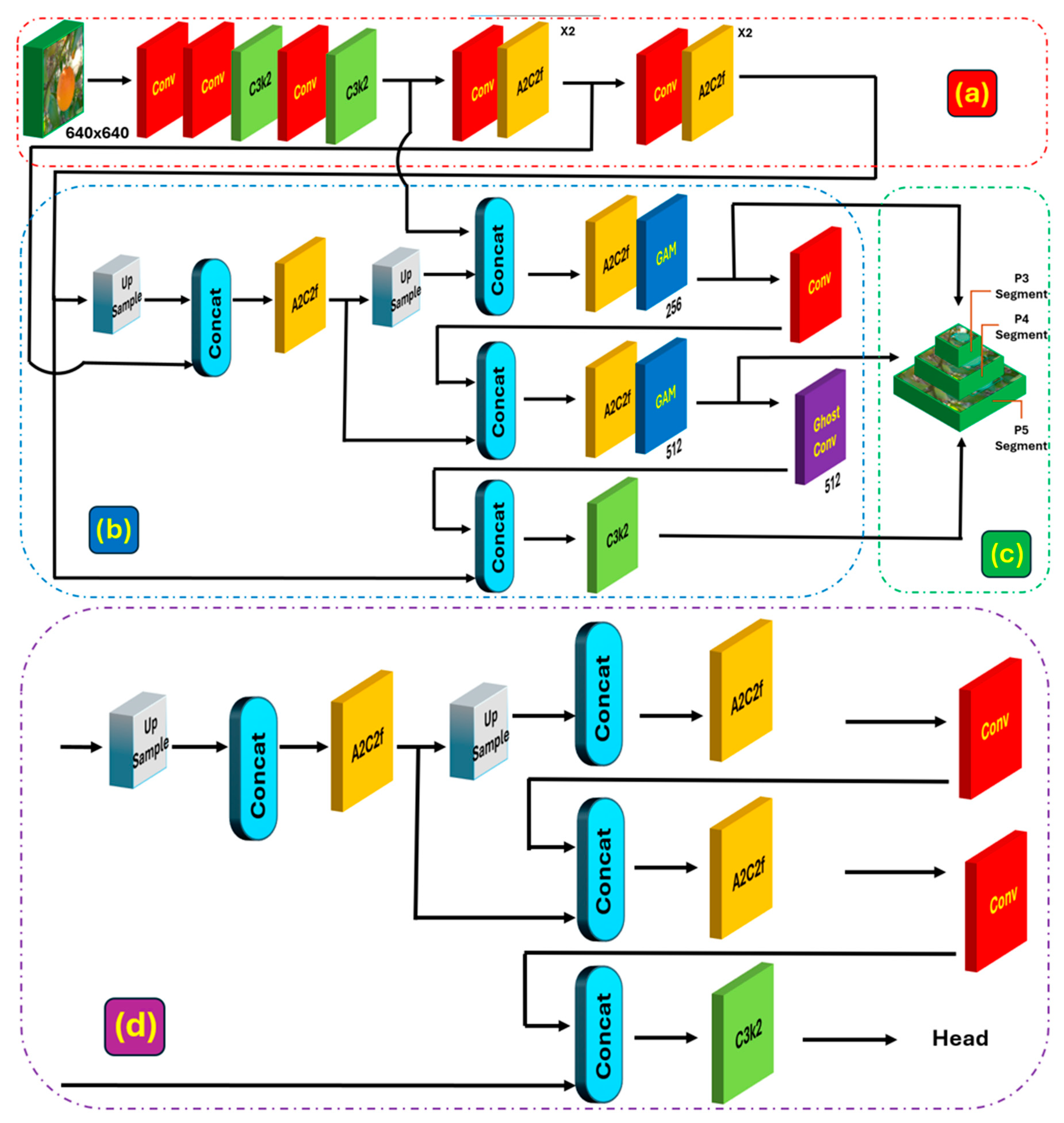

2.4. Improved Instance Segmentation Model

2.4.1. Global Attention Mechanism (GAM) Module

2.4.2. GhostConv

2.5. Experimental Environment and Parameters

2.6. Model Evaluation Indicators

3. Experimental Results and Discussion

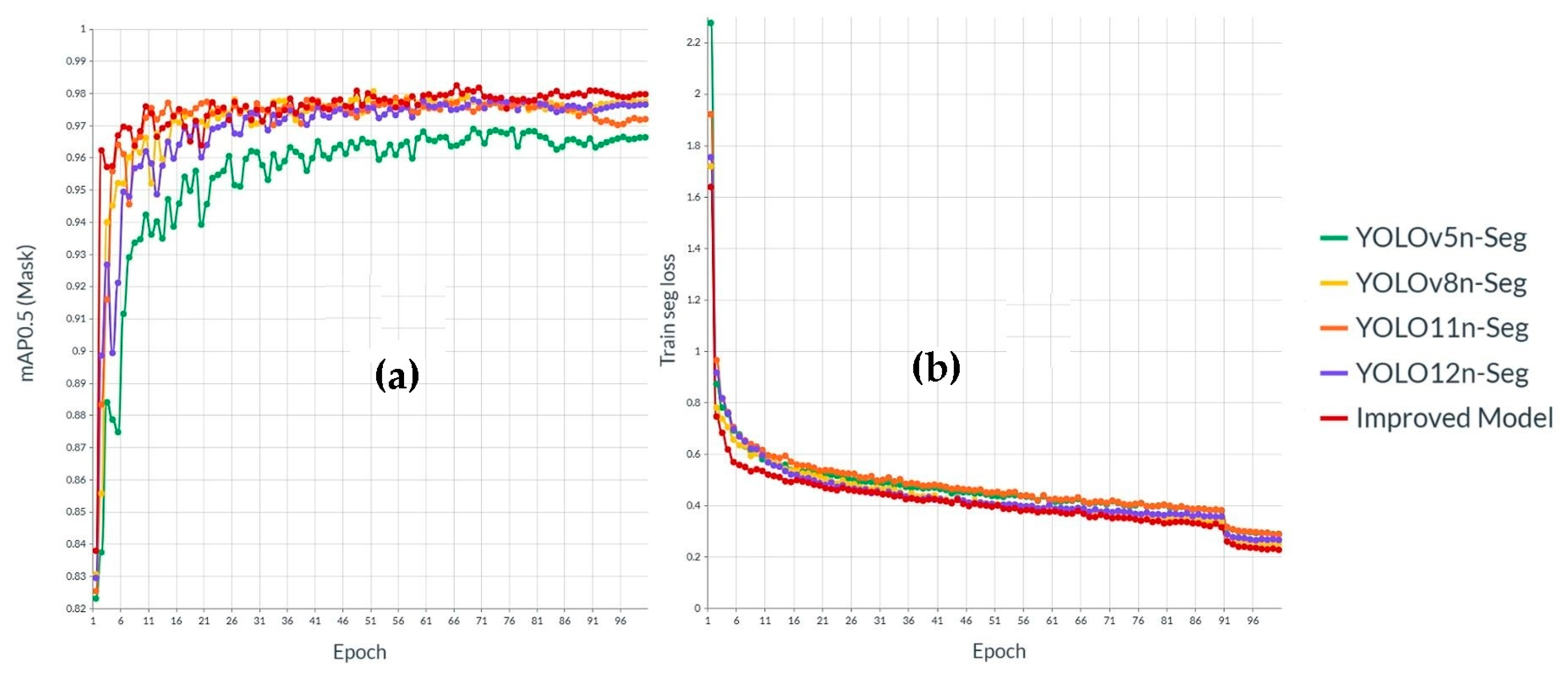

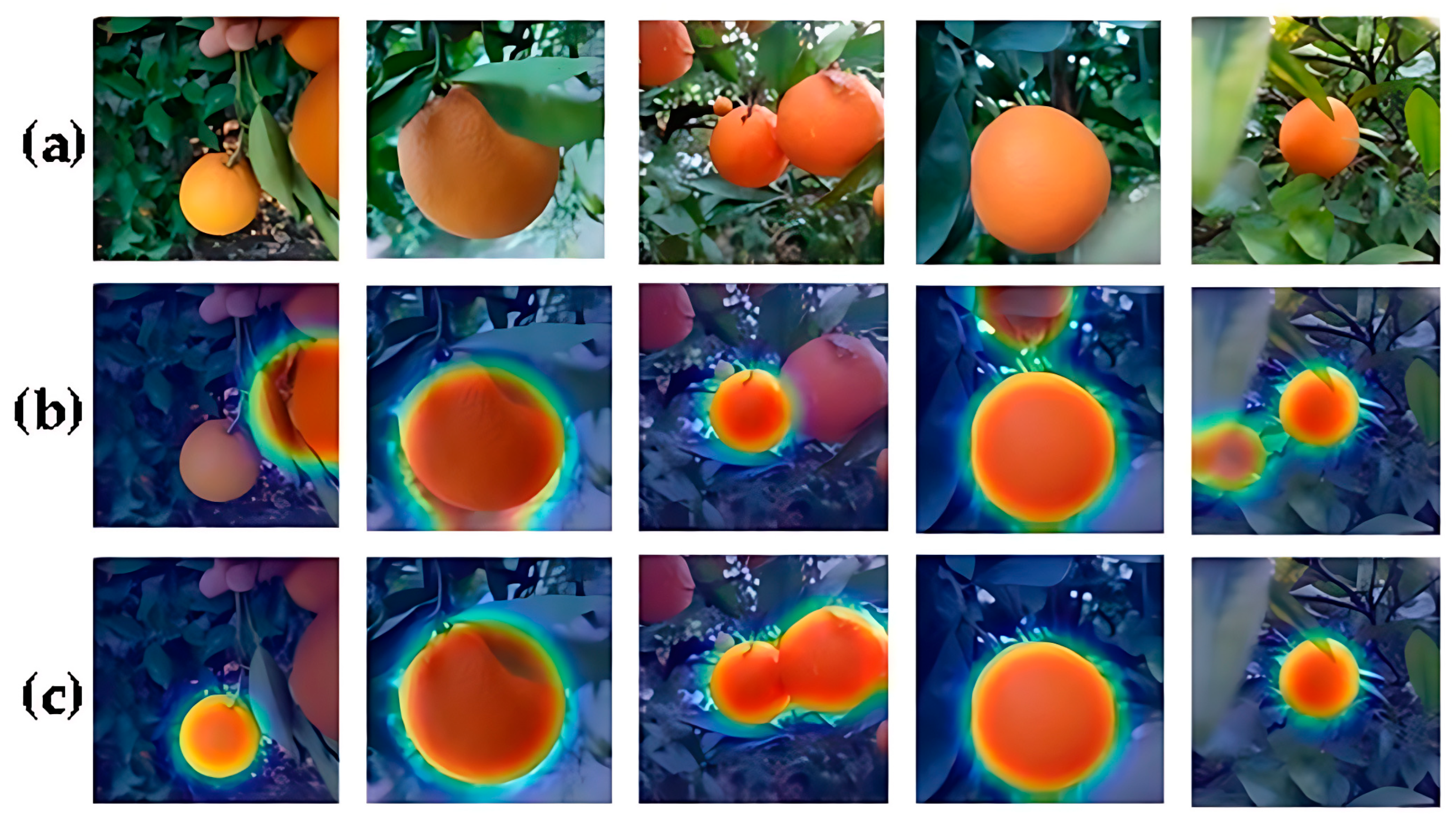

3.1. Model Results

3.2. Ablation Studies

3.3. Comparison with Different Instance Segmentation Algorithms

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Device and Software | Environmental Parameter | Value |
|---|---|---|
| Apple MacBook Pro 2012 (Apple Inc., Cupertino, CA, USA) | Operating system | Windows 10 (Microsoft Corporation, Redmond, WA, USA) |
| | CPU | Intel Core i5 (Intel Corporation, Santa Clara, CA, USA) |
| | RAM | 16 GB LPDDR3L |
| Google Colab (Mountain View, CA, USA) | Deep learning framework | PyTorch 1.10 (Meta AI, Menlo Park, CA, USA) |
| | Programming language | Python 3.10 (Python Software Foundation, Wilmington, DE, USA) |
| | Virtual RAM | 90 GB |
| | Virtual storage | 250 GB |
| Virtual GPU (NVIDIA Tesla A100) (NVIDIA Corporation, Santa Clara, CA, USA) | Memory | 40 GB |
| | Bandwidth | 1555 GB/s |
| | CUDA cores | 6912 |

| Parameters | Value |
|---|---|
| Image-size | 640 × 640 |
| Epochs | 100 |
| Batch-size | 16 |
| Momentum | 0.937 |
| lr | Auto |
| Optimizer | SGD |
| Activation function | SiLU |
| Weight_decay | 0.0005 |
| Warmup_epochs | 3 |
| Warmup_momentum | 0.8 |
| Warmup_bias_lr | 0.1 |
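
The hyperparameters in the table above can be gathered into one configuration mapping. The sketch below is a minimal illustration, assuming an Ultralytics-style training interface: the key names (`imgsz`, `batch`, `warmup_bias_lr`, and so on) follow that library's conventions and are an assumption, since the table does not name the training tool. The `validate_config` helper is hypothetical and only performs basic sanity checks. The learning rate is listed as "Auto", so no explicit value is set, and the SiLU activation is a property of the network rather than a training argument, so it is left out.

```python
# Hyperparameters copied from the table. The keys use Ultralytics-style
# argument names, which is an assumption: the table does not name the tool.
TRAIN_CONFIG = {
    "imgsz": 640,            # Image-size: 640 x 640
    "epochs": 100,
    "batch": 16,
    "optimizer": "SGD",
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "warmup_epochs": 3,
    "warmup_momentum": 0.8,
    "warmup_bias_lr": 0.1,
    # "lr" is listed as "Auto" in the table, so no explicit value is set.
}


def validate_config(cfg):
    """Run basic sanity checks (hypothetical helper) and return cfg unchanged."""
    if cfg["imgsz"] <= 0 or cfg["epochs"] <= 0 or cfg["batch"] <= 0:
        raise ValueError("imgsz, epochs and batch must be positive")
    if not 0.0 <= cfg["momentum"] < 1.0:
        raise ValueError("momentum must lie in [0, 1)")
    if cfg["warmup_epochs"] > cfg["epochs"]:
        raise ValueError("warmup cannot be longer than training")
    return cfg


if __name__ == "__main__":
    print(validate_config(TRAIN_CONFIG))
```

If the Ultralytics package is installed, a call of the form `YOLO("yolo12n-seg.yaml").train(data="citrus.yaml", **TRAIN_CONFIG)` would consume this mapping. Both file names are hypothetical placeholders, not files referenced by the source.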

| Class | Images | Mask P | Mask R | Mask mAP@0.5 | Mask mAP@0.5:0.95 |
|---|---|---|---|---|---|
| All | 614 | 0.961 | 0.943 | 0.980 | 0.960 |
| Full Ripe | 221 | 0.963 | 0.926 | 0.966 | 0.946 |
| Red Scale | 212 | 0.982 | 0.971 | 0.992 | 0.984 |
| Unripe | 224 | 0.936 | 0.933 | 0.982 | 0.949 |
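
The table reports precision (P) and recall (R) separately. A single-number summary is sometimes convenient, and the sketch below derives the F1 score (the harmonic mean of P and R) for each class. F1 is not reported in the table; it is computed here purely from the listed P and R values, and the names `MASK_PR` and `f1_score` are illustrative.

```python
# Mask precision (P) and recall (R) per class, copied from the table.
MASK_PR = {
    "All": (0.961, 0.943),
    "Full Ripe": (0.963, 0.926),
    "Red Scale": (0.982, 0.971),
    "Unripe": (0.936, 0.933),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Derived values, not figures reported by the source.
F1_BY_CLASS = {name: f1_score(p, r) for name, (p, r) in MASK_PR.items()}

if __name__ == "__main__":
    for name, value in F1_BY_CLASS.items():
        print(f"{name}: F1 = {value:.3f}")
```

On these inputs the Red Scale class has the highest derived F1 and the Unripe class the lowest, which matches the ordering of the P and R columns.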

| Baseline Model | GhostConv | GAM | mAP@0.5 | mAP@0.5:0.95 | P | R |
|---|---|---|---|---|---|---|
| YOLO12n-Seg | - | - | 0.977 | 0.949 | 0.958 | 0.941 |
| YOLO12n-Seg | ✓ | - | 0.978 | 0.959 | 0.962 | 0.933 |
| YOLO12n-Seg | - | ✓ | 0.979 | 0.955 | 0.959 | 0.945 |
| YOLO12n-Seg | ✓ | ✓ | 0.980 | 0.960 | 0.961 | 0.943 |

| Model | GFLOPs | Parameters | Train Time | Mask mAP@0.5 | Mask mAP@0.5:0.95 | Mask P | Mask R |
|---|---|---|---|---|---|---|---|
| YOLOv5n-Seg | 11 | 2.761 M | 2 h, 51 m | 0.969 | 0.951 | 0.949 | 0.931 |
| YOLOv8n-Seg | 12.1 | 3.264 M | 3 h, 21 m | 0.978 | 0.955 | 0.960 | 0.927 |
| YOLO11n-Seg | 10.2 | 2.843 M | 3 h, 2 m | 0.971 | 0.949 | 0.950 | 0.921 |
| YOLO12n-Seg | 10.3 | 2.855 M | 3 h, 9 m | 0.977 | 0.949 | 0.958 | 0.941 |
| Improved Model | 10.4 | 2.749 M | 2 h, 42 m | 0.980 | 0.960 | 0.961 | 0.943 |
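
The comparison table lists absolute values, and the relative differences between the improved model and its YOLO12n-Seg baseline can be worked out directly. The sketch below does that arithmetic on the table's own numbers; the helper names `minutes` and `pct_change` and the dictionary layout are illustrative choices, not part of the source.

```python
import re

# Values copied from the comparison table (parameters in millions).
ROWS = {
    "YOLOv5n-Seg": {"gflops": 11.0, "params_m": 2.761, "train_time": "2 h, 51 m", "map50_95": 0.951},
    "YOLOv8n-Seg": {"gflops": 12.1, "params_m": 3.264, "train_time": "3 h, 21 m", "map50_95": 0.955},
    "YOLO11n-Seg": {"gflops": 10.2, "params_m": 2.843, "train_time": "3 h, 2 m", "map50_95": 0.949},
    "YOLO12n-Seg": {"gflops": 10.3, "params_m": 2.855, "train_time": "3 h, 9 m", "map50_95": 0.949},
    "Improved Model": {"gflops": 10.4, "params_m": 2.749, "train_time": "2 h, 42 m", "map50_95": 0.960},
}


def minutes(text):
    """Convert a string such as '3 h, 9 m' into total minutes."""
    match = re.fullmatch(r"(\d+) h, (\d+) m", text)
    if match is None:
        raise ValueError(f"unrecognised duration: {text!r}")
    hours, mins = match.groups()
    return int(hours) * 60 + int(mins)


def pct_change(new, old):
    """Signed percentage change from old to new."""
    return 100.0 * (new - old) / old


if __name__ == "__main__":
    base, ours = ROWS["YOLO12n-Seg"], ROWS["Improved Model"]
    print(f"parameters: {pct_change(ours['params_m'], base['params_m']):+.1f}%")
    print(f"train time: {pct_change(minutes(ours['train_time']), minutes(base['train_time'])):+.1f}%")
    print(f"GFLOPs:     {pct_change(ours['gflops'], base['gflops']):+.1f}%")
```

With these inputs the improved model has roughly 3.7% fewer parameters and about 14.3% shorter training time than YOLO12n-Seg, while its GFLOPs figure is slightly higher (10.4 versus 10.3).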

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ünal, İ.; Eceoğlu, O. A Lightweight Instance Segmentation Model for Simultaneous Detection of Citrus Fruit Ripeness and Red Scale (Aonidiella aurantii) Pest Damage. Appl. Sci. 2025, 15, 9742. https://doi.org/10.3390/app15179742
