Abstract

Ensuring food quality and safety is a growing challenge in the food industry, where early detection of contamination or spoilage is crucial. Combining gas sensors with Artificial Intelligence (AI) offers an innovative and effective approach to food identification, improving quality control and minimizing health risks. This study evaluates food identification strategies based on supervised learning techniques applied to data collected by the BME Development Kit, which is equipped with the BME688 sensor. The dataset includes measurements of temperature, pressure, humidity, and, particularly, gas composition, enabling a comprehensive characterization of each food sample. The methodology explores two strategies: a neural network model trained with the Bosch BME AI-Studio software, and a more flexible, customizable approach that applies multiple predictive algorithms, namely decision tree (DT), logistic regression (LR), k-nearest neighbors (kNN), naive Bayes (NB), and support vector machine (SVM). The experiments analyze the effectiveness of both approaches in classifying different food samples based on gas emissions and environmental conditions. The results demonstrate that combining electronic noses (E-Noses) with machine learning (ML) provides high accuracy in food identification. While the Bosch neural network model follows a structured and optimized learning approach, the second methodology enables a more adaptable exploration of various algorithms, offering greater interpretability and customization. Both approaches yielded high predictive performance, with strong classification accuracy across multiple food samples. However, performance varies with the characteristics of the dataset and the choice of algorithm. A critical analysis suggests that optimizing sensor calibration, feature selection, and the handling of environmental parameters can further enhance accuracy. This study confirms the relevance of AI-driven gas analysis as a promising tool for food quality assessment.

1. Introduction

In recent decades, growing international awareness of the impact of air pollutants on climate and public health has driven innovation in techniques for detecting volatile organic compounds (VOCs) in the atmosphere. Identifying and measuring these compounds enhances our understanding of their roles in toxicological processes, their biological and chemical characteristics, and disease mechanisms across several disciplines, including environmental [1,2], biological [3,4,5], agricultural [6,7], and food sciences [8,9].

VOCs are classified according to their origin as either exogenous or endogenous. Exogenous VOCs originate from external influences, including environmental exposure, dietary intake, tobacco use, routine behaviors, pharmaceuticals, and microbial activity. In contrast, endogenous VOCs are closely associated with internal metabolic functions occurring within an individual’s cells and tissues [10].

Most applications in the scientific literature focus on indoor and outdoor air pollution, aiming to mitigate its adverse impacts on human health [11]. Regarding indoor air quality (IAQ), the World Health Organization (WHO) guidelines [12] cover pollutants including benzene, carbon monoxide (CO), carbon dioxide (CO2), formaldehyde, nitrogen dioxide (NO2), and polycyclic aromatic hydrocarbons (PAHs), among others, as well as particulate matter such as PM2.5 and PM10 [13].

The evolution of technology has driven significant advances in several areas, transforming how we interact with people and with our surroundings. Sophisticated sensing technologies, combined with machine learning algorithms, allow complex data to be processed and interpreted more efficiently and accurately. In the food sector, these advances have led to various solutions from different perspectives [14,15].

Food is a basic human need, so ensuring food quality and safety standards is a key priority. Many food quality detection methods are complex, time-consuming, and expensive; therefore, solutions that address these difficulties are needed [16]. Detecting food spoilage is of the utmost importance, given the irreversible effects that foodborne illnesses can have on the digestive system and human health. Consequently, the food freshness index is one of the primary parameters in food safety and quality [17]. For this purpose, the quantities to be monitored generally include semi-volatile organic compounds (sVOCs), volatile sulfur compounds (VSCs), relative humidity (RH), and temperature. Furthermore, measuring or identifying odors requires defining the following terms [1,18]: a VOC is a substance that takes part in photochemical reactions in the atmosphere; a fragrance, on the other hand, is a single chemical substance or a mixture of chemicals designed exclusively to produce a pleasant smell or to mask undesirable odors. VSCs are sulfur-based gases recognized for their strong, unpleasant smells. They may be released naturally during the breakdown of organic material, through volcanic emissions, or as byproducts of microbial metabolism [19].

Instruments that detect and measure gases and odors are called electronic noses (E-Noses), a multi-sensor sensing technology [4]. These instruments employ arrays of sensors with overlapping selectivity. Their strength lies in their ability to respond to a wide range of chemical profiles in samples; by interpreting these distinct chemical patterns, the systems can effectively categorize samples. To obtain more in-depth information while minimizing the limitations of individual detectors, data fusion techniques should be used to combine the sensor responses into a global, comprehensive signature that reflects the overall sensitivity of the device.
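As a minimal illustration of feature-level data fusion, the sketch below concatenates the responses of several sensors into a single fingerprint vector. The array shape (eight sensors, ten gas-resistance values per sensor), the random values, and the function name `fuse_sensor_readings` are hypothetical assumptions chosen for illustration. They do not describe the fusion procedure of any specific device.

```python
import numpy as np

def fuse_sensor_readings(readings: np.ndarray) -> np.ndarray:
    """Feature-level fusion (illustrative): flatten a (n_sensors, n_features)
    array into one global fingerprint of length n_sensors * n_features."""
    if readings.ndim != 2:
        raise ValueError("expected a 2-D array of shape (n_sensors, n_features)")
    # Row-major flattening: all features of sensor 0, then sensor 1, and so on
    return readings.reshape(-1)

# Hypothetical example: 8 sensors, each reporting 10 gas-resistance values (ohm)
rng = np.random.default_rng(0)
readings = rng.uniform(1e3, 1e6, size=(8, 10))
fingerprint = fuse_sensor_readings(readings)
print(fingerprint.shape)  # (80,)
```

The fused vector can then be passed to any classifier as a single sample. More elaborate fusion schemes, such as weighting or decision-level fusion, are also possible.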

Thus, to reproduce the human olfactory capacity and provide information on gas and odor fingerprints, E-Noses generally consist of two modules: a gas detection system (sensors) and an information processing system (microprocessor and identification algorithms) [20,21]. More than 30 years after the introduction of the E-Nose concept, the evolution of this technology in terms of size, cost, and power consumption is remarkable. Current devices are efficient, portable, precise, and compact, and they are used in various sectors, including the food industry, healthcare, environmental monitoring, and safety [22,23].

A systematic review, which focused on using E-Noses to evaluate the quality of food products, highlights that this sensor-based technology can effectively assess product quality. It is particularly useful in freshness evaluation, quality control, and process monitoring within the food sector [24].

The objective of this case study is to identify and classify four foods (banana, cabbage, orange, and milk) using VOCs as classification markers. The data are collected with the BME Development Kit. These four foods were selected because they are perishable and show visible changes in texture as they deteriorate (banana, orange, and cabbage) or produce distinct odors, such as the sour smell of spoiled milk. Furthermore, they are very popular and readily available. The device uses the BME688 environmental sensor to capture temperature, pressure, humidity, and gas composition, reflecting both the emissions of each food and the environmental conditions. The ability of the sensor to read electronic fingerprints and to use customized heating profiles (HPs) enhances the accuracy of gas analysis, thereby optimizing food classification. Supervised learning models are then applied to the collected dataset to distinguish the foods. Food classification is studied through two approaches: neural networks trained with the BME AI-Studio software and, in parallel, the decision tree (DT), logistic regression (LR), k-nearest neighbors (kNN), naive Bayes (NB), and support vector machine (SVM) algorithms.

2. State of the Art

Electronic nose systems are sensor-based technologies bioinspired by the human sense of smell [25], which replicate the functional stages of olfaction to detect and identify volatile compounds, making them useful for applications such as identifying food spoilage or distinguishing between bacterial infections.

An electronic nose (E-Nose) is a smart device designed to emulate the human sense of smell in the detection and analysis of odors. It consists of a sensor component and a signal processing unit. The set of sensors, which function as olfactory receptors, interacts with the molecules of the gases whose odor we want to characterize, transforming chemical interactions into electrical signals. These combined responses form a signal pattern intended to be unique for each odor. The generated signals are then refined through preprocessing, after which a pattern recognition system, analogous to the brain, uses various algorithms to interpret the data and evaluate the characteristics of the odor [26,27,28].

E-Nose technology offers an efficient solution for the real-time detection and analysis of gases and odors, which makes it versatile across applications including food, health, environmental monitoring, and safety. In food, E-Noses are used to detect contamination by identifying the odors produced by the degradation of food constituents, caused by exposure to heat or cold, and by the growth of harmful substances. In healthcare, this technology is widely used, particularly in disease prediction and the detection of microorganisms, with potential applications in medical diagnosis and the monitoring of medical conditions. E-Noses also play a predominant role in monitoring indoor and outdoor air quality. Finally, E-Noses are increasingly used in safety-critical areas, specifically in fire detection and the identification of toxic or explosive gases [29,30].

In the field of E-Noses, design is based on the ability to detect a specific odor through its electronic fingerprint in an external environment. It is therefore crucial that the sensor matrix, through its detection and identification mechanism, produces distinguishable response patterns for the various gaseous compounds. Depending on the target gases, the behavior and functionality of each sensor with respect to that target must be considered [31]. For this reason, there is a diverse set of sensors, which vary in materials, detection principles, and sensitivity. The most common sensor types used in E-Nose technology are based on surface acoustic waves (SAWs), conductive polymers (CPs), optical sensors (OSs), and metal-oxide semiconductors (MOSs), the latter representing the vast majority of solutions found in the literature [32,33].

In detecting and analyzing gaseous substances, E-Noses must ensure high accuracy, selectivity, and speed in identifying odors. Sustained performance, small sensor size, and portability are critical requirements. So is integration with AI algorithms that improve pattern recognition and make devices more effective at distinguishing similar compounds. Energy efficiency is also a crucial factor: optimized systems that support real-time operation with low energy consumption are essential [34,35]. The added value of combining E-Nose technologies and AI techniques lies in process reliability, promising results, and improved precision. The compatibility and complementarity between the two enable the development of more efficient, autonomous, and adaptable systems for various scenarios, particularly in the food industry. Thus, adopting these technologies can significantly contribute to food safety and product traceability by enabling the early detection of contamination and adulteration [36].

2.1. BME Development Kit

The BME Development Kit is a development platform designed to facilitate the creation of environmental sensing solutions. At its core, the system features the BME688, a compact, low-power gas sensor that operates over a wide temperature range (−40 °C to 85 °C), enabling its deployment in both outdoor and indoor environments. The sensor does not identify specific gases. Instead, it measures total VOCs and provides a unique profile for each gas based on conductivity changes in its metal-oxide (MOX) sensing layer. These profiles can be further processed and classified using the BME AI-Studio software (version 2.3.4) [37], which enables the training of custom models for odor detection, gas classification, or other specific application scenarios. Applications of the kit therefore include indoor and outdoor air monitoring and the detection of unpleasant odors, food spoilage, and gas leaks.

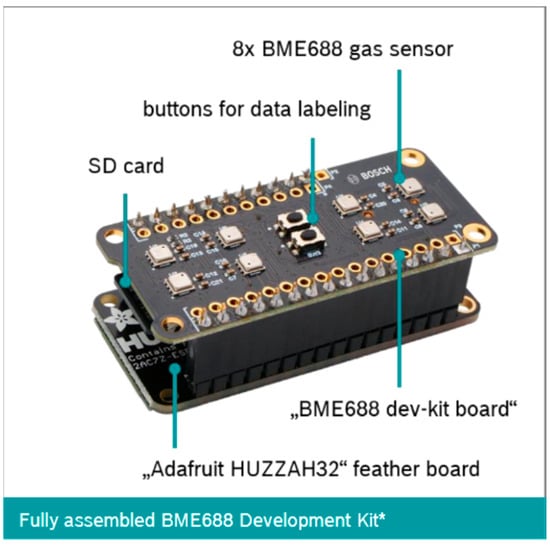

More precisely, the sensor board carries eight BME688 sensors that can detect VOCs, VSCs, and other gases, such as carbon monoxide and hydrogen. Each BME688 integrates a temperature sensor (−40 °C to 85 °C), a pressure sensor (0 to 1100 hPa), a relative humidity sensor (0% to 100%), and a metal-oxide semiconductor (MOX) gas sensor, hence the acronym TPHG (temperature, pressure, humidity, and gas). The temperature and humidity sensors are crucial because MOS sensors depend strongly on temperature and humidity; these measurements allow the operation of the BME688 to be regulated accordingly [38]. The board also has two buttons used to mark and separate the readings of each sample. Pressing a button delimits the set of data belonging to one sample, for example, when switching from a banana to an orange. The board is built around an ESP32 microcontroller (Adafruit Huzzah32, Adafruit Industries, Brooklyn, NY, USA), which is compatible with the BME688 sensor matrix, together with a micro-SD card and a CR1220 battery cell, as shown in Figure 1. The ESP32, developed by Espressif Systems, is an affordable and energy-efficient system-on-chip (SoC) with built-in Wi-Fi and support for both Bluetooth Classic and Bluetooth Low Energy (BLE). It can also communicate over the I2C and SPI interfaces, allowing data to be collected from sensors [39,40]. Its connectivity and performance make it a widely used platform for developing Internet of Things (IoT) solutions, particularly when combined with gas sensors, paving the way for smart and context-aware environmental monitoring.

Figure 1.

BME Development Kit architecture and features (* feature depends on the version).

The operation of the BME Development Kit depends on the firmware provided by Bosch [18]. Alongside the firmware, the same Bosch resource supplies a device User Guide, user licenses, and an executable flash.bat file, which we used to flash the firmware onto the BME Development Kit. The firmware is essential for performing the tasks designed for the device, including reading the sensors, storing results and settings, and responding to button inputs [38].

2.2. Machine Learning

Machine learning (ML) is a field of Artificial Intelligence that enables a system to acquire knowledge automatically, without explicit human intervention, allowing computers to learn from experience much as humans and animals do [41]. ML techniques have been successfully applied in diverse fields, including pattern recognition, computer vision, spacecraft engineering, finance, entertainment, computational biology, and biomedical and medical applications [42].

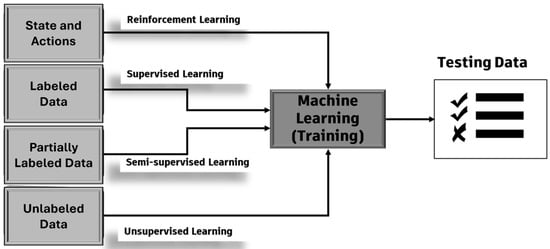

According to the learning procedure (Figure 2), ML can be classified into four distinct techniques: supervised, unsupervised, semi-supervised, and reinforcement learning [43,44].

Figure 2.

Machine learning techniques—supervised, unsupervised, semi-supervised, and reinforcement.

Supervised learning uses a set of known inputs and outputs (labeled data) to train a model that predicts the response for new data. The labeling stage plays a crucial role in this process, as it assigns categories or labels to the input data, representing the expected responses. Supervised learning comprises two types of prediction tasks: classification, which predicts discrete responses, and regression, which predicts continuous responses [41].

Supervised learning algorithms are numerous and diverse. The literature usually categorizes them according to their nature, i.e., as probabilistic, linear, or non-linear algorithms [45]. The classification methods used in this study, which are among the most frequently used, are as follows:

- Decision Tree (DT) is an algorithm that builds a tree-like structure, where each internal node represents a decision based on a specific attribute, while the leaf nodes denote the predicted outcome or class [6]. DTs are highly interpretable, making them ideal for understanding decision-making processes [45,46].

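- Logistic Regression (LR) is a statistical method for predicting binary outcomes. It models the probability of an event occurring, making it suitable for classification problems where the response variable is categorical [43,45]. For multi-class problems, such as the four food classes considered here, LR is extended through one-vs-rest or multinomial (softmax) formulations.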

- k-Nearest Neighbors (kNN) is a simple and intuitive non-parametric classification algorithm that assigns a data point to the majority class among its nearest neighbors, which are identified with a distance metric [45,47]. In odor classification, the sensor outputs are represented as feature vectors, and a distance measure is used to locate the k most similar odor samples within that feature space.

- Naive Bayes (NB) is a probabilistic algorithm that calculates the likelihood that a given input will belong to a specific class based on Bayes’ theorem, assuming feature independence. NB is primarily useful when there is a high number of input features and the dataset is sparse [45,46].

- Support Vector Machine (SVM) can be used for both linear and non-linear classification tasks, making it highly adaptable to various real-world applications. The algorithm seeks the separating hyperplane that maximizes the margin between classes. This margin maximization enables SVMs to generalize well, and, combined with kernel functions, it allows them to handle data that are not linearly separable [26,28].
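The five classifiers above can be compared side by side with scikit-learn. The sketch below is a minimal illustration that makes several assumptions. It uses synthetic four-class data from `make_classification` as a stand-in for the TPHG readings. It also uses hyperparameters that are common defaults (k = 5 neighbors, an RBF kernel for SVM, Gaussian naive Bayes) rather than the settings used in this study.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the dataset: 4 features (T, P, H, G) and 4 classes
X, y = make_classification(
    n_samples=1000, n_features=4, n_informative=4, n_redundant=0,
    n_classes=4, n_clusters_per_class=1, random_state=42,
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=42, stratify=y
)

# Assumed hyperparameters, chosen for illustration only
models = {
    "DT": DecisionTreeClassifier(random_state=42),
    "LR": LogisticRegression(max_iter=1000),  # multinomial for multi-class data
    "kNN": KNeighborsClassifier(n_neighbors=5),
    "NB": GaussianNB(),
    "SVM": SVC(kernel="rbf"),
}

accuracies = {}
for name, model in models.items():
    model.fit(X_train, y_train)
    accuracies[name] = model.score(X_test, y_test)  # mean accuracy on the test set
    print(f"{name}: {accuracies[name]:.3f}")
```

Because every estimator shares the same `fit`/`score` interface, swapping algorithms requires no change to the surrounding code. This uniform interface is what makes such a multi-algorithm comparison convenient.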

2.3. Comparative Analysis of E-Nose and ML Integration

An E-Nose was integrated with gas chromatography–mass spectrometry (GC-MS) to investigate the influence of enriched CO2, water, and nitrogen on the aroma profiles of tomatoes. This system identified key volatile compounds that significantly affect tomato quality, including those that influence flavor and freshness. By analyzing these compounds, the research revealed how enriched CO2 application alters the release patterns of specific aroma compounds. These findings provide valuable insights into tomato post-harvest preservation and quality control, offering new perspectives for improving storage and extending shelf life in the agricultural and food industries [48].

Another study developed a portable, chip-based E-Nose for the quick and accurate evaluation of essences. This system uses high-performance sensors to detect volatile compounds efficiently, offering a robust and reliable approach to essence analysis. Its portable design allows the E-Nose to be applied in various industries, from food quality control to fragrance testing. The findings highlight that the system provides fast and reliable results, making it well suited to real-time essence evaluation, with potential applications in multiple sectors, including environmental monitoring and industrial testing [49].

A study on cow milk examined its high susceptibility to spoilage, which can negatively affect its quality. The study highlights the identification of aromas through VOCs, which serve as indicators of changes in milk quality during deterioration. To address this issue, it analyzed whether different milk samples (fresh, pasteurized, and UHT) could be distinguished by their electronic signatures. The milk types were distinguished using classification models trained on data collected by the BME Development Kit [50].

The BME Development Kit was also employed to detect and differentiate various beverages, such as beer, Pepsi, and alcohol. Combining the data collected by the platform with different machine learning models demonstrates the potential of this approach for identifying beverages based on their unique odor profiles [39]. In another study, the BME Development Kit was used to acquire ten resistance values from the BME688 sensor in response to gas exposure. These resistance values, which vary with the heating profile and with the gas present, enable the mapping of distinct fingerprints to detect fruits and vegetables. Multiple readings of different spoilage states were collected and used to train a neural network, with the resistance values serving as its inputs [51].

3. Methodology

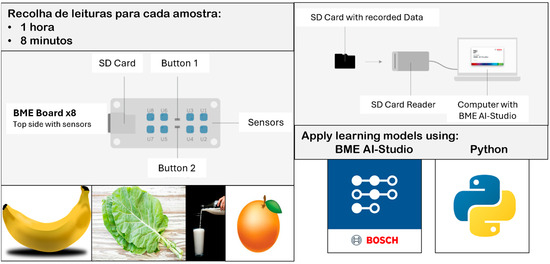

The methodology adopted in this case study applies supervised learning. The food identification process follows the procedure presented in the BME AI-Studio Manual [37]. First, the BME Development Kit is used to collect readings for each food. Classes are then assigned to the measurements corresponding to banana, cabbage, orange, and milk (BCOM). Finally, classification models are trained on the data measured by the BME Development Kit.

As mentioned, the learning models are applied through two strategies: the BME AI-Studio tool and a proposed methodology based on different classification algorithms. The data consist of TPHG (temperature, pressure, humidity, and gas) readings measured by the eight BME688 sensors. These readings form the dataset used to train the food classification and identification models with supervised learning, as shown in Figure 3.

Figure 3.

Food identification process.

3.1. Data Acquisition and Collection

Data were acquired inside a container measuring 18 × 10 × 20 cm, in which each of the four food items (banana, cabbage, orange, and milk) was placed individually together with the BME Development Kit. The experiments were conducted under uncontrolled indoor ambient conditions, with no environmental regulation such as temperature or airflow control. This setup was intentionally chosen to replicate realistic usage scenarios for the sensor platform. Configuring the BME Development Kit is a crucial step, as it involves setting three key operational parameters: the heating profiles (HPs); the ratio of active to inactive periods (RDC), covering the scanning and rest phases; and the allocation of these settings across the eight onboard sensors (the layout). These parameters are configured in the BME AI-Studio software (version 2.3.4), which produces a .bmeconfig file.

Each sensor in the array can be configured using various parameters, including the heater profile (HP) and duty cycle (DC). In the BME688 sensor, the heater profile precisely regulates the temperature cycles applied to the metal-oxide (MOX) gas-sensitive layer. This thermal regulation is essential because the temperature of the sensing surface strongly influences the ability of MOX sensors to detect gases [8].

In this study, the eight sensors were configured to collect data according to the standard configuration recommended by Bosch Sensortec (Kusterdingen, Germany), namely the HP-345/RDC-5-10 combination. The manufacturer also recommends stabilizing and calibrating the platform sensors beforehand by operating them for 12 h with the HP-001 profile [37,39].
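To make the heater-profile and duty-cycle concepts concrete, the sketch below models a heater profile as a sequence of temperature steps and a duty cycle as a number of scanning cycles followed by sleeping cycles. Several elements here are assumptions, not Bosch specifications. The temperatures and durations are hypothetical and do **not** reproduce the actual HP-345 definition. Reading "RDC-5-10" as 5 scanning cycles followed by 10 sleeping cycles is our interpretation. The class and function names are illustrative.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class HeaterStep:
    temperature_c: int  # heater set-point for this step (°C)
    duration_ms: int    # time spent at this set-point (ms)

@dataclass(frozen=True)
class DutyCycle:
    scanning_cycles: int  # consecutive heater-profile cycles with measurements
    sleeping_cycles: int  # consecutive cycles with the heater off

    def active_fraction(self) -> float:
        """Fraction of cycles in which the sensor is actively scanning."""
        return self.scanning_cycles / (self.scanning_cycles + self.sleeping_cycles)

def profile_duration_ms(steps: list[HeaterStep]) -> int:
    """Total duration of one heater-profile cycle."""
    return sum(step.duration_ms for step in steps)

# HYPOTHETICAL heater profile: illustrative values, NOT Bosch's HP-345 definition.
# One gas-resistance value is read per step, giving a 5-value fingerprint per cycle.
example_profile = [
    HeaterStep(100, 140), HeaterStep(200, 140), HeaterStep(320, 140),
    HeaterStep(200, 140), HeaterStep(100, 140),
]
# ASSUMED reading of "RDC-5-10": 5 scanning cycles followed by 10 sleeping cycles
rdc = DutyCycle(scanning_cycles=5, sleeping_cycles=10)

print(profile_duration_ms(example_profile))  # 700
print(round(rdc.active_fraction(), 3))       # 0.333
```

Under this reading, the sensor scans during one third of its cycles. A lower duty cycle reduces heater self-heating and power consumption at the cost of fewer readings per hour.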

No long-term drift correction was required as the experiments were conducted within a short timeframe. Nevertheless, MOS sensors are known to be prone to drift due to aging and contamination; therefore, periodic calibration and drift compensation strategies will be essential in long-term applications, which we plan to address in future work.

Readings were then recorded for 1 h 8 min for each food using the HP-345/RDC-5-10 configuration. In this context, a reading for a given food recorded by the BME Development Kit corresponds to a vector of TPHG (temperature, pressure, humidity, and gas) variables. Table 1 presents the number of data samples collected for each food item. Although exact uniformity was not achieved, care was taken to ensure an approximately balanced distribution across the four classes, minimizing the risk of bias during model training.
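One simple way to quantify an "approximately balanced" distribution is to compute the per-class proportions and the imbalance ratio, defined as the largest class count divided by the smallest. The sketch below assumes a pandas label column. The class counts in it are **hypothetical placeholders**, not the values reported in Table 1, and the helper name `class_balance` is illustrative.

```python
import pandas as pd

def class_balance(labels: pd.Series) -> tuple[pd.Series, float]:
    """Return per-class proportions and the imbalance ratio
    (largest class count / smallest class count; 1.0 means perfectly balanced)."""
    counts = labels.value_counts()
    return counts / counts.sum(), counts.max() / counts.min()

# HYPOTHETICAL label column: placeholder counts, not the values in Table 1
labels = pd.Series(
    ["banana"] * 100 + ["cabbage"] * 110 + ["orange"] * 95 + ["milk"] * 105
)
proportions, ratio = class_balance(labels)
print(proportions.round(3))
print(f"imbalance ratio: {ratio:.3f}")
```

An imbalance ratio close to 1 indicates that no class dominates training. Larger ratios are usually addressed with class weighting or stratified splitting.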

Table 1.

Number of samples collected per food class: banana, cabbage, orange, and milk.

3.2. Importing the Data

The data for the different foods are automatically stored on the SD card of the BME Development Kit. After the individual readings of each food, the next step is to import the TPHG data through a card reader. The device firmware creates three files: .bmeconfig, .bmelabelinfo, and .bmerawdata [37].

The .bmerawdata file contains general information about the readings, i.e., the operating configuration and the raw data gathered by the BME Development Kit. This file uses a JSON format structured in four parts, which store the platform configuration and the collected data [37]:

- Part one: configHeader;

- Part two: configBody;

- Part three: rawDataHeader;

- Part four: rawDataBody.

The collected data reside in the rawDataBody section, which is divided into two parts. The first part, dataColumns, describes the columns of the second part, dataBlock, which contains the raw data in condensed form. Each entry within dataColumns describes one of the twelve data columns corresponding to the variables obtained by the platform [37].

Two different strategies were used to import and handle this data:

- BME AI-Studio approach: Data were imported through the Import Data interface provided by the AI-Studio software. After the .bmerawdata file is unzipped, the software directly recognizes and interprets its internal structure, streamlining model training and evaluation within the tool.

- Proposed methodology: To enable independent analysis outside the Bosch platform, a custom MATLAB script was developed to parse the JSON structure, extract the relevant information from the dataColumns and dataBlock fields, and reconstruct the dataset in tabular form. This process transformed the raw data into a structured format suitable for preprocessing, labeling, and training with various classification algorithms.
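The parsing step can also be sketched in Python. The sketch below is not the authors' MATLAB script. It rests on an **assumption** about the file layout: that each entry in dataColumns carries a "name" and a 1-based "colId", and that each row of dataBlock lists its values in colId order. The column names in the synthetic sample are hypothetical. Check these key names against a real .bmerawdata file before relying on the sketch.

```python
import json

import pandas as pd

def load_bmerawdata(text: str) -> pd.DataFrame:
    """Rebuild a table from the rawDataBody section of a .bmerawdata JSON string.

    ASSUMPTION: each dataColumns entry has "name" and a 1-based "colId",
    and each row of dataBlock lists its values in colId order.
    """
    body = json.loads(text)["rawDataBody"]
    columns = sorted(body["dataColumns"], key=lambda c: c["colId"])
    names = [c["name"] for c in columns]
    return pd.DataFrame(body["dataBlock"], columns=names)

# Tiny synthetic file content (real files have twelve columns and many rows)
sample = json.dumps({
    "rawDataBody": {
        "dataColumns": [
            {"name": "Sensor Index", "colId": 1},
            {"name": "Temperature", "colId": 2},
            {"name": "Gas Resistance", "colId": 3},
        ],
        "dataBlock": [[0, 24.5, 152000.0], [1, 24.6, 148500.0]],
    }
})
df = load_bmerawdata(sample)
print(df)
```

For a real file, the string would come from reading the (unzipped) .bmerawdata file, for example with `open(path).read()`.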

The imported dataset contains a total of 39,583 readings across the four food classes, each consisting of a multi-dimensional vector of TPHG variables. This volume of data provides a solid foundation for supervised learning. Additionally, the standardized JSON format used for raw data storage facilitates interoperability between software tools and allows flexible data manipulation and analysis outside the Bosch proprietary platform.

3.3. Data Labeling

Data labeling is a fundamental process in ML. It consists of assigning labels or tags to the data used to train the models. These labels typically describe characteristics or properties of the data, and they allow learning algorithms to recognize patterns and make decisions based on the provided information [45].

Depending on the learning task, labels may be classes, categories, or numerical values, among others. Because this process is so important, careful and consistent labeling is needed to avoid errors and ambiguities, which highlights the strong relationship between data labeling and the predictive quality of the models [43].

In both approaches, data labeling takes place after the data import, using the same tools: the BME AI-Studio interface and, for the proposed methodology, the repmat function in MATLAB (version R2020b).
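The repmat-based labeling can be mirrored in Python by repeating each class label once per reading of that food and stacking the blocks. The sketch below is a hypothetical equivalent, not the study's code. The TPHG values, the column names (`T`, `P`, `H`, `G`), and the helper `label_blocks` are illustrative.

```python
import numpy as np
import pandas as pd

def label_blocks(blocks: dict[str, np.ndarray]) -> pd.DataFrame:
    """Stack per-food reading blocks and attach a class label to every row."""
    frames = []
    for label, readings in blocks.items():
        frame = pd.DataFrame(readings, columns=["T", "P", "H", "G"])
        # [label] * n plays the role of MATLAB's repmat(label, n, 1)
        frame["label"] = [label] * len(frame)
        frames.append(frame)
    return pd.concat(frames, ignore_index=True)

# Hypothetical TPHG blocks: 3 banana readings and 2 milk readings
blocks = {
    "banana": np.array([[24.1, 1012.0, 45.0, 1.2e5]] * 3),
    "milk": np.array([[24.3, 1012.1, 47.0, 9.8e4]] * 2),
}
dataset = label_blocks(blocks)
print(dataset["label"].tolist())  # ['banana', 'banana', 'banana', 'milk', 'milk']
```

Because each block corresponds to one button-delimited recording, labeling by block assigns every reading in that recording to the same food class.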

The accuracy and reliability of the data labeling process are crucial as they directly affect the performance of the trained models. Precise and consistent labeling ensures that the learning algorithms can correctly associate sensor readings with their respective classes, thereby improving the predictive capability and generalization of the classification models. Inaccurate or inconsistent labeling could introduce noise and bias, negatively impact model accuracy, and lead to unreliable predictions.

3.4. Training and Evaluation Metrics

The procedures for applying the learning models, both in the BME AI-Studio interface and in the methodology based on the five algorithms (DT, LR, kNN, NB, and SVM), are described below.

In BME AI-Studio, the internal neural network architecture is pre-configured by Bosch and cannot be fully customized by the user. However, several hyperparameters can be adjusted, including the batch size (4–64), the number of training epochs, and the ratio between the training and validation sets. During training, a class-weighted cross-entropy loss was applied to promote balanced learning across categories. These details are relevant for assessing the fairness of the comparison between the neural network and the traditional ML models.
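A class-weighted cross-entropy loss multiplies each sample's loss by a weight that depends on its true class, so that rarer classes contribute more. The sketch below illustrates the idea in NumPy. It relies on two **assumptions**, since the exact scheme used inside BME AI-Studio is not documented here. First, the weights use the common "balanced" heuristic, where the weight of class c is N / (C · n_c). Second, the loss is averaged by the sum of the sample weights. The labels and probabilities are hypothetical.

```python
import numpy as np

def balanced_class_weights(y: np.ndarray, n_classes: int) -> np.ndarray:
    """w_c = N / (C * n_c): rarer classes receive larger weights."""
    counts = np.bincount(y, minlength=n_classes)
    if np.any(counts == 0):
        raise ValueError("every class must appear at least once")
    return len(y) / (n_classes * counts)

def weighted_cross_entropy(probs: np.ndarray, y: np.ndarray, weights: np.ndarray) -> float:
    """Class-weighted cross-entropy, averaged by the sum of the sample weights.

    probs[i, c] is the predicted probability of class c for sample i.
    """
    sample_w = weights[y]                          # weight of each sample's true class
    log_p = np.log(probs[np.arange(len(y)), y])    # log-probability of the true class
    return float(-(sample_w * log_p).sum() / sample_w.sum())

y = np.array([0, 0, 0, 1])                 # hypothetical labels: class 1 is rarer
w = balanced_class_weights(y, n_classes=2)  # approximately [0.667, 2.0]
probs = np.array([[0.9, 0.1], [0.8, 0.2], [0.7, 0.3], [0.4, 0.6]])
loss = weighted_cross_entropy(probs, y, w)
print(w, loss)
```

With these weights, the single class-1 sample carries as much total weight as the three class-0 samples combined. This prevents the majority class from dominating the gradient.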

The software was developed specifically for these applications, integrating ML logic into BME AI-Studio through the training of optimized neural networks. After classes are assigned and data selected, machine learning models are created through the “New Algorithm” menu of the interface. This option opens the environment in which the classification model for the four foods is trained and subsequently validated. The next step is to select the properly labeled classes corresponding to each food, which defines the grouping of data that the algorithm uses to train the model. The model is then trained by selecting the “Train Neural Net” option, which starts the training and evaluation of the prediction model.

Various parameters can be adjusted in the software to improve the prediction model. Examples include the selection of channels from the collected data, the batch size (between 4 and 64), the percentage split between training and validation data, and the number of training rounds [39].

The proposed methodology uses Python (version 3.12.7) to train and validate various classification models, following a customized and diversified approach. This strand builds on the book “Machine Learning Algorithms from Scratch” by Jason Brownlee [52], whose organization and structure it follows.

Applying learning models involves fundamental steps for constructing robust and efficient models. These steps generally include data splitting, data transformation, and evaluation metrics:

- Data Splitting—the process of splitting data into a set of training and test data.

- Data Transformation—operations and transformations on data that define and complete its structure.

- Evaluation Metrics—evaluation criteria and metrics that calculate the performance of algorithms.

The experiments were conducted within the Anaconda distribution environment (Python 3.12.7) using key packages—scikit-learn 1.5.1, numpy 1.26.4, and pandas 2.2.2. To ensure reproducibility of the results, a fixed random seed (random_seed = 42) was consistently applied across all data-splitting procedures. For every method employed, a test set size corresponding to 30% of the total dataset was maintained. Additionally, where applicable, the number of splits was set to 10, enabling a robust evaluation of model performance through multiple randomized partitions.

Data splitting is a simple method that allows us to evaluate the performance of learning algorithms. The split techniques used are train-test, k-fold cross-validation, and shuffle split.

- Train-Test Split: Split the dataset into two sets: training and test. Generally, the split ratio takes the values 70–30% or 80–20%, with the training set having a greater amount of data.

- K-Fold Cross-Validation: The data are split into k equal parts (folds); k − 1 folds are used to train the model and the remaining fold is used for testing, and the process is repeated until each fold has been used once as the test set.

- Shuffle Split: A combination of the two previous techniques, in which the data are randomly split k times, each time into a training set and a test set using fixed proportions, for example, 70–30%.
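A minimal scikit-learn sketch of the three splitting methods with the settings reported above (seed 42, 30% test size, 10 splits) is shown below. The data are placeholders, and `shuffle=True` for `KFold` is an assumption, since scikit-learn only uses `random_state` for k-fold when shuffling is enabled. Note that in k-fold the held-out fraction is fixed by the number of folds (1/10 with 10 splits), so the 30% test size applies to the train-test and shuffle-split methods.

```python
import numpy as np
from sklearn.model_selection import KFold, ShuffleSplit, train_test_split

RANDOM_SEED = 42  # fixed seed, as reported in the text
TEST_SIZE = 0.3   # 30% test set
N_SPLITS = 10     # number of splits where applicable

# Placeholder data standing in for the TPHG feature table (hypothetical shapes).
X = np.arange(200, dtype=float).reshape(100, 2)
y = np.repeat([0, 1, 2, 3], 25)

# 1) Train-test split: a single 70-30 partition.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=TEST_SIZE, random_state=RANDOM_SEED
)

# 2) K-fold: each of the 10 folds is held out exactly once.
kfold = KFold(n_splits=N_SPLITS, shuffle=True, random_state=RANDOM_SEED)
kfold_test_sizes = [len(test_idx) for _, test_idx in kfold.split(X)]

# 3) Shuffle split: 10 independent random 70-30 partitions; test sets may overlap.
shuffle = ShuffleSplit(n_splits=N_SPLITS, test_size=TEST_SIZE, random_state=RANDOM_SEED)
shuffle_test_sizes = [len(test_idx) for _, test_idx in shuffle.split(X)]
```

With 100 placeholder samples, the train-test and shuffle-split test sets contain 30 samples each, while every k-fold test fold contains 10.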

Applying several data transformations makes it possible to verify whether they improve the results obtained with the different algorithms. The original data do not always provide the best conditions for optimizing the performance of learning algorithms, although in some cases the raw data do lead to better results [52]. In addition to the original data, the case study uses three transformations: the Min-Max scaler, the standard scaler, and the normalizer.

- Min-Max Scaler: Normalization process that scales the data between a specific interval, for example, [0, 1];

- Standard Scaler: Standardization process that rescales each feature to a mean μ = 0 and a standard deviation σ = 1;

- Normalizer: Normalization process that adjusts each data vector in the dataset to have a unit norm.
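The three transformations map directly onto scikit-learn's `MinMaxScaler`, `StandardScaler`, and `Normalizer`; the sketch below applies them to a small hypothetical matrix. The first two operate per feature (column), whereas `Normalizer` operates per sample (row), rescaling each vector to unit L2 norm.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, Normalizer, StandardScaler

# Toy feature matrix: rows are samples, columns are features (hypothetical values).
X = np.array([[1.0, 200.0],
              [2.0, 400.0],
              [3.0, 600.0]])

X_minmax = MinMaxScaler(feature_range=(0, 1)).fit_transform(X)  # per column, into [0, 1]
X_standard = StandardScaler().fit_transform(X)                  # per column, mean 0 and std 1
X_normalized = Normalizer(norm="l2").fit_transform(X)           # per row, unit Euclidean norm
```

In a full pipeline the two scalers would normally be fitted on the training portion only and then applied to the test portion, so that test-set statistics do not leak into training.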

The methodology is implemented using a Python program to train and validate the DT, LR, kNN, NB, and SVM algorithms. This process generates 60 distinct prediction models (5 × 4 × 3 = 60), resulting from the combination of five learning algorithms, four dataset variations—including the original dataset and its transformed versions using Min-Max scaler, standard scaler, and normalizer—and three data-splitting methods: train-test, k-fold, and shuffle split.
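The 5 × 4 × 3 grid can be sketched as a loop over scikit-learn pipelines. This is a minimal illustration on synthetic data from `make_classification`, not the study's script; the default hyperparameters of each classifier, `GaussianNB` as the Naive Bayes variant, and reporting the mean accuracy over folds for k-fold and shuffle split are all assumptions. Wrapping each transformation and classifier in a `Pipeline` ensures that the scaler is refitted on the training portion of every split.

```python
from itertools import product

from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import KFold, ShuffleSplit, cross_val_score, train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, Normalizer, StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

SEED = 42

# Synthetic 4-class stand-in for the TPHG dataset (hypothetical; not the study's data).
X, y = make_classification(n_samples=300, n_features=4, n_informative=3, n_redundant=0,
                           n_classes=4, n_clusters_per_class=1, random_state=SEED)

ALGORITHMS = {  # five learners with (assumed) default hyperparameters
    "DT": lambda: DecisionTreeClassifier(random_state=SEED),
    "LR": lambda: LogisticRegression(max_iter=1000),
    "kNN": lambda: KNeighborsClassifier(),
    "NB": lambda: GaussianNB(),
    "SVM": lambda: SVC(),
}
TRANSFORMS = {  # "passthrough" leaves the original data unchanged
    "original": lambda: "passthrough",
    "minmax": MinMaxScaler,
    "standard": StandardScaler,
    "normalizer": Normalizer,
}
SPLITS = ("train-test", "k-fold", "shuffle")


def evaluate(model, method):
    """Return the (mean) test accuracy of a pipeline under one splitting method."""
    if method == "train-test":
        X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, random_state=SEED)
        return model.fit(X_tr, y_tr).score(X_te, y_te)
    if method == "k-fold":
        cv = KFold(n_splits=10, shuffle=True, random_state=SEED)
    else:
        cv = ShuffleSplit(n_splits=10, test_size=0.3, random_state=SEED)
    return cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()


# One accuracy value per (algorithm, transformation, split) combination: 5 x 4 x 3 = 60.
results = {
    (alg, tr, split): evaluate(
        Pipeline([("transform", TRANSFORMS[tr]()), ("clf", ALGORITHMS[alg]())]), split)
    for alg, tr, split in product(ALGORITHMS, TRANSFORMS, SPLITS)
}
top5 = sorted(results.items(), key=lambda kv: kv[1], reverse=True)[:5]
```

Sorting `results` by accuracy yields a ranking of the same kind as the top-five selection reported in Table 3.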

To provide a clearer understanding of the methodological distinctions between the two strategies adopted in this study, Table 2 presents a comparative overview of the main stages involved, including data import, labeling, and model training configuration. The BME AI-Studio strategy is characterized by its simplicity and automation, streamlining the entire process through predefined tools and limited user intervention. In contrast, the proposed methodology offers significantly greater flexibility and control over each stage of the pipeline, at the cost of increased implementation complexity and manual configuration. This trade-off reflects the varying levels of user involvement and adaptability, depending on the intended scope of experimentation.

Table 2.

Comparative summary of both strategies.

3.5. Model Optimization and Performance Enhancement

While the initial training and evaluation of models provide valuable insights into the performance of different ML algorithms under various data preparation techniques, further steps were taken to refine model quality and robustness. In this study, three main optimization procedures were applied: identification and removal of outliers, class balancing to mitigate the impact of uneven distributions, and the application of a more refined learning algorithm to enhance generalization and improve classification accuracy. These steps aimed to maximize the predictive power of the models and address potential limitations found in the baseline approaches.

All data preprocessing operations, including outlier removal and class balancing, were performed in MATLAB. This tool was already part of the workflow of the proposed methodology, supporting both data handling and model development and thus ensuring continuity throughout the entire process.

- Outlier Removal: The three-sigma (3σ) rule is a statistical method used to identify outliers in a dataset by considering the distribution of each numerical variable. It is based on the empirical rule for normally distributed data, which states that approximately 99.7% of values lie within three standard deviations (σ) from the mean (μ). Values that fall outside this range are treated as anomalies or outliers, likely to represent noise or errors.

- Class Balancing: Before balancing, the number of samples per class was inspected with MATLAB’s tabulate function, which returns a summary table of the frequency of each class and thus reveals any class imbalance. The dataset was approximately balanced across categories, although small differences remained (e.g., cabbage had slightly more samples). To remove them, every class was downsampled to the size of the smallest category (orange, with 9160 samples), so that subsequent training and validation stages were not biased toward any single class.

- Algorithm Refinement: To further improve classification performance, the Random Forest algorithm was selected due to its robustness and effectiveness in handling complex datasets. Random Forest is an ensemble learning method that constructs multiple decision trees during training and outputs the class that is the mode of the classes predicted by individual trees. This approach reduces the risk of overfitting, a common issue in single decision trees, by aggregating the predictions of multiple diverse models, thereby enhancing generalization and accuracy.
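The study performed outlier removal and class balancing in MATLAB; the following is an equivalent sketch in Python with pandas, under stated assumptions. The 3σ filter is applied per feature over the whole dataset (rather than per class) and drops a row if any feature falls outside μ ± 3σ; downsampling draws a random subset of each class, with `value_counts` playing the role of MATLAB's `tabulate`. The function names, column names, and toy data are hypothetical.

```python
import numpy as np
import pandas as pd


def remove_outliers_3sigma(df: pd.DataFrame, feature_cols) -> pd.DataFrame:
    """Drop rows in which any feature lies more than 3 standard deviations from its mean."""
    features = df[feature_cols]
    z = (features - features.mean()) / features.std()  # per-column z-scores
    return df[(z.abs() <= 3).all(axis=1)]


def downsample_to_smallest(df: pd.DataFrame, label_col: str, seed: int = 42) -> pd.DataFrame:
    """Randomly downsample every class to the size of the smallest class."""
    counts = df[label_col].value_counts()  # class frequencies, similar to MATLAB's tabulate
    return df.groupby(label_col).sample(n=int(counts.min()), random_state=seed)


# Hypothetical toy data: two features and four unevenly sized classes.
rng = np.random.default_rng(0)
df = pd.DataFrame({
    "gas": rng.normal(100.0, 5.0, 400),
    "temperature": rng.normal(25.0, 0.5, 400),
    "label": ["banana"] * 120 + ["cabbage"] * 110 + ["orange"] * 80 + ["milk"] * 90,
})
df.loc[0, "gas"] = 1e4  # inject an obvious outlier

clean = remove_outliers_3sigma(df, ["gas", "temperature"])
balanced = downsample_to_smallest(clean, "label")
```

The injected value lies far outside μ ± 3σ and is removed; every class is then cut to the size of the smallest remaining class.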

The Random Forest classifier was configured with one hundred trees, no limit on the maximum depth of each tree, and the Gini impurity criterion for node splitting. One hundred trees balance computational cost and performance, providing sufficient model stability without excessive training time. Allowing each tree to grow without a depth restriction enables the model to capture complex patterns in the data, since splitting continues until all leaves are pure or contain fewer samples than the minimum required for a split. The Gini impurity criterion was chosen because it is the default and widely used measure for evaluating splits in classification problems, offering a good trade-off between computational efficiency and accuracy.
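In scikit-learn, the configuration described above corresponds to the following `RandomForestClassifier` arguments, all of which also happen to be the library defaults; the "minimum required for a split" corresponds to `min_samples_split`, which defaults to 2. This is a sketch on synthetic data: the seed and the 10-fold evaluation are assumptions carried over from the data-splitting setup, and the study's own implementation may differ.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, cross_val_score

# Configuration described in the text: 100 trees, unlimited depth, Gini impurity.
rf = RandomForestClassifier(
    n_estimators=100,  # number of trees in the ensemble
    max_depth=None,    # grow each tree until leaves are pure or too small to split
    criterion="gini",  # Gini impurity for node splitting
    random_state=42,   # assumption: seed reused from the data-splitting setup
)

# Synthetic 4-class stand-in for the TPHG data (hypothetical).
X, y = make_classification(n_samples=400, n_features=4, n_informative=3, n_redundant=0,
                           n_classes=4, n_clusters_per_class=1, random_state=42)

cv = KFold(n_splits=10, shuffle=True, random_state=42)
scores = cross_val_score(rf, X, y, cv=cv, scoring="accuracy")  # one accuracy per fold
rf.fit(X, y)  # final model fitted on all data
```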

4. Implementation and Results

Examining the two strategies employed in this case study enables a comparison and analysis of the performance of different classification models for food identification. The steps presented so far are: data collection and acquisition with the BME Development Kit; data import, following the two strategies for applying the learning models; and assignment of the BCOM classes to the datasets collected by the platform over the two time intervals (1 h and 8 min), considering only the TPHG (temperature, pressure, humidity, and gas) variables.

4.1. Exploratory Data Analysis and Visualization

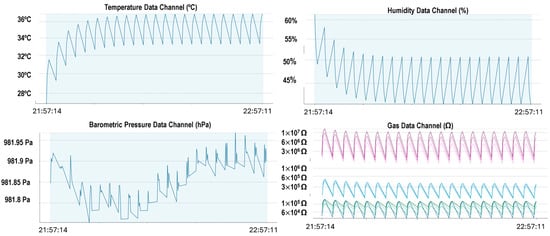

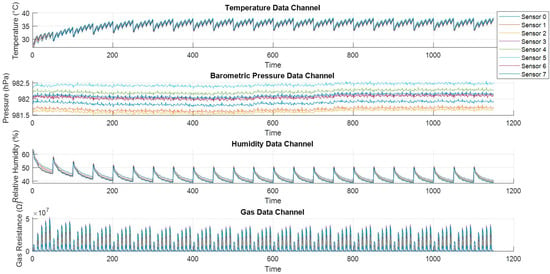

Visualizing the temperature, humidity, pressure, and gas readings for each food allows the data to be analyzed and interpreted immediately and accessibly. Graphs and tables make it possible to observe the behavior of each TPHG variable for a given food. The following graphs show the banana dataset collected by the BME Development Kit over time for each TPHG variable.

The banana was selected as a representative food because of its stable and consistent sensor response, which makes it a suitable example of the typical behavior of the TPHG variables across the sensors. Figure 4 presents the graphs for each TPHG channel provided by the interface, showing the readings collected by each of the eight sensors individually. Because the heating profile (HP) cycles the sensor through different heater temperatures to gather a wider range of values, the gas-resistance graph shows ten heating steps, each corresponding to the gas resistance detected at a different temperature.

Figure 4.

TPHG channels in BME AI-Studio interface—banana sample.

The script developed for the proposed methodology represents the data in an analogous manner: after the JSON file is decoded into a table, MATLAB is used to plot the dataset. As shown in Figure 5, the TPHG parameters collected by the eight sensors are distributed across four graphs, one each for temperature, pressure, humidity, and gas.

Figure 5.

TPHG channels in MATLAB software—banana sample.

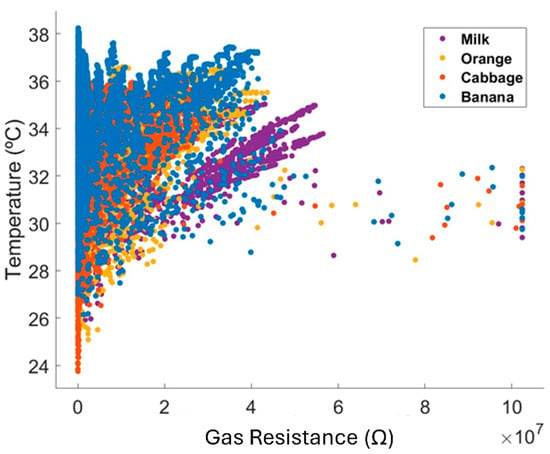

ML depends heavily on data, as model performance and accuracy are tied to the quality and quantity of the information used. Scatter plots are an essential tool in this process because they display the relationship between two variables in a two-dimensional plane, with one variable assigned to each axis [52].

The usefulness of this tool lies in its capacity to identify patterns and correlations within the dataset, as well as to detect overlapping classes that may compromise classification performance. Scatter plots enable the visualization of the spatial distribution of data points across different feature combinations, thereby allowing researchers to assess the degree of separability between classes intuitively.

Figure 6 illustrates these aspects, focusing on the relationship between the temperature and gas variables—two of the most influential parameters collected by the sensors. By analyzing the plotted clusters, it is possible to observe a partial separation between food classes. In particular, the samples corresponding to cabbage and milk exhibit a more distinct distribution in the feature space, indicating higher discriminative power for these categories based on the selected TPHG variables.

Figure 6.

Scatter plot—gas vs. temperature.

4.2. Evaluation of Results

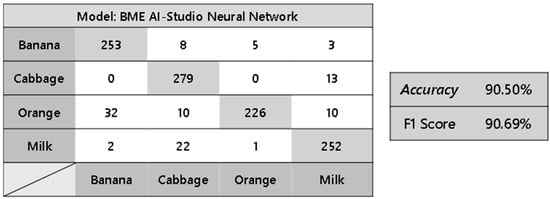

Evaluating the results makes it possible to analyze the performance of the classification problem, whose objective is to build a prediction model, supported by an ML algorithm, that can identify the four foods in the dataset recorded with the BME Development Kit. Accuracy was the main metric used to evaluate the learning models of both strategies, as it directly measures the proportion of correctly classified samples: the higher its value, the better the prediction model performs.

For the BME AI-Studio strategy, Figure 7 summarizes the performance of the neural network through its confusion matrix, with an accuracy of 90.50%. The validation subset corresponds to 30% of the total cycles, and a class-weighted Cross-Entropy Loss was applied during training so that each class contributed proportionally to the learning process. Accordingly, each class contributes approximately 30% of its cycles to the validation subset (269, 292, 278, and 277 out of 920, 960, 920, and 920, respectively), which supports the reliability of the reported metrics.

Figure 7.

Results obtained by BME AI-Studio neural network confusion matrix—Class A: banana; Class B: cabbage; Class C: orange; Class D: milk.
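The validation counts reported for the BME AI-Studio model can be checked with a few lines of arithmetic. The mapping of the four counts to banana, cabbage, orange, and milk (following the order of Classes A to D) is an assumption.

```python
# Validation cycles vs. total cycles per class, as reported for the BME AI-Studio model.
validation = {"banana": 269, "cabbage": 292, "orange": 278, "milk": 277}
total = {"banana": 920, "cabbage": 960, "orange": 920, "milk": 920}

fractions = {food: validation[food] / total[food] for food in total}
overall = sum(validation.values()) / sum(total.values())

for food, frac in fractions.items():
    print(f"{food:8s} {frac:.1%}")
print(f"overall  {overall:.1%}")
```

The per-class shares range from about 29.2% (banana) to 30.4% (cabbage), and the overall share is 1116/3720 = 30.0%, matching the configured validation ratio.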

Multiple methods exist for assessing the performance of a classifier, among which accuracy, precision, recall, and F1 score are commonly used. These metrics are formally defined in Equations (1)–(4), respectively.

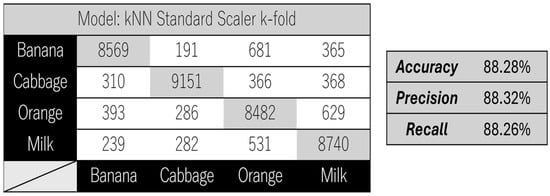
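The metrics in Equations (1)–(4) can be computed from a multi-class confusion matrix by treating each class one-vs-rest. The following NumPy sketch assumes rows are true classes and columns are predicted classes (the scikit-learn convention); the confusion matrix and the function name are hypothetical.

```python
import numpy as np


def metrics_from_confusion(cm: np.ndarray):
    """Accuracy plus per-class precision, recall, and F1 from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as the class but belonging to another class
    fn = cm.sum(axis=1) - tp  # belonging to the class but predicted as another class
    accuracy = tp.sum() / cm.sum()
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1


# Hypothetical 4-class confusion matrix (A: banana, B: cabbage, C: orange, D: milk).
cm = np.array([[8, 2, 0, 0],
               [1, 9, 0, 0],
               [0, 0, 7, 3],
               [0, 0, 0, 10]])
accuracy, precision, recall, f1 = metrics_from_confusion(cm)
```

For this matrix the accuracy is 34/40 = 0.85; macro-averaged scores, if needed, are the plain means of the per-class arrays. A class that is never predicted would make its precision 0/0 and would need explicit handling.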

For the proposed methodology, the selection was based solely on accuracy: Table 3 lists the five best-performing prediction models in descending order.

Table 3.

Results obtained by the 5 best models applying the proposed methodology.

Figure 8 presents the confusion matrix of the best-performing prediction model obtained with the proposed methodology. This model combines the k-fold division method, the dataset transformed with the standard scaler, and the kNN algorithm, achieving an accuracy of 88.28%.

Figure 8.

Confusion matrix of the kNN model using the k-fold method and the standard scaler dataset.

The optimization steps carried out in this study resulted in a significant improvement in the predictive performance of the models. Table 4 presents the distribution of samples per class before data balancing, obtained using MATLAB’s tabulate function, providing a clear view of the initial dataset imbalance.

Table 4.

Class distribution using the 3σ rule before applying data balancing.

Throughout the optimization process, the data-splitting methods and transformation techniques remained unchanged. Besides outlier removal and class balancing, the main modification was the use of the Random Forest algorithm.

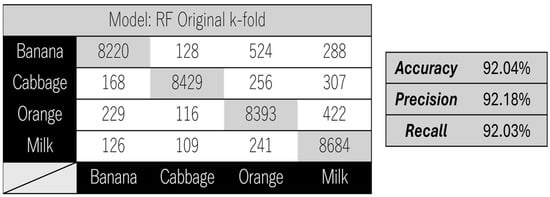

Figure 9 presents the confusion matrix of the best-performing model, providing a clear visual representation of classification accuracy and highlighting the model’s effectiveness in correctly differentiating between the various food categories.

Figure 9.

Confusion matrix of the RF model using the k-fold method and the original dataset.

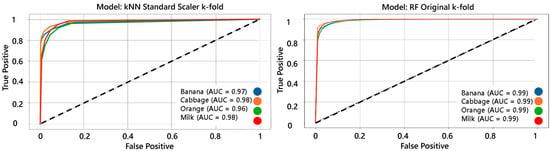

The Receiver Operating Characteristic (ROC) curve is a graphical tool that shows the trade-off between the true positive rate (sensitivity) and the false positive rate (1 − specificity) across classification thresholds. The Area Under the Curve (AUC) quantifies this discriminatory ability, with values closer to 1 indicating better class separation. Computed per class, ROC analysis highlights which categories are easier or harder to distinguish, which is especially important in multi-class and imbalanced datasets.

In this study, the kNN model with a standard scaler and k-fold cross-validation achieved per-class AUCs of 0.97, 0.98, 0.96, and 0.98 for banana, cabbage, orange, and milk, respectively. The Random Forest model, using the original dataset and k-fold cross-validation, achieved per-class AUCs of 0.99 for all classes. These results demonstrate excellent class discrimination for both models, with Random Forest achieving near-perfect separation as illustrated by the ROC curves in Figure 10.

Figure 10.

Per-class ROC curves and corresponding AUC values for the kNN model (standard scaler dataset) and the RF model (original dataset), both using the k-fold method.
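Per-class ROC curves for a multi-class problem are typically computed one-vs-rest. The sketch below does this with scikit-learn for a kNN pipeline on standardized data under 10-fold cross-validation, using out-of-fold probabilities from `cross_val_predict`. The data are synthetic and the exact procedure behind Figure 10 is not specified in the text, so this is only an illustration.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.metrics import auc, roc_curve
from sklearn.model_selection import KFold, cross_val_predict
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler, label_binarize

CLASSES = ["banana", "cabbage", "orange", "milk"]

# Synthetic 4-class stand-in for the TPHG data (hypothetical).
X, y = make_classification(n_samples=400, n_features=4, n_informative=3, n_redundant=0,
                           n_classes=4, n_clusters_per_class=1, random_state=42)

# Out-of-fold class probabilities from 10-fold cross-validation (kNN on standardized data).
model = make_pipeline(StandardScaler(), KNeighborsClassifier())
cv = KFold(n_splits=10, shuffle=True, random_state=42)
proba = cross_val_predict(model, X, y, cv=cv, method="predict_proba")

# One-vs-rest: each class in turn is "positive" and all other classes are "negative".
y_bin = label_binarize(y, classes=[0, 1, 2, 3])
per_class_auc = {}
for k, name in enumerate(CLASSES):
    fpr, tpr, _ = roc_curve(y_bin[:, k], proba[:, k])
    per_class_auc[name] = auc(fpr, tpr)
```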

To place these results in context, Table 5 presents a structured comparison with previous research that has used E-Noses and ML for food classification. All studies are presented using the same indicators (accuracy, type of sensors, and food samples). This systematic comparison shows that our results are competitive, especially considering that only a single BME688-based platform was employed, whereas other studies often rely on larger multi-sensor arrays.

Table 5.

Systematic comparison of our results with previous studies using E-Noses and ML for food classification.

In comparative terms, the results obtained are consistent with [40], which also used the BME688 for food classification and found the kNN method to be less effective than the RF method. The present work also achieves better results than [53] when applying the RF method to the characterization of fruits and vegetables. In [51], the BME688 was used to characterize spoiled foods (fruits and vegetables) with several classification methods available in BME AI-Studio, reaching an accuracy of 76%.

Compared with a solution based on a sensor array (TGS2602, TGS2611, and TGS2620) [16], the present results are better than those of the CNN+KELM, WSN+KELM, and CNN-WSN+KELM methods but fall short of the CNN-WSN+SHO-KELM and CNN-WSN+ISSA-KELM methods. The study in [54] on food (peach) classification, based on the TGS2600, TGS2602, TGS2603, MQ-2, MQ-3, MQ-4, MQ-5, MQ-6, MQ-7, MQ-8, MQ-9, and MQ-135 sensor arrays, achieved 95.27% and 93.90% with the kNN (k = 5) and RF methods under 10-fold cross-validation, compared with 88.28% and 92.04% in the present work. The work in [55], which uses the TGS813, TGS822, TGS826, TGS832, TGS2600, TGS2603, TGS2612, and TGS2620 sensors to classify chicken, beef, and pork meat, achieves an average accuracy of 86.81% with kNN, compared with 88.28% in the present study. The work in [56], on the classification of milk deterioration states with the SP3-AQ2, MQ3, MQ8, MQ136, TGS813, TGS822, TGS2602, and TGS2620 sensors, reports average accuracies of 72.22% and 91.75% with the LDA and SVM classifiers, respectively. Another study, which identified spoiled food with the MQ136, MQ137, and TGS2602 sensors [57], achieved a success rate of approximately 82%. In short, all of these studies, regardless of the accuracy obtained, rely on classification markers other than VOCs, and, as the comparison shows, their increased complexity (i.e., the use of more markers) does not consistently translate into high accuracy. Given these comparisons, and particularly the ROC/AUC analysis, the present study yields satisfactory and promising results.

5. Discussion

The food identification process integrating E-Nose and ML technologies proves to be a simple and effective solution. Both methodologies showed that food recognition systems can be built on the BME Development Kit and ML procedures, resulting in a low-cost, robust, and effective system. Two key results stand out. First, the classification model developed with the BME AI-Studio software outperformed all baseline models of the second strategy. Second, among those baseline models, the best configuration for this problem combined the k-fold division method, the standard scaler transformation, and the kNN learning algorithm.

However, applying the Random Forest algorithm within the proposed methodology further improved classification performance, surpassing both the kNN model of the same strategy and the BME AI-Studio neural network. Random Forest handled the complexity and noise of the E-Nose data effectively, leading to greater robustness and higher accuracy in the food classification task. This superior performance can be explained by specific characteristics of E-Nose data, which are high-dimensional, correlated, and affected by noise. RF is well suited to high-dimensional input spaces because each decision tree in the ensemble is trained on a bootstrap sample of the data and considers a random subset of features at each split, and aggregating the votes of many diverse trees makes the model robust to noise.

The performance of the prediction models could be further optimized by exploring different approaches to selecting input variables. For example, instead of using all TPHG (temperature, pressure, humidity, and gas) variables, a reduced set could be chosen to minimize the negative effects of variables that degrade prediction quality. In our view, atmospheric pressure may have little relevance in the context of this study and may introduce noise. Stricter data collection under more controlled environmental conditions could also significantly improve model accuracy. Future studies should include feature-importance analysis (e.g., Random Forest ranking) or dimensionality reduction techniques (e.g., PCA and LDA) to improve the interpretability and robustness of the models.

Relating the per-class classification rates to the intrinsic odor characteristics of each food and to the characteristics of the sensors used, as well as testing alternative input-variable selections and additional food categories, could help explain the obtained classification rates and provide a comprehensive insight into the relationship between food categories, gas-emission patterns, and sensor characteristics.

It is also important to note that the measurement environment itself can influence data quality. The lack of adequate ventilation in the reading container may restrict the airflow and cause uneven accumulation of volatile compounds, impacting sensor responses. Furthermore, the use of industrial plastic materials in the container can interact with the odor molecules or sensors, potentially introducing noise or masking key odor signatures. These factors, while partially mitigated by the robustness of the Random Forest algorithm, represent areas for experimental improvement to enhance future model reliability.

The BME AI-Studio platform offers a streamlined and user-friendly environment that significantly simplifies the model development process. Its integrated tools and predefined neural network models reduce the technical complexity for users, enabling fast and straightforward implementation. This approach is highly suitable for rapid prototyping and applications where ease of use and speed are prioritized. However, this simplification comes with limited flexibility as users are constrained to the options and algorithms provided by the platform, restricting the ability to experiment with alternative configurations or explore novel approaches.

In contrast, the proposed methodology embraces a higher level of complexity, requiring manual scripting for data handling, preprocessing, and model training. While this increases the initial effort and demands more technical expertise, it opens a wide range of possibilities for customization and experimentation. This flexibility was exemplified by the development and evaluation of 60 distinct models through combinations of classifiers, data-splitting techniques, and preprocessing methods. Such extensive experimentation allows for a deeper understanding of the data characteristics and model behavior, which is crucial for fine-tuning and optimizing performance beyond what is feasible in a closed platform environment.


This work demonstrates the potential of combining an E-Nose and AI to monitor and control stored food. Because the predictive models can be loaded onto processing units, foods stored in refrigerators could be distinguished automatically, enabling applications ranging from supermarkets and homes to food transport. The challenges and opportunities of this approach open space for future research to further optimize its implementation and enable its adoption on a large scale.

6. Conclusions

Considering the topics discussed, odor classification problems can be effectively addressed by combining the BME Development Kit, which is specifically designed for such applications, with AI algorithms, and both procedures tested in this work proved effective. The case study supports this claim: the BME AI-Studio software and the best baseline model of the proposed methodology reached accuracies of 90.50% and 88.28%, respectively. However, several factors can influence the accuracy of these results, such as sensor reading errors, sensor degradation over time, and contamination from food residues.

From a performance standpoint, two key findings emerge. First, the classification model built with the BME AI-Studio software outperforms all baseline models of the second strategy. Second, within the proposed methodology, the best baseline approach combines the k-fold division method, the standard scaler transformation, and the kNN learning algorithm.

Alternative input selection strategies can be explored to further enhance the performance of classification models. Instead of relying on the full set of TPHG (temperature, pressure, humidity, and gas) variables, reducing the number of input variables could help mitigate the negative effects on prediction quality. Specifically, we believe that atmospheric pressure may have minimal relevance and could introduce unnecessary noise into the food classification process. Alternatively, improving data collection by implementing more controlled environmental conditions could lead to more reliable and accurate predictions.

Although the experiments were conducted under uncontrolled ambient conditions to simulate realistic application scenarios, the BME688 sensors continuously recorded temperature, humidity, and pressure. Including these environmental parameters (TPH) in the dataset provides a record of the conditions, allowing future studies to investigate how environmental variations influence the sensor response. Future work will benefit from more controlled setups that isolate environmental confounding factors and enhance reproducibility.

To further optimize classification performance, the Random Forest algorithm was employed after the necessary preprocessing steps. These included the identification and removal of outliers using the three-sigma (3σ) rule and the balancing of the class distribution to equalize sample counts across categories. These operations produced cleaner and more balanced data, which allowed the Random Forest ensemble method to manage data complexity effectively and reduce overfitting, ultimately improving model robustness and accuracy (92.04%) beyond the initial models tested.

In conclusion, the results of this case study demonstrate the effectiveness and high performance of this methodology in detecting and identifying foods. Its application has considerable potential, especially in low-cost, low-consumption intelligent cooling systems, making it a viable solution for several areas.

Author Contributions

Conceptualization, J.P., A.M., P.C., A.V. and C.S.; methodology, J.P., A.M., P.C. and C.S.; software, J.P.; validation, J.P., A.M., P.C., A.V. and C.S.; formal analysis, J.P., A.M., P.C., A.V. and C.S.; investigation, J.P., P.C. and C.S.; resources, A.M., P.C. and C.S.; data curation, J.P.; writing—original draft preparation, J.P.; writing—review and editing, J.P., A.M., P.C. and C.S.; visualization, J.P., A.M., P.C., A.V. and C.S.; supervision, J.P., A.M., P.C., A.V. and C.S.; project administration, A.M., P.C. and C.S.; funding acquisition, A.M. and C.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Acknowledgments

The authors would like to thank the following projects, which contributed to the completion of this work: UID/04033 and LA/P/0126/2020. DOI: 10.54499/LA/P/01264/572020, supported by National Funds from FCT (Portuguese Foundation for Science and Technology).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| AUC | Area Under the Curve |
| BCOM | Banana, cabbage, orange, and milk |
| CO2 | Carbon dioxide |
| CP | Conductive polymer |
| DC | Duty Cycle |
| DT | Decision tree |
| E-Nose | Electronic nose |
| GC-MS | Gas Chromatography–Mass Spectrometry |
| HP | Heating profile |
| I2C | Inter-Integrated Circuit |
| JSON | JavaScript Object Notation |
| kNN | k-Nearest Neighbors |
| LR | Logistic Regression |
| ML | Machine learning |
| MOS | Metal-oxide semiconductor |
| NB | Naive Bayes |
| OS | Optical sensor |
| RF | Random Forest |
| ROC | Receiver Operating Characteristic |
| SAW | Surface acoustic wave |
| SPI | Serial Peripheral Interface |
| SVM | Support Vector Machine |
| TPHG | Temperature, pressure, humidity, and gas |
| UHT | Ultra-High Temperature |
| VOC | Volatile Organic Compound |

References

- Lan, H.; Hartonen, K.; Riekkola, M.-L. Miniaturised Air Sampling Techniques for Analysis of Volatile Organic Compounds in Air. TrAC Trends Anal. Chem. 2020, 126, 115873. [Google Scholar] [CrossRef]

- Wall, D.; McCullagh, P.; Cleland, I.; Bond, R. Development of an Internet of Things Solution to Monitor and Analyse Indoor Air Quality. Internet Things 2021, 14, 100392. [Google Scholar] [CrossRef]

- Haddadi, S.; Koziel, J.A.; Engelken, T.J. Analytical Approaches for Detection of Breath VOC Biomarkers of Cattle Diseases—A Review. Anal. Chim. Acta 2022, 1206, 339565. [Google Scholar] [CrossRef]

- Zaim, O.; Diouf, A.; El Bari, N.; Lagdali, N.; Benelbarhdadi, I.; Ajana, F.Z.; Llobet, E.; Bouchikhi, B. Comparative Analysis of Volatile Organic Compounds of Breath and Urine for Distinguishing Patients with Liver Cirrhosis from Healthy Controls by Using Electronic Nose and Voltammetric Electronic Tongue. Anal. Chim. Acta 2021, 1184, 339028. [Google Scholar] [CrossRef]

- Moura, P.C.; Raposo, M.; Vassilenko, V. Breath Volatile Organic Compounds (VOCs) as Biomarkers for the Diagnosis of Pathological Conditions: A Review. Biomed. J. 2023, 46, 100623. [Google Scholar] [CrossRef] [PubMed]

- Mortazavi, M.; Carpin, S.; Toudeshki, A.; Ehsani, R. A Practical Data-Driven Approach for Precise Stem Water Potential Monitoring in Pistachio and Almond Orchards Using Supervised Machine Learning Algorithms. Comput. Electron. Agric. 2025, 231, 110004. [Google Scholar] [CrossRef]

- Senger, D.; Schweizer, T.; Jha, R.; Kluss, T.; Vellekoop, M. Evaluating and Optimising Formic Acid Treatment against Varroa Mites on Honey Bees with MOx-Sensors and a Control Loop. Smart Agric. Technol. 2023, 6, 100342. [Google Scholar] [CrossRef]

- Ramadan, M.N.A.; Ali, M.A.H.; Khoo, S.Y.; Hamad, L.; Alkhedher, M. Revolutionizing Agri-Food Technology: Development and Validation of the Portable Intelligent Oil Recognition System (PIORS). Smart Agric. Technol. 2024, 9, 100624. [Google Scholar] [CrossRef]

- Söylemez Milli, N.; Parlak, İ.H. A New Approach for Machine Learning-Based Recognition of Meat Species Using a BME688 Gas Sensors Matrix. CMJS 2025, 52, e2025031.

- Gao, Y.; Chen, B.; Cheng, X.; Liu, S.; Li, Q.; Xi, M. Volatile Organic Compounds in Exhaled Breath: Applications in Cancer Diagnosis and Predicting Treatment Efficacy. Cancer Pathog. Ther. 2025, 3, 411–419.

- Fuller, R.; Landrigan, P.J.; Balakrishnan, K.; Bathan, G.; Bose-O’Reilly, S.; Brauer, M.; Caravanos, J.; Chiles, T.; Cohen, A.; Corra, L.; et al. Pollution and Health: A Progress Update. Lancet Planet. Health 2022, 6, e535–e547.

- WHO Global Air Quality Guidelines: Particulate Matter (PM2.5 and PM10), Ozone, Nitrogen Dioxide, Sulfur Dioxide and Carbon Monoxide, 1st ed.; World Health Organization: Geneva, Switzerland, 2021; ISBN 978-92-4-003422-8.

- Chojer, H.; Branco, P.T.B.S.; Martins, F.G.; Alvim-Ferraz, M.C.M.; Sousa, S.I.V. Development of Low-Cost Indoor Air Quality Monitoring Devices: Recent Advancements. Sci. Total Environ. 2020, 727, 138385.

- Feng, Y.; Wang, Y.; Beykal, B.; Qiao, M.; Xiao, Z.; Luo, Y. A Mechanistic Review on Machine Learning-Supported Detection and Analysis of Volatile Organic Compounds for Food Quality and Safety. Trends Food Sci. Technol. 2024, 143, 104297.

- Hsieh, Y.-C.; Yao, D.-J. Intelligent Gas-Sensing Systems and Their Applications. J. Micromech. Microeng. 2018, 28, 093001.

- Wang, M.; Chen, Y.; Chen, D.; Tian, X.; Zhao, W.; Shi, Y. A Food Quality Detection Method Based on Electronic Nose Technology. Meas. Sci. Technol. 2024, 35, 056004.

- Prasad, P.S.; Janarnanchi, S.; Narayan, B.S.; Rao, M. SmartSniffer: Predicting Food Spoilage Time with an Electronic Nose-Based Gas Monitoring Apparatus Utilizing a Two-Stage Pipeline Model. In Proceedings of the 2024 9th International Conference on Computer Science and Engineering (UBMK), Antalya, Turkiye, 26–28 October 2024; IEEE: New York, NY, USA, 2024; pp. 506–511.

- Guidance Document: Analytical Methods for Determining VOC Concentrations and VOC Emission Potential for the Volatile Organic Compound Concentration Limits for Certain Products Regulations; Environment and Climate Change Canada: Gatineau, QC, Canada, 2022; ISBN 978-0-660-45159-6.

- Kwon, I.-J.; Jung, T.-Y.; Son, Y.; Kim, B.; Kim, S.-M.; Lee, J.-H. Detection of Volatile Sulfur Compounds (VSCs) in Exhaled Breath as a Potential Diagnostic Method for Oral Squamous Cell Carcinoma. BMC Oral Health 2022, 22, 268.

- Jia, P.; Li, X.; Xu, M.; Zhang, L. Classification Techniques of Electronic Nose: A Review. Int. J. Bio-Inspired Comput. 2024, 23, 16–27.

- Li, Y.; Wang, Z.; Zhao, T.; Li, H.; Jiang, J.; Ye, J. Electronic Nose for the Detection and Discrimination of Volatile Organic Compounds: Application, Challenges, and Perspectives. TrAC Trends Anal. Chem. 2024, 180, 117958.

- Cheng, L.; Meng, Q.-H.; Lilienthal, A.J.; Qi, P.-F. Development of Compact Electronic Noses: A Review. Meas. Sci. Technol. 2021, 32, 062002.

- Gondaliya, S.H.; Gondaliya, N.H. Electronic Nose Using Machine Learning Techniques. In Nanostructured Materials for Electronic Nose; Joshi, N.J., Navale, S., Eds.; Advanced Structured Materials; Springer Nature: Singapore, 2024; Volume 213, pp. 71–82. ISBN 978-981-97-1389-9.

- Binson, V.A.; Thomas, S. Assessing Quality of Food Products Using Electronic Noses: A Systematic Review. Proceedings 2024, 105, 79.

- Del Valle, M. Sensors as Green Tools in Analytical Chemistry. Curr. Opin. Green Sustain. Chem. 2021, 31, 100501.

- Men, H.; Yin, C.; Shi, Y.; Wang, Y.; Liu, J. Numerical Expression of Odor Intensity of Volatile Compounds from Automotive Polypropylene. Sens. Actuators A Phys. 2021, 321, 112426.

- Caron, A.; Redon, N.; Coddeville, P.; Hanoune, B. Identification of Indoor Air Quality Events Using a K-Means Clustering Analysis of Gas Sensors Data. Sens. Actuators B Chem. 2019, 297, 126709.

- Men, H.; Jiao, Y.; Shi, Y.; Gong, F.; Chen, Y.; Fang, H.; Liu, J. Odor Fingerprint Analysis Using Feature Mining Method Based on Olfactory Sensory Evaluation. Sensors 2018, 18, 3387.

- Caray, I.M.G.; Ditchon, K.P.R.; Arboleda, E.R. Smart Coffee Aromas: A Literature Review on Electronic Nose Technologies for Quality Assessment. World J. Adv. Res. Rev. (WJARR) 2024, 21, 506–514.

- Zhai, Z.; Liu, Y.; Li, C.; Wang, D.; Wu, H. Electronic Noses: From Gas-Sensitive Components and Practical Applications to Data Processing. Sensors 2024, 24, 4806.

- Reis, T.; Moura, P.C.; Gonçalves, D.; Ribeiro, P.A.; Vassilenko, V.; Fino, M.H.; Raposo, M. Ammonia Detection by Electronic Noses for a Safer Work Environment. Sensors 2024, 24, 3152.

- Feng, S.; Farha, F.; Li, Q.; Wan, Y.; Xu, Y.; Zhang, T.; Ning, H. Review on Smart Gas Sensing Technology. Sensors 2019, 19, 3760.

- Tan, J.; Xu, J. Applications of Electronic Nose (e-Nose) and Electronic Tongue (e-Tongue) in Food Quality-Related Properties Determination: A Review. Artif. Intell. Agric. 2020, 4, 104–115.

- Kim, T.; Kim, Y.; Cho, W.; Kwak, J.-H.; Cho, J.; Pyeon, Y.; Kim, J.J.; Shin, H. Ultralow-Power Single-Sensor-Based E-Nose System Powered by Duty Cycling and Deep Learning for Real-Time Gas Identification. ACS Sens. 2024, 9, 3557–3572.

- Anwar, H.; Anwar, T. Application of Electronic Nose and Machine Learning in Determining Fruits Quality: A Review. JAPS J. Anim. Plant Sci. 2024, 34, 283–290.

- Wang, M.; Chen, Y. Electronic Nose and Its Application in the Food Industry: A Review. Eur. Food Res. Technol. 2024, 250, 21–67.

- Bosch Sensortec. BME AI-Studio Manual; Bosch Sensortec GmbH: Reutlingen, Germany, 2021; p. 78.

- Bosch Sensortec. BME688: Digital Low Power Gas, Pressure, Temperature & Humidity Sensor with AI; Bosch Sensortec GmbH: Reutlingen, Germany, 2024; p. 60.

- Molnár, B.; Géczy, A. Electronic Nose Based on AI-Capable Sensor Module for Beverages Identification. In Proceedings of the 2024 47th International Spring Seminar on Electronics Technology (ISSE), Prague, Czech Republic, 15–19 May 2024; IEEE: New York, NY, USA, 2024; pp. 1–5.

- Ramadan, M.N.A.; Alkhedher, M.; Tevfik Akgün, B.; Alp, S. Portable AI-Powered Spice Recognition System Using an eNose Based on Metal Oxide Gas Sensors. In Proceedings of the 2023 International Conference on Smart Applications, Communications and Networking (SmartNets), Istanbul, Turkiye, 25 July 2023; IEEE: New York, NY, USA, 2023; pp. 1–6.

- Daly, C. Introduction to Machine Learning and Deep Learning. Presented at the MATLAB Expo 2017, Paris, France, 30 May 2017.

- El Naqa, I.; Murphy, M.J. What Is Machine Learning? In Machine Learning in Radiation Oncology; El Naqa, I., Li, R., Murphy, M.J., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 3–11. ISBN 978-3-319-18304-6.

- Saravanan, R.; Sujatha, P. A State of Art Techniques on Machine Learning Algorithms: A Perspective of Supervised Learning Approaches in Data Classification. In Proceedings of the 2018 Second International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 14–15 June 2018; IEEE: New York, NY, USA, 2018; pp. 945–949.

- Sarker, I.H. Machine Learning: Algorithms, Real-World Applications and Research Directions. SN Comput. Sci. 2021, 2, 160.

- Obaido, G.; Mienye, I.D.; Egbelowo, O.F.; Emmanuel, I.D.; Ogunleye, A.; Ogbuokiri, B.; Mienye, P.; Aruleba, K. Supervised Machine Learning in Drug Discovery and Development: Algorithms, Applications, Challenges, and Prospects. Mach. Learn. Appl. 2024, 17, 100576.

- Tiwari, A.; Singh, G.; Singh, S.; Jain, T. Thyroid Disease Detection Using Supervised Machine Learning Techniques. In Proceedings of the 2023 3rd International Conference on Innovative Sustainable Computational Technologies (CISCT), Dehradun, India, 8–9 September 2023; IEEE: New York, NY, USA, 2023; pp. 1–6.

- Lemos, G.S. IoT and Machine Learning for Process Optimization in Agrofood Industry. Master’s Thesis, Electrical and Computer Engineering, FEUP (Faculty of Engineering of the University of Porto), Porto, Portugal, 2019.

- Yang, Z.; Feng, Z.; Bi, J.; Zhang, Z.; Jiang, Y.; Yang, T.; Zhang, Z. Characterization of Key Aroma Compounds of Tomato Quality under Enriched CO2 Coupled with Water and Nitrogen Based on E-Nose and GC–MS. Sci. Hortic. 2024, 338, 113709.

- Jiang, Y.; Wang, Z.; Hu, Y.; Lin, W.; Yao, L.; Xiao, W.; Hu, J.; Liu, W.; Zheng, C.; Chen, L.; et al. Intelligent Sniffer: A Chip-Based Portable e-Nose for Accurate and Fast Essence Evaluation. Sens. Actuators B Chem. 2025, 426, 136989.

- Dokic, K.; Radisic, B.; Kukina, H. Application of Machine Learning Algorithms for Monitoring of Spoilage of Cow’s Milk Using the Cheap Gas Sensor. In Proceedings of the 2024 16th International Conference on Electronics, Computers and Artificial Intelligence (ECAI), Iasi, Romania, 27–28 June 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Tămâian, A.; Folea, S. Spoiled Food Detection Using a Matrix of Gas Sensors. In Proceedings of the 2024 IEEE International Conference on Automation, Quality and Testing, Robotics (AQTR), Cluj-Napoca, Romania, 16–18 May 2024; IEEE: New York, NY, USA, 2024; pp. 1–5.

- Brownlee, J. Machine Learning Mastery with Python: Understand Your Data, Create Accurate Models and Work Projects End-to-End, v1.20 ed.; Jason Brownlee: Melbourne, Australia, 2021; ISBN 979-8-5404-4627-3.

- Xu, A.; Cai, T.; Shen, D.; Wang, A. Food Odor Recognition via Multi-Step Classification. arXiv 2021, arXiv:2110.09956.

- Voss, H.G.J.; Ayub, R.A.; Stevan, S.L. E-Nose Prototype to Monitoring the Growth and Maturation of Peaches in the Orchard. IEEE Sens. J. 2020, 20, 11741–11750.

- Sabilla, S.I.; Sarno, R.; Triyana, K.; Hayashi, K. Deep Learning in a Sensor Array System Based on the Distribution of Volatile Compounds from Meat Cuts Using GC–MS Analysis. Sens. Bio-Sens. Res. 2020, 29, 100371.

- Tohidi, M.; Ghasemi-Varnamkhasti, M.; Ghafarinia, V.; Bonyadian, M.; Mohtasebi, S.S. Development of a Metal Oxide Semiconductor-Based Artificial Nose as a Fast, Reliable and Non-Expensive Analytical Technique for Aroma Profiling of Milk Adulteration. Int. Dairy J. 2018, 77, 38–46.

- Kartika, V.S.; Rivai, M.; Purwanto, D. Spoiled Meat Classification Using Semiconductor Gas Sensors, Image Processing and Neural Network. In Proceedings of the 2018 International Conference on Information and Communications Technology (ICOIACT), Yogyakarta, Indonesia, 6–7 March 2018; IEEE: New York, NY, USA, 2018; pp. 418–423.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).