ACR: Adaptive Confidence Re-Scoring for Reliable Answer Selection Among Multiple Candidates

Abstract

1. Introduction

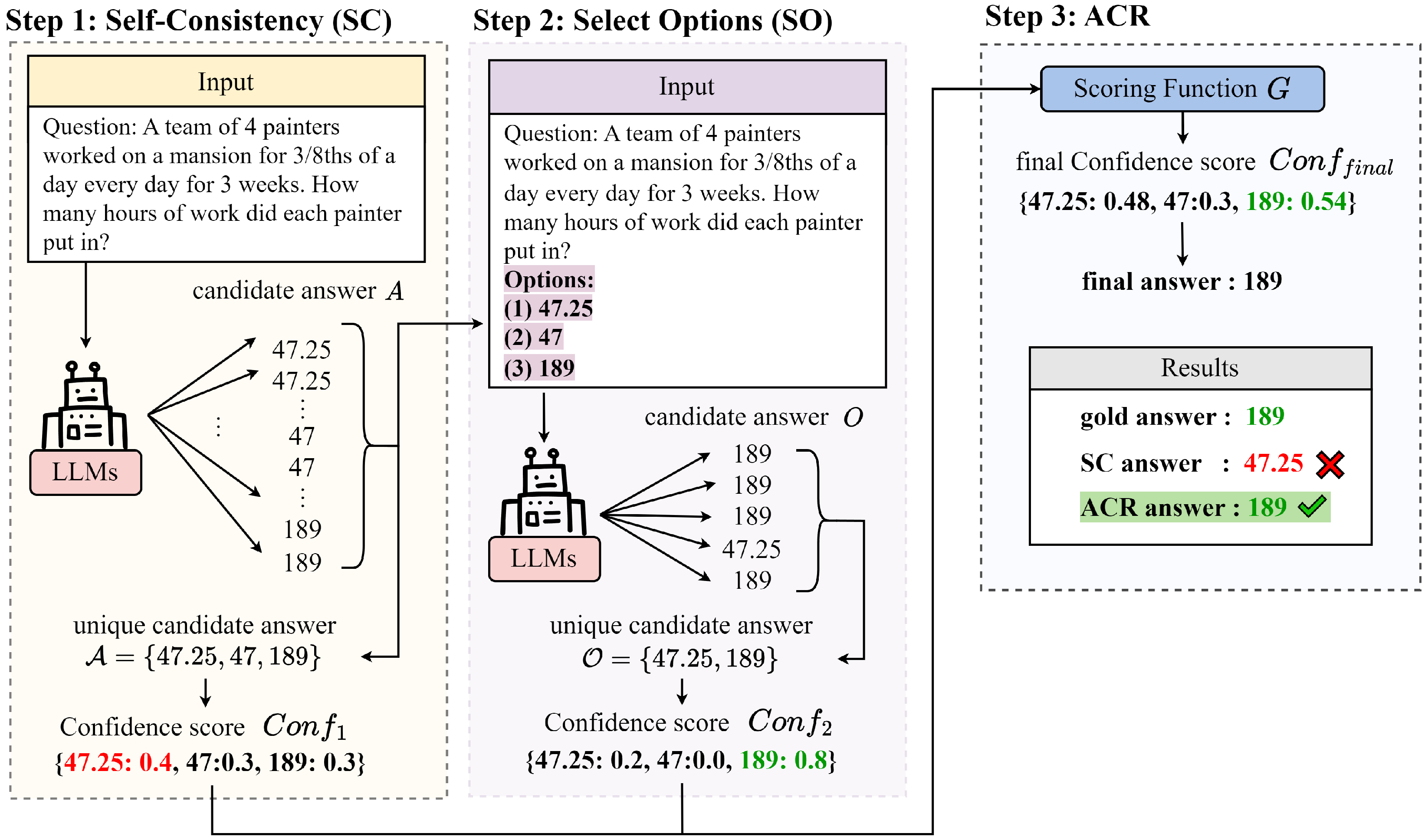

- We reduce computational overhead by selectively evaluating candidate answers based on the confidence distribution derived from SC.

- We reformulate the selection process as a classification task, enabling the model to recover correct answers even when they appear in the minority.

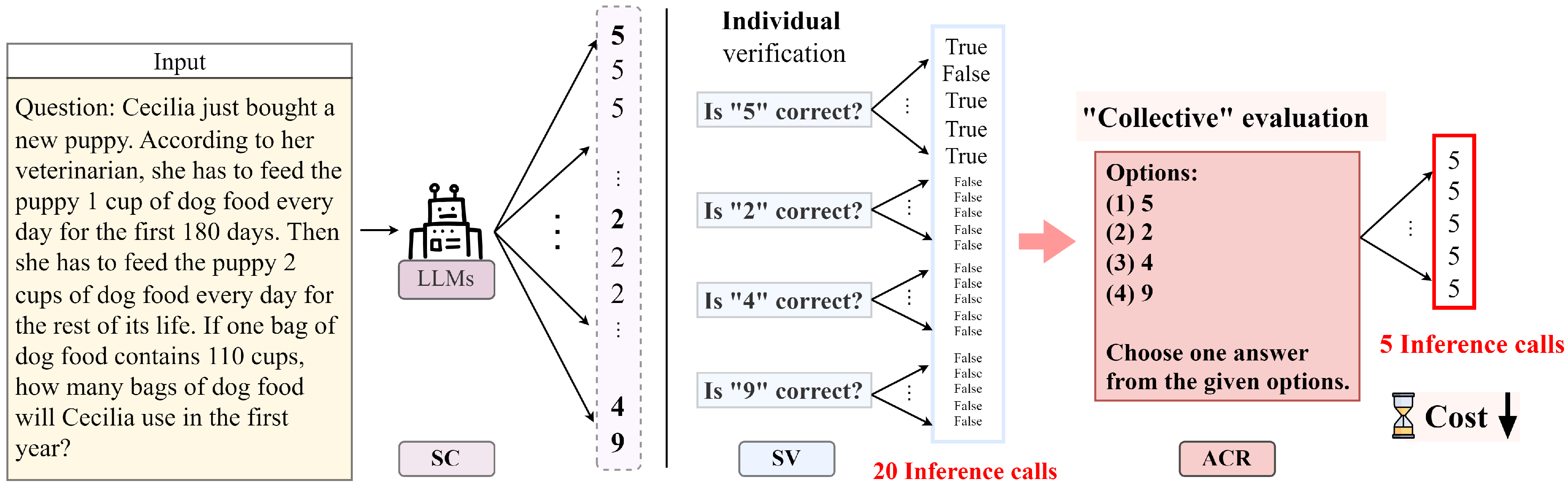

- We propose a collective evaluation strategy that enhances answer reliability without requiring separate verification for each candidate.

2. Related Work

2.1. Self-Consistency

2.2. Verification

3. Method

3.1. SC: Generating Initial Answers and Scores

3.2. SO: Selective and Collective Evaluation of Candidate Answers

3.3. ACR: Re-Scoring

4. Experimental Setup

4.1. Datasets

4.1.1. Arithmetic Reasoning

4.1.2. Logical Reasoning

- Reasoning about Colored Objects (Color) [18]: A subtask of the BIG-Bench Hard benchmark that requires reasoning over object–color relationships. Although the original format is multiple-choice, we remove the options and prompt the model in an open-ended format. We use all 250 examples.

- Word Sorting (Word) [18]: Another BIG-Bench Hard subtask, which involves sorting a list of words in lexicographic order. The dataset contains 250 examples. Due to time constraints, we evaluate this task only with Gemini-1.5-Flash-8B.

4.2. Implementation Details

4.3. Prompt Design

4.4. Baselines

- SC [11]: Generates multiple responses per example and selects the final answer via majority voting.

- SV [13]: Starting from the SC results, performs backward reasoning (BR) repeatedly for each candidate and selects the candidate with the highest verification score. We adopt the True–False Item Verification variant for consistency across datasets. For a fair comparison with ACR, BR is applied only to the top-K unique candidates from SC, using the same K value as in our method.

- FOBAR [14]: Extends SV by aggregating SC and BR scores using the weighting strategy proposed in the original FOBAR paper. The aggregation weight follows the original implementation.
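The SC baseline and the top-K candidate restriction described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names (`sc_confidence`, `majority_vote`, `top_k_candidates`) are hypothetical, and the sketch assumes that each sampled response has already been reduced to a final answer string and that the SC confidence of a candidate is its vote share.

```python
from collections import Counter


def sc_confidence(answers):
    """Return {answer: vote share} over the sampled final answers.

    Assumption: SC confidence is the fraction of samples that agree.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    counts = Counter(answers)
    total = len(answers)
    return {answer: n / total for answer, n in counts.items()}


def majority_vote(answers):
    """Select the most frequent final answer (plain self-consistency)."""
    return Counter(answers).most_common(1)[0][0]


def top_k_candidates(answers, k):
    """Return the k most frequent unique answers, most frequent first."""
    return [answer for answer, _ in Counter(answers).most_common(k)]


# Hypothetical final answers extracted from six sampled reasoning paths.
samples = ["18", "18", "20", "18", "20", "21"]
print(majority_vote(samples))        # most frequent answer
print(sc_confidence(samples))        # vote share per unique answer
print(top_k_candidates(samples, 2))  # candidates passed on for verification
```

Restricting verification to the top-K unique candidates keeps the number of extra model calls bounded by K rather than by the number of sampled paths.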

5. Results

6. Analysis

6.1. Final Confidence Scoring Strategies

- Sum: A simple additive strategy that assigns equal weight to the SC and SO scores. While intuitive and easy to implement, it may overemphasize noisy SO scores when the SC score is already high.

- Product: A strictly multiplicative strategy that enforces agreement between SC and SO. However, it may excessively penalize candidates when either score is low.

- Fobar: A softly weighted geometric mean, proposed by Jiang et al. [14] and controlled by a tunable weighting parameter. The parameter is set following the original implementation.

- SC-Boost: A hybrid strategy that augments the SC score with a multiplicative boost from the SO score, thereby reflecting both the generation and evaluation stages.
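The four strategies can be compared side by side with a small sketch. The formulas below are assumed readings of the verbal descriptions, not the paper's equations: in particular, which score receives the exponent `alpha` in the Fobar variant, the form `sc * (1 + so)` for SC-Boost, and the default `alpha=0.5` are all placeholders chosen for illustration. The names `combine` and `select` are hypothetical.

```python
def combine(sc, so, strategy, alpha=0.5):
    """Combine an SC score and an SO score for one candidate.

    All formulas are assumptions inferred from the prose descriptions.
    """
    if strategy == "sum":
        return sc + so
    if strategy == "product":
        return sc * so
    if strategy == "fobar":
        # Weighted geometric mean; the placement of alpha is assumed.
        return (sc ** alpha) * (so ** (1 - alpha))
    if strategy == "sc_boost":
        # SC score plus a multiplicative boost from SO (assumed form).
        return sc * (1 + so)
    raise ValueError(f"unknown strategy: {strategy}")


def select(sc_scores, so_scores, strategy):
    """Return the candidate with the highest combined score."""
    return max(
        sc_scores,
        key=lambda a: combine(sc_scores[a], so_scores.get(a, 0.0), strategy),
    )


# Hypothetical scores: SC prefers "A", SO prefers "B".
sc_scores = {"A": 0.6, "B": 0.4}
so_scores = {"A": 0.3, "B": 0.7}
for name in ("sum", "product", "fobar", "sc_boost"):
    print(name, select(sc_scores, so_scores, name))
```

With these made-up scores, the first three strategies follow the SO preference, while the assumed SC-Boost form stays with the SC majority, which illustrates how strongly each strategy lets SO override SC.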

6.2. Robustness to Option Order

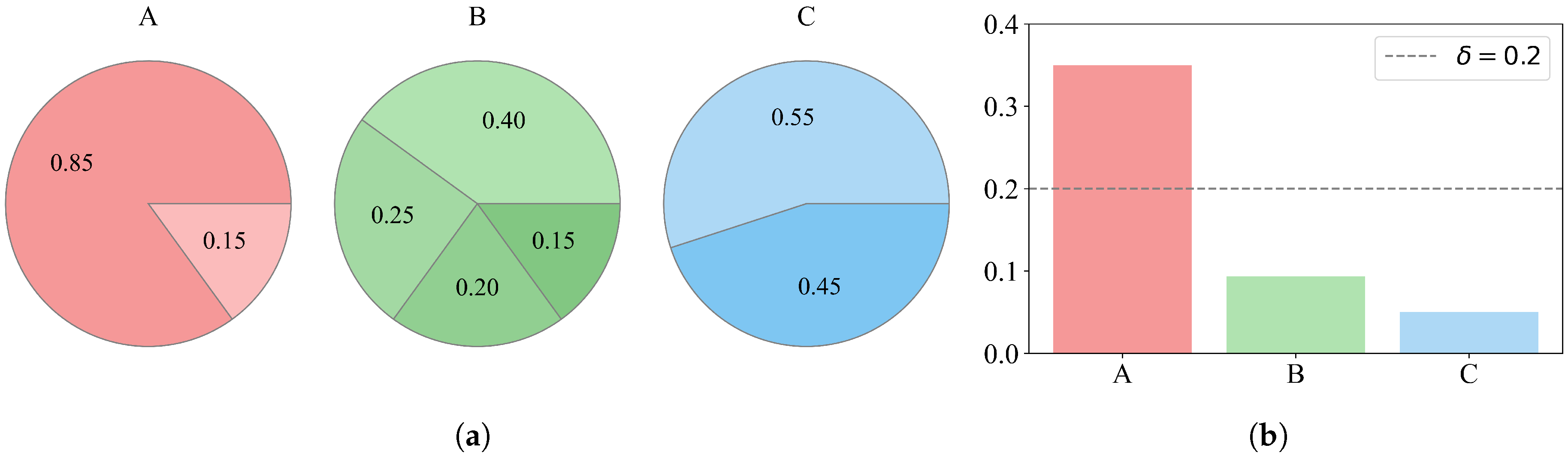

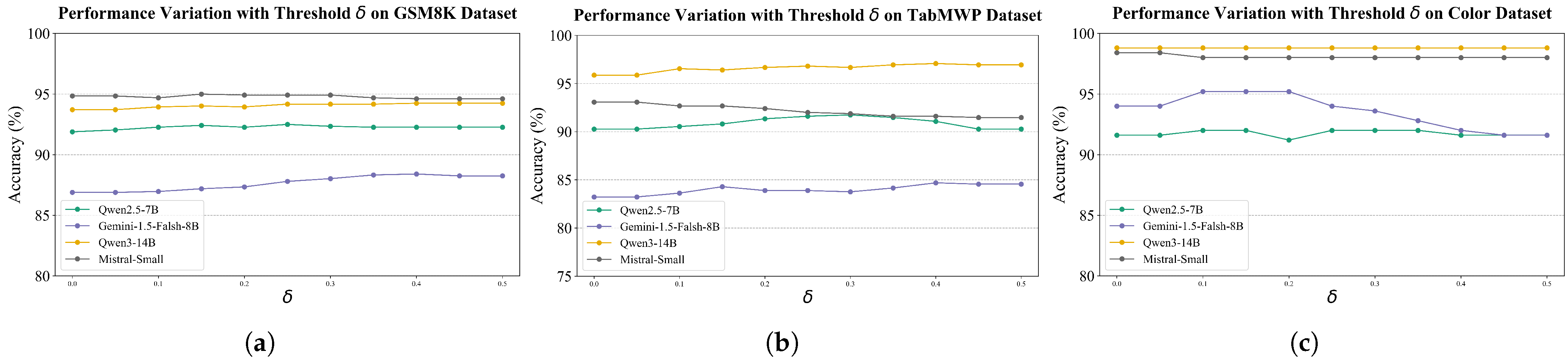

6.3. Effectiveness of Selective Re-Scoring

6.4. Efficiency and Effectiveness: ACR vs. FOBAR

7. Error Cases Analysis

- Case 1, failure to generate the correct answer: If the model fails to generate the correct answer in any of the SC reasoning paths, ACR cannot recover it during re-scoring. This is a fundamental limitation of SC: if the correct answer is absent from the candidate set, no selection mechanism, ACR or otherwise, can succeed. Table 5 shows how often such unrecoverable cases occur.

- Case 2, consistently ambiguous cases: In some examples, both SC and SO yield only small confidence gaps between candidates, indicating persistent uncertainty throughout the pipeline. In such cases, ACR struggles to distinguish the correct answer from other plausible but incorrect ones.

- Case 3, correct answer favored in SO, but final prediction incorrect: Even when SO correctly assigns high confidence to the correct answer, the final prediction can still be wrong due to residual influence from SC.

- Case 4, overconfidence in an incorrect answer: SO occasionally assigns a disproportionately high confidence score to an incorrect candidate, even when SC’s distribution is relatively balanced. As discussed in Section 6.3, this illustrates how unreliable SO outputs can undermine ACR’s overall performance. These cases show that re-scoring may inject spurious certainty, leading ACR to reinforce an initially weak but ultimately incorrect prediction.

- Case 5, correct answer in SC, incorrect shift in SO: SC initially assigns the highest confidence score to the correct answer, but SO shifts the score distribution toward an incorrect one, resulting in a wrong prediction. This highlights the risk of re-scoring introducing harmful bias even when the SC result was already correct. As in the previous case, this underscores the importance of SO reliability for the overall performance of ACR. Table 6 shows how often errors from Cases 3–5 lead ACR to make incorrect predictions.
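The statistics behind this error analysis can be tallied with a short sketch. It assumes per-example records with hypothetical field names (`gold`, `sc_pred`, `acr_pred`, `candidates`), reads O as "correct" and X as "incorrect" in the outcome labels, and assumes that the gold-missing rate is measured over the examples SC gets wrong; none of these details are specified in the text above.

```python
from collections import Counter


def outcome(sc_correct, acr_correct):
    """Label an example as 'SC O & ACR X' etc. (O = correct, X = incorrect)."""
    sc_mark = "O" if sc_correct else "X"
    acr_mark = "O" if acr_correct else "X"
    return f"SC {sc_mark} & ACR {acr_mark}"


def tally(records):
    """Percentage of examples in each SC/ACR outcome category."""
    counts = Counter(
        outcome(r["sc_pred"] == r["gold"], r["acr_pred"] == r["gold"])
        for r in records
    )
    return {label: 100 * n / len(records) for label, n in counts.items()}


def gold_missing_rate(records):
    """Among SC-incorrect examples, percentage whose gold answer never
    appears in the SC candidate set (the unrecoverable cases)."""
    wrong = [r for r in records if r["sc_pred"] != r["gold"]]
    if not wrong:
        return 0.0
    missing = sum(r["gold"] not in r["candidates"] for r in wrong)
    return 100 * missing / len(wrong)


# Hypothetical records, one per outcome category.
records = [
    {"gold": "4", "sc_pred": "4", "acr_pred": "4", "candidates": ["4", "5"]},
    {"gold": "7", "sc_pred": "8", "acr_pred": "7", "candidates": ["8", "7"]},
    {"gold": "3", "sc_pred": "2", "acr_pred": "2", "candidates": ["2", "1"]},
    {"gold": "9", "sc_pred": "9", "acr_pred": "6", "candidates": ["9", "6"]},
]
print(tally(records))
print(gold_missing_rate(records))
```

In this toy data, the third record is a Case 1 failure (the gold answer "3" is not among the candidates), and the fourth record is an example where re-scoring overturns a correct SC prediction.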

8. Conclusions

9. Limitations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. 2024. Available online: http://arxiv.org/abs/2303.08774 (accessed on 1 August 2025).

2. Anthropic. claude-3-7-sonnet. 2025. Available online: https://www.anthropic.com/news/claude-3-7-sonnet (accessed on 26 July 2025).

3. Comanici, G.; Bieber, E.; Schaekermann, M.; Pasupat, I.; Sachdeva, N.; Dhillon, I.; Blistein, M.; Ram, O.; Zhang, D.; Rosen, E.; et al. Gemini 2.5: Pushing the Frontier with Advanced Reasoning, Multimodality, Long Context, and Next Generation Agentic Capabilities. 2025. Available online: http://arxiv.org/abs/2507.06261 (accessed on 1 August 2025).

4. Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: New York, NY, USA, 2020; Volume 33, pp. 1877–1901.

5. Li, T.; Ma, X.; Zhuang, A.; Gu, Y.; Su, Y.; Chen, W. Few-shot In-context Learning on Knowledge Base Question Answering. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics, Toronto, ON, Canada, 9–14 July 2023 (Volume 1: Long Papers); Rogers, A., Boyd-Graber, J., Okazaki, N., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 6966–6980.

6. Coda-Forno, J.; Binz, M.; Akata, Z.; Botvinick, M.; Wang, J.X.; Schulz, E. Meta-in-context learning in large language models. In Proceedings of the Thirty-Seventh Conference on Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023.

7. Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Ichter, B.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-Thought Prompting Elicits Reasoning in Large Language Models. In Proceedings of the Advances in Neural Information Processing Systems; Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: New York, NY, USA, 2022; Volume 35, pp. 24824–24837.

8. Kojima, T.; Gu, S.S.; Reid, M.; Matsuo, Y.; Iwasawa, Y. Large Language Models are Zero-Shot Reasoners. In Proceedings of the Advances in Neural Information Processing Systems; Koyejo, S., Mohamed, S., Agarwal, A., Belgrave, D., Cho, K., Oh, A., Eds.; Curran Associates, Inc.: New York, NY, USA, 2022; Volume 35, pp. 22199–22213.

9. Fu, Y.; Peng, H.; Sabharwal, A.; Clark, P.; Khot, T. Complexity-Based Prompting for Multi-step Reasoning. In Proceedings of the Eleventh International Conference on Learning Representations, Kigali, Rwanda, 1–5 May 2023.

10. Liu, Y.; Peng, X.; Du, T.; Yin, J.; Liu, W.; Zhang, X. ERA-CoT: Improving Chain-of-Thought through Entity Relationship Analysis. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024 (Volume 1: Long Papers); Ku, L.W., Martins, A., Srikumar, V., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 8780–8794.

11. Wang, X.; Wei, J.; Schuurmans, D.; Le, Q.; Chi, E.; Narang, S.; Chowdhery, A.; Zhou, D. Self-Consistency Improves Chain of Thought Reasoning in Language Models. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

12. Li, Y.; Yuan, P.; Feng, S.; Pan, B.; Wang, X.; Sun, B.; Wang, H.; Li, K. Escape Sky-high Cost: Early-stopping Self-Consistency for Multi-step Reasoning. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

13. Weng, Y.; Zhu, M.; Xia, F.; Li, B.; He, S.; Liu, S.; Sun, B.; Liu, K.; Zhao, J. Large Language Models are Better Reasoners with Self-Verification. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 2550–2575.

14. Jiang, W.; Shi, H.; Yu, L.; Liu, Z.; Zhang, Y.; Li, Z.; Kwok, J. Forward-Backward Reasoning in Large Language Models for Mathematical Verification. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; Ku, L.W., Martins, A., Srikumar, V., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 6647–6661.

15. Cobbe, K.; Kosaraju, V.; Bavarian, M.; Chen, M.; Jun, H.; Kaiser, L.; Plappert, M.; Tworek, J.; Hilton, J.; Nakano, R.; et al. Training Verifiers to Solve Math Word Problems. 2021. Available online: http://arxiv.org/abs/2110.14168 (accessed on 1 August 2025).

16. Lu, P.; Qiu, L.; Chang, K.W.; Wu, Y.N.; Zhu, S.C.; Rajpurohit, T.; Clark, P.; Kalyan, A. Dynamic Prompt Learning via Policy Gradient for Semi-structured Mathematical Reasoning. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

17. Lightman, H.; Kosaraju, V.; Burda, Y.; Edwards, H.; Baker, B.; Lee, T.; Leike, J.; Schulman, J.; Sutskever, I.; Cobbe, K. Let’s Verify Step by Step. 2023. Available online: http://arxiv.org/abs/2305.20050 (accessed on 1 August 2025).

18. Suzgun, M.; Scales, N.; Schärli, N.; Gehrmann, S.; Tay, Y.; Chung, H.W.; Chowdhery, A.; Le, Q.; Chi, E.; Zhou, D.; et al. Challenging BIG-Bench Tasks and Whether Chain-of-Thought Can Solve Them. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2023, Toronto, ON, Canada, 9–14 July 2023; Rogers, A., Boyd-Graber, J., Okazaki, N., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 13003–13051.

19. Yang, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Li, C.; Liu, D.; Huang, F.; Wei, H.; et al. Qwen2.5 Technical Report. 2025. Available online: http://arxiv.org/abs/2412.15115 (accessed on 1 August 2025).

20. Yang, A.; Li, A.; Yang, B.; Zhang, B.; Hui, B.; Zheng, B.; Yu, B.; Gao, C.; Huang, C.; Lv, C.; et al. Qwen3 Technical Report. 2025. Available online: http://arxiv.org/abs/2505.09388 (accessed on 1 August 2025).

21. Mistral-AI. Mistral-Small-3.1. 2025. Available online: https://mistral.ai/news/mistral-small-3 (accessed on 26 July 2025).

22. Reid, M.; Savinov, N.; Teplyashin, D.; Lepikhin, D.; Lillicrap, T.; Alayrac, J.B.; Soricut, R.; Lazaridou, A.; Firat, O.; Schrittwieser, J.; et al. Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context. arXiv 2024, arXiv:2403.05530.

23. Stiennon, N.; Ouyang, L.; Wu, J.; Ziegler, D.; Lowe, R.; Voss, C.; Radford, A.; Amodei, D.; Christiano, P.F. Learning to summarize with human feedback. In Proceedings of the Advances in Neural Information Processing Systems; Larochelle, H., Ranzato, M., Hadsell, R., Balcan, M., Lin, H., Eds.; Curran Associates, Inc.: New York, NY, USA, 2020; Volume 33, pp. 3008–3021.

24. Sessa, P.G.; Dadashi, R.; Hussenot, L.; Ferret, J.; Vieillard, N.; Ramé, A.; Shariari, B.; Perrin, S.; Friesen, A.; Cideron, G.; et al. BOND: Aligning LLMs with Best-of-N Distillation. 2024. Available online: http://arxiv.org/abs/2407.14622 (accessed on 1 August 2025).

25. Huang, B.; Lu, S.; Wan, X.; Duan, N. Enhancing Large Language Models in Coding Through Multi-Perspective Self-Consistency. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024 (Volume 1: Long Papers); Ku, L.W., Martins, A., Srikumar, V., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 1429–1450.

26. Wang, A.; Song, L.; Tian, Y.; Peng, B.; Jin, L.; Mi, H.; Su, J.; Yu, D. Self-Consistency Boosts Calibration for Math Reasoning. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2024, Miami, FL, USA, 12–16 November 2024; Al-Onaizan, Y., Bansal, M., Chen, Y.N., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 6023–6029.

27. Aggarwal, P.; Madaan, A.; Yang, Y.; Mausam. Let’s Sample Step by Step: Adaptive-Consistency for Efficient Reasoning and Coding with LLMs. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 12375–12396.

28. Wang, H.; Prasad, A.; Stengel-Eskin, E.; Bansal, M. Soft Self-Consistency Improves Language Models Agents. In Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics, Bangkok, Thailand, 11–16 August 2024 (Volume 2: Short Papers); Ku, L.W., Martins, A., Srikumar, V., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 287–301.

29. Zhou, Y.; Zhu, Y.; Antognini, D.; Kim, Y.; Zhang, Y. Paraphrase and Solve: Exploring and Exploiting the Impact of Surface Form on Mathematical Reasoning in Large Language Models. In Proceedings of the 2024 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Mexico City, Mexico, 16–21 June 2024 (Volume 1: Long Papers); Duh, K., Gomez, H., Bethard, S., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 2793–2804.

30. Chen, W.; Wang, W.; Chu, Z.; Ren, K.; Zheng, Z.; Lu, Z. Self-Para-Consistency: Improving Reasoning Tasks at Low Cost for Large Language Models. In Proceedings of the Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 11–16 August 2024; Ku, L.W., Martins, A., Srikumar, V., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2024; pp. 14162–14167.

31. Hendrycks, D.; Burns, C.; Kadavath, S.; Arora, A.; Basart, S.; Tang, E.; Song, D.; Steinhardt, J. Measuring Mathematical Problem Solving with the MATH Dataset. In Proceedings of the Neural Information Processing Systems Track on Datasets and Benchmarks, Virtual, 6–14 December 2021; Vanschoren, J., Yeung, S., Eds.; Volume 1.

32. Zheng, C.; Zhou, H.; Meng, F.; Zhou, J.; Huang, M. Large Language Models Are Not Robust Multiple Choice Selectors. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

| Setting | Model | Dataset | Baseline: SC | Baseline: SV | Baseline: FOBAR | ACR: Sum | ACR: Product | ACR: Fobar | ACR: SC-Boost |
|---|---|---|---|---|---|---|---|---|---|
| Few-shot | Qwen2.5-7B | GSM8K | 91.96 | 91.81 | 93.25 | 92.34 | 91.96 | 92.49 | 92.72 |
| Few-shot | Qwen2.5-7B | TabMWP | 90.40 | 91.73 | 92.53 | 91.73 | 92.00 | 92.00 | 91.73 |
| Few-shot | Qwen2.5-7B | Color | 91.60 | 91.20 | 92.40 | 92.00 | 92.00 | 92.00 | 91.60 |
| Few-shot | Gemini-1.5-Flash-8B | GSM8K | 86.88 | 87.26 | 87.64 | 88.40 | 88.17 | 88.48 | 88.25 |
| Few-shot | Gemini-1.5-Flash-8B | TabMWP | 83.36 | 83.49 | 84.02 | 84.69 | 84.29 | 84.55 | 84.42 |
| Few-shot | Gemini-1.5-Flash-8B | Color | 94.00 | 94.40 | 94.80 | 95.20 | 95.60 | 95.60 | 95.60 |
| Few-shot | Gemini-1.5-Flash-8B | Word | 66.80 | 62.80 | 68.40 | 67.60 | 67.20 | 67.60 | 67.20 |
| Few-shot | Qwen3-14B | GSM8K | 93.71 | 93.93 | 93.86 | 94.24 | 93.93 | 94.09 | 94.01 |
| Few-shot | Qwen3-14B | TabMWP | 95.87 | 97.33 | 97.07 | 97.07 | 97.20 | 97.20 | 97.07 |
| Few-shot | Qwen3-14B | Color | 98.80 | 98.00 | 98.80 | 98.80 | 98.80 | 98.80 | 98.80 |
| Few-shot | Mistral-Small-24B | GSM8K | 94.84 | 94.77 | 94.92 | 94.91 | 94.91 | 94.91 | 95.29 |
| Few-shot | Mistral-Small-24B | TabMWP | 92.80 | 89.33 | 90.93 | 91.87 | 91.87 | 91.87 | 91.87 |
| Few-shot | Mistral-Small-24B | Color | 98.40 | 97.60 | 97.60 | 98.00 | 98.00 | 98.00 | 98.00 |
| Zero-shot | Gemini-2.5-Flash | MATH500 | 91.60 | 91.00 | 91.20 | 92.00 | 91.40 | 91.40 | 91.60 |

| Model | SC | SV | FOBAR | ACR () | ACR (Random) |
|---|---|---|---|---|---|
| Qwen2.5-7B | 91.96 | 91.81 | 93.25 | 92.79 ( = 0.25) | 92.87 ( = 0.25) |
| Mistral-Small-24B | 94.84 | 94.77 | 94.92 | 95.29 ( = 0.25) | 95.06 ( = 0.25) |

| Dataset | Model | SV | SO |
|---|---|---|---|
| GSM8K | Qwen2.5-7B | 91.81 | 89.83 |
| GSM8K | Gemini-1.5-Flash-8B | 87.26 | 87.79 |
| GSM8K | Qwen3-14B | 93.93 | 93.86 |
| GSM8K | Mistral-Small-24B | 94.77 | 94.00 |
| TabMWP | Qwen2.5-7B | 91.73 | 87.60 |
| TabMWP | Gemini-1.5-Flash-8B | 83.49 | 84.02 |
| TabMWP | Qwen3-14B | 97.33 | 96.53 |
| TabMWP | Mistral-Small-24B | 89.33 | 89.20 |
| Color | Qwen2.5-7B | 91.20 | 91.20 |
| Color | Gemini-1.5-Flash-8B | 94.40 | 90.00 |
| Color | Qwen3-14B | 98.00 | 98.00 |
| Color | Mistral-Small-24B | 97.60 | 97.20 |
| MATH500 | Gemini-2.5-Flash | 91.00 | 91.80 |

| Dataset | Model | # of Samples: FOBAR | # of Samples: ACR | Inference Calls: FOBAR | Inference Calls: ACR | Inference Calls: Reduction (%) | Accuracy Gain (%): FOBAR | Accuracy Gain (%): ACR | Efficiency |
|---|---|---|---|---|---|---|---|---|---|
| GSM8K | Qwen2.5-7B | 665 | 254 | 9615 | 1270 | 86.79 | 1.29 | 0.38 | 2.21 |
| GSM8K | Gemini-1.5-Flash-8B | 403 | 318 | 6685 | 1590 | 76.22 | 0.76 | 1.52 | 8.41 |
| GSM8K | Qwen3-14B | 104 | 90 | 1345 | 450 | 66.54 | 0.15 | 0.53 | 10.52 |
| GSM8K | Mistral-Small | 277 | 93 | 3605 | 465 | 87.10 | 0.08 | 0.07 | 7.04 |
| TabMWP | Qwen2.5-7B | 288 | 143 | 4020 | 715 | 82.21 | 2.13 | 1.33 | 3.52 |
| TabMWP | Gemini-1.5-Flash-8B | 214 | 178 | 3500 | 890 | 74.57 | 0.66 | 1.33 | 7.91 |
| TabMWP | Qwen3-14B | 94 | 74 | 1310 | 370 | 71.76 | 1.20 | 1.20 | 3.53 |
| TabMWP | Mistral-Small | 219 | 100 | 3155 | 500 | 84.15 | −1.87 | −0.93 | - |
| Color | Qwen2.5-7B | 87 | 31 | 1020 | 155 | 84.80 | 0.80 | 0.40 | 3.29 |
| Color | Gemini-1.5-Flash-8B | 40 | 9 | 535 | 45 | 91.59 | 0.80 | 1.20 | 17.83 |
| Color | Qwen3-14B | 11 | 8 | 120 | 40 | 66.67 | 0.00 | 0.00 | - |
| Color | Mistral-Small | 44 | 4 | 460 | 20 | 95.65 | −0.80 | −0.40 | - |
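The derived columns of the efficiency table can be reproduced with a short calculation. The sketch below is an inferred reading rather than a stated definition: it assumes that the reduction is the relative drop in inference calls and that the efficiency value is the ratio of accuracy gain per inference call (ACR over FOBAR), which matches the Gemini-1.5-Flash-8B row on GSM8K. Returning `None` when a gain is not positive is also an assumption, mirroring the "-" entries. The function names are hypothetical.

```python
def call_reduction(fobar_calls, acr_calls):
    """Relative reduction (%) in inference calls of ACR versus FOBAR."""
    return 100 * (1 - acr_calls / fobar_calls)


def relative_efficiency(fobar_gain, fobar_calls, acr_gain, acr_calls):
    """Accuracy gain per inference call of ACR, relative to FOBAR.

    Assumption: the ratio is undefined (None) unless both gains are positive.
    """
    if fobar_gain <= 0 or acr_gain <= 0:
        return None
    return (acr_gain / acr_calls) / (fobar_gain / fobar_calls)


# GSM8K, Gemini-1.5-Flash-8B row: 6685 vs. 1590 calls, gains 0.76 vs. 1.52.
print(round(call_reduction(6685, 1590), 2))                   # 76.22
print(round(relative_efficiency(0.76, 6685, 1.52, 1590), 2))  # 8.41
```

Rows computed from the rounded gains in the table can differ slightly from the reported efficiency values, presumably because the reported values use unrounded accuracy gains.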

| Dataset | Model | Incorrect (%) | Gold Missing (%) |
|---|---|---|---|
| GSM8K | Qwen2.5-7B | 8.04 | 31.13 |
| GSM8K | Gemini-1.5-Flash-8B | 13.12 | 41.04 |
| GSM8K | Qwen3-14B | 6.29 | 63.86 |
| GSM8K | Mistral-Small | 5.16 | 42.65 |
| TabMWP | Qwen2.5-7B | 9.60 | 9.72 |
| TabMWP | Gemini-1.5-Flash-8B | 16.64 | 51.20 |
| TabMWP | Qwen3-14B | 4.13 | 29.03 |
| TabMWP | Mistral-Small | 7.20 | 29.63 |
| Color | Qwen2.5-7B | 8.40 | 4.76 |
| Color | Gemini-1.5-Flash-8B | 6.00 | 0.00 |
| Color | Qwen3-14B | 1.20 | 33.33 |
| Color | Mistral-Small | 1.60 | 0.00 |

| Dataset | Model | SC O & ACR O (%) | SC O & ACR X (%) | SC X & ACR O (%) | SC X & ACR X (%) |
|---|---|---|---|---|---|
| GSM8K | Qwen2.5-7B | 90.14 | 1.82 | 2.20 | 5.84 |
| GSM8K | Gemini-1.5-Flash-8B | 85.60 | 1.28 | 2.81 | 10.31 |
| GSM8K | Qwen3-14B | 93.18 | 0.53 | 1.06 | 5.23 |
| GSM8K | Mistral-Small | 94.23 | 0.61 | 0.68 | 4.48 |
| TabMWP | Qwen2.5-7B | 88.27 | 2.13 | 3.47 | 6.13 |
| TabMWP | Gemini-1.5-Flash-8B | 81.63 | 1.73 | 3.06 | 13.58 |
| TabMWP | Qwen3-14B | 95.20 | 0.67 | 1.87 | 2.26 |
| TabMWP | Mistral-Small | 91.07 | 1.73 | 0.80 | 6.40 |
| Color | Qwen2.5-7B | 90.40 | 1.20 | 1.60 | 6.80 |
| Color | Gemini-1.5-Flash-8B | 94.00 | 0.00 | 1.20 | 4.80 |
| Color | Qwen3-14B | 98.80 | 0.00 | 0.00 | 1.20 |
| Color | Mistral-Small | 98.00 | 0.40 | 0.00 | 1.60 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jeong, E.; Choi, Y.S. ACR: Adaptive Confidence Re-Scoring for Reliable Answer Selection Among Multiple Candidates. Appl. Sci. 2025, 15, 9587. https://doi.org/10.3390/app15179587
