1. Introduction

Time series forecasting is essential in fields such as economics, energy, retail, and environmental sciences, where decisions and plans depend on accurate predictions. Building robust forecasting models is especially important for competition-grade datasets such as M3 and M4, which contain a wide range of real-world series with different patterns and frequencies.

Traditional statistical methods such as the Auto-Regressive Integrated Moving Average (ARIMA) model have long been used because they are easy to interpret and rest on a strong theoretical basis. More recently, the Ata method has gained attention for its simplicity, computational efficiency, and adaptability to both seasonal and non-seasonal patterns. However, no single forecasting method performs optimally across all types of time series data, which underscores the need for adaptive methods that account for the specific features of each series.

This study proposes a hybrid forecasting framework that integrates the best variants of the Ata method with ARIMA, using a data-driven optimization process based on grid search and in-sample sMAPE minimization for model selection. By evaluating multiple variations of the Ata model for each series and selecting those with the best in-sample performance, we aim to construct series-specific forecasts that are both accurate and reliable. The proposed method is tested on the M3 and M4 datasets, and its performance is evaluated using the official accuracy measures of the two competitions. This research contributes to the literature by demonstrating how statistical modeling and adaptive model selection can be effectively integrated to improve forecasting accuracy across different time series.

2. Literature Review

Time series forecasting has become an important field that combines statistics, machine learning, and decision sciences, driven by a growing need for accurate predictions in areas such as economics, healthcare, and environmental science. As real-world problems become more complex and more data become available, the need for robust and flexible forecasting methods has grown further. Over the years, forecasting methods have been shaped by both theoretical progress and large-scale empirical tests, especially international benchmark competitions. The M3 and M4 forecasting competitions have been particularly influential because they introduced new methods and enabled more thorough comparisons. These competitions have allowed researchers to test, compare, and improve their methods, which has accelerated progress in the field.

The M3 competition, which was organized by Makridakis and Hibon [

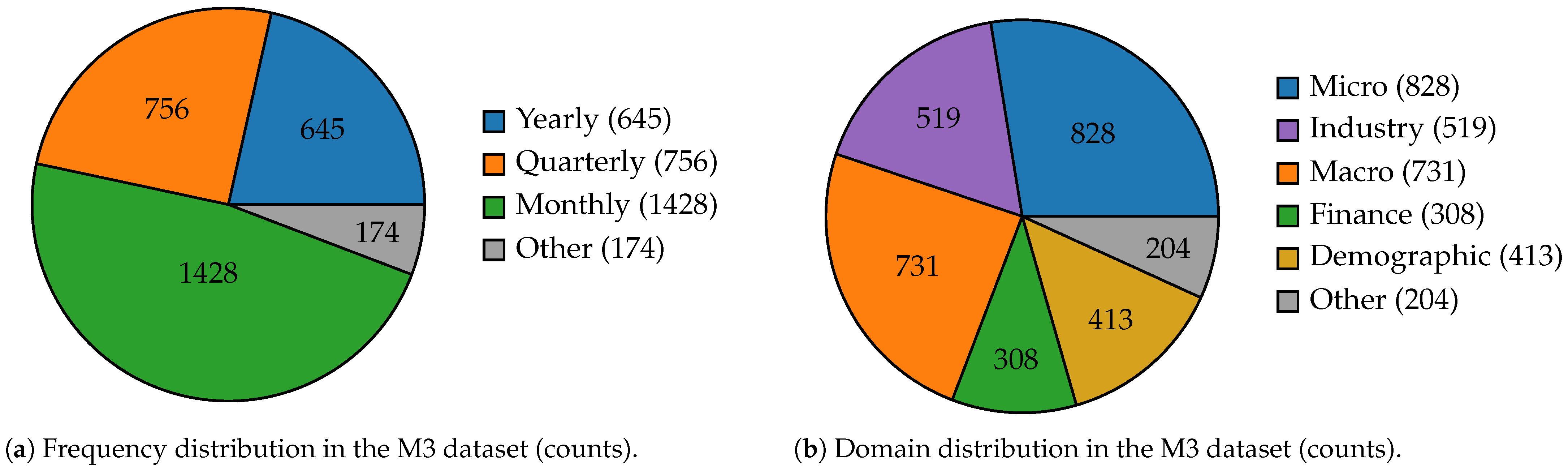

1], was significant because it examined 24 different forecasting methods on 3003 time series. One of the most striking findings was that relatively simple statistical models—such as exponential smoothing and ARIMA—performed on par with, and sometimes even outperformed, more complex and sophisticated approaches. This went against the common belief that making a model more complex always makes it more accurate at predicting. The M3 competition showed that complexity for its own sake is not always useful and that classical statistical methods are still useful today. Because of this, the M3 competition resulted in a lot of research into hybrid and ensemble methods that try to combine the ease of use and understanding of traditional models with the power and flexibility of more advanced ones. This change has contributed to the creation of forecasting frameworks that are useful for researchers. As illustrated in

Figure 1, the M3 dataset spans multiple frequencies and domains, providing a rich ground for testing and comparing forecasting methods.

Building on the foundation laid by M3, the M4 competition [2,3] expanded the scope substantially, including 100,000 time series and 61 forecasting methods spanning a wide array of statistical, machine learning, and hybrid approaches. The unprecedented scale and diversity of the M4 dataset allowed a more comprehensive assessment of forecasting methods across different domains and time series characteristics. As illustrated in Figure 2, the dataset covers multiple frequencies (yearly: 23,000 series, 23%; quarterly: 24,000, 24%; monthly: 48,000, 48%; weekly: 359, 0.36%; daily: 4,227, 4.23%; hourly: 414, 0.41%) and spans diverse domains (micro, industry, macro, finance, demographic, and other), thereby capturing heterogeneous structures for model evaluation. The results of M4 confirmed that no single method consistently dominates across all types of time series. Instead, ensemble and combination methods, particularly those that integrate statistical and machine learning models, tended to deliver the most robust and reliable performance. This finding has important practical implications: flexibility and adaptability are essential for accurate predictions in diverse settings. The M4 competition also showed how important reproducibility and openness are in forecasting research, establishing new standards for future studies.

One of the earliest and most influential works in this line of research was Zhang [4], who introduced a hybrid ARIMA and Artificial Neural Network (ANN) model. This pioneering study established the foundation for combining linear time series components modeled by ARIMA with the nonlinear learning capacity of neural networks, achieving better performance than either method separately. Building on this foundation, Atesongun and Gulsen [5] extended the ARIMA–ANN framework with a structured hybrid forecasting model, confirming its efficiency across multiple real-world datasets. Similarly, Tsoku et al. [6] proposed a hybrid ARIMA–ANN model to forecast South African crude oil prices, showing that the integration of Box–Jenkins methodology with neural networks enhances predictive accuracy in volatile financial markets. In a related contribution, Alshawarbeh et al. [7] applied the ARIMA–ANN hybrid approach to high-frequency datasets, underscoring its robustness in handling rapidly changing series and reducing forecast errors in complex environments. More recently, Smyl [8], the overall winner of the M4 competition, proposed a hybrid method of exponential smoothing (ETS) and Recurrent Neural Networks (RNNs). This study provided compelling evidence that hybrid models, when carefully designed, can significantly outperform both classical statistical methods and standalone neural networks, setting a new benchmark for large-scale forecasting competitions. Together, these works demonstrate the enduring importance of hybrid statistical–machine learning approaches as benchmarks and their adaptability across diverse forecasting contexts.

Recent research has emphasized hybrid forecasting frameworks that combine traditional statistical models with regression-based or machine learning approaches to enhance predictive accuracy. For example, Iftikhar et al. [9] modeled and forecasted carbon dioxide emissions by integrating regression techniques with time series models. Their study demonstrated that a hybrid structure can capture both linear and nonlinear dynamics, offering more reliable forecasts in environmental applications. Similarly, Sherly et al. [10] proposed a hybrid ARIMA–Prophet model, showing that the integration of Prophet’s trend–seasonality decomposition with ARIMA’s statistical rigor yields superior accuracy compared to either method alone. Ampountolas [11] conducted a comparative analysis of machine learning, hybrid, and deep learning forecasting methods in European financial markets and Bitcoin, highlighting the competitive advantage of hybrid designs in complex financial domains. These studies show that hybrid designs are not restricted to financial or technological data but can also be successfully applied in sustainability and policy-relevant domains.

The integration of deep learning into hybrid forecasting has further advanced the field. Qin et al. [12] proposed a BiLSTM–ARIMA hybrid optimized with a whale-driven metaheuristic algorithm for financial forecasting. Their results showed significant improvements in capturing long-term dependencies and market volatility. Likewise, Çınarer [13] presented a hybrid deep learning and stacking ensemble model for global temperature forecasting, effectively addressing the challenges of nonlinear climate dynamics. Dong and Zhou [14] introduced a novel hybrid forecasting framework that integrates the ARIMA model with Convolutional Neural Networks (CNN) and Long Short-Term Memory (LSTM) networks, combined with Complete Ensemble Empirical Mode Decomposition with Adaptive Noise (CEEMDAN) and Sample Entropy (SE) techniques for financial forecasting. Their approach demonstrated superior performance by leveraging signal decomposition, statistical modeling, and deep neural networks in a unified structure. These contributions underline that integrating deep learning with statistical backbones allows hybrid models to operate effectively across both financial and environmental domains.

Hybrid forecasting models have also proven valuable in areas where interpretability and domain-specific insights are crucial. Iacobescu and Susnea [15] developed an ARIMA–ANN framework for crime risk forecasting, incorporating socioeconomic and environmental indicators. Their approach not only improved accuracy but also enhanced interpretability, enabling policymakers to connect predictions with underlying risk factors. In the domain of financial markets, Liagkouras and Metaxiotis [16] combined LSTM networks with sentiment analysis for stock market forecasting. This hybrid captured both quantitative and qualitative signals, highlighting the value of integrating textual data sources with numerical series. Finally, Liu et al. [17] introduced a multi-scale hybrid forecasting framework that combined traditional statistical models with deep learning to handle heterogeneous time series across varying temporal resolutions. Their results emphasized scalability and adaptability, making the framework applicable to multi-domain forecasting challenges.

Model selection has become a major topic in modern forecasting research because time series data are inherently diverse. Since structures, frequencies, and external factors vary widely across series, flexible methods are needed that can choose the best model for each series based on its own features. For instance, Karim et al. [18] showed the value of adding external data sources such as Google Trends to models that forecast macroeconomic indicators in Australia. Their research showed that combining relevant external information with dynamic model selection can substantially improve forecast accuracy, especially in rapidly changing environments. Similarly, Hananya and Katz [19] proposed dynamic model selection frameworks that automatically select the best machine learning model in real time based on changing series characteristics, a further step in adaptive forecasting. Together, these studies reflect a growing recognition that model selection is not a one-time decision but an ongoing process that must adapt to new data and external factors. Moreover, recent advances in artificial intelligence have begun to influence model selection. Wei et al. [20] proposed a new approach that automates the recommendation of forecasting models using large language models (LLMs), simplifying model selection by exploiting their natural language processing capabilities. This points to a future in which artificial intelligence (AI) not only builds models but also helps people choose and use them effectively. It opens new ways to combine expert knowledge with data-driven insights and could make advanced forecasting tools accessible to more users.

Alongside these developments, the Ata method has become an important approach in statistical forecasting. It was first introduced as an alternative to Holt’s linear trend method [21] and has since been recognized and tested in competitive settings [22,23]. Its ability to handle various types of time series with minimal parameter tuning makes it particularly appealing in practice. Furthermore, its simplicity, interpretability, and accuracy make it a valuable candidate for hybrid and ensemble forecasting frameworks, and its rising popularity in both academic and applied settings reflects its usefulness.

Based on these findings, this study proposes a hybrid forecasting framework based on model selection, namely the Ata-best model, and examines the performance of the Ata and ARIMA methods on the M3 and M4 datasets. The goal is to improve forecasting accuracy at the individual series level by systematically identifying the best-performing model for each time series according to the in-sample symmetric mean absolute percentage error (sMAPE). The approach builds on the empirical results of major forecasting competitions and incorporates recent developments in model selection and hybridization, making it applicable to time series forecasting in both environmental and general settings. Consequently, the study aims to show how adaptive, data-driven model selection can help achieve both accuracy and interpretability.

To highlight the comparative strengths of the two statistical approaches employed in this study, Table 1 provides a structured summary of the ARIMA and Ata methods, covering their modeling assumptions, adaptability, computational efficiency, and practical limitations. Presenting their advantages and disadvantages side by side makes clear that while ARIMA offers a well-established framework with a strong theoretical foundation, the Ata method is a flexible and adaptive alternative that is particularly suitable for heterogeneous or unstable series. This comparison clarifies the methodological reasons for selecting these two models and emphasizes their complementary potential in hybrid forecasting frameworks.

3. Methodology

In this study, a model selection-based hybrid approach is used for time series forecasting. For each time series, both the Ata and ARIMA models are applied. For the ARIMA model, we used the official forecasts published for the M3 and M4 competitions. For the Ata method, we select two versions of the Ata model: the one with the lowest in-sample sMAPE (Ata-lowest) and the one with the median in-sample sMAPE (Ata-median). A grid search algorithm was used to identify these models, given the differences in frequency and structural characteristics across the time series, and to determine how to weight the contributions of the ARIMA- and Ata-based models in the hybrid structure. The goal of this strategy is to improve overall forecast accuracy by finding the best method, i.e., “Ata-best”, for each series based on its own features. This section presents the mathematical foundations of the employed methods and the construction of the hybrid framework, starting with the fundamental formulations of the ARIMA and Ata methods.

Before introducing the individual forecasting methods, the overall structure of the hybrid framework is shown in Figure 3. As shown, the M3 and M4 datasets are modeled using multiple variations of the Ata method and the official ARIMA forecasts from both competitions. A hybrid framework is then constructed through weight optimization via grid search, producing the final hybrid forecasts. Additionally, model selection procedures, such as in-sample sMAPE evaluation and alternative aggregation strategies (Ata-lowest and Ata-median), are incorporated to ensure robustness in performance evaluation.

3.1. ARIMA

The ARIMA model, first formalized by Box and Jenkins [24], is a fundamental statistical method for time series forecasting and one of the most traditional and widely used methods for modeling and forecasting linear relationships in univariate time series. The model is denoted ARIMA($p$, $d$, $q$), where $p$ is the order of the autoregressive (AR) component, $d$ is the number of differences needed to reach stationarity, and $q$ is the order of the moving average (MA) component. The general ARIMA model is expressed as follows:

$$\phi(B)\,(1 - B)^d\, y_t = \theta(B)\,\varepsilon_t,$$

where $y_t$ is the value at time $t$, $B$ is the backshift operator ($B y_t = y_{t-1}$), $(1 - B)^d$ is the differencing operator applied $d$ times, $\phi(B)$ and $\theta(B)$ are polynomials in $B$ of orders $p$ and $q$, and $\varepsilon_t$ is a white-noise error.

The ARIMA model captures linear relationships in univariate time series that are stationary after differencing, by combining autoregressive, differencing, and moving average components. Model identification usually entails testing for stationarity and determining the appropriate order of each component. Although ARIMA is popular for its simplicity and its effectiveness with linear patterns, it has limitations in handling nonlinear dynamics, structural breaks, or external regressors. In such complex scenarios, these limitations often lead to the adoption of more sophisticated or hybrid forecasting techniques.

3.2. Ata Method

The Ata method is an innovative statistical forecasting approach built on traditional time series forecasting techniques, most notably Holt’s linear trend method. Ata was first introduced as the Modified Holt’s Linear Trend Method [21] and was later formalized as a modeling approach distinct from traditional models such as ARIMA and exponential smoothing [22]. The main advantage of the Ata method over traditional exponential smoothing is that its level and trend parameters can be adjusted easily when the model assumptions hold, which makes it more accurate for time series with complicated trend structures. Although the Ata method builds on the ideas behind classical exponential smoothing, it stands out because its smoothing weights change over time (they depend on the time index $t$ rather than being constant), which lets the model react quickly to sudden changes in the data. Ata can be used in both additive and multiplicative forms, and a damping factor can be applied to the trend component to control how long the trend persists.

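The formal mathematical representation of the Ata model is provided below for both additive and multiplicative forms. Here, $S_t$ denotes the level component, $T_t$ the trend component, and $\hat{y}_t(h)$ the $h$-step-ahead forecast made at time $t$ for the observed series $y_t$. Both forms include a damping parameter $\phi$, with $\phi = 1$ for non-dampened data.

Additive Ata model:

$$S_t = \frac{p}{t}\,y_t + \frac{t-p}{t}\left(S_{t-1} + \phi\,T_{t-1}\right)$$

$$T_t = \frac{q}{t}\left(S_t - S_{t-1}\right) + \frac{t-q}{t}\,\phi\,T_{t-1}$$

$$\hat{y}_t(h) = S_t + \left(\phi + \phi^2 + \cdots + \phi^h\right) T_t$$

Multiplicative Ata model:

$$S_t = \frac{p}{t}\,y_t + \frac{t-p}{t}\,S_{t-1}\,T_{t-1}^{\phi}$$

$$T_t = \frac{q}{t}\,\frac{S_t}{S_{t-1}} + \frac{t-q}{t}\,T_{t-1}^{\phi}$$

$$\hat{y}_t(h) = S_t\,T_t^{\phi + \phi^2 + \cdots + \phi^h}$$

where $n$ is the length of the series, $p \in \{1, \ldots, n\}$, $q \in \{0, 1, \ldots, p\}$, and $\phi \in (0, 1]$.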
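The recursions above can be sketched directly in Python. This is a hedged illustration, not the ATAforecasting implementation used in this study. It covers only the non-seasonal level and trend equations for fixed $p$, $q$, and $\phi$, and it omits the search over these parameters and any seasonal adjustment. It also assumes an initialization ($S_t = y_t$ for $t \le p$; $T_t = y_t - y_{t-1}$, or $y_t / y_{t-1}$ in the multiplicative form, for $1 < t \le q$; $T_1 = 0$ or $1$) that should be checked against the package before reuse. The name `ata_forecast` is hypothetical.

```python
def ata_forecast(y, p, q, phi=1.0, h=1, multiplicative=False):
    """Sketch of the (damped) Ata trend recursions for fixed p, q, phi.

    Assumed initialisation: S_t = y_t for t <= p; T_t = y_t - y_{t-1}
    (or y_t / y_{t-1}) for 1 < t <= q; T_1 = 0 (additive) or 1 (multiplicative).
    The multiplicative form assumes strictly positive data.
    """
    n = len(y)
    if not (1 <= p <= n and 0 <= q <= p and 0 < phi <= 1 and h >= 1):
        raise ValueError("need 1 <= p <= n, 0 <= q <= p, 0 < phi <= 1 and h >= 1")
    level, trend = y[0], (1.0 if multiplicative else 0.0)  # values at t = 1
    for t in range(2, n + 1):  # t is 1-based to match the formulas
        obs, prev_obs = y[t - 1], y[t - 2]
        # Level update: weight p/t on the new observation once t > p
        if t <= p:
            new_level = obs
        elif multiplicative:
            new_level = (p / t) * obs + ((t - p) / t) * level * trend ** phi
        else:
            new_level = (p / t) * obs + ((t - p) / t) * (level + phi * trend)
        # Trend update: weight q/t on the latest level change once t > q
        if t <= q:
            new_trend = obs / prev_obs if multiplicative else obs - prev_obs
        elif multiplicative:
            new_trend = (q / t) * (new_level / level) + ((t - q) / t) * trend ** phi
        else:
            new_trend = (q / t) * (new_level - level) + ((t - q) / t) * phi * trend
        level, trend = new_level, new_trend
    forecasts = []
    for k in range(1, h + 1):
        damp = sum(phi ** j for j in range(1, k + 1))  # phi + phi^2 + ... + phi^k
        forecasts.append(level * trend ** damp if multiplicative else level + damp * trend)
    return forecasts


print(ata_forecast([3.0, 5.0, 4.0], p=1, q=0, h=2))  # both values equal the mean, 4, up to rounding
```

With $p = 1$ and $q = 0$ the trend stays at zero and the level recursion reduces to the running mean of the observations. This illustrates how the weight $p/t$ placed on each new observation depends on the time index.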

4. Data and Preprocessing

In this study, we used the M3 and M4 competition datasets, two of the most well-known and widely used time series datasets in the forecasting literature. Because of their size and diversity, these datasets are frequently used as benchmarks to assess forecasting techniques. Both datasets include a wide range of real-world time series, with differences in length and frequency as well as in the underlying domains and structural features. This variety offers a comprehensive framework for evaluating the generalizability, flexibility, and robustness of forecasting models in various application domains.

There are 3003 time series in the M3 dataset, systematically categorized by frequency and domain. The series are distributed across six domains: micro, macro, industry, finance, demographic, and other. The M3 dataset also includes four main frequency types: yearly, quarterly, monthly, and other. This dual categorization ensures that the dataset captures a broad range of real-world temporal dynamics and forecasting challenges, from short-term to long-term horizons and from economic indicators to demographic trends. With 100,000 time series, the M4 dataset provides an even larger and more diverse collection. Like M3, it contains series from a variety of domains, including demographic, industry, finance, and macro. M4 further broadens the frequency spectrum, offering series at hourly, daily, weekly, monthly, quarterly, and yearly intervals. This makes it possible to assess forecasting models over a far larger range of application domains and temporal resolutions.

The primary objective of this research was to establish a hybrid statistical modeling framework that combines and adaptively chooses the best forecasting models for each individual time series. To achieve this, we concentrated on two popular statistical forecasting techniques: the Ata method, a more modern and adaptable technique intended for automated and effective forecasting, and the well-known ARIMA model, which has long been a benchmark method in the time series literature. A crucial part of our methodology was a performance-driven model selection approach for the Ata method based on grid search and in-sample sMAPE minimization. For each time series, we evaluated seven Ata model variations: (1) a model without a trend parameter; (2) a model with a trend parameter in additive form; (3) a model with a trend parameter in multiplicative form; (4) a level-fixed additive model (i.e., choosing the optimum level and trend parameters with the additive model); (5) a level-fixed multiplicative model (i.e., choosing the optimum level and trend parameters with the multiplicative model); (6) a simple average of models (1) and (2); and (7) a simple average of models (1) and (3). Selection was based on the in-sample sMAPE, and for each series we identified two representative Ata models: the one with the lowest sMAPE (“Ata-lowest”) and the one with the median sMAPE (“Ata-median”). This dual selection strategy captures both the optimal and a more robust, central tendency of Ata model performance.
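The selection of the two representative variants can be sketched as follows. The sketch assumes that the in-sample sMAPE of each of the seven variants has already been computed. The variant names and the numbers in the usage example are hypothetical placeholders, not results from this study. With an odd number of candidates (seven here), the median model is unambiguous. With an even number, the sketch takes the lower-middle one, which is our assumption.

```python
def pick_lowest_and_median(insample_smape):
    """Return (lowest_name, median_name) from a {variant_name: in-sample sMAPE} dict."""
    if not insample_smape:
        raise ValueError("no candidate models")
    # Rank variants from best (lowest sMAPE) to worst; ties keep insertion order
    ranked = sorted(insample_smape, key=insample_smape.get)
    lowest = ranked[0]
    median = ranked[(len(ranked) - 1) // 2]  # middle rank; lower-middle if the count is even
    return lowest, median


# Hypothetical in-sample sMAPE values for the seven variants of one series (not study results)
scores = {
    "no_trend": 12.4,
    "additive_trend": 10.9,
    "multiplicative_trend": 11.3,
    "level_fixed_additive": 10.2,
    "level_fixed_multiplicative": 10.7,
    "avg_1_2": 11.0,
    "avg_1_3": 11.8,
}
print(pick_lowest_and_median(scores))  # ('level_fixed_additive', 'avg_1_2')
```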

For the ARIMA component, although we first experimented with auto.arima-based forecasts, we ultimately used the official ARIMA forecasts published as part of the M3 and M4 competition results. Empirical comparisons consistently showed that these benchmark ARIMA forecasts achieved better out-of-sample accuracy than our own implementations, and using them ensures the dependability and comparability of our hybrid framework.

To integrate the strengths of both the Ata and ARIMA models, that is, to obtain the “Ata-best” model, we employed a grid search algorithm to determine the optimal combination weights for each time series. Because series differ substantially in frequency, length, and domain, this weight optimization was performed separately for each series to minimize the forecasting error. This customized approach allows the hybrid model to adapt flexibly to the distinct features of each time series and thus maximize predictive accuracy. All computations were performed in R (version 4.4.3) through the RStudio environment (version 2024.12.1+563). The Ata method was implemented using the ATAforecasting package (version 0.0.60). The code was structured to facilitate reproducibility and to handle a wide range of time series efficiently.
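The following is a minimal sketch of the weight grid search. It assumes that in-sample fitted values are available for each component (e.g., ARIMA, Ata-lowest, Ata-median) as equal-length lists. The weight step of 0.1 and the constraint that weights are non-negative and sum to one are illustrative assumptions rather than settings reported in this study. `grid_search_weights` is a hypothetical helper written in Python, not the R code used here.

```python
from itertools import product


def smape(actual, forecast):
    """sMAPE in percent (M4 form): mean of 200 * |y - f| / (|y| + |f|); assumes positive data."""
    terms = [200.0 * abs(a - f) / (abs(a) + abs(f)) for a, f in zip(actual, forecast)]
    return sum(terms) / len(terms)


def grid_search_weights(actual, components, step=0.1):
    """Search non-negative weights summing to 1 that minimise the sMAPE of the weighted combination.

    components: list of fitted-value lists, one per model, each as long as `actual`.
    """
    k = round(1 / step)  # number of grid increments per weight
    best_weights, best_error = None, float("inf")
    for counts in product(range(k + 1), repeat=len(components)):
        if sum(counts) != k:
            continue  # keep only grid points on the simplex (weights sum to 1)
        weights = [c / k for c in counts]
        combo = [sum(w * comp[i] for w, comp in zip(weights, components))
                 for i in range(len(actual))]
        error = smape(actual, combo)
        if error < best_error:
            best_weights, best_error = weights, error
    return best_weights, best_error


# Hypothetical fitted values: the first component reproduces the data exactly
actual = [10.0, 20.0, 30.0]
fitted = [[10.0, 20.0, 30.0], [12.0, 18.0, 33.0], [8.0, 25.0, 27.0]]
print(grid_search_weights(actual, fitted))  # ([1.0, 0.0, 0.0], 0.0)
```

The exhaustive grid is cheap for three components (at most $11^3$ candidates at step 0.1). For many more components, a constrained optimizer would scale better.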

Hence, this study’s methodological pipeline is characterized by thorough statistical foundations, systematic data-driven model selection, and repeatable validation processes. Our hybrid approach takes advantage of the scale and diversity of the M3 and M4 datasets to offer a strong foundation for enhancing time series forecasting.

5. Discussion

This study discusses the reasons for using the ARIMA and Ata methods within the forecasting framework, while also considering their individual strengths and limitations. ARIMA has long been considered a benchmark statistical model for time series forecasting because it has an established theoretical basis and captures linear relationships in a straightforward manner through autoregressive, differencing, and moving average components. Its interpretability and analytical rigor make it a standard reference point in empirical forecasting studies. However, ARIMA’s dependence on linearity and stationarity limits its performance when nonlinear dynamics, structural breaks, or irregular seasonal behaviors are present, making it less flexible in complicated real-world situations. The Ata method, on the other hand, is a newer approach that builds on the ideas of exponential smoothing through adaptive updating mechanisms. Its structure allows parameters to evolve dynamically over time, enabling the model to respond flexibly to shifts in level and trend. Moreover, its capacity to incorporate additive and multiplicative forms across diverse series enhances its robustness, while its algorithmic simplicity makes it computationally efficient and easily scalable to large datasets such as those in forecasting competitions. These qualities make Ata a versatile and adaptable method that bridges simplicity and flexibility, complementing the more rigid structure of ARIMA. Taken together, the comparative use of these two approaches ensures methodological robustness and highlights the potential of hybrid frameworks that combine the interpretability of ARIMA with the adaptability of Ata, broadening the scope of effective forecasting strategies.

Table 2 summarizes the optimized hybrid weights assigned to the ARIMA, Ata-lowest, and Ata-median models for different frequency categories in the M3 dataset. These weights were obtained using a grid search procedure combined with in-sample sMAPE minimization. The grid search systematically explored candidate weight combinations, and the final values are those that minimized the error for each frequency type. A higher weight indicates a stronger contribution of the corresponding model to the hybrid forecast. The results show that the Ata-median model dominates for yearly series, the Ata-lowest model gains importance in the other categories, and ARIMA plays a larger role in monthly and quarterly data.

The sMAPE evaluation results, presented in Table A1, Table A2, Table A3, and Table A4, highlight key findings across all frequency categories (yearly, quarterly, monthly, and other), together with the overall performance of the proposed hybrid model (Ata-best). These findings provide compelling evidence for model combination strategies customized through performance-based weighting. By combining complementary patterns captured by different Ata variations, the hybrid model produces more accurate forecasts, outperforming both the lowest and median Ata variants. Because sMAPE was the official error measure of the M3 competition and is scale-independent, it was chosen as the primary criterion for analyzing the M3 dataset in this study. Scale independence is particularly important because the M3 dataset contains time series of highly diverse magnitudes, ranging from small-scale microeconomic indicators to large-scale macroeconomic aggregates. Using sMAPE, all series can be evaluated on a comparable basis, ensuring a fair assessment of model accuracy that is not biased by the absolute scale of the data.
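For reference, sMAPE can be computed as sketched below. The sketch uses the M4 form, $200\,|y - \hat{y}| / (|y| + |\hat{y}|)$ averaged over the forecast horizon. For positive data this equals the M3 form, $100\,|y - \hat{y}| / ((y + \hat{y})/2)$. The numbers in the example are hypothetical and chosen only to show that rescaling a series leaves sMAPE unchanged.

```python
def smape(actual, forecast):
    """Symmetric MAPE in percent over a forecast horizon.

    M4 form: 200 * |y - f| / (|y| + |f|); for positive data this equals the
    M3 form 100 * |y - f| / ((y + f) / 2). Undefined when y = f = 0.
    """
    if len(actual) != len(forecast) or not actual:
        raise ValueError("actual and forecast must be non-empty and equally long")
    total = sum(200.0 * abs(y - f) / (abs(y) + abs(f)) for y, f in zip(actual, forecast))
    return total / len(actual)


y = [100.0, 110.0, 120.0]
f = [90.0, 110.0, 130.0]
print(smape(y, f))  # about 6.175
# Multiplying data and forecasts by 1000 gives the same value: sMAPE is scale-independent
print(smape([v * 1000 for v in y], [v * 1000 for v in f]))
```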

To maintain the coherence of the main text and avoid overloading it with high-volume numerical tables, the detailed frequency-level results have been moved to Appendix A. This ensures both readability and completeness, allowing interested readers to examine the extended evidence without interrupting the flow of the discussion.

Importantly, the Ata-best model outperforms the ARIMA model, which was labeled B-J Automatic in the original M3 competition tables. Given the historical success and wide application of the Box–Jenkins methodology in time series forecasting, this finding is especially noteworthy. By outperforming B-J Automatic on the entire dataset and in every frequency subset, the hybrid Ata-based approach proves both competitive and robust across a variety of time series structures. These results also demonstrate the adaptability of the proposed hybrid structure, which adjusts to underlying patterns in the data without relying entirely on strict statistical assumptions. Moreover, although the Ata model alone already performs competitively thanks to its adaptive structure, its potential increases further when it is used within a weighted ensemble that exploits the complementary strengths of different configurations and models.

In conclusion, the proposed hybrid approach, Ata-best, achieves better forecasting accuracy than both the traditional ARIMA benchmark and all Ata models across all frequency tables. On the M3 competition data, Ata-best outperforms the ARIMA model and the earlier Ata models, as well as the Theta method, the overall winner of the M3 competition, at almost every forecasting horizon. According to Table 3, Ata-best achieved a lower sMAPE than the Theta method; on this basis, Ata-best can be considered the top-performing model for the M3 dataset. This performance demonstrates both the inherent strength of the Ata method and the effectiveness and adaptability of statistical hybrid modeling applied through performance-driven model combination. To further clarify the contribution of each component, an ablation study was conducted (Table A7), showing that the superiority of Ata-best arises from systematic improvements over Ata-lowest, Ata-median, ARIMA, and the winning Theta model across different forecasting horizons. These findings offer strong evidence that hybrid statistical approaches, when properly designed, tuned, and tailored to the structural properties of the data, can achieve substantial improvements in challenging forecasting scenarios such as the M3 dataset.

The best hybrid weights of ARIMA, Ata-lowest, and Ata-median for each frequency type in the M4 dataset are shown in Table 4. As in the M3 results, the weight distributions differ across frequencies, suggesting that the contribution of each model component should be adjusted to the characteristics of the time series. However, the most important insights come from the comprehensive evaluation metrics in Table 5, Table A5, and Table A6. As with the M3 dataset, the detailed frequency-level results for M4 are provided in Appendix B to preserve the readability of the main text.

Table A5, which reports performance in terms of sMAPE, shows that the proposed hybrid model Ata-best is more accurate than the conventional ARIMA model and the individual Ata variants (Ata-lowest and Ata-median). Among all the methods that took part in the M4 competition, Ata-best comes in third place overall (rank 3*) according to the sMAPE ranking, ahead of Ata-lowest (rank 21), Ata-median (rank 14), and ARIMA (reported in the official M4 table as The M4 Team (ARIMA), rank 29). The star (*) indicates that, without altering the original table reported in the official M4 paper [3], we have marked the position our proposed model would occupy, here the third. The same notation is applied, with the same meaning, in Table 5 and Table A6. This consistent benefit across frequencies, from hourly to yearly, demonstrates the robustness of the hybrid approach under a scale-independent accuracy measure.
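For reference, the M4 sMAPE is computed for each series as (200/h) Σ |Y_t − F_t| / (|Y_t| + |F_t|) over the h forecast points and then averaged over series. The sketch below implements the per-series formula in Python, together with a hypothetical helper `starred_rank` that mirrors the star notation: it returns the position a new score would occupy in a published leaderboard (lower is better) without modifying that leaderboard. The leaderboard values are invented for illustration and are not M4 results.

```python
import bisect

import numpy as np


def smape(actual, forecast):
    """Per-series sMAPE in percent, as defined for M4: mean of 2|Y-F| / (|Y|+|F|), times 100."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    return 200.0 * np.mean(np.abs(actual - forecast) / (np.abs(actual) + np.abs(forecast)))


def starred_rank(published_scores, new_score):
    """1-based rank a new score would take in a published table where lower is better.

    sorted() returns a new list, so the published scores are never modified; on a tie
    the new method is placed ahead of the tied entries (bisect_left).
    """
    ordered = sorted(published_scores)
    return bisect.bisect_left(ordered, new_score) + 1


# Illustrative values only (not taken from the M4 tables).
print(smape([100.0, 200.0], [110.0, 180.0]))         # about 10.025
print(starred_rank([11.4, 11.7, 11.9, 12.0], 11.5))  # 2
```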

Table A6, which reports performance in terms of the MASE (mean absolute scaled error), provides additional evidence of improvement. Ata-best again ranks third and produces lower MASE values than its counterparts, i.e., the Ata variants and ARIMA. This implies that the gains are not specific to the sMAPE, the metric most frequently cited in the M4 competition, but also hold when forecast errors are scaled by the in-sample error of a seasonal naive forecast, as in the MASE. Importantly, the MASE, like the sMAPE, is a scale-independent error metric, which makes it particularly suitable for comparing forecasting accuracy across heterogeneous time series of different magnitudes. Its inclusion ensures that the evaluation is not biased by series scale and strengthens the robustness of the comparative analysis.
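The MASE divides the out-of-sample mean absolute error by the in-sample mean absolute error of the seasonal naive forecast, which is why multiplying a series by a constant leaves it unchanged. A minimal Python sketch of the M4-style definition follows; the seasonal period m (for example 12 for monthly data and 1 for yearly data) must be supplied by the caller, and the toy numbers are illustrative.

```python
import numpy as np


def mase(insample, actual, forecast, m=1):
    """M4-style MASE: out-of-sample MAE scaled by the in-sample MAE of the
    seasonal naive forecast with period m (requires len(insample) > m)."""
    y = np.asarray(insample, dtype=float)
    scale = np.mean(np.abs(y[m:] - y[:-m]))  # (1/(n-m)) * sum over t>m of |y_t - y_{t-m}|
    errors = np.abs(np.asarray(actual, dtype=float) - np.asarray(forecast, dtype=float))
    return np.mean(errors) / scale


history = [1.0, 2.0, 3.0, 4.0, 5.0]
future, pred = [6.0, 7.0], [5.5, 7.5]
print(mase(history, future, pred))  # 0.5
# Rescaling every input by the same factor leaves the MASE unchanged.
print(mase([1000 * v for v in history], [1000 * v for v in future], [1000 * v for v in pred]))  # 0.5
```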

According to Table 5, Ata-best achieves second place among all competing methods in terms of the OWA (overall weighted average), the official criterion used to determine the final rankings in the M4 competition. Although sMAPE and MASE are reported separately for transparency and interpretability, it is the OWA, which combines both relative to the Naïve 2 benchmark into a single indicator, that establishes the leaderboard. This result reinforces the ability of the hybrid model to generalize across both high-frequency (e.g., hourly, daily) and low-frequency (e.g., yearly, quarterly) time series, which are often characterized by different noise structures and trend dynamics. Notably, Ata-best outperforms ARIMA, a classical benchmark in statistical forecasting, on every evaluation metric. For transparency, Table A8 provides an ablation study isolating the roles of ARIMA, the Ata variants, and the winning Smyl model, confirming that the Ata-best configuration secures second place overall. The results indicate that, despite ARIMA’s historical significance and its extensive use in ensemble techniques, a well-designed Ata-based hybrid can be a more accurate and adaptable alternative, at least on a large-scale benchmark such as M4.
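In M4, the OWA averages two ratios: the method’s mean sMAPE over all series divided by that of the Naïve 2 benchmark, and the same ratio for the MASE; values below 1 indicate an improvement over Naïve 2. The Python sketch below assumes that the per-series sMAPE and MASE values of the evaluated method and of Naïve 2 are already available; the inputs in the usage line are made up for illustration.

```python
import numpy as np


def owa(smape_method, mase_method, smape_naive2, mase_naive2):
    """Overall weighted average (OWA) as used to rank M4 submissions.

    Each argument is a sequence of per-series errors. The errors are averaged over all
    series first, then expressed relative to Naive 2, and the two ratios are averaged.
    """
    rel_smape = np.mean(smape_method) / np.mean(smape_naive2)
    rel_mase = np.mean(mase_method) / np.mean(mase_naive2)
    return 0.5 * (rel_smape + rel_mase)


# Made-up per-series errors for two series (not M4 results).
score = owa([10.0, 14.0], [1.5, 1.7], [15.0, 15.0], [2.0, 2.0])
print(round(score, 3))  # 0.8
```

Because both ratios are taken against the same benchmark, a method can rank differently on OWA than on sMAPE or MASE alone, which is consistent with Ata-best ranking third on each individual metric but second on OWA.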

In the official M4 paper [3], the reported runtimes show that several participants who submitted Ata-based models, including Selamlar, Taylan, Yapar et al., Yilmaz, and Çetin (listed in Table 5, Table A5, and Table A6), implemented their approaches on a standalone .exe platform. According to these records, their computations ranged from approximately 37.2 min to 393.5 min, depending on the specific configuration and dataset frequency. By comparison, some other methods reported in the same study required substantially longer runtimes: the winning hybrid ETS–RNN method proposed by Smyl [8] took 8056.0 min, and the ARIMA benchmark required 3030.9 min. The present study extends this line of research by incorporating both the ARIMA benchmark and multiple variants of the Ata method within a unified framework, executed entirely in the RStudio environment. Unlike single-model implementations, our methodology evaluates seven distinct variations of the Ata model for each individual series and selects the most suitable versions (“Ata-lowest” and “Ata-median”) based on in-sample sMAPE. This model selection process, combined with the subsequent grid-search-based weight optimization for hybridization with ARIMA, substantially increases the computational burden. As a result, the complete experimental pipeline required approximately 2880 min (about 48 h) for the M4 dataset. Thus, although the hybrid design involves a more comprehensive and computationally demanding workflow than individual Ata-based runs, its runtime remains considerably shorter than that of some competing methods (e.g., Smyl’s ETS–RNN or the ARIMA benchmark), and the proposed framework achieves a favorable balance between predictive accuracy and computational feasibility.
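The per-series selection step described above can be sketched as follows. The sketch adopts one possible reading: “Ata-lowest” is the variant with the lowest in-sample sMAPE, and “Ata-median” is the variant whose in-sample sMAPE is the median of the seven. This reading, the variant names, and the toy fitted values are illustrative assumptions, not a description of the exact procedure or code used in the study.

```python
import numpy as np


def smape(actual, forecast):
    """In-sample sMAPE in percent (M4 definition)."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    return 200.0 * np.mean(np.abs(actual - forecast) / (np.abs(actual) + np.abs(forecast)))


def select_lowest_and_median(actual, variant_fits):
    """Rank candidate variants by in-sample sMAPE and return (lowest, median, scores).

    variant_fits maps a variant name to its in-sample fitted values. With an odd
    number of variants (seven here) the median is unique; with an even number the
    lower of the two middle variants is returned.
    """
    scores = {name: smape(actual, fit) for name, fit in variant_fits.items()}
    ranked = sorted(scores, key=scores.get)
    return ranked[0], ranked[(len(ranked) - 1) // 2], scores


# Seven hypothetical variants whose fits drift further from the data as k grows.
observed = np.array([10.0, 10.0, 10.0])
fits = {f"variant_{k}": observed + k for k in range(7)}
lowest, median, _ = select_lowest_and_median(observed, fits)
print(lowest, median)  # variant_0 variant_3
```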

6. Conclusions

This study proposed a novel hybrid framework for time series forecasting that integrates the classical ARIMA model with multiple variations of the Ata method through performance-based model selection and grid-search-optimized weighting. By combining the statistical robustness of ARIMA with the adaptive flexibility of the Ata models, the approach aims to capture both linear dependencies and more complex structural patterns inherent in time series data.

The effectiveness of the proposed framework was rigorously evaluated on two large-scale and widely recognized forecasting benchmarks: the M3 and M4 competition datasets. These datasets encompass a broad spectrum of time series with diverse frequencies and levels of complexity, providing a comprehensive testing environment. On the M3 dataset, the proposed Ata-best model achieved better forecasting performance than both the traditional ARIMA benchmark and the individual Ata variations. Importantly, according to Table 3, Ata-best also outperformed the Theta method, the overall winner of the M3 competition, underscoring the competitiveness and accuracy of the proposed hybrid approach.

On the M4 dataset, the generalizability of the method was further confirmed. Ata-best consistently ranked among the top-performing approaches across different frequencies, ultimately taking second position overall based on the official OWA metric, according to Table 5. Furthermore, the model exhibited stable improvements over ARIMA, the Ata variants, and other benchmark methods, demonstrating its robustness in handling both stationary and non-stationary components of large-scale real-world time series.

Taken together, these findings highlight three contributions. First, the study demonstrates that hybridized statistical models, when carefully tuned and weighted, can outperform individual traditional methods and, on M3, even the competition-winning Theta method. Second, the results provide strong evidence that the Ata method, particularly when embedded in a hybrid structure, constitutes a highly competitive alternative for practical forecasting tasks. Finally, the suggested framework is not only accurate but also scalable and adaptable, making it useful for a wide range of real-world forecasting settings, from finance and economics to energy demand and environmental applications.

In conclusion, this research demonstrates that the integration of ARIMA with the Ata family of models through performance-driven combination results in substantial accuracy improvements in difficult forecasting situations. The success of the Ata-best model on both the M3 and M4 datasets provides strong evidence that hybrid approaches are a promising direction for improving statistical forecasting methods.

In future studies, the proposed hybrid framework could be extended in several promising directions. One avenue is the integration of machine learning and deep learning components, which may enhance the ability to capture complex nonlinear dynamics and long-range dependencies that are difficult for traditional models alone to address. Additionally, extending the framework to multivariate forecasting tasks, for instance, using data from the M5 forecasting competition, would allow researchers to incorporate multiple interdependent series, thereby capturing richer cross-series interactions. Future work could also incorporate exogenous variables (e.g., macroeconomic indicators or weather conditions), which may significantly improve forecasting accuracy in applied domains such as energy demand, financial markets, and retail planning. Collectively, these directions would strengthen the generalizability, interpretability, and practical applicability of the hybrid forecasting approach beyond competition datasets and into real-world decision-making contexts.