V-PRUNE: Semantic-Aware Patch Pruning Before Tokenization in Vision–Language Model Inference

Abstract

1. Introduction

- We introduce a patch-level pruning strategy that eliminates redundant visual regions prior to token embedding.

- Our approach leverages semantic cues to guide pruning, rather than relying solely on attention weights.

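- The proposed method improves inference efficiency while preserving task performance: on eight vision–language benchmarks with LLaVA, it reduces the FLOPs ratio to 13.29–36.72% of the unpruned model while keeping accuracy at 93–122% of the baseline.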

2. Related Work

2.1. Vision–Language Model Optimization

2.2. Token Pruning Methods

2.3. Patch-Based Approaches

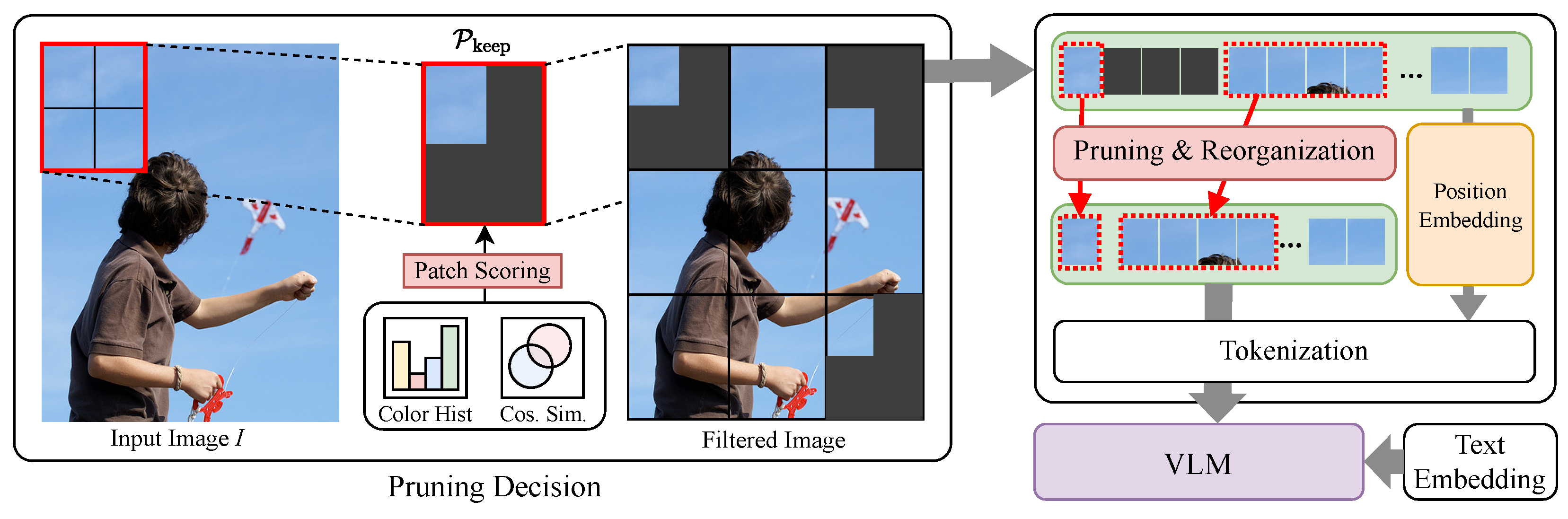

3. Methods

3.1. Problem Setup

3.2. Patch Division

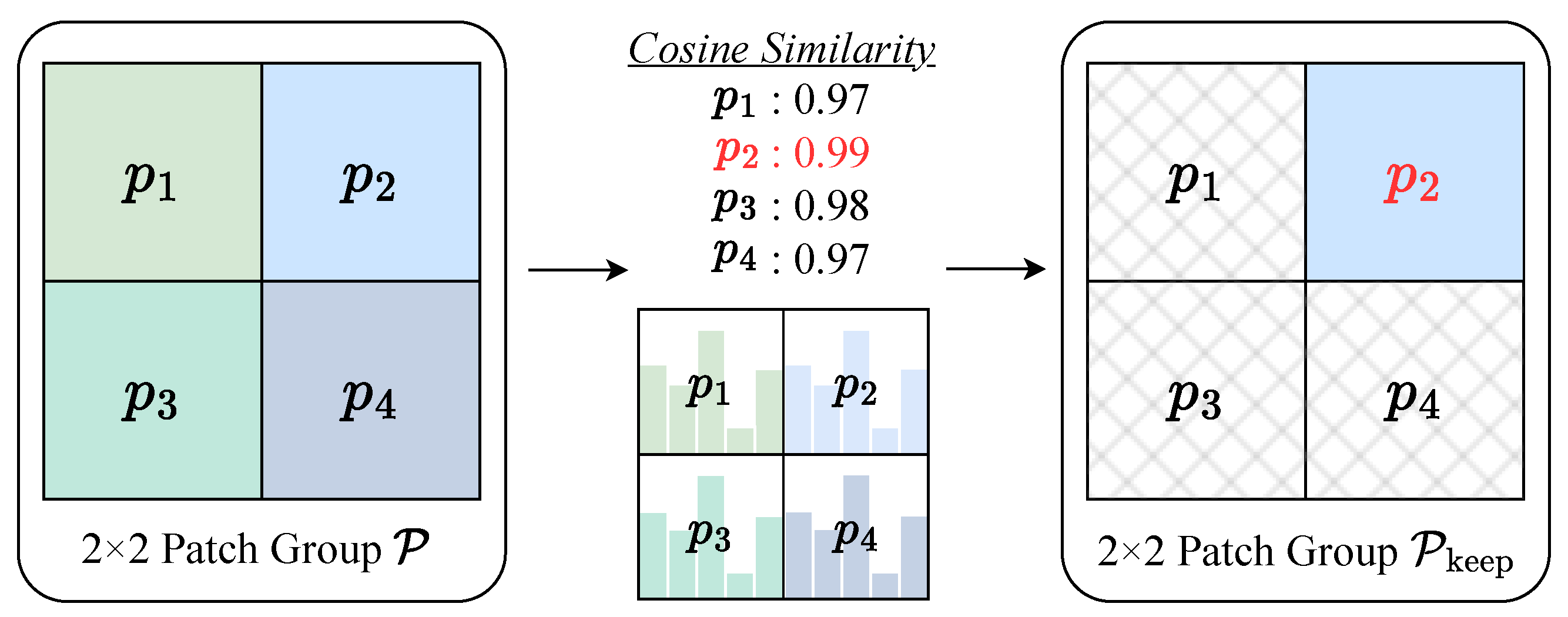

3.3. Pruning Decision

3.4. Patch Reorganization and Tokenization

4. Results and Analysis

4.1. Experiment Settings

- Accuracy: The average accuracy across tasks in each benchmark, reflecting overall performance after pruning.

- Inference Time: The total time required to perform inference on all images in a dataset, used to evaluate the overall latency improvement achieved by pruning.

- Visual Token Reduction: The reduction in the number of visual tokens processed across the dataset, measured to assess the efficiency gains from pruning. To provide a hardware-independent view of computational savings, we report this reduction as a FLOPs ratio relative to the unpruned model (100%).
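The relative percentages in the accuracy results are the pruned model's accuracy divided by the unpruned baseline's. Every whole-number percentage reported for LLaVA is consistent with truncation rather than rounding (for example, V-PRUNE on POPE gives 76.66 / 80.02 ≈ 95.80%, reported as 95%). The minimal sketch below reproduces that calculation. The function names are hypothetical, and `token_flops_proxy` is an assumption: it approximates a FLOPs ratio by the share of visual tokens kept, whereas true FLOPs also depend on attention cost and on text tokens.

```python
import math


def relative_accuracy(pruned_acc: float, baseline_acc: float) -> float:
    """Accuracy of a pruned model as a percentage of the unpruned baseline."""
    if baseline_acc <= 0:
        raise ValueError("baseline accuracy must be positive")
    return 100.0 * pruned_acc / baseline_acc


def truncated_percent(value: float) -> int:
    """Drop the fractional part, which matches how the whole-number
    percentages in the accuracy results appear to have been produced."""
    return math.floor(value)


def token_flops_proxy(kept_tokens: int, total_tokens: int) -> float:
    """Hypothetical proxy: percentage of visual tokens kept.

    Real FLOPs also include attention (quadratic in sequence length) and
    text tokens, so this only approximates a FLOPs ratio.
    """
    if total_tokens <= 0 or not 0 <= kept_tokens <= total_tokens:
        raise ValueError("need total_tokens > 0 and 0 <= kept_tokens <= total_tokens")
    return 100.0 * kept_tokens / total_tokens


# V-PRUNE vs. unpruned LLaVA on POPE and AesBench (values from the results).
pope = relative_accuracy(76.66, 80.02)
aes = relative_accuracy(51.94, 42.50)
print(f"POPE: {pope:.2f}% -> {truncated_percent(pope)}%")
print(f"AesBench: {aes:.2f}% -> {truncated_percent(aes)}%")
# Illustrative token counts only, not taken from the paper.
print(f"Token proxy: {token_flops_proxy(144, 576):.1f}%")
```

Truncation explains entries such as FastV on POPE (80.48 / 80.02 ≈ 100.57%, reported as 100%), which rounding would have turned into 101%.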

4.2. Experimental Results

4.2.1. Inference Time, Inference Accuracy, and FLOPs

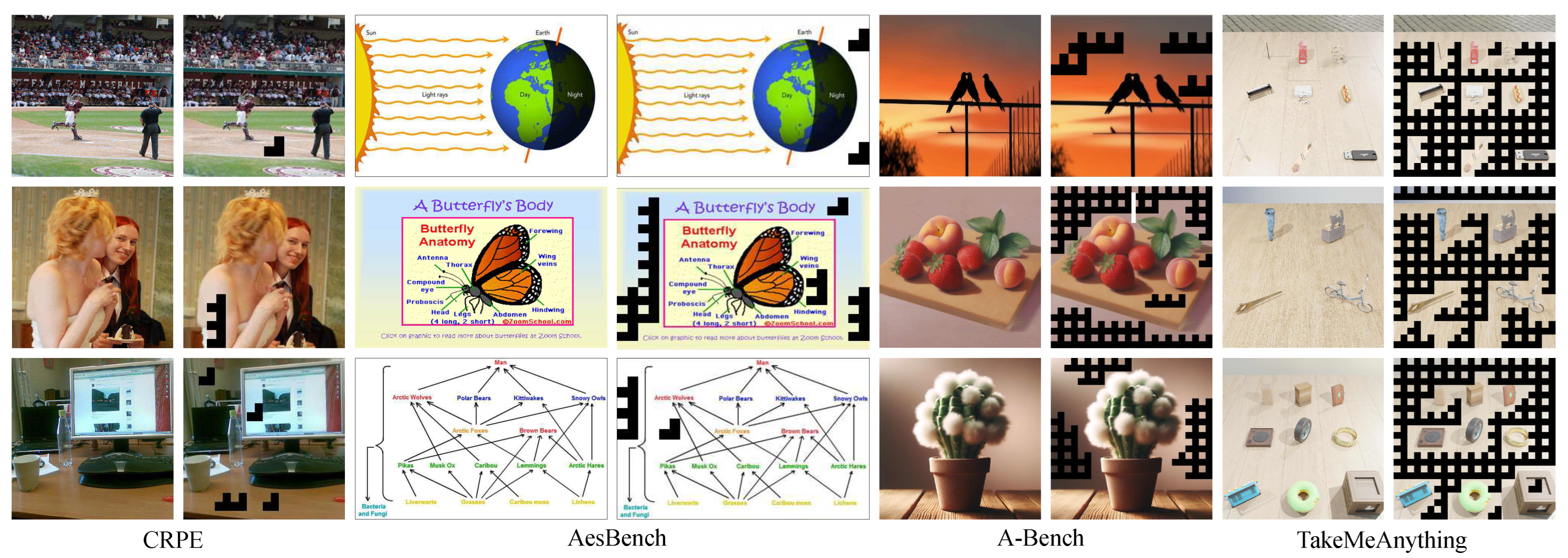

4.2.2. Task Dependency of Pruning Effectiveness

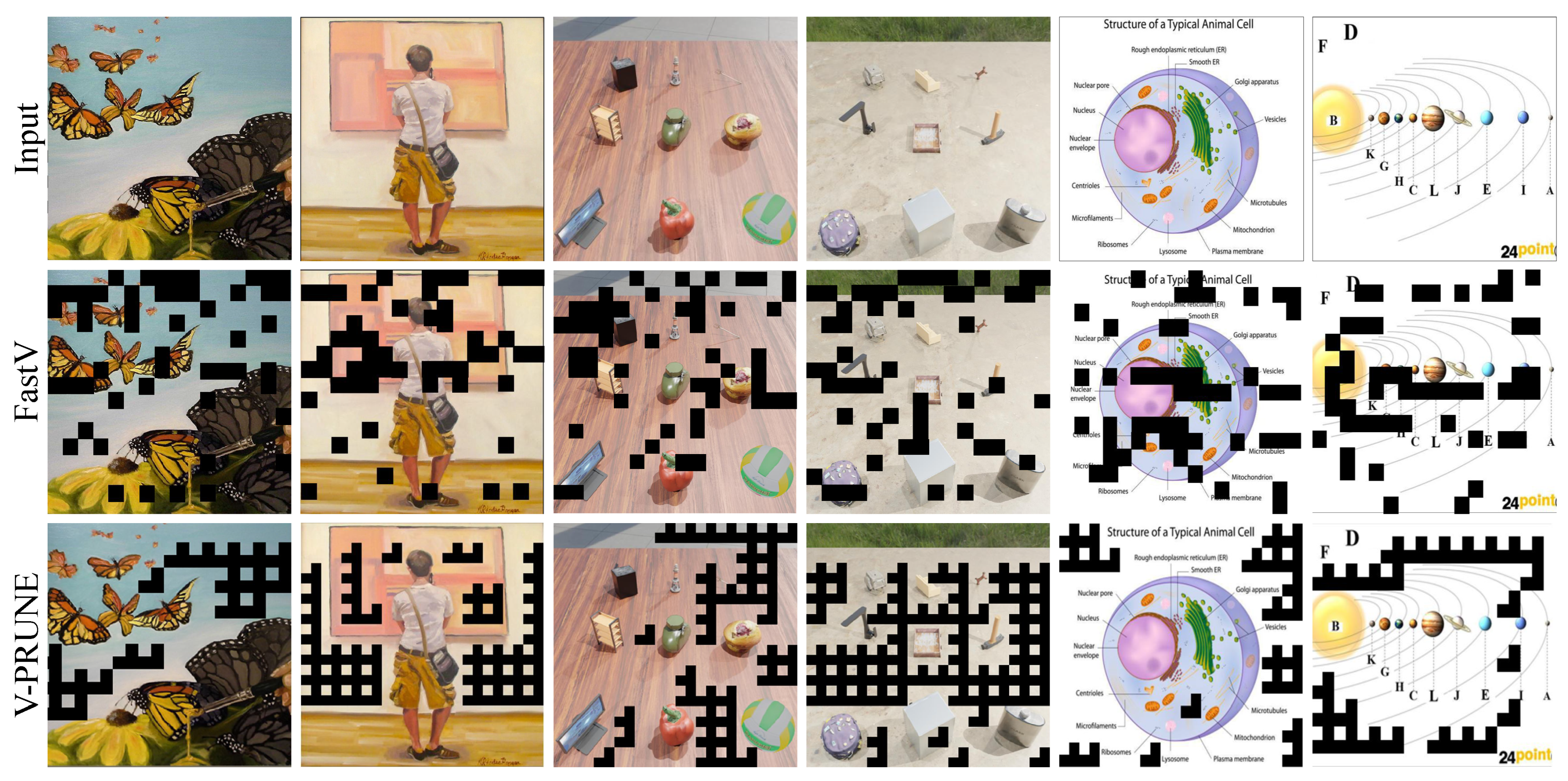

4.2.3. Visual Comparison of Pruned Patches

4.2.4. Results on LLaVA-NeXT

4.2.5. Analysis of Design Choices

- Consistency with VLM patch grids: The vision encoders of modern VLMs (e.g., CLIP) split images into even × even patch grids. A 2 × 2 group tiles such grids exactly, avoiding padding or boundary correction.

- Minimum contextual unit: A single patch lacks surrounding context, while larger groups dilute fine-grained details. A 2 × 2 group is the smallest unit that preserves patch-level granularity while still providing sufficient spatial cues.

- Computational efficiency: With n patches per group, pairwise redundancy detection requires n(n − 1)/2 comparisons (O(n²)): 6 for a 2 × 2 group, 36 for 3 × 3, and 120 for 4 × 4. Larger groups therefore rapidly increase cost, whereas 2 × 2 achieves the best balance.
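The grouping and cost argument above can be sketched as follows. This is a minimal illustration, assuming (as the computational-efficiency point suggests) that redundancy detection compares every pair of patches within a group; `group_patch_grid` and `pairwise_comparisons` are hypothetical helpers, and a 4 × 4 grid is used only for brevity.

```python
from itertools import combinations


def group_patch_grid(rows: int, cols: int, g: int) -> list[list[tuple[int, int]]]:
    """Split a rows x cols patch grid into non-overlapping g x g groups.

    Each group is returned as a list of (row, col) patch indices. Raises
    ValueError when the grid cannot be tiled without padding.
    """
    if rows % g or cols % g:
        raise ValueError(f"{rows}x{cols} grid is not divisible into {g}x{g} groups")
    groups = []
    for r0 in range(0, rows, g):
        for c0 in range(0, cols, g):
            groups.append([(r, c) for r in range(r0, r0 + g) for c in range(c0, c0 + g)])
    return groups


def pairwise_comparisons(n: int) -> int:
    """Number of unordered patch pairs in a group of n patches: n(n-1)/2."""
    return n * (n - 1) // 2


groups = group_patch_grid(4, 4, 2)
print(len(groups), groups[0])  # 4 [(0, 0), (0, 1), (1, 0), (1, 1)]

for g in (2, 3, 4):
    n = g * g
    # Cross-check the closed form against explicit enumeration of pairs.
    assert pairwise_comparisons(n) == len(list(combinations(range(n), 2)))
    print(f"{g}x{g}: n={n}, comparisons={pairwise_comparisons(n)}")
```

Calling `group_patch_grid(4, 4, 3)` raises `ValueError`, which mirrors the alignment point: a 3 × 3 group cannot tile an even × even grid without padding.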

5. Discussion

5.1. Comparison of Random vs. Proposed Patch Pruning

5.2. Time–FLOPs–Accuracy Trade-Off

5.3. Effect of Patch Pruning Threshold

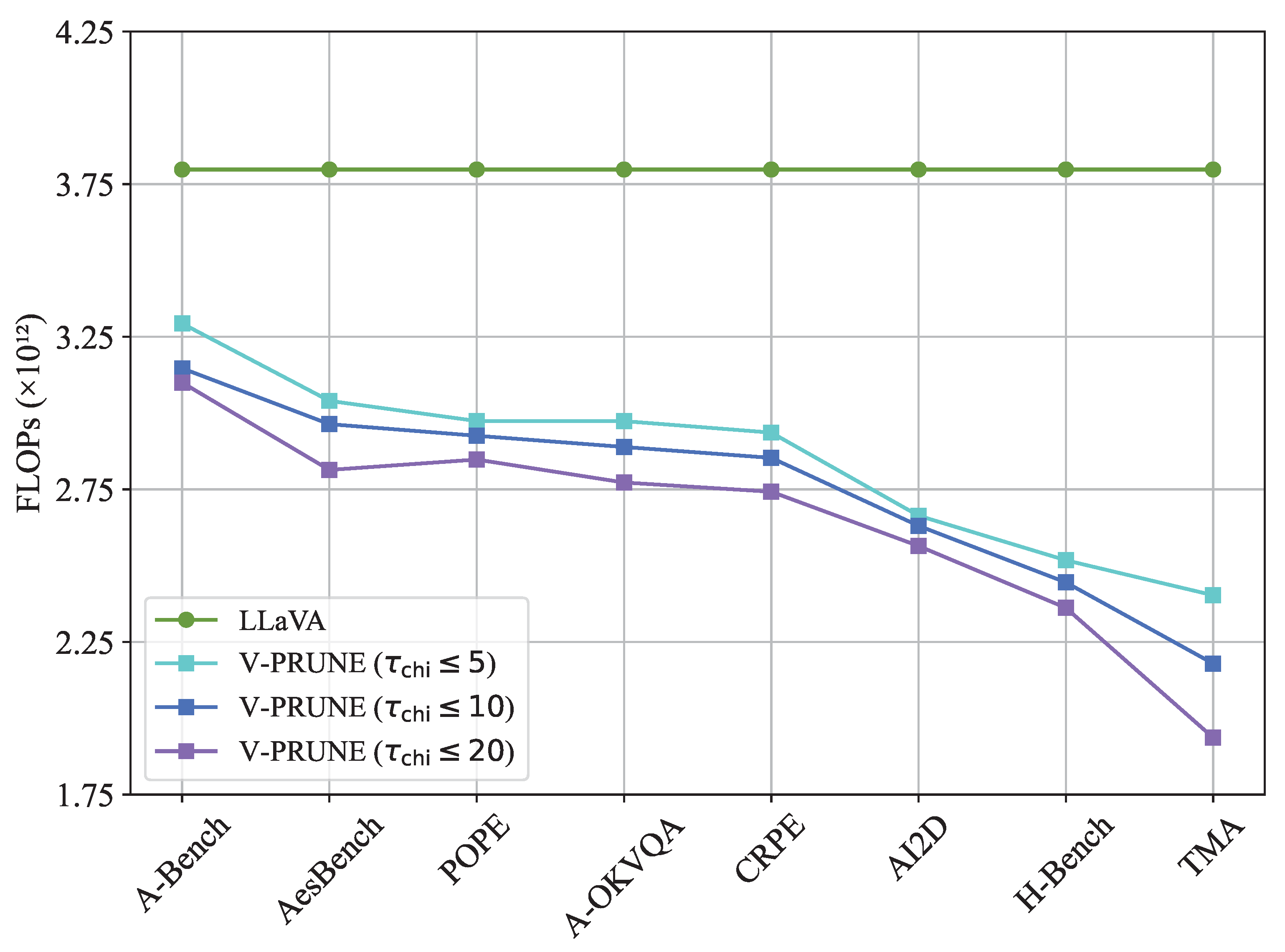

5.3.1. Effect of Chi-Square Distance Threshold

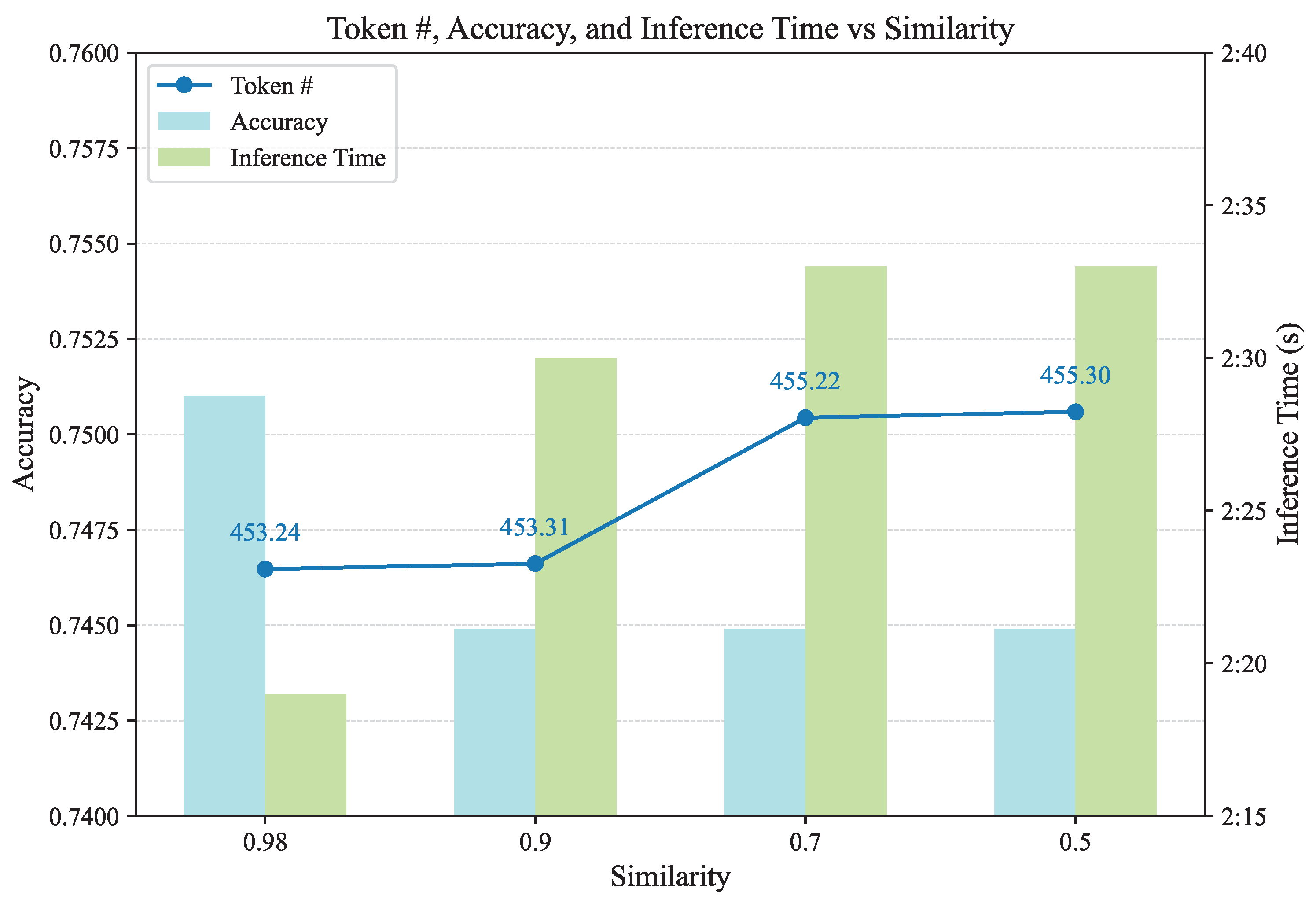
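This subsection's heading names a chi-square distance threshold, but the extracted text does not define the distance. One common symmetric form for two histograms h and k is χ²(h, k) = ½ Σᵢ (hᵢ − kᵢ)² / (hᵢ + kᵢ); other variants omit the ½ or divide by only one histogram. The sketch below assumes patches are compared through normalized grayscale-intensity histograms; the histogram features, the bin count, and the function names are assumptions for illustration, not the paper's stated pipeline.

```python
def normalized_histogram(pixels: list[int], bins: int = 8, max_value: int = 256) -> list[float]:
    """Histogram of integer intensities in [0, max_value), normalized to sum to 1."""
    if not pixels:
        raise ValueError("patch has no pixels")
    counts = [0] * bins
    for p in pixels:
        if not 0 <= p < max_value:
            raise ValueError(f"pixel value {p} out of range")
        counts[p * bins // max_value] += 1
    total = len(pixels)
    return [c / total for c in counts]


def chi_square_distance(h: list[float], k: list[float], eps: float = 1e-12) -> float:
    """Symmetric chi-square distance 0.5 * sum((h_i - k_i)^2 / (h_i + k_i)).

    Bins that are empty in both histograms are skipped to avoid 0/0.
    """
    if len(h) != len(k):
        raise ValueError("histograms must have the same number of bins")
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(h, k) if a + b > eps)


# Hypothetical tiny 4-pixel patches, flattened to grayscale values.
flat_a = normalized_histogram([10, 12, 11, 13])
flat_b = normalized_histogram([14, 9, 12, 15])
edge = normalized_histogram([10, 250, 12, 245])
print(chi_square_distance(flat_a, flat_b))  # 0.0: all pixels fall in the same bin
print(chi_square_distance(flat_a, edge))
```

A small distance flags two patches as visually similar; how such a distance is thresholded and combined with other criteria is not specified in this text.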

5.3.2. Effect of Cosine Similarity Threshold
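The cosine similarity threshold is likewise not defined in the extracted text. Cosine similarity between feature vectors u and v is u · v / (‖u‖ ‖v‖), which ranges from −1 to 1, with values near 1 indicating nearly parallel (redundant) features. A minimal sketch follows; which features the paper compares is not stated here, and both the `is_redundant` rule and its 0.95 threshold are hypothetical.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """u . v / (||u|| * ||v||); raises on length mismatch or zero vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_product = math.sqrt(sum(a * a for a in u) * sum(b * b for b in v))
    if norm_product == 0.0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / norm_product


def is_redundant(u: list[float], v: list[float], threshold: float = 0.95) -> bool:
    """Hypothetical rule: treat two patches as redundant when their
    similarity meets or exceeds the threshold (0.95 is illustrative)."""
    return cosine_similarity(u, v) >= threshold


print(cosine_similarity([1.0, 2.0], [2.0, 4.0]))  # 1.0: parallel vectors
print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))  # 0.0: orthogonal vectors
print(is_redundant([1.0, 2.0], [2.0, 4.1]))
```

Raising the threshold makes the rule stricter, so fewer patch pairs count as redundant and fewer patches are pruned.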

5.3.3. Relationship Between the Two Thresholds

6. Conclusions

Directions for Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Yu, J.; Wang, Z.; Vasudevan, V.; Yeung, L.; Seyedhosseini, M.; Wu, Y. CoCa: Contrastive Captioners are Image-Text Foundation Models. Trans. Mach. Learn. Res. 2022; preprint.

- Chen, B.; Xu, Z.; Kirmani, S.; Ichter, B.; Sadigh, D.; Guibas, L.; Xia, F. SpatialVLM: Endowing vision-language models with spatial reasoning capabilities. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 14455–14465.

- Karthik, S.; Roth, K.; Mancini, M.; Akata, Z. Vision-by-Language for Training-Free Compositional Image Retrieval. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Fayyaz, M.; Koohpayegani, S.A.; Jafari, F.R.; Sengupta, S.; Joze, H.R.V.; Sommerlade, E.; Pirsiavash, H.; Gall, J. Adaptive token sampling for efficient vision transformers. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 396–414.

- Chen, L.; Zhao, H.; Liu, T.; Bai, S.; Lin, J.; Zhou, C.; Chang, B. An image is worth 1/2 tokens after layer 2: Plug-and-play inference acceleration for large vision-language models. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 19–35.

- Zhang, Q.; Cheng, A.; Lu, M.; Zhuo, Z.; Wang, M.; Cao, J.; Guo, S.; She, Q.; Zhang, S. [CLS] Attention is All You Need for Training-Free Visual Token Pruning: Make VLM Inference Faster. arXiv 2024, arXiv:2412.01818.

- Zhu, D.; Chen, J.; Shen, X.; Li, X.; Elhoseiny, M. MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Zhou, L.; Palangi, H.; Zhang, L.; Hu, H.; Corso, J.; Gao, J. Unified Vision-Language Pre-Training for Image Captioning and VQA. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13041–13049.

- Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. In Proceedings of the International Conference on Learning Representations, Virtual, 25–29 April 2022.

- Rao, Y.; Zhao, W.; Liu, B.; Lu, J.; Zhou, J.; Hsieh, C.J. DynamicViT: Efficient Vision Transformers with Dynamic Token Sparsification. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Virtual, 6–14 December 2021.

- Bolya, D.; Fu, C.Y.; Dai, X.; Zhang, P.; Feichtenhofer, C.; Hoffman, J. Token merging: Your ViT but faster. arXiv 2022, arXiv:2210.09461.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations, Virtual, 3–7 May 2021.

- Touvron, H.; Cord, M.; Douze, M.; Massa, F.; Sablayrolles, A.; Jégou, H. Training data-efficient image transformers & distillation through attention. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 10347–10357.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

- Tang, Y.; Han, K.; Wang, Y.; Xu, C.; Guo, J.; Xu, C.; Tao, D. Patch slimming for efficient vision transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12165–12174.

- McHugh, M.L. The chi-square test of independence. Biochem. Med. 2013, 23, 143–149.

- Liu, H.; Li, C.; Li, Y.; Lee, Y.J. Improved baselines with visual instruction tuning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 26296–26306.

- Liu, H.; Li, C.; Li, Y.; Li, B.; Zhang, Y.; Shen, S.; Lee, Y.J. LLaVA-NeXT: Improved Reasoning, OCR, and World Knowledge. 2024. Available online: https://llava-vl.github.io/blog/2024-01-30-llava-next/ (accessed on 30 January 2024).

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.

- Wang, W.; Ren, Y.; Luo, H.; Li, T.; Yan, C.; Chen, Z.; Wang, W.; Li, Q.; Lu, L.; Zhu, X.; et al. The all-seeing project v2: Towards general relation comprehension of the open world. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; pp. 471–490.

- Kembhavi, A.; Salvato, M.; Kolve, E.; Seo, M.; Hajishirzi, H.; Farhadi, A. A diagram is worth a dozen images. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Proceedings, Part IV; Springer: Berlin/Heidelberg, Germany, 2016; pp. 235–251.

- Zhang, Z.; Wu, H.; Li, C.; Zhou, Y.; Sun, W.; Min, X.; Chen, Z.; Liu, X.; Lin, W.; Zhai, G. A-Bench: Are LMMs Masters at Evaluating AI-generated Images? In Proceedings of the Thirteenth International Conference on Learning Representations, Singapore, 24–28 April 2025.

- Huang, Y.; Yuan, Q.; Sheng, X.; Yang, Z.; Wu, H.; Chen, P.; Yang, Y.; Li, L.; Lin, W. AesBench: An Expert Benchmark for Multimodal Large Language Models on Image Aesthetics Perception. arXiv 2024, arXiv:2401.08276.

- Guan, T.; Liu, F.; Wu, X.; Xian, R.; Li, Z.; Liu, X.; Wang, X.; Chen, L.; Huang, F.; Yacoob, Y.; et al. HallusionBench: An advanced diagnostic suite for entangled language hallucination and visual illusion in large vision-language models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 14375–14385.

- Li, Y.; Du, Y.; Zhou, K.; Wang, J.; Zhao, X.; Wen, J.R. Evaluating Object Hallucination in Large Vision-Language Models. In Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, Singapore, 6–10 December 2023.

- Schwenk, D.; Khandelwal, A.; Clark, C.; Marino, K.; Mottaghi, R. A-OKVQA: A benchmark for visual question answering using world knowledge. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 146–162.

- Zhang, J.; Huang, W.; Ma, Z.; Michel, O.; He, D.; Gupta, T.; Ma, W.C.; Farhadi, A.; Kembhavi, A.; Krishna, R. Task Me Anything. In Proceedings of the Thirty-Eighth Conference on Neural Information Processing Systems Datasets and Benchmarks Track, Vancouver, BC, Canada, 10–15 December 2024.

- Yang, S.; Chen, Y.; Tian, Z.; Wang, C.; Li, J.; Yu, B.; Jia, J. VisionZip: Longer is better but not necessary in vision language models. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 10–17 June 2025; pp. 19792–19802.

| Metric | Model | CRPE | AI2D | A-Bench | AesBench | HBench | POPE | A-OKVQA | TMA |
|---|---|---|---|---|---|---|---|---|---|
| Accuracy | LLaVA | 88.27 (100%) | 50.93 (100%) | 65.50 (100%) | 42.50 (100%) | 35.54 (100%) | 80.02 (100%) | 78.60 (100%) | 45.71 (100%) |
| | FastV | 87.46 (99%) | 51.84 (101%) | 62.91 (96%) | 51.11 (120%) | 37.22 (104%) | 80.48 (100%) | 78.42 (99%) | 45.47 (99%) |
| | VisionZip | 86.48 (97%) | 51.97 (102%) | 63.19 (96%) | 52.77 (124%) | 32.17 (90%) | 76.69 (95%) | 77.90 (99%) | 43.96 (96%) |
| | V-PRUNE | 86.43 (97%) | 52.42 (102%) | 63.19 (96%) | 51.94 (122%) | 37.22 (104%) | 76.66 (95%) | 75.10 (95%) | 42.80 (93%) |
| Time | LLaVA | 23:03 | 03:49 | 02:12 | 01:06 | 06:30 | 10:21 | 01:21 | 14:55 |
| | FastV | 21:58 | 03:42 | 01:56 | 00:28 | 06:25 | 10:15 | 01:18 | 12:24 |
| | VisionZip | 20:03 | 03:19 | 01:50 | 00:27 | 10:28 | 10:16 | 01:14 | 06:49 |
| | V-PRUNE | 21:28 | 03:25 | 01:50 | 00:26 | 06:23 | 10:08 | 01:15 | 07:42 |
| FLOPs ratio | LLaVA | 100% | 100% | 100% | 100% | 100% | 100% | 100% | 100% |
| | FastV | 20% | 30% | 13% | 20% | 30% | 20% | 20% | 40% |
| | VisionZip | 20% | 30% | 13% | 20% | 30% | 20% | 20% | 40% |
| | V-PRUNE | 22.70% | 29.86% | 13.29% | 19.96% | 33.71% | 21.71% | 21.71% | 36.72% |

| Metric | Model | AI2D | A-Bench | A-OKVQA | POPE |
|---|---|---|---|---|---|
| Acc. | LLaVA-NeXT | 62.85 | 50.00 | 51.52 | 41.11 |
| | V-PRUNE | 62.17 | 47.51 | 51.11 | 44.16 |
| Time | LLaVA-NeXT | 24:12 | 26:18 | 02:58 | 16:37 |
| | V-PRUNE | 16:25 | 25:13 | 02:44 | 15:50 |

| Metric | Group | AI2D | A-Bench | A-OKVQA | POPE |
|---|---|---|---|---|---|
| Time | 2 × 2 | 23:15 | 12:00 | 09:21 | 31:11 |
| | 3 × 3 | 23:41 | 12:32 | 09:58 | 33:20 |
| | 4 × 4 | 23:44 | 12:22 | 09:45 | 32:56 |

| Metric | Threshold | CRPE | AI2D | A-Bench | AesBench | HBench | POPE | A-OKVQA | TMA |
|---|---|---|---|---|---|---|---|---|---|
| Time | ≤5 | 37:19 | 05:59 | 03:36 | 01:06 | 27:45 | 10:08 | 02:19 | 12:24 |
| | ≤10 | 36:53 | 05:38 | 03:29 | 01:02 | 26:37 | 10:18 | 02:16 | 11:59 |
| | ≤20 | 36:33 | 05:37 | 03:25 | 01:02 | 26:43 | 10:05 | 02:14 | 11:37 |
| Acc. (%) | ≤5 | 86.43 | 52.42 | 63.19 | 51.94 | 37.22 | 77.14 | 75.10 | 42.80 |
| | ≤10 | 86.19 | 51.87 | 63.33 | 51.66 | 37.01 | 77.92 | 74.93 | 42.50 |
| | ≤20 | 86.01 | 51.91 | 63.61 | 50.55 | 37.01 | 76.66 | 74.14 | 41.63 |
| FLOPs | ≤5 | 22.70% | 29.86% | 13.29% | 19.96% | 33.71% | 22.06% | 21.71% | 36.72% |
| | ≤10 | 24.88% | 30.74% | 17.15% | 21.97% | 35.62% | 23.35% | 23.94% | 43.61% |
| | ≤20 | 27.80% | 32.48% | 18.38% | 25.92% | 37.81% | 26.15% | 27.00% | 49.01% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Seo, H.; Choi, Y.S. V-PRUNE: Semantic-Aware Patch Pruning Before Tokenization in Vision–Language Model Inference. Appl. Sci. 2025, 15, 9463. https://doi.org/10.3390/app15179463
