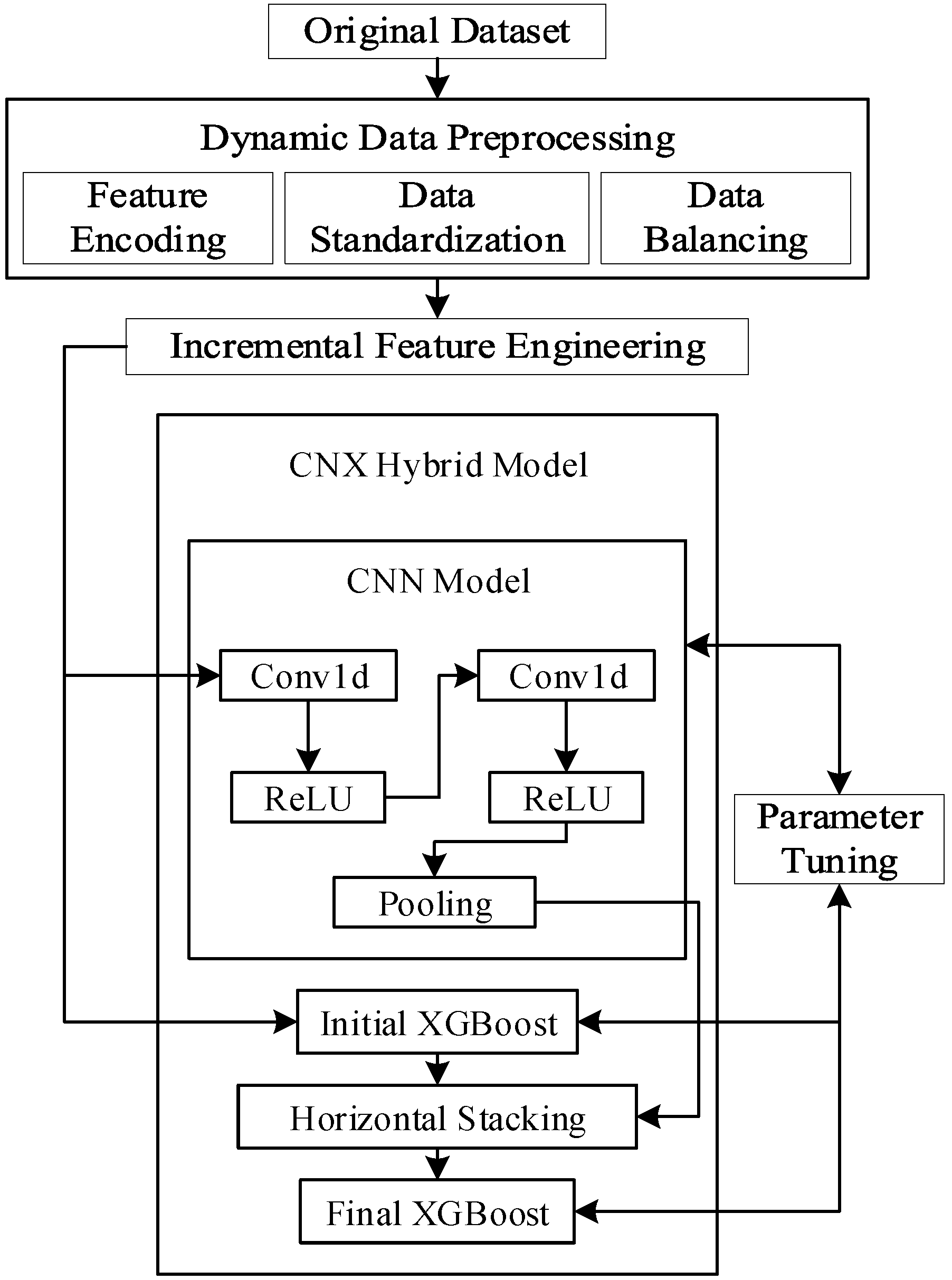

To comprehensively evaluate the DyP-CNX intrusion detection method, this paper conducts experiments on both the UNSW-NB15 and CSE-CIC-IDS 2018 datasets.

4.4.1. Data Standardization and Feature Engineering

In the data processing stage, data standardization and feature engineering have a significant impact on the detection performance of the model. To enhance model performance, this paper conducts experimental studies on the parameter T in Equation (1) and the parameter K in Equation (5) to determine their optimal values.

To validate the impact of the sliding window size T on model performance, we conducted an analysis using the UNSW-NB15 dataset. In our experiments, we tested three window sizes: T = 2500, T = 5000, and T = 10,000. We used a random forest (RF) classifier to evaluate how each value of T affects classification performance. The experimental results are shown in Table 4.

Based on the experimental results in Table 4, we chose a window size of T = 5000 for the UNSW-NB15 dataset because it strikes the best balance between accuracy and computational efficiency. A smaller window (e.g., T = 2500) is more sensitive to local changes but is less robust to noise, which lowers accuracy. A larger window (e.g., T = 10,000) captures more global information but risks overlooking fine details, which also leads to a slight decrease in accuracy. T = 5000 combines the advantages of both, raising the model’s accuracy to 91.04%. Therefore, selecting T = 5000 not only improves detection performance but also demonstrates the robustness and adaptability of dynamic IQR normalization.

It is worth noting that the two datasets used in this study differ significantly in sample size (180,000 vs. 9 million), which directly influences the choice of window size. For the smaller UNSW-NB15 dataset, a window size of T = 5000 is more suitable, ensuring faster training and response times while maintaining good performance. For the larger CSE-CIC-IDS 2018 dataset, we chose a window size of T = 50,000, which makes fuller use of the rich temporal information without excessive computational cost.

These choices take into account the dataset scale, the temporal variation scale of the features, and the available computational resources, aiming to balance model performance and efficiency for each dataset. The experimental results and analyses provide empirical support for our parameter choices and indicate that the proposed dynamic IQR normalization technique remains robust and effective across different window sizes and dataset scales. They also show that the method adapts to datasets with varying characteristics.

The Spearman correlation coefficient is a nonparametric statistical measure used to assess the strength and direction of the rank relationship between two variables. In this analysis, it is used to compare the consistency of feature importance rankings calculated from adjacent data windows (such as the i-th window and the (i+1)-th window).
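The consistency check described above can be sketched in a few lines of pure Python. This is a minimal illustration, not the paper’s implementation: the function names (`average_ranks`, `spearman`, `adjacent_window_consistency`) and the toy importance values are assumptions introduced here, and tied scores receive average ranks.

```python
def average_ranks(scores):
    """Return 1-based ranks for `scores`; tied values share their average rank."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman(a, b):
    """Spearman coefficient: Pearson correlation computed on the ranks."""
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long vectors with at least 2 items")
    ra, rb = average_ranks(a), average_ranks(b)
    n = len(ra)
    ma, mb = sum(ra) / n, sum(rb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(ra, rb))
    va = sum((x - ma) ** 2 for x in ra)
    vb = sum((y - mb) ** 2 for y in rb)
    if va == 0 or vb == 0:
        raise ValueError("rank variance is zero (all scores identical)")
    return cov / (va * vb) ** 0.5


def adjacent_window_consistency(importances_per_window):
    """Spearman coefficient between window i and window i+1, for every i."""
    return [
        spearman(importances_per_window[i], importances_per_window[i + 1])
        for i in range(len(importances_per_window) - 1)
    ]


# Hypothetical feature-importance vectors for three consecutive windows.
windows = [
    [0.50, 0.30, 0.15, 0.05],
    [0.45, 0.35, 0.12, 0.08],  # same ordering as the first window
    [0.10, 0.20, 0.30, 0.40],  # ordering fully reversed
]
print(adjacent_window_consistency(windows))
```

Values close to 1 mean that adjacent windows rank the features in nearly the same order, which is the stability property the selection of K aims for.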

As shown by the results in Table 5 and Table 6, our chosen K values (UNSW-NB15: K = 10,000; CSE-CIC-IDS 2018: K = 200,000) not only yield excellent F1 scores but, more importantly, ensure that the feature selection process remains reliable and robust across the time dimension. This lays a solid foundation for the long-term stable deployment of the model in real-world network environments.

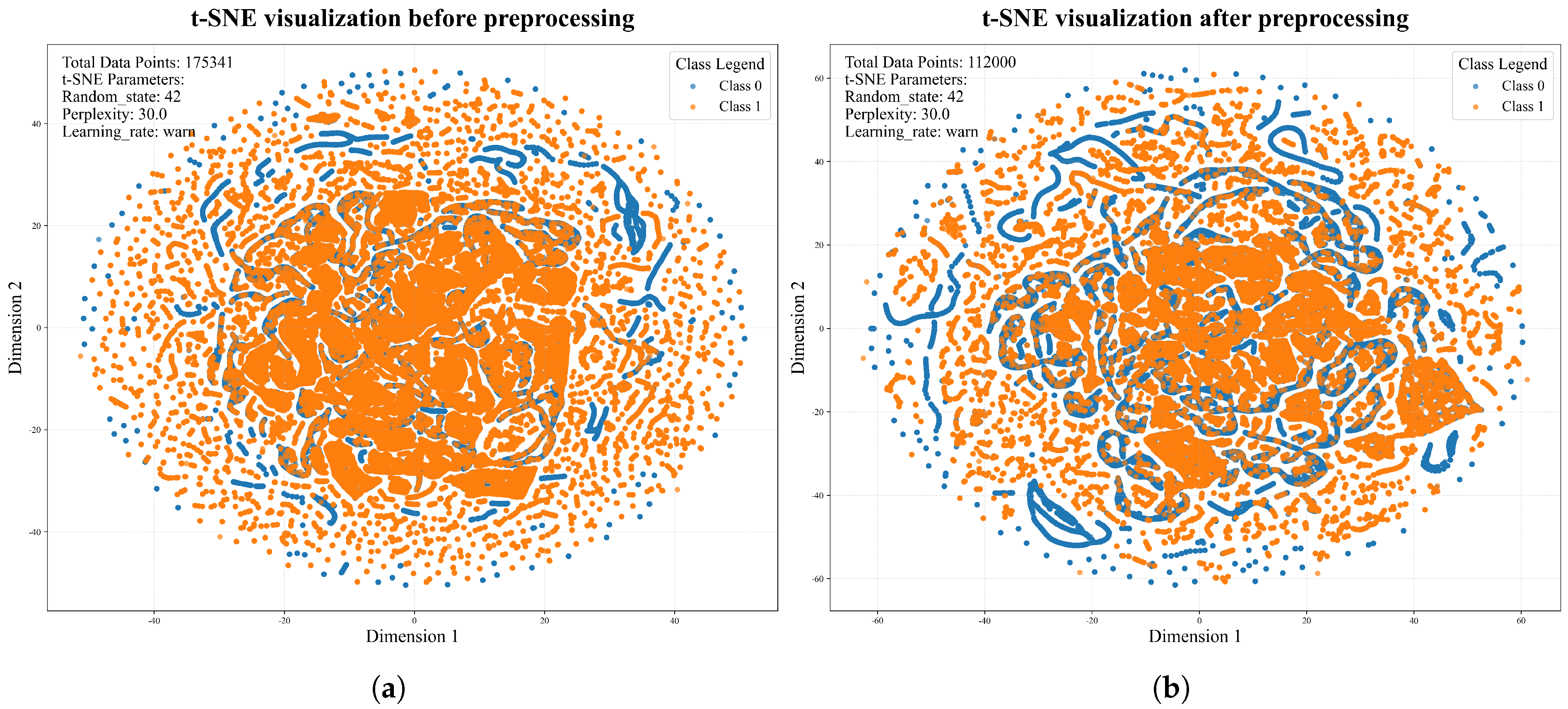

4.4.3. Results on Different Datasets

Initially, we analyzed the UNSW-NB15 dataset and found that some features exhibit a wide range of values. To reduce sensitivity to outliers, this paper employs a dynamic IQR normalization method during feature processing and uses a random forest classifier to preliminarily validate different normalization methods. The results are shown in Table 7.

As shown in Table 7, the dynamic IQR method achieved the highest F1 of 86.94% and the lowest Time_Stability of 0.00302, demonstrating its robustness to outliers. This method uses a sliding-window mechanism to update local data distribution characteristics in real time, effectively suppressing the influence of extreme values while maintaining stable computational efficiency.
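To make the sliding-window mechanism concrete, the following sketch normalizes a single feature stream with the median and interquartile range of the most recent samples. Equation (1) is not reproduced in this section, so the scaling form `(x - median) / (Q3 - Q1 + eps)`, the function name `dynamic_iqr_normalize`, the `eps` guard, and the toy data are all assumptions made for illustration rather than the paper’s exact definition.

```python
from collections import deque
from statistics import quantiles


def dynamic_iqr_normalize(values, window_size, eps=1e-9):
    """Scale each value by the median and IQR of a trailing sliding window.

    Assumed form: (x - median) / (Q3 - Q1 + eps), with the statistics taken
    over the most recent `window_size` samples (including the current one).
    """
    window = deque(maxlen=window_size)  # oldest samples drop out automatically
    scaled = []
    for x in values:
        window.append(x)
        if len(window) < 2:
            # Quartiles are undefined for a single sample; emit a neutral value.
            scaled.append(0.0)
            continue
        q1, median, q3 = quantiles(window, n=4, method="inclusive")
        scaled.append((x - median) / (q3 - q1 + eps))
    return scaled


# Toy feature stream with one extreme value (hypothetical data).
stream = [1.0, 2.0, 3.0, 4.0, 5.0, 1000.0, 4.0, 5.0]
print(dynamic_iqr_normalize(stream, window_size=5))
```

Because the quartiles depend only on the ranks inside the window, a single extreme value barely shifts the scale applied to later samples. Recomputing the quartiles for every sample sorts the window each time, so for windows as large as T = 5000 an incremental order-statistics structure would likely be preferable in practice.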

Moreover, to comprehensively demonstrate the model’s effectiveness, we conducted an experiment on the UNSW-NB15 dataset, comparing DyP-CNX with several mainstream models, including CNN and XGBoost, as well as cutting-edge specialized methods from the past three years: AE-GRU [5], CL-FS [7], XGBoost-GRU [16], LightGBM [17], SRF-CNN-BiLSTM [18], and DCNN-LSTM [27]. All methods use the complete dataset, and we directly cite the best results from the original literature to ensure a fair comparison. The experimental results for each method are shown in Table 8.

As shown in Table 8, the proposed DyP-CNX method achieves an accuracy of 92.57% and an F1 of 91.57%, surpassing all compared methods. This indicates that the model has superior detection capability when identifying attacks.

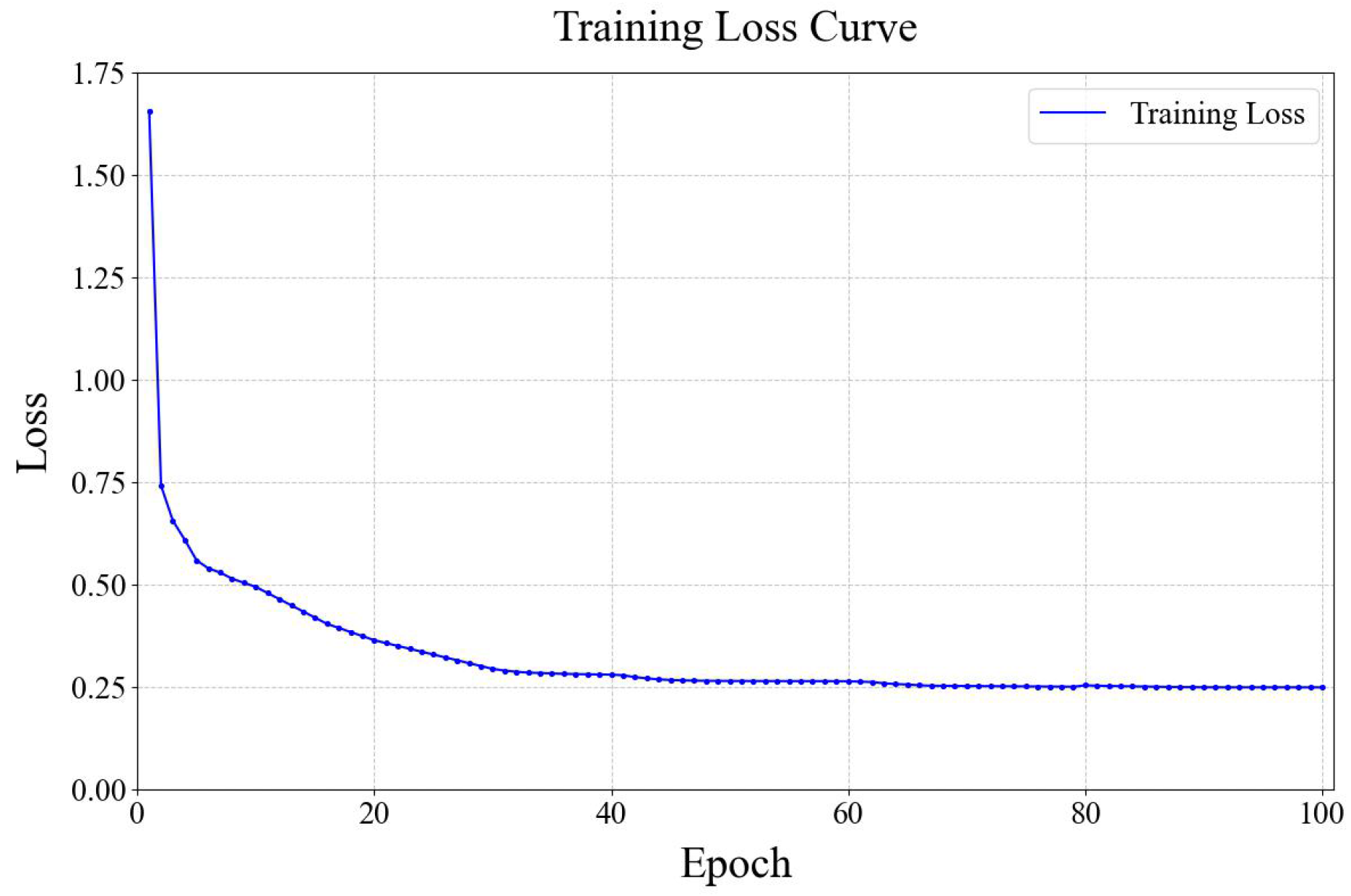

Table 9 presents the computational metrics for the UNSW-NB15 dataset. The training process took approximately 901.07 s, showcasing the efficiency of the DyP-CNX framework in handling complex datasets. Furthermore, the total inference time for the test set was 0.2213 s, which corresponds to a per-sample inference time of approximately 0.000003 s (about 3 µs). These metrics indicate that the model is not only robust and accurate but also highly efficient, making it suitable for real-time intrusion detection scenarios where prompt responses are critical. This efficiency is particularly beneficial in dynamic network environments, where rapid processing and analysis of data streams are essential for maintaining a robust security posture.

To further validate the effectiveness of the model, we conducted another experiment on the CSE-CIC-IDS 2018 dataset. For comparison with our proposed method, we selected AI-AWS-RF [9], CLHF [28], and PCA-DNN [29], which have shown outstanding performance on this dataset. All methods used the complete dataset, and we directly adopted the best results from the original literature to ensure a fair comparison. The experimental results of each method are shown in Table 10. As shown in Table 10, the proposed DyP-CNX achieves an accuracy of 98.82% and an F1 of 99.34%, both higher than those of the other models. The results indicate that the DyP-CNX method can effectively balance security and usability requirements in practical deployments, providing a more robust solution for complex network environments.

4.4.4. Ablation Experiments

Since our proposed model is an ensemble framework, we assessed its performance against its individual component models.

The UNSW-NB15 dataset was chosen for ablation experiments to verify the effectiveness of different components in the model design. One main reason is that UNSW-NB15 is currently widely used in intrusion detection, as seen in refs. [7, 9, 16, 17, 18], and others. Moreover, in the experimental results based on UNSW-NB15, there was a significant improvement in F1 score performance. As for the CSE-CIC-IDS 2018 dataset, our core objective is to assess the generalization ability of the complete method, using it as an independent external test set to validate the overall performance of the model in complex environments.

Subsequently, we conducted a comparative analysis of CNN, XGBoost, and our proposed DyP-CNX model based on the UNSW-NB15 dataset. The experimental results, shown in Table 11, indicate that the proposed model improves accuracy by nearly 6 percentage points and precision by over 10 percentage points relative to the standalone CNN, the stronger of the two component models. It also exhibits a strong F1, demonstrating the model’s robust ability to correctly identify positive samples while effectively balancing false negatives and false positives.

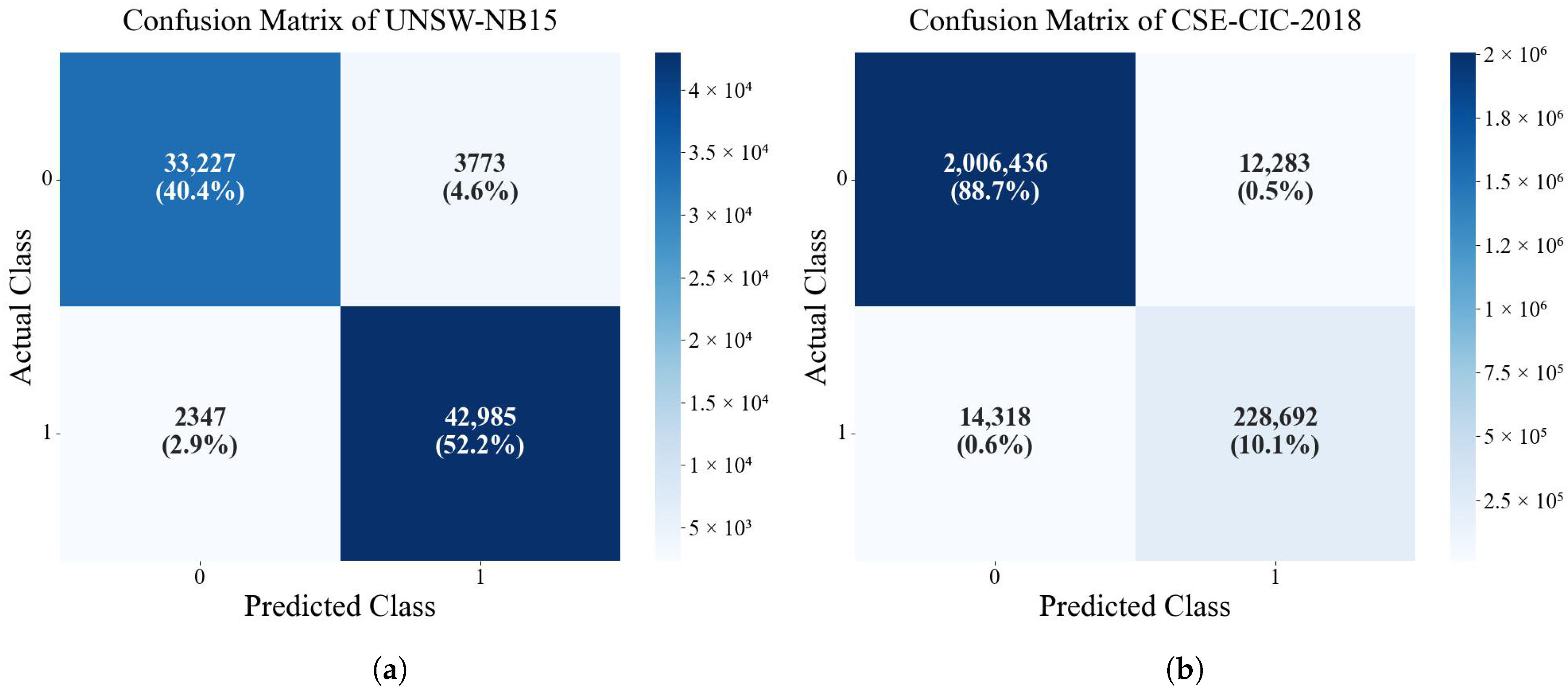

4.4.5. Confusion Matrix on Different Datasets

The confusion matrices of the DyP-CNX approach on the two datasets are shown in Figure 4.

The confusion matrix results reveal that the model exhibits high overall classification performance on both datasets, but the misclassification patterns differ. On the UNSW-NB15 dataset (Figure 4a), the number of false negatives (FN = 3773, attacks classified as normal) is higher than the number of false positives (FP = 2347, normal traffic classified as attacks), indicating that the model may lack sufficient sensitivity to certain attack types (such as low-frequency or novel attack patterns). On the CSE-CIC-IDS 2018 dataset (Figure 4b), the number of false positives (FP = 14,318) is higher than the number of false negatives (FN = 12,283), suggesting that the model has difficulty precisely identifying normal traffic. A possible cause is the extremely large volume of normal traffic (TN = 2,006,436) in this dataset, which may lead the model to adopt a conservative decision threshold for attack behavior.

This difference in misclassification patterns further reflects the model’s limited generalization across network environments (UNSW-NB15 is a mixed traffic dataset, whereas CSE-CIC-IDS 2018 is a cloud platform traffic dataset). Specifically, the model’s capability to extract high-dimensional sparse features (such as encrypted traffic or low-frequency attacks in CSE-CIC-IDS 2018) may be constrained by the CNN architecture, while XGBoost in the ensemble process may over-rely on explicit statistical features and fail to fully capture the temporal and contextual dependencies of attack types. As a next step, we suggest breaking down the misclassification distribution by specific attack type (such as DoS, Exploit, etc.) to identify the model’s weak points for certain attacks (such as slow attacks or zero-day attacks).
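The quantities discussed above follow directly from the four confusion-matrix counts. The sketch below shows the standard definitions, with the attack class treated as positive. Only FP and TN for CSE-CIC-IDS 2018 are taken from the text; the helper names and the counts passed to `summarize` are hypothetical, since the true-positive counts are not quoted in this section.

```python
def false_positive_rate(fp, tn):
    """Share of normal traffic that is flagged as an attack."""
    return fp / (fp + tn)


def summarize(tp, fp, tn, fn):
    """Standard binary metrics, with the attack class treated as positive."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # also called sensitivity or TPR
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "fpr": false_positive_rate(fp, tn),
    }


# FP and TN as quoted in the text for CSE-CIC-IDS 2018 (Figure 4b).
fpr = false_positive_rate(fp=14_318, tn=2_006_436)
print(f"FPR on CSE-CIC-IDS 2018: {fpr:.4%}")  # roughly 0.7%

# Hypothetical counts, only to show the shape of the output.
print(summarize(tp=8, fp=2, tn=85, fn=5))
```

Although false positives outnumber false negatives in absolute terms on this dataset, they amount to well under 1% of the normal traffic, which illustrates why raw counts should be read together with rates when the classes are this imbalanced.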

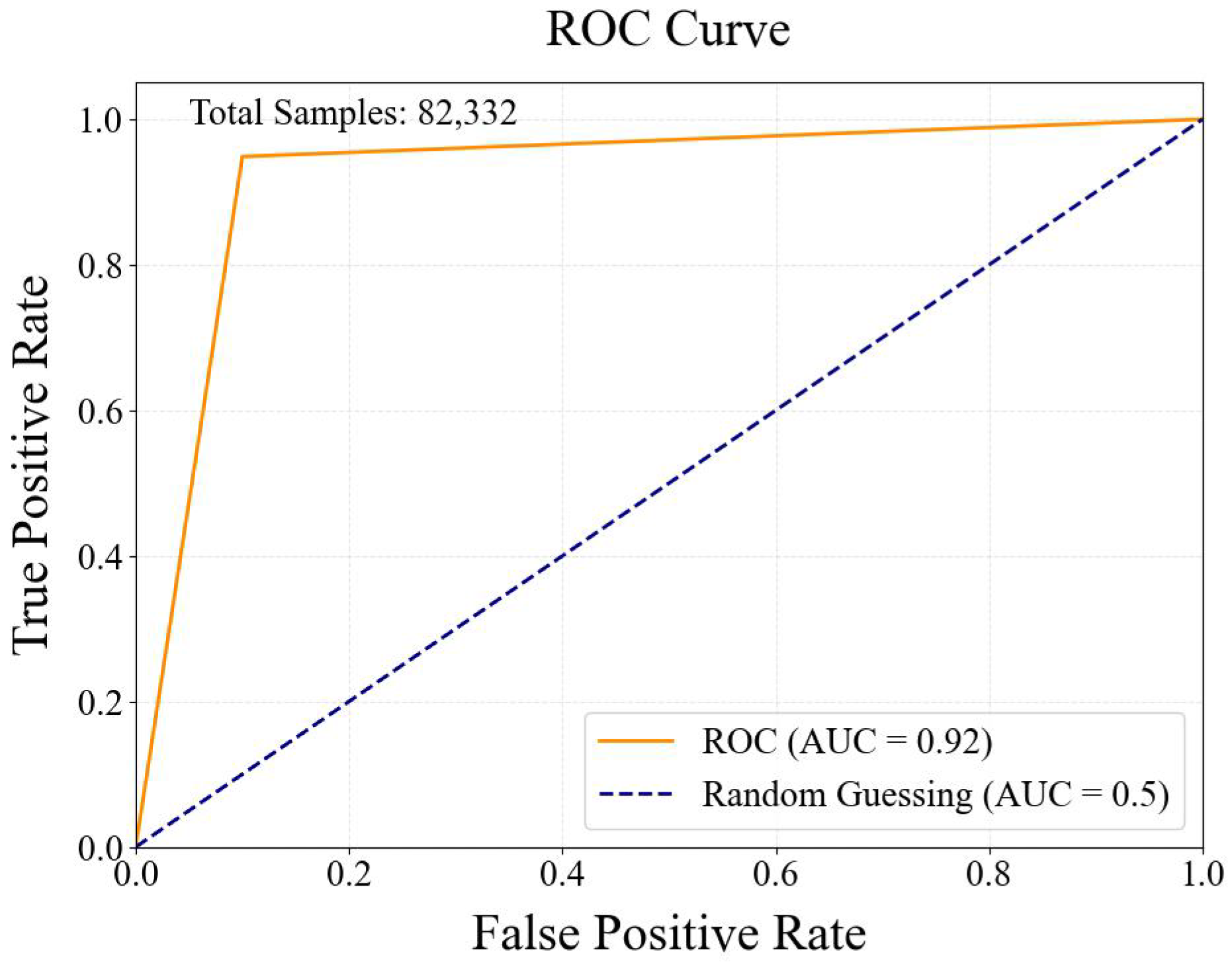

To further evaluate the classification performance of the method, the ROC curve is plotted to illustrate the relationship between the True Positive Rate (TPR) and the False Positive Rate (FPR), demonstrating the method’s performance at various threshold settings. The ROC curve for the UNSW-NB15 dataset is shown in Figure 5.
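For readers who want to reproduce this kind of curve, the sketch below builds ROC points by sweeping the decision threshold over the predicted scores and integrates the area under the curve with the trapezoidal rule. It is a generic illustration under stated assumptions: the function names and the toy labels and scores are hypothetical and are not the model outputs behind Figure 5.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) pairs obtained by lowering the threshold step by step.

    `labels` holds 1 for attack and 0 for normal; `scores` holds the predicted
    attack probability. Samples with equal scores are handled together.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("both classes must be present")
    pairs = sorted(zip(scores, labels), key=lambda p: -p[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Everything scored at this threshold is predicted positive at once.
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under the ROC curve via the trapezoidal rule."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )


# Hypothetical labels and scores for four flows.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
curve = roc_points(labels, scores)
print(curve)
print(auc(curve))  # 0.75
```

An AUC of 1.0 corresponds to a perfect ranking of attacks above normal traffic, while 0.5 corresponds to a random ranking.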

4.4.6. Comparison Study

Table 8 compares different methods on the UNSW-NB15 dataset, focusing primarily on accuracy and F1 score. AE-GRU [5] shows moderate performance in both metrics, indicating balanced performance. CL-FS [7] has slightly higher accuracy than AE-GRU but a lower F1 score, suggesting a trade-off between precision and recall. XGBoost-GRU [16] performs well in accuracy but has a lower F1 score, possibly indicating an imbalance between precision and recall. LightGBM [17] performs excellently in both metrics, demonstrating effective handling of data distribution and category prediction. SRF-CNN-BiLSTM [18] performs well, maintaining a good balance between accuracy and F1 score. Although DCNN-LSTM [27] has high accuracy, its lower F1 score indicates issues with class balance. Comparing the experimental results, GRU-based hybrid models such as AE-GRU and XGBoost-GRU show competitive performance but do not achieve the best results. DCNN-LSTM, which combines CNN and LSTM variants, reaches high accuracy but falls behind on other metrics.

Table 10 reports the experiment on the CSE-CIC-IDS 2018 dataset. This dataset originates from actual security projects and features high authenticity, diversity, and comprehensiveness. In particular, it closely simulates real-world environments, which makes performance evaluation on it highly meaningful for practical applications. According to the results in Table 10, AI-AWS-RF [9], CLHF [28], and PCA-DNN [29] demonstrate stable performance with high accuracy and F1 scores, indicating good classification capabilities; the method proposed in this paper performs the best, achieving the highest accuracy and the highest F1 score. This shows its advantages in classification accuracy and class balance.

As shown in Table 11, the XGBoost model exhibited relatively low performance, with an accuracy of 85.06%, a precision of 80.17%, and an F1 score of 87.71%. The standalone CNN model outperformed XGBoost, achieving an accuracy of 86.96%, a precision of 83.30%, and an F1 score of 88.97%. Our proposed method, DyP-CNX, which incorporates dynamic IQR normalization, demonstrated the best results, with an accuracy of 92.57%, a precision of 93.40%, and an F1 score of 91.57%. Compared to XGBoost, the standalone CNN improved accuracy by approximately 2 percentage points, indicating that deep learning models have a superior feature representation capability, enabling them to capture local features more effectively and, thus, enhance overall performance. Combining dynamic normalization with the fused model in DyP-CNX increased performance further, with an improvement of about 2.6 points in F1 score over the standalone CNN. This demonstrates that the deep fusion strategy employed in this paper, which allows the model to utilize both local and global features, further enhances detection ability under complex and concealed attack scenarios.
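The size of each improvement can be checked directly from the figures quoted above. The short script below computes the differences in percentage points; the metric values are those stated in the text for Table 11, while the dictionary layout and the helper name `gain` are illustrative choices.

```python
# Metrics (in %) as quoted in the text for Table 11 on UNSW-NB15.
results = {
    "XGBoost": {"accuracy": 85.06, "precision": 80.17, "f1": 87.71},
    "CNN": {"accuracy": 86.96, "precision": 83.30, "f1": 88.97},
    "DyP-CNX": {"accuracy": 92.57, "precision": 93.40, "f1": 91.57},
}


def gain(model, baseline, metric):
    """Difference between two models in percentage points, rounded to 2 places."""
    return round(results[model][metric] - results[baseline][metric], 2)


print("CNN vs XGBoost accuracy:", gain("CNN", "XGBoost", "accuracy"))
for metric in ("accuracy", "precision", "f1"):
    print(
        f"DyP-CNX {metric}: "
        f"+{gain('DyP-CNX', 'CNN', metric)} vs CNN, "
        f"+{gain('DyP-CNX', 'XGBoost', metric)} vs XGBoost"
    )
```

Reporting these differences in percentage points rather than as relative percentages avoids ambiguity when the baselines themselves differ.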

Comparing Table 8 and Table 11, it can be observed that Ref. [16], which adds a GRU module to the XGBoost model in Table 11, achieves approximately a 2% improvement in accuracy and F1. The models SRF-CNN-BiLSTM [18] and DCNN-LSTM [27] have also been somewhat optimized relative to the CNN in Table 11, with performance improvements to varying degrees. Overall, however, their results are still inferior to those of the proposed DyP-CNX method. This further demonstrates that the DyP-CNX approach can effectively detect concealed threats in complex attack scenarios while avoiding over-sensitivity, providing a robust solution for practical network security defense.

In conclusion, DyP-CNX significantly outperforms other methods across all metrics, indicating its greater effectiveness in identifying and correctly classifying instances. While our model performs well on the evaluated datasets, it still has several limitations. First, the model relies heavily on the representativeness of the training data; its performance may be affected when applied to different or more complex scenarios. Second, the deep model has high computational complexity, requiring substantial hardware resources during deployment, which may impact real-time performance and scalability. Additionally, the robustness of the model against unknown attack types or maliciously crafted adversarial samples needs further validation.