An Intelligent Docent System with a Small Large Language Model (sLLM) Based on Retrieval-Augmented Generation (RAG)

Abstract

1. Introduction

1.1. Research Background

1.2. Research Purpose

2. Background & Related Works

2.1. Large Language Model (LLM) Research Cases

2.1.1. The Rise of Multimodal LLM (MLLM)

2.1.2. Combination with Agentic AI

2.1.3. Reinforcement Learning-Based Alignment

2.2. Small Large Language Model (sLLM) Research Cases

2.2.1. On-Device AI and Edge Computing

2.2.2. RAG-Based sLLM Optimization

2.2.3. Hybrid Search

2.3. Retrieval-Augmented Generation (RAG) Research Cases

2.3.1. Multi-Modal RAG

2.3.2. Self-Correcting RAG

2.3.3. Continual Learning RAG

2.4. Vector Database (Vector DB) and FAISS Research Cases

2.4.1. Large-Scale Image and Video Search

2.4.2. Recommendation Systems

2.4.3. Anomaly Detection System

2.5. LangChain-Based Integrated Framework Research Cases

2.5.1. LLM-Based Chatbot Development

2.5.2. Code Generation and Automation

2.5.3. Data Analysis and Automatic Report Generation

3. Research Methodology

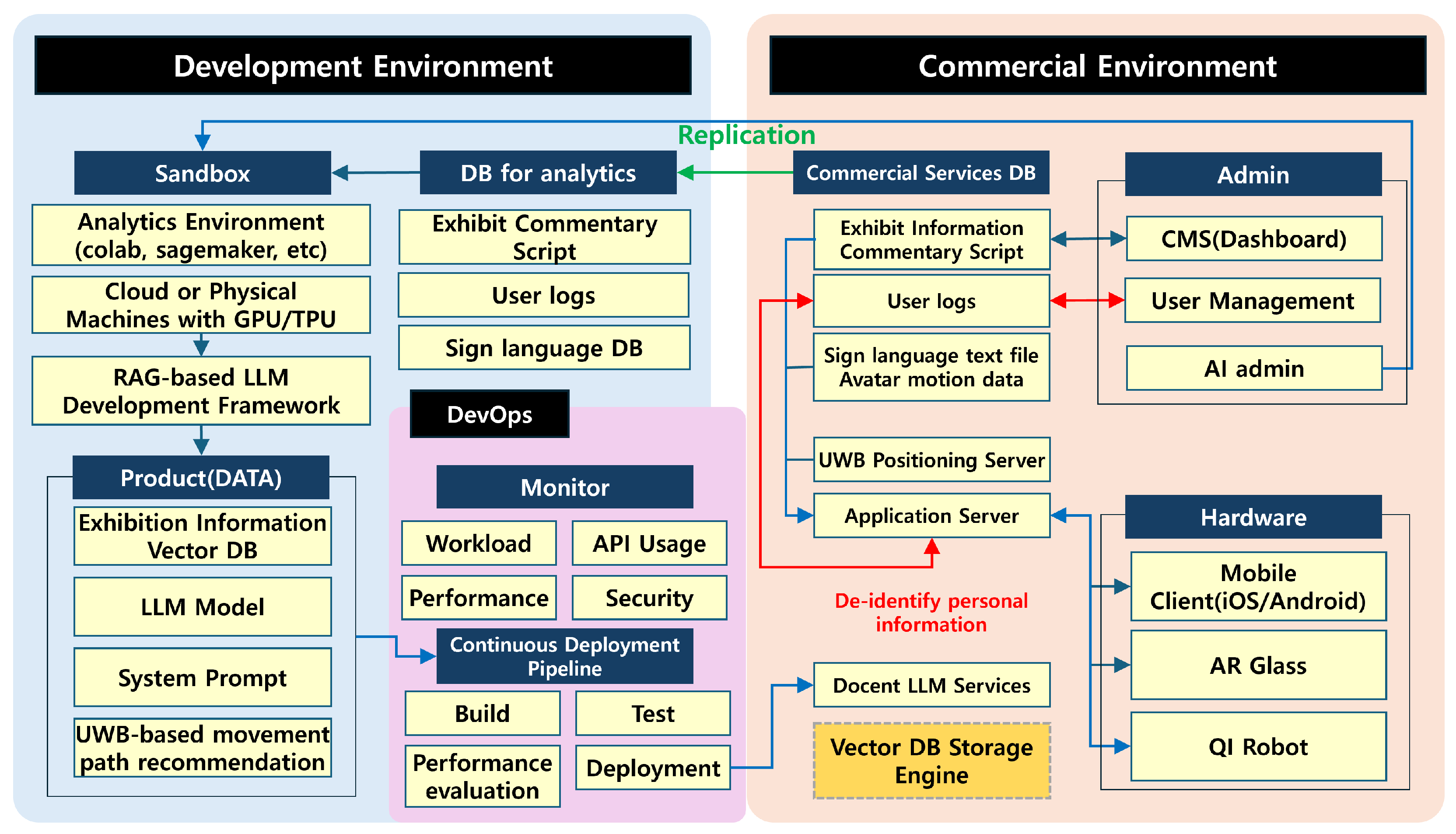

3.1. Overview of the Overall Configuration

3.1.1. Development Environment

- Development Database: This database manages the source data needed for research and training, such as exhibition description texts, Q&A datasets, user persona data, and pilot user logs. It uses a hybrid structure that combines a relational database (MySQL) with a vector database (OpenSearch) that stores the embedding vectors. After pre-processing, it holds more than 199,000 exhibition descriptions and over 102,000 question–answer pairs in JSON format; these are vectorized with a multilingual sentence embedding model and indexed with FAISS and Amazon OpenSearch. Researchers can freely tune performance, add new data, and configure the experimental environment. Data are replicated from the development database to the commercial database through an ETL (Extract-Transform-Load) pipeline. Transfers run in the background, either periodically or in real time when triggered, and exhibition content, Q&A, user logs, and similar records are anonymized and pre-processed, then sorted and version-controlled in JSON format before transmission.
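The anonymization and versioning step of the ETL pipeline can be pictured with a short sketch. It is an illustration under stated assumptions, not the authors' pipeline: the record fields (`type`, `id`, `user_id`, `phone`), the salted SHA-256 pseudonymization, the hypothetical `SALT` constant, and the version-string format are all invented for the example.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical salt; a real deployment would load this from a secret store.
SALT = b"example-salt"


def anonymize_user_id(user_id: str) -> str:
    """Replace a raw user identifier with a salted SHA-256 digest (pseudonymization)."""
    return hashlib.sha256(SALT + user_id.encode("utf-8")).hexdigest()


def prepare_batch(records, version):
    """Anonymize, sort, and wrap records in a versioned JSON payload for transfer."""
    cleaned = []
    for rec in records:
        rec = dict(rec)  # copy so the caller's records are not mutated
        if "user_id" in rec:
            rec["user_id"] = anonymize_user_id(rec["user_id"])
        rec.pop("phone", None)  # drop a direct identifier if present (assumed field)
        cleaned.append(rec)
    # Deterministic order makes successive versions easy to diff.
    cleaned.sort(key=lambda r: (r["type"], r["id"]))
    payload = {
        "version": version,
        "exported_at": datetime.now(timezone.utc).isoformat(),
        "records": cleaned,
    }
    return json.dumps(payload, ensure_ascii=False, sort_keys=True)
```

Sorting by a stable key and serializing with `sort_keys=True` keeps each exported version byte-for-byte reproducible apart from the timestamp, which is one simple way to make JSON batches suitable for version control.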

3.1.2. Commercial Environment

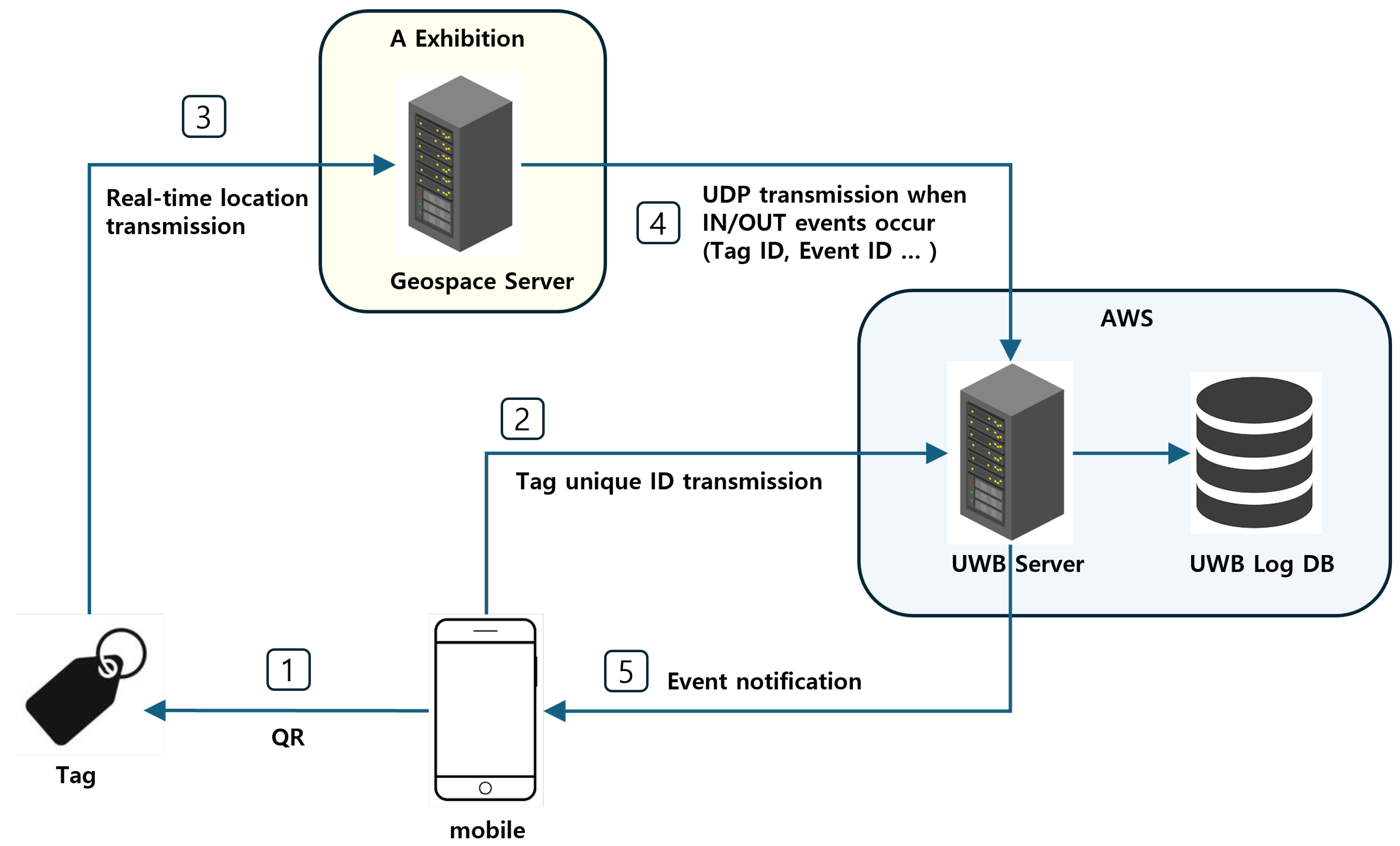

- Commercial Database: The commercial database consists of a relational database (MySQL) and a vector database (OpenSearch) for real-time RAG search. Through regular replication and synchronization with the development database, it stores the latest exhibition information, user sessions, and logs, and it manages personalized data such as UWB-based location information, user language settings, and preferences. It supports high-speed vector search and response generation so that user queries and location-based search requests are answered quickly, and it is deployed as a distributed cluster to provide high availability and stable response times. Service operators also use the commercial database for user support, content-update monitoring, and service log analysis. User text or voice queries are vectorized by the Application Server and then retrieved from the OpenSearch vector DB using approximate nearest neighbor (ANN) search [39].

- The UWB Positioning Server tracks visitors’ locations in real time to enable location-based content delivery [40].

- Docent LLM Services and Application Server: These retrieve relevant documents from the vector DB in response to visitors' queries and use the LLM to generate natural explanatory responses, which are then delivered to users [41].

- Admin functions are responsible for system maintenance and content management, including the administrator dashboard, user management, and AI status monitoring.
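To make the query path concrete, the sketch below builds a request body in the OpenSearch k-NN plugin's `knn` query format and assembles a grounded prompt from the retrieved passages. The field name `embedding`, the `_source` fields, the helper names `build_knn_query` and `build_prompt`, and the prompt wording are hypothetical; the actual index mapping and prompt template used by the system are not specified here.

```python
import json


def build_knn_query(query_vector, k=5, field="embedding"):
    """Request body for an OpenSearch k-NN (ANN) search.

    The vector field name and the _source fields are assumptions; they depend
    on how the index mapping was defined.
    """
    return {
        "size": k,
        "_source": ["exhibit_id", "text"],
        "query": {"knn": {field: {"vector": list(query_vector), "k": k}}},
    }


def build_prompt(question, passages, language="English"):
    """Assemble a grounded docent prompt from retrieved exhibit passages."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return (
        "You are a museum docent. Answer only from the context below; "
        "if the context is insufficient, say so.\n"
        f"Answer in {language}.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    body = build_knn_query([0.12, -0.08, 0.33], k=3)
    print(json.dumps(body))
```

The dictionary would be serialized with `json.dumps` and sent to the index's `_search` endpoint; the `text` fields of the returned hits would then be passed to `build_prompt` before the sLLM is called.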

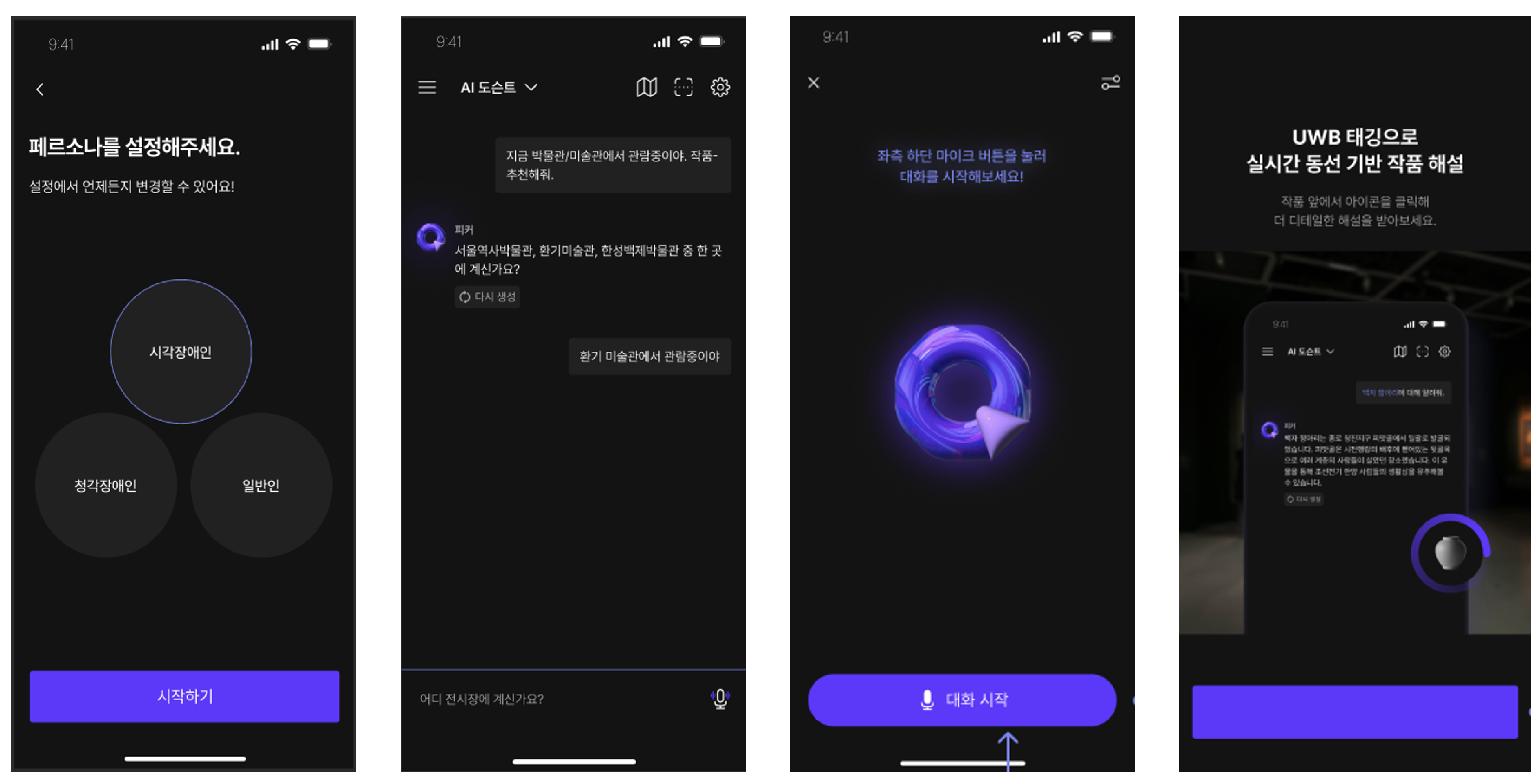

- Hardware terminals are implemented as mobile apps (iOS/Android), augmented reality glasses (AR Glass), and exhibition guide robots (QI Robot), and support various output formats (text, voice).

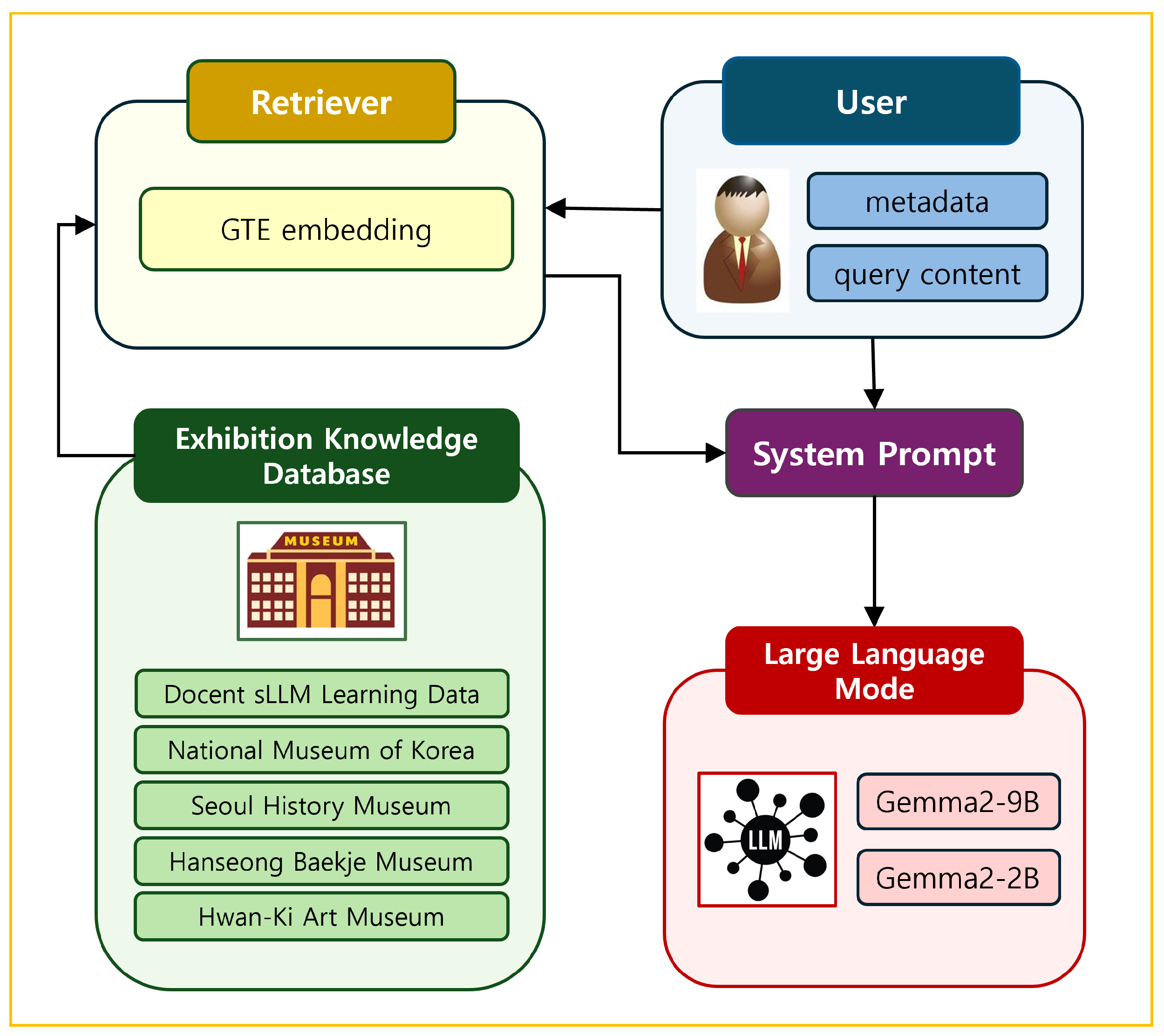

3.2. Detailed Description of Applied Technologies

3.2.1. Information Search Module

3.2.2. Generation Module

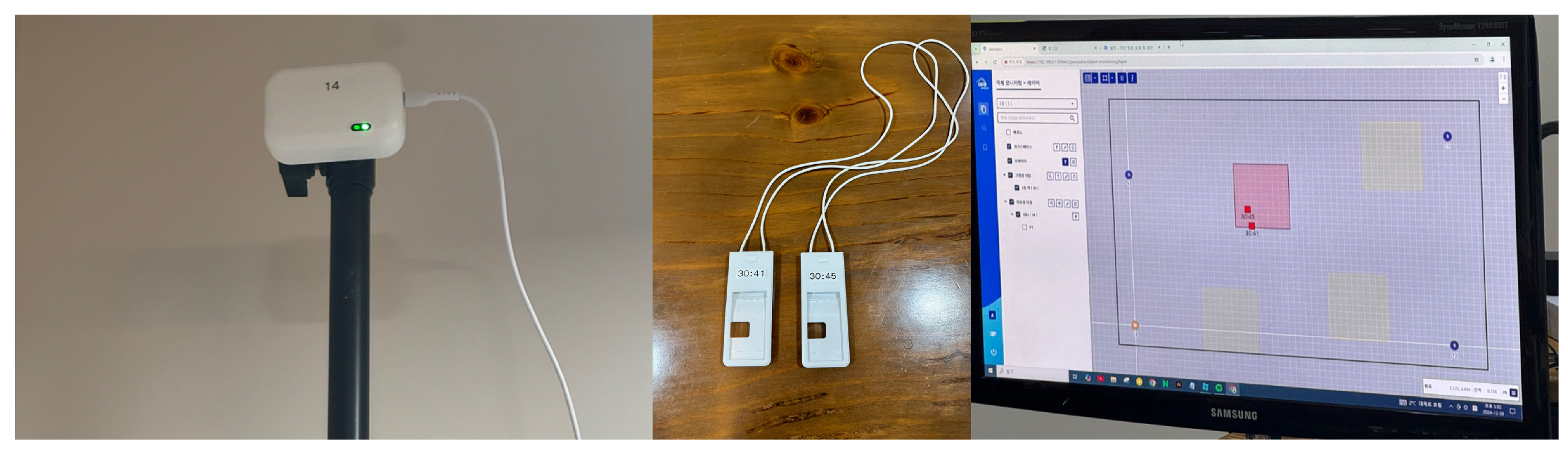

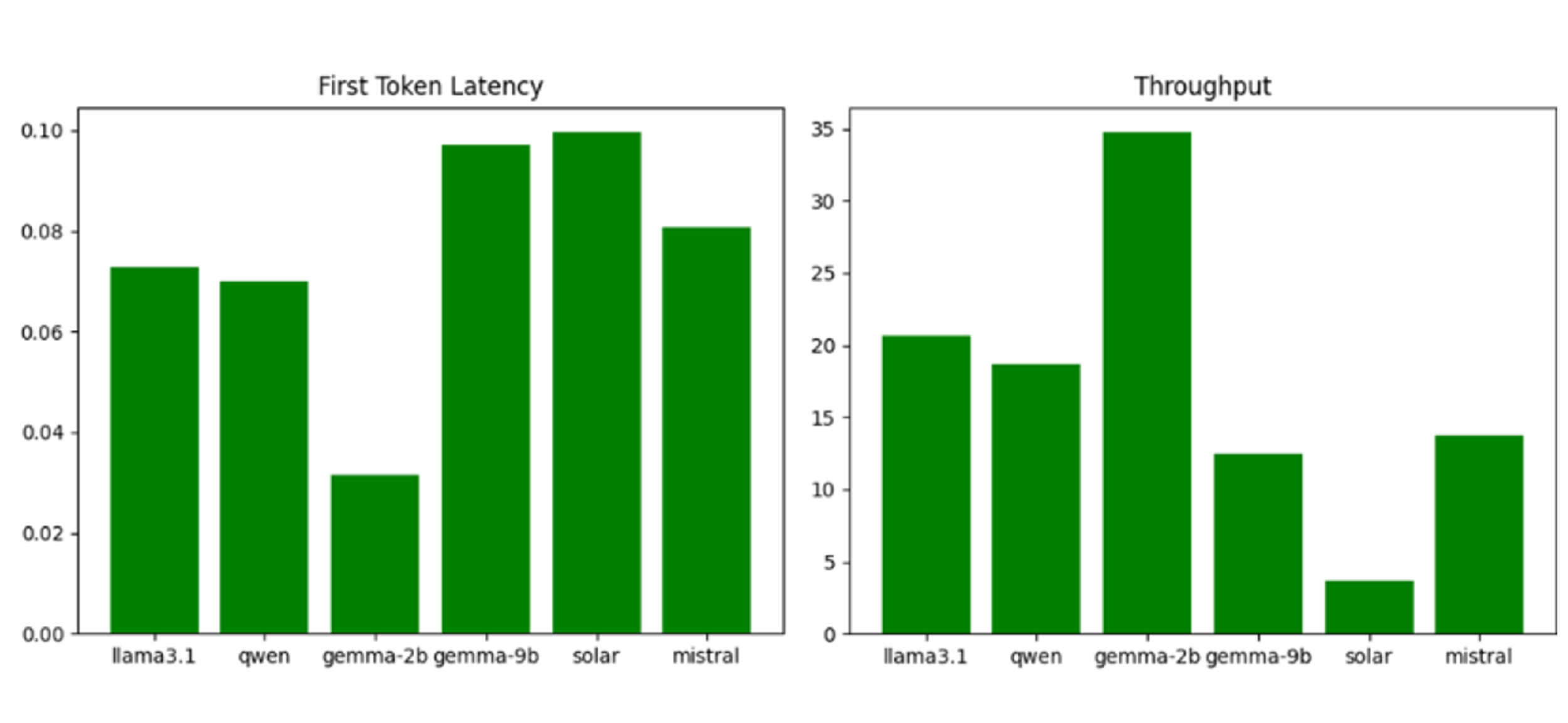

3.2.3. Ultra-Precise Indoor Positioning Technology (UWB-Based)

- $d_i$ is the distance between the UWB tag and the $i$-th UWB anchor.

- $c$ is the propagation speed (approximately $3 \times 10^8$ m/s).

- $t_{\mathrm{TX}}$ is the transmission time.

- $t_{\mathrm{RX}}$ is the reception time.

- $d$ is the distance between the current user location and the center of the exhibit.

- $r_{\mathrm{in}}$ is the content trigger entry radius.

- $r_{\mathrm{out}}$ is the content termination radius.
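A minimal sketch of the ranging and triggering rules these symbols describe, under two assumptions. First, one-way time of flight with synchronized tag and anchor clocks, so the range to anchor $i$ is $d_i = c\,(t_{\mathrm{RX}} - t_{\mathrm{TX}})$, with $t_{\mathrm{TX}}$ the transmission time and $t_{\mathrm{RX}}$ the reception time. Second, the entry radius $r_{\mathrm{in}}$ is smaller than the termination radius $r_{\mathrm{out}}$, forming a hysteresis band: content starts when $d \le r_{\mathrm{in}}$ and stops only when $d \ge r_{\mathrm{out}}$, so playback does not toggle while a visitor hovers near one boundary. Deployed UWB systems often use two-way ranging to avoid clock synchronization; the class name `ContentTrigger` is hypothetical.

```python
C = 299_792_458.0  # propagation speed of radio waves in m/s (speed of light)


def tof_distance(t_tx: float, t_rx: float) -> float:
    """One-way time-of-flight range d_i = c * (t_rx - t_tx), times in seconds.

    Assumes the tag and anchor clocks are synchronized.
    """
    return C * (t_rx - t_tx)


class ContentTrigger:
    """Start content inside r_in and stop it only beyond r_out (requires r_in < r_out)."""

    def __init__(self, r_in: float, r_out: float):
        if not r_in < r_out:
            raise ValueError("expected r_in < r_out")
        self.r_in, self.r_out = r_in, r_out
        self.playing = False

    def update(self, d: float) -> bool:
        """Feed the latest user-to-exhibit distance d (m); return whether content plays."""
        if not self.playing and d <= self.r_in:
            self.playing = True
        elif self.playing and d >= self.r_out:
            self.playing = False
        return self.playing
```

With $r_{\mathrm{in}} = 1.5$ m and $r_{\mathrm{out}} = 2.5$ m, a visitor who walks in to 1.4 m and drifts back to 2.4 m keeps hearing the explanation; it ends only once they pass 2.5 m.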

- UWB Anchor Network: Collects distance measurements in real time and uses them to compute the user's coordinates (x, y, z) [49].

- Mobile App: Provides customized explanations of exhibits based on location and plays related multimedia content via the app.
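One common way for an anchor network to turn ranges into coordinates is linearized least-squares trilateration; which solver the system actually uses is not stated, so the sketch below is an assumed stand-in. With anchor positions $\mathbf{a}_i$ and measured ranges $d_i$, subtracting anchor 0's sphere equation $\lVert \mathbf{p} - \mathbf{a}_0 \rVert^2 = d_0^2$ from each of the others gives a linear system $A\mathbf{p} = \mathbf{b}$ with rows $2(\mathbf{a}_i - \mathbf{a}_0)^\top$ and entries $b_i = d_0^2 - d_i^2 + \lVert\mathbf{a}_i\rVert^2 - \lVert\mathbf{a}_0\rVert^2$, solved here through the normal equations. At least four non-coplanar anchors are needed to resolve $z$; the anchor layout in the example is invented.

```python
import math


def solve3(m, v):
    """Solve a 3x3 linear system m @ x = v by Gaussian elimination with partial pivoting."""
    a = [row[:] + [v[i]] for i, row in enumerate(m)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            raise ValueError("degenerate anchor geometry (e.g. coplanar anchors)")
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):  # back-substitution
        s = sum(a[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = (a[r][3] - s) / a[r][r]
    return x


def trilaterate(anchors, dists):
    """Least-squares (x, y, z) from >= 4 anchor positions and their measured ranges."""
    (x0, y0, z0), d0 = anchors[0], dists[0]
    rows, rhs = [], []
    for (xi, yi, zi), di in zip(anchors[1:], dists[1:]):
        # Subtracting anchor 0's sphere equation cancels the quadratic |p|^2 term.
        rows.append([2 * (xi - x0), 2 * (yi - y0), 2 * (zi - z0)])
        rhs.append(d0**2 - di**2 + (xi**2 + yi**2 + zi**2) - (x0**2 + y0**2 + z0**2))
    # Normal equations: (A^T A) p = A^T b
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    return solve3(ata, atb)


if __name__ == "__main__":
    anchors = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (0, 0, 3)]
    true_pos = (3.0, 4.0, 1.0)
    ranges = [math.dist(a, true_pos) for a in anchors]
    print(trilaterate(anchors, ranges))  # approximately [3.0, 4.0, 1.0]
```

With noisy ranges and more than four anchors, the same normal-equation step averages out measurement error rather than fitting any single anchor exactly.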

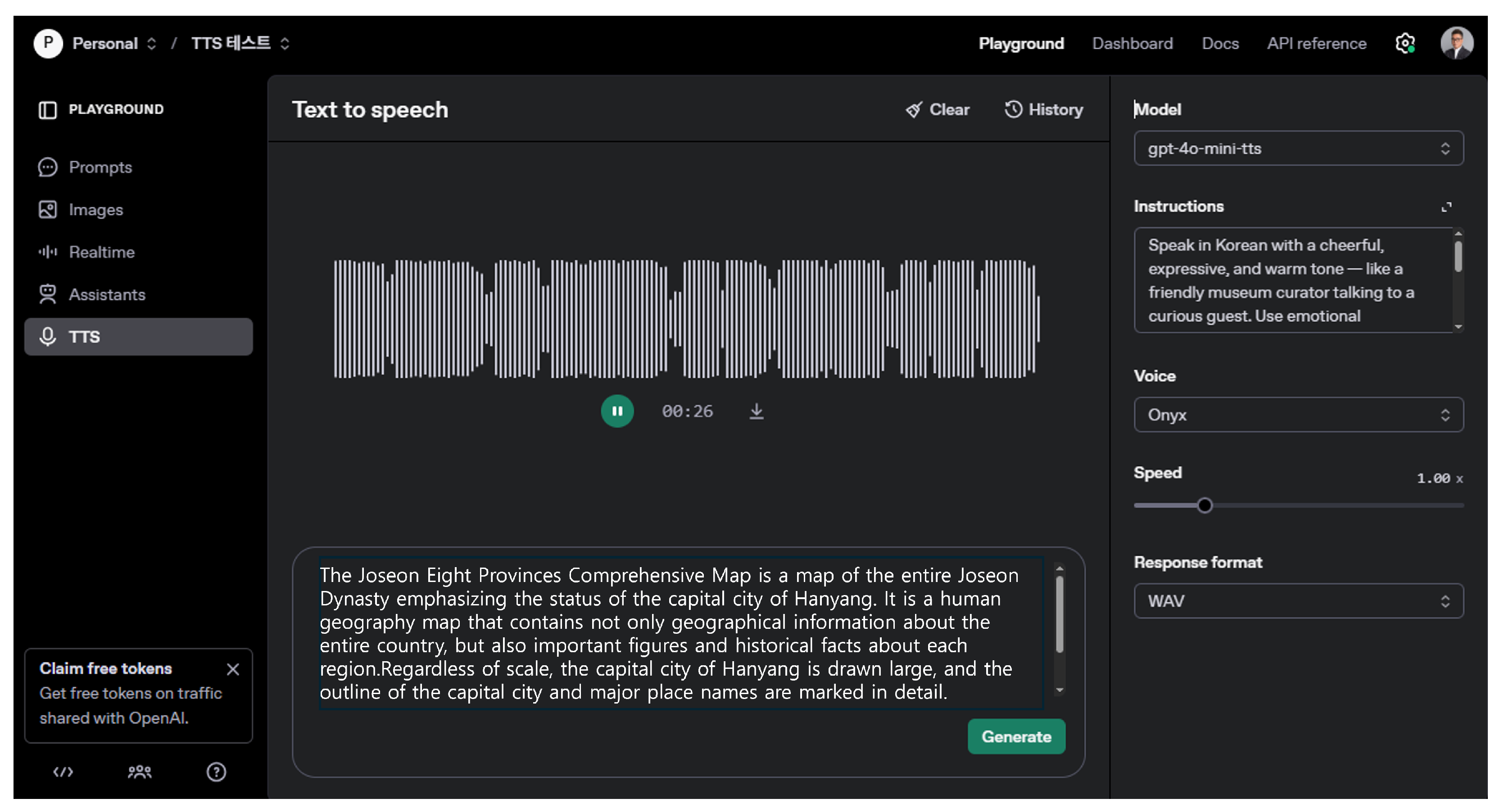

3.2.4. Development of TTS and STT Voice Synthesis Audio Technology

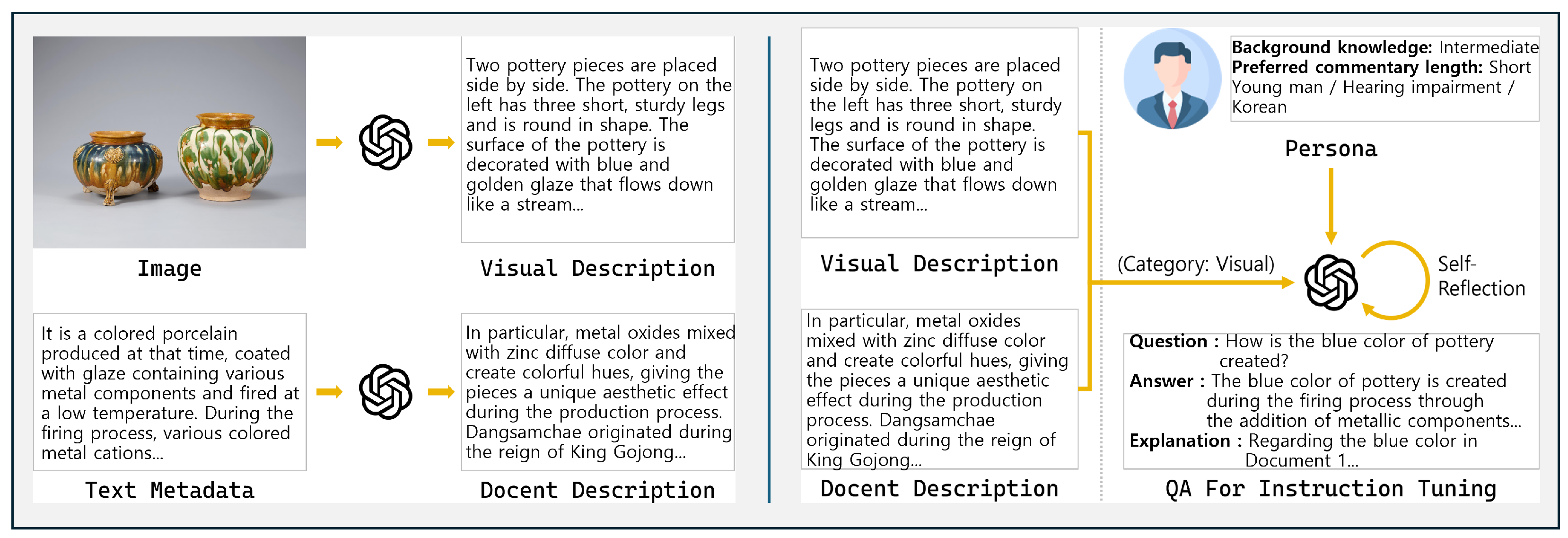

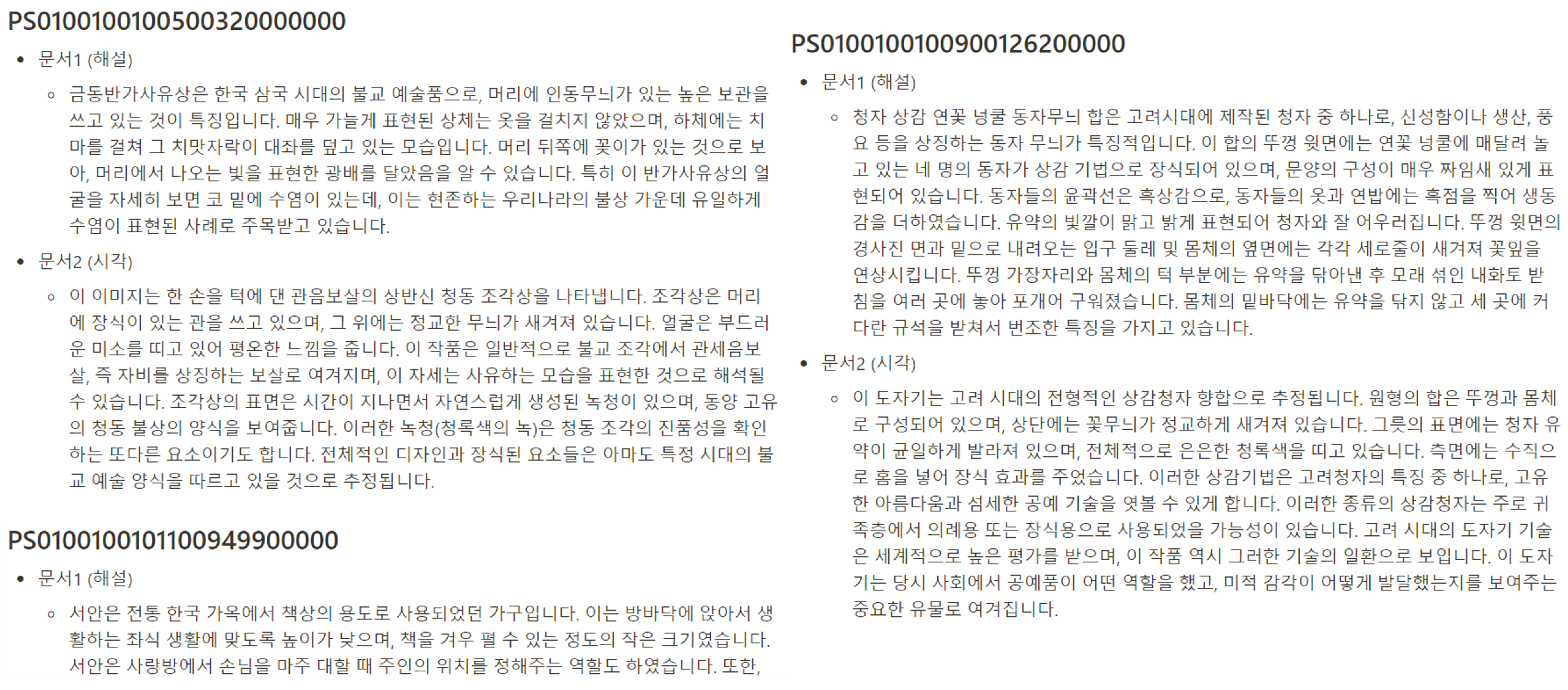

3.3. Building a Dataset

3.3.1. Refining Exhibition Explanations

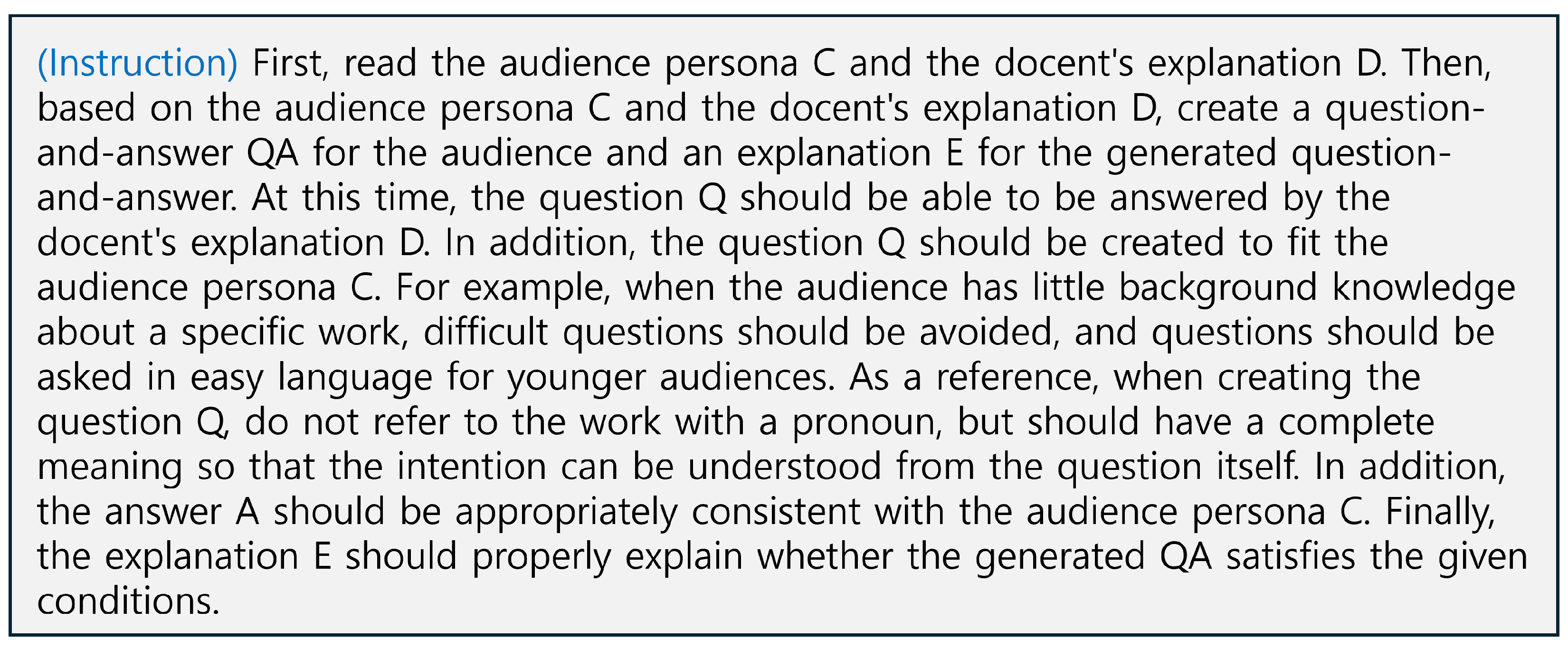

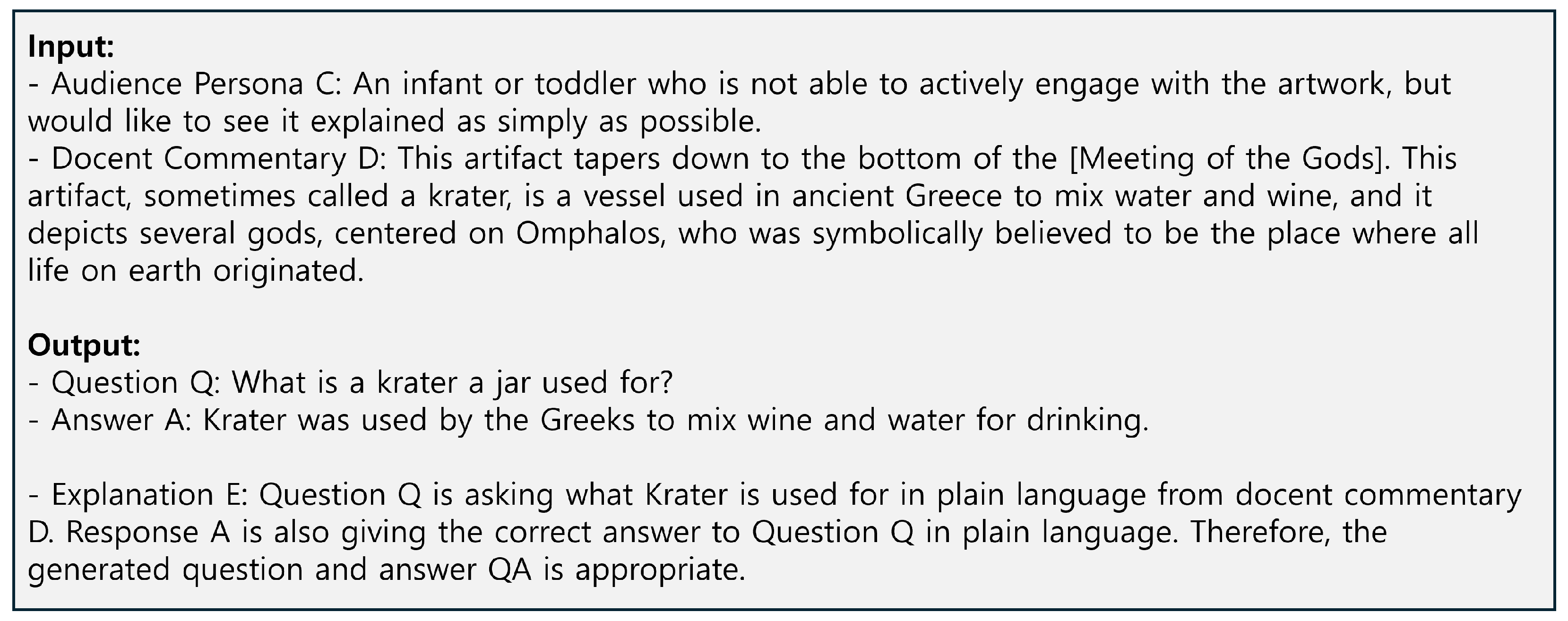

3.3.2. Building a Persona-Based Docent Dataset

3.4. Document Embedding and Vectorization

3.4.1. Application of Multilingual Document Embedding Model

3.4.2. Similar Sentence Normalization and Duplicate Filtering

- $\tau$: duplicate-judgment threshold (two sentences are merged if their similarity is 0.98 or higher).

- When duplicates are found: fd.
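The 0.98 merge rule can be illustrated with a greedy pass over sentence embeddings. This is an assumed procedure for illustration only: the greedy order, the use of cosine similarity, the grouping output, and the helper names `cosine` and `dedup` are not taken from the paper, and all embeddings are assumed to be non-zero. The pairwise loop is quadratic, so at the scale of a 199,000-description corpus a vector index would normally be used to find candidate neighbours first.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv)


def dedup(items, tau=0.98):
    """Greedy near-duplicate merging over (id, embedding) pairs.

    Each item is compared with the representatives kept so far; if its best
    cosine similarity is >= tau it is merged into that representative,
    otherwise it becomes a new representative.
    Returns {representative_id: [merged_ids, ...]}.
    """
    reps = []  # (id, embedding) of kept representatives
    groups = {}
    for item_id, vec in items:
        best_id, best_sim = None, -1.0
        for rep_id, rep_vec in reps:
            s = cosine(vec, rep_vec)
            if s > best_sim:
                best_id, best_sim = rep_id, s
        if best_id is not None and best_sim >= tau:
            groups[best_id].append(item_id)
        else:
            reps.append((item_id, vec))
            groups[item_id] = []
    return groups
```

For example, vectors `[1, 0, 0]` and `[0.99, 0.05, 0]` have a cosine similarity of about 0.9987 and are merged, while an orthogonal `[0, 1, 0]` stays separate.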

3.4.3. Building a Vector Search Infrastructure Based on OpenSearch

3.4.4. FAISS-Based Parallel Search Optimization

- $\mathbf{q}$: the query embedding vector.

- $\mathbf{d}_i$: document vectors in the index set $\mathcal{D}$.

- $s(\mathbf{q}, \mathbf{d}_i)$: the similarity score between $\mathbf{q}$ and $\mathbf{d}_i$ (e.g., cosine similarity).

- $\arg\max_k$: returns the indices of the top-$k$ vectors with the highest similarity scores.
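With query vector $\mathbf{q}$, document vectors $\mathbf{d}_i$ in the index set $\mathcal{D}$, and similarity score $s$, top-$k$ retrieval selects $\operatorname{arg\,max}_{k,\ \mathbf{d}_i \in \mathcal{D}}\, s(\mathbf{q}, \mathbf{d}_i)$. The sketch below computes this exactly with cosine similarity (an inner product on L2-normalized vectors, which is what a flat inner-product FAISS index scores when given normalized inputs) and mimics parallel search by splitting the vectors into shards, searching each shard concurrently, and merging the per-shard top-$k$ lists. It is a pure-Python illustration rather than FAISS itself; the shard count and function names are hypothetical, and Python threads here show the structure of sharded search, not a real speed-up.

```python
import heapq
import math
from concurrent.futures import ThreadPoolExecutor


def normalize(v):
    """Scale a non-zero vector to unit L2 norm."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def shard_topk(q, shard, k):
    """Exact top-k (score, doc_id) pairs within one shard by inner product."""
    scored = ((sum(a * b for a, b in zip(q, vec)), doc_id) for doc_id, vec in shard)
    return heapq.nlargest(k, scored)


def parallel_topk(query, docs, k=3, n_shards=2):
    """Shard the documents, search shards concurrently, and merge partial top-k lists."""
    q = normalize(query)
    normed = [(doc_id, normalize(vec)) for doc_id, vec in docs]
    shards = [normed[i::n_shards] for i in range(n_shards)]
    with ThreadPoolExecutor(max_workers=n_shards) as pool:
        partials = list(pool.map(lambda s: shard_topk(q, s, k), shards))
    # The global top-k is always contained in the union of per-shard top-k lists.
    merged = heapq.nlargest(k, (hit for part in partials for hit in part))
    return [doc_id for _, doc_id in merged]
```

The merge step is what makes sharding safe: each shard only needs to return its own best $k$ candidates, because any document in the global top-$k$ must also be in the top-$k$ of its shard.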

3.5. LangChain-Based RAG Integration Structure

3.6. sLLM Model Training Strategy

- $W'$: final parameters of the model after fine-tuning (fine-tuned parameters).

- $W_0$: parameters of the pre-trained original LLM (base model parameters).

- $\Delta W$: low-rank parameter changes learned using the LoRA method.
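In LoRA, the fine-tuned weights are the frozen base weights plus a low-rank update, $W' = W_0 + \Delta W$ with $\Delta W = \frac{\alpha_{\mathrm{LoRA}}}{r} BA$, where $B \in \mathbb{R}^{d \times r}$, $A \in \mathbb{R}^{r \times k}$, and $r \ll \min(d, k)$ (the formulation of Hu et al.). The sketch below merges such an update into a toy weight matrix and counts trainable parameters. The rank, scaling factor $\alpha_{\mathrm{LoRA}}$ (named `lora_alpha` to avoid confusion with the retrieval weight $\alpha$), and matrix sizes are illustrative assumptions, not the settings used to train the docent sLLM.

```python
def matmul(x, y):
    """Plain-Python matrix product for matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def merge_lora(w0, b, a, lora_alpha, r):
    """Return W' = W0 + (lora_alpha / r) * (B @ A) without modifying W0."""
    delta = matmul(b, a)
    scale = lora_alpha / r
    return [
        [w + scale * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(w0, delta)
    ]


def trainable_params(d, k, r):
    """Full fine-tuning updates d*k weights; LoRA trains only B (d x r) and A (r x k)."""
    return d * k, r * (d + k)
```

For a 4096 × 4096 projection with $r = 8$, `trainable_params(4096, 4096, 8)` gives 16,777,216 weights for full fine-tuning versus 65,536 for LoRA, which is why LoRA fits comfortably on limited GPU memory.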

4. Experiments and Results

4.1. Hardware Specifications

4.2. Background of Scenario Design

4.3. Scenario Components

4.4. Evaluation Data Composition

4.5. Output Devices for Evaluation

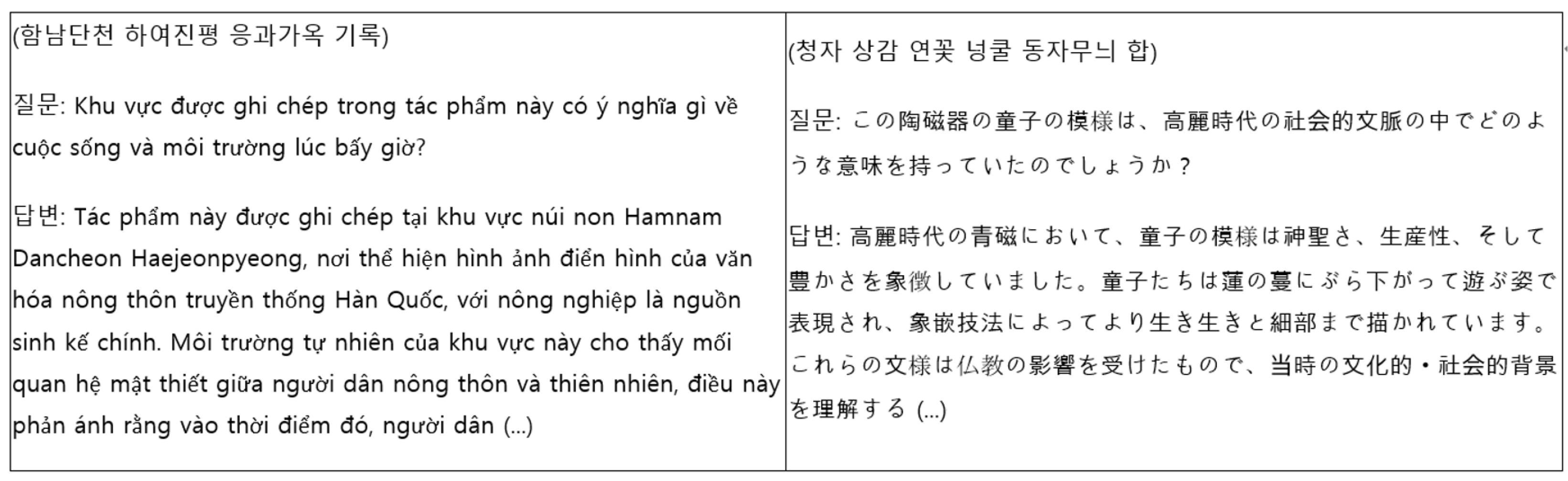

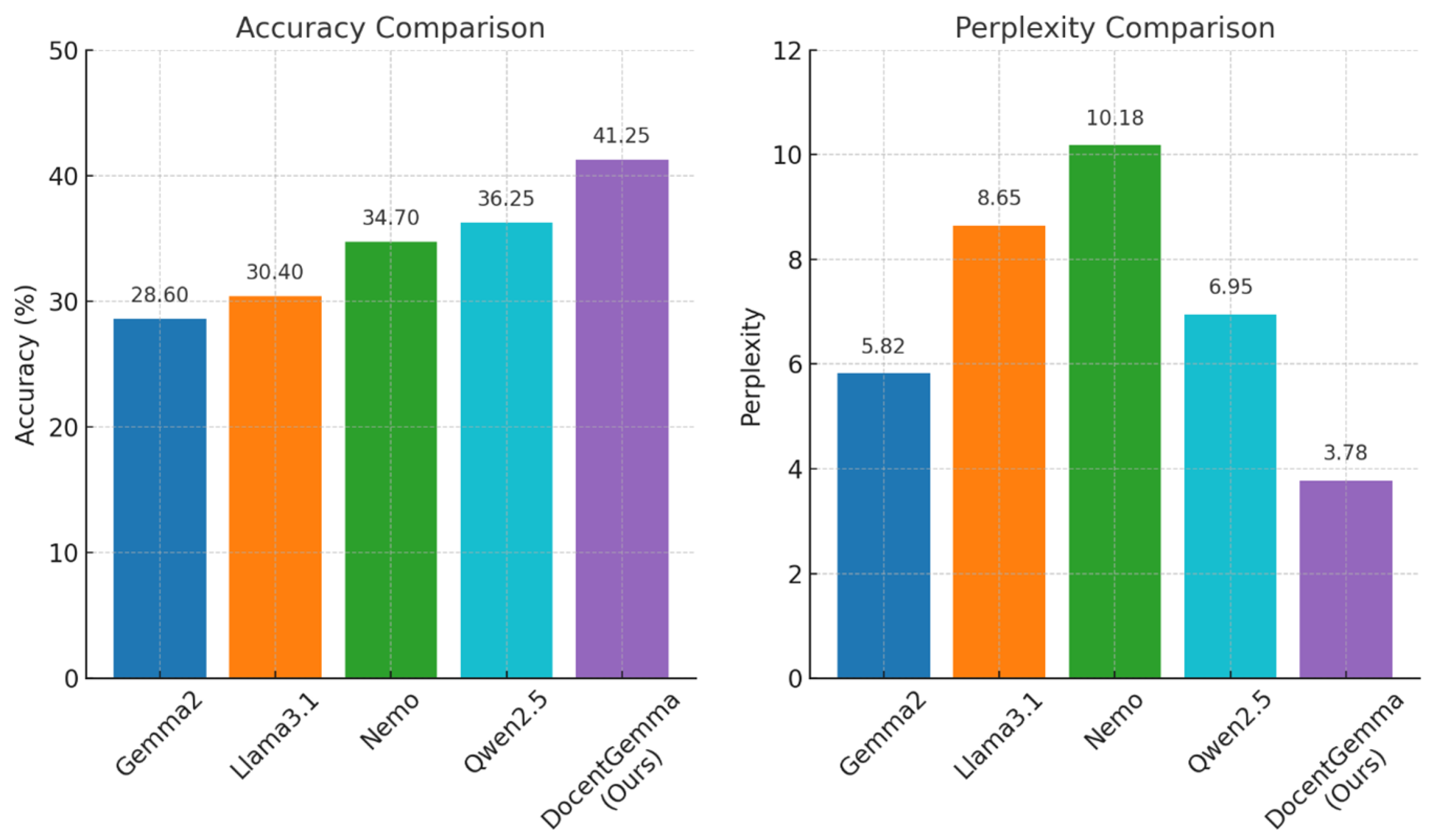

4.6. Small LLM Performance Evaluation

4.7. sLLM Model Training Strategy

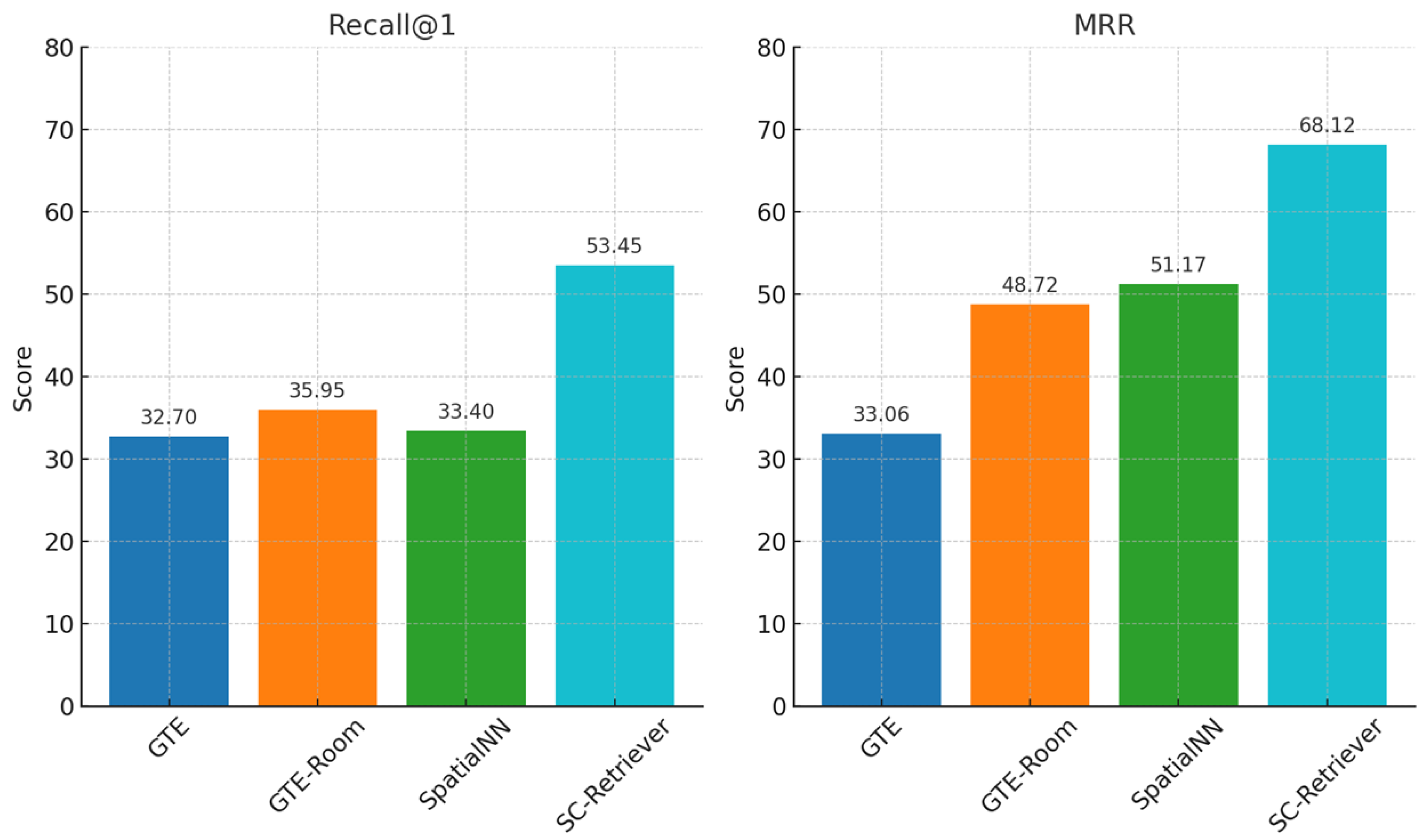

- $Q$: user query.

- $D_i$: $i$-th document (exhibit description, etc.).

- $U$: user's current location coordinates.

- $L_i$: $i$-th exhibit location coordinates.

- $\alpha$: weighting coefficient between text and spatial similarity.

- $\mathrm{sim}_{\mathrm{text}}(Q, D_i)$: text-based semantic similarity (e.g., cosine similarity).

- $\mathrm{sim}_{\mathrm{spatial}}(U, L_i)$: spatial similarity (e.g., distance-based similarity).
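A score that combines the two similarities for query $Q$, document $D_i$, user location $U$, and exhibit location $L_i$ can be sketched as $S_i = \alpha\,\mathrm{sim}_{\mathrm{text}}(Q, D_i) + (1 - \alpha)\,\mathrm{sim}_{\mathrm{spatial}}(U, L_i)$. This is an assumed form: which term $\alpha$ multiplies is not confirmed here, and the spatial similarity $1/(1 + \lVert U - L_i \rVert)$ is a hypothetical choice of distance-based similarity. Helper names and the toy embeddings and coordinates are invented.

```python
import math


def cosine(u, v):
    """Cosine similarity of two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def spatial_sim(user_xy, exhibit_xy):
    """Assumed distance-based similarity in (0, 1]: 1 / (1 + Euclidean distance in metres)."""
    return 1.0 / (1.0 + math.dist(user_xy, exhibit_xy))


def hybrid_rank(q_vec, user_xy, docs, alpha=0.7):
    """Rank docs by alpha * text similarity + (1 - alpha) * spatial similarity.

    docs: list of (doc_id, doc_embedding, exhibit_xy) tuples.
    """
    scored = []
    for doc_id, d_vec, xy in docs:
        s = alpha * cosine(q_vec, d_vec) + (1 - alpha) * spatial_sim(user_xy, xy)
        scored.append((s, doc_id))
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored]
```

In a toy case where exhibit A matches the query text perfectly but is 20 m away and exhibit B matches less well but is 1 m away, $\alpha = 1$ ranks A first while $\alpha = 0.5$ ranks B first; sweeping $\alpha$ over a labelled query set is how a trade-off such as the one in the α table would be chosen.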

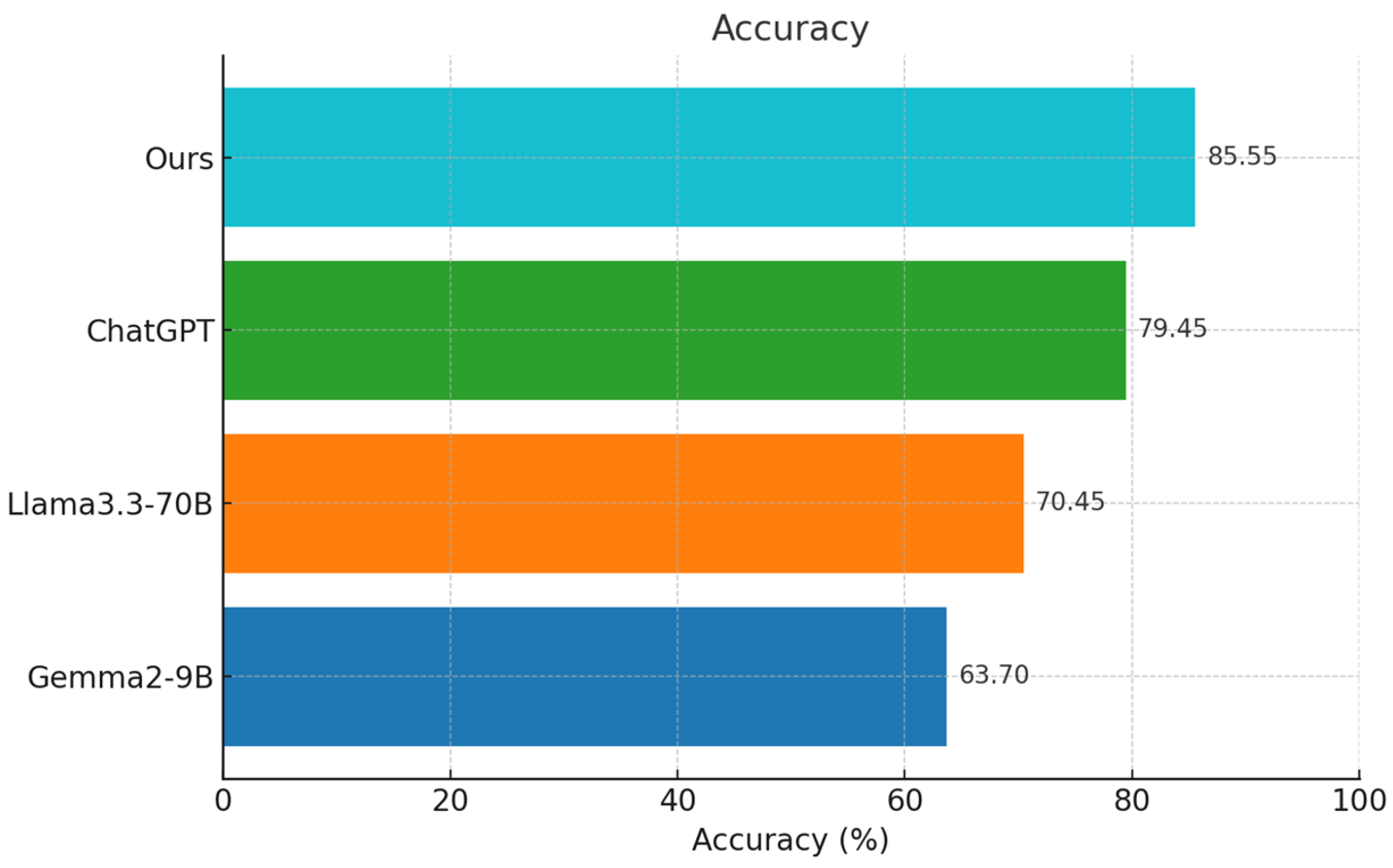

4.8. Final RAG-Based LLM Model Performance Evaluation

4.9. Empirical Application Cases and User Response Analysis

4.10. Demonstration Locations and Deployment Environment

4.11. User Composition and Participation Methods

4.12. User Responses and Qualitative Evaluation Results

4.13. Summary and Interpretation of Empirical Results

5. Conclusions & Future Research

5.1. Conclusions

5.2. Limitations

5.3. Future Research

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Asakawa, S.; Guerreiro, J.; Ahmetovic, D.; Kitani, K.; Asakawa, C. The Present and Future of Museum Accessibility for People with Visual Impairments. In Proceedings of the 20th International ACM SIGACCESS Conference on Computers and Accessibility (ASSETS ’18), Galway, Ireland, 22–24 October 2018; pp. 382–384.

2. Jingyu, S.; Xinyi, L.; Zeyou, Z. Research on Museum Accessibility for the Visually Impaired. SHS Web Conf. 2023, 163, 02022.

3. Mazzanti, P.; Ferracani, A.; Bertini, M.; Principi, F. Reshaping Museum Experiences with AI: The ReInHerit Toolkit. Heritage 2025, 8, 277.

4. Qu, G.; Chen, Q.; Wei, W.; Lin, Z.; Chen, X.; Huang, K. Mobile Edge Intelligence for Large Language Models: A Contemporary Survey. IEEE Commun. Surv. Tutor. 2025. Early Access.

5. Trichopoulos, G.; Konstantakis, M.; Alexandridis, G.; Caridakis, G. Large Language Models as Recommendation Systems in Museums. Electronics 2023, 12, 3829.

6. An, H.; Park, W.; Liu, P.; Park, S. Mobile-AI-Based Docent System: Navigation and Localization for Visually Impaired Gallery Visitors. Appl. Sci. 2025, 15, 5161.

7. Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. arXiv 2019, arXiv:1810.04805.

8. Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. arXiv 2023, arXiv:1910.10683.

9. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language Models are Few-Shot Learners. arXiv 2020, arXiv:2005.14165.

10. OpenAI; Achiam, J.; Adler, S.; Agarwal, S.; Ahmad, L.; Akkaya, I.; Aleman, F.L.; Almeida, D.; Altenschmidt, J.; Altman, S.; et al. GPT-4 Technical Report. arXiv 2024, arXiv:2303.08774.

11. Driess, D.; Xia, F.; Sajjadi, M.S.M.; Lynch, C.; Chowdhery, A.; Ichter, B.; Wahid, A.; Tompson, J.; Vuong, Q.; Yu, T.; et al. PaLM-E: An Embodied Multimodal Language Model. arXiv 2023, arXiv:2303.03378.

12. Schick, T.; Dwivedi-Yu, J.; Dessì, R.; Raileanu, R.; Lomeli, M.; Zettlemoyer, L.; Cancedda, N.; Scialom, T. Toolformer: Language Models Can Teach Themselves to Use Tools. arXiv 2023, arXiv:2302.04761.

13. Yao, S.; Yu, D.; Zhao, J.; Shafran, I.; Griffiths, T.L.; Cao, Y.; Narasimhan, K. Tree of Thoughts: Deliberate Problem Solving with Large Language Models. arXiv 2023, arXiv:2305.10601.

14. Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.L.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. arXiv 2022, arXiv:2203.02155.

15. Christiano, P.; Leike, J.; Brown, T.B.; Martic, M.; Legg, S.; Amodei, D. Deep reinforcement learning from human preferences. arXiv 2023, arXiv:1706.03741.

16. Bender, E.M.; Gebru, T.; McMillan-Major, A.; Shmitchell, S. On the Dangers of Stochastic Parrots: Can Language Models Be Too Big? In Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency (FAccT ’21), Virtual Event, 3–10 March 2021; pp. 610–623.

17. Guerrero, P.; Pan, Y.; Kashyap, S. Efficient Deployment of Vision-Language Models on Mobile Devices: A Case Study on OnePlus 13R. arXiv 2025, arXiv:2507.08505.

18. Jiao, X.; Yin, Y.; Shang, L.; Jiang, X.; Chen, X.; Li, L.; Wang, F.; Liu, Q. TinyBERT: Distilling BERT for Natural Language Understanding. arXiv 2020, arXiv:1909.10351.

19. Gemma Team; Mesnard, T.; Hardin, C.; Dadashi, R.; Bhupatiraju, S.; Pathak, S.; Sifre, L.; Rivière, M.; Kale, M.S.; Love, J.; et al. Gemma: Open Models Based on Gemini Research and Technology. arXiv 2024, arXiv:2403.08295.

20. Kim, J.-H.; Choi, Y.-S. Lightweight Pre-Trained Korean Language Model Based on Knowledge Distillation and Low-Rank Factorization. Entropy 2025, 27, 379.

21. Lewis, P.; Perez, E.; Piktus, A.; Petroni, F.; Karpukhin, V.; Goyal, N.; Küttler, H.; Lewis, M.; Yih, W.-T.; Rocktäschel, T.; et al. Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. arXiv 2021, arXiv:2005.11401.

22. Kim, C.; Joe, I. A Balanced Approach of Rapid Genetic Exploration and Surrogate Exploitation for Hyperparameter Optimization. IEEE Access 2024, 12, 192184–192194.

23. Wahsheh, F.R.; Moaiad, Y.A.; El-Ebiary, Y.A.B.; Hamzah, W.M.A.F.W.; Yusoff, M.H.; Pandey, B. E-Commerce Product Retrieval Using Knowledge from GPT-4. In Proceedings of the 2023 International Conference on Computer Science and Emerging Technologies (CSET), Bangalore, India, 10–12 October 2023; pp. 1–8.

24. Robertson, S.; Zaragoza, H. The Probabilistic Relevance Framework: BM25 and Beyond. Found. Trends Inf. Retr. 2009, 3, 333–389.

25. Karpukhin, V.; Oğuz, B.; Min, S.; Lewis, P.; Wu, L.; Edunov, S.; Chen, D.; Yih, W.-T. Dense Passage Retrieval for Open-Domain Question Answering. arXiv 2020, arXiv:2004.04906.

26. Izacard, G.; Lewis, P.; Lomeli, M.; Hosseini, L.; Petroni, F.; Schick, T.; Dwivedi-Yu, J.; Joulin, A.; Riedel, S.; Grave, E. Atlas: Few-shot Learning with Retrieval Augmented Language Models. arXiv 2022, arXiv:2208.03299.

27. Lin, W.; Chen, J.; Mei, J.; Coca, A.; Byrne, B. Fine-grained Late-interaction Multi-modal Retrieval for Retrieval Augmented Visual Question Answering. arXiv 2023, arXiv:2309.17133.

28. Johnson, J.; Douze, M.; Jégou, H. Billion-Scale Similarity Search with GPUs. IEEE Trans. Big Data 2021, 7, 535–547.

29. Douze, M.; Guzhva, A.; Deng, C.; Johnson, J.; Szilvasy, G.; Mazaré, P.-E.; Lomeli, M.; Hosseini, L.; Jégou, H. The Faiss library. arXiv 2024, arXiv:2401.08281.

30. Khan, A.; S, N.C.; Gangodkar, D.R. An Overview Recent Trends and Challenges in Multi-Modal Image Retrieval Using Deep Learning. In Proceedings of the 2024 International Conference on Communication, Computing and Energy Efficient Technologies (I3CEET), Gautam Buddha Nagar, India, 20–21 September 2024; pp. 929–934.

31. Mu, R. A Survey of Recommender Systems Based on Deep Learning. IEEE Access 2018, 6, 69009–69022.

32. He, X.; Liao, L.; Zhang, H.; Nie, L.; Hu, X.; Chua, T.-S. Neural Collaborative Filtering. arXiv 2017, arXiv:1708.05031.

33. Pang, G.; Shen, C.; Cao, L.; Van Den Hengel, A. Deep Learning for Anomaly Detection: A Review. ACM Comput. Surv. 2021, 54, 1–38.

34. Akkiraju, R.; Xu, A.; Bora, D.; Yu, T.; An, L.; Seth, V.; Shukla, A.; Gundecha, P.; Mehta, H.; Jha, A.; et al. FACTS About Building Retrieval Augmented Generation-based Chatbots. arXiv 2024, arXiv:2407.07858.

35. Topsakal, O.; Akinci, T.C. Creating Large Language Model Applications Utilizing LangChain: A Primer on Developing LLM Apps Fast. Int. Conf. Appl. Eng. Nat. Sci. 2023, 1, 1050–1056.

36. Mavroudis, V. LangChain. Preprints 2024. online.

37. LangChain AI. Build a Customer Support Bot. LangGraph Tutorials. 2025. Available online: https://langchain-ai.github.io/langgraph/tutorials/customer-support/customer-support/ (accessed on 18 August 2025).

38. Khachane, A.; Kshirsagar, A.; Takawale, P.; Charate, M. Automating Data Analysis with LangChain. Int. Res. J. Mod. Eng. Technol. Sci. 2024, 6, 11066–11070.

39. Felicetti, A.; Niccolucci, F. Artificial Intelligence and Ontologies for the Management of Heritage Digital Twins Data. Data 2025, 10, 1.

40. Al-Okby, M.F.R.; Junginger, S.; Roddelkopf, T.; Thurow, K. UWB-Based Real-Time Indoor Positioning Systems: A Comprehensive Review. Appl. Sci. 2024, 14, 11005.

41. Qian, C.; Xie, Z.; Wang, Y.; Liu, W.; Zhu, K.; Xia, H.; Dang, Y.; Du, Z.; Chen, W.; Yang, C.; et al. Scaling Large Language Model-based Multi-Agent Collaboration. arXiv 2025, arXiv:2406.07155.

42. Patriwala, A. LangChain: A Comprehensive Framework for Building LLM Applications (with Code). Medium. 2025. Available online: https://medium.com/@patriwala/langchain-a-comprehensive-framework-for-building-llm-applications-e2800dba2753 (accessed on 18 August 2025).

43. Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv 2019, arXiv:1908.10084.

44. LangChain. How-To Guides. LangChain Documentation. Available online: https://python.langchain.com/docs/how_to/ (accessed on 18 August 2025).

45. Google. Gemma: Introducing Google’s New Family of Lightweight, State-of-the-art Open Models. 2024. Available online: https://ai.google.dev/gemma (accessed on 18 August 2025).

46. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, S.; Wang, L.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

47. Yang, J.; Zhu, C. Research on UWB Indoor Positioning System Based on TOF Combined Residual Weighting. Sensors 2023, 23, 1455.

48. Ma, W.; Fang, X.; Liang, L.; Du, J. Research on indoor positioning system algorithm based on UWB technology. Meas. Sens. 2024, 33, 101121.

49. Zhao, W.; Goudar, A.; Tang, M.; Schoellig, A.P. Ultra-wideband Time Difference of Arrival Indoor Localization: From Sensor Placement to System Evaluation. arXiv 2024, arXiv:2412.12427.

50. Verde, D.; Romero, L.; Faria, P.M.; Paiva, S. Indoor Content Delivery Solution for a Museum Based on BLE Beacons. Sensors 2023, 23, 7403.

51. Spachos, P.; Plataniotis, K.N. BLE Beacons for Indoor Positioning at an Interactive IoT-Based Smart Museum. IEEE Syst. J. 2020, 14, 3483–3493.

52. Gong, M.; Li, Z.; Li, W. Research on ultra-wideband (UWB) indoor accurate positioning technology under signal interference. In Proceedings of the 2022 IEEE International Conferences on Internet of Things (iThings) and IEEE Green Computing & Communications (GreenCom) and IEEE Cyber, Physical & Social Computing (CPSCom) and IEEE Smart Data (SmartData) and IEEE Congress on Cybermatics (Cybermatics), Espoo, Finland, 22–25 August 2022; pp. 147–154.

53. Krebs, S.; Herter, T. Ultra-Wideband Positioning System Based on ESP32 and DWM3000 Modules. arXiv 2024, arXiv:2403.10194.

54. Kingsbury, B.; Saon, G.; Mangu, L.; Padmanabhan, M.; Sarikaya, R. Robust speech recognition in noisy environments: The 2001 IBM SPINE evaluation system. In Proceedings of the 2002 IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP), Orlando, FL, USA, 13–17 May 2002; Volume 1, pp. I-53–I-56.

55. Google Cloud. Speech-to-Text. Available online: https://cloud.google.com/speech-to-text/ (accessed on 18 August 2025).

56. Naver Cloud Platform. Clova Speech Synthesis. Available online: https://guide.ncloud-docs.com/docs/clovaspeech-overview (accessed on 18 August 2025).

57. OpenAI. Text to Speech. Available online: https://platform.openai.com/docs/guides/text-to-speech (accessed on 18 August 2025).

58. Raitio, T.; Latorre, J.; Davis, A.; Morrill, T.; Golipour, L. Improving the quality of neural TTS using long-form content and multi-speaker multi-style modeling. arXiv 2023, arXiv:2212.10075.

59. Wood, S.G.; Moxley, J.H.; Tighe, E.L.; Wagner, R.K. Does Use of Text-to-Speech and Related Read-Aloud Tools Improve Reading Comprehension for Students with Reading Disabilities? A Meta-Analysis. J. Learn. Disabil. 2018, 51, 73–84.

60. Leaman, R.; Khare, R.; Lu, Z. Challenges in clinical natural language processing for automated disorder normalization. J. Biomed. Inform. 2015, 57, 28–37.

61. National Museum of Korea. Digital Collections. Available online: https://www.museum.go.kr/ENG/contents/E0402000000.do?searchId=search&schM=list (accessed on 18 August 2025).

62. Radeva, I.; Popchev, I.; Doukovska, L.; Dimitrova, M. Web Application for Retrieval-Augmented Generation: Implementation and Testing. Electronics 2024, 13, 1361.

63. Ozdemir, A.; Odaci, B.; Tanatar Baruh, L.; Varol, O.; Balcisoy, S. Enhancing Cultural Heritage Archive Analysis via Automated Entity Extraction and Graph-Based Representation Learning. J. Comput. Cult. Herit. 2025.

64. Zhang, J.; Cui, Y.; Wang, W.; Cheng, X. TrustDataFilter: Leveraging Trusted Knowledge Base Data for More Effective Filtering of Unknown Information. arXiv 2025, arXiv:2502.15714.

65. Elastic. Nori Analysis Plugin. Available online: https://www.elastic.co/guide/en/elasticsearch/plugins/current/analysis-nori.html (accessed on 18 August 2025).

66. Li, J.; Bikakis, A. Towards a Semantics-Based Recommendation System for Cultural Heritage Collections. Appl. Sci. 2023, 13, 8907.

67. Lee, J.; Cha, H.; Hwangbo, Y.; Cheon, W. Enhancing Large Language Model Reliability: Minimizing Hallucinations with Dual Retrieval-Augmented Generation Based on the Latest Diabetes Guidelines. J. Pers. Med. 2024, 14, 1131.

68. Chen, D.; Sun, N.; Lee, J.-H.; Zou, C.; Jeon, W.-S. Digital Technology in Cultural Heritage: Construction and Evaluation Methods of AI-Based Ethnic Music Dataset. Appl. Sci. 2024, 14, 10811.

69. Gîrbacia, F. An Analysis of Research Trends for Using Artificial Intelligence in Cultural Heritage. Electronics 2024, 13, 3738.

70. Podsiadło, M.; Chahar, S. Text-to-Speech for Individuals with Vision Loss: A User Study. In Proceedings of the Interspeech 2016, San Francisco, CA, USA, 8–12 September 2016; pp. 347–351.

71. Shin, D.; Park, J.; Kim, H. LoRA versus Prompt-Tuning: A Comparative Study in Domain-Specific Language Model Adaptation. Electronics 2024, 6, 56.

72. Rasool, A.; Shahzad, M.I.; Aslam, H.; Chan, V.; Arshad, M.A. Emotion-Aware Embedding Fusion in Large Language Models (Flan-T5, Llama 2, DeepSeek-R1, and ChatGPT 4) for Intelligent Response Generation. AI 2025, 6, 56.

73. Yu, D.; Bao, R.; Ning, R.; Peng, J.; Mai, G.; Zhao, L. Spatial-RAG: Spatial Retrieval Augmented Generation for Real-World Geospatial Reasoning Questions. arXiv 2025, arXiv:2502.18470.

74. Theodoropoulos, G.S.; Nørvåg, K.; Doulkeridis, C. Efficient Semantic Similarity Search over Spatio-Textual Data. In Proceedings of the 27th International Conference on Extending Database Technology (EDBT), Paestum, Italy, 25–28 March 2024; pp. 268–280.

| Specification | Details |
|---|---|
| Instance Type | p4dn.12xlarge |
| GPU | 4 × NVIDIA A100 40 GB |
| GPU Memory | 160 GB total |
| GPU Performance | Up to 0.5 PFLOPS (FP16 with sparsity) |
| vCPUs | 48 |
| RAM | 576 GB |
| Network Bandwidth | Up to 200 Gbps (EFA) |
| NVMe Storage | 4 × 1.8 TB (7.2 TB total) |

| Alpha (α) | 0.0 | 0.1 | 0.2 | 0.3 | 0.4 | 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1.0 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Top-1 Accuracy (%) | 32.54 | 33.72 | 35.30 | 36.23 | 37.74 | 38.76 | 40.83 | 41.25 | 41.08 | 40.54 | 39.87 |

| Evaluation Items | Detail Items | Likert Scale | 100-Point Scale | Average Scale |
|---|---|---|---|---|
| Digital Docent | Communication with the digital docent is easy. | 4.21 | 84.1 | 4.12/82.4 |
| | The digital docent’s explanations match the facts. | 4.47 | 89.4 | |
| | The digital docent’s content is rich. | 4.52 | 90.4 | |
| | The digital docent’s explanations are accurately expressed in the local language. | 4.25 | 84.9 | |
| | The digital docent’s feedback speed is fast. | 3.61 | 72.1 | |
| | There are typos or incorrect expressions in the digital docent’s answers. | 3.91 | 78.2 | |
| | The digital docent’s answers are satisfactory. | 4.11 | 82.2 | |
| | The answers to additional questions are satisfactory. | 3.91 | 78.1 | |
| Expectations | The actual user experience exceeded expectations. | 4.83 | 96.5 | 4.79/95.8 |
| | The functionality and service level exceeded expectations. | 4.71 | 94.2 | |
| | I expect the service to continue to improve in the future. | 4.95 | 98.9 | |
| | The app exceeded my expectations. | 4.68 | 93.5 | |
| Technology and Configuration | The app is easy to access. | 4.89 | 97.8 | 4.68/93.5 |
| | The app menu is well organized. | 4.63 | 92.5 | |
| | The app can be used stably. | 4.93 | 98.5 | |
| | Page loading does not take long. | 4.26 | 85.2 | |
| Design | The overall atmosphere and screen layout of the app are harmonious. | 4.67 | 93.4 | 4.47/89.4 |
| | The text and images (icons) are easy to read. | 4.31 | 86.2 | |
| | The app is easy to use at a glance. | 4.63 | 92.5 | |
| | The service menu layout and structure are consistent. | 4.27 | 85.3 | |
| Information | The app provides the latest information. | 3.68 | 73.6 | 4.0/80.1 |
| | The app provides a variety of information. | 3.82 | 76.3 | |
| | The information provided by the app is useful. | 4.81 | 96.2 | |
| | The information provided by the app is accurate. | 3.71 | 74.2 | |
| Voice Service | Voice recognition is accurate. | 3.71 | 74.2 | 4.10/82.1 |
| | Voice recognition speed is fast. | 3.82 | 76.4 | |
| | The content is accurately output in voice. | 3.86 | 77.1 | |
| | The speed of voice narration is appropriate. | 4.40 | 87.9 | |
| | The content of voice narration matches the facts. | 3.96 | 79.2 | |
| | There are no interruptions in the content of voice narration. | 4.88 | 97.6 | |
| Mobility | The service is easy to use while moving. | 3.70 | 73.9 | 3.88/77.7 |
| | Voice responses are not interrupted while moving. | 3.93 | 78.5 | |
| | Text is clearly displayed while moving. | 4.28 | 85.6 | |
| | The content of responses matches the artifacts. | 2.98 | 59.6 | |
| | It is easy to access the app while moving. | 3.97 | 79.4 | |
| | Page loading does not take long while moving. | 4.23 | 84.5 | |
| | The current location can be identified through the service while moving. | 3.82 | 76.4 | |
| | The app detects my location and responds appropriately. | 4.39 | 87.8 | |
| Expected Effects | The app has increased satisfaction with viewing the exhibition. | 4.26 | 85.2 | 3.94/78.8 |
| | It was easy to obtain new information through the app. | 3.62 | 72.4 | |
| Intention to Continue Using | I will continue to use the app in the future. | 4.92 | 98.4 | 4.61/92.2 |
| | I will recommend the app to my friends in the future. | 4.86 | 97.2 | |
| | I will speak positively about the app to my friends in the future. | 4.82 | 96.3 | |
| Overall Satisfaction | I am generally satisfied with the app. | 4.24 | 84.8 | 4.24/84.8 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jung, T.; Joe, I. An Intelligent Docent System with a Small Large Language Model (sLLM) Based on Retrieval-Augmented Generation (RAG). Appl. Sci. 2025, 15, 9398. https://doi.org/10.3390/app15179398

Jung T, Joe I. An Intelligent Docent System with a Small Large Language Model (sLLM) Based on Retrieval-Augmented Generation (RAG). Applied Sciences. 2025; 15(17):9398. https://doi.org/10.3390/app15179398

Chicago/Turabian Style: Jung, Taemoon, and Inwhee Joe. 2025. "An Intelligent Docent System with a Small Large Language Model (sLLM) Based on Retrieval-Augmented Generation (RAG)" Applied Sciences 15, no. 17: 9398. https://doi.org/10.3390/app15179398

APA Style: Jung, T., & Joe, I. (2025). An Intelligent Docent System with a Small Large Language Model (sLLM) Based on Retrieval-Augmented Generation (RAG). Applied Sciences, 15(17), 9398. https://doi.org/10.3390/app15179398