DeepSIGNAL-ITS—Deep Learning Signal Intelligence for Adaptive Traffic Signal Control in Intelligent Transportation Systems

Abstract

Featured Application

1. Introduction

- Perception Tasks: These tasks involve detecting, identifying, and recognizing data patterns to extract and interpret relevant information from the ITS environment. This is crucial for applications like cooperative collision avoidance, which gathers data from a vehicle’s sensing infrastructure to provide awareness of its surroundings. The volume and variety of sensor data make data fusion and big-data processing the main challenges for perception tasks.

- Prediction Tasks: These tasks aim to forecast future states based on historical and real-time data, enabling proactive applications. For instance, cooperative collision avoidance applications predict vehicle movements to identify potential accidents and mitigate impacts. Recurrent Neural Networks (RNNs), including Long Short-Term Memory (LSTM) networks, and Convolutional Neural Networks (CNNs) have been widely used for prediction tasks in recent years.

- Management Tasks: ITS leverages ML in infrastructure management to provide services that enhance road safety and efficiency. This includes efficient resource allocation for resource-intensive use cases such as on-demand multimedia video and live traffic reports. ML techniques have been applied to dynamic computing and caching resource management, with objectives like maximizing Quality of Service (QoS) and minimizing overhead and cost.

- Energy Management: This involves optimizing energy consumption, particularly for electric vehicles (EVs), by determining charge/discharge policies based on routes. ML algorithms such as regression and reinforcement learning (RL) are used for this purpose.

1.1. Motivation

1.2. Contributions

- Real-Time Vehicle Detection and Counting at the Edge: We developed a low-latency vehicle detection and tracking system based on YOLO, deployed on edge devices (NVIDIA Jetson Nano) configured as RSUs near traffic lights. This system processes video streams locally, minimizing bandwidth usage by sending only structured JSON traffic data instead of raw video feeds.

- Reinforcement Learning (PPO) Optimization Engine: The engine collects traffic data from RSUs and uses SUMO to simulate traffic scenarios for training. It employs Proximal Policy Optimization (PPO) within a scalable containerized framework to optimize traffic signal phase durations. Within the PPO architecture, we defined a multi-level observation space that combines local metrics (e.g., per-intersection vehicle waiting time, phase vehicle counts, intersection pressure) with global metrics (total and average vehicle waiting time), and we introduced a pressure-based feature to model congestion asymmetries between major and minor roads.

- Secure, Encrypted Cloud Communication Protocol: Implements certificate-based authentication and end-to-end encryption to safeguard data transfers between RSUs and the cloud. Utilizes lightweight JSON messages for efficient transmission of traffic data and status updates. Associates each RSU with a unique OpenStreetMap node for precise geographic identification.

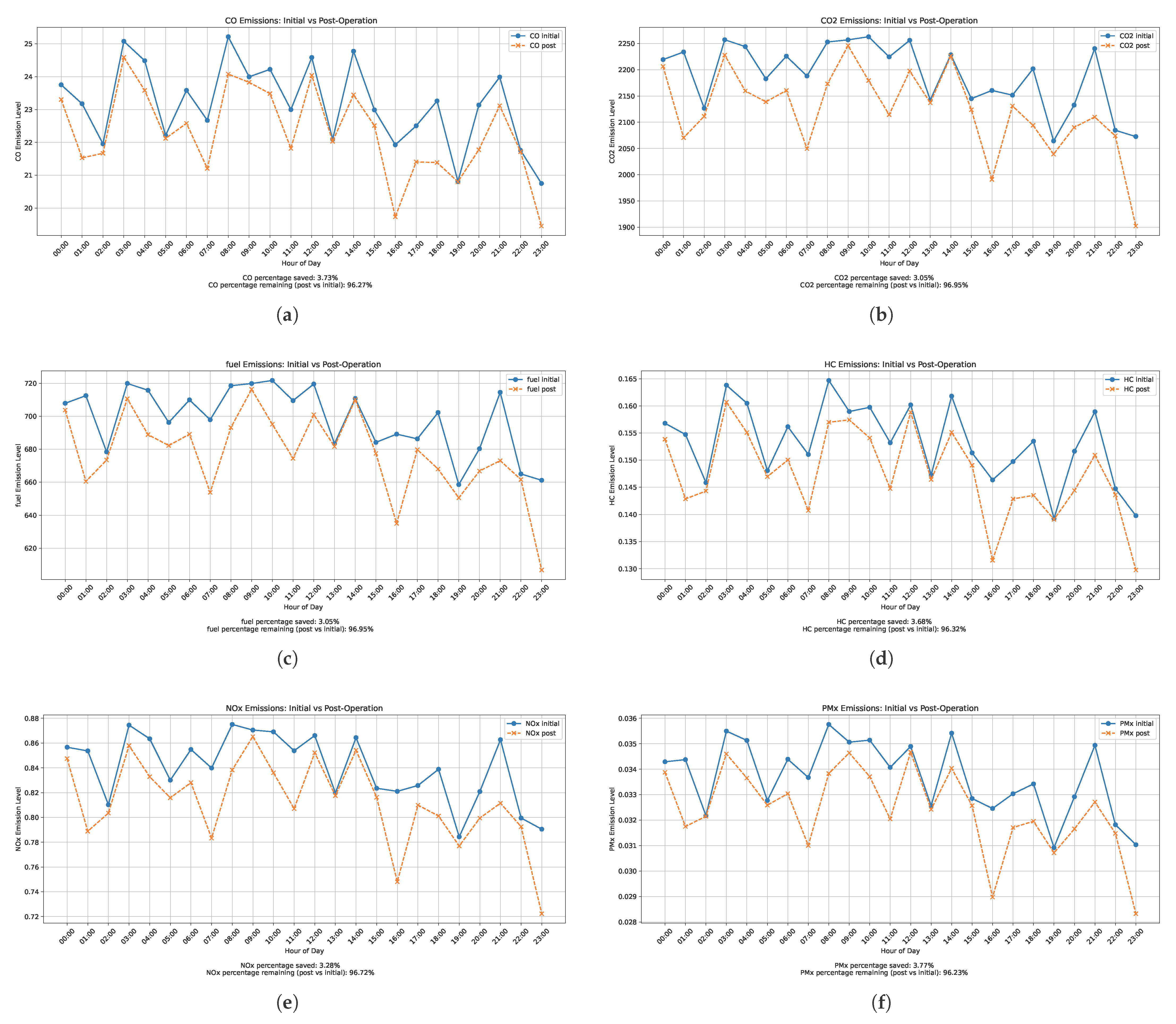

- Comprehensive Evaluation Across Real Urban Layouts: Validated the system in both large (Bucharest) and small (Tecuci, Galați, Focșani) Romanian cities using SUMO-based simulations with up to 24 randomized route files per scenario. Demonstrated consistent reductions in total and average vehicle waiting times (up to 50% in some sectors), confirming the effectiveness of the algorithm across different city sizes and traffic complexities. In addition to traffic flow improvements, an exploratory analysis of six emission-related outputs (CO, CO2, HC, NOx, PMx, and fuel consumption) was conducted using SUMO’s built-in HBEFA-based emission estimation module. The results revealed a consistent and measurable decrease in pollutant output, driven by optimized signal timings that reduced idle time and stop-and-go driving. These findings underscore the algorithm’s potential to simultaneously enhance urban mobility and promote environmental sustainability.

2. Related Work

2.1. Vision-Based Vehicle Counting Using CNNs

2.2. Policy-Based Reinforcement Learning for Traffic Lights Control

2.2.1. General Design of PPO Systems in Adaptive Traffic Control

2.2.2. Performance Analysis and Baseline Control Methods

2.2.3. Scale and Simulation Environment

3. Methodology and Implementation

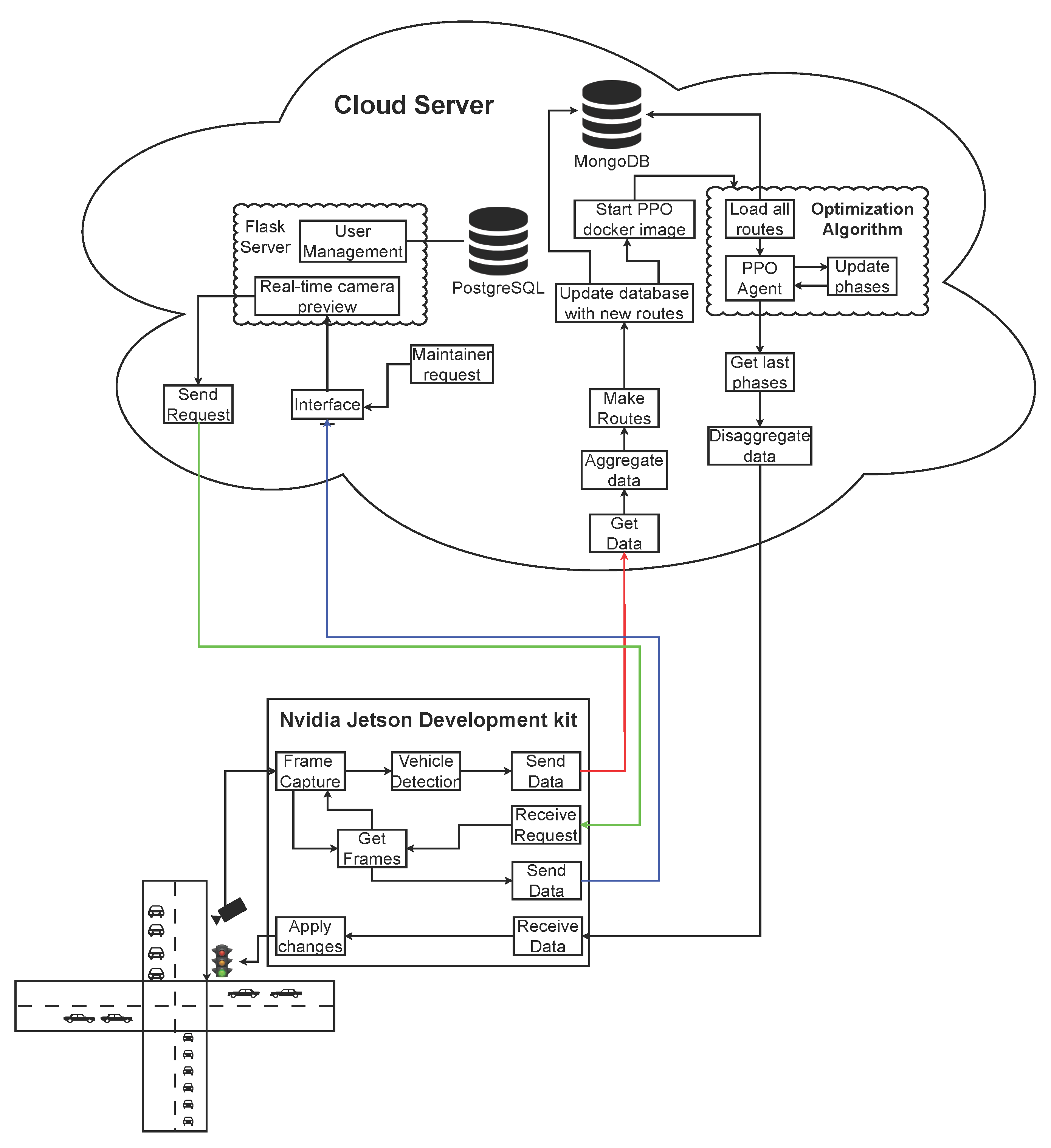

3.1. Framework’s Architecture

- Data Acquisition and Vehicle Detection—Captures real-time traffic data using roadside cameras and processes video streams to detect and count vehicles at intersections.

- Secure Cloud Communication Protocol and Data Exchange—Ensures reliable and encrypted transfer of traffic data from the edge devices to the cloud-based processing units.

- Cloud-Based Web Interface and RL Model Generation—Provides an intuitive web interface for system monitoring, configuration, and the dynamic creation of reinforcement learning (RL) models tailored to the traffic topology.

- Reinforcement Learning Decision Logic—Hosts the PPO-based control engine responsible for adjusting traffic light phases and durations based on learned traffic patterns.

- Secure Communication and System Integrity—Implements protection mechanisms to ensure the confidentiality, integrity, and availability of data throughout all system communications.

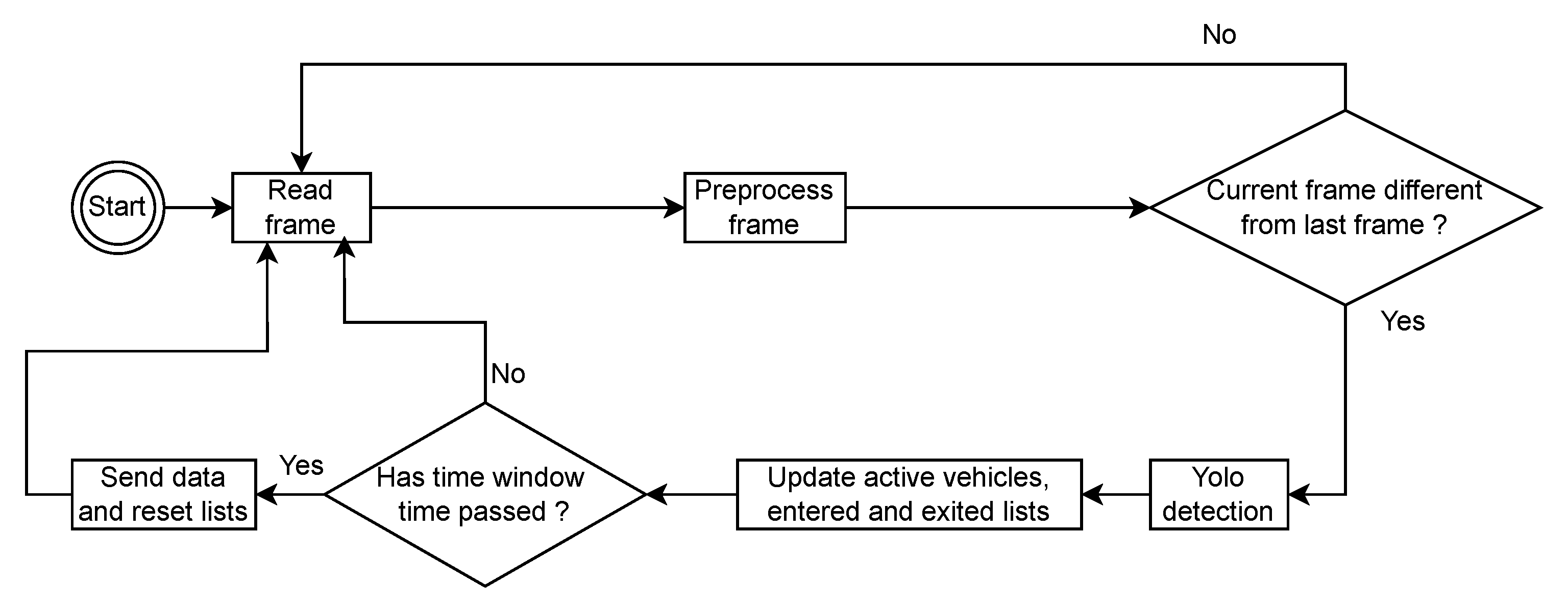

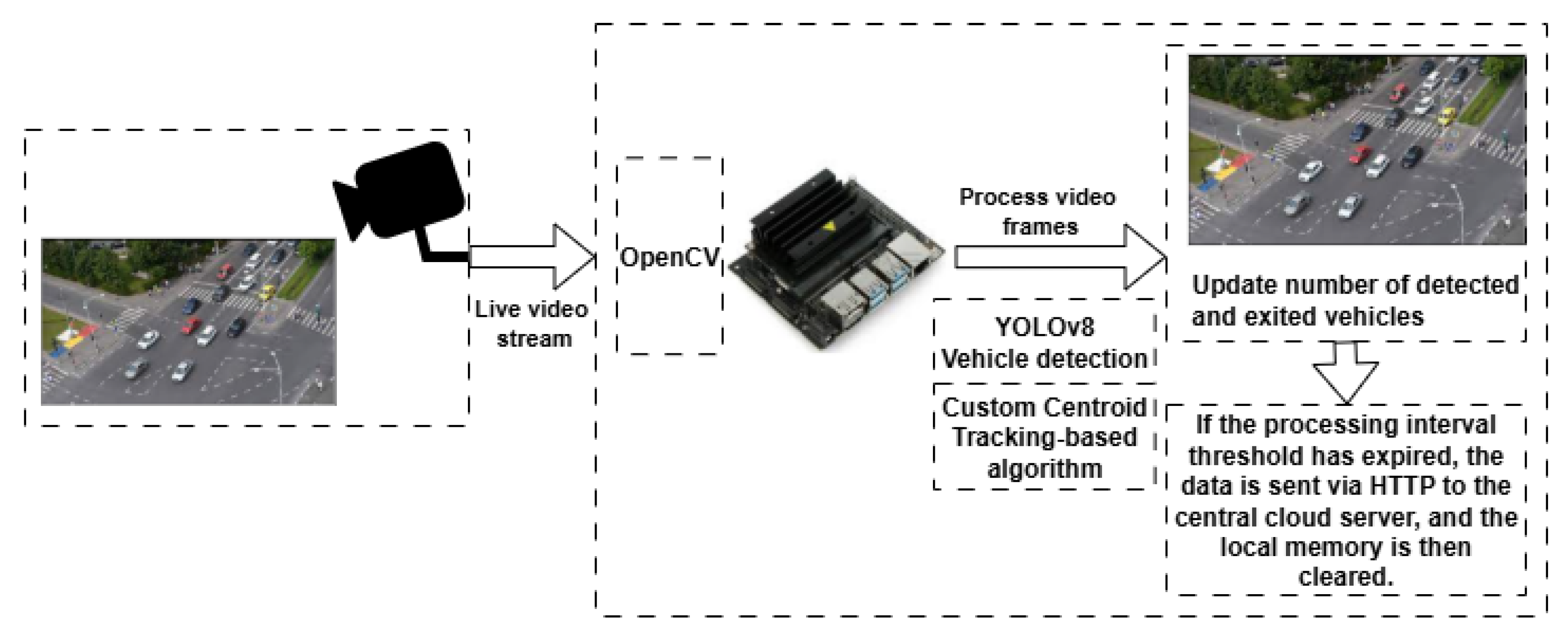

3.2. Data Acquisition and Vehicle Detection

- OpenCV, for frame acquisition and preprocessing.

- Docker, for containerized deployment and edge scalability.

- ImageHash, a perceptual hashing library used to reduce redundant computation.
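The role of perceptual hashing in reducing redundant computation can be illustrated with a minimal sketch. It is not the deployed pipeline: instead of calling the ImageHash library, it re-implements a simple average hash in pure Python on a frame that is assumed to be already downscaled to a tiny grayscale grid. The names `average_hash`, `FrameDeduplicator`, and the `max_distance` threshold are hypothetical and chosen only for illustration.

```python
from typing import List, Optional

Frame = List[List[int]]  # grayscale pixel rows, values 0-255 (assumed pre-downscaled)


def average_hash(frame: Frame) -> int:
    """Pack one bit per pixel: 1 if the pixel is brighter than the frame mean."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


class FrameDeduplicator:
    """Skips frames whose hash is close to the last frame that was processed."""

    def __init__(self, max_distance: int = 2) -> None:
        # Hypothetical threshold: hashes differing by at most this many bits
        # are treated as the same scene.
        self.max_distance = max_distance
        self._last_hash: Optional[int] = None

    def should_process(self, frame: Frame) -> bool:
        h = average_hash(frame)
        if (self._last_hash is not None
                and hamming_distance(h, self._last_hash) <= self.max_distance):
            return False  # near-duplicate: the detector call can be skipped
        self._last_hash = h
        return True
```

In such a setup, only frames for which `should_process` returns `True` would be passed on to the detector, so a static scene costs one hash comparison per frame rather than a full inference pass.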

3.2.1. Algorithm Workflow

3.2.2. Algorithm Validation and System Deployment

3.2.3. Challenges and Limitations

- Degradation in image quality influenced by lighting, weather conditions, or camera resolution.

- Traffic density, which increases the risk of occlusions and false detections.

3.2.4. Role Within the Framework

3.3. Secure Cloud Communication Protocol and Data Exchange

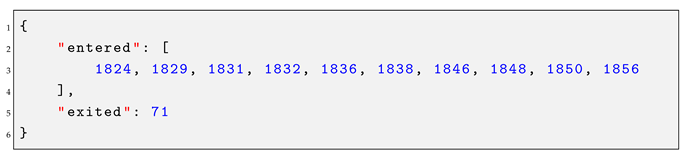

- The camera identifier.

- A list of timestamps, each giving the time at which a vehicle entered the traffic-light-controlled intersection, measured relative to the start of the counting interval.

- The number of vehicles detected leaving the intersection.
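A message carrying the three fields listed above could be serialized roughly as follows. This is a sketch under assumptions: the key names `camera_id`, `entry_offsets_s`, and `vehicles_left`, and the use of seconds as the time unit, are hypothetical and do not reproduce the exact format shown in Appendix A.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class CountingReport:
    camera_id: str                 # the camera identifier
    entry_offsets_s: List[float]   # entry times relative to the interval start (unit assumed: seconds)
    vehicles_left: int             # vehicles detected leaving the intersection


def encode_report(report: CountingReport) -> str:
    """Serialize to compact JSON (no extra whitespace) to keep messages small."""
    return json.dumps(asdict(report), separators=(",", ":"))


def decode_report(payload: str) -> CountingReport:
    """Parse a JSON payload back into a CountingReport."""
    return CountingReport(**json.loads(payload))
```

For example, `encode_report(CountingReport("cam-01", [0.8, 2.4, 7.1], 2))` yields a single-line JSON object that `decode_report` turns back into an equal `CountingReport`.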

3.4. Cloud-Based Web Interface and RL Model Generation

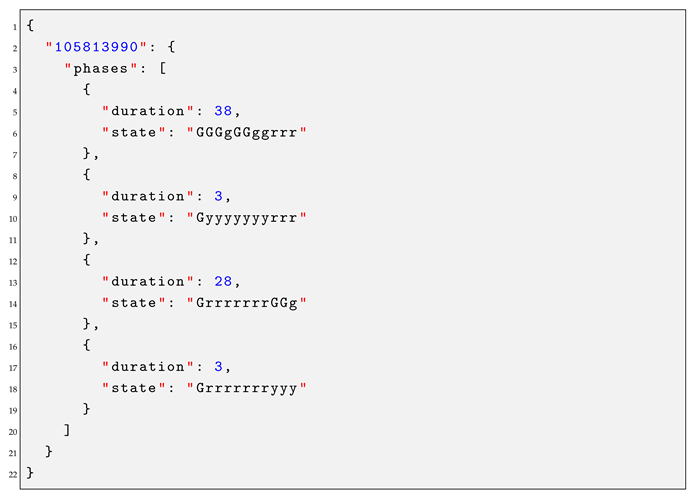

3.4.1. Reinforcement Learning Optimization Engine

3.4.2. Cloud-Based Web Interface

- A live interactive map showing the RSU network.

- Real-time traffic flow indicators and video feeds.

- Device status, alerts, and logs.

- Administrative functions for credential management and system configuration.

3.5. Reinforcement Learning Decision Logic

3.5.1. Environment Initialization

3.5.2. Training Strategy and Overfitting Concerns

- The clipping parameter was set to 0.2, which restricts the policy update to prevent large deviations during training.

- The discount factor was fixed at 0.99, placing a strong emphasis on long-term rewards, which is critical in traffic control environments with delayed feedback.

- The learning rate was set to $3 \times 10^{-4}$, and the optimizer used was Adam, chosen for its adaptive gradient descent capabilities and robustness in non-stationary environments.

- The number of training epochs per update was set to 10, allowing enough iterations to improve the policy without overfitting each batch.

- An entropy regularization coefficient of 0.01 was applied to encourage exploration during training, helping the agent avoid suboptimal local minima.
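To make the effect of the clipping parameter and the discount factor concrete, the sketch below evaluates the standard PPO clipped surrogate term for a single sample and a discounted return for a short reward sequence. The constant names are hypothetical labels for the values listed above, and the functions follow the generic PPO formulation rather than the authors' training code.

```python
import math
from typing import Sequence

# Values taken from the list above; the constant names are illustrative only.
CLIP_EPS = 0.2
GAMMA = 0.99
LEARNING_RATE = 3e-4
EPOCHS_PER_UPDATE = 10
ENTROPY_COEF = 0.01


def clipped_surrogate(ratio: float, advantage: float, eps: float = CLIP_EPS) -> float:
    """Per-sample PPO objective: min(r * A, clip(r, 1 - eps, 1 + eps) * A)."""
    clipped_ratio = max(1.0 - eps, min(1.0 + eps, ratio))
    return min(ratio * advantage, clipped_ratio * advantage)


def discounted_return(rewards: Sequence[float], gamma: float = GAMMA) -> float:
    """Sum of rewards weighted by gamma**t, accumulated from the last step backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g
```

With a probability ratio of 1.5 and a positive advantage of 2.0, the objective is capped at 1.2 × 2.0 = 2.4 rather than 3.0, which is how clipping limits the size of a single policy update.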

3.5.3. Single-Agent Architecture Justification

- The agent’s action is good, and the global performance is good.

- The agent’s action is bad, but the global performance is still good.

- The agent’s action is good, but the global performance is poor.

- The agent’s action is bad, and the global performance is poor.

3.5.4. Observation Space and Reward Function

- The average waiting time at each traffic light.

- The average vehicle count during each signal phase.

- Intersection pressure, defined as the difference between the number of vehicles on the major and the minor incoming roads: $P_i = \sum_{j \in M_i} n_j - \sum_{k \in S_i} n_k$, where

- $M_i$ is the set of major incoming roads at intersection $i$,

- $S_i$ is the set of secondary (minor) incoming roads at intersection $i$,

- $n_j$ and $n_k$ represent the number of vehicles on roads $j$ and $k$, respectively.

- The total accumulated vehicle waiting time across the simulation.

- The average waiting time per vehicle for the entire simulation.

- The initial average wait time, i.e., the average vehicle wait time at the beginning of training,

- The current average wait time, i.e., the average wait time during the current simulation episode.
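The intersection pressure described in the list above (vehicles on major incoming roads minus vehicles on minor incoming roads) can be computed with a few lines of code. The sketch below is illustrative: the function name, the road identifiers, and the sign convention (major minus minor) are assumptions, and roads missing from the counts are treated as empty.

```python
from typing import Dict, Iterable


def intersection_pressure(vehicle_counts: Dict[str, int],
                          major_roads: Iterable[str],
                          minor_roads: Iterable[str]) -> int:
    """Vehicles on major incoming roads minus vehicles on minor incoming roads."""
    # Roads without a reported count contribute zero vehicles (assumption).
    major_total = sum(vehicle_counts.get(road, 0) for road in major_roads)
    minor_total = sum(vehicle_counts.get(road, 0) for road in minor_roads)
    return major_total - minor_total
```

A large positive value indicates that congestion is concentrated on the major approaches, while a value near zero indicates balanced demand; this asymmetry is the signal the pressure feature is meant to expose to the agent.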

3.6. Secure Communication and System Integrity

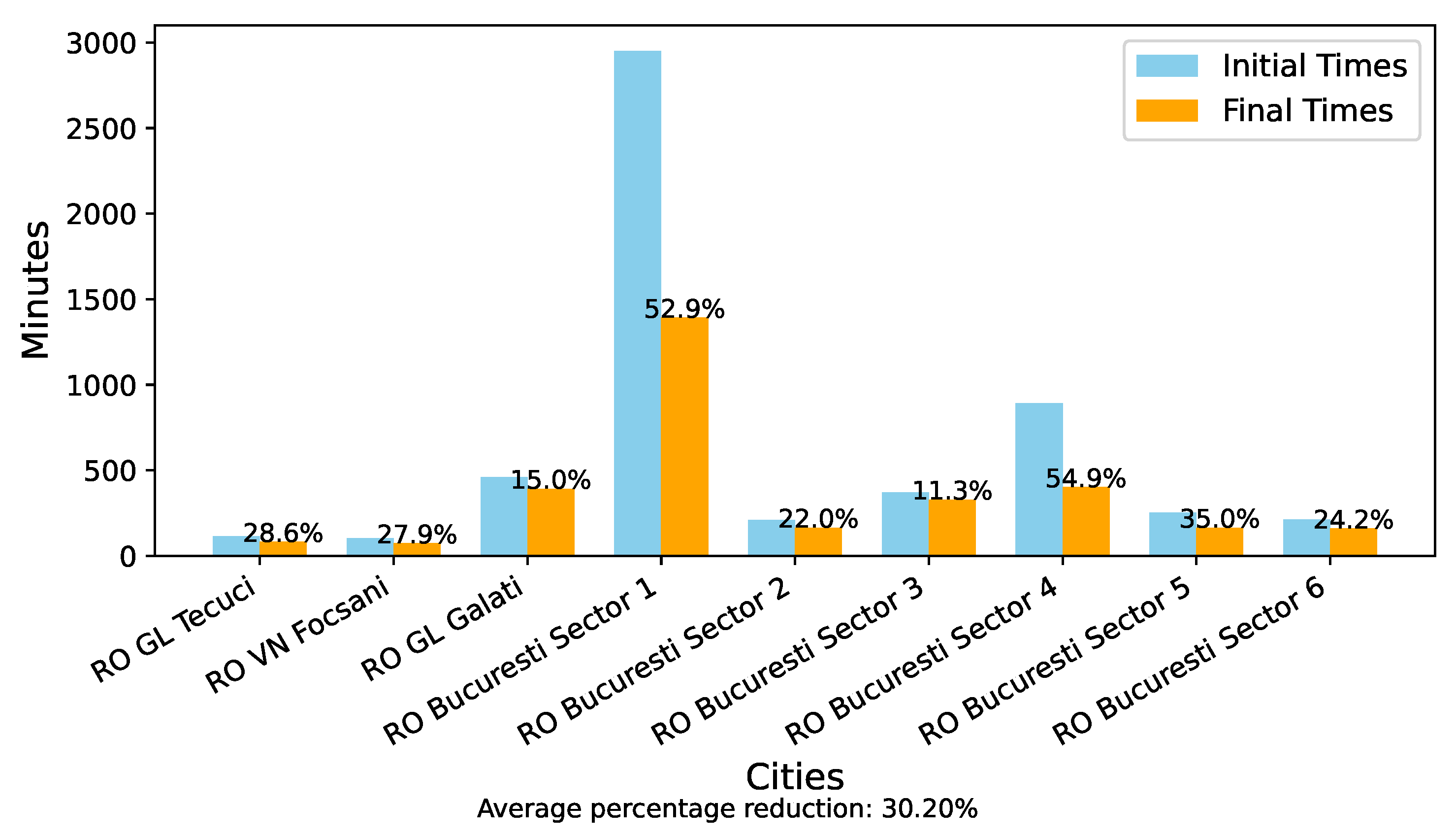

4. Results

4.1. Testing Methodology

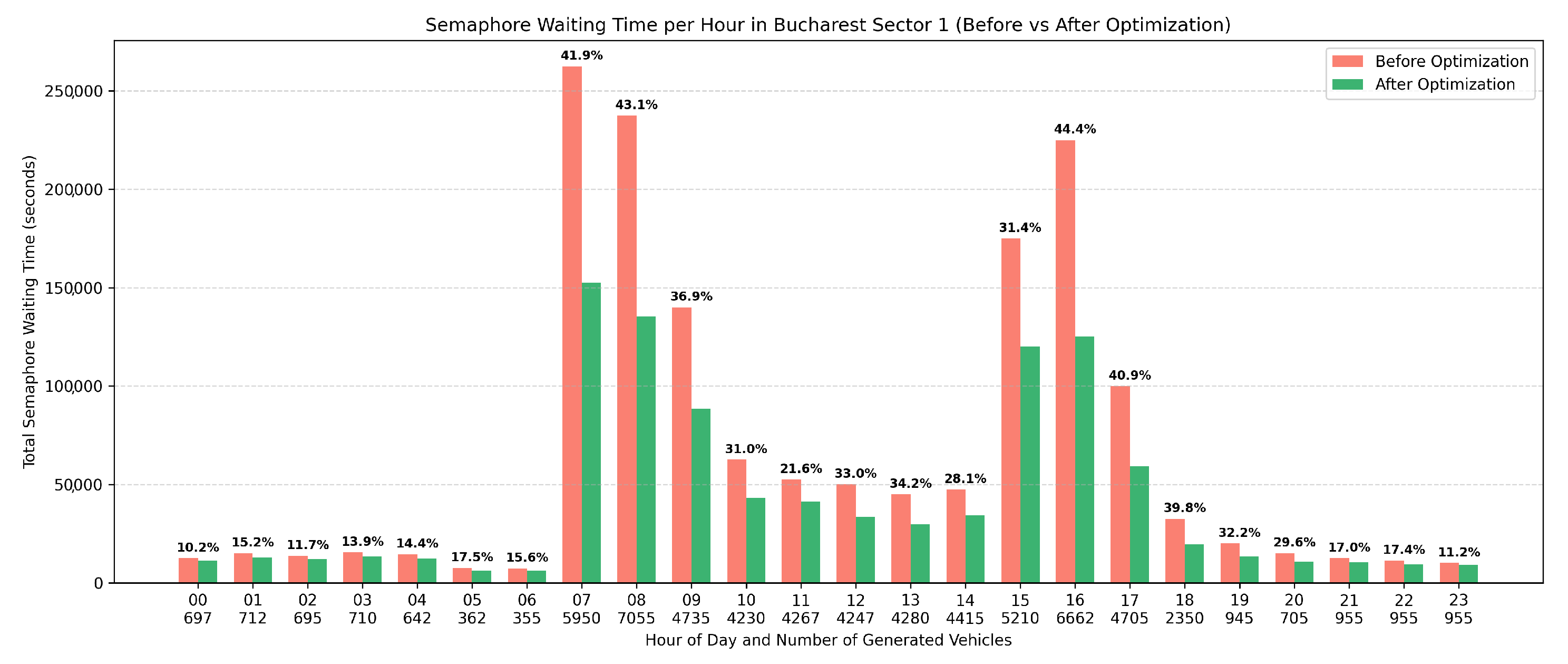

- First, we tested the algorithm extensively in Bucharest, considering all city sectors due to the complexity and large number of traffic signals present, as well as the city’s well-known congestion challenges. The city was divided into its six administrative sectors, each treated as a separate simulation zone.

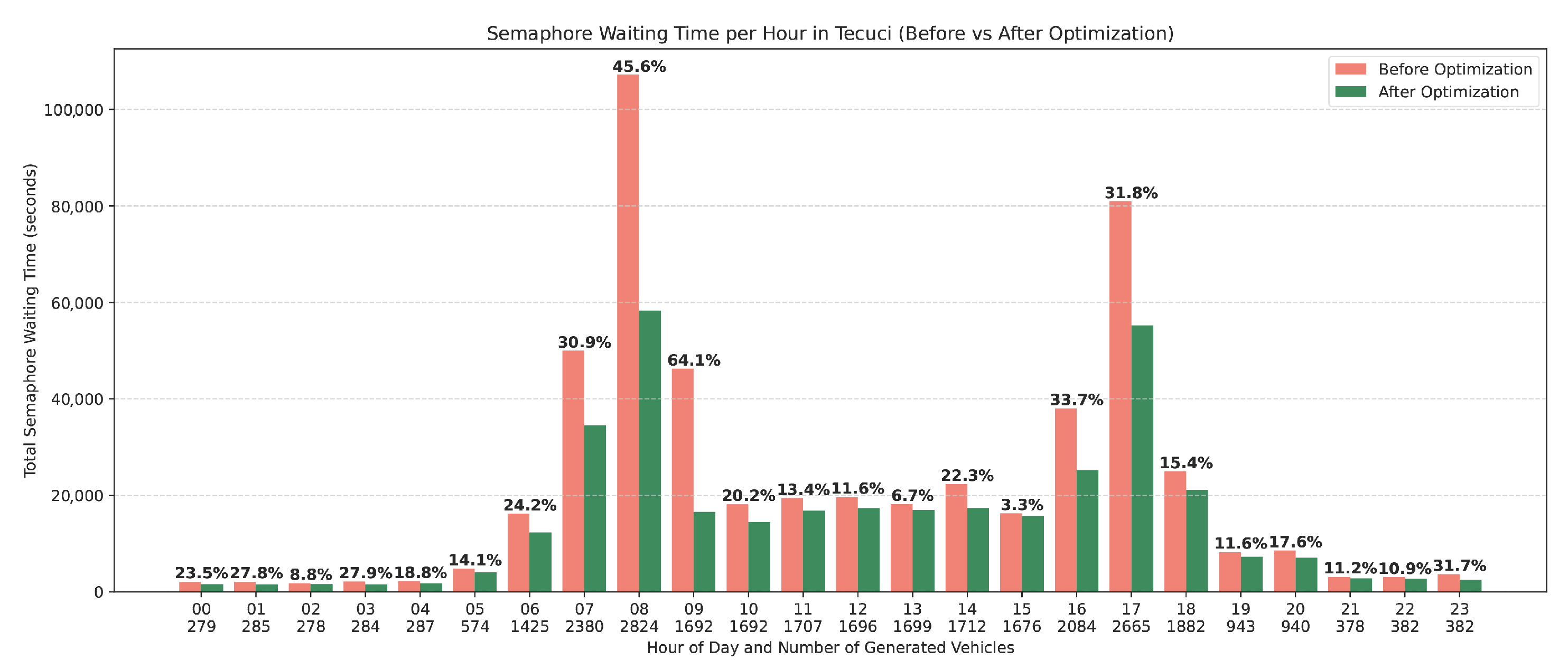

- Additionally, three smaller cities, Tecuci, Focșani, and Galați, were included to demonstrate the algorithm’s general applicability across urban scenarios. For instance, Tecuci has only three traffic lights according to OSM, illustrating the algorithm’s adaptability irrespective of urban size or complexity.

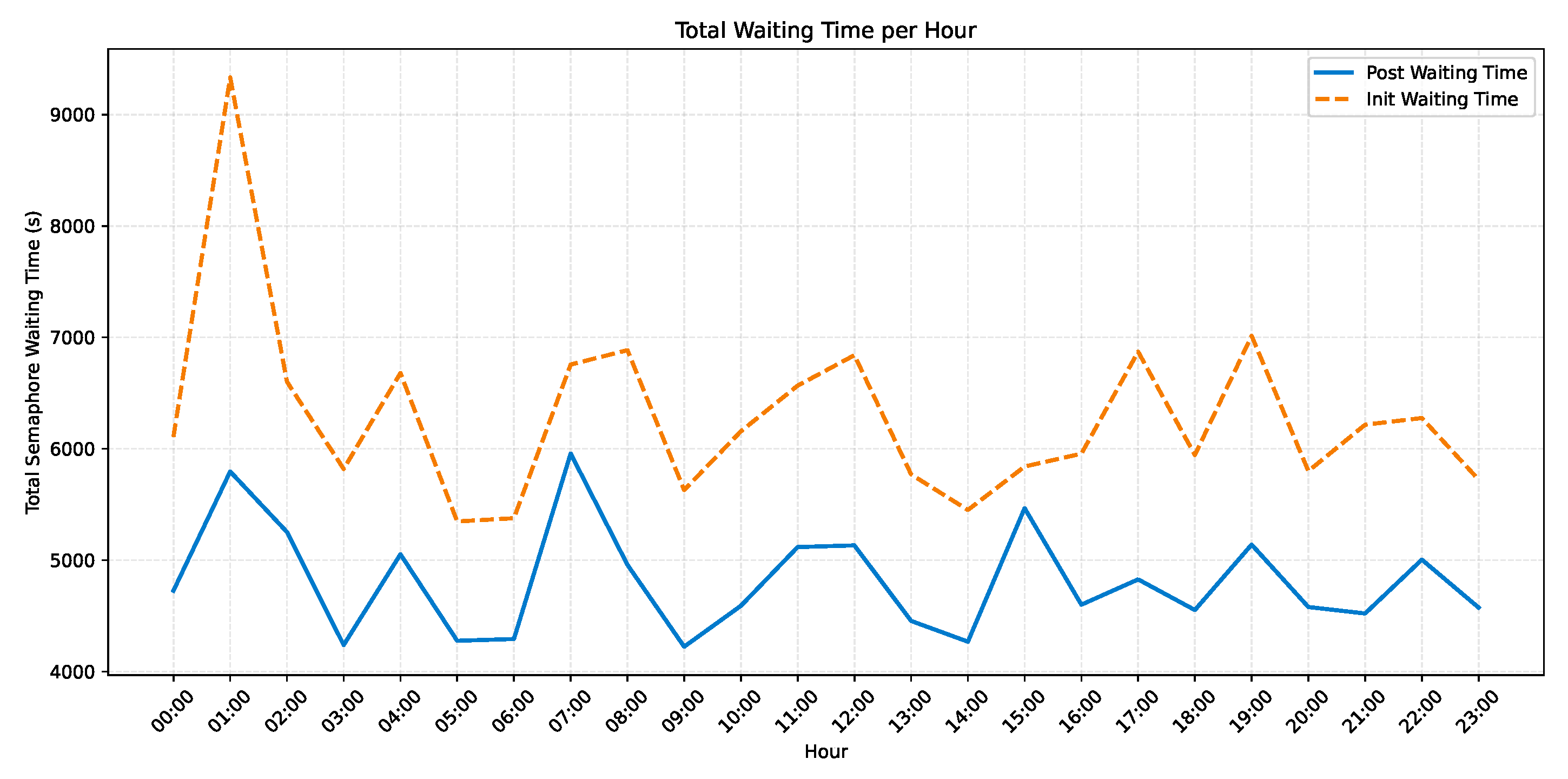

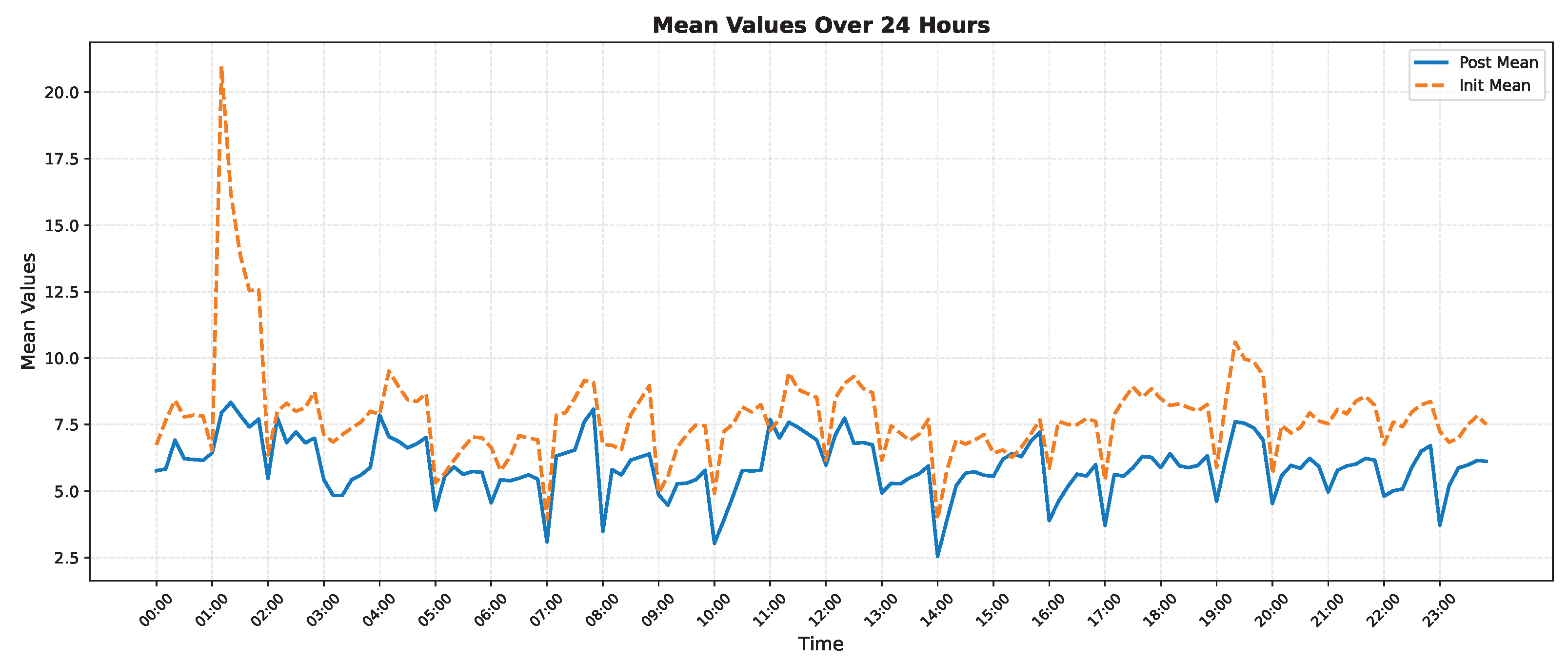

4.2. Impact on Waiting Time

4.2.1. Fixed Vehicle Load Scenario: Controlled Benchmarking with 720 Vehicles/Episode

- The Init Waiting Time curve reflects the baseline scenario, before applying any reinforcement learning optimization.

- The Post Waiting Time curve shows the waiting time after applying the PPO-based signal control adjustments.
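The gap between the two curves can be summarized as a relative reduction in waiting time. The helper below is a minimal sketch of that arithmetic; the function name is hypothetical, and any numbers passed to it in examples are illustrative rather than measured results.

```python
def waiting_time_reduction(init_wait_s: float, post_wait_s: float) -> float:
    """Relative reduction of the post-optimization waiting time versus the baseline."""
    if init_wait_s <= 0:
        raise ValueError("baseline waiting time must be positive")
    return (init_wait_s - post_wait_s) / init_wait_s
```

For instance, an illustrative baseline of 1200 s and a post-optimization value of 600 s correspond to a reduction of 0.5, i.e., 50%.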

4.2.2. Dynamic Vehicle Load Scenario: Congestion-Based Simulation

4.3. Impact on Environment

5. Conclusions

5.1. Limitations

5.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A. Timestamp JSON Format

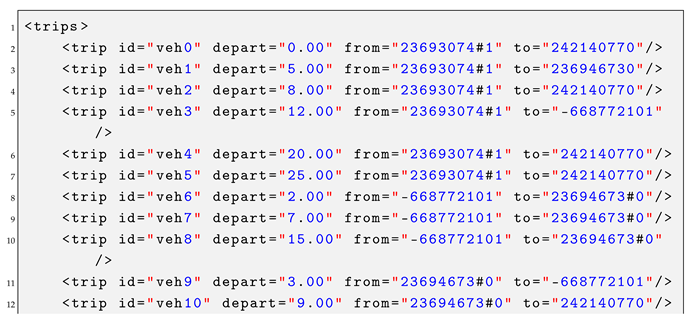

Appendix B. Example of Route File

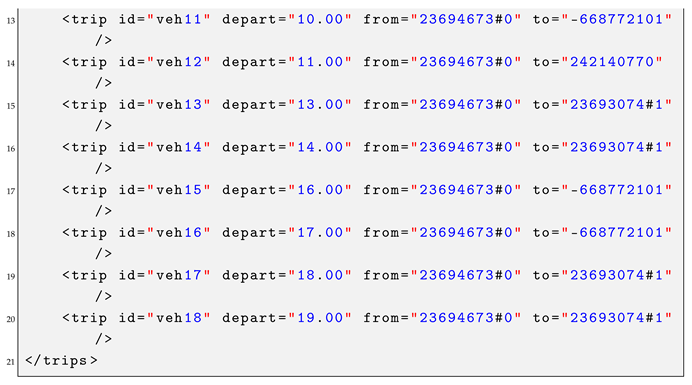

Appendix C. TLS Phase JSON

References

- Elenwo, E. Health and Environmental Effects of Vehicular Traffic Emission in Yenagoa City Bayelsa State, Nigeria. ASJ Int. J. Health Saf. Environ. 2018, 4, 291–307. Available online: http://www.academiascholarlyjournal.org/ijhse/index_ijhse.htm (accessed on 1 August 2025).

- Kaixi, Y. Road Traffic Status and Carbon Emission Estimation Methods. World J. Soc. Sci. Res. 2020, 7, 34.

- Tang, C.R.; Hsieh, J.W.; Teng, S.Y. Cooperative Multi-Objective Reinforcement Learning for Traffic Signal Control and Carbon Emission Reduction. arXiv 2023, arXiv:2306.09662.

- Xie, D.; Li, M.; Sun, Q.; He, J. Reinforcement Learning Based Urban Traffic Signal Control and Its Impact Assessment on Environmental Pollution. E3S Web Conf. 2024, 536, 01021.

- Elouni, M.; Abdelghaffar, H.M.; Rakha, H.A. Adaptive Traffic Signal Control: Game-Theoretic Decentralized vs. Centralized Perimeter Control. Sensors 2021, 21, 274.

- Dovzhenko, N.; Mazur, N.; Kostiuk, Y.; Rzaieva, S. Integration of IoT and Artificial Intelligence into Intelligent Transportation Systems. Cybersecur. Educ. Sci. Tech. 2024, 2, 430–444.

- But, T.; Mamotenko, D. Increasing the economic development of the EU countries through the implementation of the “Smart City” concept. Manag. Entrep. Trends Dev. 2025, 1, 27–37.

- Sneharika, P.; Anvitha, B.C.S.S.; Kumar, A.M.; T, P.; Devi, M.M.Y.; Kumar, S. A Comprehensive Analysis on Traffic Prediction Methods for Real-World Deployment: Challenges, Cause and Scope. In Proceedings of the 2024 International Conference on Communication, Computer Sciences and Engineering (IC3SE), Gautam Buddha Nagar, India, 9–11 May 2024; pp. 119–124.

- Streimikis, J.; Kortenko, L.; Panova, M.; Voronov, M. Development of a smart city information system. E3S Web Conf. 2021, 301, 05002.

- Shaheen, S.; Finson, R. Intelligent Transportation Systems; Transportation Sustainability Research Center, Institute of Transportation Studies, University of California: Berkeley, CA, USA, 2013; Available online: https://escholarship.org/uc/item/3hh2t4f9 (accessed on 26 August 2025).

- Barbaresso, J.; Cordahi, G.; Garcia, D.; Hill, C.; Jendzejec, A.; Wright, K. USDOT’s Intelligent Transportation Systems (ITS) ITS Strategic Plan, 2015–2019; Technical Report; United States Department of Transportation: Washington, DC, USA, 2014.

- Mandžuka, S.; Žura, M.; Horvat, B.; Bićanić, D.; Mitsakis, E. Directives of the European Union on Intelligent Transport Systems and their impact on the Republic of Croatia. Promet-Traffic Transp. 2013, 25, 273–283.

- Yuan, T.; Da Rocha Neto, W.; Rothenberg, C.E.; Obraczka, K.; Barakat, C.; Turletti, T. Machine learning for next-generation intelligent transportation systems: A survey. Trans. Emerg. Telecommun. Technol. 2022, 33, e4427.

- Ghosh, R.; Pragathi, R.; Ullas, S.; Borra, S. Intelligent transportation systems: A survey. In Proceedings of the 2017 International Conference on Circuits, Controls, and Communications (CCUBE), Bangalore, India, 15–16 December 2017; pp. 160–165.

- Shahraz, M.; Ahmed, M. Intelligent Transportation Systems: An Overview of Current Trends and Limitations. Int. J. Sci. Res. Eng. Manag. 2022, 6, 1–7.

- Veres, M.; Moussa, M. Deep Learning for Intelligent Transportation Systems: A Survey of Emerging Trends. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3152–3168.

- Zhu, Y.; Cai, M.; Schwarz, C.W.; Li, J.; Xiao, S. Intelligent Traffic Light via Policy-based Deep Reinforcement Learning. Int. J. Intell. Transp. Syst. Res. 2022, 20, 734–744.

- Faqir, N.; Ennaji, Y.; Chakir, L.; Boumhidi, J. Hybrid CNN-LSTM and Proximal Policy Optimization Model for Traffic Light Control in a Multi-Agent Environment. IEEE Access 2025, 13, 29577–29588.

- Lin, T.; Lin, R. Smart City Traffic Flow and Signal Optimization Using STGCN-LSTM and PPO Algorithms. IEEE Access 2025, 13, 15062–15078.

- Narmadha, S.; Vijayakumar, V. Spatio-Temporal vehicle traffic flow prediction using multivariate CNN and LSTM model. Mater. Today Proc. 2023, 81, 826–833.

- Premaratne, P.; Kadhim, I.J.; Blacklidge, R.; Lee, M. Comprehensive review on vehicle Detection, classification and counting on highways. Neurocomputing 2023, 556, 126627.

- Berwo, M.A.; Khan, A.; Fang, Y.; Fahim, H.; Javaid, S.; Mahmood, J.; Abideen, Z.U.; MS, S. Deep learning techniques for vehicle detection and classification from images/videos: A survey. Sensors 2023, 23, 4832.

- Mittal, U.; Chawla, P. Vehicle detection and traffic density estimation using ensemble of deep learning models. Multimed. Tools Appl. 2023, 82, 10397–10419.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of Yolo algorithm developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Krishna, N.M.; Reddy, R.Y.; Reddy, M.S.C.; Madhav, K.P.; Sudham, G. Object detection and tracking using YOLO. In Proceedings of the 2021 Third International Conference on Inventive Research in Computing Applications (ICIRCA), IEEE, Coimbatore, India, 2–4 September 2021; pp. 1–7.

- Sarda, A.; Dixit, S.; Bhan, A. Object detection for autonomous driving using yolo algorithm. In Proceedings of the 2021 2nd International Conference on Intelligent Engineering and Management (ICIEM), IEEE, London, UK, 28–30 April 2021; pp. 447–451.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Sharma, R.; Garg, P. Reinforcement Learning Advances in Autonomous Driving: A Detailed Examination of DQN and PPO. In Proceedings of the 2024 Global Conference on Communications and Information Technologies (GCCIT), Bangalore, India, 25–26 October 2024; pp. 1–5.

- Jiang, Y.; Wei, Y. Autonomous Vehicles Driving at Unsigned Intersections Based on Improved Proximal Policy Optimization Algorithm. In Proceedings of the 2024 7th International Conference on Advanced Algorithms and Control Engineering (ICAACE), Shanghai, China, 1–3 March 2024; pp. 1107–1112.

- Zhao, J.; Zhao, Y.; Li, W.; Zeng, C. End-to-End Autonomous Driving Algorithm Based on PPO and Its Implementation. In Proceedings of the 2024 IEEE 13th Data Driven Control and Learning Systems Conference (DDCLS), Kaifeng, China, 17–19 May 2024; pp. 1852–9861.

- Yao, L. An effective vehicle counting approach based on CNN. In Proceedings of the 2019 IEEE 2nd International Conference on Electronics and Communication Engineering (ICECE), IEEE, Xi’an, China, 9–11 December 2019; pp. 15–19.

- Majumder, M.; Wilmot, C. Automated vehicle counting from pre-recorded video using you only look once (YOLO) object detection model. J. Imaging 2023, 9, 131.

- Asha, C.; Narasimhadhan, A. Vehicle counting for traffic management system using YOLO and correlation filter. In Proceedings of the 2018 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), IEEE, Bangalore, India, 16–17 March 2018; pp. 1–6.

- Mittal, U.; Chawla, P.; Tiwari, R. EnsembleNet: A hybrid approach for vehicle detection and estimation of traffic density based on faster R-CNN and YOLO models. Neural Comput. Appl. 2023, 35, 4755–4774.

- Valdovinos-Chacón, G.; Ríos-Zaldivar, A.; Valle-Cruz, D.; Lara, E.R. Integrating IoT and YOLO-Based AI for Intelligent Traffic Management in Latin American Cities. In Artificial Intelligence in Government; Springer: Berlin/Heidelberg, Germany, 2025; pp. 227–253.

- Gomaa, A.; Minematsu, T.; Abdelwahab, M.M.; Abo-Zahhad, M.; Taniguchi, R.i. Faster CNN-based vehicle detection and counting strategy for fixed camera scenes. Multimed. Tools Appl. 2022, 81, 25443–25471.

- Chen, W.C.; Deng, M.J.; Liu, P.Y.; Lai, C.C.; Lin, Y.H. A framework for real-time vehicle counting and velocity estimation using deep learning. Sustain. Comput. Informatics Syst. 2023, 40, 100927.

- Zhang, H.; Zhang, Q.; Gong, Y.; Yao, F.; Xiao, P. MDCFVit-YOLO: A model for nighttime infrared small target vehicle and pedestrian detection. PLoS ONE 2025, 20, e0324700.

- Biswas, M. Optimising YOLO and ByteTrack for Robust Vehicle Counting and Classification in Adverse Weather: A Computer-Vision-Based Traffic Monitoring Study Using New Zealand Data. Master’s Thesis, Unitec Institute of Technology, Auckland, New Zealand, 2025.

- Pan, T.; Hui, M.; Huang, J.; Fu, Z.; Hai, T.; Yao, J. PMSA-YOLO: Lightweight vehicle detection with parallel multi-scale aggregation and attention mechanism. J. Electron. Imaging 2025, 34, 033043.

- Rafique, M.T.; Mustafa, A.; Sajid, H. Reinforcement Learning for Adaptive Traffic Signal Control: Turn-Based and Time-Based Approaches to Reduce Congestion. arXiv 2024, arXiv:2408.15751.

- Shashi, F.I.; Sultan, S.M.; Khatun, A.; Sultana, T.; Alam, T. A study on deep reinforcement learning based traffic signal control for mitigating traffic congestion. In Proceedings of the 2021 IEEE 3rd Eurasia Conference on Biomedical Engineering, Healthcare and Sustainability (Ecbios), IEEE, Tainan, Taiwan, 28–30 May 2021; pp. 288–291.

- Wang, X.; Abdulhai, B.; Sanner, S. A critical review of traffic signal control and a novel unified view of reinforcement learning and model predictive control approaches for adaptive traffic signal control. In Handbook on Artificial Intelligence and Transport; Edward Elgar Publishing: Tallinn, Estonia, 2023; pp. 482–532.

- Miletić, M.; Ivanjko, E.; Gregurić, M.; Kušić, K. A review of reinforcement learning applications in adaptive traffic signal control. IET Intell. Transp. Syst. 2022, 16, 1269–1285.

- Huang, Q. Model-based or model-free, a review of approaches in reinforcement learning. In Proceedings of the 2020 International Conference on Computing and Data Science (CDS), IEEE, Stanford, CA, USA, 1–2 August 2020; pp. 219–221.

- Liu, X.Y.; Zhu, M.; Borst, S.; Walid, A. Deep reinforcement learning for traffic light control in intelligent transportation systems. arXiv 2023, arXiv:2302.03669.

- VB, S.S.K.; Thiruvenkadakrishnan, S. Traffic Signal Optimization using Real-Time Pedestrian and Vehicle Counts. In Proceedings of the 2025 International Conference on Intelligent Computing and Control Systems (ICICCS), IEEE, Changchun, China, 19–21 March 2025; pp. 1297–1301.

- Swapno, S.; Nobel, S.; Meena, P.; Meena, V.; Azar, A.T.; Haider, Z.; Tounsi, M. A reinforcement learning approach for reducing traffic congestion using deep Q learning. Sci. Rep. 2024, 14, 30452.

- Medvei, M.M.; Dima, G.A.; Ţăpuş, N. Approaching traffic congestion with double deep Q-networks. In Proceedings of the 2021 20th RoEduNet Conference: Networking in Education and Research (RoEduNet), IEEE, Iasi, Romania, 4–6 November 2021; pp. 1–6.

- Chu, X.; Cao, X. Adaptive traffic signal control for road networks based on dueling double deep q-network. In Proceedings of the International Conference on Frontiers of Traffic and Transportation Engineering (FTTE 2024), Lanzhou, China, 22–24 November 2024; SPIE: Bellingham, WA, USA, 2025; Volume 13645, pp. 167–174.

- Oroojlooy, A.; Nazari, M.; Hajinezhad, D.; Silva, J. Attendlight: Universal attention-based reinforcement learning model for traffic signal control. Adv. Neural Inf. Process. Syst. 2020, 33, 4079–4090.

- Wijayarathna, K.; Lakmal, H. Adaptive Traffic Control Framework for Urban Intersections. In Proceedings of the 1st International Conference on Advanced Computing Technologies, Ghaziabad, India, 23–24 August 2024; p. 18.

- Li, Z.; Xu, C.; Zhang, G. A deep reinforcement learning approach for traffic signal control optimization. arXiv 2021, arXiv:2107.06115.

- Guo, J.; Cheng, L.; Wang, S. CoTV: Cooperative control for traffic light signals and connected autonomous vehicles using deep reinforcement learning. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10501–10512.

- Huang, L.; Qu, X. Improving traffic signal control operations using proximal policy optimization. IET Intell. Transp. Syst. 2023, 17, 592–605.

- Li, M.; Pan, X.; Liu, C.; Li, Z. Federated deep reinforcement learning-based urban traffic signal optimal control. Sci. Rep. 2025, 15, 11724.

- Wang, L.; Zhang, G.; Yang, Q.; Han, T. An adaptive traffic signal control scheme with Proximal Policy Optimization based on deep reinforcement learning for a single intersection. Eng. Appl. Artif. Intell. 2025, 149, 110440.

- Kwesiga, D.K.; Guin, A.; Hunter, M. Adaptive Traffic Signal Control based on Multi-Agent Reinforcement Learning. Case Study on a simulated real-world corridor. arXiv 2025, arXiv:2503.02189.

- Duan, L.; Zhao, H. An Adaptive Signal Control Model for Intersection Based on Deep Reinforcement Learning Considering Carbon Emissions. Electronics 2025, 14, 1664.

- Duan, L.; Nie, J.; Liang, R.; Zhao, H.; He, R. Adaptive Traffic Signal Control Based on A Modified Proximal Policy Optimization Algorithm. In Proceedings of the 2024 4th International Conference on Artificial Intelligence, Robotics, and Communication (ICAIRC), IEEE, Xiamen, China, 27–29 December 2024; pp. 748–752.

- Zhou, K.; Zhang, C.; Zhan, F.; Liu, W.; Li, Y. Using a single actor to output personalized policy for different intersections. arXiv 2025, arXiv:2503.07678.

- Fu, X.; Ren, Y.; Jiang, H.; Lv, J.; Cui, Z.; Yu, H. CLlight: Enhancing representation of multi-agent reinforcement learning with contrastive learning for cooperative traffic signal control. Expert Syst. Appl. 2025, 262, 125578.

- Michailidis, P.; Michailidis, I.; Lazaridis, C.R.; Kosmatopoulos, E. Traffic Signal Control via Reinforcement Learning: A Review on Applications and Innovations. Infrastructures 2025, 10, 114.

- Li, Y.; He, J.; Gao, Y. Intelligent traffic signal control with deep reinforcement learning at single intersection. In Proceedings of the 2021 7th International Conference on Computing and Artificial Intelligence, Guiyang, China, 23–25 July 2021; pp. 399–406.

- Zhang, Z.; Gunter, G.; Quinones-Grueiro, M.; Zhang, Y.; Barbour, W.; Biswas, G.; Work, D. Phase Re-service in Reinforcement Learning Traffic Signal Control. arXiv 2024, arXiv:2407.14775.

- Xu, T.; Barman, S.; Levin, M.W.; Chen, R.; Li, T. Integrating public transit signal priority into max-pressure signal control: Methodology and simulation study on a downtown network. Transp. Res. Part C Emerg. Technol. 2022, 138, 103614.

- Wei, H.; Xu, N.; Zhang, H.; Zheng, G.; Zang, X.; Chen, C.; Zhang, W.; Zhu, Y.; Xu, K.; Li, Z. Colight: Learning network-level cooperation for traffic signal control. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management, Beijing, China, 3–7 November 2019; pp. 1913–1922.

- Wei, H.; Chen, C.; Zheng, G.; Wu, K.; Gayah, V.; Xu, K.; Li, Z. Presslight: Learning max pressure control to coordinate traffic signals in arterial network. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, Anchorage, AK, USA, 4–8 August 2019; pp. 1290–1298.

- Chen, C.; Wei, H.; Xu, N.; Zheng, G.; Yang, M.; Xiong, Y.; Xu, K.; Li, Z. Toward a thousand lights: Decentralized deep reinforcement learning for large-scale traffic signal control. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 3414–3421.

- Zang, X.; Yao, H.; Zheng, G.; Xu, N.; Xu, K.; Li, Z. Metalight: Value-based meta-reinforcement learning for traffic signal control. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 1153–1160.

- Lin, J.P.; Sun, M.T. A YOLO-Based Traffic Counting System. In Proceedings of the 2018 Conference on Technologies and Applications of Artificial Intelligence (TAAI), Taichung, Taiwan, 30 November–2 December 2018; pp. 82–85.

- Hua, H.; Li, Y.; Wang, T.; Dong, N.; Li, W.; Cao, J. Edge Computing with Artificial Intelligence: A Machine Learning Perspective. ACM Comput. Surv. 2023, 55, 1–35.

- Han, B.G.; Lee, J.G.; Lim, K.T.; Choi, D.H. Design of a Scalable and Fast YOLO for Edge-Computing Devices. Sensors 2020, 20, 6779.

- Rahman, Z.; Ami, A.M.; Ullah, M.A. A Real-Time Wrong-Way Vehicle Detection Based on YOLO and Centroid Tracking. In Proceedings of the 2020 IEEE Region 10 Symposium (TENSYMP), Dhaka, Bangladesh, 5–7 June 2020; pp. 916–920.

- Minghini, M.; Frassinelli, F. OpenStreetMap history for intrinsic quality assessment: Is OSM up-to-date? Open Geospat. Data Softw. Stand. 2019, 4, 9.

- Garí, Y.; Pacini, E.; Robino, L.; Mateos, C.; Monge, D.A. Online RL-based cloud autoscaling for scientific workflows: Evaluation of Q-Learning and SARSA. Future Gener. Comput. Syst. 2024, 157, 573–586.

- Krajzewicz, D. Traffic Simulation with SUMO – Simulation of Urban Mobility. In Fundamentals of Traffic Simulation; Barceló, J., Ed.; Springer: New York, NY, USA, 2010; pp. 269–293.

- European Commission. Communication from the Commission to the European Parliament, the Council, the European Economic and Social Committee and the Committee of the Regions: Urban Mobility Package: Together Towards Competitive and Resource-Efficient Urban Mobility; COM/2013/0913 Final. European Commission: Brussels, Belgium, 2013. Available online: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52013DC0913 (accessed on 1 August 2025).

- CEN/TS 17444-1; Intelligent Transport Systems—ESafety—Part 1: Traffic Signal Control Interface—Functional Requirements. Technical Report. European Committee for Standardization (CEN): Brussels, Belgium, 2020.

- INFRAS. Handbook Emission Factors for Road Transport (HBEFA) Version 4.2; INFRAS: Zürich, Switzerland, 2022; Available online: https://www.hbefa.net/e/index.html (accessed on 1 August 2025).

| Ref. | Method | State | Action | Reward | Traffic Density |
|---|---|---|---|---|---|
| [54] | PPO | signal phase, traffic volume, vehicle dynamics | binary set | intersection pressure | - |
| [55] | LSTM PPO | traffic volume, avg. queue length, waiting time, vehicle speed, lane occupancy | discrete phase switch | average speed | - |
| [19] | PPO | traffic flow, vehicle wait times, lane occupancy | extend duration of lights | total vehicle wait time | STGCN-LSTM |
| [56] | Federated PPO | queue length, waiting time for 8 lanes | 4 phase selection | average vehicle wait time | - |
| [18] | PPO | signal phase, congestion level, congestion evolution, avg. vehicle speed | 4 phase selection | total vehicle wait time | CNN-LSTM |
| [17] | PPO, DQN, DDQN | vehicle position, velocity, and waiting-time matrices, traffic light phase vector | fixed vs. variable interval | total vehicle wait and passing time | - |
| [65] | PPO | vehicle queues, non-stopping vehicles, phase timing context | duration of the next phase | negative sum of normalized queue lengths | - |
| [58] | MA-PPO | lane occupancy, signal phase | 8 phase selection | arterial delay | - |
| [59] | D3QN, PPO, SAC | signal phase, queue length, avg. vehicle speed, number of fuel-powered and electric vehicles | fixed phase sequence | sum of CO2 emissions and stopping delays | - |
| [61] | HAMH-PPO | lane occupancy | 8 phase selection | sum of waiting queue lengths in all lanes | - |

| Ref. | Performance Evaluation | Comments | Simulation Environment | Nr. |
|---|---|---|---|---|
| [54] | Intersection level | The work introduces CoTV, a multi-agent system based on PPO that facilitates cooperative control between connected autonomous vehicles (CAVs) and traffic light controllers, aligning their complementary goals to enhance traffic sustainability. | SUMO | 31 |
| [55] | Intersection level | The paper presents an adaptive traffic signal control model that combines LSTM and PPO to improve traffic flow representation and spatiotemporal perception at single-point intersections. LSTM captures temporal traffic features, while the Actor-Critic PPO framework enables intelligent signal phase decisions based on learned patterns. | SUMO | 1 |
| [19] | Intersection level | The study presents a hybrid traffic signal control framework that fuses STGCN and LSTM for predicting traffic flow and uses PPO for real-time signal optimization, while incorporating external factors to improve adaptability in complex urban conditions. | custom-built | - |
| [56] | Network level | The study introduces a PPO-based Federated RL framework for traffic signal control that improves agent cooperation and communication efficiency, leading to increased traffic throughput and reduced data transmission. | SUMO | 1 |
| [18] | Network level | The paper introduces a hybrid traffic signal control method integrating CNN-LSTM for state prediction and PPO for control, using cumulative waiting time as a reward to reflect system-wide congestion impact. | SUMO | 2 |
| [17] | Intersection level | The research evaluates and compares the performance of traffic signal controllers trained with DQN, DDQN, and PPO under fixed and variable light phase intervals, concluding that PPO delivers the most effective results. | SUMO | 1 |
| [57] | Intersection level | The article introduces a PPO-based Adaptive Traffic Signal Control (PPO-TSC) approach that utilizes simplified traffic state vectors derived from vehicle waiting times and lane queue lengths, with a reward function designed accordingly. | SUMO | 1 |
| [58] | Arterial level | The study proposes an MA-PPO adaptive signal control algorithm that uses the sum of normalized vehicle delays at each intersection as the reward function, and evaluates its performance along a simulated real-world arterial corridor. | PTV-Vissim-MaxTime | 7 |
| [59] | Intersection level | The work incorporates CO2 emissions into the reward function alongside vehicle delay, allowing the adaptive signal control to optimize for both traffic flow efficiency and environmental impact. | SUMO | 1 |
| [61] | Network level | The work proposes HAMH-PPO, a shared policy framework that improves parameter efficiency by reducing the number of actor networks required, while still maintaining intersection-level adaptability. | CityFlow | 100 |

| Video | Vehicle Type | Real Number (Entered) | Real Number (Exited) | Counted Number (Entered) | Counted Number (Exited) | Accuracy (%) (Entered) | Accuracy (%) (Exited) | Precision (%) (Entered) | Precision (%) (Exited) |
|---|---|---|---|---|---|---|---|---|---|
| Video 1: Highway (4 lanes/side) | Car | 160 | 164 | 156 | 169 | 97.5 | 96.95 | 100.0 | 96.45 |
| | Truck | 1 | 3 | 1 | 3 | 100.0 | 100.0 | 100.0 | 100.0 |
| | Motorbike | 3 | 0 | 2 | 0 | 66.7 | — | 100.0 | — |
| | Bus | 0 | 0 | 0 | 0 | — | — | — | — |
| Video 2: 3 Lanes Enter / 1 Exit | Car | 130 | 120 | 127 | 118 | 97.69 | 98.33 | 100.0 | 100.0 |
| | Truck | 3 | 0 | 3 | 1 | 100.0 | — | 100.0 | 0.0 |
| | Motorbike | 8 | 4 | 7 | 2 | 87.5 | 50.0 | 100.0 | 100.0 |
| | Bus | 3 | 1 | 3 | 2 | 100.0 | 0.0 | 100.0 | 50.0 |
| Video 3: 4 Lanes Enter / 0 Exit | Car | 140 | 0 | 137 | 0 | 97.86 | — | 100.0 | — |
| | Truck | 4 | 0 | 4 | 0 | 100.0 | — | 100.0 | — |
| | Motorbike | 12 | 0 | 10 | 0 | 83.33 | — | 100.0 | — |
| | Bus | 7 | 0 | 7 | 0 | 100.0 | — | 100.0 | — |
| Video 4: 3 Lanes Enter / 4 Exit | Car | 125 | 130 | 127 | 132 | 98.4 | 98.46 | 100.0 | 98.48 |
| | Truck | 10 | 2 | 10 | 2 | 100.0 | 100.0 | 100.0 | 100.0 |
| | Motorbike | 15 | 7 | 13 | 9 | 86.67 | 71.43 | 100.0 | 77.78 |
| | Bus | 5 | 11 | 5 | 12 | 100.0 | 90.91 | 100.0 | 91.67 |
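
The accuracy values in the vehicle counting table above appear consistent with a relative counting error, i.e. accuracy = (1 − |counted − real| / real) × 100, left undefined when the real count is zero. This formula is an assumption inferred from the tabulated numbers and is not stated in this section. The following minimal Python sketch recomputes a few entries under that assumption; the function name `counting_accuracy` and the `SAMPLE_ROWS` list are illustrative and not part of the authors' implementation.

```python
from typing import Optional


def counting_accuracy(real: int, counted: int) -> Optional[float]:
    """Percent accuracy under the assumed relative-error formula.

    Returns None when no vehicles were actually present (real == 0),
    which corresponds to the dash entries in the table.
    """
    if real == 0:
        return None
    return round((1 - abs(counted - real) / real) * 100, 2)


# (video, vehicle type, direction, real count, counted) copied from the table.
SAMPLE_ROWS = [
    ("Video 1", "Car", "entered", 160, 156),
    ("Video 1", "Car", "exited", 164, 169),
    ("Video 2", "Motorbike", "exited", 4, 2),
    ("Video 3", "Car", "exited", 0, 0),
    ("Video 4", "Bus", "exited", 11, 12),
]

for video, vehicle, direction, real, counted in SAMPLE_ROWS:
    acc = counting_accuracy(real, counted)
    shown = "n/a" if acc is None else f"{acc:.2f}"
    print(f"{video} {vehicle} ({direction}): real={real}, counted={counted}, accuracy={shown}")
```

The precision columns are not recomputed here: they presumably depend on per-detection true and false positives, which cannot be recovered from the aggregate counts alone.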

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Medvei, M.M.; Bordei, A.-V.; Niță, Ș.L.; Țăpuș, N. DeepSIGNAL-ITS—Deep Learning Signal Intelligence for Adaptive Traffic Signal Control in Intelligent Transportation Systems. Appl. Sci. 2025, 15, 9396. https://doi.org/10.3390/app15179396
