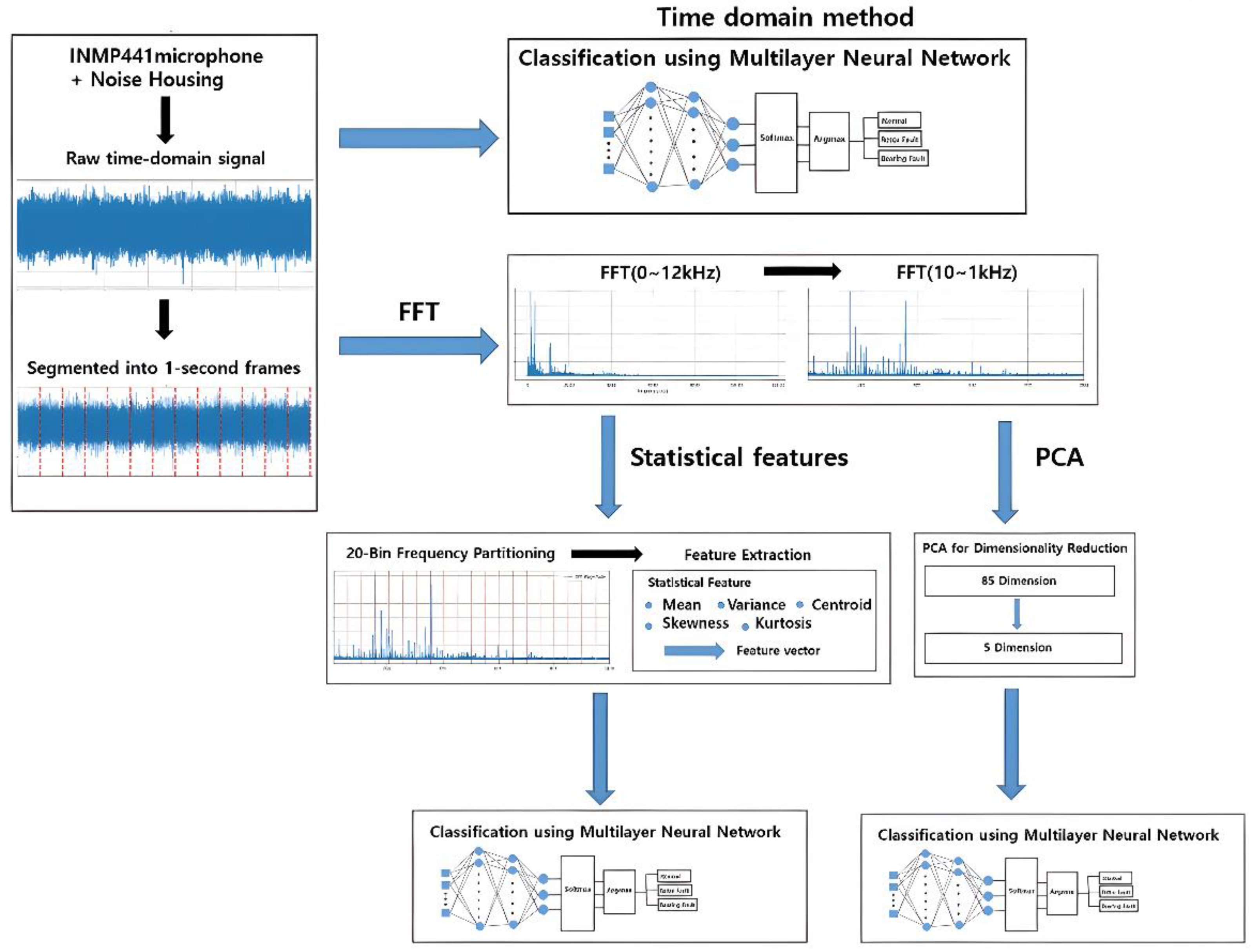

4.1. Time Domain

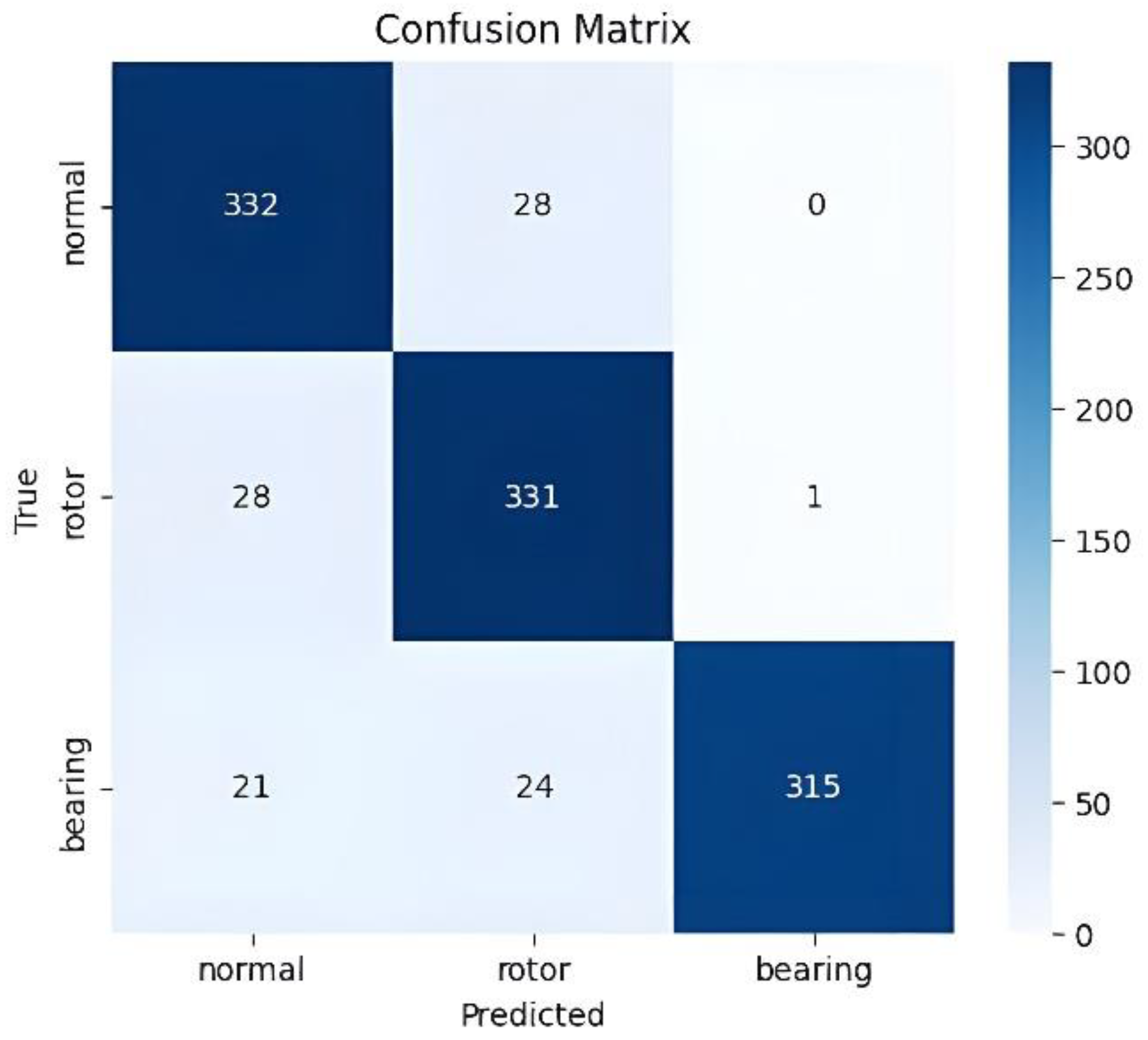

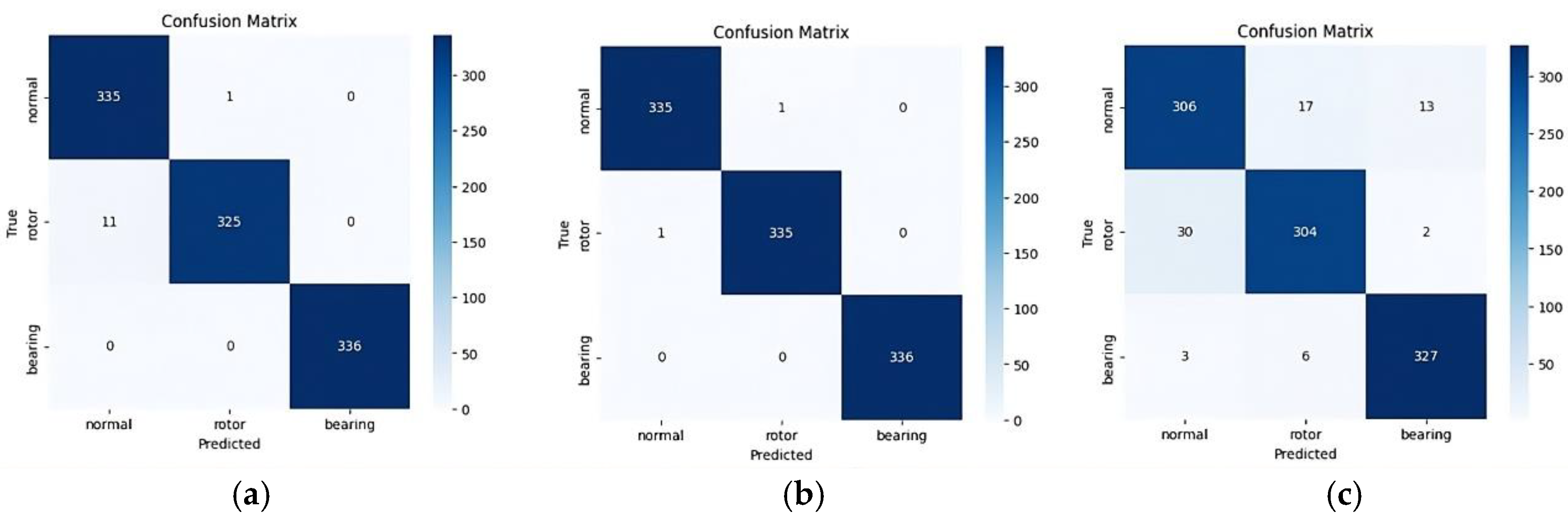

To evaluate the overall performance of the trained model, the confusion matrix for the test set is shown in Figure 9, and the detailed performance metrics are presented in Table 5.

As shown in the confusion matrix (Figure 9), the model made 45 misclassifications in the bearing fault class, and 28 symmetric misclassifications occurred between the normal and rotor fault classes. The model often failed to correctly identify actual bearing faults and had difficulty distinguishing between the normal and rotor conditions. These results highlight the limitations of using time-domain features for fault classification.
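For readers who want to relate confusion-matrix counts to accuracy, precision, recall, and F1-score, the following is a minimal sketch. The function name `per_class_metrics` and the matrix `toy_cm` are hypothetical; the toy counts are illustrative only and are not the values from Figure 9 or Table 5.

```python
def per_class_metrics(cm):
    """Return (accuracy, [(precision, recall, f1), ...]) for a square matrix.

    cm[i][j] counts samples whose true class is i and predicted class is j.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    metrics = []
    for k in range(n):
        tp = cm[k][k]
        actual = sum(cm[k])                          # row sum: true class k
        predicted = sum(cm[i][k] for i in range(n))  # column sum: predicted k
        precision = tp / predicted if predicted else 0.0
        recall = tp / actual if actual else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1))
    return correct / total, metrics


# Toy counts (assumption, NOT Figure 9): rows = true class, columns = predicted.
# Class order: normal (0), rotor fault (1), bearing fault (2).
toy_cm = [
    [90, 10, 0],
    [10, 90, 0],
    [5, 5, 90],
]
accuracy, metrics = per_class_metrics(toy_cm)
```

In this toy case the bearing class has perfect precision but reduced recall, which is the same kind of pattern the text describes: missed bearing faults lower recall even when few other samples are mistaken for bearing faults.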

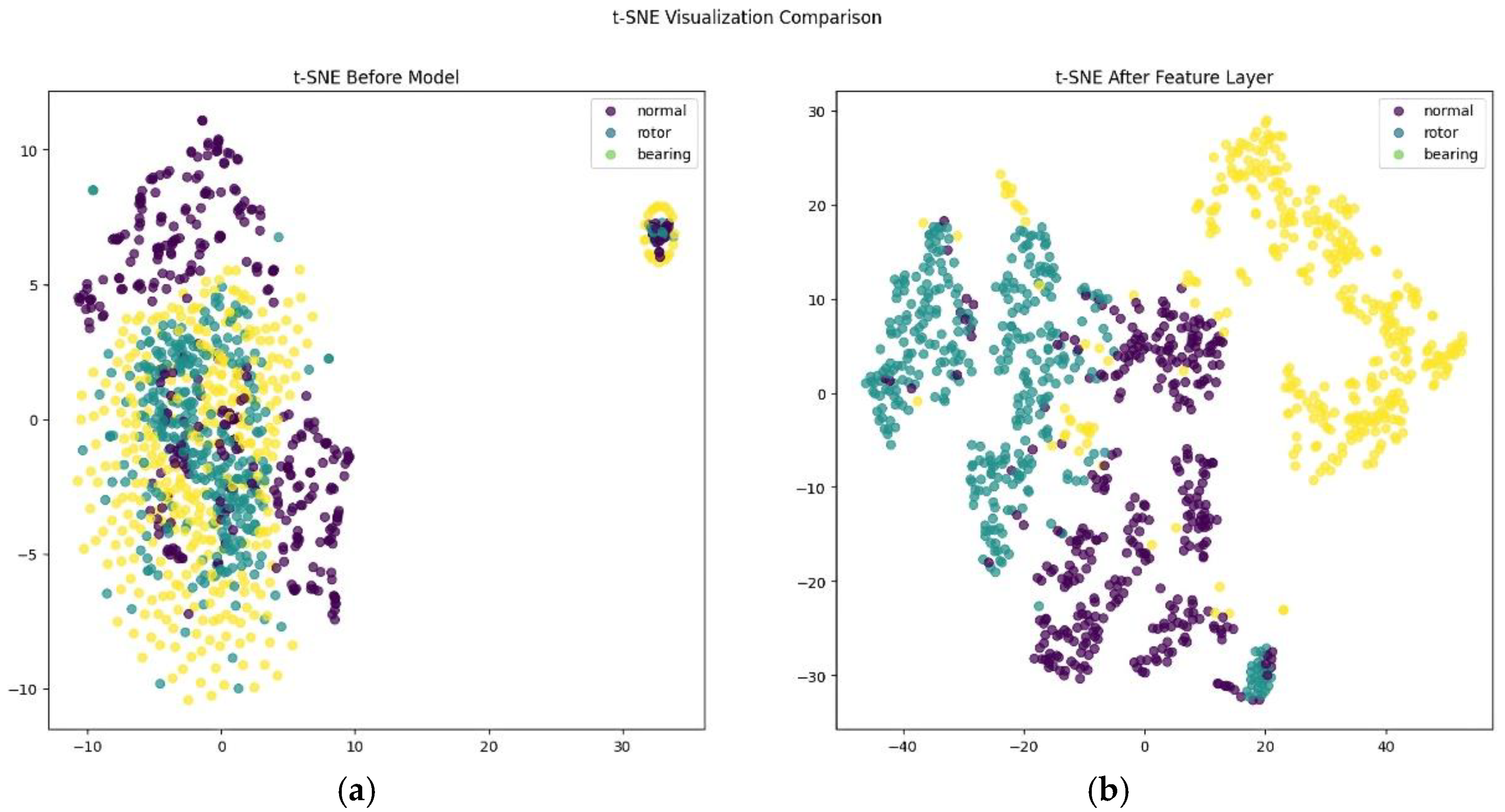

Figure 10 presents a t-SNE visualization of the features learned by the model. t-SNE is a dimensionality reduction technique that projects high-dimensional data into two dimensions, allowing for an intuitive assessment of class separability and data distribution [40]. In Figure 10, the left subplot shows the distribution of raw input data before training, while the right subplot illustrates the feature space after processing through the MNN’s feature extraction layer. Before training, the three classes exhibit substantial overlap, indicating poor class separability. After training, the model learns to form more distinct clusters for each class. However, partial overlap remains between the normal and rotor fault classes, particularly at the boundaries, corroborating the confusion matrix results. Although the bearing class forms a relatively well-defined cluster, some overlap persists, which accounts for its reduced recall. These visualizations confirm that raw time-domain input results in limited class separation.

4.2. Frequency Domain and Statistical Features

As described earlier, frequency-domain signals were divided into 20 equal-frequency bins. From each bin, five statistical features (mean, variance, median, skewness, and kurtosis) were extracted. Instead of relying on individual features, this multi-dimensional feature vector aimed to improve classification accuracy by capturing diverse signal characteristics. However, under real-world diagnostic conditions the contribution of each feature to classification performance may vary. Therefore, mutual information (MI)-based analysis was conducted to quantitatively assess the influence of each statistical feature on classification. MI is an information-theoretic measure of dependency between two random variables and is defined in Equation (6) [41]:

$$I(X;Y) = \sum_{x \in X} \sum_{y \in Y} p(x, y) \log \frac{p(x, y)}{p(x)\,p(y)} \tag{6}$$

where X is a feature vector and Y is the class label. $p(x, y)$ denotes the joint probability distribution, while $p(x)$ and $p(y)$ are the marginal distributions of X and Y, respectively. Higher MI values indicate that a feature contributes more to class separation.
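To make the MI definition concrete, the sketch below evaluates it for discrete samples by counting joint and marginal frequencies. It is an illustration only: the function name `mutual_information` is hypothetical, the result is in nats, and the estimator actually used for the continuous statistical features is not stated in this section (continuous values would first need binning or a dedicated estimator).

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in estimate of I(X;Y) in nats for two discrete sequences."""
    if len(xs) != len(ys):
        raise ValueError("xs and ys must have the same length")
    n = len(xs)
    joint = Counter(zip(xs, ys))  # counts for p(x, y)
    count_x = Counter(xs)         # counts for p(x)
    count_y = Counter(ys)         # counts for p(y)
    mi = 0.0
    for (x, y), c in joint.items():
        p_xy = c / n
        p_x = count_x[x] / n
        p_y = count_y[y] / n
        mi += p_xy * math.log(p_xy / (p_x * p_y))
    return mi


# A feature that determines the label exactly vs. one that is independent of it.
labels = [0, 0, 1, 1]
mi_dependent = mutual_information([0, 0, 1, 1], labels)
mi_independent = mutual_information([0, 1, 0, 1], labels)
```

A feature that fully determines a balanced binary label reaches the maximum of log 2 nats, while an independent feature yields zero, which matches the interpretation that higher MI means a larger contribution to class separation.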

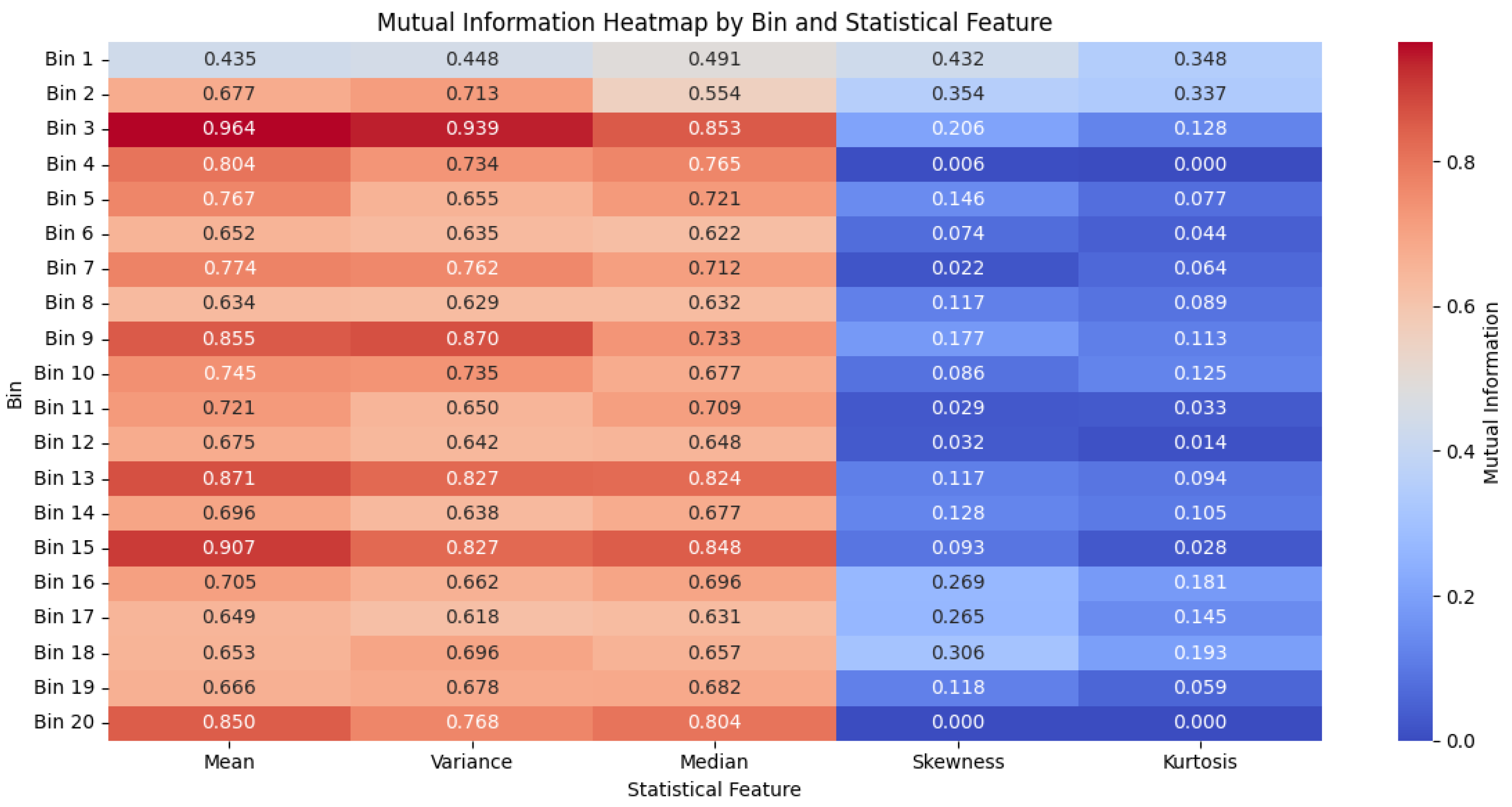

Figure 11 presents a mutual information heatmap of the five statistical features extracted from the 20 frequency bins.

According to the analysis, mean, variance, and median showed consistently high MI values, while skewness and kurtosis contributed significantly less. Notably, the mean, variance, and median in certain bins (e.g., Bins 3, 13, and 15) had MI values above 0.8. In contrast, skewness generally showed MI values below 0.3 across most bins, and kurtosis exhibited even lower values. In the high-frequency bins, the morphological features (skewness and kurtosis) had near-zero MI values, indicating limited usefulness for class discrimination.
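As a rough sketch of how the 20-bin, five-statistic feature vector analyzed here could be built, the code below splits an FFT magnitude spectrum into bins and computes the five statistics per bin. Several details are assumptions not confirmed by this section: the use of the one-sided magnitude spectrum (`np.fft.rfft`), near-equal bins via `np.array_split`, population (biased) moments, excess kurtosis, and the bin-major output order. The function name `bin_statistics` is hypothetical.

```python
import numpy as np


def bin_statistics(signal, n_bins=20):
    """Return a flat vector of 5 statistics for each of n_bins spectral bins."""
    spectrum = np.abs(np.fft.rfft(signal))     # one-sided magnitude spectrum
    features = []
    for band in np.array_split(spectrum, n_bins):
        mean = band.mean()
        var = band.var()                       # population variance
        median = np.median(band)
        std = band.std()
        centered = band - mean
        if std > 0:
            skew = np.mean(centered ** 3) / std ** 3
            kurt = np.mean(centered ** 4) / std ** 4 - 3.0  # excess kurtosis
        else:
            skew = kurt = 0.0                  # flat bin: moments undefined
        features.extend([mean, var, median, skew, kurt])
    return np.asarray(features)


# Synthetic stand-in for one 24,000-sample vibration record (assumption).
rng = np.random.default_rng(0)
features = bin_statistics(rng.standard_normal(24_000))
```

With 20 bins and five statistics the vector has 100 entries, which is the input size quoted for Case A later in the section.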

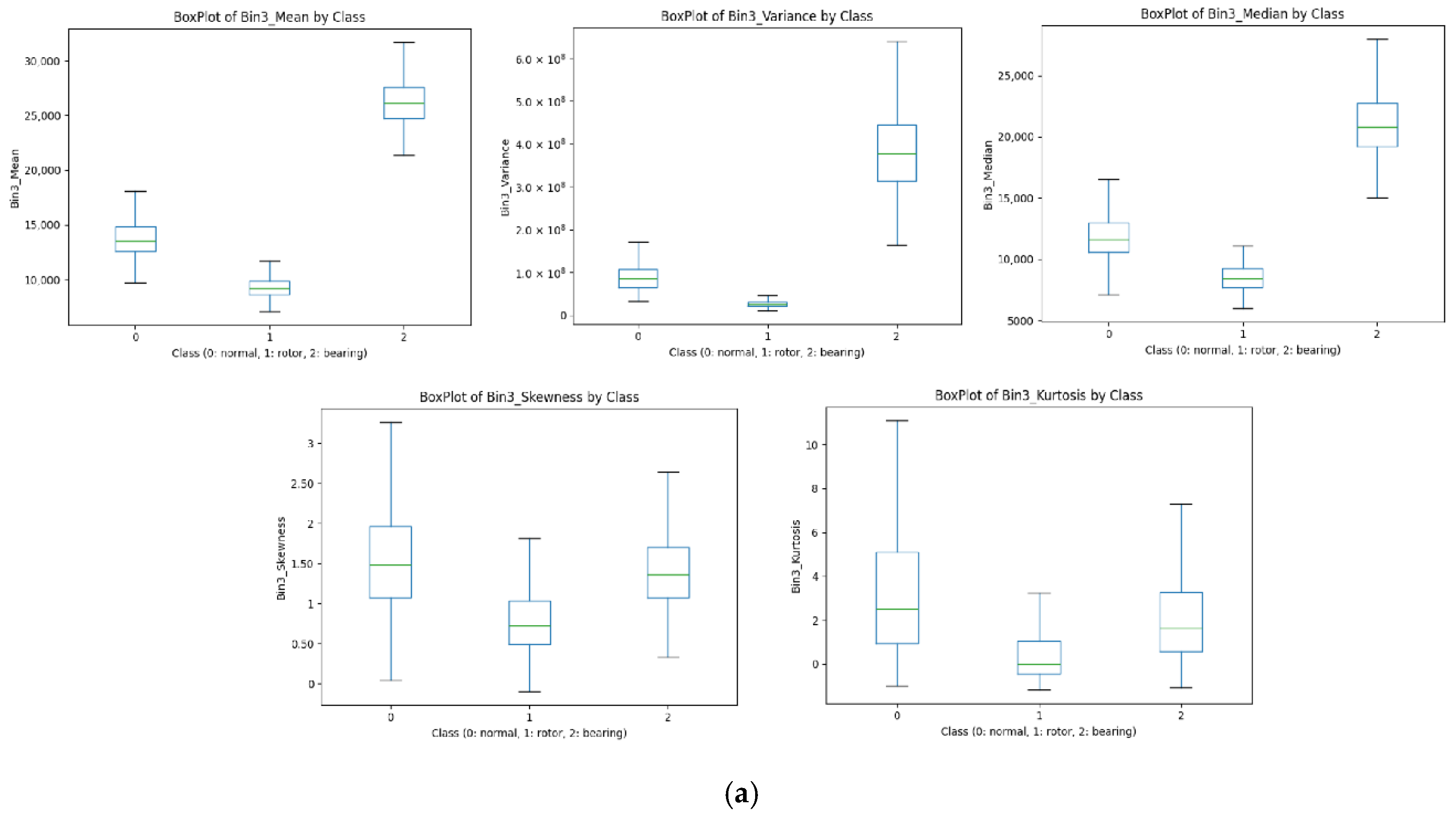

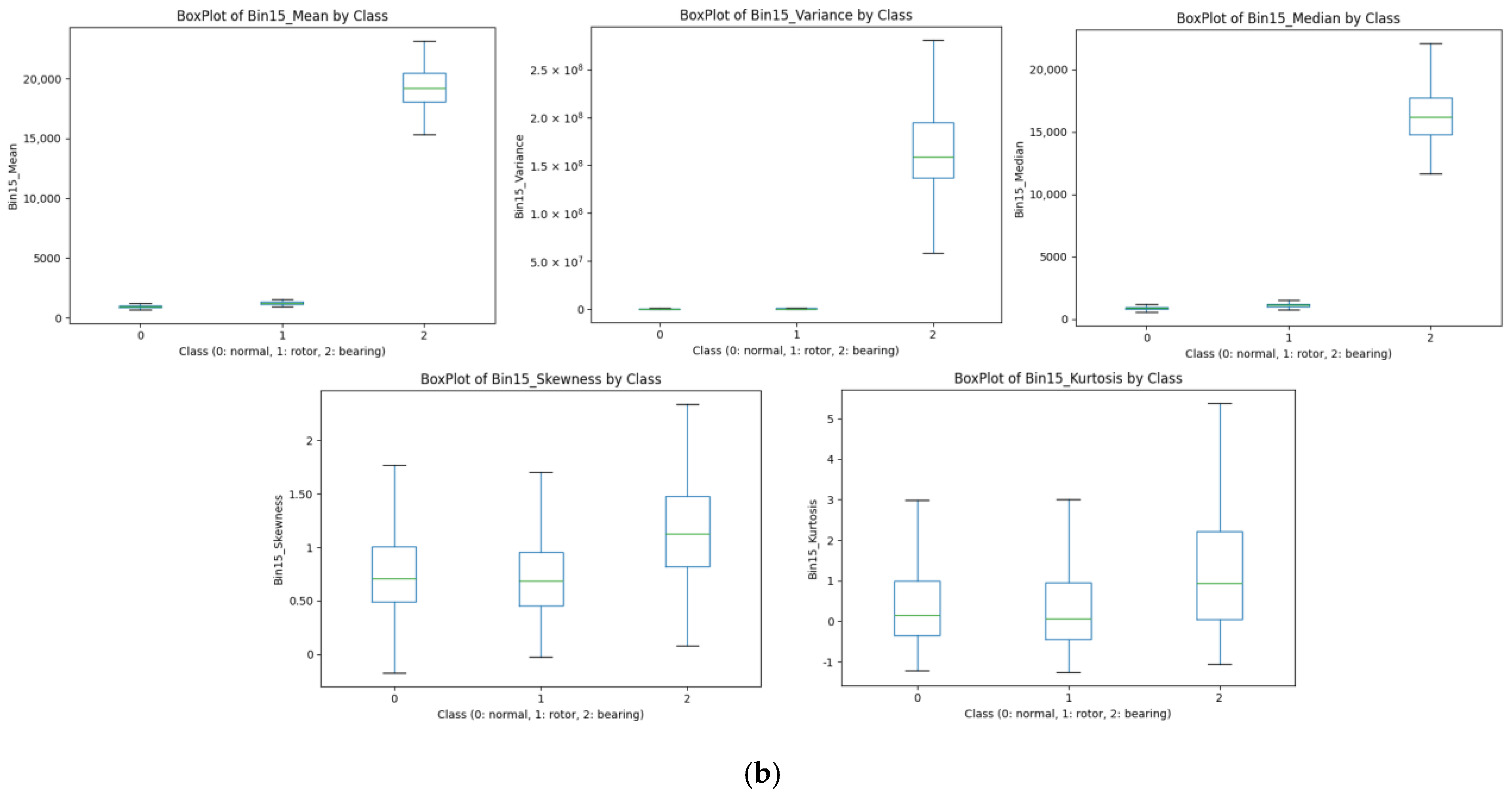

To validate the MI analysis, Figure 12 displays boxplots of class-wise feature distributions for Bins 3 and 15. Each subplot shows the five statistical features (mean, variance, median, skewness, and kurtosis) for the three classes: normal (0), rotor fault (1), and bearing fault (2). The boxplots were generated using the Pandas library in Python 3.

In Figure 12a, the bearing fault class is distinguishable from the others in terms of the mean, while the rotor fault class has the lowest variance. Figure 12b shows even clearer class separation in Bin 15’s mean values: bearing faults produce high values, while the other two classes converge near zero. These patterns reflect the inherent signal characteristics of each fault type. Rotor faults typically produce stable vibrations at specific frequencies, resulting in smaller amplitude fluctuations over time and, consequently, lower variance values. In contrast, bearing faults generate impulsive signals and broadband energy, which increase the mean and median values while also amplifying amplitude variability, leading to higher variance.

In both bins, skewness and kurtosis features show overlapping distributions among classes, reinforcing the MI findings and confirming limited discriminative power.

To further assess the contribution of each feature from a performance perspective, five comparative experiments were conducted using different combinations of statistical features based on the MI and boxplot analyses:

Case A: All five features (mean, variance, median, skewness, and kurtosis).

Case B: Only high-contribution features (mean, variance, and median).

Case C: Only low-contribution features (skewness and kurtosis).

Case D: Mixed high- and low-contribution features (mean, variance, median, and skewness).

Case E: Mixed high- and low-contribution features (mean, variance, median, and kurtosis).
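The feature combinations listed above can be expressed as column selections from the full per-bin feature vector. The sketch below is illustrative: the dictionary `CASES`, the helper `column_indices`, and the bin-major column layout (five statistics stored consecutively for each of the 20 bins) are assumptions, not the authors' implementation.

```python
N_BINS = 20

# Statistic order within each bin (assumed layout).
ALL_FEATURES = ["mean", "variance", "median", "skewness", "kurtosis"]

CASES = {
    "A": ["mean", "variance", "median", "skewness", "kurtosis"],
    "B": ["mean", "variance", "median"],
    "C": ["skewness", "kurtosis"],
    "D": ["mean", "variance", "median", "skewness"],
    "E": ["mean", "variance", "median", "kurtosis"],
}


def column_indices(case):
    """Columns of the 20 x 5 bin-major feature vector kept for one case."""
    keep = [ALL_FEATURES.index(name) for name in CASES[case]]
    width = len(ALL_FEATURES)
    return [b * width + k for b in range(N_BINS) for k in keep]


# Resulting network input size for each case.
input_dims = {case: len(column_indices(case)) for case in CASES}
```

Under this layout the input sizes are 100, 60, 40, 80, and 80 for Cases A through E, so dropping the low-MI statistics shrinks the first layer accordingly.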

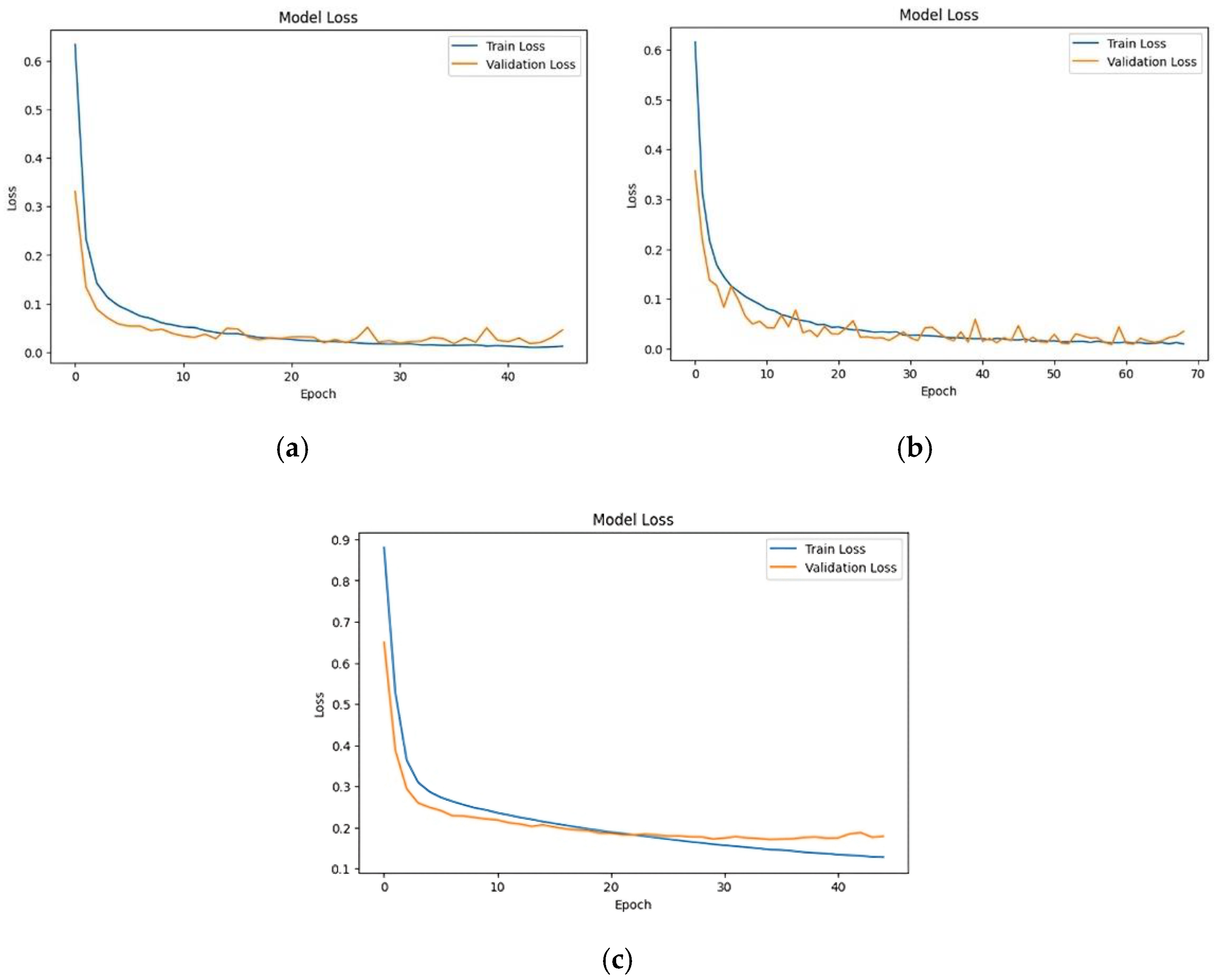

For a fair comparison, the same classification model and hyperparameters were applied to all cases. During model training, early stopping was used to prevent overfitting. The validation loss was monitored, and training was terminated if no improvement was observed for 10 consecutive epochs. The maximum number of training epochs was set to 500, ensuring that the stopping point was determined by the early stopping criterion. The model weights from the epoch with the lowest validation loss were then restored.
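The early stopping rule described here (patience of 10 epochs, at most 500 epochs, restore the best epoch) can be sketched framework-independently as follows. The function name `early_stopping_epoch` and the synthetic loss curve are hypothetical, and a strict decrease in validation loss is assumed to count as an improvement.

```python
def early_stopping_epoch(val_losses, patience=10, max_epochs=500):
    """Return (stop_epoch, best_epoch), both 1-indexed.

    Training stops once `patience` consecutive epochs pass without a new
    lowest validation loss, or when `max_epochs` is reached. The weights
    from `best_epoch` are the ones that would be restored.
    """
    best_loss = float("inf")
    best_epoch = 0
    stop_epoch = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        stop_epoch = epoch
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
        elif epoch - best_epoch >= patience:
            break
    return stop_epoch, best_epoch


# Synthetic curve (assumption): improves for 3 epochs, then plateaus higher.
losses = [1.0, 0.8, 0.6] + [0.7] * 50
stop_epoch, best_epoch = early_stopping_epoch(losses)
```

For this curve the best epoch is 3 and training stops at epoch 13, after ten epochs without improvement, well before the 500-epoch cap.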

Table 6 presents the performance metrics, while Figure 13 and Figure 14 show the respective confusion matrices and loss curves.

Cases A and B achieved similar overall accuracy and F1-scores, but Case B outperformed Case A with a test loss approximately three times lower and a reduction in classification errors from 12 to 2. This result suggests that the inclusion of skewness and kurtosis in Case A may negatively affect the model’s generalization performance. As expected from the MI analysis, Case C—relying solely on skewness and kurtosis—exhibited a substantial drop in performance, with 71 classification errors and the highest test loss. The performance differences between Cases A and D/E were negligible, indicating that the low-MI features contributed little to classification performance for this dataset. While their inclusion did not substantially affect accuracy, it did increase computational load and processing time. Therefore, excluding these features is preferable for efficient model design. These results demonstrate that morphological statistical features alone are inadequate for reliable fault classification.

4.3. Frequency-Domain PCA-Transformed (FFT + PCA)

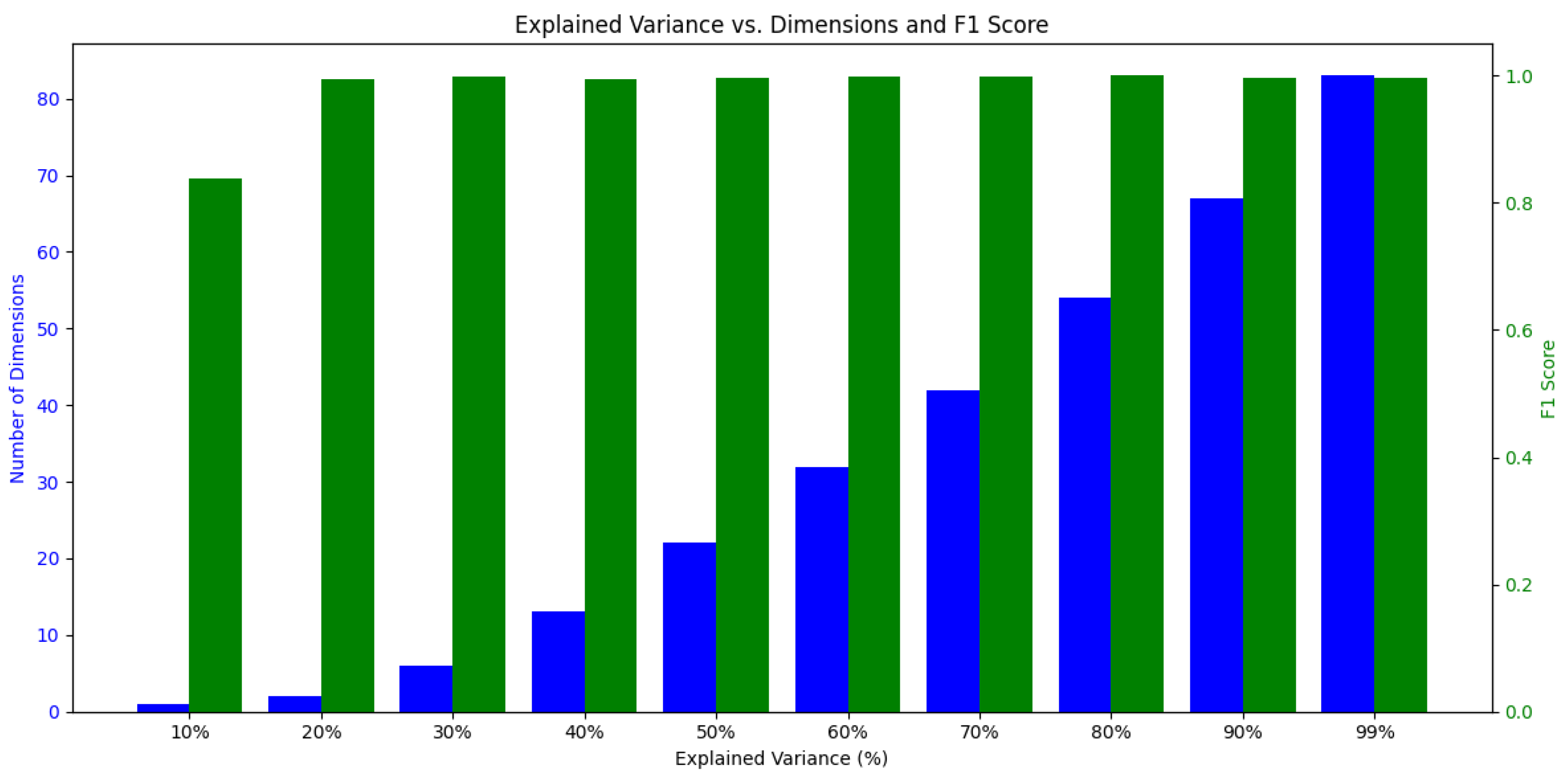

To retain the high classification performance of the FFT + statistical features approach while reducing feature dimensionality, PCA was applied to FFT-transformed data. Figure 15 illustrates how classification performance varies with the explained variance ratio (EVR), ranging from 10% to 99%, helping identify an optimal trade-off between dimensionality reduction and performance retention. High classification accuracy was achieved even at relatively low EVR levels. As EVR increased, the number of required principal components grew substantially, but performance gains remained marginal. Since fault classification accuracy is the primary objective, EVR was selected to strike a balance between optimal performance and reduced dimensionality.
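To illustrate how an EVR threshold translates into a number of principal components, the sketch below performs PCA through a singular value decomposition and keeps the smallest number of components whose cumulative explained variance reaches the threshold. The function name `pca_by_evr` and the toy matrix are hypothetical, and the PCA implementation actually used in the study is not stated in this section.

```python
import numpy as np


def pca_by_evr(X, evr_threshold):
    """Project X onto the fewest principal components reaching the threshold.

    Returns (scores, k, achieved_evr), where k is the number of components.
    """
    Xc = X - X.mean(axis=0)                       # center each feature
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = S ** 2 / np.sum(S ** 2)           # per-component variance ratio
    cumulative = np.cumsum(explained)
    # First index whose cumulative ratio reaches the threshold, as a count.
    k = int(np.searchsorted(cumulative, evr_threshold)) + 1
    k = min(k, len(S))                            # guard against round-off
    return Xc @ Vt[:k].T, k, float(cumulative[k - 1])


# Toy data (assumption): variance split 80% / 20% between two axes.
X_toy = np.array([[2.0, 0.0], [-2.0, 0.0], [0.0, 1.0], [0.0, -1.0]])
scores_50, k_50, evr_50 = pca_by_evr(X_toy, 0.50)
scores_90, k_90, evr_90 = pca_by_evr(X_toy, 0.90)
```

In the toy case one component suffices for a 50% threshold and two are needed for 90%, mirroring how the component count in the study grows as the EVR threshold is raised.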

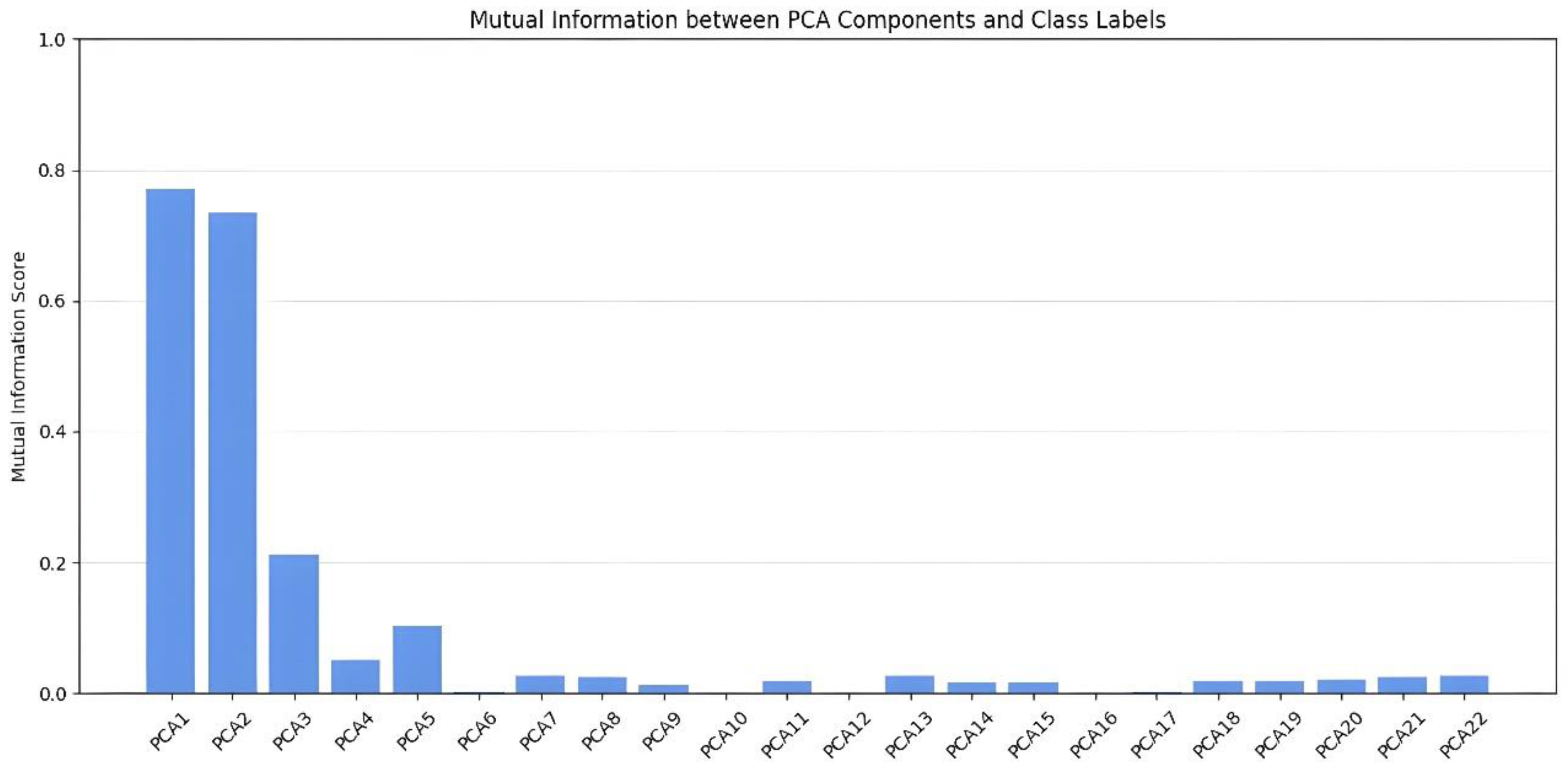

To determine the optimal EVR based on component contribution, the MI between each principal component and the classes was analyzed, as shown in Figure 16.

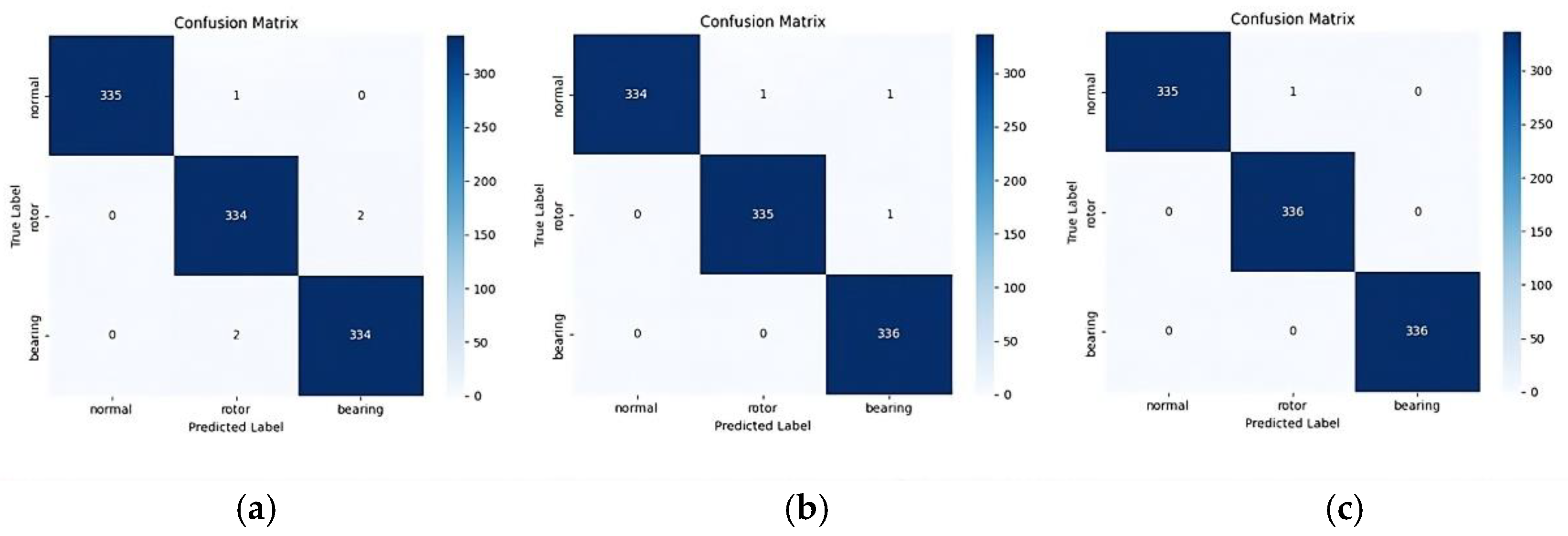

The analysis revealed that PC1 and PC2 were the most influential for class discrimination, with PC3 also showing a moderate contribution. However, a sharp drop in MI was observed for PC4, followed by a slight rebound for PC5. Beyond PC6, the MI values diminished to near-zero, indicating minimal contribution to classification. Based on this analysis, three sets of principal components, PC1-2, PC1-3, and PC1-5, were used in classification experiments. The corresponding confusion matrices are shown in Figure 17.

Although classification performance was generally strong across all configurations, differences in error counts were observed. PC1-2 resulted in five misclassifications, PC1-3 in three, and PC1-5 in only one. While PC1-5 increased feature dimensionality slightly compared to PC1-2, the inclusion of additional components effectively reduced misclassification. As a result, PC1-5 was selected as the optimal configuration. This corresponds to an EVR of 29%, representing a data-driven decision based on both MI analysis and experimental validation.

To further assess the robustness of the selected EVR, additional experiments were conducted by varying the EVR threshold to 20%, 40%, 50%, and 70%. Table 7 summarizes the corresponding PCA component counts, F1-scores, and training times. The results clearly indicate that a 29% EVR offers the optimal balance between performance and efficiency for this dataset. At the lower threshold of 20% EVR, classification performance (F1-score) showed a slight decline. In contrast, thresholds above 29% provided no improvement in F1-score but substantially increased model complexity and training time. For instance, at 40% EVR the number of principal components increased by more than 2.5 times (from 5 to 13) compared to 29%, without any performance gain and with a modest increase in training time. Higher thresholds, such as 50% and 70%, further increased both component count and training time, with 70% EVR requiring more than twice the training duration of the 29% configuration. These findings confirm that a 29% EVR provides the most effective trade-off between classification performance, model simplicity, and computational efficiency in the context of this study.

Figure 18 displays the confusion matrix for the final model using PCA-reduced FFT features. Out of 1008 samples, only one normal sample was misclassified as a rotor fault, yielding a classification accuracy of 99.90% (1007/1008). All bearing fault samples were correctly classified.

To further evaluate the separability of PCA-transformed features, t-SNE was used to visualize the five-dimensional feature space, as shown in Figure 19. The visualization reveals distinct clustering for each class: bearing faults (2) formed a compact and isolated cluster in the right region, while the normal (0) and rotor fault (1) classes were well separated in the upper and lower regions, respectively. These results demonstrate that the five-dimensional PCA features offer strong discriminative power, supporting the 99.90% classification accuracy.

4.4. Discussion of Results

The performance summary of the three methods is presented in Table 8. The raw time-domain signal method achieved an accuracy of 90.6%, but it showed clear limitations, including frequent misclassifications between normal and rotor fault conditions and a low recall rate for bearing faults. The FFT combined with statistical feature extraction improved performance, as mutual information analysis identified the mean, variance, and median as more informative features than skewness and kurtosis. Using only the high-contribution statistical features, this method reached 99.8% accuracy, with just two misclassifications. The FFT + PCA approach outperformed all other methods, achieving the highest classification accuracy of 99.9% while significantly reducing input dimensionality. By selecting a 29% explained variance ratio based on performance criteria, the method reduced feature dimensions from 60 to 5, maintaining excellent diagnostic performance with only one misclassification.

The raw time-domain input with 24,000 nodes required approximately 384,000 parameters in the first fully connected layer alone. In contrast, the FFT + statistical features (Case A), with only 100 nodes, required about 1600 parameters, a roughly 238-fold reduction. This reduction proportionally decreased the number of multiply–accumulate operations, resulting in a substantial decrease in both computational cost and memory usage. Beyond improving training efficiency, this dimensionality reduction also helped mitigate the risk of overfitting associated with high-dimensional inputs. An alternative approach for directly processing raw time-domain signals, while reducing computational load and effectively capturing local features, is the use of 1D-CNNs.

To quantitatively validate the practicality of using low-cost sensors and lightweight models, Table 9 compares key deployment-related metrics (parameter count, MACs per sample, and average inference time) across different feature extraction methods. The inference times reported here represent pure forward-pass latency (excluding preprocessing) and were measured with a batch size of 1 under identical hardware conditions. All three input methods achieved inference times of approximately 0.6 ms, confirming their suitability for real-time diagnosis. However, despite the substantial reduction in parameter count and MAC operations, inference times remained similar because fixed overheads, such as framework calls, memory access, and data loading, dominated the overall latency. This is a well-known characteristic of lightweight models, where differences in computational complexity are not directly reflected in inference time.
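A forward-pass latency measurement at batch size 1 can be sketched as below. This is a NumPy stand-in rather than the framework and hardware used for Table 9, so its timings will not reproduce the reported values. The hidden widths (16, 8), the ReLU activation, the random weights, and all function names are assumptions made for illustration.

```python
import time

import numpy as np


def make_mlp(input_dim, hidden=(16, 8), n_classes=3, seed=0):
    """Random-weight MLP as a list of (W, b) pairs (assumed architecture)."""
    rng = np.random.default_rng(seed)
    sizes = [input_dim, *hidden, n_classes]
    return [
        (rng.standard_normal((m, n)).astype(np.float32),
         np.zeros(n, dtype=np.float32))
        for m, n in zip(sizes[:-1], sizes[1:])
    ]


def forward(layers, x):
    """Dense layers with ReLU on all but the last layer."""
    for i, (W, b) in enumerate(layers):
        x = x @ W + b
        if i < len(layers) - 1:
            x = np.maximum(x, 0.0)
    return x


def mean_latency_ms(layers, input_dim, n_runs=200, warmup=20):
    """Average forward-pass time in milliseconds for a single sample."""
    x = np.ones((1, input_dim), dtype=np.float32)  # batch size 1
    for _ in range(warmup):                        # exclude one-off start-up costs
        forward(layers, x)
    start = time.perf_counter()
    for _ in range(n_runs):
        forward(layers, x)
    return (time.perf_counter() - start) * 1000.0 / n_runs


layers = make_mlp(5)               # five PCA components as input
latency_ms = mean_latency_ms(layers, 5)
```

Warm-up runs and averaging over many repetitions help separate the steady-state forward pass from one-off costs, which matters when the per-call overhead is comparable to the arithmetic itself.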

From a computational standpoint, the FFT + PCA method achieved a dramatic 99.93% reduction in both parameter count (384,179 → 259) and MACs (384,152 → 232). This highlights its advantage in reducing model size and computational demand while maintaining real-time diagnostic capability, as demonstrated by the inference time results.
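The parameter and MAC counts quoted above can be checked with a short calculation for a fully connected network. The layer widths used here (16 and 8 hidden units, 3 outputs) are not stated in this section; they are an assumption chosen because they reproduce the reported totals. The function name `mlp_cost` is hypothetical.

```python
def mlp_cost(input_dim, hidden=(16, 8), n_classes=3):
    """Return (parameters, MACs per sample) for a fully connected network."""
    sizes = [input_dim, *hidden, n_classes]
    # One multiply-accumulate per weight; one bias per non-input unit.
    macs = sum(m * n for m, n in zip(sizes[:-1], sizes[1:]))
    params = macs + sum(sizes[1:])
    return params, macs


raw_params, raw_macs = mlp_cost(24_000)  # raw time-domain input
pca_params, pca_macs = mlp_cost(5)       # five principal components

param_reduction = 1 - pca_params / raw_params
mac_reduction = 1 - pca_macs / raw_macs
```

Because almost all weights sit in the first layer, shrinking the input from 24,000 values to 5 removes nearly the entire parameter and MAC budget while the remaining layers stay unchanged.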