1. Introduction

Leukocytes are an indispensable component of the human immune system, and most of them are produced in bone marrow and lymphoid tissues. Through a series of intricate physiological processes, they resist invading pathogens, including bacteria, viruses, and fungi, thereby maintaining the health of the organism [1,2,3]. Leukocytes are traditionally divided into two major groups, granulocytes and non-granulocytes, based on their morphological characteristics [4,5]. The granulocyte population comprises a very low percentage of basophils (approximately 0–1%), a moderate percentage of eosinophils (approximately 1–5%), and a dominant percentage of neutrophils (approximately 50–70%). The non-granulocyte population is primarily composed of monocytes (approximately 2–10%) and lymphocytes (approximately 20–45%), which also play a pivotal role in the immune response [4,5,6]. A leukocyte count outside the normal range may indicate that the organism is in a pathological state [7,8]. Leukemia, a hematological malignancy with a significant adverse impact on human health, has shown a rising global incidence. A review of the literature reported that the number of newly diagnosed leukemia cases worldwide increased from 354.5 thousand in 1990 to 518.5 thousand in 2017, a rise of roughly 46% [9].

Given the significant risk that leukemia poses to human health and its rising incidence, strengthening early screening, diagnosis, and treatment is increasingly important. In this context, efficient and accurate leukocyte classification techniques are needed to help physicians identify and diagnose the disease rapidly in its initial phases. Conventional deep learning classifiers typically require a large amount of data to perform well. In clinical practice, however, collecting and annotating medical data is a considerable challenge for algorithm development: identifying and labeling medical images demands substantial time and a high degree of expertise from experienced healthcare professionals, which hinders the acquisition of large-scale, high-quality medical datasets. Consequently, the well-curated data essential for deep learning remains limited. In addition, medical data samples such as blood cell images often exhibit distributional biases in practice, so general classification models may fail to learn sufficiently generalized features, which degrades their performance. Few-shot learning, a machine learning paradigm in which models are trained and make predictions using only a small amount of labeled data, offers an effective solution to this problem.

With the development of deep learning, few-shot learning methods based on meta-learning, metric learning, and graph neural networks have received increasing attention. These methods exploit the expressive power and parameter-sharing properties of neural networks and have made significant progress on few-shot learning tasks. For example, Ravi et al. [10] treated the optimization process itself as a model for few-shot learning and proposed an end-to-end meta-learning method. They put forth an LSTM-based meta-learner that learns an optimization algorithm for training another learner model in scenarios with limited data, thereby exploring how model parameters can be updated by a learned optimizer in the few-shot setting. Jiang et al. [11] proposed conditional class-aware meta-learning (CAML), a meta-learning method based on conditional class dependencies. CAML conditionally transforms feature representations by leveraging a metric space designed to encode relationships among classes. Through this mechanism, the feature representations used by the base learner are modulated with a regularization guided by the structure of the label space. By explicitly modeling inter-class dependencies, this approach significantly improves meta-learning performance.

Building on meta-learning research, Kozerawski et al. [12] proposed a multi-stage training framework aimed at predicting the parameters of SVM classifiers. The second stage focused on learning a category-specific feature space for each classification task, and the third stage used end-to-end training to dynamically produce a set of neural network classifiers, addressing few-shot image recognition by combining metric learning with the classifiers. The method was able to update the metric space each time a new sample was added, thus improving recognition performance. In addition, Gidaris et al. [13] proposed incorporating an attention mechanism into metric learning, improving few-shot image recognition by combining metric learning with visual attention for feature extraction. Later, researchers found that combining metric learning with graph neural networks could improve the generalization ability of the model. For example, Mandal et al. [14] combined meta-learning with graph neural networks, optimizing the GNN parameters through meta-learning to achieve better generalization from a small number of samples. Their results showed that this integration improved the model's predictions. Moreover, Hamilton et al. [15] put forth GraphSAGE, which achieves inductive learning on large-scale graphs by sampling neighboring nodes. It generates node embeddings efficiently from node feature information by learning a function that samples and aggregates features from a node's local neighborhood.

In the field of leukocyte classification, Sudhakar et al. [16] proposed a few-shot learning method based on a Siamese twin network (STN) for the automated classification of healthy peripheral blood cells. The method combined the STN with contrastive learning and used EfficientNet-B3 as the base model, which was trained with fewer images to classify peripheral blood cells. To address the small-sample challenge in brain imaging modality recognition, Santi et al. [17] put forth a deep triplet network based on a CNN. The network learned an optimized distance metric by constructing triplets consisting of an anchor, a positive sample, and a negative sample and extracting deep feature representations with a CNN. This approach maximized the similarity between the anchor and the positive sample while minimizing its similarity to the negative sample, thus improving classification performance in a resource-constrained few-shot setting. Chen et al. [18] made significant progress in few-shot chest CT image analysis using a momentum contrastive learning (MCL) strategy. The approach learned effective feature representations and enabled accurate COVID-19 diagnosis by increasing the similarity among positive sample pairs while reducing the similarity between negative sample pairs. In addition, Alfonso et al. [19] used a few-shot learning approach based on Siamese neural networks to transfer feature knowledge learned from a complete and well-labeled source dataset (e.g., colon tissue images) to a target domain containing a wider range of tissue types (e.g., colon, lung, and breast tissues), significantly improving classification performance. These methods not only demonstrate the substantial potential of few-shot learning in medical image analysis but also provide innovative insights into addressing the limited availability of medical data.

In light of the aforementioned research on few-shot medical image classification, this study proposes FRNE, a few-shot learning method that integrates pre-trained feature extraction with spatial reconstruction, to improve prediction accuracy when only limited leukocyte samples are available. The proposed method reformulates the white blood cell classification task as a feature-space reconstruction problem. Specifically, an optimized feature extractor extracts features from the support set samples, which are then used to reconstruct the query samples. Feature reconstruction is achieved through a closed-form regression solution, and the resulting reconstruction error serves as the basis for category prediction. Optimization-based meta-learning approaches [10,20] require the training of an external optimizer (e.g., an LSTM) and a large number of meta-training tasks (e.g., N-way K-shot episodes), and metric learning methods [16] rely on the careful design of positive and negative sample pairs. In contrast, the feature reconstruction-based algorithm offers several advantages. It directly reflects the similarity between query and support samples, provides an interpretable classification mechanism in the medical domain, leverages the geometric relationships within the feature space, avoids complex optimization procedures, and demonstrates high computational efficiency. Therefore, it is particularly well-suited for applications with limited data and computational resources, where high classification accuracy is essential. The main contributions of this work are summarized as follows:

- (1) A classification method for white blood cell images is designed based on the FRNE few-shot learning framework. A spatial reconstruction network is introduced to align the feature maps of the support set with those of the query set. By utilizing features extracted from a limited number of reference cell samples, the spatial characteristics of the target cell categories can be effectively reconstructed, thereby enabling an investigation into the applicability and performance of few-shot learning approaches in the field of blood cell analysis.

- (2) The improved EfficientNetV2 model is proposed as a feature extractor and integrated with the ASPP module. By employing dilated convolution structures with different dilation rates, the model is capable of effectively capturing and adaptively extracting multi-scale features from blood cell images.

2. Related Work

Deep learning has achieved remarkable breakthroughs across various domains, owing to its powerful computational capabilities and effective learning mechanisms. However, its performance heavily relies on the availability of large-scale, accurately labeled datasets, which restricts its applicability in many real-world scenarios. For instance, in the field of medical diagnosis, ethical concerns, privacy issues, and the high cost of expert annotation make it difficult to obtain sufficient labeled data for research. In response to these challenges, researchers have increasingly focused on few-shot learning, which has emerged as a crucial branch of machine learning. Introduced by Norbert et al. [21], few-shot learning aims to enable models to achieve strong performance with only a limited number of training samples. To enhance its effectiveness, researchers incorporate methods such as meta-learning, incremental learning, metric learning, and semi-supervised approaches, often combining multiple strategies and learners. Currently, few-shot learning is being progressively applied in areas such as industrial vision inspection, robotics, and healthcare.

In the medical field, few-shot learning also faces significant developmental challenges and remains relatively underexplored due to various constraints, including data security concerns, ethical regulations, limited inter-class variability, and insufficient sample representativeness. As in other domains, few-shot learning in medicine is predominantly employed for tasks such as classification, segmentation, and detection. Frequently cited work includes the following. Singh et al. [22] proposed a few-shot learning method termed “MetaMed,” which incorporates data augmentation techniques such as MixUP and CutMix into a meta-learning framework. MetaMed achieved classification accuracies above 70% on the Pap smear, ISIC 2018, and BreakHis datasets. Furthermore, it demonstrated superior average performance in both two-way and three-way classification tasks compared to transfer learning approaches. Sun et al. [23] introduced an incremental learning framework termed meta self-attention prototype incrementer, which incorporates a feature extraction embedding encoder, a prototype enhancement component, and a distance metric-based classifier. Their approach addressed the challenge of incorporating new classes in medical time-series classification while preserving knowledge of previously learned classes and reported strong results. Liu et al. [24] developed a meta Siamese network based on metric learning that used similarity measurements for arrhythmia diagnosis and demonstrated strong robustness. Roy et al. [25] proposed a two-arm architecture incorporating the ‘Squeeze & Excite’ module to enable few-shot segmentation of CT images across various organs throughout the body. This architecture demonstrates effective volumetric image segmentation without requiring pre-training. Wang et al. [26] introduced a semi-supervised few-shot framework that employs loss re-weighting to balance the distribution of different lesion categories and leverages high-confidence predictions, thereby achieving accurate classification of pulmonary infection regions using only a limited number of labeled samples. Cheng et al. [27] and Zhu et al. [28] adopted prototype-based few-shot learning approaches, which enhance model performance by capturing more representative class distributions via multiple descriptors or refined sub-regions. Jiang et al. [29] combined meta-learning, transfer learning, and metric learning to develop a multi-learner few-shot learning framework. Their method incorporates real-time data augmentation and dynamic Gaussian soft labels, resulting in strong generalization capabilities for classification tasks and demonstrating superior performance on the medical benchmark datasets CHEST, BLOOD, and PATH.

Although few-shot learning has been applied in the medical domain with promising outcomes, its application to blood cell segmentation and classification remains relatively limited. A literature search of the SCI-EXPANDED database of the Web of Science using the query (TS = (few shot) OR TS = (few-shot)) AND (TS = (blood cell) OR TS = (bone marrow cell)), excluding studies not directly focused on the segmentation or classification of blood or bone marrow cells, yielded only two relevant articles, References [16] and [30]. The approach proposed in Reference [16] employs a Siamese network architecture to quantitatively compare the embeddings of generated image pairs by computing their absolute differences, which are subsequently used for training and class prediction. This demonstrates the efficacy of few-shot contrastive learning with an EfficientNet-B3 backbone in blood cell classification. Reference [30] provides a diverse cell segmentation dataset comprising red blood cells, white blood cells, and infected cells, which is valuable for blood cell research and particularly beneficial for few-shot learning involving imbalanced classes. In addition, Chossegros et al. [31] implemented a model fine-tuning workflow for few-shot learning of white blood cell classification. This methodology consisted of pre-training the EfficientNet model on images from two distinct datasets, followed by fine-tuning on the target dataset, which demonstrated promising performance. The objective of the present research is to evaluate the effectiveness of few-shot learning based on feature reconstruction in the classification of white blood cells with similar morphological structures and features. To accomplish this, the multi-dimensional, balanced extended EfficientNet model [32] is used for feature extraction and further enhanced through the integration of ASPP [33]. Convolutions with varying dilation rates are incorporated to generate diverse feature representations, thereby improving the model’s ability to capture multi-scale contextual information and enhancing its generalization capability. Finally, the features of query samples are reconstructed using a spatial feature reconstruction network, and the corresponding sample categories are determined based on the associated reconstruction error.

3. Methods

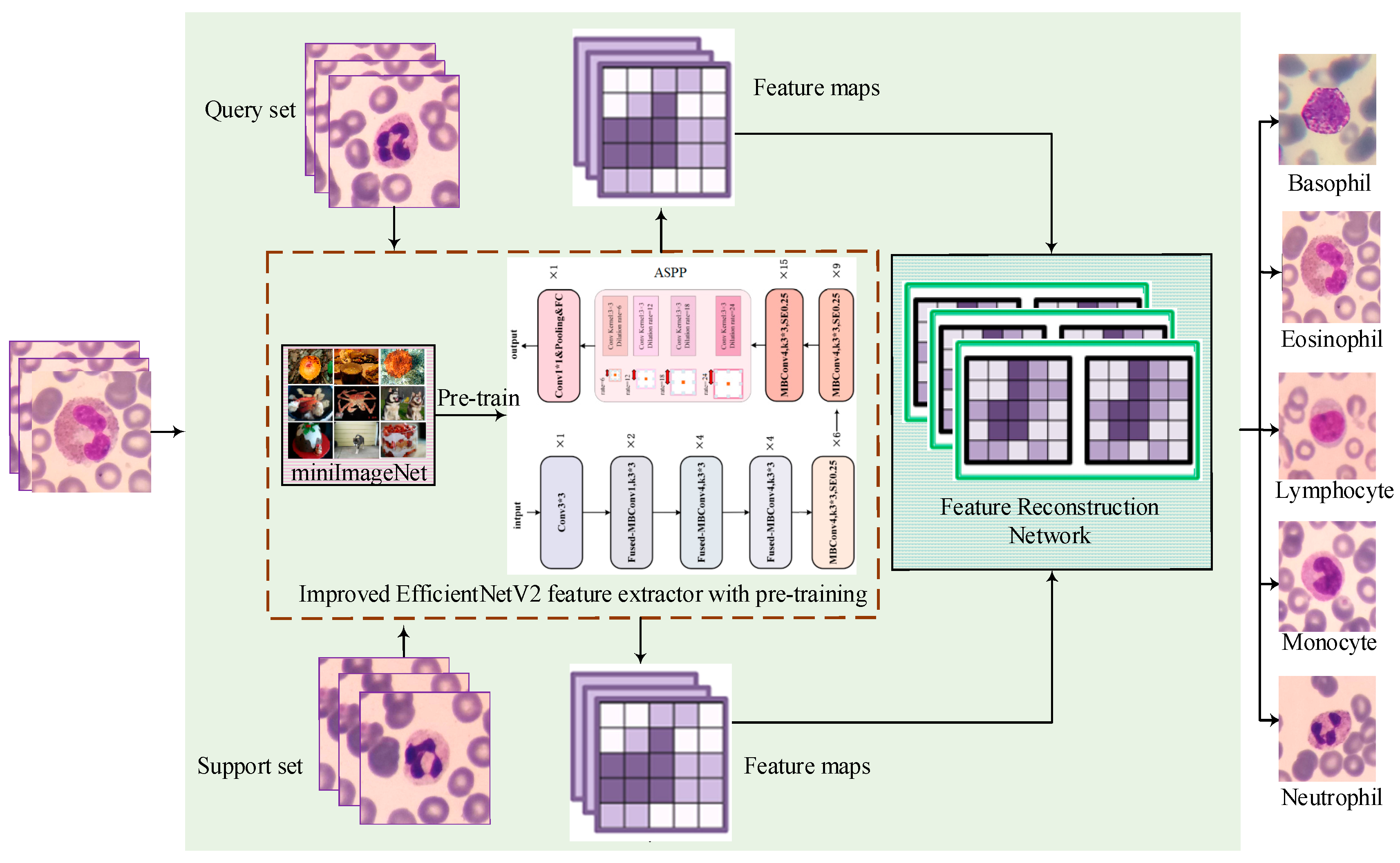

To evaluate the effectiveness of few-shot classification methods in the field of white blood cell classification, this study proposes a feature reconstruction-based few-shot learning framework that incorporates an improved EfficientNetV2 architecture. The proposed method seeks to improve classification accuracy by effectively utilizing limited training samples. The structure of the model is presented in Figure 1 and consists of three primary components: the feature extraction module, the feature reconstruction network, and the few-shot classification stage. The feature extraction module uses the improved EfficientNetV2, incorporating fused-MBConv structures to accelerate training and an ASPP module to expand receptive fields, thereby enhancing its ability to capture cellular features. Before the downstream classification task, the feature extraction network is pre-trained on the mini-ImageNet dataset to enhance its generalization capability and feature extraction precision. Subsequently, both the support sample set and the query sample set are fed into the improved EfficientNetV2 feature extractor to generate their corresponding feature maps. These feature maps are then used as inputs to the spatial reconstruction network, which is trained to reconstruct the feature maps and determine class membership. Together, these components form the complete FRNE few-shot learning model. Finally, each query sample is evaluated using the trained model, and its class label is assigned based on the corresponding reconstruction error.
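The support and query sets mentioned above are usually drawn as N-way K-shot episodes. The following is a minimal sketch of such a sampler, not the authors' data pipeline; the function name `sample_episode` and its parameters (`n_way`, `k_shot`, `n_query`) are hypothetical and chosen only for illustration.

```python
import random
from collections import defaultdict


def sample_episode(labels, n_way, k_shot, n_query, rng=None):
    """Draw one N-way K-shot episode from a list of per-image class labels.

    Returns (support, query), each a list of (image_index, class_label) pairs.
    Hypothetical helper for illustration only.
    """
    rng = rng or random.Random()

    # Group image indices by class label.
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)

    # Only classes with enough images can supply both support and query samples.
    eligible = [c for c, idxs in by_class.items() if len(idxs) >= k_shot + n_query]
    if len(eligible) < n_way:
        raise ValueError("not enough classes with k_shot + n_query images")

    support, query = [], []
    for c in rng.sample(eligible, n_way):
        picked = rng.sample(by_class[c], k_shot + n_query)  # sampled without replacement
        support += [(i, c) for i in picked[:k_shot]]
        query += [(i, c) for i in picked[k_shot:]]
    return support, query
```

Because each class is sampled without replacement, no image appears in both the support set and the query set of the same episode.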

3.1. Feature Extractor Based on the Improved EfficientNetV2 Network

To build a powerful feature extractor suited to the particular needs of few-shot learning, this study chose EfficientNetV2 as the base model and made targeted improvements to it. Specifically, as shown in Figure 2, the efficient block-convolution design of EfficientNetV2 is retained, comprising the fused-MBConv modules (upper left region of Figure 2) and the MBConv modules (upper middle region of Figure 2), which ensure a good balance between parameter efficiency and model performance. To further strengthen feature extraction for white blood cell images, this study introduces an ASPP module between the final MBConv block and the last convolutional block. The ASPP module is a spatial pyramid pooling technique for multi-scale feature extraction that typically comprises multiple dilated convolutional layers with different dilation rates, where the dilation rate is the spacing at which the convolutional kernel samples the input feature map. By integrating dilated convolutions with varying dilation rates, the ASPP module captures multi-scale information from local to global regions while maintaining spatial resolution, and the resulting range of receptive fields helps the model understand and distinguish different types of white blood cells. Because each dilation rate emphasizes features at a different scale, the model can better accommodate the heterogeneous sizes and shapes of blood cells. This contributes to the robustness of the leukocyte classifier when processing cell images of varying dimensions and shapes and improves the precision of the model on the leukocyte dataset.

White blood cell classification based on few-shot learning is limited by the relatively small number of samples in the dataset. Therefore, before training the spatial reconstruction network, this research uses a large-scale dataset (mini-ImageNet), comprising categories different from the target classes, to pre-train an effective feature extraction model. Through this pre-training process and subsequent weight transfer, the feature extraction model can learn diverse and generalized features from extensive data sources, thereby enhancing performance across various image classification tasks. When training data is scarce, these learned features enable the classification model to better adapt to the specific task of white blood cell image classification. Furthermore, the feature extraction network integrates regularization techniques such as dropout and mixup to dynamically regulate training intensity. These strategies not only enhance classification accuracy but also expedite both training and feature extraction. During the feature reconstruction phase, detailed in the following section, the pre-trained feature extractor is used to generate feature maps for both the support set and query set inputs, upon which the feature reconstruction network is subsequently trained.
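The text names mixup only as one of the regularizers used during pre-training. As a hedged illustration, the sketch below shows the commonly used formulation, in which each training sample and its one-hot label are replaced by a convex combination with another sample from the same batch. The function name `mixup_batch` and the Beta parameter `alpha` are assumptions for illustration, not values taken from the paper.

```python
import numpy as np


def mixup_batch(images, one_hot_labels, alpha, rng):
    """Mix each sample with a randomly chosen partner from the same batch.

    images:         array of shape (batch, ...)
    one_hot_labels: array of shape (batch, n_classes)
    alpha:          Beta(alpha, alpha) shape parameter (assumed hyperparameter)
    """
    lam = rng.beta(alpha, alpha)            # mixing coefficient in [0, 1]
    perm = rng.permutation(len(images))     # partner index for every sample
    mixed_x = lam * images + (1.0 - lam) * images[perm]
    mixed_y = lam * one_hot_labels + (1.0 - lam) * one_hot_labels[perm]
    return mixed_x, mixed_y, lam
```

The mixed labels remain valid probability vectors, so they can be used directly with a cross-entropy loss on soft targets.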

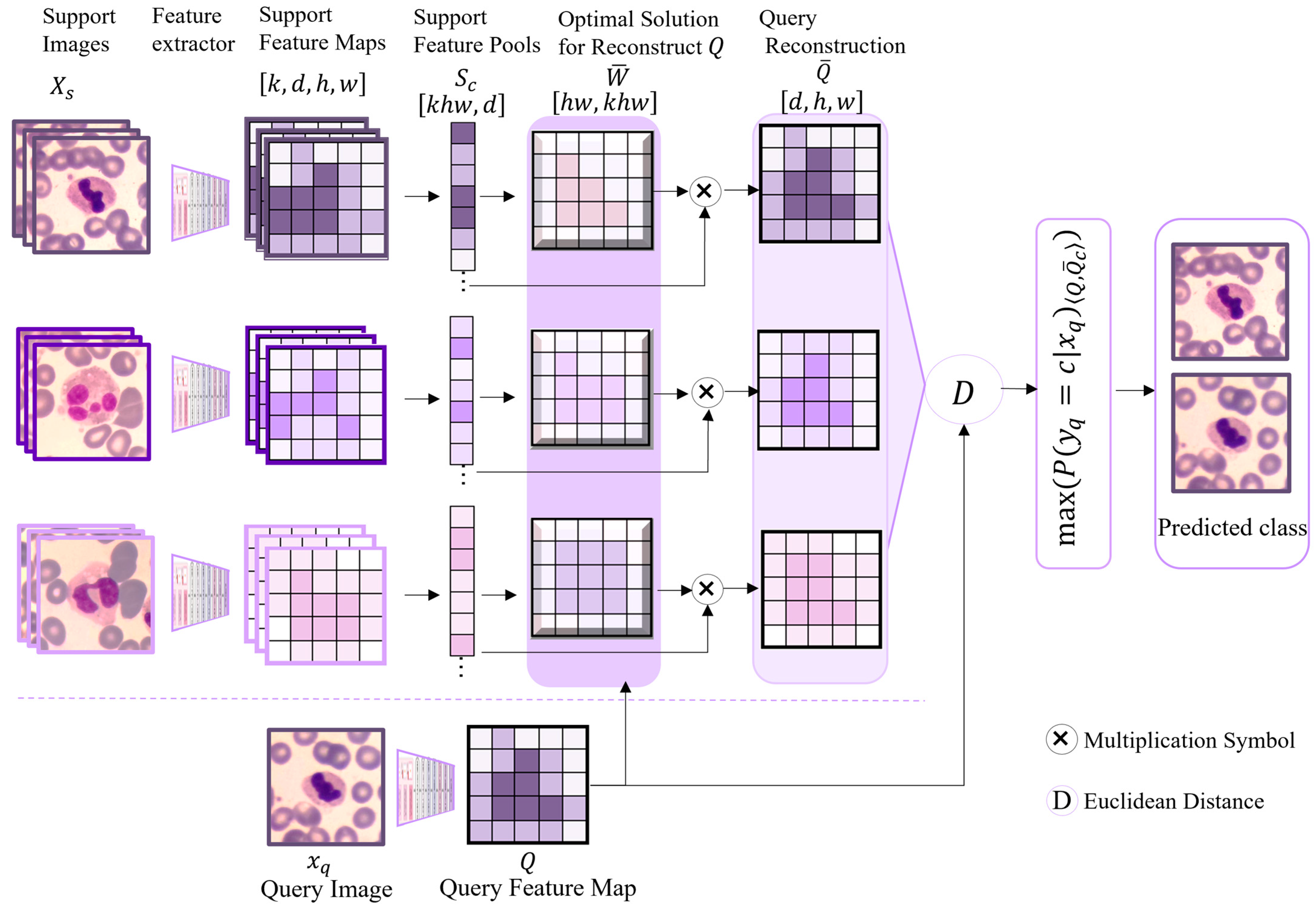

3.2. Feature Map Reconstruction

Building on the improved EfficientNetV2 feature extractor described above, this study constructs the FRNE model for white blood cell classification. The model combines the advantages of the spatial feature reconstruction network and the feature extractor pre-trained in this paper, achieving efficient classification with limited samples.

To address the issue that traditional classification methods are easily constrained by the scale of training data in few-shot learning scenarios, the FRNE model proposed in this study fuses its network architecture with the feature reconstruction network (FRN) [34]. It leverages the feature space reconstruction mechanism of the FRN to impose reconstruction constraints on high-level information and enhances the feature extractor’s capability to capture multi-scale features. In blood or bone marrow cell classification, sample acquisition is frequently constrained by high costs and ethical considerations, resulting in limited training data. The proposed classification approach based on feature space reconstruction error learns to map query samples into a feature space comparable to that of the support set samples. It performs cross-category reconstruction of the query sample feature maps using the support features of each given category. By minimizing the error between the original and reconstructed features of the query samples, the model establishes a classification decision boundary, thereby estimating the likelihood that a query sample belongs to a specific category under data-scarce conditions. The approach is implemented as a closed-form solution that regresses the query sample features directly onto the support sample features, without introducing new modules or a large number of trainable parameters. In FRN, the support features of each category are organized into a matrix, and the query samples are similarly mapped to the feature space. The query samples are then classified by finding the weight matrix that minimizes the reconstruction error between the support feature matrix and the query features. The detailed architecture of the spatial feature reconstruction is illustrated in Figure 3.

In Figure 3, $X_s$ denotes the support image set of labeled white blood cell samples. The task is to predict the class label $y_q$ for each query instance $x_q$ from the query set $X_q$. To achieve this, both $X_s$ and $X_q$ undergo feature extraction via the improved EfficientNetV2 model, yielding the feature map of the support sample set and the feature map $Q$ of the query sample set, respectively. The feature maps from all support samples of class $c$ are subsequently pooled to form a single matrix, $S_c$. Based on the principle of ridge regression derived from the least squares method, the query sample feature map $Q$ is reconstructed as a weighted sum of the rows of the support sample feature matrix $S_c$, expressed as Equation (1):

$$\bar{W} = \underset{W}{\arg\min}\ \lVert Q - W S_c \rVert^2 + \lambda \lVert W \rVert^2 \tag{1}$$

where $\lambda$ denotes the penalty term coefficient, and $W$ represents the weight matrix used for reconstructing $Q$. The ability of $\lambda$ to penalize large weights can be further improved through the learned recalibration term $\rho$. A closed-form solution $\bar{W}$ for $W$ is derived and defined as Equation (2):

$$\bar{W} = Q S_c^{\top} \left( S_c S_c^{\top} + \lambda I \right)^{-1} \tag{2}$$

Based on the optimal solution $\bar{W}$ derived from Equation (2) and the support set features $S_c$, the reconstructed query feature map $\bar{Q}_c$ is obtained, expressed as Equation (3):

$$\bar{Q}_c = \rho \bar{W} S_c \tag{3}$$

In other words, the reconstruction process leverages both the optimized parameters and the representative features from the support set to generate the target query feature map.

Then, the Euclidean distances between the reconstructed feature map $\bar{Q}_c$ of each class and the actual query sample features $Q$ are calculated. These distances are subsequently converted into a probability distribution through the application of the softmax function, resulting in predicted probabilities and the associated class label. The complete algorithmic procedure is summarized in the pseudocode provided in Algorithm 1.

Algorithm 1: FRNE model
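The reconstruction-and-classification procedure described in this section can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not a reproduction of Algorithm 1. The names `reconstruct_query` and `classify_query`, the default values of `lam`, `rho`, and `temperature`, and the use of the mean squared Euclidean distance over feature-map locations (as in the FRN formulation) are all assumptions.

```python
import numpy as np


def reconstruct_query(Q, S, lam, rho=1.0):
    """Ridge-regression reconstruction of query features from one class's support features.

    Q: (r, d) query feature map, one row per spatial location.
    S: (m, d) pooled support features of a single class.
    Computes W = Q S^T (S S^T + lam I)^-1 and returns rho * W S.
    """
    m = S.shape[0]
    gram = S @ S.T + lam * np.eye(m)           # (m, m), symmetric positive definite
    W = np.linalg.solve(gram, S @ Q.T).T       # solves for W^T, then transposes to (r, m)
    return rho * (W @ S)


def classify_query(Q, support_by_class, lam=0.1, rho=1.0, temperature=1.0):
    """Return (predicted class index, class probabilities) for one query feature map."""
    dists = []
    for S in support_by_class:
        Q_hat = reconstruct_query(Q, S, lam, rho)
        # Mean squared Euclidean distance between original and reconstructed rows.
        dists.append(np.mean(np.sum((Q - Q_hat) ** 2, axis=1)))
    logits = -temperature * np.asarray(dists)  # smaller error -> larger logit
    logits -= logits.max()                     # numerical stability for softmax
    probs = np.exp(logits) / np.exp(logits).sum()
    return int(np.argmax(probs)), probs
```

The class whose support features reconstruct the query with the smallest error receives the highest probability. Solving the linear system with `np.linalg.solve` avoids forming an explicit matrix inverse.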

As illustrated in Figure 3 and in the pseudocode of Algorithm 1, once the features of the positive samples have been spatially reconstructed using the pre-trained classifier, the spatial feature information for a specific category is obtained. Subsequently, the query set is provided to the classifier, which generates a feature map of the query set. A mapping is then computed between the query set and the support set. This mapping is the primary function of FRN, which aims to maximize the fit between the two sets to achieve spatial reconstruction. It enables FRN to classify query samples through spatial feature reconstruction in few-shot learning scenarios. It not only makes efficient use of limited sample data but also improves the model’s generalization capability by reconstructing and mapping features.
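The closed-form solution referred to as Equation (2) follows from standard ridge regression. As a short worked step, write $S_c$ for the support feature matrix of class $c$ and $\bar{W}$ for the optimal weights (notation assumed here, following the FRN formulation [34]). Setting the gradient of the regularized objective to zero gives:

$$
\begin{aligned}
J(W) &= \lVert Q - W S_c \rVert^2 + \lambda \lVert W \rVert^2 \\
\nabla_W J &= -2\,(Q - W S_c)\,S_c^{\top} + 2\lambda W = 0 \\
\bar{W}\,\bigl(S_c S_c^{\top} + \lambda I\bigr) &= Q S_c^{\top} \\
\bar{W} &= Q S_c^{\top} \bigl(S_c S_c^{\top} + \lambda I\bigr)^{-1}
\end{aligned}
$$

For $\lambda > 0$ the matrix $S_c S_c^{\top} + \lambda I$ is positive definite, so the inverse always exists, which is why no iterative optimization is needed at inference time.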

5. Discussion

The improved EfficientNetV2 feature extractor is capable of capturing multi-scale features, thereby adapting to objects of varying sizes and shapes and effectively extracting key characteristics such as cell morphology, edge information, and texture features. To further validate its effectiveness as a feature extraction method within the few-shot learning framework proposed in this paper, ablation experiments, as described in Table 10, were conducted on both the LDWBC and Raabin datasets. The comparative results show that, on both datasets, the performance metrics OA, AP, AR, and AF1 improve when the ASPP module is used. This indicates that the ASPP module enhances the feature extractor’s ability to comprehend and differentiate various types of white blood cells, particularly in few-shot learning scenarios.
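The metric abbreviations are not defined in this part of the text. Assuming that OA is overall accuracy and that AP, AR, and AF1 are the class-averaged (macro) precision, recall, and F1 score, they can be computed from a confusion matrix as in the sketch below. The function name `macro_metrics` is hypothetical, and the interpretation of the abbreviations is an assumption.

```python
import numpy as np


def macro_metrics(y_true, y_pred, n_classes):
    """Overall accuracy plus macro-averaged precision, recall, and F1.

    Assumes integer class labels in the range [0, n_classes).
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)

    # Confusion matrix: rows are true classes, columns are predicted classes.
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (y_true, y_pred), 1)

    tp = np.diag(cm).astype(float)
    pred_tot = cm.sum(axis=0)
    true_tot = cm.sum(axis=1)

    # Classes with no predictions (or no samples) contribute 0 instead of dividing by zero.
    precision = np.divide(tp, pred_tot, out=np.zeros_like(tp), where=pred_tot > 0)
    recall = np.divide(tp, true_tot, out=np.zeros_like(tp), where=true_tot > 0)
    denom = precision + recall
    f1 = np.divide(2 * precision * recall, denom, out=np.zeros_like(tp), where=denom > 0)

    return {
        "OA": tp.sum() / cm.sum(),
        "AP": precision.mean(),
        "AR": recall.mean(),
        "AF1": f1.mean(),
    }
```

Macro averaging weights every class equally, which matters for white blood cell data because rare classes such as basophils would otherwise have little influence on the reported score.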

The FRNE model depends on morphological features extracted from cell datasets for its training, and white blood cells exhibit substantially higher morphological complexity and diversity than objects in many other application scenarios. This pronounced heterogeneity makes the model more sensitive to variations in data distribution in few-shot learning contexts. Specifically, variations such as the granule distribution and nuclear morphology of neutrophils, along with the irregular nuclear shapes of monocytes, can blur inter-class boundaries in the feature space, which compromises the classification performance of the FRNE model under few-shot conditions. Taking the Raabin dataset as an example, misclassified images caused by these factors are illustrated in Figure 4. Figure 4a presents representative images from the dataset, showing neutrophils, eosinophils, monocytes, and lymphocytes in sequence. Figure 4b illustrates cases in which a neutrophil is misclassified as an eosinophil. Figure 4c provides several examples of a monocyte being misclassified as a lymphocyte. Figure 4d displays instances where a neutrophil is misclassified as a lymphocyte. The misclassification scenarios shown in Figure 4b–d occur relatively frequently when applying the FRNE model, which aligns with the observations reported in Reference [37]. The nuclear lobe morphology of neutrophils and eosinophils shows a certain degree of overlap, and their cytoplasmic characteristics also exhibit similarities. However, neutrophilic granules are typically coarser and their cytoplasm generally stains more lightly than that of eosinophils, as demonstrated in Figure 4a. During morphological classification, neutrophils may be misclassified due to their thick granule texture. Examples of such misclassification are presented in the first two images of Figure 4b. Additionally, when neutrophils are tilted or oriented at an angle, their nuclear lobes may resemble those of eosinophils, leading to potential misidentification, as demonstrated in the last two images of Figure 4b. Monocytes typically possess kidney-shaped or horseshoe-shaped nuclei with loosely arranged chromatin. In contrast, lymphocytes have relatively large, round or oval nuclei with densely packed chromatin. However, when a monocyte nucleus appears nearly round and the chromatin structure is indistinct, or when the chromatin appears relatively dense, the cell may be misclassified as a lymphocyte, as shown in Figure 4c. Similarly, when neutrophil nuclear lobes are folded or distorted, or when staining artifacts cause certain regions to appear nearly round, these cells may also be misclassified as lymphocytes, as depicted in Figure 4d. To address these challenges, the training dataset should encompass a wide variety of cellular morphologies. In future studies, it is recommended to implement standardized staining protocols, enhance image resolution, and incorporate textual prior knowledge into the model to improve the classification accuracy of few-shot learning approaches.

The model in this paper has demonstrated commendable performance in the classification of blood cells. However, it still presents certain limitations and opportunities for further research in the following areas. Firstly, the model reconstructs the features of the query sample by reconstructing the support set samples, and it heavily relies on the pre-trained feature extractor to generate high-quality feature representations. If the feature extraction of the dataset is inadequate, or if the feature space distributions across datasets are inconsistent, then the reconstruction performance will deteriorate. Therefore, in cross-dataset generalization scenarios greater attention should be given to feature extraction and the distribution of the feature space. Furthermore, pre-training offers extensive prior knowledge and establishes a strong foundational starting point for few-shot learning. Logically, a more powerful pre-training process generally leads to better performance. However, it may also introduce confounding factors during the learning process, potentially hindering the classification model’s ability to effectively eliminate irrelevant interference. Further research is needed to better understand the causal relationships among upstream and downstream datasets, features derived from pre-training, classification models, and overall classification performance. Secondly, the final classification of FRNE is determined by feature reconstruction and the comparison of reconstruction errors. If the feature space is perturbed, misclassification may occur due to inaccurate reconstruction error estimation. Variations in staining methods can alter the color and texture information in images, thereby affecting the stability of feature reconstruction and introducing interference. Therefore, staining normalization can be performed at an early stage of feature extraction using the feature extraction network to avoid potential interference. 
Moreover, noise can be added to the training data to expose the model to more diverse data variations, ultimately improving its generalization performance on unseen or perturbed data. Thirdly, the inference time of FRNE for this task is at the millisecond level. However, its clinical deployment remains constrained by limited computational resources and the need to protect patient data privacy. Federated learning [

38] enables local model fine-tuning while restricting interactions to model parameters only, thereby preventing the direct exchange and potential leakage of raw patient data and enhancing data privacy. Integrating federated learning with few-shot learning presents a promising approach for practical clinical deployment. Furthermore, the current method does not support the processing of heterogeneous input data, such as text and images, indicating the need for further research in this area.
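The classification-by-reconstruction-error principle discussed above can be illustrated with a minimal sketch. It assumes a closed-form ridge-regression reconstruction of query features from each class's support features; the function names, the regularization weight `lam`, and the feature shapes are illustrative assumptions, not the exact FRNE implementation.

```python
import numpy as np


def reconstruction_errors(query, support_by_class, lam=0.1):
    """Squared reconstruction error of each query feature under each class.

    query:            (n, d) array of query features.
    support_by_class: list of (k_c, d) arrays, one per class; k_c may differ.
    lam:              ridge regularization weight (hypothetical default).
    Returns an (n, C) array of errors.
    """
    errors = []
    for support in support_by_class:
        k = support.shape[0]
        # Ridge solution: W = Q S^T (S S^T + lam * I)^{-1}
        gram = support @ support.T + lam * np.eye(k)
        weights = np.linalg.solve(gram, support @ query.T).T  # (n, k)
        reconstructed = weights @ support                      # (n, d)
        errors.append(((query - reconstructed) ** 2).sum(axis=1))
    return np.stack(errors, axis=1)


def classify_by_reconstruction(query, support_by_class, lam=0.1):
    """Assign each query to the class whose support set reconstructs it best."""
    return reconstruction_errors(query, support_by_class, lam).argmin(axis=1)
```

In this sketch the decision depends only on how well each support set spans the query features, which is why a perturbed feature space (for example, one shifted by a different staining protocol) directly distorts the errors being compared.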

It should also be noted that in practical medical applications the incidence rates of diseases vary significantly. Collecting samples for low-incidence or rare diseases often presents considerable challenges. Consequently, the support set may lack sufficient representation of certain categories, leading to class imbalance. Applying few-shot learning methods that assume a balanced support set in such scenarios may introduce biases. The structural design of the proposed model can partially mitigate class imbalance: it does not rely directly on the number of samples, but instead maps query samples to the feature space of the support set samples, so it remains effective in distinguishing categories through reconstruction error, provided the feature distributions remain consistent. Furthermore, the model’s ability to capture subtle local differences in fine-grained classification helps reduce bias toward the majority class. However, when the number of samples is extremely limited or the feature distribution is skewed, the model may fail to fully learn distinctive features, which could result in biased predictions. Currently, research on the imbalance problem primarily focuses on the data and algorithm levels. Common strategies include data augmentation and generation [

39], oversampling techniques [

40], regularization methods [

41], and modifications to the loss function [

42]. For specific tasks, adjustments to model architecture can also be considered. For example, Li et al. [

43] developed a feature interaction module tailored for skin white patches, aiming to better understand data distribution and mitigate the effects of class imbalance. Song et al. [

44] employed a pyramid fusion mechanism to achieve multi-granularity perception, thereby enhancing low-contrast features. Chen et al. [

45] proposed a two-stage framework for cross-modal causal representation learning, with specialized modules designed for each stage to address spurious correlations between vision and language, as well as inherent limitations in radiological imaging. These approaches offer valuable insights that can be adapted and applied to few-shot learning scenarios [

46], enabling the simulation of performance under imbalanced conditions. In addition, the quality of cell images significantly influences the practical effectiveness of few-shot learning. High-quality images that clearly depict fine details of cell morphology and structure contribute to more accurate feature extraction and representation, particularly for datasets with limited samples. In future research, advancements in imaging systems, such as those demonstrated in studies on phase-manipulating Fresnel lenses and video-activated cell sorting [

47,

48], can be leveraged to achieve breakthroughs at the hardware level.
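As one concrete illustration of the loss-function modifications listed among the imbalance strategies above, the following minimal sketch weights the cross-entropy loss by inverse class frequency. It is a generic technique shown under stated assumptions, not the method of any cited work or of the proposed model; the function names and the weighting formula are illustrative choices.

```python
import numpy as np


def inverse_frequency_weights(labels, num_classes):
    """Weight each class by n_samples / (n_present_classes * class_count).

    Classes absent from `labels` receive weight 0 (an assumption of this sketch).
    """
    counts = np.bincount(labels, minlength=num_classes).astype(float)
    weights = np.zeros(num_classes)
    present = counts > 0
    weights[present] = len(labels) / (present.sum() * counts[present])
    return weights


def weighted_cross_entropy(logits, labels, class_weights):
    """Class-weighted mean of the per-sample negative log-likelihood."""
    # Numerically stable log-softmax.
    shifted = logits - logits.max(axis=1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    nll = -log_probs[np.arange(len(labels)), labels]
    sample_weights = class_weights[labels]
    return float((sample_weights * nll).sum() / sample_weights.sum())
```

With three samples of one class and one of another, the minority class receives three times the per-sample weight, so errors on rare categories contribute as much to the loss as errors on common ones.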