A Hybrid CNN-GCN Architecture with Sparsity and Dataflow Optimization for Mobile AR

Abstract

1. Introduction

2. The Problem and Related Work

2.1. The Problem of Efficient Deep Learning for Mobile AR

2.2. Related Work

3. Proposed Method

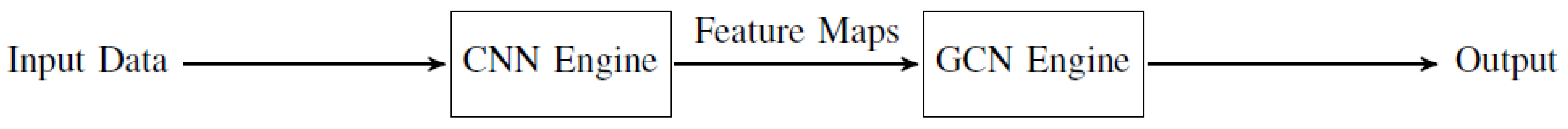

3.1. SAHA-WS Architecture Overview

| Algorithm 1. Channel-Wise Sparsity Encoding |
|---|
| Require: Feature map F of size C × H × W |
| Ensure: Encoded feature map F_enc and sparsity mask M |
| 1: Initialize F_enc and M as empty lists |
| 2: for c = 1 to C do |
| 3: if all values in channel c of F are zero then |
| 4: Append 0 to M |
| 5: else |
| 6: Append 1 to M |
| 7: Append non-zero values in channel c of F to F_enc |
| 8: end if |
| 9: end for |
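The steps of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the hardware implementation: the function name `encode_channels` and the use of NumPy are assumptions made for the example. As in the pseudocode, only the non-zero values of each non-empty channel are kept, so positions within a channel are not recorded here.

```python
import numpy as np

def encode_channels(F):
    """Channel-wise sparsity encoding of a C x H x W feature map.

    Returns (F_enc, M): F_enc is a flat list of the non-zero values taken
    from the non-empty channels, M is a 0/1 mask with one entry per channel.
    """
    F_enc = []
    M = []
    for c in range(F.shape[0]):
        channel = F[c]
        if not channel.any():
            # All-zero channel: record it in the mask and store nothing.
            M.append(0)
        else:
            M.append(1)
            # Keep only the non-zero values of this channel (row-major order).
            F_enc.extend(channel[channel != 0].tolist())
    return F_enc, M

# Hypothetical 3-channel input: channel 0 is empty, channels 1 and 2 are sparse.
F = np.zeros((3, 2, 2))
F[1, 0, 1] = 5.0
F[2] = [[1.0, 0.0], [0.0, 2.0]]
F_enc, M = encode_channels(F)
```

In this example the all-zero channel costs a single mask bit and contributes nothing to `F_enc`.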

3.2. CNN Engine Design

3.3. GCN Engine Design

| Algorithm 2. Sparse Matrix Multiplication |
|---|
| Require: Sparse adjacency matrix A and feature matrix X |
| Ensure: Output feature matrix Y |
| 1: Initialize Y as a zero matrix |
| 2: for each non-zero entry A_ij in A do |
| 3: Y_i = Y_i + A_ij ⋅ X_j |
| 4: end for |
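Algorithm 2 can be sketched in Python as below, assuming the adjacency matrix is stored as coordinate (COO) triples. The storage format, the function name `spmm_coo`, and the argument names are assumptions for illustration; the pseudocode does not fix a format.

```python
import numpy as np

def spmm_coo(rows, cols, vals, X, num_rows):
    """Compute Y = A @ X, where A is given by its non-zero entries only.

    rows[k], cols[k], vals[k] describe one non-zero entry A_ij = vals[k].
    """
    Y = np.zeros((num_rows, X.shape[1]), dtype=X.dtype)
    for i, j, a in zip(rows, cols, vals):
        # Row i of the output accumulates A_ij times row j of the features.
        Y[i] += a * X[j]
    return Y

# Hypothetical 2-node graph: A = [[0, 2], [1, 0]].
X = np.array([[1.0, 2.0], [3.0, 4.0]])
Y = spmm_coo(rows=[0, 1], cols=[1, 0], vals=[2.0, 1.0], X=X, num_rows=2)
```

The loop runs once per non-zero entry, so the work scales with the number of edges rather than with the full size of A.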

3.4. Dataflow Optimization

| Algorithm 3. Data Movement Scheduling |
|---|
| Require: Data dependency graph G, on-chip memory status M |
| Ensure: Data movement schedule S |
| 1: Initialize S as an empty list |
| 2: readyNodes ← nodes in G with no dependencies and data available in M |
| 3: while readyNodes is not empty do |
| 4: node ← select a node from readyNodes based on priority |
| 5: Append node to S |
| 6: Update readyNodes by adding nodes whose dependencies are satisfied |
| 7: Update M to reflect the data movement for node |
| 8: end while |
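One way to read Algorithm 3 is as a priority-driven topological ordering. The sketch below follows that reading under simplifying assumptions: the dependency graph is a dictionary from each node to the set of nodes it depends on, the on-chip memory status is reduced to a set of nodes whose data is already resident, and a lower priority number means "schedule first". The names `schedule`, `deps`, `priority`, and `on_chip` are hypothetical.

```python
import heapq

def schedule(deps, priority, on_chip):
    """Return a data movement order S that respects the dependencies."""
    available = set(on_chip)
    # Dependencies already resident on chip count as satisfied.
    remaining = {n: set(d) - available
                 for n, d in deps.items() if n not in available}
    ready = [(priority[n], n) for n, d in remaining.items() if not d]
    heapq.heapify(ready)
    S = []
    while ready:
        _, node = heapq.heappop(ready)   # highest-priority ready node
        S.append(node)
        available.add(node)              # its data is now on chip
        del remaining[node]
        for n, d in remaining.items():
            if node in d:
                d.discard(node)
                if not d:                # all dependencies satisfied
                    heapq.heappush(ready, (priority[n], n))
    return S

# Hypothetical graph: conv1 needs weights w0 and activations a0; gcn1 needs conv1.
deps = {"w0": set(), "a0": set(), "conv1": {"w0", "a0"}, "gcn1": {"conv1"}}
priority = {"w0": 1, "a0": 0, "conv1": 0, "gcn1": 0}
S = schedule(deps, priority, on_chip=set())
```

A real scheduler would also track memory capacity and transfer cost when updating M; those details are outside what the pseudocode specifies and are omitted here.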

| Algorithm 4. PE Allocation and Load Balancing |
|---|
| Require: Task graph T, available PEs P |
| Ensure: PE allocation A |
| 1: Initialize A as an empty mapping |
| 2: for each task t in T do |
| 3: candidatePEs ← PEs in P that are available and suitable for t |
| 4: if candidatePEs is not empty then |
| 5: pe ← select a PE from candidatePEs based on load balancing criteria |
| 6: A[t] ← pe |
| 7: Update the availability of pe |
| 8: else |
| 9: Queue t for later execution |
| 10: end if |
| 11: end for |
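Algorithm 4 leaves both "suitable" and the load balancing criterion open. The sketch below fills them in with assumptions made only for illustration: a PE is suitable if its kind matches the task kind, and the least-loaded free PE is chosen. The function name `allocate` and the record fields `kind`, `load`, and `free` are hypothetical.

```python
def allocate(tasks, pes):
    """Greedy PE allocation.

    tasks: list of (task_id, kind, cost) in execution order.
    pes:   dict pe_id -> {"kind": str, "load": number, "free": bool};
           updated in place as PEs are assigned.
    Returns (allocation, queued).
    """
    allocation = {}
    queued = []
    for task_id, kind, cost in tasks:
        candidates = [p for p, s in pes.items()
                      if s["free"] and s["kind"] == kind]
        if candidates:
            # Assumed criterion: least accumulated load, ties broken by id.
            pe = min(candidates, key=lambda p: (pes[p]["load"], p))
            allocation[task_id] = pe
            pes[pe]["load"] += cost
            pes[pe]["free"] = False      # busy until the task completes
        else:
            queued.append(task_id)       # retry once a PE frees up
    return allocation, queued

# Hypothetical pool: two CNN PEs and one GCN PE.
pes = {
    "cnn0": {"kind": "cnn", "load": 0, "free": True},
    "cnn1": {"kind": "cnn", "load": 2, "free": True},
    "gcn0": {"kind": "gcn", "load": 0, "free": True},
}
tasks = [("t1", "cnn", 3), ("t2", "cnn", 1), ("t3", "gcn", 2), ("t4", "gcn", 1)]
allocation, queued = allocate(tasks, pes)
```

Here `t4` is queued because the only GCN PE is already busy with `t3`.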

3.5. Synchronization and Communication

4. Experiments

4.1. Experimental Setup

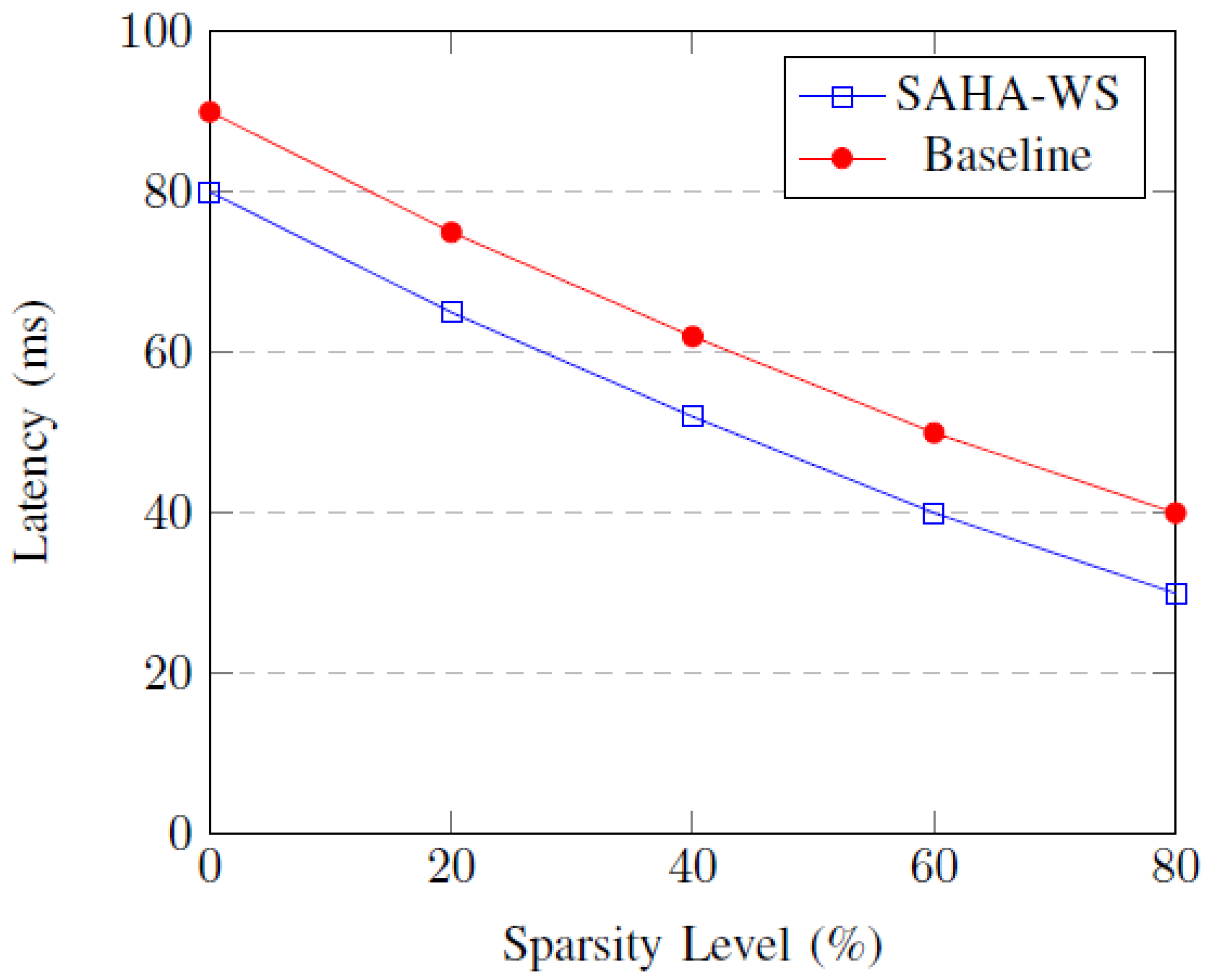

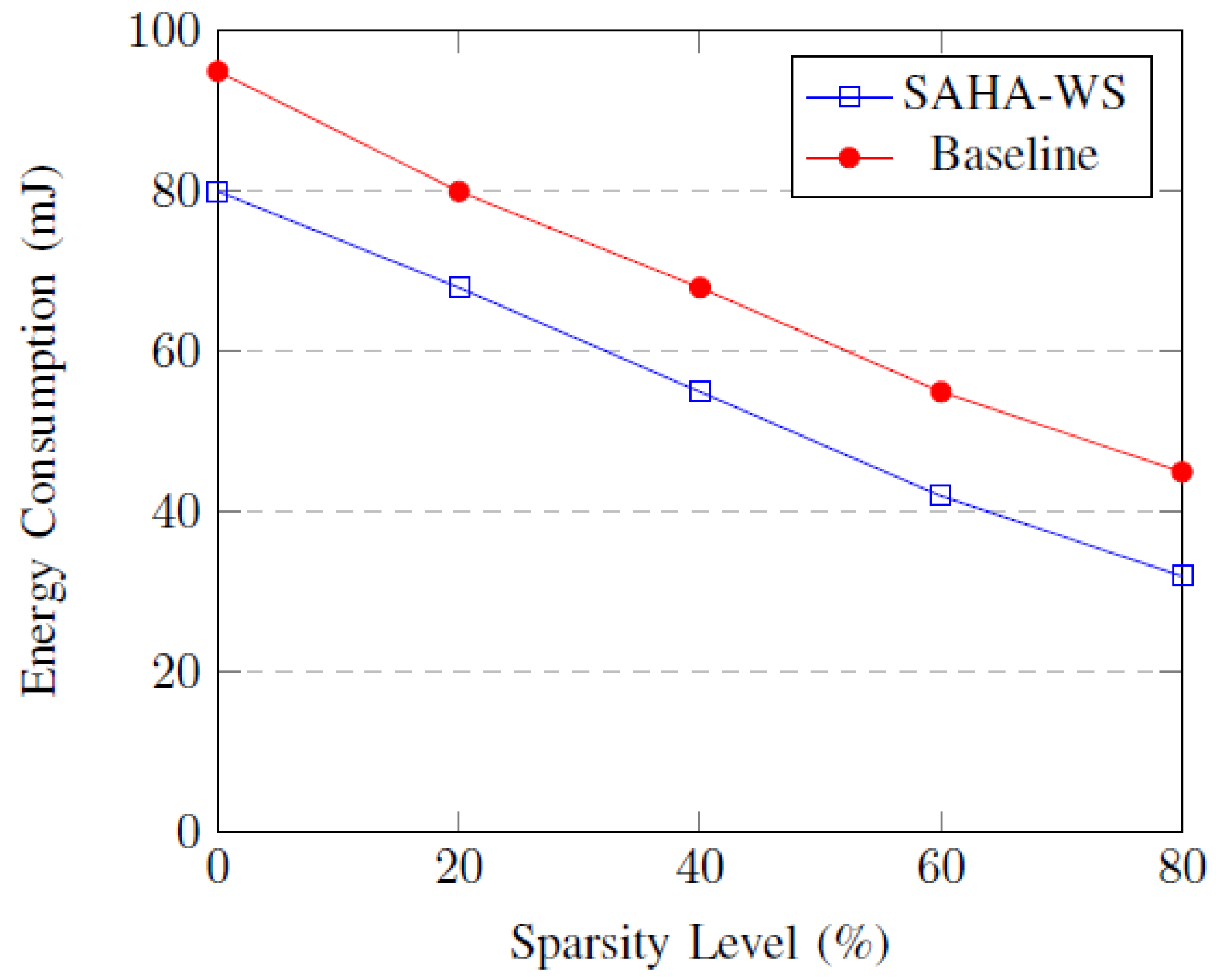

4.2. Impact of Sparsity Level

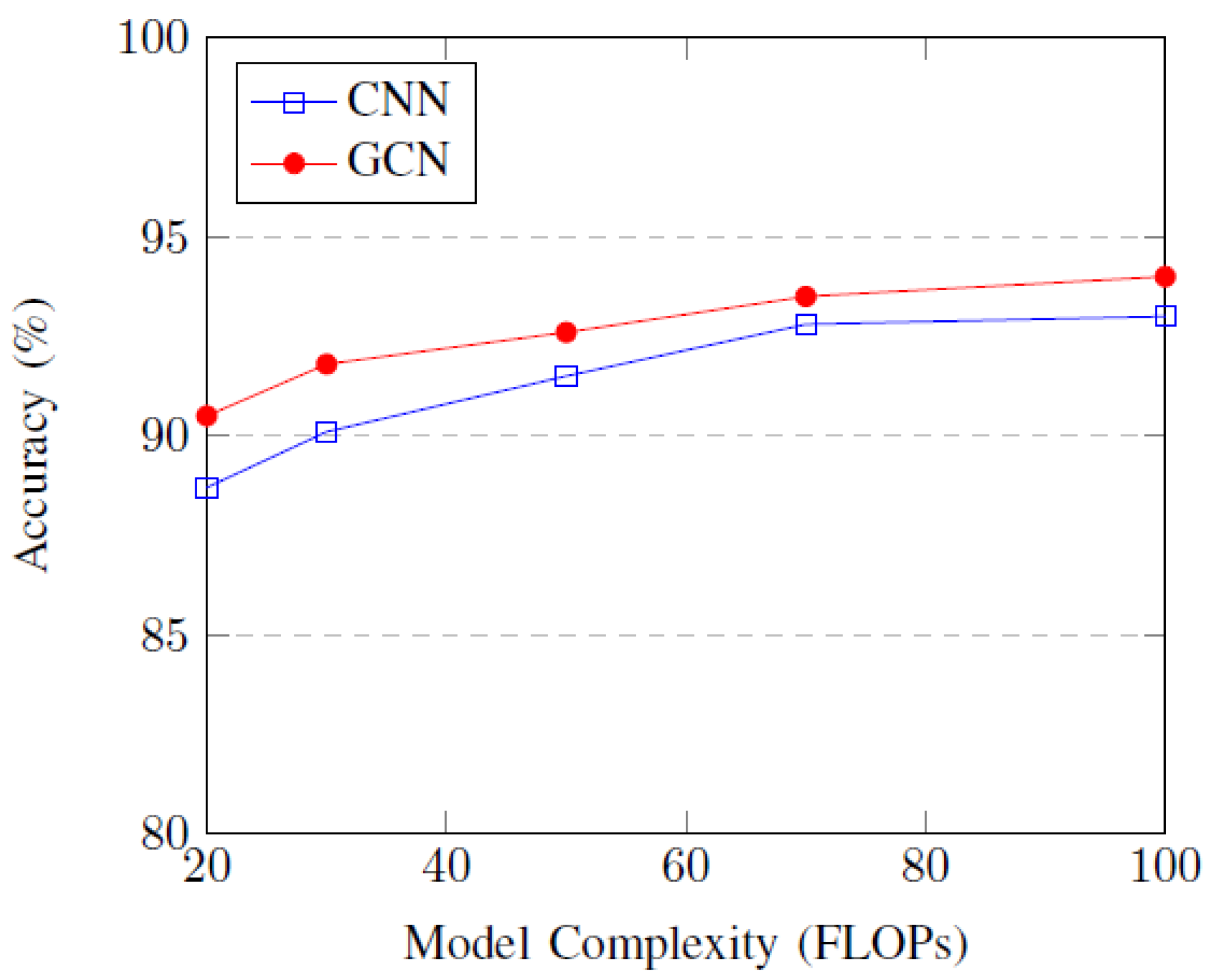

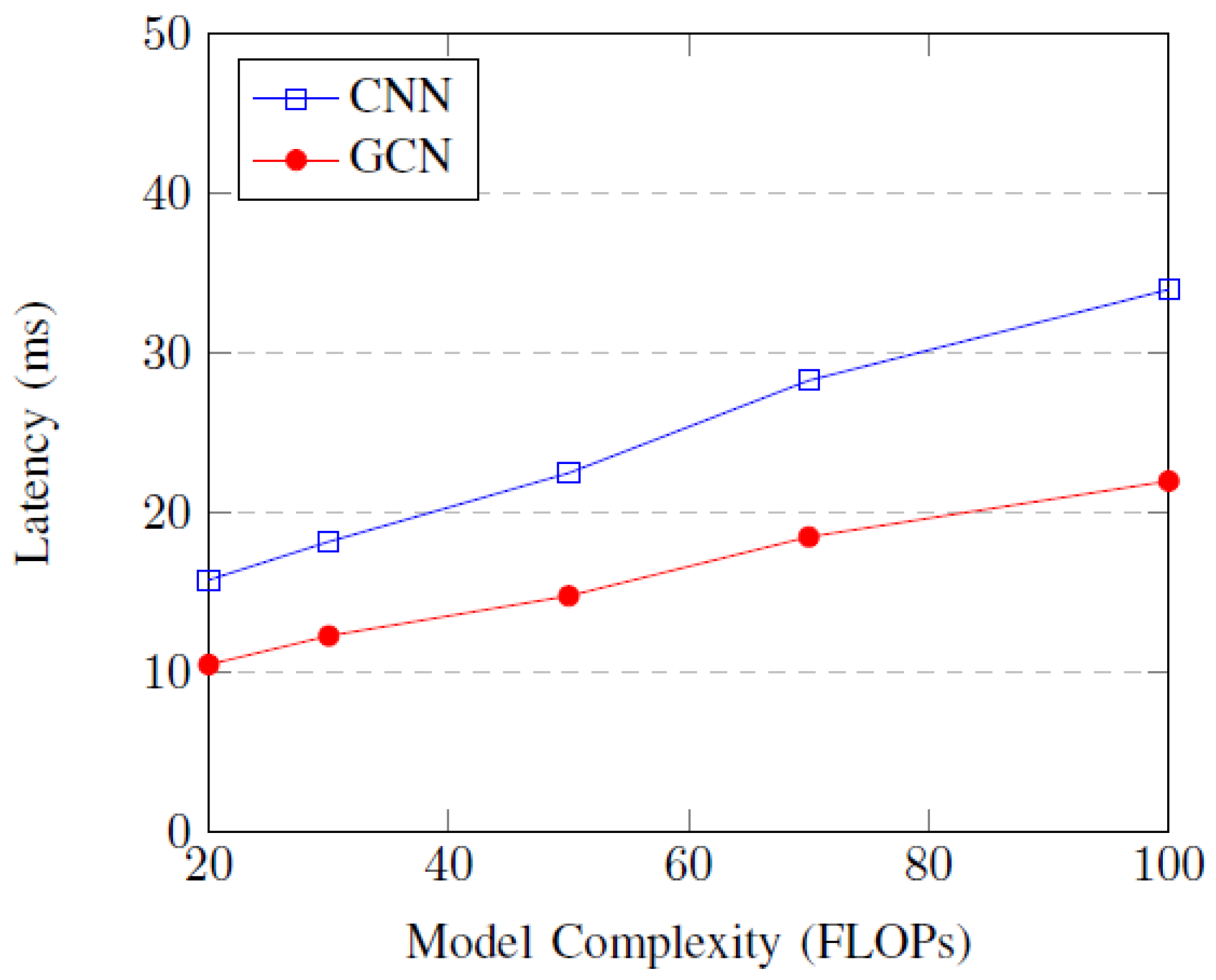

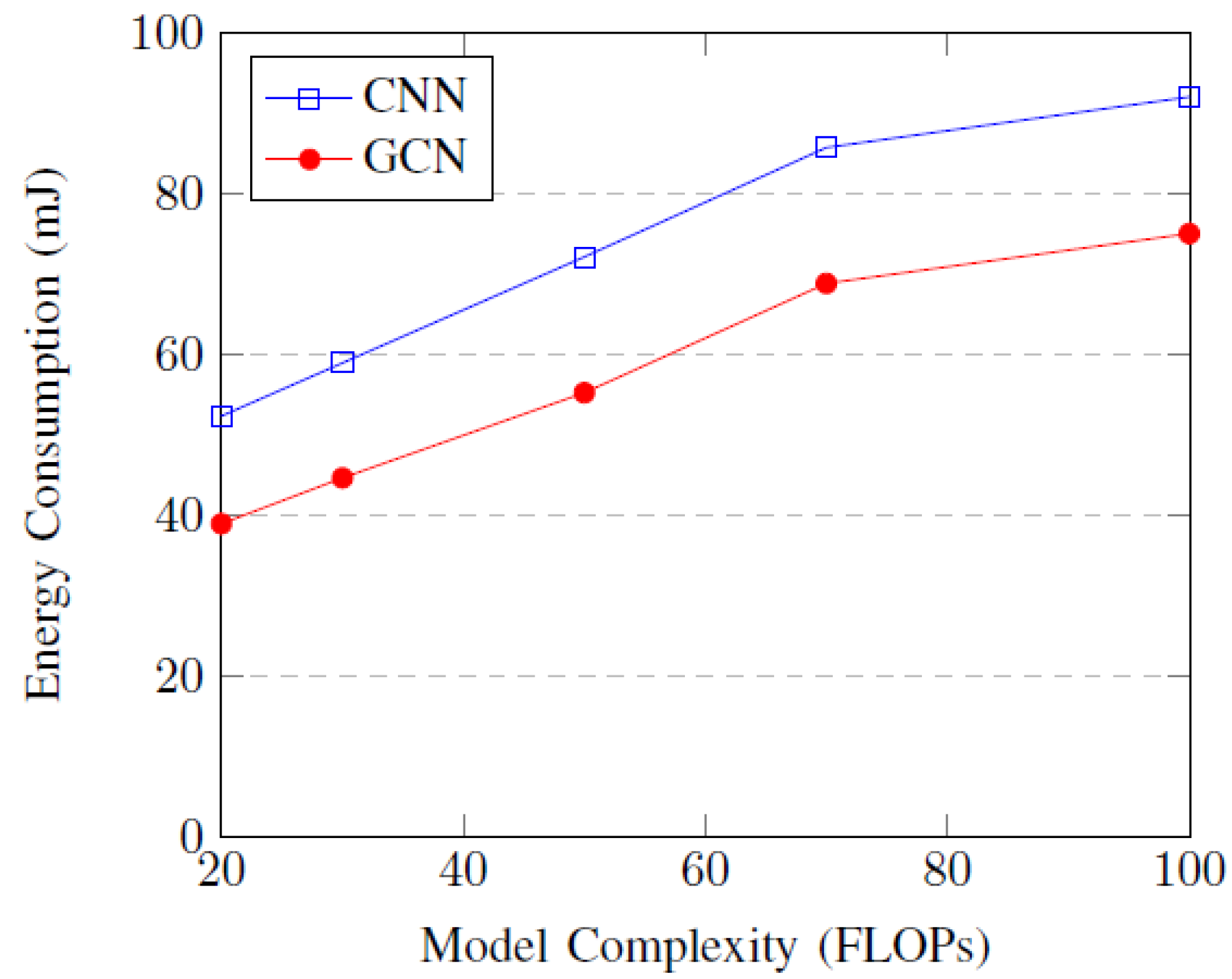

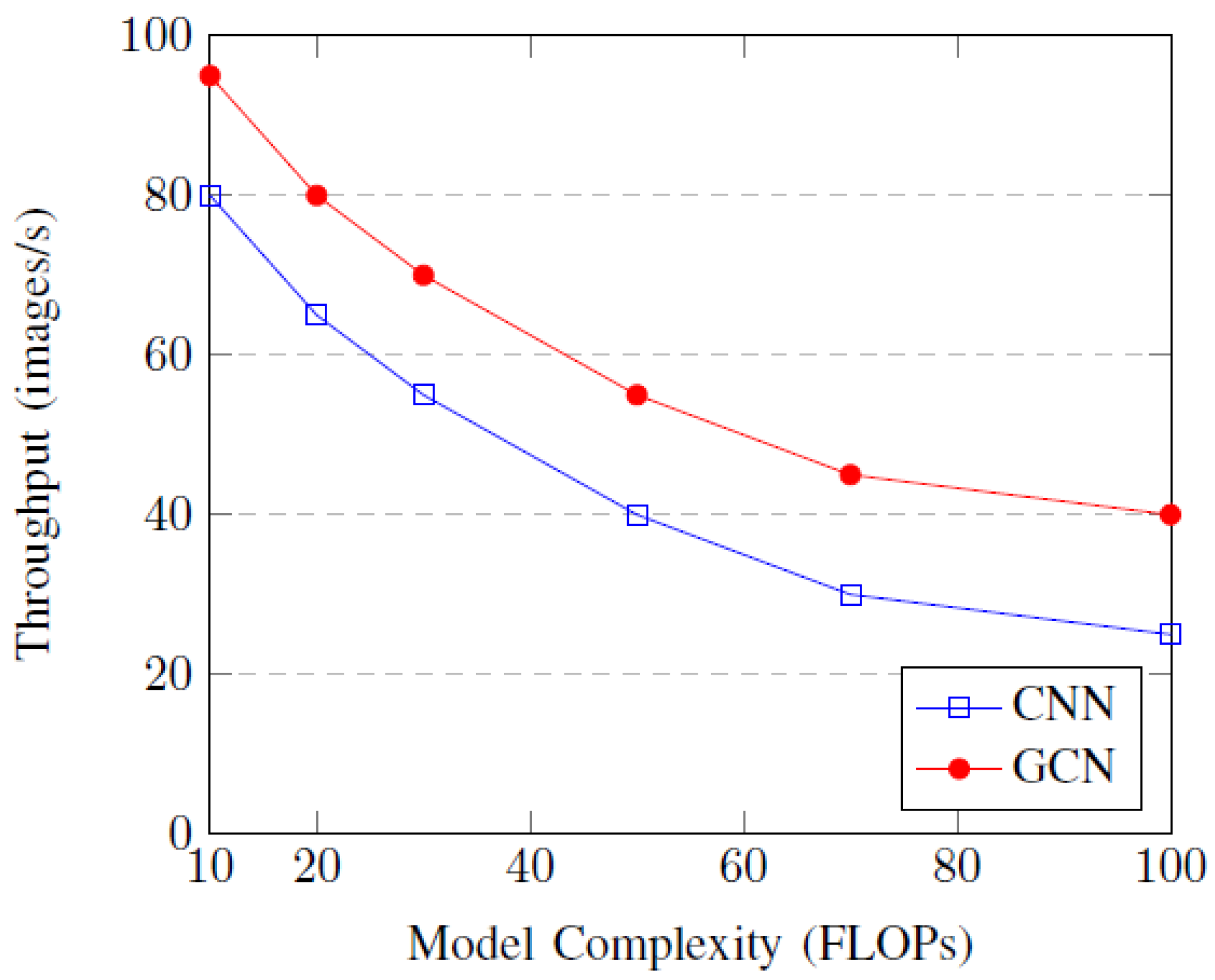

4.3. Impact of Network Depth and Width

4.4. Impact of Dataflow Configuration

- Hierarchical On-Chip Memory: We use a multi-level memory system (L1, L2) to maximize data reuse, storing frequently accessed weights and activations closer to the processing elements (PEs) to minimize costly data movement to and from DRAM.

- Dynamic Scheduling: We use a dynamic data movement scheduling policy (Algorithm 3) that prioritizes data transfers based on computational dependencies and resource availability, significantly reducing PE idle time compared to static FIFO scheduling.

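- Intelligent Data Replacement: We compare data replacement policies. Least Recently Used (LRU) proves more effective than FIFO because it retains temporally local data in faster memory, which further reduces latency and energy. With 32 KB of on-chip memory, moving from FIFO (DF-2) to LRU (DF-4) lowers latency from 12.8 ms to 10.9 ms and energy from 48.5 mJ to 45.7 mJ.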
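The difference between the two replacement policies can be illustrated with a toy miss counter. This is a sketch, not the evaluated memory system: the access trace, the capacity, and the function name `count_misses` are all assumptions chosen to show why retaining a frequently reused block helps.

```python
from collections import OrderedDict

def count_misses(trace, capacity, policy):
    """Count misses for a small buffer under "FIFO" or "LRU" replacement."""
    cache = OrderedDict()                # oldest entry first
    misses = 0
    for block in trace:
        if block in cache:
            if policy == "LRU":
                cache.move_to_end(block)  # a hit refreshes recency
            continue
        misses += 1
        if len(cache) >= capacity:
            cache.popitem(last=False)     # evict the oldest entry
        cache[block] = True
    return misses

# Hypothetical trace: weight block w0 is reused between activation blocks.
trace = ["w0", "a0", "w0", "a1", "w0", "a2", "w0"]
fifo_misses = count_misses(trace, capacity=2, policy="FIFO")
lru_misses = count_misses(trace, capacity=2, policy="LRU")
```

On this trace FIFO evicts `w0` just before it is needed again, while LRU keeps it resident, so LRU incurs fewer misses.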

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Architecture | Depth | Width | Accuracy (%) | Latency (ms) | Energy (mJ) |
|---|---|---|---|---|---|
| CNN-1 | 3 | 32 | 85.2 | 12.5 | 45.6 |
| CNN-2 | 5 | 32 | 88.7 | 15.8 | 52.3 |
| CNN-3 | 7 | 32 | 90.1 | 18.2 | 58.9 |
| CNN-4 | 5 | 64 | 91.5 | 22.5 | 72.1 |
| CNN-5 | 7 | 64 | 92.8 | 28.3 | 85.7 |
| GCN-1 | 2 | 64 | 88.3 | 8.2 | 32.5 |
| GCN-2 | 3 | 64 | 90.5 | 10.5 | 38.9 |
| GCN-3 | 4 | 64 | 91.8 | 12.3 | 44.6 |
| GCN-4 | 3 | 128 | 92.6 | 14.8 | 55.2 |
| GCN-5 | 4 | 128 | 93.5 | 18.5 | 68.8 |

| Configuration | On-Chip Memory (KB) | Scheduling | Latency (ms) | Energy (mJ) | Throughput (images/s) |
|---|---|---|---|---|---|
| DF-1 | 16 | FIFO | 15.2 | 52.3 | 65.8 |
| DF-2 | 32 | FIFO | 12.8 | 48.5 | 78.1 |
| DF-3 | 64 | FIFO | 11.5 | 46.2 | 87.0 |
| DF-4 | 32 | LRU | 10.9 | 45.7 | 91.7 |
| DF-5 | 64 | LRU | 9.8 | 43.1 | 102.0 |

| Configuration | On-Chip Memory (KB) | Scheduling | Latency (ms) | Energy (mJ) | Throughput (images/s) |
|---|---|---|---|---|---|
| DF-1 | 16 | FIFO | 15.2 ± 0.5 | 52.3 ± 1.4 | 65.8 ± 2.0 |
| DF-2 | 32 | FIFO | 12.8 ± 0.4 | 48.5 ± 1.3 | 78.1 ± 2.2 |
| DF-3 | 64 | FIFO | 11.5 ± 0.4 | 46.2 ± 1.2 | 87.0 ± 2.3 |
| DF-4 | 32 | LRU | 10.9 ± 0.3 | 45.7 ± 1.2 | 91.7 ± 2.1 |
| DF-5 | 64 | LRU | 9.8 ± 0.3 | 43.1 ± 1.1 | 102.0 ± 2.0 |
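The reported throughput values appear consistent with one image being processed at a time, that is, throughput ≈ 1000 / latency (ms). The short check below tests that reading against the mean values in the dataflow configuration table; the relation itself is an inference from the numbers, not something the table states, and the name `throughput_from_latency` is hypothetical.

```python
# (latency in ms, reported throughput in images/s), copied from the table.
rows = {
    "DF-1": (15.2, 65.8),
    "DF-2": (12.8, 78.1),
    "DF-3": (11.5, 87.0),
    "DF-4": (10.9, 91.7),
    "DF-5": (9.8, 102.0),
}

def throughput_from_latency(latency_ms):
    """Images per second if images are processed strictly one after another."""
    return 1000.0 / latency_ms

for name, (latency_ms, reported) in rows.items():
    derived = throughput_from_latency(latency_ms)
    print(f"{name}: derived {derived:.1f} images/s, reported {reported:.1f}")
```

If the relation holds, latency and throughput carry the same information in this table, and the two columns are not independent measurements.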


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, J.; Chen, Z. A Hybrid CNN-GCN Architecture with Sparsity and Dataflow Optimization for Mobile AR. Appl. Sci. 2025, 15, 9356. https://doi.org/10.3390/app15179356
